Abstract

This research aims to overcome the difficulties associated with visualizing underground utilities by proposing six interactive visualization methods that utilize Mixed Reality (MR) technology. By leveraging MR technology, which enables the seamless integration of virtual and real-world content, a more immersive and authentic experience is possible. The study evaluates the proposed visualization methods based on scene complexity, parallax effect, real-world occlusion, depth perception, and overall effectiveness, aiming to identify the most effective methods for addressing visual perceptual challenges in the context of underground utilities. The findings suggest that certain MR visualization methods are more effective than others in mitigating the challenges of visualizing underground utilities. The research highlights the potential of these methods, and feedback from industry professionals suggests that each method can be valuable in specific contexts.

1 Introduction

Various industries, including Architecture, Engineering, and Construction (AEC), have been captivated by reality technologies such as Virtual Reality (VR), Augmented Reality (AR), and Mixed Reality (MR) (Stylianidis et al. 2020). These technologies establish an immersive and collaborative environment, facilitating the visualization of digital information. Notably, AR has been extensively examined due to its diverse applications across various phases of the construction project life cycle, including design, construction, finishing, inspection, and maintenance (Fenais et al. 2020; Keil et al. 2020; Nnaji and Karakhan 2020).

Leveraging reality technologies for underground utility visualization offers numerous advantages in real-world scenarios. The current practice relies on manual excavation and 2D plans on-site, where utility locators mark underground utilities with spray paint, which often disappears during excavation, leading to challenges and increased risks of damage, higher repair costs, project delays, and potential casualties. In contrast, AR/MR technology provides continuous, comprehensive visualization during excavation, seamlessly integrating with the real-world environment. This approach eliminates the need for physical markings on the ground, offering benefits from the planning phase to post-construction maintenance of utilities.

AR is a technology that overlays virtual content with the real environment, enabling hybrid visualization of digital information through devices such as smartphones, tablets, and smart glasses. However, this overlaying of virtual content over the real environment can create perceptual issues when visualizing subsurface objects (Sidani et al. 2021). Various studies have explored visual cues to improve the depth perception of subsurface objects, particularly underground utilities (Zollmann et al. 2014). However, existing AR visualization methods for underground utilities also suffer from other perceptual issues such as scene complexity, occlusion of the real environment, and the parallax effect. Scene complexity refers to the overcrowding of virtual objects, while occlusion occurs when real-world features are hidden behind virtual objects, making it difficult to identify potential hazards. The parallax effect on underground utilities is caused by user motion and utility depth, causing utilities to drift continuously until the user reaches their true horizontal position (Muthalif et al. 2022b). These challenges have not been addressed in previous studies.

While AR superimposes virtual elements onto the real-world environment, MR takes this a step further. MR seamlessly integrates virtual content with the real world by analyzing the scene’s geometry, allowing for a more immersive experience. Additionally, MR enables interaction between virtual and physical objects, creating a dynamic environment where digital and real-world elements can coexist and interact (Muthalif et al. 2022b). This technology allows digital information to be constructed virtually as holograms within the real world, enabling immersive 3D visualization (Tourlomousis and Chang 2017). MR is achievable through the utilization of MR Head Mounted Displays (HMDs) equipped with sensors like depth cameras, facilitating the creation of a real-time digital representation of the physical world. The adoption of MR has surged, especially with the advent of sophisticated HMDs like Microsoft HoloLens. These devices integrate a range of sensors, Central Processing Unit (CPU), and Holographic Processing Units (HPU), allowing for device orientation and positioning through a pre-built 3D map that captures the real-world geometry.

Past research on visualization methods for underground utilities primarily leaned on AR techniques, overlaying virtual content onto the real environment without accounting for real-world geometry (Eren and Balcisoy 2017; Piroozfar et al. 2021; Schall et al. 2010; Stylianidis et al. 2020; Zollmann et al. 2014). Furthermore, the utilized devices lacked features for an immersive experience, such as hand/eye gestures, broader fields of view, and hands-free functionalities present in MR devices. Despite the advantages of MR over AR, the potential of MR for visualizing underground utilities has not been explored. Specifically, an evaluation and comparison of different MR visualization methods in the context of underground utilities has not been done, as emphasized by Kopsida and Brilakis (2020). This paper addresses this gap by introducing and comparing six MR visualization methods and seeking feedback from industry professionals on their potential use cases. Different visualization methods can prove beneficial in various practical scenarios, spanning different stages of construction, including design, planning, excavation, construction, inspection, and maintenance.

The primary objective of this research is to introduce and evaluate state-of-the-art visualization methods that leverage advanced visual cues, with an emphasis on incorporating ground topology and facilitating seamless interaction with virtual content, all of which are made possible through MR technology. The methodology underpinning this paper includes four phases. First, six interactive MR visualization methods are introduced. Second, these methods are implemented on a Microsoft HoloLens 2, an MR optical-see-through (OST) HMD. Third, the methods are evaluated through a user survey of industry professionals, structured around the visual perceptual challenges identified through an in-depth literature review. Finally, the survey responses are analyzed quantitatively to identify the benefits and limitations of the proposed MR visualization methods.

The rest of the paper is structured as follows: Sect. 2 explores past research on diverse immersive visualization methods. Section 3 introduces six visualization methods used in the user survey while Sect. 4 elaborates on the implementation of those methods. Section 5 describes the user evaluation, covering variables and experiment details, and Sect. 6 presents the results. The results are discussed in Sect. 7, with limitations of the experiment. Finally, Sect. 8 concludes the paper, offering insights into future work.

2 Related Work

Based on the literature, few studies focus mainly on using MR for subsurface utilities. However, various user studies have been conducted to assess diverse AR visualization techniques for subsurface utilities. Schall et al. (2009) performed a structured evaluation of a prototype designed for a mobile device. Their study involved professionals in the utility division, who analyzed various aspects of AR visualization for subsurface utilities, including the excavation tool. This tool creates a virtual cavity in the ground, which can enhance the depth perception of buried utilities by partially occluding them. The results of their user survey confirmed that the depth perception of buried utilities remained a significant challenge in their research. Industry experts participated in a similar study (Zollmann et al. 2012) that aimed to test their prototype developed for a tablet, to improve the depth perception of buried utilities and provide a clear spatial arrangement of real and virtual objects. The study utilized two AR visualization methods, namely a virtual pit or trench with depth contours, which created an X-Ray visualization effect, and shadow visualization, which projected the utility’s orthogonal projection to the ground plane, including connecting lines. They also explored other AR visualization methods for subsurface utilities based on real-world image analysis (Zollmann et al. 2014), which included image-ghosting, alpha-blending, and edge-based ghosting. In another study, Eren and Balcisoy (2017) explored four AR visualization techniques, namely careless overlay, edge overlay, excavation box, and cross-sectional visualization, to mitigate depth perception issues for underground utilities. Their evaluation revealed that both excavation box and cross-sectional visualization methods performed similarly, effectively enhancing the understanding of the depth of underground utilities. Ortega et al. (2019) conducted a study comparing the effectiveness of virtual holes (similar to excavator tools/boxes in previous research) and a new approach using variable transparency levels called alpha-blending to visualize underground utilities. The transparency level is adjusted according to the distance from the user to the utility to minimize the visualization’s complexity when longer utilities are displayed. However, the evaluation involving 30 participants concluded that the excavator tool provided better depth cues than the other two methods. Based on the above research, the excavator tool method can be considered one of the popular and effective ways to visualize underground utilities in AR.

Heinrich et al. (2019) reviewed five AR visualization methods, including Phong shading, virtual mirror, depth-encoding silhouettes, pseudo-chromadepth, and supporting lines. These methods provide different depth cues to a virtual cube, and they were evaluated for their effectiveness in providing depth perception using a projective AR system. The virtual cubes were positioned inside a virtual box. The research team conducted a user survey to assess the efficiency of these visualization methods, and the results indicated that the supporting line method performed better than the other methods. This method draws lines from cubes at different depths to the projected shadows of those cubes on a ground plane. Becher et al. (2021) conducted a study on a combination of shadow anchors and grids to provide depth cues and better positioning of underground objects. They evaluated the effectiveness of these visualization methods in a user study, which revealed that the use of shadow anchors, along with a virtual grid on the real surface, resulted in better depth perception and less workload for the user compared to using no visual cue or only a grid without shadow anchors. This research suggests that using shadow/connecting lines can improve the depth perception of subsurface and underground objects.

The above-mentioned visualization methods have been created and evaluated using Hand-Held Devices (HHDs) such as smartphones and tablets. These methods are restricted to resolving visual perception difficulties and do not take into account the actual environment’s topology, which could offer a solution to these issues. Additionally, only a small number of studies have explored the impact of topology on visualization techniques. Hansen et al. (2021) utilized an iPad equipped with LiDAR sensors to enhance the blending of virtual models with real environments by incorporating ground topology. They created a visualization technique that projected underground utilities onto the ground surface as shadows, accounting for surface undulations like curbs and pits. However, this approach only displays the utilities’ projections rather than the actual utilities themselves. They also introduced “virtual daylighting,” a point cloud visualization of an excavation pit that resembles the X-Ray box/hole tool techniques previously discussed. This approach relies on the availability of pre-captured point clouds, which is a limitation.

Muthalif et al. (2022b) reviewed existing AR visualization methods for subsurface utilities and objects and categorized them into six groups: X-Ray view (Hansen et al. 2020; Ortega et al. 2019; Piroozfar et al. 2021), transparent view (Feiner and Seligmann 1992; Ortega et al. 2019), shadow view (Becher et al. 2021; Heinrich et al. 2019; Stylianidis et al. 2020), topo view (Cote and Mercier 2018), image rendering (Chen et al. 2010; Zollmann et al. 2014), and cross-section view (Baek and Hong 2013; Eren and Balcisoy 2017). They also examined various aspects of AR and the challenges associated with AR visualization for subsurface utilities, including a detailed discussion of visual perceptual challenges: depth perception quality, real-world occlusion, visualization complexity, and parallax effects on subsurface utilities. Furthermore, the authors visually compared the classified AR visualization methods based on these criteria and concluded that although the existing visualization methods work well in certain settings, they have limitations in specific applications such as underground utility networks. Hence, they identified the need for effective visualization methods for underground utilities. No study has yet compared different visualization methods implemented with MR capabilities for underground utilities, making this study the first of its kind.

3 MR Visualization Methods

The visualization methods considered for this study are based on the methods developed by Muthalif et al. (2022a). These methods are categorized into two groups, namely “Overall Views” and “Specific Views,” each encompassing three visualization methods. These methods are defined in this section to explain their capabilities and differences in visualizing underground utilities. To clarify these methods, some figures and QR codes for the videos have been prepared. In addition, a sample dataset was prepared to showcase these methods by visualization of diverse underground utilities, including drinking water, recycled water, stormwater drains, sewer, gas, and electricity, as illustrated in Fig. 1.

3.1 Overall Views (Scenario One)

In the first scenario, known as the “Overall view,” the objective is to visually represent all the utilities present at the site. The three proposed visualization methods in this scenario are “General view (G),” “General + range view (GR),” and “General + elevator view (GE)”.

3.1.1 General View (G)

The (G) visualization method is a combination of the shadow view and topo view methods presented in (Muthalif et al. 2022b). The shadow view method projects underground utilities onto the ground plane vertically, aiding in horizontal location determination. This method employs connecting lines between utilities and their shadows on the ground surface. Conversely, the topo view exclusively displays shadows without revealing the actual underground utilities, providing a simpler visualization but lacking detailed utility information such as depth. Therefore, (G) integrates both methods to present underground utilities and their shadows while minimizing scene complexity. The user can choose a particular utility, triggering the highlighting of both the utility itself, its corresponding shadow, and the connecting lines, as shown in Fig. 2. Additionally, this method offers an attribute window for the selected utility, which dynamically moves with the user.
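The shadow-and-connecting-line idea described above can be illustrated with a minimal sketch. It assumes a flat ground plane at a known elevation and a utility given as a list of 3D vertices; the function name `shadow_and_connectors` and the sample pipe are hypothetical and not taken from the study, and an MR implementation that honours ground topology would sample the reconstructed surface instead of a constant elevation.

```python
from typing import List, Tuple

Point3 = Tuple[float, float, float]  # (x, y, z), with z as elevation in metres


def shadow_and_connectors(utility: List[Point3], ground_z: float = 0.0):
    """Project a utility polyline vertically onto a flat ground plane.

    Returns the shadow polyline and one vertical connecting line per vertex,
    each stored as a (utility_point, shadow_point) pair.
    Assumption: the ground is a horizontal plane at elevation ground_z.
    """
    shadow = [(x, y, ground_z) for x, y, _ in utility]
    connectors = list(zip(utility, shadow))
    return shadow, connectors


# Hypothetical pipe buried 1.2 m below a flat ground surface at z = 0.
pipe = [(0.0, 0.0, -1.2), (5.0, 0.0, -1.2)]
shadow, connectors = shadow_and_connectors(pipe)

# The length of each connecting line is the burial depth at that vertex.
depths = [top[2] - buried[2] for buried, top in connectors]
```

The shadow carries the horizontal position, while the connector lengths carry the depth that a shadow-only (topo) view would omit.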

3.1.2 General + Range View (GR)

The (GR) visualization method aims to further simplify scene complexity beyond what is accomplished by (G). This method distinguishes itself from (G) by displaying shadows of unselected utilities only within a range from the user (Fig. 3). The choice of range is arbitrary, with the main objective being to evaluate the impact of having fewer virtual elements in the visualization compared to the previous method. Similar to the (G) method, selecting a utility highlights it, its complete shadow and the connecting lines. This method also includes a user-tracked attribute table for the selected utility.

3.1.3 General + Elevator View (GE)

The (GE) visualization method utilizes virtual reconstruction of the real-world environment to achieve occlusion of virtual objects located behind or beneath actual objects. This method allows the user to adjust the ground elevation virtually, providing a visualization as if the user is moving vertically. As a result, the user can easily visualize the location of underground utilities that are buried within a specific depth range. This method also allows the user to select a particular utility. When the selection is made, all other utilities disappear to reduce visual clutter in the scene, as shown in Fig. 4. As in the other two methods, an attribute window for the selected utility appears and tracks the user’s head.

3.2 Specific Views (Scenario Two)

To provide a more detailed view of underground utilities in crowded areas, three visualization methods are presented in this scenario. These methods are “X-Ray Box View (X)”, “X-Ray Box + Depthslider View (XD)”, and “X-Ray Box + Clipping View (XC)”.

3.2.1 X-Ray Box View (X)

The (X) visualization method introduces a virtual pit featuring depth contour lines on its walls. Within this pit, underground utilities are exclusively depicted, while their shadows extend beyond the pit. Notably, connecting lines between utilities and their shadows are exhibited along the pit walls (Fig. 5). Users can select a specific underground utility, prompting the highlighting of the utility, its shadow, connecting lines and dynamic attribute window similar to the previous methods. The pit can be moved to a desired location by the user (Fig. 11).

3.2.2 X-Ray Box + Depthslider View (XD)

The (XD) method presents a virtual pit visualization similar to (X) but adds a slider that allows users to adjust the depth of the virtual pit (Fig. 6). This feature enhances the capability to display numerous underground utilities within the desired depth. Moreover, the pit automatically tracks the user, unlike in the (X) method where manual adjustment is required, although this tracking feature can be disabled if needed. Additionally, this method enables the selection of a specific utility, resulting in the highlighting of the utility, its corresponding shadow, and the connecting lines between them, alongside a dynamic attribute window like the previous methods.

3.2.3 X-Ray Box + Clipping View (XC)

The (XC) method uses a virtual pit similar to the previous two methods but with a unique approach. It clips the utilities inside the pit along the walls, leaving only a small portion extending from the walls (Fig. 7a). The purpose is to reduce complexity within the pit, especially in scenarios where many underground utilities are densely packed in a confined area. This method also provides a way to select a utility like the methods mentioned earlier. Once a utility is selected, the entire utility including its connecting lines and shadows is highlighted as shown in Fig. 7b. Additionally, this method includes a tracking function for the pit like in the (XD) method.

With the introduction of these methods, their implementations are explained in the next section.

4 Implementation of the Visualization Methods

In this study, we have employed a variety of tools and technologies to develop the MR application featuring our visualization methods. In this section, these tools and technologies are explained first and then, the implementation of these methods is discussed.

4.1 Tools and Technologies

The tools and technologies to develop the visualization methods include the Unity game engine, Mixed Reality Toolkit (MRTK), HoloLens 2 and the Vuforia engine. Each of these plays a crucial role in the creation of the demo application and the implementation of the visualization methods.

4.1.1 Unity Game Engine

Unity serves as our development environment for designing and building the application. It offers a robust set of tools specifically designed for creating MR applications. These tools include a user-friendly interface, a powerful physics engine, and support for scripting in C#. In addition to its core features, Unity provides access to an extensive asset store filled with pre-made assets, plugins, and tools that can accelerate development and enhance functionality. For our application, which focuses on visualization techniques, we utilized Unity Game Engine version 2019.4.28f1 to take advantage of its capabilities and facilitate efficient development.

4.1.2 MRTK

Alongside the Unity game engine, we integrated MRTK, an open-source toolkit designed to facilitate the development of MR applications. MRTK provides developers with essential tools, components, and resources tailored for platforms like Microsoft HoloLens and other MR devices. These pre-built components for common MR interactions include hand tracking, spatial mapping, gesture recognition, and spatial awareness. MRTK version 2.7.1 was used in our MR application development.

4.1.3 MR Device

MR devices can generally be classified into two main types: Video See-Through (VST) HMD and OST HMD. VST HMDs typically use built-in video cameras to capture the real world and then electronically blend virtual content with a video representation of the actual environment (Rolland et al. 1994). These MR HMDs often require an external power supply or connection to a computer due to high power consumption or performance limitations (Canon 2020; Varjo 2021). On the other hand, OST HMDs feature optical lenses in front of the user’s eyes, reflecting virtual content while enabling the user to see through them (Rolland et al. 1994). We chose the HoloLens 2 which is an OST HMD for several reasons. Its integrated power supply allows for wireless operation, enhancing user mobility. With its lightweight design and adjustable headband, the HoloLens 2 ensures comfort during extended use. Additionally, it offers a larger field of view compared to many other MR devices, providing a more immersive experience. Microsoft’s extensive developer support, which includes thorough documentation and seamless integration with Unity, further adds to its suitability for our research.

4.1.4 Digital Content Registration

Registering digital content involves placing it accurately into a physical environment. This process employs two primary methods: marker-based and markerless. In the marker-based approach, markers like images, QR codes, or physical objects are used to initially place digital content in the real world. However, this method requires precise measurement of markers both physically and digitally within the MR application (Unity project). On the other hand, markerless methods utilize sensor fusion, combining technologies such as the Global Navigation Satellite System (GNSS) for accurate positioning, Time-of-Flight (ToF) depth sensors for spatial dimensions, and Inertial Measurement Unit (IMU) for orientation and tracking. These components help estimate the MR device’s pose and integrate digital content into the physical environment. However, markerless methods often have lower positional accuracy compared to marker-based methods (Cheng et al. 2017). For this study, a marker-based method was chosen for digital content registration due to its simplicity and accuracy, which align with the experiment’s objectives.

Several Software Development Kits (SDKs) are available in the market, offering both marker-based and markerless registration methods. Examples include ARKit (iOS) (Oufqir et al. 2020), ARCore (Android) (Oufqir et al. 2020), Wikitude (Akin and Uzun 2022), Vuforia (Sarosa et al. 2019), Mapbox (Arifiani et al. 2021), and EasyAR (Bintang et al. 2020). These SDKs provide developers with tools, libraries, and resources tailored to their needs, often including functionalities like image recognition, object tracking, motion tracking, and plane detection.

In this research, we employ the Vuforia SDK which offers reliable tracking capabilities, adept at handling both simple and complex objects, which is crucial for creating immersive MR experiences. For our experiment, we utilize a Vuforia image marker measuring 30 cm by 20 cm (Fig. 8a), to achieve high accuracy in coordinate system transformation between the real world and the device. Figure 8b shows the matched feature points in the image by the HoloLens camera for the coordinate system transformation.

Fig. 8 a Image marker (Mazzanti 2022). b Matched feature points (yellow)
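The coordinate system transformation that marker-based registration provides can be sketched as follows. This is a simplified planar (position plus yaw) illustration, not the Vuforia API: it assumes the marker's pose is surveyed in the real-world frame and detected in the device frame, and all function names and numeric poses are hypothetical.

```python
import math
from typing import Tuple

Pose = Tuple[float, float, float, float]  # (cos(yaw), sin(yaw), tx, ty)
Point2 = Tuple[float, float]


def make_pose(x: float, y: float, yaw_rad: float) -> Pose:
    """Planar rigid pose of a frame expressed in its parent frame."""
    return (math.cos(yaw_rad), math.sin(yaw_rad), x, y)


def apply(pose: Pose, pt: Point2) -> Point2:
    """Map a point from the child frame into the parent frame."""
    c, s, tx, ty = pose
    return (c * pt[0] - s * pt[1] + tx, s * pt[0] + c * pt[1] + ty)


def inverse(pose: Pose) -> Pose:
    """Invert a rigid pose: rotation R^T, translation -R^T t."""
    c, s, tx, ty = pose
    return (c, -s, -(c * tx + s * ty), -(-s * tx + c * ty))


def world_to_device(pt: Point2, marker_in_world: Pose, marker_in_device: Pose) -> Point2:
    """Express a real-world point in the device frame via the shared marker."""
    in_marker_frame = apply(inverse(marker_in_world), pt)
    return apply(marker_in_device, in_marker_frame)


# Hypothetical poses: surveyed marker position, and the pose the device detects.
marker_in_world = make_pose(10.0, 20.0, 0.0)
marker_in_device = make_pose(1.0, 0.0, math.pi / 2)
utility_vertex = world_to_device((11.0, 20.0), marker_in_world, marker_in_device)
```

Because every utility vertex passes through the marker frame, any error in measuring the marker's physical position or size propagates to the whole virtual model, which is why the marker must be measured precisely.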

4.1.5 Virtual Model for Underground Utilities

A significant challenge in utilizing MR technology to visualize underground utilities is obtaining accurate positional information to create the virtual model. Since the main objective of this study is to evaluate the effectiveness of various visualization methods in overcoming perceptual challenges, a deliberate decision was made to create synthetic utilities to showcase the proposed visualization techniques. As a result, all underground utilities depicted in these visualizations are represented as straight lines with consistent depths, allowing for a clear and straightforward demonstration (Fig. 2).

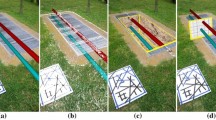

4.1.6 Video Recording of the Visualization Methods

We opted to record videos of the proposed visualization methods indoors for two main reasons: firstly, the HoloLens tends to encounter tracking issues in open outdoor environments, especially when there are not enough features nearby for mapping. Additionally, in outdoor settings, the virtual content becomes difficult to see due to glare from bright sunlight. However, this could be addressed by adding a sunshade to the visor. To ensure accurate tracking, we scanned the hall of a building using the HoloLens, thereby avoiding any potential tracking issues. Following this, we placed a printed image marker on a flat concrete slab to facilitate the transformation of the virtual model from the application’s coordinate system to the real-world coordinate system (Fig. 9). Once the anchoring process was completed, we utilized the HoloLens’s Photo/Video (PV) camera/Reality Capture to record the videos for all six visualization methods developed. These recorded videos were later edited and annotated to enhance participants’ comprehension before being embedded in an online questionnaire distributed among the participants.

4.2 Method Implementation

In this section, the implementation of the six visualization methods that were previously introduced in Sect. 3 is discussed. This section provides detailed insights into the development of these methods.

4.2.1 General View (G)

In the (G) method, the aim is to allow users to select a specific underground utility by choosing its vertical projection/shadow on the surface. In MR, selecting a virtual element is done through raycasting, where a virtual line projected from the user’s wrist enables interaction with objects beyond arm’s reach (Muthalif et al. 2022a). Illumination of utilities is achieved using pre-existing features such as “Rim light” and “Emission” within the MRTK standard material.
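The geometry behind selecting a shadow by raycasting can be sketched in a few lines. This is a purely geometric illustration and not the MRTK pointer API: it assumes a flat ground plane, shadows stored as 2D segments, and a hypothetical pick tolerance of 0.2 m; all names are illustrative.

```python
import math
from typing import Dict, Optional, Tuple

Vec3 = Tuple[float, float, float]
Point2 = Tuple[float, float]


def ray_ground_hit(origin: Vec3, direction: Vec3, ground_z: float = 0.0) -> Optional[Vec3]:
    """Intersect a ray with the horizontal plane z = ground_z."""
    dz = direction[2]
    if dz == 0.0:
        return None  # ray is parallel to the ground
    t = (ground_z - origin[2]) / dz
    if t <= 0.0:
        return None  # the plane lies behind the ray origin
    return tuple(o + t * d for o, d in zip(origin, direction))


def dist_to_segment(p: Point2, a: Point2, b: Point2) -> float:
    """Shortest horizontal distance from point p to segment a-b."""
    dx, dy = b[0] - a[0], b[1] - a[1]
    length_sq = dx * dx + dy * dy
    if length_sq == 0.0:
        t = 0.0
    else:
        t = max(0.0, min(1.0, ((p[0] - a[0]) * dx + (p[1] - a[1]) * dy) / length_sq))
    return math.hypot(p[0] - (a[0] + t * dx), p[1] - (a[1] + t * dy))


def pick_shadow(hit: Vec3, shadows: Dict[str, Tuple[Point2, Point2]],
                tolerance_m: float = 0.2) -> Optional[str]:
    """Return the name of the shadow closest to the hit point, if within tolerance."""
    best, best_d = None, tolerance_m
    for name, (a, b) in shadows.items():
        d = dist_to_segment((hit[0], hit[1]), a, b)
        if d <= best_d:
            best, best_d = name, d
    return best


# Hypothetical shadows and a ray cast from wrist height towards the ground.
shadows = {"water": ((0.0, 0.1), (5.0, 0.1)), "gas": ((0.0, 2.0), (5.0, 2.0))}
hit = ray_ground_hit((0.0, 0.0, 1.5), (1.0, 0.0, -1.0))
selected = pick_shadow(hit, shadows)
```

Selecting through the shadow rather than the buried utility keeps the pick target on the visible ground surface, where the ray naturally terminates.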

4.2.2 General + Range View (GR)

The main objective of (GR) is to have fewer virtual elements in the visualization compared to other methods. The shadow projections are dynamically updated in real time as the user moves. The transparency of the shadows for unselected utilities varies with distance from the user, from 0% (completely opaque) near the user to 100% (invisible) at a specific distance. This creates the perception that the shadows are projected only up to a certain distance (e.g. 5 m). Achieving this involves using an object material that can adjust transparency based on the user’s real-time position (Fig. 4).
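A distance-based fade of this kind can be sketched as a simple function of the user-to-shadow distance. The linear ramp and the 4 m fade start are assumptions for illustration; only the 5 m example range comes from the text, and the function name is hypothetical.

```python
def shadow_transparency(distance_m: float,
                        fade_start_m: float = 4.0,
                        fade_end_m: float = 5.0) -> float:
    """Transparency of an unselected shadow: 0.0 is opaque, 1.0 is invisible.

    Assumption: transparency ramps linearly between fade_start_m and
    fade_end_m; the actual material may use a different falloff.
    """
    if fade_end_m <= fade_start_m:
        raise ValueError("fade_end_m must be greater than fade_start_m")
    t = (distance_m - fade_start_m) / (fade_end_m - fade_start_m)
    return min(1.0, max(0.0, t))


# Evaluate the fade at a few user-to-shadow distances (metres).
samples = [shadow_transparency(d) for d in (0.0, 4.5, 10.0)]
```

In a rendering engine this calculation would typically run per fragment in the material's shader, using the camera position as the reference point, so the visible range follows the user without rebuilding any geometry.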

4.2.3 General + Elevator View (GE)

The (GE) visualization method makes use of HoloLens’s spatial mapping ability, which is achieved through its depth cameras. This function hides virtual underground utilities behind the actual ground. By default, it visualizes a triangular mesh of the real environment mapping. However, it can be turned off in spatial mapping observer settings in MRTK, providing a clear view of the real environment, as shown in Fig. 5.

To simulate an elevator experience, users can use the slider provided in the user menu to descend through the underground, as shown in Fig. 10a. By using the slider, only the underground utilities within the chosen depth range and the actual ground level become visible, as shown in Fig. 10b.
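One way to read the elevator behaviour is as a depth filter driven by the slider. The sketch below assumes that a utility becomes visible once the virtual descent reaches or passes its burial depth; this is an interpretation of the description rather than the study's implementation, and the `Utility` class and sample depths are hypothetical.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class Utility:
    name: str
    depth_m: float  # burial depth below the real ground surface, positive downwards


def visible_at(utilities: List[Utility], elevator_depth_m: float) -> List[str]:
    """Names of utilities revealed after descending elevator_depth_m.

    Assumption: everything between the real ground level and the virtual
    ground level is shown; deeper utilities stay hidden by the ground mesh.
    """
    return [u.name for u in utilities if u.depth_m <= elevator_depth_m]


# Hypothetical network with three utilities at different depths.
network = [Utility("gas", 0.6), Utility("water", 1.2), Utility("sewer", 2.5)]
shown = visible_at(network, 1.5)
```

At slider position zero nothing is revealed, which matches the default state in which the real ground occludes all buried utilities.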

4.2.4 X-Ray Box View (X)

The creation of the virtual pit in the (X) method employs a shader configured to disable depth buffer writing and activate back-face culling. An additional feature allows users to manually reposition the virtual pit: the user pinches with the index finger and thumb when the raycast intersects the virtual object and releases the pinch once the pit reaches the desired location (Fig. 11).

4.2.5 X-Ray Box + Depthslider View (XD)

The (XD) method utilizes MRTK’s pinch slider to slide the pit base to the desired depth, facilitating the exploration of utilities within specific depth ranges without cluttering the visualization with unnecessary details (Fig. 12b). Moreover, this method incorporates MRTK’s tracking functionality, which eliminates the need to manually move the virtual pit using the HoloLens raycast (as in the (X) method). Instead, the virtual pit tracks the user’s head, continuously moving along the ground surface until the user disables tracking with the “tracking off” button (Fig. 12a). This tracking function enhances convenience and offers a potential solution for issues that may arise from the significant occlusion of the real world by virtual content over time. For instance, if the virtual pit remains stationary at a specific location while the user moves around, it may obstruct a substantial portion of the real environment, depending on its size. Allowing the pit to move continuously with the user enhances the likelihood of identifying potential hazards in the real environment.
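The two behaviours described here, a slider that sets the pit depth and a pit that follows the user's head, can be sketched independently of any toolkit. The normalized slider range of 0 to 1, the 3 m maximum depth, and the 1.5 m forward offset are assumptions for illustration, and the function names are hypothetical.

```python
import math
from typing import Tuple

Point2 = Tuple[float, float]


def pit_depth(slider_value: float, max_depth_m: float = 3.0) -> float:
    """Map a normalized slider value in [0, 1] to a pit-base depth in metres."""
    clamped = min(1.0, max(0.0, slider_value))
    return clamped * max_depth_m


def pit_centre(head_xy: Point2, heading_rad: float, last_centre: Point2,
               tracking_on: bool, offset_m: float = 1.5) -> Point2:
    """Horizontal position of the pit on the ground surface.

    With tracking on, the pit sits a fixed distance ahead of the user's head
    along the horizontal gaze heading; with tracking off, it stays put.
    """
    if not tracking_on:
        return last_centre
    return (head_xy[0] + offset_m * math.cos(heading_rad),
            head_xy[1] + offset_m * math.sin(heading_rad))


# Hypothetical frame update: user at the origin looking along +x.
depth = pit_depth(0.5)
tracked = pit_centre((0.0, 0.0), 0.0, (9.0, 9.0), tracking_on=True)
frozen = pit_centre((0.0, 0.0), 0.0, (9.0, 9.0), tracking_on=False)
```

Keeping the last pit position as an input makes the tracking toggle stateless: turning tracking off simply returns the previous position each frame.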

4.2.6 X-Ray Box + Clipping View (XC)

The (XC) method shows all utilities within the pit with reduced complexity. It addresses the obstruction of a buried utility by other utilities above it, a limitation not resolved in the previous X-Ray box views. To achieve this, an invisible clipping box slightly smaller than the virtual pit is utilized, which clips any objects passing through it while leaving a small portion of the object outside the box. Furthermore, this method incorporates a head-tracked pit similar to (XD). When the pit needs to remain stationary, head-tracking can be turned off using the tracking off button (Fig. 13).
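The clipping idea can be sketched geometrically for a straight utility segment and axis-aligned boxes. The sketch uses a slab-based segment/box intersection and shrinks the clipping box only horizontally by a hypothetical 0.1 m margin; in the application itself clipping would more likely be done in a shader, so this is an illustration of the geometry rather than the study's implementation.

```python
from typing import List, Optional, Tuple

Vec3 = Tuple[float, float, float]


def inside_interval(p0: Vec3, p1: Vec3, box_min: Vec3, box_max: Vec3) -> Optional[Tuple[float, float]]:
    """Parameter range (t_in, t_out) of segment p0->p1 inside an axis-aligned box."""
    t_in, t_out = 0.0, 1.0
    for a, b, lo, hi in zip(p0, p1, box_min, box_max):
        d = b - a
        if d == 0.0:
            if a < lo or a > hi:
                return None  # parallel to this slab and outside it
            continue
        t0, t1 = (lo - a) / d, (hi - a) / d
        if t0 > t1:
            t0, t1 = t1, t0
        t_in, t_out = max(t_in, t0), min(t_out, t1)
        if t_in > t_out:
            return None
    return t_in, t_out


def lerp(p0: Vec3, p1: Vec3, t: float) -> Vec3:
    return tuple(a + t * (b - a) for a, b in zip(p0, p1))


def visible_stubs(p0: Vec3, p1: Vec3, pit_min: Vec3, pit_max: Vec3,
                  margin_m: float = 0.1) -> List[Tuple[Vec3, Vec3]]:
    """Parts of the segment inside the pit but outside the smaller clipping box."""
    pit = inside_interval(p0, p1, pit_min, pit_max)
    if pit is None:
        return []
    # Assumption: the clipping box is inset from the pit walls only horizontally.
    clip_min = (pit_min[0] + margin_m, pit_min[1] + margin_m, pit_min[2])
    clip_max = (pit_max[0] - margin_m, pit_max[1] - margin_m, pit_max[2])
    inner = inside_interval(p0, p1, clip_min, clip_max)
    intervals = [pit] if inner is None else [(pit[0], inner[0]), (inner[1], pit[1])]
    return [(lerp(p0, p1, t0), lerp(p0, p1, t1)) for t0, t1 in intervals if t1 > t0]


# Hypothetical pipe crossing a 2 m x 2 m x 2 m pit at 1 m depth.
stubs = visible_stubs((-2.0, 0.0, -1.0), (2.0, 0.0, -1.0),
                      (-1.0, -1.0, -2.0), (1.0, 1.0, 0.0))
```

For the sample pipe, only two short stubs next to the pit walls remain, which is what keeps the pit interior uncluttered while still indicating where each utility enters and leaves.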

5 Evaluation

The developed visualization methods aim to address the visual perceptual challenges outlined in the literature (Muthalif et al. 2022b), including the complexity of the scene, parallax effects in utility visualization, depth perception of underground utilities, and occlusion of the real world by virtual content. Since user requirements may vary, it is difficult to create a single visualization method that can overcome all the problems. Thus, this study aims to evaluate the effectiveness of the developed MR visualization methods and determine if the identified visual perceptual issues should be considered when creating visualization techniques for underground utilities.

5.1 Participants

Professionals with experience in working with underground utilities were identified and contacted for their participation in this evaluation. After their confirmation, we shared the necessary documents explaining the research purpose and ethical considerations, along with a link to the survey questionnaire. A total of 69 professionals, including utility locating technicians, land surveyors, engineers, GIS technicians, and spatial data officers, responded to the survey. It was assumed that all participants had a similar level of familiarity with MR technology.

5.2 Dependent Variables

The evaluation of the visualization methods relied on the participants’ responses (ratings) to the questionnaire, which assessed the methods based on five criteria/factors: scene complexity, parallax effect, occlusion of the real world, depth perception, and effectiveness (Table 1). The questionnaire consisted of 15 questions, including four questions for each scenario (one and two), five questions for comparing visualization methods from both scenarios and two questions requesting the participant’s profession and contact details. To ensure fairness, participants were shown three videos before answering questions in each scenario. The first three questions asked the participants to “compare and rate” each method based on the five criteria, while the fourth question in each scenario asked the participants to explain their ratings and provide feedback. To assist with understanding the methods, participants could play the videos multiple times if needed.

The assessment of the visualization methods differed slightly between scenario one and scenario two. In scenario one, the entire MR scene was evaluated based on the five criteria, while scenario two focused on the visualization inside the virtual pit. To make it easier for participants, two slightly different descriptions were provided on separate questionnaire pages. For scenario one, a six-point Likert scale (Appendix, Fig. 19) was used to record the participants’ responses to all the visualization methods. An option “Not applicable” was given since the (GE) method only visualizes utility above ground, making depth perception irrelevant for this visualization method. In contrast, the “Not applicable” option was not provided in scenario two as all three visualization methods could be assessed in terms of all criteria. Therefore, a five-point Likert scale was provided for scenario two. Additionally, text spaces were provided in the questionnaire for participants to provide their comments and feedback in each scenario.

The questionnaire included two questions that asked participants to evaluate a visualization method from each scenario in terms of depth perception (G, X) and parallax effect (GE, X). In addition, two more questions were included to evaluate the effectiveness of the two scenarios for different purposes:

- “Which scenario is more effective for excavation purposes?”
- “Which scenario is more effective for general visualization purposes?”

5.3 Statistical Analysis

An online questionnaire was created, and the web link to the survey was sent to the professionals who agreed to participate. After a satisfactory number of responses (69) had been received, the data was extracted from the online survey and saved on local drives for analysis.

5.3.1 Data Cleaning and Conversion

The questionnaire responses, which were in text format, were first reviewed for data cleaning. Although 69 participants responded to the questionnaire, 15 responses were removed during the data cleaning process to prevent bias in the results. The reasons for removal were incomplete answers, identical responses to every question (which suggests insufficient time spent viewing the videos and reading the content), inconsistent or contradictory responses, and irrational answers. The remaining 54 responses were treated as outlier-free data and used for analysis. These responses were then converted into numerical values based on Fig. 14 to facilitate quantitative analysis. These numerical values represent the scores for each visualization method on the survey criteria.
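
The cleaning and conversion steps can be sketched as follows. This is an illustration only: the label-to-score mapping is hypothetical (the study's actual conversion is the one shown in Fig. 14), the function names are invented, and only two of the exclusion rules (incomplete answers and identical answers to every question) are automated here. Inconsistent or irrational answers would still need manual review.

```python
# Hypothetical labels and scores; the study's actual conversion is given in its Fig. 14.
LIKERT_SCORES = {
    "Strongly disagree": 1,
    "Disagree": 2,
    "Neutral": 3,
    "Agree": 4,
    "Strongly agree": 5,
}
NOT_APPLICABLE = "Not applicable"


def is_valid(response, questions):
    """Reject incomplete responses and responses with the same answer everywhere."""
    answers = [response.get(q) for q in questions]
    if any(a not in LIKERT_SCORES and a != NOT_APPLICABLE for a in answers):
        return False  # missing or unrecognised answer
    if len(set(answers)) == 1:
        return False  # identical answer to every question
    return True


def to_scores(response, questions):
    """Convert text answers to numbers; "Not applicable" becomes None."""
    return {q: LIKERT_SCORES.get(response[q]) for q in questions}


def clean_and_convert(responses, questions):
    return [to_scores(r, questions) for r in responses if is_valid(r, questions)]


if __name__ == "__main__":
    questions = ["q1", "q2", "q3"]
    raw = [
        {"q1": "Agree", "q2": "Neutral", "q3": "Not applicable"},
        {"q1": "Agree", "q2": "Agree", "q3": "Agree"},   # same answer everywhere
        {"q1": "Agree", "q2": "Disagree"},               # incomplete
    ]
    print(clean_and_convert(raw, questions))
```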

5.3.2 Analysis Method

We used IBM SPSS software, a commonly used statistical tool, to analyze the converted responses. Before analyzing the data, however, it was necessary to check whether the data met the assumptions of normality (i.e., a normal distribution of data) and homogeneity of variance (i.e., equal variance between groups) to determine the appropriate analysis method (parametric or nonparametric) for each survey criterion. Parametric analysis requires both assumptions to be met. To test for normality, the Kolmogorov-Smirnov test (Massey 1951) was conducted on each variable factor. The results in Table 2 indicated that the Sig. values were lower than 0.05 (the 5% significance level), leading to the rejection of the null hypothesis and the conclusion that the data was not normally distributed. Therefore, a homogeneity of variance test was not necessary, and a nonparametric analysis method was used to determine significant differences between visualization methods for each variable factor.

6 Results

The experiment’s results were analyzed using the Friedman test, a commonly used nonparametric statistical analysis method for assessing ordinal data such as ranks, ratios, and intervals of dependent variables from the same group of individuals or samples (Field and Hole 2002). This is also called the “method of ranks” (Sheldon et al. 1996). Unlike parametric tests, the Friedman test hypothesizes about the median instead of the mean, making it less sensitive to outliers and more appropriate for this experiment, which used the Likert scale for data collection. However, when three or more groups are compared, the Friedman test alone cannot identify which group differs significantly from the others, so a post hoc test is required. In this study, the Wilcoxon signed-rank test was used as the post hoc test, with a Bonferroni correction that adjusts the significance threshold for multiple pairwise comparisons (Benavoli et al. 2016). This correction reduces the risk of type I errors (falsely rejecting a true null hypothesis), although it does so at the cost of a higher risk of type II errors (failing to reject a false null hypothesis) (Sedgwick 2012). While the Bonferroni correction can therefore have adverse effects, it is commonly applied to smaller sample sizes (Armstrong 2014).
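
For readers who want to see how the Friedman statistic is formed, the sketch below computes it from scratch using within-participant average ranks and the usual tie correction. It is a textbook illustration rather than a reproduction of the SPSS procedure used in the study. The p-value helper is valid only for three related conditions (two degrees of freedom), where the chi-square survival function reduces to exp(-x/2). The function names and the toy ratings are assumptions.

```python
import math
from collections import Counter


def average_ranks(values):
    """Rank values from 1..k, giving tied values the mean of their ranks."""
    order = sorted(range(len(values)), key=lambda i: values[i])
    ranks = [0.0] * len(values)
    i = 0
    while i < len(order):
        j = i
        while j + 1 < len(order) and values[order[j + 1]] == values[order[i]]:
            j += 1
        mean_rank = (i + j) / 2 + 1
        for m in range(i, j + 1):
            ranks[order[m]] = mean_rank
        i = j + 1
    return ranks


def friedman_chi2(rows):
    """Friedman chi-square for rows = participants, columns = related conditions."""
    n, k = len(rows), len(rows[0])
    rank_sums = [0.0] * k
    ties = 0
    for row in rows:
        for j, r in enumerate(average_ranks(row)):
            rank_sums[j] += r
        ties += sum(t ** 3 - t for t in Counter(row).values())
    correction = 1 - ties / (n * k * (k * k - 1))
    if correction == 0:
        raise ValueError("every participant gave identical ratings to all conditions")
    q = 12 / (n * k * (k + 1)) * sum(r * r for r in rank_sums) - 3 * n * (k + 1)
    return q / correction


def p_value_three_conditions(chi2):
    """Chi-square survival function for df = 2 (three conditions only)."""
    return math.exp(-chi2 / 2)


if __name__ == "__main__":
    # Toy ratings for three methods from four participants (not study data).
    toy = [[1, 2, 3], [1, 2, 3], [1, 2, 3], [2, 2, 3]]
    chi2 = friedman_chi2(toy)
    print(chi2, p_value_three_conditions(chi2))
```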

6.1 Scenario One

The chi-square and asymptotic significance values (Asymp.sig) from the Friedman tests for the three visualization methods in scenario one are presented on the left side of Table 3. The middle section of the table displays the pairwise Z-score and asymptotic significance values obtained from the Wilcoxon signed-rank test for each pair of visualization methods. The right side of the table indicates significant differences between each visualization method with respect to the survey criteria, using the Bonferroni-corrected significance threshold of 0.017 (0.05 divided by the three pairwise comparisons), and identifies which method has a higher rank in each pair.
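
The corrected threshold follows from dividing the 0.05 significance level by the number of pairwise comparisons among three methods, which gives roughly 0.017. The short sketch below shows that arithmetic and how pairwise p-values would be judged against it. The function names and the p-values are made up for illustration and are not taken from Table 3.

```python
def bonferroni_threshold(alpha, n_comparisons):
    """Per-comparison significance threshold under the Bonferroni correction."""
    return alpha / n_comparisons


def significant_pairs(pairwise_p, alpha=0.05):
    """Flag each pair whose p-value falls below the corrected threshold."""
    threshold = bonferroni_threshold(alpha, len(pairwise_p))
    return {pair: p < threshold for pair, p in pairwise_p.items()}


if __name__ == "__main__":
    print(round(bonferroni_threshold(0.05, 3), 3))
    # Hypothetical p-values for three pairs of methods A, B, and C.
    example = {("A", "B"): 0.004, ("A", "C"): 0.30, ("B", "C"): 0.02}
    print(significant_pairs(example))
```

Note that a p-value of 0.02 would count as significant at the uncorrected 0.05 level but not after the correction.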

Based on Table 3, all three visualization methods in scenario one performed similarly across the survey criteria, except for the “real-world occlusion” criterion, where (GR) outperformed (G) and (GE) (bold Asymp.sig values). This is because (GR) only visualizes virtual content (utilities’ shadows) up to a certain range (5 m) and provides better visibility of the real world beyond that range. As a result, participants found it useful for reducing “real-world occlusion.” The depth perception was only evaluated for (G) and (GR), as (GE) visualizes underground utilities lifted above the ground, making depth perception irrelevant. Therefore, the Friedman test was not applied for this comparison, and the significance threshold for rejecting the null hypothesis was set to 0.05. A boxplot in Fig. 15 represents the responses for all three visualization methods in scenario one across the five survey criteria.

6.2 Scenario Two

The responses obtained for the visualization methods in scenario two differed significantly from those in scenario one. Table 4 indicates that “Scene complexity”, “Parallax effect”, and “Effectiveness” had asymptotic significance values that were less than 0.05 (bold Asymp.sig values). The Wilcoxon signed-rank test showed that (XC) was rated higher than (X) and (XD) in terms of “Scene complexity” and “Parallax effect,” indicating that participants found it helpful to clip utilities within the virtual pit to reduce scene complexity and parallax effect. The three visualization methods performed similarly in terms of “Real-world occlusion” and “Depth perception” as their asymptotic significance values from the Friedman test were above 0.05. In terms of overall “Effectiveness,” (XD) was rated higher than (X) according to the Wilcoxon test, indicating that the ability to adjust the depth of the virtual pit is more effective. However, after applying the Bonferroni correction, which adjusted the significance threshold to 0.017, it was concluded that there were no significant differences between (XC) and (X). Figure 16 shows a boxplot of all the responses obtained for the visualization methods against all five survey criteria.

6.3 Scenarios One and Two

Two additional questions were posed to the participants to assess the “depth perception” and “parallax effect” for two pairs of visualizations, (X, G) and (X, GE), drawn from both scenarios. The results of the Wilcoxon test in Table 5 indicate that (X) is better in terms of depth perception than (G), while there is no significant difference in the confusion caused by the parallax effect between (X) and (GE). As each comparison involves only two visualization methods, the Bonferroni correction is not applied, and the significance threshold for this test is 0.05. Figure 17 shows the boxplot of the responses obtained for this pairwise evaluation. The possible use cases for scenarios one (G) and two (X) are presented in Fig. 18, demonstrating that the visualization methods in scenario two are preferable for excavation purposes. In contrast, there is no clear winner between scenarios one and two for general visualization purposes, such as preliminary inspection for planning works. Therefore, the choice of methods for general visualization purposes is subjective and dependent on the user’s individual preference.

7 Discussion

The results of the experiment highlight that users recognize the significance and impact of the parallax effect, scene complexity, and real-world occlusion in visualizing underground utilities using MR. Unlike many prior studies that concentrated solely on improving depth perception (Becher et al. 2021; Eren and Balcisoy 2017; Heinrich et al. 2019; Kalkofen et al. 2009; Ping et al. 2020), this research emphasizes the importance of considering these additional factors in the design and development of new methods. Furthermore, the methods employed in this study provide more innovative ways of interacting with virtual elements compared to earlier techniques, which limited users to holding handheld devices and interacting solely on a display. In addition, distinct methods exhibit the potential for application in various use cases. While this study focused on excavation scenarios and general visualization, the key insight from the experiment is that each visualization method holds the potential to convey distinct information across diverse use cases.

The statistical analysis of scenario one indicates that only one survey criterion had significant differences among the three visualization methods. In contrast, the results of scenario two show that there were significant differences in three survey criteria among the visualization methods. Further discussions based on participant feedback and prior experience with MR technology can shed light on these results.

7.1 Scenario One

The survey results indicate that there were no significant differences in “scene complexity” between the three visualization methods. Many participants reported that the virtual content was presented in a less cluttered manner, making it easier to interpret. For instance, both (G) and (GR) depict utility shadows using narrow and parallel lines. However, in a real-life scenario where utilities cross and overlap, (GR) may be more effective as it limits visualization to a 5 m range, reducing complexity. (GE) simplifies the scene by hiding all but the selected utility, making it comparable to the other two methods. It may perform well when numerous overlapping utilities require visualization because it allows users to adjust the depth range and hide unimportant utilities.

(GE) was mainly created to study the impact of parallax on underground utilities by elevating them above ground. However, it did not receive higher ratings than the other two methods. Participant feedback suggests that the visual cue provided by the connecting lines between the utilities and shadows (G, GR) and the actual ground (GE) helps reduce confusion caused by the “parallax effect” when users move. Only a few participants reported confusion due to the parallax effect in (G, GR), and they preferred (GE) as a resolution.

Most participants expressed concern about real-world occlusion, which is a safety issue. Therefore, (GR) received higher ratings than (G, GE) as it provides the most real-world visibility. Participants provided negative feedback for (GE) in terms of real-world occlusion as the size of the utilities increases when raised above ground, causing more occlusion than (GR). However, this can be controlled by not lifting the utilities too high above the ground and keeping them lower unless a close look at the selected utility is required.

Because (GE) raises the utilities above ground level and does not visualize them below ground, it was not evaluated for “depth perception.” Although participants rated (G) and (GR) similarly in terms of depth perception, it is important to note that the videos were recorded indoors on a plain concrete platform without any above-ground features such as trees or curbs. Therefore, the results may vary when real-world objects are present, as the virtual utilities may appear over the objects, leading to poor depth perception. However, (GE) behaves differently as it uses the real-world construction. When the utility is raised above the ground, it allows for the occlusion of the virtual utility by the closer real object, providing more convenient visualization.

The participants’ feedback did not show any significant difference in the “effectiveness” of the three visualization methods, as each method had its advantages and disadvantages based on the user’s specific needs. For example, some participants rated (G) higher as it provided a comprehensive view of all utilities at the site, while others preferred (GR) due to its less cluttered visualization. However, some participants also pointed out that (GR) had limitations in terms of range and could not show the location of utilities beyond a specific range.

Similarly, (GE) received higher ratings from participants who valued more control over the visualization and the ability to bring complex areas with many utility fittings up to eye level, including vertical bends and pipe joints. However, (G) and (GR) visualized utilities underground, which may not be the most suitable for this purpose.

Participants also raised safety concerns, particularly for (GE), as it could block the real world’s view depending on the number of utilities visualized above-ground, requiring the user to stop walking until selecting a utility. Despite some confusion with (GE), participants acknowledged its potential in designing and visualizing indoor subsurface utilities such as wires and pipes within walls and HVAC systems.

7.2 Scenario Two

The ratings for the visualization methods in scenario two differed significantly, unlike in scenario one. Participants rated (XC) higher than the other two methods regarding “scene complexity” within the virtual pit, as it clips the underground utilities along the pit walls, except for a small portion, resulting in less clutter. (XC) received better ratings due to its simplicity. Although (XD) received positive feedback, it was not significant enough to rate higher than (X) after the p-value was adjusted (0.017). However, (XD) offers flexibility in reducing the scene complexity more than (X) by enabling the user to adjust the virtual pit’s depth range to their liking.

Regarding the “parallax effect” within the virtual pit, (XC) again received higher ratings than the other two methods because the utilities’ extensions shift only minimally from the pit walls. (XD) and (X) still cause some confusion due to the parallax effect on the utilities within the pit. However, (XD) was rated better than (X) because it allows utilities that are buried deeper, outside the depth range of interest, to be hidden, resulting in less confusion.

The ratings of the three visualization methods in terms of “real-world occlusion” were not significantly different, as they all use the same virtual pit size and block a significant portion of the real world behind it. Thus, all methods were evaluated similarly. Some participants suggested increasing the transparency of the virtual pit, but this may worsen scene complexity, because the real world behind the pit would then be partially visible through it.

In contrast, participants were satisfied with the “depth perception” of the utilities within the pit for all three methods, and the ratings were almost the same and higher than the ratings in scenario one. The participants’ feedback supports this observation by highlighting that the contour lines within the virtual pit and the “connecting lines” along the pit’s wall from the shadows to the corresponding utilities enhance the “depth perception” of the utilities.

According to the analysis, (XD) was rated higher than (X) in terms of overall “effectiveness,” while (XC) did not show a significant difference compared to (X) based on the Wilcoxon signed-rank test with the adjusted p-value (0.017). However, it was believed that both (XD) and (XC) would perform better with the addition of the “tracking” function. Participants noted that tracking could increase safety awareness, whereas in (X), users must manually move the virtual pit, which could cause distractions from the real world. Some participants were not satisfied with the jittering effect caused by the tracked pits in the video, although this can be mitigated by reducing the virtual pit’s tracking refresh rate and color intensity during user movement.

Although (XC) performed better than (XD) in terms of “scene complexity” and “parallax effect,” and both methods received similar ratings for “depth perception” and “real-world occlusion,” there was no significant difference in overall “effectiveness” between the two methods. This may be because (XD) provides complete information on all utilities within a specific depth range, including tee-junctions and other joints, while (XC) reduces the amount of virtual content within the virtual pit and only displays one utility at a time for complete visualization. This feature allows users to see the entire utility regardless of its depth without being blocked by other utilities buried above it, making it more suitable for areas with multiple utilities at different depths. Therefore, there is a trade-off between “scene complexity” and visualizing more information.

7.3 Scenarios One and Two

The comparison between (G) and (X) demonstrates that the methods in scenario two perform significantly better than those in scenario one in terms of “depth perception.” As a result, the majority of participants preferred (X) over (G) for excavation purposes, where clear visualization of all underground utilities at a specific location is critical. Although (X) controls the drift of utilities inside the virtual pit through pit size, while (GE) depends on the user’s viewpoint, there was no significant difference between them in terms of confusion caused by the “parallax effect.” However, when deciding which method is more suitable for “general visualization purposes,” the choice depends on several factors, including the user’s profession, personal preferences, and the job’s nature.

7.4 Limitations

During the experiment, some participants noted that their perceptions of the visualization could change with continuous use, as the ratings were based on their initial impressions. Additionally, since participants came from various professions in the spatial industry, their perceptions of different visualizations varied slightly. For example, the way a utility locator needs to view the underground utilities may differ from that of a GIS officer who mainly works in the office.

Moreover, as a part of a pre-screening process, 15 responses were excluded from the analysis for various reasons. These reasons include incomplete answers, identical responses to every question (which suggests insufficient time to view the videos and read the content), inconsistent or contradictory responses, and irrational answers.

Furthermore, it should be noted that these methods may be used in an outdoor environment, but estimating the position and orientation of the device in an open environment is a challenge. Thus, the experiment was conducted indoors to minimize the localization problem. Additionally, we assumed that all participants had a similar level of familiarity with MR technology, and their responses were not weighted based on their experience.

8 Conclusion and Future Work

In this study, six new MR visualization methods were introduced and categorized into two scenarios: the first scenario includes the “General view” (G), “General + Range view” (GR), and “General + Elevator view” (GE), which provide overall views, while the second scenario includes the “X-Ray box view” (X), “X-Ray Box + Depthslider view” (XD), and “X-Ray box + Clipping view” (XC), which provide specific views. A user survey involving industry professionals was conducted to assess these methods based on five criteria: “scene complexity”, “parallax effect”, “real-world occlusion”, “depth perception”, and “overall effectiveness”. Additionally, pairs from both scenarios were evaluated in terms of “depth perception” and “parallax effect”.

The analysis of the results indicates that in scenario one, (GR) received a higher rating for “real-world occlusion” compared to (G) and (GE). Participant feedback affirmed that all three methods performed equally well in their own way. In scenario two, there were significant differences, with (XC) receiving the highest ratings for “scene complexity” and “parallax effect” compared to the other two methods. Additionally, (XD) outperformed (X) in terms of overall “effectiveness.” A comparison of visualization methods in scenarios one and two shows that (X) provides better depth perception than (G). Moreover, (X) received significantly higher ratings than (G) for excavation purposes.

The feedback provided by the participants affirms that these methods can be effectively employed in various situations. Furthermore, there were suggestions to enhance these methods, including:

- Combining (GE) with (GR) or increasing utility transparency while walking, to decrease real-world occlusion.
- Adding a function similar to (XD) to alter the depth of (XC) within the virtual pit to further simplify it.
- Scaling the virtual pit in and out utilizing two HoloLens raycasts at the same time to alter its size (not demonstrated in the videos).
- Providing more MR sliders to modify the transparency level and distance range of (GR).
- Using standard color codes (AS 5488-2013) for utilities.
- Using dashed lines for shadows to minimize scene complexity.
- Fixing the user-tracked attribute window in the field of view’s corner to prevent collision with other virtual content.
- Including arrows in (G)/(GR) pointing down in the “connecting lines” and pointing up towards the underground utilities in (GE).
- Adding attribute filters such as size, materials, type, and depth for more advanced visualization suitable for complex indoor and outdoor areas with many utilities.

Moving forward, efforts should be directed towards implementing these visualization techniques, along with the suggested enhancements, in real-world settings using actual data. This could involve incorporating GNSS receivers with HoloLens, utilizing data captured by utility-locating devices, and merging data from various sources, such as pre-captured point clouds, as-built surveys, and available pdf plans, to generate virtual content.

Data Availability Statement

The datasets generated during and/or analysed during the current study (responses to the survey questionnaire) are available from the corresponding author on reasonable request.

References

Akin M, Uzun Y (2022) Virtual and augmented reality concepts. Curr Stud Artif Intell Virtual Real Augment Real 57:

Arifiani S, Manurung M, Ginardi RVH (2021) The use of location-based augmented reality as information media in the digital map application of Jakabaring sport city. Penelitian Ilmu Komputer Sistem Embed Log 9(2):103–118

Armstrong RA (2014) When to use the Bonferroni correction. Ophthalmic Physiologic Optic 34(5):502–508

Baek J‑M, Hong I‑S (2013) The design of an automatically generated system for cross sections of underground utilities using augmented reality. Int J Smart Home 7(6):255–264. https://doi.org/10.14257/ijsh.2013.7.6.25

Becher C, Bottecchia S, Desbarats P (2021) Projection grid cues: an efficient way to perceive the depths of underground objects in augmented reality. Springer, Cham

Benavoli A, Corani G, Mangili F (2016) Should we really use post-hoc tests based on mean-ranks? J Mach Learn Res 17(1):152–161

Bintang MA, Harwahyu R, Sari RF (2020) SMARIoT: augmented reality for monitoring system of internet of things using easyAR. Paper presented at the 2020 4th International Conference on Informatics and Computational Sciences (ICICoS).

Canon (2020) Mreal display MD-20. https://global.canon/en/technology/mr2019.html

Chen J, Granier X, Lin N, Peng Q (2010) On-line visualization of underground structures using context features. Proceedings of the 17th ACM Symposium on Virtual Reality Software and Technology, Hong Kong, pp 167–170 https://doi.org/10.1145/1889863.1889898

Cheng J, Chen K, Chen W (2017) Comparison of marker-based AR and marker-less AR: a case study on indoor decoration system. Paper presented at the Lean and Computing in Construction Congress (LC3): Proceedings of the Joint Conference on Computing in Construction (JC3).

Cote S, Mercier A (2018) Augmentation of road surfaces with subsurface utility model projections. Paper presented at the 2018 IEEE Conference on Virtual Reality and 3D User Interfaces (VR).

Eren MT, Balcisoy S (2017) Evaluation of X‑ray visualization techniques for vertical depth judgments in underground exploration. Vis Comput 34(3):405–416. https://doi.org/10.1007/s00371-016-1346-5

Feiner SK, Seligmann D (1992) Cutaways and ghosting: satisfying visibility constraints in dynamic 3d illustrations. Vis Comput 8(5):292–302. https://doi.org/10.1007/BF01897116

Fenais AS, Ariaratnam ST, Ayer SK, Smilovsky N (2020) A review of augmented reality applied to underground construction. J Inf Technol Constr 25:308–324. https://doi.org/10.36680/j.itcon.2020.018

Field A, Hole G (2002) How to design and report experiments. SAGE

Hansen LH, Wyke SS, Kjems E (2020) Combining reality capture and augmented reality to visualise subsurface utilities in the field. Paper presented at the ISARC. Proceedings of the International Symposium on Automation and Robotics in Construction.

Hansen LH, Fleck P, Stranner M, Schmalstieg D, Arth C (2021) Augmented reality for subsurface utility engineering, revisited. IEEE Trans Visual Comput Graphics 27(11):4119–4128

Heinrich F, Bornemann K, Lawonn K, Hansen C (2019) Depth perception in Projective augmented reality: an evaluation of advanced visualization techniques. Paper presented at the 25th ACM Symposium on Virtual Reality Software and Technology.

Kalkofen D, Mendez E, Schmalstieg D (2009) Comprehensible visualization for augmented reality. IEEE Trans Vis Comput Graph 15(2):193–204. https://doi.org/10.1109/TVCG.2008.96

Keil J, Korte A, Ratmer A, Edler D, Dickmann F (2020) Augmented reality (AR) and spatial cognition: effects of holographic grids on distance estimation and location memory in a 3D indoor scenario. J Photogramm Remote Sens Geoinform Sci 88(2):165–172. https://doi.org/10.1007/s41064-020-00104-1

Kopsida M, Brilakis I (2020) Real-time volume-to-plane comparison for mixed reality-based progress monitoring. J Comput Civ Eng 34(4):4020016. https://doi.org/10.1061/(ASCE)CP.1943-5487.0000896

Massey FJ (1951) The Kolmogorov-Smirnov test for goodness of fit. J Am Stat Assoc 46(253):68–78. https://doi.org/10.1080/01621459.1951.10500769

Mazzanti D (2022) AR image marker. https://www.dariomazzanti.com/uncategorized/ar-image-target-generation-vuforia/

Muthalif MZA, Shojaei D, Khoshelham K (2022a) Resolving perceptual challenges of visualizing underground utilities in mixed reality. Int Arch Photogramm Remote Sens Spatial Inf Sci. https://doi.org/10.5194/isprs-archives-XLVIII-4-W4-2022-101-2022

Muthalif MZA, Shojaei D, Khoshelham K (2022b) A review of augmented reality visualization methods for subsurface utilities. Adv Eng Inform 51:101498

Nnaji C, Karakhan AA (2020) Technologies for safety and health management in construction: current use, implementation benefits and limitations, and adoption barriers. J Build Eng 29:101212. https://doi.org/10.1016/j.jobe.2020.101212

Ortega S, Wendel J, Santana J, Murshed S, Boates I, Trujillo A, Suárez J (2019) Making the invisible visible—strategies for visualizing underground infrastructures in Immersive environments. Int J Geoinform. https://doi.org/10.3390/ijgi8030152

Oufqir Z, El Abderrahmani A, Satori K (2020) ARKit and ARCore in serve to augmented reality. Paper presented at the 2020 International Conference on Intelligent Systems and Computer Vision (ISCV).

Ping J, Thomas BH, Baumeister J, Guo J, Weng D, Liu Y (2020) Effects of shading model and opacity on depth perception in optical see-through augmented reality. J Soc Info Display 28(11):892–904. https://doi.org/10.1002/jsid.947

Piroozfar P, Judd A, Boseley S, Essa A, Farr ERP (2021) Augmented reality (AR) for utility infrastructure: an experiential development workflow. Springer, Cham

Rolland J, Holloway R, Fuchs H (1994) Comparison of optical and video see-through, head-mounted displays. Proceedings of SPIE—The International Society for Optical Engineering. https://doi.org/10.1117/12.197322

Sarosa M, Chalim A, Suhari S, Sari Z, Hakim H (2019) Developing augmented reality based application for character education using unity with Vuforia SDK. Paper presented at the Journal of Physics: Conference Series.

Schall G, Mendez E, Kruijff E, Veas E, Junghanns S, Reitinger B, Schmalstieg D (2009) Handheld augmented reality for underground infrastructure visualization. Pers Ubiquit Comput 13(4):281–291. https://doi.org/10.1007/s00779-008-0204-5

Schall G, Schmalstieg D, Junghanns S (2010) VIDENTE-3D visualization of underground infrastructure using handheld augmented reality.

Sedgwick P (2012) Multiple significance tests: the Bonferroni correction. BMJ. https://doi.org/10.1136/bmj.e509

Sheldon MR, Fillyaw MJ, Thompson WD (1996) The use and interpretation of the Friedman test in the analysis of ordinal-scale data in repeated measures designs. Physiother Res Int 1(4):221–228

Sidani A, Matoseiro Dinis F, Duarte J, Sanhudo L, Calvetti D, Santos Baptista J, Soeiro A (2021) Recent tools and techniques of BIM-based augmented reality: a systematic review. J Build Eng 42:102500. https://doi.org/10.1016/j.jobe.2021.102500

Stylianidis E, Valari E, Pagani A, Carrillo I, Kounoudes A, Michail K, Smagas K (2020) Augmented reality geovisualisation for underground utilities. J Photogramm Remote Sens Geoinform Sci 88(2):173–185. https://doi.org/10.1007/s41064-020-00108-x

Tourlomousis F, Chang RC (2017) Dimensional metrology of cell-matrix interactions in 3D microscale fibrous substrates. Procedia CIRP 65:32–37. https://doi.org/10.1016/j.procir.2017.04.009

Varjo (2021) XR‑3 and VR‑3 user guide. https://varjo.com/products/xr-3/

Zollmann S, Schall G, Junghanns S, Reitmayr G (2012) Comprehensible and interactive visualizations of GIS data in augmented reality. Springer, Berlin, Heidelberg

Zollmann S, Grasset R, Reitmayr G, Langlotz T (2014) Image-based X‑ray visualization techniques for spatial understanding in outdoor augmented reality. Paper presented at the Proceedings of the 26th Australian Computer-Human Interaction Conference on Designing Futures: the Future of Design.

Acknowledgements

This research work is funded by the Research Training Program Scholarship provided by the Australian Commonwealth Government and the University of Melbourne.

Funding

Open Access funding enabled and organized by CAUL and its Member Institutions.

Appendix

1.1 Supplemental Materials

The GitHub repository for the HoloLens app is accessible via the following link: https://github.com/Mabdulmuthal/MRUtilities.

The videos of the MR visualization methods (G), (GR), (GE), (X), (XD), and (XC) are available online.

Rights and permissions

Open Access This article is licensed under a Creative Commons Attribution 4.0 International License, which permits use, sharing, adaptation, distribution and reproduction in any medium or format, as long as you give appropriate credit to the original author(s) and the source, provide a link to the Creative Commons licence, and indicate if changes were made. The images or other third party material in this article are included in the article’s Creative Commons licence, unless indicated otherwise in a credit line to the material. If material is not included in the article’s Creative Commons licence and your intended use is not permitted by statutory regulation or exceeds the permitted use, you will need to obtain permission directly from the copyright holder. To view a copy of this licence, visit http://creativecommons.org/licenses/by/4.0/.

About this article

Cite this article

Abdul Muthalif, M.Z., Shojaei, D. & Khoshelham, K. Interactive Mixed Reality Methods for Visualization of Underground Utilities. PFG (2024). https://doi.org/10.1007/s41064-024-00295-x

DOI: https://doi.org/10.1007/s41064-024-00295-x