Abstract

The proponents of embodied cognition often try to present their research program as the next step in the evolution of standard cognitive science. The domain of standard cognitive science is fairly clearly circumscribed (perception, memory, attention, language, problem solving, learning). Its ontological commitments, that is, its commitments to various theoretical entities, are overt: cognition involves algorithmic processes upon symbolic representations. As a research program, embodied cognition exhibits much greater latitude in subject matter, ontological commitment, and methodology than does standard cognitive science. To explain aspects of human cognition, proponents of embodied cognition stress the importance of the body's dynamic interaction with its environment. The central disagreement between the two approaches concerns the role of representation. No discussion of the contrast between embodied cognition and standard cognitive science is complete without Gibson’s ecological theory of perception and the connectionist account of cognition. I will briefly contrast these important theories with the computational view of cognition, highlighting the debate over the role of representation. Embodied cognition has incorporated rather extensively a variety of insights emerging from research in both ecological psychology and connectionism. My strategy for contrasting embodied cognition with standard cognitive science is to concentrate on several themes that appear prominent in the body of work often seen as illustrative of embodied cognition. This strategy has the advantage of postponing hard questions about “the” subject matter, ontological commitments, and methods of embodied cognition until more is understood about the particular interests and goals that embodied cognition theorists often pursue. 
This approach might show embodied cognition to be poorly unified, suggesting that the embodied cognition label should be abandoned in favor of several labels that more accurately reflect the distinct projects that have been clumped together under a single title. Alternatively, it might show that there are, in fact, overarching commitments that bring tighter unity to the various bodies of work within embodied cognition that seem only loosely related thematically. The contrast between the two approaches is drawn not only on the basis of a priori argument; major experiments are also discussed to show the weight of the assumptions underlying each approach.

Introduction

The proponents of embodied cognition often try to present their research program as the next step in the evolution of standard cognitive science. The domain of standard cognitive science is clearly circumscribed (perception, memory, attention, language, problem solving, learning). Its ontological commitments, that is, its commitments to various theoretical entities, are overt: cognition involves algorithmic processes upon symbolic representations. Furthermore, cognitive scientists employ standardized methodological practices for revealing features of these algorithmic processes and representations, such as reaction time experiments, recall tasks, and dishabituation paradigms. Moreover, these ontological commitments, methodological practices, and subject matters serve to constrain each other, helping to clarify even more precisely the nature of cognitive science. As a research program, embodied cognition exhibits much greater latitude in subject matter, ontological commitment, and methodology than does standard cognitive science. The domain of embodied cognition certainly overlaps that of cognitive science, but seems also to include phenomena that might hold little interest for a cognitive scientist [e.g., the development of stepping behavior in infants (Thelen and Smith 1994)]. Along with this greater diversity in subject matter come more roughly defined theoretical commitments and fewer uniform methodological practices. In sum, the ties between subject matter, ontological commitments, and methodology are, within embodied cognition, longer and looser than they are within standard cognitive science. Yet this state of affairs is no reason to dismiss or disparage embodied cognition. Today’s research program may be tomorrow’s reigning theory. However, embodied cognition’s status as a research program does invite special caution when considering claims that it might replace or supersede standard cognitive science. 
The first section introduces exemplary experiments that shaped the core assumptions of standard cognitive science.

No discussion of the contrast between embodied cognition and standard cognitive science is complete without Gibson’s ecological theory of perception and the connectionist account of cognition. I will briefly contrast these important theories with the computational view of cognition, highlighting the debate over the role of representation. Embodied cognition has incorporated rather extensively a variety of insights emerging from research in both ecological psychology and connectionism. Gibson’s work, as well as some basic principles of connectionism, has paid dividends directly toward the development of embodied cognition. The conceptual tools that Gibson and connectionist research bring to the study of cognition plow a ground of new possibilities in which an embodied approach to cognition might take root. We need no longer equate psychological processes with symbolic computation within the confines of the brain. The recognition that interactions between the body and the world enhance the quality of stimuli available for psychological processing, and that processing need not take place over a domain of neurally realized symbolic representations, invites a rethinking of the body’s place in psychology.

In the final section, I will present a bird’s-eye view of the claims and arguments of both approaches, highlighting the contrast from the perspective of the role assumed by representation. My strategy for contrasting embodied cognition with standard cognitive science is to concentrate on several themes that appear prominent in the body of work often seen as illustrative of embodied cognition. This strategy has the advantage of postponing hard questions about “the” subject matter, ontological commitments, and methods of embodied cognition until more is understood about the particular interests and goals that embodied cognition theorists often pursue. This approach might show embodied cognition to be poorly unified, suggesting that the embodied cognition label should be abandoned in favor of several labels that more accurately reflect the distinct projects that have (misleadingly) been clumped together under a single title. Alternatively, it might show that there are, in fact, overarching commitments that bring tighter unity to the various bodies of work within embodied cognition that seem only loosely related thematically.

Core Assumptions and Exemplars of Standard Cognitive Science

The rise of standard cognitive science coincided with the rise of the digital computer in the mid-twentieth century. Cognitive scientists modeled their theories on the functioning of the computer, and this had a large impact on the claims and assumptions of standard cognitive science (Shapiro 2007). The most important of these assumptions are the following.

1. The stimuli for psychological processes are impoverished. They are “short” on the information that is necessary for an organism to interact successfully with its environment.

2. Psychological processes must make inferences, or educated guesses, about the world on the basis of incomplete information. These inferences might deploy assumptions about the environment in order to extract correct conclusions from the data.

3. The inferential processes characteristic of psychology are best conceived as involving algorithmic operations over a domain of symbolic representations. Cognition proceeds by encoding stimuli into symbolic form, manipulating these symbols in accord with the rules of a mental algorithm, and then producing a new string of symbols that represents the conclusion of the algorithmic process.

Consider now some exemplary experimental setups that led to the development of these core assumptions of standard cognitive science: (1) Newell and Simon’s General Problem Solver; (2) Sternberg’s work on memory recall; and (3) computational analyses of perception. Despite the diverging explanatory targets of these three enterprises, they are remarkably similar in how they conceive of the process of cognition, and in their commitments to how cognition should be studied.

Newell and Simon’s General Problem Solver

In the early 1960s, Newell and Simon created a computer program called the General Problem Solver (GPS), the purpose of which was not only to solve logic problems, but to solve them in the same way that a human being would (1961: 1976). That is, the program was intended to replicate the internal thought processes that a human being undertakes when solving a logic problem. As such, as Newell and Simon claim, GPS is a theory of human thinking (1961: 2016). Examining some of the details of GPS thus gives us a picture of what the mind looks like to a cognitive scientist.

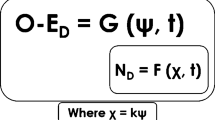

Because the purpose of GPS was to replicate the stages involved in human problem-solving abilities, its assessment required that the problem solving procedure it used be tested against the problem solving procedures that human beings use. Thus, Newell and Simon asked human subjects to “think out loud” while solving logic problems. For instance, a subject was shown a logical expression, such as

and was asked to transform this expression into

The subject was also provided with various transformational rules of the sort that would be familiar to anyone with a background in simple sentential logic. No interpretation was offered for the logical expressions. Subjects merely had to identify the rules that would transform one syntactical object into another and then apply them. Obviously, transformations of expressions like (1) into others like (2) are a simple matter for any suitably programmed general purpose computer. As Newell and Simon describe, a computer:

is a symbol-manipulating device, and the symbols it manipulates may represent numbers, words, or even nonnumerical, nonverbal patterns. The computer has quite general capacities for reading symbols or patterns presented by input devices, storing symbols in memory, copying symbols from one memory location to another, erasing symbols, comparing symbols for identity, detecting specific differences between their patterns, and behaving in a manner conditional on the results of its processes. (1961: 2012)

The hypothesis that motivates GPS as a theory of human thinking is that thought processes are just like those processes that take place within a computer:

We can postulate that the processes going on inside the subject’s skin – involving sensory organs, neural tissue, and muscular movements controlled by the neural signals – are also symbol-manipulating processes; that is, patterns in various encodings can be detected, recorded, transmitted, stored, copied, and so on, by the mechanisms of this system. (1961: 2012)

Finally, given that human thought processes are computational, i.e., involve the manipulation of symbols, GPS provides a model of human cognition just in case the kinds of computations it uses to solve a problem are similar to the computations that take place in a human brain, where these latter computations become visible through Newell and Simon’s “thinking out loud” experimental protocol.
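To make the idea of rule-governed symbol manipulation concrete, here is a minimal Python sketch. It assumes a toy encoding of propositional expressions as nested tuples and only three kinds of transformation rule (commutation, double-negation elimination, and De Morgan’s laws). It is not GPS: GPS chose its operators by means-ends analysis, whereas this sketch uses plain breadth-first search over rule applications, and the example expressions are hypothetical rather than the ones Newell and Simon gave their subjects.

```python
from collections import deque

# Hypothetical encoding: ("var", name), ("not", e), ("and", a, b), ("or", a, b).
# The rules operate on form alone; no interpretation is assigned to the symbols.


def rewrites_at_root(e):
    """Yield (rule_name, new_expression) for each rule applicable at the top of e."""
    op = e[0]
    if op in ("and", "or"):
        yield "commute " + op, (op, e[2], e[1])
    if op == "not":
        inner = e[1]
        if inner[0] == "not":
            yield "double negation", inner[1]
        elif inner[0] == "and":
            yield "De Morgan", ("or", ("not", inner[1]), ("not", inner[2]))
        elif inner[0] == "or":
            yield "De Morgan", ("and", ("not", inner[1]), ("not", inner[2]))
    if op in ("and", "or") and e[1][0] == "not" and e[2][0] == "not":
        dual = "or" if op == "and" else "and"
        yield "De Morgan", ("not", (dual, e[1][1], e[2][1]))


def rewrites(e):
    """Yield every expression reachable by applying one rule anywhere inside e."""
    yield from rewrites_at_root(e)
    if e[0] == "not":
        for name, sub in rewrites(e[1]):
            yield name, ("not", sub)
    elif e[0] in ("and", "or"):
        for name, sub in rewrites(e[1]):
            yield name, (e[0], sub, e[2])
        for name, sub in rewrites(e[2]):
            yield name, (e[0], e[1], sub)


def solve(start, goal, max_depth=6):
    """Breadth-first search for a shortest sequence of rule applications."""
    frontier = deque([(start, [])])
    seen = {start}
    while frontier:
        expr, path = frontier.popleft()
        if expr == goal:
            return path
        if len(path) >= max_depth:
            continue
        for name, nxt in rewrites(expr):
            if nxt not in seen:
                seen.add(nxt)
                frontier.append((nxt, path + [name]))
    return None


P, Q = ("var", "P"), ("var", "Q")
start = ("not", ("and", P, Q))         # ~(P . Q)
goal = ("or", ("not", Q), ("not", P))  # ~Q v ~P
print(solve(start, goal))  # ['De Morgan', 'commute or']
```

The returned list of rule names plays the role of a “solution trace”, the kind of record that Newell and Simon compared against subjects’ think-aloud protocols.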

Sternberg’s Analysis of Memory Scanning

Once one conceives of mental processes as computational—as consisting of operations over symbolic representations—certain questions about how the mind works, and particular approaches to its investigation, become quite natural. This is not to say that similar questions or methods did not exist prior to the computational turn, but surely the rise of the computer in the 1950s and 1960s brought with it a fresh vocabulary, and an assemblage of computationally inspired ideas that influenced and encouraged a way of doing psychology. Sternberg’s work on memory scanning in the late 1960s is a splendid illustration of this point.

Consider the following task. A subject is asked to memorize a list of numerals, where this list might contain from one to six members. The subject is then exposed to a single numeral and asked whether the numeral appears in the list that she has memorized. If the subject judges that the numeral is among those on the list, she pulls a lever to indicate a positive response. If the subject does not remember the numeral as being among those on the list, she pulls a different lever to indicate a negative response.

Although this task is limited to the recall of numerals, it is plausible that the same processes of memory retrieval are operative in less artificial domains. For instance, perhaps your routine is to jot down a list of items to purchase before going to your grocery store, only to find, once you arrive there, that you have left the list on the kitchen table. Fortunately, there were only six items on the list and you are pretty sure you remember them all. As you cruise the aisles, you pick up the products whose names you wrote on the list. To be sure, there are differences between this task and the one Sternberg designed. Rather than being exposed to a test stimulus and then judging whether it is on the list, you have to actively seek the items on the list. Nevertheless, the task does require the retrieval of information, and its basic structure seems quite similar. For instance, you may even end up standing in front of the corn meal in the baking aisle and asking yourself “Did I need corn meal?” In answering this question, you might find yourself mentally scanning your shopping list.

But now suppose you wanted to write a computer program for performing Sternberg’s retrieval task. There are two search algorithms to consider. The first, exhaustive search, requires that you compare the test stimulus to each item on the memorized list before rendering the judgment that the stimulus is or is not a member of the list. The second search strategy, self-terminating search, requires that the test stimulus be compared with each item on the memorized list until a positive match is made, at which point the search terminates.
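The two search strategies can be sketched in a few lines of Python. This is a minimal illustration, not Sternberg’s own formalism; the function names are hypothetical, and each function returns whether a match was found together with the number of comparisons made, since comparison counts are what the reaction-time argument turns on.

```python
def exhaustive_search(probe, memory_set):
    """Compare the probe with every item, even after a match; return (found, comparisons)."""
    found = False
    comparisons = 0
    for item in memory_set:
        comparisons += 1
        if item == probe:
            found = True  # note the match but keep scanning
    return found, comparisons


def self_terminating_search(probe, memory_set):
    """Compare the probe with items in order and stop at the first match."""
    comparisons = 0
    for item in memory_set:
        comparisons += 1
        if item == probe:
            return True, comparisons
    return False, comparisons


memory_set = [2, 5, 3, 8]
print(exhaustive_search(5, memory_set))        # (True, 4)
print(self_terminating_search(5, memory_set))  # (True, 2)
print(self_terminating_search(4, memory_set))  # (False, 4)
```

For a probe that is absent from the list, both strategies make the same number of comparisons; they diverge only when the probe is present.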

The exhaustive search strategy appears to be less efficient. To return to the shopping analogy, what would be the point, once you have picked up the corn meal, of mentally rehearsing the rest of the items on the list if corn meal was the second item to pop into your head? Once you retrieve the information that corn meal is something you wish to purchase, why bother asking whether the item in your hand is also chicken stock, or cheese, and so on?

Still, the appearance of inefficiency, although an argument against programming a computer with an exhaustive search strategy of retrieval, is no argument that human memory retrieval is self-terminating rather than exhaustive. Discovering which search strategy human memory scanning employs was the focus of Sternberg’s groundbreaking investigations.

Sternberg reasoned that both search strategies require a fixed amount of processing time independent of the number of items on the list that subjects were asked to memorize. For example, regardless of whether there are two or six items on the list that a subject has memorized, the task of deciding whether the test stimulus is a member of the list requires that the subject first identify the test stimulus (e.g., identify the stimulus as a “4” or as an “8”), and also that the subject formulate a response (positive or negative) and decide on the appropriate action (pulling one lever or the other). Importantly, all mental operations take some amount of time to perform, but the identification of the test stimulus, and the performance of a response will take the same amount of time, regardless of differences in the size of the list that the subject must encode and whether the search is exhaustive or self-terminating.

The next assumption Sternberg made concerned the effect that list size has on the time required for a subject to complete the retrieval task. The subject’s reaction time is the amount of time that elapses from the moment that the subject is exposed to the test stimulus until the moment that the subject pulls a response lever. Because both exhaustive and self-terminating search strategies assume that the searching process is serial, i.e., that comparisons between the test stimulus and the items in memory proceed one after another, an exhaustive search and a self-terminating search should take the same amount of time, for a given list size, if the test stimulus is not a member of the memorized list. For instance, if the subject has memorized the list {2, 5, 3, 8} and is then exposed to the test stimulus 4, the subject must compare the test stimulus to every member of the list before being in a position to render a negative judgment. Thus, test stimuli that are not in the memorized list fail to distinguish between exhaustive and self-terminating searches.

Crucially, however, subjects’ reaction times will differ depending on whether they use an exhaustive or a self-terminating search when the test stimulus does match a member of the memorized list. Reaction time for an exhaustive search, for a given list size, will be the same for positive and negative responses, because in both cases the test stimulus must be compared to every member of the list. A self-terminating search that ends in a positive response, however, will on average require only about half as many comparisons as an exhaustive search, because on average it stops at the middle of the memorized list. Each item added to the list should therefore lengthen positive responses by only about half as much as it lengthens negative responses. Thus, subjects’ reaction times for positive responses do provide evidence for distinguishing exhaustive from self-terminating search strategies.
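The predictions just described can be made explicit with a simple linear reaction-time model: a fixed time for encoding the probe and responding, plus a fixed time per comparison. In this sketch the 38 ms per comparison is Sternberg’s estimate reported in this section, while the 400 ms fixed component is an illustrative assumption; the sketch also assumes that a positive probe is equally likely to occupy any list position.

```python
PER_ITEM_MS = 38.0  # per-comparison time, Sternberg's estimate
BASE_MS = 400.0     # hypothetical fixed time for encoding the probe and responding


def expected_comparisons(list_size, strategy, positive):
    """Mean number of comparisons, assuming a positive probe is equally likely at any position."""
    if strategy == "exhaustive" or not positive:
        return float(list_size)
    return (list_size + 1) / 2  # self-terminating: average stopping position


def predicted_rt(list_size, strategy, positive):
    """Fixed component plus a constant cost per comparison."""
    return BASE_MS + PER_ITEM_MS * expected_comparisons(list_size, strategy, positive)


def slope(strategy, positive):
    """Added milliseconds per additional list item (the model is linear in list size)."""
    return predicted_rt(2, strategy, positive) - predicted_rt(1, strategy, positive)


for strategy in ("exhaustive", "self-terminating"):
    print(strategy, slope(strategy, True), slope(strategy, False))
# exhaustive 38.0 38.0
# self-terminating 19.0 38.0
```

Whatever value is chosen for the fixed component, it shifts every line up or down without changing any slope, which is why the comparison of slopes, rather than of absolute reaction times, carries the argument.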

The data show, as expected, that response times increase with the number of digits the subject was asked to memorize. But, remarkably, they also show that the line fitting positive responses has the same slope as the line fitting negative responses. This evidence favors the hypothesis that subjects conducted an exhaustive search, comparing the test stimulus against each member of the memorized set regardless of whether a match is found before the end of the set.
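The inference from slopes to strategy can be sketched as fitting a least-squares line to mean reaction times at each list size and comparing the positive slope with the negative slope. The reaction times below are synthetic, noise-free values generated from the two hypotheses; they are not Sternberg’s data. The 0.75 cutoff (halfway between the predicted ratios of 1 and 0.5) is likewise an illustrative assumption.

```python
def ols_slope(xs, ys):
    """Ordinary least-squares slope of ys regressed on xs."""
    mx = sum(xs) / len(xs)
    my = sum(ys) / len(ys)
    num = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    den = sum((x - mx) ** 2 for x in xs)
    return num / den


def infer_strategy(sizes, positive_rts, negative_rts, cutoff=0.75):
    """A slope ratio near 1 suggests exhaustive search; near 0.5, self-terminating."""
    ratio = ols_slope(sizes, positive_rts) / ols_slope(sizes, negative_rts)
    return ("exhaustive" if ratio > cutoff else "self-terminating"), ratio


sizes = [1, 2, 3, 4, 5, 6]
# Synthetic mean RTs in ms, generated from each hypothesis (not experimental data).
negative = [400 + 38 * n for n in sizes]
parallel_positive = [390 + 38 * n for n in sizes]          # same slope as negatives
half_slope_positive = [400 + 19 * (n + 1) for n in sizes]  # half the slope
print(infer_strategy(sizes, parallel_positive, negative))    # ('exhaustive', 1.0)
print(infer_strategy(sizes, half_slope_positive, negative))  # ('self-terminating', 0.5)
```

Note that positive responses can be faster overall (a lower intercept) and still point to exhaustive search, so long as their slope matches that of negative responses.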

Sternberg’s work is notable for a number of reasons. First, just as Newell and Simon hoped GPS would do, Sternberg’s experimental design is intended to yield information about the “program” that underlies a cognitive task. Indeed, it is not difficult to describe a program for each of the alternative search strategies that Sternberg considers. The experimental methodology Sternberg uses to uncover the mind’s programs is perhaps more reliable than the method of “thinking out loud” on which Newell and Simon depend. There are doubtless many layers of cognition that intervene between the unconscious processes involved in problem solving and the verbalization of thought that Newell and Simon used as evidence for the veracity of GPS. Insofar as this is true, support wanes for the claim that GPS reveals something of the “mysteries” of thinking processes or, at any rate, of the mysteries of thinking processes that take place unconsciously.

In contrast, Sternberg’s reaction time measure does apparently reflect unconscious cognitive processing. The measure shows that comparison of the test stimulus with items of the memorized set takes approximately 38 ms per item. This is quite fast. So fast, Sternberg claims, that “the scanning process seems not to have any obvious correlate in conscious experience. Subjects generally say either that they engage in a self-terminating search, or that they know immediately, with no search at all, whether the test stimulus is contained in the memorized list” (1969: 428). This comment raises another problem with Newell and Simon’s procedure. Apparently subjects’ conscious reports can in fact misrepresent the unconscious processes that occur in cognitive activity. Whereas Sternberg’s subjects might claim to have engaged in a self-terminating search (or no search at all!), the reaction time measurements tell a different story. Given this inconsistency between the subjects’ reports and the experimental data, there is further reason to doubt that the reports on which Newell and Simon rely accurately reflect the unconscious processes that humans use when solving logic problems.

In sum, although the methods that Newell and Simon and Sternberg use to reveal the nature of human thought differ, they share a commitment to the idea that cognitive processes are computational processes. A cognitive task can be made the subject of a means-end analysis, broken into regimented stages, and solved through the application of determinate procedures to symbolic representations.

The Computational Vision Program

So far, we have seen examples of standard cognitive science in the areas of problem solving and memory recall. An examination of how a cognitive scientist approaches the study of vision will round out our discussion of standard cognitive science. Cognitive scientists conceive of the visual system as a special kind of problem solver. The question vision theory addresses is this: How does stimulation on the retina become a perception of the world? Cognitive scientists also emphasize the apparent fact that the patterns of stimulation on the retina do not carry enough information on their own to determine a unique visual perception. The visual system must therefore embellish visual inputs, make assumptions, and draw inferences in order to yield a correct solution, i.e., an accurate description of the visual scene. Richard Gregory, a vision scientist whose work predates but anticipates much of the current work in computational vision, makes this point nicely: “Perceptions are constructed, by complex brain processes, from fleeting fragmentary scraps of data signaled by the senses” (1972: 707). Research in computational vision consists mainly in describing the problems that vision must solve and in devising algorithms that suffice to transform “fleeting fragmentary scraps of data” into our rich visual world.

The retina is a concave surface, like the inside of a contact lens, and so the pattern of light projecting onto it is roughly two-dimensional. Surfaces differing in size and shape can project identical patterns of light in two dimensions. Somehow, the visual system must identify which one of an infinite number of possible surfaces is the actual cause of a given retinal image. And, whereas the laws of optics make possible a computation of the two-dimensional image that a given surface will produce on the retina, the inverse calculation—from image to external surface—is impossible. Vision, in a sense, must do the impossible. It must do inverse optics.
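The ambiguity behind the inverse optics problem can be illustrated with a pinhole projection. For simplicity this sketch assumes a flat image plane rather than a curved retina, and the triangle is hypothetical. Sliding each point along its own line of sight changes the size, distance, and shape of the object, yet leaves the projected image unchanged, so the image alone cannot tell the two objects apart.

```python
def project(point, focal_length=1.0):
    """Pinhole projection of a 3-D point (X, Y, Z), with Z > 0, onto a 2-D image plane."""
    x, y, z = point
    return (focal_length * x / z, focal_length * y / z)


def slide_along_sight_lines(points, factors):
    """Move each point along its own line of sight by its own positive factor."""
    return [(k * x, k * y, k * z) for (x, y, z), k in zip(points, factors)]


triangle = [(0.0, 0.0, 2.0), (1.0, 0.0, 2.0), (0.0, 1.0, 2.0)]
other_shape = slide_along_sight_lines(triangle, [1.0, 3.0, 0.5])  # a differently shaped triangle

print([project(p) for p in triangle])     # [(0.0, 0.0), (0.5, 0.0), (0.0, 0.5)]
print([project(p) for p in other_shape])  # [(0.0, 0.0), (0.5, 0.0), (0.0, 0.5)]
```

Because any positive factors could have been chosen, infinitely many surfaces share this one image, which is the sense in which the forward computation is easy and the inverse one is underdetermined.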

Yet, of course, vision is largely successful. The task of vision becomes tractable, computational vision theorists argue, because certain facts about the world can be exploited to reduce the number of possible visual descriptions of the world from many to one. By building into their algorithms rules that represent these real-world constraints, the nut of inverse optics might be cracked. Marr and Poggio’s work on stereo vision is a paradigm in this respect. When a pair of eyes fixates on a nearby object, the object will reflect light to a point on each retina. However, often the positions of these points on the two retinas are not the same. If it were possible to lay the left retina atop the right, you would find that the elements of the image on the left retina do not all rest on top of the same elements of the image on the right retina.

This fact is easy enough to confirm. If you hold a pencil at arm’s length between you and a more distant object, perhaps a doorknob, and alternately close one eye and then the other, you will notice that if the pencil appears to the left of the doorknob when viewing it through the right eye, it may appear to the right of the doorknob when viewing it through the left eye. This shows that the location of the image of the pencil relative to the image of the doorknob is not in the same position on each retina.

That disparity information can be used to compute relative depth is well known. The calculation is actually quite simple, relying on some basic trigonometry and requiring nothing more than information about the degree of disparity (measured in either radians or arc seconds), the distance between the eyes, and the angle of the lines of sight. However, recovering the necessary information for the calculation is not at all simple. The problem is that the visual system must be able to match the points of an image on one retina with points of the image on the other retina in order to identify the precise degree of disparity.
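The trigonometry can be sketched for the simplest case, assuming both the fixation point and the second point lie on the midline straight ahead of the viewer. The angle between the two lines of sight (the vergence angle) fixes the distance of a point, and disparity is taken here as the difference between the second point’s vergence angle and that of the fixation point. The 6.5 cm interocular distance and the sign convention are assumptions of this sketch, not part of the text.

```python
import math

ARCSEC = math.pi / (180 * 3600)  # one second of arc, in radians


def vergence_angle(distance_m, interocular_m):
    """Angle between the two lines of sight when both eyes fixate a midline point."""
    return 2 * math.atan(interocular_m / (2 * distance_m))


def distance_from_vergence(angle_rad, interocular_m):
    """Inverse of vergence_angle."""
    return interocular_m / (2 * math.tan(angle_rad / 2))


def depth_from_disparity(fixation_m, disparity_rad, interocular_m=0.065):
    """Distance of a second midline point, given its disparity relative to fixation.

    Sign convention (an assumption of this sketch): positive (crossed) disparity
    means the point is nearer than the fixation point.
    """
    theta = vergence_angle(fixation_m, interocular_m) + disparity_rad
    return distance_from_vergence(theta, interocular_m)


# A point at 0.9 m while fixating at 1.0 m:
eta = vergence_angle(0.9, 0.065) - vergence_angle(1.0, 0.065)
print(eta / ARCSEC)                              # the same disparity in arc seconds
print(round(depth_from_disparity(1.0, eta), 6))  # 0.9
```

The calculation itself is a few lines; what it presupposes is that the disparity of each point is already known, which requires solving the correspondence problem described next.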

In response to this problem of identifying corresponding points on each retinal image, Marr and Poggio (1976) developed an algorithm that made use of two assumptions about the physical world that were then implemented as rules within the algorithm. The first assumption was that each point on a retinal image corresponds to exactly one point in the physical world. Because this is true, each point on the left retina must correspond to exactly one point on the right retina, yielding the first rule:

(R1) Uniqueness: there is just one disparity value for each point on a retinal image.

The second assumption was that surfaces in the world tend to be cohesive rather than diffuse (i.e., more like solids than clouds) and are generally smooth, so that points on a surface near to each other tend to lie along the same plane (i.e., surfaces are more likely to vary smoothly than to be jagged like a tire tread). From this assumption the second rule follows:

(R2) Continuity: disparity will typically vary smoothly.
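How the two rules prune candidate matches can be shown on a hypothetical one-dimensional scanline of identical dots. This sketch is not Marr and Poggio’s cooperative algorithm, which enforces the same constraints through excitatory and inhibitory connections in a network; it is a brute-force stand-in that enumerates every assignment, discards those violating uniqueness, and then applies continuity as a cost that favors smoothly varying disparities. The dot positions and the disparity range 0 to 3 are assumptions.

```python
from itertools import product


def candidate_disparities(left_dots, right_dots, d_range):
    """For each left dot, every disparity that lands on some right dot (all dots look alike)."""
    right = set(right_dots)
    return [[d for d in d_range if x + d in right] for x in left_dots]


def smoothness_cost(disparities):
    """Continuity (R2): penalize changes in disparity between neighbouring dots."""
    return sum(abs(a - b) for a, b in zip(disparities, disparities[1:]))


def match(left_dots, right_dots, d_range=range(0, 4)):
    options = candidate_disparities(left_dots, right_dots, d_range)
    every = list(product(*options))  # each left dot gets exactly one disparity
    # Uniqueness (R1): no two left dots may claim the same right dot.
    unique = [ds for ds in every
              if len({x + d for x, d in zip(left_dots, ds)}) == len(left_dots)]
    best = min(unique, key=smoothness_cost)
    return len(every), len(unique), best


left = [0, 2, 3, 5]
right = [x + 1 for x in left]  # hypothetical scene: one surface at disparity 1
print(match(left, right))  # (12, 2, (1, 1, 1, 1))
```

Of the twelve possible assignments, uniqueness leaves two, and continuity selects the one in which every dot has the same disparity, which is the correct reading of this scene.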

Together, these rules constrain the space of possible solutions, preventing errant matches and zeroing in on the correct ones. The details of the algorithm are complicated, but familiarity with them is not necessary for present purposes. The salient points are these. Computational theories of vision constitute a dominant approach to explaining vision in standard cognitive science. Like the other examples of cognitive science we have seen, vision is conceived as involving a series of stages, each of which involves procedures with well-defined initial and end states. While we have been examining just one such procedure, the derivation of depth from disparity, vision researchers have taken a computational approach to a range of other visual processes. As Rosenfeld, a computer vision scientist, describes: “A variety of techniques have been developed for inferring surface orientation information from an image; they are known as ‘shape from X’ techniques, because they infer the shape of the surface … from various clues … that can be extracted from the image” (1988: 865). Some of these techniques compute shape from information about shading, or shape from motion, or shape from texture. In each case, information on the retina marks the starting point, assumptions about the world are encoded as rules that constrain the set of possible solutions, and then an algorithm is devised that takes as input a symbolic representation of features on the retina, and produces as output a symbolic description of the physical world. As with problem solving and memory scanning, the guiding idea is that vision is a computational task, involving the collection and algorithmic processing of information.

Gibson’s Point of View

The quickest route to Gibson’s theory of perception is to see it as a repudiation of the idea that the visual system confronts an inverse optics problem. Recall that cognitivist theories of perception suppose the stimuli for perception to be impoverished. Because the retinal image underdetermines the shape of the surface that causes it, shape information must be derived from the sum of retinal information together with various assumptions about the structure of the world. In the discussion of stereo vision, we saw that the computation of relative depth from disparity information required assumptions about the uniqueness of the location of objects and the tendency of objects to be cohesive and their surfaces smooth. Without the addition of these assumptions, information on the retinal image is insufficient to determine facts about relative depth. In these cases and others, perception is conceived as an inferential process—reconstructing a whole body from a bare skeleton.

Gibson (2014) denies the inadequacy of perceptual inputs. Of course, it can be demonstrated mathematically that an infinite number of differently shaped surfaces can produce a single retinal image. Hence, Gibson cannot be denying that the retinal image is by itself insufficient to provide knowledge of one’s visual surroundings. Instead, Gibson denies that the retinal image is the proper starting point for vision. Rather, the inputs to vision are various invariant features of structured light, and these features can be relied on to specify unambiguously their sources. Gibson takes his research to show

that the available stimulation surrounding an organism has structure, both simultaneous and successive, and that this structure depends on sources in the outer environment … The brain is relieved of the necessity of constructing such information by any process … Instead of postulating that the brain constructs information from the input of a sensory nerve, we can suppose that the centers of the nervous system, including the brain, resonate to information.

(1966: 267)

Gibson’s detractors (e.g., Fodor and Pylyshyn 1981) portray ecological psychology as merely a form of dressed-up behaviorism. Although Gibson does not avail himself of many of the methodological tools or vocabulary of the behaviorist school, he does share with behaviorists a hostility toward explanations that posit representations, mental images, and other internal states. Not surprisingly, the same questions that haunt the behaviorist also fall hard on Gibson: What are the mechanisms by which information is picked up? How does an organism organize its response? How does an organism learn to make use of information? Gibson’s theory, which never attempts to peer into the “black box” of the cranium, has no use for the cognitivist’s conceptual tool kit, and this is one reason for critics’ dismissive attitude toward Gibson (e.g., Fodor and Pylyshyn 1981).

Contribution of Connectionism to the Debate on Representation

As connectionists see matters, the brain appears to be less like a von Neumann-style computer than it does a connectionist computer. This view of things is in part no accident: connectionist machines were inspired by the vast connectivity that the brain’s billions of neurons exhibit, and so it is hardly a shock to be told that brains resemble connectionist computers more than they do von Neumann computers. In defense of this assertion, connectionists note, first, that brain function is not “brittle” in the way that a standard computer’s functioning is (Ramsey et al. 1991). If you have ever tried to construct a Web page using HTML code, then you have a sense of this brittleness. Misplacing a single semicolon, or failing to insert a closing parenthesis, or typing a “b” instead of an “l,” can have devastating effects on the Web page. Programs typically work or they do not, and the difference between working and not can often depend on the presence of a single character. But brains typically do not behave in this all-or-nothing manner. Damage to a brain might degrade a psychological capacity without rendering it useless, or might have no noticeable effect at all. Connectionist computers exhibit similar robustness: the loss of some connections might make no difference to the computer’s capacities, or might only attenuate these capacities.
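This graceful degradation can be illustrated with a minimal sketch, assuming a tiny Hopfield-style network whose Hebbian weights store a single pattern of +1/-1 activations. This is one simple kind of connectionist model chosen for illustration, not a model from the literature discussed here; the pattern, the two corrupted units, and the lesion rule are all hypothetical. Cutting a third of the connections leaves recall intact and merely weakens each unit’s net input.

```python
def train(pattern):
    """Hebbian outer-product weights storing one +1/-1 pattern (no self-connections)."""
    n = len(pattern)
    return [[0 if i == j else pattern[i] * pattern[j] for j in range(n)]
            for i in range(n)]


def lesion(weights, cut):
    """Zero every connection (i, j) for which cut(i, j) is true."""
    return [[0 if cut(i, j) else w for j, w in enumerate(row)]
            for i, row in enumerate(weights)]


def fields(weights, state):
    """Net input to each unit."""
    return [sum(w * s for w, s in zip(row, state)) for row in weights]


def recall(weights, cue, steps=5):
    """Synchronously set every unit to the sign of its net input."""
    state = list(cue)
    for _ in range(steps):
        state = [1 if h >= 0 else -1 for h in fields(weights, state)]
    return state


pattern = [1, -1, 1, 1, -1, -1, 1, -1, 1, -1]
cue = [-s if i in (0, 4) else s for i, s in enumerate(pattern)]  # two units corrupted

intact = train(pattern)
damaged = lesion(intact, lambda i, j: (i + j) % 3 == 0)  # removes 3 of each unit's 9 connections

print(recall(intact, cue) == pattern)   # True
print(recall(damaged, cue) == pattern)  # True
print(fields(intact, pattern)[0], fields(damaged, pattern)[0])  # 9 6
```

The drop in net input from 9 to 6 is the “attenuation” the text describes: the capacity survives the damage, though with a smaller margin than before.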

Second, von Neumann computers operate serially, performing one operation at a time. This fact imposes a severe constraint on the speed these computers can achieve. Consider how this constraint might manifest itself in a brain. If the basic processing unit of the brain, the neuron, requires 1–3 ms to transmit a signal, and if psychological abilities (such as recognition, categorization, and response organization) can unfold in as little as a few hundred milliseconds, then the brain must produce these abilities in no more than a few hundred sequential steps. This is remarkable, for simulations of these abilities on standard computers often require many thousands of steps. Connectionist machines avoid this speed constraint by conducting many operations simultaneously, so that in the time a standard computer takes to perform a hundred operations, a connectionist machine can perform many times that.
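As a worked version of this arithmetic, take an ability that unfolds in an assumed T = 300 ms (an illustrative figure within the "few hundred milliseconds" range) and a per-neuron signaling time of 1–3 ms. The maximum number of sequential neural steps, written here as n_max, is then bounded as follows:

```latex
n_{\max} \approx \frac{T}{t_{\text{step}}}, \qquad
\frac{300\ \text{ms}}{3\ \text{ms}} = 100
\;\le\; n_{\max} \;\le\;
\frac{300\ \text{ms}}{1\ \text{ms}} = 300 .
```

A serial procedure needing many thousands of steps cannot fit inside a budget of this size, which is why the argument points toward massively parallel processing.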

Insofar as this characterization of connectionist computation is correct, there is an alternative available to the rules-and-representations approach to computation that has predominated in cognitive science. Whereas the standard cognitive scientist conceives of representations as akin to words in a language of thought, and of cognition as consisting in operations on language-like structures, connectionists conceive of representations as patterns of activity, and of cognition as a process in which a network of connections settles into a particular state depending on the input it has received and the connection weights it has acquired through training. What is more, the tasks for which connectionist nets have been devised reflect long-standing areas of interest among cognitive scientists. Nets have been designed that transform present-tense English verbs into the past tense, that categorize objects, that transform written language into speech, and so on. Connectionist successes in these domains have at least strained, and quite plausibly given the lie to, the standard cognitive scientist’s mantra that standard computationalism is the only game in town.
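The idea of a network that "settles" into a state fixed by its input and its trained weights can be illustrated with a Hopfield-style network. This is one classic connectionist design, offered as an illustrative assumption rather than as any of the nets mentioned above. Two orthogonal activity patterns are stored with a Hebbian rule, and a corrupted cue is then updated one unit at a time until nothing changes. The patterns and the function names `hebbian_weights` and `settle` are hypothetical.

```python
# Two orthogonal +1/-1 activity patterns, chosen by hand for illustration.
PATTERNS = [
    [1, 1, 1, 1, -1, -1, -1, -1],
    [1, -1, 1, -1, 1, -1, 1, -1],
]


def hebbian_weights(patterns):
    """Symmetric Hebbian weights with no self-connections."""
    n = len(patterns[0])
    return [[0 if i == j else sum(p[i] * p[j] for p in patterns) for j in range(n)]
            for i in range(n)]


def settle(w, cue, max_sweeps=100):
    """Update units one at a time until a full sweep changes nothing.

    Returns the stable activity pattern and the number of sweeps taken.
    """
    state = list(cue)
    for sweep in range(1, max_sweeps + 1):
        changed = False
        for i, row in enumerate(w):
            field = sum(wij * sj for wij, sj in zip(row, state))
            new = state[i] if field == 0 else (1 if field > 0 else -1)
            if new != state[i]:
                state[i] = new
                changed = True
        if not changed:
            return state, sweep
    return state, max_sweeps


if __name__ == "__main__":
    w = hebbian_weights(PATTERNS)
    cue = [1, 1, -1, 1, -1, -1, -1, -1]  # the first pattern with its third unit flipped
    print(settle(w, cue))
```

Here the corrupted cue is restored to the first stored pattern during the first sweep, and a second sweep confirms that the state is stable. No rule is looked up and no symbol is parsed: the outcome is fixed by the cue and the weights alone.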

Of course, standard cognitive scientists have not sat idly by as connectionists encroach on their territory. Fodor and Pylyshyn (1988) have been especially ferocious in their criticisms of connectionism. They argue that because representations within a connectionist net are distributed across patterns of activity, they are unsuited to decomposition into components and thus cannot accommodate linguistic structures. Insofar as connectionist nets are unable to model linguistic structures, they will fail as models of thought, for thought, according to many standard cognitive scientists, takes place in an internal language. On the other hand, if connectionist nets can model linguistic structures, they must be relegated to the status of mere implementations of psychological processes, and it falls to the theories and methods of standard cognitive science to understand those processes.

In response, connectionists have sought to show how linguistic structures can appear in connectionist nets. David Chalmers, for instance, designed a connectionist network that transforms sentences in the active voice into sentences in the passive voice and does so, he claims, without ever resorting to discrete symbolic representations (Chalmers 1990). Moreover, Chalmers argues that his network is not simply an implementation of a classical computational system, because there is never a step in the connectionist processing that decomposes the sentences into their constituent parts, as would have to occur if the sentences were represented in the network as concatenations of linguistic symbols (Chalmers 1993). The debate between connectionists and so-called classicists continues. In the present context, connectionism is significant for offering a portrait of cognition starkly distinct from that of standard cognitive science. Ultimately, connectionism’s success depends on how much of cognition it is able to explain without having to avail itself of a language-like conceptual apparatus.

Making the Case for Embodied Cognition

Interest in embodiment—in “how the body shapes the mind,” as the title of Gallagher (2005) neatly puts it—has multiple sources. Chief among them is a concern about the basis of mental representation. From a foundational perspective, the concept of embodiment matters because it offers help with the notorious “symbol-grounding problem,” that is, the problem of explaining how representations acquire meaning (Anderson 2003; Harnad 1990; Niedenthal et al. 2005). This is a pressing problem for cognitive science. Theories of cognition are awash in representations, and the explanatory value of those representations depends on their meaningfulness, in real-world terms, for the agents that deploy them. A natural way to underwrite that meaningfulness is by grounding representations in an agent’s capacities for sensing the world and acting in it:

Grounding the symbol for ‘chair’, for instance, involves both the reliable detection of chairs, and also the appropriate reactions to them. The agent must know what sitting is and be able to systematically relate that knowledge to the perceived scene, and thereby see what things (even if non-standardly) afford sitting. In the normal course of things, such knowledge is gained by mastering the skill of sitting (not to mention the related skills of walking, standing up, and moving between sitting and standing), including refining one’s perceptual judgments as to what objects invite or allow these behaviors; grounding ‘chair’, that is to say, involves a very specific set of physical skills and experiences. (Anderson 2003, pp. 102–103)

This approach to the symbol-grounding problem makes it natural to attend to the role of the body in cognition. After all, our sensory and motor capacities depend on more than just the workings of the brain and spinal cord; they also depend on the workings of other parts of the body, such as the sensory organs, the musculoskeletal system, and relevant parts of the peripheral nervous system (e.g., sensory and motor nerves). Without the cooperation of the body, there can be no sensory inputs from the environment and no motor outputs from the agent, and hence no sensing or acting. And without sensing and acting to ground it, thought is empty.

This focus on the sensorimotor basis of cognition puts pressure on a traditional conception of cognitive architecture. According to what Hurley (2002) calls the “sandwich model,” processing in the low-level peripheral systems responsible for sensing and acting is strictly segregated from processing in the high-level central systems responsible for thinking, and central processing operates over amodal representations. On the embodied view, this classical picture of the mind is fundamentally flawed. In particular, it is belied by two important facts about the architecture of cognition: first, that modality-specific representations, not amodal representations, are the stuff out of which thoughts are made; second, that perception, thought, and action are co-constituted, that is, not just causally but also constitutively interdependent.

Supposing, however, that the sandwich model is retired and replaced by a model in which cognition is sensorimotor to the core, it does not follow that cognition is embodied in the sense of requiring a body for its realization, for it could be that the sensorimotor basis of cognition resides solely at the central neural level, in sensory and motor areas of the brain. To see why, consider that sensorimotor skills can be exercised either online or offline (Wilson 2002). Online sensorimotor processing occurs when we actively engage with the current task environment, taking in sensory input and producing motor output. Offline processing occurs when we disengage from the environment to plan, reminisce, speculate, daydream, or otherwise think beyond the confines of the here and now. The distinction is important, because only in the online case is it plausible that sensorimotor capacities are body dependent. For offline functioning, presumably all one needs is a working brain.

Accordingly, we should distinguish two ways in which cognition can be embodied: online and offline (Niedenthal et al. 2005; Wilson 2002). The idea of online embodiment refers to the dependence of cognition—that is, not just perceiving and acting but also thinking—on dynamic interactions between the sensorimotor brain and relevant parts of the body: sense organs, limbs, sensory and motor nerves, and the like. This is embodiment in a strict and literal sense, as it implicates the body directly. Offline embodiment refers to the dependence of cognitive function on sensorimotor areas of the brain even in the absence of sensory input and motor output. This type of embodiment implicates the body only indirectly, by way of brain areas that process body-specific information (e.g., sensory and motor representations).

To illustrate this distinction, let us consider a couple of examples of embodiment effects in social psychology (Niedenthal et al. 2005). First, it appears that bodily postures and motor behavior influence evaluative attitudes toward novel objects. In one study, monolingual English speakers were asked to rate the attractiveness of Chinese ideographs after viewing the latter while performing different attitude-relevant motor behaviors (Cacioppo et al. 1993). Subjects rated those ideographs they saw while performing a positively valenced action (pushing upward on a table from below) more positively than ideographs they saw either while performing a negatively valenced action (pushing downward on the tabletop) or while performing no action at all. This looks to be an effect of online embodiment, as it suggests that actual motor behaviors, not just activity in motor areas of the brain, can influence attitude formation.

Contrast this case with another study of attitude processing. Subjects were presented with positively and negatively valenced words, such as love and hate, and asked to indicate when a word appeared either by pulling a lever toward themselves or by pushing it away (Chen and Bargh 1999). In each trial, the subject’s reaction time was recorded. As predicted, subjects responded more quickly when the valence of word and response behavior matched, pulling the lever more quickly in response to positive words and pushing the lever away more quickly in response to negative words. Embodiment theorists cite this finding as evidence that just thinking about something—that is, thinking about something in the absence of the thing itself—involves activity in motor areas of the brain. In particular, thinking about something positive, like love, involves positive motor imagery (approach), and thinking about something negative, like hate, involves negative motor imagery (avoidance). This result exemplifies offline embodiment, insofar as it suggests that ostensibly extramotor capacities like lexical comprehension depend to some extent on motor brain function—a mainstay of embodied approaches to concepts and categorization (Glenberg and Kaschak 2002; Lakoff and Johnson 1999).

The distinction between online and offline embodiment effects makes clear that not all forms of embodiment involve bodily dependence in a strict and literal sense. Indeed, most current research on embodiment focuses on the idea that cognition depends on the sensorimotor brain, with or without direct bodily involvement. Relatively few researchers in the area highlight the bodily component of embodied cognition. A notable exception is Gallagher’s (2005) account of the distinction between body image and body schema. In Gallagher’s account, a body image is a “system of perceptions, attitudes, and beliefs pertaining to one’s own body” (p. 24), a complex representational capacity that is realized by structures in the brain. A body schema, on the other hand, involves “motor capacities, abilities, and habits that both enable and constrain movement and the maintenance of posture” (p. 24), much of which is neither representational in character nor reducible to brain function. A body schema, unlike a body image, is “a dynamic, operative performance of the body, rather than a consciousness, image, or conceptual model of it” (p. 32). As such, only the body schema resides in the body proper; the body image is wholly a product of the brain. But if Gallagher is right, both body image and body schema have a shaping influence on cognitive performance in a variety of domains, from object perception to language to social cognition.

So far, in speaking of the dependence of cognition on the sensorimotor brain and body, we have been speaking of the idea that certain cognitive capacities depend on the structure of either the sensorimotor brain or the body, or both, for their physical realization. But dependence of this strong constitutive sort is a metaphysically demanding relation. It should not be confused with causal dependence, a weaker relation that is easier to satisfy (Adams and Aizawa 2008; Block 2005). Correlatively, we can distinguish between two grades of bodily involvement in mental affairs: one that requires the constitutive dependence of cognition on the sensorimotor brain and body, and one that requires only causal dependence. This distinction crosscuts the one mooted earlier, between online and offline embodiment. Although the causal/constitutive distinction is less entrenched than the online/offline distinction, especially outside of philosophy circles, it seems no less fundamental to an adequate understanding of the concept of embodiment. To see why, note that the studies described previously do not show that cognition constitutively depends on either the motor brain or the body. The most these studies show is some sort of causal dependence, in one or both directions. But causal dependencies are relatively cheap, metaphysically speaking. For this reason, among others, it may turn out that the import of embodiment for foundational debates in cognitive science is less revolutionary than is sometimes advertised (Adams and Aizawa 2008).

Contrasting Themes of Embodied Cognition with Standard Cognitive Science

The embodied cognition thesis grew out of dissatisfaction with the core assumptions of standard cognitive science. Its proponents hold that human cognition is shaped by aspects of the body beyond the brain, together with continuous interaction with the environment. Proponents of embodied cognition pose two main objections to the assumptions of standard cognitive science. The first concerns the role and importance of the body. Standard cognitive scientists take the brain to be the center of cognition, whereas proponents of embodied cognition give equal importance to the body’s motor and perceptual systems, the brain, and the environment.

The second objection targets the representational model of cognition: embodied cognition proposes that aspects of cognition can be explained without any appeal to representations and algorithms. On this view, cognition is explained mainly through the coupling relationship among brain, body, and environment.

To support their claims, proponents of embodied cognition look for convergent evidence from robotics, artificial intelligence, linguistics, neuroscience, philosophy, and dynamical systems theory. The research conducted under the banner of embodied cognition can be grouped into the following three themes (Shapiro 2007), depending on the focus of the respective research program.

1. Conceptualization. According to this theme of embodiment, the body of an organism limits or constrains the concepts that the organism can have about the world. Organisms with different kinds of bodies will therefore conceptualize the world differently.

2. Replacement. This theme of embodiment dispenses with representation in cognition, holding that representation is not required because the organism’s body interacts with the environment.

3. Constitution. In contrast to standard cognitive science, which holds that the body or the world merely plays a causal role in cognitive processes, the Constitution theme holds that the body and the world play a constitutive role. The difference between constitution and causation can be understood through a simple example involving oxygen. Oxygen is a constituent of water, along with hydrogen. But oxygen is a cause of an explosion rather than a constituent of it, since the explosion could not take place without the presence of oxygen.

The exposition so far should give a general picture of the core assumptions of both approaches. It is not possible in this paper to go into the details of the research conducted under the different themes of embodied cognition. Instead, I will contrast these three themes with standard cognitive science concisely, summarizing the results of research on each theme alongside the objections raised from the quarters of standard cognitive science.

The Conceptualization and Standard Cognitive Science

Conceptualization sees a connection between the kind of body an organism possesses and the concepts it is capable of acquiring. The connection is a constraining one: properties of the body determine which concepts are obtainable. Conceptualization predicts that at least some differences between types of body will create differences in conceptual capacities. Different kinds of organism will “bring forth” different worlds. For Varela et al. (1991), color experience exemplifies these ideas. Facts about neurophysiology determine the nature of an organism’s color experience. Were the neurophysiological facts to differ, so would the experiences of color. For Lakoff and Johnson (1999; 2008), facts about the body determine basic concepts, which then participate in metaphors, which in turn permeate just about every learned concept. For Glenberg and Kaschak (2002), an understanding of language, which reflects an understanding of the world, builds from the capacity to derive affordances, the meanings of which are a function of the properties of bodies. Common to all these thinkers is the conviction that standard cognitive science has not illuminated, and cannot illuminate, certain fundamental cognitive phenomena (color perception, concept acquisition, language comprehension) because it neglects the significance of embodiment.

The recent discovery of canonical and mirror neurons in the premotor cortex of some primates, including human beings, has been embraced by many embodied cognition researchers as evidence that there is a common code for perception and action, and thus that objects are conceived partly in terms of how an organism with a body like its own would interact with them. The premotor cortex is the area of the brain that is active during motor planning. Its processing influences the motor cortex, which is responsible for executing the motor activities that make up an action. The canonical neurons and mirror neurons in the premotor cortex are bimodal, meaning that their activity correlates with two distinct sorts of properties (Rizzolatti and Craighero 2004; Garbarini and Adenzato 2004; Gallese and Lakoff 2005). The same canonical neuron that fires when a monkey sees an object the size of a tennis ball is the one that would fire were the monkey actually to grasp an object of that size. If the monkey is shown an object smaller than a tennis ball, one that would require a precision grip rather than a whole-hand grip, different canonical neurons fire. Thus, canonical neurons can be quite selective. Their activation reflects both the particular visual properties of objects the monkey observes (size and shape) and the particular motor actions required to interact with these objects.

This interpretation of the neurophysiological data has an obvious tie to Conceptualization. How one interacts with objects in the world, and which actions one can expect to accomplish, depend on the properties of one’s body. Thus, the same ball that is represented as graspable-with-finger-and-thumb by one kind of primate might be represented as graspable-with-whole-hand by a smaller kind of primate. Similarly, whether an organism represents a given sequence of movements as an action may well depend on whether its body is capable of producing the same or similar sorts of action. Perhaps a large primate sees another as reaching for the fruit with a rake, but a smaller primate sees only the larger primate moving the rake. The more general idea is that cognition is embodied insofar as representations of the world are constituted in part by a motor component, and thus are stamped with the body’s imprint.

Some of the research and arguments that have been used to bolster Conceptualization face various problems. One challenge is to make a case for Conceptualization that does not reduce to triviality. Varela, Thompson, and Rosch (VTR) face this problem: of course color experience is a product of neurophysiological processes; of course color experiences would differ were these processes to differ. VTR’s “best case” of embodiment appears to express little more than a rejection of dualism. Glenberg et al.’s defense of Conceptualization is also in danger of sliding into triviality. The experimental evidence they collect in support of the indexical hypothesis might more easily be explained as the result of an ordinary sort of priming effect than as the product of a multistage process involving perceptual symbols, affordances, and meshing. Additionally, the experimental task performed by Glenberg et al.’s subjects may not really test their ability to understand language but instead test whether they can imagine acting in the manner that a sentence describes.

Although the prospects for Conceptualization do not appear hopeful, if standard cognitive science has shortcomings, as Gibson’s ecological psychology and connectionism suggest it may, the work we have studied in this section at best only hints at what they are. Continuing study of canonical and mirror neurons may someday reveal that properties of organisms’ bodies are somehow integrated with their conceptions of objects and actions. It is worth bearing in mind that Conceptualization is only one theme that embodied cognition researchers pursue. It is now time to look at the second theme of embodied cognition.

Replacement and Standard Cognitive Science

Of the three themes around which I am organizing discussion of embodied cognition, Replacement is the most self-consciously opposed to the computational framework that lies at the core of standard cognitive science. The two main sources of support for Replacement come from (1) work that treats cognition as emerging from a dynamical system and (2) studies of autonomous robots. A dynamical system is any system that changes over time; examples include the economy, the weather, and the environment. Proponents of a dynamical model of cognition use the example of Watt’s centrifugal governor to illustrate how coupling relates to the emergence of cognition.

Watt’s centrifugal governor was adapted by James Watt to regulate the speed of a steam engine by governing the flow of steam from the boiler. The governor has two ball-shaped weights, known as flyballs, attached by hinged arms to a vertical spindle that is driven by the engine’s flywheel, and the arms are linked to the throttle valve that admits steam to the engine. When the engine slows, the spindle turns more slowly, the flyballs drop, and the linkage opens the throttle valve, admitting more steam and speeding the engine up. As the speed increases, centrifugal force drives the flyballs outward and upward, and the linkage closes the throttle valve a little, reducing the flow of steam. This process goes on continuously, keeping the engine running at a nearly constant speed.

Now let us imagine how a computer scientist or standard cognitive scientist would approach the problem of regulating the engine’s speed. They would try to develop an algorithm whose steps measure the current speed of the engine, compare it with the desired speed, and compute how far to adjust the throttle valve. The main point to notice in this solution is the role played by representation: to execute the algorithm, they would have to represent the speeds of the various elements involved and store those representations somewhere. By contrast, Watt’s governor required no representations, as the regulation of speed was the result of the coupling among the flyballs, the engine, and the throttle valve.
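The contrast between the two kinds of governor can be made concrete with a toy simulation in Python. Everything numerical here is a hypothetical illustration rather than a model of a real engine: the engine's acceleration is assumed to be proportional to the valve opening minus the load and a friction term, and the flyball height is assumed to relax linearly toward the engine speed. `coupled_governor` regulates speed purely through coupled equations, while `computational_governor` follows a measure, compare, and adjust algorithm that stores a target and an error on every cycle. All names and parameters are assumptions.

```python
# Toy engine model: every parameter here is a hypothetical illustration value.
A = 2.0   # engine acceleration per unit of valve opening
B = 1.0   # friction: speed-proportional loss


def engine_accel(speed: float, valve: float, load: float) -> float:
    """Rate of change of engine speed for a given valve opening and load."""
    return A * valve - load - B * speed


def clamp01(x: float) -> float:
    """A throttle valve can only sit between fully closed (0) and fully open (1)."""
    return min(1.0, max(0.0, x))


def coupled_governor(load: float, t_end: float = 20.0, dt: float = 1e-3,
                     tau: float = 0.2, k: float = 1.0, v0: float = 1.0) -> float:
    """Regulation as one coupled dynamical system, integrated with Euler steps.

    The flyball height h relaxes toward the current speed, and the valve opening
    is a fixed mechanical function of h. No variable stores a target speed, a
    measurement, or an error.
    """
    speed, h = 0.0, 0.0
    for _ in range(round(t_end / dt)):
        valve = clamp01(v0 - k * h)       # linkage: higher flyballs, narrower valve
        d_speed = engine_accel(speed, valve, load)
        d_h = (speed - h) / tau           # flyballs rise and fall with spindle speed
        speed, h = speed + dt * d_speed, h + dt * d_h
    return speed


def computational_governor(load: float, desired: float = 0.5, t_end: float = 40.0,
                           dt: float = 1e-3, gain: float = 0.5) -> float:
    """Regulation as an explicit measure-compare-adjust algorithm.

    `measured`, `desired`, and `error` are stored quantities that the algorithm
    consults on every cycle.
    """
    speed, valve = 0.0, 0.0
    for _ in range(round(t_end / dt)):
        measured = speed                            # 1. measure and record the speed
        error = desired - measured                  # 2. compare it with the stored target
        valve = clamp01(valve + gain * dt * error)  # 3. compute and apply an adjustment
        speed += dt * engine_accel(speed, valve, load)
    return speed


if __name__ == "__main__":
    for load in (0.5, 1.0):
        print(f"load={load}: coupled={coupled_governor(load):.4f}, "
              f"computational={computational_governor(load):.4f}")
```

In this toy model the coupled governor settles at a speed of (2 - load)/3, so its speed sags somewhat when the load rises; that steady-state droop is typical of purely proportional regulators such as the centrifugal governor. The computational version returns to its stored target. The comparison is only meant to show where the regulating "knowledge" lives: in explicit stored quantities in one case, and in the physical coupling in the other.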

On similar grounds, proponents of embodied cognition argue that cognition, too, emerges from a coupling relation among brain, body, and environment. For dynamical theorists of cognition, embodiment is an interaction between the body and the nervous system, and situatedness is an interaction between the agent and the environment. Brain, body, and environment together form a dynamical system, and cognition emerges from the dynamic interactions among them (Van Gelder 1995).

The second major source of support for Replacement comes from studies of autonomous robots, part of whose motivation comes from artificial intelligence. Brooks (1991), one of the pioneers of robotics in our time, applied some of the ideas behind embodied cognition in his subsumption architecture and developed many effective and efficient robots without equipping them with central symbolic representations or internal world models. Advocates of Replacement see embodiment and situatedness, rather than symbol manipulation, as the core explanatory concepts in their new cognitive science. The emphasis on embodiment is intended to draw attention to the role an organism’s body plays in performing actions that influence how the brain responds to the world while at the same time influencing how the world presents itself to the brain. Similarly, the focus on situatedness is meant to reveal how the world’s structure imposes constraints and opportunities relative to the type of body an organism has, and thus determines as well the nature of stimulation the brain receives.

To date, the sorts of behavior that dynamicists have targeted, and the capacities of robots built on the principles that inspired Brooks’s subsumption architecture, represent too thin a slice of the full cognitive spectrum to inspire much faith that embodiment and situatedness can account for all cognitive phenomena. Nevertheless, even if Replacement ultimately fails in its goal to topple standard cognitive science, there is no reason to dismiss as unimportant the contributions it has so far produced. Research programs like those of Thelen, Beer (2014), and Brooks have added to our understanding of cognition. Some tasks that were once thought to imply the existence of rules, models, plans, representations, and associated computational apparatus turn out to depend on other tricks. For this reason, rather than Replacement, embodied cognition might do better to set its sights on rapprochement.

Constitution and Standard Cognitive Science

Proponents of Constitution see themselves as upsetting the traditional cognitivist’s apple cart. The main point of disagreement concerns the constituents of the mind. Whereas standard cognitive science places the computational processes constituting the mind entirely within the brain, if Constitution is right, the constituents of cognitive processes extend beyond the brain. Some advocates of Constitution thus assert that the body is, literally, part of the mind. More radically, some who defend Constitution claim that the mind extends even beyond the body, into the world. This latter view goes by the label extended cognition and has been the source of some of the liveliest debate between embodied cognition theorists and more traditional cognitivists. Various scholars have proposed theories in support of the Constitution theme of embodiment. An important one is the theory of perceptual experience developed by the psychologist O’Regan together with the philosopher Noë (O’Regan and Noë 2001). Taking vision as their example, they articulate the following points.

1. “The experience of vision is actually constituted by a mode of exploring the environment” (2001: 946).

2. “In seeing, specifying the brain state is not sufficient to determine the sensory experience because we need to know how the visual apparatus and the environment are currently interacting. There can, therefore, be no one-to-one correspondence between visual experience and neural activations. Seeing is not constituted by activation of neural representations” (2001: 966).

3. “We propose that experience does not derive from brain activity. Experience is just the activity in which the exploring of the environment consists. The experience lies in the doing” (2001: 968).

These points make clear that O’Regan and Noë reject the algorithmic explanation of vision in favor of one focused on activity, that is, on active exploration of the environment by brain and body together, which clearly indicates a constitutive role for the body and the world. The philosophers Clark and Chalmers (1998) have also made a strong case for the Constitution theme by popularizing the concept of extended cognition. Their parity argument has the spirit of the familiar duck test: if it walks like a duck, quacks like a duck, and flies like a duck, it is a duck. We can understand the intuition behind this argument through a simple example. Suppose two Ph.D. students go to their professor to discuss their coursework; one carries a notebook and the other does not. The student with the notebook writes down the important points, while the other memorizes them. For Clark and Chalmers, there is no relevant difference between the cognitive processes involved in the two activities. The notebook is an extended part of the first student’s cognition, which thereby goes beyond the reach of the brain.

One can object to extended cognition by appeal to the brain-in-a-vat scenario, in which a brain is kept alive in a vat of nutrients and fed a simulation of the real world by a powerful supercomputer. The simulation is so convincing that the brain has the illusion of living in the real world as an existing person, with no realization of its actual state. This brain-in-a-vat scenario suggests an argument that might seem to get at the heart of the Constitution question.

Argument from Envatment

1. If processes outside the brain (e.g., in the body or world) are constituents of cognition, then the brain alone does not suffice for cognition.

2. An envatted brain suffices for cognition.

Therefore, processes outside the brain are not constituents of cognition.
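Set out formally, the Argument from Envatment is a simple modus tollens. The Lean 4 sketch below treats each claim as an unanalyzed proposition (the names are illustrative placeholders) and makes explicit one step the informal version leaves implicit: that an envatted brain counts as "the brain alone," so that if it suffices for cognition, the brain alone suffices. Because the argument is valid, any reply must target one of the premises rather than the inference.

```lean
/-- Argument from Envatment. The three propositions are illustrative placeholders. -/
theorem argument_from_envatment
    (ExternalConstituents BrainAloneSuffices EnvattedBrainSuffices : Prop)
    -- Premise 1: external constituents would mean the brain alone does not suffice.
    (premise1 : ExternalConstituents → ¬ BrainAloneSuffices)
    -- Premise 2: an envatted brain suffices for cognition.
    (premise2 : EnvattedBrainSuffices)
    -- Implicit step (an assumption made explicit): an envatted brain is "the brain alone".
    (bridge : EnvattedBrainSuffices → BrainAloneSuffices) :
    ¬ ExternalConstituents :=
  fun h => premise1 h (bridge premise2)
```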

Clark (2008) has suggested a counterargument that extends the envatment thought experiment. Following his suggestion, imagine that the part of the envatted brain responsible for vision stops functioning and is replaced by a computer performing the same function, so that the illusion is maintained. In the same way, every part of the brain could be replaced by an advanced computer simulation, in which case the brain itself would cease to play any role in cognition. Pushed this far, the thought experiment yields strange intuitions, and the envatment argument no longer retains its force. This suggests that there is no principled reason why cognition cannot extend beyond the brain to the body or the world.

Like the envatment argument, the motley crew argument (Shapiro 2010) is directed against the extended cognition hypothesis.

Motley Crew Argument

1. There are cognitive processes wholly in the brain that are distinct from processes crossing the bounds of the brain.

2. Processes within the brain are well-defined, in the sense that they can be an object of scientific investigation, whereas processes that cross the bounds of the brain are not well-defined (they are a motley crew) and so cannot be an object of scientific investigation.

Therefore, cognitive science should limit its investigations to processes within the brain.

One way to answer the motley crew argument draws on Rob Wilson's idea of wide computationalism (Wilson 1994, 2004). The idea differs from the other arguments and counterarguments considered here in that it reconciles core assumptions of both approaches. On the one hand, wide computationalism grants that cognitive processes are computational, a central assumption of standard cognitive science. On the other hand, it allows that these computational processes may extend beyond the brain, which is the central claim of the Constitution theme of embodied cognition.

An example helps make sense of wide computationalism. Suppose two people are asked to multiply two numbers. One uses a notebook together with the multiplication table he has memorized; the other performs the multiplication entirely in his head, without any external aid. Wilson's point is that the computation is the same in both cases: in one it is realized partly on paper, in the other wholly in neurons. Therefore, cognition, although computational, can extend beyond the brain.
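The multiplication example can be illustrated, under simplifying assumptions, with a small Python sketch. The same schoolbook multiplication procedure is run twice: once with its partial products held in an "internal" store and once with them written as lines on an "external" notebook page. The classes `Head` and `Notebook` and the function `long_multiply` are hypothetical illustrations, not anything proposed by Wilson; the sketch shows only that the algorithm is specified independently of the medium that holds its intermediate states.

```python
from typing import List, Protocol


class Store(Protocol):
    """Any medium that can hold the intermediate results of a computation."""

    def write(self, value: int) -> None: ...

    def read_all(self) -> List[int]: ...


class Head:
    """Hypothetical 'internal' medium: partial products kept in working memory."""

    def __init__(self) -> None:
        self._items: List[int] = []

    def write(self, value: int) -> None:
        self._items.append(value)

    def read_all(self) -> List[int]:
        return list(self._items)


class Notebook:
    """Hypothetical 'external' medium: partial products written as lines on a page."""

    def __init__(self) -> None:
        self.page: List[str] = []

    def write(self, value: int) -> None:
        self.page.append(str(value))

    def read_all(self) -> List[int]:
        return [int(line) for line in self.page]


def long_multiply(a: int, b: int, store: Store) -> int:
    """Schoolbook multiplication of non-negative integers.

    One partial product is recorded per digit of b (a * digit, shifted by its
    place value); the answer is the sum of the partial products this call wrote.
    """
    if a < 0 or b < 0:
        raise ValueError("this sketch handles non-negative integers only")
    start = len(store.read_all())  # ignore anything already in the store
    for position, digit in enumerate(reversed(str(b))):
        store.write(a * int(digit) * 10 ** position)
    return sum(store.read_all()[start:])


# Same procedure, two different media for the intermediate steps.
print(long_multiply(47, 36, Head()))      # 1692
print(long_multiply(47, 36, Notebook()))  # 1692
```

Nothing in `long_multiply` depends on which store it is given. On the wide-computationalist reading, this is the sense in which the paper-and-pencil case and the in-the-head case carry out one computation realized in different media.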

Objections to Constitution point to a need for various clarifications. There is confusion over how to understand parity arguments for Constitution. Critics have labored to show that extended cognitive systems differ importantly from brain-constrained cognitive systems—they lack marks of the cognitive. However, parity arguments need not carry the burden of showing that extended systems meet marks of the cognitive. They seek to show only that those things we would be willing to recognize as constituents of a cognitive system might as well be outside the brain as inside. That the system is cognitive in the first place is offered as a given. Finally, also important for understanding Constitution is a proper characterization of the role that external constituents play in cognitive systems. Whether cognitive systems can be at once extended and legitimate objects of scientific investigation depends on whether there is a scientific framework in which they are proper kinds. Wilson’s wide computationalism suggests such a possibility, trading on the fact that extended cognitive systems that look like messy hybrids from one perspective can look like tightly unified systems from a computational perspective.

Conclusion

An examination of some paradigm work in standard cognitive science reveals commitments to a computational theory of mind, according to which mental processes proceed algorithmically, operating on symbolic representations. Cognitive tasks have determinate starting and ending points. Because cognition begins with an input to the brain and ends with an output from the brain, cognitive science can limit its investigations to processes within the head, without regard for the world outside the organism. Nevertheless, the assumptions drawn from exemplars of standard cognitive science came to be challenged by new conceptions of cognition arising from Gibson's theory of perception and from connectionist accounts of cognition. Gibson conceived of the brain as a controller and organizer of activities that result in the extraction of information, rather than as a computer that processes this information. But Gibson's description of psychology leaves unaddressed fundamental questions about how information is picked up and used. A further complaint is that Gibson's focus is on perception, and the extent to which Gibsonian ideas might apply to areas of cognition not involved in perception (problem solving, language, memory, and so on) is at best unclear. The advent of connectionism provided a framework for understanding a form of computation to which the vocabulary of inference is poorly suited, and in which representations exist outside a medium of discrete symbolic expressions. Connectionist successes in various domains challenge the standard cognitive scientist's mantra that computationalism is the only game in town. Most fundamentally, the conceptual tools that Gibsonian and connectionist research bring to the study of cognition laid the foundation of embodied cognition.

Contrasting the different themes of embodied cognition with standard cognitive science reveals many aspects of the various research programs that fall under the umbrella of embodied cognition. The goal of Conceptualization is to show that bodies determine, limit, or constrain how an organism conceives its world. The phenomena that supporters of Conceptualization discuss (color perception, categorization, language comprehension) all lie squarely within the domain of standard cognitive science. More importantly, the experimental results on which Conceptualization rests can just as well be explained by standard cognitive science, which makes one wonder why the tried and true should be abandoned in favor of something less certain. One reason to prefer the standard explanations to the Conceptualization explanations is that they conserve explanatory concepts with a proven track record. Insofar as the explanatory tools of Conceptualization are still developing, whether they can apply equally well across the range of cognitive phenomena is, at this point, anyone's guess. In contrast, standard cognitive science has shown its power to unify under the same explanatory framework phenomena as diverse as perception, attention, memory, language, problem solving, and categorization. Each of these subjects has been the target of explanations in terms of computations involving rules and representations. Conceptualization fails to make a case against symbolic representation, and so finds no strength in another's weakness.

The goal of Replacement is to show that the methods and concepts of standard cognitive science should be abandoned in favor of new methods and conceptual foundations. Replacement's challenge to standard cognitive science cannot be assessed as easily as Conceptualization's. Certainly those advocating Replacement see themselves in competition with standard cognitive science. Replacement explanations tend to deploy the mathematical apparatus of dynamical systems theory or to envision cognition as emerging from the non-additive interactions of simple, mindless, sense–act modules. The emphasis is on interactions among a nervous system, a body, and properties of the world. Because Replacement views organisms as always in contact with the world, there is no need to represent the world. Also, because the interactions from which cognition emerges are continuous, with the timing of events playing a crucial role, a computational description of them cannot possibly be adequate. Research within embodied cognition that has Replacement as its goal has brighter prospects than research aimed at Conceptualization. Both claim to compete with standard cognitive science, but Replacement can more plausibly than Conceptualization claim victories in this competition. Still, work in Replacement does not yet hold forth the promise of full-scale conquest, leaving standard cognitive scientists with the happy result that they now have a greater number of explanatory strategies at their disposal for investigating cognition.

Unlike Conceptualization and Replacement, Constitution should not be construed as competing with standard cognitive science. As Wilson and Clark (2009) understand Constitution, it is an extension of standard cognitive science, one that the practitioners of standard cognitive science did not anticipate, but one that a proper appreciation of the organism's recruitment of extracranial resources renders necessary for understanding cognition. In one sense, then, Constitution is nothing new. This is clearest in Wilson's conception of wide computationalism, which keeps the computational commitments central to standard cognitive science while showing how parts of an organism's body or world might meet these commitments no less adequately than parts of the brain. Clark, too, is explicit in his belief that the study of cognition should continue to avail itself of the explanatory strategies that standard cognitive science has deployed with great success, although he is quick to acknowledge the value that alliances with connectionism and dynamical systems theory will have for a more complete understanding of cognition. As Adams and Aizawa (2009) have noted, whether the constituents of cognition extend beyond the brain is a contingent matter. That they do, Clark and Wilson reply, means not that the methods and concepts of standard cognitive science must be rejected, but only that they must be refitted. Constitution and standard cognitive science are not in competition because one can pursue Constitution with the assistance of explanatory concepts that are central to standard cognitive science. This could not be said of Conceptualization and Replacement. However, that Constitution and standard cognitive science agree to a large extent about how to explain cognition does not mean that Constitution does not compete with standard cognitive science in other respects. There is competition with respect to what should be included in explanations of cognition.
Hobbling standard cognitive science, Constitution proponents aver, is its brain-centrism—its assumption that the constituents of cognition must fit within the boundaries of the cranium. Because standard cognitive science is unwilling to extend its explanations to incorporate non-neural resources, it will often fail to see the fuller picture of what makes cognition possible, or will be blind to cognition’s remarkable ability to self-structure its surrounding environment.

However, work on Conceptualization is ongoing, and neuroscientific findings promise to energize some of its basic assumptions. Replacement too remains an active area of research, and, as happened with the development of connectionism, time may reveal that far more of cognition lends itself to Replacement-style explanations than one could originally have foreseen. Finally, critics continue to assail Constitution, and perhaps various of its metaphysical consequences for personal identity will prove to be unacceptable. The foregoing investigation of embodied cognition is clearly only a starting point for its further examination.

Change history

04 September 2020

This article has been retracted. Please see the retraction notice for more detail: https://doi.org/10.1007/s40961-018-0159-5.

References

Adams, F., & Aizawa, K. (2008). The bounds of cognition. Hoboken: Wiley.

Adams, F., & Aizawa, K. (2009). Why the mind is still in the head. In P. Robbins & M. Aydede (Eds.), Cambridge handbook of situated cognition (pp. 78–95). Cambridge: Cambridge University Press.

Anderson, M. L. (2003). Embodied cognition: A field guide. Artificial Intelligence, 149(1), 91–130.

Beer, R. D. (2014). Dynamical systems and embedded cognition. In K. Frankish & W. Ramsey (Eds.), The Cambridge handbook of artificial intelligence (pp. 128–148). Cambridge: Cambridge University Press.

Block, N. (2005). Action in perception by Alva Noë. The Journal of Philosophy, 102(5), 259–272.

Brooks, R. A. (1991). Intelligence without representation. Artificial Intelligence, 47(1–3), 139–159.

Cacioppo, J. T., Priester, J. R., & Berntson, G. G. (1993). Rudimentary determinants of attitudes: II. Arm flexion and extension have differential effects on attitudes. Journal of Personality and Social Psychology, 65(1), 5–17.

Chalmers, D. (1990). Syntactic transformations on distributed representations. Connection Science, 2, 53–62.

Chalmers, D. (1993). Connectionism and compositionality: Why Fodor and Pylyshyn were wrong. Philosophical Psychology, 6, 305–319.

Chen, M., & Bargh, J. A. (1999). Consequences of automatic evaluation: Immediate behavioral predispositions to approach or avoid the stimulus. Personality and Social Psychology Bulletin, 25(2), 215–224.

Clark, A. (2008). Supersizing the mind: Embodiment, action, and cognitive extension. Oxford: Oxford University Press.

Clark, A., & Chalmers, D. (1998). The extended mind. Analysis, 58(1), 7–19.

Fodor, J., & Pylyshyn, Z. (1981). How direct is visual perception? Some reflections on Gibson's ecological approach. Cognition, 9, 139–196.

Fodor, J. A., & Pylyshyn, Z. W. (1988). Connectionism and cognitive architecture: A critical analysis. Cognition, 28(1–2), 3–71.

Garbarini, F., & Adenzato, M. (2004). At the root of embodied cognition: Cognitive science meets neurophysiology. Brain and Cognition, 56, 100–106.

Gallagher, S. (2005). How the body shapes the mind. Oxford: Oxford University Press.

Gallese, V., & Lakoff, G. (2005). The brain’s concepts: The role of the sensory-motor system in reason and language. Cognitive Neuropsychology, 22, 455–479.

Gibson, J. J. (1966). The senses considered as perceptual systems. Prospect Heights: Waveland Press, Inc.

Gibson, J. J. (2014). The ecological approach to visual perception: Classic edition. New York: Psychology Press.

Glenberg, A. M., & Kaschak, M. P. (2002). Grounding language in action. Psychonomic Bulletin & Review, 9(3), 558–565.

Gregory, R. (1972). Seeing as thinking: An active theory of perception. London Times Literary Supplement, 23, 707–708.

Harnad, S. (1990). The symbol grounding problem. Physica D: Nonlinear Phenomena, 42(1–3), 335–346.

Hurley, S. L. (2002). Consciousness in action. Cambridge: Harvard University Press.

Lakoff, G., & Johnson, M. (1999). Philosophy in the flesh: The embodied mind and its challenge to Western thought (Vol. 4). New York: Basic Books.

Lakoff, G., & Johnson, M. (2008). Metaphors we live by. Chicago: University of Chicago Press.

Marr, D., & Poggio, T. (1976). Cooperative computation of stereo disparity. Science, 194, 283–287.

Newell, A., & Simon, H. (1961). Computer simulation of human thinking. Science, 134, 2011–2017.

Niedenthal, P. M., Barsalou, L. W., Winkielman, P., Krauth-Gruber, S., & Ric, F. (2005). Embodiment in attitudes, social perception, and emotion. Personality and Social Psychology Review, 9(3), 184–211.

O’Regan, J., & Noë, A. (2001). A sensorimotor account of vision and visual consciousness. Behavioral and Brain Sciences, 24, 939–1031.