Abstract

We present a pseudo-panel model and argue that the control variable approach is subject to the many instrument problem, since it uses the predicted value of the endogenous variable. We show how the bias can be analytically characterized. Finally, we demonstrate the problems of split sample cross fitting.

Introduction

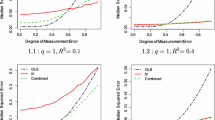

The “control variable” approach has been used in various nonlinear models to address endogeneity problems (Footnote 1). The purpose of this paper is to examine (i) whether the control variable approach is also subject to the bias due to the many instruments problem, as pointed out by Bekker (1994) for linear models, and if so, (ii) whether the cross-fitting advocated for modern machine learning type estimators (Footnote 2) eliminates such bias. The many instrument problem is essentially a problem of bias due to many nuisance parameters, which can be understood through the incidental parameters problem in panel data. Using a pseudo-panel analysis (Footnote 3), we demonstrate that (i) the control variable approach is indeed subject to the many instrument problem; and (ii) cross-fitting does not remove the bias. The negative result arises primarily because the control variable approach essentially uses the fitted value of the endogenous variable, which creates a finite sample bias problem. The bias and its correction in the control variable approach can in principle be understood from the perspective of the large general literature (Footnote 4) on bias correction, but because our focus is on the situation where there is a large number of instruments, we use a pseudo-panel analysis (Footnote 5) to answer these questions.

The bias of the control variable approach is relatively straightforward to understand. In linear simultaneous equations models, it is well known that 2SLS is equivalent to a control variable estimator. See, e.g., Hausman (1978). Therefore, it is quite natural to expect that the control variable approach is subject to the bias problem even in nonlinear models. The effect of cross fitting is less obvious, and we address it using the pseudo-panel analysis in the current paper. In the current section, we just explain what cross fitting means in control variable estimation, and why it may be intuitively appealing.

Bias in the 2SLS estimator in finite samples has long been recognized. Nagar (1959) proposed the first estimator to remove this finite sample bias (Footnote 6). As has been recognized more recently, the bias can be especially important in the many instrument problem, which occurs often with increased size of data sets, as Bekker (1994) and Hahn and Hausman (2002, 2003) demonstrate and Hansen et al. (2008) explore empirically. The bias problem in 2SLS is quite important, and a number of subsequent papers have proposed methods to remove the finite sample bias. The Nagar estimator removes bias by analytically adjusting the estimating equation, and it only holds for linear models. For a linear model

$$\begin{aligned} y=X\theta +\varepsilon ,\qquad X=Z\pi +\eta , \end{aligned}$$

with the instrument matrix Z, the usual 2SLS estimator solves

$$\begin{aligned} \widehat{X}^{\prime }\left( y-X\theta \right) =0, \end{aligned}\tag{1}$$

while the Nagar (1959) estimator solves

$$\begin{aligned} \widetilde{X}^{\prime }\left( y-X\theta \right) =0, \end{aligned}$$
where \(\widehat{X}=PX\), \(\widetilde{X}=\left( P-\frac{k}{n-k}Q\right) X\), and \(P=Z\left( Z^{\prime }Z\right) ^{-1}Z^{\prime }\) and \(Q=I-P\) denote the usual projection matrices. Here, n and k denote the number of observations and the number of instruments. Note that Nagar’s bias correction \(\frac{k}{n-k}Q\) is roughly proportional to k/n, which can be understood to be the ratio between the “number of nuisance parameters” and the sample size, where the nuisance parameters here are the first stage OLS coefficients.
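The two estimating equations can be sketched numerically. The following is a minimal illustration, not the authors' code: the function names (`projection`, `tsls`, `nagar`, `simulate`) and the simulated design (Gaussian instruments, equal first-stage coefficients, error covariance `cov`) are assumptions made here for the example. It builds \(P\), \(Q\), \(\widehat{X}\) and \(\widetilde{X}\) exactly as defined above.

```python
import numpy as np

def projection(Z):
    """Return P = Z (Z'Z)^{-1} Z', the projection onto the columns of Z."""
    return Z @ np.linalg.solve(Z.T @ Z, Z.T)

def tsls(y, X, Z):
    """2SLS: solve Xhat'(y - X theta) = 0 with Xhat = P X."""
    Xhat = projection(Z) @ X
    return np.linalg.solve(Xhat.T @ X, Xhat.T @ y)

def nagar(y, X, Z):
    """Nagar-type estimator: solve Xtilde'(y - X theta) = 0,
    with Xtilde = (P - k/(n-k) Q) X and Q = I - P."""
    n, k = Z.shape
    P = projection(Z)
    Q = np.eye(n) - P
    Xtilde = (P - k / (n - k) * Q) @ X
    return np.linalg.solve(Xtilde.T @ X, Xtilde.T @ y)

def simulate(n=200, k=40, theta=1.0, cov=0.8, seed=0):
    """Hypothetical design: y = X theta + eps, X = Z pi + eta,
    with Cov(eps_i, eta_i) = cov so that X is endogenous."""
    rng = np.random.default_rng(seed)
    Z = rng.standard_normal((n, k))
    pi = np.full(k, 0.1)
    errs = rng.multivariate_normal([0.0, 0.0], [[1.0, cov], [cov, 1.0]], size=n)
    eps, eta = errs[:, 0], errs[:, 1]
    X = (Z @ pi + eta).reshape(-1, 1)
    y = X[:, 0] * theta + eps
    return y, X, Z

y, X, Z = simulate()
theta_2sls = tsls(y, X, Z)    # uses Xhat = P X
theta_nagar = nagar(y, X, Z)  # uses Xtilde = (P - k/(n-k) Q) X
```

The correction weight \(\frac{k}{n-k}\) is chosen so that the trace of \(P-\frac{k}{n-k}Q\) is zero, since \(\mathrm{tr}(P)=k\) and \(\mathrm{tr}(Q)=n-k\). Averaging each estimator over many seeds would be one way to inspect the k/n bias discussed in the text.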

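This approach can be motivated by observing that the moment underlying 2SLS is biased

$$\begin{aligned} E\left[ \widehat{X}^{\prime }\left( y-X\theta \right) \right] =E\left[ \eta ^{\prime }P\varepsilon \right] =k\sigma _{\varepsilon \eta }, \end{aligned}$$

where \(\sigma _{\varepsilon \eta }\) denotes the covariance between the ith elements of \(\varepsilon \) and \(\eta \), while the moment underlying Nagar’s estimator is unbiased

$$\begin{aligned} E\left[ \widetilde{X}^{\prime }\left( y-X\theta \right) \right] =E\left[ \eta ^{\prime }\left( P-\frac{k}{n-k}Q\right) \varepsilon \right] =k\sigma _{\varepsilon \eta }-\frac{k}{n-k}\left( n-k\right) \sigma _{\varepsilon \eta }=0. \end{aligned}$$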
The lack of bias in Nagar’s (1959) moment can be understood as follows: the noise from estimating the instrument \(\widehat{X}\) used in the moment (1) for 2SLS is correlated with the error \(\varepsilon \) in the second stage, and this correlation is eliminated by using the instrument \(\widetilde{X}\). As such, we can understand the cross-fit estimator using sample splitting as sharing a similar spirit with Nagar’s (1959) estimator. Specifically, we can see that the estimating equation underlying the cross fit estimator,

$$\begin{aligned} \check{X}^{\prime }\left( y-X\theta \right) =0, \end{aligned}$$

has an unbiased moment, i.e., \(E\left[ \check{X}^{\prime }\left( y-X\theta \right) \right] =0\). Here,

$$\begin{aligned} \check{X}=\begin{pmatrix} Z_{\left( 1\right) }\widehat{\pi }_{\left( 2\right) }\\ Z_{\left( 2\right) }\widehat{\pi }_{\left( 1\right) } \end{pmatrix}, \end{aligned}$$

where \(n=2m\), we split the sample into two equal sized subsamples with instrument blocks \(Z_{\left( 1\right) }\) and \(Z_{\left( 2\right) }\), and \(\widehat{\pi }_{\left( 1\right) }\) and \(\widehat{\pi }_{\left( 2\right) }\) are first stage estimators based on the first and second subsamples.
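The cross-fit construction for the linear model can be sketched as follows. This is an illustrative sketch under our own assumptions: a single endogenous regressor stored as a one-dimensional array, a split into the first and last \(m\) rows, and hypothetical function names. Each half receives fitted values built from the first-stage coefficients estimated on the other half.

```python
import numpy as np

def first_stage(X, Z):
    """OLS first-stage coefficients from regressing X on Z."""
    return np.linalg.solve(Z.T @ Z, Z.T @ X)

def cross_fit_instrument(X, Z):
    """Build the cross-fit instrument: fitted values in each half use
    the first-stage estimate from the other half."""
    m = Z.shape[0] // 2
    Z1, Z2 = Z[:m], Z[m:2 * m]
    X1, X2 = X[:m], X[m:2 * m]
    pi1 = first_stage(X1, Z1)  # estimated on the first subsample
    pi2 = first_stage(X2, Z2)  # estimated on the second subsample
    return np.concatenate([Z1 @ pi2, Z2 @ pi1])

def cross_fit_2sls(y, X, Z):
    """Solve Xcheck'(y - X theta) = 0 for a scalar theta."""
    Xc = cross_fit_instrument(X, Z)
    n_used = Xc.shape[0]
    return (Xc @ y[:n_used]) / (Xc @ X[:n_used])
```

Because the first-stage noise in one half is independent of the second-stage error in the other half, the instrument and the error are uncorrelated observation by observation, which is the source of the unbiased moment in the linear case.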

We ask whether such an interpretation would lead to a reasonable inference for nonlinear models. Our conclusion is that it does not. We show that it is impossible in general to remove the bias of the moment equation by manipulating the first stage estimator alone. We do this analysis by considering nonlinear models of endogeneity with many instruments, and showing that the moment equation with the cross fit estimator does not eliminate a bias due to nonlinearity, and as a consequence, does not have the desired unbiasedness property.

Pseudo-Panel Model

Our models of interest are nonlinear models with endogeneity, such as the probit model with endogenous regressors, where

and \(\left( \varepsilon _{i},\eta _{i}\right) \) have a bivariate normal distribution. The model has a built-in nonlinearity, and therefore, the endogeneity is probably best handled by the control variable approach. In the particular case of probit models, Rivers and Vuong (1988) solved the problem by writing

which generates a consistent estimator as long as \(\left( \varepsilon _{i},\eta _{i}\right) \) have a bivariate normal distribution. (We assume that \(\zeta _{i}\) has a standard normal distribution, i.e., the parameters \(\delta \) and \(\rho \) reflect such normalization.)

In order to examine the consequence of many instruments, we adopt the strategy of interpreting the nuisance parameters (due to many instruments) as incidental parameters similar to the fixed effects in panel data. Therefore, we consider a special case that has a panel representation:

This is a model where the first stage is characterized by n dummy instruments, and \(\alpha _{i}\) denotes the first stage coefficient for the ith dummy instrument, i.e., \(\pi =\left( \alpha _{1},\alpha _{2},\ldots ,\alpha _{n}\right) \).
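A small simulation may help fix ideas about the pseudo-panel first stage. The sketch below assumes, as a hypothetical concrete form, that \(x_{it}=\alpha _{i}+\eta _{it}\) and \(y_{it}=1\left( x_{it}\theta +\varepsilon _{it}>0\right) \) with \(\varepsilon _{it}=\rho \eta _{it}+\zeta _{it}\); the parameter names `theta` and `rho` and the function names are ours. With one dummy instrument per unit, the first-stage OLS coefficient is the within-unit mean, and the control variable is the within-unit residual.

```python
import numpy as np

def simulate_pseudo_panel(n=500, T=4, theta=1.0, rho=0.5, seed=0):
    """Hypothetical pseudo-panel probit design (an assumption for illustration)."""
    rng = np.random.default_rng(seed)
    alpha = rng.standard_normal(n)         # first-stage coefficients, one per dummy
    eta = rng.standard_normal((n, T))      # first-stage errors
    zeta = rng.standard_normal((n, T))     # standard normal second-stage shock
    eps = rho * eta + zeta                 # endogeneity: eps is correlated with eta
    x = alpha[:, None] + eta
    y = (x * theta + eps > 0).astype(float)
    return y, x, alpha

def first_stage_dummies(x):
    """OLS on n unit dummies: alpha_hat_i is the unit mean of x,
    and the control variable is the within-unit residual."""
    alpha_hat = x.mean(axis=1)
    v_hat = x - alpha_hat[:, None]
    return alpha_hat, v_hat

y, x, alpha = simulate_pseudo_panel()
alpha_hat, v_hat = first_stage_dummies(x)
```

Each \(\widehat{\alpha }_{i}\) is based on only T observations, so its estimation noise does not shrink as n grows. This is the sense in which the n first-stage coefficients behave like incidental parameters.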

The usual two step estimator can be understood to be the method of moments estimator

where

and \(\varPhi \) denotes the cumulative distribution function of a standard normal distribution.

Bias of Panel Two Step Estimator

In this section, we review the panel literature, and discuss how the bias of panel data estimator can be interpreted. The framework in this section provides a basis of understanding the problem of cross-fit estimator presented in Sect. 5.

The model in the previous section is a special case of the nonlinear panel data estimator

For reasons that will become clearer later, we use the symbol M to denote the time series dimension of the panel data. Hahn and Newey (2004) and Arellano and Hahn (2007, 2016) are among the few who analyzed the finite sample bias from the large n, large T asymptotic approximation point of view. For our purpose, it is useful to make an explicit assumption that the fixed effects \(\gamma \) are multi-dimensional, and that v is of the same dimension as \(\gamma \). We let J denote \(\dim \left( \theta \right) \).

We provide a brief summary of the finite sample bias from the literature. It is convenient to analyze the general panel estimator in terms of the efficient score

where

Here, the \(E\left[ \underline{v}_{it}^{\gamma _{i}}\right] =E\left[ \partial \underline{v}\left( z_{it},\theta _{0},\gamma _{i0}\right) / \partial \gamma _{i}^{\prime }\right] \) and \(E\left[ \underline{u}_{it} ^{\gamma _{i}}\right] =E\left[ \partial \underline{u}\left( z_{it},\theta _{0},\gamma _{i0}\right) / \partial \gamma _{i}^{\prime }\right] \) are evaluated at the ‘truth’. The asymptotic distribution of \(\sqrt{nM}\left( \widehat{\theta }-\theta \right) \) is asymptotically normal with variance equal to

and mean equal to

where

for

and \(\underline{b}_{i,j}\) and \(\underline{U}_{it,j}\) denote the j-th components of \(\underline{b}_{i}\) and \(\underline{U}_{it}\). In other words, the 1/M bias is given by the formula \(\underline{B}/M\). Here, the 1/M bias denotes the approximate bias of \(\widehat{\theta }\) based on the asymptotic bias (5) of \(\sqrt{nM}\left( \widehat{\theta }-\theta \right) \). Because the number of fixed effects is equal to n, and the sample size is equal to nM, we can see that the ratio between the “number of nuisance parameters” and the sample size is 1/M, and that the bias is of the same order of magnitude as the bias of 2SLS discussed by Nagar (1959).

Applying this result to the two-step estimation case where \(M=T\) and the fixed effects are scalars,

we have the asymptotic variance of \(\sqrt{nT}\left( \widehat{\theta } -\theta \right) \) equal to

and the approximate bias equal to

where

Further Analysis of the Bias Formula

In this section, we analyze the formula (13) in two important models, and show that the bias formula simplifies for linear models, but not in the probit models. In Sect. 5, we will use this difference to illustrate why the bias in the probit models cannot be removed by cross fitting.

Because \(E\left[ v_{it}U_{it}^{\alpha _{i}}\right] =E\left[ v_{it} u_{it}^{\alpha _{i}}\right] -\varDelta _{i}E\left[ v_{it}v_{it}^{\alpha _{i} }\right] \), and \(E\left[ U_{it}^{\alpha _{i}\alpha _{i}}\right] =E\left[ u_{it}^{\alpha _{i}\alpha _{i}}\right] -\varDelta _{i}E\left[ v_{it}^{\alpha _{i}\alpha _{i}}\right] \), we can see that the bias formula simplifies a little bit if \(\varDelta _{i}=0\) or \(v_{it}^{\alpha _{i}}\) is constant. Under this condition, we can see

The condition \(\varDelta _{i}=0\) is satisfied if \(E\left[ u_{it}^{\alpha _{i} }\right] =0\), i.e., under Neyman orthogonality. The condition that \(v_{it} ^{\alpha _{i}}\) is constant is satisfied if \(v_{it}\) is an affine function of \(\alpha _{i}\).

In order to understand these conditions, consider the panel model with n dummy IV’s

$$\begin{aligned} x_{it}&=\alpha _{i}+\eta _{it},\\ y_{it}&=x_{it}\theta +\varepsilon _{it}. \end{aligned}\tag{15}$$

If our 2SLS estimator solves

$$\begin{aligned} \sum _{i=1}^{n}\sum _{t=1}^{T}\widehat{\alpha }_{i}\left( y_{it}-x_{it}\theta \right) =0,\qquad \sum _{t=1}^{T}\left( x_{it}-\widehat{\alpha }_{i}\right) =0, \end{aligned}\tag{16}$$

so that \(v_{it}=x_{it}-\alpha _{i}\) and \(u_{it}=\alpha _{i}\left( y_{it}-x_{it}\theta \right) \), we see that \(v_{it}^{\alpha _{i}}=-1\). We also see that \(E\left[ u_{it}^{\alpha _{i}}\right] =E\left[ y_{it}-x_{it}\theta \right] =E\left[ \varepsilon _{it}\right] =0\) so the condition \(\varDelta _{i}=0\) is also satisfied. The 2SLS for the pseudo panel model is special because \(u_{it}^{\alpha _{i}\alpha _{i}}=0\). This implies that the bias formula is very simple and satisfies \(b_{i}= -E\left[ v_{it}u_{it}^{\alpha _{i} }\right] / E\left[ v_{it}^{\alpha _{i}}\right] \). This plays an important role in understanding the properties of split sample cross fitting for 2SLS.

We should recognize that these conditions are not satisfied for the probit model with endogenous regressors. In fact, the special nature of 2SLS, i.e., \(u_{it}^{\alpha _{i}\alpha _{i}}=0\), can be argued to be an implication of the IV type interpretation of 2SLS. If the 2SLS is interpreted to be a regression using the fitted value from the first stage as a regressor in the second stage, we see that the 2SLS solves

$$\begin{aligned} \sum _{i=1}^{n}\sum _{t=1}^{T}\widehat{\alpha }_{i}\left( y_{it}-\widehat{\alpha }_{i}\theta \right) =0. \end{aligned}$$

Here, we can easily see that \(u_{it}^{\alpha _{i}\alpha _{i}}\ne 0\) in general. The control variable approach requires that the first-stage estimate be used as a regressor, so we should expect \(u_{it}^{\alpha _{i}\alpha _{i}}\ne 0\) in general.
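As a worked illustration of the contrast between the two interpretations, assume (this is our reading of the two cases) that the first-stage moment is \(v_{it}=x_{it}-\alpha _{i}\), and that the second-stage moment is \(u_{it}^{IV}=\alpha _{i}\left( y_{it}-x_{it}\theta \right) \) under the IV interpretation and \(u_{it}^{reg}=\alpha _{i}\left( y_{it}-\alpha _{i}\theta \right) \) under the regression interpretation. Differentiating with respect to \(\alpha _{i}\) gives

$$\begin{aligned} \frac{\partial u_{it}^{IV}}{\partial \alpha _{i}}&=y_{it}-x_{it}\theta ,&\frac{\partial ^{2}u_{it}^{IV}}{\partial \alpha _{i}^{2}}&=0,\\ \frac{\partial u_{it}^{reg}}{\partial \alpha _{i}}&=y_{it}-2\alpha _{i}\theta ,&\frac{\partial ^{2}u_{it}^{reg}}{\partial \alpha _{i}^{2}}&=-2\theta . \end{aligned}$$

The second derivative therefore vanishes only under the IV form. The value \(-2\theta \) under the regression form agrees with the quantity \(\ddot{m}=-2\theta \) that appears in the appendix comparison with Cattaneo et al. (2019).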

Split Sample Cross Fit Estimator

In this section, we use the framework of Sect. 3 to analyze the bias of the cross fit estimator after sample splitting. We assume that the sample is split into two halves, and that the estimate of \(\alpha _{i}\) from one half is used as part of u in the other half. In other words, in order to understand the issue, we will assume now that the data consist of

i.e., we will assume that \(T=2M\), and write q and r for the first and second half of the observations. We will write the split sample cross fit estimator as

with the recognition that \(\widehat{\alpha }_{1,i}\) and \(\widehat{\alpha } _{2,i}\) are estimators of \(\alpha _{1,i}=\alpha _{2,i}=\alpha _{i}\). In order to see the resemblance to the panel model, we will write it

In other words, the split sample cross fit estimator can be analyzed by adopting a perspective that the fixed effects are multidimensional. The result for the multi-dimensional fixed effects is already available from Arellano and Hahn (2016), which we will utilize here.

It can be shown (Footnote 7) that the efficient score is

where the \(\varDelta _{i}\) is identical to the one in (11). Note that at \(\alpha _{1,i}=\alpha _{2,i}=\alpha _{i}\), we see that the counterparts of \(\underline{U}\) and \(\underline{\mathcal {I}}_{i}\) are

where the U and \(\mathcal {I}_{i}\) on the RHS are identical to the ones in (10) and (12). We therefore see that the asymptotic distribution of \(\sqrt{nM}\left( \widehat{\theta }-\theta \right) \) is normal with variance equal to

It follows that the asymptotic distribution of \(\sqrt{nT}\left( \widehat{\theta }-\theta \right) =\sqrt{n\left( 2M\right) }\left( \widehat{\theta }-\theta \right) =\sqrt{2}\sqrt{nM}\left( \widehat{\theta }-\theta \right) \) is normal with variance equal to

In other words, the asymptotic variance of \(\sqrt{nT}\left( \widehat{\theta }-\theta \right) \) does not change.

As for the bias, we see that the counterpart of \(\underline{b}_{i}\) is given by

so

and the implied bias is

We now compare the bias of the split sample cross fit estimator with the full sample estimator. We first rewrite the bias (9) with the full sample plug in estimator as

using \(U_{it}^{\alpha _{i}}=u_{it}^{\alpha _{i}}-\varDelta _{i}v_{it}^{\alpha _{i}}\). Comparing (17) with (18), we can see that the split sample cross fit affects the bias in three ways:

1. It eliminates the bias \( -E\left[ v_{it}u_{it}^{\alpha _{i} }\right] / E\left[ v_{it}^{\alpha _{i}}\right] \) due to the correlation between \(v_{it}\) and \(u_{it}^{\alpha _{i}}\).

2. It magnifies the bias \(\varDelta _{i}E\left[ v_{it}v_{it} ^{\alpha _{i}}\right] / E\left[ v_{it}^{\alpha _{i}}\right] \) due to the correlation between \(v_{it}\) and \(v_{it}^{\alpha _{i}}\) by a factor of two.

3. It magnifies the bias \( \frac{1}{2}E\left[ U_{it}^{\alpha _{i}\alpha _{i}}\right] E\left[ v_{it}^{2}\right] / \left( E\left[ v_{it}^{\alpha _{i}}\right] \right) ^{2}\) due to the variance of \(v_{it}\) by a factor of two.

This is all intuitive. The finite sample bias is due to the noise of estimating \(\alpha _{i}\), which may be correlated with the second stage moment u. The split sample cross fit estimator severs this correlation, which explains the first effect. On the other hand, the split sample estimator effectively uses half the sample size for estimation of each \(\alpha _{i}\), which leads to the second and third effects.
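The mechanics behind these effects can be sketched for the pseudo-panel first stage, where each \(\widehat{\alpha }_{i}\) is a within-unit mean. The code below is an illustrative sketch with hypothetical function names; it assumes the first M columns of `x` form the first half and the next M columns the second half. Each half-sample mean uses M = T/2 observations, so its variance is \(\sigma _{\eta }^{2}/M=2\sigma _{\eta }^{2}/T\), twice that of the full-sample mean.

```python
import numpy as np

def split_sample_alphas(x):
    """x has shape (n, T) with T = 2M. Return the unit means computed
    separately on the first and second halves of the observations."""
    M = x.shape[1] // 2
    first_half, second_half = x[:, :M], x[:, M:2 * M]
    return first_half.mean(axis=1), second_half.mean(axis=1)

def cross_fit_alphas(x):
    """Assign to every observation the alpha estimate computed on the
    half of the sample that does NOT contain that observation."""
    n = x.shape[0]
    M = x.shape[1] // 2
    alpha_first, alpha_second = split_sample_alphas(x)
    out = np.empty((n, 2 * M))
    out[:, :M] = alpha_second[:, None]  # first-half rows use second-half estimate
    out[:, M:] = alpha_first[:, None]   # second-half rows use first-half estimate
    return out
```

For a single unit with observations 1, 2, 3, 4, the half-sample means are 1.5 and 3.5, and the cross-fit assignment is 3.5, 3.5, 1.5, 1.5.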

We saw that in the pseudo panel 2SLS (15) with the IV interpretation, \(\varDelta _{i}=0\) and \(u_{it}^{\alpha _{i}\alpha _{i}}=0\). This implies that the bias of the full sample estimator takes a simple form \( -E\left[ v_{it}u_{it}^{\alpha _{i}}\right] / E\left[ v_{it}^{\alpha _{i}}\right] \), and it is completely eliminated by the split sample cross fit.

Note that \(E\left[ v_{it}v_{it}^{\alpha _{i}}\right] =0\) if \(v_{it} ^{\alpha _{i}}\) is constant as in (3). Even then, we should expect that (i) the bias is not removed by the cross fitting in general, although (ii) it is removed in the special case where \(E\left[ u_{it}^{\alpha _{i}\alpha _{i}}\right] =0\).

Getting back to our panel rendition of the probit model with endogenous regressor, we see that

where

where we use

It can be seen that \(U_{it}^{\alpha _{i}\alpha _{i}}\ne 0\), so we cannot expect the cross fitting estimator to remove the bias.

Note that the probit model is just one of the examples where the control variable is used as part of a nonlinear regression. We should therefore expect that (i) the control variable based estimator has the many IV problem, and (ii) the problem is not solved by cross fitting. (In fact, the pseudo panel 2SLS (15) with the regression interpretation would be such that the bias due to \(u_{it}^{\alpha _{i}\alpha _{i}}\) will not be eliminated by the split sample cross fit.)

Modified Objective Function for the Second Step

In this section, we review the panel literature and discuss a method of bias removal in the context of control variable estimation, which suggests how the bias can be corrected in principle. One can conjecture with high confidence that the bias can be removed by traditional methods of bias correction such as the jackknife (Footnote 8), but it may be useful to find a simple alternative to these computationally intensive procedures. The panel literature has discussed various methods of bias correction in the recent past, so one can imagine that they would work even in non-panel settings with some modifications. It is not clear how to frame an asymptotic sequence of models such that the biases in non-panel models with a large number of nuisance parameters can be easily understood and corrected, which we leave for future research.

Consider the moment (16) of the linear model, with a twist that the fitted value from the first stage is used as a regressor in the second stage. In particular, assume that \(x_{it}=\alpha _{i}+\eta _{it}\), which implies that

and that the bias formula (13) takes the form

If we further assume that \(\eta _{it}\) is i.i.d. over i and t, the formula further simplifies to

In Sect. 5, we saw that the term \(E\left[ \eta _{it} u_{it}^{\alpha _{i}}\right] \) can be eliminated by sample split cross fit, but the second term actually gets magnified.

We consider changing the moment equation altogether, adopting Arellano and Hahn (2007) proposal to correct the bias of the moment equation. For this purpose, we assume that the moment u is obtained in the maximization of some objective function

with respect to \(\theta \), i.e., assume that

We then have

This suggests that we can adopt the proposal in Arellano and Hahn (2007), and consider maximizing

where \(\widehat{\sigma }_{\eta }^{2}=\frac{1}{nT}\sum _{i=1}^{n}\sum _{t=1}^{T}\left( x_{it}-\widehat{\alpha }_{i}\right) ^{2}\), with the corresponding moment equation (Footnote 9)

Summary

Using a pseudo-panel model, we have demonstrated that the control variable approach is subject to the many instrument problem, since it uses the predicted value of the endogenous variable. It is essentially the same bias problem analyzed by Cattaneo et al. (2019), who advocated the use of the jackknife to remove the higher order bias. It would be interesting to develop a method of analytic bias correction in the non-panel setting, which we leave for future research.

Data Availability

Not applicable.

Code Availability (Software Application or Custom Code)

Not applicable.

Notes

See, e.g., Chernozhukov et al. (2017).

It is essentially the same bias problem analyzed by Cattaneo et al. (2019). Their analysis is predicated on the assumption that the first stage takes the form of an OLS estimation, which may be restrictive for certain applications. In order to facilitate the analysis, we present a pseudo-panel model to make the same point, and demonstrate the problems of split sample cross fitting. The panel analogy makes it easy to understand the reason why the split sample cross fitting does not remove the bias.

See Arellano and Hahn (2007), e.g., for a survey of the panel literature.

Biases in the finite samples may be removed either by correcting the estimator or by fixing the moments (estimating equation). The interpretation of Nagar’s estimator is that it is a result of fixing the moment.

Algebraic details are collected in the appendix.

In the case where

$$\begin{aligned} \psi \left( z_{it},\theta ,\widehat{\alpha }_{i}\right) =-\frac{1}{2}\left( y_{it}-\widehat{\alpha }_{i}\theta \right) ^{2}, \end{aligned}$$which corresponds to the 2SLS for the linear model

$$\begin{aligned} x_{it}&=\alpha _{i}+\eta _{it}\\ y_{it}&=x_{it}\theta +\varepsilon _{it}, \end{aligned}$$we can show that the moment equation (19) produces Nagar’s estimator. Algebraic details are collected in the appendix, which also contains a discussion of the relationship to Cattaneo et al. (2019).

References

Arellano, M., and J. Hahn. 2007. Understanding Bias in Nonlinear Panel Models: Some Recent Developments. In Advances in Economics and Econometrics, ed. R. Blundell, W.K. Newey, and T. Persson. Cambridge: Cambridge University Press.

Arellano, M., and J. Hahn. 2016. A Likelihood-based Approximate Solution to the Incidental Parameter Problem in Dynamic Nonlinear Models with Multiple Effects. Global Economic Review 45: 251–274.

Bekker, P.A. 1994. Alternative Approximations to the Distributions of Instrumental Variable Estimators. Econometrica 62: 657–681.

Blundell, R.W., and J.L. Powell. 2004. Endogeneity in Semiparametric Binary Response Models. Review of Economic Studies 71: 655–679.

Cattaneo, M., M. Jansson, and X. Ma. 2019. Two-Step Estimation and Inference with Possibly Many Included Covariates. Review of Economic Studies 86: 1095–1122.

Chernozhukov, V., D. Chetverikov, M. Demirer, E. Duflo, C. Hansen, W.K. Newey, and J.M. Robins. 2017. Double/Debiased Machine Learning for Treatment and Structural Parameters. Econometrics Journal 21: C1–C68.

Cox, D.R., and E.J. Snell. 1968. A General Definition of Residuals. Journal of the Royal Statistical Society Series B (Methodological) 30: 248–275.

Firth, D. 1993. Bias Reduction of Maximum Likelihood Estimates. Biometrika 80: 27–38.

Hahn, J., and J. Hausman. 2002. A New Specification Test for the Validity of Instrumental Variables. Econometrica 70: 163–189.

Hahn, J., and J. Hausman. 2003. Weak Instruments: Diagnosis and Cures in Empirical Econometrics. American Economic Review: Papers and Proceedings 93: 118–125.

Hahn, J., and W. Newey. 2004. Jackknife and Analytical Bias Reduction for Nonlinear Panel Models. Econometrica 72: 1295–1319.

Hahn, J., G. Kuersteiner, and W. Newey. 2019. Bias Correction and Higher Order Efficiency. Unpublished Working Paper.

Hansen, C., J. Hausman, and W. Newey. 2008. Estimation with Many Instrumental Variables. Journal of Business & Economic Statistics 26: 398–422.

Hausman, J.A. 1978. Specification Tests in Econometrics. Econometrica 46: 1251–1271.

Nagar, A.L. 1959. The Bias and Moment Matrix of the General k-Class Estimators of the Parameters in Simultaneous Equations. Econometrica 27: 575–595.

Rilstone, P., V.K. Srivastava, and A. Ullah. 1996. The Second-Order Bias and Mean Squared Error of Nonlinear Estimators. Journal of Econometrics 75: 369–395.

Rivers, D., and Q.H. Vuong. 1988. Limited Information Estimators and Exogeneity Tests for Simultaneous Probit Models. Journal of Econometrics 39: 347–366.

Funding

None.

Ethics declarations

Conflicts of interests

None.

Appendices

Detailed Derivations for Section 5

Noting that

where the \(\varDelta _{i}\) is identical to the one in (11), we see that the efficient score is

Note that at \(\alpha _{1,i}=\alpha _{2,i}=\alpha _{i}\), we see that the counterparts of \(\underline{U}\) and \(\underline{\mathcal {I}}_{i}\) are

where the U and \(\mathcal {I}_{i}\) on the RHS are identical to the ones in (10) and (12). We therefore see that the asymptotic distribution of \(\sqrt{nM}\left( \widehat{\theta }-\theta \right) \) is normal with variance equal to

where we used independence between \(q_{it}\) and \(r_{it}\), which implies

As for the bias, we simplify the notation a little bit by assuming that \(J=1\), and recognize that the counterparts of the components of \(\underline{b}_{i}\) are given by

so

and

We also have

and

so

Combining (20) and (21), we see that the counterpart of (8) is given by

Detailed Derivations for Section 6

In this case, we see that

so the moment equation (19) is now

Because \(\widehat{\alpha }_{i}=\overline{x}_{i}\) in this case, we can now write the moment as

Noting that

we can rewrite the moment as

or

We now show that Nagar’s formula \(\left( X^{\prime }\left( P-\frac{k}{N}M\right) X\right) ^{-1}X^{\prime }\left( P-\frac{k}{N}M\right) y\) reduces to the expression above for our particular case with n dummy instruments. Because

where \(\ell \) is the T-dimensional column vector of ones, we have

Noting that \(k=n\) and \(N=nT\) in this case, we obtain

To conclude, Nagar’s estimator can be understood to be the variation/generalization of (19).
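The reduction of Nagar’s matrix formula to within-unit sums can be checked numerically. The sketch below is our own illustration with hypothetical function names: it evaluates \(\left( X^{\prime }\left( P-\frac{k}{N}M\right) X\right) ^{-1}X^{\prime }\left( P-\frac{k}{N}M\right) y\) with n dummy instruments, and compares it with a group-sum expression obtained from our own algebra using \(k/N=1/T\), \(X^{\prime }PX=T\sum _{i}\overline{x}_{i}^{2}\) and \(X^{\prime }MX=\sum _{i}\sum _{t}\left( x_{it}-\overline{x}_{i}\right) ^{2}\). The group-sum form is not claimed to match the typeset expression in the original derivation term by term.

```python
import numpy as np

def nagar_matrix_form(y, x):
    """Nagar's formula with n dummy instruments; y and x have shape (n, T).
    Here M denotes I - P, k = n and N = nT, as in the text."""
    n, T = x.shape
    N, k = n * T, n
    X = x.reshape(N)                         # unit i's T observations are contiguous
    Y = y.reshape(N)
    Z = np.kron(np.eye(n), np.ones((T, 1)))  # N x n matrix of unit dummies
    P = Z @ np.linalg.solve(Z.T @ Z, Z.T)
    M = np.eye(N) - P
    W = P - (k / N) * M
    return (X @ W @ Y) / (X @ W @ X)

def nagar_group_form(y, x):
    """Same estimator written with unit means and within-unit deviations."""
    T = x.shape[1]
    xbar, ybar = x.mean(axis=1), y.mean(axis=1)
    xd, yd = x - xbar[:, None], y - ybar[:, None]
    num = T * np.sum(xbar * ybar) - np.sum(xd * yd) / T
    den = T * np.sum(xbar ** 2) - np.sum(xd ** 2) / T
    return num / den
```

The second function avoids building the \(N\times N\) projection matrix, which matters when n is large.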

We also discuss the higher order bias in non-panel setting. Although the higher order properties of 2SLS are well known, it would be interesting to compare it with the recent analysis of general two-step estimators developed by Cattaneo et al. (2019). For

with

where \(\widehat{\mu }_{i}=w_{i}^{\prime }\widehat{\pi }\) is the fitted value from the first stage regression \(x_{i}=w_{i}^{\prime }\pi +\eta _{i}\) of \(x_{i}\) on \(w_{i}\), we see that \(\dot{m}\left( z_{i},\theta ,\mu _{i}\right) =y_{i}-2\mu _{i}\theta \) and \(\ddot{m}\left( z_{i},\theta ,\mu _{i}\right) =-2\theta \) in the notation of Cattaneo et al. (2019), so their bias is equal to

divided by \(E\left[ \mu _{i}^{2}\right] \).

We will simplify their expression a bit, using the fact that \(\sum _{j=1} ^{N}p_{ij}^{2}\) is the \(\left( i,i\right) \)-element of \(PP^{\prime }\), and P is symmetric and idempotent, i.e.,

which allows (22) to be rewritten as

Because

we have

we obtain further simplification of (22)

Therefore, their bias reduces to

i.e., the usual bias of 2SLS.

The idea behind (19) and their bias (24), if applied to the current framework, suggest that we may consider a modified moment equation

which yields Nagar’s estimator.

Cite this article

Hahn, J., Hausman, J. Problems with the Control Variable Approach in Achieving Unbiased Estimates in Nonlinear Models in the Presence of Many Instruments. J. Quant. Econ. 19 (Suppl 1), 39–58 (2021). https://doi.org/10.1007/s40953-021-00262-y

DOI: https://doi.org/10.1007/s40953-021-00262-y