Abstract

This paper aims to provide a Melnikov-like function that governs the existence of periodic solutions bifurcating from period annuli in certain families of second-order discontinuous differential equations of the form \(\ddot{x}+\alpha \textrm{sign}(x)=\eta x+\varepsilon \;f(t,x,\dot{x})\). This family has attracted considerable attention from researchers, particularly in the analysis of specific instances of \(f(t,x,\dot{x})\). The study of this type of differential equation is motivated by its significance in modeling systems with abrupt state changes, both in natural and engineering contexts.


1 Introduction and statement of the main result

This paper is dedicated to the investigation of periodic solutions of the following family of second-order discontinuous differential equations:

\[ \ddot{x}+\alpha \, \textrm{sign}(x)=\eta x+\varepsilon f(t,x,\dot{x}), \qquad (1) \]

where \(\alpha\), \(\eta\), and \(\varepsilon\) are real parameters, and f is a \(\mathcal {C}^{1}\) function that is \(\sigma\)-periodic in the variable t. This class of differential equations has been extensively studied in the past decade, primarily because it models engineering phenomena characterized by abrupt state changes. For instance, in electronic systems, Eq. (1) can describe an oscillator in the presence of a relay [8], while in mechanical systems, it can model an automatic pilot for ships [2]. Throughout this paper, we assume that \(\varepsilon\) is a small parameter, and we refer to Eq. (1) with \(\varepsilon =0\) as the unperturbed differential equation.

The investigation of periodic solutions for specific cases of the differential Eq. (1) has been a topic of interest in the research literature. For example, in [8], periodic solutions of (1) have been analyzed under the assumption of \(\eta =0\) and \(f(t,x,\dot{x})=\sin (t)\), while in [4], the focus was on the damped equation, i.e., \(\eta \ne 0\). The authors in [9] explored the existence of periodic solutions of (1) using topological methods, employing a generalized version of the Poincaré-Birkhoff Theorem for this purpose. Periodic orbits were also studied for a specific instance of Eq. (1) in [10], where \(f(t, x, \dot{x})=p(t)\) is assumed to be periodic and of class \(\mathcal {C}^{6}\). A similar approach is taken in [15], but with the assumption that \(\eta =0\) and p(t) is a Lebesgue integrable periodic function with a vanishing average. In both of the latter works, the primary objective was to establish the boundedness of all solutions of the respective differential equations.

The Melnikov method [11] is a fundamental tool for determining the persistence of periodic solutions in planar smooth differential systems under non-autonomous periodic perturbations. It consists in constructing a bifurcation function, known as the Melnikov function, whose simple zeros correspond to periodic solutions bifurcating from a period annulus of the differential system. The Melnikov function is obtained by expanding a Poincaré map, typically the time-T stroboscopic map, in a Taylor series. In the smooth context, this map inherits the regularity of the flow.

Melnikov analysis has also been employed to investigate the existence of crossing periodic solutions in non-smooth differential systems, as demonstrated in [1, 3, 6, 13, 14]. Following the direction set by these references, our primary objective is to derive, by means of a Melnikov procedure, an explicit expression for a function that governs the existence of periodic solutions of (1) when the unperturbed equation has a period annulus.

Due to the discontinuous nature of (1), verifying the regularity of its associated time-\(\sigma\) stroboscopic map is challenging. To address this, we introduce time as a variable and use the discontinuity set generated by the sign function as a Poincaré section. This approach enables the construction of a smooth displacement function, which measures the distance between the forward flow on the positive side and the backward flow on the negative side at the points where both intersect the discontinuity set. An analogous approach was previously explored by J. Sotomayor in his thesis [16] for autonomous differential equations. A Melnikov-like function is then obtained by expanding this displacement function in a Taylor series.

The remainder of this section is dedicated to exploring the general notion of solutions of the differential Eq. (1) (see Sect. 1.1), classifying the unperturbed differential equation (see Sect. 1.2), and finally presenting the statement of our main result (see Sect. 1.3). Additionally, an application of our main result is provided (see Sect. 1.4).

1.1 Filippov solutions

To clarify the meaning of a solution of the differential Eq. (1), we set \(\dot{x}=y\), which transforms (1) into the following first-order differential system:

\[ \dot{x}=y,\qquad \dot{y}=-\alpha \, \textrm{sign}(x)+\eta x+\varepsilon f(t,x,y). \qquad (2) \]

Solutions of the differential system (2) are defined according to the Filippov convention (see [5, Sect. 7]), and such solutions exist for every initial condition. For this reason, we refer to (2) as a Filippov system. The solutions of (1) are then derived from the solutions of the Filippov system (2), which guarantees their existence for every initial condition.

Taking into account the sign function in (2), we decompose the differential system into the following two systems:

\[ \left\{ \begin{array}{l} \dot{x}=y,\\ \dot{y}=-\alpha +\eta x+\varepsilon f(t,x,y), \end{array}\right. \quad x>0, \qquad \left\{ \begin{array}{l} \dot{x}=y,\\ \dot{y}=\alpha +\eta x+\varepsilon f(t,x,y), \end{array}\right. \quad x<0. \qquad (3) \]

Each of the differential systems in (3) is of class \(\mathcal {C}^{1}\) in its domain of definition, which guarantees uniqueness of solutions for each of them. We focus on solutions of the systems in (3) that cross the discontinuity region transversely. Under this condition, solutions of (2) are obtained by concatenating solutions of (3), which yields global uniqueness in such cases. A more detailed discussion of this fact is postponed to Sect. 2; see [7] for further topics on this theory.
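The concatenation rule can be illustrated numerically. The following Python sketch is our own illustration, not the authors' code: it integrates (2) with a fixed-step fourth-order Runge–Kutta scheme using the field of the side of \(\Sigma\) on which the trajectory currently lies and, when a step leaves that side, locates the crossing by bisection and switches to the other field. It assumes transversal crossings (\(y\ne 0\) on \(\Sigma\)); the function names, the step size, and the forcing `f` passed by the caller are illustrative choices.

```python
def rhs(t, x, y, side, alpha, eta, eps, f):
    # side = +1: first system in (3) (x > 0); side = -1: second system (x < 0)
    return y, -alpha * side + eta * x + eps * f(t, x, y)

def rk4_step(t, x, y, h, side, p):
    """One classical Runge-Kutta step of size h for the field selected by `side`."""
    k1 = rhs(t, x, y, side, *p)
    k2 = rhs(t + h / 2, x + h / 2 * k1[0], y + h / 2 * k1[1], side, *p)
    k3 = rhs(t + h / 2, x + h / 2 * k2[0], y + h / 2 * k2[1], side, *p)
    k4 = rhs(t + h, x + h * k3[0], y + h * k3[1], side, *p)
    return (x + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
            y + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))

def concatenated_flow(t0, x0, y0, t_end, alpha, eta, eps, f, h=1e-3):
    """Integrate (2) from (x0, y0) at time t0 up to t_end by concatenating
    solutions of the two systems in (3) at transversal crossings of x = 0.
    Returns the final state and the crossing points (t, y) on Sigma."""
    p = (alpha, eta, eps, f)
    t, x, y = t0, x0, y0
    # On Sigma, the sign of y = dx/dt decides which side is entered
    side = 1 if (x > 0 or (x == 0 and y > 0)) else -1
    crossings = []
    while t < t_end:
        step = min(h, t_end - t)
        xn, yn = rk4_step(t, x, y, step, side, p)
        if side * xn < 0:
            # The step left the current half-plane: bisect on the step length
            lo, hi = 0.0, step
            for _ in range(60):
                mid = (lo + hi) / 2
                if side * rk4_step(t, x, y, mid, side, p)[0] < 0:
                    hi = mid
                else:
                    lo = mid
            _, y_hit = rk4_step(t, x, y, hi, side, p)
            t, x, y = t + hi, 0.0, y_hit
            crossings.append((t, y))
            side = -side  # continue with the field on the other side of Sigma
        else:
            t, x, y = t + step, xn, yn
    return (x, y), crossings

# Unperturbed case (C4) with alpha = 1: orbit through (0, 1)
state, hits = concatenated_flow(0.0, 0.0, 1.0, 4.5, 1.0, 0.0, 0.0, lambda t, x, y: 0.0)
# hits is approximately [(2.0, -1.0), (4.0, 1.0)]
```

In this unperturbed example, the orbit leaves \(\Sigma\) at \((0,1)\), returns at \((0,-1)\) after time 2, and closes up at time 4, as expected from the symmetries discussed in Sect. 1.2.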

1.2 Analysis of the unperturbed Filippov system

Before presenting our main results, we provide an analysis of the unperturbed Filippov system, that is, for \(\varepsilon =0\):

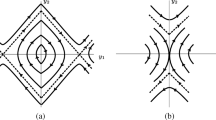

which matches

when restricted to \(x\ge 0\) and \(x\le 0\), respectively. We notice that, when \(\alpha \ne 0\), the line \(\Sigma =\{(x,y)\in \mathbb {R}^2 / x=0 \}\) represents the set of discontinuity of \(X_0\). Moreover, except for \(y=0\), the line \(\Sigma\) corresponds to a crossing region of \(X_0\), meaning that the trajectories of \(X_0\) are formed by concatenating the trajectories of \(X^{+}\) and \(X^{-}\) along \(\Sigma\). Additionally, if we consider the involution \(R(x,y)=(-x,y)\), we notice that \(X^-(x,y)=-RX^+(R(x,y))\) and \(\text {Fix}(R)\subset \Sigma\), which means that the Filippov vector field \(X_0\) is R-reversible. In the classical sense, a smooth planar vector field X is said to be R-reversible if it satisfies \(X(x,y)=-R(X(R(x,y)))\). The geometric meaning of this property is that the phase portrait of \(X_0\) is symmetric with respect to \(\text {Fix}(R)\). Furthermore, by considering the involution \(S(x,y)=(x,-y)\), we verify that both \(X^{+}\) and \(X^{-}\) are S-reversible in the classical sense. This indicates that the trajectories of \(X^{+}\) and \(X^{-}\) exhibit symmetry with respect to \(\text {Fix}(S)=\{(x,y)\in \mathbb {R}^{2}:y=0\}\) (see Figs. 1, 2 and 3).
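The reversibility relations above are pointwise identities between vector fields, so they can be spot-checked on a grid of sample points. The Python sketch below (helper names are hypothetical) writes \(X^{+}(x,y)=(y,-\alpha +\eta x)\) and \(X^{-}(x,y)=(y,\alpha +\eta x)\), and checks \(X^-(p)=-RX^+(R(p))\) together with the S-reversibility \(X(p)=-S(X(S(p)))\) of both \(X^{+}\) and \(X^{-}\).

```python
def X_plus(x, y, alpha, eta):
    return (y, -alpha + eta * x)

def X_minus(x, y, alpha, eta):
    return (y, alpha + eta * x)

def R(p):
    return (-p[0], p[1])    # involution R(x, y) = (-x, y)

def S(p):
    return (p[0], -p[1])    # involution S(x, y) = (x, -y)

def close(u, w, tol=1e-12):
    return abs(u[0] - w[0]) <= tol and abs(u[1] - w[1]) <= tol

def check_symmetries(alpha, eta, grid):
    """True if, at every grid point p, X^-(p) = -R X^+(R p) and
    X(p) = -S X(S p) for both X = X^+ and X = X^-."""
    for p in grid:
        w = R(X_plus(*R(p), alpha, eta))
        if not close(X_minus(*p, alpha, eta), (-w[0], -w[1])):
            return False
        for X in (X_plus, X_minus):
            z = S(X(*S(p), alpha, eta))
            if not close(X(*p, alpha, eta), (-z[0], -z[1])):
                return False
    return True

# Sample points in [-2, 2] x [-2, 2]
grid = [(0.5 * i, 0.5 * j) for i in range(-4, 5) for j in range(-4, 5)]
```

Because the fields are affine, these identities hold exactly, for every sign of \(\alpha\) and \(\eta\).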

Remark 1

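Let us denote \(\textbf{x}=(x,y)\) and \(\textbf{a}=(0,\alpha )\). Then, taking \(A=\left( \begin{array}{cc} 0 & 1\\ \eta & 0 \end{array}\right)\), the vector fields \(X^{+}\) and \(X^{-}\) can be rewritten as

\[ X^{+}(\textbf{x})=A\textbf{x}-\textbf{a}\qquad \text{and}\qquad X^{-}(\textbf{x})=A\textbf{x}+\textbf{a}, \]

for \(\textbf{x}\in \Sigma ^{+}=\{x>0\}\) and \(\textbf{x}\in \Sigma ^{-} =\{x<0\}\), respectively. Denoting by \(\Gamma ^{+}(t,\textbf{z}_0)\) and \(\Gamma ^{-}(t,\textbf{z}_0)\) the solutions of \(X^{+}\) and \(X^{-}\) with initial conditions \(\textbf{z}_0\in \Sigma ^{+}\) and \(\textbf{z}_0\in \Sigma ^{-}\), respectively, both solutions can be expressed explicitly by the variation of parameters formula:

\[ \Gamma ^{\pm }(t,\textbf{z}_0)=e^{At}\textbf{z}_0\mp \int _0^{t}e^{A(t-s)}\textbf{a}\,ds, \]

where \(e^{At}\) is the exponential matrix of At, given by

\[ e^{At}=\left\{ \begin{array}{ll} \left( \begin{array}{cc} \cosh (\sqrt{\eta }\,t) & \frac{1}{\sqrt{\eta }}\sinh (\sqrt{\eta }\,t)\\ \sqrt{\eta }\sinh (\sqrt{\eta }\,t) & \cosh (\sqrt{\eta }\,t) \end{array}\right) , & \eta >0,\\[2mm] \left( \begin{array}{cc} 1 & t\\ 0 & 1 \end{array}\right) , & \eta =0,\\[2mm] \left( \begin{array}{cc} \cos (\omega t) & \frac{1}{\omega }\sin (\omega t)\\ -\omega \sin (\omega t) & \cos (\omega t) \end{array}\right) , & \eta <0, \end{array}\right. \qquad (4) \]

with \(\omega =\sqrt{-\eta }\) for \(\eta <0\).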

The R-reversibility of \(X_0\) implies that \({\Gamma ^-(t,\textbf{z}_0)=R(\Gamma ^+(-t,R\cdot \textbf{z}_0))}\) and

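Now let us consider \(\textbf{x}_0=(0,y_0)\), with \(y_0>0\). For the sake of simplicity, we denote by \(\Gamma ^{+}(t,y_0)=(\Gamma _1^{+}(t,y_0),\Gamma _2^{+}(t,y_0))\) and \(\Gamma ^{-}(t,y_0)=(\Gamma _1^{-}(t,y_0),\Gamma _2^{-}(t,y_0))\) the solutions of \(X^{+}\) and \(X^{-}\), respectively, having \(\textbf{x}_0\) as the initial condition. Using Remark 1 and the expression (4) for \(e^{At}\), the solution of \(X^{+}\) having \(\textbf{x}_0\) as initial condition is given by

\[ \Gamma ^{+}(t,y_0)=\left\{ \begin{array}{ll} \left( \frac{y_0}{\sqrt{\eta }}\sinh (\sqrt{\eta }\,t)-\frac{\alpha }{\eta }\big(\cosh (\sqrt{\eta }\,t)-1\big),\; y_0\cosh (\sqrt{\eta }\,t)-\frac{\alpha }{\sqrt{\eta }}\sinh (\sqrt{\eta }\,t)\right) , & \eta >0,\\[2mm] \left( y_0t-\frac{\alpha t^{2}}{2},\; y_0-\alpha t\right) , & \eta =0,\\[2mm] \left( \frac{y_0}{\omega }\sin (\omega t)-\frac{\alpha }{\omega ^{2}}\big(1-\cos (\omega t)\big),\; y_0\cos (\omega t)-\frac{\alpha }{\omega }\sin (\omega t)\right) , & \eta <0, \end{array}\right. \qquad (6) \]

where \(\omega =\sqrt{-\eta }\), for \(\eta <0\). As previously mentioned, the R-reversibility of \(X_0\) allows us to obtain \(\Gamma ^{-}(t,y_0)\) directly from the relation

\[ \Gamma ^{-}(t,y_0)=R\,\Gamma ^{+}(-t,y_0), \qquad (7) \]

which follows from Remark 1 because \(R\textbf{x}_0=\textbf{x}_0\).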

Next, we classify all possible geometric configurations of \(X_0\) as \(\alpha\) and \(\eta\) vary, and we describe the singularities of \(X_0\) in the context of Filippov systems (see [7]). Denoting \(p=(0,0)\), \(p^+=(\alpha /\eta ,0)\), and \(p^-=(-\alpha /\eta ,0)\), for \(\eta \ne 0\), the geometric configurations of \(X_0\) are as follows:

- (C1): \(\eta >0\) and \(\alpha >0\). The points p, \(p^+\), and \(p^-\) are the singularities of \(X_0\); p is an invisible fold–fold point of center type, and \(p^+\) and \(p^-\) are both admissible linear saddles (see Fig. 1);
- (C2): \(\eta >0\) and \(\alpha =0\). \(X_0\) is a smooth vector field whose only singularity, p, is a saddle (see Fig. 1);
- (C3): \(\eta >0\) and \(\alpha <0\). The only singularity of \(X_0\) is p, which is a visible fold–fold (see Fig. 1);
- (C4): \(\eta =0\) and \(\alpha >0\). The only singularity of \(X_0\) is p, which is an invisible fold–fold as well as a center (see Fig. 2);
- (C5): \(\eta =0\) and \(\alpha =0\). \(X_0\) is a smooth vector field whose set of singularities is the line \(\{y=0\}\) (see Fig. 2);
- (C6): \(\eta =0\) and \(\alpha <0\). The only singularity of \(X_0\) is p, which is a visible fold–fold (see Fig. 2);
- (C7): \(\eta <0\) and \(\alpha >0\). The point p is an invisible fold–fold and also a center of \(X_0\) (see Fig. 3);
- (C8): \(\eta <0\) and \(\alpha =0\). \(X_0\) is a smooth vector field whose only singularity, p, is a center (see Fig. 3);
- (C9): \(\eta <0\) and \(\alpha <0\). The point p is a visible fold–fold, and the points \(p^+\) and \(p^-\) are both linear centers of \(X_0\); these are the only singularities of \(X_0\) (see Fig. 3).
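The case distinction above depends only on the signs of \(\eta\) and \(\alpha\). A small Python helper (the name `classify` is hypothetical) encodes it, and also flags the cases with a period annulus, (C1), (C4), (C7), (C8), (C9), and the cases covered by Theorem A, (C1), (C4), (C7), (C9), since the linear center in (C8) is excluded in Remark 2.

```python
def classify(alpha, eta):
    """Map (alpha, eta) to the configuration label (C1)-(C9) of X_0.

    Returns (label, has_period_annulus, covered_by_theorem_a)."""
    row = 0 if eta > 0 else (1 if eta == 0 else 2)      # eta > 0, = 0, < 0
    col = 0 if alpha > 0 else (1 if alpha == 0 else 2)  # alpha > 0, = 0, < 0
    case = 3 * row + col + 1
    return f"C{case}", case in (1, 4, 7, 8, 9), case in (1, 4, 7, 9)

print(classify(-0.5, -2.0))  # ('C9', True, True)
```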

In cases (C1), (C4), (C7), (C8), and (C9), there is a region filled by a family of periodic orbits (see Figs. 1, 2 and 3). This region is called a period annulus and will be denoted by \(\mathcal {A}_i\), with \(i\in \{1,4,7,8,9\}.\) In each of these cases, there exists a half-period function \(\tau ^{+}(y_0)\) (resp. \(\tau ^{-}(y_0)\)), for \(y_0>0\), giving the smallest positive (resp. greatest negative) time at which the solution \(\Gamma ^+(t,y_0)\) (resp. \(\Gamma ^-(t,y_0)\)), with \((0,y_0)\in \mathcal {A}_i\), reaches the discontinuity line \(\Sigma\) again. In each of these cases, the half-period function is given by \(\tau ^{+}(y_0)= \tau _0(y_0)\) and \(\tau ^{-}(y_0)= -\tau _0(y_0)\), where, using the S-reversibility of \(X^{+}\), the function \(\tau _0(y_0)\) is the solution of the boundary problem

The expression for \(\tau_0\) is given by

It is noteworthy that in cases (C7) and (C9) the boundary problem above provides infinitely many solutions. Nevertheless, due to the dynamics of the unperturbed Filippov system near \(y_0=0\), the solutions to be considered must satisfy the conditions \(\lim _{y_0\rightarrow 0^{+}}\tau _0(y_0) =0\) in case (C7) and \(\lim _{y_0\rightarrow 0^{+}}\tau _0(y_0) =2\pi /\omega\) in case (C9).
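The variation of parameters representation of \(\Gamma ^{\pm }\) in Remark 1, together with the relation \(\Gamma ^-(t,\textbf{z}_0)=R(\Gamma ^+(-t,R\textbf{z}_0))\), can be sanity-checked numerically. The Python sketch below (function names are hypothetical) evaluates \(e^{At}\) in closed form using \(A^2=\eta I\), approximates the integral by composite Simpson's rule, and compares the result with a direct RK4 integration of \(X^{\pm }\).

```python
import math

def exp_At(eta, t):
    """Closed form of e^{At} for A = [[0, 1], [eta, 0]], using A^2 = eta * I."""
    if eta > 0:
        nu = math.sqrt(eta)
        c, s = math.cosh(nu * t), math.sinh(nu * t)
        return ((c, s / nu), (nu * s, c))
    if eta == 0:
        return ((1.0, t), (0.0, 1.0))
    w = math.sqrt(-eta)
    c, s = math.cos(w * t), math.sin(w * t)
    return ((c, s / w), (-w * s, c))

def matvec(M, v):
    return (M[0][0] * v[0] + M[0][1] * v[1], M[1][0] * v[0] + M[1][1] * v[1])

def gamma_vop(t, z0, alpha, eta, side, n=200):
    """e^{At} z0 - side * int_0^t e^{A(t-s)} a ds with a = (0, alpha);
    side = +1 gives X^+ = Ax - a, side = -1 gives X^- = Ax + a.
    The integral uses composite Simpson's rule (n even; t may be negative)."""
    h = t / n
    acc = [0.0, 0.0]
    for k in range(n + 1):
        wgt = 1 if k in (0, n) else (4 if k % 2 else 2)
        v = matvec(exp_At(eta, t - k * h), (0.0, alpha))
        acc[0] += wgt * v[0]
        acc[1] += wgt * v[1]
    base = matvec(exp_At(eta, t), z0)
    return (base[0] - side * acc[0] * h / 3, base[1] - side * acc[1] * h / 3)

def gamma_rk4(t, z0, alpha, eta, side, n=2000):
    """Direct RK4 integration of x' = y, y' = eta*x - side*alpha from z0."""
    h = t / n
    F = lambda z: (z[1], eta * z[0] - side * alpha)
    z = z0
    for _ in range(n):
        k1 = F(z)
        k2 = F((z[0] + h / 2 * k1[0], z[1] + h / 2 * k1[1]))
        k3 = F((z[0] + h / 2 * k2[0], z[1] + h / 2 * k2[1]))
        k4 = F((z[0] + h * k3[0], z[1] + h * k3[1]))
        z = (z[0] + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
             z[1] + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))
    return z
```

For an initial condition \(\textbf{z}_0=(0,y_0)\) on \(\Sigma\) one has \(R\textbf{z}_0=\textbf{z}_0\), so the reversibility relation reduces to comparing `gamma_rk4(t, z0, ..., side=-1)` with the image under R of `gamma_vop(-t, z0, ..., side=+1)`.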

Taking into account the S-reversibility of both \(X^{+}\) and \(X^{-}\), along with Eq. (7), we deduce that

\[ \Gamma ^{+}(\tau _0(y_0),y_0)=\Gamma ^{-}(-\tau _0(y_0),y_0)=(0,-y_0), \qquad (9) \]

which will play an important role throughout this work.

Remark 2

We notice that the origin \(p=(0,0)\) in case (C8), as well as the points \(p^+=(\alpha /\eta ,0)\) and \(p^-=(-\alpha /\eta ,0)\) in case (C9), are linear centers, which have been extensively studied in the literature (see, for instance, [12]). For this reason, from now on, we do not consider these centers in our study.

For \(i\in \{1,4,7,9\}\), we denote by \(\mathcal {D}_i\) the interval of definition of \(\tau _0\) and by \(\mathcal {I}_i\) its image. From the possible expressions of \(\tau _0\) in (8), one observes that \(\tau '_0(y_0)>0\) in \(\mathcal {D}_i\), for \(i\in \{1,4,7\}\), and \(\tau '_0(y_0)<0\) in \(\mathcal {D}_9\). Consequently, \(\tau _0\) is a bijection between \(\mathcal {D}_i\) and \(\mathcal {I}_i\) for \(i\in \{1,4,7,9\}\). Its inverse, denoted by \(v:\mathcal {I}_i\rightarrow \mathcal {D}_i\), is given by

Although the expressions for \(v(\sigma )\) coincide in cases (C7) and (C9), the two functions are different: their domains do not coincide, and \(\alpha\) has opposite signs in the two cases.
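The half-period \(\tau _0(y_0)\) and its inverse v can also be evaluated numerically, which is convenient for checking the hypothesis \(\sigma /2\in \mathcal {I}_i\) of Theorem A. The Python sketch below is an illustration under our own assumptions: it uses the first component of \(\Gamma ^{+}\) from the variation of parameters formula, finds the first positive zero of \(\Gamma _1^{+}(\cdot ,y_0)\) by scanning plus bisection, and inverts \(\tau _0\) by bisection on a user-supplied bracket inside \(\mathcal {D}_i\) (function names, step sizes, and brackets are hypothetical). In case (C4), one checks by hand that \(\tau _0(y_0)=2y_0/\alpha\), so \(v(\sigma )=\alpha \sigma /2\).

```python
import math

def gamma1_plus(t, y0, alpha, eta):
    """First component of Gamma^+(t, y0): solution of X^+ = (y, eta*x - alpha)
    starting at (0, y0), from the variation of parameters formula."""
    if eta > 0:
        nu = math.sqrt(eta)
        return y0 * math.sinh(nu * t) / nu - alpha * (math.cosh(nu * t) - 1) / eta
    if eta == 0:
        return y0 * t - alpha * t * t / 2
    w = math.sqrt(-eta)
    return y0 * math.sin(w * t) / w - alpha * (1 - math.cos(w * t)) / (w * w)

def tau0(y0, alpha, eta, dt=1e-3, t_max=100.0):
    """Smallest t > 0 with Gamma_1^+(t, y0) = 0 (first return of the X^+ orbit
    to Sigma), or None if no return is found before t_max."""
    t = dt
    while t < t_max:
        if gamma1_plus(t + dt, y0, alpha, eta) <= 0:
            lo, hi = t, t + dt  # Gamma_1^+ > 0 at lo and <= 0 at hi
            for _ in range(60):
                mid = (lo + hi) / 2
                if gamma1_plus(mid, y0, alpha, eta) > 0:
                    lo = mid
                else:
                    hi = mid
            return (lo + hi) / 2
        t += dt
    return None

def v_of(s, alpha, eta, lo, hi, iters=50):
    """Inverse of tau0: solve tau0(y0) = s for y0 in [lo, hi] by bisection,
    assuming [lo, hi] lies in D_i and tau0 - s changes sign on it."""
    g_lo = tau0(lo, alpha, eta) - s
    for _ in range(iters):
        mid = (lo + hi) / 2
        g_mid = tau0(mid, alpha, eta) - s
        if (g_mid > 0) == (g_lo > 0):
            lo, g_lo = mid, g_mid
        else:
            hi = mid
    return (lo + hi) / 2
```

The scan returns None when the orbit does not come back to \(\Sigma\) within `t_max`, which happens, for example, in case (C1) when \(y_0\) lies outside the period annulus.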

1.3 Main result

As usual, the Melnikov method for determining the persistence of periodic solutions provides a bifurcation function whose simple zeros are associated with periodic solutions bifurcating from a period annulus. We now introduce this function for the differential Eq. (1).

Recall that \(f(t,x,\dot{x})\) is a \(\mathcal {C}^{1}\) function, \(\sigma -\)periodic in the variable t. Let \(\Gamma (t,y_0)\) and \(v(\sigma )\) be the functions defined in (6) and (10), respectively. We define the Melnikov-like function \(M:\mathbb {R}\rightarrow \mathbb {R}\) as

where

with \(\omega =\sqrt{-\eta }>0,\) for \(\eta <0\). Notice that, since \(f(t,x,\dot{x})\) is \(\sigma\)-periodic in t, the Melnikov-like function M is \(\sigma\)-periodic. We derive the expression of M in the proof of the following result concerning periodic solutions of the differential Eq. (1). Its proof is postponed to Sect. 2.

Theorem A

Suppose that, for some \(i\in \{1,4,7,9\}\), the parameters \(\alpha\) and \(\eta\) of the differential Eq. (1) satisfy condition (Ci) and that \(\sigma /2\in \mathcal {I}_i\). Then, for each \(\phi ^*\in [0,\sigma ]\) such that \(M(\phi ^*)=0\) and \(M'(\phi ^*)\ne 0\), there exist \(\overline{\varepsilon }>0\) and a unique smooth branch \(x_{\varepsilon }(t)\), \(\varepsilon \in (-\overline{\varepsilon },\overline{\varepsilon })\), of isolated \(\sigma\)-periodic solutions of the differential Eq. (1) satisfying \((x_0(\phi ^*),\dot{x}_0(\phi ^*))=(0,v(\sigma /2))\).
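Theorem A reduces the search for periodic solutions to locating simple zeros of the \(\sigma\)-periodic function M on \([0,\sigma ]\). The Python sketch below treats M as an arbitrary \(\sigma\)-periodic callable: it detects sign changes on a uniform grid, refines them by bisection, and keeps only zeros whose central-difference derivative is not negligible. The grid size, thresholds, and the placeholder function in the example are hypothetical; the placeholder is not the Melnikov function (11).

```python
import math

def simple_zeros(M, sigma, n_grid=2000, d_min=1e-8, dh=1e-6):
    """Return (phi*, M'(phi*)) for zeros of the sigma-periodic function M in
    [0, sigma) found by sign changes on a uniform grid and refined by
    bisection; zeros with |M'(phi*)| <= d_min are discarded as non-simple."""
    out = []
    h = sigma / n_grid
    for k in range(n_grid):
        a, b = k * h, (k + 1) * h
        fa, fb = M(a), M(b)
        if fa == 0.0:
            z = a
        elif fa * fb < 0:
            lo, hi, flo = a, b, fa
            for _ in range(60):
                mid = (lo + hi) / 2
                fm = M(mid)
                if (fm > 0) == (flo > 0):
                    lo, flo = mid, fm
                else:
                    hi = mid
            z = (lo + hi) / 2
        else:
            continue
        d = (M(z + dh) - M(z - dh)) / (2 * dh)  # central-difference estimate of M'
        if abs(d) > d_min:
            out.append((z, d))
    return out

# Hypothetical placeholder with two simple zeros per period
sigma = 2 * math.pi
M = lambda phi: math.sin(2 * math.pi * phi / sigma) + 0.3
zeros = simple_zeros(M, sigma)
```

A grid search of this kind can miss two zeros lying in the same grid cell, and it does not certify simplicity rigorously; it is only a practical way to produce candidates \(\phi ^*\) for Theorem A.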

Remark 3

It is important to emphasize that the periodic solutions obtained in Theorem A bifurcate from the interior of the period annulus \(\mathcal {A}_i\), \(i\in \{1,4,7,9\}\). In case (C9), the two homoclinic orbits joining \(p=(0,0)\) form the boundary of \(\mathcal {A}_9\). Therefore, their persistence is not addressed by Theorem A, as reflected in the conclusion \(\dot{x}_0(\phi ^*)= v(\sigma /2)\in \mathcal {D}_9=(0,\infty )\).

1.4 Example

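To illustrate an application of Theorem A, we examine the differential equation

\[ \ddot{x}+\alpha \, \textrm{sign}(x)=\eta x+\varepsilon \sin (\beta t), \qquad (13) \]

with \(\alpha >0\) and \(\eta >0\).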

This equation was previously studied in [4], where the authors provided conditions on \(\alpha\), \(\eta\), and \(\beta\) ensuring the existence of a discrete family of simple periodic solutions of (13). Taking \(f(t,x,\dot{x})=\sin (\beta t)\) in (1), we obtain a result similar to [4, Theorem 2.1.1], as follows.

Proposition 4

Given \(n\in \mathbb {N}\), there exists \(\overline{\varepsilon }_n>0\) such that, for every \(\varepsilon \in (- \overline{\varepsilon }_n, \overline{\varepsilon }_n)\), the differential Eq. (13) has n isolated \(\frac{2\pi }{\beta }(2k-1)\)-periodic solutions, for \(k\in \{1,\ldots ,n\}\), whose initial conditions are \((x_{\varepsilon }^{k}(0),\dot{x}_{\varepsilon }^{k}(0))=\left( 0,\frac{\alpha }{\sqrt{\eta }}\tanh (\frac{\pi \sqrt{\eta }\,(2k-1)}{\beta }) \right) +\mathcal {O}(\varepsilon )\).

The main difference between the two results is that Proposition 4 relies on perturbation theory, whereas Theorem 2.1.1 in [4] provides a precise upper bound for \(\varepsilon\) by means of direct computations.

Proof

In Eq. (13), the parameters \(\alpha\) and \(\eta\) satisfy condition (C1). Let us define \(\sigma _i=2\pi \beta ^{-1} i\), for \(i\in \mathbb {N}\). Since \(f(t,x,\dot{x})=\sin (\beta t)\) is \(\sigma _1\)-periodic in t, it is also \(\sigma _i\)-periodic in t. Moreover, for every \(i\in \mathbb {N}\), we have \(\sigma _i/2\in \mathcal {I}_1\). Then, for \(i\in \mathbb {N}\), we compute the Melnikov function defined in (11) corresponding to the period \(\sigma _i\). This function takes the form

Notice that, if i is odd, then

while for even values of i, \(M_i(\phi )=0\). Given \(n\in \mathbb {N}\), we observe that \(M_{2k-1}(0)=0\) and \(M_{2k-1}'(0)\ne 0\) for each \(k\in \{1,\ldots ,n\}\). Applying Theorem A, it follows that, for each \(k\in \{1,\ldots ,n\}\), there exist \(\varepsilon _k>0\) and a unique branch \(x_{\varepsilon }^{k}(t)\), \(\varepsilon \in (-\varepsilon _k,\varepsilon _k)\), of isolated \(\overline{\sigma }_k\)-periodic solutions of the differential Eq. (13) satisfying \((x_{\varepsilon }^{k}(0),\dot{x}_{\varepsilon }^{k}(0))=(0,v(\overline{\sigma }_k/2))+\mathcal {O}(\varepsilon )\), where \(\overline{\sigma }_k=\sigma _{2k-1}\). Since the periods \(\overline{\sigma }_k\), \(k\in \{1,\ldots ,n\}\), are pairwise distinct and v is injective, we have \(v(\overline{\sigma }_{k_1}/2) \ne v(\overline{\sigma }_{k_2}/2)\) whenever \(k_1\ne k_2\). Therefore, by taking \(\overline{\varepsilon }_n=\min _{1\le k\le n} \{\varepsilon _k\}\), we conclude the proof of the proposition. \(\square\)

2 Proof of Theorem A

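In order to prove Theorem A, we consider the extended differential system associated with (2), given by

\[ \theta '=1,\qquad x'=y,\qquad y'=-\alpha \, \textrm{sign}(x)+\eta x+\varepsilon f(\theta ,x,y), \qquad (14) \]

where the prime denotes the derivative with respect to the time variable \(\tau\). Due to the \(\sigma\)-periodicity of \(f(\theta ,x,y)\) in \(\theta\), system (14) has \(\mathbb {S}_{\sigma }\times \mathbb {R}^{2}\), with \(\mathbb {S}_{\sigma }=\mathbb {R}/\sigma \mathbb {Z}\), as its extended phase space. The differential system (14) matches

\[ \theta '=1,\quad x'=y,\quad y'=-\alpha +\eta x+\varepsilon f(\theta ,x,y) \qquad \text{and} \qquad \theta '=1,\quad x'=y,\quad y'=\alpha +\eta x+\varepsilon f(\theta ,x,y) \qquad (15) \]

when restricted to \(x\ge 0\) and \(x\le 0\), respectively. The solutions of the differential systems in (15) with initial condition \((\theta _0,0,y_0)\), for \(y_0 >0\), are given by the functions

\[ \Phi ^{+}(\tau ,\theta _0,y_0;\varepsilon )=\big( \theta _0+\tau ,\,\varphi ^{+}(\tau ,\theta _0,y_0;\varepsilon )\big) \qquad (16) \]

and

\[ \Phi ^{-}(\tau ,\theta _0,y_0;\varepsilon )=\big( \theta _0+\tau ,\,\varphi ^{-}(\tau ,\theta _0,y_0;\varepsilon )\big) , \qquad (17) \]

respectively, where \(\varphi ^{\pm }(\tau ,\theta _0,y_0;\varepsilon ) =( \varphi ^{\pm }_1(\tau ,\theta _0,y_0;\varepsilon ), \varphi ^{\pm }_2(\tau ,\theta _0,y_0;\varepsilon ) )\) is the solution of the Cauchy problem

\[ \frac{d\textbf{x}}{d\tau }=A\textbf{x}\mp \textbf{a}+\varepsilon F(\theta _0+\tau ,\textbf{x}),\qquad \textbf{x}(0)=\textbf{x}_0, \qquad (18) \]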

with \(\textbf{x}_0=(0,y_0)\) and \(F(t,\textbf{x})=(0,f(t,\textbf{x}))\). Since the switching plane \(\Sigma '=\{(\theta ,x,y)\in \mathbb {R}^{3}:x=0\}\), with \(y\ne 0\), is a crossing region for the differential system (14), it follows that solutions of (14) arise from the concatenation of \(\Phi ^{+}\) and \(\Phi ^{-}\) along \(\Sigma '\) when these solutions intersect \(\Sigma '\) transversely, as previously mentioned. We denote this concatenated solution by \(\Phi (\tau ,\theta _0,y_0;\varepsilon )\).

2.1 Construction of the displacement function

Taking (9) into account, we notice that, for \(y_0 > 0\),

and

According to the Implicit Function Theorem, there exist smooth functions \(\tau ^{+}:\mathcal {U}_{(\theta _0,y_0;0)}\rightarrow \mathcal {V}^{+}_{\tau ^{+}_0(y_0)}\) and \(\tau ^{-}:\mathcal {U}_{(\theta _0,y_0;0)}\rightarrow \mathcal {V}^{-}_{\tau ^{-}_0(y_0)}\), where \(\mathcal {U}_{(\theta _0,y_0;0)}\) and \(\mathcal {V}^{\pm }_{\tau ^{\pm }_0(y_0)}\) are small neighborhoods of \((\theta _0,y_0;0)\) and \(\tau ^{\pm }_0(y_0)\), respectively. These functions satisfy

for every \((\theta ,y;\varepsilon )\in \mathcal {U}_{(\theta _0,y_0;0)}\).

Thus, the functions \(\tau ^{+}(\theta _0,y_0;\varepsilon )\) and \(\tau ^{-}(\theta _0,y_0;\varepsilon )\), combined with the solutions (16) and (17) of the extended differential system (14), allow us to construct a displacement function, \(\Delta (\theta _0,y_0;\varepsilon )\), that “controls” the existence of periodic solutions of (1) as follows

since \(\tau ^{-}(\theta _0,y_0;\varepsilon )\) is \(\sigma\)-periodic in \(\theta _0\). The displacement function \(\Delta (\theta _0,y_0;\varepsilon )\) computes the difference in \(\Sigma '\) between the points \(\Phi ^{+}(\tau ^{+}(\theta _0,y_0;\varepsilon ),\theta _0,y_0;\varepsilon )\) and \(\Phi ^{-}(\tau ^{-}(\theta _0,y_0;\varepsilon ),\theta _0+\sigma ,y_0;\varepsilon )\) (see Fig. 4). Thus, if \(\Delta (\theta _0^{*},y_0^{*};\varepsilon ^{*})=0\) for some \((\theta _0^{*},y_0^{*};\varepsilon ^{*})\in [0,\sigma ]\times \mathbb {R}^{+}\times \mathbb {R}\), then the solution \(\Phi (\tau ,\theta _0^{*},y_0^{*};\varepsilon ^{*})\) is \(\sigma\)-periodic in \(\tau\), meaning that \(\Phi (\tau ,\theta _0^{*},y_0^{*};\varepsilon ^{*})\) and \(\Phi (\tau +\sigma ,\theta _0^{*},y_0^{*};\varepsilon ^{*})\) are identified in the quotient space \(\mathbb {S}_{\sigma }\times \mathbb {R}^{2}\). Furthermore, from the definitions of \(\Phi ^{+}\) and \(\Phi ^{-}\) in (16) and (17), respectively, we have that

In what follows, we provide preliminary results concerning the main ingredients constituting the displacement function \(\Delta (\theta _0,y_0;\varepsilon )\).
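The construction of \(\Delta\) can be mimicked numerically without invoking the Implicit Function Theorem: integrate \(X^{+}\) forward from \((\theta _0,0,y_0)\) and \(X^{-}\) backward from \((\theta _0+\sigma ,0,y_0)\) until each returns to \(\Sigma '\), and compare the two hitting points. The Python sketch below does this with a fixed-step RK4 scheme and bisection for the hitting times; the function names, step size, and tolerances are our own assumptions, and transversal returns are presumed. It returns the \(\theta\)- and y-differences, i.e. \(\Delta _1\) and \(\Delta _3\), since the x-components both vanish on \(\Sigma '\).

```python
def rhs(tau, x, y, theta0, side, alpha, eta, eps, f):
    # One of the systems (15) with theta = theta0 + tau; side = +1 (x >= 0) or -1 (x <= 0)
    return y, -alpha * side + eta * x + eps * f(theta0 + tau, x, y)

def rk4(tau, x, y, h, args):
    k1 = rhs(tau, x, y, *args)
    k2 = rhs(tau + h / 2, x + h / 2 * k1[0], y + h / 2 * k1[1], *args)
    k3 = rhs(tau + h / 2, x + h / 2 * k2[0], y + h / 2 * k2[1], *args)
    k4 = rhs(tau + h, x + h * k3[0], y + h * k3[1], *args)
    return (x + h / 6 * (k1[0] + 2 * k2[0] + 2 * k3[0] + k4[0]),
            y + h / 6 * (k1[1] + 2 * k2[1] + 2 * k3[1] + k4[1]))

def hit_sigma(theta0, y0, side, direction, params, h=1e-3, tau_max=100.0):
    """Flow from (theta0, 0, y0) with the field `side`, forward (direction=+1)
    or backward (direction=-1) in time, until x returns to 0.
    Returns (tau, y) at the return to Sigma'."""
    alpha, eta, eps, f = params
    args = (theta0, side, alpha, eta, eps, f)
    tau, x, y, step = 0.0, 0.0, y0, direction * h
    while abs(tau) < tau_max:
        xn, yn = rk4(tau, x, y, step, args)
        if side * xn < 0:  # crossed Sigma': bisect on the fraction of the step
            lo, hi = 0.0, 1.0
            for _ in range(60):
                mid = (lo + hi) / 2
                if side * rk4(tau, x, y, mid * step, args)[0] < 0:
                    hi = mid
                else:
                    lo = mid
            return tau + hi * step, rk4(tau, x, y, hi * step, args)[1]
        tau, x, y = tau + step, xn, yn
    raise RuntimeError("trajectory did not return to Sigma'")

def displacement(theta0, y0, sigma, params):
    """(Delta_1, Delta_3): theta- and y-differences between the forward X^+
    hit started at theta0 and the backward X^- hit started at theta0 + sigma."""
    tp, yp = hit_sigma(theta0, y0, +1, +1, params)
    tm, ym = hit_sigma(theta0 + sigma, y0, -1, -1, params)
    return (theta0 + tp) - (theta0 + sigma + tm), yp - ym

# Unperturbed case (C4) with alpha = 1, where tau_0(y0) = 2*y0
params = (1.0, 0.0, 0.0, lambda t, x, y: 0.0)
```

In this unperturbed example, \(\Delta\) vanishes when \(\sigma =2\tau _0(y_0)\), and a finite difference of \(\Delta _1\) in \(y_0\) reproduces \(2\tau _0'(y_0)=4\). For \(\varepsilon \ne 0\), the same function can be evaluated with any \(\sigma\)-periodic forcing passed in `params`.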

2.2 Preliminary results

This section presents preliminary results on the solutions of (18) and on the time functions \(\tau ^+(\theta _0,y_0;\varepsilon )\) and \(\tau ^-(\theta _0,y_0;\varepsilon )\) introduced above. We begin with a result describing the behavior of the solutions of (18) as \(\varepsilon\) approaches zero.

Proposition 5

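For sufficiently small \(|\varepsilon |\), the function \(\varphi ^{\pm }(t,\theta _0,y_0;\varepsilon )\) can be written as

\[ \varphi ^{\pm }(t,\theta _0,y_0;\varepsilon )=\Gamma ^{\pm }(t,y_0)+\varepsilon \, \psi ^{\pm }(t,\theta _0,y_0)+\mathcal {O}(\varepsilon ^{2}), \qquad (21) \]

where \(\Gamma ^{+}(t,y_0)\) and \(\Gamma ^{-}(t,y_0)\) are the functions given in (6) and (7), respectively, and

\[ \psi ^{\pm }(t,\theta _0,y_0)=\int _0^{t}e^{A(t-s)}F\big( \theta _0+s,\Gamma ^{\pm }(s,y_0)\big) \,ds. \qquad (22) \]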

Proof

Since \(\varphi ^{\pm }(t,\theta _0,y_0;\varepsilon )\) is the solution to the Cauchy problem (18), then it must satisfy the integral equation

which, by expanding in Taylor series around \(\varepsilon =0\), gives us

Then, taking into account the expression for \(\varphi ^{\pm }(t,\theta _0,y_0;\varepsilon )\) in (21) and the computations above, we have that

which implies that \(\psi ^{\pm }\) is the solution of the Cauchy problem

\[ \dot{\psi }=A\psi +F\big( \theta _0+t,\Gamma ^{\pm }(t,y_0)\big) ,\qquad \psi (0)=(0,0). \]

Then, the general formula for solutions of linear differential equations yields relationship (22). \(\square\)
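Proposition 5 can be checked numerically on a short time interval where the orbit stays in \(\{x>0\}\): if \(\varphi ^{+}=\Gamma ^{+}+\varepsilon \psi ^{+}+\mathcal {O}(\varepsilon ^2)\), halving \(\varepsilon\) should divide the remainder by about 4. The Python sketch below integrates \(\varphi ^{+}\), \(\Gamma ^{+}\), and \(\psi ^{+}\) together with RK4, using the Cauchy problem above for \(\psi ^{+}\). The forcing \(f(t,x,y)=\sin t+xy\) and all parameter values are hypothetical choices made only for illustration; the chosen parameters correspond to case (C7).

```python
import math

def expansion_error(eps, t_end=1.0, theta0=0.4, y0=0.8, alpha=1.0, eta=-1.0, n=4000):
    """|phi^+(t_end) - Gamma^+(t_end) - eps * psi^+(t_end)| for the field X^+.

    phi^+ solves x' = A x - a + eps F(theta0 + t, x), Gamma^+ is the eps = 0
    solution, and psi^+ solves psi' = A psi + F(theta0 + t, Gamma^+(t)) with
    psi(0) = 0. For these parameters the unperturbed orbit stays in x > 0
    on [0, t_end]."""
    f = lambda t, x, y: math.sin(t) + x * y  # hypothetical C^1 forcing

    def rhs(t, s):
        x, y, gx, gy, px, py = s
        return (y, eta * x - alpha + eps * f(theta0 + t, x, y),  # phi^+
                gy, eta * gx - alpha,                             # Gamma^+
                py, eta * px + f(theta0 + t, gx, gy))             # psi^+

    s = (0.0, y0, 0.0, y0, 0.0, 0.0)
    h, t = t_end / n, 0.0
    for _ in range(n):
        k1 = rhs(t, s)
        k2 = rhs(t + h / 2, tuple(a + h / 2 * b for a, b in zip(s, k1)))
        k3 = rhs(t + h / 2, tuple(a + h / 2 * b for a, b in zip(s, k2)))
        k4 = rhs(t + h, tuple(a + h * b for a, b in zip(s, k3)))
        s = tuple(a + h / 6 * (b1 + 2 * b2 + 2 * b3 + b4)
                  for a, b1, b2, b3, b4 in zip(s, k1, k2, k3, k4))
        t += h
    x, y, gx, gy, px, py = s
    return math.hypot(x - gx - eps * px, y - gy - eps * py)

# Close to 4 when the remainder is O(eps^2)
ratio = expansion_error(1e-2) / expansion_error(5e-3)
```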

In what follows, we describe the behavior of the time functions \(\tau ^{+}(\theta ,y;\varepsilon )\) and \(\tau ^{-}(\theta ,y;\varepsilon )\) satisfying (20).

Proposition 6

Let \(\tau ^{\pm }(\theta _0,y_0;\varepsilon )\) be the time satisfying Eq. (20). Then, for sufficiently small \(|\varepsilon |\) and \(y_0>0\) we have

with

Proof

By expanding (20) in Taylor series around \(\varepsilon =0\), we have

which implies that

Equation (23), together with (19), concludes the proof of the proposition. \(\square\)

The following result plays an important role in describing the behaviour of \(\varphi ^{\pm }_2\) around \(\varepsilon =0\). It is important to mention that, in our context, we identify vectors with column matrices.

Proposition 7

Let \(v_1(t)\) and \(v_2(t)\) denote the rows of the matrix \(e^{A t}\). Then, for every \(y_0>0\), the following identity holds

where \(\tau _0(y_0)\) is the half-period function defined in (8).

Proof

We define the auxiliary matrix-valued function

which is continuously differentiable for every \(t\in \mathbb {R}\). By differentiating \(\beta (t)\), we have that

The computation above implies that each entry of \(\beta (t)\) is constant, that is,

for every \(t\in \mathbb {R}\). In particular,

and this concludes the proof of the proposition. \(\square\)

In the following discussion, we provide key relationships for the fundamental components that appear in the expressions of \(\varphi ^{+}_2(\tau ^{+}(\theta _0,y_0;\varepsilon ),\theta _0,y_0;\varepsilon )\) and \(\varphi ^{-}_2(\tau ^{-}(\theta _0,y_0;\varepsilon ),\theta _0+\sigma ,y_0;\varepsilon )\) for sufficiently small values of \(|\varepsilon |\). We start by deriving a more detailed expression for \(\psi ^+(t,\theta _0,y_0)\). Taking relation (22) into account, we have

where

This remark leads us to the following result.

Lemma 8

For sufficiently small \(|\varepsilon |\), the function \(\varphi ^{+}_2(\tau ^{+}(\theta _0,y_0;\varepsilon ),\theta _0,y_0;\varepsilon )\) writes as

Proof

By expanding \(\varphi ^{+}_2(\tau ^{+}(\theta _0,y_0;\varepsilon ),\theta _0,y_0;\varepsilon )\) in Taylor series around \(\varepsilon =0\), we have that

where

Thus, by considering the relationship (24), the function \(\xi ^+(\theta _0,y_0)\) can be rewritten as follows

where the last equality follows from Proposition 7. Taking (9) into account completes the proof of the lemma. \(\square\)

In order to obtain analogous results as those achieved for \(\varphi ^{+}_2(\tau ^{+}(\theta _0,y_0;\varepsilon ),\theta _0,y_0;\varepsilon )\) in the context of the function \(\varphi ^{-}_2(\tau ^{-}(\theta _0,y_0;\varepsilon ),\theta _0+\sigma ,y_0;\varepsilon )\), we follow the previously outlined procedure. Then, by taking into account that F(t, x, y) is a \(\sigma\)-periodic function in t and relationship (5), we notice that

where

Proceeding as in Lemma 8, we obtain the following result on the behavior of \(\varphi ^{-}_2(\tau ^{-}(\theta _0,y_0;\varepsilon ),\theta _0+\sigma ,y_0;\varepsilon )\) around \(\varepsilon =0\).

Lemma 9

For sufficiently small \(| \varepsilon |\), the function \(\varphi ^{-}_2(\tau ^{-}(\theta _0,y_0;\varepsilon ),\theta _0+\sigma ,y_0;\varepsilon )\) writes as

Before we proceed with the proof of Theorem A, let us perform some computations that play an important role in deriving the desired Melnikov-like function. Let \(I^+(t,\theta _0,y_0)\) and \(I^-(t,\theta _0,y_0)\) be the integrals defined in (25) and (26), respectively. Recall that \(F(t,x,y)=(0,f(t,x,y))\). Taking \(u(t)=(u_1(t),u_2(t))\) to be the second column of \(e^{-At}\), that is,

with \(\omega =\sqrt{-\eta }\), it follows that

where we are defining \(g(s,\theta _0,y_0):= f(\theta _0+s,\Gamma ^+(s,y_0))+f(\theta _0-s,R\Gamma ^+(s,y_0))\). Notice that \(g(s,\theta _0,y_0)\) is \(\sigma\)-periodic in \(\theta _0\).
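The reduction above uses the fact that, since \(F=(0,f)\), one has \(e^{-As}F(\theta _0+s,\textbf{x})=f(\theta _0+s,\textbf{x})\,u(s)\), where u is the second column of \(e^{-At}\). The Python sketch below (helper names are hypothetical) computes \(u(t)\) from the closed form of \(e^{At}\), which relies on \(A^2=\eta I\), and lets one check \(e^{At}e^{-At}=I\), the identity above, and \(u'(t)=-Au(t)\).

```python
import math

def exp_At(eta, t):
    """Closed form of e^{At} for A = [[0, 1], [eta, 0]], using A^2 = eta * I."""
    if eta > 0:
        nu = math.sqrt(eta)
        c, s = math.cosh(nu * t), math.sinh(nu * t)
        return ((c, s / nu), (nu * s, c))
    if eta == 0:
        return ((1.0, t), (0.0, 1.0))
    w = math.sqrt(-eta)
    c, s = math.cos(w * t), math.sin(w * t)
    return ((c, s / w), (-w * s, c))

def matmul(P, Q):
    return tuple(tuple(sum(P[i][k] * Q[k][j] for k in range(2)) for j in range(2))
                 for i in range(2))

def matvec(M, v):
    return (M[0][0] * v[0] + M[0][1] * v[1], M[1][0] * v[0] + M[1][1] * v[1])

def u(eta, t):
    """Second column of e^{-At}; for eta < 0 it equals (-sin(wt)/w, cos(wt))."""
    E = exp_At(eta, -t)
    return (E[0][1], E[1][1])
```

For \(\eta <0\) this gives \(u(t)=(-\sin (\omega t)/\omega ,\cos (\omega t))\) with \(\omega =\sqrt{-\eta }\).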

2.3 Conclusion of the proof of Theorem A

Finding a point \((\theta _0,y_0;\varepsilon )\) at which the function \(\Delta\) vanishes directly is quite challenging. Thus, we proceed with a Melnikov-like method, which consists in expanding \(\Delta (\cdot ,\cdot ;\varepsilon )\) in a Taylor series around \(\varepsilon =0\) up to first order and solving the resulting equation \(\Delta =0\). In this direction, we first examine the first component of \(\Delta\), whose Taylor expansion around \(\varepsilon =0\) is given by

Let \(i \in \{1,4,7,9\}\) be fixed such that the parameters \(\alpha\) and \(\eta\) satisfy condition (Ci) and \(\sigma /2\in \mathcal {I} _i\). Since \(\tau _0\) is a bijection between \(\mathcal {D}_i\) and \(\mathcal {I} _i\), there exists a unique \(y_0^{*}\in \mathcal {D}_i\) such that \(\tau _0(y_0^{*})=\sigma /2\). Additionally, \(\frac{\partial \Delta _1}{\partial y_0}(\theta_0,y^*_0;0)=2\tau _0'(y_0^{*})\), which is nonzero because \(\tau _0'\) does not vanish on \(\mathcal {D}_i\) (see Sect. 1.2). Therefore, from compactness and the Implicit Function Theorem, there exist \(\varepsilon _1> 0\), \(\delta _1>0\), and a unique \(\mathcal {C}^1\)-function \({\overline{y}:\mathbb{S}_{\sigma}\times(-\varepsilon _1,\varepsilon _1)\rightarrow (y^*_0-\delta _1,y^*_0+\delta _1)}\) such that \(\overline{y}(\theta_0,0)=y^*_0\) and \({\Delta _1(\theta_0,\overline{y}(\theta_0,\varepsilon );\varepsilon )=0}\), for every \(\varepsilon \in (-\varepsilon _1,\varepsilon _1) \; \mbox{and every} \; \theta_0\in\mathbb{S}_{\sigma}\).

By substituting \(\overline{y}(\theta_0,\varepsilon )\) into \(\Delta _3(\theta _0,y_0;\varepsilon )\), and taking into account Lemmas 8 and 9, we have, for sufficiently small \(|\varepsilon |\),

where \(I^{+}(\sigma ,\theta _0,y_0^{*})\) and \(I^{-}(\sigma ,\theta _0,y_0^{*})\) are the integrals defined in (25) and (26), respectively. We can then define the function, for \(|\varepsilon |\) sufficiently small,

which, after being expanded in Taylor series around \(\varepsilon =0\), gives us

with

The identity (27) and the fact that \(y_0^{*}=v(\sigma /2)\) allow us to rewrite \(M(\theta _0)\) as follows

which leads us to the expression stated in (11), with the auxiliary function \(U(t,\sigma /2)=\langle (\alpha , v(\sigma /2)),u(t)\rangle\) given in (12). Notice that the \(\sigma\)-periodicity of \(g\left( s,\theta _0,v\left( {\sigma }/{2}\right) \right)\) in \(\theta _0\) implies that M is \(\sigma\)-periodic, which allows us to restrict our analysis to the interval \([0,\sigma ]\). Now suppose that \(\phi ^*\in [0, \sigma ]\) is such that \(M(\phi ^*)=0\) and \(M'(\phi ^*)\ne 0\). Then, by the Implicit Function Theorem, there exist \(0< \overline{\varepsilon }<\varepsilon _1\) and a branch \(\overline{\phi }(\varepsilon )\) of simple zeros of \(\tilde{\Delta }_3(\cdot ;\varepsilon )\) satisfying \(\overline{\phi }(0)=\phi ^*\) and \(\tilde{\Delta }_3(\overline{\phi }(\varepsilon );\varepsilon )=0\), for every \(\varepsilon \in (-\overline{\varepsilon },\overline{\varepsilon })\). Returning to the solutions of the differential system (14), we conclude that \(\Phi (\tau ,\overline{\phi }(\varepsilon ),\overline{y}(\overline{\phi }(\varepsilon ),\varepsilon );\varepsilon )\) is a \(\sigma\)-periodic solution of (14) whenever \(\varepsilon \in (-\overline{\varepsilon },\overline{\varepsilon })\).

Notice that, by defining

and taking into account that \(\dot{x}_{\varepsilon }(t)= \Phi _3(t,\overline{\phi }(\varepsilon ),\overline{y}(\overline{\phi }(\varepsilon ),\varepsilon );\varepsilon )\), where \(\Phi _2\) and \(\Phi _3\) denote the second and third components of \(\Phi\), respectively, we have that

This concludes the proof of Theorem A.

References

Andrade, K.S., Cespedes, O.A., Cruz, D.R., Novaes, D.D.: Higher order Melnikov analysis for planar piecewise linear vector fields with nonlinear switching curve. J. Differ. Equ. 287, 1–36 (2021)

Andronov, A.A., Vitt, A.A., Khaĭkin, S.E.: Theory of oscillators. Dover Publications Inc., New York (1987). Translated from the Russian by F. Immirzi, Reprint of the 1966 translation

Bastos, J.L., Buzzi, C.A., Llibre, J., Novaes, D.D.: Melnikov analysis in non-smooth differential systems with nonlinear switching manifold. J. Differ. Equ. 267(6), 3748–3767 (2019)

da Silva, C.E.L., Jacquemard, A., Teixeira, M.A.: Periodic solutions of a class of non-autonomous discontinuous second-order differential equations. J. Dyn. Control Syst. 26(1), 17–44 (2020)

Filippov, A.F.: Differential Equations with Discontinuous Righthand Sides, vol. 18 of Mathematics and its Applications (Soviet Series). Kluwer Academic Publishers Group, Dordrecht (1988) Translated from the Russian

Granados, A., Hogan, S.J., Seara, T.M.: The Melnikov method and subharmonic orbits in a piecewise-smooth system. SIAM J. Appl. Dyn. Syst. 11(3), 801–830 (2012)

Guardia, M., Seara, T., Teixeira, M.: Generic bifurcations of low codimension of planar Filippov systems. J. Differ. Equ. 250(4), 1967–2023 (2011)

Jacquemard, A., Teixeira, M.: Periodic solutions of a class of non-autonomous second order differential equations with discontinuous right-hand side. Physica D 241(22), 2003–2009 (2012)

Jiang, F., Ji, Z., Wang, Y.: Periodic solutions of discontinuous damped duffing equations. Nonlinear Anal. Real World Appl. 47, 484–495 (2019)

Kunze, M., Küpper, T., You, J.: On the application of KAM theory to discontinuous dynamical systems. J. Differ. Equ. 139(1), 1–21 (1997)

Mel’nikov, V.K.: On the stability of a center for time-periodic perturbations. Trudy Moskov. Mat. Obšč. 12, 3–52 (1963)

Novaes, D.D.: Perturbed damped pendulum: finding periodic solutions via averaging method. Rev. Bras. Ens. Fís. 35(1), 01–07 (2013)

Novaes, D.D.: On the Hilbert number for piecewise linear vector fields with algebraic discontinuity set. Physica D 441, 133523 (2022)

Novaes, D.D., Seara, T.M., Teixeira, M.A., Zeli, I.O.: Study of periodic orbits in periodic perturbations of planar reversible Filippov systems having a twofold cycle. SIAM J. Appl. Dyn. Syst. 19(2), 1343–1371 (2020)

Novaes, D.D., Silva, L.V.M.F.: Invariant tori and boundedness of solutions of non-smooth oscillators with Lebesgue-integrable forcing term. Z. Angew. Math. Phys. 75(1), 10 (2024)

Sotomayor, J.: Estabilidade estrutural de primeira ordem e variedades de Banach. Tese de doutorado - IMPA, IMPA (1964)

Funding

DDN is partially supported by São Paulo Research Foundation (FAPESP) Grants 2022/09633-5, 2019/10269-3 and 2018/13481-0, and by Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq) Grant 309110/2021-1. LVMFS is partially supported by São Paulo Research Foundation (FAPESP) Grant 2018/22398-0.


Ethics declarations

Conflict of interest

On behalf of all authors, the corresponding author states that there is no conflict of interest.

Additional information

Communicated by Marco Antonio Teixeira.

Dedicated to Jorge Sotomayor (in memoriam).


Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Novaes, D.D., Silva, L.V.M.F. A Melnikov analysis on a family of second order discontinuous differential equations. São Paulo J. Math. Sci. (2024). https://doi.org/10.1007/s40863-024-00436-5


DOI: https://doi.org/10.1007/s40863-024-00436-5