Abstract

The current study investigates the influence of virtual learning communities (VLCs) on behavior modification, through the bullying paradigm, using natural language processing (NLP) techniques. The key question is whether individual learners who bully in their physical learning community (PLC) exhibit a behavior modification when integrated into a VLC. Results indicate that the attempted "integration" could be a promising framework for behavior modification via a virtual community. Furthermore, machine learning is employed for the automatic detection of aggressive behavior, which can facilitate timely intervention by the teacher without the need to manually scan the textual dataset. To the authors' knowledge, this is the first time such a linguistic and behavioral analysis for bullying detection has been applied to VLCs. Another innovative aspect is the language targeted in the analysis, namely Greek.

Introduction

Learning communities are not just groups that learn through the collaboration process. Time is a critical factor for the community building allowing its members to create their common history, beliefs, values, and trust and to experience sense of community (Hiltz 1985; McMillan and Chavis 1986; Preece and Maloney-Krichmar 2003; Rheingold 1993; Rovai 2002a). This sense, being very important because it is negatively correlated with delinquency and victimization (Battistich and Hom 1997), is empowered through the communication and the social interactions among the students (Dawson 2006). Learning Communities enable behavior modification through internal processes (McMillan and Chavis 1986; Rovai 2002a). Interaction among the community members promotes learning, resulting in deeper understanding. It also motivates them to transform their roles and their behavior (Rovai 2002a). A robust community defines its rules and modifies the actions of its (new) members (Rovai 2002a). Systematic instruction (teacher's active participation and problem-based activities) contributes to the creation of learning communities which in turn contribute to behavior modification. Results show that systematic instruction prevents aggressive behavior in physical learning communities (PLCs) (Doumen et al. 2008; Thomas et al. 1968; Vreeman and Carroll 2007). Physical class was the best known learning community structure (Scardamalia and Bereiter 1994).

Nowadays new web tools and technologies provide the opportunity to extend PLCs on the Internet, as VLCs. Web-based collaborative learning tools empower the learning process and promote interaction among the participants (Barana et al. 2017; García-García et al. 2017; Jeong et al. 2017; Ng 2017). Participants receive satisfaction through their active participation in VLCs, having the ability to transform their roles. A sense of community exists in virtual communities as well (McInnerney and Roberts 2004). Their members create bonds, feel membership, influence each other by creating norms, share common values, and trust each other (Koh and Kim 2003). This sense of community is experienced mainly through their communication (dialogic interaction) (Blanchard and Markus 2004; McInnerney and Roberts 2004). Community building is supported through the dialogue interactions among the members (Rovai 2002a, 2002b). Language in a VLC provides a common symbol system (communication code), which is a basic element of a community (McMillan and Chavis 1986). In sociocultural theories, this code is often referred to as inner speech (Sokolov 1972; Vygotsky 2008).

Rovai and Jordan (2004) suggest that blended learning (face to face interaction combined with distance interaction) produces stronger sense of community than the traditional or the online courses. Nevertheless, sense of community is not ensured in VLCs. Therefore, building the community sense in VLCs is imperative for the teachers. Instruction and project design are the critical factors, while the medium is not. A community is cohesive when it is able to influence its members (McMillan and Chavis 1986). In that context, it is of great importance to investigate whether individual learners that exhibit aggressive behavior (bullying) are able to modify their behavior, if integrated into a VLC.

Bullying is a major universal problem (Dinakar et al. 2012; Klomek et al. 2011). It is considered a sociocultural phenomenon of a systemic nature (Ortega et al. 2012). It affects various groups and communities of people (Hinduja and Patchin 2017; Englander and Muldowney 2007; Erdur-Baker 2010). Systematic intervention is therefore essential, as single-level interventions are ineffective (Fekkes et al. 2004; Vreeman and Carroll 2007). Cyberbullying (the digital form of bullying) has expanded widely through the Internet (Antoniadou and Kokkinos 2015; Nahar et al. 2013). Bullying and cyberbullying are different, but not separate (Chen and Luppicini 2017; Englander and Muldowney 2007; Erdur-Baker 2010). They are both correlated with depression and suicide (Dinakar et al. 2012; Klomek et al. 2011; Ortega et al. 2012). Cyberbullying is mainly expressed through language (dialogues). Bullying behavior may also arise in Computer Supported Collaborative Learning (CSCL) environments. Detecting this type of behavior is critical when establishing PLCs on the Internet as VLCs. Nevertheless, there is a lack of research regarding bullying behavior in the CSCL context. It is therefore essential to analyze language (dialogues) in VLCs in order to detect potential bullying incidents.

Language in communities usually acquires the characteristics of inner speech. The term "inner speech" was introduced by the cultural-historical school of psychology (Sokolov 1972; Vygotsky 2008). Although inner speech is speech for "the self", it also appears in communication among people who share a common psychological state (Vygotsky 2008). Under certain circumstances (common situation or community), it is present in external speech as abbreviated and elliptical language, or even silence. The analysis of inner speech in VLCs includes analyzing phrases and words as parts of the external speech, and actions and interactions on the artifacts (Sokolov 1972). Language and artifacts, spread throughout the community, become the property of the consciousness of the individuals who contribute to the collective work (Leontyev 2009). Inner speech is a common linguistic code that is elliptical, abbreviated and peculiar. It is an indicator of robust communication, mainly existing in robust communities. Cyberbullying incidents are expected to be minimized in such communities.

Natural language processing (NLP)

Timely detection of cyberbullying is very important. NLP methods have already been used aiming at this task. Research in this field has given results so far in locating bullying (Özel et al. 2017; Nahar et al. 2013; Reynolds et al. 2011), or harassment episodes (Yin et al. 2009), or identifying roles of the participants in them (Vanhove et al. 2013; Xu et al. 2012). Özel et al. (2017) applied machine learning techniques aiming to detect cyberbullying on Turkish messages in social networks (Twitter and Instagram). Nahar et al. (2013) proposed (i) a classification model for detecting harmful posts in social networks (Kongregate, Slashdot and MySpace) and (ii) a cyberbullying network, a graph model used to identify predators and victims. Other works (Reynolds et al. 2011) aimed at the detection of cyberbullying in website posts (Formspring) using machine learning techniques. Yin et al. (2009) proposed a machine learning approach for identifying harassment in chat rooms (Kongregate) and discussion fora (MySpace). Designing of a platform for the automatic detection of harmful content or high risk behavior in social networks was also proposed (Vanhove et al. 2013). In addition to the detection, user profiles could be flagged by the domain services. NLP techniques were used by Xu et al. (2012) targeting to analyze the bullying traces (media posts) in social media (Twitter). They distinguished bullying posts from other posts, they identified the roles of the participants and the roles of the authors of these bullying posts. They performed sentiment analysis on the participants in a bullying episode aiming at the distinction between bullying and teasing. Finally, they used Latent topic models in order to extract the main topics in the bullying traces. 

There are also works that attempted to locate language standards (Dadvar and De Jong 2012) or to analyze the emotions of the participants (Sanchez and Kumar 2011), while other researchers proposed live control systems on social networks using regulation by virtual agents combined with user evaluation and behavior modification (Bosse and Stam 2011). Dadvar and De Jong (2012) applied machine learning methods for cyberbullying detection using linguistic features on a dataset of MySpace posts. Sanchez and Kumar (2011) used sentiment analysis on posts in Twitter, also aiming at bullying detection. Bosse and Stam (2011) built a system composed of normative agents in order to detect violent behavior. Specifically, they used rewards and punishments to reinforce the desired behavior. The system was used within Club Time Machine, a virtual environment for children (ages 6–12).

Applying NLP techniques to social media leads mainly to behavioral treatment: stimulus-reaction and temporary effects motivated by external stimuli. The basic reasons, owing to the nature of the media, are the potential lack of a specific sociocultural context (Menesini et al. 2012) and unstructured data (Xu et al. 2012). Applying these techniques in VLCs, by contrast, relates to learning, which is capable of causing permanent effects owing to its internal (self-chosen) character (Garrison 1997). In conclusion, existing work refers mainly to the social media context, uses quantitative data, and disregards subjectivity and the need to adapt analytical models by language/country (Flor et al. 2016; Menesini et al. 2012; Ortega et al. 2012); nevertheless, it reveals that NLP tools offer the ability to detect bullying behavior in VLCs. The sparse research on the use of NLP and Machine Learning (henceforth called Artificial Intelligence (AI) techniques) to automatically identify behaviors in learning communities poses a major research challenge. Research so far in this field refers to predicting reputation in VLCs (Chamba-Eras et al. 2016), providing personalized recommendation systems (Song and Li 2019) or automatically detecting the mutual relatedness among learning objects (Fioravera et al. 2018).

In the current research, basic principles of sociocultural theories are applied in VLCs (Engeström 1999; Leontyev 2009; Sokolov 1972; Vygotsky 2008): how teacher's active participation, community's influence (interactions among the members of the VLC: artifacts, dialogues, teacher's active participation) and inner speech affect behavior modification. As language is correlated with the communities as well as with the cyberbullying, we apply linguistic analysis aiming at recognizing behavior patterns: signals and experiences are recorded in speech and artifacts, as "emotional marks" (Leontyev 2009, p. 167) of living inside the VLC. The latter arise when authentic collaborative activities take place and they benefit the community and all its members (Rovai 2002a).

We propose linguistic analysis on VLCs aiming to answer the following research questions: Do the individual (new) members, that bully in their PLC, exhibit a behavior modification, if integrated in a VLC? Is the linguistic behavior of these new members affected by the community's speech? Furthermore, the features derived from the qualitative linguistic analysis are then used in a machine learning setup with well-known classification algorithms, chosen to automatically label student utterances as "bullying" or "no bullying". This experimental setting aims at addressing the following research challenge: Are the linguistic features chosen to model the student text suitable for automatically identifying bullying behavior?

Methods

Context of the study

In the present study, two (2) VLCs participated, henceforth called VLC-1 and VLC-2. In the VLC-1 case study, a PLC of sixteen (16) (K-12) participants was transformed into a VLC, through the Wikispaces web-based collaborative learning environment. Participants had been partners (classmates) for five (5) years. Physical classrooms are communities and their members have feelings of belonging and trust. They also have duties, obligations, and commitment to shared goals (Rovai 2002a). Members of this community exhibited aggressive behavior in the physical hypostasis of the community, as it had already been observed by their teachers.

VLC-2 was created in order to implement an educational cultural project, also using the Wikispaces platform. Participants were mixed: an already existing PLC of twenty-one (21) participants (being partners/classmates for over six (6) years) and another team of nine (9) persons, members of VLC-1, that exhibited aggressive behavior (as was observed by the teachers, and constituted a common assumption within the school community). Both communities were transformed into virtual ones during the same academic period.

The study process

VLC-1: The virtual community was mainly used in order to facilitate communication and collaboration among its members. During a seven (7)-month period, they created and uploaded artifacts (videos, documents, presentations, pictures), and they used the collaborative platform as a chat forum. Two (2) teachers were involved, having an active instructive role.

VLC-2: The topic of the educational cultural project was selected by the participants. It was associated with the art and the culture of the place they lived in. The project took place during a four (4) month period. Two (2) teachers were involved, having an active instructive role. In the beginning, participants were divided into ten (10) groups consisting of three (3) persons each (two (2) persons of the already existing community and one (1) person of VLC-1 - who exhibited aggressive behavior in the past). Each group selected a subtopic of the project and collaborated in order to search for information and create artifacts (presentations, videos, documents, posters) about it. Problem-based activities implemented in small groups help students to create connections among them, enhancing the sense of community (Rovai 2002a; Vreeman and Carroll 2007).

The project was enriched with authentic activities (visiting museums, exhibitions, or creating paintings). A blended learning approach was applied, providing the ability for the group members to collaborate either in their PLC, or (mainly) through the CSCL environment. This hybrid method (face to face interaction and online activities) gives better learning results and promotes a stronger sense of community (Rovai and Jordan 2004). Face to face (offline) interaction in VLCs is very important for the participants: they create bonds among them, they receive feedback, reinforcement, and reward. Consequently, it affects positively the sense of belonging in the virtual community (Koh and Kim 2003).

Activities and dialogues of the participants were collected from the log files provided by Wikispaces.

Analysis methods

Data preprocessing

AI techniques (using RapidMiner Studio) were applied to the communication data of the online communities VLC-1 and VLC-2. The first preprocessing step was data anonymization. Secondly, sentence boundaries (full stops) were detected, and dialogues were segmented into periods. As a result, a dataset of five hundred (500) dialogue segments for VLC-1 and a dataset of eighty-three (83) dialogue segments for VLC-2 were created. Although this number of dialogues can be considered small, they are authentic humanistic data collected under real conditions. Two (2) experts, one internal (participating actively in the project) and one external, annotated each example as "bullying" or "no bullying" (Table 1). Positive labeling by either annotator resulted in labeling an example as "bullying". This choice was made because of the sensitivity of the task: false positives are preferable to failing to detect bullying behavior (Ioannidis et al. 2011; Reynolds et al. 2011).
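The segmentation and labeling rule described above can be sketched in a few lines of Python. This is only an illustration and not the RapidMiner process used in the study: the function names are hypothetical, the example text is invented, and treating "!" and "?" as boundaries alongside full stops is an assumption.

```python
import re

def split_into_segments(dialogue):
    """Split a dialogue after sentence-final punctuation and drop empty pieces."""
    # Assumption: "!" and "?" are treated as boundaries in addition to full stops.
    pieces = re.split(r"(?<=[.!?])\s+", dialogue.strip())
    return [piece for piece in pieces if piece]

def combine_labels(internal_flag, external_flag):
    """A segment counts as 'bullying' if at least one annotator flagged it."""
    return "bullying" if (internal_flag or external_flag) else "no bullying"

# Invented example text, not taken from the study's dataset.
segments = split_into_segments("Nice poster. It sucks, horrible! See you tomorrow.")
labels = [combine_labels(False, False),
          combine_labels(True, False),
          combine_labels(False, False)]
```

Taking the union of the two annotators' positive labels deliberately favors false positives over missed incidents, which matches the rationale given in the text.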

Annotation of the dialogue segments was in some cases obvious. Commenting on a peer's artifact: "Perfectttttttttttttt!" was annotated as "no bullying". In other cases there was ambiguity: "It sucks, horrible" (Table 1). This comment was considered by the internal annotator as a token of bullying behavior, taking into account that it was an offensive comment publicly expressed (in front of the community).

Letters were transformed into lowercase; tokenization was performed, generating n-grams (unigrams). In this way, unstructured data (wiki dialogues) were transformed into a binary term vector. Furthermore, stopwords removal, stemming, pruning of terms with a frequency below 2 and above 100, and filtering tokens according to length (maximum word length: 25 characters, minimum: 4 characters) were applied in order to reduce the number of features.
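As a rough illustration of these preprocessing steps, the following Python sketch builds binary unigram vectors with the thresholds reported above. It is not the RapidMiner workflow itself: stemming is omitted, the stopword list and example segments are invented, and interpreting "frequency" as the number of segments containing a term is an assumption.

```python
import re
from collections import Counter

MIN_LEN, MAX_LEN = 4, 25      # token length limits reported in the text
MIN_FREQ, MAX_FREQ = 2, 100   # pruning thresholds reported in the text

def tokenize(segment, stopwords):
    """Lowercase, split into word unigrams, drop stopwords and out-of-range lengths."""
    tokens = re.findall(r"\w+", segment.lower())
    return [t for t in tokens
            if t not in stopwords and MIN_LEN <= len(t) <= MAX_LEN]

def build_vocabulary(tokenized_segments):
    """Keep terms whose segment frequency lies inside the pruning range."""
    # Assumption: frequency means the number of segments that contain the term.
    seg_freq = Counter(t for tokens in tokenized_segments for t in set(tokens))
    return sorted(t for t, n in seg_freq.items() if MIN_FREQ <= n <= MAX_FREQ)

def to_binary_vectors(tokenized_segments, vocabulary):
    """Encode each segment as a 0/1 vector over the vocabulary."""
    return [[1 if term in set(tokens) else 0 for term in vocabulary]
            for tokens in tokenized_segments]

# Invented English examples standing in for the Greek wiki dialogues.
raw = ["Great work everyone", "Great poster, really great",
       "That poster looks awful", "ok"]
stopwords = {"that", "really"}  # hypothetical stopword list
tokenized = [tokenize(s, stopwords) for s in raw]
vocabulary = build_vocabulary(tokenized)
vectors = to_binary_vectors(tokenized, vocabulary)
```

In this toy run only "great" and "poster" survive pruning, because every other term occurs in a single segment; the same mechanism explains how a few hundred raw unigrams can shrink to a few dozen features.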

The Greek language posed certain challenges in the preprocessing stage. It is a language with complex morphology, and it has a lot of potential forms for each root. Words take different inflected forms, depending on their grammatical case. In many cases, a root with different suffixes creates words with very different meaning. Additionally, the Greek language is not sufficiently supported by the NLP infrastructure, like linguistic resources and processing tools. The Greek stemmer developed by Ntais (2006) was used in our task. This stemmer (available online: https://deixto.com/greek-stemmer/) has some limitations: (i) It uses only capital letters in order to solve the problem of the "moving" stress mark on the stems of the Greek words. In this case, some words may be pronounced in different ways, with different meanings each time; (ii) it deals only with inflectional endings and not with the derivational endings, despite the fact that the Greek language is rich in derivative words.

Inter-annotator agreement

In VLC-2 both annotators agreed at a 100% rate that no bullying instances had occurred. In VLC-1, the first annotator (internal) labeled 42 out of the 500 examples as bullying (8.4%), while the second one (external) labeled 59 examples as bullying (11.8%). Annotators agreed on 447 dialogues out of the 500 in total (agreement rate: 89%). The inter-annotator agreement rate is considered high (Artstein and Poesio 2008) given that: (a) the second annotator was external to the community and (b) there are no objective criteria to define the annotation result, which increases the complexity and the subjectivity of the task. Labeling an example as bullying whenever either annotator did so resulted in 15.4% of the dialogues being marked as bullying instances. Annotation examples are provided in Table 2.
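The reported counts can be cross-checked with simple arithmetic. The sketch below derives the overlap between the annotators by inclusion-exclusion from the figures given above (42, 59, and 15.4% of 500, i.e. 77 segments). Cohen's kappa is not reported in the text; it is computed here only as an optional chance-corrected illustration, and the function name is hypothetical.

```python
def agreement_summary(n, pos_a, pos_b, pos_union):
    """Derive agreement figures from the two annotators' positive counts."""
    both = pos_a + pos_b - pos_union      # flagged by both (inclusion-exclusion)
    neither = n - pos_union               # flagged by nobody
    agreed = both + neither
    observed = agreed / n
    # Agreement expected by chance from each annotator's marginal label rates.
    expected = (pos_a / n) * (pos_b / n) + (1 - pos_a / n) * (1 - pos_b / n)
    kappa = (observed - expected) / (1 - expected)
    return {"both": both, "neither": neither, "agreed": agreed,
            "observed": observed, "kappa": kappa}

# 77 = 15.4% of 500 segments labeled "bullying" by at least one annotator.
summary = agreement_summary(n=500, pos_a=42, pos_b=59, pos_union=77)
```

The derived figure of 447 agreed segments (24 flagged by both annotators plus 423 flagged by neither) matches the agreement count stated in the text.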

Dialogues

Dialogues of both VLCs were evaluated by the same annotators on a scale of 0 to 20. Relevance to the topic, communication effectiveness, amount of threads, linguistic quality, semantic complexity or simplicity were the main criteria for this evaluation. Pages containing single posts not related to the project were rated as low-level communication scale (grade 2 or 3). In contrast, pages with numerous, comprehensive and clear posts offering support to colleagues were rated as high-level communication scale (grade 20).

Artifacts

The artifacts of both VLCs were evaluated on the same scale (0–20). Their evaluation was conducted in accordance with their relevance to the topic, their quality and complexity, the design and their aesthetics. Pages containing single artifacts at a primitive stage were rated as low-level performance (grade 2 or 3). Other pages though, that contained comprehensive and thorough artifacts with high aesthetics, were rated as high-level performance (grade 20).

Machine learning model

In the process described in the previous sections, annotators relied on linguistic features and qualitatively identified aggressive behavior. These features were then used in a machine learning setting that aimed to detect aggressive behavior automatically. As an implication, the model built by the machine learning algorithm could be used in VLCs for timely locating aggressive behavior, giving this way teachers the ability to intervene (Dinakar et al. 2012). It is usually difficult for teachers to monitor and manually detect aggressive behavior in an online environment. It is therefore critical to offer them hints of aggressive behavior or of bullying episodes (McInnerney and Roberts 2004), that have been detected automatically.

The Laplace correction parameter was enabled in both the Naive Bayes and the Naive Bayes (Kernel) algorithms. In contrast to Naive Bayes, the Naive Bayes (Kernel) operator can be applied to numerical attributes. A kernel is a weighting function used in non-parametric estimation techniques (John and Langley 1995). Rule induction is an operator that learns a pruned set of rules with respect to the information gain from the given example set. Information gain was the criterion for selecting attributes and numerical splits, the sample ratio and the pureness were set at 0.9, and the minimal prune benefit at 0.25. The gain ratio criterion was selected for the Decision Tree classifier, generating a decision tree model with a maximal depth of 20. A gradient-boosted model is an ensemble of either regression or classification tree models. Both are forward-learning ensemble methods that obtain predictive results through gradually improved estimations. At most 20 trees were used, with their maximal depth set to 5.
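To make the role of the Laplace correction concrete, here is a minimal from-scratch sketch of a Bernoulli Naive Bayes classifier over binary word features with add-one smoothing. It is a stand-in for illustration, not RapidMiner's operator; the function names, the toy feature meanings, and the data are all invented.

```python
import math

def train_bernoulli_nb(X, y, alpha=1.0):
    """Fit class priors and per-feature probabilities with add-alpha smoothing."""
    model = {}
    n_features = len(X[0])
    for c in sorted(set(y)):
        rows = [x for x, label in zip(X, y) if label == c]
        prior = len(rows) / len(X)
        # P(feature = 1 | class); smoothing keeps every estimate strictly
        # between 0 and 1, so an unseen word never zeroes out a class.
        probs = [(sum(r[j] for r in rows) + alpha) / (len(rows) + 2 * alpha)
                 for j in range(n_features)]
        model[c] = (prior, probs)
    return model

def predict(model, x):
    """Return the class with the highest log posterior score."""
    def log_score(prior, probs):
        return math.log(prior) + sum(
            math.log(p if v else 1 - p) for p, v in zip(probs, x))
    return max(model, key=lambda c: log_score(*model[c]))

# Toy data: feature 0 = "contains an insult", feature 1 = "contains praise".
X = [[1, 0], [1, 0], [0, 1], [0, 1], [0, 0]]
y = [1, 1, 0, 0, 0]          # 1 = bullying, 0 = no bullying
model = train_bernoulli_nb(X, y)
```

Without the correction, the estimate of P(praise = 1 | bullying) in this toy set would be 0 and any bullying segment that happened to contain a praise word could never be assigned to class 1; with alpha = 1 the estimate becomes 0.25.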

Deep Learning is based on a multi-layer feed-forward artificial neural network (Cao et al. 2018; Deng and Yu 2014; Hai-Jew 2019; Schmidhuber 2015). It is trained with stochastic gradient descent using back-propagation. The network may contain numerous hidden layers. Each compute node trains a copy of the global model parameters on its local data with multi-threading (asynchronously), and contributes periodically to the global model via model averaging across the network. The operator starts a 1-node local H2O cluster (Candel et al. 2016; Suleiman and Al-Naymat 2017) and runs the algorithm on it. Although only one node is used, the execution is parallel.

Results

Participation

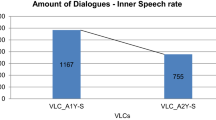

Members of VLC-2 exchanged a minimum amount of dialogues (83) during a four (4)-month period (Table 3). This is probably due to the fact that speech inside the VLCs adopts the characteristics of inner speech: participants speak as much as needed, since they already constitute a well-structured, robust community (Sokolov 1972, pp. 70–88; Vygotsky 2008, pp. 365–436). New members participated in these dialogues at a 13% rate. At the same time, these members participated in their class (VLC-1) dialogues (Table 4) at a 38% rate.

Aggressive behavior

Aggressive behavior was non-existent in VLC-2. In VLC-1 aggressive behavior existed at a 15.4% rate. Similarly to the participation (mentioned above), new members exhibited aggressive behavior in their class (VLC-1) at an 81% rate (Table 4). One third (33%) of their dialogues were annotated as "bullying".

Results show that the same nine (9) members who exhibited bullying behavior in their PLC, appear to be more active and exhibit bullying behavior when acting in a familiar virtual environment (VLC-1). Aggressive behavior in this VLC was rather high (15.4%), as both annotators agreed at a level of 89%. This attitude changed radically when they were called to incorporate themselves in a "well-collaborating" VLC with different shared values (VLC-2). A t test was conducted between VLCs, in order to compare participation and bullying behavior of the nine (9) common members that exhibited aggressiveness. There was a significant difference in the scores for the common members between the VLCs in participation, t(144) = 5.63, p < .05 and in bullying behavior, t(499) = 8.40, p < .05. This is important since they concern the same members under different conditions.

Teacher's active participation

The dialogues of each VLC were analyzed in order to investigate the degree of the teachers' active participation. Teachers participated in VLC-1 at a 14% rate (72 dialogue segments out of a total of 500), while in VLC-2 the rate was 43% (36 dialogue segments out of a total of 83) (Fig. 1). There was a significant difference in the teachers' participation between the two VLCs (confirmed by the t-test: t(96) = 5.09, p < .05).
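The text does not state which variant of the t test was used. As a worked check, the sketch below assumes Welch's unequal-variance t test applied to 0/1 indicators (teacher segment or not); under that assumption the reported counts give values that round to the reported statistic. The function name is hypothetical.

```python
import math

def welch_t_from_counts(k1, n1, k2, n2):
    """Welch's t statistic and degrees of freedom for two 0/1 samples given as counts."""
    p1, p2 = k1 / n1, k2 / n2
    # Unbiased sample variance of a 0/1 variable with mean p.
    v1 = p1 * (1 - p1) * n1 / (n1 - 1)
    v2 = p2 * (1 - p2) * n2 / (n2 - 1)
    a, b = v1 / n1, v2 / n2               # squared standard errors of the two means
    t = (p2 - p1) / math.sqrt(a + b)
    # Welch-Satterthwaite approximation of the degrees of freedom.
    df = (a + b) ** 2 / (a ** 2 / (n1 - 1) + b ** 2 / (n2 - 1))
    return t, df

# Teacher segments: 72 of 500 in VLC-1, 36 of 83 in VLC-2.
t, df = welch_t_from_counts(72, 500, 36, 83)
```

With these inputs the statistic is about 5.09 on roughly 96 degrees of freedom, which is consistent with the reported t(96) = 5.09.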

Artifacts

The mean value for artifacts of VLC-1 was 4.25, and 15.82 for VLC-2 (Fig. 2). The difference in the quality of the artifacts between the two VLCs was confirmed as significant by the applied t test: t(14) = 6.90, p < .05. This is an interesting result, considering that success strengthens the bonds among the members of the community (McMillan and Chavis 1986), leading them to accept the norms of the community, by modifying their (aggressive) behavior (Rovai 2002a).

Dialogues

The mean value for dialogues of VLC-1 was 5.75, and 5.36 for VLC-2. It is obvious that there was no significant difference between the VLCs (also confirmed by the t test).

It should be noted that these low grades arise for different reasons. In VLC-1 dialogues were mainly irrelevant to the topic, while in VLC-2 the scarcity of dialogues gave this poor result.

Behavior of the integrated members

It is important to compare the behavior of the integrated members between the two VLCs. There were nine (9) aggressive members in common between them. In VLC-1 they co-existed with the members of their PLC. They worked in a familiar environment and interacted with their peers, being already partners (classmates) with them for five (5) years. In VLC-2 these nine (9) members were involved in a process of integration in an already structured, robust community. The following Figs. 3, 4, and 5 exhibit the behavior of these members in the two VLCs.

In VLC-1 the "common" members, feeling at ease, participated in the dialogues at a 64.60% rate, whereas in VLC-2, probably influenced by the integration process, they participated at a 13.25% rate. Analysis of the aggressive behavior dialogues in VLC-1 showed that 80.52% of them were produced by the "common" members. Almost one third (32.98%) of the dialogues expressed by the "common" members involved aggressive behavior.

Automatic detection of aggressive behavior

The dataset consisted of five hundred (500) learning instances, i.e., student utterances. Attributes (i.e., word unigrams) were initially 648 in number and Boolean. After the preprocessing steps (described in the "Data Preprocessing" section above), they were reduced to 45 word features. Tables 5 and 6 present the confusion matrices and comparative results of the machine learning algorithms that were employed to classify a dialogue in VLC-1 as "bullying" or "no bullying".

The deep learning algorithm (applying tenfold cross-validation with stratified sampling) gave the best results regarding our concept.
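Stratified sampling matters here because the bullying class is small. The following sketch shows one simple way to build ten stratified folds in plain Python; it is not RapidMiner's implementation, the function name is hypothetical, and the class sizes (77 bullying, 423 non-bullying) are derived from the 15.4% rate over 500 segments reported earlier.

```python
import random

def stratified_folds(labels, k=10, seed=0):
    """Assign each example index to one of k folds, spreading every class evenly."""
    rng = random.Random(seed)
    folds = [[] for _ in range(k)]
    by_class = {}
    for index, label in enumerate(labels):
        by_class.setdefault(label, []).append(index)
    offset = 0
    for indices in by_class.values():
        rng.shuffle(indices)
        # Deal the class out round-robin, continuing where the previous
        # class stopped so that fold sizes stay balanced as well.
        for position, index in enumerate(indices):
            folds[(offset + position) % k].append(index)
        offset += len(indices)
    return folds

labels = [1] * 77 + [0] * 423   # 1 = bullying, 0 = no bullying
folds = stratified_folds(labels)
positives_per_fold = [sum(labels[i] for i in fold) for fold in folds]
```

Each fold then holds 50 segments with 7 or 8 bullying examples, so every test fold contains the sensitive class in roughly the same proportion as the full dataset.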

In Deep Learning1, tenfold cross-validation with stratified sampling was applied. Furthermore, Deep Learning2 had the (non-linear) function, used by the neurons in the hidden layers, set to the Exponential Rectifier Linear Unit function (ExpRectifier). This algorithm gave better results for class 1 (bullying), which was the "sensitive" one. According to previous work (Ioannidis et al. 2011; Reynolds et al. 2011), performing worse in class 0 (no bullying) was preferable, due to its lower impact. Instead of missing a bullying instance, an algorithm that was biased towards class 1 was chosen. Regarding other metrics (precision), another algorithm giving better quantitative results could have been chosen, but with less qualitative results.
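The trade-off described above, accepting more false alarms in exchange for fewer missed bullying instances, can be made explicit with per-class metrics. The confusion-matrix counts below are invented for illustration and are not the values of Tables 5 and 6; the function and variable names are hypothetical.

```python
def class_metrics(tp, fp, fn, tn):
    """Precision, recall and F1 for the positive class, plus overall accuracy."""
    precision = tp / (tp + fp)
    recall = tp / (tp + fn)
    f1 = 2 * precision * recall / (precision + recall)
    accuracy = (tp + tn) / (tp + fp + fn + tn)
    return {"precision": precision, "recall": recall,
            "f1": f1, "accuracy": accuracy}

# Two hypothetical classifiers on 500 segments, 77 of them bullying.
cautious = class_metrics(tp=30, fp=5, fn=47, tn=418)    # rarely raises an alert
sensitive = class_metrics(tp=60, fp=40, fn=17, tn=383)  # biased towards class 1
```

In this invented example the cautious classifier scores higher on accuracy and precision, yet it misses 47 of the 77 bullying segments, while the sensitive one misses only 17. This is the sense in which a model with weaker aggregate numbers can still be the better choice for the task.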

Discussion

Data for this study were collected during a collaborative activity: (a) dialogues among members of VLC-2 (a VLC of 21 learners which integrated 9 learners with aggressive behavior) and (b) dialogues among members of VLC-1 (a VLC with aggressive behavior). It is interesting that in VLC-2 no aggressive behavior was exhibited. It seems that the nine (9) participants who exhibited aggressive behavior both in their physical and virtual community (VLC-1) remained "silent" and modified their behavior, accepting the norms of the new community (VLC-2); "silence" was the main linguistic feature of the context of the new community (VLC-2: 83 dialogues vs VLC-1: 500 dialogues). Silence was due to abbreviated external speech, which served as a unique communication code. Having been classmates for over six (6) years, the existing members were able to collaborate with a minimal amount of dialogue, that is, inner speech (Sokolov 1972; Vygotsky 2008). But what happened with the nine (9) new members?

It seems that they hesitated to express themselves freely, fearing rejection by the community (McInnerney and Roberts 2004). The integrated (new) members preferred to remain silent, feeling in awe of the community. The community defined its rules and imposed behavior modification on the new members (Rovai 2002a). This behavior modification, exhibited in a VLC implemented in a CSCL environment, was self-propelled and internally motivated, aiming at acceptance and community membership. Aggressive members, having the right of free choice, were cautious, fearing non-acceptance by the community. They chose to remain silent and focused on the shared project. This led to self-sustaining and permanent behavior modification.

VLC-2 was a PLC extended in the Internet as VLC through the CSCL environment. Hybrid (face to face and virtual) interaction facilitated the community to collaborate both in the physical and the virtual hypostasis. As a result, integration of bullies was both physical and virtual.

Members of VLC-2 communicated as much as needed, owing to their inner speech. Using less dialogue, they created artifacts significantly better than those of VLC-1. In contrast, VLC-1 members chattered, behaved aggressively and produced low-level artifacts. It is therefore important to note that the common members of these two VLCs behaved so differently (in producing artifacts, in the amount and quality of the dialogues and in aggressiveness). The operating framework of these two VLCs determined their actions. It was important that the integration of the new aggressive members did not trigger aggressive behavior inside the community. New members were a minority in VLC-2. The ratio of these nine (9) newly integrated members to the total members (21) had a significant effect on the result (integration). As has been shown in previous research, integration of a higher ratio of new members was not successful (Nikiforos et al. 2020).

The lack of resources (NLP tools) for the Greek language posed difficulties for data preprocessing. Machine Learning algorithms provided promising results for bullying detection in the VLCs. All of them achieved high accuracy (min 86.20%) and F1 measure (min 91.91%). Deep Learning performed better at predicting the sensitive class (bullying). For this reason it is regarded as more suitable for the current task. Although the other algorithms also provided promising predictive results over all instances, their results for the sensitive class were not satisfactory. In general, these promising results need to be tested on a larger dataset.

Bullying detection in VLCs through AI techniques (Machine Learning and NLP) is the main contribution of this research paper. Nevertheless, results are not emphasized as they are in a preliminary stage and are based on a small amount of data. Despite this fact, and taking into account the differences in the context, comparison with previous research is attempted. Our results can be considered promising, since the F-1 measure ranges from 0.919 to 0.967 (Table 6). Attempting automatic detection of cyberbullying in social networks, Zhao et al. (2016) achieved F-1 = 0.78, Agrawal and Awekar (2018) achieved F-1 = 0.95, Badjatiya et al. (2017) reached F-1 = 0.93, Nandhini and Sheeba's (2015) best performance was F-1 = 0.98, Tommasel et al. (2018) achieved F-1 = 0.595, while Di Capua et al. (2016) and Haidar et al. (2017) achieved F-1 = 0.74 and F-1 = 0.927, respectively.

The current case study is part of a collective case study research project (1 out of 5 case studies). All of these case studies address bullying behavior in VLCs under different conditions. Consequently, further machine learning experiments will be performed on the data from all of these case studies.

Implications

Community rules were built internally, leading to the creation of the community's culture. New members faced the community's common linguistic code (inner speech), which resulted in their reduced speech (silence). Fear appears to be their motive. This is an interesting finding that suggests a framework for modifying aggressive behavior in a more permanent way: integrating independent participants into a VLC is a procedure that motivates them to behave according to the community rules, thus modifying their primitive motive.

A practical implication of this work is an online detection system that collects data from CSCL environments and provides alerts for bullying incidents. Such a system would make the use of web-based collaborative tools safer, minimizing the risk for students. Especially in the case of bullying, both detection and early intervention are critical. Considering how difficult, or even impossible, it is for a teacher to monitor a VLC constantly, the contribution of a bullying detection system would be significant.
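
One possible shape for such an alerting component is sketched below in Python. This is only an illustration under stated assumptions, not the system used or proposed in this study: `Message`, `Alert`, `detect_bullying` and `toy_score` are hypothetical names, the threshold is arbitrary, and the toy lexicon scorer (in English, for readability) merely stands in for a trained classifier operating on Greek text.

```python
from dataclasses import dataclass
from typing import Callable, Iterable, Iterator


@dataclass(frozen=True)
class Message:
    """A single chat message collected from a collaborative environment."""
    author: str
    text: str


@dataclass(frozen=True)
class Alert:
    """A message flagged for the teacher, with the score that triggered it."""
    message: Message
    score: float


def detect_bullying(
    messages: Iterable[Message],
    score_fn: Callable[[str], float],
    threshold: float = 0.5,
) -> Iterator[Alert]:
    """Yield an alert for every message whose score reaches the threshold."""
    for message in messages:
        score = score_fn(message.text)
        if score >= threshold:
            yield Alert(message, score)


def toy_score(text: str) -> float:
    """Placeholder scorer: share of tokens found in a tiny hypothetical lexicon.

    A real system would call a trained classifier here instead.
    """
    lexicon = {"stupid", "loser"}
    tokens = [token.strip(".,!?") for token in text.lower().split()]
    if not tokens:
        return 0.0
    return sum(token in lexicon for token in tokens) / len(tokens)


messages = [
    Message("student_1", "Here is my part of the project"),
    Message("student_2", "You are a stupid loser!"),
]
alerts = list(detect_bullying(messages, toy_score, threshold=0.3))
for alert in alerts:
    print(f"ALERT ({alert.score:.2f}) {alert.message.author}: {alert.message.text}")
```

Keeping the scorer as a pluggable function means the teacher-facing alert logic would not need to change if the placeholder were replaced by any of the classifiers evaluated in this study.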

Finally, many researchers in the CSCL field emphasize the importance of discourse in technologically mediated learning communities (Bereiter and Scardamalia 2018; Hod et al. 2018; Stahl and Hakkarainen 2019). It is worth investigating inner speech and its qualities as a form of change in VLCs.

Conclusions

The main contribution of the present research is the study of the influence of VLCs on behavior modification using AI techniques, mainly in the context of sociocultural learning theories. The results presented indicate that fear (of non-acceptance by the community) could be what motivates bullies to remain silent during their integration into a virtual community. The ratio of the new members to be integrated to the total members of the VLC contributed to the behavior modification. The problem-based activity motivated students to collaborate: they communicated effectively and created artifacts relevant to the project. The teacher's active participation helped them remain focused on their duties. This could be a promising framework for modifying aggressive behavior in a more permanent way: integrating independent participants into a VLC is a procedure that motivates them to behave according to the community rules.

Additionally, AI techniques for linguistic analysis were applied to the communication data of online communities. The results showed that bullying detection in VLCs is feasible. Machine learning methods can facilitate the teacher's timely intervention, without the teacher having to scan through the textual dataset manually. The algorithms used in this study predicted aggressive behavior (bullying) with high accuracy. In particular, the Deep Learning algorithm performed better in detecting the sensitive class (bullying). To the authors' knowledge, this is the first time such an analysis has been attempted in VLCs, and on Greek data. Detecting bullying incidents in CSCL environments is also innovative. Our method will also be applied and validated in the next case studies of our project.

References

Agrawal, S., & Awekar, A. (2018, March). Deep learning for detecting cyberbullying across multiple social media platforms. In European Conference on Information Retrieval (pp. 141–153). Springer, Cham. https://doi.org/10.1007/978-3-319-76941-7_11.

Antoniadou, N., & Kokkinos, C. M. (2015). A review of research on cyber-bullying in Greece. International Journal of Adolescence and Youth, 20(2), 185–201. https://doi.org/10.1080/02673843.2013.778207.

Artstein, R., & Poesio, M. (2008). Inter-coder agreement for computational linguistics. Computational Linguistics, 34(4), 555–596. https://doi.org/10.1162/coli.07-034-R2.

Badjatiya, P., Gupta, S., Gupta, M., & Varma, V. (2017, April). Deep learning for hate speech detection in tweets. In Proceedings of the 26th international conference on World Wide Web companion (pp. 759–760). https://doi.org/10.1145/3041021.3054223.

Barana, A., Brancaccio, A., Esposito, M., Fioravera, M., Marchisio, M., Pardini, C., & Rabellino, S. (2017). Problem solving competence developed through a virtual learning environment in a European context. In Proceedings of the 13th international scientific conference “eLearning and Software for Education”, 1, 455–463. https://doi.org/10.12753/2066-026X-17-067.

Battistich, V., & Hom, A. (1997). The relationship between students' sense of their school as a community and their involvement in problem behaviors. American Journal of Public Health, 87(12), 1997–2001. https://doi.org/10.2105/AJPH.87.12.1997.

Blanchard, A. L., & Markus, M. L. (2004). The experienced sense of a virtual community: Characteristics and processes. ACM Sigmis Database, 35(1), 64–79. https://doi.org/10.1145/968464.968470.

Bereiter, C., & Scardamalia, M. (2018). Fixing Humpty-Dumpty: Putting higher-order skills and knowledge together again. In L. Kerslake & R. Wegerif (Eds.), Theory of teaching thinking: International perspectives (pp. 72–87). London, UK: Routledge.

Bosse, T., & Stam, S. (2011). A normative agent system to prevent cyberbullying. Web Intelligence and Intelligent Agent Technology (WI-IAT). IEEE/WIC/ACM International Conference, 2, 425–430. https://doi.org/10.1109/WI-IAT.2011.24.

Candel, A., Parmar, V., LeDell, E., & Arora, A. (2016, September). Deep Learning with H2O. Retrieved from https://h2o.ai/resources.

Cao, W., Wang, X., Ming, Z., & Gao, J. (2018). A review on neural networks with random weights. Neurocomputing, 275, 278–287. https://doi.org/10.1016/j.neucom.2017.08.040.

Chamba-Eras, L., Arruarte, A., & Elorriaga, J. A. (2016, November). Bayesian networks to predict reputation in Virtual Learning Communities. In 2016 IEEE Latin American Conference on Computational Intelligence (LA-CCI) (pp. 1–6). IEEE. https://doi.org/10.1109/LA-CCI.2016.7885721.

Chen, B., & Luppicini, R. (2017). The new era of bullying: A phenomenological study of university students' past experience with cyberbullying. International Journal of Cyber Behavior, Psychology and Learning (IJCBPL), 7(2), 72–90. https://doi.org/10.4018/IJCBPL.2017040106.

Dadvar, M., & De Jong, F. (2012). Cyberbullying detection: A step toward a safer internet yard. In Proceedings of the 21st international conference On World Wide Web (pp. 121–126). ACM. https://doi.org/10.1145/2187980.2187995.

Dawson, S. (2006). A study of the relationship between student communication interaction and sense of community. The Internet and Higher Education, 9(3), 153–162. https://doi.org/10.1016/j.iheduc.2006.06.007.

Deng, L., & Yu, D. (2014). Deep learning: methods and applications. Foundations and Trends in Signal Processing, 7(3–4), 197–387. https://doi.org/10.1561/2000000039.

Di Capua, M., Di Nardo, E., & Petrosino, A. (2016, December). Unsupervised cyber bullying detection in social networks. In 2016 23rd International Conference on Pattern Recognition (ICPR) (pp. 432–437). IEEE. https://doi.org/10.1109/ICPR.2016.7899672.

Dinakar, K., Jones, B., Havasi, C., Lieberman, H., & Picard, R. (2012). Common sense reasoning for detection, prevention, and mitigation of cyberbullying. ACM Transactions on Interactive Intelligent Systems (TiiS), 2(3), 18. https://doi.org/10.1145/2362394.2362400.

Doumen, S., Verschueren, K., Buyse, E., Germeijs, V., Luyckx, K., & Soenens, B. (2008). Reciprocal relations between teacher–child conflict and aggressive behavior in kindergarten: A three-wave longitudinal study. Journal of Clinical Child & Adolescent Psychology, 37(3), 588–599. https://doi.org/10.1080/15374410802148079.

Engeström, Y. (1999). Activity Theory and individual and social transformation. In Y. Engeström et al. (Eds.), Perspectives on activity theory: Learning in doing: Social, cognitive & computational perspective (pp. 19–39). New York: Cambridge University Press.

Englander, E. K., & Muldowney, A. M. (2007). Just Turn the Darn Thing Off: Understanding Cyberbullying. In Proceedings of persistently safe schools: The 2007 national conference on safe schools.

Erdur-Baker, Ö. (2010). Cyberbullying and its correlation to traditional bullying, gender and frequent and risky usage of internet-mediated communication tools. New Media & Society, 12(1), 109–125. https://doi.org/10.1177/1461444809341260.

Fekkes, M., Pijpers, F. I., & Verloove-Vanhorick, S. P. (2004). Bullying: Who does what, when and where? Involvement of children, teachers and parents in bullying behavior. Health Education Research, 20(1), 81–91. https://doi.org/10.1093/her/cyg100.

Fioravera, M., Marchisio, M., Di Caro, L., & Rabellino, S. (2018). Alignment of content, prerequisites and educational objectives: towards automated mapping of digital learning resources. In The 14th International Scientific Conference eLearning and Software for Education (Vol. 4, pp. 335–342). https://doi.org/10.12753/2066-026X-18-261.

Flor, M., Yoon, S. Y., Hao, J., Liu, L., & von Davier, A. A. (2016). Automated classification of collaborative problem solving interactions in simulated science tasks. In Proceedings of the 11th workshop on innovative use of NLP for building educational applications (pp. 31–41). San Diego, California. Association for Computational Linguistics. June 16.

García-García, C., Chulvi, V., & Royo, M. (2017). Knowledge generation for enhancing design creativity through co-creative Virtual Learning Communities. Thinking Skills and Creativity, 24, 12–19. https://doi.org/10.1016/j.tsc.2017.02.009.

Garrison, D. R. (1997). Self-directed learning: Toward a comprehensive model. Adult Education Quarterly, 48(1), 18–33. https://doi.org/10.1177/074171369704800103.

Hai-Jew, S. (2019). Running a ‘Deep Learning’ artificial neural network in RapidMiner Studio. C2C Digital Magazine, 1(10), 17.

Haidar, B., Chamoun, M., & Serhrouchni, A. (2017). A multilingual system for cyberbullying detection: Arabic content detection using machine learning. Advances in Science, Technology and Engineering Systems Journal, 2(6), 275–284.

Hiltz, S. R. (1985). Online communities: A case study of the office of the future. Bristol: Intellect Books.

Hinduja, S., & Patchin, J. W. (2017). Cultivating youth resilience to prevent bullying and cyberbullying victimization. Child Abuse & Neglect, 73, 51–62. https://doi.org/10.1016/j.chiabu.2017.09.010.

Hod, Y., Bielaczyc, K., & Ben-Zvi, D. (2018). Revisiting learning communities: Innovations in theory and practice. Instructional Science, 46(4), 489–506. https://doi.org/10.1007/s11251-018-9467-z.

Ioannidis, J. P., Tarone, R., & McLaughlin, J. K. (2011). The false-positive to false-negative ratio in epidemiologic studies. Epidemiology, 22(4), 450–456. https://doi.org/10.1097/EDE.0b013e31821b506e.

Jeong, H., Cress, U., Moskaliuk, J., et al. (2017). Joint interactions in large online knowledge communities: The A3C framework. IJCSCL, 12(2), 133–151. https://doi.org/10.1007/s11412-017-9256-8.

John, G. H., & Langley, P. (1995). Estimating continuous distributions in Bayesian classifiers. In Proceedings of the eleventh conference on uncertainty in artificial intelligence (pp. 338–345). Morgan Kaufmann Publishers Inc.

Klomek, A. B., Sourander, A., & Gould, M. S. (2011). Bullying and suicide. Psychiatric Times, 28(2), 1–6.

Koh, J., & Kim, Y. G. (2003). Sense of virtual community: A conceptual framework and empirical validation. International Journal of Electronic Commerce, 8(2), 75–94. https://doi.org/10.1080/10864415.2003.11044295.

Leontyev, A. (2009). Activity and Consciousness. Marxists Internet Archive. Retrieved from https://www.marxists.org/archive/leontev/works/activity-consciousness.pdf.

Menesini, E., Nocentini, A., Palladino, B. E., Frisén, A., Berne, S., Ortega-Ruiz, R., et al. (2012). Cyberbullying definition among adolescents: A comparison across six European countries. Cyberpsychology, Behavior, and Social Networking, 15(9), 455–463. https://doi.org/10.1089/cyber.2012.0040.

McInnerney, J. M., & Roberts, T. S. (2004). Online learning: Social interaction and the creation of a sense of community. Educational Technology & Society, 7(3), 73–81.

McMillan, D. W., & Chavis, D. M. (1986). Sense of community: A definition and theory. Journal of Community Psychology, 14(1), 6–23. https://doi.org/10.1002/1520-6629(198601)14:1%3c6:AID-JCOP2290140103%3e3.0.CO;2-I.

Nahar, V., Li, X., & Pang, C. (2013). An effective approach for cyberbullying detection. Communications in Information Science and Management Engineering, 3(5), 238. https://doi.org/10.15680/IJIRCCE.2016.0404305.

Nandhini, B. S., & Sheeba, J. I. (2015). Online social network bullying detection using intelligence techniques. Procedia Computer Science, 45, 485–492. https://doi.org/10.1016/j.procs.2015.03.085.

Ng, J. C. (2017). Interactivity in virtual learning groups: Theories, strategies, and the state of literature. International Journal of Information and Education Technology, 7(1), 46–52.

Nikiforos, S., Tzanavaris, S., & Kermanidis, K. L. (2020). Virtual learning communities (VLCs) rethinking: Collaboration between learning communities. Education and Information Technologies. https://doi.org/10.1007/s10639-020-10132-4.

Ntais, G. (2006). Development of a Stemmer for the Greek Language. (Master Thesis, Stockholm University, Royal Institute of Technology, Department of Computer and Systems Sciences, pp. 1–40).

Ortega, R., Elipe, P., Mora-Merchán, J. A., Genta, M. L., Brighi, A., Guarini, A., et al. (2012). The emotional impact of bullying and cyberbullying on victims: A European cross-national study. Aggressive Behavior, 38(5), 342–356. https://doi.org/10.1002/ab.21440.

Özel, S. A., Saraç, E., Akdemir, S., & Aksu, H. (2017, October). Detection of cyberbullying on social media messages in Turkish. In Computer science and engineering (UBMK), 2017 international conference on (pp. 366–370). IEEE. https://doi.org/10.1109/ubmk.2017.8093411.

Preece, J., & Maloney-Krichmar, D. (2003). Online communities. In J. Jacko & A. Sears (Eds.), Handbook of human–computer interaction (pp. 596–620). Mahwah: Lawrence Erlbaum.

Reynolds, K., Kontostathis, A., & Edwards, L. (2011). Using machine learning to detect cyberbullying. In Machine learning and applications and workshops (ICMLA), 2011 10th international conference on (Vol. 2, pp. 241–244). IEEE. https://doi.org/10.1109/ICMLA.2011.152.

Rheingold, H. (1993). The virtual community: Homesteading on the electronic frontier. New York: MIT.

Rovai, A. P. (2002a). Building sense of community at a distance. The International Review of Research in Open and Distributed Learning, 3(1), 1–16.

Rovai, A. P. (2002b). Sense of community, perceived cognitive learning, and persistence in asynchronous learning networks. The Internet and Higher Education, 5(4), 319–332.

Rovai, A. P., & Jordan, H. (2004). Blended learning and sense of community: A comparative analysis with traditional and fully online graduate courses. The International Review of Research in Open and Distributed Learning, 5, 2.

Sanchez, H., & Kumar, S. (2011). Twitter bullying detection. Series NSDI, 12, 15–15.

Scardamalia, M., & Bereiter, C. (1994). Computer support for knowledge-building communities. The Journal of Learning Sciences, 3(3), 265–283. https://doi.org/10.1207/s15327809jls0303_3.

Schmidhuber, J. (2015). Deep learning in neural networks: An overview. Neural Networks, 61, 85–117. https://doi.org/10.1016/j.neunet.2014.09.003.

Sokolov, A. (1972). Inner speech and thought. New York: Plenum Press.

Suleiman, D., & Al-Naymat, G. (2017). SMS spam detection using H2O framework. Procedia Computer Science, 113, 154–161. https://doi.org/10.1016/j.procs.2017.08.335.

Song, B., & Li, X. (2019). The research of intelligent virtual learning community. International Journal of Machine Learning and Computing, 9, 621.

Stahl, G., & Hakkarainen, K. (2019). Theories of CSCL. In U. Cress, C. Rosé, A. Wise, & J. Oshima (Eds.), International handbook of computer-supported collaborative learning. New York: Springer.

Tommasel, A., Rodriguez, J. M., & Godoy, D. (2018, August). Textual aggression detection through deep learning. In Proceedings of the first workshop on trolling, aggression and cyberbullying (TRAC-2018) (pp. 177–187). Santa Fe, USA.

Thomas, D. R., Becker, W. C., & Armstrong, M. (1968). Production and elimination of disruptive classroom behavior by systematically varying teacher's behavior. Journal of Applied Behavior Analysis, 1(1), 35–45. https://doi.org/10.1901/jaba.1968.1-35.

Yin, D., Xue, Z., Hong, L., Davison, B. D., Kontostathis, A., & Edwards, L. (2009). Detection of harassment on web 2.0. Proceedings of the Content Analysis in the WEB, 2, 1–7.

Vanhove, T., Leroux, P., Wauters, T., & De Turck, F. (2013, May). Towards the design of a platform for abuse detection in osns using multimedial data analysis. In Integrated Network Management (IM 2013), 2013 IFIP/IEEE International Symposium on (pp. 1195–1198). IEEE.

Vreeman, R. C., & Carroll, A. E. (2007). A systematic review of school-based interventions to prevent bullying. Archives of Pediatrics & Adolescent Medicine, 161(1), 78–88. https://doi.org/10.1001/archpedi.161.1.78.

Vygotsky, L. S. (2008). Thought & language, (A. Rodi, Transl.). Athens: Gnosi.

Xu, J. M., Jun, K. S., Zhu, X., & Bellmore, A. (2012, June). Learning from bullying traces in social media. In Proceedings of the 2012 conference of the North American chapter of the association for computational linguistics: Human language technologies (pp. 656–666). Association for Computational Linguistics.

Zhao, R., Zhou, A., & Mao, K. (2016, January). Automatic detection of cyberbullying on social networks based on bullying features. In Proceedings of the 17th international conference on distributed computing and networking (pp. 1–6). https://doi.org/10.1145/2833312.2849567.

Funding

This research did not receive any specific grant from funding agencies in the public, commercial, or not-for-profit sectors.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki Declaration and its later amendments or comparable ethical standards. This article does not contain any studies with animals performed by any of the authors.

Informed consent

Informed consent was obtained from all individual participants included in the study.

Cite this article

Nikiforos, S., Tzanavaris, S. & Kermanidis, KL. Virtual learning communities (VLCs) rethinking: influence on behavior modification—bullying detection through machine learning and natural language processing. J. Comput. Educ. 7, 531–551 (2020). https://doi.org/10.1007/s40692-020-00166-5

DOI: https://doi.org/10.1007/s40692-020-00166-5