Abstract

Introduction

Medical information is expanding at exponential rates. Practicing physicians must acquire skills to efficiently navigate large bodies of evidence to answer clinical questions daily. How best to prepare medical students to meet this challenge remains unknown. The authors sought to design, implement, and assess a pragmatic evidence-based medicine (EBM) course engaging students at the transition from undergraduate to graduate medical education.

Materials and Methods

An elective course was offered during the required 1-month Capstone medical school curriculum. Participants included one hundred sixty-eight graduating fourth-year medical students at Emory University School of Medicine who completed the course from 2012 to 2018. Through interactive didactics, small groups, and independent work, students actively employed various electronic tools to navigate medical literature and engaged in structured critical appraisal of guidelines and meta-analyses to answer clinical questions.

Results

Assessment data was available for 161 of the 168 participants (95.8%). Pre- and post-assessments showed significant improvement in students’ perceived and measured EBM knowledge and skills (p < 0.001), consistent across gender and specialty subgroups.

Discussion

The Capstone EBM course empowered graduating medical students to comfortably navigate electronic medical resources and accurately appraise summary literature. The objective improvement in knowledge, the perceived improvement in skill, and the subjective comments support this curricular approach to effectively prepare graduating students for pragmatic practice-based learning as resident physicians.

Introduction

Within the practice-based learning and improvement competency, the Accreditation Council for Graduate Medical Education (ACGME) mandates that resident physician trainees learn how to search for and apply scientific knowledge efficiently in order to guide medical decisions at the point of care [1]. With the exponential growth of peer-reviewed medical publications (more than 1 million new PubMed references annually [2]), clinicians cannot become familiar with all relevant publications in their field. To excel in practice-based learning, future clinicians must develop skills to locate resources rapidly and stay current with published literature affecting their individual practice. Many medical schools have adopted evidence-based medicine (EBM) curricula that teach students to interpret literature; however, such curricula less often train students to use electronic tools efficiently to locate high-quality evidence among available resources, or to critically appraise that evidence quickly enough to inform clinical practice at the point of care.

Recognizing the need for a consistent approach to teaching EBM, in 2003, the Conference of Evidence-Based Health Care Teachers and Developers outlined the minimum criteria necessary for evidence-based practice curricula. Their Sicily Statement maintains that curricula must contain core elements of the five-step model of evidence-based practice, including “translation of uncertainty to an answerable question, systematic retrieval of best evidence available [and] critical appraisal of evidence.” [3] Since that time, EBM courses have continued to address students’ knowledge, skills, and attitudes through various didactic methods and in many different learning environments [4]. Unfortunately, how best to teach EBM to future practitioners remains unclear. A recent systematic review was unable to determine the most effective educational theory on which to base EBM courses, in part because of profound variability in course duration, population, content, and timing [5]. Due to this heterogeneity, as well as inadequate descriptions of curricula and lack of validated test measures, researchers have been unable to draw conclusions about the superiority of particular EBM educational methods [4, 6,7,8]. Furthermore, a recent study demonstrated that performance in EBM deteriorates during the fourth year of medical school, further suggesting a need for curricular reform in this cohort of students [9]. We describe the “Capstone Evidence Based Medicine” course (henceforth called Capstone EBM) for graduating medical students as a novel and pragmatic approach to teach students transitioning to graduate medical education how to effectively maintain engagement with high-quality published literature at the point of care.

Materials and Methods

In 2011, Emory University School of Medicine incorporated a required 1-month “Capstone” curriculum for all graduating medical students to better facilitate their transition from student to physician. As part of this curriculum, faculty proposed “Selectives” (elective workshops during the Capstone curriculum), with themes that included “How to Be a Good Doctor,” “Communicating Effectively,” and “Professional & Personal Well-being.” Students personalized their Selective schedules in addition to participating in other required portions of the Capstone curriculum. From 2012 to 2018, 168 graduating students (representing approximately 18% of all graduates over 7 years) chose the Capstone EBM course as part of their Capstone curriculum.

Faculty with expertise in EBM designed the Capstone EBM course (Fig. 1) as a selective offering that aimed to help graduating students develop the more advanced and practical EBM skills that are necessary for clinical practice as physicians. The learning objectives stated that, upon completion of the course, students would be able to (1) use high-quality electronic “pull” resources to find evidence-based answers to clinical questions at the point of care; (2) use electronic “push” resources to help keep up with the relevant literature in their field or within topic(s) of interest; (3) identify and use mobile device applications to help make point-of-care practice efficient, evidence-based, and practical; and (4) critically appraise meta-analyses and guidelines to incorporate evidence into clinical practice. Although students had been exposed to limited portions of this content much earlier in their training, as part of the medical school’s broader EBM curriculum (Fig. 2), our Capstone course offered a novel opportunity to apply practical searching skills and summary-literature interpretation to clinical practice.

Fig. 2. Schematic of the 4-year integrated evidence-based medicine curriculum, by year (M1 to M4) and by curricular phase (foundations to translation). Timeline of the Emory University School of Medicine curriculum from the first (M1) through fourth (M4) years. Capstone, during which the Capstone EBM course is offered, appears at the upper right of the figure. 1st row (blue) = EBM training throughout the curriculum. 2nd row (yellow) = year of medical school training. 3rd row = phase of medical school curriculum (foundations = pre-clinical; applications = traditional core clinical rotations; discovery = 5-month research period; translation = traditional senior-year clinical rotations and electives; Capstone = 1-month required Capstone course for all students). The asterisk indicates very limited or minimal structured EBM training during core clinical rotations.

In preparation for the course, students were assigned background reading from (a) selected chapters of JAMA Evidence Users’ Guides to the Medical Literature [10], and (b) a representative faculty-selected meta-analysis and guideline. During day 1 of the course, faculty facilitated interactive didactic sessions focused on electronic resources, as well as small group sessions, composed of 10–16 medical students and an overseeing clinical faculty member, where students practiced structured critical appraisal of a meta-analysis and a clinical practice guideline.

During the intersession between day 1 and day 2 of the course, students individually investigated different online “push” resources (e.g., ACP Journal Wise®, NEJM Journal Watch) and “pull” resources (e.g., DynaMed Plus®, UpToDate®). They also designed clinical questions, searched for summary literature to answer these questions, and worked in small groups (2–4 students per group) to critically appraise literature for application to clinical scenarios.

During day 2 of Capstone EBM, individual students presented the strengths and weaknesses of the various “pull” and “push” resources they had investigated. Then, student small groups presented critical appraisals of the meta-analysis or guideline they had selected to answer their clinical questions, followed by peer and faculty discussion.

Program Evaluation

To gauge whether the course achieved its objectives, a 10-item pre-assessment and a similar but distinct 10-item post-assessment were administered before and after the Capstone EBM course, respectively (Appendix Fig. 1 and Appendix Fig. 2). Four 5-point Likert-scale questions assessed students’ self-efficacy to use online “push” resources, to search for high-quality summary literature efficiently and effectively, and to interpret guidelines and meta-analyses to answer clinical questions. The remaining six questions assessed students’ knowledge of the quality of various evidence-based resources and their ability to critically appraise summary literature.

Statistical Methods

Demographic characteristics were compared with the chi-square test. Likert-scale responses were compared across subgroups, including time point, graduation year, gender, and specialty, using the Mann-Whitney test or the Kruskal-Wallis test. A one-sample t-test was used to compare pre- and post-course knowledge scores.
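For readers less familiar with these tests, the following Python sketch (standard library only) shows how the statistics named above are computed. It is an illustration under stated assumptions, not the authors’ analysis code: the function names are hypothetical, the chi-square version omits Yates’ continuity correction, the Mann-Whitney function returns only the U statistic (no p-value), and the one-sample t-test is assumed to be applied to each student’s post-minus-pre difference, which makes it equivalent to a paired t-test.

```python
import math
import statistics


def chi_square_2x2(a, b, c, d):
    """Pearson chi-square test (no continuity correction) for the 2x2 table
    [[a, b], [c, d]], e.g. rows = gender, columns = generalist vs. not.
    Returns (statistic, p_value) with 1 degree of freedom."""
    n = a + b + c + d
    rows = (a + b, c + d)
    cols = (a + c, b + d)
    observed = ((a, b), (c, d))
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = rows[i] * cols[j] / n
            stat += (observed[i][j] - expected) ** 2 / expected
    # With 1 df the statistic is a squared standard normal, so
    # P(X > stat) = P(|Z| > sqrt(stat)) = erfc(sqrt(stat / 2)).
    p_value = math.erfc(math.sqrt(stat / 2))
    return stat, p_value


def mann_whitney_u(x, y):
    """Mann-Whitney U statistic for sample x relative to sample y.
    Counts pairs in which x exceeds y; ties (frequent with 5-point Likert
    items) count one half, which matches the midrank formula."""
    u = 0.0
    for xi in x:
        for yj in y:
            if xi > yj:
                u += 1.0
            elif xi == yj:
                u += 0.5
    return u


def paired_t_statistic(pre, post):
    """One-sample t statistic testing whether the mean post-minus-pre
    difference is zero (equivalent to a paired t-test).
    Returns (t, degrees_of_freedom); the p-value would come from a
    t distribution, which the standard library does not provide."""
    diffs = [after - before for before, after in zip(pre, post)]
    n = len(diffs)
    standard_error = statistics.stdev(diffs) / math.sqrt(n)
    return statistics.mean(diffs) / standard_error, n - 1
```

As a usage example with made-up counts (not study data), `chi_square_2x2(20, 10, 10, 20)` gives a statistic of 100/15 ≈ 6.67 and a p-value of about 0.01. For the U statistic, `mann_whitney_u(x, y) + mann_whitney_u(y, x)` always equals `len(x) * len(y)`, a quick consistency check when handling heavily tied Likert data.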

Differences in pre-course knowledge by subgroup were tested with a 2-sample t-test, or with the Wilcoxon signed-rank test when the normality assumption was not met. Knowledge gain by subgroup was tested with analysis of covariance (ANCOVA), with pre-course knowledge score as the covariate. Tests for interactions were also conducted.
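The ANCOVA described above can be illustrated with a minimal sketch, assuming two subgroups coded 0 and 1 (for example, two gender categories) and a common slope for the pre-course covariate. Under that assumption, the adjusted group difference equals the raw difference in mean post-course scores minus the pooled within-group slope times the difference in mean pre-course scores. The function names and toy data are hypothetical; the sketch reports the F statistic for the group effect but not its p-value, and it does not fit the group-by-covariate interaction term that the authors also tested.

```python
import statistics


def _centered_sums(xs, ys):
    """Means and centered sums of squares/cross-products for paired lists."""
    mx, my = statistics.fmean(xs), statistics.fmean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    syy = sum((y - my) ** 2 for y in ys)
    return mx, my, sxx, sxy, syy


def ancova_two_groups(pre, post, group):
    """Common-slope ANCOVA: post = intercept + effect * group + slope * pre.
    `group` holds 0/1 labels. Returns (adjusted_effect, slope, f_group)."""
    per_group = {}
    for label in (0, 1):
        xs = [x for x, g in zip(pre, group) if g == label]
        ys = [y for y, g in zip(post, group) if g == label]
        per_group[label] = _centered_sums(xs, ys)
    mx0, my0, sxx0, sxy0, syy0 = per_group[0]
    mx1, my1, sxx1, sxy1, syy1 = per_group[1]

    # Pooled within-group slope for the pre-course covariate.
    sxx_w, sxy_w, syy_w = sxx0 + sxx1, sxy0 + sxy1, syy0 + syy1
    slope = sxy_w / sxx_w

    # Group difference in post-course scores, adjusted for pre-course scores.
    adjusted_effect = (my1 - my0) - slope * (mx1 - mx0)

    # F test for the group term: compare residual sums of squares of the
    # full model (group + covariate) and the covariate-only model.
    sse_full = syy_w - sxy_w ** 2 / sxx_w
    _, _, sxx_t, sxy_t, syy_t = _centered_sums(pre, post)
    sse_reduced = syy_t - sxy_t ** 2 / sxx_t
    df_error = len(post) - 3  # parameters: intercept, group effect, slope
    f_group = (sse_reduced - sse_full) / (sse_full / df_error)
    return adjusted_effect, slope, f_group
```

With toy inputs `pre = [40, 50, 60, 40, 50, 60]`, `post = [55, 66, 74, 60, 71, 79]`, and `group = [0, 0, 0, 1, 1, 1]`, the function returns an adjusted effect of 5.0, a pooled slope of 0.95, and F = 37.5 on (1, 3) degrees of freedom. Adjusting for the covariate matters whenever subgroups start from different pre-course means; here the pre-course means are equal, so the adjusted and raw differences coincide.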

This project was granted exemption status by its institutional review board.

Results

Complete assessment data was available for 161 of the 168 participants (95.8%). Capstone EBM students were well represented across specialties, with 71% of students matched to generalist specialties to start residency training. Gender distribution in the Capstone EBM course was approximately equal, but a significantly higher proportion of women than men had matched to generalist specialties (81% vs. 63%, p = 0.02). Fourteen percent of students were graduating with dual degrees (Table 1).

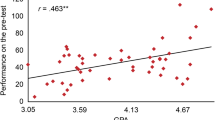

Students’ self-efficacy to use push resources, to search the literature, and to interpret guidelines and meta-analyses to answer clinical questions improved significantly from before to after the course (p < 0.001 for each Likert-scale question; Fig. 3). Overall, students’ average knowledge assessment score improved significantly (47% vs. 68% correct on the pre- vs. post-assessment, p < 0.001); these knowledge gains were significant for accessing literature and interpreting meta-analyses (p < 0.001), but not for interpreting guidelines (p = 0.72) (Fig. 4). Pre- to post-course changes in self-efficacy and knowledge did not differ by graduation year, gender, or future specialty; furthermore, there was no interaction between gender and generalist specialty with respect to post-assessment knowledge scores (p = 0.21 for interaction).
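The overall knowledge result can be restated as simple arithmetic on the reported average scores; this is only a re-expression of the figures above, not an additional analysis:

```latex
\Delta_{\text{abs}} = 68\% - 47\% = 21 \text{ percentage points},
\qquad
\Delta_{\text{rel}} = \frac{68 - 47}{47} \approx 0.447 \;(\approx 45\% \text{ relative increase})
```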

Fig. 3. Changes in student self-efficacy (perceived comfort level) with each course objective domain of the Capstone EBM course, from pre-assessment to post-assessment, questions 1–4 (Q1–Q4). Pre, pre-assessment average Likert-scale (1–5) score; Post, post-assessment average Likert-scale (1–5) score. Questions (5-point Likert scale from strongly disagree to strongly agree): Q1, comfort level with using electronic resources that send information to users automatically (i.e., “push” resources); Q2, comfort level with using electronic resources to search for high-quality evidence to answer clinical questions (“pull” resources); Q3, comfort level with interpreting practice guidelines; Q4, comfort level with interpreting meta-analyses.

Fig. 4. Changes in evidence-based medicine knowledge and skills scores, by topic, from pre-assessment to post-assessment of the Capstone EBM course. Pre, pre-assessment score (%); Post, post-assessment score (%); Push/Pull, search-focused pre- and post-assessment questions; Guide, guideline-focused pre- and post-assessment questions; Meta-analysis, meta-analysis-focused pre- and post-assessment questions.

Representative student comments about the Capstone EBM course included the following: “one of the most useful things I had in medical school,” “I feel much more comfortable with searching the literature,” and “Incredibly helpful and something I will use as a resident.” Based on independent Capstone course evaluations and the School of Medicine’s formal exit interview and survey comments, curriculum leadership recently incorporated portions of the Capstone EBM course into an earlier phase of the 4-year medical school curriculum for all students.

Discussion

In this study, we hypothesized that our undergraduate medical education Capstone EBM course would lead to significant improvement in participants’ EBM knowledge, skill, and confidence. Our findings demonstrate that an interactive and pragmatic EBM course can empower students to comfortably navigate electronic medical resources and accurately appraise summary literature—skills that are vital to trainee success as they transition from students to resident physicians.

We designed this Capstone course with the support of educational-theory literature on the most effective ways to teach EBM. For example, our course incorporated all four components that Maggio et al. [11] propose for effective EBM teaching: learning tasks (answering a clinical question using relevant literature); supportive information (pre-reading JAMAevidence®); procedural information (a step-by-step outline for critically appraising guidelines and meta-analyses); and part-task practice (quickly searching resources using the PICO format: patient/population, intervention, comparison, outcome). Our course used a variety of teaching methods, including classical didactics, interactive audience participation, small-group collaborative learning sessions, case-based clinical scenarios, and student-led peer teaching. Our success in improving students’ knowledge and skill with this blended approach is consistent with the current medical education literature. Systematic reviews have found that no single educational method for teaching EBM is superior to another, suggesting that a combination might be the best approach [4, 6, 8]. Indeed, Kyriakoulis et al. [12] found that a “multifaceted approach” may be the most effective method of teaching EBM. This is further supported by an overview of systematic reviews suggesting that a blended curriculum such as ours, which integrates clinical scenarios, improves students’ EBM knowledge, attitudes, and skills [13].

Our study’s strengths include a relatively large sample of students, which allowed us to detect significant improvements in both students’ perceptions of their own skills and their objective knowledge. Participating students graduated over many years (2012–2018) and pursued a variety of specialties (17 in total), suggesting that our findings may generalize across graduating classes and specialties.

Our study has several limitations. First, pre- and post-test data were missing for 7 of the 168 students who completed the course; because they represent less than 5% of our sample, they are unlikely to have affected our overall results. Second, although we measured the effectiveness of our course by comparing students’ EBM self-efficacy, knowledge, and skills before and after the elective, we did not use a validated instrument to do so. Unfortunately, a large systematic review demonstrated a paucity of validated instruments that assess EBM education comprehensively, and development of such tools is needed [14]. Third, we did not investigate why some students, but not others, chose to enroll in this course. Although underperforming medical students might have selected this course to bring their skills up to the level of their graduating peers, we believe that the relatively high enrollment of students earning dual degrees (14%, Table 1) suggests that enrollees included students with a sufficient, or even strong, baseline EBM skill set. Fourth, we did not assess the impact of our course on students’ practical application of EBM skills once they became resident physicians. Other EBM medical education studies have noted this limitation [6, 15], most likely because of the difficulty of maintaining contact with students after graduation.

Lastly, we did not incorporate a control group to compare pre- and post-course EBM knowledge and skills between those who enrolled in the course and those who did not. A control group would have allowed us to assess selection bias, as it is possible that those who voluntarily enrolled in the course were more confident and skilled in EBM than their peers at baseline, or vice versa. One way to compensate for the lack of a control group would be to assess students’ prior exposure to biostatistics and epidemiology, or their general electronic literacy, elements of “clinical maturity” that might affect EBM competency [16]. Because biostatistics and epidemiology are integrated into the first 2 years of the curriculum at our institution (Fig. 2), we are fairly confident that students in our study had comparable exposure to basic EBM concepts before enrolling in the Capstone EBM course.

Conclusion

A Capstone EBM course led to significant improvement in graduating medical students’ practical EBM knowledge, attitudes, and skills as they entered postgraduate training. These improvements were consistent across gender and various matched specialties, suggesting generalizability of our findings. We provide a detailed description of our intervention so that others might consider applying this framework to their own EBM courses.

References

1. The Accreditation Council for Graduate Medical Education and The American Board of Internal Medicine. The Internal Medicine Milestone Project: A Joint Initiative of the Accreditation Council for Graduate Medical Education and the American Board of Internal Medicine. Available at https://www.acgme.org/Portals/0/PDFs/Milestones/InternalMedicineMilestones.pdf. Accessed 15 Mar 2020.

2. Dunn AG, Coiera E, Mandl KD. Is Biblioleaks inevitable? J Med Internet Res. 2014;16(4):e112. https://doi.org/10.2196/jmir.3331 [published Online First: 2014/04/24].

3. Dawes M, Summerskill W, Glasziou P, et al. Sicily statement on evidence-based practice. BMC Med Educ. 2005;5(1):1. https://doi.org/10.1186/1472-6920-5-1 [published Online First: 2005/01/07].

4. Maggio LA, Tannery NH, Chen HC, et al. Evidence-based medicine training in undergraduate medical education: a review and critique of the literature published 2006–2011. Acad Med. 2013;88(7):1022–8. https://doi.org/10.1097/ACM.0b013e3182951959 [published Online First: 2013/05/25].

5. Ramis MA, Chang A, Conway A, et al. Theory-based strategies for teaching evidence-based practice to undergraduate health students: a systematic review. BMC Med Educ. 2019;19(1):267. https://doi.org/10.1186/s12909-019-1698-4 [published Online First: 2019/07/20].

6. Ahmadi SF, Baradaran HR, Ahmadi E. Effectiveness of teaching evidence-based medicine to undergraduate medical students: a BEME systematic review. Med Teach. 2015;37(1):21–30. https://doi.org/10.3109/0142159X.2014.971724 [published Online First: 2014/11/18].

7. Flores-Mateo G, Argimon JM. Evidence based practice in postgraduate healthcare education: a systematic review. BMC Health Serv Res. 2007;7:119. https://doi.org/10.1186/1472-6963-7-119 [published Online First: 2007/07/28].

8. Ilic D, Maloney S. Methods of teaching medical trainees evidence-based medicine: a systematic review. Med Educ. 2014;48(2):124–35. https://doi.org/10.1111/medu.12288 [published Online First: 2014/02/18].

9. Heidemann LA, Keilin CA, Santen SA, et al. Does performance on evidence-based medicine and urgent clinical scenarios assessments deteriorate during the fourth year of medical school? Findings from one institution. Acad Med. 2019. https://doi.org/10.1097/ACM.0000000000002583 [published Online First: 2019/01/15].

10. Guyatt G, Rennie D, Meade MO, Cook DJ, editors. Users’ guides to the medical literature: a manual for evidence-based clinical practice. 3rd ed. New York: McGraw-Hill; 2015.

11. Maggio LA, ten Cate O, Irby DM, et al. Designing evidence-based medicine training to optimize the transfer of skills from the classroom to clinical practice: applying the four component instructional design model. Acad Med. 2015;90(11):1457–61. https://doi.org/10.1097/ACM.0000000000000769 [published Online First: 2015/05/21].

12. Kyriakoulis K, Patelarou A, Laliotis A, et al. Educational strategies for teaching evidence-based practice to undergraduate health students: systematic review. J Educ Eval Health Prof. 2016;13:34. https://doi.org/10.3352/jeehp.2016.13.34 [published Online First: 2016/10/18].

13. Young T, Rohwer A, Volmink J, et al. What are the effects of teaching evidence-based health care (EBHC)? Overview of systematic reviews. PLoS One. 2014;9(1):e86706. https://doi.org/10.1371/journal.pone.0086706 [published Online First: 2014/02/04].

14. Shaneyfelt T, Baum KD, Bell D, et al. Instruments for evaluating education in evidence-based practice: a systematic review. JAMA. 2006;296(9):1116–27. https://doi.org/10.1001/jama.296.9.1116 [published Online First: 2006/09/07].

15. Hecht L, Buhse S, Meyer G. Effectiveness of training in evidence-based medicine skills for healthcare professionals: a systematic review. BMC Med Educ. 2016;16:103. https://doi.org/10.1186/s12909-016-0616-2 [published Online First: 2016/04/06].

16. Ilic D, Diug B. The impact of clinical maturity on competency in evidence-based medicine: a mixed-methods study. Postgrad Med J. 2016;92(1091):506–9. https://doi.org/10.1136/postgradmedj-2015-133487 [published Online First: 2016/02/13].

Acknowledgments

The authors wish to thank Sheryl Heron, MD, J. Richard Pittman, MD, and David Schulman, MD, MPH, for their contributions to this manuscript.

Ethics declarations

Conflict of Interest

The following authors have no conflicts of interest to disclose: Caitlin Anderson MD MPH, John Haydek MD, Lucas Golub MD, Traci Leong PhD, Dustin T. Smith MD, Jason Liebzeit MD. Daniel D. Dressler MD MSc discloses royalties from McGraw-Hill Education.

Additional information

Publisher’s Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Caitlin R. Anderson and John Haydek are co-lead authors.

Electronic Supplementary Material

ESM 1

(DOCX 41 kb)

About this article

Cite this article

Anderson, C.R., Haydek, J., Golub, L. et al. Practical Evidence-Based Medicine at the Student-to-Physician Transition: Effectiveness of an Undergraduate Medical Education Capstone Course. Med.Sci.Educ. 30, 885–890 (2020). https://doi.org/10.1007/s40670-020-00970-9

DOI: https://doi.org/10.1007/s40670-020-00970-9