Abstract

Computer-assisted or e-learning modules can be an effective mode of education, particularly if they incorporate interactivity and feedback. However, such modules can face barriers to adoption by faculty, particularly when they involve the use of new software. In this report, we describe the generation of e-learning modules derived using standard features of PowerPoint™ that include feedback and require active engagement by students. These modules, which we term Interactive PowerPoints™ or IPPs, have been used in a variety of settings within the pre-clerkship years of a standard medical (MD) curriculum. Our analysis demonstrates that use of IPPs as supplementary material can enhance performance on standard multiple choice exam questions and that IPPs are at least equivalent in efficacy to didactic lectures for delivery of specific concepts. In addition, use of IPPs for practice Genetics problems resulted in a significant increase in student perceptions of comprehension and ability to apply knowledge. Finally, students indicated a distinct preference for blended learning experiences incorporating IPPs. Thus, IPPs represent a low-cost, easily adoptable e-learning mechanism that provides an efficient and interactive learning experience for medical students.

Introduction

Computer-assisted learning or e-learning in medical education incorporates a variety of formats from simple recorded lectures to interactive tutorials, computer-based simulations, and virtual patients [1–3]. Such formats are increasingly being incorporated at multiple levels of medical education, often as part of a blended learning experience where e-learning is combined with more traditional formats such as lectures [4–6]. Blended learning provides learning outcomes at least equivalent to those of traditional educational formats but results in higher student satisfaction and confidence in achievement of learning objectives. Specifically in regard to the e-learning component, most studies indicate that e-learning can be as effective as traditional content delivery for knowledge acquisition [1, 3, 7–9]. The advantages of e-learning include the ability of students to regulate their pace of learning and an increase in student satisfaction with their learning experience [3, 8]. However, it is clear that the quality of e-learning modules varies widely, and the incorporation of interactivity and feedback is an important factor leading to improved learning outcomes [1, 9]. In these cases, e-learning modules can mimic aspects of active learning, which has been shown to significantly improve student performance [10, 11].

The development of high-quality e-learning modules that incorporate these features may require the generation of specific software or the use of targeted commercially available software that could create a barrier to widespread adoption by teaching faculty [12–14]. To address this issue, we have utilized specific features of PowerPoint™ to generate a series of interactive e-learning modules that we term Interactive PowerPoints™ or IPPs. From the faculty perspective, IPPs are simple to generate using software they are already familiar with, thus removing a barrier to widespread adoption. The IPPs are designed so that students are required to actively engage in order to complete the module, providing an interactive experience for the learner. In addition, opportunities for feedback and the ability to link out to published work or specific web sites are incorporated. In this report, we present an analysis of the efficacy of IPPs used in a variety of formats. For example, IPPs were utilized as supplementary material to didactic lectures addressing specific concepts that have historically been difficult for students to grasp in a lecture format. Other IPPs were developed to enable application of knowledge acquired in lecture, to replace didactic lecture material, or to supplement Medical Microbiology laboratory sessions in a peer-to-peer learning environment. Our analysis of the efficacy of IPPs consisted of course evaluations, performance on multiple choice questions (MCQs), and a survey to assess comprehension of basic science concepts and competency in the application of this knowledge pre- and post-introduction of the IPPs. Our results show that IPPs are at least equivalent to traditional formats in knowledge acquisition and, when used as supplementary material, can significantly enhance student performance on MCQs. The IPPs also provided students with increased comprehension and the ability to apply their knowledge. In addition, the students indicated a distinct preference for blended learning experiences incorporating IPPs. Thus, IPPs represent a low-cost, easily adoptable e-learning mechanism that provides for an efficient and interactive learning experience for medical students.

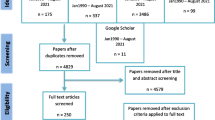

Methods

Generation of IPPs

The Interactive PowerPoints™ were generated using the standard Microsoft package (details provided are for Microsoft PowerPoint™ Professional Plus 2013). Action buttons were used to navigate between slides and were inserted by clicking on the “Insert” tab and selecting “Action Buttons” within the “Shapes” option. Once the Action button was inserted into the slide, a dialog box opened that enabled hyperlinking to a specific slide within the presentation. For multiple choice questions, answers were added as separate text boxes; each text box was highlighted, “Insert” was again selected, and the “Hyperlink” option was chosen. From the Hyperlink dialog box, “Place in This Document” was selected. At that point, each MCQ option was hyperlinked to the appropriate “correct” or “incorrect” answer slide within the presentation. Once the presentation was complete, enforcement of interactivity required additional steps. First, “on mouse click” must be deselected in the “Advance Slide” section of the “Transitions” tab and “Apply to All” selected. Under the “Slide Show” tab, “Set Up Slide Show” was chosen and the “Show Type” “Browse at a Kiosk” selected. Finally, the document must be saved as a “PowerPoint™ Show” or .pps file.

IPPs for Self-Directed Learning

IPPs were developed as material for “Cell Structure and Function,” a traditional lecture-based course for M1 students. The IPPs were made freely available to students on a secure education portal so that they could review them multiple times at their convenience. It was estimated that each IPP would take a maximum of 10 min to complete. The efficacy of these modules for knowledge acquisition was assessed by analyzing MCQ data from standard exams using a chi-squared test with Yates’ correction for continuity.
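As an illustration of this type of analysis, the following minimal Python sketch computes a Yates-corrected chi-squared statistic for a 2 × 2 table of correct and incorrect MCQ responses before and after the introduction of an IPP. The counts are hypothetical placeholders rather than data from this study, and the function name `yates_chi_squared` is an assumption introduced only for this example.

```python
import math


def yates_chi_squared(a, b, c, d):
    """Chi-squared test with Yates' continuity correction for a 2 x 2 table.

    Table layout (rows = cohort, columns = outcome):
        a = before/correct, b = before/incorrect
        c = after/correct,  d = after/incorrect
    Returns (statistic, p_value) for one degree of freedom.
    """
    n = a + b + c + d
    # Yates' correction subtracts n/2 from |ad - bc|, floored at zero.
    corrected = max(abs(a * d - b * c) - n / 2, 0.0)
    denominator = (a + b) * (c + d) * (a + c) * (b + d)
    statistic = n * corrected ** 2 / denominator
    # With one degree of freedom, the upper-tail probability is erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(statistic / 2))
    return statistic, p_value


# Hypothetical counts, NOT taken from the study.
statistic, p_value = yates_chi_squared(60, 40, 75, 25)
print(f"chi-squared = {statistic:.3f}, p = {p_value:.4f}")
```

The continuity correction makes the test slightly more conservative than the uncorrected statistic, which matters most when cell counts are small.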

Additional IPPs were generated in 2012 for the Genetics section of the same course, based on a series of practice problems designed to apply knowledge obtained in lecture. The same practice problems had been provided to M1 students in prior years as a written Word document, with hand-drawn answers posted upon the completion of the lecture material. A survey distributed to these two groups of students was designed to assess general learning preference, content organization, the level of feedback provided during the active learning session, level of confidence in the conceptual information, and skill development using a standard 5-point Likert scale (see Table 1 for survey questions). This study was assessed by the Institutional Review Board of Eastern Virginia Medical School and determined to be exempt from review. Students who elected to participate were asked to review one of the Practice Problems that they had received when they took the relevant M1 class and then asked to complete an online survey administered using Qualtrics software (Qualtrics, Provo, UT). In total, 259 students who had used the “paper” version of the Practice Problems (M3 and M4 students) and 292 students who had used the IPPs (M1 and M2 students) were asked to participate. A total of 93 students completed the survey (17 % overall participation rate), with 49 M3 and M4 students (19 %) and 44 M1 and M2 students (15 %). The responses for the two groups were assessed by question-based one-way ANOVA followed by Bonferroni’s multiple comparison test.
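The question-based comparison described above can be sketched in Python as follows. This is a minimal illustration only: the Likert responses are hypothetical, the helper names `one_way_anova_f` and `bonferroni_alpha` are introduced for this example, and the choice of four comparisons is an assumption rather than a detail reported here. Converting the F statistic to a p value requires the F distribution, which is left to a statistics package.

```python
from statistics import mean


def one_way_anova_f(groups):
    """Return (F, df_between, df_within) for a list of numeric groups."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation of the group means around the grand mean.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Variation of individual responses around their own group mean.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


def bonferroni_alpha(alpha, n_comparisons):
    """Per-comparison significance threshold under Bonferroni correction."""
    return alpha / n_comparisons


# Hypothetical 5-point Likert responses (1 = strongly disagree, 5 = strongly agree).
paper_group = [3, 4, 2, 3, 4, 3]
ipp_group = [4, 5, 4, 3, 5, 4]

f_stat, df_b, df_w = one_way_anova_f([paper_group, ipp_group])
print(f"F({df_b}, {df_w}) = {f_stat:.3f}")
print(f"Bonferroni threshold for 4 comparisons: {bonferroni_alpha(0.05, 4)}")
```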

IPPs for Peer-to-Peer Learning

IPPs were generated for the Medical Microbiology and Immunology course for use in blended learning sessions combining clinical case scenarios, pharmacology (the use of antibiotics), and laboratory identification of bacteria. Students completed the IPPs in class as a group and were provided with live specimens (e.g., agar plates, slides, and chemical sensitivity tests) used to identify the bacteria. After the labs were completed, the electronic cases were made available on a secure server for review. As part of the standard course evaluation, we polled the students for their opinions regarding the IPPs. Of 119 second year medical students, 69 participated in the course evaluation (response rate of 58 %).

Results and Discussion

General Description of IPPs

The IPPs were designed to break down problems or concepts into discrete sections, incorporating MCQs into each section. Incorrect MCQ answers were hyperlinked to slides that provided additional information to assist in answering questions and then hyperlinked back to the original question. Students were thus required to provide a correct answer in order to proceed to the next section of the IPP. Correct MCQ answers were hyperlinked to slides that gave additional feedback, reinforcing the reasoning behind the correct answer choice. In some cases, hyperlinks were added to images within the slides or links were used to provide definitions or access to relevant publications and web sites. A key aspect of the IPPs is the elimination of the opportunity for students to simply “scroll through” the presentation. Rather, the IPPs required active engagement by the students to complete the module.
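The hyperlink structure described above can be modeled as a small directed graph. The following Python sketch, using hypothetical slide names, checks the key design property: the next question is reachable only through the correct answer. It is a model of the navigation logic, not a tool for building the PowerPoint™ file itself.

```python
from collections import deque

# Hypothetical slide names; each slide maps a link label to its target slide.
slides = {
    "question_1": {"option_a": "q1_incorrect", "option_b": "q1_correct"},
    "q1_incorrect": {"try_again": "question_1"},  # only link leads back
    "q1_correct": {"next": "question_2"},         # feedback, then proceed
    "question_2": {},
}


def reachable(slides, start, blocked_link=None):
    """Breadth-first search over hyperlinks, optionally ignoring one link.

    blocked_link is a (slide, label) pair to treat as unavailable.
    """
    seen = {start}
    queue = deque([start])
    while queue:
        current = queue.popleft()
        for label, target in slides[current].items():
            if (current, label) == blocked_link:
                continue
            if target not in seen:
                seen.add(target)
                queue.append(target)
    return seen


# With all links available, the student can reach the next question.
print("question_2" in reachable(slides, "question_1"))
# Without the correct-answer link, the next question cannot be reached.
print("question_2" in reachable(slides, "question_1",
                                blocked_link=("question_1", "option_b")))
```

A check of this kind could help an author confirm that no stray action button lets students skip past a question in a longer module.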

As an example, we outline the IPP generated for the Genetics practice case used as the basis for our survey. This case was included in the course “Cell Structure and Function” for M1 students. The curriculum at Eastern Virginia Medical School (EVMS) at the time of the introduction of this material was organized primarily by discipline, with a heavy emphasis on didactic lectures. The Genetics section of the Cell Structure and Function course was the first in the curriculum to move to online lectures and modules, with supplementary small groups and including the IPPs. Thus, the IPPs were used in the context of a module that relied heavily on self-directed learning. Other IPPs were used in conjunction with standard didactic lectures, demonstrating the versatility of this approach. The original question for the IPP case, presented to the students as a Word document, was as follows:

“A man and a woman are carriers for maple syrup urine disease (MSUD). They are expecting their first child.

1. Draw the pedigree and indicate their genotypes using ‘A’ for the dominant allele and ‘a’ for the recessive allele.

2. What is the probability for their child to have MSUD?

3. If only one member of the couple was a known carrier for MSUD, how would that change the probability in part 2 (Assume they are both of non-consanguineous, Pennsylvanian Mennonite descent)?”

The complete IPP for this case has now been published [15]. In Fig. 1, we provide an outline of the organization of the IPP for this case. Note that this format allowed us to provide a link to an external reference with more information about the clinical manifestations of MSUD and the underlying causes of this disorder. Incorrect answers were linked to slides that provided either an explanation of why the answer was incorrect or more information so that the student could answer the question successfully, for example, a standard Punnett square for question 2 or a link to an external site to obtain information on carrier frequency for question 3. Within the incorrect answer slides, the phrase “Click here to try again” was hyperlinked to the slide containing the original question. Thus, students were unable to proceed with the case until the correct answer was selected, as no action button or hyperlink was included that enabled them to proceed directly to the next question from the incorrect answer slide. Correct answers were also hyperlinked to slides that provided an explanation of the answer to reinforce the concepts before proceeding to the next question.
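The reasoning behind questions 2 and 3 of the practice case can be sketched in Python. The sketch enumerates a standard Punnett square for an autosomal recessive condition and then scales the risk by a carrier frequency when only one parent is a known carrier. The function names are introduced for this example, and the carrier frequency shown is a hypothetical placeholder, not the actual value for any population; the second function also assumes the untested partner is unaffected.

```python
from fractions import Fraction
from itertools import product


def offspring_genotypes(parent1, parent2):
    """Enumerate a Punnett square; return {genotype: probability}."""
    outcomes = {}
    for allele1, allele2 in product(parent1, parent2):
        # Sorting puts the dominant allele 'A' before 'a', so "aA" becomes "Aa".
        genotype = "".join(sorted(allele1 + allele2))
        outcomes[genotype] = outcomes.get(genotype, 0) + Fraction(1, 4)
    return outcomes


def risk_with_unknown_partner(carrier_frequency):
    """Risk of an affected child when one parent is a known carrier.

    The other parent is a carrier with probability carrier_frequency.
    """
    both_carriers = offspring_genotypes("Aa", "Aa")["aa"]
    return carrier_frequency * both_carriers


# Question 2: both parents are carriers (Aa x Aa).
print(offspring_genotypes("Aa", "Aa"))

# Question 3: hypothetical carrier frequency, for illustration only.
print(risk_with_unknown_partner(Fraction(1, 10)))
```

Using exact fractions keeps the result in the form students would write by hand, such as 1/4 for two carrier parents.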

We believe that a key element of the success of the IPPs is the incorporation of immediate feedback for both correct and incorrect answers. Giving feedback to learners is important to reinforce learning, and feedback can take the form of verification and elaboration [16]. Verification is the information regarding the correctness of the learner’s answer, while elaboration is the process of providing information toward a correct answer. In this study, learners received feedback that provided both verification and elaboration of the correct answer. Further, it has been found that immediate delivery of feedback provides an instructional advantage [17] and is also more effective for decision-making tasks [18]. The decision-making tasks included in the pedigree construction IPPs were reinforced by the immediate feedback given to learners, which verified the correctness of their answers and elaborated on both the incorrect response and the correct answer. Finally, feedback is an essential part of self-regulated learning. The self-regulated learner understands the goal of learning, is motivated to learn, and takes control of their own learning [19]. Self-regulated learning is key to student achievement, and feedback is a primary determinant of self-regulated learning [20].

Effect of IPPs on Performance on MCQs

IPPs were generated for the Cell Structure and Function course for first year medical students as supplementary material, designed to enhance understanding of concepts that were traditionally hard to grasp in a lecture format. In one specific example, an IPP was generated that illustrated concepts associated with the orientation of transmembrane proteins. Figure 2a shows the responses to an MCQ related to this topic prior to (2011 and 2012) and after (2013 and 2014) introduction of the supplementary IPP by two groups of students: those enrolled in the MD class and students in the 1-year Medical Master’s program at EVMS, a post-baccalaureate program designed to enhance student competitiveness for medical and dental schools. There was a clear and statistically significant improvement in performance on the MCQ by the medical students (n = 438, p < 0.025). In addition, while not statistically significant, there was a clear trend toward improved performance on the MCQ by the Medical Master’s students after the introduction of the IPP (n = 173, p = 0.069). The reason for the difference between the two classes is unclear, although the smaller sample size of the Medical Master’s class may play some role. It should be noted in this case that all students received the material included in this supplementary IPP as part of the didactic lecture and that prior to 2013 the same material was presented solely in lecture format. This suggests that the improved performance on the exam question results from a subset of students utilizing the supplemental tool and therefore having a greater understanding of the topic.

Fig. 2 Student performance on MCQs before and after introduction of IPPs. a Average MCQ scores (±SD) for Medical (MD Class) and Medical Master’s students (MM Class) on the same MCQ before (2011–2012 (11/12)) and after (2013–2014 (13/14)) the introduction of a supplementary IPP to help explain membrane protein topology. b Average scores on the same MCQs for two topics taught in standard lecture format (2012, white bars) or using IPPs (2013, grey bars). Statistically significant differences in the mean are indicated.

IPPs were also developed for the same course to replace didactic lecture material for two specific topics: the function of the Lac Operon (topic 1) and iron regulation of gene expression (topic 2). In this case, students were informed that the material within the IPPs would be assessed on the exam. Importantly, our analysis revealed no difference in student performance on related MCQs whether the material was presented as an IPP or in a lecture format (Fig. 2b). Thus, our data show that IPPs are at least equivalent to didactic modes of content delivery and can even enhance student learning of difficult or complex concepts.

Use of IPPs for Knowledge Application

In 2012, IPPs were developed to replace a series of practice problems written for the Genetics curriculum, focused on the application of knowledge acquired in lecture. Prior to 2012, the practice problems were presented to students as a simple Word document, with hand-drawn answers provided at a later date. These practice problems addressed pedigree construction, genetic risk assessment, concordance, and the Hardy-Weinberg law. As identical learning material had been provided to medical students in two different formats, we developed a survey to directly assess the efficacy of the two modes of content delivery. The survey was designed to assess general learning preference, content organization, the level of feedback provided, level of confidence in the conceptual information, and skill development (Table 1). No significant trends were observed when assessing general learning preference (data not shown), although, perhaps surprisingly given generational trends, more students indicated a preference for working out problems on paper rather than using a computer.

For questions 2 to 5, each set of ANOVA analyses showed significant differences in the means among the groups. However, we focused our analysis on pairwise differences between the M1/M2 and M3/M4 groups for each question, as this provided a more robust data set. The second question in the survey was designed to assess students’ perception of how breaking down the practice problems into discrete sections affected their ability to complete the problem correctly (Table 1). Surprisingly, we found no significant differences in the pairwise comparisons between the M1/M2 and M3/M4 groups for any of these questions (Fig. 3a). Based on cognitive load theory [21], our expectation was that students would prefer the ability to work through the problems in discrete, manageable sections as afforded by the IPPs. One possible reason for this difference between our expectations and the survey results is that the sample practice problem provided to students at the time of the survey was relatively simple (Fig. 1), and more complex practice problems might reveal a more distinct benefit in this regard. Alternatively, due to the time elapsed and the additional training that our M3 and M4 students have undergone since their first year experience, these students may have a higher threshold for what would be considered a complex genetic risk assessment problem, and thus, breaking down the problem into sections would be of little benefit for them. In the future, we could perform a randomized controlled trial delivering the two modes of content to a matched set of students to distinguish any effects of the sectioning on learning.

Fig. 3 Student responses to survey questions shown in Table 1 regarding the use of IPPs in application of knowledge. The responses of M1/M2 students (black circles) and M3/M4 students (grey circles) to survey questions 2 (a), 3 (b), 4 (c), and 5 (d) detailed in Table 1 are shown as a dot-plot with the mean and standard deviation indicated. The students in the M1/M2 class had received identical learning material in the IPP format. Statistically different responses are indicated above each cluster of responses. The Likert scale for each question is indicated as strongly disagree (SD), disagree (D), neutral (N), agree (A), and strongly agree (SA).

Questions 3 and 5 of the survey assessed the efficacy of feedback and the impact on students’ perceptions of their ability to apply knowledge. While there were no differences in how students ranked feedback for the two methods of content delivery, there was a significant improvement in the students’ perceived ability to apply their knowledge when using the IPPs. Specifically, the students felt that the IPPs were a better modality to learn the appropriate tools to use when assessing genetic inheritance risk (Fig. 3b). The case sent to the students in advance of the survey relied heavily on the use of a Punnett square for this analysis. Again, students perceived an enhanced ability to apply this tool when using the IPPs (Fig. 3d). Perhaps of greater importance, the IPPs enhanced students’ understanding of the underlying principles that were applied in the practice problem (Fig. 3c). These principles were presented in the IPP as a component of feedback with the MCQs and thus represent a distinct advantage of the IPPs versus the previous format. This finding is also consistent with studies demonstrating that interactivity and feedback are important for enhanced learning outcomes for e-learning modules [1, 9].

Use of IPPs in Peer-to-Peer Learning

IPPs have also been developed for use in a peer-to-peer learning environment as a component of a Medical Microbiology course for M2 medical students. These laboratory sessions include IPPs with case studies that incorporate both Microbiology and Pharmacology and are designed for the students to work through in small groups. In addition, physical specimens (e.g., agar plates, slides, and chemical sensitivity tests) relating to each case study are provided for students to interpret. Importantly, the IPPs are made available after class for further review. Course evaluations show that almost all of the students either agreed or strongly agreed that this format (termed E-Labs) provided a positive learning experience (97 %) and enhanced their understanding of both the clinical presentation (96 %) and treatment of infectious disease (85 %, Table 2). Thus, the IPPs in the context of the E-Labs are an effective tool to integrate various disciplines. In regard to a preference for the E-Labs versus the traditional wet lab structure, the largest group of students was neutral (49 %), although 41 % of the students agreed or strongly agreed with the statement that the E-Labs were superior. This supports the notion that, at a minimum, the E-Labs are a valuable supplement to the traditional wet labs. In addition, a majority of students (82 %) felt that having the physical specimens available enhanced their learning experience. This finding is consistent with studies that show a preference for blended learning experiences [4–6]. Finally, the majority of students agreed that working through the E-Labs as a group enhanced their learning experience, and student comments included in the evaluations indicated that the ability to review the E-Labs after the in-class session was also useful as a study aid in preparation for exams.

Conclusions

It is clear that active learning and self-directed learning modalities play an important role in the retention and application of knowledge in medical education. In addition, computer-assisted learning (or e-learning) has become an increasingly utilized tool in medical education, and modules that incorporate active learning provide greater value to the learner. Various other software programs are available to develop interactive modules or exercises, but typically this software requires programming or video-editing skills. Examples include Flash programming and iBook development. Baatar and Piskurich developed an immune response interactive learning module using Flash [22]. Flash animation requires a level of skill not usually found in teaching faculty members, thus creating a barrier to adoption. Another example is an iBook that can be created with imaging, electronic flashcards, and questions to be answered afterward [23]. As with other multimedia tools, a specialized level of knowledge is required to develop resources using iBook. Other software such as Articulate Storyline can pose a financial barrier. With the IPPs, we have been able to develop unique self-directed, interactive learning modules to enhance medical education that are efficient, effective, and easy to prepare with minimal barriers to faculty adoption. To our knowledge, there has been only one other report of the use of IPPs in medical education [24]. While only 38 % of the students completed all three modules in that study, there was a significant increase from pre-test to post-test score (p < 0.001) and high student rankings for utility, user friendliness, and the overall learning experience. However, in that case, the IPPs were simply used to deliver educational content with a subsequent quiz on the content. In contrast, the IPP modules we have developed are novel in that the MCQs are incorporated into the module itself. Our data from exam questions show that the IPPs are at least as effective as lecture as a primary mode of content delivery and can enhance student performance. Results of our survey show significant increases in both comprehension of material and the perception of students in their ability to apply learning material. Finally, we show that IPPs can be used in multiple formats (content delivery, supplementary material, and in peer-to-peer learning) to enhance the student learning environment.

References

1. Greenhalgh T. Computer assisted learning in undergraduate medical education. BMJ. 2001;322(7277):40–4.

2. Lewis KO, Cidon MJ, Seto TL, Chen H, Mahan JD. Leveraging e-learning in medical education. Curr Probl Pediatr Adolesc Health Care. 2014;44(6):150–63. doi:10.1016/j.cppeds.2014.01.004.

3. Ruiz JG, Mintzer MJ, Leipzig RM. The impact of E-learning in medical education. Acad Med: J Assoc Am Med Coll. 2006;81(3):207–12.

4. Dankbaar ME, Storm DJ, Teeuwen IC, Schuit SC. A blended design in acute care training: similar learning results, less training costs compared with a traditional format. Perspect Med Educ. 2014;3(4):289–99. doi:10.1007/s40037-014-0109-0.

5. Daunt LA, Umeonusulu PI, Gladman JR, Blundell AG, Conroy SP, Gordon AL. Undergraduate teaching in geriatric medicine using computer-aided learning improves student performance in examinations. Age Ageing. 2013;42(4):541–4. doi:10.1093/ageing/aft061.

6. Sadeghi R, Sedaghat MM, Sha AF. Comparison of the effect of lecture and blended teaching methods on students’ learning and satisfaction. J Adv Med Educ Prof. 2014;2(4):146–50.

7. Hudson JN. Computer-aided learning in the real world of medical education: does the quality of interaction with the computer affect student learning? Med Educ. 2004;38(8):887–95. doi:10.1111/j.1365-2929.2004.01892.x.

8. Longmuir KJ. Interactive computer-assisted instruction in acid–base physiology for mobile computer platforms. Adv Physiol Educ. 2014;38(1):34–41. doi:10.1152/advan.00083.2013.

9. Triola MM, Huwendiek S, Levinson AJ, Cook DA. New directions in e-learning research in health professions education: report of two symposia. Med Teach. 2012;34(1):e15–20. doi:10.3109/0142159X.2012.638010.

10. Freeman S, Eddy SL, McDonough M, Smith MK, Okoroafor N, Jordt H, et al. Active learning increases student performance in science, engineering, and mathematics. Proc Natl Acad Sci U S A. 2014;111(23):8410–5. doi:10.1073/pnas.1319030111.

11. Prince M. Does active learning work? A review of the research. J Eng Educ. 2004;93(3):223–31.

12. DeBate RD, Cragun D, Severson HH, Shaw T, Christiansen S, Koerber A, et al. Factors for increasing adoption of e-courses among dental and dental hygiene faculty members. J Dent Educ. 2011;75(5):589–97.

13. Hendricson WD, Panagakos F, Eisenberg E, McDonald J, Guest G, Jones P, et al. Electronic curriculum implementation at North American dental schools. J Dent Educ. 2004;68(10):1041–57.

14. Zayim N, Yildirim S, Saka O. Instructional technology adoption of medical faculty in teaching. Stud Health Technol Inform. 2005;116:255–60.

15. Kerry J, Chisholm E. Self-directed active learning modules for pedigree analysis and genetic risk assessment. MedEdPORTAL Publications; 2015. doi:10.15766/mep_2374-8265.10172.

16. Kulhavy RW, Stock WA. Feedback in written instruction: the place of response certitude. Educ Psychol Rev. 1989;1(4):279–308.

17. Azevedo R, Bernard RM. A meta-analysis of the effects of feedback in computer-based instruction. J Educ Comput Res. 1995;13(2):111–27.

18. Jonassen DH, Hannum WH. Research-based principles for designing computer software. Educ Technol. 1987;27:7–14.

19. Paris S, Paris A. Classroom applications of research on self-regulated learning. Educ Psychol. 2001;36(2):89–101.

20. Butler DL, Winne PH. Feedback and self-regulated learning: a theoretical synthesis. Rev Educ Res. 1995;65(3):245–81.

21. Young JQ, Van Merrienboer J, Durning S, Ten Cate O. Cognitive load theory: implications for medical education: AMEE guide No. 86. Med Teach. 2014;36(5):371–84. doi:10.3109/0142159X.2014.889290.

22. Baatar D, Piskurich J. Immune response to an allergen/helminth: an interactive learning module. MedEdPORTAL Publications; 2015. doi:10.15766/mep_2374-8265.10158.

23. Hardesty L. Breast imaging fundamentals: an interactive textbook (iBook and PDF Versions). MedEdPORTAL Publications; 2015. doi:10.15766/mep_2374-8265.10169.

24. Gaikwad N, Tankhiwale S. Interactive E-learning module in pharmacology: a pilot project at a rural medical college in India. Perspect Med Educ. 2014;3(1):15–30. doi:10.1007/s40037-013-0081-0.

Acknowledgments

The authors would like to thank Stephen Buescher, MD, and Elizabeth Chisholm for provision of source material used to generate the E-Labs and Genetics practice problems, respectively.

Cite this article

Esquela-Kerscher, A., Krishna, N.K., Catalano, J.B. et al. Design and Effectiveness of Self-Directed Interactive Learning Modules Based on PowerPoint™. Med.Sci.Educ. 26, 69–76 (2016). https://doi.org/10.1007/s40670-015-0191-x

DOI: https://doi.org/10.1007/s40670-015-0191-x