Abstract

The current study evaluated the effectiveness of a mobile application, Camp Discovery, designed to teach receptive language skills to children with autism spectrum disorder based on the principles of applied behavior analysis. Participants (N = 28) were randomly assigned to an immediate-treatment or a delayed-treatment control group. The treatment group made significant gains, p < .001, M = 58.1, SE = 7.54, following 4 weeks of interaction with the application as compared to the control group, M = 8.4, SE = 2.13. Secondary analyses revealed significant gains in the control group after using the application and maintenance of acquired skills in the treatment group after application usage was discontinued. Findings suggest that the application effectively teaches the targeted skills.

Advancements in technology continue to transform day-to-day living for individuals across populations, including individuals with autism spectrum disorder (ASD). The use of technology to assist individuals with ASD has been investigated in a variety of capacities, including augmentative and alternative communication (Still, Rehfeldt, Whelan, May, & Dymond, 2014); prompting tools to assist with organization, self-management, time management, and task completion (Cihak, Wright, & Ayres, 2010; Gentry, Wallace, Kvarfordt, & Lynch, 2010; Riffel et al., 2005); and picture, audio, and video modeling to train a variety of skills (Mechling, Gast, & Seid, 2009). Preliminary research has also shown potential for computer-based intervention (CBI), which is software developed to provide treatment via built-in mechanisms, such as instructional tools, immediate feedback, and data collection (Khowaja & Salim, 2013; Knight, McKissick, & Saunders, 2013; Pennington, 2010; Ramdoss, Lang, et al., 2011; Ramdoss, Mulloy, et al., 2011; Ramdoss et al., 2012).

There are a number of advantages to using CBI to assist individuals with ASD. First, computers and mobile devices are fairly accessible and affordable (Kagohara et al., 2013; Knight et al., 2013; Ramdoss, Lang, et al., 2011). Next, individuals with ASD may exhibit increased attention and motivation (Moore & Calvert, 2000), as well as fewer stereotypic behaviors, while interacting with CBI than during traditional one-to-one instruction (Knight et al., 2013; Pennington, 2010). Other advantages of CBI include the portability of devices and ease of customization to meet individual needs (Kagohara et al., 2013; Knight et al., 2013). Finally, because computers and mobile devices are commonplace within community settings, these devices are less stigmatizing to individuals with ASD (Kagohara et al., 2013; Mechling, 2011; Ramdoss, Lang, et al., 2011).

CBI may offer additional advantages for providing treatment to individuals with ASD. Comprehensive applied behavior analysis (ABA) programs are generally conducted in a combination of one-to-one and group formats, at a high intensity (e.g., between 30 and 40 h per week), for multiple years (Behavior Analyst Certification Board, 2014; Eldevik et al., 2009; Reichow, Barton, Boyd, & Hume, 2012). CBI may be a viable, cost-effective approach to augmenting portions of treatment. CBI may help clinicians design more efficient treatment plans by enabling certain skills to be taught via CBI methods, allowing behavioral therapists to focus on skills that require in-person treatment. In addition, parents and teachers can take advantage of every available hour by having their children or students learn through CBI programs during times when behavioral therapists are not available.

Some disadvantages and clinical considerations to using CBI in clinical and educational settings have been suggested as well. For example, the use of CBI may be isolating, limiting the opportunities for social interaction and verbal communication for individuals with ASD (Khowaja & Salim, 2013; Pennington, 2010; Ramdoss, Lang, et al., 2011; Ramdoss, Mulloy, et al., 2011). However, Pennington (2010) suggests that providing structured access to CBI in group settings may reduce the potential for isolation. Devices may also be misused by individuals with ASD to engage in restricted, repetitive behaviors (Ramdoss, Lang, et al., 2011; Ramdoss, Mulloy, et al., 2011). Supervised use of CBI may help mitigate these risks. For example, King, Thomeczek, Voreis, and Scott (2014) conducted an exploratory observation on the use of iPad® tablets in a classroom for six students with ASD and found educator presence to increase appropriate academic application usage by 20%. Another concern is the potential for challenging behavior upon removal of a device, although behavioral therapists and parents may be trained to manage such behaviors as they would with problem behaviors maintained by access to any preferred item. Finally, although research in the area is limited, increased screen time has been linked to metabolic (Boone, Gordon-Larsen, Adair, & Popkin, 2007; Hardy, Denney-Wilson, Thrift, Okely, & Baur, 2010) and sleep concerns (Hale & Guan, 2015), emphasizing the need for supervised and restricted access.

Although there is a substantial amount of anecdotal support for the use of CBI in teaching individuals with ASD, research in the area is limited (Knight et al., 2013). A number of literature reviews have explored the use of CBI to teach a variety of skills to children with ASD, including academic skills (Knight et al., 2013; Pennington, 2010), communication skills (Ramdoss, Lang, et al., 2011), literacy skills (Ramdoss, Mulloy, et al., 2011), reading comprehension (Khowaja & Salim, 2013), and social skills (Ramdoss et al., 2012). Although preliminary evidence for CBI shows promise, there are a number of limitations with existing research. First, there are few studies investigating CBI (Pennington, 2010; Ramdoss, Lang, et al., 2011). Second, existing studies include small sample sizes (Khowaja & Salim, 2013; Knight et al., 2013; Ramdoss, Lang, et al., 2011) and lack experimental control (Khowaja & Salim, 2013; Knight et al., 2013; Pennington, 2010). Furthermore, a large portion of CBI research investigates products that are not currently available (i.e., software was designed specifically for the study, software has been discontinued; Ramdoss, Lang, et al., 2011). Other studies involve available software that would require user modifications in order to be implemented as an intervention (e.g., Microsoft PowerPoint®; Ramdoss, Lang, et al., 2011; Ramdoss, Mulloy, et al., 2011). Thus, there is a paucity of research investigating software that is currently on the market and specifically designed to teach skills (Kagohara et al., 2013; King et al., 2014; Ramdoss, Lang, et al., 2011). Two commercially available products have some empirical support. Baldi® is an interactive, computer-animated tutor designed to teach vocabulary to children with ASD (Bosseler & Massaro, 2003; Massaro & Bosseler, 2006). TeachTown® is a computer program that incorporates the principles of ABA (Whalen, Liden, Ingersoll, Dallaire, & Liden, 2006; Whalen et al., 2010). Although these products show promise, evaluation of other commercially available software is warranted.

Despite the growing popularity of mobile devices and the overwhelming number of mobile applications available on the marketplace, there remains a lack of research on applications used to teach children with ASD (Kagohara et al., 2013; Knight et al., 2013). Furthermore, it is difficult for consumers to gauge the degree to which these applications were developed from known principles of learning. The Center for Autism and Related Disorders (CARD) recently developed an application, Camp Discovery, designed to address the specific learning needs of individuals with ASD using the principles of ABA (Cooper, Heron, & Heward, 2007). Although Camp Discovery is based on evidence-based principles of learning, no research has been conducted to evaluate if the mobile application is an effective teaching tool. Thus, the purpose of the present study is to investigate the effectiveness of Camp Discovery in teaching receptive language skills across a variety of domains to children with ASD.

Methods

Participants and Setting

Participants were children with ASD receiving ABA services from a community-based behavioral health agency, which operates centers in multiple states. Participants were required to meet the following inclusion criteria: (a) have a diagnosis of ASD (American Psychiatric Association, 2013), autistic disorder (AD; American Psychiatric Association, 2000), pervasive developmental disorder–not otherwise specified (PDD-NOS; American Psychiatric Association, 2000), or Asperger’s disorder (AsD; American Psychiatric Association, 2000) by a licensed professional (e.g., licensed psychologist, pediatrician, neurologist); (b) be 1 to 8 years old; (c) have a minimum of 100 unknown targets covered in the mobile application; (d) have demonstrated fine motor ability sufficient to independently hold and operate an iPad® tablet; (e) have no parent-reported visual or auditory impairments; and (f) have English as their primary household language.

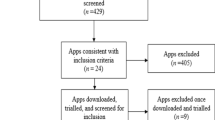

All procedures performed in this study, including methods for recruitment, underwent institutional review board approval. Caregivers of children receiving ABA services from seven geographically diverse treatment centers were contacted if their child was within the targeted age range and if their child’s supervising clinician judged the mobile application to be appropriate for the child based on his or her level of functioning. Sixty caregivers were approached, of whom 45 decided to enroll their child in the study. A total of five participants were excluded from the study because they demonstrated mastery of a substantial portion of the content covered in the mobile application, indicating that they would likely not benefit significantly from the protocol. An additional 12 participants were dropped from the study due to availability-related limitations (i.e., sessions could not be conducted regularly due to scheduling conflicts). Figure 1 depicts the flow of participants through each stage of the study.

A total of 28 children completed the study. Participants included 24 males and 4 females. The average age of the participants was 69.29 months old, ranging from 30 to 107 months. Participants in this study resided in the states of California, Louisiana, and New York. Participant information and demographic variables are provided in Table 1. Twenty participants had a diagnosis of AD, seven had a diagnosis of ASD, and one had a diagnosis of PDD-NOS. According to the Diagnostic and Statistical Manual of Mental Disorders, Fifth Edition, individuals with well-established diagnoses of AD, AsD, or PDD-NOS should be given the diagnosis of ASD (American Psychiatric Association, 2013). In addition, all 28 participants were verbal, according to the criterion defined by Lord, Risi, and Pickles (2004), which was verified via a clinical records review. As a part of their normal clinical services, all 28 participants received standardized testing that involved the Vineland-II (Sparrow, Cicchetti, & Balla, 2005) and a language assessment, including the Peabody Picture Vocabulary Test, Fourth Edition (Dunn & Dunn, 2007); the Rossetti Infant-Toddler Language Scale (Rossetti, 1990); Preschool Language Scales, Fifth Edition (Zimmerman, Steiner, & Pond, 2011); Mullen Scales of Early Learning (Mullen, 1995); the Test of Language Development–Primary, Fourth Edition (Hammill & Newcomer, 2008); or the Receptive-Expressive Emergent Language Test, Third Edition (Bzoch, League, & Brown, 2003).

Probes and intervention sessions were conducted by a research assistant in the participant’s home or at the participant’s treatment center, whichever site was more convenient for the participant. Probes took anywhere between 1 and 3 h and were conducted across one or multiple sessions performed within a 1-week period. Treatment sessions occurred for 3 h per week (i.e., three 1-h sessions) for 4 weeks, separate from ongoing ABA sessions. Research assistants were practicing behavioral therapists who received extensive training in ABA treatment for ASD. Training included 15 h of e-learning and 25 h of classroom-style lecture and role-play, followed by an exam to test competency of training material. The research assistants also completed at least 24 h of supervised fieldwork and underwent a field evaluation. For a more detailed description of the behavioral therapist training, see Granpeesheh, Tarbox, Najdowski, and Kornack (2014).

Mobile Application

Camp Discovery is a mobile application available for iOS and Android phones and tablets (CARD, 2018). The application incorporates modified discrete-trial training (DTT) procedures and other behavioral principles of ABA to teach receptive language targets across different lessons. The application uses modified DTT procedures to introduce and randomly rotate targets with rules that dictate when a target is considered mastered. The DTT procedures, based on those described by Granpeesheh et al. (2014), were adapted for a gameplay format. In Camp Discovery, learning rounds are made up of numerous trials, in which a user is asked to identify a specific target (e.g., “Touch happy.”). Variations of the instruction are given (e.g., “Touch happy,” “Find happy,” and “Which is happy?”) from trial to trial for generalization purposes. Following each instruction, users are given a response time of 5 s to identify the target. Depending on the lesson, two or three targets are introduced in a given learning round. Each target is first introduced alone via mass trial. Prompting is used when each target is introduced, which involves manipulating the size of the target stimuli on the screen. A most-to-least prompting schedule is applied starting with an extra-large image for high-level prompts, followed by a large image for low-level prompts, then a standard-sized image for no prompt. Once the first target is successfully introduced via mass trial (i.e., it is identified correctly in three consecutive trials), the second target is introduced via mass trial. After the second target is successfully introduced, the two targets are randomly rotated, meaning the instruction presented in the trials will switch between these targets at random.

Each target is presented in a field of two stimuli (i.e., with a single distractor). Distractors are randomly selected from a pool of stimuli in a given lesson, which may include the other targets from the current learning round, previously mastered targets, or targets that will appear in later learning rounds. The most-to-least prompting schedule discussed previously is applied during the initial trials of random rotation. To complete random rotation, both targets must be identified correctly in two consecutive trials with no prompting present. For some lessons (e.g., Opposites), learning rounds end here, after the targets are successfully identified in a field of two. For these lessons, a contrast between two stimuli (e.g., big vs. small) is necessary. For the majority of lessons, a third target is introduced via mass trial. Once successfully introduced, the third target is randomly rotated with the initial two targets. Each target is presented in a field of three stimuli (i.e., with two additional distractors). To complete the learning round, and master the targets, each target must be identified correctly in two consecutive trials with no prompting present. Figure 2 includes screenshots from the Camp Discovery application that depict some of the processes described previously.

Figure 2. Screenshots from the Community Helpers lesson depicting (a) mass trial with high-level prompting, (b) random rotation with one distractor and low-level prompting, and (c) random rotation with two distractors and no prompting. Screenshots also show the different stimuli used for the target (i.e., doctor) for generalization.

Throughout the learning round, both reinforcement and error correction are provided to support users. Reinforcement is provided for all correct responses in the form of verbal praise via voice-overs (e.g., “Amazing,” “Fantastic,” “Good work”) and visual animations that occur across the screen with accompanying sound effects. Although the type of reinforcement provided (i.e., visual animation with sound effect) is limited, a preference assessment is administered prior to learning rounds to individualize the animations that occur for reinforcement. The preference assessment involves a virtual switchboard, where the user can interact with each animation. The animations selected most frequently by the user during the preference assessment appear as reinforcement during the learning round. Additional motivation is provided in the form of minigames following each learning round. Interactive and visually stimulating minigames (e.g., balloon pop, fireworks) may be played for a duration of 1 min. In addition to reinforcement, an error-correction procedure is used throughout each learning round to support users if they answer incorrectly or do not answer at all. First, verbal feedback is given via a voice-over (e.g., “That’s not it,” “Not quite,” “Try again,” “Time’s up”). Then, in subsequent trials, the size of the target stimuli is manipulated, as described previously, to prompt the user to answer correctly. If an incorrect response occurs on a low-level prompt or when no prompt is present, most-to-least prompting will be applied in the following trials beginning with the high-level prompt, followed by the low-level prompt, then no prompt. If an incorrect response occurs on a high-level prompt, the target is sent back into mass trial. The target will then move through the prompting schedule in mass trial and again in random rotation.
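The prompt-fading and error-correction rules described above can be summarized as a small state-transition function. The following is only an illustrative sketch, not the application's actual code: the names `Prompt` and `next_step` are hypothetical, and the sketch assumes that each correct response fades the prompt by one level. It does not model the mastery criteria that move a target from mass trial into random rotation.

```python
from enum import IntEnum


class Prompt(IntEnum):
    """Prompt levels, expressed through the on-screen size of the target."""
    NONE = 0   # standard-sized image (no prompt)
    LOW = 1    # large image (low-level prompt)
    HIGH = 2   # extra-large image (high-level prompt)


def next_step(phase, prompt, correct):
    """Return (phase, prompt) for the next trial of the same target.

    phase is "mass_trial" or "random_rotation" (hypothetical labels).
    Assumption: a correct response fades the prompt one level
    (most-to-least); the source does not spell out the fading step size.
    """
    if correct:
        # Fade HIGH -> LOW -> NONE; stay at NONE once unprompted.
        return phase, Prompt(max(int(prompt) - 1, 0))
    if prompt == Prompt.HIGH:
        # Error on a high-level prompt: the target returns to mass trial.
        return "mass_trial", Prompt.HIGH
    # Error on a low-level prompt or no prompt: restart at the high-level prompt.
    return phase, Prompt.HIGH


# Example: an error with no prompt during random rotation restarts prompting.
print(next_step("random_rotation", Prompt.NONE, correct=False))
```

Under these assumptions, an error never lowers the amount of support on the next trial; it either restarts the prompting schedule within the current phase or, after an error on the strongest prompt, returns the target to mass trial.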

There are a wide variety of lessons, including Objects, Colors, Community Helpers, Body Parts, Numbers, Emotions, Shapes, Actions, Locations, Letters, Gender, Wh- Discrimination, Counting and Quantities, Quantitative Concepts, Quantitative Comparisons, Prepositions, Functions, Plurals, Features, What Goes With, Categories, Negation, Attributes, Sight Words, Sound Discrimination, Telling Time, Money, Describe, Opposites, Daily Activities, Adverbs, Ordinal Numbers, Calendar, Phonics, and more. Each lesson includes different levels that address matching, sorting, and/or receptive language skills. The levels focusing on receptive language skills were evaluated in the present study.

Experimental Design and Group Assignment

A randomized, controlled design was used in the present study. Participants were randomly assigned to either an immediate-treatment (IT) group or delayed-treatment control (DTC) group. As such, all participants received treatment individually via the mobile application, and the participants in the DTC group were not deprived of any potential learning benefits. A total of 15 participants were assigned to the IT group and 13 were assigned to the DTC group.

After an initial probe (i.e., pretreatment probe, described in the Procedures section), the IT group began interacting with the mobile application (described in detail in the Procedures section), whereas the DTC group continued with treatment as usual with no manipulations. After 4 weeks, both groups received a second probe (i.e., posttreatment for the IT group; pretreatment for the DTC group) to determine if learning took place in the presence or absence of the mobile application. Following the probe, the DTC group entered the treatment phase while the IT group discontinued use of the mobile application, receiving only treatment as usual. After 4 weeks, both groups were administered a final probe to determine if any learning occurred (i.e., DTC group), as well as to evaluate whether previously acquired skills were maintained (i.e., IT group) without access to the mobile application.

Dependent Measure and Interobserver Agreement

The dependent measure was performance during a probe of targets conducted pre- and posttreatment. The targets, or learning objectives, measured were single receptive labels (see Table 2 for examples of lessons and targets). Probes provide a brief baseline measure of a child’s skill level (Granpeesheh et al., 2014). The number of learned targets was used to measure short-term outcomes because standardized language assessments are not designed to detect rapid changes at short intervals (Granpeesheh, Dixon, Tarbox, Kaplan, & Wilke, 2009).

Interobserver agreement data were collected for 8 of the 28 total participants (i.e., 28.57%) during each of their three probe sessions. Two observers (i.e., trained research assistants) simultaneously but independently scored all participant responses during each probe session. Percentage of agreement was calculated by dividing the total number of agreements by the total number of agreements plus disagreements and multiplying by 100 (Kazdin, 1982). Overall, interobserver agreement averaged 99.09% for all sessions and ranged from 97.94% to 100%.
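For readers who wish to reproduce the agreement calculation, a minimal sketch follows. The function name and the example counts are hypothetical and are not taken from the study data; only the formula (agreements divided by agreements plus disagreements, multiplied by 100) comes from the text.

```python
def percent_agreement(agreements, disagreements):
    """Point-by-point interobserver agreement, as a percentage."""
    total = agreements + disagreements
    if total == 0:
        raise ValueError("at least one scored response is required")
    return 100.0 * agreements / total


# Hypothetical probe session: observers agree on 97 of 100 scored responses.
print(percent_agreement(97, 3))  # 97.0
```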

Procedures

Target Selection

Each participant’s supervising clinician was consulted prior to the probes to determine potential skill areas that were appropriate for instruction based on the participant’s level of functioning and did not overlap with current or foreseeable treatment objectives that would be addressed during the course of this study. Because each participant was receiving ABA services at the time of the study, procedures were followed to ensure that targets addressed by the mobile application were not simultaneously targeted within treatment as usual. First, supervising clinicians were kept blind to the initial probe results and the specific targets selected to address using the mobile application. Second, supervisors were instructed to report any changes made to a participant’s treatment plan to the study investigators over the course of the study. If at any point during the study a participant’s treatment-as-usual objectives overlapped with targets being addressed by the mobile application, the participant’s data would have been excluded from analysis. This potential conflict did not arise for any participant. Participants’ parents were also kept blind to the probe results and the specific targets addressed throughout treatment. Parents were instructed not to use the mobile application with their child outside of treatment sessions. Although efforts were made to ensure that participants’ targets did not overlap with their ongoing treatment objectives in home and clinical settings, the current study did not control for potential learning in other settings (e.g., school).

Pre- and Posttreatment Probes

The research assistant provided an instruction (e.g., “Touch happy”) and presented a field of stimuli (i.e., correct stimulus, one or two distractors, depending on the lesson). The same stimuli used in the mobile application were printed and used during the probe sessions. Stimuli were approximately 3 in. × 3 in. (7.6 cm × 7.6 cm) and included either illustrations or photographs, depending on the lesson. Probes were conducted lesson by lesson, and the order in which lessons and targets were probed was random. During probe sessions, reinforcement was not provided for correct responses nor was corrective feedback provided for incorrect responses. Reinforcement was only provided for maintaining attention and exhibiting appropriate behavior. Reinforcers were identified via a preference assessment conducted by the research assistant.

Each target was probed across at least three trials to determine whether it was known or unknown. Targets with probe scores of 0/3 or 1/3 were considered unknown. Scores of 3/3 were considered known. Two additional trials were performed for targets with scores of 2/3, in which case scores of 2/5 or 3/5 were considered unknown and scores of 4/5 were considered known. Each participant experienced three total probe sessions. The first probe was used to identify approximately 100 unknown targets that were covered within the mobile application’s learning content. Subsequent probes assessed the targets identified as unknown during the initial probe.
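The scoring rule above can be expressed as a short decision function. This is a sketch under the assumption that exactly three initial trials are scored and that two further trials are run only after a 2/3 score; the function and argument names are hypothetical.

```python
def classify_target(first_three, extra_two=None):
    """Classify a probed target as "known" or "unknown".

    first_three: three booleans, True for each correct initial trial.
    extra_two:   two booleans for the additional trials, required only
                 when the initial score is 2/3.
    """
    if len(first_three) != 3:
        raise ValueError("expected exactly three initial trials")
    score = sum(first_three)
    if score == 3:
        return "known"        # 3/3
    if score <= 1:
        return "unknown"      # 0/3 or 1/3
    # Score of 2/3: two additional trials decide the outcome.
    if extra_two is None or len(extra_two) != 2:
        raise ValueError("a 2/3 score requires two additional trials")
    total = score + sum(extra_two)
    return "known" if total == 4 else "unknown"   # 4/5 known; 2/5 or 3/5 unknown


print(classify_target([True, True, False], [True, True]))   # known
print(classify_target([True, True, False], [True, False]))  # unknown
```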

Treatment: Camp Discovery

Prior to a treatment session, the research assistant set up and customized the participant’s Camp Discovery account. Application settings were adjusted to ensure that the participant only worked on the targets identified as unknown during his or her initial probe. That is, if a participant had both known and unknown targets within a given lesson, known targets were disabled within the application settings to ensure that only unknown targets would be introduced during treatment.

To initiate a treatment session, the research assistant handed the participant the iPad® tablet with Camp Discovery open and instructed the participant to select one of the target lessons. If the participant did not independently select a lesson, the research assistant arbitrarily selected one of the participant’s target lessons. The research assistant did not provide prompting or error correction for incorrect responses nor reinforcement for correct responses; however, the research assistant did provide reinforcement for positive behaviors (e.g., focusing, listening, interacting with the application appropriately). If the participant was nonresponsive for three consecutive trials or was using the application or device inappropriately, the research assistant provided the least intrusive prompt to redirect the participant’s attention to the application (e.g., verbal, gestural, physical prompt). If a participant lost interest in or became frustrated with a lesson (e.g., engaged in noncompliance, tantrum, or whining behavior), the research assistant prompted the participant to select a different target lesson. Participants were not required to spend a specific amount of time on any one lesson. That is, if a participant sustained attention on a particular lesson, the participant worked on a single lesson for the duration of the treatment session. Finally, breaks were provided, as needed, to keep the participant engaged throughout each treatment session. Breaks lasted no longer than 5 min, during which time participants could engage in a preferred activity unrelated to Camp Discovery. There was no limit on the number of breaks a participant could take during a given treatment session.

Results

Data were analyzed using SPSS® (Version 23). Groups did not significantly differ on age, t(26) = 0.96, p = .35; proportion male, χ²(1) = 1.53, p = .22; diagnosis, χ²(2) = 1.01, p = .61; or any of the standardized assessments, suggesting that the groups were equivalent at the start of the study.

A difference score was calculated for each participant by subtracting the number of unknown targets in Probe 2 from the number of unknown targets in Probe 1. This yielded the number of targets that the participant had not known at the start of the study but had mastered by the second probe. On average, participants in the IT group learned more targets, M = 58.1, SE = 7.5, than participants in the DTC group, M = 8.4, SE = 2.1. As shown in Table 3, this difference was significant, t(16.2) = 6.34, p < .001, and revealed a large effect size, d = 2.33 (Cohen, 1988). Although not a part of the primary analysis, a dependent-samples t-test was run to evaluate change within the DTC group following treatment. As a group, the number of unknown targets decreased between Probe 2, M = 105.15, SE = 4.53, and Probe 3, M = 54.77, SE = 6.35. This difference was significant, t(12) = 6.81, p < .001, and showed a large effect size, d = 2.53. To evaluate maintenance of learned targets, a dependent-samples t-test was run to evaluate change within the IT group following the maintenance phase. On average, IT participants lost 1.1 previously mastered targets during the 1-month delay; the number of unknown targets was M = 48.6, SE = 8.1, at Probe 2 and M = 49.7, SE = 8.5, at Probe 3. This loss was not statistically significant, t(14) = −0.48, p = .64. Figure 3 depicts the average number of unknown targets for each group at Probes 1, 2, and 3.
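The between-group comparison can be checked approximately from the summary statistics alone. The sketch below assumes an unequal-variances (Welch) t-test, which the fractional degrees of freedom suggest, and uses the means and standard errors given in the abstract with the group sizes from the design section. The effect-size formula (mean difference divided by the root mean of the two group variances) is an assumption; the text does not state which variant of Cohen's d was used. The function name is hypothetical.

```python
import math


def welch_from_summary(m1, se1, n1, m2, se2, n2):
    """Welch t, Welch-Satterthwaite df, and Cohen's d from means and SEs."""
    v1, v2 = se1 ** 2, se2 ** 2           # squared standard errors (s^2 / n)
    t = (m1 - m2) / math.sqrt(v1 + v2)
    df = (v1 + v2) ** 2 / (v1 ** 2 / (n1 - 1) + v2 ** 2 / (n2 - 1))
    # Recover group SDs from the SEs, then average the variances
    # (assumed denominator for d; other conventions give other values).
    sd1, sd2 = se1 * math.sqrt(n1), se2 * math.sqrt(n2)
    d = (m1 - m2) / math.sqrt((sd1 ** 2 + sd2 ** 2) / 2)
    return t, df, d


# IT group: M = 58.1, SE = 7.54, n = 15; DTC group: M = 8.4, SE = 2.13, n = 13.
t, df, d = welch_from_summary(58.1, 7.54, 15, 8.4, 2.13, 13)
print(round(t, 2), round(df, 1), round(d, 2))  # 6.34 16.2 2.33
```

Under these assumptions the recomputed values agree with the reported t(16.2) = 6.34 and d = 2.33 to rounding, although this does not confirm the exact procedure run in SPSS.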

Discussion

The present study evaluated the effectiveness of a mobile application designed to teach skills to children with ASD using the principles of ABA. Participants made significant gains following 12 h of CBI gameplay over the course of 4 weeks. Furthermore, participants maintained the acquired skills following a 1-month delay without additional access to the application. The results of the present study have several implications for ASD treatment. Like previous research studies (Bosseler & Massaro, 2003; Whalen et al., 2010), CBI was shown to be effective in teaching receptive language skills to children with ASD. Further, the current findings show that participants demonstrated relatively high rates of learning over a short duration (i.e., 12 h in 1 month). Finally, CBI may increase the efficiency and accessibility of treatment for ASD. Each of these implications will be discussed in turn.

Study participants mastered an average of 4.54 targets per hour of gameplay. This is a relatively high rate of mastery when contrasted to in-person ABA programs, which have shown average rates of mastery to be approximately 0.1 to 0.25 learning objectives per hour (Dixon et al., 2016; Granpeesheh et al., 2009; Linstead et al., 2017). Although these rates of mastery are target level and include receptive language-learning objectives, like those included in the current study, it should be noted that they also include a variety of learning objectives with varying degrees of difficulty, ranging from fundamental to advanced, which could impact the time required for mastery. Nevertheless, the difference in the reported rates of mastery is considerable. There are multiple factors that may account for an increased rate of learning with CBI. First, it has been suggested that CBI automates processes that are often considered cumbersome to conduct, including delivery and fading of prompts, reinforcement, and data collection (Ramdoss, Lang, et al., 2011). It is possible that this automation removes the potential for human error and may enable untrained individuals to deliver instruction (Pennington, 2010). Research investigating whether CBI improves treatment integrity as compared to human-delivered treatment is warranted. It is also possible that individuals with ASD may find the multisensory experience of CBI reinforcing (Pennington, 2010). Given the noted high rate of mastery, CBI may increase the overall efficiency of ASD treatment interventions.
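The text does not state how the rate of 4.54 targets per hour was derived. One plausible reconstruction, offered here only as an assumption, pools the mean gains of both groups during their respective treatment phases (weighted by group size) and divides by the 12 h of gameplay. The variable names are illustrative.

```python
# Mean targets gained during each group's treatment phase.
n_it, gain_it = 15, 58.1                # IT group: Probe 1 to Probe 2
n_dtc, gain_dtc = 13, 105.15 - 54.77    # DTC group: unknown targets, Probe 2 minus Probe 3

hours = 3 * 4                           # 3 h per week for 4 weeks

# Size-weighted mean gain across all 28 participants, then gain per hour.
mean_gain = (n_it * gain_it + n_dtc * gain_dtc) / (n_it + n_dtc)
rate = mean_gain / hours
print(round(rate, 2))  # 4.54
```

This pooled calculation matches the reported figure to two decimals; a per-participant average of individual rates would be an equally reasonable method and cannot be ruled out from the summary statistics.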

With the increased prevalence of ASD (Autism and Developmental Disabilities Monitoring Network, 2016; Zablotsky, Black, Maenner, Schieve, & Blumberg, 2015), there is a lack of well-qualified clinicians (e.g., Board Certified Behavior Analysts, speech-language pathologists) able to provide treatment for children with ASD (Love, Carr, Almason, & Petursdottir, 2009). This creates a need for alternative means by which to augment treatment. CBI applications available on mobile devices remove the barrier that access to local clinicians presents. These applications bring ASD treatment to wherever the device is located.

There are limitations to the present study. First, the small sample size should be noted, as this will limit generalizability. However, the sample size in the present study (N = 28) is among the largest to be used in a randomized, controlled trial of CBI for teaching skills to individuals with ASD. Further, the present study did not control for differing levels of ASD severity and had a relatively wide range in participant age. These factors may have an impact on how individuals with ASD respond to the application. It is possible that participants with lower ASD severity mastered more targets because of child-specific traits (e.g., age, IQ, language skills), rather than the treatment alone. However, when individual participant performance is reviewed, it is evident that all participants mastered targets in response to the treatment. Finally, the lack of treatment fidelity data collected during treatment sessions is a significant limitation of the current study. Future researchers should include these data to strengthen the reliability of their results.

Although CBI applications ideally would be used without supervision by a clinician, to ensure treatment integrity within the present study, a trained research assistant was present at all sessions to help maintain focus and manage challenging behaviors. Some participants in this study did exhibit challenging behaviors (e.g., self-stimulatory behavior, noncompliance) that required redirection. Although the current findings suggest that the teaching procedures incorporated into the application are effective with one-to-one behavior management, it is unknown whether similar gains would be achieved if a child were to use the application with little or no supervision. The current findings are preliminary, and more research is needed to determine whether the application could be used to successfully supplement or augment traditional one-to-one treatment. If supervision is needed to maintain the application’s efficacy, it is possible that a parent with behavior management training could supervise CBI from home to supplement treatment. It may also be the case that the application could be effectively used with very little supervision, which would enable delivery of treatment to more than one individual per clinician. That is to say, CBI methods may allow one behavioral therapist to oversee multiple children working independently on their individualized lessons. These are all empirical questions for future research.

In addition, future studies should evaluate how well the acquired skills from the application generalize to other stimuli found in the natural environment. The present study implemented multiple stimuli per target to increase generalization, but it is unclear if participants applied the learned skills to the natural environment. It is also important to consider CBI versus in-person ABA treatment. If CBI is found to show a higher rate of mastery at a lower intensity and duration for specific skills when compared to in-person treatment, then clinicians can design more effective treatment plans and allocate in-person treatment hours to target skills that cannot be taught through CBI. Overall, this would increase the efficiency of ASD treatment and optimize outcomes.

CBI research has primarily focused on basic literacy skills, including matching, receptive identification, and spelling (Knight et al., 2013; Pennington, 2010). A challenge for future CBI development is to target more complex skills, which currently require highly trained therapists. Other technologies, such as virtual reality (Yang et al., 2018) and robotics (Diehl, Schmitt, Villano, & Crowell, 2012; Villano et al., 2011), are beginning to be harnessed in the hopes of targeting these more advanced skills (e.g., social skills). By applying known principles of behavior with these advances in technology, CBI to supplement treatment for complex skills is likely within reach. There will certainly always be a need for in-person treatment, especially considering the social interaction and social communication deficits that make up the core symptoms of ASD. With that said, as technology advances, there may be aspects of treatment that no longer require one-to-one attention. Although the present findings are preliminary, they add to the current research indicating that CBI may be a viable, cost-effective method of teaching receptive language skills to individuals with ASD. If more evidence-based CBI programs are available via mobile applications, they can be utilized to supplement ongoing interventions or to deliver treatment to those without access to services. Furthermore, it is critical that researchers continue to develop and evaluate interactive, game-based CBI programs, such as Camp Discovery, to augment existing treatment practices.

References

American Psychiatric Association. (2000). Diagnostic and statistical manual of mental disorders (4th ed., text rev.). Washington, DC: Author.

American Psychiatric Association. (2013). Diagnostic and statistical manual of mental disorders (5th ed.). Arlington, VA: Author.

Autism and Developmental Disabilities Monitoring Network. (2016). Prevalence and characteristics of autism spectrum disorder among children aged 8 years: Autism and developmental disabilities monitoring network, 11 sites, United States, 2012. Morbidity and Mortality Weekly Report Surveillance Summaries, 65, 1–23. https://doi.org/10.15585/mmwr.ss6503a1.

Behavior Analyst Certification Board. (2014). Applied behavior analysis treatment of autism spectrum disorder: Practice guidelines for healthcare funders and managers (2nd ed.). Retrieved from http://bacb.com/wp-content/uploads/2017/09/ABA_Guidelines_for_ASD.pdf

Boone, J. E., Gordon-Larsen, P., Adair, L. S., & Popkin, B. M. (2007). Screen time and physical activity during adolescence: Longitudinal effects on obesity and young adulthood. International Journal of Behavioral Nutrition and Physical Activity, 4, 1–10. https://doi.org/10.1186/1479-5868-4-26.

Bosseler, A., & Massaro, D. W. (2003). Development and evaluation of a computer-animated tutor for vocabulary and language learning in children with autism. Journal of Autism and Developmental Disorders, 33, 653–672. https://doi.org/10.1023/B:JADD.0000006002.82367.4f.

Bzoch, K. R., League, R., & Brown, V. L. (2003). Receptive-expressive emergent language test (3rd ed.). Austin, TX: Pro-Ed.

Center for Autism and Related Disorders. (2018). Camp Discovery (Version 4.3) [Mobile application software]. Retrieved from http://campdiscoveryforautism.com/.

Cihak, D. F., Wright, R., & Ayres, K. M. (2010). Use of self-modeling static-picture prompts via a handheld computer to facilitate self-monitoring in the general education classroom. Education and Training in Autism and Developmental Disabilities, 45, 136–149.

Cohen, J. (1988). Statistical power analysis for the behavioral sciences (2nd ed.). Hillsdale, NJ: Lawrence Erlbaum.

Cooper, J. O., Heron, T. E., & Heward, W. L. (2007). Applied behavior analysis (2nd ed.). Upper Saddle River, NJ: Prentice Hall.

Diehl, J. J., Schmitt, L. M., Villano, M., & Crowell, C. R. (2012). The clinical use of robots for children with autism spectrum disorders: A critical review. Research in Autism Spectrum Disorders, 6, 249–262. https://doi.org/10.1016/j.rasd.2011.05.006.

Dixon, D. R., Linstead, E., Granpeesheh, D., Novack, M. N., French, R., Stevens, E., … Powell, A. (2016). An evaluation of the impact of supervision intensity, supervisor qualifications, and caseload on outcomes in the treatment of autism spectrum disorder. Behavior Analysis in Practice, 9, 339–348. https://doi.org/10.1007/s40617-016-0132-1.

Dunn, L. M., & Dunn, D. M. (2007). Peabody picture vocabulary test (4th ed.). Minneapolis, MN: Pearson Assessments.

Eldevik, S., Hastings, R. P., Hughes, J. C., Jahr, E., Eikeseth, S., & Cross, S. (2009). Meta-analysis of early intensive behavioral intervention for children with autism. Journal of Clinical Child and Adolescent Psychology, 38, 439–450. https://doi.org/10.1080/15374410902851739.

Gentry, T., Wallace, J., Kvarfordt, C., & Lynch, K. B. (2010). Personal digital assistants as cognitive aids for high school students with autism: Results of a community-based trial. Journal of Vocational Rehabilitation, 32, 101–107. https://doi.org/10.3233/JVR-2010-0499.

Granpeesheh, D., Dixon, D. R., Tarbox, J., Kaplan, A. M., & Wilke, A. E. (2009). The effects of age and treatment intensity on behavioral intervention outcomes for children with autism spectrum disorders. Research in Autism Spectrum Disorders, 3, 1014–1022. https://doi.org/10.1016/j.rasd.2009.06.007.

Granpeesheh, D., Tarbox, J., Najdowski, A. C., & Kornack, J. (Eds.). (2014). Evidence-based treatment for children with autism: The CARD model. Waltham, MA: Elsevier.

Hale, L., & Guan, S. (2015). Screen time and sleep among school-aged children and adolescents: A systematic literature review. Sleep Medicine Reviews, 21, 50–58. https://doi.org/10.1016/j.smrv.2014.07.007.

Hammill, D. D., & Newcomer, P. (2008). Test of language development–primary (4th ed.). Austin, TX: Pro-Ed.

Hardy, L. L., Denney-Wilson, E., Thrift, A. P., Okely, A. D., & Baur, L. A. (2010). Screen time and metabolic risk factors among adolescents. Archives of Pediatrics and Adolescent Medicine, 164, 643–649. https://doi.org/10.1001/archpediatrics.2010.88.

Kagohara, D. M., van der Meer, L., Ramdoss, S., O’Reilly, M. F., Lancioni, G. E., Davis, T. N., … Sigafoos, J. (2013). Using iPods® and iPads® in teaching programs for individuals with developmental disabilities: A systematic review. Research in Developmental Disabilities, 34, 147–156. https://doi.org/10.1016/j.ridd.2012.07.027.

Kazdin, A. (1982). Single-case research designs: Methods for clinical and applied settings. New York, NY: Oxford University Press.

Khowaja, K., & Salim, S. S. (2013). A systematic review of strategies and computer-based intervention (CBI) for reading comprehension of children with autism. Research in Autism Spectrum Disorders, 7, 1111–1121. https://doi.org/10.1016/j.rasd.2013.05.009.

King, A. M., Thomeczek, M., Voreis, G., & Scott, V. (2014). iPad® use in children and young adults with autism spectrum disorder: An observational study. Child Language Teaching and Therapy, 30, 159–173. https://doi.org/10.1177/0265659013510922.

Knight, V., McKissick, B. R., & Saunders, A. (2013). A review of technology-based interventions to teach academic skills to students with autism spectrum disorder. Journal of Autism and Developmental Disorders, 43, 2628–2648. https://doi.org/10.1007/s10803-013-1814-y.

Linstead, E., Dixon, D. R., French, R., Granpeesheh, D., Adams, H., German, R., … Kornack, J. (2017). Intensity and learning in the treatment of children with autism spectrum disorder. Behavior Modification, 41, 229–252.

Lord, C., Risi, S., & Pickles, A. (2004). Trajectory of language development in autism spectrum disorders. In M. L. Rice & S. F. Warren (Eds.), Developmental language disorders: From phenotypes to etiologies (pp. 7–29). Mahwah, NJ: Lawrence Erlbaum.

Love, J. R., Carr, J. E., Almason, S. M., & Petursdottir, A. I. (2009). Early and intensive behavioral intervention for autism: A survey of clinical practices. Research in Autism Spectrum Disorders, 3, 421–428. https://doi.org/10.1016/j.rasd.2008.08.008.

Massaro, D. W., & Bosseler, A. (2006). Read my lips: The importance of the face in a computer-animated tutor for vocabulary learning by children with autism. Autism, 10, 495–510. https://doi.org/10.1177/1362361306066599.

Mechling, L. C. (2011). Review of twenty-first century portable electronic devices for persons with moderate intellectual disabilities and autism spectrum disorders. Education and Training in Autism and Developmental Disabilities, 46, 479–498.

Mechling, L. C., Gast, D. L., & Seid, N. H. (2009). Using a personal digital assistant to increase independent task completion by students with autism spectrum disorder. Journal of Autism and Developmental Disorders, 39, 1420–1434. https://doi.org/10.1007/s10803-009-0761-0.

Moore, M., & Calvert, S. (2000). Brief report: Vocabulary acquisition for children with autism: Teacher or computer instruction. Journal of Autism and Developmental Disorders, 30, 359–362. https://doi.org/10.1023/A:1005535602064.

Mullen, E. M. (1995). Mullen scales of early learning. Circle Pines, MN: American Guidance Service.

Pennington, R. C. (2010). Computer-assisted instruction for teaching academic skills to students with autism spectrum disorders: A review of literature. Focus on Autism and Other Developmental Disabilities, 25, 239–248. https://doi.org/10.1177/1088357610378291.

Ramdoss, S., Lang, R., Mulloy, A., Franco, J., O’Reilly, M., Didden, R., & Lancioni, G. (2011). Use of computer-based intervention to teach communication skills to children with autism spectrum disorders: A systematic review. Journal of Behavioral Education, 20, 55–76. https://doi.org/10.1007/s10864-010-9112-7.

Ramdoss, S., Machalicek, W., Rispoli, M., Mulloy, A., Lang, R., & O’Reilly, M. (2012). Computer-based interventions to improve social and emotional skills in individuals with autism spectrum disorders: A systematic review. Developmental Neurorehabilitation, 15, 119–135. https://doi.org/10.3109/17518423.2011.651655.

Ramdoss, S., Mulloy, A., Lang, R., O’Reilly, M., Sigafoos, J., Lancioni, G., & El Zein, F. (2011). Use of computer-based interventions to improve literacy skills in students with autism spectrum disorders: A systematic review. Research in Autism Spectrum Disorders, 5, 1306–1318. https://doi.org/10.1016/j.rasd.2011.03.004.

Reichow, B., Barton, E. E., Boyd, B. A., & Hume, K. (2012). Early intensive behavioral intervention (EIBI) for young children with autism spectrum disorders (ASD). Cochrane Database of Systematic Reviews, 10, 1–60. https://doi.org/10.1002/14651858.CD009260.pub2.

Riffel, L. A., Wehmeyer, M. L., Turnbull, A. P., Lattimore, J., Davies, D., Stock, S., & Fisher, S. (2005). Promoting independent performance of transition-related tasks using a palmtop PC-based self-directed visual and auditory prompting system. Journal of Special Education Technology, 20, 5–14. https://doi.org/10.1177/016264340502000201.

Rossetti, L. M. (1990). The Rossetti infant-toddler language scale: A measure of communication and interaction. East Moline, IL: LinguiSystems.

Sparrow, S., Cicchetti, D., & Balla, D. (2005). Vineland-II: Vineland adaptive behavior scales: Survey forms manual (2nd ed.). Circle Pines, MN: American Guidance Service.

Still, K., Rehfeldt, R. A., Whelan, R., May, R., & Dymond, S. (2014). Facilitating requesting of skills using high-tech augmentative and alternative communication devices with individuals with autism spectrum disorders: A systematic review. Research in Autism Spectrum Disorders, 8, 1184–1199. https://doi.org/10.1016/j.rasd.2014.06.003.

Villano, M., Crowell, C. R., Wier, K., Tang, K., Thomas, B., Shea, N., … Diehl, J. J. (2011, March). DOMER: A Wizard of Oz interface for using interactive robots to scaffold social skills for children with autism spectrum disorders. Paper presented at the 6th ACM/IEEE International Conference on Human-Robot Interaction, Lausanne, Switzerland. https://doi.org/10.1145/1957656.1957770

Whalen, C., Liden, L., Ingersoll, B., Dallaire, E., & Liden, S. (2006). Behavioral improvements associated with computer-assisted instruction for children with developmental disabilities. Journal of Speech and Language Pathology and Applied Behavior Analysis, 1, 11–26. https://doi.org/10.1037/h0100182.

Whalen, C., Moss, D., Ilan, A. B., Vaupel, M., Fielding, P., Macdonald, K., … Symon, J. (2010). Efficacy of TeachTown: Basic computer-assisted intervention for the intensive comprehensive autism program in Los Angeles Unified School District. Autism, 14, 179–197. https://doi.org/10.1177/1362361310363282.

Yang, Y. J. D., Allen, T., Abdullahi, S. M., Pelphrey, K. A., Volkmar, F. R., & Chapman, S. B. (2018). Neural mechanisms of behavioral change in young adults with high-functioning autism receiving virtual reality social cognition training: A pilot study. Autism Research, 11, 713–725. https://doi.org/10.1002/aur.1941.

Zablotsky, B., Black, L. I., Maenner, M. J., Schieve, L. A., & Blumberg, S. J. (2015). Estimated prevalence of autism and other developmental disabilities following questionnaire changes in the 2014 National Health Interview Survey. National Health Statistics Reports, 87, 1–20.

Zimmerman, I. L., Steiner, V. G., & Pond, R. E. (2011). Preschool language scales (5th ed.). San Antonio, TX: Pearson Clinical Assessment Group.

Acknowledgments

We thank Claire Burns, Jasper Estabillo, Jina Jang, Kathryn Marucut, Lauren Whitlock, and Nicholas Marks for their assistance collecting data for this study.

Ethics declarations

Conflict of Interest

The authors work at the Center for Autism and Related Disorders, the developer of Camp Discovery.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Cite this article

Novack, M.N., Hong, E., Dixon, D.R. et al. An Evaluation of a Mobile Application Designed to Teach Receptive Language Skills to Children with Autism Spectrum Disorder. Behav Analysis Practice 12, 66–77 (2019). https://doi.org/10.1007/s40617-018-00312-7

DOI: https://doi.org/10.1007/s40617-018-00312-7