Abstract

In academia, citations received by articles are a critical metric for measuring research impact. An important aspect of publishing in academia is the ability of the authors to navigate the review process; yet despite the process's critical role, very little is known about how it may affect an article's research impact. We propose that characteristics of the review process, namely, the number of revisions and the time the manuscript spends with the authors during review, influence the article's research impact after publication. We also explore the moderating role of the authors' social status in the relationship between the review process and the article's success. We use a unique data set of 434 articles published in Marketing Science to test our propositions. After controlling for a host of factors, we find broad support for our propositions. Based on our findings, we develop critical insights for researchers and academic administrators.

Although Leibnitz could stake a viable claim to the title, most of the world considers Newton the inventor of calculus because he was the first to publish his ideas [23]. Similar evidence about who receives recognition for novel ideas has been reported in the social sciences [27]. In marketing, researchers have investigated a variety of issues, including but not limited to the effects of author diversity (e.g., [29]), author reputation, and article and expositional quality (e.g., [30]) on impact, as well as cross-referencing across journals (e.g., [32]). Others have studied the evolution of journals (e.g., [18]) and of the discipline itself (e.g., [3]). However, current understanding of why articles succeed is missing a critical element: how the review process itself may shape the success of a published article. We address this gap by investigating the role of the review process in determining the research impact of articles published in a top marketing journal.

Academic articles that propose novel ideas and lead to path-breaking developments could be expected to attract the most attention and, consequently, more citations in the long run. Building on this notion, we investigate how an iterative knowledge development process, such as the journal review process, can affect an article's impact after publication. In the academic context, knowledge is a public good whose value is enhanced through diffusion. Indeed, diffusion provides a performance metric unique to knowledge goods, namely, the impact of a published article on subsequent research, as measured through citations (e.g., [5, 30]). Being first to publish may allow an academic article to gain reader mindshare and garner citations. Because the review process is by its very nature time consuming, we propose that the time it takes may itself affect the citations that published articles receive in the long run.

However, characteristics that make research articles successful are not always observable. For related knowledge products such as patents, the proprietary knowledge becomes codified, and the patenting process is normative. A third-party patent examiner determines whether citations referred to by the patent are truly relevant prior art. For journal articles though, the role of similar gatekeepers (e.g., reviewers and editors) is much broader, such that they undertake an iterative review process and provide feedback. Accordingly, no normative mechanism determines the impact that a published article subsequently has.

Although no studies within marketing have examined the explicit role of the review process, prior analyses of peer review in other disciplines [17, 23] have focused mainly on the fairness of the process as a critical determinant and have found mixed results [1, 7, 8, 10, 25, 28]. In contrast, we adopt a different perspective. Because acceptance into a top-tier journal is a necessary but not sufficient step toward ultimate success, we consider the role of peer review in an article's performance after acceptance, to further understanding of success in this domain.

We posit that the review process is one of the primary drivers of an article's impact after acceptance, as measured by the citations the published article receives. By focusing on the review process, we attempt to delineate the underlying dynamics that shape the value of the article after publication. It is not unusual for an article to change significantly during review. The iterative interaction between the authors and the reviewers, as captured by the dynamics of the review process, enables the gatekeepers (reviewers and editors) to shape this transformation [4, 15]. Viewing the review process as a driver of value creation thus elevates peer review beyond its filtering role of accepting and rejecting articles. Because the review process shapes the manuscript as it evolves through multiple rounds, we expect it to influence the published article's performance as well. Using data on the peer review process at Marketing Science, a top-tier journal, we systematically investigate its effect on a universally accepted metric of academic research impact: citations after publication. We also identify boundary conditions, based on different dimensions of the author team's social status, that reveal how managing differences in perception between the two sides (authors and reviewers) can enhance or dampen performance; we treat these dimensions of social status as moderators of the value that the article creates.

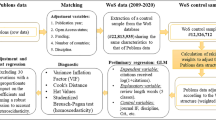

We test our model on a unique data set of 434 articles published in Marketing Science from 1996 to 2009. To estimate the effects on research impact, we control for multiple author, article, and editorial process characteristics using a mixed effects negative binomial regression model. Our results offer preliminary support for the central notion that elements of the review process may influence an article's research impact. Specifically, we find that time spent in the review process diminishes research impact, but the author team's social status moderates these effects. To address unobserved heterogeneity due to the incoming quality of submitted articles, we conduct a robustness analysis using a latent class regression model based on finite mixture theory; this analysis validates the findings of our theorized model.
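Citation counts are non-negative integers whose variance typically exceeds their mean, which is why a negative binomial rather than a Poisson model is a natural choice. As a minimal sketch, assuming the common NB2 parameterization (variance = mu + alpha * mu**2; the text does not state whether an NB1 or NB2 variance function is used), the code below evaluates the negative binomial probability mass function for a single article with fixed mean `mu` and checks that its mean and variance come out as expected. It omits the random effects of the mixed model, and the values of `mu` and `alpha` are illustrative only, not estimates from the data.

```python
import math

def nb2_log_pmf(y, mu, alpha):
    """Log P(Y = y) for a negative binomial with mean mu and NB2 dispersion
    alpha, so that Var(Y) = mu + alpha * mu**2 (a Poisson would force Var = mu)."""
    r = 1.0 / alpha            # "size" parameter
    p = r / (r + mu)           # chosen so that the mean r(1 - p)/p equals mu
    return (math.lgamma(y + r) - math.lgamma(r) - math.lgamma(y + 1)
            + r * math.log(p) + y * math.log1p(-p))

# Illustrative values only; these are not estimates from the data
mu, alpha = 12.0, 0.8
probs = [math.exp(nb2_log_pmf(y, mu, alpha)) for y in range(2000)]
mean = sum(y * p for y, p in enumerate(probs))
var = sum((y - mean) ** 2 * p for y, p in enumerate(probs))
print(round(mean, 3), round(var, 3))  # close to mu and mu + alpha * mu**2
```

With these values the variance is roughly ten times the mean, the kind of overdispersion a Poisson model cannot accommodate.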

In the next section, we briefly elaborate on the mechanism of the peer review process and develop arguments for the role of various factors that determine the research impact of published articles. We then describe our data sources, empirical model, and results. Finally, we discuss our findings, followed by the implications of our research for journal editors, academic administrators, and authors.

1 Conceptual Background

1.1 Peer Review Process

The origins of peer review extend to the seventeenth century, when scientists began to worry about claims of ownership of new discoveries, as well as the veracity of the evidence underlying any such discovery [17, 23]. Early peer reviews helped confirm that findings were legitimate and that the rightful claimant received acknowledgment as the owner of the discovery or invention. By the early 1900s, peer review was well established in the natural sciences and had begun to make inroads into the social sciences. Yet most early social science journals relied on just a few editors who made decisions about manuscripts themselves. Only after World War II did the review process at most journals evolve to include editorial boards and a formal review process overseen by editors. Regardless of the field, researchers may be said to be driven by three main objectives: knowledge creation, knowledge dissemination, and recognition for their efforts [9, 15, 16]. Such recognition is rarely associated directly with pecuniary benefits; yet the battle for intellectual property rights has been ferocious, and publications constitute a cornerstone of success in academia and in research-intensive organizations.

Most research into the antecedents of research impact investigates whether the review process is influenced by particular factors, such as author characteristics, as opposed to universal characteristics, such as the nature of the research (e.g., [5, 30]). Moreover, research on the outcomes of the peer review process has mainly defined the review process as a selection mechanism. That is, editors and reviewers act as gatekeepers who uphold the standards of the focal journal by determining whether an article will be accepted for publication (e.g., [2, 7, 10]). This decision is observable, whereas the feedback process and the interactions between the review and author teams may be equally significant but are difficult to observe. Gatekeepers might not only select articles for publication but also shape manuscripts to make them “publishable,” which should affect their subsequent impact. This impact, or the performance of a research article, consists of its effect on subsequent research (e.g., [5, 30]). Research impact is used as a performance indicator in tenure and promotion decisions, much as patent citations are used to determine the impact of knowledge on subsequent knowledge creation [6].

In particular, we acknowledge that the review process is a set of iterative interactions between the reviewer team and the authors and that it helps resolve differences in perceptions of how, and to what degree, the article creates value (e.g., [19]). Because we focus on published articles, we assume that the reviewer team agreed that the article provided some value, that is, that it met some minimum threshold in the earlier stages to be granted revise-and-resubmit decisions in subsequent rounds. Our notion of this difference is premised on the idea that the review team agrees that the article has potential for publication and is willing to work with the author team to resolve differences and find common ground that will allow the article to reach its potential and meet (or exceed) the publication standards of the journal concerned. Thus, the differences between the author and reviewer teams are essentially about how far each side believes the article is from a publication-ready state. Such divergence in initial positions often concerns attention to detail, rigor, and how best to convey the article's ideas. In this article, we argue that heterogeneity in how the author and reviewer teams manage these differences can be critical. As an article makes its journey through the review process, the authors incorporate several ideas based on the review team's suggestions. This infusion of new knowledge often reshapes the article from its original form. Because the final published version very often differs from the initial submission, we argue that the review process should affect the ultimate performance of the article.

While past research has emphasized the importance of the review process in selecting articles for publication, our research emphasizes its importance beyond publication, i.e., for post-publication research impact. We focus on a set of hitherto ignored parameters of the review process to argue that the adaptation the article undergoes in the process determines its eventual performance. Adaptation to reviewer suggestions changes the article such that the final product is publication-worthy whereas the original version was not. The changes required to make the article suitable for publication reflect how the differences between the authors and the review team regarding what the article should say, and how, are managed. We can assume that the author team and the review team reached some consensus on the what and the how, because otherwise the articles would not have been accepted for publication. If the core idea had been weak or without potential, the articles would never have achieved publication. Thus, to characterize the adaptation process, we focus more on differences between the author and review teams over how to present the idea(s) in the article.

The degree of adaptation that the article undergoes is a reflection of the degree of differences between the author and the reviewer teams about how to present the core idea. Complete convergence would imply the immediate acceptance of the manuscript in the very first round. Such instances are extremely rare; most articles go through multiple review rounds before finally being deemed acceptable for publication. For published articles, iterative interactions between the two sides enable a resolution of their differences. Furthermore, in most peer-reviewed journals, reviewers remain anonymous even after the article’s publication, to protect the integrity of the process, so reviewers do not benefit directly from the publication. Thus, the onus of a successful integration of the review team’s suggestions and managing differences lies, perhaps, more with the authors than with the reviewers.

We now elaborate on the mechanisms through which critical elements of the review process may influence research impact.

1.2 Time Spent in the Review Process

Just as time to market is critical to performance [26], the novelty of an article may diminish, relative to other articles, as the time before it is published increases. Merton [24] describes the phenomenon of singletons and multiples, arguing that it is no coincidence when multiple articles in the same area of scientific discovery appear around the same time, published by people working independently. Foundational research, on which researchers draw in their problem solving or innovation, often sparks similar thinking across a domain: Leibnitz and Newton were working on calculus at around the same time, and Darwin and Wallace offered similar ideas on evolution at nearly the same time. Usually, however, only one researcher attains greater prominence, often because he or she is first to market with the idea and thus gains mindshare among the audience. This was the case with both Newton and Darwin, who got their work out before Leibnitz and Wallace.

Academic scholars at universities are in a race against time to publish the requisite number of articles, with impact, to be rewarded with tenure. Moreover, emerging research on academic knowledge development indicates that research impact is becoming a critical element in evaluating academic publications (e.g., [30]). Because an article can be noticed, read, and cited only once it reaches publication, and because a competing article on a similar idea may reach readers first, it is reasonable to expect that, all else being equal, the research impact of a published article diminishes as the time it spends in the review process increases.

1.3 Consensus Building Between the Author and Review Teams

Manuscripts submitted to journals for review generally follow certain norms. The review team is responsible for critiquing the article and suggesting areas of improvement. There is also an implicit understanding that the authors should demonstrate due diligence in responding to these comments, even though the reviews may be only suggestions. Because each member of the review team works independently, their suggestions do not always converge to a high degree. The editor then analyzes the reviewer comments and, based on his or her independent judgment of the article, makes a decision that indicates whether the authors may undertake a revision. This iterative interaction continues until sufficient convergence results and the review team (along with the editor) is convinced that the article meets the journal's standards for publication. In each round, an invitation to resubmit is extended only if the review team believes that the differences between the author and review teams have decreased relative to the prior round. If the progress the author team shows in making the article publishable does not meet the review team's expectations, the article is likely to be rejected from further consideration.

Clearly, the manuscript benefits from the many rounds of iterative feedback between the author team and the review team. The benefits of multiple checkpoints in a development process are well established in the new product development (NPD) literature [11]. The most obvious parallel is the user-generated open source software paradigm, in which beta testers, focus groups, and potential customers often provide feedback during development to improve the product as it moves through various stages (e.g., [20]). For academic articles submitted to a journal (and ultimately accepted for publication), the review process entails a collaboration designed to make the article worthy of publication, similar to a strategic alliance whose partners must find common ground for it to work effectively. The key difference, driven by the double-blind review process followed by most journals, is that the collaborators, i.e., the author team and the review team (other than the editor), are not aware of each other's identities. Thus, the interactions between the author and review teams represent a unique case of managing differences that are purely task related [14]. The differences between the two groups stem from the different incentives that each has as the article moves through the review process. Authors are driven primarily by the motive of publishing, a necessary step to gain recognition in academia. Review team members, on the other hand, are driven to uphold the standards of the journal [15]. Thus, revisions represent the underlying mechanism of difference resolution between the collaborators, i.e., the authors and the review team.

We gauge the degree of difference between the author and review teams using the number of revisions that an article undergoes in the review process. The number of revisions reflects the number of attempts by the reviewers and authors to overcome their differences. These attempts can have a positive effect by allowing the authors to voice their opinions and defend their work, as well as to incorporate feedback that improves their chances of acceptance, which in turn can enhance subsequent research in the domain [15].

An increasing number of revisions might reflect either of two situations. First, the ideas presented by the authors may go against conventional wisdom in the discipline, akin to breakthrough inventions. In this case, managing differences likely requires more revisions. Assuming the idea has strength, the review process serves to shape the article (including improving its execution) and thus enable it to achieve broader application, appeal, and generalizability for further research. This is similar to facilitating open source product development through rigorous, quick, and frequent feedback from users (e.g., [13]). Accordingly, a greater difference in perception should benefit the ultimate performance of the article.

On the other hand, the number of revisions could also increase if an article following a path of sustaining innovation did not execute its idea very well in the first place. In this case, though, the reviewers may focus narrowly on improving the execution to meet publication norms rather than on presenting the idea in a generalized manner that helps it appeal to diverse streams of research. Both mechanisms lead to more revisions. In the first case, a greater number of revisions is associated with higher subsequent impact; in the second case, however, a greater number of revisions could reduce the future impact of the research.

These two competing mechanisms could essentially shape the journey of an article as it winds through the review process, thereby affecting its eventual research impact.

In the context of academic research, two distinct features of the quality of the outcome merit discussion. First, published research is inherently an experience good: its performance, measured as impact, can be gauged only over time after publication, as it spawns future research. Second, the perceived quality of an idea can vary from one researcher to another. Thus, there is no prescribed way to determine or capture the quality of a manuscript as it goes through the review process.

In this research, we attempt to capture the author team's quality by focusing on the authors' positions in the social network. Prior research has examined how collaboration might affect the quality of research [22]. Following this work, we conceptualize the authors' status in the network of academic co-authorships as an important observable indicator of quality. By focusing on different dimensions of author status as drivers of manuscript quality, we are able to identify boundary conditions that moderate how differences between the author and reviewer teams affect research impact.

1.4 Moderating Role of Author’s Social Status

Prior research on knowledge creation notes the effects of the productivity and status of authors [2, 7]; we hypothesize that heterogeneity in the author team's social status moderates the effects of the number of revisions and of the time with the author team on research impact. Because academic reputation is built on co-authorships and citations, we use the co-authorship network to measure the authors' social status. Following prior research on the role of social network influence (e.g., [20]), we characterize the author team's social status in the co-authorship network using two critical measures: eigenvector centrality and closeness centrality (e.g., [31]).

Eigenvector centrality measures the centrality of the author team by considering not only its direct co-authorships but also the extent to which its co-authors are themselves central in the broader community of marketing scholars. Thus, it provides a more complete picture of the author team's social status by taking into account the authors' second- and higher-order network connections to other prolific scholars in the discipline. This measure acts as a surrogate for author team quality because it reflects the team's social standing in the community: teams with a lower score have not co-authored with prolific scholars in the field, whereas teams with a higher score have. We use this as a measure of productivity status.
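The recursive idea behind eigenvector centrality (an author is central if his or her co-authors are central) can be computed by power iteration. The sketch below is a minimal illustration, assuming an undirected, unweighted, connected co-authorship graph stored as a dictionary of co-author sets; the graph, the function names, and the use of (A + I) in the iteration are illustrative choices, not the exact procedure or software used to construct the measure. Team scores are obtained by summing authors' scores, the aggregation described for the team-level measure.

```python
import math

def eigenvector_centrality(adj, max_iter=1000, tol=1e-10):
    """Eigenvector centrality by power iteration.

    adj: dict mapping each author to the set of co-authors (undirected,
    unweighted, assumed connected). Iterating with (A + I) instead of A has
    the same leading eigenvector but avoids oscillation on bipartite graphs.
    """
    nodes = list(adj)
    x = {v: 1.0 for v in nodes}
    for _ in range(max_iter):
        # New score = own score + sum of co-authors' current scores, i.e. (A + I) x
        new = {v: x[v] + sum(x[u] for u in adj[v]) for v in nodes}
        # Rescale to unit Euclidean length so scores stay bounded
        norm = math.sqrt(sum(s * s for s in new.values()))
        new = {v: s / norm for v, s in new.items()}
        if max(abs(new[v] - x[v]) for v in nodes) < tol:
            return new
        x = new
    return x

def team_score(centrality, team):
    """Team-level score as the sum of its authors' centralities."""
    return sum(centrality[a] for a in team)

# Hypothetical co-authorship graph (authors A to E)
adj = {
    "A": {"B", "C", "D"},
    "B": {"A", "C"},
    "C": {"A", "B"},
    "D": {"A", "E"},
    "E": {"D"},
}
c = eigenvector_centrality(adj)
print({a: round(s, 3) for a, s in sorted(c.items())})
print(round(team_score(c, ["B", "E"]), 3))
```

In this toy graph, A scores highest because it is linked to every other well-connected author, while E scores lowest: its only co-author, D, is itself peripheral.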

The second measure, closeness centrality of the author team, captures the nearness, or reach, of the author team to other marketing scholars, that is, how directly the author team is connected to the broader community of marketing academics. This measure acts as a surrogate for author team quality because it reflects the team's reach in the community: teams that score higher on this measure can spread their work to a wider audience because of their closeness and direct relationships to others in the discipline. We use this as a measure of reach status.
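Closeness centrality is commonly computed as the number of other reachable authors divided by the sum of shortest-path distances to them, so authors who are few steps from everyone else score higher. A minimal sketch using breadth-first search, assuming an unweighted co-authorship graph; the graph and function names are hypothetical. The text does not state how individual closeness scores are aggregated to the team level, so `team_closeness` sums them purely as an illustrative assumption, mirroring the rule used for eigenvector centrality.

```python
from collections import deque

def closeness_centrality(adj, v):
    """(number of reachable authors) / (sum of shortest-path distances from v),
    using breadth-first search on an unweighted co-authorship graph.
    Only authors reachable from v enter the calculation."""
    dist = {v: 0}
    queue = deque([v])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:          # first visit gives the shortest distance
                dist[w] = dist[u] + 1
                queue.append(w)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total > 0 else 0.0

def team_closeness(adj, team):
    """Hypothetical team aggregation: sum of members' closeness scores."""
    return sum(closeness_centrality(adj, a) for a in team)

# Hypothetical path-shaped network: A - B - C - D
path = {"A": {"B"}, "B": {"A", "C"}, "C": {"B", "D"}, "D": {"C"}}
print(closeness_centrality(path, "A"))   # 3 / (1 + 2 + 3) = 0.5
print(closeness_centrality(path, "B"))   # 3 / (1 + 1 + 2) = 0.75
print(team_closeness(path, ["A", "B"]))  # 1.25
```

Library implementations may rescale scores for disconnected networks; this sketch simply restricts the calculation to the reachable component.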

These measures capture author quality because authors endowed with such skills are more likely to benefit from the feedback reviewers provide in each round, as they can quickly identify the areas on which to focus their revisions. First, if the article has a novel idea and revisions are an opportunity to broaden its applications, more experienced authors will quickly grasp, from the reviewer comments, the potential focus and direction of further efforts. Thus, experienced authors should benefit more from additional revisions in shaping the article. Alternatively, if executing a strong idea demands several rounds of revision, authors who are accustomed to reaching out to their co-authors quickly can grasp the importance of reviewer comments more effectively and focus their effort accordingly.

Further, as the review process unfolds over time, the authors get a chance to reflect on the review team's comments and make related changes to the manuscript in subsequent submissions. Authors with higher reputations should be far more capable of leveraging the time between submissions, i.e., the time with authors, to make the necessary changes to the manuscript. However, we make no proposition regarding time with reviewers: while the manuscript is under review, the authors are likely working on other projects as they wait for the review team's comments, so their productivity and citation reputation are unlikely to influence the effect of review time on research impact.

When the reviewer feedback concerns primarily the authors' failure, so far, to meet the journal's standards in implementing the idea, experienced authors are the ones likely to go beyond the scope of the review to improve not just the implementation but also the overall generalizability of the idea. Thus, overall, we expect that the author team's prior productivity and citation reputation should weaken the negative effect of time spent in the review process and strengthen (weaken) the positive (negative) effect of the number of revisions on research impact.
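One way to formalize these expectations, offered as an illustrative sketch rather than the specification estimated in this study, is a log-link count model with interaction terms. Here $\mu_i$ is the expected citation count of article $i$, $T^{R}_i$ and $T^{A}_i$ are time with reviewers and time with authors, $N_i$ is the number of revisions, $S_i$ is one status measure (productivity status, reach status, or citation reputation), and $\mathbf{x}_i$ collects controls; all symbols are introduced here for illustration, and the random effects of the mixed model are omitted.

$$
\log \mu_i = \beta_0 + \beta_1 T^{R}_i + \beta_2 T^{A}_i + \beta_3 N_i + \beta_4 S_i + \beta_5 \,(T^{A}_i \times S_i) + \beta_6 \,(N_i \times S_i) + \boldsymbol{\gamma}^{\top}\mathbf{x}_i
$$

$$
\frac{\partial \log \mu_i}{\partial T^{A}_i} = \beta_2 + \beta_5 S_i,
\qquad
\frac{\partial \log \mu_i}{\partial N_i} = \beta_3 + \beta_6 S_i
$$

Under this sketch, the propositions correspond to $\beta_2 < 0$ with $\beta_5 > 0$ (higher status weakens the negative effect of time with authors) and to $\beta_6 > 0$, which strengthens the effect of revisions when $\beta_3 > 0$ and weakens it when $\beta_3 < 0$. No interaction with $T^{R}_i$ appears, consistent with the absence of a proposition about time with reviewers.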

In what follows, we describe data collection, measures, and estimation of the empirical model in detail, followed by the results.

2 Methodology

2.1 Data Sources

We collected our data from Marketing Science, a leading business journal that researchers at North American universities regard as an “A” journal. Relative to peer journals in the field, Marketing Science is considered a premier outlet for articles that use state-of-the-art methodology to resolve important marketing problems faced by managers. The journal's immediate and 5-year impact factors, as reported in the Journal Citation Reports, are consistently high. We collected the following data at the article level: date of submission, date of acceptance, time with reviewers, time with authors, number of revisions, and identity of the associate editor who accepted the article. Our Marketing Science data provide information on individual articles published between 1996 and 2009, the entire period for which the data were publicly available.

Our choice to restrict the sample to a single journal was driven largely by the need to control for heterogeneity in review processes, lead times, editorial review board changes, editor tenures, and the number of pages allocated to an article, all of which vary across journals. Failing to control for such heterogeneity could introduce noise and affect review times differentially across journals. In addition, because Marketing Science is held in high regard in the research domain, the time management incentives of reviewers and authors are aligned. Reviewers either are members of the editorial board or serve on an ad hoc basis, but both groups have incentives to do a “good job” on the articles they receive. Members of the editorial review board may also be driven by an incentive to become area editors, and ad hoc reviewers likely aim to become members of the editorial board. Thus, we assume, reasonably for the context of the research sample, that even ad hoc reviewers are motivated to review articles in a manner consistent with the standards upheld by the journal. The high prestige associated with being a member of the editorial review board or an area editor of this journal further justifies our assumption about the motives of all reviewers.

The other stakeholder in this process is the author. Publishing in a top-tier journal offers reputational benefits and high regard, so authors who receive requests to revise and resubmit likely use their time carefully, depending on the amount of work needed to revise the article. Recall that, by studying the research impact of published articles, we do not address the underlying selection process; rather, we emphasize heterogeneity in the impact of selected articles arising from the adaptation the article undergoes in the review process. That is, we ask how the review process helps explain why some articles have greater impact than others, given that all of them were considered good enough to be published.

Finally, we turned to several other data sources. The ISI Web of Science's Social Sciences Citation Index (SSCI) provides information on citations received by articles published in Marketing Science. Furthermore, we used publications in all major peer journals of Marketing Science as the basis for our measures of the prior productivity and citation reputation of the authors of articles in our sample.

2.2 Measures

Dependent Variable

A published article has impact only when other articles cite it [30]; for a citation to appear in the SSCI, however, the citing article must itself be published. Google Scholar counts include citations by working papers, but the SSCI standard is more conservative. That is, the number reported by the SSCI is a cumulative count of citations received, subsequent to the focal article's publication, from articles published in the journals indexed by the SSCI; we use that number as our measure of research impact. This citation index is a broad, generalized measure that includes journals with varying impact factors and readerships. Thus, our dependent variable is a fairly robust and accurate representation of research impact. We measured the outcome variable at the end of 2011. Because articles accumulate citations over time, we control for the number of years since each article's publication.

Independent Variables

We use the average number of months (see Footnote 1) that an article spent with the review team between reviews as our measure of time with the review team. Marketing Science, like most journals, uses an electronic database to track reviewer times and automatically sends reviewers reminders of upcoming deadlines or past-due reviews. Reviewers have no incentive to delay the process, though they may ask for additional time if they believe a review requires extra effort and cannot be finished within the time specified. Because the time allocated for a review does not vary within the journal, focusing on one journal reduces the additional noise that could arise from different reviewing standards.

We also recognize that time with the review team is not a perfect measure. It reflects the time taken by multiple reviewers, so our measure actually captures the slowest reviewer's time; that is, it corresponds not to the mean review time but to the maximum review time. Moreover, reviewers likely do not work on the article for the entire time they have it, though most reviewers likely take the entire time allocated for their reviews. To the extent that the time allocated for review is the same throughout the journal, this measure is a conservative choice and reduces the likelihood that heterogeneity across review teams drives the regression results. Thus, though imperfect, the measure offers a reasonable compromise given our research objectives and the practical constraints of data availability.
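Why the round-level time reflects the slowest reviewer can be shown with a small calculation. The sketch below assumes hypothetical per-reviewer turnaround times, which the journal data do not contain (only the round-level time with the review team is recorded), and it ignores any time the editor takes to reach a decision. A round ends when the last report arrives, so its length is the maximum of the reviewers' times; the measure then averages these round lengths.

```python
from statistics import mean

def time_with_review_team(rounds):
    """Average months with the review team across review rounds.

    rounds: one list per round, holding each reviewer's turnaround in months
    (hypothetical input; the journal records only the round-level time).
    A round ends when the last report arrives, so its length is the maximum,
    not the mean, of the reviewers' times.
    """
    per_round = [max(reviewer_months) for reviewer_months in rounds]
    return mean(per_round)

rounds = [[2.0, 3.5, 2.5], [1.0, 1.5]]
print(time_with_review_team(rounds))         # 2.5 (slowest reviewers: 3.5 and 1.5)
print(mean(m for r in rounds for m in r))    # 2.1, the mean reviewer time, for contrast
```

The gap between 2.5 and 2.1 months illustrates how the recorded measure overstates the typical reviewer's time whenever one reviewer is slower than the others.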

We measure the degree of difference between the author and review teams as the number of revisions an article undergoes before it is accepted for publication. Each additional round of revision means that the review and author teams could not fully resolve their differences but made progress toward common ground. Not all revisions require the same effort, though; on average, earlier revisions require more effort from both reviewers and authors than later ones. Because of the contingent nature of revisions, we also expect published articles to undergo more revisions than submitted manuscripts in general; but because we investigate only articles published in one journal, we do not expect any systematic bias in the number of revisions across published articles.

We measure time with author team as the average number of months the article spent with the author team between reviews. Publications improve authors' chances of mobility, promotion, and tenure. Especially for a high-impact journal such as Marketing Science, authors therefore have an incentive to submit revisions quickly; irrespective of deadlines, they would prefer to resubmit the manuscript as soon as it is ready.
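As an illustration of how the three review-process measures could be derived from a journal's tracking records, the sketch below assumes each review round is stored as a hypothetical Round record holding the date a (re)submission was received and the date the decision letter went back to the authors. These field names, the record layout, and the days-per-month conversion are our assumptions, not the journal's actual database schema. Because a decision is returned only once all reports are in, each round's span reflects the slowest reviewer, as discussed above.

```python
from dataclasses import dataclass
from datetime import date
from statistics import mean

# Assumed conversion: data recorded in days, reported in months (see Notes).
DAYS_PER_MONTH = 365.25 / 12


@dataclass
class Round:
    """One review round (hypothetical record layout)."""
    submitted: date  # (re)submission received by the journal
    decision: date   # decision letter returned to the authors


def review_process_measures(rounds: list[Round]) -> dict[str, float]:
    """Number of revisions and average months with each team.

    Rounds must be in chronological order; the first round is the
    original submission, so every later round counts as one revision.
    """
    # Time with the review team in each round; the decision waits for
    # the last report, so this span tracks the slowest reviewer.
    review_days = [(r.decision - r.submitted).days for r in rounds]
    # Time with the author team: from one decision to the next resubmission.
    author_days = [
        (nxt.submitted - prev.decision).days
        for prev, nxt in zip(rounds, rounds[1:])
    ]
    return {
        "revisions": len(rounds) - 1,
        "months_with_review_team": mean(review_days) / DAYS_PER_MONTH,
        "months_with_author_team": (
            mean(author_days) / DAYS_PER_MONTH if author_days else 0.0
        ),
    }


# Hypothetical history: original submission plus two revisions.
history = [
    Round(date(2000, 1, 1), date(2000, 4, 1)),
    Round(date(2000, 7, 1), date(2000, 9, 1)),
    Round(date(2000, 11, 1), date(2000, 12, 1)),
]
measures = review_process_measures(history)
```

Averaging per round, rather than summing, keeps the time measures from mechanically rising with the number of revisions, which is measured separately.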

Moderators

To measure the social status of the authors, we constructed a co-authorship network of all the authors in our sample based on their publications in five leading marketing journals (Journal of Consumer Research, Journal of Marketing Research, Journal of Marketing, Management Science, and Marketing Science) over the entire period of these journals' existence. Following prior empirical studies that use network analysis [20, 31], we use eigenvector centrality and closeness centrality to represent different aspects of the author team's social status.

We calculated the eigenvector centrality of the author team as the sum of the eigenvector centralities of its authors. Eigenvector centrality captures the extent to which the author team has been productive, not merely in writing numerous articles but also in terms of how productive the authors connected to the focal authors are. It is therefore a robust measure of social status that accounts for the authors' productivity within the overall network of marketing scholars; a higher value indicates higher productivity status. Closeness centrality measures the reach of the author team in the network of scholars, that is, how near the authors are to other scholars in the overall network; a higher value indicates higher reach status.
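The text does not specify the algorithm or normalization used for the centrality measures, so the sketch below shows one conventional choice under stated assumptions: an unweighted co-authorship graph, eigenvector centrality by power iteration on A + I (the identity shift keeps the iteration from oscillating on bipartite graphs without changing the leading eigenvector), and closeness defined as (reachable nodes − 1) divided by the sum of shortest-path distances. Summing eigenvector centrality across the team follows the text; summing closeness as well is our assumption. All function names are hypothetical. On a disconnected network, power iteration concentrates the scores on the dominant component.

```python
import math
from collections import deque
from itertools import combinations


def coauthor_graph(papers):
    """Undirected co-authorship graph as {author: set of co-authors}."""
    adj = {}
    for authors in papers:
        for a in authors:
            adj.setdefault(a, set())  # keep sole authors as isolated nodes
        for a, b in combinations(set(authors), 2):
            adj[a].add(b)
            adj[b].add(a)
    return adj


def eigenvector_centrality(adj, max_iter=1000, tol=1e-12):
    """Power iteration on (A + I), normalized to unit Euclidean length."""
    nodes = list(adj)
    x = {v: 1.0 / len(nodes) for v in nodes}
    for _ in range(max_iter):
        new = {v: x[v] + sum(x[u] for u in adj[v]) for v in nodes}
        norm = math.sqrt(sum(val * val for val in new.values()))
        new = {v: val / norm for v, val in new.items()}
        if sum(abs(new[v] - x[v]) for v in nodes) < len(nodes) * tol:
            return new
        x = new
    raise RuntimeError("power iteration did not converge")


def closeness_centrality(adj, source):
    """(reachable nodes - 1) / sum of shortest-path distances, via BFS."""
    dist = {source: 0}
    queue = deque([source])
    while queue:
        u = queue.popleft()
        for w in adj[u]:
            if w not in dist:
                dist[w] = dist[u] + 1
                queue.append(w)
    total = sum(dist.values())
    return (len(dist) - 1) / total if total else 0.0


def team_status(adj, team):
    """Productivity status (summed eigenvector centrality, as in the text)
    and reach status (summed closeness; this aggregation is assumed)."""
    ev = eigenvector_centrality(adj)
    productivity = sum(ev[a] for a in team)
    reach = sum(closeness_centrality(adj, a) for a in team)
    return productivity, reach


adj = coauthor_graph([("A", "B"), ("B", "C")])  # toy path network A - B - C
ev = eigenvector_centrality(adj)
productivity, reach = team_status(adj, ("A", "C"))
```

In the toy path network, the central author B receives the highest eigenvector centrality (about 0.707 versus 0.5 for A and C) and a closeness of 1.0, because B is one step from everyone.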

We measure the citation reputation of the author team as the sum of citations received by the authors' prior work in the 5 years preceding the publication of the focal article in Marketing Science. We gathered these data from the ISI Web of Science database and include citations by articles published in journals indexed in that database. A higher citation count in the preceding 5 years indicates greater citation reputation of the author team.
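The windowed sum above can be made explicit as follows. The text leaves two counting rules open, and the sketch fixes them by assumption: the window is the five calendar years before the publication year (2004 to 2008 for a 2009 article), and a prior paper co-authored by several team members is counted once rather than once per author. The data layout and function name are hypothetical; the study's actual counts came from ISI Web of Science.

```python
def citation_reputation(team, prior_papers, citations, pub_year, window=5):
    """Citations received in the `window` years before `pub_year`
    by the team's prior papers.

    prior_papers: mapping paper_id -> set of author names (hypothetical)
    citations: iterable of (cited_paper_id, citing_year) pairs
    """
    team = set(team)
    # Each prior paper counts once, even if several team members wrote it.
    team_papers = {pid for pid, authors in prior_papers.items() if authors & team}
    return sum(
        1
        for pid, year in citations
        if pid in team_papers and pub_year - window <= year < pub_year
    )


prior = {"p1": {"A", "X"}, "p2": {"B"}, "p3": {"Z"}}
cites = [("p1", 2004), ("p1", 1998), ("p2", 2008), ("p3", 2007), ("p2", 2009)]
reputation = citation_reputation(("A", "B"), prior, cites, pub_year=2009)
```

Here only the 2004 citation to p1 and the 2008 citation to p2 fall inside the window; p3 belongs to no team member, and the 1998 and 2009 citations lie outside it.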

Controls

We measure author team size as the number of authors on the focal article. This variable is needed for scaling because we use average measures of the author team's prior productivity. To control for the possibility that articles drawing on ideas from different journals have more general applicability, and thus greater impact, we measure the boundary spanning of the focal article as the percentage of its citations that refer to articles not published in Marketing Science.

The age of knowledge used in the focal article is the median age of its cited references. We include this variable to control for the possibility that articles building on more recent knowledge have greater impact.

Furthermore, we consider reference intensity. A larger number of references in the focal article implies either that the research domain is crowded or that the authors draw on a broader scope of knowledge. We use the number of references in the article as a measure of scope of knowledge.
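The three reference-based controls (boundary spanning, age of knowledge, and scope of knowledge) can all be computed from an article's reference list, as in the sketch below. It assumes each reference is a hypothetical (journal, publication year) pair, that age is measured in years relative to the focal article's publication year, and that journal names are already normalized; real reference lists would need name cleaning before the exact-match comparison used here.

```python
from statistics import median

FOCAL_JOURNAL = "Marketing Science"


def reference_controls(pub_year, references):
    """Article-level controls built from the reference list.

    references: list of (journal_name, publication_year) pairs (hypothetical).
    """
    if not references:
        raise ValueError("article has no references")
    n_refs = len(references)
    outside = sum(1 for journal, _ in references if journal != FOCAL_JOURNAL)
    ages = [pub_year - year for _, year in references]
    return {
        # Boundary spanning: share of references outside the focal journal.
        "boundary_spanning_pct": 100.0 * outside / n_refs,
        # Age of knowledge: median age of the cited references, in years.
        "median_reference_age": median(ages),
        # Scope of knowledge: number of references.
        "reference_count": n_refs,
    }


example = reference_controls(
    2008,
    [("Marketing Science", 2000), ("J Mark", 1990),
     ("Manag Sci", 2005), ("J Mark Res", 1995)],
)
```

The median rather than the mean keeps one very old classic in the reference list from dominating the age-of-knowledge measure.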

We measure the perceived importance of the article by its number of pages. Most journals set strict page limits but note that a manuscript with important contributions may be allowed more space. Thus, the number of pages is a reasonable proxy for the perceived importance of the article.

Further, articles published in special issues often attract attention and hence may receive more citations. We control for this effect with a dummy variable coded 1 for articles in special issues and 0 otherwise. Similarly, articles that appear first in an issue might receive more attention and consequently more citations; we control for this with a dummy variable coded 1 for the first article in an issue and 0 otherwise.

Finally, over the time span of the study, from 1996 to 2009, the journal had four different editors-in-chief (John Hauser, Richard Staelin, Brian Ratchford, and Steve Shugan), who called upon a total of 26 area editors. Because each editorial regime has its own system, guidelines, and preferences for the review process, idiosyncratic to the editor, we included dummies that control for these regimes: three editor-regime dummies in our regressions, with the last editor regime as the omitted category.
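The dummy coding described above (two issue-level indicators plus k − 1 = 3 editor-regime indicators for k = 4 regimes) can be sketched as follows. Omitting one regime avoids perfect collinearity with the model intercept, so each regime coefficient is read relative to the omitted, last regime. The function and column names are hypothetical; the area editor fixed effects would be coded the same way.

```python
# Editor-in-chief regimes in chronological order; the last one is the
# omitted (reference) category, as in the text.
EDITOR_REGIMES = ["Hauser", "Staelin", "Ratchford", "Shugan"]


def issue_and_regime_dummies(special_issue: bool, first_in_issue: bool,
                             regime: str) -> dict[str, int]:
    """0/1 indicators for one article (hypothetical column names)."""
    if regime not in EDITOR_REGIMES:
        raise ValueError(f"unknown editor regime: {regime}")
    row = {
        "special_issue": int(special_issue),
        "first_article": int(first_in_issue),
    }
    # k - 1 regime dummies; an article from the last regime gets all zeros.
    for name in EDITOR_REGIMES[:-1]:
        row[f"editor_{name}"] = int(regime == name)
    return row


shugan_row = issue_and_regime_dummies(False, True, "Shugan")
staelin_row = issue_and_regime_dummies(True, False, "Staelin")
```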

We also included a host of area editor characteristics as controls because area editors exert considerable influence on the review process; for example, papers handled by experienced area editors may be better and therefore more successful. We controlled for the area editor's overall post-PhD experience, number of institutions worked at, tenure status, gender, and whether they worked at a public or private university; finally, we included fixed effects for the university from which each area editor obtained a PhD.

2.3 Model

Model Specification

The dependent variable in our research, number of citations, is a count measure. We first examined the summary statistics to check whether a Poisson model could fit the data; however, our dependent variable is over-dispersed (its variance exceeds its mean), so we chose a negative binomial model instead.
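To make the over-dispersion check and the model concrete, the sketch below computes the variance-to-mean ratio of a count variable (a Poisson model implies a ratio near 1) and the log-probability of one observation under a negative binomial model. The text does not state which negative binomial parameterization was used; we assume the common NB2 form, in which the variance is μ + αμ². The counts shown are hypothetical, not the study's data.

```python
import math
from statistics import mean, variance


def dispersion_ratio(counts):
    """Sample variance over mean; a Poisson model implies a ratio near 1."""
    return variance(counts) / mean(counts)


def nb2_logpmf(y: int, mu: float, alpha: float) -> float:
    """Log P(Y = y) under NB2: mean mu, variance mu + alpha * mu**2."""
    r = 1.0 / alpha  # size parameter; alpha -> 0 recovers the Poisson
    return (
        math.lgamma(y + r) - math.lgamma(r) - math.lgamma(y + 1)
        + r * math.log(r / (r + mu))
        + y * math.log(mu / (r + mu))
    )


citations = [0, 0, 1, 5, 20]  # hypothetical counts, not the study's data
ratio = dispersion_ratio(citations)  # about 14, far above 1
```

In a regression, μ would be exp(x′β) for each article and the model would be fit by maximizing the summed log-probabilities over β and α.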

Our results could be affected by unobservable characteristics that determine the incoming quality of the manuscript at the time of submission, such as whether the article had already been reviewed at other journals, presented at conferences, or informally reviewed by peers. To alleviate this concern, we use a two-pronged approach. First, we included a host of observable characteristics that are correlated with the inherent quality of the article: author, paper, and a rich set of area editor characteristics.

Second, because observed variables and controls can capture only part of the heterogeneity in the incoming quality of articles, we model the remaining unobserved heterogeneity driving performance with a latent class negative binomial regression model (e.g., [12]). Latent class regression models, which are based on finite mixture model theory [21], allow researchers to investigate the presence of such unobserved heterogeneity in regression effects. We started with a single-class model, equivalent to the aggregate random effects MLE model, and then estimated two-class and three-class models. The two-class model provided the best fit to the data on fit criteria, suggesting some degree of unobserved heterogeneity. However, the pattern of results from the two-class model was consistent with that from the mixed effects negative binomial model we used to test our hypotheses and did not affect the validity of our findings (see Note 2). We therefore retained the estimates from the mixed effects negative binomial model.
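For concreteness, a K-class latent class negative binomial regression in the spirit of [12] and [21] can be written as below. The NB2 parameterization, the class-specific coefficients β_k and dispersions α_k, and the use of BIC as the fit criterion are our assumptions, since the text does not state them. Setting K = 1 recovers the single-class model; p_K counts all class-specific coefficients and dispersions plus the K − 1 free mixing weights, and N would be the 434 articles.

```latex
\begin{aligned}
P(y_i \mid \mathbf{x}_i) &= \sum_{k=1}^{K} \pi_k \,\mathrm{NB}\!\left(y_i \mid \mu_{ik}, \alpha_k\right),
  \qquad \mu_{ik} = \exp\!\left(\mathbf{x}_i^{\top}\boldsymbol{\beta}_k\right),
  \qquad \sum_{k=1}^{K} \pi_k = 1, \\
\mathrm{NB}(y \mid \mu, \alpha) &= \frac{\Gamma(y + \alpha^{-1})}{\Gamma(\alpha^{-1})\,\Gamma(y + 1)}
  \left(\frac{\alpha^{-1}}{\alpha^{-1} + \mu}\right)^{\alpha^{-1}}
  \left(\frac{\mu}{\alpha^{-1} + \mu}\right)^{y}, \\
\mathrm{BIC}_K &= -2 \ln \hat{L}_K + p_K \ln N .
\end{aligned}
```

Under this criterion, the preferred number of classes is the K with the smallest BIC_K.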

We present the manuscript-level descriptive statistics, along with the correlation matrix for the sample, in Table 1. The mean number of citations across manuscripts in our sample was 18.11, and the mean number of revisions was 2.48. The average number of months spent with the review team was 15.89, and the average number of months with the author team was 9.51. In Table 2, we present the results from the negative binomial regression model.

Model Selection

We first estimated model I with only the control variables and then estimated model II by adding the main effects of the number of revisions and time with review team, the main effects of the two social network measures, and the interaction effects between the review characteristics and the social network measures. Model II outperformed model I on fit criteria.
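The text does not name the fit criteria used to compare model I with model II. Because model I is nested in model II, one standard option is a likelihood ratio test, sketched below. The chi-square tail probability is computed in closed form for integer degrees of freedom, using the recurrence Q(a + 1, h) = Q(a, h) + hᵃe⁻ʰ / Γ(a + 1) for the regularized upper incomplete gamma function, so no statistics library is needed. The log-likelihood values and function names are hypothetical placeholders, not the study's estimates.

```python
import math


def chi2_sf(x: float, df: int) -> float:
    """Upper-tail probability of a chi-square variable with integer df.

    With h = x / 2, start from Q(1, h) = exp(-h) for even df or
    Q(1/2, h) = erfc(sqrt(h)) for odd df, then step a up to df / 2.
    """
    if df < 1:
        raise ValueError("df must be a positive integer")
    if x <= 0:
        return 1.0
    h = x / 2.0
    if df % 2 == 0:
        a, q = 1.0, math.exp(-h)
    else:
        a, q = 0.5, math.erfc(math.sqrt(h))
    while a < df / 2.0:
        q += math.exp(a * math.log(h) - h - math.lgamma(a + 1.0))
        a += 1.0
    return q


def likelihood_ratio_test(loglik_restricted, loglik_full, extra_params):
    """LR statistic and p-value for nested models (model I inside model II)."""
    stat = 2.0 * (loglik_full - loglik_restricted)
    return stat, chi2_sf(stat, extra_params)


# Hypothetical log-likelihoods, not the values behind the reported tables.
stat, p_value = likelihood_ratio_test(-100.0, -97.0, 2)
```

The degrees of freedom equal the number of parameters model II adds to model I (here, the main effects and interaction terms).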

3 Results

We present the estimates from model II in Table 3. We find support for our central notion that the review process, as characterized by time with reviewers (b = −0.33, p < 0.10) and the number of revisions (b = −0.11, p < 0.01), plays a significant role in an article's post-publication impact. Controlling for everything else, delays in getting an article to publication appear to penalize its impact. By contrast, the author team's productivity status has a positive effect on post-publication impact (b = 0.33, p < 0.01).

Turning to the interactions between the review characteristics and the author team's status, we find that productivity status mitigates the negative effect of the number of revisions (b = 0.18, p < 0.01) and reach status mitigates the negative effect of time with reviewers (b = 0.35, p < 0.01). It seems that authors whose productivity gives them higher social status among their peers are able to use revisions to improve the quality of the paper, whereas authors with higher reach status are able to use review time to the same end. Thus, although the main effects of the review process are negative, a counterintuitive finding, authors with high social status are less affected by them than authors with low social status.
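Because the model is log-linear, an interaction term shifts the slope of a review characteristic with the moderator. For example, the effect of one more revision round on the log expected citation count is b_revisions + b_interaction × productivity status, which with the rounded estimates above (−0.11 and 0.18) becomes non-negative once productivity status exceeds about 0.11 / 0.18 ≈ 0.61. The text does not report how the status measures are scaled (for example, whether they are standardized), so this threshold is in the model's units and is illustrative only; the same arithmetic applies to reach status and time with reviewers. Function names are hypothetical.

```python
def revision_effect(b_revisions: float, b_interaction: float,
                    productivity: float) -> float:
    """Change in log expected citations from one more revision round,
    evaluated at a given productivity status."""
    return b_revisions + b_interaction * productivity


def break_even_status(b_main: float, b_interaction: float) -> float:
    """Moderator level at which the interaction offsets the main effect."""
    return -b_main / b_interaction


B_REV, B_REV_X_PROD = -0.11, 0.18  # rounded estimates reported above
threshold = break_even_status(B_REV, B_REV_X_PROD)  # about 0.61
```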

Other effects concerning the article and the area editor's influence also merit discussion. Time spent with the authors between reviews does not have a statistically significant effect on post-publication impact. Counterintuitively, articles that span multiple sub-domains tend to have lower impact (b = −0.54, p < 0.05), and articles that cite older knowledge also appear to be penalized (b = −0.04, p < 0.01). However, articles with a broader scope of knowledge, as measured by their number of references (b = 0.01, p < 0.01), and those with higher perceived importance, as measured by article length (b = 0.02, p < 0.01), gather more citations after publication. The size of the author team has a negative effect on citations (b = −0.08, p < 0.10), whereas being the first article in an issue or appearing in a special issue does not seem to have any effect.

We included a rich set of control variables at the area editor level in the hope of capturing additional heterogeneity in the article and the review process. Among these, the area editor's overall work experience (b = −0.02, p < 0.05) and number of institutions worked at (b = −0.06, p < 0.10) have a negative effect on article impact. Interestingly, articles handled by area editors at private universities fared worse in post-publication impact than those handled by area editors at public universities (b = −0.40, p < 0.01). Neither the area editor's gender nor tenure status had an effect on citation count. Among the fixed effects, we observed noticeable differences by the area editor's PhD-granting institution, the article's publication year, and the editor-in-chief's term.

Because the negative binomial regression model is log-linear, we translate the coefficient estimates into multiplicative effects on predicted citation counts. In our sample, the expected change in the log count from a one-unit increase in time with review team is −0.031; that is, every additional month with the review team decreases the expected citation count by 3.1%, holding all other variables constant. All else being equal, every additional round of revision decreases the article's post-publication impact by 11.48%. By contrast, authors with high social status in their community benefit: a one-unit increase in productivity status increases the article's post-publication impact by 25.21%.
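The conversion from a coefficient b to a percent change in the expected count is 100 × (exp(b·Δ) − 1) for a change of Δ units in the regressor, sketched below with the −0.031 figure quoted above. For small b the result is close to 100 × b, while larger changes compound multiplicatively rather than additively. Because the reported coefficients are rounded, plugging them into this formula will not necessarily reproduce every percentage in the text exactly. The function name is hypothetical.

```python
import math


def pct_change_per_unit(b: float, delta: float = 1.0) -> float:
    """Percent change in the expected count for a `delta` change in a
    regressor of a log-linear model: 100 * (exp(b * delta) - 1)."""
    return 100.0 * math.expm1(b * delta)


# One more month with the review team: about -3.05, i.e., roughly -3.1%.
month_effect = pct_change_per_unit(-0.031)
# Twelve more months: about -31, not 12 * -3.1 = -37.2.
year_effect = pct_change_per_unit(-0.031, delta=12.0)
```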

Among the other variables, adding an author to the author team decreased research impact by 10.5%, citing older work decreased it by 7.9%, and broadening the scope of the research, as measured by the number of references in the published article, increased it by 0.6%. Finally, perceived importance, as measured by the length of the published article, increased research impact by 2.06%. Given that the modal citation count for published research is zero, these results help quantify the economic significance of the review process for an article's subsequent impact.

4 Discussion

Knowledge development and dissemination are important aspects of academic research; consequently, scholars are rewarded for their ability to publish path-breaking research in top academic journals. Although much is known about the role of author characteristics and article quality in research impact, there is very little understanding of the role of the review process in shaping future research. Our emphasis in this article has been on understanding the organizational practice of peer review as a determinant of research impact. Because the stakeholders in the peer review process vary across research articles, we are able to investigate how heterogeneity in the implementation of organizational practices across articles can drive post-publication impact.

Our study has several implications. At the broadest level, our hypotheses and findings suggest that, all else being equal, the more revisions an article needs to reach final acceptance, the lower its impact. Further, time with reviewers has a negative, though only marginally significant, effect on impact. Thus, more iterations and longer delays can penalize an article's impact after publication.

Yet reputed authors, with their extensive experience, can reduce the downside of a protracted review process. Specifically, we find that the authors' productivity status mitigates the ill effects of the number of revisions, whereas reach status mitigates the ill effects of time with reviewers. It seems that reputed authors with high social status are able to use additional revisions and time with reviewers to their advantage and turn the paper into an impactful one. An alternative explanation is that these authors have mastered writing impactful papers and the focal article builds on their own prior research, so that citations to the focal article (authored by a highly reputed scholar) are likely to appear alongside citations to the author's earlier publications.

Our findings in turn provide insights into the institution of peer review, though we caution that these questions must be raised with an understanding of why it was introduced in the first place [16]. In a period when printing costs were prohibitive and journals were few, the peer review process offered the best means for authors to disseminate their work, gain feedback, and be recognized, while protecting intellectual property rights and granting recognition to scholars. In such a context, the time between submission and acceptance mattered less, and most institutions focused solely on the number of publications because dissemination was difficult and unwieldy. Yet the context has clearly changed radically. In the modern era of rapid information dissemination, made possible by ubiquitous technological tools, performance metrics for scholarly articles focus not only on publication but also on impact after publication. It therefore becomes critical for knowledge products to reach the market and achieve recognition more quickly. Our results shed light on how the institution of peer review affects this relatively new but important performance metric.

The peer review process appears to share traits with models of open innovation, such as the open source product development model, which rely on quick, frequent feedback from users and the continuous availability of works in progress (e.g., [20]). It may therefore make sense to implement quick, multiple rounds of review that let authors retain intellectual property rights while getting their work to market faster. Several leading journals have already moved in this direction in recent years, putting processes in place to achieve faster turnaround times through electronic submission and careful monitoring of both author and reviewer times.

Our findings raise an important overall question for journal editors and authors. On the one hand, the current form of peer review lends credibility to publications and improves research quality because it gives the manuscript the opportunity to benefit from specialist gatekeepers (reviewers) handpicked by editors to uphold the standards of the journal. On the other hand, our results show that a review process with too many iterations has a significant downside for the article's performance, especially when the author team needs more time to resolve differences.

From a theoretical perspective, scholarly articles published in academic journals are an important knowledge good that advances science and contributes to the development of society; thus, it is critical that we understand the incentives that operate during the review process, because that process helps determine the success of products in this domain. We have taken an important first step by studying the key characteristics of the review process and their influence on product success and, in so doing, have answered a few questions but raised many more.

From a practical perspective, our findings provide critical insights for various stakeholders, including authors, editors, and members of tenure committees at research universities. For authors, the news is clear: their reputation enables them to reduce the negative effects of the review process on research impact, so early success may help breed future success. Doing novel research that draws on broad domains seems to help, but large author teams and reliance on very old research hurt. Editors might therefore be mindful of the authors' seniority when they shepherd articles through the review process: if additional rounds of revision are required for an article by authors with either low productivity status or low reputation, editors should realize that these rounds reduce the article's potential impact. Finally, tenure and promotion committees at research universities can use these findings as an important tool for assessing the impact of their faculty's research.

4.1 Limitations

We recognize several limitations of our study. First, the data are drawn exclusively from Marketing Science, one top-tier journal in the social sciences, so our findings are somewhat narrow in terms of their generalizability. However, considering the reputation of Marketing Science in terms of citation impact, our findings likely pertain to other journals with strong track records for publishing high quality, rigorous academic work.

Second, we use average review time between reviews as a measure, even though reviewers likely do not spend the entire time they hold the manuscript reviewing it. Thus, we observe only the time the review team held the article and cannot make inferences about the effort of individual reviewers or how it is distributed across them for each manuscript. The practical limitations of data availability prevent us from obtaining a more fine-grained measure. Moreover, this measure reflects not the mean time spent by the reviewers but the time needed by the slowest reviewer. To the extent that review teams are assigned randomly, individual reviewer characteristics should not matter; however, we had no information on individual reviewers and thus cannot control for any reviewer-specific characteristics.

We also explicitly acknowledge concerns about endogeneity arising from omitted variable bias. This could be an issue because, as researchers, we do not observe the quality or “refinement” of a manuscript at the time of its submission to the journal. This unobserved quality could affect both the outcome variable, post-publication citations, and the primary theoretical variable of interest, time in the review process. Given the confidentiality of the review process, we could not access data on desk rejection rates or additional information from the authors, such as whether the manuscript had been submitted elsewhere, which could have helped gauge its quality. To mitigate the impact of this unobserved variable, we included a rich set of observable characteristics at the author, paper, and area editor levels, hoping to capture as much variation as possible. Furthermore, to provide some evidence that this is not a major concern, we estimated a model that explicitly accounts for unobserved heterogeneity using a latent class regression framework. The results were consistent with the proposed model but cannot provide conclusive evidence, because the latent class model assumes that the unobserved variables are uncorrelated with the explanatory variables, an assumption that may be violated in reality. Future research could account for submission-time quality by asking published authors for additional information on the manuscript's status.

Finally, we acknowledge that our characterization of the review process may not be a highly accurate representation because of the idiosyncratic nature of each article’s review journey. Some of these issues may be better captured with access to data on reviewer notes and author responses. However, given the data limitations, we capture the critical elements of the process through our measures (i.e., number of rounds, author and review time) and control for a variety of article and author traits in examining the role of the review process on an article’s post-publication impact, lending confidence to our findings.

4.2 Further Research

The characteristics of the peer review process influence the potential impact of an article and thus offer opportunities for further research in this domain. First, the role and characteristics of individual reviewers could affect the time manuscripts spend with different review teams. Second, we need to clarify the role of editors, their guidelines, and their advice to the review team and how they affect the process. Third, we call for research that expands the scope to include more journals.

An interesting theme that emerges from our analysis is the role of the area editor in the review process. For example, our analysis shows that articles handled by area editors employed at private universities have lower post-publication impact than articles handled by area editors employed at public universities. There is no obvious reason why this should happen, unless papers are systematically assigned to specific area editors. Future research could explore the role of area editors in shaping the review process in more depth; one specific theme could be the social status of the area editor.

Articles published in academic journals are an important public good that benefits society overall, and understanding the mechanics behind the performance of these products is critical. We believe that we have taken an important step by investigating the role of the peer review process in determining the impact of this critical public good, and we hope to have both addressed and raised at least some interesting research questions in this domain.

Notes

1. Data were available in days, but we used months to keep the coefficients on a readable scale.

2. The results from this robustness analysis are available upon request.

References

[1] Azar OH (2004) Rejections and the importance of first response times. Int J Soc Econ 31(3):259–74

[2] Bakanic V, McPhail C, Simon RJ (1987) The manuscript review and decision-making process. Am Sociol Rev 52(5):631–42

[3] Baumgartner H, Pieters R (2003) The structural influence of marketing journals: a citation analysis of the discipline and its subareas over time. J Mark 67(April):123–39

[4] Benda WGG, Engels TCE (2011) The predictive validity of peer review: a selective review of the judgmental forecasting qualities of peers, and implications for innovation in science. Int J Forecast 27(1):166–82

[5] Bergh DD, Perry J, Hanke R (2006) Some predictors of SMJ article impact. Strateg Manag J 27(1):81–100

[6] Bessen J, Maskin E (2009) Sequential innovation, patents, and imitation. RAND J Econ 40(4):611–35

[7] Beyer JM, Chanove RG, Fox WB (1995) The review process and the fates of manuscripts submitted to AMJ. Acad Manage J 38(5):1219–60

[8] Black N, van Rooyen S, Godlee F, Smith R, Evans S (1998) What makes a good reviewer and a good review for a general medical journal? JAMA 280(3):231–33

[9] Carpenter MA (2009) Editor’s comments: mentoring colleagues in the craft and spirit of peer review. Acad Manage Rev 34(2):191–95

[10] Cole S, Simon G, Cole JR (1988) Do journal rejection rates index consensus? Am Sociol Rev 53(1):152–56

[11] Cooper RG (2008) Perspective: the stage gate® idea to launch process—update, what’s new, and NexGen systems. J Prod Innov Manag 25(3):213–32

[12] DeSarbo WS, Jedidi K, Sinha I (2001) Customer value analysis in a heterogeneous market. Strateg Manag J 22(9):845–57

[13] Grewal R, Lilien GL, Mallapragada G (2006) Location, location, location: how network embeddedness affects project success in open source systems. Manag Sci 52(7):1043–56

[14] Hinds PJ, Bailey DE (2003) Out of sight, out of sync: understanding conflict in distributed teams. Organ Sci 615–32

[15] Horrobin DF (1990) The philosophical basis of peer review and the suppression of innovation. JAMA 263(10):1438

[16] Kassirer JP, Campion EM (1994) Peer review: crude and understudied, but indispensable. JAMA 272(2):96–97

[17] Kronick DA (1990) Peer review in 18th-century scientific journalism. JAMA 263(10):1321

[18] Lehmann DR (2005) Journal evolution and the development of marketing. J Public Policy Mark 24(1):137–42

[19] Lewis MW, Welsh AM, Dehler GE, Green SG (2002) Product development tensions: exploring contrasting styles of project management. Acad Manage J 45(3):546–64

[20] Mallapragada G, Grewal R, Lilien G (2012) User-generated open source products: founder’s social capital and time to product release. Mark Sci 31(3):474–92

[21] McLachlan GJ, Peel D (2000) Finite mixture models. Wiley, New York

[22] Medoff MH (2003) Collaboration and the quality of economics research. Labour Econ 10(5):597–608

[23] Merton RK (1957) Priorities in scientific discovery: a chapter in the sociology of science. Am Sociol Rev 22(6):635–59

[24] Merton RK (1961) Singletons and multiples in scientific discovery: a chapter in the sociology of science. Proc Am Philos Soc 105(5):470–86

[25] van Rooyen S, Godlee F, Evans S, Smith R, Black N (1998) Effect of blinding and unmasking on the quality of peer review: a randomized trial. JAMA 280(3):234–37

[26] Schoonhoven CB, Eisenhardt KM, Lyman K (1990) Speeding products to market: waiting time to first product introduction in new firms. Adm Sci Q 35(1)

[27] Simonton DK (2002) Great psychologists and their times: scientific insights into psychology’s history. American Psychological Association, Washington, DC

[28] Smith PG (1999) From experience: reaping benefit from speed to market. J Prod Innov Manag 16(3):222–30

[29] Stremersch S, Verhoef PC (2005) Globalization of authorship in the marketing discipline: does it help or hinder the field? Mark Sci 24(4):585–94

[30] Stremersch S, Verniers I, Verhoef PC (2007) The quest for citations: drivers of article impact. J Mark 71(3):171–93

[31] Swaminathan V, Moorman C (2009) Marketing alliances, firm networks, and firm value creation. J Mark 73(5):52–69

[32] Tellis GJ, Chandy RK, Ackerman DS (1999) In search of diversity: the record of major marketing journals. J Mark Res 36(February):120–31


About this article

Cite this article

Mallapragada, G., Lahiri, N. & Nerkar, A. Peer Review and Research Impact. Cust. Need. and Solut. 3, 29–41 (2016). https://doi.org/10.1007/s40547-015-0060-1


DOI: https://doi.org/10.1007/s40547-015-0060-1