Abstract

Purpose of Review

Several aspects of donor selection for heart transplantation are particularly challenging. Information about the donor is often limited and relayed through a review of selected chart excerpts and tests. The donor heart must work immediately, unlike in kidney transplantation, where delayed graft function is common and not life-threatening. This review distills recent findings in the literature into practical guidance for transplant clinicians advising teams on which donor factors matter in individual situations.

Recent Findings

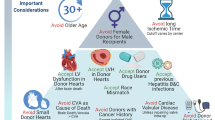

Left ventricular dysfunction has many causes and, particularly in younger donors, may resolve with time; such donors can be used with excellent results. Donor age remains a critically important risk factor for post-transplant outcome, and its effect is additive with other factors such as left ventricular hypertrophy and anticipated ischemic time. Donor size is another important factor, and recent work suggests that calculating predicted heart mass is superior to simple weight, height, and gender when deciding whether a donor is appropriately sized. Recent work also suggests that donors with non-critical coronary lesions (50% or less) may be used with similar outcomes, including a similar incidence of vasculopathy progression. Diabetes appears to be a manageable comorbidity, particularly with careful screening. With the increase in drug-related deaths, a recent study of more than 23,000 accepted donors found no difference in long-term survival even when multiple drugs were detected in the donor toxicology screen, which may allow increased use of such donors. Finally, we review donation after circulatory determination of death (DCD). This is currently confined to certain specialized centers but is expected to become more common worldwide and to increase the number of transplants over time.

Summary

Current evidence from large studies and from smaller but important ones is reviewed and summarized so that the busy transplant clinician can stay up to date on this field. We hope this review helps teams maximize their use of appropriate donors.



Introduction

Heart transplantation has become the preferred therapy for eligible patients with end-stage heart failure. The supply of suitable donors has always been insufficient to meet the expanding demand. Heart allografts must function reasonably well in the immediate post-operative period. The donor heart can be evaluated in several ways, including echocardiography and coronary angiography, but these assessments are all subject to interpretation and can be subjective, whereas in kidney transplantation laboratory values and, in some cases, biopsy results are available to guide decisions. The net result is that relatively few potential heart donors (about a third) are actually used for transplantation, while the waiting list grows ever longer. The purpose of this manuscript is to update the reader on recent criteria for donor heart selection. We have divided the paper into distinct sections.

Graft Abnormalities

Left Ventricular Dysfunction

Decreased left ventricular ejection fraction (LVEF) on echocardiographic evaluation of the donor heart is one of the main reasons for not using the graft (the normal range for LVEF is 55 to 70%) [1, 2]. Acute severe left ventricular dysfunction associated with acute brain injury is reported in more than 40% of patients [3]. The etiology of ventricular dysfunction caused by brain death is not well understood but is likely similar to that of Takotsubo cardiomyopathy, an acute decrease in LVEF in response to extreme emotional stress [4]. It has been proposed, and is widely accepted, that this process results from an abrupt increase in endogenous catecholamines, causing microvascular coronary vasoconstriction, myocardial stunning, and a characteristic histopathological lesion, contraction band necrosis. LVEF may improve with supportive care of the donor [5, 6•], and recovery may take hours to days with appropriate donor management. Madan et al. compared donors with an initially normal ejection fraction (EF) with 427 donors whose LVEF was initially < 40% and subsequently improved to > 50%. They found no difference in mortality, primary graft failure, or cardiac allograft vasculopathy (CAV) between the improved hearts and those with LVEF > 50% [7]. When LVEF is only marginally reduced, stress echocardiography protocols have been reported to predict improvement in donor heart function after transplantation [3, 8, 9]. In potential donors with suspected transient left ventricular dysfunction, instituting donor management protocols, waiting hours to days, and repeating the echocardiogram may reveal better left ventricular (LV) function and allow the heart to be used as a graft.
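The LVEF cut points quoted above can be expressed as a simple classification of serial donor echocardiograms. The Python sketch below is illustrative only and assumes the thresholds from this section (normal LVEF from 55%; the Madan et al. [7] group of donors with initial LVEF < 40% that improved to > 50%). The function name and category labels are hypothetical, and this is not a validated decision rule.

```python
from typing import Sequence

# Thresholds quoted in the text (assumed here; not a validated decision rule).
NORMAL_LVEF_LOW = 55.0     # lower bound of the normal LVEF range, %
SEVERE_LVEF_MAX = 40.0     # initial dysfunction group: LVEF < 40%
RECOVERY_LVEF_MIN = 50.0   # recovery: LVEF > 50%


def classify_lvef_trajectory(serial_lvef: Sequence[float]) -> str:
    """Classify serial donor LVEF readings (%), ordered oldest to newest.

    Hypothetical categories:
      "normal"                 first reading already >= 55%
      "recovered"              first reading < 40% and latest reading > 50%
      "persistent_dysfunction" latest reading still < 40%
      "indeterminate"          anything else; continued donor management
                               and a repeat echocardiogram may help
    """
    if not serial_lvef:
        raise ValueError("at least one LVEF measurement is required")
    first, latest = serial_lvef[0], serial_lvef[-1]
    if first >= NORMAL_LVEF_LOW:
        return "normal"
    if first < SEVERE_LVEF_MAX and latest > RECOVERY_LVEF_MIN:
        return "recovered"
    if latest < SEVERE_LVEF_MAX:
        return "persistent_dysfunction"
    return "indeterminate"


# Example: initial LVEF 35%, improving to 58% after donor management.
print(classify_lvef_trajectory([35, 42, 58]))  # recovered
```

Classifying by the first and latest readings mirrors the "initially reduced, later improved" framing of the studies above; an actual protocol would also weigh the timing of the readings and any stress echocardiographic findings [3, 8, 9].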

Donor Characteristics

Age

Donor age is a significant factor when assessing the suitability of a heart for transplantation (HT). Data from the ISHLT Registry demonstrated worse outcomes at 1, 5, and 10 years after HT with increasing donor age, likely because older donors have a higher probability of having acquired comorbidities such as diabetes, hypertension, hyperlipidemia, coronary artery disease (CAD), and heart failure [10]. In addition, the combination of advanced donor age and a donor heart ischemic time greater than 4 h was a strong predictor of mortality.

Reluctance to accept older donors, driven by fear of poor outcomes, has been documented in Europe. A donor score index derived from Eurotransplant data showed that, compared with a donor younger than 45 years, a donor aged 45–54 years carried a twofold risk of nonacceptance, rising to a 4.7-fold risk for a donor aged 55–59 years [11]. With regard to outcomes, the RADIAL score data showed that donor age over 30 years predicted primary graft dysfunction (PGD), and donor age over 40 years is a risk factor for poor recovery from PGD and higher in-hospital mortality [12]. In summary, increasing donor age, especially in combination with increasing ischemic time, predicts higher mortality after cardiac transplantation.
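The age bands quoted from the Eurotransplant donor score [11] amount to a small lookup table. The Python sketch below encodes only the three bands given in the text; the names are hypothetical, and returning None for donors aged 60 years or older reflects the absence of a quoted value, not a clinical judgment.

```python
from typing import Optional

# Relative risk of donor nonacceptance by donor age band, as quoted from the
# Eurotransplant donor score analysis [11]. Donors < 45 years are the reference.
# Bands are half-open [low, high); ages >= 60 are not covered by the quoted data.
NONACCEPTANCE_RR_BY_AGE = [
    (0, 45, 1.0),   # reference group
    (45, 55, 2.0),  # 45-54 years: about twofold
    (55, 60, 4.7),  # 55-59 years: 4.7-fold
]


def nonacceptance_relative_risk(donor_age: int) -> Optional[float]:
    """Return the quoted relative risk of nonacceptance, or None if not reported."""
    if donor_age < 0:
        raise ValueError("donor age must be non-negative")
    for low, high, rr in NONACCEPTANCE_RR_BY_AGE:
        if low <= donor_age < high:
            return rr
    return None


for age in (40, 50, 57, 62):
    print(age, nonacceptance_relative_risk(age))
```

These figures describe how often older hearts were declined, not how recipients fared; the outcome data from the RADIAL analysis [12] are a separate question.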

Ischemic Time

Ischemic time has long been recognized as one of the most important determinants of post-transplant survival. Its effect is most pronounced on early survival, and it interacts with donor age [13]. Recent changes to the US transplant allocation scheme emphasize broader sharing of organs, which has led to longer ischemic times for many patients. Whether this will significantly change post-transplant mortality in the USA is unclear [14,15,16,17,18,19,20]. An exciting development is the recent FDA approval of the TransMedics Organ Care System for transporting donor hearts when the anticipated ischemic time would otherwise be prohibitive. The specific wording of the approval is “The OCS Heart System is indicated for the preservation of brain death (DBD) donor hearts deemed unsuitable for procurement and transplantation at initial evaluation due to limitations of prolonged cold static cardioplegic preservation (e.g., > 4 h of cross-clamp time).” The system adds cost and staffing demands, but it offers the possibility of increased organ utilization in the future [21].

Impact of Donor Size and Gender

Traditionally, size matching has been based on attempts to match weight and height between the donor and the recipient. The ISHLT Guidelines (from 2010) recommend limiting donor/recipient weight differences to < 30%, and to < 20% for a female donor and a male recipient [22]. However, recent data suggest that weight is not an accurate marker of cardiac size, especially in obese recipients, and that donor-recipient weight differences may not affect outcomes [23].
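The weight rule quoted from the 2010 ISHLT Guidelines [22] can be written as a two-branch check. The Python sketch below is illustrative: the function names are hypothetical, and using the recipient's weight as the reference for the percentage difference is an assumption, because the wording quoted here does not state which weight is the denominator.

```python
def weight_difference_pct(donor_kg: float, recipient_kg: float) -> float:
    """Absolute donor-recipient weight difference as a percentage.

    Assumption: the recipient's weight is used as the reference (denominator);
    the guideline wording quoted in the text does not state the reference.
    """
    if donor_kg <= 0 or recipient_kg <= 0:
        raise ValueError("weights must be positive")
    return abs(donor_kg - recipient_kg) * 100.0 / recipient_kg


def within_ishlt_weight_limit(donor_kg: float, recipient_kg: float,
                              donor_female: bool, recipient_male: bool) -> bool:
    """Apply the quoted limits: < 30%, or < 20% for a female donor to a male recipient."""
    limit = 20.0 if (donor_female and recipient_male) else 30.0
    return weight_difference_pct(donor_kg, recipient_kg) < limit


# A 60 kg donor for an 80 kg recipient is a 25% difference:
print(within_ishlt_weight_limit(60, 80, donor_female=False, recipient_male=True))  # True
print(within_ishlt_weight_limit(60, 80, donor_female=True, recipient_male=True))   # False
```

The same 25% difference passes or fails depending only on donor sex, which is exactly the behavior that the predicted heart mass work discussed in this section calls into question.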

“Gender mismatch” is thought by many to be a surrogate for size mismatch and may in fact lead to lower survival than in gender-matched recipients. An analysis of 60,584 patients in the UNOS registry studying the effect of donor-recipient sex mismatch on heart transplant outcomes showed that male recipients of female donor hearts had a 10% higher mortality rate than male recipients of male donor hearts [24]. In 67,855 patients, Kaczmarek et al. showed that the combination of a male recipient and a female donor carried a higher risk of early mortality [25]. One-year survival was highest in male recipients of male donor hearts (84%) and lowest in male recipients of female donor hearts (79%). Survival beyond the first year was comparable, suggesting that gender mismatch mainly influenced short-term outcomes.

However, Reed and colleagues used UNOS registry data to examine size matching with predicted heart mass (pHM), derived from equations incorporating body size (height and weight), age, and gender. They defined the pHM mismatch as (pHM_recipient − pHM_donor) / pHM_recipient × 100 and reported that a suitably sized heart from a female donor yields outcomes similar to those of a male donor heart [26]. Bergenfeldt et al. found no overall difference in mortality between appropriately and inappropriately weight-matched donor-recipient pairs (≤ 30% vs. > 30% weight difference) [27]; inappropriate weight matching was a risk factor for short- and long-term mortality in non-obese recipients, but not in obese recipients. Kransdorf and colleagues examined pHM in a more contemporary UNOS cohort and divided matches into septiles [28••]. They showed that the pHM ratio is a robust predictor of mortality, and its use has since become widespread.
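The pHM mismatch defined above is straightforward to compute once each person's pHM is known. The Python sketch below is illustrative only. The pHM coefficients are those of the MESA-derived left and right ventricular mass equations commonly used for pHM; they are not given in this review, so they are an assumption to be verified against Reed et al. [26] and Kransdorf et al. [28] before any real use, as are the units (years, metres, kilograms) and the donor-to-recipient orientation of the ratio. All function names are hypothetical.

```python
# Predicted heart mass (pHM) sketch. ASSUMPTION: the coefficients below are the
# MESA-derived LV and RV mass equations commonly used for pHM; they are not
# stated in this review and must be verified against Reed et al. [26] and
# Kransdorf et al. [28]. Units assumed: age in years, height in metres, weight in kg.


def predicted_heart_mass(age_years: float, height_m: float,
                         weight_kg: float, female: bool) -> float:
    """Predicted heart mass (g) = predicted LV mass + predicted RV mass."""
    if age_years <= 0 or height_m <= 0 or weight_kg <= 0:
        raise ValueError("age, height and weight must be positive")
    a_lv = 6.82 if female else 8.25
    a_rv = 10.59 if female else 11.25
    lv_mass = a_lv * height_m ** 0.54 * weight_kg ** 0.61
    rv_mass = a_rv * age_years ** -0.32 * height_m ** 1.135 * weight_kg ** 0.315
    return lv_mass + rv_mass


def phm_mismatch_pct(phm_recipient: float, phm_donor: float) -> float:
    """Mismatch as defined in the text: (recipient - donor) / recipient x 100."""
    return (phm_recipient - phm_donor) / phm_recipient * 100.0


def phm_ratio(phm_donor: float, phm_recipient: float) -> float:
    """Donor-to-recipient pHM ratio (orientation assumed for this sketch)."""
    return phm_donor / phm_recipient


donor = predicted_heart_mass(age_years=30, height_m=1.65, weight_kg=60, female=True)
recipient = predicted_heart_mass(age_years=55, height_m=1.80, weight_kg=85, female=False)
print(f"donor pHM {donor:.0f} g, recipient pHM {recipient:.0f} g")
print(f"mismatch {phm_mismatch_pct(recipient, donor):.1f}%, ratio {phm_ratio(donor, recipient):.2f}")
```

A positive mismatch means the donor heart is predicted to be smaller than the recipient's; because age and sex enter the equations directly, sex is handled as one input to size rather than as a separate matching rule.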

In summary, gender mismatch is a misnomer. Gender is not the determinant of outcome in gender mismatch heart transplantation. Cardiac mass matching eliminates the concern.

Hypertension and Left Ventricular Hypertrophy

Hypertension is a risk factor for comorbidities such as coronary artery disease and left ventricular hypertrophy (LVH). Donor LVH is associated with an increased risk of cardiac allograft vasculopathy (CAV) and death [29]. Donors with LVH are more often older and male and more often have a history of hypertension [29,30,31]. Some studies have noted that LVH is no longer present 12 to 24 months after transplantation [30] and that LVH alone is not a risk factor for mortality, although the risk of death is increased with LVH in donors older than 55 years and when the ischemic time exceeds 4 h.
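The LVH findings above reduce to two situations in which donor LVH warrants extra caution. The Python sketch below is a hypothetical helper, not a validated score. Because the text does not say whether age over 55 years and ischemic time over 4 h matter separately or only together, the sketch assumes they are reported separately.

```python
from typing import List

# Thresholds quoted in the text. Whether they apply separately or only in
# combination is not specified, so this sketch reports each one separately.
LVH_AGE_THRESHOLD_YEARS = 55
LVH_ISCHEMIC_TIME_THRESHOLD_H = 4.0


def lvh_caution_flags(donor_has_lvh: bool, donor_age_years: float,
                      anticipated_ischemic_time_h: float) -> List[str]:
    """List LVH-related cautions (hypothetical helper, not a validated score)."""
    flags: List[str] = []
    if not donor_has_lvh:
        return flags
    # LVH alone is not reported as a mortality risk factor, so it adds no flag by itself.
    if donor_age_years > LVH_AGE_THRESHOLD_YEARS:
        flags.append("LVH in a donor older than 55 years")
    if anticipated_ischemic_time_h > LVH_ISCHEMIC_TIME_THRESHOLD_H:
        flags.append("LVH with anticipated ischemic time over 4 h")
    return flags


print(lvh_caution_flags(True, 58, 4.5))
```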

Coronary Artery Disease in the Donor

Earlier studies suggested that coronary artery disease in the donor increases the risk of disease progression within the first year after transplant, particularly if the donor is older than 50 years [29]. Donor hearts with mild or moderate CAD and preserved ejection fraction can be transplanted, but recipients may require surgical revascularization or percutaneous intervention. Coronary angiography is the most accurate way to assess donor CAD and is recommended for donors with CAD risk factors (i.e., hypertension, diabetes, age greater than 45 years, smoking, and a history of cocaine use) [22]. More recently, Lechiancole and colleagues reported a series of 289 heart transplants from a single European center. Fifty-seven of the donors had moderate CAD (less than 50% stenosis in one or more coronary vessels). There was no difference in 10-year survival or in the development of ISHLT CAV grade 2 or higher [32•]. This provocative work suggests that the risk of using donors with established CAD may not be as high as previously thought.
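The angiography recommendation above is a checklist of donor risk factors. The Python sketch below lists which of the named factors are present; the data structure and function names are hypothetical, and any single positive factor is treated as favoring angiography, which is an interpretation of "donors with risk factors for CAD" rather than a quoted rule.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class DonorCadRisk:
    """Donor CAD risk factors named in the text (hypothetical structure)."""
    age_years: int
    hypertension: bool = False
    diabetes: bool = False
    smoking: bool = False
    cocaine_history: bool = False


def angiography_indications(donor: DonorCadRisk) -> List[str]:
    """Return the named risk factors present; any entry favors donor angiography."""
    reasons: List[str] = []
    if donor.hypertension:
        reasons.append("hypertension")
    if donor.diabetes:
        reasons.append("diabetes")
    if donor.age_years > 45:
        reasons.append("age greater than 45 years")
    if donor.smoking:
        reasons.append("smoking")
    if donor.cocaine_history:
        reasons.append("cocaine history")
    return reasons


donor = DonorCadRisk(age_years=50, smoking=True)
print(angiography_indications(donor))  # ['age greater than 45 years', 'smoking']
```

Returning the list of reasons, rather than a yes/no flag, keeps the rationale visible when the donor's information is reviewed by the team.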

Diabetes

Diabetes mellitus is a well-known risk factor for the development of CAD and is therefore carefully considered when evaluating a donor. Survival is not different with selected diabetic donors, although evaluation including coronary angiography is common [33]. Recent data suggest that CAV may be more common with a diabetic donor [15, 34].

Malignancy

Donors with a history of malignancy present a dilemma for transplant teams, because the evidence of transmission is based on case reports and small series. Ultimately, as with most transplant-related decisions, risk and benefit must be weighed carefully [35, 36]. A recent report from the UNOS Disease Transmission Advisory Committee listed 70 cases of possible donor-derived malignancy transmission over the period 2008–2017 [37].

Toxic Substances and the Heart Donor

A variety of substances may lead to cardiac injury, including tobacco, alcohol, cocaine, amphetamines, and opiates. The United Network for Organ Sharing (UNOS) registry specifically captures the use of cocaine, alcohol, and unspecified illicit drugs for each donor, which has allowed analyses of the survival associated with such donors. Jayarajan and colleagues analyzed the UNOS registry from 2000–2010 and identified 2274 donors with cocaine use from a pool of 19,636 total donors [38]. Heart transplant recipient survival was similar for donors with prior, current, or no use of cocaine.

Vieira et al. reported an analysis of the International Society for Heart and Lung Transplantation (ISHLT) Thoracic Transplant database [39], a worldwide registry. Of 24,430 adult de novo transplants performed between 2000 and 2013, 3246 (13.3%) involved a donor with a history of cocaine use, of whom 1477 (45.5%) were classified as current users. The authors reported no decrease in survival with these donors and no increase in allograft rejection or cardiac allograft vasculopathy.

Amphetamines, particularly methamphetamine, are well known to have cardiotoxic effects [40,41,42,43]. Because all donors are screened with echocardiography, the question is whether an apparently normal heart from a donor with a history of amphetamine use can be used, and this has remained controversial. Until recently, published information was limited to case reports and series published in abstract form.

Baran and colleagues analyzed 23,748 donors from the UNOS registry between 2007 and 2017, specifically examining the donor toxicology data field and classifying the use of more than 20 drugs [44••]. They also examined the UNOS drug-use fields that other groups had analyzed previously, and they analyzed combinations of drugs to assess the effect of multiple-drug exposure on recipient outcomes. They concluded that no single drug was associated with worse survival, and even combinations of multiple drugs were associated with survival similar to that of recipients of drug-free donor hearts over 10 years post-transplant.

Alcohol is commonly found in donors, and acute intoxication is less of an issue than chronic use. The 2010 ISHLT Guidelines for the Care of Heart Transplant Recipients indicate “In light of current information, the use of hearts from donors with a history of ‘alcohol abuse’ remains uncertain, but it should probably be considered unwise” [22]. However, a 2015 report from the UNOS registry examined nearly 15,000 transplants from 2005–2012 (15.2% from donors with heavy alcohol use) and found no difference in survival between recipients of hearts from heavy-drinking donors and other recipients [45]. The recent report using donor toxicology data likewise concluded that donor alcohol use was not associated with increased mortality [44••].

Tobacco use is relatively common, but there are no guidelines regarding tobacco use in heart transplant donors [22, 46]. Recently, Hussain and colleagues reported an analysis of 26,390 heart transplants from 2005–2016 in the ISHLT Thoracic registry, specifically focusing on donor tobacco use [47]. The authors also used propensity matching to account for other differences between donors with and without a smoking history. Recipients of hearts from donors with a smoking history had lower 5-year survival than those whose donors had not smoked (73.7% vs. 78.1%; p < 0.001). At 5 years, graft failure was more common (27.1% vs. 22.5%; p < 0.001), and the incidences of CAV (35.5% vs. 28.6%; p < 0.001) and acute rejection (44.9% vs. 41.8%; p = 0.002) were higher in the donor smoking cohort than in the non-smoking cohort [47]. The propensity-matched cohort consisted of 4572 transplant recipients. In this group, survival and graft failure were worse with donors who smoked, but CAV and rejection did not differ. The limitations are that smoking is not quantified in the registry and that the authors did not report whether donors underwent coronary angiography before donation.

Recently, Loupy and colleagues reported a novel analysis of 1301 heart transplant patients from three European centers and one large US center [48]. Using latent class mixed-model analysis, the authors derived and validated several trajectory groups for the development of CAV. Importantly, donor tobacco use was strongly associated with the trajectories showing more rapid development of CAV. Taken together, these recent reports suggest that a donor history of heavy chronic smoking should prompt very careful examination of the potential donor.

Expansion of the Donor Pool by Utilizing Hepatitis C-infected Donors

With the advent of direct-acting antiviral therapies, the use of hepatitis C-infected donors is changing from a rarity to a commonplace event. Protocols vary, but many centers treat recipients after infection has been documented and the hepatitis C genotype determined [49,50,51,52,53,54]. The main issues with this approach have been the cost of direct-acting antiviral therapy and concerns about drug interactions. Given the positive results so far, hepatitis C-infected hearts are likely to be used routinely over time, although surveillance for long-term issues remains important [55, 56].

Donation Following Circulatory Determination of Death: Novel Donor Pool

Following the initial report in 2014 of successful heart transplantation from donors after circulatory determination of death (DCD donors) using machine perfusion to both resuscitate and transport the donor heart [57], this form of heart transplantation has grown rapidly. Successful programs have now been established in multiple centers across Europe and North America.

Compared with the donor after brain death (DBD), the DCD donor presents some unique challenges. The potential DCD donor is still alive at the time of referral. Under the principle of “primum non nocere,” this limits which ante-mortem investigations and interventions are permissible. While simple non-invasive tests such as transthoracic echocardiography are allowed in most jurisdictions, more invasive ante-mortem investigations such as coronary angiography, and procedures such as the placement of perfusion cannulas for normothermic regional perfusion, may not be.

Following the withdrawal of life support (WLS), it is uncertain whether the donor will progress to death within a timeframe that allows recovery of a viable donor heart. In contrast to preclinical studies of DCD donation, in which progression to circulatory arrest after WLS is typically rapid [58, 59], clinical experience has shown that progression to circulatory arrest after WLS in the human DCD donor is far less predictable [60]. In addition, there is ongoing uncertainty and debate about when myocardial ischemia begins after withdrawal of life support. While some donors progress rapidly, others maintain a period of hemodynamic stability or pass through a hypertensive phase (similar to the Cushing's reflex seen during brain death) before progressing to circulatory arrest.

Several prediction scores have been developed to determine in advance whether a DCD donor will progress to circulatory arrest after WLS within a timeframe that permits recovery of viable organs for transplantation [61]. At present, they have limited utility for heart donation because they predict the time from WLS to circulatory arrest rather than the functional warm ischemic time (FWIT), which begins with the critical hemodynamic change that marks the onset of myocardial ischemia. Currently, variables affecting the donor heart's ischemic time must be considered case by case before deciding whether to send a retrieval team to the donor hospital.

Clinical programs have used two hemodynamic measures to mark the onset of myocardial ischemia: systolic blood pressure and systemic arterial saturation. Based on its preclinical studies, the Sydney program has used a systolic BP < 90 mmHg to mark the onset of myocardial ischemia after WLS [58]. The Papworth program and most others have used a systolic BP < 50 mmHg, which usually occurs within 1–2 min of the systolic BP falling below 90 mmHg [62]. Some US centers have used an arterial saturation < 70% to mark the onset of ischemia; however, the accuracy of this measurement after WLS has been questioned [60]. The subsequent progression to circulatory arrest, the mandated stand-off period, transfer to the operating room, and retrieval together determine the FWIT. The FWIT ends either with the flush of the donor heart with cold preservation fluid in the case of direct procurement (DP) or with the recommencement of circulation in the case of normothermic regional perfusion. With both protocols, the DCD heart is usually fully recoverable if the FWIT is 30 min or less.
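The FWIT definition above can be made concrete with timestamped observations after WLS. The Python sketch below is illustrative: it takes the ischemia onset as the first systolic BP reading below a chosen threshold (90 mmHg as in the Sydney program, 50 mmHg as in the Papworth program) and ends the FWIT at the cold flush or the restart of circulation. The function names and the sample readings are hypothetical, and the saturation-based (< 70%) criterion used by some US centers is not modeled.

```python
from typing import Optional, Sequence, Tuple

# Ischemia-onset thresholds quoted in the text (systolic BP, mmHg).
SYDNEY_SBP_THRESHOLD = 90.0
PAPWORTH_SBP_THRESHOLD = 50.0
FWIT_LIMIT_MIN = 30.0  # heart usually fully recoverable at or below this


def ischemia_onset_min(readings: Sequence[Tuple[float, float]],
                       sbp_threshold: float) -> Optional[float]:
    """Minutes after WLS of the first reading with systolic BP below threshold.

    readings: (minutes after withdrawal of life support, systolic BP in mmHg).
    Returns None if the threshold was never crossed.
    """
    for minutes, sbp in sorted(readings):
        if sbp < sbp_threshold:
            return minutes
    return None


def fwit_min(readings: Sequence[Tuple[float, float]], end_min: float,
             sbp_threshold: float = PAPWORTH_SBP_THRESHOLD) -> Optional[float]:
    """FWIT from ischemia onset to the end event.

    end_min: minutes after WLS of the cold flush (direct procurement) or of
    the restart of circulation (normothermic regional perfusion).
    """
    onset = ischemia_onset_min(readings, sbp_threshold)
    if onset is None:
        return None
    if end_min < onset:
        raise ValueError("end event cannot precede ischemia onset")
    return end_min - onset


# Hypothetical donor: SBP falls below 90 mmHg at 10 min and below 50 at 12 min;
# cold flush at 35 min after WLS.
readings = [(0, 140), (5, 120), (10, 85), (12, 45), (14, 0)]
for name, threshold in (("Sydney", SYDNEY_SBP_THRESHOLD), ("Papworth", PAPWORTH_SBP_THRESHOLD)):
    fwit = fwit_min(readings, end_min=35, sbp_threshold=threshold)
    within = fwit is not None and fwit <= FWIT_LIMIT_MIN
    print(name, fwit, "within limit" if within else "exceeds limit")
```

The same donor yields a different FWIT under each definition (25 vs. 23 min here), which is one reason FWIT values reported by different programs are not directly comparable.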

DCD donor selection criteria vary between institutions. The upper age limit for the Sydney program increased from 40 years of age at the start of the program in 2014 to 55 years of age in 2018; the oldest donor in this program was 54 years of age [63••, 64]. The upper age limit for the Papworth program is currently 50 years of age, although the oldest donor transplanted was 57 years of age (Stephen Large MD, personal communication) [62]. Preclinical studies suggest an adverse interaction between increasing DCD donor age and functional warm ischemic time, with the aged heart being more susceptible to ischemia/reperfusion injury [65]; however, there is at present insufficient clinical experience to determine whether the tolerable FWIT decreases with age.

An echocardiogram should be obtained in all prospective DCD donors. Echocardiographic criteria for heart donation are essentially the same as for the DBD donor with regard to left ventricular size, function, and hypertrophy, and any structural abnormalities [66]. Any degree of left ventricular systolic dysfunction on the most recent echocardiogram before WLS should be regarded as a contraindication to DCD heart donation.

Troponin and other biomarkers are often elevated in potential DCD donors, particularly those who have attempted suicide by hanging. At present, there is no evidence that elevated troponin levels predict early graft dysfunction or failure after transplantation.

Donor comorbidities such as hypertension and diabetes should be approached in the same way as they are for DBD donors.
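The DCD-specific screening points above (program age limit, an echocardiogram before WLS, and any LV systolic dysfunction as a contraindication) can be gathered into one pre-retrieval check. The Python sketch below is a hypothetical helper: the age limit is a parameter because it is program-specific, the wording of the returned issues is invented for illustration, and comorbidities are left to the usual DBD assessment, as the text advises.

```python
from typing import List


def dcd_heart_screen(donor_age_years: float, program_max_age_years: float,
                     echo_before_wls: bool, lv_systolic_dysfunction: bool) -> List[str]:
    """Return issues standing in the way of DCD heart donation (empty if none).

    program_max_age_years is program-specific: the text cites 55 years for the
    Sydney program (since 2018) and 50 years for the Papworth program.
    Comorbidities are not assessed here; they are handled as for DBD donors.
    """
    issues: List[str] = []
    if not echo_before_wls:
        issues.append("no echocardiogram obtained before WLS")
    elif lv_systolic_dysfunction:
        # Any degree of LV systolic dysfunction is treated as a contraindication.
        issues.append("LV systolic dysfunction on most recent echo before WLS")
    if donor_age_years > program_max_age_years:
        issues.append("donor older than program age limit")
    return issues


print(dcd_heart_screen(52, program_max_age_years=55, echo_before_wls=True,
                       lv_systolic_dysfunction=False))  # []
```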

Conclusions

More than 50 years after the first human heart transplantation, we still have much to learn about the selection of donors. Heart transplantation remains a field in which information is limited, topics are controversial, and opinions are widely held in the absence of definitive evidence. The selection of heart donors involves a complex calculus of many variables, not all of which we have covered here. Transplant centers use donor data to optimize selection and thus the chance of survival for their potential recipients. There is no substitute for compulsive scrutiny of all available data on each potential donor, keeping in mind that any single risk factor may be misleading and that registry and single-institution data alike provide guidelines that must be used with care. One cannot assume that all young donors, all donors who used cocaine, or all donors with LVEF > 50% will provide hearts that function well. As we have seen, one can no longer assume that female donors should not be used for male recipients: gender is not the important factor; heart size is. Each donor must be reviewed and assessed in relation to each recipient, and the severity of each recipient's illness must be evaluated every time a donor heart becomes available. The surgeon and/or cardiologist must ultimately balance the risks and benefits for the specific recipient based on contemporary data.

References

Papers of particular interest, published recently, have been highlighted as: • Of importance •• Of major importance

Dujardin KS, McCully RB, Wijdicks EF, et al. Myocardial dysfunction associated with brain death: clinical, echocardiographic, and pathologic features. J Heart Lung Transplant. 2001;20:350–7.

Zaroff JG, Babcock WD, Shiboski SC. The impact of left ventricular dysfunction on cardiac donor transplant rates. J Heart Lung Transplant. 2003;22:334–7.

Bombardini T, Arpesella G, Maccherini M, et al. Medium-term outcome of recipients of marginal donor hearts selected with new stress-echocardiographic techniques over standard criteria. Cardiovasc Ultrasound. 2014;12:20.

Garcia-Dorado D, Andres-Villarreal M, Ruiz-Meana M, Inserte J, Barba I. Myocardial edema: a translational view. J Mol Cell Cardiol. 2012;52:931–9.

Oras J, Doueh R, Norberg E, Redfors B, Omerovic E, Dellgren G. Left ventricular dysfunction in potential heart donors and its influence on recipient outcomes. J Thorac Cardiovasc Surg. 2020;159:1333-1341.e6.

Poptsov V, Khatutskiy V, Skokova A, et al. Heart transplantation from donors with left ventricular ejection fraction under forty percent. Clin Transplant. 2021;35:e14341. The authors report use of highly selected heart donors with LVEF under 40%. Of 47 hearts examined, 27 were used for transplant with good results. This illustrates that some dysfunctional donors can be utilized by experienced teams with close scrutiny of the donor and meticulous post-operative support.

Madan S, Saeed O, Vlismas P, et al. Outcomes after transplantation of donor hearts with improving left ventricular systolic dysfunction. J Am Coll Cardiol. 2017;70:1248–58.

Bombardini T, Gherardi S, Leone O, Sicari R, Picano E. Transplant of stunned donor hearts rescued by pharmacological stress echocardiography: a “proof of concept” report. Cardiovasc Ultrasound. 2013;11:27.

Kono T, Nishina T, Morita H, Hirota Y, Kawamura K, Fujiwara A. Usefulness of low-dose dobutamine stress echocardiography for evaluating reversibility of brain death-induced myocardial dysfunction. Am J Cardiol. 1999;84:578–82.

Axtell AL, Fiedler AG, Chang DC, et al. The effect of donor age on posttransplant mortality in a cohort of adult cardiac transplant recipients aged 18–45. Am J Transplant. 2019;19:876–83.

Smits JM, De Pauw M, de Vries E, et al. Donor scoring system for heart transplantation and the impact on patient survival. J Heart Lung Transplant. 2012;31:387–97.

Sabatino M, Vitale G, Manfredini V, et al. Clinical relevance of the International Society for Heart and Lung Transplantation consensus classification of primary graft dysfunction after heart transplantation: Epidemiology, risk factors, and outcomes. J Heart Lung Transplant. 2017;36:1217–25.

Lund LH, Khush KK, Cherikh WS, et al. The Registry of the International Society for Heart and Lung Transplantation: Thirty-fourth Adult Heart Transplantation Report-2017; Focus Theme: Allograft ischemic time. J Heart Lung Transplant. 2017;36:1037–46.

Goff RR, Uccellini K, Lindblad K, et al. A change of heart: preliminary results of the US 2018 adult heart allocation revision. Am J Transplant. 2020;20:2781–90.

Hsich EM, Blackstone EH, Thuita LW, et al. Heart transplantation: an in-depth survival analysis. JACC Heart Fail. 2020;8:557–68.

Jawitz OK, Fudim M, Raman V, et al. Reassessing recipient mortality under the new heart allocation system: an updated UNOS registry analysis. JACC Heart Fail. 2020;8:548–56.

Hoffman JRH, Larson EE, Rahaman Z, et al. Impact of increased donor distances following adult heart allocation system changes: a single center review of 1-year outcomes. J Card Surg. 2021;36:3619–28.

Kilic A, Mathier MA, Hickey GW, et al. Evolving trends in adult heart transplant with the 2018 heart allocation policy change. JAMA Cardiol. 2021;6:159–67.

Lebreton G, Coutance G, Bouglé A, Varnous S, Combes A, Leprince P. Changes in heart transplant allocation policy: “unintended” consequences but maybe not so “unexpected….” ASAIO J. 2021;67:e69–70.

Patel JN, Chung JS, Seliem A, et al. Impact of heart transplant allocation change on competing waitlist outcomes among listing strategies. Clin Transplant. 2021;35:e14345.

Baran DA. How to save a life: ex vivo heart preservation. ASAIO J. 2021;67:869–70.

Costanzo MR, Dipchand A, Starling R, et al. The International Society of Heart and Lung Transplantation Guidelines for the care of heart transplant recipients. J Heart Lung Transplant. 2010;29:914–56.

Martinez-Selles M, Almenar L, Paniagua-Martin MJ, et al. Donor/recipient sex mismatch and survival after heart transplantation: only an issue in male recipients? An analysis of the Spanish Heart Transplantation Registry. Transpl Int. 2015;28:305–13.

Khush KK, Kubo JT, Desai M. Influence of donor and recipient sex mismatch on heart transplant outcomes: analysis of the International Society for Heart and Lung Transplantation Registry. J Heart Lung Transplant. 2012;31:459–66.

Kaczmarek I, Meiser B, Beiras-Fernandez A, et al. Gender does matter: gender-specific outcome analysis of 67,855 heart transplants. Thorac Cardiovasc Surg. 2013;61:29–36.

Reed RM, Netzer G, Hunsicker L, et al. Cardiac size and sex-matching in heart transplantation: size matters in matters of sex and the heart. JACC Heart Fail. 2014;2:73–83.

Bergenfeldt H, Stehlik J, Höglund P, Andersson B, Nilsson J. Donor-recipient size matching and mortality in heart transplantation: influence of body mass index and gender. J Heart Lung Transplant. 2017;36:940–7.

Kransdorf EP, Kittleson MM, Benck LR, et al. Predicted heart mass is the optimal metric for size match in heart transplantation. J Heart Lung Transplant. 2019;38:156–65. This paper established the utility of calculated predicted heart mass and demonstrated its relation to post-transplant outcomes.

Wever Pinzon O, Stoddard G, Drakos SG, et al. Impact of donor left ventricular hypertrophy on survival after heart transplant. Am J Transplant. 2011;11:2755–61.

Goland S, Czer LS, Kass RM, et al. Use of cardiac allografts with mild and moderate left ventricular hypertrophy can be safely used in heart transplantation to expand the donor pool. J Am Coll Cardiol. 2008;51:1214–20.

Marelli D, Laks H, Fazio D, Moore S, Moriguchi J, Kobashigawa J. The use of donor hearts with left ventricular hypertrophy. J Heart Lung Transplant. 2000;19:496–503.

Lechiancole A, Vendramin I, Sponga S, et al. Influence of donor-transmitted coronary artery disease on long-term outcomes after heart transplantation - a retrospective study. Transpl Int. 2021;34:281–9. The authors demonstrate excellent long-term outcomes for hearts with coronary artery disease compared with donors without coronary disease at a single European center. They illustrate that fears of CAD progressing rapidly in donors with existing disease are not confirmed in practice.

Taghavi S, Jayarajan SN, Wilson LM, Komaroff E, Testani JM, Mangi AA. Cardiac transplantation can be safely performed using selected diabetic donors. J Thorac Cardiovasc Surg. 2013;146:442–7.

Fluschnik N, Geelhoed B, Becher PM, et al. Non-immune risk predictors of cardiac allograft vasculopathy: results from the U.S. organ procurement and transplantation network. Int J Cardiol. 2021;331:57–62.

Desai R, Collett D, Watson CJ, Johnson P, Evans T, Neuberger J. Cancer transmission from organ donors-unavoidable but low risk. Transplantation. 2012;94:1200–7.

Doerfler A, Tillou X, Le Gal S, Desmonts A, Orczyk C, Bensadoun H. Prostate cancer in deceased organ donors: a review. Transplant Rev (Orlando). 2014;28:1–5.

Kaul DR, Vece G, Blumberg E, et al. Ten years of donor-derived disease: a report of the disease transmission advisory committee. Am J Transplant. 2021;21:689–702.

Jayarajan S, Taghavi S, Komaroff E, et al. Long-term outcomes in heart transplantation using donors with a history of past and present cocaine use. Eur J Cardiothorac Surg. 2015;47:e146–50.

Vieira JL, Cherikh WS, Lindblad K, Stehlik J, Mehra MR. Cocaine use in organ donors and long-term outcome after heart transplantation: an International Society for Heart and Lung Transplantation registry analysis. J Heart Lung Transplant. 2020;39:1341–50.

Civelli VF, Sharma R, Sharma O, Sharma P, Heidari A, Singh S. Methamphetamine-induced Takotsubo cardiomyopathy with hypotension, resolved by low-dose inotropes. Am J Ther. 2019;28:e498–501.

Schwarzbach V, Lenk K, Laufs U. Methamphetamine-related cardiovascular diseases. ESC Heart Fail. 2020;7:407–14.

Varian KD, Gorodeski EZ. The other substance abuse epidemic: methamphetamines and heart failure. J Card Fail. 2020;26:210–1.

Zhao SX, Seng S, Deluna A, Yu EC, Crawford MH. Comparison of clinical characteristics and outcomes of patients with reversible versus persistent methamphetamine-associated cardiomyopathy. Am J Cardiol. 2020;125:127–34.

Baran DA, Lansinger J, Long A, et al. Intoxicated donors and heart transplant outcomes: long-term safety. Circ Heart Fail. 2021:Circheartfailure120007433. This is the only paper to examine donor toxicology in the UNOS data set, and it conclusively shows that donor drug use in otherwise acceptable donors is associated with long-term survival similar to that seen with donors who have a negative toxicology screen.

Taghavi S, Jayarajan SN, Komaroff E, et al. Use of heavy drinking donors in heart transplantation is not associated with worse mortality. Transplantation. 2015;99:1226–30.

Kobashigawa J, Khush K, Colvin M, et al. Report From the American Society of Transplantation Conference on Donor Heart Selection in Adult Cardiac Transplantation in the United States. Am J Transplant. 2017;17:2559–66.

Hussain Z, Yu M, Wozniak A, et al. Impact of donor smoking history on post heart transplant outcomes: a propensity-matched analysis of ISHLT registry. Clin Transplant. 2021;35:e14127.

Loupy A, Coutance G, Bonnet G, et al. Identification and characterization of trajectories of cardiac allograft vasculopathy after heart transplantation: a population-based study. Circulation. 2020;141:1954–67.

Aslam S, Grossi P, Schlendorf KH, et al. Utilization of hepatitis C virus-infected organ donors in cardiothoracic transplantation: an ISHLT expert consensus statement. J Heart Lung Transplant. 2020;39:418–32.

Kahn JA. The use of organs from hepatitis C virus-viremic donors into uninfected recipients. Curr Opin Organ Transplant. 2020;25:620–5.

Kilic A, Hickey G, Mathier M, et al. Outcomes of adult heart transplantation using hepatitis C-positive donors. J Am Heart Assoc. 2020;9:e014495.

Reyentovich A, Gidea CG, Smith D, et al. Outcomes of the treatment with glecaprevir/pibrentasvir following heart transplantation utilizing hepatitis C viremic donors. Clin Transplant. 2020;34:e13989.

Schlendorf KH, Zalawadiya S, Shah AS, et al. Expanding heart transplant in the era of direct-acting antiviral therapy for hepatitis C. JAMA Cardiol. 2020;5:167–74.

Smith DE, Chen S, Fargnoli A, et al. Impact of early initiation of direct-acting antiviral therapy in thoracic organ transplantation from hepatitis C virus positive donors. Semin Thorac Cardiovasc Surg. 2021;33:407–15.

Gidea CG, Narula N, Reyentovich A, et al. Increased early acute cellular rejection events in hepatitis C-positive heart transplantation. J Heart Lung Transplant. 2020.

Madan S, Patel SR, Jorde UP. Cardiac allograft vasculopathy and secondary outcomes of hepatitis C-positive donor hearts at 1 year after transplantation. J Heart Lung Transplant. 2020.

Dhital KK, Iyer A, Connellan M, et al. Adult heart transplantation with distant procurement and ex-vivo preservation of donor hearts after circulatory death: a case series. Lancet. 2015;385:2585–91.

Iyer A, Chew HC, Gao L, et al. Pathophysiological trends during withdrawal of life support: implications for organ donation after circulatory death. Transplantation. 2016;100:2621–9.

White CW, Lillico R, Sandha J, et al. Physiologic changes in the heart following cessation of mechanical ventilation in a porcine model of donation after circulatory death: implications for cardiac transplantation. Am J Transplant. 2016;16:783–93.

Scheuer SE, Jansz PC, Macdonald PS. Heart transplantation following donation after circulatory death: expanding the donor pool. J Heart Lung Transplant. 2021.

Xu G, Guo Z, Liang W, et al. Prediction of potential for organ donation after circulatory death in neurocritical patients. J Heart Lung Transplant. 2018;37:358–64.

Messer S, Cernic S, Page A, et al. A 5-year single-center early experience of heart transplantation from donation after circulatory-determined death donors. J Heart Lung Transplant. 2020;39:1463–75.

Chew HC, Iyer A, Connellan M, et al. Outcomes of donation after circulatory death heart transplantation in Australia. J Am Coll Cardiol. 2019;73:1447–59. This is a comprehensive report about the DCD heart program in Sydney, Australia with comparison to non-DCD heart transplants.

Nadel J, Scheuer S, Kathir K, Muller D, Jansz P, Macdonald P. Successful transplantation of high-risk cardiac allografts from DCD donors following ex vivo coronary angiography. J Heart Lung Transplant. 2020;39:1496–9.

Villanueva JE, Chew HC, Gao L, et al. The effect of increasing donor age on myocardial ischemic tolerance in a rodent model of donation after circulatory death. Transplant Direct. 2021;7:e699.

Copeland H, Hayanga JWA, Neyrinck A, et al. Donor heart and lung procurement: a consensus statement. J Heart Lung Transplant. 2020;39:501–17.

Ethics declarations

Conflict of Interest

Dr. Copeland reports receiving consulting fees from Bridge to Life, and her spouse is a paid consultant for Syncardia. Dr. Baran reports consulting fees from Abiomed, Getinge, Livanova, and Abbott. He is on the Steering Committee for CareDx and Procyrion. He is a speaker for Pfizer. The other authors have no conflicts to report.

Ethical Approval

This article does not contain any studies with human participants or animals performed by any of the authors.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Baran, D.A., Mohammed, A., Macdonald, P. et al. Heart Transplant Donor Selection: Recent Insights. Curr Transpl Rep 9, 12–18 (2022). https://doi.org/10.1007/s40472-022-00355-4

DOI: https://doi.org/10.1007/s40472-022-00355-4