Abstract

In this work, we investigate some qualitative properties of a stochastic dynamical model for tuberculosis with case detection. Using appropriately formulated stochastic Lyapunov functions, we derive sufficient conditions for the existence (and uniqueness) of an ergodic stationary distribution of the positive solutions of the model, guaranteeing persistence of the disease in the presence of case detection. We also obtain conditions that allow for the eradication of the disease from the population. Numerical simulations illustrate the analytical results obtained herein.

1 Introduction

Tuberculosis (TB), an airborne disease, continues to pose a serious health burden on several countries, especially developing countries. According to the World Health Organization (WHO), about one-quarter of the world’s population is latently infected with TB, and only about 5-15% of such individuals go on to develop active TB during their lifetime [1]. Conditions such as a compromised immune system, infection with HIV, and diabetes increase an infected person’s chance of progressing to active tuberculosis [1].

In 2018, the WHO reported that 30 high-burden countries accounted for about 87% of new TB cases globally, with 8 countries accounting for two thirds of the new TB cases, namely India, China, Indonesia, the Philippines, Pakistan, Nigeria, Bangladesh and South Africa [1]. Also, in 2018, about 10 million people fell ill with active TB worldwide, comprising approximately 5.7 million men, 3.2 million women and 1.1 million children [1]. However, the good news is that TB can be treated effectively [1].

Increased TB case detection rates have resulted in quick identification of active cases and subsequent treatment. In fact, about 58 million lives were saved through effective TB diagnosis and treatment between 2000 and 2018 [1].

Mathematical models have become very useful tools for gaining insight into the dynamics of infectious diseases, such as cholera [2], malaria [3], co-infections in a target population such as HIV and syphilis [4], and HPV [5]. Mathematical models for tuberculosis abound in the literature, such as those found in [6, 7]. The work in [6] investigated the effect of case detection on the dynamics of TB in a population where the direct observation therapy strategy (DOTS) is being implemented for effective TB control. In [7], a TB model was formulated to investigate the role of key parameters in improving the TB case detection rate; it was shown that factors such as quick identification (and reporting) of likely TB cases and very low testing and treatment costs can affect TB case detection.

Studies have shown that the spread of infectious diseases, such as tuberculosis, is affected by environmental noise, especially in a small population and during the initial stage of an epidemic [8], and deterministic mathematical models do not account for the effect of these fluctuations on disease spread. To take into account the effect of environmental noise on the dynamics of infectious diseases, stochastic models have been formulated and deployed in infectious disease modelling. For example, Liu and Jiang [8] investigated the dynamics of tuberculosis using a stochastic mathematical model for a population where vaccination and treatment are available, while in [9], the authors formulated a stochastic TB model for a population with antibiotic resistance. The work in [10] provides some theoretical results for a stochastic model for SIR diseases with vaccination and vertical transmission, while Zhao and Jiang [11] formulated and analyzed a stochastic model for SIS diseases with vaccination. Only a few articles, such as [8, 9], have shown the existence of an ergodic stationary distribution for stochastic tuberculosis models. Hence, this work adds to that body of knowledge by investigating the existence of an ergodic stationary distribution for a stochastic tuberculosis model with case detection and by deriving conditions for disease eradication. Moreover, we investigate the impact of low and high intensity stochastic noise on the dynamics of TB in a population with case detection efforts.

This work is organized as follows. In Sect. 2, we present the stochastic mathematical model for TB with case detection, and Sect. 3 collects the preliminaries used in the analysis. In Sect. 4, we establish the existence and uniqueness of the global positive solution of the model. Section 5 provides the proof of the existence of a unique ergodic stationary distribution under certain conditions. In Sect. 6, we derive conditions for disease eradication, and in Sect. 7 we give some numerical simulations to illustrate the analytical results in this work. Section 8 gives some concluding remarks and future directions.

2 The TB stochastic mathematical model

In this work, we will analyze a stochastic version of a modified mathematical model from the work in [6]. As stated above, the work in [6] mathematically assessed the impact of case detection on the dynamics of TB in a population where the direct observation therapy strategy (DOTS) is being implemented. We obtain the modified version of the model in [6] by assuming insignificant treatment failure and fast progression rates, in the light of improved detection and sustained treatment rates observed in several countries in recent times [1]. Hence, the modified deterministic mathematical model for tuberculosis with case detection is of the form:

In the model (2.1), \(S(t), E(t), I_1(t), I_2(t)\), and T(t) are the number of susceptible individuals, the exposed (latently infected) individuals, the undetected infectious individuals (in the presence of the DOTS programme), the detected infectious individuals (under the DOTS programme) and the treated/recovered individuals in the population, respectively, at time t, so that \(N =S+E+I_1+I_2+T\) is the total population.

Let \(\Lambda \) be the recruitment rate into the susceptible class. We assume that \(\mu \) is the per capita natural mortality rate for all individuals in the population. Let \(d_1\) be the TB-induced mortality rate for the undetected infectious TB cases (with the DOTS programme in place) and let \(d_{2}\) be the TB-induced mortality rate for the detected TB cases. Also, let \(\beta \) be the disease transmission rate, with \(\alpha \) being a modification parameter which accounts for the relative susceptibility of treated individuals to re-infection. We further assume that k is the rate of progression of individuals in the latent stage to active tuberculosis. We assume that \(\omega \) (\(0<\omega <1\)) is the fraction of infectious TB cases that are detected and treated under the DOTS programme, while the remaining \((1-\omega )\) is the fraction of infectious individuals who are not detected under the DOTS programme. In this formulation, we assume that disease transmission occurs with a bilinear incidence rate. We further assume that infectious individuals recover at the rates \(r_1\) and \(r_2\) (for those in the \(I_1\) and \(I_2\) classes, respectively).

It is easy to show that the region

is a positively invariant set and a global attractor of system (2.1).

The model (2.1) has a disease-free equilibrium (DFE), given by \(E^* = (S^*,E^*,I_1^{*},I_2^{*},T^*) = (\Lambda /\mu ,0,0,0,0)\). Following the next generation matrix approach in [12], we have that the effective reproduction number of the model (2.1) is given by

By virtue of Theorem 2 in [12], we have that the DFE is locally asymptotically stable whenever \({\mathcal {R}}_0 < 1\) and unstable if \({\mathcal {R}}_0 > 1\). Using similar approaches in [6], we can show the existence or otherwise of the endemic equilibrium when \({\mathcal {R}}_0 > 1\).

We now include stochastic perturbations (motivated by the work in [9]) in the base deterministic model (2.1), by assuming that the perturbations are of the white noise type, are directly proportional to the sub-populations \(S,E,I_1,I_2,T\), and act on the derivatives \({\dot{S}},{\dot{E}},\dot{I_1},\dot{I_2}\), and \({\dot{T}}\), so that the stochastic version of (2.1) is as follows:

where \(B_i(t)\), \(i=1,\ldots ,5\), are mutually independent standard Brownian motions with \(B_i(0) = 0\) and \(\sigma _i^2 > 0\), \(i=1,\ldots ,5\), denote the intensities of the \(B_i\)’s.

3 Preliminaries

In this work, let \((\Omega ,{\mathcal {F}},\{{\mathcal {F}}_t\}_{t\ge 0},P)\) be a complete probability space with filtration \(\{{\mathcal {F}}_t\}_{t\ge 0}\) satisfying the usual conditions as discussed in [13, 14]. Consider the n-dimensional stochastic differential equations (SDEs)

with initial value \(x(t_0) = x_0\in {\mathbb {R}}^n\), where \(x\in {\mathbb {R}}^n\) (with \(x \ge 0\)) and B(t) is an m-dimensional standard Brownian motion defined on \((\Omega ,{\mathcal {F}},\{{\mathcal {F}}_t\}_{t\ge 0},P)\). Let \({\mathcal {C}}^{2,1}({\mathbb {R}}^n\times [t_0,\infty );{\mathbb {R}}_+)\) denote the family of all nonnegative functions V(x, t) defined on \({\mathbb {R}}^n \times [t_0,\infty )\) that are twice continuously differentiable in x and once in t. Then, the differential operator \({\mathcal {L}}\) of (3.1) is defined by

If \({\mathcal {L}}\) acts on \(V\in {\mathcal {C}}^{2,1}({\mathbb {R}}^n\times [t_0,\infty );{\mathbb {R}}_+)\), then

where \(V_t = \frac{\partial V}{\partial t}\), \(V_x = \left( \frac{\partial V}{\partial x_1}, \frac{\partial V}{\partial x_2},\ldots ,\frac{\partial V}{\partial x_n}\right) \), and \(V_{xx} = \left( \frac{\partial ^2 V}{\partial x_i \partial x_j}\right) _{n\times n}\).
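As a brief worked illustration of how \({\mathcal {L}}\) is applied, assume the standard form \({\mathcal {L}}V = V_t + V_x f + \frac{1}{2}\text {trace}\left[ g^T V_{xx}\, g\right] \) from [14] for an equation with drift f and diffusion coefficient g. The scalar equation and the test function below are hypothetical choices made only for this example; they are not taken from the model:

\[
dx = f(x)\,dt + \sigma x\,dB(t), \qquad V(x) = x - 1 - \ln x, \quad x>0,
\]
\[
V_x = 1-\frac{1}{x}, \qquad V_{xx} = \frac{1}{x^2}, \qquad {\mathcal {L}}V = \left( 1-\frac{1}{x}\right) f(x) + \frac{1}{2}\,\sigma ^2x^2\cdot \frac{1}{x^2} = \left( 1-\frac{1}{x}\right) f(x) + \frac{\sigma ^2}{2}.
\]

Under these assumptions, the logarithmic term contributes the constant \(\sigma ^2/2\), which is typically how noise intensities enter Lyapunov-type estimates for equations with noise proportional to the state.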

By Itô’s formula [14], if \(x\in {\mathbb {R}}^n\), then

Let X(t) be a regular time-homogeneous Markov process in the l-dimensional Euclidean space \(E_l\equiv {\mathbb {R}}^l\), described by the following SDE

The diffusion matrix is defined as

Then, we have the following as in [13].

Lemma 3.1

[13] The Markov process X(t) has a unique ergodic stationary distribution \(\pi (.)\) if there exists a bounded open domain \(Z\subset {\mathbb {R}}^l\) with regular boundary \(\Gamma \), having the following properties:

- \(\text {H}_1\): there is a positive number M such that the diffusion matrix A(x) is strictly positive definite for all \(x\in Z\), i.e., \(\sum _{i,j=1}^{l}a_{ij}(x)\xi _{i}\xi _{j}\ge M|\xi |^2\) for \(x\in Z\), \(\xi \in {\mathbb {R}}^l\).

- \(\text {H}_2\): there exists a nonnegative \({\mathcal {C}}^2\)-function V such that \({\mathcal {L}}V\) is negative for any \(x\in {\mathbb {R}}^l\setminus Z\).

4 Existence and uniqueness of positive solutions

Since the variables \(S,E,I_1,I_2\) and T in (2.2) represent human sub-populations, they should remain nonnegative for all time. Following the same line of reasoning and methodology as in [8,9,10] and [15], we claim the following.

Theorem 4.1

For any initial condition \((S(0),E(0),I_1(0),I_2(0),T(0))\in {\mathbb {R}}_{+}^{5}\), there exists a unique positive solution \((S(t),E(t),I_1(t),I_2(t),T(t))\) of (2.2) for \(t\ge 0\), and the solution will remain in \({\mathbb {R}}_{+}^{5}\) with probability one, i.e., almost surely (a.s.).

Proof

As the coefficients of (2.2) are locally Lipschitz continuous, it follows that for any initial condition \((S(0),E(0),I_1(0),I_2(0),T(0))\in {\mathbb {R}}_{+}^{5}\), there is a unique local solution \((S(t),E(t),I_1(t),I_2(t),T(t))\) on \([0, \tau _e)\), where \(\tau _e\) is the explosion time [14]. For this solution to be global, it is required that \(\tau _e = \infty \) a.s. Let \(z_0\) be sufficiently large such that \(S(0),E(0),I_1(0),I_2(0)\), and T(0) all lie within the interval \([\frac{1}{z_0}, z_0]\). For each integer \(z \ge z_0\), define the stopping time

Clearly, \(\tau _z\) is increasing as \(z\rightarrow \infty \). Now, let \(\tau _\infty = \lim _{z\rightarrow \infty }\tau _z\), so that \(\tau _\infty \le \tau _e\) a.s. It suffices to show that \(\tau _\infty = \infty \) a.s., which implies that \(\tau _e = \infty \) a.s. and that \((S(t),E(t),I_1(t),I_2(t),T(t))\in {\mathbb {R}}_{+}^{5}\) a.s. for \(t\ge 0\). If this assertion is false, then there is a pair of constants \({\hat{T}} > 0\) and \(\epsilon \in (0,1)\) such that \({\mathbb {P}}\{\tau _\infty \le {\hat{T}}\}>\epsilon \). Hence, there is an integer \(z_1\ge z_0\) such that

Define a \({\mathcal {C}}^2\)-function \(U:{\mathbb {R}}_{+}^{5}\rightarrow {\mathbb {R}}_{+}\) by

where the positive constants, a and b, will be determined shortly. Note that the nonnegativity of U is guaranteed since \(v-1-\ln v\ge 0\), for any \(v>0\).
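For completeness, the elementary inequality used here can be checked in one line of calculus. Writing \(g(v) = v-1-\ln v\) for \(v>0\) (the name g is introduced only for this check):

\[
g'(v) = 1-\frac{1}{v}, \qquad g''(v) = \frac{1}{v^2} > 0, \qquad g'(1) = 0, \qquad g(1) = 0,
\]

so g is strictly convex with its unique minimum at \(v=1\), and therefore \(g(v)\ge g(1) = 0\) for all \(v>0\), with equality only at \(v=1\).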

Applying Itô’s formula to U yields

where \({\mathcal {L}}U:{\mathbb {R}}_{+}^{5}\rightarrow {\mathbb {R}}\) is given by

which, after several calculations, reduces to

Now, choose

such that \((a + b\alpha )\beta - (\mu +d_1) \le 0\) and \((a + b\alpha )\beta \gamma - (\mu +d_2)\le 0\). Then, we have that

where W is a constant. Hence, we now have that

Integrating both sides of (4.3) from 0 to \(\tau _z\wedge {\hat{T}} = \text {min}\{\tau _z,{\hat{T}}\}\) and taking expectation results in

Hence,

Now, let \(\Phi _z = \{p\in \Omega :\tau _z(p)\le {\hat{T}}\}\) for \(z\ge z_1\); according to (4.1), we have \({\mathbb {P}}(\Phi _z)\ge \epsilon \). For every \(p\in \Phi _z\), at least one of \(S(\tau _z,p)\), \(E(\tau _z,p)\), \(I_1(\tau _z,p)\), \(I_2(\tau _z,p)\), or \(T(\tau _z,p)\) equals either z or \(\frac{1}{z}\). It follows that \(U(S(\tau _z,p),E(\tau _z,p),I_1(\tau _z,p),I_2(\tau _z,p),T(\tau _z,p))\) is no less than either \(z-a-a\ln \frac{z}{a}\) or \(\frac{1}{z}-a-a\ln \frac{1}{za} = \frac{1}{z}-a+a\ln (za)\) or \(z-b-b\ln \frac{z}{b}\) or \(\frac{1}{z}-b-b\ln \frac{1}{zb} = \frac{1}{z}-b+b\ln (zb)\) or \(z-1-\ln z\) or \(\frac{1}{z}-1-\ln \frac{1}{z} = \frac{1}{z}-1+\ln z\). Hence, we have that

Following (4.4), we get that

where \(I_{\Phi _z}\) stands for the indicator function of \(\Phi _z\). Letting \(z\rightarrow \infty \) leads to the contradiction

Therefore, we must have that \(\tau _\infty = \infty \) a.s. This completes the proof. \(\square \)

5 Disease persistence

We will now derive conditions that guarantee the existence (and uniqueness) of an ergodic stationary distribution of the solutions to the model (2.2). By persistence in this model, we mean that the disease remains in the population for all time, i.e., there will always be TB-infected individuals in the population.
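To make the notion of ergodicity concrete, the following minimal Python sketch uses a hypothetical scalar toy equation, \(dX = (\Lambda - \mu X)\,dt + \sigma X\,dB\), rather than the full model (2.2). Its parameter values, the step size, and the function name `time_average` are assumptions chosen only for illustration. For this toy equation the mean satisfies \(\frac{d}{dt}{\mathbb {E}}[X] = \Lambda - \mu {\mathbb {E}}[X]\), so the stationary mean is \(\Lambda /\mu \); ergodicity then suggests that the time average along a single long path should approach that value regardless of the starting point.

```python
import math
import random


def time_average(lam, mu, sigma, x0, dt, n_steps, seed):
    """Time average of one simulated path of dX = (lam - mu*X) dt + sigma*X dB.

    Toy scalar equation (an assumption for illustration, not model (2.2)),
    discretized with a Milstein-type step.
    """
    rng = random.Random(seed)
    sqrt_dt = math.sqrt(dt)
    x, total = x0, 0.0
    for _ in range(n_steps):
        chi = rng.gauss(0.0, 1.0)  # standard normal increment
        x += ((lam - mu * x) * dt
              + sigma * x * sqrt_dt * chi
              + 0.5 * sigma ** 2 * x * (chi ** 2 - 1.0) * dt)
        total += x
    return total / n_steps


# Hypothetical parameter values, chosen only for this sketch.
LAM, MU, SIGMA = 0.8, 0.5, math.sqrt(0.2)

# Two very different starting points; both time averages should end up
# close to the stationary mean LAM / MU.
averages = [time_average(LAM, MU, SIGMA, x0, 0.01, 200_000, seed=7)
            for x0 in (0.2, 5.0)]
print(averages, LAM / MU)
```

The sketch only illustrates what an ergodic stationary distribution means in practice; it does not verify the conditions of Lemma 3.1 for model (2.2).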

Define the parameter

We claim the following:

Theorem 5.1

For any initial value \((S(0),E(0),I_1(0),I_2(0),T(0))\in {\mathbb {R}}_{+}^{5}\), the system (2.2) has a unique ergodic stationary distribution \(\pi (.)\) if \({\mathcal {R}}_0^s > 1\).

Proof

To prove Theorem 5.1, all we need to do is to ensure that the two conditions in Lemma 3.1 hold.

The diffusion matrix of (2.2) is given by

Clearly, the matrix A is positive definite on any compact subset of \({\mathbb {R}}_{+}^{5}\).

Now, choose

Hence, we have that

where \((S,E,I_1,I_2,T)\in {\bar{D}}_\sigma \) and \(\xi = (\xi _1,\xi _2,\xi _3,\xi _4,\xi _5)\in {\mathbb {R}}^{5}\). Therefore, condition \(\text {H}_1\) in Lemma 3.1 is satisfied. Next we prove condition \(\text {H}_2\) in Lemma 3.1.
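The computation behind condition \(\text {H}_1\) can be sketched as follows, under the assumption (consistent with the description of the perturbations in Sect. 2) that the noise terms of (2.2) are \(\sigma _1S\,dB_1\), \(\sigma _2E\,dB_2\), \(\sigma _3I_1\,dB_3\), \(\sigma _4I_2\,dB_4\) and \(\sigma _5T\,dB_5\). In that case the diffusion matrix is diagonal, and for any compact set \({\bar{D}}\) in the interior of \({\mathbb {R}}_{+}^{5}\) (a generic set used here for illustration):

\[
A = \text {diag}\left( \sigma _1^2S^2,\ \sigma _2^2E^2,\ \sigma _3^2I_1^2,\ \sigma _4^2I_2^2,\ \sigma _5^2T^2\right) ,
\]
\[
\sum _{i,j=1}^{5}a_{ij}\xi _i\xi _j = \sigma _1^2S^2\xi _1^2 + \sigma _2^2E^2\xi _2^2 + \sigma _3^2I_1^2\xi _3^2 + \sigma _4^2I_2^2\xi _4^2 + \sigma _5^2T^2\xi _5^2 \ge M|\xi |^2,
\]
\[
M = \min _{(S,E,I_1,I_2,T)\in {\bar{D}}}\left\{ \sigma _1^2S^2,\ \sigma _2^2E^2,\ \sigma _3^2I_1^2,\ \sigma _4^2I_2^2,\ \sigma _5^2T^2\right\} > 0.
\]

The constant M is strictly positive because every coordinate is bounded away from zero on \({\bar{D}}\) and every \(\sigma _i^2>0\).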

Now, we need to construct a \({\mathcal {C}}^2\)-function \({\bar{V}}:{\mathbb {R}}_{+}^{5}\rightarrow {\mathbb {R}}\) in the form:

where each \(V_i\), \(i=1,\ldots ,6\), is uniquely obtained, and \(M>0\) is a sufficiently large number satisfying the following condition

with B and \(\psi \) to be determined.

Note that \({\bar{V}}(S,E,I_1,I_2,T)\) tends to \(\infty \) as \((S,E,I_1,I_2,T)\) approaches the boundary of \({\mathbb {R}}_{+}^{5}\). Hence, being continuous, \({\bar{V}}\) is bounded from below and attains its minimum at a point \((S^o,E^o,I_1^o,I_2^o,T^o)\) in the interior of \({\mathbb {R}}_{+}^{5}\). Then we define a \({\mathcal {C}}^2\)-function \({\tilde{V}}:{\mathbb {R}}_{+}^{5}\rightarrow {\mathbb {R}}\) as follows

Define

where \(\nu \), a sufficiently small constant, satisfies \(0<\nu <\frac{2\mu }{\sigma _1^2\vee \sigma _2^2 \vee \sigma _3^2 \vee \sigma _4^2 \vee \sigma _5^2}\) and the \(a_i\)’s, \(i=1,\ldots ,5\), in \(V_1\) are positive constants to be determined.

Applying Itô’s formula to \(V_1\), we have

Now, choose

Then, we have that

where \(\psi = ({\mathcal {R}}_0^s - 1) > 0\).

Next, applying Itô’s formula to \(V_2\), we have

Applying Itô’s formula to \(V_3\), we have

Next, applying Itô’s formula to \(V_4\), we have that

Applying Itô’s formula to \(V_5\), we have

Next, applying Itô’s formula to \(V_6\), we have

where

Hence, using (5.4) - (5.9), we have that

which can be written as

where B is defined by

Recall that, as stated earlier in (5.2), M must satisfy \(-M\psi + B\le -2\).

Let us define a bounded closed set

with \(0<\epsilon \ll 1\), sufficiently small. Then, in \({\mathbb {R}}^5_{+}\backslash {\mathbf {Z}}_\epsilon \), we can choose \(\epsilon \) such that

where C is a positive constant to be determined later.

Next, to complete the requirement of condition \(H_2\) of Lemma 3.1, we show that

To do this, we divide \({\mathbb {R}}^5_{+}\backslash {\mathbf {Z}}_\epsilon \) into 11 domains, thus

Clearly, \({\mathbf {Z}}_\epsilon ^c = {\mathbb {R}}^5_{+}\backslash {\mathbf {Z}}_\epsilon ={\mathbf {Z}}_1 \cup {\mathbf {Z}}_2\ldots \cup {\mathbf {Z}}_{11}\).

Now, proving that \({\mathcal {L}}{\tilde{V}} \le -1\) for any \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_\epsilon ^c\) is equivalent to proving it on each of the eleven domains \({\mathbf {Z}}_1,\ldots , {\mathbf {Z}}_{11}\).

Consider the case where \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_1\). From (5.11), we have that

which follows from (5.14), where

Next, we consider the case where \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_2\). From (5.11), we have that

which follows from (5.2) and (5.15).

Next, we consider the case where \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_3\). From (5.11), we have that

which follows from (5.16) and (5.27), for sufficiently small \(\epsilon \).

Now, consider the case where \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_4\). From (5.11), we have that

which follows from (5.17) and (5.27).

Now, if \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_5\), then using (5.11), we have that

which follows directly from (5.18) and (5.27).

Next, consider the case when \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_6\); then using (5.11), we have that

which follows directly from (5.19) and (5.27).

Now, consider the case when \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_7\); then using (5.11), we have that

which follows from (5.21) and (5.27).

Now, consider the case when \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_8\); then using (5.11), we have that

which follows from (5.23) and (5.27).

Considering the case when \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_9\), using (5.11), we have that

which follows from (5.21) and (5.27).

Considering the case when \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_{10}\), using (5.11), we have that

which follows from (5.21) and (5.27).

Finally, consider the case when \((S,E,I_1,I_2,T)\in {\mathbf {Z}}_{11}\). Then, using (5.11), we have that

which follows from (5.25) and (5.27).

Clearly, from (5.26), (5.28) - (5.37), we have shown that, for sufficiently small \(\epsilon \),

Hence, condition \(H_2\) of Lemma 3.1 holds. Therefore, it follows that the model (2.2) is ergodic and has a unique stationary distribution \(\pi (.)\). \(\square \)

Note that Theorem 5.1 shows that the model (2.2) has a unique ergodic stationary distribution \(\pi (.)\) if \({\mathcal {R}}_0^s > 1\). Recall that the expression for \({\mathcal {R}}_0^s\) is the same as that for the effective reproduction number of the deterministic version of (2.2), \({\mathcal {R}}_0\), when the effect of white noise is not taken into account (with \(\sigma _i = 0\), \(i=1,\ldots ,5\)). When \({\mathcal {R}}_0 > 1\), the deterministic model (2.1) will have a unique endemic equilibrium, so that disease persistence is guaranteed.

6 Disease eradication

Now, we derive sufficient conditions for eradication of tuberculosis in a population where case detection is being implemented. Of course, this results in a disease-free state for the stochastic system (2.2). At this stage, we will define

To derive the sufficient conditions for disease eradication, we will be making use of Lemmas 2.1 and 2.2 in [11]. For convenience, we restate them as follows:

Lemma 6.1

[11] Let \((S(t),E(t),I_1(t),I_2(t),T(t))\) be the solution to the model (2.2) with initial value \((S(0),E(0), I_1(0),I_2(0),T(0))\in {\mathbb {R}}_{+}^{5}\). Then,

Moreover,

Lemma 6.2

[11] Assume that \(\mu > \frac{(\sigma _1^2\vee \sigma _2^2\vee \sigma _3^2\vee \sigma _4^2\vee \sigma _5^2)}{2}\). Let \((S(t),E(t),I_1(t),I_2(t),T(t))\) be the solution to the model (2.2) with initial value \((S(0),E(0),I_1(0),I_2(0),T(0))\in {\mathbb {R}}_{+}^{5}\). Then,

Lemmas 6.1 and 6.2 can be proven using the same approaches implemented in [11]. Now, using the information in Lemmas 6.1 and 6.2, we claim the following.

Theorem 6.1

Let \((S(t),E(t),I_1(t),I_2(t),T(t))\) be the solution to the model (2.2) with initial value \((S(0),E(0),I_1(0), I_2(0),T(0))\in {\mathbb {R}}_{+}^{5}\). If

then the disease will die out exponentially with probability one, i.e.,

with

Proof

From the equation describing the rate of change of S(t) in (2.2), we have that

Dividing (6.1) through by t and taking limits results in

Hence, it follows that

Define

By applying Itô’s formula, we have that

Integrating (6.4) from 0 to t and dividing by t gives

Taking the superior limit of (6.5), and using (6.3), we have that

which shows that

This completes the proof. \(\square \)

Remark 6.1

It is important to note that the threshold parameters \({\mathcal {R}}_0^s\) and \(\hat{{\mathcal {R}}_0^s}\), which determine, respectively, the existence of an ergodic stationary distribution (and therefore provide the basis for concluding that the disease will persist for all time) and the condition for disease eradication, are not "reproduction numbers" of the model (2.2) in the classical sense in which reproduction numbers are derived and used in epidemiological modelling with deterministic nonlinear ordinary differential equation models. They are simply parameters that arise from the direct application of the techniques used to establish the existence of a stationary distribution and to investigate conditions that result in disease eradication in the target population.

Remark 6.2

It is interesting to note that the parameter for determining the existence or otherwise of the stationary distribution, \({\mathcal {R}}_0^s\), is a function of the case detection parameter, \(\omega \); hence, the case detection parameter plays a role in the condition that allows for the persistence of the disease. However, the parameter used for determining the condition for disease eradication, \(\hat{{\mathcal {R}}_0^s}\), is not a function of \(\omega \); hence, regardless of the value of \(\omega \), the disease will be eradicated as long as the conditions in Theorem 6.1 are satisfied.

7 Numerical simulations

Using Milstein’s Higher Order Method (MHOM) [16], we carry out some numerical simulations of the model (2.2) to illustrate the analytical results presented herein. The convergence of the MHOM is discussed in detail in [16]. The MHOM has strong order of convergence 1, which is higher than the strong order 1/2 of the Euler-Maruyama (EM) method, a well-known numerical method for solving stochastic differential equations; the improvement comes from adding a correction to the stochastic increment in the EM scheme [16]. The correction arises because the traditional Taylor expansion must be modified in the case of Itô calculus [16]. Applying the MHOM [16], we obtain the following discretized form of model (2.2):

where \(\Delta t > 0\) is the time increment and \(\chi _{j,i}\), \(j = 1, 2,\ldots ,5\), are independent Gaussian random variables with distribution N(0, 1).
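The structure of a Milstein step can be sketched in Python on a scalar equation with noise proportional to the state, \(dX = f(X)\,dt + \sigma X\,dB\). This is a minimal illustration under stated assumptions, not the code used for the simulations in this work: the function name `simulate`, the toy drift, and all parameter values are hypothetical. For this noise structure, the Milstein correction to the EM step is \(\frac{1}{2}\sigma ^2X(\chi ^2-1)\Delta t\).

```python
import math
import random


def simulate(x0, drift, sigma, dt, n_steps, rng, milstein=True):
    """Simulate dX = drift(X) dt + sigma * X dB on a uniform time grid.

    With milstein=False this is the Euler-Maruyama scheme; with
    milstein=True the correction 0.5 * sigma^2 * X * (chi^2 - 1) * dt is
    added, which is the Milstein term for noise proportional to X.
    """
    x = x0
    path = [x]
    sqrt_dt = math.sqrt(dt)
    for _ in range(n_steps):
        chi = rng.gauss(0.0, 1.0)  # N(0, 1) increment
        dx = drift(x) * dt + sigma * x * sqrt_dt * chi
        if milstein:
            dx += 0.5 * sigma ** 2 * x * (chi ** 2 - 1.0) * dt
        x += dx
        path.append(x)
    return path


# Hypothetical toy drift and parameters, chosen only for this sketch.
LAM, MU, SIGMA = 0.8, 0.5, math.sqrt(0.2)


def toy_drift(x):
    return LAM - MU * x


# Same seed for both runs, so the two schemes see the same noise.
em_path = simulate(0.2, toy_drift, SIGMA, 0.01, 1000, random.Random(1),
                   milstein=False)
mil_path = simulate(0.2, toy_drift, SIGMA, 0.01, 1000, random.Random(1),
                    milstein=True)
print(em_path[-1], mil_path[-1])
```

Applying the same update componentwise, with the drift of each equation of (2.2) and its own \(\sigma _j\) and \(\chi _{j,i}\), would give a scheme of the kind described above, assuming each noise term is proportional to its own compartment.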

For the simulations, we use the initial condition \((S(0),E(0),I_1(0),I_2(0),T(0)) = (0.2, 0.2, 0.3, 0.2, 0.2)\). Note that the parameter values that follow are chosen simply to illustrate the analytical results herein.

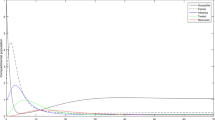

To illustrate numerically the existence of an ergodic stationary distribution, we choose the following parameters: \(\mu =0.02042\), \(\Lambda =0.8\), \(\beta = 3.9\), \(\gamma =0.5\), \(\alpha =1\), \(k=0.008\), \(\omega =0.41\), \(d_1=0.365\), \(r_1=0.2\), \(d_2=0.22\), \(r_2=1.5\), \(\sigma _1^2=0.2\), \(\sigma _2^2=0.2\), \(\sigma _3^2=0.2\), \(\sigma _4^2=0.3\), \(\sigma _5^2=0.3\). Using these parameter values, we have that \({\mathcal {R}}_0^s = 1.7394 > 1\). Therefore, by Theorem 5.1, the model (2.2) has a unique ergodic stationary distribution \(\pi (.)\), and, as seen in Fig. 1, the disease persists in the population for all time.

Fig. 1 The paths of \(S(t), E(t), I_1(t), I_2(t)\) and T(t) of model (2.2), when \(\mu =0.02042\), \(\Lambda =0.8\), \(\beta = 3.9\), \(\gamma =0.5\), \(\alpha =1\), \(k=0.008\), \(\omega =0.41\), \(d_1=0.365\), \(r_1=0.2\), \(d_2=0.22\), \(r_2=1.5\), with noise intensities given as \(\sigma _1^2=0.2\), \(\sigma _2^2=0.2\), \(\sigma _3^2=0.2\), \(\sigma _4^2=0.3\), \(\sigma _5^2=0.3\). The red plots represent the solutions of the perturbed system (2.2) while the blue plots are for the unperturbed system (2.1). (Color figure online)

To numerically show the possibility of disease eradication, we choose the following parameters, \(\mu =0.85\), \(\Lambda =0.6\), \(\beta = 0.7\), \(\gamma =0.5\), \(\alpha =1.5\), \(k=0.005\), \(\omega =0.11\), \(d_1=0.365\), \(r_1=0.2\), \(d_2=0.22\), \(r_2=1.5\), \(\sigma _1^2=0.8\), \(\sigma _2^2=0.6\), \(\sigma _3^2=1\), \(\sigma _4^2=1.2\), \(\sigma _5^2=1.4\). Using these parameter values, we have that \(\hat{{\mathcal {R}}_0^s} = -1.4510 < 1\), with the condition \(\mu = 0.85 > \frac{(\sigma _1^2\vee \sigma _2^2\vee \sigma _3^2\vee \sigma _4^2\vee \sigma _5^2)}{2} = 0.7\). Therefore, the disease will be eradicated from the population in this case.
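The noise condition \(\mu > \frac{1}{2}(\sigma _1^2\vee \cdots \vee \sigma _5^2)\) quoted above is simple arithmetic and can be checked directly. The short Python sketch below does so for the two parameter sets given in this section; the helper name `noise_condition` is hypothetical.

```python
def noise_condition(mu, sigma_sq):
    """Return (holds, threshold) for the condition mu > max(sigma_i^2) / 2."""
    threshold = max(sigma_sq) / 2.0
    return mu > threshold, threshold


# Parameter set used for the persistence illustration (Fig. 1).
persistence_run = noise_condition(0.02042, [0.2, 0.2, 0.2, 0.3, 0.3])

# Parameter set used for the eradication illustration.
eradication_run = noise_condition(0.85, [0.8, 0.6, 1.0, 1.2, 1.4])

print(persistence_run)
print(eradication_run)
```

For the eradication parameter set the threshold is 1.4/2 = 0.7, so the condition holds since 0.85 > 0.7. For the persistence parameter set the threshold is 0.3/2 = 0.15, which exceeds \(\mu = 0.02042\); this condition is stated only for Lemma 6.2, and the statement of Theorem 5.1 does not include it.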

In Fig. 1, we observe that the disease persists for small noise intensities. However, as the threshold calculated in this section indicates, the disease will be eradicated for larger white noise intensities.

8 Discussions and conclusion

This work presents a qualitative study of a stochastic mathematical model for tuberculosis with case detection. Using appropriately constructed stochastic Lyapunov functions, we derived sufficient conditions for the existence and uniqueness of an ergodic stationary distribution of the positive solutions of the model, thereby guaranteeing the persistence of the disease in the population for all time. We also derived sufficient conditions that would result in disease eradication. From the analytical results, we observe that the condition for disease eradication is independent of the case detection parameter (\(\omega \)) whereas the condition for disease persistence is a function of the case detection parameter, showing the effect case detection has on the overall stochastic dynamics of the model. Using some numerical simulations, we illustrated the theoretical results obtained in this work. We also demonstrated, using the numerical simulations, that small noise intensities lead to the existence of the ergodic stationary distribution, which allows the disease to persist in the population, whereas for large white noise the disease was eradicated from the population, thereby showing the significant effect environmental noise can have on the dynamics of tuberculosis in a population where efforts are in place to increase TB case detection. This agrees with the conclusions reached from the numerical simulations in [8].

It would be worthwhile to investigate the effect of intrinsic fluctuations or noise on other tuberculosis models. For example, what would be the effect of white noise on the dynamics of tuberculosis in a population where genetic heterogeneity (in susceptibility and disease progression) affects the disease burden? It would also be important to investigate the effect of intrinsic fluctuations on the impact of awareness, by the susceptible and latently infected individuals, on TB control. We leave these for future work.

References

1. Tuberculosis Fact Sheet. www.who.int/news-room/fact-sheets/detail/tuberculosis. (2020). Accessed 27 Aug 2020

2. Isere A, Osemwenkhae J, Okuonghae D (2014) Optimal control model for the outbreak of cholera in Nigeria. Afr J Math Comput Sci Res 7(2):24–30

3. Lou Y, Zhao X-Q (2010) A climate-based malaria transmission model with structured vector population. SIAM J Appl Math 70:2023–2044

4. Nwankwo A, Okuonghae D (2018) Mathematical analysis of the transmission dynamics of HIV syphilis co-infection in the presence of treatment for syphilis. Bull Math Biol 80(3):437–492

5. Omame A, Umana RA, Okuonghae D, Inyama SC (2018) Mathematical analysis of a two-sex human papillomavirus (HPV) model. Int J Biomath 11(7):1850092

6. Okuonghae D, Aihie V (2008) Case detection and direct observation therapy strategy (DOTS) in Nigeria: its effect on TB dynamics. J Biol Syst 16(1):1–31

7. Okuonghae D, Omosigho SE (2011) Analysis of a mathematical model for tuberculosis: what could be done to increase case detection. J Theor Biol 269:31–45

8. Liu Q, Jiang D (2019) The dynamics of a stochastic vaccinated tuberculosis model with treatment. Phys A 527:121274

9. Liu Q, Jiang D, Hayat T, Alsaedi A (2018) Dynamics of a stochastic tuberculosis model with antibiotic resistance. Chaos Solitons Fractals 109:223–230

10. Miao A, Zheng J, Zhang T, Pradeep BGSA (2017) Threshold dynamics of a stochastic SIR model with vertical transmission and vaccination. Comput Math Methods Med. https://doi.org/10.1155/2017/4820183

11. Zhao Y, Jiang D (2014) The threshold of a stochastic SIS epidemic model with vaccination. Appl Math Comput 243:718–727

12. van den Driessche P, Watmough J (2002) Reproduction numbers and sub-threshold endemic equilibria for compartmental models of disease transmission. Math Biosci 180:29–48

13. Khasminskii R (1980) Stochastic stability of differential equations. Sijthoff and Noordhoff, Dordrecht

14. Mao X (1997) Stochastic differential equations and applications. Horwood Publishing, Chichester

15. Sene N (2020) Analysis of the stochastic model for predicting the novel coronavirus disease. Adv Differ Equ. https://doi.org/10.1186/s13662-020-03025-w

16. Higham DJ (2001) An algorithmic introduction to numerical simulation of stochastic differential equations. SIAM Rev 43(3):525–546

Acknowledgements

We are grateful to the anonymous reviewers for their insightful comments which have improved the quality of this work.

Funding

This work did not receive any funding.

Contributions

The work was carried out entirely by the author.

Ethics declarations

Conflict of interest

The author declares that there is no conflict of interest regarding the publication of this paper.

Availability of data and material

No specific data or unique material was used for this work.

Code Availability

The codes used for the simulations in this work may be made available on request.

Cite this article

Okuonghae, D. Analysis of a stochastic mathematical model for tuberculosis with case detection. Int. J. Dynam. Control 10, 734–747 (2022). https://doi.org/10.1007/s40435-021-00863-8

DOI: https://doi.org/10.1007/s40435-021-00863-8