Abstract

In this work, an inertial Halpern-type algorithm involving monotone operators is proposed in real Banach spaces that are 2-uniformly convex and uniformly smooth. The iterates generated by the algorithm are proved to converge strongly to a zero of the sum of two monotone operators. Furthermore, an application of the method to image recovery problems is presented. In addition, a numerical example in the classical Banach space \(l_{\frac{3}{2}}(\mathbb {R})\) is presented to support the main theorem. Finally, the performance of the proposed algorithm is compared with that of some existing algorithms in the literature.


1 Introduction

The variational inclusion problem is to

\[ \text{find } x\in H \text{ such that } 0\in Ax+Bx, \qquad (1) \]

where A and B are, respectively, single-valued and set-valued mappings on a real Hilbert space H. Problem (1) has attracted the interest of many authors, largely because models arising in image restoration, machine learning and signal processing can be recast in the form (1). When A and B are monotone operators, problem (1) is called a monotone inclusion problem. In the literature, many authors have developed algorithms for approximating solutions of problem (1) when such solutions exist (see, e.g., Takahashi et al. 2012; Kitkuan et al. 2019; Yodjai et al. 2019; Abubakar et al. 2020a; Chidume et al. 2020a). Assuming that solutions exist, one of the early methods for approximating them is the forward-backward algorithm (FBA) introduced by Passty (1979). Under the requirement that A, B and \((A+B)\) are maximal monotone, the FBA generates its iterates by the recursion

\[ x_{n+1}=(I+\nu _n B)^{-1}\big (x_n-\nu _n Ax_n\big ), \quad n\ge 1, \qquad (2) \]

where \(\{\nu _n\}\subset (0,\infty )\). Weak convergence of the iterates generated by the FBA (2) has been established by many authors (see, e.g., Passty 1979). Passty (1979) noted that, in the special case where B is the subdifferential of the indicator function of a nonempty closed and convex set (that is, the normal cone operator of that set), weak convergence of the iterates generated by (2) had also been established by Lions (1978). Over the years, several modifications of the FBA have been proposed to obtain strong convergence in real Hilbert spaces (see, e.g., Takahashi et al. 2012; Adamu et al. 2021; Phairatchatniyom et al. 2021). However, Hazewinkel, Series Editor of Mathematics and Its Applications (Kluwer Academic Publishers), made the following remark: “... many and probably most, mathematical objects and models do not naturally live in Hilbert space” (see Cioranescu 2012, p. viii). In support of this claim, interested readers may consult, for example, Alber and Ryazantseva (2006) and Shehu (2019) for some nontrivial and interesting examples of monotone operators and convex minimization problems in real Banach spaces.
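The recursion (2) can be illustrated in the simplest possible setting. The following Python sketch works in the finite-dimensional Hilbert space \(\mathbb {R}^2\) and assumes a hypothetical test problem: A is the gradient of \(\frac{1}{2}\Vert Mx-b\Vert ^2\) and B is the normal cone of the box \([0,1]^2\), i.e. the subdifferential of its indicator function, as in the special case treated by Lions. For this B the resolvent \((I+\nu _n B)^{-1}\) is the projection onto the box for every \(\nu _n>0\). The names `forward_backward`, `grad_A`, `project_box`, `M` and `b` are illustrative only, and a constant step is used for simplicity.

```python
import numpy as np


def forward_backward(grad_A, resolvent_B, x0, step, n_iter=200):
    """Forward-backward iteration x_{n+1} = (I + step*B)^{-1}(x_n - step*A x_n).

    A constant step is used here for simplicity; (2) allows a sequence nu_n.
    """
    x = np.asarray(x0, dtype=float)
    for _ in range(n_iter):
        x = resolvent_B(x - step * grad_A(x), step)  # forward step, then backward step
    return x


# Hypothetical test problem in R^2.
M = np.array([[2.0, 0.0], [0.0, 1.0]])
b = np.array([1.0, 3.0])


def grad_A(x):
    # A = gradient of 0.5*||Mx - b||^2, which is monotone and Lipschitz continuous.
    return M.T @ (M @ x - b)


def project_box(x, step):
    # The resolvent of the normal cone of [0, 1]^2 is the projection onto the box,
    # whatever the step size.
    return np.clip(x, 0.0, 1.0)


L_A = np.linalg.norm(M.T @ M, 2)  # Lipschitz constant of grad_A (spectral norm)
x_star = forward_backward(grad_A, project_box, np.zeros(2), step=1.0 / L_A)
print(x_star)  # [0.5 1. ]
```

The limit \((0.5,1)\) is the minimizer of \(\frac{1}{2}\Vert Mx-b\Vert ^2\) over the box, and it is a fixed point of the forward-backward map, i.e. a zero of the sum of the two operators.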

There are two ways of extending the notion of a monotone map from Hilbert spaces to Banach spaces. In the first, a map \(A: E \rightarrow 2^E\) on a Banach space E is called accretive if, for all \(x,y\in E\), there exists \(j_q(x-y)\in J_q(x-y)\) such that

\[ \langle \eta -\xi , j_q(x-y)\rangle \ge 0 \quad \text{for all } \eta \in Ax,\ \xi \in Ay, \]

where \(q>1\) and \(J_q\) is the duality mapping on E (interested readers may see, e.g., Alber and Ryazantseva (2006) for the explicit definition of \(J_q\) and some of its properties on certain Banach spaces). In the literature, extensions of the inclusion problem (1) involving accretive operators have been considered by many researchers (see, e.g., Chidume et al. 2021a; Qin et al. 2020; Adamu et al. 2022a; Luo 2020).

The other extension of a monotone map defined on a Hilbert space H arises when the operator maps a Banach space E to subsets of its dual space \(E^*\), that is, \(A: E\rightarrow 2^{E^*}\), and satisfies

\[ \langle \eta -\xi , x-y\rangle \ge 0 \quad \text{for all } x,y\in E,\ \eta \in Ax,\ \xi \in Ay; \]

in this case the name monotone is retained. Many research efforts have been devoted to extending the inclusion problem (1) to monotone operators in Banach spaces. However, only a few successes have been recorded (see, e.g., Shehu 2019; Kimura and Nakajo 2019; Cholamjiak et al. 2020). In 2019, in a real Banach space E that is 2-uniformly convex and uniformly smooth, Shehu (2019) established strong convergence of the iterates generated by algorithm (3), defined by:

to a solution of problem (1) under the assumption that B is maximal monotone, A is monotone and L-Lipschitz continuous, \(\{\nu _n\}\) is a sequence of positive real numbers which satisfies some appropriate conditions, and \(\{\alpha _n\}\subset (0,1)\) with \( \lim _{n\rightarrow \infty } \alpha _n=0\) and \( \sum _{n=1}^\infty \alpha _n=\infty \).

In the same year, in the same class of Banach spaces as Shehu (2019), Kimura and Nakajo (2019) established strong convergence of the iterates generated by algorithm (4), defined by:

In this work, we introduce a new projection-free inertial Halpern-type algorithm in real Banach spaces that are uniformly smooth and 2-uniformly convex. We prove that the iterates generated by our algorithm converge strongly to a solution of problem (1). In addition, we use our algorithm in the recovery of some degraded images and compare its performance with that of algorithm (3) of Shehu (2019) and algorithm (4) of Kimura and Nakajo (2019). Finally, we give a numerical example in the classical Banach space \(l_{\frac{3}{2}}(\mathbb {R})\) to support our main theorem and the theorems of Shehu (2019) and Kimura and Nakajo (2019).

2 Preliminaries

In this section, we introduce some notions and results in Banach spaces that will be needed in the proof of our main theorem. Every normed linear space E with dual space \(E^*\) has a duality map associated with it. In this work, we need the normalized duality map \(J: E \rightarrow 2^{E^*}\), defined by \(Jx=\{x^*\in E^*: \langle x,x^*\rangle =\Vert x\Vert ^2=\Vert x^*\Vert ^2\}\); see, for example, Alber and Ryazantseva (2006) for some of its properties on various normed spaces. We also need Alber’s functional \(\phi : E\times E \rightarrow \mathbb {R}\), defined on a smooth space E by

\[ \phi (x,y)=\Vert x\Vert ^2-2\langle x,Jy\rangle +\Vert y\Vert ^2, \quad x,y\in E, \]

which is central in estimates involving J on smooth spaces. As a consequence of this definition, the following are immediate for any \(p,r,z\in E \) and \(\tau \in [0,1]\):

-

P1:

\((\Vert p \Vert -\Vert r\Vert )^2 \le \phi (p,r)\le (\Vert p\Vert +\Vert r\Vert )^2,\)

-

P2:

\(\phi (p, J^{-1}(\tau Jr+(1-\tau )Jz))\le \tau \phi (p,r) + (1-\tau ) \phi (p,z), \)

-

P3:

\(\phi (p,r)=\phi (p,z)+\phi (z,r)+2\langle z-p,Jr-Jz\rangle ,\)

where J and \(J^{-1}\) are the normalized duality maps on E and \(E^*\), respectively (see, e.g., Nilsrakoo and Saejung 2011 for a proof of properties P1, P2 and P3). We will also use in our estimates the mapping \(V: E\times E^* \rightarrow \mathbb {R}\) defined by

\[ V(p,p^*)=\Vert p\Vert ^2-2\langle p,p^*\rangle +\Vert p^*\Vert ^2, \quad p\in E,\ p^*\in E^*. \]

Observe that \(V(p,p^*)=\phi (p,J^{-1}p^*)\); thus, we shall use them interchangeably as the need arises in the proof of our main theorem. In addition, the generalized projection \(\Pi _C\) will appear in our proof: for a nonempty closed and convex subset C of a smooth, strictly convex and reflexive real Banach space, \(z=\Pi _C x\) is the point of C such that \(\phi (z,x)=\inf _{y\in C}\phi (y,x)\). Moreover, for a maximal monotone set-valued operator \(B: E \rightarrow 2^{E^*}\) on a smooth, reflexive and strictly convex Banach space E, the resolvent operator \(J_\lambda ^B=(J+\lambda B)^{-1}J\), \(\lambda >0\), will be used in our estimates.
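For intuition about J, \(\phi \) and V, the following Python sketch works in a finite section \(\mathbb {R}^d\) of \(l_p(\mathbb {R})\), \(1<p<\infty \). There J has the well-known closed form \(Jx=\Vert x\Vert _p^{2-p}\big (|x_i|^{p-1}\operatorname{sign}(x_i)\big )_i\), and \(J^{-1}\) is the same formula on \(l_q\) with \(\frac{1}{p}+\frac{1}{q}=1\). Truncating to \(\mathbb {R}^5\) and taking \(p=\frac{3}{2}\) are assumptions made for illustration, and the helper names `norm`, `duality_map`, `phi` and `V` are hypothetical. The checks confirm numerically that \(\langle x,Jx\rangle =\Vert x\Vert ^2\), \(\Vert Jx\Vert _q=\Vert x\Vert _p\), property P3 and \(V(x,Jy)=\phi (x,y)\).

```python
import numpy as np


def norm(x, p):
    """l_p norm of a finite vector."""
    return np.sum(np.abs(x) ** p) ** (1.0 / p)


def duality_map(x, p):
    """Normalized duality map on l_p: J x = ||x||_p^(2-p) * |x|^(p-1) * sign(x)."""
    nx = norm(x, p)
    if nx == 0.0:
        return np.zeros_like(x)
    return nx ** (2 - p) * np.abs(x) ** (p - 1) * np.sign(x)


def phi(x, y, p):
    """Alber's functional phi(x, y) = ||x||^2 - 2<x, Jy> + ||y||^2 on l_p."""
    return norm(x, p) ** 2 - 2 * np.dot(x, duality_map(y, p)) + norm(y, p) ** 2


def V(x, x_star, p):
    """V(x, x*) = ||x||_p^2 - 2<x, x*> + ||x*||_q^2, q the conjugate exponent."""
    q = p / (p - 1)
    return norm(x, p) ** 2 - 2 * np.dot(x, x_star) + norm(x_star, q) ** 2


p = 1.5
q = p / (p - 1)  # conjugate exponent, here q = 3
rng = np.random.default_rng(0)
x, y, z = rng.standard_normal((3, 5))  # three hypothetical random points

Jx = duality_map(x, p)
print(np.isclose(np.dot(x, Jx), norm(x, p) ** 2))  # <x, Jx> = ||x||^2
print(np.isclose(norm(Jx, q), norm(x, p)))  # ||Jx||_q = ||x||_p
# P3: phi(x, y) = phi(x, z) + phi(z, y) + 2<z - x, Jy - Jz>
rhs = phi(x, z, p) + phi(z, y, p) + 2 * np.dot(z - x, duality_map(y, p) - duality_map(z, p))
print(np.isclose(phi(x, y, p), rhs))
# J^{-1} is the duality map of l_q, so V(x, Jy) = phi(x, J^{-1}Jy) = phi(x, y)
print(np.isclose(V(x, duality_map(y, p), p), phi(x, y, p)))
```

Each check prints `True`, since all four relations are algebraic identities for this closed form of J.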

Lemma 2.1

In Alber and Ryazantseva (2006), it was established that in a smooth, reflexive and strictly convex real Banach space, the generalized projection \(\Pi _C\) onto a nonempty closed and convex set C has the following property:

\[ \langle y-z, Jx-Jz\rangle \le 0 \]

for any \(y\in C\), where \(x \in E\) and \(z=\Pi _C x\).

Lemma 2.2

On a smooth, reflexive and strictly convex Banach space E with dual \(E^*\), the inequality

\[ V(p,p^*)+2\langle J^{-1}p^*-p, v^*\rangle \le V(p,p^*+v^*) \]

was established in Alber and Ryazantseva (2006) for all \(p\in E\) and \(p^*,v^*\in E^{*}\).

Lemma 2.3

On a smooth Banach space E that is 2-uniformly convex, it is shown in Xu (1991) that one can find \(\gamma >0\) such that

\[ \gamma \Vert p-r\Vert ^2\le \phi (p,r) \]

holds for all \(p,r\in E\).

Lemma 2.4

(Kamimura and Takahashi 2002)

Alber’s functional \(\phi \) has the property that \(\lim _{n\rightarrow \infty } \phi (u_n,v_n )= 0\) implies \(\lim _{n\rightarrow \infty }\Vert u_n-v_n \Vert = 0\) whenever \(\{u_n\}\) or \(\{v_n\}\) is a bounded sequence in a smooth and uniformly convex Banach space.

Lemma 2.5

It was established in Nilsrakoo and Saejung (2011) that, in a uniformly smooth Banach space E, Alber’s functional \(\phi \) has the following property:

\[ \phi \big (u, J^{-1}(\tau Jp+(1-\tau )Jr)\big )\le \tau \phi (u,p)+(1-\tau )\phi (u,r)-\tau (1-\tau )g(\Vert Jp-Jr\Vert ) \]

for any \(\tau \in [0, 1]\) and \(u \in E\), and for all p, r in a bounded subset of E, where g is some convex, continuous and strictly increasing function with \(g(0)=0\).

Lemma 2.6

Let \(\{ m_n \}\), \(\{ \zeta _n \}\), \(\{ \mu _n \}\) and \(\{c_n\}\) be real sequences satisfying

\[ m_{n+1}\le (1-\zeta _n)m_n+\zeta _n\mu _n+c_n, \quad n\ge 0, \]

where \(\{ m_n \}, \{c_n\}\subset [0,\infty )\) with \(\sum _{n=0}^\infty c_n <\infty \); \( \{\zeta _n\}\subset [0,1]\) with \(\sum _{n=0}^\infty \zeta _n=\infty \) and \(\lim _{n\rightarrow \infty } \zeta _n=0\); and \( \limsup _{n\rightarrow \infty } \mu _n \le 0\). It was shown in Hong-Kun (2002) that \(\lim _{n \rightarrow \infty } m_n=0 .\)
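The mechanism behind Lemma 2.6 can be observed numerically. The Python sketch below iterates the recursion with equality for one hypothetical choice of parameters, \(\zeta _n=\frac{1}{n+2}\), \(\mu _n=\frac{1}{\sqrt{n+1}}\) and \(c_n=\frac{1}{(n+1)^2}\), which satisfy the hypotheses of the lemma; the function name `xu_recursion` is illustrative. A simulation of this kind only illustrates the conclusion \(m_n\rightarrow 0\); it does not prove it.

```python
import math


def xu_recursion(m0, n_steps):
    """Iterate m_{n+1} = (1 - zeta_n) m_n + zeta_n mu_n + c_n with equality."""
    m = [m0]
    for n in range(n_steps):
        zeta = 1.0 / (n + 2)  # in [0, 1], tends to 0, sum diverges
        mu = 1.0 / math.sqrt(n + 1)  # tends to 0, so limsup mu_n <= 0
        c = 1.0 / (n + 1) ** 2  # summable perturbation
        m.append((1 - zeta) * m[-1] + zeta * mu + c)
    return m


m = xu_recursion(5.0, 100_000)
for n in (10, 1_000, 100_000):
    print(n, m[n])
```

The decay is slow here because \(\mu _n\) tends to zero slowly; the lemma gives no rate, only convergence.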

Lemma 2.7

Let \(\{x_n\}\subset \mathbb {R}\) be a sequence that has a subsequence \(\{x_{n_j}\}\) satisfying \(x_{n_j}<x_{n_j+1}\) for all \(j\ge 1\). The following conclusions were established in Maingé (2010): there exists a nondecreasing sequence of indices \(\{m_k\}_{k\ge 1}\subset \mathbb {N}\) such that \(m_k\rightarrow \infty \) and, for all sufficiently large k,

\[ x_{m_k}\le x_{m_k+1} \quad \text{and} \quad x_k\le x_{m_k+1}. \]
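In Maingé’s construction the indices are usually taken as \(m_k=\max \{j\le k: x_j<x_{j+1}\}\). The Python sketch below computes these indices for a finite, hypothetical oscillating sequence \(x_n=\frac{\sin n}{n+1}\), which is not eventually nonincreasing, so that the two inequalities of Lemma 2.7 can be checked directly. The function name `mainge_indices` is illustrative; on a finite sequence one can only observe that \(m_k\) keeps growing, not that it tends to infinity.

```python
import math


def mainge_indices(x):
    """Return m[k] = max{j <= k : x[j] < x[j+1]}, or None if no such j exists yet."""
    m, last = [], None
    for k in range(len(x) - 1):
        if x[k] < x[k + 1]:
            last = k  # most recent strict ascent up to index k
        m.append(last)
    return m


# Hypothetical sequence that has infinitely many strict ascents.
x = [math.sin(n) / (n + 1) for n in range(200)]
m = mainge_indices(x)
ks = [k for k in range(len(m)) if m[k] is not None]

# Between m_k + 1 and k there are no ascents, so x[m_k + 1] >= ... >= x[k].
print(all(x[k] <= x[m[k] + 1] for k in ks))
```

The check prints `True` for every such sequence, because by maximality of \(m_k\) the terms \(x_{m_k+1},\ldots ,x_k\) are nonincreasing.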

Lemma 2.8

For nonnegative sequences \(\{m_n\}, ~\{b_n\}\) and \(\{c_n\}\) satisfying

\[ m_{n+1}\le m_n+b_n(m_n-m_{n-1})+c_n, \quad n\ge 1, \]

with \( \sum _{n=1}^\infty c_n < \infty \) and \(0 \le b_n \le b < 1\) for all \(n\ge 1\), it was proved in Alvarez (2004) that

-

\(\displaystyle \sum _{n = 1}^\infty [m_n - m_{n-1}]_+ < + \infty ,\) where \([t]_+=\max \{t,0\}\), and that the limit of \(\{m_n\}\) exists.

Lemma 2.9

In Kimura and Nakajo (2019), the authors established that, for a set-valued maximal monotone operator B and an \(\alpha \)-inverse strongly monotone operator A in a 2-uniformly convex and uniformly smooth real Banach space, the operator \(T_\lambda v:= (J+\lambda B)^{-1}(Jv-\lambda Av),\) \(\lambda >0\), has the following properties:

-

(i)

\(F(T_\lambda )=(A+B)^{-1}0,\) where \(F(T_\lambda )\) denotes the set of fixed points of \(T_\lambda \).

-

(ii)

\(\phi (u, T_\lambda v) \le \phi (u,v)-(\gamma -\lambda \beta ) \Vert v-T_\lambda v\Vert ^2-\lambda \Big (2\alpha -\frac{1}{\beta }\Big )\Vert Av-Au\Vert ^2,\)

for any \(\beta >0\), \(u\in F(T_\lambda )\) and \(v\in E\), where \(\gamma \) is as defined in Lemma 2.3.

Remark 1

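Observe that, given \(\alpha >0\), there exists \(\lambda _0>0\) such that \(\frac{\gamma }{\lambda _0}>\frac{1}{2\alpha }.\) Thus, one can choose \(\beta >0\) such that \(\frac{1}{2\alpha }<\beta < \frac{\gamma }{\lambda _0}\). Then \(\gamma -\lambda \beta >0\) and \(2\alpha -\frac{1}{\beta }>0\) for every \(\lambda \in (0,\lambda _0]\). Hence, from (ii) we have

\[ \phi (u,T_\lambda v)\le \phi (u,v)-(\gamma -\lambda \beta )\Vert v-T_\lambda v\Vert ^2\le \phi (u,v) \]

for all \(\lambda \in (0,\lambda _0]\), \(u\in F(T_\lambda )\) and \(v\in E\).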

Lemma 2.10

Given initial points \(r_0,r_1\) in a 2-uniformly convex and uniformly smooth real Banach space, E, the following estimate concerning the sequence \(v_n:=J^{-1}\big (Jr_n+\mu _n(Jr_n-Jr_{n-1})\big )\) was established in Adamu et al. (2022b)

where \( w\in E\), \(\{\mu _n\}\subset (0,1)\) and \(\gamma \) is as defined in Lemma 2.3. For completeness, we shall give the proof here.

Proof

Using property P3, we have

Also, by Lemma 2.3, one can estimate \(v_n\) as follows:

Putting together equation (7) and inequality (9), we get

From (8), this implies that

\(\square \)
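The inertial point \(v_n=J^{-1}\big (Jr_n+\mu _n(Jr_n-Jr_{n-1})\big )\) of Lemma 2.10 extrapolates in the dual space rather than in E. The following Python sketch computes it in a finite section of \(l_p(\mathbb {R})\), assuming the closed form \(Jx=\Vert x\Vert _p^{2-p}\big (|x_i|^{p-1}\operatorname{sign}(x_i)\big )_i\) and \(J^{-1}\) given by the same formula on \(l_q\), \(\frac{1}{p}+\frac{1}{q}=1\). The function name `inertial_point`, the value \(\mu _n=0.5\) and the two starting points (the vectors \(a_0,a_1\) of Example 1 truncated to three coordinates) are hypothetical choices.

```python
import numpy as np


def duality_map(x, p):
    """Normalized duality map on l_p (finite section): ||x||_p^(2-p) |x|^(p-1) sign(x)."""
    nx = np.sum(np.abs(x) ** p) ** (1.0 / p)
    if nx == 0.0:
        return np.zeros_like(x)
    return nx ** (2 - p) * np.abs(x) ** (p - 1) * np.sign(x)


def inertial_point(r_n, r_prev, mu_n, p):
    """v_n = J^{-1}(J r_n + mu_n (J r_n - J r_{n-1})), J^{-1} being the duality map of l_q."""
    q = p / (p - 1)
    Jr, Jr_prev = duality_map(r_n, p), duality_map(r_prev, p)
    return duality_map(Jr + mu_n * (Jr - Jr_prev), q)  # extrapolate in E*, map back to E


r_prev = np.array([0.0, 1.0, 4.0])  # hypothetical r_{n-1}
r_n = np.array([4.0, 0.0, 2.0])  # hypothetical r_n
v_n = inertial_point(r_n, r_prev, mu_n=0.5, p=1.5)
print(v_n)
```

For \(p=2\), J is the identity and the sketch reduces to the familiar Hilbert-space inertial step \(r_n+\mu _n(r_n-r_{n-1})\); when \(r_n=r_{n-1}\) it returns \(r_n\).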

3 Main result

Theorem 3.1

Let E be a 2-uniformly convex and uniformly smooth real Banach space with dual space \(E^*\). Let \(A: E \rightarrow E^*\) be \(\alpha \)-inverse strongly monotone and let \(B: E \rightarrow 2^{E^*}\) be maximal monotone. Assume that the solution set \(\Omega = (A+B)^{-1}0\) is nonempty. Given \(a_0, a_1, u \in E\), generate \(\{a_n\}\subset E\) by:

where \(0<\tau _n\le \bar{\tau }_n\) and \( \bar{\tau }_n= {\left\{ \begin{array}{ll} \min \Big \{ \tau , \frac{\sigma _n}{\Vert Ja_n-Ja_{n-1}\Vert ^2}, \frac{\sigma _n}{\phi (a_n,a_{n-1})}\Big \}, &{} a_n\ne a_{n-1} , \\ \tau , &{} \text {otherwise}, \end{array}\right. }\)

\(\tau \in (0,1)\), \(\{\sigma _n\}\subset (0,1)\) with \(\sum _{n=1}^\infty \sigma _n<\infty \), \(0<\nu _n< 2\alpha \gamma \) with \(\gamma \) as defined in Lemma 2.3, \(\{\mu _n\} \subset (0,1)\) with \( \lim _{n\rightarrow \infty } \mu _n=0\) and \( \sum _{n=1}^\infty \mu _n=\infty \), and \(\{\varepsilon _n\}\subset (0,1)\) is nondecreasing. Then \(\{a_n\}\) converges strongly to \(z=\Pi _\Omega u\in \Omega \).
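The upper bound \(\bar{\tau }_n\) in Theorem 3.1 is cheap to evaluate: it guarantees \(\tau _n\Vert Ja_n-Ja_{n-1}\Vert ^2\le \sigma _n\) and \(\tau _n\phi (a_n,a_{n-1})\le \sigma _n\), both of which are summable. The Python sketch below computes \(\bar{\tau }_n\) on a finite section of \(l_p(\mathbb {R})\), assuming the closed form of J on \(l_p\) and measuring \(Ja_n-Ja_{n-1}\in E^*\) in the \(l_q\) norm, \(\frac{1}{p}+\frac{1}{q}=1\). The function name `tau_bar` is hypothetical; the usage line borrows \(\tau =0.001\), \(\sigma _n=\frac{1}{(n+1)^3}\) and the starting points of Example 1 (truncated to three coordinates).

```python
import numpy as np


def norm(x, p):
    """l_p norm of a finite vector."""
    return np.sum(np.abs(x) ** p) ** (1.0 / p)


def duality_map(x, p):
    """Normalized duality map on l_p (finite section)."""
    nx = norm(x, p)
    if nx == 0.0:
        return np.zeros_like(x)
    return nx ** (2 - p) * np.abs(x) ** (p - 1) * np.sign(x)


def tau_bar(a_n, a_prev, tau, sigma_n, p):
    """Upper bound for tau_n in Theorem 3.1, computed on a finite section of l_p."""
    if np.array_equal(a_n, a_prev):
        return tau
    q = p / (p - 1)
    J_prev = duality_map(a_prev, p)
    dJ = duality_map(a_n, p) - J_prev  # lives in the dual space l_q
    phi_val = norm(a_n, p) ** 2 - 2 * np.dot(a_n, J_prev) + norm(a_prev, p) ** 2
    return min(tau, sigma_n / norm(dJ, q) ** 2, sigma_n / phi_val)


a0 = np.array([0.0, 1.0, 4.0])
a1 = np.array([4.0, 0.0, 2.0])
print(tau_bar(a1, a0, tau=0.001, sigma_n=1.0 / (1 + 1) ** 3, p=1.5))
```

Choosing \(\tau _n=\bar{\tau }_n\), as done in Example 1, is the largest admissible value.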

Proof

We begin the proof by showing that \(\{a_n\}\) is bounded. Let \(z\in \Omega \). Then, using P2, Remark 1 and Lemma 2.10, we obtain that

If the maximum is \(\phi (z,u)\), we are done. Else, one can find an \(n_0\in \mathbb {N}\) such that for all \(n\ge n_0\),

By Lemma 2.8, \(\{\phi (z,a_n)\}\) has a limit. Hence, using P1, it is easy to deduce that \(\{a_n\}\) is bounded. Thus, \(\{y_n\}\) and \(\{w_n\}\) are also bounded.

Next, we show that \( \lim _{n\rightarrow \infty } a_n=z,\) where \(z=\Pi _\Omega u\) and \(\Pi \) is the generalized projection. Using Lemma 2.5 and Remark 1 we obtain the following estimate:

Thus,

To complete the proof, we distinguish two cases. First, we assume that there exists an \(n_0\in \mathbb {N}\) such that

Then, from inequality (14), we deduce that

Furthermore, observe that

Therefore,

We state here, without proof to avoid unnecessary repetition, that every weak cluster point of \(\{a_n\}\) belongs to \((A+B)^{-1}0\). The proof is standard; interested readers may see, e.g., page 10 of Kimura and Nakajo (2019).

Let \(z^*\) be a weak cluster point of \(\{a_n\}\). Then one can find a subsequence \(\{a_{n_k}\}\subset \{a_n\}\) such that

since \(z=\Pi _\Omega u\). Thus, by (15) we deduce that

We now have all the tools needed to prove that \( \lim _{n\rightarrow \infty } a_n =\Pi _\Omega u\). Using Lemmas 2.5, 2.2 and 2.10, and Remark 1, we get

By Lemma 2.6, we deduce from (18) that \( \lim _{n\rightarrow \infty }\phi (z,a_n)=0\), which, by Lemma 2.4, implies that \( \lim _{n\rightarrow \infty } a_n=z\).

If the above assumption does not hold for the sequence \(\{a_n\}\), then one can find a subsequence \(\{a_{m_j}\}\subset \{a_n\}\) such that

By Lemma 2.7, we have that

From inequality (14), using this index \(\{m_k\}\subset \mathbb {N}\) we have

It follows, using the same argument as above, that

From inequality (17), we get

By Lemma 2.6, we deduce from (19) that \( \lim _{k\rightarrow \infty } \phi (z,a_{m_k})=0\). Thus,

Therefore \( \limsup _{k\rightarrow \infty } \phi (z,a_k)=0\) and so, by Lemma 2.4, \( \lim _{k\rightarrow \infty } a_k=z\). This completes the proof. \(\square \)

4 An application and a numerical example

The goal of image recovery techniques is to restore an original image from a degraded observation of it. The convex optimization problem associated with image recovery is

\[ \min _{x\in H} f(x), \qquad (20) \]

where f is a convex differentiable functional on a real Hilbert space H. Since the solution of (20) can be highly sensitive to the degraded observation, problem (20) is in general ill-posed. To restore well-posedness, regularization techniques are employed; that is, one obtains a stable solution by adding a regularization term to (20), which gives the problem

\[ \min _{x\in H} \big \{ f(x)+\nu g(x)\big \}, \]

where \(\nu >0\) is a regularization parameter and g is a regularization function, which may be smooth or nonsmooth. In this work, we consider the classical image recovery problem, which is modeled by

\[ b=Lx+w, \qquad (21) \]

where \( x, \,w\) and b are the original image, the noise and the observed image, respectively, and L is a linear map. Problem (21) is ill-posed due to the nature of L. We use the \(l_1\)-regularizer to solve problem (21) via the model

\[ \min _{x\in H} \Big \{ \frac{1}{2}\Vert Lx-b\Vert ^2+\nu \Vert x\Vert _1\Big \}. \qquad (22) \]

Set \(Ax:=\nabla (\dfrac{1}{2}\Vert Lx-b\Vert ^2)=L^T(Lx-b)\) and \(Bx:=\partial (\nu \Vert x\Vert _1)\). Then x solves (22) if and only if \(0\in Ax+Bx\). Hence, we use algorithm (3) of Shehu (2019), algorithm (4) of Kimura and Nakajo (2019) and our proposed algorithm (11) to find a solution of (22).
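In \(\mathbb {R}^d\), the resolvent of \(B=\partial (\nu \Vert \cdot \Vert _1)\) with step \(\lambda \) is componentwise soft-thresholding with threshold \(\lambda \nu \), so a plain forward-backward step for the model with \(A=L^T(L\cdot -b)\) is the classical iterative shrinkage-thresholding (ISTA) update. The Python sketch below implements only this plain forward-backward step; it is not the inertial Halpern-type algorithm (11), nor algorithm (3) or (4). The function names, the mild three-point blur matrix and the sparse test signal are hypothetical stand-ins for the MATLAB motion blur used in the experiments.

```python
import numpy as np


def soft_threshold(y, t):
    """Resolvent (I + t*d||.||_1)^{-1}: componentwise shrinkage towards zero by t."""
    return np.sign(y) * np.maximum(np.abs(y) - t, 0.0)


def forward_backward_l1(L, b, nu, step, n_iter=500):
    """Plain forward-backward iteration for min 0.5*||Lx - b||^2 + nu*||x||_1.

    Forward part: A x = L^T (L x - b). Backward part: resolvent of
    B = d(nu*||.||_1) with the same step. Requires 0 < step < 2 / ||L||^2.
    """
    x = np.zeros(L.shape[1])
    for _ in range(n_iter):
        x = soft_threshold(x - step * L.T @ (L @ x - b), step * nu)
    return x


# Hypothetical mild 1-D blur: each sample keeps weight 2/3, 1/6 leaks to each neighbour.
d = 8
L = (4 * np.eye(d) + np.eye(d, k=1) + np.eye(d, k=-1)) / 6.0
x_true = np.zeros(d)
x_true[[2, 5]] = [1.0, -2.0]
b = L @ x_true + 0.01 * np.random.default_rng(1).standard_normal(d)
step = 1.0 / np.linalg.norm(L, 2) ** 2
x_hat = forward_backward_l1(L, b, nu=0.01, step=step)
print(np.round(x_hat, 3))
```

With L the identity, the limit is simply `soft_threshold(b, nu)`, which is a convenient sanity check.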

In our numerical experiments, we used the MATLAB blur function “P=fspecial('motion',30,40)” and added random noise. In algorithm (3) of Shehu (2019), we set \(x_1=L x + w\), \(\nu _n= 0.0001\) and \(\alpha _n=\frac{1}{n+1}\). In algorithm (4) of Kimura and Nakajo (2019), we set \(\nu _n=0.00001\), \(\gamma _n=\frac{1}{n+1}\), u to be zeros and \(x_1=L x + w\). In our proposed algorithm (11), we chose \(\tau _n=0.95\), \(\nu _n=0.0001\), \(\mu _n=\frac{1}{10n+1}\), \(\varepsilon _n=\frac{n}{n+1}\), \(x_0\) to be zeros and \(u=x_1=L x + w\). Finally, for all the algorithms, we used a tolerance of \(10^{-5}\) and a maximum of 100 iterations (n). The original test images (Abubakar, Barbra, Abdulkarim), their degraded versions and their restorations via algorithms (3), (4) and (11) are presented in Fig. 1.

Key to Fig. 1. In Fig. 1, the first column presents the original test images and the second column presents their distortion by random noise and motion blur. The third, fourth and fifth columns present the test images restored via algorithms (3), (4) and (11), respectively.

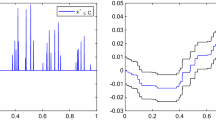

It is not easy to tell from Fig. 1 which algorithm performed better in the restoration process. To distinguish the performance of the algorithms, we use the signal-to-noise ratio (SNR), defined as

\[ \text{SNR}=20\log _{10}\frac{\Vert x\Vert _2}{\Vert x-x_n\Vert _2}, \]

where x is the test image and \(x_n\) is its estimate. The higher the SNR value of a restored image, the better the restoration achieved by the algorithm. In Table 1 and Fig. 2, we present the performance of algorithms (3), (4) and our proposed algorithm (11) in restoring the test images.
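For reproducibility, the SNR metric can be computed in a few lines. The Python sketch below assumes the common definition \(\text{SNR}=20\log _{10}\big (\Vert x\Vert _2/\Vert x-x_n\Vert _2\big )\) in decibels; the function name `snr_db` is hypothetical. For two-dimensional image arrays, `np.linalg.norm` with its default arguments returns the Frobenius norm, i.e. the \(l_2\) norm of the flattened image.

```python
import numpy as np


def snr_db(x, x_n):
    """SNR in dB, assuming SNR = 20*log10(||x||_2 / ||x - x_n||_2)."""
    x = np.asarray(x, dtype=float)
    x_n = np.asarray(x_n, dtype=float)
    return 20.0 * np.log10(np.linalg.norm(x) / np.linalg.norm(x - x_n))


print(snr_db([3.0, 4.0], [3.0, 4.5]))  # 20.0, since ||x|| = 5 and ||x - x_n|| = 0.5
```

A closer estimate gives a larger value, which matches the reading "higher SNR, better restoration".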

Graphical illustrations of the SNR values presented in Table 1

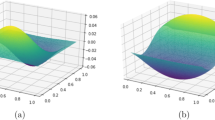

Example 1

(An Example in \(l_{\frac{3}{2}}(\mathbb {R})\)) Consider the subspace of \(l_{\frac{3}{2}}(\mathbb {R})\) defined by

Let \(k=3\). Let \(A: M_3(\mathbb {R}) \rightarrow M_3^*(\mathbb {R})\) and \(B: M_3(\mathbb {R}) \rightarrow M_3^*(\mathbb {R})\) be defined by

It is not difficult to verify that A is \(\frac{1}{4}\)-inverse strongly monotone and B is maximal monotone. Consequently, A is also 4-Lipschitz continuous. In addition, the solution set is \(\Omega =\{ (-0.5,-0.333,-0.166, 0, 0, \ldots ) \}\). In algorithm (3) of Shehu (2019), we set \(\alpha _n=\frac{1}{10,000n+1}\); in algorithm (4) of Kimura and Nakajo (2019), we set \(\gamma _n=\frac{1}{10,000n+1}\); and in our proposed algorithm (11), we set \(\tau =0.001\), \(\tau _n=\bar{\tau }_n\), \(\sigma _n=\frac{1}{(n+1)^3}\) and \(\mu _n=\frac{1}{10,000n+1}\). We take u to be the zero element of \(M_3(\mathbb {R})\), \(a_0=(0,1,4,0,0,0, \ldots )\) and \(a_1=(4,0,2,0,0,0, \ldots )\), with a maximum of \(n=300\) iterations and a tolerance of \(10^{-5}\). Finally, we study the behaviour of the algorithms as we vary the values of \(\nu _n\) (see Table 2).

5 Discussion

From the results presented in Table 2, we observe that the choice \(\nu _n=0.1\) gave the best approximation for algorithm (3) of Shehu (2019), while for algorithm (4) of Kimura and Nakajo (2019) the choice \(\nu _n=0.3\) gave the best approximation. Finally, the choice \(\nu _n=0.6\) or \(\nu _n=0.7\) gave the best approximation for our proposed algorithm (11). In this experiment, we saw that the step size \(\nu _n\) has a great influence on how well each algorithm approximates the solution. Moreover, with the best choice of \(\nu _n\) for each algorithm, our proposed algorithm (11) required the fewest iterations compared with algorithm (3) of Shehu (2019) and algorithm (4) of Kimura and Nakajo (2019).

6 Conclusion

This work presents a new inertial Halpern-type algorithm for solving problem (1) in certain Banach spaces. The proposed method was used in the restoration process of some distorted images. Furthermore, a numerical example in \(l_{\frac{3}{2}}(\mathbb {R})\) is presented to support the main theorem. Finally, the performance of the proposed algorithm is compared with that of some existing algorithms and from the simulations presented in Figs. 1 and 2, and Tables 1 and 2, the proposed algorithm appears to be competitive and promising.

Data availability

Data sharing is not applicable to this article.

References

Abubakar J, Sombut K, Ibrahim AH et al (2019) An accelerated subgradient extragradient algorithm for strongly pseudomonotone variational inequality problems. Thai J Math 18(1):166–187

Abubakar J, Kumam P, Ibrahim Abdulkarim H, Padcharoen A (2020a) Relaxed inertial Tseng’s type method for solving the inclusion problem with application to image restoration. Mathematics 8(5):818

Abubakar J, Kumam P, Rehman HU, Ibrahim AH (2020b) Inertial iterative schemes with variable step sizes for variational inequality problem involving pseudomonotone operator. Mathematics 8(4):609

Abubakar J, Kumam P, Taddele GH, Ibrahim AH, Sitthithakerngkiet K (2021) Strong convergence of alternated inertial CQ relaxed method with application in signal recovery. Comput Appl Math 40(8): 1–24

Abubakar J, Kumam P, Garba AI, Abdullahi MS, Ibrahim AH, Wachirapong J (2022) An efficient iterative method for solving split variational inclusion problem with applications. J Ind Manage Optim 18(6):4311

Adamu A, Adam AA (2021) Approximation of solutions of split equality fixed point problems with applications. Carpathian J Math 37(3):381–392

Adamu A, Deepho J, Ibrahim AH, Abubakar AB (2021) Approximation of zeros of sum of monotone mappings with applications to variational inequality problem and image processing. Nonlinear Funct Anal Appl 26:411–432

Adamu A, Kitkuan D, Padcharoen A, Chidume CE, Kumam P (2022a) Inertial viscosity-type iterative method for solving inclusion problems with applications. Math Comput Simul 194:445–459

Adamu A, Kitkuan D, Kumam P, Padcharoen A, Seangwattana T (2022b) Approximation method for monotone inclusion problems in real Banach spaces with applications. J Inequal Appl 2022(1):1–20

Alber Y, Ryazantseva I (2006) Nonlinear ill-posed problems of monotone type. Springer, Berlin

Alvarez F (2004) Weak convergence of a relaxed and inertial hybrid projection-proximal point algorithm for maximal monotone operators in Hilbert space. SIAM J Optim 14(3):773–782

Chidume CE, Ikechukwu SI, Adamu A (2018) Inertial algorithm for approximating a common fixed point for a countable family of relatively nonexpansive maps. Fixed Point Theory Appl 2018(1):9

Chidume CE, Adamu A, Minjibir MS, Nnyaba UV (2020a) On the strong convergence of the proximal point algorithm with an application to Hammerstein equations. J Fixed Point Theory Appl 22(3):1–21

Chidume CE, Adamu A, Okereke LC (2020b) Strong convergence theorem for some nonexpansive-type mappings in certain Banach spaces. Thai J Math 18(3):1537–1548

Chidume CE, Kumam P, Adamu A (2020c) A hybrid inertial algorithm for approximating solution of convex feasibility problems with applications. Fixed Point Theory Appl 2020(1):1–17

Chidume CE, Adamu A, Nnakwe MO (2020d) Strong convergence of an inertial algorithm for maximal monotone inclusions with applications. Fixed Point Theory Appl 2020(1):1–22

Chidume CE, Adamu A, Kumam P, Kitkuan D (2021a) Generalized hybrid viscosity-type forward-backward splitting method with application to convex minimization and image restoration problems. Numer Funct Anal Optim pp 1–22

Chidume CE, Adamu A, Nnakwe MO (2021b) An inertial algorithm for solving Hammerstein equations. Symmetry 13(3):376

Cholamjiak P, Sunthrayuth P, Singta A, Muangchoo K (2020) Iterative methods for solving the monotone inclusion problem and the fixed point problem in Banach spaces. Thai J Math 18(3):1225–1246

Cioranescu I (2012) Geometry of Banach spaces, duality mappings and nonlinear problems, vol 62. Springer, Berlin

Hong-Kun X (2002) Iterative algorithms for nonlinear operators. J Lond Math Soc 66(1):240–256

Ibrahim AH, Kumam P, Abubakar AB, Adamu A (2022) Accelerated derivative-free method for nonlinear monotone equations with an application. Numer Linear Algebra Appl 29(3): e2424

Kamimura S, Takahashi W (2002) Strong convergence of a proximal-type algorithm in a Banach space. SIAM J Optim 13(3):938–945

Kimura Y, Nakajo K (2019) Strong convergence for a modified forward–backward splitting method in Banach spaces. J Nonlinear Var Anal 3(1):5–18

Kitkuan D, Kumam P, Martínez-Moreno J (2019) Generalized Halpern-type forward–backward splitting methods for convex minimization problems with application to image restoration problems. Optimization, pp 1–25

Lions P-L (1978) Une méthode itérative de résolution d’une inéquation variationnelle. Isr J Math 31(2):204–208

Lorenz DA, Pock T (2015) An inertial forward–backward algorithm for monotone inclusions. J Math Imaging Vis 51 (2): 311–325

Luo Y (2020) Weak and strong convergence results of forward–backward splitting methods for solving inclusion problems in Banach spaces. J Nonlinear Convex Anal 21(2):341–353

Maingé P-E (2010) The viscosity approximation process for quasi-nonexpansive mappings in Hilbert spaces. Comput Math Appl 59(1):74–79

Nilsrakoo W, Saejung S (2011) Strong convergence theorems by Halpern–Mann iterations for relatively nonexpansive mappings in Banach spaces. Appl Math Comput 217(14):6577–6586

Pan C, Wang Y (2019) Convergence theorems for modified inertial viscosity splitting methods in Banach spaces. Mathematics 7(2):156

Passty GB (1979) Ergodic convergence to a zero of the sum of monotone operators in Hilbert space. J Math Anal Appl 72(2):383–390. https://doi.org/10.1016/0022-247X(79)90234-8

Phairatchatniyom P, Rehman H, Abubakar J, Kumam P, Martínez-Moreno J (2021) An inertial iterative scheme for solving split variational inclusion problems in real Hilbert spaces. Bangmod Int J Math Comput Sci 7(2):35–52

Polyak BT (1964) Some methods of speeding up the convergence of iteration methods. USSR Comput Math Math Phys 4(5):1–17

Qin X, Cho SY, Yao J-C (2020) Weak and strong convergence of splitting algorithms in Banach spaces. Optimization 69(2):243–267

Shehu Y (2019) Convergence results of forward-backward algorithms for sum of monotone operators in Banach spaces. Res Math 74(4):1–24

Taddele GH, Gebrie AG, Abubakar J (2021) An iterative method with inertial effect for solving multiple-set split feasibility problem. Bangmod Int J Math Comput Sci 7(2):53–73

Takahashi W, Wong N-C, Yao J-C et al (2012) Two generalized strong convergence theorems of Halpern’s type in Hilbert spaces and applications. Taiwan J Math 16(3):1151–1172

Xu H-K (1991) Inequalities in Banach spaces with applications. Nonlinear Anal Theory Methods Appl 16(12):1127–1138. https://doi.org/10.1016/0362-546X(91)90200-K

Yodjai P, Kumam P, Kitkuan D, Jirakitpuwapat W, Plubtieng S (2019) The Halpern approximation of three operators splitting method for convex minimization problems with an application to image inpainting. Bangmod Int J Math Comput Sci 5(2):58–75

Acknowledgements

The authors would like to thank the referees for their valuable comments and suggestions, which helped to improve the presentation of this paper. The second author gratefully acknowledges the King Mongkut’s University of Technology Thonburi’s Postdoctoral Fellowship and the Center of Excellence in Theoretical and Computational Science (TaCS-CoE) for their financial support. The third and fourth authors gratefully acknowledge the Department of Mathematics and Applied Mathematics at Sefako Makgatho Health Sciences University.

Funding

This research was financially supported by Rajamangala University of Technology Phra Nakhon (RMUTP) Research Scholarship.


Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Communicated by Antonio José Silva Neto.


Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Muangchoo, K., Adamu, A., Ibrahim, A.H. et al. An inertial Halpern-type algorithm involving monotone operators on real Banach spaces with application to image recovery problems. Comp. Appl. Math. 41, 364 (2022). https://doi.org/10.1007/s40314-022-02064-1
