Abstract

Recently, Sugihara et al. (Numer Linear Algebra Appl 27:1-25, 2020) studied the right preconditioned MINRES method for solving symmetric singular linear systems. In this paper, we discuss the left and right preconditioned MINRES methods for symmetric singular linear systems with singular preconditioners. The convergence of the preconditioned MINRES methods is proved under the condition of proper splitting. Numerical results demonstrate the effectiveness of preconditioned MINRES with singular preconditioners.



1 Introduction

Consider the following large sparse system of linear equations:

\[ \mathscr{A}x=b, \tag{1} \]

where \({\mathscr {A}}\in {\mathbb {R}}^{n\times n}\) is symmetric and singular, i.e., \(rank({\mathscr {A}})=m<n\), and \(x,b\in {\mathbb {R}}^{n}\). \({\mathscr {R}}({\mathscr {A}})\) and \({\mathscr {N}}({\mathscr {A}})\) denote the range and the null space of \({\mathscr {A}}\), respectively. In this paper, we consider the case in which (1) is consistent, i.e., \(b\in {\mathscr {R}}({\mathscr {A}})\).

For the solution of singular linear systems, Brown and Walker (1997) first studied the preconditioned GMRES method. Hayami et al. (2010) and Morikuni and Hayami (2015) discussed GMRES methods for least squares problems. To solve a symmetric linear system, one often uses the minimum residual (MINRES) method (Paige and Saunders 1975), which for symmetric systems is mathematically equivalent to the generalized minimal residual (GMRES) method (Saad and Schultz 1986; Saad 2003; William 2015). For general singular linear systems, Hayami and Sugihara (2011) provided a geometric view of Krylov subspace methods applied to singular systems by decomposing the algorithm into the \({\mathscr {R}}({\mathscr {A}})\) component and the \({\mathscr {R}}({\mathscr {A}})^{\perp }\) component. For symmetric singular linear systems, Sugihara et al. (2020) discussed the right preconditioned MINRES method with a symmetric positive definite (SPD) preconditioner \({\mathscr {M}}\). When the singular system is consistent and the initial guess satisfies \(x^{(0)} \in {{\mathscr {R}}({\mathscr {A}})} \), the right preconditioned MINRES algorithm can be decomposed into its \({\mathscr {R}}({\mathscr {A}})\) component and its \({\mathscr {R}}({\mathscr {A}})^{\perp _{{\mathscr {M}}^{-1}}}\) component, and the latter is zero. Here \({\mathscr {R}}({\mathscr {A}})^{\perp _{{\mathscr {M}}^{-1}}}\) is the orthogonal complement of \({\mathscr {R}}({\mathscr {A}})\) with respect to the inner product \((,)_{{\mathscr {M}}^{-1}}\).

Generally speaking, we want a preconditioner for a linear system to approximate the coefficient matrix well. Thus, when the linear system is nonsingular, we choose a nonsingular matrix as the preconditioner. When the linear system is singular, taking a singular matrix as the preconditioner may be more reasonable. In Zhang (2010), Zhang and Shen (2013), and Zhang and Wei (2010), we studied singular preconditioners for solving singular linear systems. In this paper, we discuss the left and right preconditioned MINRES methods for solving (1) with symmetric positive semi-definite (SPSD) preconditioners.

The rest of this paper is organized as follows. In Sect. 2, we present the left preconditioned MINRES algorithm, and in Sect. 3 we give its convergence analysis. In Sect. 4, we present the right preconditioned MINRES algorithm, and in Sect. 5 we give its convergence analysis. Numerical experiments are presented in Sect. 6, where three numerical examples illustrate the effectiveness of MINRES with singular preconditioners. Finally, some conclusions are drawn in Sect. 7.

2 Left preconditioned MINRES method

In this section, we study the left preconditioned MINRES method for solving (1) with singular preconditioners. We first give a brief introduction to MINRES (Paige and Saunders 1975). Let \(x^{(0)}\) be the initial guess and \(r^{(0)}=b-{\mathscr {A}}x^{(0)}\) the initial residual. MINRES is an iterative method that finds an approximate solution \(x^{(k)}\) satisfying

\[ x^{(k)}\in x^{(0)}+K_{k}(\mathscr{A},r^{(0)}),\qquad \|b-\mathscr{A}x^{(k)}\|_{2}=\min_{x\in x^{(0)}+K_{k}(\mathscr{A},r^{(0)})}\|b-\mathscr{A}x\|_{2}, \]

where \(K_{k}({\mathscr {A}},r^{(0)})=span(r^{(0)},{\mathscr {A}}r^{(0)},...,{\mathscr {A}}^{k-1}r^{(0)})\) is a Krylov subspace.

Definition 2.1

(Lee et al. 2006) For any \(x, y\in {\mathbb {R}}^{n}\), we define \((x,y)_{S}=y^{T}Sx\), where S is SPSD. Then we can define a semi-norm as follows:

\[ \|x\|_{S}=\sqrt{(x,x)_{S}}=\sqrt{x^{T}Sx}. \]
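A minimal NumPy sketch of Definition 2.1 follows, assuming the semi-norm is \(\|x\|_{S}=\sqrt{(x,x)_{S}}\). The helper names `semi_inner` and `semi_norm` are our own. It shows that \(\|x\|_{S}\) can vanish for a nonzero x when S is singular, which is why it is only a semi-norm.

```python
import numpy as np

def semi_inner(x, y, S):
    """(x, y)_S = y^T S x for a symmetric positive semi-definite matrix S."""
    return float(y @ S @ x)

def semi_norm(x, S):
    """||x||_S = sqrt((x, x)_S); tiny negative round-off is clipped to zero."""
    return float(np.sqrt(max(semi_inner(x, x, S), 0.0)))

S = np.array([[1.0, 1.0],
              [1.0, 1.0]])                         # SPSD, rank 1, N(S) = span([1, -1])
print(semi_norm(np.array([1.0, -1.0]), S))         # 0.0 although the vector is nonzero
print(semi_norm(np.array([1.0, 1.0]), S))          # 2.0
```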

Definition 2.2

(Berman and Plemmons 1974) The splitting \({\mathscr {A}}={\mathscr {M}}-{\mathscr {N}}\) is called a proper splitting of \({\mathscr {A}}\), if \({\mathscr {R}}({\mathscr {A}})={\mathscr {R}}({\mathscr {M}}), {\mathscr {N}}({\mathscr {A}})={\mathscr {N}}({\mathscr {M}})\).
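Definition 2.2 can be checked numerically through ranks. For square A and M, \(\mathscr{R}(A)=\mathscr{R}(M)\) holds exactly when rank(A) = rank(M) = rank([A, M]), and \(\mathscr{N}(A)=\mathscr{N}(M)\) holds exactly when the same is true for the stacked matrix [A; M]. The sketch below is our own illustration: the function name, the path-graph test matrix and the projected diagonal matrix are hypothetical. A rank test with a fixed tolerance is only reliable for small, well-scaled matrices.

```python
import numpy as np

def is_proper_splitting(A, M, tol=1e-10):
    """Numerically test R(A) = R(M) and N(A) = N(M) (Definition 2.2) via ranks."""
    def rank(X):
        return np.linalg.matrix_rank(X, tol=tol)
    rA, rM = rank(A), rank(M)
    same_range = rA == rM == rank(np.hstack([A, M]))   # equal column spaces
    same_null = rA == rM == rank(np.vstack([A, M]))    # equal row spaces <=> equal null spaces
    return bool(same_range and same_null)

# Path-graph Laplacian: symmetric, singular, N(A) = span([1, 1, 1]).
A = np.array([[1.0, -1.0, 0.0],
              [-1.0, 2.0, -1.0],
              [0.0, -1.0, 1.0]])
P = np.eye(3) - np.ones((3, 3)) / 3                # orthogonal projector onto R(A)
M_singular = P @ np.diag(np.diag(A)) @ P           # hypothetical SPSD choice with N(M) = N(A)
M_jacobi = np.diag(np.diag(A))                     # nonsingular, so R(M) != R(A)
print(is_proper_splitting(A, M_singular), is_proper_splitting(A, M_jacobi))  # True False
```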

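We assume that the preconditioner \({\mathscr {M}}\) is SPSD and that \({\mathscr {A}}={\mathscr {M}}-{\mathscr {N}}\) is a proper splitting. Let \({\mathscr {M}}^{+}\) be the Moore-Penrose inverse of \({\mathscr {M}}\), that is, the unique matrix satisfying

\[ \mathscr{M}\mathscr{M}^{+}\mathscr{M}=\mathscr{M},\quad \mathscr{M}^{+}\mathscr{M}\mathscr{M}^{+}=\mathscr{M}^{+},\quad (\mathscr{M}\mathscr{M}^{+})^{T}=\mathscr{M}\mathscr{M}^{+},\quad (\mathscr{M}^{+}\mathscr{M})^{T}=\mathscr{M}^{+}\mathscr{M}, \]

where \((\cdot )^{T}\) denotes the transpose of a matrix or a vector.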
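As an illustration only, NumPy's `numpy.linalg.pinv` computes the Moore-Penrose inverse, and the four Penrose conditions can be checked directly. The 3×3 matrix below is an arbitrary SPSD example. The last line also checks that \(\mathscr{M}\mathscr{M}^{+}\) is the orthogonal projector onto \(\mathscr{R}(\mathscr{M})\).

```python
import numpy as np

M = np.array([[2.0, 1.0, 0.0],
              [1.0, 2.0, 0.0],
              [0.0, 0.0, 0.0]])                    # SPSD with rank 2
Mp = np.linalg.pinv(M)                             # Moore-Penrose inverse M^+
penrose = [
    np.allclose(M @ Mp @ M, M),
    np.allclose(Mp @ M @ Mp, Mp),
    np.allclose((M @ Mp).T, M @ Mp),
    np.allclose((Mp @ M).T, Mp @ M),
]
print(all(penrose))                                # True
# M M^+ is the orthogonal projector onto R(M) = span(e1, e2).
print(np.allclose(M @ Mp, np.diag([1.0, 1.0, 0.0])))  # True
```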

With the preconditioner \({\mathscr {M}}\), we solve the following preconditioned system instead of the original system (1):

\[ \mathscr{M}^{+}\mathscr{A}x=\mathscr{M}^{+}b. \tag{3} \]

Note that when \({\mathscr {A}}={\mathscr {M}}-{\mathscr {N}}\) is a proper splitting, (1) and (3) are equivalent, that is, their solution sets are identical (cf. Zhang 2010).

It is known that \({\mathscr {M}}{\mathscr {M}}^{+}\) is the orthogonal projection onto \({\mathscr {R}}({\mathscr {M}})\) and notice \({\mathscr {R}}({\mathscr {A}})={\mathscr {R}}({\mathscr {M}})\), then \({\mathscr {M}}{\mathscr {M}}^{+}{\mathscr {A}}={\mathscr {A}}\). Since \({\mathscr {A}}\) and \({\mathscr {M}}\) are both symmetric, it holds \(({\mathscr {M}}^{+}{\mathscr {A}}x,y)_{{\mathscr {M}}}=y^{T}{\mathscr {A}}x=(x,{\mathscr {M}}^{+}{\mathscr {A}}y)_{{\mathscr {M}}}\). Thus \({\mathscr {M}}^{+}{\mathscr {A}}\) is self-adjoint with respect to \((,)_{{\mathscr {M}}}\), so we can apply MINRES if the semi-norm in Definition 2.1 is taken with respect to \({\mathscr {M}}\). Hence, we can find an approximate solution \(x^{(k)}\), which satisfies

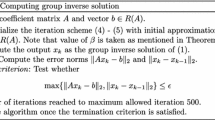
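The two facts used above can be checked on a random example: \(\mathscr{M}\mathscr{M}^{+}\mathscr{A}=\mathscr{A}\), and \(\mathscr{M}^{+}\mathscr{A}\) is self-adjoint in \((,)_{\mathscr{M}}\). The construction is an assumption made for illustration. An SPSD \(\mathscr{M}\) is built from an orthonormal basis of \(\mathscr{R}(\mathscr{A})\), which guarantees a proper splitting. The explicit `rcond` cuts off the numerically zero singular values of \(\mathscr{M}\).

```python
import numpy as np

rng = np.random.default_rng(0)
n, m = 6, 4
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
Q1 = Q[:, :m]                                      # orthonormal basis of R(A)
A = Q1 @ np.diag([3.0, -1.0, 2.0, 0.5]) @ Q1.T     # symmetric, indefinite, rank m
G = rng.standard_normal((m, m))
M = Q1 @ (G @ G.T + np.eye(m)) @ Q1.T              # SPSD, R(M) = R(A): proper splitting
Mp = np.linalg.pinv(M, rcond=1e-10)                # cut off numerically zero singular values

x, y = rng.standard_normal(n), rng.standard_normal(n)
lhs = y @ M @ (Mp @ A @ x)                         # (M^+ A x, y)_M
rhs = (Mp @ A @ y) @ M @ x                         # (x, M^+ A y)_M
print(np.allclose(M @ Mp @ A, A))                  # True: M M^+ A = A
print(np.isclose(lhs, rhs), np.isclose(lhs, y @ A @ x))  # True True
```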

The left preconditioned MINRES method is given in Algorithm 1.
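Algorithm 1 is not reproduced here, so the following is only a minimal dense NumPy sketch of the idea. It runs the Lanczos process for \(\mathscr{M}^{+}\mathscr{A}\) in the \((,)_{\mathscr{M}}\) semi-inner product and minimizes the residual semi-norm over the Krylov subspace. For clarity, it stores the whole Lanczos basis and re-solves the small tridiagonal least-squares problem at every step. A production MINRES code would use Givens-rotation recurrences instead. The following are hypothetical choices made for illustration and are not the preconditioners of Sect. 6:

- the function `left_pminres`,
- the path-graph test matrix,
- the projected diagonal preconditioner \(P\,\mathrm{diag}(\mathscr{A})\,P\).

```python
import numpy as np

def left_pminres(A, M, b, x0=None, tol=1e-10, maxiter=None):
    """Sketch: MINRES on M^+ A x = M^+ b, minimizing ||M^+ b - M^+ A x||_M.

    Lanczos runs in the (.,.)_M semi-inner product; for x0 in R(A) all iterates
    stay in R(A), where (.,.)_M is a genuine inner product under a proper splitting.
    """
    n = A.shape[0]
    Mp = np.linalg.pinv(M, rcond=1e-10)            # Moore-Penrose inverse M^+
    x0 = np.zeros(n) if x0 is None else x0
    maxiter = n if maxiter is None else maxiter
    r0 = Mp @ (b - A @ x0)                         # preconditioned initial residual
    beta1 = np.sqrt(max(r0 @ M @ r0, 0.0))         # ||M^+ r^(0)||_M
    if beta1 == 0.0:
        return x0, 0
    V, alphas, betas = [r0 / beta1], [], []
    x = x0
    for k in range(maxiter):
        w = Mp @ (A @ V[k])                        # apply M^+ A
        alpha = V[k] @ M @ w                       # (M^+ A v_k, v_k)_M
        w = w - alpha * V[k]
        if k > 0:
            w = w - betas[-1] * V[k - 1]
        beta = np.sqrt(max(w @ M @ w, 0.0))
        alphas.append(alpha)
        betas.append(beta)
        T = np.zeros((k + 2, k + 1))               # (k+2) x (k+1) Lanczos tridiagonal matrix
        T[:k + 1, :] = np.diag(alphas) + np.diag(betas[:-1], 1) + np.diag(betas[:-1], -1)
        T[k + 1, k] = beta
        rhs = np.zeros(k + 2)
        rhs[0] = beta1
        y = np.linalg.lstsq(T, rhs, rcond=None)[0]  # small least-squares problem
        x = x0 + np.column_stack(V) @ y
        res = np.linalg.norm(rhs - T @ y)          # equals ||M^+ b - M^+ A x||_M
        if res <= tol * beta1 or beta <= tol * beta1:
            return x, k + 1
        V.append(w / beta)
    return x, maxiter

n = 8
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
A[0, 0] = A[-1, -1] = 1.0                          # path-graph Laplacian: N(A) = span(ones)
P = np.eye(n) - np.ones((n, n)) / n                # orthogonal projector onto R(A)
M = P @ np.diag(np.diag(A)) @ P                    # hypothetical SPSD preconditioner, N(M) = N(A)
b = A @ np.arange(n, dtype=float)                  # consistent right-hand side
x, its = left_pminres(A, M, b)
print(its, np.linalg.norm(b - A @ x))
```

This is a consistent system with \(x^{(0)}=0\in\mathscr{R}(\mathscr{A})\), so Theorem 3.3 predicts at most \(m=n-1\) iterations. In floating point, the finite-termination property holds only approximately.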

3 Convergence analysis of left preconditioned MINRES method

In this section, we discuss the convergence properties of the left preconditioned MINRES with the preconditioner \({\mathscr {M}}\).

Theorem 3.1

Denote \({\mathscr {R}}({\mathscr {A}})^{\perp }|_{S}=\{z\,|\,(z,y)_{S}=0,\ \forall y\in {{\mathscr {R}}{({\mathscr {A}})}}\}\). If S is an SPSD matrix and \({\mathscr {A}}=S-(S-{\mathscr {A}})\) is a proper splitting, then \({\mathscr {R}}({\mathscr {A}})^{\perp }|_{S}={\mathscr {N}}(S)\).

Proof

First, for any \( z\in {{\mathscr {N}}(S)}\), \(Sz=0\) holds. Hence, for any \(y\in {{\mathscr {R}}{({\mathscr {A}})}}\), \(y^{T}Sz=0\), then \( z\in {\mathscr {R}}({\mathscr {A}})^{\perp }|_{S}\). So \({\mathscr {N}}(S)\subseteqq {\mathscr {R}}({\mathscr {A}})^{\perp }|_{S}\).

Next, we prove \({\mathscr {R}}({\mathscr {A}})^{\perp }|_{S}\subseteqq {\mathscr {N}}(S) \). Let \( z\in {{\mathscr {R}}({\mathscr {A}})^{\perp }|_{S}}\). Since S is an SPSD matrix, it holds \({\mathbb {R}}^{n}={\mathscr {R}}(S)\overset{\perp }{\oplus } {\mathscr {N}}(S)\), so there exist \(x_{1}\in {\mathscr {N}}(S)\) and \(y_{1}\in {\mathscr {R}}(S)\) such that \(z=x_{1}+y_{1}\). Since \(Sx_{1}=0\), for any \( y\in {{\mathscr {R}}{({\mathscr {A}})}}\) it holds

\[ 0=(z,y)_{S}=y^{T}S(x_{1}+y_{1})=y^{T}Sy_{1}. \tag{4} \]

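Since S is SPSD and \(rank(S)=rank({\mathscr {A}})=m\) by the proper splitting, we can write \(S=U{\hat{\Sigma }}U^{T}\), where \({\hat{\Sigma }}=diag({\hat{\sigma }}_{1},...,{\hat{\sigma }}_{m},0,...,0)\in {\mathbb {R}^{n\times n}}\), \(\hat{\sigma }_{i}>0, i=1,2,...,m\), and U is an orthogonal matrix. S can be decomposed as

\[ S=S^{\frac{1}{2}}S^{\frac{1}{2}}, \]

in which

\[ S^{\frac{1}{2}}=U\hat{\Sigma}^{\frac{1}{2}}U^{T},\qquad \hat{\Sigma}^{\frac{1}{2}}=diag(\hat{\sigma}_{1}^{\frac{1}{2}},...,\hat{\sigma}_{m}^{\frac{1}{2}},0,...,0). \]

Since \({\hat{\sigma }}_{i}^{\frac{1}{2}}>0\), \(i=1,2,...,m\), \(S^{\frac{1}{2}}\) is SPSD, and it is easy to see that \({\mathscr {N}}(S)={\mathscr {N}}(S^{\frac{1}{2}})\).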

Since \({\mathscr {A}}=S-(S-{\mathscr {A}})\) is a proper splitting, by Definition 2.2 we have \({\mathscr {R}}({\mathscr {A}})={\mathscr {R}}(S),{\mathscr {N}}({\mathscr {A}})={\mathscr {N}}(S)\). In particular, \(y_{1}\in {\mathscr {R}}(S)={\mathscr {R}}({\mathscr {A}})\), so we may let \(y=y_{1}\) in (4), which gives

\[ y_{1}^{T}Sy_{1}=\|S^{\frac{1}{2}}y_{1}\|_{2}^{2}=0. \]

Hence \(y_{1}\in {\mathscr {N}}(S^{\frac{1}{2}})={\mathscr {N}}(S)\). Since also \(y_{1}\in {\mathscr {R}}(S)\) and \({\mathscr {R}}(S)\cap {\mathscr {N}}(S)=\{0\}\), we get \(y_{1}=0\). It follows that \(z=x_{1}\in {\mathscr {N}}(S)\), so \({\mathscr {R}}({\mathscr {A}})^{\perp }|_{S}\subseteqq {\mathscr {N}}(S)\). Hence, \({\mathscr {R}}({\mathscr {A}})^{\perp }|_{S}={\mathscr {N}}(S)\), which finishes the proof. \(\square \)
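Theorem 3.1 can also be observed numerically. For any basis Y of \(\mathscr{R}(\mathscr{A})\), \(\mathscr{R}(\mathscr{A})^{\perp}|_{S}\) is the null space of \(Y^{T}S\), and two subspaces coincide exactly when their orthogonal projectors do. The random construction of \(\mathscr{A}\) and S is an assumption, chosen so that the splitting is proper.

```python
import numpy as np

def null_basis(X, tol=1e-10):
    """Orthonormal basis of N(X), read off from the full SVD."""
    _, s, Vt = np.linalg.svd(X)
    r = int(np.sum(s > tol))
    return Vt[r:].T

rng = np.random.default_rng(1)
n, m = 5, 3
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
Y = Q[:, :m]                                       # orthonormal basis of R(A)
A = Y @ np.diag([1.0, -2.0, 4.0]) @ Y.T            # symmetric, rank m, R(A) = R(Y)
G = rng.standard_normal((m, m))
S = Y @ (G @ G.T + np.eye(m)) @ Y.T                # SPSD with R(S) = R(A): proper splitting

perp_S = null_basis(Y.T @ S)                       # {z : (z, y)_S = 0 for all y in R(A)}
null_S = null_basis(S)
print(perp_S.shape[1], null_S.shape[1])            # 2 2
print(np.allclose(perp_S @ perp_S.T, null_S @ null_S.T))  # True
```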

Lemma 3.2

(Saad and Schultz 1986) If \({\mathscr {A}}\in {{\mathbb {R}}^{n\times n}}\) is nonsingular, then GMRES terminates in at most n steps.

Theorem 3.3

Suppose \({\mathscr {M}}\) is an SPSD matrix, \({\mathscr {A}}={\mathscr {M}}-{\mathscr {N}}\) is a proper splitting, and \(rank({\mathscr {A}})=m\). Then the left preconditioned MINRES method determines a solution x of

without breakdown and terminates in at most m steps for all \(b\in {\mathscr {R}}({\mathscr {A}})\) and all initial guesses \(x^{(0)}\in {{\mathscr {R}}({\mathscr {A}})}\).

Proof

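Since \({\mathscr {M}}\) is SPSD with \(rank({\mathscr {M}})=rank({\mathscr {A}})=m\), it holds \({\mathscr {M}}=V{\Sigma }V^{T}\), where \(\Sigma =diag(\sigma _{1},...,\sigma _{m},0,...,0)\in {{\mathbb {R}}^{n\times n}}\), \(\sigma _{i}>0, i=1,2,...,m\), and V is an orthogonal matrix. Then we have \({\mathscr {M}}^{+}=V{\Sigma }^{+}V^{T}\) with \({\Sigma }^{+}=diag(\sigma _{1}^{-1},...,\sigma _{m}^{-1},0,...,0)\). \({\mathscr {M}}\) and \({\mathscr {M}}^{+}\) can be decomposed as

\[ \mathscr{M}=\mathscr{M}^{\frac{1}{2}}\mathscr{M}^{\frac{1}{2}},\qquad \mathscr{M}^{+}=\mathscr{M}^{+\frac{1}{2}}\mathscr{M}^{+\frac{1}{2}}, \]

in which

\[ \mathscr{M}^{\frac{1}{2}}=V\,diag(\sigma_{1}^{\frac{1}{2}},...,\sigma_{m}^{\frac{1}{2}},0,...,0)\,V^{T},\qquad \mathscr{M}^{+\frac{1}{2}}=V\,diag(\sigma_{1}^{-\frac{1}{2}},...,\sigma_{m}^{-\frac{1}{2}},0,...,0)\,V^{T}. \]

Since \(\sigma _{i}^{\frac{1}{2}}>0\) and \(\sigma _{i}^{-\frac{1}{2}}>0\), \(i=1,2,...,m\), both \({\mathscr {M}}^{\frac{1}{2}}\) and \({\mathscr {M}}^{+\frac{1}{2}}\) are SPSD. \({\mathscr {A}}={\mathscr {M}}-{\mathscr {N}}\) is a proper splitting. Moreover, \({\mathscr {M}}\), \({\mathscr {M}}^{+}\), \({\mathscr {M}}^{\frac{1}{2}}\) and \({\mathscr {M}}^{+\frac{1}{2}}\) have the same eigenvectors and the same zero eigenvalues. Hence it holds

\[ \mathscr{N}(\mathscr{A})=\mathscr{N}(\mathscr{M})=\mathscr{N}(\mathscr{M}^{+})=\mathscr{N}(\mathscr{M}^{\frac{1}{2}})=\mathscr{N}(\mathscr{M}^{+\frac{1}{2}}) \tag{8} \]

and

\[ \mathscr{R}(\mathscr{A})=\mathscr{R}(\mathscr{M})=\mathscr{R}(\mathscr{M}^{+})=\mathscr{R}(\mathscr{M}^{\frac{1}{2}})=\mathscr{R}(\mathscr{M}^{+\frac{1}{2}}). \tag{9} \]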

Let \(q_{1}, q_{2},..., q_{m}\) be an orthonormal basis of \({\mathscr {R}}({\mathscr {A}})\) and \( q_{m+1}, q_{m+2},..., q_{n}\) an orthonormal basis of \({\mathscr {N}}({\mathscr {A}})\), both with respect to the Euclidean inner product.

Let

\[ \hat{q}_{i}=\mathscr{M}^{+\frac{1}{2}}q_{i},\qquad i=1,2,...,m. \tag{10} \]

We define the matrices \( Q_{1}, Q_{2}, \hat{Q}_{1}, \hat{Q}\) as follows:

\[ Q_{1}=[q_{1},...,q_{m}],\quad Q_{2}=[q_{m+1},...,q_{n}],\quad \hat{Q}_{1}=[\hat{q}_{1},...,\hat{q}_{m}],\quad \hat{Q}=[\hat{Q}_{1},Q_{2}]. \tag{11} \]

We now show that \({\hat{q}}_{1},{\hat{q}}_{2},...,{\hat{q}}_{m}\) form an orthonormal basis of \({\mathscr {R}}({\mathscr {A}}) \) with respect to \((,)_{{\mathscr {M}}}\).

From (10) and (11), it holds \({\hat{q}}_{i}\in {\mathscr {R}}({\mathscr {M}}^{+\frac{1}{2}})\), \(i=1,2,...,m\), and

\[ \hat{Q}_{1}=\mathscr{M}^{+\frac{1}{2}}Q_{1}, \tag{12} \]

so \({\mathscr {M}}^{\frac{1}{2}}{\hat{Q}}_{1}={\mathscr {M}}^{\frac{1}{2}}{\mathscr {M}}^{+\frac{1}{2}}Q_{1}\). Since \({\mathscr {R}}( Q_{1})={\mathscr {R}}({\mathscr {A}})\), (9) gives \({\mathscr {R}}( Q_{1})={\mathscr {R}}({\mathscr {M}}^{\frac{1}{2}})\). Since \({\mathscr {M}}^{\frac{1}{2}}{\mathscr {M}}^{+\frac{1}{2}}\) is the orthogonal projection onto \({\mathscr {R}}({\mathscr {M}}^{\frac{1}{2}})\), it holds \({\mathscr {M}}^{\frac{1}{2}}{\mathscr {M}}^{+\frac{1}{2}} Q_{1}= Q_{1}\). Hence,

\[ \mathscr{M}^{\frac{1}{2}}\hat{Q}_{1}=Q_{1}. \tag{13} \]

By (12) and (13), we have \(rank({\hat{Q}}_{1})=rank(Q_{1})=m\). Since \({\mathscr {R}}({\mathscr {M}}^{+\frac{1}{2}})={\mathscr {R}}({\mathscr {A}})\) by (9), this implies that \({\hat{q}}_{1},{\hat{q}}_{2},...,{\hat{q}}_{m}\) form a basis of \({\mathscr {R}}({\mathscr {A}})\). Moreover, \({\mathscr {R}}(Q_{2})={\mathscr {N}}({\mathscr {A}})\), and \({\mathbb {R}}^{n}={\mathscr {R}}({\mathscr {A}})\oplus {\mathscr {N}}({\mathscr {A}})\) because \({\mathscr {A}}\) is symmetric, so \({\hat{Q}}\) is nonsingular.

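Since

\[ (\hat{q}_{i},\hat{q}_{i})_{\mathscr{M}}=q_{i}^{T}\mathscr{M}^{+\frac{1}{2}}\mathscr{M}\mathscr{M}^{+\frac{1}{2}}q_{i}=q_{i}^{T}\mathscr{M}\mathscr{M}^{+}q_{i}=q_{i}^{T}q_{i}=1, \]

and, in the same way, \((\hat{q}_{i},\hat{q}_{j})_{{\mathscr {M}}}=0 \) when \(i\not =j\), \(i, j=1,2,...,m\), we have

\[ \hat{Q}_{1}^{T}\mathscr{M}\hat{Q}_{1}=I_{m}. \tag{14} \]

Therefore, \({\hat{q}}_{1},{\hat{q}}_{2},...,{\hat{q}}_{m}\) form an orthonormal basis of \({\mathscr {R}}({\mathscr {A}})\) with respect to \((,)_{{\mathscr {M}}}\).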
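The following is a small numerical check of this construction on a random proper-splitting example (all names are illustrative). \(\mathscr{M}^{\frac12}\) and \(\mathscr{M}^{+\frac12}\) are formed from an eigendecomposition. Then \(\hat{Q}_1=\mathscr{M}^{+\frac12}Q_1\) is tested for \(\mathscr{M}\)-orthonormality, and \(\hat{Q}=[\hat{Q}_1,Q_2]\) is tested for nonsingularity. The helper `psd_power` is hypothetical.

```python
import numpy as np

def psd_power(M, p, tol=1e-10):
    """M^p on R(M) for a symmetric PSD M (zero on N(M)); p = -0.5 gives M^(+1/2)."""
    w, V = np.linalg.eigh(M)
    wp = np.array([wi ** p if wi > tol else 0.0 for wi in w])
    return (V * wp) @ V.T

rng = np.random.default_rng(0)
n, m = 6, 4
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
Q1, Q2 = Q[:, :m], Q[:, m:]                        # orthonormal bases of R(A) and N(A)
A = Q1 @ np.diag([3.0, -1.0, 2.0, 0.5]) @ Q1.T
G = rng.standard_normal((m, m))
M = Q1 @ (G @ G.T + np.eye(m)) @ Q1.T              # SPSD, proper splitting of A

Q1_hat = psd_power(M, -0.5) @ Q1                   # Q1_hat = M^(+1/2) Q1
Q_hat = np.hstack([Q1_hat, Q2])
print(np.allclose(Q1_hat.T @ M @ Q1_hat, np.eye(m)))   # True: M-orthonormal columns
print(np.allclose(psd_power(M, 0.5) @ Q1_hat, Q1))     # True: M^(1/2) Q1_hat = Q1
print(np.linalg.matrix_rank(Q_hat) == n)               # True: Q_hat is nonsingular
```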

From (14), we obtain

Evidently,

From (8) and \({\mathscr {R}}(Q_{2})={\mathscr {N}}({\mathscr {A}})\), we have \({\mathscr {M}}Q_{2}=0\). Hence it holds

Because \({\hat{Q}}\) is nonsingular, we obtain

Since \({\mathscr {M}}^{+}{\mathscr {M}}\hat{Q}_{1}=\hat{Q}_{1}\), it holds

Let \(\hat{r}={\mathscr {M}}^{+}b-{\mathscr {M}}^{+}{\mathscr {A}}x\). Since \({\mathscr {R}}({\mathscr {M}}^{+})={\mathscr {R}}({\mathscr {A}})\), it holds \(\hat{r}\in {\mathscr {R}}({\mathscr {A}})\). Therefore, there exists \(\hat{r}_{1}\in {\mathbb {R}}^{m}\) such that

Together with (15), it holds

Let

Then

Noticing \({\mathscr {A}} Q_{2}=0\), it holds

Since \(rank({\mathscr {A}})=m\) and \(\hat{Q}\) is nonsingular, then \(rank(\hat{Q}^{T}{\mathscr {A}}\hat{Q})=m\). Together with (25), we have \(rank(\hat{A}_{1})=rank(\hat{Q}^{T}{\mathscr {A}}\hat{Q})=m\). That is, \(\hat{A}_{1}\) is nonsingular.

For symmetric linear systems, GMRES is mathematically equivalent to MINRES. Since GMRES determines a least squares solution without breakdown for nonsingular systems (Saad and Schultz 1986), MINRES determines a least squares solution without breakdown for the symmetric nonsingular system \(\hat{A}_{1}\hat{z}_{1}=\hat{b}_{1}\). By Lemma 3.2, MINRES converges to the solution of

in at most m iterations.

Therefore, the left preconditioned MINRES determines the solution \(x^{(k)}\) of

without breakdown for all \(b\in {\mathscr {R}}({\mathscr {A}})\) and all \(x^{(0)}\in { {\mathscr {R}}({\mathscr {A}})}\), and terminates in at most m steps, which finishes the proof. \(\square \)

Since both b and \(x^{(0)}\) lie in \({\mathscr {R}}({\mathscr {A}})\), the left preconditioned MINRES (Algorithm 1) can be decomposed into its \({\mathscr {R}}({\mathscr {A}})\) component, while its \({\mathscr {R}}({\mathscr {A}})^{\perp }|_{\mathscr {M}^{+}}\) component is zero. This gives the decomposed left preconditioned MINRES algorithm (Algorithm 2). Algorithm 2 is equivalent to applying MINRES to \({\hat{A}}_{1}{\hat{z}}_{1}={\hat{b}}_{1}\) and then computing \(x={\hat{Q}}_{1}{\hat{z}}_{1}\).

Notice that if the conditions in Theorem 3.3 are satisfied, then \(\hat{z}^{(j)}_{1}\) converges to \(\hat{A}^{-1}_{1}\hat{Q}^{T}_{1}b\) and \(x^{(j)}\) converges to \(\hat{Q}_{1}\hat{A}^{-1}_{1}\hat{Q}^{T}_{1}b\).
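The limit in the preceding paragraph can be formed explicitly on a small example. The sketch below uses a random proper-splitting example of our own. It builds \(\hat{A}_1=\hat{Q}_1^T\mathscr{A}\hat{Q}_1\) and \(\hat{b}_1=\hat{Q}_1^Tb\) and checks that \(x=\hat{Q}_1\hat{A}_1^{-1}\hat{b}_1\) solves (1). This x also lies in \(\mathscr{R}(\mathscr{A})\), so it coincides with the pseudoinverse solution \(\mathscr{A}^{+}b\), which the last line confirms.

```python
import numpy as np

def psd_power(M, p, tol=1e-10):
    """M^p on R(M) for a symmetric PSD matrix M (zero on N(M))."""
    w, V = np.linalg.eigh(M)
    wp = np.array([wi ** p if wi > tol else 0.0 for wi in w])
    return (V * wp) @ V.T

rng = np.random.default_rng(0)
n, m = 6, 4
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
Q1 = Q[:, :m]
A = Q1 @ np.diag([3.0, -1.0, 2.0, 0.5]) @ Q1.T     # symmetric, singular
G = rng.standard_normal((m, m))
M = Q1 @ (G @ G.T + np.eye(m)) @ Q1.T              # SPSD, proper splitting of A
b = A @ rng.standard_normal(n)                     # consistent right-hand side

Q1_hat = psd_power(M, -0.5) @ Q1                   # M-orthonormal basis of R(A)
A1_hat = Q1_hat.T @ A @ Q1_hat                     # reduced m x m matrix
b1_hat = Q1_hat.T @ b
x = Q1_hat @ np.linalg.solve(A1_hat, b1_hat)       # limit of the Algorithm 2 iterates
print(np.allclose(A @ x, b))                       # True: x solves (1)
print(np.allclose(x, np.linalg.pinv(A, rcond=1e-10) @ b))  # True: x = A^+ b
```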

When the coefficient matrix of (1) is SPSD, we can estimate the convergence rate of the left preconditioned MINRES algorithm (Algorithm 1), or equivalently of Algorithm 2. Recall from the proof of Theorem 3.3 that \(\hat{A}_{1}=\hat{Q}^{T}_1{\mathscr {A}}\hat{Q}_{1}\) is nonsingular. Since \({\mathscr {A}}\) is SPSD, \(\hat{A}_{1}\) is SPD. Hence there exist an orthogonal matrix \(\Phi \) and a diagonal matrix \(\Theta =diag(\theta _1,\theta _2,\cdots ,\theta _m)\) with \(0<\theta _1\le \theta _2\le \cdots \le \theta _m\) such that \(\hat{A}_{1}=\Phi \Theta \Phi ^T\).

Theorem 3.4

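Assume the conditions in Theorem 3.3 are satisfied and \({\mathscr {A}}\) is SPSD. Then the residual norm \(\|\hat{r}^{(j)}_{1}\|_{2}=\|\hat{b}_{1}-\hat{A}_{1}\hat{z}^{(j)}_{1}\|_{2}\) achieved by the jth step of Algorithm 2 satisfies the inequality

\[ \|\hat{r}^{(j)}_{1}\|_{2}\le \left(1-\frac{\theta_{1}^{2}}{\theta_{m}^{2}}\right)^{\frac{j}{2}}\|\hat{r}^{(0)}_{1}\|_{2}. \tag{26} \]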

Proof

Let \({\mathbb {P}}_j\) be the set of polynomials p of degree not exceeding j that satisfy the constraint \(p(0)=1\). It is known that

Let \(p_1(\mu )=1+\alpha \mu \in {\mathbb {P}}_1\). Then \(p^j_1(\mu )\in {\mathbb {P}}_j\). Obviously it holds

Notice

and for any \(\alpha <0\) it holds

Then

Taking \(\alpha =-\frac{\theta _1}{\theta ^2_m}\), we have

Together with (27), (28) and (29) we obtain (26), which finishes the proof. \(\square \)
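Assume the bound (26) has the form \(\|\hat{r}^{(j)}_{1}\|_2\le(1-\theta_1^2/\theta_m^2)^{j/2}\|\hat{r}^{(0)}_{1}\|_2\). This is the form the choice \(\alpha=-\theta_1/\theta_m^2\) produces: for \(\alpha<0\) and \(\mu\in[\theta_1,\theta_m]\), \((1+\alpha\mu)^2\le 1+2\alpha\theta_1+\alpha^2\theta_m^2\), and at that \(\alpha\) the right-hand side equals \(1-\theta_1^2/\theta_m^2\). Under this assumption, the bound can be compared with actual minimal residuals. The helper below computes the minimal residuals over the Krylov subspaces directly, using an orthonormal basis. It is a checking tool, not a solver, and the diagonal test matrix is an arbitrary choice.

```python
import numpy as np

def minres_residual_norms(A1, b1, jmax):
    """Minimal ||b1 - A1 z||_2 over z in K_j(A1, b1) for j = 0..jmax (z_0 = 0)."""
    norms = [np.linalg.norm(b1)]
    K = (b1 / norms[0])[:, None]                   # orthonormal basis of K_1
    for _ in range(jmax):
        AK = A1 @ K
        c = np.linalg.lstsq(AK, b1, rcond=None)[0]
        norms.append(np.linalg.norm(b1 - AK @ c))
        # Extend to the next Krylov subspace and re-orthonormalize.
        K = np.linalg.qr(np.column_stack([K, A1 @ K[:, -1]]))[0]
    return norms

theta = np.linspace(1.0, 4.0, 10)                  # eigenvalues theta_1 <= ... <= theta_m
A1 = np.diag(theta)                                # SPD stand-in for hat{A}_1 (Phi = I)
b1 = np.ones(10)
norms = minres_residual_norms(A1, b1, 6)
rate = np.sqrt(1.0 - (theta[0] / theta[-1]) ** 2)  # (1 - theta_1^2 / theta_m^2)^(1/2)
for j, rj in enumerate(norms):
    print(j, rj, rate ** j * norms[0])             # observed residual vs. bound
```

The bound is typically far from sharp, since it only uses a first-degree polynomial raised to the jth power.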

4 Right preconditioned MINRES method

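Using the preconditioner \({\mathscr {M}}\) defined in Sect. 2, we solve the following system of linear equations:

\[ \mathscr{A}\mathscr{M}^{+}z=b,\qquad x=\mathscr{M}^{+}z. \tag{30} \]

Making use of Definition 2.1, we define a semi-norm

\[ \|x\|_{\mathscr{M}^{+}}=\sqrt{(x,x)_{\mathscr{M}^{+}}}=\sqrt{x^{T}\mathscr{M}^{+}x}. \]

Note that \(({\mathscr {A}}{\mathscr {M}}^{+}x,y)_{{\mathscr {M}}^{+}}=y^{T}{\mathscr {M}}^{+}{\mathscr {A}}{\mathscr {M}}^{+}x= (x,{\mathscr {A}}{\mathscr {M}}^{+}y)_{{\mathscr {M}}^{+}}\), which means that \({\mathscr {A}}{\mathscr {M}}^{+}\) is self-adjoint with respect to \((,)_{{\mathscr {M}}^{+}}\). We apply MINRES to (30) with the semi-norm \(\|\cdot \|_{{\mathscr {M}}^{+}}\), that is, we find an approximate solution \(z^{(k)}\) such that

\[ z^{(k)}\in z^{(0)}+K_{k}(\mathscr{A}\mathscr{M}^{+},r^{(0)}),\qquad \|b-\mathscr{A}\mathscr{M}^{+}z^{(k)}\|_{\mathscr{M}^{+}}=\min_{z\in z^{(0)}+K_{k}(\mathscr{A}\mathscr{M}^{+},r^{(0)})}\|b-\mathscr{A}\mathscr{M}^{+}z\|_{\mathscr{M}^{+}}, \]

where \(r^{(0)}=b-\mathscr{A}\mathscr{M}^{+}z^{(0)}\), and then compute the solution \(x^{(k)}={\mathscr {M}}^{+}z^{(k)}\).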

The right preconditioned MINRES method is given in Algorithm 3.
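The following is a minimal dense sketch of right preconditioned MINRES; it is not a reproduction of Algorithm 3. It runs Lanczos for \(\mathscr{A}\mathscr{M}^{+}\) in the \((,)_{\mathscr{M}^{+}}\) semi-inner product, minimizes the residual semi-norm over the Krylov subspace, and returns \(x=\mathscr{M}^{+}z\). The function name `right_pminres`, the path-graph test matrix, and the projected diagonal preconditioner are hypothetical choices for illustration. For clarity, the small tridiagonal least-squares problem is re-solved at each step rather than updated by Givens rotations. As Theorem 5.2 allows, the initial \(z^{(0)}\) may be arbitrary.

```python
import numpy as np

def right_pminres(A, M, b, z0=None, tol=1e-10, maxiter=None):
    """Sketch: MINRES on A M^+ z = b, minimizing ||b - A M^+ z||_{M^+}; returns x = M^+ z."""
    n = A.shape[0]
    Mp = np.linalg.pinv(M, rcond=1e-10)            # Moore-Penrose inverse M^+
    z0 = np.zeros(n) if z0 is None else z0
    maxiter = n if maxiter is None else maxiter
    r0 = b - A @ (Mp @ z0)                         # lies in R(A) when b is in R(A)
    beta1 = np.sqrt(max(r0 @ Mp @ r0, 0.0))        # ||r^(0)||_{M^+}
    if beta1 == 0.0:
        return Mp @ z0, 0
    V, alphas, betas = [r0 / beta1], [], []
    z = z0
    for k in range(maxiter):
        w = A @ (Mp @ V[k])                        # apply A M^+
        alpha = V[k] @ Mp @ w                      # (A M^+ v_k, v_k)_{M^+}
        w = w - alpha * V[k]
        if k > 0:
            w = w - betas[-1] * V[k - 1]
        beta = np.sqrt(max(w @ Mp @ w, 0.0))
        alphas.append(alpha)
        betas.append(beta)
        T = np.zeros((k + 2, k + 1))               # Lanczos tridiagonal matrix
        T[:k + 1, :] = np.diag(alphas) + np.diag(betas[:-1], 1) + np.diag(betas[:-1], -1)
        T[k + 1, k] = beta
        rhs = np.zeros(k + 2)
        rhs[0] = beta1
        y = np.linalg.lstsq(T, rhs, rcond=None)[0]
        z = z0 + np.column_stack(V) @ y
        res = np.linalg.norm(rhs - T @ y)          # equals ||b - A M^+ z||_{M^+}
        if res <= tol * beta1 or beta <= tol * beta1:
            return Mp @ z, k + 1
        V.append(w / beta)
    return Mp @ z, maxiter

n = 8
A = 2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)
A[0, 0] = A[-1, -1] = 1.0                          # path-graph Laplacian: N(A) = span(ones)
P = np.eye(n) - np.ones((n, n)) / n                # orthogonal projector onto R(A)
M = P @ np.diag(np.diag(A)) @ P                    # hypothetical SPSD preconditioner, N(M) = N(A)
b = A @ np.arange(n, dtype=float)                  # consistent right-hand side
x, its = right_pminres(A, M, b)
print(its, np.linalg.norm(b - A @ x))
```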

5 Convergence analysis of right preconditioned MINRES method

Lemma 5.1

(Zhang 2010) If \({\mathscr {R}}({\mathscr {A}})={\mathscr {R}}({\mathscr {M}})\), then

(1) \({\mathscr {R}}(({\mathscr {M}}^{+}{\mathscr {A}} )^{T})={\mathscr {R}}({\mathscr {A}}^{T})\); (2) \({\mathscr {R}}({\mathscr {M}}^{+}{\mathscr {A}})={\mathscr {R}}({\mathscr {M}}^{T})\).
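Lemma 5.1 can be illustrated with a nonsymmetric \(\mathscr{A}\) whose range equals that of an SPSD \(\mathscr{M}\). The random construction and the helper `same_range` are our own, and range equality is tested through ranks.

```python
import numpy as np

def same_range(X, Y, tol=1e-10):
    """R(X) = R(Y) if and only if rank(X) = rank(Y) = rank([X, Y])."""
    def rank(Z):
        return np.linalg.matrix_rank(Z, tol=tol)
    return bool(rank(X) == rank(Y) == rank(np.hstack([X, Y])))

rng = np.random.default_rng(3)
n, m = 6, 3
Q1 = np.linalg.qr(rng.standard_normal((n, n)))[0][:, :m]
W1 = np.linalg.qr(rng.standard_normal((n, n)))[0][:, :m]
A = Q1 @ rng.standard_normal((m, m)) @ W1.T        # nonsymmetric: R(A) = R(Q1), R(A^T) = R(W1)
G = rng.standard_normal((m, m))
M = Q1 @ (G @ G.T + np.eye(m)) @ Q1.T              # SPSD with R(M) = R(A)
Mp = np.linalg.pinv(M, rcond=1e-10)
print(same_range((Mp @ A).T, A.T))                 # Lemma 5.1 (1): True
print(same_range(Mp @ A, M.T))                     # Lemma 5.1 (2): True
```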

Theorem 5.2

Suppose \({\mathscr {M}}\) is an SPSD matrix, \({\mathscr {A}}={\mathscr {M}}-{\mathscr {N}}\) is a proper splitting, and \(rank({\mathscr {A}})=m\). Then the right preconditioned MINRES method determines a solution \(x={\mathscr {M}}^{+}z\) of

without breakdown, and terminates in at most m steps for all \(b\in {\mathscr {R}}({\mathscr {A}})\) and all \(z^{(0)}\in {\mathbb {R}}^{n}\).

Proof

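Recalling \(q_{i}\), \(Q_{1}\) and \(Q_{2}\) defined in Sect. 3, let

\[ \tilde{q}_{i}=\mathscr{M}^{\frac{1}{2}}q_{i},\qquad i=1,2,...,m. \tag{31} \]

Define the matrices \( \tilde{Q}_{1}, \tilde{Q}\) as follows:

\[ \tilde{Q}_{1}=[\tilde{q}_{1},...,\tilde{q}_{m}],\qquad \tilde{Q}=[\tilde{Q}_{1},Q_{2}]. \tag{32} \]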

From (31) and (32), it holds \({\tilde{q}}_{i}\in {\mathscr {R}}({\mathscr {M}}^{\frac{1}{2}})\), \(i=1,2,...,m\), and we obtain

\[ \tilde{Q}_{1}=\mathscr{M}^{\frac{1}{2}}Q_{1}. \tag{33} \]

Then we have \({\mathscr {M}}^{+\frac{1}{2}}\tilde{Q}_{1}={\mathscr {M}}^{+\frac{1}{2}}{\mathscr {M}}^{\frac{1}{2}}Q_{1}\). Since \({\mathscr {R}}( Q_{1})= {\mathscr {R}}(({\mathscr {M}}^{\frac{1}{2}})^{T})\) and \({\mathscr {M}}^{+\frac{1}{2}}{\mathscr {M}}^{\frac{1}{2}}\) is the orthogonal projection onto \({\mathscr {R}}(({\mathscr {M}}^{\frac{1}{2}})^{T})\), it holds \({\mathscr {M}}^{+\frac{1}{2}}{\mathscr {M}}^{\frac{1}{2}} Q_{1}= Q_{1}\). Hence,

\[ \mathscr{M}^{+\frac{1}{2}}\tilde{Q}_{1}=Q_{1}. \tag{34} \]

By (33) and (34), we have \(rank(\tilde{Q}_{1})=rank(Q_{1})=m\). Since \({\mathscr {R}}({\mathscr {M}}^{\frac{1}{2}})={\mathscr {R}}({\mathscr {A}})\), this implies that \({\tilde{q}}_{1},\tilde{q}_{2},...,{\tilde{q}}_{m}\) form a basis of \({\mathscr {R}}({\mathscr {A}})\). As in Sect. 3, it follows that \({\tilde{Q}}\) is nonsingular.

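Since

\[ (\tilde{q}_{i},\tilde{q}_{i})_{\mathscr{M}^{+}}=q_{i}^{T}\mathscr{M}^{\frac{1}{2}}\mathscr{M}^{+}\mathscr{M}^{\frac{1}{2}}q_{i}=q_{i}^{T}\mathscr{M}\mathscr{M}^{+}q_{i}=q_{i}^{T}q_{i}=1, \]

and, in the same way, \((\tilde{q}_{i},\tilde{q}_{j})_{{\mathscr {M}}^{+}}=0 \) when \(i\not =j\), \(i, j=1,2,...,m\), we have

\[ \tilde{Q}_{1}^{T}\mathscr{M}^{+}\tilde{Q}_{1}=I_{m}. \tag{35} \]

Therefore, \({\tilde{q}}_{1},{\tilde{q}}_{2},...,{\tilde{q}}_{m}\) form an orthonormal basis of \({\mathscr {R}}({\mathscr {A}})\) with respect to \((,)_{{\mathscr {M}}^{+}}\).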

From (35), we obtain

Evidently,

Since \({\mathscr {M}}^{+}Q_{2}=0\), it holds

Because \({\tilde{Q}}\) is nonsingular, we have

Let \({\tilde{r}}=b-{\mathscr {A}}x\). Since \(b\in {\mathscr {R}}({\mathscr {A}})\), \({\tilde{r}}\in {\mathscr {R}}({\mathscr {A}})\) holds. Therefore, there exists \({\tilde{r}}_{1}\in {\mathbb {R}}^{m}\) such that

Together with (36), we have

Let

Then

Since \({\mathscr {A}}={\mathscr {M}}-{\mathscr {N}}\) is a proper splitting, Lemma 5.1 gives

Hence, we have

Since

we have \(rank({\mathscr {M}}^{+}{\mathscr {A}}{\mathscr {M}}^{+})=m\). Since \({\tilde{Q}}\) is nonsingular, it holds \(rank(\tilde{Q}^{T}{\mathscr {M}}^{+}{\mathscr {A}}{\mathscr {M}}^{+}{\tilde{Q}})=m\).

Noticing \({\mathscr {M}}^{+}Q_{2}=0\), it holds

Then, we have \(rank({\tilde{A}}_{1}) =rank(\tilde{Q}^{T}{\mathscr {M}}^{+}{\mathscr {A}}{\mathscr {M}}^{+}{\tilde{Q}})=m\). Therefore, \({\tilde{A}}_{1}\) is nonsingular.

For symmetric linear systems, GMRES is mathematically equivalent to MINRES. Since GMRES determines a least squares solution without breakdown for nonsingular systems (Saad and Schultz 1986), MINRES determines a least squares solution without breakdown for symmetric nonsingular systems. By Lemma 3.2, MINRES converges to the solution of

in at most m iterations.

Therefore, the right preconditioned MINRES method determines a solution \(x={\mathscr {M}}^{+}z\) of

without breakdown and terminates in at most m steps for all \(b\in {\mathscr {R}}({\mathscr {A}})\) and all \(z^{(0)}\in {\mathbb {R}}^{n}\). \(\square \)

Since both b and \(x^{(0)}\) lie in \({\mathscr {R}}({\mathscr {A}})\), the right preconditioned MINRES (Algorithm 3) can be decomposed into its \({\mathscr {R}}({\mathscr {A}})\) component, while its \({\mathscr {R}}({\mathscr {A}})^{\perp }|_{\mathscr {M}^{+}}\) component is zero. This gives the decomposed right preconditioned MINRES algorithm (Algorithm 4). Algorithm 4 is equivalent to applying MINRES to \({\tilde{A}}_{1}{\tilde{z}}_{1}={\tilde{b}}_{1}\) and then computing \(x={\mathscr {M}}^{+}{\tilde{Q}}_{1}{\tilde{z}}_{1}\).

Notice that if the conditions in Theorem 5.2 are satisfied, then \({\tilde{z}}^{(j)}_{1}\) converges to \(\tilde{A}^{-1}_{1}{\tilde{Q}}^{T}_{1}{\mathscr {M}}^{+}b\) and \(x^{(j)}\) converges to \({\mathscr {M}}^{+}{\tilde{Q}}_{1}{\tilde{A}}^{-1}_{1}\tilde{Q}^{T}_{1}{\mathscr {M}}^{+}b\).
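As in the left preconditioned case of Sect. 3, the limit above can be formed on a small random proper-splitting example of our own. First, \(\tilde{Q}_1=\mathscr{M}^{\frac12}Q_1\) is checked to be \(\mathscr{M}^{+}\)-orthonormal. Then \(x=\mathscr{M}^{+}\tilde{Q}_1\tilde{A}_1^{-1}\tilde{Q}_1^T\mathscr{M}^{+}b\) is checked to solve (1) and to equal \(\mathscr{A}^{+}b\). The helper `psd_power` is hypothetical.

```python
import numpy as np

def psd_power(M, p, tol=1e-10):
    """M^p on R(M) for a symmetric PSD matrix M (zero on N(M))."""
    w, V = np.linalg.eigh(M)
    wp = np.array([wi ** p if wi > tol else 0.0 for wi in w])
    return (V * wp) @ V.T

rng = np.random.default_rng(0)
n, m = 6, 4
Q, _ = np.linalg.qr(rng.standard_normal((n, n)))
Q1 = Q[:, :m]
A = Q1 @ np.diag([3.0, -1.0, 2.0, 0.5]) @ Q1.T     # symmetric, singular
G = rng.standard_normal((m, m))
M = Q1 @ (G @ G.T + np.eye(m)) @ Q1.T              # SPSD, proper splitting of A
Mp = np.linalg.pinv(M, rcond=1e-10)
b = A @ rng.standard_normal(n)                     # consistent right-hand side

Q1_tilde = psd_power(M, 0.5) @ Q1                  # Q1_tilde = M^(1/2) Q1
print(np.allclose(Q1_tilde.T @ Mp @ Q1_tilde, np.eye(m)))  # True: M^+-orthonormal
A1_tilde = Q1_tilde.T @ Mp @ A @ Mp @ Q1_tilde     # reduced m x m matrix
b1_tilde = Q1_tilde.T @ Mp @ b
x = Mp @ Q1_tilde @ np.linalg.solve(A1_tilde, b1_tilde)
print(np.allclose(A @ x, b), np.allclose(x, np.linalg.pinv(A, rcond=1e-10) @ b))  # True True
```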

The convergence rate of the right preconditioned MINRES algorithm can be estimated in the same way as in Theorem 3.4; we omit the details. In this paper, we use MINRES to solve the left preconditioned system (3) and the right preconditioned system (30), respectively. Hayami et al. (2010) studied BA-GMRES and AB-GMRES, which are analogous to left and right preconditioned MINRES, respectively. BA-GMRES applies GMRES to the left preconditioned system (3), and AB-GMRES applies GMRES to the right preconditioned system (30). Both AB-GMRES and BA-GMRES determine least squares solutions of singular linear systems.

6 Numerical results

In this section, we give three numerical examples of singular saddle point problems to illustrate the effectiveness of MINRES with singular preconditioners. That is, we consider the following system of linear equations:

\[ \mathscr{A}x=\begin{bmatrix} A & B^{T} \\ B & 0 \end{bmatrix}x=b, \]

where A is an SPD matrix and B is rank deficient. All numerical experiments are implemented in MATLAB (version R2018b) on a PC with an Intel(R) Core(TM) i5-10500 CPU at 3.10 GHz and 16.0 GB of memory. We denote the elapsed CPU time (in seconds) by CPU, the number of iterations by IT, and the norm of the residual by RES. In all computations, the initial guess \({x}^{(0)}\) is the zero vector, and the computation is terminated once the stopping criterion

is satisfied.

Example 6.1

(Elman 1999; Zhang 2010) Discretizing the Navier–Stokes equations by the “marker and cell” (MAC) finite difference scheme, we obtain the following linear system:

where

\({\widetilde{A}}\) is nonsymmetric and positive definite. In this example, \(s=2l(l-1)\), \(t=l^2\), and \(rank(B)=t-1\). To make the (1,1) block SPD, we take the coefficient matrix as follows:

where \(A=\dfrac{{\widetilde{A}}+{\widetilde{A}}^{T} }{2}\), \(b={\mathscr {A}}e_{s+t}\), and \(e_{s+t}=(1,1,...,1)^{T}\in {\mathbb {R}}^{s+t}\).

Example 6.2

(Chao and Zhang 2014) Consider the following saddle point problem:

where

in which

where \(h=\dfrac{1}{l+1}\), \(e_{l^{2}/2}=(1,1,...,1)^{T}\in {\mathbb {R}}^{l^{2}/2}\), and \(b={\mathscr {A}}e_{s+t}\) with \( e_{s+t}=(1,1,...,1)^{T}\in {\mathbb {R}}^{s+t}\), \(s=2l^{2}\), \(t=l^{2}+2\). Then A is SPD and \(rank(B)=t-2\).

Example 6.3

(Shen and Huang 2011) Consider the following Stokes equations:

where the vector function u is the velocity, the scalar function p is the pressure of the fluid, and \(\Omega \) is an open bounded domain. We use the IFISS software of Silvester et al. (2012) to discretize the leaky two-dimensional lid-driven cavity problem in the square domain \((-1, 1)\times (-1, 1)\), with Dirichlet no-flow boundary conditions on the sides and bottom. A finite element subdivision based on a uniform grid of square elements is used. This yields a saddle point problem of the form (50), in which the (1,1) block A of the coefficient matrix is SPD and the block B is rank deficient; see Shen and Huang (2011) for details. As before, A is of order \(s\times s\) and B is of order \(t\times s\).

Proposition 6.4

(Zhang 2010) For \({\mathscr {A}}= \begin{bmatrix} A & B^{T} \\ B & 0 \end{bmatrix}\), where \(A\in {{\mathbb {R}}^{s\times s}}\) is SPD and \(B\in {{\mathbb {R}}^{t\times s}}, t \le s\), if M is SPD in the block diagonal preconditioner \({\mathscr {M}}=\begin{bmatrix} M & 0 \\ 0 & BM^{-1}B^{T} \end{bmatrix}\), then \({\mathscr {A}}={\mathscr {M}}-({\mathscr {M}}-{\mathscr {A}})\) is a proper splitting.

Similarly, if \(M_{1}\) and \(M_{2}\) are SPD in the preconditioner \({\mathscr {M}}=\begin{bmatrix} M_{1} & 0 \\ 0 & BM_{2}^{-1}B^{T} \end{bmatrix}\), then \({\mathscr {M}}\) is SPSD and \({\mathscr {A}}={\mathscr {M}}-({\mathscr {M}}-{\mathscr {A}})\) is also a proper splitting. Indeed, both \({\mathscr {N}}({\mathscr {A}})\) and \({\mathscr {N}}({\mathscr {M}})\) equal \(\{0\}\times {\mathscr {N}}(B^{T})\), and both matrices are symmetric. We list four suitable singular preconditioners, \({\mathscr {M}}_I\), \({\mathscr {M}}_{II}\), \({\mathscr {M}}_{III}\) and \({\mathscr {M}}_{IV}\), in Table 1.
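The claim can be checked on a small singular saddle point matrix. In the sketch below, the sizes, the random blocks, and the choices \(M_1=\mathrm{diag}(A)\) and \(M_2=I\) are illustrative assumptions. They are not the preconditioners of Table 1. B is made rank deficient by appending the sum of its first two rows.

```python
import numpy as np

def is_proper_splitting(A, M, tol=1e-10):
    """R(A) = R(M) and N(A) = N(M), tested through ranks."""
    def rank(X):
        return np.linalg.matrix_rank(X, tol=tol)
    rA, rM = rank(A), rank(M)
    return bool(rA == rM == rank(np.hstack([A, M])) and rA == rM == rank(np.vstack([A, M])))

rng = np.random.default_rng(2)
s, t = 6, 3
F = rng.standard_normal((s, s))
A = F @ F.T + s * np.eye(s)                        # SPD (1,1) block
B = rng.standard_normal((t - 1, s))
B = np.vstack([B, B[0] + B[1]])                    # t x s with rank(B) = t - 1
K = np.block([[A, B.T], [B, np.zeros((t, t))]])    # singular saddle point matrix
M1 = np.diag(np.diag(A))                           # illustrative SPD choice for M_1
M2 = np.eye(s)                                     # illustrative SPD choice for M_2
Mcal = np.block([[M1, np.zeros((s, t))],
                 [np.zeros((t, s)), B @ np.linalg.solve(M2, B.T)]])
print(np.linalg.matrix_rank(K, tol=1e-10) == s + t - 1)  # True: K is singular
print(np.linalg.eigvalsh(Mcal).min() > -1e-10)           # True: Mcal is SPSD
print(is_proper_splitting(K, Mcal))                      # True
```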

We test preconditioned MINRES with different preconditioners, including the SSOR preconditioner \({\mathscr {M}}_{SSOR}\) (Sugihara et al. 2020) and the preconditioners listed in Table 1. We also test preconditioned GMRES with the SSOR preconditioner \({\mathscr {M}}_{SSOR}\), the preconditioners listed in Table 1, and the constraint preconditioner \({\mathscr {M}}_{const}\) (Zhang and Shen 2013). The drop tolerance of the incomplete Cholesky factorization is 0.001. The SSOR preconditioner \({\mathscr {M}}_{SSOR}\) is defined as follows:

where L is the strictly lower triangular part of \({\mathscr {A}}\), \(D=\begin{bmatrix} D_{1} & O \\ O & I_{t} \end{bmatrix}\), and \(D_{1}\) is the diagonal part of A. The constraint preconditioner \({\mathscr {M}}_{const}\) is defined as follows:

where \(M_l=diag(\beta _1,\beta _2,...,\beta _n)\) and \(\beta _{j}\) is the 1-norm of the jth row of A, \(j=1,2,...,n\).

Numerical results are reported in Tables 2, 3 and 4, where LMINRES and RMINRES denote left preconditioned MINRES and right preconditioned MINRES, respectively. For the same preconditioner, the iteration numbers and CPU times of preconditioned MINRES are in almost all cases smaller than those of preconditioned GMRES. Among the four preconditioners in Table 1, \({\mathscr {M}}_{II}\) gives the best performance with MINRES. In addition, \({\mathscr {M}}_{II}\) preconditioned MINRES always performs better than \({\mathscr {M}}_{SSOR}\) preconditioned MINRES and \({\mathscr {M}}_{const}\) preconditioned GMRES. Left and right preconditioned MINRES need almost the same number of iterations. However, the elapsed CPU time of left preconditioned MINRES is in almost all cases smaller than that of right preconditioned MINRES. In Tables 2, 3 and 4, results with CPU > 5000 s or IT > 1500 are replaced by “+”, and “s+t” denotes the order of the corresponding coefficient matrix.

7 Conclusions

We have presented the left and right preconditioned MINRES methods for solving symmetric singular linear systems. The singular preconditioners satisfy the proper splitting condition, and we have given a convergence analysis for both methods. The analysis shows that, when the system is consistent, the left and right preconditioned MINRES methods determine a solution without breakdown and terminate in at most m steps, where m is the rank of the coefficient matrix. Numerical results have demonstrated the effectiveness of preconditioned MINRES with singular preconditioners.

References

Berman A, Plemmons RJ (1974) Cones and iterative methods for best least squares solutions of linear systems. SIAM J Numer Anal 11:145–154

Brown PN, Walker HF (1997) GMRES on (nearly) singular systems. SIAM J Matrix Anal Appl 18:37–51

Chao Z, Zhang N-M (2014) A generalized preconditioned HSS method for singular saddle point problems. Numer Algorithms 66:203–221

Elman HC (1999) Preconditioning for the steady-state Navier-Stokes equations with low viscosity. SIAM J Sci Comput 20:1299–1316

Hayami K, Sugihara M (2011) A geometric view of Krylov subspace methods on singular systems. Numer Linear Algebra Appl 18:449–469

Hayami K, Yin J-F, Ito T (2010) GMRES methods for least squares problems. SIAM J Matrix Anal Appl 31:2400–2430

Lee Y-J, Wu J-B, Xu J-C, Zikatanov L (2006) On the convergence of iterative methods for semidefinite linear systems. BIT Numer Math 28:634–641

Morikuni K, Hayami K (2015) Convergence of inner-iteration GMRES methods for rank-deficient least squares problems. SIAM J Matrix Anal Appl 36:225–250

Paige CC, Saunders MA (1975) Solution of sparse indefinite systems of linear equations. SIAM J Numer Anal 12:617–629

Saad Y (2003) Iterative methods for sparse linear systems. SIAM, Philadelphia

Saad Y, Schultz MH (1986) GMRES: a generalized minimal residual algorithm for solving nonsymmetric linear systems. SIAM J Sci Stat Comput 7:856–869

Shen S-Q, Huang T-Z (2011) A symmetric positive definite preconditioner for saddle-point problems. Int J Comput Math 88:2942–2954

Silvester DJ, Elman HC, Ramage A (2012) IFISS: Incompressible Flow Iterative Solution Software. http://www.manchester.ac.uk/ifiss

Sugihara K, Hayami K, Zheng N (2020) Right preconditioned MINRES for singular systems. Numer Linear Algebra Appl 27:1–25

William F (2015) Numerical Linear Algebra with Applications-Using MATLAB, Elsevier Inc

Zhang N-M (2010) A note on preconditioned GMRES for solving singular linear system. BIT Numer Math 50:207–220

Zhang N-M, Wei Y (2010) On the convergence of general stationary iterative methods for range-Hermitian singular linear systems. Numer Linear Algebra Appl 17:139–154

Zhang N-M, Shen P (2013) Constraint preconditioners for solving singular saddle point problems. J Comput Appl Math 238:116–125

Acknowledgements

The authors are grateful to the referees and the editor for their very detailed comments and suggestions, which significantly improved this paper.


Additional information

Communicated by Jinyun Yuan.


Nai-Min Zhang is supported by National Natural Science Foundation of China under Grant No. 61572018.

Rights and permissions

Springer Nature or its licensor holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Hong, LY., Zhang, NM. On the preconditioned MINRES method for solving singular linear systems. Comp. Appl. Math. 41, 304 (2022). https://doi.org/10.1007/s40314-022-02007-w


DOI: https://doi.org/10.1007/s40314-022-02007-w