Abstract

We study the inverse problem of recovering the potential q(x) from the spectrum of the operator \(-y''(x)+q(x)y(a),\) \(y^{(\alpha )}(0)=y^{(\beta )}(1)=0,\) where \(\alpha ,\beta \in \{0,1\}\) and \(a\in [0,1]\) is an arbitrary fixed rational number. We completely describe the cases when the solution of the inverse problem is unique and when it is not. In the latter case, we describe sets of iso-spectral potentials and provide various restrictions on the potential under which uniqueness holds. Moreover, we obtain an algorithm for solving the inverse problem along with necessary and sufficient conditions for its solvability in terms of a characterization of the spectrum.


1 Introduction

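We study the inverse problem of recovering the complex-valued potential \(q(x)\in L_2(0,1)\) from the spectrum of the boundary value problem \(L=L(q(x),a,\alpha ,\beta )\) of the form

$$\begin{aligned} \ell y:=-y''(x)+q(x)y(a)=\lambda y(x), \quad 0<x<1, \end{aligned}$$ (1)

$$\begin{aligned} y^{(\alpha )}(0)=y^{(\beta )}(1)=0, \end{aligned}$$ (2)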

where \(\lambda \) is the spectral parameter and \(\alpha ,\beta \in \{0,1\},\) while \(a\in [0,1]\cap {{\mathbb {Q}}}.\) The latter means that \(a=j/k,\) where \(j+1,k\in {{\mathbb {N}}}\) and \(j\le k.\) We always assume that j and k are mutually prime. The case of irrational a requires a separate investigation. The operator \(\ell \) is called the Sturm–Liouville-type operator with frozen argument.

The most complete results in the inverse spectral theory have been obtained for the classical Sturm–Liouville operator (see, e.g., Borg 1946; Marchenko 1986; Levitan 1987; Freiling and Yurko 2001) and later on for higher order differential operators (Beals et al. 1988; Yurko 2002). For operators with frozen argument as well as for other classes of non-local operators the classical methods of the inverse spectral theory do not work.

Equation (1) belongs to the class of the so-called loaded differential equations. Loaded equations appear in mathematics, physics, mathematical biology, etc. (see, e.g., Nakhushev 2012; Lomov 2014 and references therein). For example, Eq. (1) arises in modelling heat conduction in a rod of unit length possessing an interior distributed heat source described by the function q(x), whose power is proportional to the temperature at some fixed point of the rod. Analogously, one can model an oscillatory process with a damping external force proportional to the displacement of the oscillatory system at a fixed point (e.g., where the displacement sensor is installed).

Inverse spectral problems for operators with frozen argument were studied in Albeverio et al. (2007), Nizhnik (2009, 2010, 2011), Bondarenko et al. (2019) and Buterin and Vasiliev (2019). In Albeverio et al. (2007) and Nizhnik (2009, 2010), in connection with the theory of diffusion processes, the cases \(a=1\) and \(a=1/2\) were investigated with a special nonlocal boundary condition guaranteeing self-adjointness of the corresponding operator. In Nizhnik (2011), an analogous situation was studied for a first-order operator. In Bondarenko et al. (2019), the boundary value problem L was considered in the case \(j=1,\) i.e. when \(a^{-1}\in {{\mathbb {N}}}.\) In particular, it was established that unique solvability of the inverse problem depends on the values of \(\alpha ,\,\beta \) and sometimes on the parity of \(k=a^{-1}.\) Accordingly, two cases were distinguished in Bondarenko et al. (2019): the degenerate and the non-degenerate one. It was established that in the degenerate case asymptotically a kth part of the spectrum degenerates, i.e. each kth eigenvalue carries no information on the potential. For example, the Dirichlet boundary conditions (i.e. \(\alpha =\beta =0)\) correspond to the degenerate case for all \(k\in {{\mathbb {N}}}.\) In Buterin and Vasiliev (2019), it was proved that this remains true for an arbitrary rational a.

In the present paper, we comprehensively study all types of boundary conditions (2) in the case of an arbitrary rational a. We completely describe the degenerate and non-degenerate cases. Moreover, we obtain algorithms for solving the inverse problem along with necessary and sufficient conditions for its solvability in terms of a characterization of the spectrum. In the degenerate case, we describe sets of iso-spectral potentials and present various possible restrictions on the potential under which uniqueness holds. We note that admitting an arbitrary rational a enriches the description of the degenerate and non-degenerate cases compared with the situation \(j=1.\) Moreover, considering the rational case also shows that the amount of information on the potential carried by the spectrum may vary significantly between arbitrarily close values of a. However, as a tends to any fixed value through positions corresponding to the degenerate case, this informativity always grows (see Remark 4).

We also note that the operator \(\ell \) is a particular case of the operator with deviating argument

with \(\eta (x)\equiv a\) in the case of \(\ell .\) Some aspects of inverse problems for the operator \(\ell _1\) with \(\eta (x)=x-\tau \) (i.e. with the constant delay \(\tau >0)\) have been studied in Freiling and Yurko (2012); Yang (2014); Vladičić and Pikula (2016); Buterin and Yurko (2019); Bondarenko and Yurko (2018) and other works.

The paper is organized as follows. In the next section, we introduce and study the characteristic function of L and derive the so-called main equation of the inverse problem. In Sect. 3, we study solvability of the main equation and describe the non-degenerate and degenerate cases according to whether the main equation is uniquely solvable or not, respectively. In Sect. 4, properties of the spectrum of L are established. In Sect. 5, we prove the uniqueness theorem, obtain constructive procedures for solving the inverse problem along with necessary and sufficient conditions for its solvability, and provide a complete description of iso-spectral potentials in the degenerate case.

2 Characteristic function and main equation

Let \(C(x,\lambda ), \ S(x,\lambda )\) be solutions of Eq. (1) under the initial conditions

Put \(\rho ^2=\lambda .\) Then it is easy to check that

Clearly, eigenvalues of the boundary value problem L coincide with zeros of the entire function

which is called the characteristic function of L.

Put \(b:=1/k\) and let \(f(t)\in L_2(0,1).\) Consider the following shift and involution operators:

where \(t\in (0,b)\) and \(\nu ={\overline{1,k}}.\) We introduce the operators \(Q,R:L_2(0,1)\rightarrow (L_2(0,b))^k\) by the formulae

where T is the transposition sign. Obviously, the operators Q and R are bijective.

Remark 1

Since the spectrum of the problem \(L(q(x),a,\alpha ,\beta )\) coincides with the one of \(L(q(1-x),1-a,\beta ,\alpha ),\) without loss of generality, we assume that \(0\le a\le 1/2,\) i.e. \(2j\le k.\)
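The reflection symmetry used in Remark 1 can be checked numerically. The sketch below is only an illustration under stated assumptions: it takes the Dirichlet case \(\alpha =\beta =0\) and replaces the differential operator by a standard second-order finite-difference matrix, which is not a technique used in the paper. The helper name `frozen_dirichlet_matrix`, the grid size and the test potential are hypothetical choices.

```python
import numpy as np

def frozen_dirichlet_matrix(q, a_index):
    """Finite-difference matrix of -y'' + q(x) y(a) with y(0) = y(1) = 0.

    q holds samples of the potential at the interior nodes x_i = i*h,
    i = 1..n, with h = 1/(n+1); a_index is the 0-based position of the
    node that plays the role of the frozen point a.
    """
    n = len(q)
    h = 1.0 / (n + 1)
    lap = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    load = np.zeros((n, n))
    load[:, a_index] = q  # rank-one "frozen argument" term q(x_i) * y(a)
    return lap + load

n = 59                               # interior nodes, h = 1/60 (assumed grid)
x = np.arange(1, n + 1) / (n + 1)
q = np.exp(x) * np.cos(3.0 * x)      # arbitrary test potential (hypothetical)
a_index = 19                         # node x = 20/60 = 1/3

# Problem L(q(x), a, 0, 0) versus the reflected problem L(q(1-x), 1-a, 0, 0).
spec = np.linalg.eigvals(frozen_dirichlet_matrix(q, a_index))
spec_reflected = np.linalg.eigvals(
    frozen_dirichlet_matrix(q[::-1], n - 1 - a_index)
)

# Largest distance from an eigenvalue of one problem to the other spectrum.
mismatch = np.max(
    np.min(np.abs(spec[:, None] - spec_reflected[None, :]), axis=1)
)
print("largest spectral mismatch:", mismatch)
```

On the grid the two matrices are related by the permutation that reverses the node order, so the printed mismatch should be at the level of rounding errors.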

Lemma 1

The characteristic function \(\Delta _{\alpha ,\beta }(\lambda )\) of the problem L has the form

if \(\alpha =\beta ,\) and

if \(\alpha \ne \beta .\) Moreover, the function \(W_{\alpha ,\beta }(t)\) is determined by the formula

where \(A_{0,1}=2(-1)^{\beta +1}\alpha \) for \(k=1,\) while for \(k>1:\) \(A_{j,k}=(a_{m,n})_{m,n={\overline{1,k}}}\) is a square matrix of order k, such that

Here the items \((s_1)\)–\((s_4)\) correspond to subdiagonals, consisting of equal elements, namely 1,

Proof

Substituting the representations (3) into (4) for \(\alpha =\beta \) and using the formulae

we obtain the representation

Changing the variables of integration, we get

Analogously, for \(\alpha \ne \beta \) we obtain

Formulae (12) and (13) imply (7) and (8), respectively, where the function \(W_{\alpha ,\beta }(t)\) has the form

According to Remark 1, for \(k=1\) we have \(a=0\) and, hence, by virtue of (11) and (14), we arrive at (9) for \(k=1.\)

Let \(k>1.\) Note that the last equality in (7) can be obtained by the direct calculation using (14) for \(\alpha =\beta =0.\) It is also a simple corollary from the entireness of the function \(\Delta _{0,0}(\lambda ).\)

Applying the operator Q to both sides of (14) and using (5) and (6), we get

Indeed, rewrite (14) in the form

Then, by virtue of the first formula in (5), we have

- (i) for \(\nu ={{\overline{1,j}}}:\)

$$\begin{aligned}&Q_\nu W_{\alpha ,\beta }(t)\nonumber \\&\quad =\frac{(-1)^{\alpha \beta }}{2} \left\{ \begin{array}{l}q (t+(k-j+\nu -1)b)+dq((k-j-\nu +1)b-t) \;\text {for odd}\;\nu ,\\ q((k-j+\nu )b-t)+dq(t+(k-j-\nu )b) \;\text {for even}\;\nu ; \end{array}\right. \end{aligned}$$

- (ii) for \(\nu ={\overline{j+1,k-j}}:\)

$$\begin{aligned}&Q_\nu W_{\alpha ,\beta }(t) \nonumber \\&\quad =\frac{(-1)^{\alpha \beta }}{2} \left\{ \begin{array}{l} cq ((k+j-\nu +1)b-t) +dq ((k-j-\nu +1)b-t) \;\text {for odd}\;\nu ,\\ cq (t+(k+j-\nu )b) +dq (t+(k-j-\nu )b) \;\text {for even}\;\nu ; \end{array}\right. \end{aligned}$$

- (iii) for \(\nu ={\overline{k-j+1,k}}:\)

$$\begin{aligned}&Q_\nu W_{\alpha ,\beta }(t) \nonumber \\&\quad =\frac{(-1)^{\alpha \beta }}{2}\left\{ \begin{array}{l} c (q ((k+j-\nu +1)b-t ) +q (t+(\nu -1-k+j)b )) \;\text {for odd}\;\nu ,\\ c (q (t+(k+j-\nu )b ) +q ((\nu -k+j)b-t)) \;\text {for even}\;\nu . \end{array}\right. \end{aligned}$$

Summarizing (i)–(iii) and using the second formula in (5), we arrive at (15). Finally, taking (10) and bijectivity of Q into account, we arrive at (9) for \(k>1.\) \(\Box \)

The relation (9) can be considered as an equation with respect to the function q(x), which is uniquely solvable if and only if \(\det {A_{j,k}}\ne 0.\) We call it the main equation of the inverse problem.

3 Degenerate and non-degenerate cases

In Sect. 5, we reduce our inverse problem to solving the main Eq. (9). Here we study the solvability of (9). The case \(k=1\) is obvious. Let \(k>1.\) To calculate \(\det {A_{j,k}},\) we introduce the numeration \(\gamma =\gamma (m,n)\) (the so-called \(\gamma \)-numeration) of non-zero elements \(a_{m,n}\) from 1 up to 2k by the following rule:

For example, the \(\gamma \)-numeration for the matrix \(A_{3,7}\) can be demonstrated in the following way:

where asterisks indicate zero elements.

By definition, we have

where \(\sigma =(\sigma (s))_{s={\overline{1,k}}}\) ranges over all possible permutations and \(\mathrm {sign}(\sigma )=(-1)^{n_\sigma },\) where, in turn, \(n_\sigma \) is the number of inversions in the permutation \(\sigma .\)

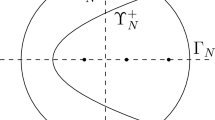

Let us prove that there exist only two permutations for which \(a_{s,\sigma (s)}\ne 0\) for all \(s={\overline{1,k}}.\) For this purpose, we consider the undirected graph G with the set of vertices \(V= \{v_s\}_{s=1}^{2k}.\) We assume that any two vertices \(v_{s_1}\) and \(v_{s_2}\) are connected by an edge if and only if the \(s_1\)th and \(s_2\)th elements (in \(\gamma \)-numeration) of the matrix \(A_{j,k}\) belong to one and the same row or column. According to this, all edges can be subdivided into row-edges and column-edges.

Since any row or column in \(A_{j,k}\) possesses precisely two nonzero elements, the degrees of all vertices in G are equal to 2. Hence, the graph G consists of non-intersecting Eulerian cycles. Moreover, G possesses only one Eulerian cycle, i.e. the following lemma holds.

Lemma 2

The graph G is a bipartite Eulerian cycle with interlacing odd and even vertices.

Proof

From (16), it follows that the sum of the \(\gamma \)-numbers of two nonzero elements in one and the same column equals \(2j+1\) or \(2j+2k+1.\) This can be seen by summing the \(\gamma \)-numbers of elements having one and the same m but belonging to different subdiagonals in (16). Moreover, it is obvious that the sum of the \(\gamma \)-numbers of nonzero elements in one and the same row always equals \(2k+1.\) Consequently, any two vertices are connected by an edge only if they have different parities. Thus, the graph G is bipartite and, hence, so is each of its Eulerian cycles.

Let us start traversal of the graph G by passing from the vertex \(v_1\) to \(v_{2j}.\) Then it is possible to return to \(v_1\) only from the vertex \(v_{2k}\) and only after passing some even number 2s, \(1\le s\le k,\) of vertices, including the starting one. It remains to prove that \(s=k.\) Indeed, in our path, obviously, the passing from any \((2n-1)\)th visited vertex to the 2nth one is always accomplished via a column-edge, while the passing from a 2nth one to the \((2n+1)\)th one is made via a row-edge. Let us show by induction that the \(\gamma \)-number of any 2nth visited vertex is congruent to 2nj modulo 2k. Since the second visited vertex is \(v_{2j},\) for \(n=1\) this statement is obvious. Assume that it is true for some \(n\le s-1.\) Then according to the first part of the proof, the \(\gamma \)-number of the \((2n+1)\)th visited vertex is congruent to \(1-2nj\) modulo 2k. Therefore, the \(\gamma \)-number of the \(2(n+1)\)th visited one is congruent to \(2j+1-(1-2nj)=2(n+1)j\) modulo 2k. This implies that 2k and 2sj have to be congruent modulo 2k. Since j and k are mutually prime, it is possible only when \(s=k.\) \(\Box \)
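The traversal in this proof can be replayed mechanically. The following sketch uses only the two facts stated above: the \(\gamma \)-numbers of the two nonzero elements in a row sum to \(2k+1,\) and those in a column sum to \(2j+1\) or \(2j+2k+1.\) The function name `cycle_through_v1` is a hypothetical helper introduced for this illustration.

```python
def cycle_through_v1(j, k):
    """Gamma-numbers visited when traversing G from v_1 via a column-edge.

    Column-edges join s and t with s + t equal to 2j+1 or 2j+2k+1,
    row-edges join s and t with s + t = 2k+1; vertices are 1..2k.
    """
    def column_partner(s):
        t = (2 * j + 1 - s) % (2 * k)
        return t if t != 0 else 2 * k

    def row_partner(s):
        return 2 * k + 1 - s

    path, s, use_column = [], 1, True
    while True:
        path.append(s)
        s = column_partner(s) if use_column else row_partner(s)
        use_column = not use_column  # column- and row-edges alternate
        if s == 1:
            return path

# The example A_{3,7} from the text: one cycle through all 2k = 14 vertices.
path = cycle_through_v1(3, 7)
print(path)

# Odd and even gamma-numbers interlace along the cycle.
print([s % 2 for s in path])

# For comparison only: j = 2, k = 4 are not mutually prime (outside the
# setting of the paper), and the cycle through v_1 is then shorter than 2k.
print(len(cycle_through_v1(2, 4)))
```

For mutually prime j and k the returned path has length 2k, in agreement with Lemma 2; the last call merely illustrates where the coprimality assumption enters the argument.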

Note that during the proof of Lemma 2 we did not use the assumption made in Remark 1.

From Lemma 2 it follows that there are precisely two permutations \(\sigma _1\) and \(\sigma _2\) generating nonzero summands in (17): \(\sigma _1\) includes elements with odd \(\gamma \)-numbers while \(\sigma _2\) includes ones with even \(\gamma \)-numbers. The following lemma holds.

Lemma 3

The determinant of the matrix \(A_{j,k}\) can be calculated by the formula

Proof

Note that subdiagonals \((s_1)\)–\((s_4)\) form a figure which is symmetric with respect to the main diagonal. Consider the case of even \(k+j.\) From the oddness of j it follows that the elements having \(\gamma \)-numbers \((j+1)/2\) and \(k+(j+1)/2\) lie on the main diagonal. By virtue of the oddness of k, these elements belong to different permutations \(\sigma _1\) and \(\sigma _2,\) where they generate cycle-loops. The other \(2k-2\) elements can be divided into \(k-1\) pairs so that elements in each pair are symmetric with respect to the main diagonal. Obviously, the elements in each pair belong to one and the same permutation \((\sigma _1\) or \(\sigma _2)\) and form a cycle of length 2. Thus, each permutation \(\sigma _\nu ,\,\nu =1,2,\) has one cycle-loop and \((k-1)/2\) cycles of length 2. Since \(\text {sign}(\sigma ) = (-1)^{k - p(\sigma )}\) for each permutation \(\sigma \) consisting of k elements and having \(p(\sigma )\) cycles, we obtain \(\text {sign}(\sigma _1)=\text {sign}(\sigma _2)=(-1)^{(k-1)/2}\). It is easy to see that the product \(a_{1, \sigma _1(1)}\ldots a_{k, \sigma _1(k)}\) includes \((j+1)/2\) elements equal to 1, \((k-1)/2\) elements equal to c and \((k-j)/2\) elements equal to d. The other product includes the remaining nonzero elements. Hence, (17) gives (18) for even \(k+j.\) The case of even k can be treated similarly.

In the case of even j, nonzero elements of the matrix \(A_{j,k}\) also form pairs symmetric with respect to the main diagonal, but the elements in each pair correspond to different permutations. This causes some difficulties in finding \(\text {sign}(\sigma _\nu ),\,\nu =1,2.\) For this reason, one can use another approach for finding \(\det A_{j,k}.\) Note that in the first part of the proof we did not use the assumption made in Remark 1. Determine the matrix \(B_{k-j,k}\) by interchanging the nth and the \((k-n+1)\)th columns in \(A_{j,k}\) for \(n=\overline{1,(k-1)/2}.\) Obviously, \(\det A_{j,k}=(-1)^{(k-1)/2}\det B_{k-j,k},\) while the matrix \(B_{k-j,k}\) has the same structure as \(A_{k-j,k},\) with the only difference that the 1’s and d’s are interchanged. Thus, one can calculate \(\det B_{k-j,k}\) as in the first part of the proof. \(\Box \)
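The proof relies on the identity \(\text {sign}(\sigma ) = (-1)^{k - p(\sigma )},\) where \(p(\sigma )\) counts all cycles of \(\sigma \) including fixed points. As a sanity check, the sketch below compares this with the inversion-count definition from (17) on all permutations of small size. The function names are hypothetical, and permutations are stored as 0-based tuples.

```python
from itertools import permutations

def sign_by_inversions(perm):
    """(-1)^(number of inversions) for a permutation given as a tuple."""
    k = len(perm)
    inversions = sum(
        1 for m in range(k) for n in range(m + 1, k) if perm[m] > perm[n]
    )
    return -1 if inversions % 2 else 1

def sign_by_cycles(perm):
    """(-1)^(k - p), where p is the number of cycles (fixed points count)."""
    k, seen, cycles = len(perm), set(), 0
    for start in range(k):
        if start in seen:
            continue
        cycles += 1
        s = start
        while s not in seen:  # walk along the cycle containing start
            seen.add(s)
            s = perm[s]
    return -1 if (k - cycles) % 2 else 1

# Exhaustive comparison of the two definitions for k = 1, ..., 6.
agree = all(
    sign_by_inversions(p) == sign_by_cycles(p)
    for k in range(1, 7)
    for p in permutations(range(k))
)
print("definitions agree:", agree)

# One cycle-loop and (k-1)/2 cycles of length 2, as in the proof (k = 7):
reversal = tuple(6 - s for s in range(7))
print(sign_by_cycles(reversal), (-1) ** ((7 - 1) // 2))
```

The last line reproduces the value \((-1)^{(k-1)/2}\) obtained in the proof for a permutation with one fixed point and \((k-1)/2\) transpositions.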

Remark 2

Consider also the matrix \(A_{j,k}'=(a_{m,n}')_{m,n={\overline{1,k}}},\) differing from \(A_{j,k}\) in only one element: \(a_{k,k-j+1}'=0\) while \(a_{m,n}'=a_{m,n}\) for \((m,n)\ne (k,k-j+1).\) It is easy to see that

Indeed, \(\det {A_{j,k}'}\) can be obtained by zeroing precisely one summand in the formula for \(\det {A_{j,k}}.\) In particular, formula (19) implies \({{\,\mathrm{rank}\,}}A_{j,k}\ge k-1.\)

By virtue of (11) and Lemma 3, the unique solvability of the main equation (9) depends on values of \(\alpha \) and \(\beta \) as well as on the parity of j and k. There are two cases: non-degenerate and degenerate ones, depending on whether the main equation is uniquely solvable or not, respectively. According to (11) and (18), the degenerate case occurs when one of the following groups of conditions is fulfilled:

while the non-degenerate case includes the remaining groups of conditions:

According to Remark 1, this classification holds also for all possible \(j>k/2.\)

4 Properties of the spectrum

In the degenerate case, the function \(W_{\alpha ,\beta }(t)\) determined in (9) has some structural properties connected with the degeneracy of the matrix \(A_{j,k}\) and described in the following lemma.

Lemma 4

In the degenerate case, the following relations hold.

- (I) For \(\alpha =\beta =0:\)

$$\begin{aligned} \sum _{\nu = 0}^{[(k-1)/2]} W_{0,0}( 2\nu b + t) + \sum _{\nu = 1}^{[k/2]} W_{0,0}( 2\nu b - t ) = 0 \quad \text {a.e. on}\; (0, b), \end{aligned}$$ (22)

where [x] denotes the integer part of x;

- (II) for \(\alpha = 0\), \(\beta =1\) and even j, as well as

- (III) for \(\alpha =1,\) \(\beta =0\) and even \(k+j\):

$$\begin{aligned} \sum _{\nu = 0}^{(k-1)/2} (-1)^\nu W_{\alpha ,\beta }(2\nu b+t)=\sum _{\nu =1}^{(k-1)/2}(-1)^\nu W_{\alpha ,\beta }(2\nu b-t) \quad \text {a.e. on}\; (0, b); \end{aligned}$$ (23)

- (IV) for \(\alpha =\beta =1\) and even k:

$$\begin{aligned} \sum _{\nu = 0}^{k/2-1} (-1)^\nu W_{1,1}( 2\nu b + t ) + \sum _{\nu = 1}^{k/2} (-1)^\nu W_{1,1}(2\nu b - t ) = 0, \quad \text {a.e. on}\; (0, b). \end{aligned}$$ (24)

Proof

For \(k=1\) the assertion is obvious, let \(k>1.\) According to (5), we have

Thus, (22) is equivalent to the relation

which, according to (9), follows from

Analogously, (23) as well as (24) follow from

We note that (25) is obvious. Indeed, according to (10) and (11), in each column of the matrix \(A_{j,k}\) there are precisely two nonzero elements, namely \(+1\) and \(-1.\) Hence, the sum of all rows of \(A_{j,k}\) is a zero-row, which is equivalent to (25). Thus, (22) is proven.

Let us prove (26) in subcases (II) and (III). Put \(\delta (n):=(1-(-1)^n)/2\) and \(\theta :=\delta (j-m).\) Then, according to (10) and (11), for \(2j<k\) we calculate

which gives (26) for \(\alpha +\beta =1.\) In subcase (IV), relation (26) can be proved similarly. \(\Box \)

Theorem 1

The spectrum of L with account of multiplicity has the form \(\{\lambda _n\}_{n\ge 1},\) where

Moreover, in the degenerate case, a kth part of the spectrum degenerates in the following sense:

- (I) For \(\alpha =\beta =0\):

$$\begin{aligned} \lambda _{kn}=(\pi kn)^2, \quad n\in {\mathbb {N}}; \end{aligned}$$ (28)

- (II) for \(\alpha =0,\) \(\beta =1\) and even j, as well as

- (III) for \(\alpha =1,\) \(\beta =0\) and even \(k+j\):

$$\begin{aligned} \lambda _{k(n-1/2)+1/2}=(\pi k)^2\Big (n-\frac{1}{2}\Big )^2, \quad n \in {\mathbb {N}}; \end{aligned}$$ (29)

- (IV) for \(\alpha =\beta =1\) and even k:

$$\begin{aligned} \lambda _{k(n-1/2)+1}=(\pi k)^2\Big (n-\frac{1}{2}\Big )^2, \quad n\in {{\mathbb {N}}}. \end{aligned}$$ (30)

Proof

The existence of a countable set of eigenvalues of the form (27) can be established by the standard approach involving Rouché’s theorem (see, e.g., Freiling and Yurko 2001). Let us consider subcases (II) and (III) and prove (29). Expand \(W_{\alpha ,\beta }(t)\) into the Fourier series

Note that k is odd in both subcases (II) and (III). Substituting (31) together with the relation

into (23), we get

a.e. on (0, b), where

Since \(\cos (2n-1)\pi (k-\nu )b=-\cos (2n-1)\pi \nu b\) and \((-1)^{k-\nu } = -(-1)^\nu ,\) we have

It is obvious that \(T_n=k\) for all \(n={k(s-1/2)+1/2},\) \(s\in {{\mathbb {N}}}.\) For all other n, one can calculate \(T_n\) as the real part of the sum of the first k terms of a geometric progression:

Hence, by virtue of (32), we arrive at

By virtue of the orthogonality of the functional system \(\{\sin \pi k(s-1/2)t\}_{s\ge 1}\) in \(L_2(0,b),\) we obtain \(a_{k(s-1/2)+1/2}=0\) for all \(s\in {{\mathbb {N}}},\) which along with (8) and (31) gives (29). The formulae (28) and (30) in subcases (I) and (IV), respectively, can be obtained in a similar way. \(\Box \)
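Subcase (I) admits a quick numerical illustration. For \(\alpha =\beta =0\) the function \(\sin \pi knx\) vanishes at \(x=a=j/k,\) so it solves the equation with \(\lambda =(\pi kn)^2\) for every potential. The sketch below reproduces this on a grid under stated assumptions: the operator is replaced by a finite-difference matrix (not the method of the paper), so the degenerate values appear as the discrete counterparts \(4h^{-2}\sin ^2(\pi knh/2)\) of \((\pi kn)^2.\) The helper names, grid size and test potential are hypothetical.

```python
import numpy as np

def frozen_dirichlet_matrix(q, a_index):
    """Finite-difference matrix of -y'' + q(x) y(a) with y(0) = y(1) = 0."""
    n = len(q)
    h = 1.0 / (n + 1)
    lap = (2.0 * np.eye(n) - np.eye(n, k=1) - np.eye(n, k=-1)) / h**2
    load = np.zeros((n, n))
    load[:, a_index] = q  # frozen-argument term q(x_i) * y(a)
    return lap + load

n, j, k = 59, 1, 3                   # grid with h = 1/60 and a = j/k = 1/3
h = 1.0 / (n + 1)
x = np.arange(1, n + 1) * h
a_index = j * (n + 1) // k - 1       # 0-based index of the node x = j/k
q = 5.0 * np.sin(7.0 * x) + x**2     # arbitrary test potential (hypothetical)

spectrum = np.linalg.eigvals(frozen_dirichlet_matrix(q, a_index))

def discrete_mode(m):
    """Eigenvalue of the discrete Dirichlet Laplacian for the mode sin(pi m x)."""
    return 4.0 / h**2 * np.sin(m * np.pi * h / 2.0) ** 2

# Distance from the discrete analogue of (pi k m)^2 to the computed spectrum.
gaps = [np.min(np.abs(spectrum - discrete_mode(k * m))) for m in (1, 2, 3)]
print(gaps)
```

Changing `q` leaves the printed gaps at rounding level, which mirrors the statement that each kth eigenvalue carries no information on the potential.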

By the standard method (see, e.g., Freiling and Yurko 2001), using the Hadamard factorization theorem one can prove the following assertion.

Lemma 5

Any function \(\Delta _{\alpha ,\beta }(\lambda )\) of the form (7) or (8), depending on \(\alpha ,\beta \in \{0,1\},\) is determined by its zeros \(\lambda _n,\,n\ge 1,\) uniquely. Moreover, the following representation holds:

5 Solution of the inverse problem

To formulate the uniqueness theorem, along with the boundary value problem \(L=L(q(x),a,\alpha ,\beta )\) we consider a problem \({\tilde{L}}=L({\tilde{q}}(x),a,\alpha ,\beta )\) of the same form but, generally speaking, with a different potential \({\tilde{q}}(x).\) We agree that if a certain symbol \(\gamma \) denotes an object related to the problem L, then this symbol with tilde \({\tilde{\gamma }}\) denotes the corresponding object related to \({\tilde{L}},\) and \({\hat{\gamma }}:=\gamma -{\tilde{\gamma }}.\) The following uniqueness theorem holds.

Theorem 2

In the non-degenerate case, if \(\{\lambda _n\}_{n\ge 1}=\{{\tilde{\lambda }}_n\}_{n\ge 1},\) then \(q(x)={\tilde{q}}(x)\) a.e. on (0, 1), i.e. specification of the spectrum uniquely determines the potential.

In the degenerate case, let \(k>1\) and suppose that there exists an operator \(K:L_2(0,b)\rightarrow L_2(0,b)\) with invertible \(I+K,\) such that

Then coincidence of the spectra \(\{\lambda _n\}_{n\ge 1}=\{{\tilde{\lambda }}_n\}_{n\ge 1}\) also implies \(q(x)={\tilde{q}}(x)\) a.e. on (0, 1).

Proof

By virtue of Lemma 5, we have \(\Delta _{\alpha ,\beta }(\lambda )\equiv {\tilde{\Delta }}_{\alpha ,\beta }(\lambda )\) and, hence, Lemma 1 gives \(W_{\alpha ,\beta }(t)={\tilde{W}}_{\alpha ,\beta }(t)\) a.e. on (0, 1). In the non-degenerate case, unique solvability of the main equation (9) implies the assertion of the theorem.

Consider the degenerate case. Let \(t' = t\) for even k and \(t' = b-t\) for odd k. From (5) and (35), it follows that

in \(L_2(0, b)\). Since \(W_{\alpha ,\beta }(t)={\tilde{W}}_{\alpha ,\beta }(t)\) a.e. on (0, 1), by virtue of (9) and (10), we get

which along with (36) implies

Using the invertibility of \(I+K\) we obtain \(R_{k-j} {\hat{q}}(t)=0\) and, hence, \(R_{k-j+1}{\hat{q}}(t)=0\) a.e. on (0, b). Therefore, subtracting the main equation for the problem \({\tilde{L}}:\)

termwise from the main Eq. (9), we obtain \(A'_{j,k}R{\hat{q}}(t)=0\) a.e. on (0, b), where the matrix \(A'_{j,k}\) is determined in Remark 2. By virtue of the invertibility of the matrix \(A'_{j,k}\) and the operator R, we arrive at the assertion of the theorem in the degenerate case. \(\Box \)

Note that here and below, for any operator A, the designation A(f(t)) with f(t) in parentheses (unlike Af(t)) means that the variable t in A(f(t)) is mute, i.e., for example, the relation \(g(t)=A(f(t)),\) \(t\in (0,t_1),\) may be non-equivalent to \(g(t_1-t)=A(f(t_1-t)),\) \(t\in (0,t_1).\)

Remark 3

In particular, if \(K=I,\) then the condition (35) is equivalent to the local evenness of the potential with respect to the point a, i.e. \(q(a-t)=q(a+t)\), \(0<t<b.\) However, potentials that are locally odd with respect to a are not covered by (35) and cannot be admitted. Indeed, if \(K=-I,\) then, e.g., the spectrum of L(q(x), 1 / 2, 0, 0) coincides with the one of L(0, 1 / 2, 0, 0) and, hence, carries no information on q(x). The case of constant K (i.e. K(f) is independent of f) corresponds to a priori specification of q(x) on the subinterval \((a-b,a).\)

Moreover, from Remark 2 and the proof of Theorem 2 it can be seen that instead of (35) one can use other restrictions ensuring uniqueness of solution of the inverse problem, e.g.

for some \(\nu \in \{1,\ldots ,j-1\},\) where the operator \(I+K: L_2(0,b)\rightarrow L_2(0,b)\) is invertible; or

for some \(\nu \in \{j,\ldots ,k-j-1\},\) where the operator \(I+cdK: L_2(0,b)\rightarrow L_2(0,b)\) is invertible; or

for some \(\nu \in \{k-j,\ldots ,k-1\},\) where \(I+dK: L_2(0,b)\rightarrow L_2(0,b)\) is an invertible operator. For example, (35) coincides with (37) for \(\nu =0.\)

The following lemma contains the inverse assertion to Lemma 5. Moreover, while Theorem 1 actually means that fulfilling one of the conditions (22)–(24) implies fulfilling one of (28)–(30), respectively, the following lemma also inverts this statement.

Lemma 6

- (i) Fix \(\alpha ,\beta \in \{0,1\}.\) Let arbitrary complex numbers \(\lambda _n,\) \(n\ge 1,\) of the form (27) be given. Then there exists a function \(W_{\alpha ,\beta }(t)\in L_2(0,1)\) such that the function \(\Delta _{\alpha ,\beta }(\lambda )\) determined by (34) has the form (7) or (8) depending on whether \(\alpha =\beta \) or \(\alpha \ne \beta ,\) respectively.

- (ii) For combinations of \(\alpha ,\) \(\beta \) and k satisfying one of the groups of conditions in (20): if additionally the corresponding condition in (28)–(30) of degeneration of the numbers \(\lambda _n\) is fulfilled, then this function \(W_{\alpha ,\beta }(t)\) satisfies one of the conditions (22)–(24), respectively.

Proof

The first assertion can be proved by the well-known method (see, e.g., Lemma 3.4.2 in Marchenko 1986 or Lemma 3.3 in Buterin 2007). It remains to prove (ii). Consider subcases (II) and (III), (I) and (IV) can be treated in a similar way. Substituting (29) into (8), we get

i.e. in the Fourier series (31) we have \(a_n=0,\) if \(n/k-1/(2k)+1/2\in {{\mathbb {N}}}.\) Hence, according to (33), we get

which along with (32) gives (23). \(\Box \)

Along with Theorem 1, the following theorem means that the properties of the spectrum stated in Theorem 1 are necessary and sufficient conditions for solvability of the inverse problem, i.e. they completely characterize the spectrum of the boundary value problem L in both the degenerate and in the non-degenerate cases.

Theorem 3

Non-degenerate case: Let \(\alpha ,\) \(\beta \), k and j satisfy one of the groups of conditions in (21). Then for an arbitrary sequence of complex numbers \(\{\lambda _n\}_{n\ge 1}\) of the form (27), there exists a unique complex-valued function \(q(x)\in L_2(0,1)\) such that \(\{\lambda _n\}_{n\ge 1}\) is the spectrum of the boundary value problem \(L(q(x),j/k,\alpha ,\beta ).\)

Degenerate case: Let \(\alpha ,\) \(\beta \), k and j satisfy one of the groups of conditions in (20). Then for an arbitrary sequence of complex numbers \(\{\lambda _n\}_{n\ge 1}\) of the form (27) satisfying the corresponding degeneration condition in (28)–(30) there exists a function \(q(x)\in L_2(0,1)\) (not unique) such that \(\{\lambda _n\}_{n\ge 1}\) is the spectrum of the boundary value problem \(L(q(x),j/k,\alpha ,\beta ).\)

Proof

Using the sequence \(\{\lambda _n\}_{n\ge 1}\) of the form (27) construct the function \(\Delta _{\alpha ,\beta }(\lambda )\) by formula (34). By virtue of Lemma 6, this function \(\Delta _{\alpha ,\beta }(\lambda )\) has the representation (7) or (8), depending on the values \(\alpha \) and \(\beta ,\) with a certain function \(W_{\alpha ,\beta }(t)\in L_2(0,1).\) In the non-degenerate case the main equation (9) with this \(W_{\alpha ,\beta }(t)\) has a unique solution \(q(x)\in L_2(0,1).\) It is easy to see that \(\{\lambda _n\}_{n\ge 1}\) is the spectrum of the boundary value problem \(L(q(x),j/k,\alpha ,\beta ).\)

In the degenerate case, by virtue of Lemma 6, this function \(W_{\alpha ,\beta }(t)\) also satisfies the corresponding condition in (22)–(24). Let us show the solvability of the main equation (9), which, in turn, is equivalent to the equation \(Y=A_{j,k}X\) with \(Y=(y_1,\ldots ,y_k)^T=2(-1)^{\alpha \beta } Q W_{\alpha ,\beta }(t)\) and the unknown column \(X=Rq(t).\) Consider subcases (II)–(IV). Then, according to (5), (23) and (24), we have

which along with (26) and \({{\,\mathrm{rank}\,}}A_{j,k}=k-1\) (see Remark 2) give \({\mathrm {rank}}[A_{j,k},Y]={\mathrm {rank}}A_{j,k}.\) Since \(A_{j,k}\) is a numerical matrix, elements of X and Y belong to one and the same linear space (in our case \(L_2(0,b)).\) Hence, the equation \(Y=A_{j,k}X\) has a solution \(X\in (L_2(0,b))^k.\) Put \(q(x)=R^{-1}X\) and consider the problem \(L:=L(q(x),j/k,\alpha ,\beta )\) with this q(x). Obviously, \(\{\lambda _n\}_{n\ge 1}\) is the spectrum of L. For subcase (I) the arguments are similar. \(\Box \)

Remark 4

Theorems 1 and 3 also imply that the amount of information on the potential carried by the spectrum may significantly vary between arbitrarily close values of a. Indeed, while, e.g., for \(\alpha =\beta =0\) and \(a=a_0:=1/2\) a half of the spectrum degenerates, for \(a=a_k:=(k-1)/(2k)\) with even k so does only its 2kth part, but meanwhile \(a_k\rightarrow a_0\) as \(k\rightarrow \infty .\)

However, it is easy to see that for any \(a_0\in [0,1]\) and for all \(n\in {{\mathbb {N}}}\) there exists \(\varepsilon >0\) such that the spectrum of the problem \(L(q(x),a,\alpha ,\beta )\) with fixed \(\alpha ,\,\beta \in \{0,1\}\) can degenerate, generally speaking, only in its k-th part with \(k>n\) as soon as \(|a-a_0|<\varepsilon .\) Moreover, for \((\alpha ,\beta )\ne (0,0),\) in each vicinity of \(a_0\) there are infinitely many a’s such that the corresponding problems \(L(q(x),a,\alpha ,\beta )\) belong to the non-degenerate case.
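The example in Remark 4 can be made concrete with exact rational arithmetic. The sketch below assumes only what the remark states for \(\alpha =\beta =0\): if \(a=j/k\) in lowest terms, a kth part of the spectrum degenerates. The function name `degenerate_part` is a hypothetical helper.

```python
from fractions import Fraction

def degenerate_part(a):
    """Denominator k of a = j/k in lowest terms.

    For alpha = beta = 0 a kth part of the spectrum degenerates,
    so a larger k means a smaller degenerate share.
    """
    return Fraction(a).denominator

a0 = Fraction(1, 2)
print("a_0 =", a0, "-> k =", degenerate_part(a0))

# a_k = (k-1)/(2k) with even k approaches a_0 = 1/2, yet its reduced
# denominator 2k grows, so an ever smaller part of the spectrum degenerates.
for k in (2, 4, 10, 100, 1000):
    a_k = Fraction(k - 1, 2 * k)
    print(a_k, "distance to a_0:", abs(a_k - a0), "-> k =", degenerate_part(a_k))
```

For even k the numerator \(k-1\) is odd and mutually prime with k, so the fraction \((k-1)/(2k)\) is already in lowest terms, which is why the loop reports the denominator 2k.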

The proof of Theorem 3 is constructive and gives algorithms for solving the inverse problem. The following algorithm can be applied in the non-degenerate case.

Algorithm 1

Let the spectrum \(\{\lambda _n\}_{n\ge 1}\) of a boundary value problem \(L(q(x),a,\alpha ,\beta )\) in the non-degenerate case be given.

- 1. Construct the function \(\Delta _{\alpha ,\beta }(\lambda )\) by formula (34).

- 2. Calculate the function \(W_{\alpha ,\beta }(x),\) inverting the Fourier transform in the corresponding representation (7) or (8).

- 3. Find q(x) as a solution of the main equation (9), i.e. \(q(x)=2(-1)^{\alpha \beta }R^{-1}A_{j,k}^{-1}QW_{\alpha ,\beta }(x).\)

In the degenerate case one can use the following algorithm. Since in this case the solution of the inverse problem is not unique, for definiteness the algorithm is confined to the class of potentials obeying the additional condition (35).

Algorithm 2

Let the spectrum \(\{\lambda _n\}_{n\ge 1}\) of a boundary value problem \(L(q(x),a,\alpha ,\beta )\) along with the operator K in (35) be given.

- 1. Construct the function \(\Delta _{\alpha ,\beta }(\lambda )\) by formula (34).

- 2. Calculate the function \(W_{\alpha ,\beta }(x),\) inverting the Fourier transform in the corresponding representation (7) or (8).

- 3. Calculate q(x) on the interval \((a-b,a)\) by the formula

$$\begin{aligned} q(a-x)=K\Big ((I+K)^{-1}\Big (2(-1)^{\alpha \beta +\beta +1}W_{\alpha ,\beta }(1-x)\Big )\Big ), \quad 0<x<b. \end{aligned}$$

- 4. Put

$$\begin{aligned} f(x)=\left\{ \begin{array}{l}q(a-x),\;\;\hbox {for even} \;\;k,\\ q(a-b+x),\;\;\hbox {for odd} \;\;k, \end{array}\right. \quad 0<x<b, \end{aligned}$$

and find q(x) on the entire interval (0, 1) by the formula

$$\begin{aligned} q(x)=R^{-1}(A_{j,k}')^{-1}Y_1(x), \quad Y_1(x)=2(-1)^{\alpha \beta }QW_{\alpha ,\beta }(x) - (\underbrace{0,\ldots ,0}_{\hbox {k-1 zeros}},cf(x))^T, \end{aligned}$$

where the matrix \(A_{j,k}'\) is determined in Remark 2.

In the degenerate case, Algorithm 2 also allows one to describe the set of all iso-spectral potentials q(x), i.e. of those for which the corresponding problems \(L(q(x),a,\alpha ,\beta )\) (with fixed \(a,\,\alpha \) and \(\beta )\) have one and the same spectrum \(\{\lambda _n\}_{n\ge 1}.\) For this purpose, in the third step of Algorithm 2 one should use a constant operator K, i.e. one for which there exists a function \(p(x)\in L_2(0,b)\) such that

$$\begin{aligned} Kf=p \qquad (38) \end{aligned}$$

for all \(f(x)\in L_2(0,b).\) Indeed, the following theorem holds.

Theorem 4

If the function p(x) in (38) ranges over \(L_2(0,b),\) then the corresponding functions q(x) constructed by Algorithm 2 form the set of all iso-spectral potentials for the given spectrum \(\{\lambda _n\}_{n\ge 1}.\)

Proof

It is clear that for the operator K of the form (38) and for any \(p(x)\in L_2(0,b),\) Algorithm 2 gives iso-spectral potentials q(x) with \(q(x)=p(a-x)\) a.e. on \((a-b,a).\) On the other hand, by virtue of Theorem 2, for a fixed spectrum the values of q(x) on \((a-b,a)\) uniquely determine q(x) on the rest of the interval (0, 1). Hence, no other iso-spectral potentials exist. \(\Box \)
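
The first assertion of the proof can be checked by a short computation. The sketch below assumes that (38) means \(Kf=p\) for every \(f(x)\in L_2(0,b)\) and that \((I+K)^{-1}\) denotes the inverse of the map \(f\mapsto f+Kf;\) the symbol g is introduced here only as shorthand for the argument appearing in the third step of Algorithm 2. Under these assumptions,

$$\begin{aligned} g(x)&:=2(-1)^{\alpha \beta +\beta +1}W_{\alpha ,\beta }(1-x), \\ (I+K)f&=f+p \;\;\Longrightarrow \;\; (I+K)^{-1}g=g-p, \\ q(a-x)&=\big (K(g-p)\big )(x)=p(x), \quad 0<x<b, \end{aligned}$$

so that the substitution \(t=a-x\) gives \(q(t)=p(a-t)\) for \(a-b<t<a,\) whatever the function \(W_{\alpha ,\beta }(x)\) is. In particular, the function f(x) in the fourth step then becomes \(f(x)=p(x)\) for even k and \(f(x)=q(a-b+x)=p(b-x)\) for odd k.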

References

Albeverio S, Hryniv RO, Nizhnik LP (2007) Inverse spectral problems for non-local Sturm–Liouville operators. Inverse Probl 23:523–535

Beals R, Deift P, Tomei C (1988) Direct and inverse scattering on the line. Mathematical surveys and monographs, vol 28. AMS, Providence

Bondarenko N, Yurko V (2018) An inverse problem for Sturm–Liouville differential operators with deviating argument. Appl Math Lett 83:140–144

Bondarenko NP, Buterin SA, Vasiliev SV (2019) An inverse spectral problem for Sturm–Liouville operators with frozen argument. J Math Anal Appl 472(1):1028–1041

Borg G (1946) Eine Umkehrung der Sturm–Liouvilleschen Eigenwertaufgabe. Acta Math 78:1–96

Buterin SA (2007) On an inverse spectral problem for a convolution integro-differential operator. Results Math 50(3–4):173–181

Buterin SA, Vasiliev SV (2019) On recovering a Sturm–Liouville-type operator with the frozen argument rationally proportioned to the interval length. J Inverse Ill-Posed Probl 27(3):429–438

Buterin SA, Yurko VA (2019) An inverse spectral problem for Sturm–Liouville operators with a large constant delay. Anal Math Phys 9(1):17–27

Freiling G, Yurko VA (2001) Inverse Sturm–Liouville problems and their applications. NOVA Science Publishers, New York

Freiling G, Yurko VA (2012) Inverse problems for Sturm–Liouville differential operators with a constant delay. Appl Math Lett 25:1999–2004

Levitan BM (1987) Inverse Sturm–Liouville problems, Nauka, Moscow, 1984; English transl. VNU Sci. Press, Utrecht

Lomov IS (2014) Loaded differential operators: convergence of spectral expansions. Differ Equ 50(8):1070–1079

Marchenko VA (1986) Sturm–Liouville operators and their applications, Naukova Dumka, Kiev, 1977. English transl, Birkhäuser

Nakhushev AM (2012) Loaded equations and their applications. Nauka, Moscow

Nizhnik LP (2009) Inverse eigenvalue problems for nonlocal Sturm–Liouville operators. Methods Funct Anal Top 15(1):41–47

Nizhnik LP (2010) Inverse nonlocal Sturm–Liouville problem. Inverse Probl 26:125006

Nizhnik LP (2011) Inverse spectral nonlocal problem for the first order ordinary differential equation. Tamkang J Math 42(3):385–394

Vladičić V, Pikula M (2016) An inverse problem for Sturm–Liouville-type differential equation with a constant delay. Sarajevo J Math 12(24) no.1, 83–88

Yang C-F (2014) Inverse nodal problems for the Sturm–Liouville operator with a constant delay. J Differ Equ 257(4):1288–1306

Yurko VA (2002) Method of spectral mappings in the inverse problem theory. Inverse and Ill-posed Problems Series. VSP, Utrecht

Acknowledgements

This research was supported by the Ministry of Education and Science of the Russian Federation (Grant 1.1660.2017/4.6).

Additional information

Communicated by Luz de Teresa.

Cite this article

Buterin, S., Kuznetsova, M. On the inverse problem for Sturm–Liouville-type operators with frozen argument: rational case. Comp. Appl. Math. 39, 5 (2020). https://doi.org/10.1007/s40314-019-0972-8

DOI: https://doi.org/10.1007/s40314-019-0972-8

Keywords

- Sturm–Liouville-type operator

- Functional-differential operator

- Frozen argument

- Inverse spectral problem