Abstract

In this paper, we consider Marshall and Olkin’s family of distributions. The parent (baseline) distribution is taken to be a scaled family of distributions. Two models are considered: (i) the modified proportional hazard rate scale model and (ii) the modified proportional reversed hazard rate scale model. Stochastic comparison results in terms of the usual stochastic, hazard rate and reversed hazard rate orders are established for order statistics arising from two sets of independent observations following these models. Most of the sufficient conditions are expressed through various majorization-type partial orderings. Further, a multiple-outlier setup is considered, and various stochastic orders between the smallest and largest order statistics are developed. Several numerical examples are provided to illustrate the effectiveness of the established theoretical results.


1 Introduction

A flexible family of statistical models is often required for the purpose of data analysis. Various methods have been developed to add flexibility to a given statistical distribution. For example, one may consider powers of a given survival or distribution function to introduce a new family of distribution functions. Based on this notion, several useful lifetime distributions such as Weibull, exponentiated Weibull, gamma, exponentiated exponential and many others have been introduced in the literature. For additional discussion, we refer to Azzalini [2] and Mudholkar and Srivastava [14]. Besides these, Marshall and Olkin [12] developed a method to add an extra parameter to any distribution function, which results in a new family of distributions. Let F(x) and \({\bar{F}}(x)=1-F(x)\) be the distribution and survival functions of a baseline distribution, respectively. We assume that the distributions have nonnegative support. Then,

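\[
G(x;\alpha )=\frac{F(x)}{1-{\bar{\alpha }}{\bar{F}}(x)}\quad \text{and}\quad H(x;\alpha )=\frac{\alpha F(x)}{1-{\bar{\alpha }}F(x)}, \tag{1.1}
\]

where \(x,\alpha \in \mathcal {R^+}\) and \({\bar{\alpha }}=1-\alpha \), are distribution functions of new families of distributions. Here, \(\alpha \) is called the tilt parameter. Note that the family with distribution function \(H(x;\alpha )\) can be deduced from that with distribution function \(G(x;\alpha )\) if we substitute \(1/\alpha \) in place of \(\alpha \). Very recently, Balakrishnan, Barmalzan and Haidari [3] considered proportional hazard rate and proportional reversed hazard rate models as the baseline distributions in \(G(x;\alpha )\) and \(H(x;\alpha )\), respectively, to introduce two new statistical models. They called these the modified proportional hazard rate and modified proportional reversed hazard rate models, respectively. These are given by

\[
G(x;\alpha ,\lambda )=\frac{1-{\bar{F}}^{\lambda }(x)}{1-{\bar{\alpha }}{\bar{F}}^{\lambda }(x)}\quad \text{and}\quad H(x;\alpha ,\eta )=\frac{\alpha F^{\eta }(x)}{1-{\bar{\alpha }}F^{\eta }(x)}, \tag{1.2}
\]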

where \(x,\alpha ,\lambda ,\eta \in \mathcal {R^+}\). In (1.2), \(\lambda \) and \(\eta \) are, respectively, known as the proportional hazard rate and proportional reversed hazard rate parameters. It is noted that when \(\alpha =1\), the distribution functions \({G}(x;\alpha ,\lambda )\) and \({H}(x;\alpha ,\eta )\) in (1.2) reduce to the proportional hazard rate and proportional reversed hazard rate models, respectively. We consider proportional hazard rate and proportional reversed hazard rate models for the scale family of distributions in \({G}(x;\alpha ,\lambda )\) and \({H}(x;\alpha ,\eta )\), respectively. Note that the introduction of a scale parameter leads to the accelerated life model. Henceforth, the resulting new models are called the modified proportional hazard rate scale (MPHRS) and modified proportional reversed hazard rate scale (MPRHRS) models, respectively. The distribution functions of these models are given by

\[
G(x;\alpha ,\lambda ,\theta )=\frac{1-{\bar{F}}^{\lambda }(x\theta )}{1-{\bar{\alpha }}{\bar{F}}^{\lambda }(x\theta )}\quad \text{and}\quad H(x;\alpha ,\eta ,\theta )=\frac{\alpha F^{\eta }(x\theta )}{1-{\bar{\alpha }}F^{\eta }(x\theta )}, \tag{1.3}
\]

where \(x,\alpha ,\lambda ,\eta ,\theta \in \mathcal {R^+}\). We denote \(X\sim MPHRS(\alpha ,\lambda ,\theta ;{\bar{F}})\) and \(X\sim MPRHRS(\alpha ,\eta ,\theta ;F)\) if X has the distribution functions \({G}(x;\alpha ,\lambda ,\theta )\) and \({H}(x;\alpha ,\eta ,\theta )\), respectively. These distribution functions reduce to the models given by (1.5) and (1.6), respectively, in Balakrishnan, Barmalzan and Haidari [3] when the scale parameter \(\theta \) is equal to one. Further, when \(\theta =1\) and \(\alpha =1\), the models in (1.3) reduce to the proportional hazard rate and proportional reversed hazard rate models.
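The two model families can be explored numerically. The following is a minimal sketch, assuming the MPHRS and MPRHRS distribution functions take the Marshall-Olkin forms \((1-{\bar{F}}^{\lambda }(x\theta ))/(1-{\bar{\alpha }}{\bar{F}}^{\lambda }(x\theta ))\) and \(\alpha F^{\eta }(x\theta )/(1-{\bar{\alpha }}F^{\eta }(x\theta ))\) with \({\bar{\alpha }}=1-\alpha \). The function names and the exponential baseline are illustrative choices, not part of the paper.

```python
import math

def mphrs_cdf(x, alpha, lam, theta, sf):
    """Assumed MPHRS cdf: (1 - u) / (1 - (1 - alpha) * u), u = sf(x * theta) ** lam."""
    u = sf(x * theta) ** lam
    return (1.0 - u) / (1.0 - (1.0 - alpha) * u)

def mprhrs_cdf(x, alpha, eta, theta, cdf):
    """Assumed MPRHRS cdf: alpha * v / (1 - (1 - alpha) * v), v = cdf(x * theta) ** eta."""
    v = cdf(x * theta) ** eta
    return alpha * v / (1.0 - (1.0 - alpha) * v)

# Illustrative baseline (an assumption): unit exponential.
def exp_sf(t):
    return math.exp(-t)

def exp_cdf(t):
    return 1.0 - math.exp(-t)

x = 0.7
# With alpha = 1 and theta = 1 the models reduce to the PHR and PRHR models.
phr_gap = mphrs_cdf(x, 1.0, 2.5, 1.0, exp_sf) - (1.0 - exp_sf(x) ** 2.5)
prhr_gap = mprhrs_cdf(x, 1.0, 2.5, 1.0, exp_cdf) - exp_cdf(x) ** 2.5
# Replacing alpha by 1/alpha in G gives H (here with lam = eta = theta = 1).
tilt_gap = mphrs_cdf(x, 1.0 / 0.4, 1.0, 1.0, exp_sf) - mprhrs_cdf(x, 0.4, 1.0, 1.0, exp_cdf)
print(phr_gap, prhr_gap, tilt_gap)
```

All three printed gaps should be zero up to floating-point rounding, which mirrors the reductions described in the text.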

In this paper, we derive various stochastic orders between order statistics arising from heterogeneous MPHRS and MPRHRS models. Order statistics are often useful in various areas such as life testing, reliability theory, operations research and statistical inference. If \(X_{1},\ldots ,X_{n}\) are n random observations, then their order statistics are denoted by \(X_{1:n}\le \cdots \le X_{n:n}\). For an \((n-k+1)\)-out-of-n system, the kth order statistic \(X_{k:n}\) corresponds to the system lifetime. Specifically, for \(k=1\) and \(k=n\), the order statistics \(X_{1:n}\) and \(X_{n:n}\) represent the lifetimes of the series and parallel systems, respectively. So far, several attempts have been made at stochastic comparisons of order statistics. Below, we present a few recent contributions. Barmalzan et al. [5] established sufficient conditions for comparing the smallest claim amounts in terms of the usual stochastic and hazard rate orders for a general scale model. Bashkar et al. [6] discussed stochastic comparisons of the extreme order statistics arising from independent heterogeneous exponentiated scale samples. Torrado [18] established various ordering results for the comparisons of minimum and maximum order statistics arising from scale models when one set of scale parameters majorizes the other. Besides these, many authors have recently studied stochastic comparison results between order statistics arising from various lifetime distributions. For instance, see Kundu et al. [11], Chowdhury and Kundu [7], Balakrishnan, Nanda and Kayal [4], Nadeba and Torabi [15], Kayal [9] and Zhang et al. [19].

For \(i=1,\ldots ,n\), let \(\{X_{1},\ldots ,X_{n}\}\) and \(\{Y_{1},\ldots ,Y_{n}\}\) be two sets of n independent random observations such that \(X_{i} \sim MPHRS(\alpha _{i},\lambda _{i},\theta _{i};{\bar{F}}) \) or \(MPRHRS(\alpha _{i},\eta _{i},\theta _{i};F)\) and \(Y_{i} \sim MPHRS(\beta _{i},\mu _{i},\delta _{i};{\bar{F}})\) or \(MPRHRS(\beta _{i},\gamma _{i},\delta _{i};F)\). In this setup, we compare the order statistics (smallest, largest and kth) arising from these two sets in terms of several stochastic orderings. The concept of stochastic orders has a wide variety of applications in survival analysis, reliability theory, insurance and operations research. Note that in various real-life situations, technical systems usually have a complex structure. Because of this, it is often difficult to study the characteristics of the system lifetime. One solution is to replace some components by new components and then approximate important characteristics such as the survival, hazard rate and reversed hazard rate functions. The theory of stochastic orderings plays an important role for this purpose. For relevant details on various stochastic orderings and their applications, we refer to Shaked and Shanthikumar [17].

The paper is arranged as follows. In Sect. 2, we present some preliminary results and recall some basic definitions. Section 3 contains the main results, which deal with stochastic orders between order statistics arising from two sets of random variables following the MPHRS model. Similar results for the MPRHRS model are also obtained. We consider multiple-outlier models in Sect. 4 and establish some comparisons between minimum and maximum order statistics in terms of the usual stochastic, hazard rate, reversed hazard rate and likelihood ratio orderings. Section 5 concludes the paper with some remarks.

In this paper, we focus only on random variables defined on \(\mathcal {R^+}\). Throughout, the terms ‘increasing’ and ‘decreasing’ are used in the nonstrict sense. The ratio of two functions, whenever it occurs, is assumed to be well defined. Further, ‘\(\overset{sign}{=}\)’ denotes that both sides of the equality have the same sign.

2 Preliminaries

In this section, we review some well-known definitions and concepts on stochastic orderings and majorizations, which are mainly used throughout the text. Various types of stochastic orders have been developed and studied by many authors in the literature. We consider some of them. Suppose X and Y are two nonnegative random variables having probability density functions \(f_X\) and \(f_Y\), cumulative distribution functions \(F_X\) and \(F_Y\), survival functions \({{\bar{F}}}_X=1-F_X\) and \({{\bar{F}}}_Y=1-F_Y\), hazard rates \(r_X=f_X/{{\bar{F}}}_X\) and \(r_Y=f_Y/{{\bar{F}}}_Y\), and reversed hazard rates \({{\tilde{r}}}_X=f_X/F_X\) and \({{\tilde{r}}}_Y=f_Y/F_Y\), respectively.

Definition 2.1

A random variable X is said to be smaller than Y in the

- likelihood ratio order (denoted by \(X\le _{\text {lr}}Y\)) if \(f_Y(x)/f_X(x)\) is increasing in x,

- hazard rate order (denoted by \(X\le _{\text {hr}}Y\)) if \(r_X(x)\ge r_Y(x)\) for all x,

- reversed hazard rate order (denoted by \(X\le _{\text {rh}}Y\)) if \({{\tilde{r}}}_X(x)\le {{\tilde{r}}}_Y(x)\) for all x,

- usual stochastic order (denoted by \(X\le _{\text {st}}Y\)) if \({{\bar{F}}}_X(x)\le {{\bar{F}}}_Y(x)\) for all x.

It is well known that the likelihood ratio order implies the hazard rate and reversed hazard rate orders, and each of the hazard rate and reversed hazard rate orders implies the usual stochastic order. Interested readers may refer to Shaked and Shanthikumar [17] for extensive discussions on various stochastic orderings and the relations between them. Now, we discuss a few majorization and related orders which are useful in the subsequent sections. Note that the notion of majorization is quite useful in establishing various important inequalities. We refer to Marshall et al. [13] for a comprehensive discussion of this topic. Let \({\mathbb {A}} \subset {\mathbb {R}}^{n},\) where \({\mathbb {R}}^{n}\) is an n-dimensional Euclidean space. Consider \({\varvec{a}} = \left( a_{1},\ldots ,a_{n}\right) \) and \({\varvec{b}} = \left( b_{1},\ldots ,b_{n}\right) \) to be two n-dimensional vectors belonging to \({\mathbb {A}}\). Further, we assume the ordered coordinates of the vectors \({\varvec{a}}\) and \({\varvec{b}}\) are \(a_{1:n}\le \cdots \le a_{n:n}\) and \(b_{1:n}\le \cdots \le b_{n:n}\), respectively.

Definition 2.2

A vector \({\varvec{a}}\) is said to be

- majorized by another vector \({\varvec{b}}\), denoted by \({\varvec{a}}\preceq ^{m} {\varvec{b}}\), if for each \(k=1,\ldots ,n-1\), we have \(\sum _{i=1}^{k}a_{i:n}\ge \sum _{i=1}^{k}b_{i:n}\) and \(\sum _{i=1}^{n}a_{i:n}=\sum _{i=1}^{n}b_{i:n}\),

- weakly submajorized by another vector \({\varvec{b}}\), denoted by \({\varvec{a}}\preceq _{w} {\varvec{b}}\), if for each \(k=1,\ldots ,n\), we have \(\sum _{i=k}^{n}a_{i:n}\le \sum _{i=k}^{n}b_{i:n}\),

- weakly supermajorized by another vector \({\varvec{b}}\), denoted by \({\varvec{a}}\preceq ^{w} {\varvec{b}}\), if for each \(k=1,\ldots ,n\), we have \(\sum _{i=1}^{k}a_{i:n}\ge \sum _{i=1}^{k}b_{i:n}\),

- p-larger than the vector \({\varvec{b}}\), denoted by \({\varvec{a}}\succeq ^{p}{\varvec{b}}\), if \(\prod _{i=1}^{k}a_{i:n} \le \prod _{i=1}^{k}b_{i:n}\) for \(k=1,\ldots ,n\),

- reciprocally majorized by another vector \({\varvec{b}}\), denoted by \({\varvec{a}}\preceq ^{rm}{\varvec{b}}\), if \(\sum _{i=1}^{k}a_{i:n}^{-1}\le \sum _{i=1}^{k}b_{i:n}^{-1}\) for all \(k=1,\ldots ,n\).

It is clear that \({\varvec{a}}\preceq ^{m} {\varvec{b}}\) implies both \({\varvec{a}}\preceq _{w} {\varvec{b}}\) and \({\varvec{a}}\preceq ^{w} {\varvec{b}}.\) Also, \({\varvec{a}}\preceq ^{w} {\varvec{b}}\) implies \({\varvec{a}}\preceq ^{p}{\varvec{b}}.\) The converse is not always true. For example, \((2,3)\preceq ^{p}(1,5.5)\), but it is easy to see that the weak supermajorization order does not hold in this case, since \(1+5.5>2+3\). Next, we present the definition of Schur-convex and Schur-concave functions.
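The orders in Definition 2.2 reduce to comparisons of partial sums or partial products of the sorted coordinates, so they are easy to check by computer. The sketch below is illustrative only: the function names are hypothetical, each function tests whether its first argument is the larger vector in the stated order, and a small tolerance `tol` is an added assumption to absorb floating-point rounding.

```python
from itertools import accumulate
import operator

def weakly_supermajorizes(a, b, tol=1e-12):
    """a >=^w b: sums of the k smallest entries of a do not exceed those of b."""
    pa, pb = accumulate(sorted(a)), accumulate(sorted(b))
    return all(sa <= sb + tol for sa, sb in zip(pa, pb))

def weakly_submajorizes(a, b, tol=1e-12):
    """a >=_w b: sums of the k largest entries of a are at least those of b."""
    pa = accumulate(sorted(a, reverse=True))
    pb = accumulate(sorted(b, reverse=True))
    return all(sa >= sb - tol for sa, sb in zip(pa, pb))

def majorizes(a, b, tol=1e-12):
    """a >=^m b: weak supermajorization together with equal totals."""
    return weakly_supermajorizes(a, b, tol) and abs(sum(a) - sum(b)) <= tol

def p_larger(a, b, tol=1e-12):
    """a >=^p b: products of the k smallest entries of a do not exceed those of b."""
    pa = accumulate(sorted(a), operator.mul)
    pb = accumulate(sorted(b), operator.mul)
    return all(qa <= qb + tol for qa, qb in zip(pa, pb))

def reciprocally_majorizes(a, b, tol=1e-12):
    """a >=^rm b: sums of reciprocals of the k smallest entries of a dominate those of b."""
    pa = accumulate(1.0 / v for v in sorted(a))
    pb = accumulate(1.0 / v for v in sorted(b))
    return all(sa >= sb - tol for sa, sb in zip(pa, pb))

# The example from the text: (1, 5.5) is p-larger than (2, 3),
# but it does not weakly supermajorize (2, 3).
print(p_larger((1, 5.5), (2, 3)), weakly_supermajorizes((1, 5.5), (2, 3)))
```

The last line reproduces the example in the text: the p-larger order holds while weak supermajorization fails.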

Definition 2.3

A function \(g:{\mathbb {R}}^n\rightarrow {\mathbb {R}}\) is said to be Schur-convex (Schur-concave) on \({\mathbb {R}}^n\) if \({\varvec{a}}\overset{m}{\succeq }{\varvec{b}}\Rightarrow g({\varvec{a}})\ge ( \le )g({\varvec{b}})\) for all \({\varvec{a}}, {\varvec{b}} \in {\mathbb {R}}^n.\)

Henceforth, we use the notations: \((i)~{\mathcal {D}}_{+}=\{(x_1,\ldots ,x_n):x_{1}\ge x_{2}\ge \cdots \ge x_{n}>0\}\) and \((ii)~{\mathcal {E}}_{+}=\{(x_1,\ldots ,x_n):0<x_{1}\le x_{2}\le \cdots \le x_{n}\}.\) Further, we write \(\varvec{1}_{n}\) for the n-dimensional vector \((1,\ldots ,1)\). The following lemma is useful in proving some results in the next section.

Lemma 2.1

[16] Suppose \(X_1,\ldots ,X_n\) are independent random variables with \(X_i\sim {{\bar{F}}}_{X}(x;\theta _i),\theta _i>0\) for \(i=1,\ldots ,n.\) Further, suppose \({{\bar{F}}}_{X}(x;\theta _i)(F_{X}(x;\theta _i))\) are differentiable. If \(\ln {{\bar{F}}}_{X}(x;\theta _i)(\ln F_{X}(x,\theta _i))\) is monotone and convex in \(\theta _i,\) then the survival functions of \(X_{k:n},k=1,\ldots ,n,\) are Schur-convex (Schur-concave) in \((\theta _1,\ldots ,\theta _n),\) where \(X_{1:n},\ldots ,X_{n:n}\) are order statistics constructed from \(X_i\)’s, \(i=1,\ldots ,n.\)

3 Main Results

In this section, we develop some stochastic orderings between the lifetimes of two \((n-k+1)\)-out-of-n systems associated with heterogeneous MPHRS and MPRHRS components. The heterogeneity has been considered in the model parameters. First, consider the reliability systems with MPHRS-distributed components.

3.1 Results for MPHRS Model

This subsection deals with some stochastic orderings between order statistics of two sets of independent observations having heterogeneous MPHRS models. At first, we present results for the maximum order statistics. Note that the maximum order statistic can be interpreted as the lifetime of a parallel system. Let \(\{X_{1},\ldots ,X_{n}\}\) be a set of independent random variables with \(X_{i}\sim MPHRS(\alpha _i,\lambda _i,\theta _i;{\bar{F}})\), \(i=1,\ldots ,n\). For convenience, we denote \(\varvec{X}\sim MPHRS(\varvec{\alpha },\varvec{\lambda },\varvec{\theta };{\bar{F}})\), where \(\varvec{X}=(X_{1},\ldots ,X_{n}),\varvec{\alpha }=(\alpha _1,\ldots ,\alpha _n),\varvec{\lambda } =(\lambda _1,\ldots ,\lambda _n)\) and \(\varvec{\theta }=(\theta _1,\ldots ,\theta _n)\). The cumulative distribution function of the maximum order statistic \(X_{n:n}\) is

\[
F_{X_{n:n}}(x)=\prod _{i=1}^{n}\frac{1-{\bar{F}}^{\lambda _i}(x\theta _i)}{1-\bar{\alpha _i}{\bar{F}}^{\lambda _i}(x\theta _i)}, \tag{3.1}
\]

where \(\bar{\alpha _i}=1-\alpha _{i},~i=1,\ldots ,n\). The following theorem provides sufficient conditions for the usual stochastic ordering between maximum order statistics. The two sample sets have equal tilt parameters and common hazard rate parameters. Denote the hazard rate of the baseline distribution with distribution function F by \(r^{*}(u)=f(u)/{\bar{F}}(u),u>0\).

Theorem 3.1

Assume \(\varvec{ X}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\lambda },\varvec{\theta };{\bar{F}})\) and \({\varvec{Y}}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\lambda },\varvec{\delta };{\bar{F}})\), where \(0<\alpha \le 1\) and \(\varvec{\theta },\varvec{\lambda },\varvec{\delta }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Then,

- (i) \({\varvec{\theta }}\succeq ^p\varvec{\delta }\Rightarrow X_{n:n}\ge _{\text {st}} Y_{n:n}\), provided \(u r^*(u)\) is decreasing in u,

- (ii) \(\frac{1}{{\varvec{\theta }}}\succeq ^{rm}\frac{1}{\varvec{\delta }}\Rightarrow X_{n:n}\ge _{\text {st}} Y_{n:n}\), provided \(u^2 r^*(u)\) is decreasing in u.

Proof

(i) Let \(v_{i}=\ln \theta _{i},~i=1,\ldots ,n.\) Then, Eq. (3.1) can be rewritten as

where \(\varvec{v}=(v_{1},\ldots ,v_{n})\). On differentiating (3.2) with respect to \(v_{i}\) partially, we get

which is nonnegative. So, \(\phi _{1}(\varvec{v})\) is increasing in each \(v_{i}\). Consider \(1\le i \le j\le n\). Then, under the assumption made, \(\theta _{i}\ge (\le )\theta _{j}\) implies \(e^{v_{i}}\ge (\le )e^{v_{j}}\) and \(\lambda _{i}\ge (\le )\lambda _{j}\). Now, using Lemma A.1 and Lemma A.2, and the decreasing property of \(u r^*(u)\), it can be shown that

Thus, from Lemma 3.1 (Lemma 3.3) of Kundu et al. [11], it can be shown that \(\phi _{1}(\varvec{v})\) is Schur-concave in \(v_{i} \in {\mathcal {D}}_+({\mathcal {E}}_+)\) for \(i=1,\ldots ,n\). Hence, the result follows from Khaledi and Kochar [10]. This completes the proof of the first part. We now proceed to the proof of the second part of this theorem.

(ii) To prove the second part of the theorem, we rewrite \(F_{X_{n:n}}(x)\) as

where \(\varvec{w}=(w_1,\ldots ,w_n)\) with \(w_{i}=1/\theta _{i},~i=1,\ldots ,n\). Differentiating (3.4) with respect to \(w_{i}\) partially, we obtain

which is at most zero. This shows that \(\phi _2\left( 1/\varvec{ w} \right) \) is decreasing in \(w_{i},~i=1,\ldots ,n\). Assume \(1\le i\le j\le n\). Then, \(x/w_{i}\ge (\le )x/w_{j}\) and \(\lambda _{i}\ge (\le ) \lambda _{j}\). Thus, from Lemma A.1 and Lemma A.2, and the decreasing property of \(u^2 r^*(u)\), it is easy to show that

The above inequalities confirm that \(\phi _2(1/{\varvec{w}})\) is Schur-concave in \(w_i\in \mathcal {E_+}(\mathcal {D_+})\) for \(i=1,\ldots ,n\). Hence, the required result follows from Lemma 2.1 of Hazra et al. [8]. This completes the proof of the theorem. \(\square \)

Remark 3.2

It can be shown that \(\frac{1}{{\varvec{\theta }}}\succeq ^{rm}\frac{1}{\varvec{\delta }}\) holds if and only if \(\varvec{\theta }\succeq _{w}\varvec{\delta }\). Thus, from Theorem 3.1(ii), we have an alternative result as follows. Under the assumptions made as in Theorem 3.1, \(\varvec{\theta }\succeq _{w}\varvec{\delta }\Rightarrow X_{n:n}\ge _{\text {st}} Y_{n:n}\), provided \(u^2 r^*(u)\) is decreasing in u.

Remark 3.3

Consider the baseline distribution with distribution function \(F(x)=1-x^{-\alpha },~x\ge 1,\alpha >0\). Then, \(ur^{*}(u)\) is nonincreasing in \(u\ge 1\). Thus, Theorem 3.1(i) can be applied in comparing the lifetimes of two parallel systems having MPHRS-distributed components with baseline distribution function \(F(x)=1-x^{-\alpha },~x>1,\alpha >0\).
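The monotonicity claim in Remark 3.3 can be checked in two lines. The derivation below is a short sketch that assumes the Pareto baseline exactly as stated in the remark, with the density \(f\) obtained by differentiating \(F\).

```latex
\begin{align*}
{\bar{F}}(u) &= u^{-\alpha }, \qquad f(u) = \alpha u^{-\alpha -1}, \qquad u\ge 1,\\
r^{*}(u) &= \frac{f(u)}{{\bar{F}}(u)} = \frac{\alpha }{u},
\qquad u\,r^{*}(u) = \alpha .
\end{align*}
```

Hence \(u r^{*}(u)\) is constant and therefore nonincreasing in the nonstrict sense used in this paper. By the same computation, \(u^{2}r^{*}(u)=\alpha u\) is increasing, so this baseline does not satisfy the condition of Theorem 3.1(ii).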

In the following theorem, we show that the maximum order statistic of a set of independent random variables is smaller than that of another set of independent random variables in terms of the usual stochastic ordering when the tilt parameter vector of the first set weakly supermajorizes that of the second set. Here, we assume that both sets have common scale parameters.

Theorem 3.4

Assume \({\varvec{X}}\sim MPHRS({\varvec{\alpha }},{\lambda }\varvec{1}_{n},\varvec{\theta };{\bar{F}})\) and \({\varvec{Y}}\sim MPHRS({\varvec{\beta }},{\lambda }\varvec{1}_{n},\varvec{\theta };{\bar{F}})\), where \(\varvec{\theta },\varvec{\alpha },\varvec{\beta }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Then, \({\varvec{\alpha }}\succeq ^w\varvec{\beta }\Rightarrow X_{n:n}\le _{\text {st}} Y_{n:n}\).

Proof

The reliability function of \(X_{n:n}\) is

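\[
{\bar{F}}_{X_{n:n}}(x)=1-\prod _{i=1}^{n}\frac{1-{\bar{F}}^{\lambda }(x\theta _i)}{1-\bar{\alpha _i}{\bar{F}}^{\lambda }(x\theta _i)}. \tag{3.6}
\]

Differentiating (3.6) with respect to \(\alpha _i\), we obtain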

which is at least zero. Thus, \({\bar{F}}_{X_{n:n}}(x)\) is increasing in \(\alpha _i,i=1,\ldots ,n\). Further, under the assumptions made and Lemma A.3, for \(1\le i \le j \le n\), it can be shown that \(\frac{\partial {\bar{F}}_{X_{n:n}}(x)}{\partial \alpha _i}-\frac{\partial {{\bar{F}}}_{X_{n:n}}(x)}{\partial \alpha _j}\le (\ge )0.\) Thus, from Kundu et al. [11], the function \({\bar{F}}_{X_{n:n}}(x)\) given in (3.6) is Schur-concave in \(\alpha _i\in \mathcal {D_+}(\mathcal {E_+})\) for \(i=1,\ldots ,n.\) Utilizing this, the rest of the proof follows from Theorem A.8 of Marshall et al. [13]. \(\square \)

We consider an example to describe the effectiveness of Theorem 3.4.

Example 3.5

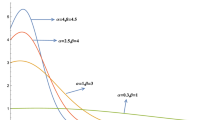

Consider a baseline distribution with survival function \(\bar{F}(x)=x^{-\gamma },~x>1,\gamma >0\). This is known as the Pareto distribution. Take \((\theta _1,\theta _2,\theta _3)=(1,2,3),~ (\alpha _1,\alpha _2,\alpha _3)=(0.1,0.3,0.5),~(\beta _1,\beta _2,\beta _3)= (0.3,0.6,0.7)\), and \(\lambda =1.\) These satisfy all the conditions of Theorem 3.4. We plot the graphs of \(F_{X_{3:3}}(x)\) and \(F_{Y_{3:3}}(x)\) in Fig. 1a, which clearly verifies Theorem 3.4.
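The comparison in Example 3.5 can be reproduced on a grid instead of a plot. The following is a rough sketch, assuming the MPHRS distribution function has the form \((1-{\bar{F}}^{\lambda }(x\theta ))/(1-{\bar{\alpha }}{\bar{F}}^{\lambda }(x\theta ))\). The example does not state the Pareto shape \(\gamma \), so the value \(\gamma =2\) used here is an assumption, as are the function names and the grid.

```python
def pareto_sf(t, gamma=2.0):
    """Pareto survival function t**(-gamma) for t > 1 (gamma = 2 is an assumed value)."""
    return 1.0 if t <= 1.0 else t ** (-gamma)

def cdf_max_mphrs(x, alphas, lam, thetas, sf):
    """Cdf of the largest order statistic: product of the assumed component MPHRS cdfs."""
    prod = 1.0
    for a, th in zip(alphas, thetas):
        u = sf(x * th) ** lam
        prod *= (1.0 - u) / (1.0 - (1.0 - a) * u)
    return prod

theta = (1.0, 2.0, 3.0)
alpha = (0.1, 0.3, 0.5)   # tilt parameters of the X-sample
beta = (0.3, 0.6, 0.7)    # tilt parameters of the Y-sample
lam = 1.0

grid = [0.05 * k for k in range(1, 401)]  # x in (0, 20]
gaps = [cdf_max_mphrs(x, alpha, lam, theta, pareto_sf)
        - cdf_max_mphrs(x, beta, lam, theta, pareto_sf) for x in grid]

# X_{3:3} <=_st Y_{3:3} corresponds to F_X(x) >= F_Y(x) at every grid point.
print(min(gaps) >= 0.0, max(gaps))
```

A nonnegative minimum gap on the grid agrees with the ordering \(X_{3:3}\le _{\text {st}}Y_{3:3}\) stated by Theorem 3.4. A grid check is only a numerical illustration and does not replace the proof.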

A natural question is whether the result in Theorem 3.4 can be extended to the reversed hazard rate ordering. The following counterexample suggests that the answer is negative. However, some extra conditions lead to the desired extension, as discussed in Theorem 3.10.

Counterexample 3.6

Set \(F(x)=e^{-x^{-1}},~x>0\), which results in the Gumbel type II distribution. Let \((\theta _1,\theta _2,\theta _3)=(1.01,2.01,3.01)\), \((\alpha _1,\alpha _2,\alpha _3)=(2,3,4)\), \((\beta _1,\beta _2,\beta _3)=(5,6,7)\) and \(\lambda =1\). Clearly, all the conditions in Theorem 3.4 are satisfied. Using graphical plots (omitted for the sake of brevity), it can be observed that \(F_{X_{3:3}}(x)\) is always larger than \(F_{Y_{3:3}}(x)\). However, \({\tilde{r}}_{X_{3:3}}(2)-{\tilde{r}}_{Y_{3:3}}(2)\) is positive, that is, \(X_{3:3}\nleq _{\text {rh}} Y_{3:3}\), where \({\tilde{r}}_{X_{n:n}}(x)\) denotes the reversed hazard rate of \(X_{n:n}\) (see Eq. (3.8)).

In the next theorem, we consider models with the same fixed tilt parameter, different hazard rate parameter vectors and common scale parameters.

Theorem 3.7

Let \({\varvec{X}}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\lambda },\varvec{\theta };{{{\bar{F}}}})\) and \({\varvec{Y}}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\mu },\varvec{\theta };{{{\bar{F}}}})\), where \(\varvec{\theta ,\mu ,\lambda }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Then, for \(0<{\alpha }\le 1, {\varvec{\lambda }}\succeq ^w\varvec{\mu }\Rightarrow X_{n:n}\ge _{\text {st}} Y_{n:n}\).

Proof

Using Lemmas A.2 and A.7, and the assumptions made, the proof of this theorem follows along the lines of that of Theorem 3.4. Thus, it is omitted. \(\square \)

The following example illustrates Theorem 3.7.

Example 3.8

Take \({{\bar{F}}}(x)=e^{-x}, ~x>0\). Further, consider \((\theta _1,\theta _2,\theta _3)=(1,2,3),~(\lambda _1,\lambda _2,\lambda _3) =(0.1,0.2,0.3),~(\mu _1,\mu _2,\mu _3)=(0.2,0.3,0.6),\) and \(\alpha =0.3.\) Direct calculation shows \((\lambda _1,\lambda _2,\lambda _3) \succeq ^w(\mu _1,\mu _2,\mu _3)\). The graphs of \(F_{X_{3:3}}(x)\) and \(F_{Y_{3:3}}(x)\) are depicted in Fig. 1b, from which it is seen that \(X_{3:3}\) is larger than \(Y_{3:3}\) in the usual stochastic ordering.
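The ordering in Example 3.8 can also be checked numerically. The snippet below is a sketch under the assumption that each component has the MPHRS distribution function \((1-{\bar{F}}^{\lambda }(x\theta ))/(1-{\bar{\alpha }}{\bar{F}}^{\lambda }(x\theta ))\). The function names and the evaluation grid are illustrative choices.

```python
import math

def exp_sf(t):
    """Survival function of the unit exponential baseline."""
    return math.exp(-t)

def cdf_max_mphrs(x, alpha, lams, thetas, sf):
    """Cdf of the largest order statistic with a common tilt parameter alpha."""
    prod = 1.0
    for lam, th in zip(lams, thetas):
        u = sf(x * th) ** lam
        prod *= (1.0 - u) / (1.0 - (1.0 - alpha) * u)
    return prod

theta = (1.0, 2.0, 3.0)
lam_x = (0.1, 0.2, 0.3)   # hazard rate parameters of the X-sample
mu_y = (0.2, 0.3, 0.6)    # hazard rate parameters of the Y-sample
alpha = 0.3

grid = [0.1 * k for k in range(1, 501)]  # x in (0, 50]
gaps = [cdf_max_mphrs(x, alpha, mu_y, theta, exp_sf)
        - cdf_max_mphrs(x, alpha, lam_x, theta, exp_sf) for x in grid]

# X_{3:3} >=_st Y_{3:3} corresponds to F_Y(x) >= F_X(x) at every grid point.
print(min(gaps) >= 0.0)
```

On this grid the distribution function of \(Y_{3:3}\) lies above that of \(X_{3:3}\), which is consistent with the conclusion \(X_{3:3}\ge _{\text {st}}Y_{3:3}\) drawn from Fig. 1b.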

Combining the results in Theorems 3.1 and 3.7, the following theorem can be proved. Here, we consider different hazard rate parameter vectors and scale parameter vectors for two sets of random variables. The proof is omitted for brevity.

Theorem 3.9

Let \(\varvec{ X}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\lambda },\varvec{\theta };{{{\bar{F}}}})\) and \({\varvec{Y}}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\mu },\varvec{\delta };{{{\bar{F}}}})\), where \(0<\alpha \le 1\), \(\varvec{\theta },\varvec{\lambda },\varvec{\mu },\varvec{\delta }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Then,

- (i) \(\varvec{\theta }\succeq ^{p}\varvec{\delta }\) and \(\varvec{\lambda }\succeq ^{w}\varvec{\mu }\) imply \(X_{n:n}\ge _{\text {st}} Y_{n:n}\), provided \(ur^*(u)\) is decreasing in u,

- (ii) \(\frac{1}{\varvec{\theta }}\succeq ^{rm}\frac{1}{\varvec{\delta }}\) and \(\varvec{\lambda }\succeq ^{w}\varvec{\mu }\) imply \(X_{n:n}\ge _{\text {st}} Y_{n:n}\), provided \(u^2 r^*(u)\) is decreasing in u.

In Counterexample 3.6, we have shown that the result in Theorem 3.4 cannot be extended to the reversed hazard rate ordering. However, such an extension is possible under some additional conditions. The following theorem compares \(X_{n:n}\) and \(Y_{n:n}\) in terms of the reversed hazard rate ordering when the tilt parameter vectors of the two sample sets are related by the weak supermajorization order. Note that when \(\varvec{X}\sim MPHRS(\varvec{\alpha },\varvec{\lambda },\varvec{\theta };{\bar{F}})\), the reversed hazard rate of \(X_{n:n}\) is given by

\[
{\tilde{r}}_{X_{n:n}}(x)=\sum _{i=1}^{n}\frac{\alpha _i\lambda _i\theta _i r^{*}(x\theta _i){\bar{F}}^{\lambda _i}(x\theta _i)}{\left( 1-{\bar{F}}^{\lambda _i}(x\theta _i)\right) \left( 1-\bar{\alpha _i}{\bar{F}}^{\lambda _i}(x\theta _i)\right) }. \tag{3.8}
\]

Theorem 3.10

Suppose that \({\varvec{X}}\sim MPHRS({\varvec{\alpha }},{\lambda }\varvec{1}_{n},\varvec{\theta };{\bar{F}})\) and \({\varvec{Y}}\sim MPHRS({\varvec{\beta }},{\lambda }\varvec{1}_{n},\varvec{\theta };{\bar{F}})\), where \(0<\varvec{\alpha },\varvec{\beta }\le 1\) and \(\varvec{\theta },\varvec{\alpha },\varvec{\beta }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Then, \({\varvec{\alpha }}\succeq ^w\varvec{\beta }\Rightarrow X_{n:n}\le _{\text {rh}} Y_{n:n},\) provided \(ur^*(u)\) is decreasing in u.

Proof

On differentiating (3.8) partially with respect to \(\alpha _i\), it can be observed that \({{\tilde{r}}}_{X_{n:n}}(x)\) is increasing in \(\alpha _i, i=1,\ldots ,n\). Let \(1\le i \le j\le n\). Then, \(\alpha _i \ge (\le ) \alpha _j\) and \(\theta _i \ge (\le ) \theta _j\) for \(i=1,\ldots ,n\). Combining these with Lemmas A.2 and A.3, and the decreasing property of \(u r^*(u)\), we get \(\frac{\partial \tilde{r}_{X_{n:n}}(x)}{\partial \alpha _i}-\frac{\partial \tilde{r}_{X_{n:n}}(x)}{\partial \alpha _j}\le (\ge )0.\) This implies that \({{\tilde{r}}}_{X_{n:n}}(x)\) is Schur-concave in \(\alpha _i\in {\mathcal {D}}_+({\mathcal {E}}_+), i=1,\ldots ,n\). Finally, the proof follows from Theorem A.8 of Marshall et al. [13]. \(\square \)

To verify Theorem 3.10, we consider the following example.

Example 3.11

Consider \(F(x)=(1-e^{-ax})^{b},~x>0,~a,b>0\). This is known as the exponentiated exponential distribution. Further, it is not hard to check that \(u r^{*}(u)\) is decreasing in \(u>0\) for \(a=0.1\) and \(b=0.2\). We take \((\alpha _1,\alpha _2,\alpha _3)=(0.01,0.2,0.31)\), \((\beta _1,\beta _2,\beta _3)=(0.02,0.3,0.5)\), \((\theta _{1},\theta _2,\theta _3)=(3,6,9)\) and \(\lambda =1\), which satisfy all the assumptions made in Theorem 3.10. Figure 2a plots the reversed hazard rates of \(X_{3:3}\) and \(Y_{3:3}\), which clearly justifies Theorem 3.10.

Now, we present some stochastic comparison results between the smallest order statistics constructed from two sets of independent random observations. Note that the minimum order statistic represents the lifetime of a series system. Thus, the following results are useful for comparing the lifetimes of two series systems with heterogeneous MPHRS components. We recall that the survival function of \(X_{1:n}\), where \(\varvec{X} \sim MPHRS(\varvec{\alpha },\varvec{\lambda },\varvec{\theta };{\bar{F}})\), is given by

\[
{\bar{F}}_{X_{1:n}}(x)=\prod _{i=1}^{n}\frac{\alpha _i{\bar{F}}^{\lambda _i}(x\theta _i)}{1-\bar{\alpha _i}{\bar{F}}^{\lambda _i}(x\theta _i)}. \tag{3.9}
\]

The first theorem compares \(X_{1:n}\) and \(Y_{1:n}\) in terms of the usual stochastic ordering when the scale parameter vectors of the two sets are related by the weak submajorization and p-larger orders. Here, we take common hazard rate parameters.

Theorem 3.12

Consider two random vectors \(\varvec{X}\) and \(\varvec{Y}\) such that \({\varvec{X}}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\lambda },\varvec{\theta };{\bar{F}})\) and \({\varvec{Y}}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\lambda },\varvec{\delta };{\bar{F}})\), where \(\varvec{\theta ,\lambda ,\delta }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Then, for \(\alpha \le 1\),

- (i) \({\varvec{\theta }}\succeq _w\varvec{\delta }\Rightarrow X_{1:n}\le _{\text {st}} Y_{1:n}\), provided \( r^*(u)\) is decreasing in u,

- (ii) \({{\varvec{\theta }}}\succeq ^{p}{\varvec{\delta }} \Rightarrow X_{1:n}\ge _{\text {st}} Y_{1:n}\), provided \(u r^*(u)\) is decreasing in u.

Proof

(i) The partial derivative of \({\bar{F}}_{X_{1:n}}(x)\) with respect to \(\theta _i,i=1,\ldots ,n\) is

which is at most zero. Thus, \({{\bar{F}}}_{X_{1:n}}(x)\) is decreasing in \(\theta _i,~i=1,\ldots ,n\). Further, let \(1\le i \le j \le n\). Then, \(\theta _i \ge (\le ) \theta _j\) and \(\lambda _i \le (\ge ) \lambda _j\). Now, making use of Lemma A.11, and the decreasing property of \(r^*(u)\), it can be shown that \(\frac{\partial \bar{F}_{X_{1:n}}(x)}{\partial \theta _i}-\frac{\partial \bar{F}_{X_{1:n}}(x)}{\partial \theta _j}\ge (\le )0.\) This implies that \({{\bar{F}}}_{X_{1:n}}(x)\) is Schur-convex in \(\theta _i\in {\mathcal {D}}_+({\mathcal {E}}_+),~i=1,\ldots ,n\). Now, from Theorem A.8 of Marshall et al. [13], the rest of the proof follows.

(ii) Under the assumptions made, Eq. (3.9) can be rewritten as

where \(v_i=\ln \theta _i,~i=1,\ldots ,n\). The rest of the proof is similar to that of Theorem 3.1. Thus, it is omitted. \(\square \)

Remark 3.13

For the baseline distribution with distribution function \(F(x)=1-\exp \{1-x^{\alpha }\},~x\ge 1,\alpha >0\), the hazard rate is \(r^{*}(u)=\alpha u^{\alpha -1}\), which is decreasing in u when \(0<\alpha \le 1\). Thus, in this case, Theorem 3.12(i) can be applied. For the baseline distribution as in Remark 3.3, \(u r^{*}(u)\) is nonincreasing. For this distribution function, Theorem 3.12(ii) can be applied.

Theorem 3.14

Assume \({\varvec{X}}\sim MPHRS(\varvec{\alpha },{\lambda }\varvec{1}_{n},\varvec{\theta };{\bar{F}})\) and \({\varvec{Y}}\sim MPHRS(\varvec{\beta },{\lambda }\varvec{1}_{n},\varvec{\theta };{\bar{F}})\), where \(\varvec{\alpha ,\beta }\in {\mathcal {D}}_+({\mathcal {E}}_+)\) and \(\varvec{\theta }\in {\mathcal {E}}_+({\mathcal {D}}_+).\) Then, \({{\varvec{\alpha }}}\succeq ^{p}{\varvec{\beta }}\Rightarrow X_{1:n}\le _{\text {st}} Y_{1:n}\).

Proof

The distribution function of the minimum order statistic \(X_{1:n}\) is

where \(b_i=\ln \alpha _i,i=1,\ldots ,n\). Differentiating (3.12) with respect to \(b_i\), we get

which is negative. This implies that \(F_{X_{1:n}}(x)\) is decreasing in \(b_i\) for \(i=1,\ldots ,n\). Further, under the assumptions made, and the result in Lemma A.5, for \(1\le i\le j \le n\), it can be shown that \(\frac{\partial F_{X_{1:n}}(x)}{\partial b_i}-\frac{\partial F_{X_{1:n}}(x)}{\partial b_j}\ge (\le )0 \). Thus, \(F_{X_{1:n}}(x)\) is Schur-convex in \(\varvec{\alpha }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Making use of these, the result follows from Lemma 2.1 of Khaledi and Kochar [10]. This completes the proof of the theorem. \(\square \)

To illustrate Theorem 3.14, we consider the following example.

Example 3.15

Let \(\{X_1,X_2,X_3\} \) and \(\{Y_1,Y_2,Y_3\} \) be two sets of independent random variables with \(X_{i}\sim MPHRS(\alpha _i,\lambda ,\theta _i;{\bar{F}})\) and \(Y_i \sim MPHRS(\beta _i,\lambda ,\theta _i;{\bar{F}})\) for \(i=1,2,3.\) We take \((\theta _1,\theta _2,\theta _3)=(5,4,2),~\lambda =1,~(\alpha _1,\alpha _2,\alpha _3) =(0.1,0.3,0.5),~(\beta _1,\beta _2,\beta _3)=(0.2,0.5,0.6),~\alpha =0.3.\) The baseline distribution is taken to be exponentiated Weibull distribution with distribution function \(F(x)=(1-e^{-x^{\gamma }})^\eta ,~\eta ,\gamma ,x>0\). Further, it can be easily checked that \((\alpha _1,\alpha _2,\alpha _3)\succeq ^p(\beta _1,\beta _2,\beta _3)\). We plot the graphs of the distribution functions for \(X_{1:3}\) and \(Y_{1:3}\) in Fig. 2b when \(\eta =1\) and \(\gamma =2\), which verifies Theorem 3.14.
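The setting of Example 3.15 can be evaluated on a grid as a numerical cross-check of the plotted curves. This is a minimal sketch, assuming the component survival function of the MPHRS model is \(\alpha {\bar{F}}^{\lambda }(x\theta )/(1-{\bar{\alpha }}{\bar{F}}^{\lambda }(x\theta ))\). The function names and the grid are hypothetical choices, while the parameter values are those of the example with \(\eta =1\) and \(\gamma =2\).

```python
import math

def exp_weibull_sf(t, eta=1.0, gamma=2.0):
    """Survival function of the exponentiated Weibull baseline, 1 - (1 - exp(-t**gamma))**eta."""
    return 1.0 - (1.0 - math.exp(-t ** gamma)) ** eta

def cdf_min_mphrs(x, alphas, lam, thetas, sf):
    """Cdf of the smallest order statistic: one minus the product of component survival functions."""
    surv = 1.0
    for a, th in zip(alphas, thetas):
        u = sf(x * th) ** lam
        surv *= a * u / (1.0 - (1.0 - a) * u)
    return 1.0 - surv

theta = (5.0, 4.0, 2.0)
alpha = (0.1, 0.3, 0.5)   # tilt parameters of the X-sample
beta = (0.2, 0.5, 0.6)    # tilt parameters of the Y-sample
lam = 1.0

grid = [0.005 * k for k in range(1, 401)]  # x in (0, 2]
gaps = [cdf_min_mphrs(x, alpha, lam, theta, exp_weibull_sf)
        - cdf_min_mphrs(x, beta, lam, theta, exp_weibull_sf) for x in grid]

# X_{1:3} <=_st Y_{1:3} corresponds to F_X(x) >= F_Y(x) at every grid point.
print(min(gaps) >= 0.0)
```

A nonnegative gap throughout the grid is in line with \(X_{1:3}\le _{\text {st}}Y_{1:3}\), as asserted by Theorem 3.14 for these parameter values.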

In the next theorem, we compare the minimum order statistics of two sets of independent observations when the tilt parameters are the same and fixed. The scale parameter vectors are taken to be the same for both sets.

Theorem 3.16

Let \({\varvec{X}}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\lambda ,\theta };{{{\bar{F}}}})\) and \({\varvec{Y}}\sim MPHRS({\alpha }\varvec{1}_{n},\varvec{\mu ,\theta };{{{\bar{F}}}})\), where \({\alpha }\ge 1\) and \(\varvec{\theta ,\mu ,\lambda }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Then, \({{\varvec{\lambda }}}\succeq _{w}{\varvec{\mu }}\Rightarrow X_{1:n}\le _{\text {st}} Y_{1:n}\).

Proof

Under the assumptions made, and Lemma A.6, it can be shown that the survival function \({{\bar{F}}}_{X_{1:n}}(x)\) is Schur-concave in \(\varvec{\lambda }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Hence, by Theorem A.8 of Marshall et al. [13], the required result can be established. \(\square \)

Note that \(\succeq ^w\) implies \(\succeq ^{p}\). Thus, under the assumptions of Theorem 3.14, we have \({\varvec{\alpha }}\succeq ^w\varvec{\beta }\Rightarrow X_{1:n} \le _{\text {st}} Y_{1:n}\). A question naturally arises: can this result be extended to the hazard rate ordering? The answer is negative, as shown in the following counterexample.

Counterexample 3.17

Let \(F(x)=1-\exp \{1-x^{\gamma }\},~x\ge 1,\gamma >0\), the distribution function of a lower truncated Weibull random variable. Let us take \(\lambda =0.5, \gamma =0.1, (\theta _{1},\theta _2,\theta _3)=(0.6,0.5,0.1),(\alpha _1,\alpha _2,\alpha _3)=(0.1,0.3,0.5)\) and \((\beta _{1},\beta _2,\beta _3)=(0.7,0.9,0.11)\). One can easily see that all the conditions of Theorem 3.14 are satisfied. But, \(r_{X_{1:3}}(x)-r_{Y_{1:3}}(x)<0\) for \(x=8\) and \(r_{X_{1:3}}(x)-r_{Y_{1:3}}(x)>0\) for \(x=18\), that is, the curves of \(r_{X_{1:3}}(x)\) and \(r_{Y_{1:3}}(x)\) intersect each other.

Below, we obtain sufficient conditions under which the hazard rate ordering between \(X_{1:n}\) and \(Y_{1:n}\) holds. Note that the hazard rate of \(X_{1:n}\), when \({\varvec{X}}\sim MPHRS(\varvec{\alpha },\varvec{\lambda ,\theta };{{{\bar{F}}}})\), is given by

Theorem 3.18

Assume \({\varvec{X}}\sim MPHRS({\varvec{\alpha }},{\lambda }\varvec{1}_{n},\varvec{\theta };{\bar{F}})\) and \({\varvec{Y}}\sim MPHRS({\varvec{\beta }},{\lambda }\varvec{1}_{n},\varvec{\theta };{\bar{F}})\), where \(0<\varvec{\alpha ,\beta }\le 1\) and \(\varvec{\theta ,\alpha ,\beta }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Then, \({\varvec{\alpha }}\succeq ^w\varvec{\beta }\Rightarrow X_{1:n}\le _{\text {hr}} Y_{1:n},\) provided \(ur^*(u)\) is decreasing in u.

Proof

Differentiating (3.14) with respect to \(\alpha _i\) for \(i=1,\ldots ,n,\) we get

which is nonpositive. So, \(r_{X_{1:n}}(x)\) is decreasing in \(\alpha _i\) for \(i=1,\ldots ,n\). Let \(1\le i \le j\le n\). Thus, under the assumptions, \(\alpha _i\ge (\le )\alpha _j\) and \(\theta _i\ge (\le )\theta _j\). Hence, using Lemmas A.2 and A.3, and the decreasing property of \(ur^*(u)\), it can be shown that \(\frac{\partial r_{X_{1:n}}(x)}{\partial \alpha _i}-\frac{\partial r_{X_{1:n}}(x)}{\partial \alpha _j}\ge (\le )0.\) This implies that \(r_{X_{1:n}}(x)\) is Schur-convex in \(\alpha _i,~i=1,\ldots ,n\). By Theorem A.8 of Marshall et al. [13], the result follows. \(\square \)
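For reference, the hazard rate and the derivative used in this proof can be sketched as follows. The sketch assumes that \(X_i\) has survival function \(\alpha _i{\bar{F}}^{\lambda _i}(x\theta _i)/(1-{\bar{\alpha }}_i{\bar{F}}^{\lambda _i}(x\theta _i))\) with \({\bar{\alpha }}_i=1-\alpha _i\), and that \(r^*\) denotes the hazard rate of the baseline distribution; both are assumptions about the notation, inferred from the statements above. Taking the negative logarithmic derivative of \(\prod _{i=1}^{n}{\bar{F}}_{X_i}(x)\) then gives

\[ r_{X_{1:n}}(x)=\sum _{i=1}^{n}\frac{\lambda _i\theta _i r^*(x\theta _i)}{1-{\bar{\alpha }}_i{\bar{F}}^{\lambda _i}(x\theta _i)},\qquad \frac{\partial r_{X_{1:n}}(x)}{\partial \alpha _i}=-\frac{\lambda _i\theta _i r^*(x\theta _i){\bar{F}}^{\lambda _i}(x\theta _i)}{\left[ 1-{\bar{\alpha }}_i{\bar{F}}^{\lambda _i}(x\theta _i)\right] ^2}\le 0. \]

Under these assumptions, the sign of the derivative agrees with the monotonicity in \(\alpha _i\) claimed in the proof.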

As an application of Theorem 3.18, we present the following example.

Example 3.19

Consider two series systems each having three components. Let \((X_{1},X_{2},X_{3})\) and \((Y_{1},Y_{2},Y_{3})\) be the random lifetimes of the components of these two systems having heterogeneous MPHRS models with parent distribution function \(F(x)=(1-\exp \{-ax-\frac{b x^2}{2}\})^{\gamma }, x>0,a,b,\gamma >0\). Assume \(a=1,b=0\) and \(\gamma =0.2\). Further, let \((\theta _1,\theta _2,\theta _3)=(0.5,0.9,0.92),\) \((\alpha _1,\alpha _2,\alpha _3)=(0.1,0.3,0.7)\), \((\beta _1,\beta _2,\beta _3)=(0.4,0.9,0.91)\) and \(\lambda =2.2\). It is not hard to see that the assumptions of Theorem 3.18 are satisfied. Also, \((\alpha _1,\alpha _2,\alpha _3)\succeq ^w(\beta _1,\beta _2,\beta _3)\). Thus, by Theorem 3.18, we can conclude that the series system with component lifetimes \((Y_1,Y_2,Y_3)\) performs better than the other system, with component lifetimes \((X_{1},X_{2},X_{3})\), in the sense of the hazard rate order.
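The conclusion of this example can be illustrated numerically. The Python sketch below is a hedged illustration rather than the authors' computation. It assumes the MPHRS survival function \(\alpha {\bar{F}}^{\lambda }(x\theta )/(1-{\bar{\alpha }}{\bar{F}}^{\lambda }(x\theta ))\), for which the hazard rate of a series system is the sum of the component hazards \(\lambda \theta _i r^*(x\theta _i)/(1-{\bar{\alpha }}_i{\bar{F}}^{\lambda }(x\theta _i))\), with \(r^*\) taken to be the baseline hazard rate. The helper names are hypothetical.

```python
import math

def baseline_cdf(u, gamma=0.2):
    """Baseline F(u) = (1 - exp(-u))^gamma, i.e. a = 1 and b = 0 in the example."""
    return (1.0 - math.exp(-u)) ** gamma

def baseline_hazard(u, gamma=0.2):
    """Baseline hazard rate f(u) / (1 - F(u))."""
    pdf = gamma * (1.0 - math.exp(-u)) ** (gamma - 1.0) * math.exp(-u)
    return pdf / (1.0 - baseline_cdf(u, gamma))

def series_hazard(x, alphas, lam, thetas):
    """Hazard rate of the minimum under the assumed MPHRS form (sum of component hazards)."""
    total = 0.0
    for a, th in zip(alphas, thetas):
        sf = 1.0 - baseline_cdf(x * th)
        total += lam * th * baseline_hazard(x * th) / (1.0 - (1.0 - a) * sf ** lam)
    return total

def weakly_supermajorizes(a, b):
    """True if a >=^w b: partial sums of sorted a never exceed those of sorted b."""
    sa = sb = 0.0
    for u, v in zip(sorted(a), sorted(b)):
        sa, sb = sa + u, sb + v
        if sa > sb + 1e-12:
            return False
    return True

thetas = (0.5, 0.9, 0.92)
alphas = (0.1, 0.3, 0.7)
betas = (0.4, 0.9, 0.91)
lam = 2.2
grid = [0.05 * k for k in range(1, 101)]

# X_{1:3} <=_hr Y_{1:3} means the hazard rate of X_{1:3} dominates that of Y_{1:3}.
hr_holds = all(
    series_hazard(x, alphas, lam, thetas) >= series_hazard(x, betas, lam, thetas)
    for x in grid
)
print(weakly_supermajorizes(alphas, betas), hr_holds)
```

The grid is restricted to moderate values of x so that \(1-F(x\theta _i)\) is computed without noticeable loss of precision.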

We end this subsection with the following theorem, which deals with various sufficient conditions for the usual stochastic ordering between the kth order statistics \(X_{k:n}\) and \(Y_{k:n}\). This result is useful when we compare the lifetimes of two \((n-k+1)\)-out-of-n systems.

Theorem 3.20

Let \({\varvec{X}}\sim MPHRS(\varvec{\alpha ,\lambda ,\theta };{\bar{F}})\) and \({\varvec{Y}}\sim MPHRS(\varvec{\beta ,\mu ,\delta };{\bar{F}}).\) Further, let \(0<\varvec{\alpha ,\beta }\le 1\) and \(k=1,\ldots ,n\).

(i) Suppose \(\alpha _i=\beta _i=\alpha \) and \(\theta _i=\delta _i=\theta \) for \(i=1,\ldots ,n.\) Then, \({\varvec{\lambda }}\succeq ^m\varvec{\mu }\Rightarrow X_{k:n}\ge _{\text {st}} Y_{k:n}\).

(ii) Suppose \(\theta _i=\delta _i=\theta \) and \(\lambda _i=\mu _i=\lambda \) for \(i=1,\ldots ,n.\) Then, \({\varvec{\alpha }}\succeq ^m\varvec{\beta }\Rightarrow X_{k:n}\ge _{\text {st}} Y_{k:n}\).

(iii) Suppose \(\alpha _i=\beta _i=\alpha \) and \(\lambda _i=\mu _i=\lambda \) for \(i=1,\ldots ,n.\) Then, \({\varvec{\theta }}\succeq ^m\varvec{\delta }\Rightarrow X_{k:n}\ge _{\text {st}} Y_{k:n}\), provided \(r^*(u)\) is decreasing in u.

Proof

We prove the first part of the theorem. The proofs of Part (ii) and Part (iii) follow the same lines as the proof of (i) by considering the mentioned sufficient conditions. Under the conditions made as in Part (i), the survival function of \(X_{i},~i=1,\ldots ,n\), is given by

Taking logarithm on both sides of (3.16), and then differentiating with respect to \(\lambda _i\) twice, we obtain

which is nonnegative. This implies that \(\ln {{\bar{G}}}(x)\) is monotone and convex in \(\lambda _i,i=1,\ldots ,n\). Thus, from Lemma 2.1 [16], the survival function of the kth order statistic, say \({\bar{F}}_{X_{k:n}}(x)\), is Schur-convex with respect to \(\lambda _i,i=1,\ldots ,n\). Utilizing this, the desired result is obtained using Theorem A.8 in Marshall et al. [13]. \(\square \)

3.2 Results for MPRHRS Model

Analogous to the previous subsection, here, we are interested in investigating stochastic comparisons between order statistics. These are obtained when two sets of independent observations have heterogeneous MPRHRS models. Consider a set of independent random observations \(\{X_{1},\ldots ,X_{n}\}\) such that \(X_{i}\sim MPRHRS(\alpha _i,\eta _i,\theta _i;F),~i=1,\ldots ,n\). We denote \({\varvec{X}}\sim MPRHRS({\varvec{\alpha }},\varvec{\eta },\varvec{\theta };F)\), where \(\varvec{\eta }=(\eta _1,\ldots ,\eta _n)\). First, let us present comparison results for maximum order statistics. We recall that the distribution function of \(X_{n:n}\) is given by

In the first theorem of this subsection, the tilt parameters are scalar valued and equal, and the reversed hazard rate parameter vector is common to both sets.

Theorem 3.21

Assume \({\varvec{X}}\sim MPRHRS({\alpha }\varvec{1}_{n},\varvec{\eta },\varvec{\theta }; F)\) and \({\varvec{Y}}\sim MPRHRS({\alpha }\varvec{1}_{n},\varvec{\eta },\varvec{\delta };{ F})\), where \(\alpha \ge 1\), \(\varvec{\theta ,\delta }\in {\mathcal {D}}_+({\mathcal {E}}_+)\) and \(\varvec{\eta }\in {\mathcal {E}}_+({\mathcal {D}}_+)\).

(i) If \(u{{\tilde{r}}}^*(u)\) is decreasing in u, then \({\varvec{\theta }}\succeq ^p\varvec{\delta }\Rightarrow X_{n:n}\ge _{\text {st}} Y_{n:n}\).

(ii) If \(u^2 {{\tilde{r}}}^*(u)\) is decreasing in u, then \(\frac{1}{{\varvec{\theta }}} \succeq ^{rm}\frac{1}{\varvec{\delta }}\Rightarrow X_{n:n}\ge _{\text {st}} Y_{n:n}\).

Proof

(i) The distribution function of \(X_{n:n}\) is given by

where \(v_i=\ln \theta _i.\) The partial derivative of \(F_{X_{n:n}}(x)\) with respect to \(v_{i}\) is

which is nonnegative. Thus, \(\xi _1({\varvec{v}})\) is increasing with respect to \(v_{i},i=1,\ldots ,n\). Consider \(1\le i \le j \le n\). Then, under the assumptions, \(\eta _i\le (\ge )\eta _j\) and \(v_i\ge (\le )v_j.\) Utilizing this with the result of Lemma A.8, it can be shown that \(\frac{\partial \xi _1({\varvec{v}})}{\partial v_i}-\frac{\partial \xi _1({\varvec{v}})}{\partial v_j}\le (\ge )0\) since \(u{{\tilde{r}}}^*(u)\) is decreasing in u. This implies that \(\xi _1({\varvec{v}})\) is Schur-concave in \(v_i\in \mathcal {D_+}(\mathcal {E_+})\) for \(i=1,\ldots ,n\). Thus, the required result in Part (i) follows from Lemma 2.1 of Khaledi and Kochar [10].

(ii) To prove the second part of the theorem, we rewrite (3.18) as

where \(w_{i}=1/\theta _i,i=1,\ldots ,n\). The partial derivative of (3.21) with respect to \(w_i\) is given by

which is nonpositive. This implies that \(\xi _2\left( 1/{\varvec{w}} \right) \) is decreasing in \(w_{i}\). Further, let \(1\le i \le j \le n\). Then, under the assumption made, \(\theta _i\le (\ge )\theta _j\Rightarrow x/w_i\le (\ge ) x/w_j\) and \(\eta _i\ge (\le )\eta _j.\) Utilizing this with Lemma A.8, and the decreasing property of \(u^2 {{\tilde{r}}}^*(u)\), we obtain \(\frac{\partial \xi _2(1/{\varvec{w}})}{\partial w_i}-\frac{\partial \xi _2(1/{\varvec{w}})}{\partial w_j}\le (\ge )0\). Hence, \(\xi _2\left( 1/{\varvec{w}} \right) \) is Schur-concave in \(w_i\in \mathcal {D_+}(\mathcal {E_+})\), \(i=1,\ldots ,n\). The rest of the proof follows from Lemma 2.1 of Hazra et al. [8]. \(\square \)

Remark 3.22

Consider the distribution functions of the Fréchet, exponential and Pareto distributions, which are given by \(F_{1}(x)=\exp \{-1/x\},x>0\), \(F_{2}(x)=1-\exp \{-x\},~x>0\) and \(F_{3}(x)=1-x^{-\tau },~x\ge 1,\tau >0\), respectively. For these, \(u {{\tilde{r}}}^*(u)\) and \(u^2 {{\tilde{r}}}^*(u)\) are decreasing in u. Now, consider two parallel systems whose component lifetimes have heterogeneous MPRHRS models with any one of these parent distributions. Then, Theorem 3.21 is useful for identifying the better system in terms of the usual stochastic ordering, provided the assumptions on the model parameters are satisfied.

Next, we assume that the reversed hazard rate parameters are scalar valued and equal, and that the scale parameter vectors are common to both sets of independent random observations.

Theorem 3.23

Consider that \({\varvec{X}}\sim MPRHRS({\varvec{\alpha }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\) and \({\varvec{Y}}\sim MPRHRS({\varvec{\beta }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\), where \(\varvec{\theta ,\alpha ,\beta },\eta \in {\mathcal {E}}_+({\mathcal {D}}_+).\) Then, \({\varvec{\alpha }}\succeq ^p\varvec{\beta }\Rightarrow X_{n:n}\ge _{\text {st}} Y_{n:n}\).

Proof

Note that the distribution function of \(X_{n:n}\) is

where \(\tau _i=\ln \alpha _i.\) After differentiating (3.23) with respect to \(\tau _i,\) partially, we get

which is at least zero. Thus, \(\xi _3(e^{{\varvec{\tau }}})\) is increasing in \(\tau _{i},~i=1,\ldots ,n\). Under the given assumptions, for \(1\le i \le j\le n\), we have \({\alpha _i}\le (\ge ) {\alpha _j}\Rightarrow {\tau _i}\le (\ge ) {\tau _j}\) and \(\theta _i\le (\ge )\theta _j.\) Further, using Lemma A.9, we get \(\frac{\partial \xi _3(e^{{\varvec{\tau }}})}{\partial \tau _i}-\frac{\partial \xi _3(e^{{\varvec{\tau }}})}{\partial \tau _j}\ge (\le )0\), which implies that \(\xi _3(e^{{\varvec{\tau }}})\) is Schur-concave in \(\tau _i\in \mathcal {E_+}(\mathcal {D_+})\). Hence, from Lemma 2.1 of Khaledi and Kochar [10], the required result readily follows. \(\square \)

The following example illustrates Theorem 3.23.

Example 3.24

Let \(\{X_{1},X_{2},X_{3}\}\) and \(\{Y_{1},Y_{2},Y_{3}\}\) be two sets of independent random observations with \(X_{i}\sim MPRHRS(\alpha _{i},{\eta },\theta _{i};{ F})\) and \(Y_{i}\sim MPRHRS(\beta _{i},{\eta },\theta _{i};{ F}), i=1,2,3\). We take \((\theta _1,\theta _2,\theta _3) = (5, 6, 7), (\alpha _1,\alpha _2,\alpha _3) = (0.1, 0.2, 0.3), (\beta _1,\beta _2,\beta _3) = (0.4, 0.5, 0.6)\) and \(\eta =1\). Consider the baseline distribution as \(F(x)=1-(1+\zeta x)^{-1/\zeta },x>0,\zeta >0\), which is known as the generalized Pareto distribution. It can be observed that all the assumptions in Theorem 3.23 are satisfied. We plot the graphs of \(F_{X_{3:3}}(x)\) and \(F_{Y_{3:3}}(x)\) in Fig. 3a when \(\zeta =2\). This clearly verifies Theorem 3.23.
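As a numerical counterpart to the plot mentioned in this example, the following Python sketch compares the two distribution functions on a grid. It is only an illustration and rests on an assumption: the MPRHRS distribution function is taken to be \(\alpha F^{\eta }(x\theta )/(1-{\bar{\alpha }}F^{\eta }(x\theta ))\) with \({\bar{\alpha }}=1-\alpha \). The function names are not from the paper.

```python
import math

def gp_cdf(u, zeta=2.0):
    """Generalized Pareto baseline F(u) = 1 - (1 + zeta*u)^(-1/zeta), u > 0."""
    return 1.0 - (1.0 + zeta * u) ** (-1.0 / zeta)

def mprhrs_cdf(x, alpha, eta, theta):
    """Assumed MPRHRS distribution function: alpha*s / (1 - (1 - alpha)*s), s = F(x*theta)^eta."""
    s = gp_cdf(x * theta) ** eta
    return alpha * s / (1.0 - (1.0 - alpha) * s)

def max_cdf(x, alphas, eta, thetas):
    """Distribution function of the maximum of independent MPRHRS variables."""
    return math.prod(mprhrs_cdf(x, a, eta, th) for a, th in zip(alphas, thetas))

thetas = (5.0, 6.0, 7.0)
alphas = (0.1, 0.2, 0.3)
betas = (0.4, 0.5, 0.6)
eta = 1.0
grid = [0.05 * k for k in range(1, 401)]

# X_{3:3} >=_st Y_{3:3} means F_{X_{3:3}}(x) <= F_{Y_{3:3}}(x) for all x.
st_holds = all(
    max_cdf(x, alphas, eta, thetas) <= max_cdf(x, betas, eta, thetas) for x in grid
)
largest_gap = max(
    max_cdf(x, betas, eta, thetas) - max_cdf(x, alphas, eta, thetas) for x in grid
)
print(st_holds, round(largest_gap, 4))
```

The value `largest_gap` gives a rough sense of how far apart the two curves are on the chosen grid.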

Remark 3.25

Since \(\succeq ^w\) implies \(\succeq ^{p}\), an alternative result to Theorem 3.23 can be stated as follows. Let \({\varvec{X}}\sim MPRHRS({\varvec{\alpha }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\) and \({\varvec{Y}}\sim MPRHRS({\varvec{\beta }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\), where \(\varvec{\theta ,\alpha ,\beta },\eta \in {\mathcal {E}}_+({\mathcal {D}}_+).\) Then, \({\varvec{\alpha }}\succeq ^w\varvec{\beta }\Rightarrow X_{n:n}\ge _{\text {st}} Y_{n:n}\).

Counterexample 3.26

Let \(F(x)=1-x^{-\gamma },x\ge 1,\gamma >0\). Further, let \(\eta =0.5,(\theta _1,\theta _2,\theta _3)=(0.1,0.5,0.9),(\alpha _1,\alpha _2,\alpha _3) =(0.3,0.7,0.9)\) and \((\beta _1,\beta _2,\beta _3)=(0.15,0.16,0.17)\). Then, the assumptions made in Remark 3.25 are satisfied. In this case, when \(\gamma =5,\) \({\tilde{r}}_{X_{3:3}}(11)-{\tilde{r}}_{Y_{3:3}}(11)=-0.126988\), which implies \(X_{3:3}\ngeq _{\text {rh}} Y_{3:3}\).

Counterexample 3.26 shows that, under the conditions taken in Remark 3.25, the usual stochastic order cannot be extended to the reversed hazard rate order. Next, we obtain sufficient conditions for the comparison of \(X_{n:n}\) and \(Y_{n:n}\) in terms of the reversed hazard rate ordering. Let \({\varvec{X}}\sim MPRHRS({\varvec{\alpha }},{\varvec{\eta }},\varvec{\theta };{ F})\). Then, the reversed hazard rate function of the maximum order statistic \(X_{n:n}\) is obtained as

Below, we consider two sets of independent random variables with a common vector of scale parameters and the same scalar reversed hazard rate parameter.

Theorem 3.27

Assume \({\varvec{X}}\sim MPRHRS({\varvec{\alpha }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\) and \({\varvec{Y}}\sim {MPRHRS}({\varvec{\beta }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\), where \(0<{\varvec{\alpha },\varvec{\beta }}\le 1\), \(\varvec{\alpha ,\beta }\in {\mathcal {D}}_+({\mathcal {E}}_+)\) and \(\varvec{\theta }\in {\mathcal {E}}_+({\mathcal {D}}_+).\) Then, \({\varvec{\alpha }}\succeq ^w\varvec{\beta }\Rightarrow X_{n:n}\ge _{\text {rh}} Y_{n:n},\) provided \(u{{\tilde{r}}}^*(u)\) is increasing in u.

Proof

Similar to the arguments used to prove the previous theorem, it can be shown that \({{\tilde{r}}}_{X_{n:n}}(x)\) is decreasing and Schur-convex in \(\alpha _i\in {\mathcal {D}}_+({\mathcal {E}}_+)\) for \(i=1,\ldots ,n.\) Using this, the proof follows from Lemmas A.4 and A.10, and Theorem A.8 of Marshall et al. [13]. \(\square \)

Consider two series systems comprising heterogeneous MPRHRS models. In the following consecutive theorems, we obtain comparison results between the lifetimes of series systems in terms of the usual stochastic and hazard rate orderings under various assumptions.

Theorem 3.28

Let \({\varvec{X}}\sim MPRHRS({\varvec{\alpha }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\) and \({\varvec{Y}}\sim MPRHRS({\varvec{\beta }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\), where \(0<{\varvec{\alpha },\varvec{\beta }}\le 1\), \(\varvec{\alpha ,\beta }\in {\mathcal {D}}_+({\mathcal {E}}_+)\) and \(\varvec{\theta }\in {\mathcal {E}}_+({\mathcal {D}}_+).\) Then, \({\varvec{\alpha }}\succeq ^w\varvec{\beta }\Rightarrow X_{1:n}\ge _{\text {st}} Y_{1:n}\).

Proof

Making use of Lemma A.4, the proof is similar to that of the previous theorem. Hence, it is omitted. \(\square \)

Below, we present a counterexample to show that the usual stochastic ordering cannot be extended to the hazard rate ordering under the same sufficient conditions as in Theorem 3.28.

Counterexample 3.29

Take the same distribution function as in Counterexample 3.26. Assume \((\theta _1,\theta _2,\theta _3)=(0.9,0.5,0.1),(\alpha _1,\alpha _2,\alpha _3) =(0.2,0.3,0.4), (\beta _1,\beta _2,\beta _3)=(0.11,0.12,0.13)\) and \(\eta =1\). Note that all the conditions in Theorem 3.28 are satisfied. Further, \(r_{X_{1:3}}(x)-r_{Y_{1:3}}(x)=0.0484186\) at \(x=11\) when \(\gamma =0.5\). This implies that \(X_{1:3}\ngeq _{\text {hr}} Y_{1:3}\).

Next, we obtain sufficient conditions for the hazard rate ordering between \(X_{1:n}\) and \(Y_{1:n}\). Let \({\varvec{X}}\sim MPRHRS(\varvec{\alpha },\varvec{\eta },\varvec{\theta };{ F})\). Then, the hazard rate of \(X_{1:n}\) is given by

Theorem 3.30

Assume \({\varvec{X}}\sim MPRHRS({\varvec{\alpha }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\) and \({\varvec{Y}}\sim MPRHRS({\varvec{\beta }},{\eta }\varvec{1}_{n},\varvec{\theta };{ F})\), where \(0<\varvec{\alpha ,\beta }\le 1\) and \(\varvec{\alpha ,\beta }\in {\mathcal {E}}_+({\mathcal {D}}_+)\) and \(\varvec{\theta }\in {\mathcal {D}}_+({\mathcal {E}}_+).\) Then, \({\varvec{\alpha }}\succeq ^w\varvec{\beta }\Rightarrow X_{1:n}\ge _{\text {hr}} Y_{1:n},\) provided \(u{{\tilde{r}}}^*(u)\) is increasing in u.

Proof

The partial derivative of \(r_{X_{1:n}}(x)\) with respect to \(\alpha _i\) is

This is clearly nonnegative, which implies that \(r_{X_{1:n}}(x)\) is increasing with respect to \(\alpha _i,i=1,\ldots ,n\). Let \(1\le i \le j \le n\). Then, under the assumptions made, \(\alpha _i\le (\ge )\alpha _j\) and \(\theta _i\ge (\le )\theta _j\). Further, using Lemmas A.4 and A.10, and the increasing property of \(u{{\tilde{r}}}^*(u)\), it can be shown that \(\frac{\partial r_{X_{1:n}}(x)}{\partial \alpha _i}-\frac{\partial r_{X_{1:n}}(x)}{\partial \alpha _j}\ge (\le )0.\) Hence, \( r_{X_{1:n}}(x)\) is Schur-concave in \(\alpha _i\in {\mathcal {E}}_+({\mathcal {D}}_+)\) for \(i=1,\ldots ,n.\) Finally, by Theorem A.8 of Marshall et al. [13], we obtain the required result. \(\square \)

As an application of Theorem 3.30, we present the following example.

Example 3.31

Consider two systems A and B each having three components connected in series. Let \((X_{1},X_{2},X_{3})\) and \((Y_1,Y_2,Y_3)\) be the lifetimes of the components of A and B, respectively. Assume that the lifetimes follow heterogeneous MPRHRS distributions with parent distribution function \(F(x)=(x/a)^{l},~0<x<a,l>0\). For this case, \(u{\tilde{r}}^*(u)\) is nondecreasing in u. Further, let \((\alpha _1,\alpha _2,\alpha _3)=(0.2,0.5,0.9), (\beta _1,\beta _2,\beta _3)=(0.3,0.7,1.1),(\theta _1,\theta _2,\theta _3)=(0.5,0.4,0.1)\) and \(\eta =2\). Moreover, all the conditions of Theorem 3.30 are clearly satisfied. Therefore, by Theorem 3.30, we conclude that system A performs better than system B in the sense of the hazard rate ordering.

Below, we present stochastic ordering results between kth order statistics \(X_{k:n}\) and \(Y_{k:n}\) when two sets of independent observations are available with heterogeneous MPRHRS models.

Theorem 3.32

Let \({\varvec{X}}\sim MPRHRS(\varvec{\alpha },\varvec{\eta },\varvec{\theta };{F})\) and \({\varvec{Y}}\sim MPRHRS(\varvec{\beta },\varvec{\gamma },\varvec{\delta };{F}).\) Further, assume \(0<\varvec{\alpha },\varvec{\beta }\le 1\).

(i) Suppose \(\alpha _i=\beta _i=\alpha \) and \(\theta _i=\delta _i=\theta \) for \(i=1,\ldots ,n.\) Then, \({\varvec{\eta }}\succeq ^m\varvec{\gamma }\Rightarrow X_{k:n}\ge _{\text {st}} Y_{k:n}\).

(ii) Suppose \(\theta _i=\delta _i=\theta \) and \(\eta _i=\gamma _i= \eta \) for \(i=1,\ldots ,n.\) Then, \({\varvec{\alpha }}\succeq ^m{\varvec{\beta }}\Rightarrow X_{k:n}\le _{\text {st}} Y_{k:n}\).

Proof

The proof is analogous to that of Theorem 3.20. Thus, it is omitted. \(\square \)

4 Multiple-Outlier Models

This section is devoted to the comparison of the smallest and largest order statistics taken from multiple-outlier models. Let \(X_1,\ldots ,X_n\) be a collection of independent random observations, where \(X_i\overset{st}{=}X,~i=1,\ldots ,p\) and \(X_i\overset{st}{=}Y,~i=p+1,\ldots ,n.\) Let \(p+q=n,~p^*+q^*=n^*,\) and \(n\ne n^*.\) Denote by \(X_{n:n}(p,q)\) and \(X_{1:n}(p,q)\) the largest and smallest order statistics, respectively, taken from \((X_1,\ldots ,X_p,X_{p+1},\ldots ,X_n)\). Similarly, \(X_{n^*:n^*}(p^*,q^*)\) and \(X_{1:n^*}(p^*,q^*)\) denote, respectively, the largest and smallest order statistics constructed from \((X_1,\ldots ,X_{p^*},X_{p^*+1},\ldots ,X_{n^*}).\) Suppose \(F_1(x)\) and \(F_2(x)\) are the distribution functions of X and Y, respectively. Then, from Arnold et al. [1], the distribution function of \(X_{n:n}(p,q)\) is given by \(F_{X_{n:n}(p,q)}(x)=F_1^{p}(x)F_2^{q}(x)\).

Throughout this section, we assume that \(p^*\le p\le q \le q^*.\)

Theorem 4.1

Assume that for \(i=1,\ldots ,p,\) \( X_i\sim MPHRS({\alpha _1},{\lambda _1,\theta _1};{ {{\bar{F}}}})\) and for \(i=p+1,\ldots ,n,\) \( X_i\sim MPHRS({\alpha _2},\lambda _2,\theta _2;\bar{F}).\) Let \((p^*,q^*)\succeq ^w(p,q).\) Then,

(i) \( X_{1:n}(p,q)\le _{\text {st}} X_{1:n^*}(p^*,q^*),\) provided \(\alpha _1\le \alpha _2,~\theta _1\ge \theta _2\) and \(\lambda _1\ge \lambda _2\),

(ii) \( X_{n:n}(p,q)\ge _{\text {st}} X_{n^*:n^*}(p^*,q^*),\) provided \(\alpha _1\ge \alpha _2,~\theta _1\le \theta _2\) and \(\lambda _1\le \lambda _2\).

Proof

To prove the first part of the theorem, we have to show that

Further, it is assumed that \((p^*,q^*)\succeq ^w(p,q)\). Therefore,

Moreover, it can be shown that the function \(\frac{\alpha (1-t)^\lambda }{1-{{\bar{\alpha }}}(1-t)^\lambda }\) is increasing in \(\alpha ,\) and decreasing in \(\lambda \) and t. Utilizing this and the assumptions, we obtain

Combining inequalities in (4.3) and (4.4), we get (4.2). This completes the proof of the first part. The proof of the second part is similar, and thus omitted. \(\square \)
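The monotonicity property used in the proof above can be checked directly. Write \(s=(1-t)^{\lambda }\in (0,1)\) for \(0<t<1\) and \(\lambda >0\), and set \(\phi (\alpha ,s)=\alpha s/(1-{\bar{\alpha }}s)\) with \({\bar{\alpha }}=1-\alpha \); the symbols \(s\) and \(\phi \) are introduced here only for illustration. Then

\[ \frac{\partial \phi }{\partial \alpha }=\frac{s(1-s)}{(1-{\bar{\alpha }}s)^2}\ge 0,\qquad \frac{\partial \phi }{\partial s}=\frac{\alpha }{(1-{\bar{\alpha }}s)^2}>0. \]

Since \(s=(1-t)^{\lambda }\) is decreasing in both \(\lambda \) and t, it follows that \(\phi \) is increasing in \(\alpha \) and decreasing in \(\lambda \) and t.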

To verify Theorem 4.1(i), we consider the following example.

Example 4.2

Let \(F(x)=1-\exp \{1-(1+x^c)^{1/k}\},~x>0,c,k>0\). This is known as the power generalized Weibull distribution. Now, set \((p^*,q^*)=(2,7),(p,q)=(5,6),(\alpha _1,\alpha _2)=(0.1,0.3),(\lambda _1,\lambda _2)=(3,1)\) and \((\theta _1,\theta _2)=(2.4,1.2)\). All the conditions of Theorem 4.1(i) are satisfied. The graphs of \(F_{X_{1:11}(5,6)}(x)\) and \(F_{X_{1:9}(2,7)}(x)\) are plotted in Fig. 3b for \(c=k=1\), which agrees with the result in Theorem 4.1(i).
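The comparison in this example can be reproduced on a grid with a short Python sketch. It is a hedged illustration, assuming the MPHRS survival function \(\alpha {\bar{F}}^{\lambda }(x\theta )/(1-{\bar{\alpha }}{\bar{F}}^{\lambda }(x\theta ))\) and using independence to write the survival function of the minimum as a product of powers. The helper names are hypothetical.

```python
import math

def pgw_sf(u, c=1.0, k=1.0):
    """Survival function of the power generalized Weibull baseline: exp(1 - (1 + u^c)^(1/k))."""
    return math.exp(1.0 - (1.0 + u ** c) ** (1.0 / k))

def mphrs_sf(x, alpha, lam, theta):
    """Assumed MPHRS survival function: alpha*t / (1 - (1 - alpha)*t), t = Fbar(x*theta)^lam."""
    t = pgw_sf(x * theta) ** lam
    return alpha * t / (1.0 - (1.0 - alpha) * t)

def outlier_min_sf(x, p, q, par1, par2):
    """Survival function of the minimum of p type-1 and q type-2 independent components."""
    return mphrs_sf(x, *par1) ** p * mphrs_sf(x, *par2) ** q

def weakly_supermajorizes(a, b):
    """True if a >=^w b: partial sums of sorted a never exceed those of sorted b."""
    sa = sb = 0.0
    for u, v in zip(sorted(a), sorted(b)):
        sa, sb = sa + u, sb + v
        if sa > sb + 1e-12:
            return False
    return True

par1 = (0.1, 3.0, 2.4)  # (alpha_1, lambda_1, theta_1)
par2 = (0.3, 1.0, 1.2)  # (alpha_2, lambda_2, theta_2)
grid = [0.01 * k for k in range(1, 301)]

# X_{1:11}(5,6) <=_st X_{1:9}(2,7): the first survival function lies below the second.
st_holds = all(
    outlier_min_sf(x, 5, 6, par1, par2) <= outlier_min_sf(x, 2, 7, par1, par2)
    for x in grid
)
print(weakly_supermajorizes((2, 7), (5, 6)), st_holds)
```

Passing each parameter triple as a tuple keeps the two component types easy to swap when trying other configurations of \((p,q)\) and \((p^*,q^*)\).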

Below, we propose sufficient conditions for which hazard rate and reversed hazard rate orderings between \(X_{n:n}(p,q)\) and \(X_{n^*:n^*}(p^*,q^*)\) hold.

Theorem 4.3

Assume, for \(i=1,\ldots ,p,\) \( X_i\sim MPHRS({\alpha _1},\lambda _1,\theta _1;{ {{\bar{F}}}})\) and for \(i=p+1,\ldots ,n,\) \( X_i\sim MPHRS({\alpha _2},\lambda _2,\theta _2;{\bar{F}}).\) Let \((p^*,q^*)\succeq ^w(p,q)\) and \(0<\alpha _1,\alpha _2\le 1\), and let \(u r^*(u)\) be decreasing in u. Then,

(i) \( X_{1:n}(p,q)\le _{\text {hr}} X_{1:n^*}(p^*,q^*),\) provided \(\alpha _1\le \alpha _2,~\theta _1\le \theta _2,~\lambda _1\ge \lambda _2\),

(ii) \( X_{n:n}(p,q)\ge _{\text {rh}} X_{n^*:n^*}(p^*,q^*),\) provided \(\alpha _1\ge \alpha _2,~\theta _1\le \theta _2,~\lambda _1\le \lambda _2.\)

Proof

(i) To prove Part (i), we need to show that

which can be obtained using the assumptions and Lemma A.11.

(ii) In this case, we have to show that

Now, it can be proved that for \(0<t\le 1\), \(\frac{\alpha }{1-{{\bar{\alpha }}}(1-t)^\lambda }\) is increasing in \(\alpha \), and decreasing in \(\lambda \) and t for \(0<\alpha \le 1\). Using this result along with the given assumption and Lemma A.1, the inequality in (4.6) follows. \(\square \)

Note that the result stated in Theorem 4.3 (i) can be obtained when the values of \(\alpha _1\) and \(\alpha _2\) are greater than or equal to one under slightly modified conditions. The proof of this result is similar to that of Theorem 4.3 (i). Thus, we only state the result.

Theorem 4.4

Assume, for \(i=1,\ldots ,p,\) \( X_i\sim MPHRS({\alpha _1},\lambda _1, {\theta _1};{ {{\bar{F}}}})\) and for \(i=p+1,\ldots ,n,\) \( X_i\sim MPHRS({\alpha _2},\lambda _2,\theta _2;{\bar{F}}).\) Let \((p^*,q^*)\succeq ^w(p,q)\). Then, \( X_{1:n}(p,q)\le _{\text {hr}} X_{1:n^*}(p^*,q^*),\) provided \(\alpha _1\le \alpha _2,~\theta _1\ge \theta _2,~\lambda _1\ge \lambda _2,~\alpha _1,\alpha _2\ge 1\) and \(u r^*(u)\) is increasing in u.

In the next theorem, we extend Theorem 4.3(i) from the hazard rate order to the likelihood ratio order when \(0<\alpha _1,\alpha _2\le 1.\)

Theorem 4.5

Assume, for \(i=1,\ldots ,p,\) \( X_i\sim MPHRS({\alpha _1},{\lambda _1,\theta _1};{ {{\bar{F}}}})\) and for \(i=p+1,\ldots ,n,\) \( X_i\sim MPHRS({\alpha _2},\lambda _2,\theta _2;\bar{F}),0<\alpha _1,\alpha _2\le 1.\) If \((p^*,q^*)\succeq ^w(p,q)\), then \( X_{1:n}(p,q)\le _{\text {lr}} X_{1:n^*}(p^*,q^*)\) holds, provided \(\alpha _1\le \alpha _2,~\theta _1\le \theta _2,\) \(\lambda _1\ge \lambda _2\) and \(ur^*(u)\) is decreasing and \(u{r^*}'(u)/r^*(u)\) is increasing in u.

Proof

To prove the required result, we have to show that the ratio of two density functions

is increasing in \(x>0\). From Theorem 4.3(i), we have \(\frac{{{\bar{F}}_{X_{1:n^*}(p^*,q^*)}(x)}}{{{\bar{F}}_{X_{1:n}(p,q)}(x)}}\) is increasing in x. Thus, we only need to show that the other function in (4.7) is increasing in \(x>0\). Differentiating K(x) with respect to x, we get

Further, \(p^*\le p\le q\le q^*\) implies \(p^*q\le q^*p.\) Also, since \(\theta _1\le \theta _2\), we have \(\frac{x\theta _1{r^*}'(x\theta _1)}{r^*(x\theta _1)}\le \frac{x\theta _2{r^*}'(x\theta _2)}{r^*(x\theta _2)}\) and \(x\theta _1r^*(x\theta _1)\ge x\theta _2 r^*(x\theta _2).\) Utilizing these along with the assumptions and Lemma A.11, it can be shown that the derivative of K(x) is at least zero. This completes the proof. \(\square \)

To validate Theorem 4.5, we consider the following example.

Example 4.6

Let \(F(x)=[1-\exp \{-(ax+bx^2/2)\}]^{c}\), where \(x>0\) and \(a,b,c>0\) be the distribution function of the generalized linear failure rate distribution. When \(b=0,a=1\) and \(c=0.5\), it can be verified that \(u r^*(u)\) is decreasing and \(u{r^*}'(u)/r^*(u)\) is increasing in u. Now, take \((\theta _1,\theta _2)=(0.2,0.3)\), \((\lambda _1,\lambda _2)=(2,1), (\alpha _1,\alpha _2)=(0.3,0.9)\), \((p,q)=(8,9)\) and \((p^*,q^*)=(5,11)\). Clearly, all the conditions of Theorem 4.5 are satisfied. Furthermore, \((5,11)\succeq ^{w}(8,9)\). Figure 4a plots the ratio of two density functions given in (4.7), from which it can be observed that \( X_{1:17}(8,9)\le _{\text {lr}} X_{1:16}(5,11)\). Hence, the validity of Theorem 4.5 is justified.

So far, we have concentrated on systems comprising MPHRS-distributed component lifetimes. Hereafter, we consider parallel and series systems with MPRHRS-distributed component lifetimes.

Theorem 4.7

For \(i=1,\ldots ,p,\) let \( X_i\sim MPRHRS({\alpha _1},{\eta _1,\theta _1};{ F})\) and for \(i=p+1,\ldots ,n,\) \( X_i\sim MPRHRS({\alpha _2},\eta _2,\theta _2; F).\) Let \((p^*,q^*)\succeq ^w(p,q)\) hold.

(i) If \(\alpha _1,\alpha _2\ge 1\), then \( X_{n:n}(p,q)\ge _{\text {st}} X_{n^*:n^*}(p^*,q^*),\) provided \(\alpha _1\le \alpha _2,~\theta _1 \le \theta _2\) and \(\eta _1\ge \eta _2\).

(ii) If \(\alpha _1,\alpha _2>0\), then \( X_{1:n}(p,q)\le _{\text {st}} X_{1:n^*}(p^*,q^*),\) provided \(\alpha _1\ge \alpha _2,~\theta _1\ge \theta _2\) and \(\eta _1\le \eta _2\).

Proof

The proof of this theorem is analogous to that of Theorem 4.1. Thus, it is omitted for the sake of conciseness. \(\square \)

Counterexample 4.8

Consider the distribution function of the exponentiated Lomax distribution as \(F(x)=1-(1+x)^{-1},~x>0\).

\(\mathbf{(i)}\) Let \((\theta _1,\theta _2)=(0.2,0.3)\), \((\eta _1,\eta _2)=(0.9,0.5), (\alpha _1,\alpha _2)=(2,3)\), \((p,q)=(3,6)\) and \((p^*,q^*)=(1,7)\). Thus, all the assumptions in Theorem 4.7(i) are satisfied. Further, \((1,7)\succeq ^{w} (3,6)\). Now, for \(x=2,\) we obtain \({\tilde{r}}_{X_{9:9}(3,6)}(x)-{\tilde{r}}_{X_{8:8}(1,7)}(x)=-0.6310\). This implies that based on the same assumptions in Theorem 4.7(i), \((p^*,q^*)\succeq ^w(p,q)\nRightarrow X_{n:n}(p,q)\ge _{\text {rh}} X_{n^*:n^*}(p^*,q^*)\).

\(\mathbf{(ii)}\) Let \((\theta _1,\theta _2)=(0.5,0.3)\), \((\alpha _1,\alpha _2)=(0.2,0.8), (p,q)=(5,6), (p^*,q^*)=(2,8)\) and \((\eta _1,\eta _2)=(1,7)\). It is easy to verify that all the conditions taken in Theorem 4.7(ii) are satisfied. Here, \((2,8)\succeq ^{w} (5,6)\). At \(x=11,\) we get \(r_{X_{1:11}(5,6)}(x)-r_{X_{1:10}(2,8)}(x)=-2.439\). This implies that under the same assumptions as in Theorem 4.7(ii), \((p^*,q^*)\succeq ^w(p,q)\nRightarrow X_{1:n}(p,q)\le _{\text {hr}} X_{1:n^*}(p^*,q^*)\).

In the next result, we show that there exist sufficient conditions for which Theorems 4.7(i) and 4.7(ii) can be extended to the reversed hazard rate and hazard rate orderings, respectively.

Theorem 4.9

Assume \( X_i\sim MPRHRS(\alpha _1,\eta _1,\theta _1;{ F})\) for \(i=1,\ldots ,p,\) and for \(i=p+1,\ldots ,n,\) \( X_i\sim MPRHRS({\alpha _2},\eta _2,\theta _2; F).\) Let \((p^*,q^*)\succeq ^w(p,q)\) and \(u{{\tilde{r}}}^*(u)\) be increasing in u. Then, for \(0<\alpha _1,\alpha _2\le 1,\)

(i) \( X_{n:n}(p,q)\ge _{\text {rh}} X_{n^*:n^*}(p^*,q^*),\) provided \(\alpha _1\le \alpha _2, \theta _1\ge \theta _2,~\eta _1\ge \eta _2\),

(ii) \( X_{1:n}(p,q)\le _{\text {hr}} X_{1:n^*}(p^*,q^*),\) provided \(\alpha _1\le \alpha _2,\) \(\theta _1\ge \theta _2,~\eta _1\le \eta _2\).

Proof

The detailed proof is omitted, being similar to that of Theorem 4.3. Note that the first part follows using Lemma A.8 and the assumptions. Utilizing given conditions, the second part follows from Lemmas A.12 and A.13. \(\square \)

Further, it may be of interest to examine the change in conditions of Theorem 4.9(i) if ‘\(0<\alpha _1,\alpha _2 \le 1, \theta _1\ge \theta _2\) and \(u{\tilde{r}}^{*}(u)\) is increasing’ are replaced by ‘\(\alpha _1,\alpha _2 \ge 1, \theta _1\le \theta _2\) and \(u{\tilde{r}}^{*}(u)\) is decreasing.’ In this direction, we have the following theorem.

Theorem 4.10

Assume for \(i=1,\ldots ,p,\) \( X_i\sim MPRHRS({\alpha _1},{\eta _1,\theta _1};{ F})\) and for \(i=p+1,\ldots ,n,\) \( X_i\sim MPRHRS({\alpha _2},\eta _2,\theta _2; F).\) Further, let \((p^*,q^*)\succeq ^w(p,q).\) Then, \( X_{n:n}(p,q)\ge _{\text {rh}} X_{n^*:n^*}(p^*,q^*),\) provided \(1\le \alpha _1\le \alpha _2,\theta _1\le \theta _2,\eta _1\ge \eta _2\) and \(u{{\tilde{r}}}^*(u)\) is decreasing in u.

Proof

The proof is similar to that of Theorem 4.9(i), and hence it is omitted. \(\square \)

In the following theorem, we show that the likelihood ratio order holds between \(X_{n:n}(p,q)\) and \(X_{n^*:n^*}(p^*,q^*)\) when the reversed hazard rate parameters are fixed and the tilt parameters belong to (0, 1].

Theorem 4.11

For \(i=1,\ldots ,p,\) assume \( X_i\sim MPRHRS({\alpha _1},{\eta _1,\theta _1};{ F})\), and for \(i=p+1,\ldots ,n,\) \( X_i\sim MPRHRS({\alpha _2},\eta _2,\theta _2; F),~0<\alpha _1,\alpha _2\le 1.\) If \((p^*,q^*)\succeq ^w(p,q),\) then \( X_{n:n}(p,q)\ge _{\text {lr}} X_{n^*:n^*}(p^*,q^*),\) provided \(\alpha _1\le \alpha _2,~\theta _1\ge \theta _2,\) \(\eta _1=\eta _2\), and \(u{\tilde{r}}^*(u)\) and \( u{{\tilde{r}}^{*'}}(u)/{\tilde{r}}^*(u)\) are increasing in u.

Proof

To prove the said result, we have to show that

is increasing in x. Now, by Theorem 4.9(i), we have \(\frac{F_{X_{n:n}(p,q)}(x)}{F_{X_{n^*:n^*}(p^*,q^*)}(x)}\) is increasing in x. So, it is enough to show that \(K_2(x)\) is increasing in x. After differentiating \(K_2(x)\) with respect to x, we get

Further, \(p^*\le p\le q\le q^*\). This implies \(p^*q\le q^*p\). Also, \(\theta _1\ge \theta _2\) implies \(\frac{x\theta _1{{\tilde{r}}^{*'}}(x\theta _1)}{{\tilde{r}}^*(x\theta _1)} \ge \frac{x\theta _2{{\tilde{r}}^{*'}}(x\theta _2)}{{\tilde{r}}^*(x\theta _2)}\) and \(\theta _1{\tilde{r}}^*(x\theta _1)\ge \theta _2{\tilde{r}}^*(x\theta _2).\) Finally, using the assumptions made and Lemma A.4, we can show that \(K_2'(x)\ge 0\). This completes the proof. \(\square \)

To verify Theorem 4.11, we consider the following example.

Example 4.12

Let \(F(x)=(x/a)^{l}\), where \(0<x<a\) and \(l>0\). Further, let \((\theta _1,\theta _2)=(6,2), (\alpha _1,\alpha _2)=(0.5,0.7)\), \((p,q)=(5,8), (p^*,q^*)=(2,10)\) and \(\eta _1=\eta _2=2\). Furthermore, we take \(a=10\) and \(l=0.1\). It is easy to see that all the conditions in Theorem 4.11 are satisfied. Based on these values, in Fig. 4b, we plot the ratio \(f_{X_{13:13}(5,8)}(x)/f_{X_{12:12}(2,10)}(x)\), which clearly justifies Theorem 4.11.

Remark 4.13

Theorem 4.3 can be applied to the exponentiated exponential distribution with shape parameter strictly less than one. For the exponential baseline distribution, Theorem 4.4 can be applied. Theorem 4.9 is applicable for the baseline distribution function considered in Example 4.12. For the exponentiated exponential and exponentiated Lomax distributions, Theorem 4.10 can be verified.

5 Concluding Remarks

The theory of stochastic orderings plays an important role in several areas of science and engineering to describe the evolution of systems over time. The failure time is often used to make decisions regarding the reliability of a system. Thus, the established results related to the failure times can be useful in reliability theory. In this paper, we have obtained various ordering properties of order statistics constructed from two sets of independent heterogeneous MPHRS- and MPRHRS-distributed observations. These are established in terms of the usual stochastic, hazard rate and reversed hazard rate orderings under majorization-based partial orderings. We have also considered multiple-outlier models and proved several comparison results among the extreme order statistics in terms of the usual stochastic, hazard rate, reversed hazard rate and likelihood ratio orderings.

References

Arnold, B.C., Balakrishnan, N., Nagaraja, H.N.: A First Course in Order Statistics, vol. 54. SIAM, Philadelphia (1992)

Azzalini, A.: A class of distributions which includes the normal ones. Scand. J. Stat. 12, 171–178 (1985)

Balakrishnan, N., Barmalzan, G., Haidari, A.: Modified proportional hazard rates and proportional reversed hazard rates models via Marshall-Olkin distribution and some stochastic comparisons. J. Korean Stat. Soc. 47(1), 127–138 (2018)

Balakrishnan, N., Nanda, P., Kayal, S.: Ordering of series and parallel systems comprising heterogeneous generalized modified Weibull components. Appl. Stoch. Models Bus. Ind. https://doi.org/10.1002/asmb.2353 (2018)

Barmalzan, G., Payandeh Najafabadi, A.T., Balakrishnan, N.: Ordering properties of the smallest and largest claim amounts in a general scale model. Scand. Actuar. J. 2017(2), 105–124 (2017)

Bashkar, E., Torabi, H., Roozegar, R.: Stochastic comparisons of extreme order statistics in the heterogeneous exponentiated scale model. J. Stat. Theory Appl. 16(2), 219–238 (2017)

Chowdhury, S., Kundu, A.: Stochastic comparison of parallel systems with log-Lindley distributed components. Oper. Res. Lett. 45(3), 199–205 (2017)

Hazra, N.K., Kuiti, M.R., Finkelstein, M., Nanda, A.K.: On stochastic comparisons of maximum order statistics from the location-scale family of distributions. J. Multivar. Anal. 160, 31–41 (2017)

Kayal, S.: Stochastic comparisons of series and parallel systems with Kumaraswamy-G distributed components. Am. J. Math. Manag. Sci. 38, 1–22 (2019)

Khaledi, B.E., Kochar, S.C.: Dispersive ordering among linear combinations of uniform random variables. J. Stat. Plan. Inference 100(1), 13–21 (2002)

Kundu, A., Chowdhury, S., Nanda, A.K., Hazra, N.K.: Some results on majorization and their applications. J. Comput. Appl. Math. 301, 161–177 (2016)

Marshall, A.W., Olkin, I.: A new method for adding a parameter to a family of distributions with application to the exponential and Weibull families. Biometrika 84(3), 641–652 (1997)

Marshall, A.W., Olkin, I., Arnold, B.C.: Inequality: Theory of Majorization and Its Applications. Springer Series in Statistics. Springer, New York (2011)

Mudholkar, G.S., Srivastava, D.K.: Exponentiated Weibull family for analyzing bathtub failure-rate data. IEEE Trans. Reliab. 42(2), 299–302 (1993)

Nadeba, H., Torabi, H.: Stochastic comparisons of series systems with independent heterogeneous Lomax-exponential components. J. Stat. Theory Pract. 12, 794–812 (2018)

Pledger, G., Proschan, F.: Comparisons of Order Statistics and of Spacings from Heterogeneous Distributions, pp. 89–113. Academic Press, New York (1971)

Shaked, M., Shanthikumar, J.G.: Stochastic Orders. Springer, Berlin (2007)

Torrado, N.: Stochastic comparisons between extreme order statistics from scale models. Statistics 51(6), 1359–1376 (2017)

Zhang, Y., Cai, X., Zhao, P., Wang, H.: Stochastic comparisons of parallel and series systems with heterogeneous resilience-scaled components. Statistics 53, 126–147 (2019)

Acknowledgements

The authors would like to thank the Editor, the Associate Editor and the anonymous reviewers for their careful reading of this paper. Sangita Das thanks the MHRD, Government of India, for financial support. Suchandan Kayal gratefully acknowledges partial financial support for this research under grant MTR/2018/000350 from SERB, India.

Appendix

Here, we present various lemmas which are used throughout the paper.

Lemma A.1

Let \(g_1:(0,\infty )\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_1(\lambda ,t)=\frac{\lambda (1-t)^{\lambda }}{1- (1-t)^{\lambda }}\). Then, \(g_1(\lambda ,t)\) is decreasing with respect to both \(\lambda \) and t.

Proof

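Differentiating \(g_1(\lambda ,t)\) partially with respect to \(\lambda \) and t, we, respectively, get

\[
\frac{\partial g_1(\lambda ,t)}{\partial \lambda }=\frac{(1-t)^{\lambda }\left[ 1-(1-t)^{\lambda }+\ln (1-t)^{\lambda }\right] }{\left[ 1-(1-t)^{\lambda }\right] ^2}\quad \text{and}\quad \frac{\partial g_1(\lambda ,t)}{\partial t}=-\frac{\lambda ^2(1-t)^{\lambda -1}}{\left[ 1-(1-t)^{\lambda }\right] ^2}.
\]

Further, for all \(x>0,~\ln x\le x-1\). Taking \(x=(1-t)^{\lambda }\) shows that the first derivative is nonpositive, and the second derivative is clearly negative. This implies that \(g_1(\lambda ,t)\) is decreasing in \(\lambda \) and t. \(\square \)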
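As an informal complement to the proof, the monotonicity in Lemma A.1 can be checked numerically. The following is a minimal sketch in Python; the grids for \(\lambda \) and t and the helper name `is_decreasing` are illustrative choices, not part of the paper, and a grid check is of course no substitute for the analytic argument.

```python
import math

def g1(lam: float, t: float) -> float:
    """g_1(lambda, t) = lambda (1-t)^lambda / (1 - (1-t)^lambda), as in Lemma A.1."""
    u = (1.0 - t) ** lam
    return lam * u / (1.0 - u)

def is_decreasing(values, tol=1e-12):
    """Return True if the sequence never increases by more than `tol`."""
    return all(b <= a + tol for a, b in zip(values, values[1:]))

# Hypothetical evaluation grids, chosen only for illustration.
lams = [0.1 * k for k in range(1, 101)]   # lambda in (0, 10]
ts = [0.01 * k for k in range(1, 100)]    # t in (0, 1)

# For every fixed t, g_1 should be decreasing along the lambda grid.
decreasing_in_lambda = all(is_decreasing([g1(l, t) for l in lams]) for t in ts)
# For every fixed lambda, g_1 should be decreasing along the t grid.
decreasing_in_t = all(is_decreasing([g1(l, t) for t in ts]) for l in lams)

print(decreasing_in_lambda, decreasing_in_t)
```

The same pattern (evaluate on a grid, then test each slice for monotonicity) can be reused for the other functions in this appendix by swapping in the corresponding definition.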

Next, we present further results. Their proofs are straightforward and hence omitted.

Lemma A.2

Let \(g_2:(0,1)\times (0,\infty )\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_2(\alpha ,\lambda ,t)=\frac{ 1}{1-{{\bar{\alpha }}} (1-t)^{\lambda }}\). Then, \(g_2(\alpha ,\lambda ,t)\) is decreasing with respect to \(\alpha , \lambda \) and t.

Lemma A.3

Let \(g_3:(0,\infty )\times (0,\infty )\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_3(\alpha ,\lambda ,t)=\frac{(1-t)^{\lambda }}{1-{{\bar{\alpha }}} (1-t)^{\lambda }}\). Then, \(g_3(\alpha ,\lambda ,t)\) is decreasing with respect to \(\alpha , \lambda \) and t.

Lemma A.4

Let \(g_{4}:(0,\infty )\times (0,\infty )\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_{4}(\eta ,\alpha ,t)=\frac{t^\eta }{1-{{\bar{\alpha }}} t^{\eta }}.\) Then,

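(i) for each \(\eta \) and \(t, g_{4}(\eta ,\alpha ,t)\) is decreasing with respect to \(\alpha \),

(ii) for each \(\alpha , g_{4}(\eta ,\alpha ,t)\) is decreasing with respect to \(\eta \) and increasing with respect to t, since \(g_{4}\) is increasing in \(t^{\eta }\) and, for \(t\in (0,1)\), \(t^{\eta }\) is decreasing in \(\eta \).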

Lemma A.5

Let \(g_5:(0,\infty )\times (0,\infty )\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_5(\alpha ,\lambda ,t)=\frac{1- (1-t)^\lambda }{1-{{\bar{\alpha }}} (1-t)^{\lambda }}\). Then,

(i) for each t and \(\lambda , g_5(\alpha ,\lambda ,t)\) is decreasing with respect to \(\alpha \),

(ii) for each \(\alpha , g_5(\alpha ,\lambda ,t)\) is increasing with respect to \(\lambda \) and t.
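Although the proof is omitted, the claims of Lemma A.5 can be checked with a short computation. The following is a sketch rather than the authors' argument; it introduces the shorthand \(u=(1-t)^{\lambda }\in (0,1)\) and assumes the usual Marshall-Olkin convention \({{\bar{\alpha }}}=1-\alpha \):

\[
g_5=\frac{1-u}{1-{{\bar{\alpha }}}u},\qquad \frac{\partial g_5}{\partial u}=\frac{-(1-{{\bar{\alpha }}}u)+{{\bar{\alpha }}}(1-u)}{(1-{{\bar{\alpha }}}u)^2}=-\frac{\alpha }{(1-{{\bar{\alpha }}}u)^2}<0,\qquad \frac{\partial g_5}{\partial \alpha }=-\frac{u(1-u)}{(1-{{\bar{\alpha }}}u)^2}<0.
\]

Since u is decreasing in both \(\lambda \) and t, it follows that \(g_5\) is increasing in \(\lambda \) and t, while the last derivative gives the monotonicity in \(\alpha \).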

Lemma A.6

Let \(g_6:(0,\infty )\times (0,1)\rightarrow (-\infty ,0)\) be defined as \(g_6(\lambda ,t)=\frac{\ln (1-t)}{1-{{\bar{\alpha }}} (1-t)^{\lambda }}\), where \(\alpha \ge 1\). Then, \(g_6(\lambda ,t)\) is decreasing with respect to \(\lambda \) and t.

Lemma A.7

Let \(g_7:(0,\infty )\times (0,1)\rightarrow (-\infty ,0)\) be defined as \(g_7(\lambda ,t)=\frac{(1-t)^{\lambda }\ln (1-t) }{1- (1-t)^{\lambda }}\). Then, \(g_7(\lambda ,t)\) is increasing with respect to \(\lambda \) and t.
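One possible route to Lemma A.7 is sketched below. The substitution \(w=(1-t)^{\lambda }\in (0,1)\) and the auxiliary function h are notation introduced here for illustration. Writing \(\ln (1-t)=\ln w/\lambda \), we have

\[
g_7(\lambda ,t)=\frac{1}{\lambda }\,h(w),\qquad h(w)=\frac{w\ln w}{1-w},\qquad h'(w)=\frac{1-w+\ln w}{(1-w)^2}\le 0,
\]

where the inequality uses \(\ln w\le w-1\). As w is decreasing in t, \(g_7\) is increasing in t. For the monotonicity in \(\lambda \), note that \(g_7(\lambda ,t)=\ln (1-t)\cdot \frac{w}{1-w}\), where \(\ln (1-t)<0\) does not depend on \(\lambda \) and \(w/(1-w)\) is positive and decreasing in \(\lambda \).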

Lemma A.8

Let \(g_8:(0,\infty )\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_8(\eta ,t)=\frac{\eta }{1-{{\bar{\alpha }}} t^{\eta }}\). Then,

(i) for each \(t, g_8(\eta ,t)\) is increasing with respect to \(\eta \) when \(\alpha \ge 1\),

(ii) for each \(\eta \), \(g_8(\eta ,t)\) is decreasing with respect to t when \(\alpha \ge 1\),

(iii) \(g_{8}(\eta ,t)\) is increasing in \(\eta \) and t, and decreasing in \(\alpha \) when \(0<\alpha \le 1\).
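The only part of Lemma A.8 that is not immediate is the monotonicity in \(\eta \) when \(0<\alpha \le 1\), because the numerator and the denominator of \(g_8\) both increase with \(\eta \). A sketch of the computation, with the shorthand \(v=t^{\eta }\in (0,1)\) introduced here and assuming \({{\bar{\alpha }}}=1-\alpha \in [0,1)\), is

\[
\frac{\partial g_8}{\partial \eta }=\frac{1-{{\bar{\alpha }}}v+{{\bar{\alpha }}}v\ln v}{(1-{{\bar{\alpha }}}v)^2}=\frac{1-{{\bar{\alpha }}}\,\varphi (v)}{(1-{{\bar{\alpha }}}v)^2},\qquad \varphi (v)=v(1-\ln v).
\]

Since \(\varphi '(v)=-\ln v>0\) on (0, 1) and \(\varphi (1)=1\), we have \(\varphi (v)<1\), so the numerator is positive and \(g_8\) is increasing in \(\eta \).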

Lemma A.9

Let \(g_9:(0,\infty )\times (0,\infty )\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_9(\eta ,\alpha ,t)=\frac{1-t^\eta }{1-{{\bar{\alpha }}} t^{\eta }}\), where \(\alpha > 0\). Then,

(i) for each \(\eta \), \(g_9(\eta ,\alpha ,t)\) is decreasing with respect to \(\alpha \) and t,

(ii) for each \(t\in (0,1)\) and \(\alpha \in (0,\infty )\), \(g_9(\eta ,\alpha ,t)\) is increasing with respect to \(\eta \).

Lemma A.10

Let \(g_{11}:(0,\infty )\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_{11}(\alpha ,t)=\frac{1}{1-{{\bar{\alpha }}} t^{\eta }}\), where \(0<\alpha \le 1\). Then,

(i) for each \(t\in (0,1), g_{11}(\alpha ,t)\) is decreasing with respect to \(\alpha \),

(ii) for each \(0<\alpha \le 1, g_{11}(\alpha ,t)\) is increasing with respect to t.

Lemma A.11

Let \(g_{12}:(0,\infty )\times (0,1]\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_{12}(\lambda ,\alpha ,t)=\frac{\lambda }{1-{{\bar{\alpha }}} (1-t)^{\lambda }}\). Then,

(i) for each \(\lambda , g_{12}(\lambda ,\alpha ,t)\) is decreasing with respect to \(\alpha \) and t,

(ii) for each \(\alpha \) and \(t, g_{12}(\lambda ,\alpha ,t)\) is increasing with respect to \(\lambda \).

Lemma A.12

Let \(g_{13}:(0,\infty )\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_{13}(\eta ,t)=\frac{\eta t^{\eta }}{1-t^{\eta }}\). Then, \(g_{13}(\eta ,t)\) is decreasing with respect to \(\eta \) and increasing in t.
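Lemma A.12 can also be read off from Lemma A.1. As a brief sketch, comparing the two definitions gives

\[
g_{13}(\eta ,t)=\frac{\eta t^{\eta }}{1-t^{\eta }}=g_1(\eta ,1-t).
\]

Hence \(g_{13}\) is decreasing in \(\eta \) because \(g_1\) is decreasing in its first argument, and it is increasing in t because \(g_1\) is decreasing in its second argument while \(1-t\) is decreasing in t.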

Lemma A.13

Let \(g_{14}:(0,\infty )\times (0,1]\times (0,1)\rightarrow (0,\infty )\) be defined as \(g_{14}(\eta ,\alpha ,t)=\frac{\alpha }{1-{{\bar{\alpha }}} t^{\eta }}\). Then,

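(i) \(g_{14}(\eta ,\alpha ,t)\) is decreasing with respect to \(\eta \),

(ii) \(g_{14}(\eta ,\alpha ,t)\) is increasing with respect to \(\alpha \) and t, since \(\frac{\partial g_{14}}{\partial \alpha }=\frac{1-t^{\eta }}{(1-{{\bar{\alpha }}} t^{\eta })^2}>0\) when \({{\bar{\alpha }}}=1-\alpha \).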

Cite this article

Das, S., Kayal, S. Some Ordering Results for the Marshall and Olkin’s Family of Distributions. Commun. Math. Stat. 9, 153–179 (2021). https://doi.org/10.1007/s40304-019-00191-6
