Abstract

A generic Bayesian framework is presented to track lost-in-space noncooperative maneuvering satellites. The developed framework predicts the reachability set for a lost-in-space satellite given bounds on maneuver parameters such as maneuver time and maneuver magnitude. Reachability sets are represented as a polynomial series of a desired order in the maneuver parameters. Recent advances in non-product quadrature methods are utilized to compute the coefficients of this polynomial series in a computationally efficient manner. A major contribution of this work is the development of quadrature methods to generate samples for the spherically uniform distribution associated with bounded magnitude maneuvers. Samples generated from this polynomial series are used for direct particle propagation in a traditional Bayesian filter rather than solving the governing equations of motion for each sample point. An important component of the developed framework is a search strategy which exploits the reachability set calculations to task the sensor to increase the detection probability of the satellite. The samples generated from the initial reachability sets are updated in a Bayesian framework to systematically reduce the target search region based on actual detection of the target. Numerical simulations are performed to show the efficacy of the developed ideas for tracking a lost-in-space satellite with the help of a space-based sensor. Performance of the proposed method varies widely based on factors such as the reachability set polynomial order, maneuver uncertainty bounds, sensor parameters (field of view, measurement frequency, and detection probability), and initial conditions. For the numerical experiments performed, the observer gained custody of the maneuvering target in \(100\%\) and \(96\%\) of Monte Carlo (MC) simulations for the single maneuver and two maneuver cases, respectively.


1 Introduction

Space has become an increasingly congested environment over the last few decades, leading to difficulties in operationally tracking and maintaining the space catalog to enable maximum space situational awareness. These problems are greatly exacerbated when considering that noncooperative active satellites can make unknown maneuvers which lead to issues with tracking and data association. Maneuvering target tracking is a well-studied problem in the literature, with a history dating back to the 1970s [5, 11, 33]. The core objective of maneuvering target tracking is to extract meaningful information about the trajectory of the target based on observational data. In most tracking applications, this involves using real-time measurements to inform the dynamic model, as well as to reconstruct or estimate the maneuver as it occurs. A comprehensive introduction to maneuvering target tracking is presented in a six-part paper series [24,25,26,27,28,29] which covers literature up to the early 2000s. Methods for maneuvering target tracking in this category include decision-based methods [6, 12, 33] and multiple-model methods [3, 4, 10]. A diverse body of methods exists in the literature for tracking maneuvering targets using real-time measurements [6, 7, 32, 37], and these methods vary widely by application; however, it is not within the scope of this paper to fully examine them. The authors emphasize here that all the literature cited above assumes data-richness, such that measurements of the target are acquired during the target maneuver.

Methods using real-time measurements are useful in air and ground target tracking scenarios where it is reasonable to assume that measurements of the target are available throughout the entire trajectory. Unfortunately, due to the limited coverage and availability of sensor resources, satellite tracking applications frequently have large time delays between observations on the order of hours or days. In these data-sparse situations, unobserved maneuvers can drastically change the target trajectory to the point where a tasked sensor loses custody of the intended target entirely.

Detecting and reconstructing maneuvers in data-sparse situations has received some, albeit limited, coverage in the literature. Patera [36] addresses the problem of detecting maneuvers and other events (collisions, reentry, etc.) in terms of statistically significant changes in orbital energy. An optimal control based method has also been developed to reconstruct finite maneuvers [19, 31, 39] connecting two measurements. The underlying technique for this method was first formulated in 1988 as the minimum model error method [34]. The minimum model error method treats the control as an unmodeled deviation from the dynamics, and minimizes this deviation such that the state estimate is statistically consistent with the observations. When applied to the satellite tracking problem, this method formulates the maneuver reconstruction process as a two-point boundary value problem under the assumption of a minimum-fuel control policy. Whenever the optimal control profile rises above the level of system noise, it is assumed that a maneuver has occurred. Although the minimum-fuel maneuver is not necessarily a bad assumption, this method does not account for the many suboptimal trajectories that can explain the same observational data. Furthermore, both the orbital energy method and the minimum model error method assume that the target can be observed after making a maneuver. The problem addressed in this work is fundamentally different: sensor data are used in conjunction with a dynamical model for target motion and bounds on maneuver parameters to seek and locate a target satellite which has been lost due to an unknown maneuver.

In this respect, the objective of this paper is to determine a set of sensing parameters to locate the target given a priori knowledge about the bounds on maneuver parameters (e.g. magnitude and time). This a priori knowledge is exploited in conjunction with a dynamical model to compute a search area for the sensor at future times. The search area is defined by the reachability set of the target, and is synonymous with the target state pdf given by the mapping of target maneuver to state. The pdf represents all possible target states given a priori knowledge on control bounds and measurement data, and the true target state will always lie within this set. If the tasked sensor is able to detect the target, then the measurement of the detected target is used to systematically reduce error in target state and maneuver estimates. In case of unsuccessful detection, the search area is updated and propagated to future times via reachability based methods. An important feature of this approach is that unsuccessful detection is also exploited to improve the future search and tracking of noncooperative satellites. The method presented in this paper creates a unified framework for search, detection, tracking, and maneuver estimation of a noncooperative target.

An important component of this framework is to compute the reachability sets of the target. Generally speaking, a reachability set is the domain of all possible future states of a system given a constrained control effort. There is some disagreement in the literature over the specific definition of a reachability set; some analytical methods consider only the reachable outer surface or reachable envelope of states given a constrained control input. In the authors' previous work [14], reachability sets are defined by the entire domain of reachable states (i.e. pdf) given uncertainty in initial state, model parameters, and control input. This is the definition that will be adopted throughout this paper.

An optimal control formulation of the reachable envelope is given analytically in [18]; however, this formulation does not provide information about the region inside the reachable envelope. Since then, there have been many analytical investigations into the application of reachability sets to impulsively maneuvering spacecraft [43, 45], and numerical objective map reachability analyses into proximity operations around asteroids [42]. Traditionally, numerical reachability set computation for high-dimensional systems requires tensor-product quadrature methods to evaluate the multi-dimensional integrals involved. This can render the problem computationally intractable.

Recently, the higher order sensitivity matrix (HOSM) method [14, 15] has been developed to address this problem. The HOSM method is analogous to polynomial chaos uncertainty propagation techniques, but utilizes higher order non-product quadrature schemes such as the conjugate unscented transform (CUT) method to effectively compute the multidimensional integrals involved in reachability set computation. Benefits of the non-product quadrature rules used in the HOSM method include higher accuracy than other popular methods like the unscented transform (UT) and sparse grid quadratures, and greatly reduced computational expense compared to alternative tensor product methods like Gauss–Hermite (GH). In this work, non-product quadrature rules are extended to the spherically uniform distribution for unknown maneuvers, and to mixed distributions for both unknown maneuver magnitude and maneuver time.

Once a target reachability set has been computed, a method for tasking sensors to search the set must be developed. A greedy-in-time maximum detection likelihood policy is implemented for this purpose. In practice, the maximum likelihood cost function for highly nonlinear dynamic and measurement models is evaluated using MC samples efficiently propagated via the reachability set model. The final component of the proposed framework is the measurement update step. A key contribution of the proposed method is the utilization of measurements of the reachability set rather than the target itself to provide better information on the remaining possible target locations. If the tasked sensor does not observe the target, the detection likelihood function is used to update the target search area. Conversely, if the target is located, an importance sampling with progressive correction (ISPC) procedure is used to accurately define the posterior.

The organization of the paper proceeds as follows. Section 2 provides a description of the data-sparse maneuvering target search method. Section 3 provides a summary of the higher order sensitivity matrix reachability set computation method, and discusses quadrature methods used in this problem. Sections 4 and 5 detail the sensor tasking and filtering/estimation components respectively. Section 6 provides numerical simulations and discussion of the applicability and limitations of this method, and Sect. 7 provides concluding remarks.

2 Problem Description

The objective of this problem is to locate a target that is able to make bounded unknown maneuvers at unknown times between observations. Given a sensor with limited field of view (FOV), the sensor parameters which provide the highest likelihood of detecting the target must be determined. After tasking the sensors, observational data must then be used to update the search region and track the target. This section will define the dynamic system, stochastic inputs, and sensor model for the noncooperative maneuvering satellite tracking problem.

2.1 Generic Target-Observer System

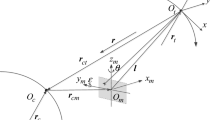

Assume a continuous-time dynamic system governing target and observer motion

where \(\textbf{x}_t\) is the \((n_t\times 1)\) target state vector, \(\textbf{u}_t\) is the \((l_t\times 1)\) target control vector, \(\textbf{f}_t\) is the target dynamic model, and \(\textbf{g}_t\) is the target control model. The observer is similarly assigned an \((n_{ob}\times 1)\) state \(\textbf{x}_{ob}\), an \((l_{ob}\times 1)\) control \(\textbf{u}_{ob}\), and dynamic and control models \(\textbf{f}_{ob},\textbf{g}_{ob}\). The target control vector is approximated by a series of M impulsive maneuvers \(\textbf{u}_{t,i}\) and maneuver times \(t_i\) with known bounds

where \(\delta (\cdot )\) is the Dirac delta function. As will be discussed in Sect. 3, the dimension of \(\textbf{u}_t\) imposes severe computational limitations on reachability set evaluation. Therefore, the proposed methodology is better suited to impulsive maneuver use cases than to continuous low-thrust maneuvers, which require many independent random variables. The system flow for the target \(\varvec{\chi }_t\) and the observer \(\varvec{\chi }_{ob}\) can be written compactly as

where \(k\) is the discrete-time index of the current time step \(t_k\). The combined system state is defined as the target state augmented by the observer state \(\textbf{x}^T=\left[ \textbf{x}_t^T\ \textbf{x}_{ob}^T\right]\). Assume the sensor has a field of view (FOV) constrained to a region defined by \(\mathcal {C}_s(\textbf{x}_{k+1},\varvec{\theta }_{k+1})\le 0\) where \(\varvec{\theta }_{k+1}\) is an \((l\times 1)\) vector of sensor parameters to be selected such that the target is detected. A piecewise detection likelihood function \(\pi _d(\textbf{x}_{k+1},\varvec{\theta }_{k+1})\) can be defined with respect to the FOV constraints

such that the target has a probability \(\pi _d^\prime (\textbf{x}_{k+1},\varvec{\theta }_{k+1})\in [0,1]\) of being detected within the FOV. Given known bounds on the target maneuvers \(\textbf{u}_{t,i}\), FOV constraints, and detection likelihood function, sensor parameters \(\varvec{\theta }_{k+1}\) which maximize some target detection metric \(J_d\) must be determined. It should be noted that the observer trajectory is assumed to be known in this work; however, one could also optimize the trajectory of the observer along with the sensing parameters. This work will consider scenarios under the simplifying assumptions of a single observer, a single target, and a greedy-in-time tasking approach (Footnote 1). The greedy-in-time assumption made here indicates that the sensor parameters \(\varvec{\theta }_{k+1}\) may be independently optimized at each timestep rather than optimizing a sequence of sensor parameters \([\varvec{\theta }_{0}\ \varvec{\theta }_{1}\ldots \ \varvec{\theta }_{k+1}]\) for a finite time horizon. Under these simplifications, it is sufficient to define the criterion \(J_d\) as the expectation value of the detection likelihood function \(\pi _d(\textbf{x}_{k+1},\varvec{\theta }_{k+1})\)

Assuming selection of \(\varvec{\theta }_{k+1}\) provides a successful detection, the target measurement can be modeled by

where \(\textbf{y}_{k+1}\) is an \((m\times 1)\) measurement vector, \(\textbf{h}\) is the measurement model, and \(\varvec{\nu }_{k+1}\in \mathcal {N}(\textbf{0},\textbf{R}_{k+1})\) is zero mean Gaussian measurement noise. This measurement can be used in a classical filtering sense to update the target state and maneuver estimate. If there is not a successful detection, however, the target search region must be updated to inform future sensor tasking. The following section will outline the framework proposed to accomplish the objectives of this problem.
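The greedy tasking criterion described in this section, the expected detection likelihood \(J_d\), can be estimated by averaging \(\pi _d\) over samples drawn from the reachability set. The following Python sketch is an illustration only. The two-dimensional state, the disc-shaped FOV constraint, the constant in-FOV detection probability `p_detect`, the Gaussian sample cloud, and the grid of candidate pointing vectors are all assumptions introduced here; they are not the sensor model or the optimizer used in this paper.

```python
import numpy as np

def detection_likelihood(x, theta, fov_radius, p_detect):
    """Piecewise likelihood: p_detect inside the FOV, zero outside.

    Assumption: the FOV constraint C_s(x, theta) <= 0 is a disc of radius
    fov_radius centered at the pointing vector theta.
    """
    c_s = np.linalg.norm(x - theta, axis=1) - fov_radius
    return np.where(c_s <= 0.0, p_detect, 0.0)

def greedy_tasking(samples, candidates, fov_radius=0.5, p_detect=0.9):
    """Return the candidate theta that maximizes the sample mean of pi_d."""
    j_d = np.array([
        detection_likelihood(samples, th, fov_radius, p_detect).mean()
        for th in candidates
    ])
    best = int(np.argmax(j_d))
    return candidates[best], float(j_d[best])

rng = np.random.default_rng(0)
# Hypothetical reachability-set samples: a cloud centered at (2, -1).
samples = rng.normal(loc=[2.0, -1.0], scale=0.3, size=(5000, 2))
grid = np.linspace(-3.0, 3.0, 13)
candidates = np.array([[a, b] for a in grid for b in grid])

theta_star, j_star = greedy_tasking(samples, candidates)
print(theta_star, j_star)
```

A grid search is used only to keep the sketch short; any optimizer over \(\varvec{\theta }_{k+1}\) could take its place.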

2.2 Overall Approach

The objective of this problem is to locate a target that has been lost after executing unknown maneuvers by determining a set of sensor parameters with which to search for the target. This problem is an extension of the classical filtering problem, where the sequence of maneuvers in time is unknown and observations are unavailable during the maneuver. It is assumed that the maneuvers have caused the target to deviate from its nominal trajectory to an extent where a limited FOV sensor cannot locate the target using traditional means.

The proposed method is a multipronged framework for determining the target search space, sensor tasking, and incorporation of measurement data for tracking/estimation. A Taylor series-based approach is used to compute the target pdf, i.e. reachability set, as a function of bounded stochastic maneuvers. This reachability set defines the search region over which an observer is tasked to maximize the likelihood of detecting the target. A Bayesian particle filtering framework is used to update the target pdf such that unsuccessful sensor tasking measurements systematically reduce the remaining reachable target states. The proposed framework, depicted in Fig. 1, can be split into three main components:

1. Reachability Set Computation

2. Sensor Tasking

3. Tracking/Estimation

A core premise of this method is that the reachability set of the target is synonymous with the target state pdf resulting from stochastic maneuvers mapped through the dynamic system. Assume the system input \(\textbf{z}\) is given by the known deterministic observer initial state \(\textbf{x}_{ob,0}\) and control \(\textbf{u}_{ob}\), in addition to the stochastic target initial state \(\textbf{x}_{t,0}\) and maneuver sequence \(\textbf{u}_{t,i},t_i\)

where M is the total number of target maneuvers. Assume the initial target state is defined by random variable \(\textbf{x}_{t,0}\) with known pdf \(\pi (\textbf{x}_{t,0})\). Traditionally, target maneuvers are represented by equivalent Gaussian process noise applied at each timestep; however, this does not intuitively make sense for the application currently considered. Instead, the maneuvers can be defined as impulsive zero-mean spherically uniform distributions up to maximum radius \(\Delta V_{max}\), denoted by \(\textbf{u}_{t,i}\in \mathcal {U}_s(0,\Delta V_{max})\), and maneuver times can be defined as linearly uniform between two time limits \(t_{i}\in \mathcal {U}(t_a,t_b)\). Defining the maneuvers in this manner imposes assumptions only on the maximum maneuver magnitude, and considers any target attitude, maneuver magnitude, and maneuver time to be equally probable. Computing reachability sets using the statistics of this distribution will be discussed in Sect. 3.
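As an illustration of the maneuver model described above, the following Python sketch draws samples from \(\mathcal {U}_s(0,\Delta V_{max})\) and \(\mathcal {U}(t_a,t_b)\). It assumes that "spherically uniform" means a constant pdf over the volume of the ball in Cartesian space, so the radius is drawn with cumulative distribution \((r/\Delta V_{max})^3\). The function names, the value of `dv_max`, and the time limits are hypothetical choices made for the example.

```python
import numpy as np

def sample_spherically_uniform(n, dv_max, rng):
    """Draw n points with constant density inside a 3-D ball of radius dv_max."""
    # Isotropic directions: normalize standard Gaussian vectors.
    d = rng.standard_normal((n, 3))
    d /= np.linalg.norm(d, axis=1, keepdims=True)
    # Radius with cdf (r / dv_max)^3 so that the volume is covered uniformly.
    r = dv_max * rng.random(n) ** (1.0 / 3.0)
    return r[:, None] * d

def sample_maneuver_times(n, t_a, t_b, rng):
    """Draw n maneuver times uniformly between the limits t_a and t_b."""
    return rng.uniform(t_a, t_b, size=n)

rng = np.random.default_rng(1)
dv = sample_spherically_uniform(200_000, dv_max=0.01, rng=rng)   # hypothetical bound
t1 = sample_maneuver_times(200_000, 0.0, 3600.0, rng)            # hypothetical limits

# Sample second moment of one Cartesian component; for a uniform ball the
# analytical value is dv_max^2 / 5.
print(np.mean(dv[:, 0] ** 2), 0.01**2 / 5.0)
```

Sampling the radius uniformly in \([0,\Delta V_{max}]\) instead would concentrate points near the origin and would not reproduce a constant pdf in Cartesian space.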

For numerical accuracy, the stochastic part of the input vector is typically normalized to a zero mean vector \(\varvec{\zeta }\)

where \(\textbf{S}\) is a block-diagonal scaling matrix dependent on the pdf normalization of each component of \(\textbf{z}\), and \(\varvec{\mu }\) is the augmented mean input vector. Table 1 summarizes the various input types and their associated means and the scaling components, which comprise the diagonal blocks of \(\textbf{S}\). Using the normalized input vector, the system flow \(\varvec{\chi }\) can be rewritten as

The system flow represents the mapping of stochastic inputs with joint distribution \(\pi (\varvec{\zeta })\) onto the reachability set \(\pi (\textbf{x}_k)\). Note that the selection of inputs to include in \(\varvec{\zeta }\) is left to the discretion of the user based upon the application considered. The first problem in the proposed framework is to efficiently compute the target search area defined by the reachability set \(\pi (\textbf{x}_k)\).

3 Reachability Set Computation

Direct reachability set computation is notoriously expensive from a computational standpoint and involves the explicit evaluation of many MC samples to provide an accurate representation of the search space. This section outlines the basic concepts and equations of the HOSM, which enables very efficient polynomial approximation of a reachability set. Note that although the basic equations are provided here, a more detailed discussion can be found in Hall and Singla [15].

3.1 Taylor Series Expansion

Consider a \(d\)th-order Taylor series expansion of Eq. (9)

where \(\varvec{\zeta }\) is the normalized random input variable with known pdf. The objective of the HOSM method is to numerically compute a model analogous to the above Taylor series expansion rather than explicitly evaluating partial derivatives of \(\varvec{\chi }\). Grouping the constant partial derivatives into sensitivity matrices \(\textbf{C}_i\) and the \(\zeta\) terms into sets of \(i^{th}\) order basis functions \(\varvec{\phi }_i\), the expansion can be rewritten as,

These sensitivity matrices and basis functions can be grouped so that the target state is approximated by the compact polynomial model

where \(\textbf{C}\) is an \((n\times L)\) matrix of coefficients, and \(\varvec{\phi }\) is an \((L\times 1)\) vector of basis functions. A least squares minimization procedure is applied to the above approximation and the coefficients can be computed using the normal equations given by

where \(E[\cdot ]\) denotes the expectation value operator with respect to the input pdf \(\pi (\varvec{\zeta })\). Note that if the basis functions \(\phi _i\) are orthogonal with respect to the pdf \(\pi (\varvec{\zeta })\), \(\textbf{B}\) becomes a diagonal matrix and the coefficients can be computed simply as

Additional details on the above procedure are provided in [15]. The problem now becomes a matter of accurately computing coefficients by evaluating the expectation integrals in (14). Expectation values in the denominator are purely polynomial, and are thus trivial to compute; however, the expectation values in the numerator are generic functions of the system flow. Quadrature methods can be used for this purpose.
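The least squares procedure above can be sketched for a one-dimensional toy problem. The Python example below assumes the normal equations take the form \(\textbf{C}\textbf{B}=\textbf{A}\) with \(\textbf{A}=E[\textbf{x}\varvec{\phi }^T]\) and \(\textbf{B}=E[\varvec{\phi }\varvec{\phi }^T]\); the name \(\textbf{A}\), the scalar stand-in flow `flow`, the monomial basis, and the uniform input on \([-1,1]\) are assumptions made for illustration and are not taken from [15].

```python
import numpy as np

def basis(zeta):
    """Monomial basis phi = [1, zeta, zeta^2] evaluated at each point, shape (L, N)."""
    return np.stack([np.ones_like(zeta), zeta, zeta**2], axis=0)

def flow(zeta):
    """Stand-in scalar 'system flow'; any black-box map could be used here."""
    return np.exp(0.3 * zeta)

# 5-point Gauss-Legendre rule, rescaled so that the weights sum to one
# (expectation with respect to the uniform pdf on [-1, 1]).
nodes, weights = np.polynomial.legendre.leggauss(5)
weights = weights / 2.0

phi = basis(nodes)                    # (L, N)
x = flow(nodes)[None, :]              # (n, N) with n = 1
A = (x * weights) @ phi.T             # quadrature estimate of E[x phi^T], (n, L)
B = (phi * weights) @ phi.T           # quadrature estimate of E[phi phi^T], (L, L)
C = np.linalg.solve(B.T, A.T).T       # solves C B = A

zeta_test = np.array([0.5])
x_approx = (C @ basis(zeta_test)).item()
print(x_approx, flow(zeta_test).item())
```

Only the evaluation of `flow` at the quadrature nodes requires the dynamics; once \(\textbf{C}\) is available, new samples are propagated by a matrix product.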

All quadrature methods apply the same basic approach: approximate the function as a Taylor polynomial, and integrate that polynomial instead. The generic form of a quadrature rule is to approximate a polynomial integral as the finite sum of the function evaluated at specific points \(\varvec{\zeta }^{(i)}\) multiplied by weights \(w^{(i)}\)

where the computational expense of the method is directly proportional to the number of points N at which the function must be evaluated. The key difference between various quadrature rules lies in how the points and weights are selected, and is intimately related to the Taylor series expansion as discussed in [13]. Substituting the Taylor series expansion (10) into (15) provides the following set of equations known as the moment constraint equations (MCEs).

Extensive research has gone into devising quadrature rules which match the moments of Gaussian and uniform distributions; however, the construction of quadrature rules for the spherically uniform unknown maneuver model used in this work is a relatively unexplored problem. The following section will derive the moments of the spherically uniform distribution and discuss how quadrature sets may be determined to enable reachability coefficient computation.

3.2 Spherically Uniform Quadrature

Unknown target maneuvers \(\textbf{u}_{t,i}\) are characterized as zero-mean spherically uniform distributions with bounded magnitude, denoted by \(\mathcal {U}_s(0,\Delta V_{max})\). To accurately compute the reachable space for such a maneuver, it is necessary to determine quadrature sets which match the MCEs up to a desired order d.

Consider the spherical coordinate system \((\alpha ,\phi ,r)\) given in Fig. 2 where \(\alpha\) is the azimuth, \(\phi\) is the co-latitude, and r is the radius. The transformation between Cartesian coordinates \((\zeta _1,\zeta _2, \zeta _3)\) and spherical coordinates is

Assume there exist random variables \(\alpha ,\phi ,r\) such that the pdf of a unit sphere is uniformly distributed in Cartesian space \(\pi (\varvec{\zeta })=c\). The constant c must be determined such that the pdf integrates to one.

where \(\Omega\) is the support of a sphere, i.e. \(r<1\). Firstly, notice that since the domain of the pdf is spherical, the bounds cannot be directly expressed in Cartesian space, therefore a transformation must be applied to map the differential volume element from Cartesian coordinates \(d\varvec{\zeta }\) to spherical coordinates \(drd\alpha d\phi\). This transformation is given by the determinant of the Jacobian of Cartesian variables with respect to spherical variables.

Using the differential volume element transformation, the constant c can be found by directly integrating the expression

where the constant c can be thought of as normalizing the sphere to unit volume. The expectation value operator with respect to the uniform spherical distribution can now be defined as

Expression (21) can be used to analytically evaluate the moments of the uniform spherical distribution with respect to Cartesian space. These moments are evaluated and listed in Table 2. Note that since the uniformly spherical distribution is symmetric, the odd-order moments are all equal to zero.
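The moments discussed above can be cross-checked numerically. The Python sketch below integrates monomials over the unit ball in spherical coordinates, using the volume element \(r^2\sin \phi \,dr\,d\alpha \,d\phi\) and the normalizing constant \(c=3/(4\pi )\), which is the reciprocal of the unit-ball volume. The axis convention (\(\phi\) measured from the \(\zeta _3\) axis, \(\alpha\) in the \(\zeta _1\)-\(\zeta _2\) plane) and the function name `sphere_moment` are assumptions made here.

```python
import numpy as np

def sphere_moment(p, q, s, n_nodes=24):
    """E[z1^p z2^q z3^s] for a constant pdf on the unit ball (numerical integration)."""
    x, w = np.polynomial.legendre.leggauss(n_nodes)
    # Map the Gauss-Legendre rule from [-1, 1] to each coordinate range.
    r, w_r = 0.5 * (x + 1.0), 0.5 * w                          # r     in [0, 1]
    alpha, w_a = np.pi * (x + 1.0), np.pi * w                  # alpha in [0, 2 pi]
    phi, w_p = 0.5 * np.pi * (x + 1.0), 0.5 * np.pi * w        # phi   in [0, pi]
    R, A, P = np.meshgrid(r, alpha, phi, indexing="ij")
    W = w_r[:, None, None] * w_a[None, :, None] * w_p[None, None, :]
    z1 = R * np.sin(P) * np.cos(A)
    z2 = R * np.sin(P) * np.sin(A)
    z3 = R * np.cos(P)
    c = 3.0 / (4.0 * np.pi)            # normalizes the pdf to integrate to one
    jac = R**2 * np.sin(P)             # Jacobian determinant of the transformation
    return float(np.sum(W * c * jac * z1**p * z2**q * z3**s))

for powers in [(0, 0, 0), (1, 0, 0), (2, 0, 0), (4, 0, 0), (2, 2, 0)]:
    print(powers, sphere_moment(*powers))
```

The even-order values obtained this way are \(E[\zeta _i^2]=1/5\), \(E[\zeta _i^4]=3/35\), and \(E[\zeta _i^2\zeta _j^2]=1/35\), which can be compared against the tabulated moments.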

There are many quadrature methods that one might consider to match the moments of the spherically uniform distribution \((n=3)\). Perhaps the most well-known class of quadrature method, Gaussian quadrature rules, requires only N points to compute up to \(d=2N-1\) order moments for systems where \(n=1\), which is minimal for one dimensional systems [41]. Unfortunately, higher dimensional systems require a tensor product to be taken, which leads to exponential growth in the number of points. Furthermore, the Cartesian coordinates of the spherically uniform distribution are not statistically independent, i.e. \(E[\zeta _i^2\zeta _j^2]\ne E[\zeta _i^2]E[\zeta _j^2]\). This causes the tensor product of 1D points to incorrectly match moments, and thus be invalid.
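Both statements in the preceding paragraph can be checked with a few lines of Python. The sketch below uses NumPy's Gauss-Legendre rule with \(N=3\) points for the uniform distribution on \([-1,1]\), and the analytical uniform-ball moments \(E[\zeta _i^2]=1/5\) and \(E[\zeta _i^2\zeta _j^2]=1/35\) (computed separately here, not read from Table 2). Variable names are illustrative.

```python
import numpy as np

# 3-point Gauss-Legendre rule; dividing by 2 accounts for the pdf 1/2 on [-1, 1].
nodes, weights = np.polynomial.legendre.leggauss(3)
weights = weights / 2.0

def gl_moment(k):
    """Quadrature estimate of E[zeta^k] for zeta uniform on [-1, 1]."""
    return float(np.sum(weights * nodes**k))

# Exact moments of U(-1, 1): 1/(k+1) for even k, zero for odd k.
exact = {k: (1.0 / (k + 1) if k % 2 == 0 else 0.0) for k in range(7)}
errors = {k: abs(gl_moment(k) - exact[k]) for k in range(7)}
print(errors)   # negligible through k = 2N - 1 = 5, clearly nonzero at k = 6

# Dependence of the Cartesian coordinates of the uniform ball:
m2, m22 = 1.0 / 5.0, 1.0 / 35.0
product_guess = m2 * m2     # what a tensor product of 1-D rules would reproduce
print(m22, product_guess)   # 1/35 versus 1/25
```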

The celebrated Unscented Transform (UT) [20,21,22] is a popular alternative to tensor product methods which avoids exponential growth in N with increasing dimension, and maintains positive weights. The UT symmetrically places points on the principal axes of the input \(\varvec{\zeta }\) to match up to \(3^{rd}\) order moments while incurring only linear growth in N with dimension. Consider matching up to \(3^{rd}\) order moments of the uniform sphere using points with equal weight \(w_1\) placed at a distance of \(r_1\) on each principal axis. See Fig. 3 for a schematic of the UT for \(n=3\). Since the points are symmetric, odd-order MCEs are automatically satisfied, and the even order equations are given by

which leads to the simple solution

This solution matches up to 3rd order moments of \(\varvec{\zeta }\) using only 6 points. Furthermore, notice that \(r_1<1\) lies within the unit radius constraint for a spherical distribution and is therefore a valid solution. Support constraints \(\varvec{\zeta }^{(i)}\in \Omega\) become very important when defining higher order quadrature sets. Notice that if a similar procedure is attempted for 5th order moments, the cross-dimension expectation value \(E[\zeta _i^2\zeta _j^2]\) will never be replicated because at least one dimension will always have a zero component. Thus, an alternative method must be used.
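The limitation noted above can be seen numerically. The Python sketch below assumes the even-order UT constraints are \(6w_1=1\) and \(2w_1r_1^2=E[\zeta _i^2]=1/5\), which gives \(w_1=1/6\) and \(r_1=\sqrt{3/5}\approx 0.7746\); these values are derived here from the uniform-ball moments and are offered as a consistency check rather than as a quotation of the solution in the text.

```python
import numpy as np

w1 = 1.0 / 6.0                                   # from 6 w1 = 1
r1 = np.sqrt((1.0 / 5.0) / (2.0 * w1))           # from 2 w1 r1^2 = 1/5
points = r1 * np.vstack([np.eye(3), -np.eye(3)]) # 6 points on the principal axes
weights = np.full(6, w1)

def quad_moment(powers):
    """Weighted sum of the monomial z1^p z2^q z3^s over the quadrature points."""
    return float(np.sum(weights * np.prod(points ** np.asarray(powers), axis=1)))

print("r1            :", r1)
print("E[z1^2]       :", quad_moment((2, 0, 0)), "target", 1.0 / 5.0)
print("E[z1^4]       :", quad_moment((4, 0, 0)), "target", 3.0 / 35.0)
print("E[z1^2 z2^2]  :", quad_moment((2, 2, 0)), "target", 1.0 / 35.0)
```

The second-order moment is matched, while the cross-moment evaluates to exactly zero because every point has two zero components.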

The conjugate unscented transform (CUT) method is a higher order generalization of the UT method specifically designed with the cross-moment problem in mind. The CUT method leverages special symmetric axes to directly construct points in nD space, circumventing the need for a tensor product. The details of the CUT method are discussed thoroughly in Adurthi et al. [2]; however, this section will outline application of CUT to the spherically uniform distribution.

Let us consider a set of points with distance \(r_1\) and weight \(w_1\) on the principal axes, and a set of points with distance \(r_2\) and weight \(w_2\) on the \(c^{(3)}\) axis to satisfy \(5^{th}\) order MCEs. The \(c^{(3)}\) axis is a symmetric axis which yields the set of points \(\left\{ \textbf{Z}^{(3)}\right\} =\{[r_2\ r_2\ r_2],\ [-r_2\ r_2\ r_2],\ [r_2\ -r_2\ r_2],\ \ldots [-r_2\ -r_2\ -r_2]\}\) with all permutations of negative and positive scaling parameters. Note that there are a total of 6 points on the principal axes constrained such that \(r_1\le 1\) and 8 points on the \(c^{(3)}\) axis constrained such that \(r_2\le \frac{1}{\sqrt{3}}\). Recalling that the symmetry automatically satisfies the odd-order moments, the even-order MCEs for the uniform sphere are given by

Analytically, these equations can be reduced to expressions for \(w_1,\ w_2,\ r_1\)

and a characteristic polynomial which is quadratic in \(r_2^2\)

This equation leads to two positive solutions for the second scaling parameter

It is crucial now to notice the role of constraints. The only feasible value \(r_2\le \frac{1}{\sqrt{3}}\) is given by \(r_2=0.4190\). Unfortunately, when substituting this solution for \(r_2\) into the expression for \(r_1\), a value of \(r_1=1.2388\) is found, which lies outside of the unit radius constraint. Thus, there are no feasible solutions using this set of points.
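The numbers quoted above can be reproduced with a short Python check. The sketch assumes the four even-order MCEs for this 14-point set are \(6w_1+8w_2=1\), \(2w_1r_1^2+8w_2r_2^2=1/5\), \(2w_1r_1^4+8w_2r_2^4=3/35\), and \(8w_2r_2^4=1/35\). Eliminating \(w_1\), \(w_2\), and \(r_1\) from these gives \(77a^2-42a+5=0\) with \(a=r_2^2\). This quadratic was derived here from the assumed MCEs; it is offered as a consistency check because it reproduces the values stated in the text.

```python
import numpy as np

# Roots of 77 a^2 - 42 a + 5 = 0 with a = r2^2 (derived under the stated assumptions).
a_roots = np.sort(np.roots([77.0, -42.0, 5.0]).real)
r2_candidates = np.sqrt(a_roots)

def remaining_parameters(r2):
    """Back-substitute r2 into the assumed MCEs to recover w1, w2, r1."""
    a = r2**2
    w2 = 1.0 / (280.0 * a**2)            # from 8 w2 r2^4 = 1/35
    w1 = (1.0 - 8.0 * w2) / 6.0          # from 6 w1 + 8 w2 = 1
    r1 = (1.0 / (35.0 * w1)) ** 0.25     # from 2 w1 r1^4 = 3/35 - 1/35
    return w1, w2, r1

# Keep only the root that respects the support constraint r2 <= 1/sqrt(3).
r2 = r2_candidates[r2_candidates <= 1.0 / np.sqrt(3.0)][0]
w1, w2, r1 = remaining_parameters(r2)
second_moment = 2.0 * w1 * r1**2 + 8.0 * w2 * r2**2

print("r2 candidates:", r2_candidates)
print("feasible r2  :", r2, " implied r1:", r1)
```

The implied \(r_1\) exceeds one, so the principal-axis points would fall outside the unit ball.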

This example highlights one of the most glaring difficulties with the CUT method. There is no guarantee that a selected set of CUT axes will satisfy the MCEs and support constraints, so selecting the axes is often a guess and check procedure. For example, consider adding a single central point \(\varvec{\zeta }^{(0)}=[0,0,0]\) with weight \(w_0\) to the previously examined set. The only MCE that is influenced by this change is

which yields the modified characteristic equation

with solution

Further analysis of this solution shows that real solutions only exist for \(w_0\le \frac{4}{25}\); however, selection of the central weight must still allow the unit radius constraint to be satisfied. Fig. 4 shows plots of \(r_1\) and \(r_2\) parameters vs. central weight \(w_0\). The solid lines represent \(r_1\), the dotted lines represent \(r_2\), and red/blue lines represent coupled solutions (from either the plus or minus solution in (30)). It can be determined that the minimum central weight with a solution that satisfies the constraints is \(w_0=0.0571\).
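The dependence on the central weight can be explored with the Python sketch below. It assumes the central point changes only the zeroth-order constraint to \(w_0+6w_1+8w_2=1\), which leads to the quadratic \((77+70w_0)a^2-42a+5=0\) in \(a=r_2^2\) with discriminant \(224-1400w_0\). This form was derived here and is consistent with the bound \(w_0\le 4/25\) stated above; the function names are hypothetical.

```python
import numpy as np

def cut5_branches(w0):
    """Plus and minus roots of (77 + 70 w0) a^2 - 42 a + 5 = 0 and implied parameters."""
    disc = 224.0 - 1400.0 * w0
    if disc < 0.0:
        return []                                   # no real solution
    out = []
    for sign in (+1.0, -1.0):
        a = (42.0 + sign * np.sqrt(disc)) / (2.0 * (77.0 + 70.0 * w0))
        w2 = 1.0 / (280.0 * a**2)                   # from 8 w2 r2^4 = 1/35
        w1 = (1.0 - w0 - 8.0 * w2) / 6.0            # from w0 + 6 w1 + 8 w2 = 1
        r1 = (1.0 / (35.0 * w1)) ** 0.25 if w1 > 0 else np.nan
        out.append({"r1": r1, "r2": np.sqrt(a), "w1": w1, "w2": w2})
    return out

def feasible(sol, tol=1e-9):
    """Positive weights and all points inside the unit ball."""
    return bool(sol["w1"] > 0 and sol["w2"] > 0
                and sol["r1"] <= 1.0 + tol
                and sol["r2"] <= 1.0 / np.sqrt(3.0) + tol)

for w0 in (0.0, 0.05, 2.0 / 35.0, 4.0 / 35.0, 0.17):
    print(w0, [(round(s["r1"], 4), round(s["r2"], 4), feasible(s))
               for s in cut5_branches(w0)])
```

Under these assumptions the plus branch first becomes feasible at \(w_0=2/35\approx 0.0571\), where \(r_2\) reaches \(1/\sqrt{3}\), and the minus branch becomes feasible at \(w_0=4/35\approx 0.1143\), where \(r_1=1\).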

If \(r_1\) is chosen to be fixed at the boundary value \(r_1=1\), then the solution for the remaining parameters can be computed as \(r_2=0.4472\), \(w_0=0.1143\), \(w_1=0.0286\), \(w_2=0.0893\). This solution satisfies up to \(5^{th}\) order moments using only \(N=15\) points. Finding an analytical solution can be a tedious process even for \(5^{th}\) order MCEs, and the complexity of extending analytical solutions to higher n and d can render analytical solutions impossible. Numerical solutions using CUT methodology can be found; however, selecting axes which satisfy the MCEs and support constraints while providing minimum N is very difficult.
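The 15-point set quoted above can be verified directly. The Python sketch below builds the points using the closed-form values \(r_2=1/\sqrt{5}\), \(w_0=4/35\), \(w_1=1/35\), \(w_2=5/56\), which round to the decimals given in the text, and compares every monomial moment up to total order five against the analytical uniform-ball moments. The closed forms and the helper `exact_moment` are supplied here for illustration.

```python
import itertools
import numpy as np

r1, r2 = 1.0, 1.0 / np.sqrt(5.0)
w0, w1, w2 = 4.0 / 35.0, 1.0 / 35.0, 5.0 / 56.0

center = np.zeros((1, 3))
axes = r1 * np.vstack([np.eye(3), -np.eye(3)])                       # 6 points
corners = r2 * np.array(list(itertools.product([-1.0, 1.0], repeat=3)))  # 8 points
points = np.vstack([center, axes, corners])                          # (15, 3)
weights = np.concatenate([[w0], np.full(6, w1), np.full(8, w2)])

def quad_moment(powers):
    """Weighted sum of a monomial over the quadrature set."""
    return float(np.sum(weights * np.prod(points ** np.asarray(powers), axis=1)))

def exact_moment(powers):
    """Analytical monomial moment of the constant pdf on the unit ball in 3-D."""
    if any(p % 2 for p in powers):
        return 0.0
    total = sum(powers)
    num = np.prod([np.prod(np.arange(p - 1, 0, -2)) for p in powers])  # (p-1)!!
    den = np.prod(np.arange(3, 3 + total, 2))                          # 3 * 5 * ...
    return 3.0 / (3.0 + total) * num / den

orders = [p for p in itertools.product(range(6), repeat=3) if sum(p) <= 5]
max_err = max(abs(quad_moment(p) - exact_moment(p)) for p in orders)
max_radius = np.linalg.norm(points, axis=1).max()
print("max moment error up to order 5:", max_err)
print("largest point radius          :", max_radius)
```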

The CUT method can be used if only a single unknown maneuver with known time is used as the input; however, the generic input considered in this work (7) is mixed rather than solely spherically uniform. This introduces another problem. All of the previously discussed methods implicitly assume fully-symmetric input, which is problematic when constructing non-product quadrature sets for a mixed distribution. The following section will discuss the method used in this work to determine quadrature sets for mixed distributions to enable reachability set propagation.

3.3 Quadrature for Mixed Distributions

The generic input \(\varvec{\zeta }\) considered in this paper consists of a mix of Gaussian, uniform, and spherically uniform distributions. The traditional way of handling mixed distributions is to take the tensor product of lower dimensional fully-symmetric sets. For example, consider the input \(\varvec{\zeta }=[\textbf{u}_{t,1}\in \mathcal {U}_s(0,1),t_1\in \mathcal {U}(-1,1)]\) for a known initial condition with unknown maneuver magnitude and maneuver time. Using the \((15\times 3)\) 5th order spherically uniform CUT set found in the previous section \(\textbf{X}_1\in \mathcal {U}_s(0,1)\), and 5th order \((3\times 1)\) uniform Gauss-Legendre points \(\textbf{X}_2\in \mathcal {U}(-1,1)\), a tensor product set can be computed as

where \(\textbf{X}_{tens}\) is a \((45\times 4)\) set which replicates up to \(5^{th}\) order moments. Although this method is valid, the tensor product is not minimal, so a non-product method is desired. To address this problem, a recently developed direct moment matching method known as designed quadrature (DQ) [23] is used.
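The tensor-product construction described above can be sketched in Python as follows. The 15-point spherically uniform set is rebuilt from the closed-form values \(r_2=1/\sqrt{5}\), \(w_0=4/35\), \(w_1=1/35\), \(w_2=5/56\) (which round to the decimals quoted in the previous section), and the 3-point Gauss-Legendre rule comes from NumPy. The variable names and the row ordering of the product set are choices made here.

```python
import itertools
import numpy as np

# 15-point set for U_s(0, 1): center, 6 principal-axis points, 8 corner points.
r2 = 1.0 / np.sqrt(5.0)
X1 = np.vstack([
    np.zeros((1, 3)),
    np.vstack([np.eye(3), -np.eye(3)]),
    r2 * np.array(list(itertools.product([-1.0, 1.0], repeat=3))),
])
w_X1 = np.concatenate([[4.0 / 35.0], np.full(6, 1.0 / 35.0), np.full(8, 5.0 / 56.0)])

# 3-point Gauss-Legendre rule for U(-1, 1); weights rescaled to sum to one.
X2, w_X2 = np.polynomial.legendre.leggauss(3)
w_X2 = w_X2 / 2.0

# Tensor product: every spherical point is paired with every time point.
X_tens = np.hstack([np.repeat(X1, X2.size, axis=0),
                    np.tile(X2, X1.shape[0])[:, None]])      # (45, 4)
w_tens = np.repeat(w_X1, X2.size) * np.tile(w_X2, X1.shape[0])

def tens_moment(powers):
    """Weighted sum of a 4-D monomial over the tensor-product set."""
    return float(np.sum(w_tens * np.prod(X_tens ** np.asarray(powers), axis=1)))

# Independence of the two blocks: E[z1^2 z4^2] = E[z1^2] E[z4^2] = (1/5)(1/3).
print(X_tens.shape, tens_moment((2, 0, 0, 2)), 1.0 / 15.0)
```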

A brief summary of the DQ algorithm is given here; however, a more detailed discussion can be found in [23]. The DQ algorithm is a recursive algorithm that finds a set of quadrature points and weights which satisfy a generic set of moments, then prunes out points to find a set with a minimal number of points N. Recall that the computational expense of a quadrature method is directly proportional to N. Therefore, despite the increased initial cost of finding a minimal quadrature set via recursive DQ, the efficiency of the resulting set for computing reachability sets is vastly improved.

The DQ algorithm does not impose any symmetry assumptions on \(\varvec{\zeta }\) or require any explicit knowledge of \(\pi (\varvec{\zeta })\). In fact, the only required information about \(\varvec{\zeta }\) is the numeric value of its statistical moments and any constraints associated with the support \(\Omega\). Since no symmetry conditions are imposed on the quadrature set, odd-order moments must be included. Fortunately, because the component distributions are independent, the moments of the mixed distribution factor into products of expectations over each component and can be computed analytically. Statistical moments for Gaussian and uniform distributions are provided in Tables 3 and 4, respectively.
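The factorization of moments over independent components can be sketched as follows. This is an illustrative helper, not the implementation used in this work: the function names are hypothetical, the Gaussian is taken as standard normal, and the uniform is taken on \((-1,1)\). Spherically uniform moments are omitted because they depend on the definition given earlier in the paper.

```python
from math import prod

def uniform_moment(n):
    """E[x^n] for x ~ U(-1, 1): zero for odd n, 1/(n+1) for even n."""
    return 0.0 if n % 2 else 1.0 / (n + 1)

def gaussian_moment(n):
    """E[x^n] for x ~ N(0, 1): zero for odd n, (n-1)!! for even n."""
    if n % 2:
        return 0.0
    return float(prod(range(1, n, 2)))  # (n-1)!! = 1*3*...*(n-1)

def cross_moment(powers, marginal_moments):
    """E[prod_i x_i^p_i] for independent x_i: product of the marginal moments."""
    return prod(m(p) for p, m in zip(powers, marginal_moments))

# Example: E[g^2 u^4] for independent g ~ N(0,1) and u ~ U(-1,1).
value = cross_moment((2, 4), (gaussian_moment, uniform_moment))
```

Any cross-moment containing an odd power of a symmetric component vanishes, so only a fraction of the moment constraints have nonzero right-hand sides.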

For example, the cross-moments between spherically uniform dimension \(\zeta _1\) and uniform dimension \(\zeta _4\) can be computed as

Given the numeric moment values and support constraints of the inputs, the DQ method solves for a set that satisfies the constraints and MCEs up to a desired tolerance. DQ is an iterative procedure which minimizes the MCEs and support constraint penalty for fixed N using the Gauss-Newton method, then prunes points and repeats until a valid set cannot be found with fewer points. The four major components are

1. Penalization: Define the cost function \({\tilde{\textbf{R}}}_{k}\) to be the squared error in MCEs augmented by support and weight constraint error penalties. This enables the cost function to be minimized using standard unconstrained minimization tools.

2. Gauss-Newton Iteration: An iterative update step

$$\begin{aligned} \textbf{d}_{k+1}=\textbf{d}_k-\Delta \textbf{d} \end{aligned}$$(33)

is performed, where \(\textbf{d}\) is a vector of decision variables, i.e. quadrature point coordinates and weights. The unconstrained optimization problem at the \(k^{th}\) iteration can be written

$$\begin{aligned} \min _{\Delta \textbf{d}}\ ||\tilde{\textbf{R}}_{k}-\tilde{\textbf{J}}_{k}\Delta \textbf{d}||_2^2 \end{aligned}$$(34)

where \(\tilde{\textbf{J}}_{k}\) is the Jacobian of the cost function. The solution of this problem is found by solving for \(\Delta \textbf{d}\) with a classical least squares solution.

$$\begin{aligned} \Delta \textbf{d}=\left( \tilde{\textbf{J}}_k^T\tilde{\textbf{J}}_k\right) ^{-1}\tilde{\textbf{J}}_k^T\tilde{\textbf{R}}_k \end{aligned}$$(35)

3. Regularization: The standard least squares solution is almost always poorly conditioned due to the sparse structure of the Jacobian, so an additional Tikhonov regularization procedure is required to get a reasonable solution. Regularization is done by including an additional term in the minimization

$$\begin{aligned} \min _{\Delta \textbf{d}}\ ||\tilde{\textbf{R}}_{k}-\tilde{\textbf{J}}_{k}\Delta \textbf{d}||_2^2+\lambda ||\Delta \textbf{d}||_2^2 \end{aligned}$$(36)

The solution to this modified least squares problem is found using singular value decomposition and including a modifying filter factor in the computation of \(\Delta \textbf{d}\). See [23] for additional details.

4. Initialization: The DQ method requires an initial guess which can greatly impact the speed of finding a solution. In fact, initializing the DQ algorithm with a quadrature set determined from one of the previously discussed methods can prove beneficial in terms of computation time. For example, initializing the DQ algorithm with the \((45\times 4)\) set \(\textbf{X}_{tens}\) yields a final \((21\times 4)\) set \(\textbf{X}_{DQ}\). Similar final results can be found via random initialization; however, initializing using a tensor product set reduces the runtime of the DQ algorithm because the initial guess already satisfies the MCEs and the algorithm immediately begins pruning points. This illustrates how directly solving for a mixed distribution quadrature set in nD using DQ can provide significant computational savings when compared to traditional means. Since the mixed input considered in this problem consists of normalized distributions, a set can be solved once offline and used to determine the reachable space of a target in real time. See Sect. 6 for a comparison of the accuracy of reachability sets computed using the quadrature sets \(\textbf{X}_{tens}\) and \(\textbf{X}_{DQ}\).
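The regularized Gauss-Newton step in components 2 and 3 can be sketched with a singular value decomposition. The snippet below is a minimal illustration assuming the standard Tikhonov filter factors \(\sigma _i^2/(\sigma _i^2+\lambda )\); the exact filter used in [23] may differ, and the function name `regularized_step` and the random test data are hypothetical.

```python
import numpy as np

def regularized_step(J, R, lam):
    """Solve min ||R - J dd||^2 + lam ||dd||^2 using the SVD of J (lam > 0)."""
    U, s, Vt = np.linalg.svd(J, full_matrices=False)
    # Tikhonov filter factors s_i^2 / (s_i^2 + lam) damp poorly determined
    # directions; combined with the 1/s_i of the pseudo-inverse they give
    # the gains s_i / (s_i^2 + lam).
    gains = s / (s**2 + lam)
    return Vt.T @ (gains * (U.T @ R))

# Hypothetical residual vector and Jacobian for a single iteration.
rng = np.random.default_rng(1)
J = rng.standard_normal((6, 4))
R = rng.standard_normal(6)
d = np.zeros(4)

dd = regularized_step(J, R, lam=0.1)
d_next = d - dd  # update of the decision variables, as in (33)
```

For \(\lambda >0\) this agrees with solving the regularized normal equations directly, but it avoids forming \(\tilde{\textbf{J}}^T\tilde{\textbf{J}}\), which squares the condition number.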

The DQ method implemented here is slightly modified from the algorithm specified in [23]. A two-tiered optimization loop is used: the first loop uses a weak tolerance \(\epsilon _1\) to find a solution with N near the optimal \(N^*\), and the second loop then refines that solution to a strict tolerance \(\epsilon _2\). If the strict tolerance is used throughout the minimization, the large majority of computational effort goes towards reducing error in solutions with \(N\gg N^*\). It is much more effective to use a looser tolerance first, then enforce the desired tolerance. Now that a method for computing the reachability set has been presented, a systematic method for tasking sensors must be determined.

4 Sensor Tasking

Assume at this stage that a polynomial model has been found via the HOSM method which can efficiently propagate the target reachability set, i.e. state pdf \(\pi (\textbf{x})\), through the system dynamics. The question now becomes how to task sensors to search the reachability set. Recall the maximum detection likelihood objective posed in (5)

The observer for simulations in this work is assumed to be a space-based sensor with parameters \(\varvec{\theta }\) defined as attitude angles with associated attitude unit vector \(\hat{\textbf{a}}\). The detection likelihood function (4) can be defined in a number of ways. A simplistic detection likelihood function would simply be \(\pi _d^\prime (\textbf{x},\varvec{\theta })=1\), which implies a \(100\%\) probability of detecting targets within the FOV. Other options include geometric models which adjust the likelihood based on the position within the FOV, or physics-based models which use basic principles to model the detection likelihood. Since the purpose of this paper is to provide a methodological framework rather than to provide realistic simulations, the detection likelihood function used in this paper is a toy geometric model with conical FOV constraints

where \(\gamma ^*\) is the FOV half-angle, \(|\rho _s|\) is a scale distance, \(\varvec{\rho }=\textbf{x}_t-\textbf{x}_{ob}\) is the range vector, and \(\gamma\) is the angle between attitude vector and range vector. Angle \(\gamma\) can be computed by

A schematic of the geometry between the observer FOV, target, and detection likelihood function is shown in Fig. 5. The problem now becomes how to evaluate the expected detection likelihood, i.e. cost function.

Due to the FOV constraints imposed on the detection probability function and the generic nonlinear pdf \(\pi (\textbf{x})\), evaluation via quadrature methods is not a practical option. Thus, an MC evaluation is necessary. Typically this would require the explicit propagation of random samples through the dynamics; however, the polynomial model computed in the previous section enables rapid and accurate approximation of many thousands of samples to represent \(\pi (\textbf{x})\). If N samples \(\varvec{\zeta }^{(i)}\in \pi (\varvec{\zeta })\) are randomly drawn from the normalized input distribution and assigned equal weights \(w^{(i)}=\frac{1}{N}\), then the output state is approximated using the reachability coefficients

and the reachability set can be approximated as a finite sum

where \(\delta (\cdot )\) is the Dirac delta function. Substituting (40) into (5) yields the MC approximated cost function

In practice, the objective function is maximized by performing a grid search of \(\varvec{\theta }\) and selecting the attitude which maximizes \(J_d\). Due to the axial symmetry of the conic FOV and detection probability, only two attitude angles are required to describe the observer attitude. Note that there is a tradeoff here between computational efficiency and the optimality of the grid search.

An adaptive grid is used to avoid unnecessary computational costs. The grid is defined at each timestep by

where \(\varvec{\Phi }\) is the grid of angles, and \(\theta\) and \(\phi\) are the azimuth and colatitude angles of the reachability samples, respectively. In this manner, the minimum and maximum azimuth and colatitude angles of the current target reachability set bound the grid search, and nodes are placed in increments of the FOV half-angle rather than searching over all possible angles. After the optimal sensor parameters are selected, a measurement is taken and must be used to update the reachability set.

Note that in a generic multi-target multi-sensor tasking problem, an information metric may be used to select sensor parameters. An example of this is the mutual information approach taken in Ref [1]. The following section discusses filtering measurements and estimating the target state and maneuver sequence using a particle filtering framework.
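The adaptive grid search can be sketched as follows. This is a simplified illustration rather than the implementation used in this work: it uses the simplistic likelihood \(\pi _d^\prime =1\) inside the cone instead of the toy geometric model, it assumes the usual spherical-coordinate convention for the attitude vector, and it ignores azimuth wrap-around at \(\pm \pi\). All function names are hypothetical.

```python
import numpy as np

def attitude_vector(az, colat):
    """Unit vector for azimuth `az` and colatitude `colat` (assumed convention)."""
    return np.array([np.sin(colat) * np.cos(az),
                     np.sin(colat) * np.sin(az),
                     np.cos(colat)])

def expected_detection(rho, w, a_hat, half_angle):
    """MC cost: total weight of particles inside the cone (pi_d' = 1 in the FOV)."""
    cos_gamma = (rho @ a_hat) / np.linalg.norm(rho, axis=1)
    gamma = np.arccos(np.clip(cos_gamma, -1.0, 1.0))
    return np.sum(w[gamma <= half_angle])

def task_sensor(rho, w, half_angle):
    """Grid search over attitudes bounded by the angular extent of the particles."""
    az = np.arctan2(rho[:, 1], rho[:, 0])
    colat = np.arccos(np.clip(rho[:, 2] / np.linalg.norm(rho, axis=1), -1.0, 1.0))
    # Nodes spaced by the FOV half-angle between the min and max sample angles.
    az_grid = np.arange(az.min(), az.max() + half_angle, half_angle)
    colat_grid = np.arange(colat.min(), colat.max() + half_angle, half_angle)
    best = (-1.0, None)
    for th in az_grid:
        for ph in colat_grid:
            J = expected_detection(rho, w, attitude_vector(th, ph), half_angle)
            if J > best[0]:
                best = (J, (th, ph))
    return best

# Hypothetical particle cloud: range vectors clustered about 1000 km along x.
rng = np.random.default_rng(0)
rho = np.column_stack([np.full(100, 1000.0),
                       rng.uniform(-10, 10, 100),
                       rng.uniform(-10, 10, 100)])
w = np.full(100, 0.01)
J_best, theta_best = task_sensor(rho, w, np.deg2rad(7.5))
```

Here `rho` holds the range vectors from the observer to each particle, so the cost of one grid node is a single matrix-vector product over all particles.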

5 Filtering and Estimation

The traditional filtering problem involves fusion of target measurement data with propagated state uncertainty to most accurately describe the posterior target distribution. In contrast, the problem considered here is to use propagated state uncertainty to task sensors to detect the target. If the target is not detected, observations of vacant regions of the search area are used to update the search area at future times; however, if the target is detected, the objective is synonymous with the traditional filtering problem. Here, the prior and posterior update states will be denoted \(\textbf{x}^-\) and \(\textbf{x}^+\) respectively.

In data-rich applications, linearization-based filters such as the Extended Kalman Filter (EKF) are frequently sufficient to approximate nonlinear systems. The state can often be approximated as Gaussian in these applications; however, linear assumptions quickly break down in data-sparse applications due to the large interval between measurements. As a result, a particle filtering framework will be used to consider full density estimation of the target pdf. Particle filters are a subset of sequential Monte Carlo (SMC) techniques, a popular class of methods which approximate a full pdf \(\pi (\textbf{x})\) as an ensemble of discrete samples and are well suited for this purpose. A thorough introduction to SMC methods can be found in references [9, 38, 40]. Given the MC ensemble representation of the prior in (40) and the sensor parameters \(\varvec{\theta }\) computed via the maximum likelihood procedure of Sect. 4, a measurement \(\tilde{\textbf{y}}\) is taken. The prior pdf \(\pi (\textbf{x}^-)\) can be updated to the posterior pdf \(\pi (\textbf{x}^+)\) using the process of Bayesian inference. Bayes rule can be written as

where \(\pi (\tilde{\textbf{y}}|\textbf{x}^-)\) is the measurement likelihood function. Notice that the denominator of Bayes rule is simply a normalization factor, so the update can instead be written as a proportionality

Substituting (40) into (44), the measurement update can be written as a point-wise weight update

where \(\hat{w}^{(i)+}\) is an intermediate weight proportional to the prior weight. Recognizing that the denominator of Bayes rule is given by \(\sum \hat{w}^{(i)+}\), the posterior weights are given by

The prior samples are now shifted to the posterior samples \(\varvec{\zeta }^{(i)+}=\varvec{\zeta }^{(i)-}\) and can be propagated to the next timestep.

The measurement likelihood function \(\pi (\tilde{\textbf{y}}|\textbf{x}^{(i)-})\) plays an important role in the proposed search procedure and is closely related to the detection likelihood function. For simulation purposes, a random number is drawn on the interval [0, 1] and if it is less than the detection likelihood of the true target state \(\pi _d(\textbf{x}^*_t,\varvec{\theta })\), the target is considered to have been located. If the target is detected, then the measurement likelihood is defined using zero mean Gaussian sensor noise \(\mathcal {N}(0,\textbf{R})\) associated with the measurement model (6)

however, if the target is not detected, the measurement likelihood is defined to be the complement of the detection probability

In this manner, samples inside the observer FOV will have their weight reduced proportional to the detection likelihood if the target is not detected by measurement \(\tilde{\textbf{y}}\).
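The point-wise weight update for a missed detection can be sketched as below. The function names and the four-particle example are hypothetical; the detection probabilities would come from the detection likelihood function evaluated at each particle.

```python
import numpy as np

def update_weights(w_prior, likelihood):
    """Point-wise Bayes update: scale prior weights by the likelihood, then normalize."""
    w_hat = w_prior * likelihood
    total = w_hat.sum()
    if total == 0.0:
        # Every likelihood is numerically zero, so the update is undefined.
        raise FloatingPointError("all particle likelihoods are zero")
    return w_hat / total

def nondetection_likelihood(p_detect):
    """Likelihood of a missed detection: complement of the detection probability."""
    return 1.0 - p_detect

# Four equally weighted particles; only the first lies inside the FOV.
w_prior = np.full(4, 0.25)
p_detect = np.array([0.9, 0.0, 0.0, 0.0])
w_post = update_weights(w_prior, nondetection_likelihood(p_detect))
```

In this example the particle inside the FOV keeps only a tenth of its unnormalized weight, and the normalization redistributes the removed probability mass to the unobserved particles.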

Notice that any particle states with very low measurement likelihood \(\pi (\tilde{\textbf{y}}|\textbf{x}^{(i)-})\) will have a posterior weight \(w^{(i)+}\) near zero. In this situation, the particle \(\textbf{x}^{(i)+}\) contributes very little to the approximation of the posterior, and as the particle filter update is repeated over multiple timesteps, the weight becomes concentrated in very few or even a single particle. This phenomenon is known as sample impoverishment or particle degeneracy and leads to a poor approximation of the target pdf. Resampling is an effective way to alleviate this issue. Ref [8] provides a concise summary and comparison of several popular resampling algorithms. The systematic resampling algorithm will be used in this paper for its efficiency and easy implementation. Note that since the system is propagated using the HOSM method, the normalized input samples \(\varvec{\zeta }^{(i)}\) are redrawn from \(\pi (\varvec{\zeta }^+)\) rather than directly from \(\pi (\textbf{x}^+)\). Typically, a resampling condition is used to avoid costly resampling procedures at every timestep. Ref [30] characterizes this resampling condition using the Effective Sample Size (ESS) criterion

The resampling condition is to resample only when ESS is less than a specific threshold \(N_t\). The threshold \(N_t=N/2\) is used in implementation of this paper.
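A minimal sketch of the resampling condition and systematic resampling is given below. It assumes the common definition \(ESS=1/\sum _i (w^{(i)})^2\) for normalized weights, which may differ in form from the criterion in Ref [30], and the function names and example data are hypothetical.

```python
import numpy as np

def effective_sample_size(w):
    """ESS = 1 / sum(w_i^2) for normalized weights (assumed definition)."""
    return 1.0 / np.sum(w**2)

def systematic_resample(w, rng):
    """Return indices of the particles selected by systematic resampling."""
    n = len(w)
    # One random offset, then n evenly spaced positions in [0, 1).
    positions = (rng.random() + np.arange(n)) / n
    cumulative = np.cumsum(w)
    cumulative[-1] = 1.0  # guard against round-off in the cumulative sum
    return np.searchsorted(cumulative, positions)

rng = np.random.default_rng(0)
zeta = rng.uniform(-1.0, 1.0, size=(4, 4))   # hypothetical input-space particles
w = np.array([0.7, 0.1, 0.1, 0.1])

if effective_sample_size(w) < len(w) / 2:     # threshold N_t = N/2
    idx = systematic_resample(w, rng)
    zeta = zeta[idx]                          # resample in the input space
    w = np.full(len(w), 1.0 / len(w))         # reset to equal weights
```

Systematic resampling uses a single random draw per resampling step, which is one reason it is inexpensive compared with multinomial resampling.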

Although resampling is an effective method for preventing particle degeneracy over multiple timesteps, if the prior distribution is very diffuse with respect to the likelihood function, problems with particle degeneracy may arise at a single timestep. This is frequently the case in the current application when a target with a large reachability set is detected by an observer with low sensor noise. For example, if a sensor has a range measurement with noise standard deviation on the order of meters, and the closest particle in the reachability set has a range on the order of kilometers from the measured range, then the closest particle is thousands of standard deviations away from the expected measurement. The likelihood of such a particle is numerically zero, and thus, all of the particle weights will be set to zero and the filter will become singular.

This phenomenon is well understood in particle filters, and several methods have been developed to alleviate this practical implementation issue. This paper uses the importance sampling with progressive correction (ISPC) technique outlined in Ref [35] to update the reachability set when the target is detected. The idea behind ISPC is to include an expansion factor \(\lambda _k\) in the likelihood function, and iteratively resample intermediate posterior distributions until they converge to the true posterior. The modified likelihood function is given by

such that for \(\lambda _k>1\) the standard deviation of the likelihood function is artificially inflated. Ref [35] provides an adaptive choice for parameter \(\lambda _k\) given by

where \(\delta _{max}\) is a tunable parameter which influences the size of the progressive correction steps.

The final step in the maneuvering satellite search procedure is to estimate the target state and maneuver sequence. In fact, the HOSM method framework unifies the estimation of the target state and maneuver sequence into the same procedure. Since the samples in the maneuver space \(\varvec{\zeta }^{(i)}\) are mapped to samples in the state space \(\textbf{x}^{(i)}\) by the polynomial approximation (39), estimates can be computed simultaneously using

Note that during the search phase, the point estimate of the target given above may be a poor estimate of the true target location due to the very diffuse target pdf. Typically, a point estimate of the mean target state only becomes meaningful after the first target detection, when the pdf contracts to the level of sensor noise around the measurement. Additionally, it is important to note that since measurements are taken in the \(\textbf{x}\) space, the reliability of maneuver estimates in the \(\varvec{\zeta }\) space is highly dependent on the quality of the polynomial approximation (39). This will be discussed further in Sect. 6. The Bayesian particle filtering method can be summarized as follows

1. Initialize Particles and Quadrature Points: Randomly sample N reachability set particles from the normalized maneuver distribution: \(\varvec{\zeta }_{rs}^{(i)}\in \pi (\varvec{\zeta })\) and assign equal weights \(w^{(i)}=\frac{1}{N}\), and initialize quadrature points computed via non-product quadrature method: \(\varvec{\zeta }_q^{(i)}\)

2. Propagate: Directly propagate quadrature points: \(\textbf{x}_{q,k+1}^{(i)}=\varvec{\chi }(\varvec{\zeta }_q^{(i)},\textbf{z}_{ob},k+1)\) to the next measurement at \(t_{k+1}\). Use \(\textbf{x}_{q,k+1}^{(i)}\) to evaluate polynomial model coefficients via (13). Propagate reachability samples to \(t_{k+1}\) using the polynomial approximation: \(\textbf{x}_{rs,k+1}^{(i)}\approx \textbf{C}_{k+1}\varvec{\phi }(\varvec{\zeta }_{rs}^{(i)})\). Since the quadrature points are designed to be minimal, approximating \(\textbf{x}_{rs,k+1}^{(i)}\) in this manner can offer orders of magnitude of computational savings.

3. Determine Sensor Parameters: Create a grid of sensor parameters \(\varvec{\Phi }\). For each parameter combination, evaluate the cost function (41) using reachability samples \(\textbf{x}^{(i)}_{rs,k+1}\) and select the parameters which maximize detection likelihood \(\varvec{\theta }^*={{\,\mathrm{arg\,max}\,}}_{\Phi }\left[ J_d(\textbf{x},\varvec{\Phi })\right]\)

4. Reachability Set Update: Update the reachability set particle weights using (45) and (46). If the target is detected, use likelihood function (47); if the target is not detected, use likelihood function (48).

5. Resample: If the target is detected for the first time, resample using the ISPC algorithm; otherwise, if the resampling criterion \(ESS<\frac{N}{2}\) is satisfied, resample \(\varvec{\zeta }_{rs}^{(i)}\) using the systematic resampling algorithm.

6. Estimate State and Maneuver: Compute the current target state and maneuver estimates using (52). If desired, covariance estimates can be computed as well. Keep in mind that the estimate covariance will be very large if the reachability set is still being searched. Return to step 2.

The above particle filtering framework in conjunction with maximum detection likelihood sensor tasking and the HOSM method enables a feasible framework for propagating and searching the reachability set of a noncooperative target satellite. Figure 6 summarizes each component of the proposed method, and the following section will present numerical simulations and discuss results.
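The propagation step (step 2) can be illustrated in one dimension. The sketch below assumes a scalar input \(\zeta \in \mathcal {U}(-1,1)\), a Legendre polynomial basis, and a Gauss-Legendre rule in place of the non-product sets; the function names and the stand-in model `f` are hypothetical, and the coefficient formula is a standard orthogonal projection that may differ in form from (13).

```python
import numpy as np
from numpy.polynomial import legendre

def fit_coefficients(f, order, n_quad):
    """Project f(zeta), zeta ~ U(-1,1), onto Legendre polynomials by quadrature."""
    nodes, weights = legendre.leggauss(n_quad)
    weights = weights / 2.0              # weights with respect to the U(-1,1) pdf
    fq = f(nodes)                        # the only evaluations of the full model
    coeffs = np.empty(order + 1)
    for j in range(order + 1):
        Pj = legendre.legval(nodes, np.eye(order + 1)[j])
        # Under U(-1,1), E[P_j^2] = 1 / (2j + 1).
        coeffs[j] = np.sum(weights * fq * Pj) * (2 * j + 1)
    return coeffs

def surrogate(coeffs, zeta):
    """Evaluate the polynomial model at arbitrary samples."""
    return legendre.legval(zeta, coeffs)

# Stand-in for an expensive propagation; a quadratic so the fit is exact.
f = lambda z: 1.0 + 2.0 * z + 3.0 * z**2
c = fit_coefficients(f, order=2, n_quad=3)

rng = np.random.default_rng(0)
samples = rng.uniform(-1.0, 1.0, 10_000)
x_rs = surrogate(c, samples)             # 10,000 samples from 3 model evaluations
```

The product of the model and a basis function of order D has degree up to 2D when the model is itself well approximated by an order-D polynomial, which is consistent with pairing quadrature order \(d=2D\) with model order D in Sect. 6.2.1.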

6 Numerical Results

This section validates the mixed-distribution quadrature method described in Sect. 3.3 used to propagate reachability sets, as well as provides simulation results for two examples of the full noncooperative satellite search method. The reachability set validation method provides a comparison of sets propagated using a tensor product method and using the DQ method. The two simulation test cases are 1) a single maneuver case, and 2) a two maneuver case. The first test case studies the effect of different order polynomial approximations on the overall performance of the developed framework.

The quadrature method validation section propagates the reachability set for a target initially in an inclined LEO orbit, whereas both test cases consider a target initially in a slightly eccentric and slightly inclined Geosynchronous Earth Orbit (GEO). Additional test cases of the full search method, including a LEO orbit case, can be found in [16, 17]. The model \(\textbf{f}(\textbf{x},t)\) used for all results is the nonlinear \(J_2\)-perturbed relative equations of motion defined in [44]. The equations of motion for the dynamic model are

where \(\omega _i\) terms are angular velocity of the rotating frame, \(\alpha _i\) terms are the angular acceleration of the rotating frame, and \(\zeta\),\(\eta\) are constants related to the \(J_2\) acceleration. The coordinate frame for this model is the relative RSW frame depicted in Fig. 9. The RSW coordinate frame is defined by \(\hat{R}\) in the radial direction, \(\hat{S}\) in the in-track direction and \(\hat{W}\) in the orbit normal direction. The origin of the RSW frame is located at the nominal orbit of the target at \(t_0\). The following section will provide a comparison of reachability sets computed with competing methods in the RSW frame with dynamic model \(\textbf{f}(\textbf{x},t)\).

6.1 Comparison of Quadrature Methods

Consider the case of a single unknown maneuver magnitude and unknown maneuver time, with input vector \(\textbf{z}_t=[\textbf{u}_{t,1},t_1]\). Two quadrature sets which match up to 5th order moments for this input were computed in Sect. 3.3. The first is a \((45\times 4)\) matrix \(\textbf{X}_{tens}\) computed via tensor product of spherically uniform CUT points and uniform Gauss-Legendre points, and the second is a \((21\times 4)\) matrix \(\textbf{X}_{DQ}\) constructed via the DQ algorithm.

The target is initially in an inclined LEO orbit with orbital elements given in Table 5, and is assumed to make a maneuver with magnitude bounded by 5 m/s, \(\textbf{u}_{t,1}\in \mathcal {U}_s(0,5m/s)\), at a bounded maneuver time \(t_1\in \mathcal {U}(0,P/2)\), where P is the orbital period \((P=5309.6 s)\).

Reachability set coefficients with respect to a second order polynomial basis are computed in intervals of 30 seconds throughout the first orbital period using each quadrature set \(\textbf{X}_{tens}\) and \(\textbf{X}_{DQ}\). 10,000 random Monte Carlo samples are then drawn from the input vector \(\varvec{\zeta }_{mc}^{(i)}\) and exactly integrated through the dynamic model to target states \(\textbf{x}_{mc,k}^{(i)}=\varvec{\chi }(\varvec{\zeta }_{mc}^{(i)},\textbf{z}_{ob},k)\) in the same 30 second intervals. The error between the exact MC sample evaluations and the polynomial approximation is computed for each quadrature method.

The norm of position and velocity errors, \(||\epsilon _r||_2,\ ||\epsilon _v||_2\) for each sample are computed and the RMS error value is computed over all samples

The RMS error of position and velocity is plotted for each method vs. time in Fig. 7.
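The error metric can be sketched as follows, assuming the RMS is taken over samples of the per-sample 2-norm errors. The function name and the two-sample example are hypothetical.

```python
import numpy as np

def rms_error(x_exact, x_approx):
    """RMS over samples of the 2-norm of the per-sample error vectors."""
    err_norm = np.linalg.norm(x_exact - x_approx, axis=1)
    return np.sqrt(np.mean(err_norm**2))

# Two samples with position errors of norm 5 and 0.
x_exact = np.zeros((2, 3))
x_approx = np.array([[3.0, 4.0, 0.0],
                     [0.0, 0.0, 0.0]])
rms_r = rms_error(x_exact, x_approx)  # sqrt((25 + 0) / 2)
```

Applying the same function to the position and velocity components separately gives the two curves compared for each quadrature set.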

The errors for the DQ method and the tensor product method are nearly identical throughout the entirety of the simulation. This result demonstrates that although the non-product DQ method reduces the number of model evaluations by \(53\%\) compared to the product method (\(N=21\) vs. \(N=45\)), there is no loss in accuracy. This is because both sets satisfy MCEs of the same order, regardless of the number of points used.

Figure 8 shows a position scatter plot of all 10,000 MC samples \(\textbf{x}_{mc}^{(i)}\) at the final timestep, and the colorbar denotes the distribution of position errors throughout the reachability set. Although this error analysis is only shown for a 2nd order basis, examples in [17] show that increasing the basis function order can significantly decrease the error in reachability set approximation. This fact is reflected in the following sections in the accuracy of target state and maneuver estimates for varying polynomial basis order. It has been demonstrated that the non-product DQ quadrature method has equivalent accuracy to a tensor product method, with significantly improved efficiency. The following sections demonstrate that these efficient non-product quadrature methods can be used to enable the noncooperative maneuvering satellite search method.

6.2 Search Method Test Cases

This section describes the simulation parameters for the noncooperative satellite search method test cases and provides numerical results and analysis of each. Both test cases consider the observer to be a space-based satellite with the same dynamic equations of motion as the target. The observer is prescribed range \(|\varvec{\rho }|\), range-rate \(|\varvec{\dot{\rho }}|\), azimuth \(\hat{\theta }_1\), and colatitude \(\hat{\theta }_2\) measurements given by

where all relative position and velocity coordinates are given in the RSW frame. The sensor parameters \(\varvec{\theta }=[\theta _1\ \theta _2]^T\) specify the observer attitude, which can be converted into a unit vector \(\hat{\textbf{a}}\) given by

such that \(\gamma\) is the angle between \(\hat{\textbf{a}}\) and \(\varvec{\rho }\) and can be computed using (38).

The measurements are assigned Gaussian noise with covariance matrix \(\textbf{R}=diag([25m,0.1m/s,0.1^\circ ,0.1^\circ ]^2)\). The observer is assigned a FOV with half angle \(\gamma ^*=7.5^\circ\) and a scale distance \(|\rho _s|=1000km\) for computing detection probability.
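A measurement model of this type can be sketched as below. The expressions are the standard spherical-coordinate definitions of range, range-rate, azimuth, and colatitude and are an assumption here, since the exact form of the measurement equations may differ; the function name `measure` is hypothetical. The angular noise levels are converted to radians before forming \(\textbf{R}\).

```python
import numpy as np

def measure(r_rel, v_rel):
    """Range, range-rate, azimuth, colatitude of a relative state (assumed model)."""
    rho = np.linalg.norm(r_rel)
    rho_dot = r_rel @ v_rel / rho             # projection of velocity on line of sight
    az = np.arctan2(r_rel[1], r_rel[0])
    colat = np.arccos(r_rel[2] / rho)
    return np.array([rho, rho_dot, az, colat])

# Noise standard deviations: 25 m, 0.1 m/s, 0.1 deg, 0.1 deg.
sigmas = np.array([25.0, 0.1, np.deg2rad(0.1), np.deg2rad(0.1)])
R = np.diag(sigmas**2)

# Relative position (m) and velocity (m/s) in the RSW frame, chosen for illustration.
y = measure(np.array([1000.0, 0.0, 0.0]), np.array([1.0, 2.0, 3.0]))
```

Mixing units in \(\textbf{R}\) is harmless as long as each residual is expressed in the same unit as its standard deviation.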

Note that the outcome of the simulation is dependent on the overall observability of the reachability set with respect to the observer detection zone. If the volume of the observer FOV is significantly less than the volume of the reachability set, then the observer may never be able to locate the target within the set; and conversely, if the observer FOV is larger than the reachable set, then the target will be detected within the first few timesteps. The parameters selected here correspond to a sensor with long range, but narrow FOV, and were chosen for moderate observability to demonstrate a range of outcomes.

Assume that half an orbital period elapses without observing the target such that maneuver time is defined as uniformly distributed between 0 and 12 hours, i.e. \(t_i\in \mathcal {U}(0hr,12hr)\). Measurements are taken every 5 minutes (\(\Delta t=300 sec\)) starting from \(t=12 hr\) through the end of the first orbital period \(t_f=24 hr\). Also assume it is known that the target has a maximum maneuver capability of 5m/s such that unknown maneuvers are spherically uniform distributions \(\textbf{u}_{t,i}\in \mathcal {U}_s(0,5m/s)\). The nominal target satellite orbit at \(t=0\) is given by the orbital parameters and corresponding Earth Centered Inertial (ECI) coordinate state vector given in Tables 6 and 7.

6.2.1 Test Case 1: Single Maneuver

This section will present the results for the single unknown maneuver test case. For this test case, the random target input vector is given by

The nominal observer orbit at the start of the search \(t=12hr\) is given by the relative RSW coordinate state vector given in Table 8.

Quadrature sets which satisfy moment constraint equations up to \(d=4,6,8,10\) order are computed and used to evaluate reachability set coefficients for polynomial models of \(D=2,3,4,5\) order respectively. Refer to Sect. 3 for details on the computation of quadrature sets. The number of quadrature points and basis functions required for each model is given in Table 9. Once reachability set coefficients are computed, 10,000 samples \(\varvec{\zeta }^{(i)}\) are drawn and evaluated using the polynomial model. The position of these samples in RSW coordinates at \(t=12hr\) is shown in Fig. 10 for the 5th order polynomial model, where the colorbar depicts the maneuver time for a given sample.

Consider a single realization of the true target maneuver \(\textbf{u}_{t,1}^*\) and maneuver time \(t_1^*\) given by

Figure 11 depicts the evolution of the target pdf in the initial target orbital plane (\({\hat{R}},{\hat{S}}\)) using a 5th order polynomial basis in 1 hour intervals. A subset of 100 samples is randomly selected and plotted over the contours of Fig. 11. The initial observer state and true target maneuver were specifically selected for this test case to demonstrate a few key takeaways. First and foremost, notice that the true target state is captured within the contours of the pdf at all times. The pdf contours represent all possible states and maneuver histories that could explain the measurements up to the current time. Secondly, the geometry of the observer and the reachability set means that the target will not be in the observer’s FOV when initially searching the most likely region for the target (near the origin). This is done to demonstrate how the contours of the target pdf become more concentrated around the true target state as vacant regions of the reachability set are searched, and by 17 hours into the simulation the true target location is centered in the most probable region of the reachability set. The largest reduction in uncertainty during the search procedure is, by far, at the time of first detection \(t_d\). Therefore, \(t_d\), as well as state and maneuver estimate errors, are important performance metrics. The detection time for the 5th order case is 18 hours and 25 minutes.

The state and maneuver estimates at both the time of first detection \(t_d\) as well as the final simulation time \(t_f\) are computed for all polynomial models using (52). The target position and velocity estimate errors \(\epsilon _r\) and \(\epsilon _v\) respectively, are defined as

where \(\textbf{r}_0\) and \(\textbf{v}_0\) are used to normalize the errors by the initial Cartesian position and velocity. The maneuver estimate errors are given by

These errors are tabulated for the initial detection time as well as the final time \(t_f=24 hrs\) in Tables 10 and 11, respectively. Generally speaking, the error values for both the target state and maneuver estimate appear to decrease as the order of the polynomial model increases. There are some subtle numerical reasons why the state and maneuver estimate errors for this particular realization do not strictly decrease as polynomial order increases. One reason is that at \(t_f\) the state estimate is largely a function of the assumed measurement error, not the reachability set error. A reason that the estimate errors do not correlate entirely with polynomial order at detection time \(t_d\) may simply be random chance that certain order polynomials approximate the reachability set better at this particular input realization. In other words, on average over the entire reachability set higher order polynomials will approximate the set more accurately; however, there will always be locations in the set where a low order polynomial approximates the set better than a high order polynomial purely by chance. To this end, examining these results too closely will not provide a full picture, as a single realization of the true target maneuver does not encompass the full domain of the reachability set.

Rather than considering a single realization of the true target maneuver, now consider the full simulation run 250 times with a new true target maneuver randomly sampled for each simulation. The simulation is run using the same 250 realizations for all polynomial models. Figure 12 shows histograms of the time of first detection \(t_d\) for varying polynomial model orders. From Fig. 12, it can be observed that the target is detected quickly in the majority of the simulations; however, some target maneuvers cause the target to remain undetected for longer. The simulations are run long enough that \(100\%\) of targets are detected; however, increasing the polynomial order slightly reduces the time required to locate the target in these extreme cases. The target was detected in \(95\%\) of simulations by \(t=20.17\)hr for the model order \(D=2\), \(t=19.58\)hr for \(D=3\), \(t=19.17\)hr for \(D=4\), and \(t=18.58\)hr for \(D=5\).

Figures 13 and 14 show histograms of log estimate error in maneuver magnitude \(\epsilon _u\) and maneuver time \(\epsilon _t\), respectively, for varying polynomial model orders. It can be readily seen that the maneuver magnitude and maneuver time estimate errors are reduced by increasing polynomial order. Since detection time improves only very modestly with increased polynomial order, selection of the polynomial order to be used is dependent on the application considered. If the only requirement is to locate the target, perhaps a low order reachability set can be used to define the reachable space more efficiently; however, if maneuver reconstruction is required, higher accuracy reachability sets may be necessary to accurately estimate the maneuver. Table 12 summarizes the results of the 250 simulations by giving the mean error of the target state and maneuver estimates at the final time along with \(\pm 1\sigma\) bounds.

6.2.2 Test Case 2: Two Maneuvers

This section will present the results for a case with two unknown maneuvers. For this test case, the random target input vector is given by

where maneuver \(\textbf{u}_{t,i}\) and maneuver time \(t_i\) distributions are defined identically to those in test case 1. The main difference in this test case is the dimension of the input and the total \(\Delta V\) the target is allowed to make. Due to the curse of dimensionality, the number of points required to evaluate the reachability coefficients greatly exceeds that required for a 4-D input. In fact, 6th and 8th order quadrature points could not be found directly in eight dimensional space using the DQ algorithm, so a tensor product of lower dimensional sets had to be used as described in Sect. 3.3. Table 13 lists the number of basis functions and number of quadrature points required to compute reachability sets for varying polynomial model order.
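To make the growth with input dimension concrete, the sketch below counts basis functions and forms a tensor product of two lower dimensional quadrature rules. It is an illustration under stated assumptions rather than the procedure of Sect. 3.3: a total-degree polynomial basis is assumed, so the count is \(\binom{n+D}{D}\) for \(n\) inputs and order \(D\), and the function names and the two-point toy rule are hypothetical. The values it prints are not a substitute for Table 13.

```python
import itertools
import math


def num_basis_functions(n_inputs, order):
    """Number of monomials of total degree <= order in n_inputs variables.

    Assumes a total-degree basis, which gives C(n_inputs + order, order).
    """
    return math.comb(n_inputs + order, order)


def tensor_product_rule(rule_a, rule_b):
    """Combine two quadrature rules given as lists of (point_tuple, weight).

    Points are concatenated and weights multiplied, so the size of the
    combined rule is the product of the sizes of the two input rules.
    """
    return [(pa + pb, wa * wb)
            for (pa, wa), (pb, wb) in itertools.product(rule_a, rule_b)]


# Basis size for a 4-D input (one maneuver) versus an 8-D input (two maneuvers).
for order in (2, 3, 4, 5):
    print(order, num_basis_functions(4, order), num_basis_functions(8, order))

# Toy check: two-point Gauss-Legendre rule for a uniform density on [-1, 1],
# combined with itself to integrate over the square [-1, 1] x [-1, 1].
g = 1.0 / math.sqrt(3.0)
rule_1d = [((-g,), 0.5), ((g,), 0.5)]
rule_2d = tensor_product_rule(rule_1d, rule_1d)
# Expectation of x^2 * y^2 under the uniform density; the exact value is 1/9.
second_moment = sum(w * x * x * y * y for (x, y), w in rule_2d)
```

The tensor product is simple to construct, but its size is the product of the sizes of its factors, which is why it is used only when a direct non-product rule cannot be found.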

For brevity, only the results for the 4th order polynomial model will be shown. Figure 16 depicts the target reachability set in RSW coordinates using a 4th order polynomial model, where the colorbar indicates the average maneuver time. As expected, the larger total \(\Delta V\) available to the target makes the physical size of the two maneuver reachability set considerably larger than the single maneuver set shown in Fig. 10. The evolution of the pdf for a single realization of the two maneuvers is shown in Fig. 15. The detection time for this realization was 15 hr 45 min.

The 4th order polynomial model is used to run 250 full simulations with a new true target maneuver sequence randomly sampled for each iteration. The error in estimated maneuver magnitude \(\epsilon _u\) and maneuver time \(\epsilon _t\) for both unknown maneuvers at the final simulation time is given in Fig. 17. It is apparent from Fig. 17 that the maneuver estimates have greater error than the respective 4th order models in the single maneuver test case (Figs. 13, 14). This is to be expected for two reasons. First, the physically larger size of the reachability set will cause errors in the polynomial approximation to increase, thereby increasing error in the mapping between maneuvers and target states. Second, the second maneuver creates the possibility for multiple maneuver sequences to achieve the same target trajectory. In this situation, it is impossible for the particle filter to disambiguate between possible maneuver histories, and the filter may converge around an incorrect solution.
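As a hedged illustration of this ambiguity, consider a deliberately simplified model that is not the orbital dynamics used in this paper: force-free one-dimensional motion starting at rest at the origin, with impulses \(\Delta v_1\) at time \(t_1\) and \(\Delta v_2\) at time \(t_2 \ge t_1\). For \(t \ge t_2\) the state is

\[
v(t) = \Delta v_1 + \Delta v_2, \qquad
x(t) = \Delta v_1 (t - t_1) + \Delta v_2 (t - t_2) = (\Delta v_1 + \Delta v_2)\, t - (\Delta v_1 t_1 + \Delta v_2 t_2).
\]

The post-maneuver trajectory therefore depends on the four maneuver parameters only through the two combinations \(\Delta v_1 + \Delta v_2\) and \(\Delta v_1 t_1 + \Delta v_2 t_2\). For instance, the sequences \((\Delta v_1, t_1, \Delta v_2, t_2) = (1, 1, 1, 3)\) and \((1.5, 1.5, 0.5, 3.5)\) both give \(x(t) = 2t - 4\) for \(t \ge 3.5\), so measurements taken after both maneuvers cannot distinguish between them in this toy model.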

Despite the increased error in maneuver estimate and larger search region, using the proposed method the observer satellite was able to locate the target satellite in \(96\%\) of the 250 simulations run (i.e., 10 unfound targets). There are two likely reasons why some targets were not detected in this simulation. First, the parameters of the observation zone and detection probability were kept the same as in the single maneuver case, and due to the larger search region, portions of the reachability set farther away from the observer could not be searched adequately. Second, the final simulation time was kept constant at 24 hr, so the observer may simply not have had enough time to find the target in extreme cases.

Simulations where the target was not detected were removed from state and maneuver estimate calculations. The errors in the position \(\epsilon _r\) and velocity \(\epsilon _v\) estimates at the final time are computed using the normalization given by (60). The average state and maneuver estimate errors at the final simulation time, plus or minus \(1\sigma\), are shown in Table 14. The average errors in both the maneuver and state estimates were higher for this case than the comparable 4th order estimate errors in test case 1. This is to be expected due to the higher dimensionality and larger physical size of the reachability set.

7 Conclusions

This paper presents a systematic search method for detecting a satellite that has been lost due to unknown maneuvers. A novel characterization of unknown maneuvers defines the target search space, and a maximum detection likelihood approach is used to task sensors. Sensor observations of vacant regions of the search space are used to reduce uncertainty in the target state and to inform future sensor tasking. In fact, the reachability set represents the set of all possible states and maneuver histories that can be explained by the measurements up to a given time. The true target state will always lie within this search space provided that the bounds on input uncertainty are not violated. The proposed method provides a unified framework for search, detection, tracking, and maneuver estimation of a noncooperative target.
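The interplay between tasking and vacant-region updates summarized above can be sketched with a generic particle-weight recursion. This is a minimal illustration under simplifying assumptions, not the filter developed in this paper: particles are scalar positions, the field of view is an interval, the detection probability `p_d` is constant inside the field of view, there are no false alarms, and a detection carries no measurement beyond the fact that the target is inside the field of view. All function names are hypothetical.

```python
def in_interval(position, fov):
    """True when a scalar particle position lies inside the (lo, hi) interval."""
    lo, hi = fov
    return lo <= position <= hi


def task_sensor(particles, weights, candidate_fovs, p_d, in_fov=in_interval):
    """Return the candidate FOV with the largest predicted detection probability.

    The predicted probability is p_d times the particle weight inside the FOV.
    """
    def detection_probability(fov):
        return p_d * sum(w for x, w in zip(particles, weights) if in_fov(x, fov))

    return max(candidate_fovs, key=detection_probability)


def update_weights(particles, weights, fov, p_d, detected, in_fov=in_interval):
    """Bayes update of the particle weights after the sensor looks at `fov`."""
    updated = []
    for x, w in zip(particles, weights):
        inside = in_fov(x, fov)
        if detected:
            # A detection is only possible for particles inside the FOV.
            likelihood = p_d if inside else 0.0
        else:
            # A miss down-weights particles inside the FOV by (1 - p_d).
            likelihood = (1.0 - p_d) if inside else 1.0
        updated.append(w * likelihood)
    total = sum(updated)
    if total == 0.0:
        raise ValueError("all particle weights vanished; check FOV and p_d")
    return [w / total for w in updated]


# Toy usage with four equally weighted particles and two candidate pointings.
particles = [0.0, 1.0, 2.0, 3.0]
weights = [0.25, 0.25, 0.25, 0.25]
candidate_fovs = [(-0.5, 1.5), (2.5, 3.5)]
best_fov = task_sensor(particles, weights, candidate_fovs, p_d=0.8)
new_weights = update_weights(particles, weights, best_fov, p_d=0.8, detected=False)
```

After a miss, probability mass shifts toward particles outside the searched region, which in turn changes the pointing selected at the next step.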

The applicability of the proposed method is largely dependent on the observability of the problem. Sensors with large FOV relative to the target reachability set will easily locate the target, whereas a sensor with small FOV relative to a physically large reachable set may only have limited observability. Generalization of the single-sensor single-target sensor tasking cases examined here to the generic sensor tasking problem as well as enabling active control of mobile sensors may prove beneficial for increasing observability of the reachability set.

Polynomial model order of the reachability set approximation is a flexible design parameter left to the discretion of the user. Higher order polynomial models offer better target state and maneuver estimation accuracy at the expense of increased computational cost. If the only objective is to locate the target, without regard for accurate maneuver reconstruction, using low order polynomials to model the reachable space can provide similar detection time performance. Additionally, increasing the assumed number of target maneuvers imposes a strongly prohibitive computational burden on the proposed method. Current research is focused on the computationally efficient extension of this approach to low-thrust continuous maneuvers.

Notes

In a generic multi-target multi-sensor scenario, this problem can be posed as \(\max _{\{\varvec{\Theta }\}}\ J_d(\textbf{X},\varvec{\Theta })\) where \(\varvec{\Theta }\) is the set of all possible sensor network configurations and \(\textbf{X}\) is the set of all system states over some finite time horizon.

References

Adurthi, N., Singla, P., Majji, M.: Mutual information based sensor tasking with applications to space situational awareness. J. Guid. Control. Dyn. 43(4), 767–789 (2020)

Adurthi, N., Singla, P., Singh, T.: Conjugate unscented transformation: applications to estimation and control. J. Dyn. Syst. Measur. Control 140(3), 030907 (2018)

Bar-Shalom, Y., Chang, K.C., Blom, H.A.: Tracking a maneuvering target using input estimation versus the interacting multiple model algorithm. IEEE Trans. Aerosp. Electron. Syst. 25(2), 296–300 (1989)

Blom, H.A., Bar-Shalom, Y.: The interacting multiple model algorithm for systems with Markovian switching coefficients. IEEE Trans. Autom. Control 33(8), 780–783 (1988)

Bogler, P.L.: Tracking a maneuvering target using input estimation. IEEE Trans. Aerosp. Electron. Syst. 3, 298–310 (1987)

Chan, Y., Hu, A., Plant, J.: A Kalman filter based tracking scheme with input estimation. IEEE Trans. Aerosp. Electron. Syst. 2, 237–244 (1979)

Chen, G., Chui, C.K.: A modified adaptive Kalman filter for real-time applications. IEEE Trans. Aerosp. Electron. Syst. 27(1), 149–154 (1991)

Douc, R., Cappé, O.: Comparison of resampling schemes for particle filtering. In: ISPA 2005. Proceedings of the 4th International Symposium on Image and Signal Processing and Analysis, 2005, pp. 64–69. IEEE (2005)

Doucet, A., Godsill, S., Andrieu, C.: On sequential Monte Carlo sampling methods for Bayesian filtering. Stat. Comput. 10(3), 197–208 (2000)

Doucet, A., Ristic, B.: Recursive state estimation for multiple switching models with unknown transition probabilities. IEEE Trans. Aerosp. Electron. Syst. 38(3), 1098–1104 (2002)

Gholson, N.H., Moose, R.L.: Maneuvering target tracking using adaptive state estimation. IEEE Trans. Aerosp. Electron. Syst. 3, 310–317 (1977)

Gustafsson, F.: The marginalized likelihood ratio test for detecting abrupt changes. IEEE Trans. Autom. Control 41(1), 66–78 (1996)

Hall, Z.: A probabilistic approach for uncertain lambert problem and reachability set calculations. Master’s thesis, The Pennsylvania State University (2018)

Hall, Z., Singla, P.: Higher order polynomial series expansion for uncertain Lambert problem. In: AAS Astrodynamics Specialist Conference (2018)

Hall, Z., Singla, P.: Higher-order sensitivity matrix method for probabilistic solution to uncertain lambert problem and reachability set problem. Celest. Mech. Dyn. Astron. 132(10), 1–36 (2020)

Hall, Z., Singla, P.: Reachability analysis based tracking: applications to non-cooperative space object tracking. In: Darema, F., Blasch, E., Ravela, S., Aved, A. (eds.) Dynamic Data Driven Applications Systems, pp. 200–207. Springer International Publishing, Cham (2020)

Hall, Z., Singla, P., Johnson, K.: A particle filtering approach to space-based maneuvering satellite location and estimation. In: AAS Astrodynamics Specialist Conference (2020)

Holzinger, M., Scheeres, D.: Applied reachability for space situational awareness and safety in spacecraft proximity operations. In: AIAA Guidance, Navigation, and Control Conference, p. 6096 (2009)

Holzinger, M.J., Scheeres, D.J., Alfriend, K.T.: Object correlation, maneuver detection, and characterization using control distance metrics. J. Guid. Control. Dyn. 35(4), 1312–1325 (2012)

Julier, S., Uhlmann, J.: Reduced sigma point filters for the propagation of means and covariances through nonlinear transformations. In: American Control Conference, 2002. Proceedings of the 2002, vol. 2, pp. 887–892. (2002). https://doi.org/10.1109/ACC.2002.1023128

Julier, S., Uhlmann, J.: Unscented filtering and nonlinear estimation. Proc. IEEE 92(3), 401–422 (2004)

Julier, S.J., Uhlmann, J.K., Durrant-Whyte, H.F.: A new approach for filtering nonlinear systems. In: Proceedings of the American Control Conference, pp. 1628–1632. Seattle, WA (1995)

Keshavarzzadeh, V., Kirby, R.M., Narayan, A.: Numerical integration in multiple dimensions with designed quadrature. SIAM J. Sci. Comput. 40(4), A2033–A2061 (2018). https://doi.org/10.1137/17m1137875

Li, X.R., Jilkov, V.P.: Survey of maneuvering target tracking: III. Measurement models. In: Signal and Data Processing of Small Targets 2001, vol. 4473, pp. 423–446. International Society for Optics and Photonics (2001)

Li, X.R., Jilkov, V.P.: Survey of maneuvering target tracking: IV. Decision-based methods. In: Signal and Data Processing of Small Targets 2002, vol. 4728, pp. 511–534. International Society for Optics and Photonics (2002)

Li, X.R., Jilkov, V.P.: Survey of maneuvering target tracking. Part I. Dynamic models. IEEE Trans. Aerosp. Electron. Syst. 39(4), 1333–1364 (2003)

Li, X.R., Jilkov, V.P.: A survey of maneuvering target tracking: approximation techniques for nonlinear filtering. In: Signal and Data Processing of Small Targets 2004, vol. 5428, pp. 537–550. International Society for Optics and Photonics (2004)

Li, X.R., Jilkov, V.P.: Survey of maneuvering target tracking. Part V. Multiple-model methods. IEEE Trans. Aerosp. Electron. Syst. 41(4), 1255–1321 (2005)

Li, X.R., Jilkov, V.P.: Survey of maneuvering target tracking. Part II: Motion models of ballistic and space targets. IEEE Trans. Aerosp. Electron. Syst. 46(1), 96–119 (2010)

Liu, J.S.: Monte Carlo Strategies in Scientific Computing. Springer Science & Business Media, Berlin (2008)

Lubey, D.P., Scheeres, D.J.: Identifying and estimating mismodeled dynamics via optimal control policies and distance metrics. J. Guid. Control. Dyn. 37(5), 1512–1523 (2014)

Mazor, E., Averbuch, A., Bar-Shalom, Y., Dayan, J.: Interacting multiple model methods in target tracking: a survey. IEEE Trans. Aerosp. Electron. Syst. 34(1), 103–123 (1998)

McAulay, R.J., Denlinger, E.: A decision–directed adaptive tracker. IEEE Trans. Aerosp. Electron. Syst. 9(2), 229–236 (1973)

Mook, D.J., Junkins, J.L.: Minimum model error estimation for poorly modeled dynamic systems. J. Guid. Control. Dyn. 11(3), 256–261 (1988)

Musso, C., Oudjane, N., Le Gland, F.: Improving regularised particle filters. In: Sequential Monte Carlo methods in practice, pp. 247–271. Springer, New York (2001)

Patera, R.P.: Space event detection method. J. Spacecr. Rocket. 45(3), 554–559 (2008)

Punithakumar, K., Kirubarajan, T., Sinha, A.: Multiple-model probability hypothesis density filter for tracking maneuvering targets. IEEE Trans. Aerosp. Electron. Syst. 44(1), 87–98 (2008)

Simon, D.: Optimal State Estimation: Kalman, \(H_\infty\), and Nonlinear Approaches. Wiley, New York (2006)

Singh, N., Horwood, J.T., Poore, A.B.: Space object maneuver detection via a joint optimal control and multiple hypothesis tracking approach. In: Proceedings of the 22nd AAS/AIAA Space Flight Mechanics Meeting, vol. 143, pp. 843–862. Univelt San Diego, CA (2012)

Smith, A.: Sequential Monte Carlo Methods in Practice. Springer Science & Business Media, New York (2013)

Stroud, A.H., Secrest, D.: Gaussian Quadrature Formulas. Prentice Hall, Englewood Cliffs, NJ (1966)

Surovik, D.A., Scheeres, D.J.: Adaptive reachability analysis to achieve mission objectives in strongly non-Keplerian systems. J. Guid. Control. Dyn. 38(3), 468–477 (2015)