Abstract

Background

Pharmaceuticals are usually granted a marketing authorisation on the basis of randomised controlled trials (RCTs). Occasionally the efficacy of a treatment is assessed without a randomised comparator group (either active or placebo).

Objective

To identify and develop a taxonomic account of economic modelling approaches for pharmaceuticals licensed without RCT data.

Methods

We searched PubMed, the websites of UK health technology assessment bodies and the International Society for Pharmacoeconomics and Outcomes Research Scientific Presentations Database for assessments of treatments granted a marketing authorisation by the US Food and Drug Administration or European Medicines Agency from January 1999 to May 2014 without RCT data (74 indications). The outcome of interest was the approach to modelling efficacy data.

Results

Fifty-one unique models were identified in 29 peer-reviewed articles, 30 health technology appraisals, and 15 International Society for Pharmacoeconomics and Outcomes Research abstracts concerning 30 indications (44 indications had not been modelled). We noted the high rate of non-submission to health technology assessment agencies (28/98). The majority of models (43/51) were based on ‘historical controls’—comparisons to previous meta-analysis or pooling of trials (5), individual trials (16), registries/case series (15), or expert opinion (7). Other approaches used the patient as their own control, performed threshold analysis, assumed time on treatment was added to overall survival, or performed cost-minimisation analysis.

Conclusions

There is considerable variation in the quality and approach of models constructed for drugs granted a marketing authorisation without an RCT. The most common approach is a naive comparison to historical data (using other trials/registry data as a control group), which has considerable scope for bias.


There exists no formal guidance for modelling treatments that achieved a marketing authorisation based only on uncontrolled clinical trial data.

Of treatments gaining a marketing authorisation in this manner, approximately half have been analysed in published models, with around a quarter of indications not submitted to UK health technology assessment bodies for review when requested.

Where models have been constructed, the most frequent approach is a naive comparison to a historical control without any adjustment for differences in patient population. This approach is open to bias should the patients be non-exchangeable.

1 Introduction

Treatments are usually granted a marketing authorisation on the basis of randomised controlled trials (RCTs), conducted against either a placebo or an active control [1]. This provides a basis for regulators to make decisions regarding the efficacy of interventions compared with the current standard of care [2]. This evidence may then be used to estimate the difference between the new treatment and the standard of care. Indirect treatment comparisons using a common comparator sometimes enable the comparison of the efficacy of treatments across different studies [3, 4].

Less commonly, treatments can be granted a marketing authorisation without a study containing a control arm. In a few cases, it may be apparent that the treatment is efficacious, for example, if all patients died before an intervention was available, but all live afterwards [5], or patients achieve a marked improvement in an objective measure, for example, blood count [2]. While treatments may receive a licence without being supported by RCTs, estimates of their comparative efficacy (relative to the current standard of care) are still needed to inform decisions on reimbursement in many healthcare systems. The decision problem faced by regulators differs from that of payers: whilst a regulator must ask whether the benefit/risk balance of a product is positive, a payer is interested in how much benefit is gained for the additional cost of treatment (or alternatively may use the additional benefit to set a price). In many countries (particularly in Western Europe), these calculations are formally brought into decision making through the use of cost-effectiveness analysis for resource allocation decisions [3].

Where cost-effectiveness analysis is used as a decision criterion, in general, treatments are required to generate more health (usually defined in terms of quality-adjusted life-years) than the treatments that would be displaced (represented by a ‘shadow budget’). This means that in practice the money spent on the new intervention should generate more health than money spent elsewhere in the healthcare system. To estimate the magnitude of the health gains seen with new technologies, modelling is used to extrapolate the benefits beyond the trial(s), though how comparative estimates should be constructed without controlled trials is unclear. While there exists extensive guidance on constructing economic models based on RCT results, there is no health technology agency or professional body guidance on the most appropriate method of modelling study data without an internal control (Table 1).
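The decision rule described above can be illustrated with a minimal sketch. It assumes a single cost-effectiveness threshold (cost per quality-adjusted life-year) stands in for the health displaced elsewhere; the function names and all numbers are hypothetical and are not taken from any model in this review.

```python
def icer(delta_qalys, delta_cost):
    """Incremental cost-effectiveness ratio: extra cost per extra QALY."""
    if delta_qalys == 0:
        raise ValueError("ICER is undefined when there is no QALY difference")
    return delta_cost / delta_qalys


def net_health_benefit(delta_qalys, delta_cost, threshold):
    """QALYs gained minus the QALYs assumed to be displaced by the extra spend.

    `threshold` is an assumed cost per QALY at which spending elsewhere in the
    healthcare system generates health.
    """
    if threshold <= 0:
        raise ValueError("threshold must be positive")
    return delta_qalys - delta_cost / threshold


# Hypothetical inputs: 0.5 extra QALYs for 12,000 extra cost,
# judged against an assumed threshold of 30,000 per QALY.
example_icer = icer(delta_qalys=0.5, delta_cost=12000.0)
example_nhb = net_health_benefit(delta_qalys=0.5, delta_cost=12000.0, threshold=30000.0)

# A positive net health benefit means the new treatment is estimated to
# generate more health than the spending it displaces.
is_cost_effective = example_nhb > 0
```

Both quantities depend on `delta_qalys`, which is the comparative estimate that is hard to obtain when the pivotal study has no control arm.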

The objective of this study was therefore to identify models constructed for treatments granted marketing authorisation without RCT evidence, and the approach taken to estimating relative efficacy of the treatment(s).

2 Methods

Hatswell et al. [6] identified treatments granted a marketing authorisation by either the US Food and Drug Administration or the European Medicines Agency from January 1999 to May 2014, without supportive RCT results (74 indications for 62 drugs). We conducted a systematic review for economic evaluations published for each of the treatments in the relevant indications using PubMed (search terms given in Fig. 1). The search strategy was deliberately broad, as multiple types of study may have included methods to estimate comparative efficacy; for example, both clinical papers estimating the benefit of treatment and cost-effectiveness studies will have required comparative effectiveness as an input. Furthermore, cost-effectiveness studies are often published with varying titles, again supporting a wide search strategy with the expectation that a large amount of filtering would be performed on hits.

To ensure we identified all relevant modelling approaches, searches were also conducted for health technology appraisals conducted by the National Institute for Health and Care Excellence (NICE), the Scottish Medicines Consortium (SMC) and the All Wales Medicines Strategy Group (AWMSG), as well as the grey literature of the International Society for Pharmacoeconomics and Outcomes Research (ISPOR) Scientific Presentations Database. As the search tools on the health technology appraisal agency and ISPOR websites lack the sophistication and complexity of PubMed, a search was conducted on each website for the generic drug name, the US brand name or the European Union brand name. This again was expected to result in a large number of hits not meeting the inclusion criteria (as any document mentioning the product would be included), but is likely to include all relevant papers.

After identification, results (papers, health technology appraisal submissions and scientific presentations) were filtered for models that analysed indications where uncontrolled study data were the primary basis for approval and used only these non-RCT data (some pharmaceuticals had multiple indications, or subsequently had RCT data become available). Hits were excluded if they did not include a method of generating comparative effectiveness in the specified indication, for example, if they only discussed the (uncontrolled) trial results in isolation or commented on the cost of the drug. Results were then de-duplicated, based on the model descriptions and study authors, to account for the same model being used for different purposes (for example, a model used in a NICE submission, then published with Spanish costs, all while using the same approach to modelling efficacy). Where it was not clear whether a model was reported on multiple occasions, or was a similar (yet independent) approach, this was discussed by the reviewers and a decision reached by consensus.

Following identification of the economic models, the approaches used to estimate efficacy against the relevant comparator were categorised for each model. If a model included multiple approaches to modelling efficacy data, these were classed as separate modelling approaches. The modelling approaches identified were then placed into a taxonomic framework and analysed for commonality in approach.

3 Results

Figure 2 shows the Preferred Reporting Items for Systematic Reviews and Meta-Analyses diagram for economic evaluations retrieved through PubMed [7]. The initial 74 literature searches in PubMed yielded 1202 hits, which were reduced to 56 full articles after abstract and title review. Twenty-nine papers were included after the full paper review. The main reasons for exclusion during this review were models being based on RCT data (n = 9), models evaluating a different indication (including a different stage of the same disease, n = 7) and papers that did not contain an economic model (e.g., burden of illness studies, n = 6). As expected, a large number of initial hits (owing to the wide search strategy) were excluded at the initial review stage, for example, papers that discussed the treatment of interest (search 4) and mentioned cost (in any context).

In addition to published papers, searches of health technology assessment body websites led to 19 NICE appraisals being identified (9 included), 52 SMC appraisals identified (16 included) and 27 AWMSG appraisals identified (5 included). Overall, there was a notable level of non-submission to health technology assessment agencies, in particular to the SMC (13/52 non-submissions) and AWMSG (13/27 non-submissions). Appraisals also often occurred after RCT-based results had become available (NICE 8/19, SMC 9/52, AWMSG 3/27), leading to exclusion from this study. Full results of the review are shown in Table 2.

Searching the ISPOR Scientific Presentations Database led to 1780 abstract hits, with 43 records selected for further review and 15 full records included. The most common reason for exclusion of records selected for full review was insufficient information reported regarding the model or approach used (n = 14). The large number of hits relative to included documents was mostly owing to the imprecise nature of the search function available, where we were forced to search for all abstracts that mentioned the drug in any context. For widely used drugs, this resulted in a large number of hits that were, on the whole, not relevant: 1569/1780 hits (88 %) were for just six products (imatinib, cetuximab, bortezomib, sunitinib, dasatinib and nilotinib) and yielded only eight relevant abstracts. Whilst this pattern was similar with PubMed hits, the additional specificity of search terms meant these six products constituted 882/1202 hits (73 %) whilst representing 15 of the 74 indications (20 %).

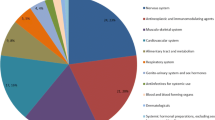

In total, 74 relevant documents were identified (including publications, health technology appraisals and scientific presentations), which described 91 distinct modelling approaches for 30 indications. After consolidation of approaches reported multiple times (for example, one model being used for NICE and SMC submissions, presented at ISPOR and then published in an indexed journal), 51 unique modelling approaches were identified. Of these 51 modelling approaches, the overwhelming majority (n = 43, 84 %) were based on historical controls. Other approaches identified included using patients as their own control by statistical analysis or comparisons with baseline values (n = 3, 6 %), cost-minimisation analyses (n = 3, 6 %), threshold analyses (n = 1, 2 %) or assuming in oncology that time on treatment (assumed to be equal to progression-free survival in the model) was added to overall survival, with treatments then given in sequence (termed the ‘cumulative method’; n = 1, 2 %) (Fig. 3).
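As a quick check of the arithmetic, the taxonomy counts reported above can be tallied and converted to whole-number percentages. This is only an illustrative sketch; the dictionary keys are shorthand labels chosen here, not terms defined by the review.

```python
# Counts of unique modelling approaches by category, as reported in the text.
approach_counts = {
    "historical control": 43,
    "patient as own control": 3,
    "cost-minimisation analysis": 3,
    "threshold analysis": 1,
    "cumulative method": 1,
}

total_models = sum(approach_counts.values())

# Share of each category, rounded to the nearest whole percent.
approach_shares = {
    name: round(100 * count / total_models)
    for name, count in approach_counts.items()
}

for name, count in approach_counts.items():
    print(f"{name}: n = {count}, {approach_shares[name]} %")
```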

All 43 historical controls identified compared the results of the uncontrolled study of the new treatment with a separate set of data. In 16 cases (37 %), the treatment was compared with an investigational arm from another clinical study, and in five cases (12 %), the treatment was compared with pooled or meta-analysed data from a series of studies. A further 15 models (35 %) used comparisons to registry or case series data, and seven models (16 %) compared the results of the uncontrolled study with expert opinion. Trial and registry data appeared to be used interchangeably in evaluations, with only seven studies (16 %) attempting to account for differences in patient characteristics or patient selection between data sources. A summary of each of the modelling approaches identified is given in Table 3, which reports the approach taken, taxonomic category and reporting source.

When looking at the taxonomy by source (health technology assessment, published paper or conference proceedings), a similar pattern of modelling methods is apparent. This is shown in the Online Supplementary Material Appendix (Taxonomy of economic modelling approaches used for estimating incremental benefit from uncontrolled clinical studies by source).

4 Discussion

The results of this review show that 51 unique models have been published for 30 different indications granted a marketing authorisation without a comparative trial. Of the 74 indications for treatments approved without a comparative trial [6], 44 indications have not been modelled and estimates of relative effectiveness are not available. The rate of economic evaluation for new treatments in general is not known, although we suspect it will be higher than the 40 % rate seen in this study.

The use of a historical control was by far the most common approach (43/51), and the control was most frequently taken from another trial or trials (21/43). However, even within this method there was substantial variation: some studies compared the results of uncontrolled trials with results taken from multiple trials (for example, Dinnes et al., who pooled the results of eight other clinical trials to compare against), whereas the majority of models compared against single arms from other studies.

The assumption inherent in naive comparisons to historical controls (first proposed by Pocock [8]) is that patients are similar, or “exchangeable”, between studies. If this is not the case, and patients do systematically differ between studies, then this procedure will introduce bias in the comparison. Several approaches to matching patients and baseline characteristics between studies are available in the literature, including methods based on propensity scores [9] and matching-adjusted indirect comparisons [10]. Despite the availability of these approaches, only seven models attempted to control for any differences between trials, with one notable example being the work by Annemans et al., who constructed a historical control by reviewing patient records at the centres participating in the clinical trial in the time period before the clinical trial was open for enrolment, matching patients against the trial inclusion criteria [11].
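To make the contrast between a naive and an adjusted comparison concrete, the following is a minimal sketch of method-of-moments reweighting on a single covariate, in the general style of a matching-adjusted indirect comparison [10]. It assumes individual patient data from the uncontrolled study and only a published mean for the historical control. All data, names and the choice of a single covariate are hypothetical; this is not the method of any model identified in this review, and a real analysis would match on several characteristics and report the effective sample size.

```python
import math


def matching_weights(covariate, target_mean, tol=1e-10, max_iter=100):
    """Weights exp(a * (x - target_mean)) whose weighted mean of x equals target_mean.

    The scalar a is found by Newton's method on the convex objective
    sum(exp(a * (x - target_mean))).
    """
    centred = [x - target_mean for x in covariate]
    if not (min(centred) < 0 < max(centred)):
        raise ValueError("target mean must lie strictly inside the observed range")
    a = 0.0
    for _ in range(max_iter):
        w = [math.exp(a * c) for c in centred]
        gradient = sum(c * wi for c, wi in zip(centred, w))
        hessian = sum(c * c * wi for c, wi in zip(centred, w))
        step = gradient / hessian
        a -= step
        if abs(step) < tol:
            break
    return [math.exp(a * c) for c in centred]


def weighted_mean(values, weights):
    return sum(v * w for v, w in zip(values, weights)) / sum(weights)


# Hypothetical uncontrolled study: age and response (1 = responded) per patient.
ages = [50, 55, 60, 65, 70, 75]
responded = [1, 1, 1, 0, 1, 0]

# Hypothetical published summary of the historical control.
historical_mean_age = 67.0
historical_response_rate = 0.40

# Naive comparison: ignore the difference in age between the two cohorts.
naive_rate = sum(responded) / len(responded)
naive_difference = naive_rate - historical_response_rate

# Adjusted comparison: reweight the study so its mean age matches the control.
weights = matching_weights(ages, historical_mean_age)
adjusted_rate = weighted_mean(responded, weights)
adjusted_difference = adjusted_rate - historical_response_rate
```

In this invented data set the study patients are younger than the historical cohort and younger patients respond more often, so the adjusted difference is smaller than the naive one. Reweighting can only address characteristics that are measured and reported in both sources.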

The lack of adjustment of outcomes to reflect potentially more favourable patient cohorts may represent a substantial bias in the literature in favour of the new treatments. In a study by Sacks et al. of 50 RCTs and 56 historically controlled trials of the same interventions, the randomised control arm performed better than the historical control arm. In the studies cited, 79 % of historically controlled trials stated that the intervention was effective, compared with only 20 % of RCTs [12]. Diehl and Perry investigated the same question looking at overall survival or relapse-free survival in oncology, finding 43 examples in the literature of well-matched historical cohorts and RCT control groups. However, when comparing the outcomes of the two groups, 18 of the 43 studies had a greater than 10 % difference in effect size between the control groups, with the randomised group performing better on 17 out of 18 occasions [13]. This latter finding is particularly concerning given that 32 of the 43 historically controlled models identified in our study were in oncology, though in other examples historical controls have also proved a poor match for RCT control arms that would be expected to show similar results based solely on the patient inclusion criteria [14–16].

Outside of historical controls, cost minimisation (though frowned upon in the literature [17]) was used in three models. While it may appear superficially attractive to assume treatments have equal efficacy to similar ones, it is unlikely that they exhibit exactly the same efficacy, with zero uncertainty. A further three models compared patient outcomes on treatment with a patient’s baseline result. This is also a potentially biased approach, owing to issues such as regression to the mean [18]. One additional approach, comparing all patients with non-responders, allows the estimation of an effect size, but it will be overly favourable towards the intervention, as non-responders will include an inherently sicker population [19]. The final approach noted was that of Tappenden et al., who pragmatically performed threshold analysis of the relative risk needed for the drug to be considered cost effective. Although this does not necessarily give an estimate of effect size, it allows a decision maker to make a more informed decision after reviewing the clinical evidence [20]; as such, we would recommend the use of similar threshold analyses where appropriate.
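The idea behind a threshold analysis can be sketched in a few lines: rather than estimating the relative risk, search for the value at which the treatment would just be considered cost effective. The toy model below (a single avoidable event with a fixed quality-adjusted life-year loss) and every number in it are hypothetical assumptions for illustration; it is not a reconstruction of the model by Tappenden et al. [20].

```python
def net_monetary_benefit(relative_risk, baseline_risk, qalys_lost_per_event,
                         extra_cost, threshold):
    """Monetary value of QALYs gained minus the extra cost of treatment."""
    events_avoided = baseline_risk * (1.0 - relative_risk)
    qalys_gained = events_avoided * qalys_lost_per_event
    return threshold * qalys_gained - extra_cost


def threshold_relative_risk(model, lo=0.0, hi=1.0, tol=1e-9):
    """Largest relative risk in [lo, hi] at which model(rr) is still >= 0.

    Uses bisection and assumes model(rr) decreases as rr increases.
    Returns None if the treatment is not cost effective even at rr = lo.
    """
    if model(lo) < 0:
        return None
    if model(hi) >= 0:
        return hi
    while hi - lo > tol:
        mid = (lo + hi) / 2.0
        if model(mid) >= 0:
            lo = mid
        else:
            hi = mid
    return lo


# Hypothetical inputs: 60 % baseline event risk, 2 QALYs lost per event,
# 18,000 extra treatment cost, assumed threshold of 30,000 per QALY.
def example_model(rr):
    return net_monetary_benefit(rr, baseline_risk=0.6, qalys_lost_per_event=2.0,
                                extra_cost=18000.0, threshold=30000.0)


rr_needed = threshold_relative_risk(example_model)
```

A decision maker can then judge whether the uncontrolled evidence makes a relative risk at or below `rr_needed` plausible. Bisection is used here so that the same search would work for a more complex model where no closed-form solution exists.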

That there are a number of differing approaches to modelling, with no standard approach to handling issues such as patient selection, is likely a reflection of the relative rarity of evaluations with this type of data (we identified only 51 models, compared with the vast literature of health economic evaluations published [21]). Nevertheless, despite the lack of standard approaches and guidelines, some studies appear to be well conducted, with attempts to select an approach based on reasonable assumptions and control for any patient selection (for example Woods et al. [22]). Guidance has also recently been published by the NICE Decision Support Unit on the use of observational data in modelling where individual patient data are available for both trials [23]. Although this is not likely to be relevant in all instances, it does provide an outline of the available methods for use by modellers.

Whilst we have focussed on how comparative estimates have been generated, other limitations should also be noted regarding clinical studies without an internal control. These include limited sample size (with correspondingly large uncertainty) from which to extrapolate, the use of surrogate endpoints or interim endpoints (such as response rates rather than overall survival), and the duration of evidence collected (requiring extrapolation). Because of the limited information collected in studies without a control arm (in sample size, duration and comparative data), regulators often specify the need for confirmatory clinical trials to be conducted. These may be comparative (yet in an earlier stage of disease) or may be single arm, and will most commonly be used to confirm the benefits seen with the new treatment in a larger cohort, and to increase the number of treated patients for a better understanding of the adverse-event profile.

5 Conclusion

The majority of treatments granted a marketing authorisation without controlled study results have not been subject to economic evaluation in a published form, and there is a high level of non-submission to UK health technology agencies for such products. The evaluations that have been performed were generally based on naive comparisons to historical controls from individual arms of clinical trials, or registry/case series data.

Further research and guidance are required on the appropriateness of historical controls in economic evaluation, and on the most relevant methods to use when modelling without RCT data with the aim of estimating comparative effectiveness (including the relevance of data from other indications already approved). Ultimately, formal guidance and standardisation may reduce the level of bias in economic evaluations of indications approved without RCT data, and lead to an improvement in the average quality of published models. Standardisation would also provide a basis for comparison between studies, such that interventions can be more readily compared with other approaches to evaluation, where methods are comparable [24].

References

Hill SR, Mitchell AS, Henry DA. Problems with the interpretation of pharmacoeconomic analyses: a review of submissions to the Australian Pharmaceutical Benefits Scheme. JAMA. 2000;283:2116–21.

ICH Expert Working Group. ICH Harmonised Tripartite guideline E 10: choice of control group and related issues in clinical trials. In: International conference on harmonisation of technical requirements for registration of pharmaceuticals for human use choice. 2000.

Buxton MJ, Drummond MF, Van Hout BA, et al. Modelling in economic evaluation: an unavoidable fact of life. Health Econ. 1997;6:217–27.

Sutton A, Ades AE, Cooper N, Abrams K. Use of indirect and mixed treatment comparisons for technology assessment. PharmacoEconomics. 2008;26:753–67.

Glasziou P, Chalmers I, Rawlins M, McCulloch P. When are randomised trials unnecessary? Picking signal from noise. BMJ. 2007;334:349–51.

Hatswell A, Baio G, Irs A, et al. The regulatory approval of pharmaceuticals without a randomised controlled study: analysis of EMA and FDA approvals 1999–2014. BMJ Open. Accepted for publication.

Moher D, Liberati A, Tetzlaff J, Altman DG. Preferred reporting items for systematic reviews and meta-analyses: the PRISMA statement. Ann Intern Med. 2009;151:264–9.

Pocock SJ. The combination of randomized and historical controls in clinical trials. J Chronic Dis. 1976;29:175–88.

Freemantle N, Marston L, Walters K, et al. Making inferences on treatment effects from real world data: propensity scores, confounding by indication, and other perils for the unwary in observational research. BMJ. 2013;347:f6409.

Signorovitch JE, Sikirica V, Erder MH, et al. Matching-adjusted indirect comparisons: a new tool for timely comparative effectiveness research. Value Health. 2012;15:940–7.

Annemans L, Van Cutsem E, Humblet Y, et al. Cost-effectiveness of cetuximab in combination with irinotecan compared with current care in metastatic colorectal cancer after failure on irinotecan: a Belgian analysis. Acta Clin Belg. 2007;62:419–25.

Sacks H, Chalmers TC, Smith H Jr. Randomized versus historical controls for clinical trials. Am J Med. 1982;72:233–40.

Diehl LF, Perry DJ. A comparison of randomized concurrent control groups with matched historical control groups: are historical controls valid? J Clin Oncol. 1986;4:1114–20.

MacLehose RR, Reeves BC, Harvey IM, et al. A systematic review of comparisons of effect sizes derived from randomised and non-randomised studies. Health Technol Assess Winch Engl. 2000;4:1–154.

Moroz V, Wilson JS, Kearns P, Wheatley K. Comparison of anticipated and actual control group outcomes in randomised trials in paediatric oncology provides evidence that historically controlled studies are biased in favour of the novel treatment. Trials. 2014;15:481.

Ioannidis JP, Haidich AB, Pappa M, et al. Comparison of evidence of treatment effects in randomized and nonrandomized studies. JAMA. 2001;286:821–30.

Briggs AH, O’Brien BJ. The death of cost-minimization analysis? Health Econ. 2001;10:179–84.

Schulz KF, Chalmers I, Hayes RJ, Altman DG. Empirical evidence of bias: dimensions of methodological quality associated with estimates of treatment effects in controlled trials. JAMA. 1995;273:408–12.

Hoyle M, Crathorne L, Garside R, Hyde C. Ofatumumab for the treatment of chronic lymphocytic leukaemia in patients who are refractory to fludarabine and alemtuzumab: a critique of the submission from GSK. Health Technol Assess Winch Engl. 2011;15(Suppl 1):61–7.

Tappenden P, Jones R, Paisley S, Carroll C. Systematic review and economic evaluation of bevacizumab and cetuximab for the treatment of metastatic colorectal cancer. Health Technol Assess. 2007;11(12):1–128, iii–iv.

Wagstaff A, Culyer AJ. Four decades of health economics through a bibliometric lens. J Health Econ. 2012;31:406–39.

Woods B, Thompson J, Barcena L, et al. PSY5: comparing brentuximab vedotin overall survival data to standard of care in patients with relapsed/refractory Hodgkin lymphoma post-autologous stem cell transplant. Dublin: ISPOR Europe; 2013.

Faria R, Alava MH, Manca A, Wailoo AJ. NICE DSU technical support document 17: the use of observational data to inform estimates of treatment effectiveness in technology appraisal: methods for comparative individual patient data. 2015. http://www.nicedsu.org.uk/TSD17%20-%20DSU%20Observational%20data%20FINAL.pdf. Accessed 28 Jan 2016.

Weinstein MC, Siegel JE, Gold MR, et al. Recommendations of the panel on cost-effectiveness in health and medicine. J Am Med Assoc. 1996;276:1253–8.

Caro JJ, Briggs AH, Siebert U, Kuntz KM. Modeling good research practices: overview. A report of the ISPOR-SMDM Modeling Good Research Practices Task Force-1. Med Decis Making. 2012;32:667–77.

NICE. Guide to the methods of technology appraisal 2013. NICE; 2013. https://www.nice.org.uk/process/pmg9/resources/guide-to-the-methods-of-technologyappraisal-2013-pdf-2007975843781.

Scottish Medicines Consortium. Guidance to manufacturers for completion of New Product Assessment Form (NPAF). NHS Scotland; 2014. https://www.scottishmedicines.org.uk/files/submissionprocess/Guidance_on_NPAF_Final_October_2014.doc. Accessed 17 Oct 2016.

All Wales Medicines Strategy Group. Form B guidance notes. All Wales Medicines Strategy Group; 2013. http://www.awmsg.org/docs/awmsg/appraisaldocs/inforandforms/Form%20B%20guidance%20notes.pdf. Accessed 17 Oct 2016.

Australian Government Department of Health. Guidelines for preparing submissions to the Pharmaceutical Benefits Advisory Committee Version 4.4. Australian Government Department of Health; 2013. http://www.pbac.pbs.gov.au/content/information/printable-files/pbacg-book.pdf. Accessed 5 March 2015.

Pharmaceutical Management Agency. Prescription for pharmacoeconomic analysis: methods for cost-utility analysis version 2.1. New Zealand Government; 2012. https://www.pharmac.health.nz/assets/pfpa-final.pdf. Accessed 17 Oct 2016.

New Zealand Government. Guidelines for funding applications to PHARMAC. New Zealand Government; 2015. http://www.pharmac.govt.nz/2010/02/11/Guidelines%20for%20Suppliers%20Submissions.pdf. Accessed 17 Oct 2016.

Canadian Agency for Drugs and Technologies in Health. Guidelines for the economic evaluation of health technologies: Canada, 3rd edn. Canadian Agency for Drugs and Technologies in Health; 2006. http://www.cadth.ca/media/pdf/186_EconomicGuidelines_e.pdf. Accessed 17 Oct 2016.

Canadian Agency for Drugs and Technologies in Health. Addendum to CADTH’s guidelines for the economic evaluation of health technologies: specific guidance for oncology products. Canadian Agency for Drugs and Technologies in Health; 2009. http://www.cadth.ca/media/pdf/H0405_Guidance_for_Oncology_Prodcuts_gr_e.pdf. http://www.cadth.ca/media/pdf/186_EconomicGuidelines_e.pdf. Accessed 17 Oct 2016.

Scott WG, Scott HM. Economic evaluation of third-line treatment with alemtuzumab for chronic lymphocytic leukaemia. Clin Drug Investig. 2007;27:755–64.

Castro-Jaramillo HE. The cost-effectiveness of enzyme replacement therapy (ERT) for the infantile form of Pompe disease: comparing a high-income country’s approach (England) to that of a middle-income one (Colombia). Rev Salud Pública. 2012;14:143–55.

Scottish Medicines Consortium. Alglucosidase alfa 50mg powder for concentrate for solution for infusion (Myozyme) No. (352/07). Scottish Medicines Consortium; 2007. https://www.scottishmedicines.org.uk/files/alglucosidase_alfa_50mg_powder_Myozyme__352-07_.pdf. Accessed 17 Oct 2016.

Kanters TA, Hoogenboom-Plug I, Rutten-Van Mölken MP, et al. Cost-effectiveness of enzyme replacement therapy with alglucosidase alfa in classic-infantile patients with Pompe disease. Orphanet J Rare Dis. 2014;9:75.

Bennett CL, Weinberg PO, Golub RM. Cost-effectiveness model of a phase II clinical trial of a new pharmaceutical for essential thrombocythemia: is it helpful to policy makers? Semin Hematol. 1999;36:26–9.

Golub R, Adams J, Dave S, Bennett CL. Cost-effectiveness consideration in the treatment of essential thrombocytopenia. Semin Oncol. 2002;29:28–32.

Scottish Medicines Consortium. Re-submission: anagrelide 0.5 mg capsule (Xagrid). Scottish Medicines Consortium; 2005.

Goldberg Arnold R, Kim R, Tang B. The cost-effectiveness of argatroban treatment in heparin-induced thrombocytopenia: the effect of early versus delayed treatment. Cardiol Rev. 2006;14:7–13.

Patrick AR, Winkelmayer WC, Avorn J, Fischer MA. Strategies for the management of suspected heparin-induced thrombocytopenia: a cost-effectiveness analysis. PharmacoEconomics. 2007;25:949–61.

AWMSG. AWMSG Secretariat Assessment Report: Advice No. 4312 Argatroban (Exembol®). All Wales Medicines Strategy Group; 2012. http://www.awmsg.org/awmsgonline/grabber;jsessionid=1f631736a0503b59c6ed8236c4b8?resId=308. Accessed 17 Oct 2016.

Scottish Medicines Consortium. Argatroban, 100 mg/ml, concentrate for solution for infusion (Exembol) SMC No. (812/12). Scottish Medicines Consortium; 2012. https://www.scottishmedicines.org.uk/files/advice/argatroban_Exembol_FINAL_October_2012_for_website.pdf. Accessed 17 Oct 2016.

Peters B, Goeckner B, Ponzillo J, et al. Pegaspargase versus asparaginase in adult ALL: a pharmacoeconomic assessment. Formulary. 1995;30:388–93.

Scottish Medicines Consortium. Resubmission: betaine anhydrous oral powder (Cystadane) No. (407/07). Scottish Medicines Consortium; 2010. https://www.scottishmedicines.org.uk/files/betaine_anhydrous_Cystadane_2nd_RESUBMISSION_FINAL_July_2010.pdf. Accessed 17 Oct 2016.

Yoong K, Attard C, Sehn L. Cost-effectiveness analysis of bortezomib in relapsed mantle cell lymphoma patients in Canada. Paris: ISPOR Europe; 2009.

Mehta J, Duff SB, Gupta S. Cost effectiveness of bortezomib in the treatment of advanced multiple myeloma. Manag Care Interface. 2004;17:52–61.

Pennington B, Hatswell A, Clifton-Brown E. CL1: a comparison of methodologies for estimating survival in patients treated with second-generation tyrosine-kinase inhibitors for chronic myeloid leukaemia. Dublin: 2013.

Scottish Medicines Consortium. Bosutinib 100 mg, 500 mg film-coated tablets (Bosulif®) SMC No. (910/13). Scottish Medicines Consortium; 2013. https://www.scottishmedicines.org.uk/files/advice/bosutinib_Bosulif_FINAL_October_2013_for_website_Amended_31.03.14.pdf. Accessed 17 Oct 2016.

NICE. NICE technology appraisal guidance 299: bosutinib for previously treated chronic myeloid leukaemia. NICE; 2013. http://www.nice.org.uk/nicemedia/live/14310/65847/65847.pdf. Accessed 17 Oct 2016.

Hoyle M, Snowsill T, Haasova M, et al. Bosutinib for previously treated chronic myeloid leukaemia: a single technology appraisal. Exeter: University of Exeter Medical School; 2013.

Telléz Girón G, Salgado J, Soto H. PCN71: cost effectiveness analysis of busulfan + cyclophosphamide (BUCY2) as conditioning regimen before allogeneic human stem cell transplantation (HSCT): comparison of oral versus IV busulfan. Washington, DC: ISPOR International; 2012.

Scottish Medicines Consortium. Carglumic acid 200 mg dispersible tablets (Carbaglu®) SMC No. (899/13). Scottish Medicines Consortium; 2013. https://www.scottishmedicines.org.uk/files/advice/carglumic_acid_Carbaglu_FINAL_September_2013_website.pdf. Accessed 17 Oct 2016.

Starling N, Tilden D, White J, Cunningham D. Cost-effectiveness analysis of cetuximab/irinotecan vs active/best supportive care for the treatment of metastatic colorectal cancer patients who have failed previous chemotherapy treatment. Br J Cancer. 2007;96:206–12.

Merck Pharmaceuticals. Cetuximab (Erbitux) 100 mg solution for infusion (2 mg/mL): submission to the National Institute for Health and Clinical Excellence. National Institute for Health and Clinical Excellence; 2005. https://www.nice.org.uk/guidance/TA118/documents/merck2. Accessed 17 Oct 2016.

Tappenden P, Jones R, Paisley S, Carroll C. The use of bevacizumab and cetuximab for the treatment of metastatic colorectal cancer. School of Health and Related Research (ScHARR), The University of Sheffield; 2006. https://www.nice.org.uk/guidance/TA118/documents/colorectal-cancer-metastatic-bevacizumab-cetuximab-assessment-report2. Accessed 17 Oct 2016.

Guest JF, Smith H, Sladkevicius E, Jackson G. Cost-effectiveness of pentostatin compared with cladribine in the management of hairy cell leukemia in the United Kingdom. Clin Ther. 2009;31:2398–415.

AWMSG. Final appraisal report: clofarabine (Evoltra®). All Wales Medicines Strategy Group; 2007. http://www.awmsg.org/awmsgonline/grabber?resId=520. Accessed 17 Oct 2016.

Scottish Medicines Consortium. Clofarabine, 1mg/ml concentrate for solution for infusion (Evoltra®) (No. 327/06). Scottish Medicines Consortium; 2006. https://www.scottishmedicines.org.uk/files/clofarabine_1mgml_concentrate_solution_infusion_Evoltra.pdf. Accessed 17 Oct 2016.

AWMSG. Final appraisal report: dasatinib (Sprycel®) for chronic, accelerated or blast phase CML. All Wales Medicines Strategy Group; 2007. http://www.awmsg.org/awmsgonline/grabber?resId=251. Accessed 17 Oct 2016.

Thompson Coon J, Hoyle M, Pitt M, et al. Dasatinib and nilotinib for imatinib-resistant or -intolerant chronic myeloid leukaemia: a systematic review and economic evaluation. Peninsula Technology Assessment Group (PenTAG), Peninsula Medical School, Peninsula College of Medicine and Dentistry; 2009. http://www.nice.org.uk/guidance/ta241/documents/assessment-report2. Accessed 17 Oct 2016.

Scottish Medicines Consortium. Dasatinib, 20 mg, 50 mg, 70 mg tablets (Sprycel) No. (370/07). Scottish Medicines Consortium; 2007. https://www.scottishmedicines.org.uk/files/dasatanib__Sprycel__CML_FINAL_April_2007__amended_010507__for_website.pdf. Accessed 17 Oct 2016.

AWMSG. Final appraisal report: dasatinib (Sprycel®) for lymphoid blast CML and Ph+ ALL. All Wales Medicines Strategy Group; 2007. http://www.awmsg.org/awmsgonline/grabber?resId=250. Accessed 17 Oct 2016.

Scottish Medicines Consortium. Defibrotide, 80 mg/mL, concentrate for solution for infusion (Defitelio®) SMC No. (967/14). Scottish Medicines Consortium; 2014. https://www.scottishmedicines.org.uk/files/advice/M__Scottish_Medicine_Consortium_Web_Data_Audit_advice_Advice_by_Year_2014_No.6_-_June_2014_defibrotide__Defitelio__FINAL_May_2014_for_website.pdf. Accessed 17 Oct 2016.

Ratcliffe A, Beard S, Wolowacz S. A pharmacoeconomic model of the cost-effectiveness of gefitinib (‘Iressa’) compared with best supportive care (BSC) in third-line treatment of patients with refractory advanced non-small-cell lung cancer (NSCLC) in the UK. Hamburg: ISPOR Europe; 2004.

Lang K, Menzin J, Earle CC, Mallick R. Outcomes in patients treated with gemtuzumab ozogamicin for relapsed acute myelogenous leukemia. Am J Health Syst Pharm. 2002;59:941–8.

Garside R, Round A, Dalziel K, et al. The effectiveness and cost-effectiveness of imatinib (STI 571) in chronic myeloid leukaemia. Exeter: University of Exeter; 2002.

Warren E, Ward S, Gordois A, Scuffham P. Cost-utility analysis of imatinib mesylate for the treatment of chronic myelogenous leukemia in the chronic phase. Clin Ther. 2004;26:1924–33.

NICE. Guidance on the use of imatinib for chronic myeloid leukemia. London: National Institute for Clinical Excellence; 2003. http://www.nice.org.uk/nicemedia/live/11516/32754/32754.pdf. Accessed 13 Feb 2014.

Gordois A, Scuffham P, Warren E, Ward S. Cost-utility analysis of imatinib mesilate for the treatment of advanced stage chronic myeloid leukaemia. Br J Cancer. 2003;89:634–40.

Wilson J, Connock M, Song F, et al. Imatinib for the treatment of patients with unresectable and/or metastatic gastrointestinal stromal tumours: systematic review and economic evaluation. Health Technol Assess. 2005;9(25):1–142.

Huse DM, von Mehren M, Lenhart G, et al. Cost effectiveness of imatinib mesylate in the treatment of advanced gastrointestinal stromal tumours. Clin Drug Investig. 2007;27:85–93.

Wilson J, Connock M, Song F, et al. Imatinib for the treatment of patients with unresectable and/or metastatic gastro-intestinal stromal tumours: a systematic review and economic evaluation. National Institute for Clinical Excellence; 2003. https://www.nice.org.uk/guidance/TA86/documents/gastrointestinal-stromal-tumours-imatinib-assessment-report-2. Accessed 17 Oct 2016.

De Abreu Lourenco R, Wonder M. The cost-effectiveness of imatinib for the treatment of patients with gastrointestinal stromal tumours. Kobe: ISPOR Asia; 2003.

AWMSG. Final appraisal report: nelarabine for the treatment of T-cell acute lymphoblastic leukaemia and T-cell lymphoblastic lymphoma. All Wales Medicines Strategy Group; 2009. http://www.awmsg.org/awmsgonline/grabber?resId=365. Accessed 17 Oct 2016.

Scottish Medicines Consortium. Nelarabine, 5 mg/ml solution for infusion (Atriance) No. (454/08). Scottish Medicines Consortium; 2008. https://www.scottishmedicines.org.uk/files/nelarabine__Atriance__FINAL_March_2008.doc_for_website.pdf. Accessed 17 Oct 2016.

Scottish Medicines Consortium. Nilotinib, 200 mg capsules (Tasigna) (440/08). Scottish Medicines Consortium; 2008. https://www.scottishmedicines.org.uk/files/nilotinib_200mg_capsules_Tasigna_FINAL_Feb_2008_amended_May_2008_for_Website.pdf. Accessed 17 Oct 2016.

Taylor M, Lewis L, Lebmeier M, Wang Q. An economic evaluation of dasatinib for the treatment of imatinib-resistant patients with chronic-phase chronic myelogenous leukaemia. Baltimore: ISPOR International; 2011.

Loveman E, Cooper K, Bryant J, et al. Dasatinib, high-dose imatinib and nilotinib for the treatment of imatinib-resistant chronic myeloid leukaemia: a systematic review and economic evaluation. Health Technol Assess. 2011;16(23):iii–xiii, 1–137.

Scottish Medicines Consortium. Ofatumumab, 100 mg concentrate for solution for infusion (Arzerra) No. (626/10). Scottish Medicines Consortium; 2010. https://www.scottishmedicines.org.uk/files/ofatumumab_Arzerra_FINAL_July_2010.pdf. Accessed 17 Oct 2016.

Almond C, Stevens J, Ren K, Batty A. The estimated survival of patients with double refractory chronic lymphocytic leukaemia: a reanalysis of NICE TA202 using Bayesian methodology to model observed data. Dublin: ISPOR Europe; 2013.

Batty A, Thompson G, Maroudas P, Delea T. Estimating cost-effectiveness based on the results of uncontrolled clinical trials: ofatumumab for the treatment of fludarabine- and alemtuzumab-refractory chronic lymphocytic leukaemia. Prague: ISPOR Europe; 2010.

Hoyle M, Crathorne L, Moxham T, et al. Ofatumumab (Arzerra®) for the treatment of chronic lymphocytic leukaemia in patients who are refractory to fludarabine and alemtuzumab: a critique of the submission from GSK. Exeter: University of Exeter; 2010.

Van Nooten F, Dewilde S, Van Belle S, Marbaix S. Cost-effectiveness of sunitinib as second line treatment in patients with metastatic renal cancer in Belgium. Dublin: ISPOR Europe; 2007.

Aiello EC, Muszbek N, Richardet E, et al. Cost-effectiveness of new targeted therapy sunitinib malate as second line treatment in metastatic renal cell carcinoma in Argentina. Arlington: ISPOR International; 2007.

Paz-Ares L, del Muro JG, Grande E, Díaz S. A cost-effectiveness analysis of sunitinib in patients with metastatic renal cell carcinoma intolerant to or experiencing disease progression on immunotherapy: perspective of the Spanish National Health System. J Clin Pharm Ther. 2010;35(4):429–38.

Scottish Medicines Consortium. Sunitinib 50mg capsules (Sutent) No. (343/07). Scottish Medicines Consortium; 2007. https://www.scottishmedicines.org.uk/files/sunitinib_Sutent_MRCC_343_07.pdf. Accessed 17 Oct 2016.

Smith WD, Arbuckle R. Pharmacoeconomic analysis of sorafenib and sunitinib for first-line treatment of advanced renal cell carcinoma (ARCC) at a comprehensive cancer center. Arlington: ISPOR International; 2007.

Purmonen T, Martikainen JA, Soini EJO, et al. Sunitinib malate provides additional survival and value for money as second line treatment for metastatic renal cell carcinoma (MRCC): an economic evaluation using Bayesian approach. Dublin: ISPOR Europe; 2007.

Purmonen T, Martikainen JA, Soini EJO, et al. Economic evaluation of sunitinib malate in second-line treatment of metastatic renal cell carcinoma in Finland. Clin Ther. 2008;30:382–92.

Hopper C, Niziol C, Sidhu M. The cost-effectiveness of Foscan mediated photodynamic therapy (Foscan-PDT) compared with extensive palliative surgery and palliative chemotherapy for patients with advanced head and neck cancer in the UK. Oral Oncol. 2004;40:372–82.

Kübler A, Niziol C, Sidhu M, et al. Eine Kosten-Effektivitäts-Analyse der photodynamischen Therapie mit Foscan® (Foscan®-PDT) im Vergleich zu einer palliativen Chemotherapie bei Patienten mit fortgeschrittenen Kopf-Halstumoren in Deutschland. Laryngo Rhino Otol. 2005;84:725–32.

Dinnes J, Cave C, Huang S, et al. The effectiveness and cost-effectiveness of temozolomide for the treatment of recurrent malignant glioma. National Institute for Health and Clinical Excellence; 2000. https://www.nice.org.uk/guidance/ta23/documents/ta23-brain-cancer-temozolomide-hta-report2. Accessed 17 Oct 2016.

Scottish Medicines Consortium. Tocofersolan, 50 mg/mL (corresponding to 74.5 IU tocopherol) oral solution (Vedrop®) SMC No. (696/11). Scottish Medicines Consortium; 2012. https://www.scottishmedicines.org.uk/files/advice/tocofersolan_Vedrop.pdf. Accessed 17 Oct 2016.

Soini EJO, Garcia San Andres B, Joensuu T. Trabectedin in the treatment of metastatic soft tissue sarcoma: cost-effectiveness, cost-utility and value of information. Ann Oncol. 2011;22:215–23.

Soini E, García San Andrés B, Joensuu T. Economic evaluation of trabectedin in the treatment of metastatic soft-tissue sarcoma (mSTS) in the Finnish setting. Paris: ISPOR Europe; 2009.

Scottish Medicines Consortium. Trabectedin 0.25 mg, 1 mg powder for concentrate for solution for infusion (Yondelis) No. (452/08). Scottish Medicines Consortium; 2008. https://www.scottishmedicines.org.uk/files/trabectedin__Yondelis__FINAL_July_2008.doc_for_website.pdf. Accessed 17 Oct 2016.

AWMSG. Final appraisal report: trabectedin (Yondelis) for advanced soft tissue sarcoma. All Wales Medicines Strategy Group; 2008. http://www.awmsg.org/awmsgonline/grabber;jsessionid=2064f61ddd25570cee81d9956c22?resId=394. Accessed 17 Oct 2016.

Scottish Medicines Consortium. Trabectedin, 0.25 and 1 mg powder for concentrate for solution for infusion (Yondelis). Scottish Medicines Consortium; 2010. https://www.scottishmedicines.org.uk/files/advice/trabectedin_Yondelis_RESUBMISSION_FINAL_October_2010.doc_for_website.pdf. Accessed 17 Oct 2016.

Scottish Medicines Consortium. 2nd re-submission in confidence—trabectedin, 0.25 and 1 mg powder for concentrate for solution for infusion (Yondelis). Scottish Medicines Consortium; 2011. https://www.scottishmedicines.org.uk/files/advice/trabectedin_Yondelis_2ND_RESUBMISSION_FINAL_JUNE_2011_for_website.pdf. Accessed 17 Oct 2016.

Simpson E, Rafia R, Stevenson M. Trabectedin for the treatment of advanced metastatic soft tissue sarcoma. National Institute for Health and Clinical Excellence; 2009. https://www.nice.org.uk/guidance/TA185/documents/evidence-review-group-report2. Accessed 17 Oct 2016.

Simpson E, Rafia R, Stevenson M, Papaioannou D. Trabectedin for the treatment of advanced metastatic soft tissue sarcoma. Health Technol Assess. 2010;14(Suppl 1):63–7.

Rafia R, Simpson E, Stevenson M, Papaioannou D. Trabectedin for the treatment of advanced metastatic soft tissue sarcoma: a NICE single technology appraisal. PharmacoEconomics. 2013;31:471–8.

Amdahl J, Manson SC, Isbell R, et al. Cost-effectiveness of pazopanib in advanced soft tissue sarcoma in the United Kingdom. Sarcoma. 2014;2014:1–14.

Villa G, Hernández-Pastor LJ, Guix M, et al. Cost-effectiveness analysis of pazopanib in second-line treatment of advanced soft tissue sarcoma in Spain. Clin Transl Oncol. 2015;17(1):24–33.

Author contributions

The study was designed by AJH, GB and NF; the literature searches were conducted by AJH; and interpretation was provided by AJH, GB and NF. All authors read and approved the final manuscript.

Ethics declarations

Funding

No funding was received for the preparation of this article.

Conflict of interest

AJH, GB and NF have no conflicts of interest directly relevant to the content of this article.

Cite this article

Hatswell, A.J., Freemantle, N. & Baio, G. Economic Evaluations of Pharmaceuticals Granted a Marketing Authorisation Without the Results of Randomised Trials: A Systematic Review and Taxonomy. PharmacoEconomics 35, 163–176 (2017). https://doi.org/10.1007/s40273-016-0460-6

DOI: https://doi.org/10.1007/s40273-016-0460-6