Abstract

Discharging pollution into rivers in excess of their self-purification capacity degrades water quality and threatens aquatic ecosystems. In such water systems, polluters and environmental protection agencies are stakeholders that often have opposing interests. This research proposes a method to improve the water quality of rivers while satisfying the interests of both parties. It allocates waste loads to polluters and requires them to pay the treatment cost of removing pollution. The proposed methodology combines QUAL2Kw and the non-dominated sorting genetic algorithm-II to minimize wastewater treatment costs and the violation of the dissolved oxygen standard. River inflow uncertainty is incorporated through Latin hypercube sampling, giving decision makers more realistic insights through a stochastic approach. The resulting Pareto sets provide strategies for meeting the objectives, while also revealing the conflict between the parties’ interests. According to the waste load criterion, this methodology reduces the waste load from 145.5 to 79, 107.15 and 115 units for the dry, normal and wet months, respectively. It also decreases the treatment cost from 160,000–180,000 $ to 100,000–130,000 $ while accounting for water quality and the dischargers’ interests. The results show that considering river inflow uncertainty can raise the dissolved oxygen concentration above the standard level, and that point sources must treat their wastewater more extensively than non-point sources. Overall, the methodology is a suitable tool for finding solutions that minimize treatment cost in favor of dischargers while improving water quality.


Introduction

Waste load allocation (WLA) optimization refers to assigning pollutant treatment levels to the pollution sources in a water system. Although water quality standards can be met, the resulting treatment levels and costs are not necessarily satisfactory for the dischargers. WLA optimization is therefore a controversial issue because of the conflicts among stakeholders such as the Environmental Protection Agency (EPA) and the pollution sources. Water quality management aims to minimize total cost and violations of standards while finding the strategies most acceptable to the stakeholders, so it must consider approaches that satisfy as many of the involved parties as possible. For this reason, linear and nonlinear optimization algorithms have been developed for WLA problems. These algorithms can find optimal solutions that may nevertheless be unacceptable to some parties. Even if the agreed solution is not the optimal one, considering all parties’ interests can resolve the conflict and lead to an agreement through a cooperative approach (Loucks et al. 1967; Burn and Lence 1992; Ghosh and Mujumdar 2010; Aghasian et al. 2019; Moridi 2019).

In this context, multi-objective optimization yields a set of non-dominated solutions, the Pareto front, from which decision makers can assess and choose the most agreeable solutions. Evolutionary algorithms (EAs) and classical optimization methods are two common optimization approaches, but EAs have been reported to perform more effectively, reaching better Pareto fronts in less time (Eheart 1980; Anile et al. 2005; Hojjati et al. 2018).

Depending on the stakeholders’ interests, distinct studies have defined both environmental and economic objectives, such as maximizing dissolved oxygen (DO) concentration, minimizing biochemical oxygen demand (BOD) or total treatment cost, and maximizing equity among dischargers (Murty et al. 2006). Loucks et al. (1967) studied water quality control by inflow augmentation and wastewater treatment and showed that both stochastic and deterministic optimization–simulation models are efficient in the preliminary selection of operating and design alternatives for simulation and analysis. Liu et al. (2014) investigated three objectives with the non-dominated sorting genetic algorithm-II (NSGA-II) in an integrated model: maximizing economic benefit, minimizing water deficiency and maximizing pollutant discharge. The model was efficient in analyzing the interaction between water and wastewater allocation simultaneously, and the results showed the importance of reducing chemical oxygen demand (COD) in the river. In another study, Saberi and Niksokhan (2017) proposed a methodology for optimizing WLA in a river system based on the non-cooperative behavior of decision makers. The research combined an evolutionary optimization algorithm with the Streeter–Phelps equation. The best solutions were selected based on the minimized total cost (treatment plus penalty) and BOD violation from a standard level at the control point. Because of the stakeholders’ opposing objectives, the optimal results were not effective unless all stakeholders agreed to use the solution and follow the optimal strategy.

In addition to deterministic approaches, stochastic optimization studies have been conducted to incorporate various sources of uncertainty into water quality modeling and decision making. Monte Carlo simulation (MCS), which is based on random sampling, is one of the most widely used of these methods. Because direct sampling in MCS requires a large number of samples and therefore long computation times, researchers developed more efficient sampling methods, such as the Latin hypercube sampling (LHS) technique, that yield favorable results with fewer samples and less computation. LHS has also been used in sensitivity analyses of water quality (McKay et al. 2000; Manache and Melching 2004, 2008). Niksokhan et al. (2009) used MCS to generate river inflow samples as model inputs in an optimal WLA model. Their simulation–optimization model used Young’s conflict resolution theory and had two objective functions: minimizing the total treatment cost and the DO violation. Nikoo et al. (2016) used fuzzy theory in an optimization model to determine WLA policies with cooperative and non-cooperative approaches. The results showed the effectiveness of the methodology and the fairness of reallocating treatment costs to dischargers.

Although treating waste load is a common approach for increasing DO concentration, an additional aeration system can act as an inexpensive complementary process for water quality management in favor of dischargers (Pimpunchat et al. 2007). Verma et al. (2007) studied the influence of an artificial aeration system on increasing DO and improving water quality. They examined water quality parameters such as BOD, COD and DO as well as the performance of the aeration unit. The results showed that aeration increased DO and decreased BOD, COD and algae growth.

This research aims to develop a stochastic WLA model that accounts for the uncertainty of river inflows and to compare its results with those of a deterministic model. It analyzes the impact of this uncertainty on the acceptable levels of water quality parameters using the LHS technique and a simulation-based optimization approach. Results are presented as cost–DO trade-off curves with their uncertainty bounds, and a waste load criterion is used to identify an acceptable solution. This research is based on the results of an MSc thesis at Shahid Beheshti University, Tehran, Iran.

Materials and methods

Methodology

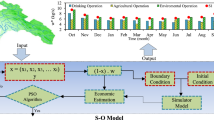

Figure 1 provides an overview of the methodology used to improve river water quality. Both stochastic and deterministic approaches are investigated in the simulation–optimization model of this research. As the flowchart shows, the first step is gathering the data needed for water quality modeling. After these data are prepared and entered into QUAL2Kw, the model is calibrated and verified. Then, for the stochastic approach, the river inflows are generated by the LHS technique using a lognormal distribution. The difference between the two approaches is that the stochastic model is run for a series of river inflows, whereas the deterministic model uses a single inflow. This two-objective optimization model minimizes the “total treatment cost” and the “DO violation from the standard level” at the control point. Aeration is an additional process employed in this study; it raises the DO concentration of each pollution source in proportion to its treatment level. In each iteration of NSGA-II, new chromosomes are generated by crossover and mutation and are sorted based on non-domination (ND) and crowding distance (CD). This process continues until the convergence criterion is met. At the end of the model run, the results are presented as Pareto front solutions representing the trade-off between the objective functions. The computations were performed on a PC with an Intel(R) Core(TM) i5-4790 CPU @ 3.6 GHz and 8 GB of RAM and took more than 90 days.

Latin hypercube sampling

Several sampling methods, such as simple random, stratified and LHS, are used to examine the effect of data uncertainty (Macdonald 2009). Studies in water resources and environmental engineering have found the MC and LHS techniques to be more efficient and flexible than direct integration and point estimation methods. McKay et al. (2000) showed that the stratified LHS procedure converges more quickly than other methods such as MC; its accuracy depends on the number of samples, and no fixed rule exists. In contrast, MC requires a very large number of samples before the mean and variance of the output variables converge to constant values. Macdonald (2009) showed that LHS is more appropriate than the simple random and stratified methods: although the results of LHS and the stratified method are close, LHS requires fewer samples, which reduces computational effort and time.

LHS is a stratified random sampling variant of MC in which a sample of size N is drawn for K continuous variables (McKay et al. 2000). In this method, the probability range of each variable is divided into N non-overlapping intervals, each with a probability of occurrence of 1/N. A sample of size N is drawn for the K independent variables according to Eq. (1):

\(x_{ij} = F_{j}^{-1}\left( \frac{p_{ij} - 1 + \varepsilon_{ij}}{N} \right)\)    (1)

where \(x_{ij}\) = the ith sampled value of variable j; \(F_{j}\) = cumulative distribution function of variable j for each j = 1,…, K; \(p_{ij}\) = the ith element of a random permutation of 1,…, N, drawn independently for each variable; and \(\varepsilon_{ij}\) = a uniformly distributed random number in [0,1] (Pebesma and Heuvelink 1999). As a result, each of the N equal-probability intervals of every variable is sampled exactly once.

In this paper, the LHS technique is used to generate a large set of river inflows, which serve as input variables in the simulation–optimization model.
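A minimal Python sketch of Eq. (1) with lognormal marginals follows, using only the standard library. It is an illustration under stated assumptions, not the study’s code: the log-mean `mu` and log-standard deviation `sigma` of each variable are hypothetical inputs rather than fitted Zarjub River values, and clipping the probability away from 0 and 1 is an implementation choice that keeps the inverse CDF finite.

```python
import math
import random
from statistics import NormalDist
from typing import List, Optional, Sequence


def lhs_lognormal(n: int, k: int, mu: Sequence[float], sigma: Sequence[float],
                  seed: Optional[int] = None) -> List[List[float]]:
    """Draw an N x K Latin hypercube sample with lognormal marginals.

    Implements x_ij = F_j^{-1}((p_ij - 1 + eps_ij) / N), where F_j is lognormal
    with log-mean mu[j] and log-standard deviation sigma[j] (hypothetical inputs).
    """
    rng = random.Random(seed)
    std_normal = NormalDist()
    sample = [[0.0] * k for _ in range(n)]
    for j in range(k):
        perm = list(range(1, n + 1))  # p_1j, ..., p_Nj: a random permutation of 1..N
        rng.shuffle(perm)
        for i in range(n):
            eps = rng.random()  # eps_ij ~ U[0, 1)
            u = (perm[i] - 1 + eps) / n  # probability inside stratum p_ij
            u = min(max(u, 1e-12), 1.0 - 1e-12)  # inverse CDF is infinite at 0 and 1
            # Lognormal inverse CDF: exp(mu + sigma * Phi^{-1}(u))
            sample[i][j] = math.exp(mu[j] + sigma[j] * std_normal.inv_cdf(u))
    return sample
```

For one month, `lhs_lognormal(100, 1, [mu], [sigma])` would return 100 inflow values, one from each equal-probability stratum of the fitted distribution.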

QUAL2Kw: water quality simulation model

QUAL2Kw is a one-dimensional water quality simulation model that numerically solves the governing equations of steady river flow and advection–dispersion. These equations include the effects of advection–dispersion, dilution, constituent reactions and interactions, and sources or sinks. In QUAL2Kw, the general mass balance (Eq. 2) calculates the concentrations of all defined water quality parameters, assuming steady-state flow in the river system:

where C = concentration of the specific parameter; v = volume of the river reach; U = mean velocity; D = diffusion coefficient; A = cross-sectional area of the river; Sc = external sinks or sources of the constituent C; t = time (day); and x = distance along the river in the flow direction (Chapra et al. 2003). The model can simulate water quality constituents, including BOD, DO and pH, as well as processes such as organic pollutant degradation, nitrification, denitrification and sedimentation.

QUAL2Kw was developed by integrating QUAL2K with a genetic algorithm (GA) to replace manual calibration with an automatic optimization technique. The GA maximizes the goodness of fit between the observed and simulated results of the model. The fitness function is the reciprocal of a weighted average of the normalized root mean squared error (RMSE) between the model predictions and the observed data for the water quality constituents (Pelletier et al. 2006; Cho and Lee 2018).
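The fitness described above can be sketched in a few lines of Python. This follows only the verbal description (reciprocal of a weighted average of normalized RMSE); the exact QUAL2Kw formula is given by Pelletier et al. (2006), and normalizing each RMSE by the mean of the observations, as well as the constituent weights, are assumptions made here for illustration.

```python
import math
from typing import Dict, Sequence, Tuple


def normalized_rmse(observed: Sequence[float], predicted: Sequence[float]) -> float:
    """RMSE between predictions and observations, divided by the observed mean (assumed)."""
    m = len(observed)
    rmse = math.sqrt(sum((p - o) ** 2 for o, p in zip(observed, predicted)) / m)
    return rmse / (sum(observed) / m)


def calibration_fitness(data: Dict[str, Tuple[Sequence[float], Sequence[float]]],
                        weights: Dict[str, float]) -> float:
    """Reciprocal of the weighted average normalized RMSE; the GA maximizes this.

    data maps a constituent name (e.g. "BOD", "DO") to (observed, predicted) values;
    weights are hypothetical, user-chosen importance weights.
    """
    total_weight = sum(weights[name] for name in data)
    weighted_error = sum(weights[name] * normalized_rmse(*data[name]) for name in data)
    if weighted_error == 0.0:
        return math.inf  # perfect fit
    return total_weight / weighted_error
```

A larger value means a better fit, so the GA searches the rate parameters that maximize it.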

Non-dominated sorting genetic algorithm-II

In this study, WLA is performed by an optimization model, and treatment is used to remove a portion of the initial BOD concentration. Treatment costs cover BOD removal only. It is assumed that the construction cost is paid by the government, so the polluters pay only the operational cost of wastewater treatment. Equation (3) is the economic objective function, which minimizes the total annual treatment cost paid by all polluters. Each polluter pays a treatment cost given by the linear term \(c_{i} t_{i}\), and the objective function is the sum of these terms (Eq. 3). The treatment cost is thus obtained from the treatment level and the coefficients presented in Table 1: the cost for each polluter is its coefficient multiplied by the percentage of BOD that has to be removed. The values of \(c_{i}\) are derived from the data used by Estalaki et al. (2015).

Equation (4) is the second objective function and represents environmental interests. It is based on a water quality parameter and minimizes the DO violation from the standard level at the control point. Equation (5) gives the BOD concentration released by the polluters, and Eq. (6) describes the additional aeration process in treatment plants, which increases the DO concentrations of the pollution sources during the optimization process. Equations (7) and (8) describe dilution in the river based on the simulation model and calculate the BOD and DO concentrations at each point of the river. In each iteration of the simulation–optimization process, Eq. (9) gives the DO at the checkpoint. The treatment level and the discharged fraction are related by \(x_{i} = 1 - t_{i}\).

Subject to:

where i = index of the point and non-point sources; \(c_{i}\) = treatment cost coefficient of polluter i; \(t_{i}\) = treatment level of polluter i; N = number of inflows generated by LHS; \({\text{DO}}_{n}\) = DO at the control point for the nth generated inflow; \({\text{DO}}_{\text{standard}}\) = standard DO, equal to 5 mg/l; \({\text{BOD}}_{i}^{\text{new}}\) = optimized BOD of polluter i; \(x_{i}\) = percentage of discharged BOD of polluter i; \({\text{BOD}}_{i}^{\text{initial}}\) = initial BOD of polluter i before optimization; \({\text{DO}}_{i}^{\text{new}}\) = discharged DO of polluter i under aeration and optimization; \({\text{DO}}_{i}^{\text{initial}}\) = initial DO of polluter i before optimization; \({\text{BOD}}_{R,j}\) = total BOD at point j in the river; \(Q_{i}\) = wastewater flow from polluter i; \({\text{BOD}}_{i}\) = BOD of the wastewater from polluter i; \(Q_{R}\) = upstream river flow; \({\text{BOD}}_{R}\) = BOD of the river water; \({\text{DO}}_{R,j}\) = total DO at point j in the river; \({\text{DO}}_{i}\) = DO of the wastewater from polluter i; and \({\text{DO}}_{R}\) = DO of the river water.
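The objective functions and the simple constraints can be illustrated with the Python sketch below, under explicit assumptions. Eq. (3) follows the text (the sum of \(c_{i} t_{i}\)); the other forms are assumptions chosen to match the variable definitions: Eq. (5) as \(x_{i} {\text{BOD}}_{i}^{\text{initial}}\), Eqs. (7) and (8) as flow-weighted mixing, and Eq. (4) as the mean shortfall below 5 mg/l over the N generated inflows. The aeration term (Eq. 6) and the QUAL2Kw checkpoint DO (Eq. 9) are not modeled; checkpoint DO values are passed in directly. All function names are hypothetical.

```python
from typing import List, Sequence

DO_STANDARD = 5.0  # mg/l, the standard DO at the control point


def total_treatment_cost(c: Sequence[float], t: Sequence[float]) -> float:
    """Eq. (3) as described in the text: sum over polluters of c_i * t_i."""
    return sum(ci * ti for ci, ti in zip(c, t))


def released_bod(bod_initial: Sequence[float], t: Sequence[float]) -> List[float]:
    """Assumed form of Eq. (5): BOD_i^new = x_i * BOD_i^initial, with x_i = 1 - t_i."""
    return [(1.0 - ti) * b for b, ti in zip(bod_initial, t)]


def mixed_concentration(q_river: float, c_river: float,
                        q_sources: Sequence[float], c_sources: Sequence[float]) -> float:
    """Assumed form of Eqs. (7)-(8): flow-weighted mixing of river water and effluents.

    Works for BOD or DO; decay, reaeration and the aeration of Eq. (6) are ignored.
    """
    load = q_river * c_river + sum(q * c for q, c in zip(q_sources, c_sources))
    return load / (q_river + sum(q_sources))


def do_violation(do_checkpoint: Sequence[float]) -> float:
    """Assumed form of Eq. (4): mean shortfall below DO_STANDARD over the N inflows."""
    return sum(max(0.0, DO_STANDARD - d) for d in do_checkpoint) / len(do_checkpoint)
```

In the deterministic approach `do_checkpoint` would hold a single value; in the stochastic approach it would hold one value per LHS inflow.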

Gradient-based and evolutionary optimization algorithms are known as efficient tools for finding cost-effective designs or strategies in many engineering optimization problems. The difficulties associated with gradient-based approaches have led to the extensive development and application of EAs. These algorithms can approach near-global optima even when the solution space is discontinuous, multimodal, nonlinear or non-convex. NSGA-II (Deb et al. 2000; Estalaki et al. 2015) is one of the most efficient multi-objective optimization algorithms and has been used successfully in many engineering disciplines. It integrates GA operators with the concepts of ND sorting and CD to find a set of optimal solutions called the Pareto front (Yazdi et al. 2016). The most important features of NSGA-II compared with previous multi-objective evolutionary algorithms (MOEAs) are as follows (Deb et al. 2000):

1. A crowding approach for diversity preservation, in which the solutions (as many as 2N, where N is the population size) are processed objective-wise;
2. An elitism operator that significantly speeds up the GA and preserves good non-dominated solutions; and
3. An objective-wise distance computation.

The main steps of NSGA-II are as follows:

1. Randomly create a parent population (\(P_{0}\)) of size N.
2. Sort the generated population based on ND.
3. Assign each solution a fitness (rank) equal to its ND level (1 is the best level, 2 is the next best level, and so on).
4. Create a child population (\(Q_{0}\)) of size N using binary tournament selection, crossover and mutation operators.
5. From the first generation onward, create each new generation as follows:
   - Combine the parent population (\(P_{t}\)) and the child population (\(Q_{t}\)) into a population (\(R_{t}\)) of size 2N;
   - Sort \(R_{t}\) based on ND (Deb et al. 2000) to identify all non-dominated fronts (\(F_{1}, F_{2}, \ldots, F_{L}\));
   - Generate the new parent population (\(P_{t+1}\)) of size N by adding solutions front by front, starting from the first ranked front (\(F_{1}\)) and proceeding with the subsequently ranked fronts, until adding the next front (\(F_{L}\)) would make the total exceed N. To make the total exactly N, only part of the last front \(F_{L}\) is accepted: its solutions are sorted with the crowded comparison operator (\(\prec_{n}\)) based on the CD assigned to each solution, the least crowded solutions (largest CD) are kept, and the rest are rejected. The new parent population (\(P_{t+1}\)) of size N then produces the new child population (\(Q_{t+1}\)) of size N.
6. Repeat step 5 until the maximum number of generations is reached (Yandamuri et al. 2006).
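The survivor selection in step 5 (fast non-dominated sorting, crowding distance and truncation of the last front) can be sketched in Python as below. This is a minimal, generic illustration following Deb et al. (2000), not the implementation used in this study; both objectives (for example, treatment cost and DO violation) are assumed to be minimized, and the function names are hypothetical.

```python
from typing import Dict, List, Sequence

Objectives = Sequence[float]  # one value per objective, all minimized


def dominates(a: Objectives, b: Objectives) -> bool:
    """True if a is no worse than b in every objective and better in at least one."""
    return all(x <= y for x, y in zip(a, b)) and any(x < y for x, y in zip(a, b))


def fast_non_dominated_sort(objs: List[Objectives]) -> List[List[int]]:
    """Return the fronts F1, F2, ..., FL as lists of indices into objs."""
    n = len(objs)
    dominated: List[List[int]] = [[] for _ in range(n)]  # solutions that p dominates
    counts = [0] * n  # number of solutions that dominate p
    fronts: List[List[int]] = [[]]
    for p in range(n):
        for q in range(n):
            if p == q:
                continue
            if dominates(objs[p], objs[q]):
                dominated[p].append(q)
            elif dominates(objs[q], objs[p]):
                counts[p] += 1
        if counts[p] == 0:
            fronts[0].append(p)
    i = 0
    while fronts[i]:
        next_front = []
        for p in fronts[i]:
            for q in dominated[p]:
                counts[q] -= 1
                if counts[q] == 0:
                    next_front.append(q)
        i += 1
        fronts.append(next_front)
    return fronts[:-1]  # the last appended front is always empty


def crowding_distance(objs: List[Objectives], front: List[int]) -> Dict[int, float]:
    """Sum over objectives of the normalized gap between each solution's neighbours."""
    dist = {i: 0.0 for i in front}
    for k in range(len(objs[front[0]])):
        ordered = sorted(front, key=lambda i: objs[i][k])
        lo, hi = objs[ordered[0]][k], objs[ordered[-1]][k]
        dist[ordered[0]] = dist[ordered[-1]] = float("inf")  # keep boundary solutions
        if hi == lo:
            continue
        for r in range(1, len(ordered) - 1):
            gap = objs[ordered[r + 1]][k] - objs[ordered[r - 1]][k]
            dist[ordered[r]] += gap / (hi - lo)
    return dist


def select_survivors(objs: List[Objectives], n: int) -> List[int]:
    """Build P_{t+1} from R_t: fill front by front, then truncate the last front by CD."""
    chosen: List[int] = []
    for front in fast_non_dominated_sort(objs):
        if len(chosen) + len(front) <= n:
            chosen.extend(front)
        else:
            dist = crowding_distance(objs, front)
            by_cd = sorted(front, key=lambda i: dist[i], reverse=True)
            chosen.extend(by_cd[: n - len(chosen)])
        if len(chosen) == n:
            break
    return chosen
```

In a full run, `select_survivors` would be applied each generation to the objective values of \(R_{t}\) with `n` equal to N, and the returned indices would form \(P_{t+1}\).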

Case study

The WLA optimization model is applied to the Zarjub River in northern Iran to manage the river’s water quality. This river is part of the Siahrud River (which is 24 km long); it passes through the suburbs and the city, flows into the Anzali Lagoon and finally reaches the Caspian Sea (Fig. 2).

The river’s annual discharge of 59 million cubic meters carries a massive volume of effluent into the lagoon and the sea, threatening the habitat of marine life. Although the upstream DO concentration is 6 mg/l, the waste load reduces it to 1 mg/l. The river is surrounded by point and non-point source polluters. The major polluters of the Zarjub River are point sources, and Fig. 2 shows that they are concentrated toward the end of the river.

Table 2 presents the polluters’ BOD and DO concentrations before the optimization process. According to Table 2, there are 11 point and seven non-point sources along the river, and the polluters numbered 1 (point and non-point) are the nearest to the river’s upstream end and the Behdan Station. Water quality declines because the river directly receives domestic pollutants (point sources) and agricultural wastewater (non-point sources), whose return runoff carries toxic substances and fertilizers. In this river, domestic wastes account for 90% of the total pollution load, and the DO concentration is mostly below the standard level and often less than 1 mg/l downstream. This research attempts to allocate specific waste load releases to point and non-point sources so that the standard DO concentration is met at the checkpoint (IWPC Technical Report 2013; Estalaki et al. 2015).

Results and discussion

Calibration and verification

In this paper, BOD and DO concentrations are used as water quality characteristics for calibration and verification. Data recorded in October 2005 are used for calibration, and recorded data from September 2005 are utilized for verification. The calibration and verification results are represented in Figs. 3 and 4, respectively, in which the observed and simulated (calculated) values are depicted by points.

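The scatter of observed versus simulated values around the trend line shows a high correlation between the data. The coefficient of determination (\(R^{2}\)), calculated as the square of the correlation coefficient in Eq. (10), is used as a measure of the accuracy and functionality of the simulation model. The higher the value of \(R^{2}\), the stronger the correlation between the data.

\(r_{x_{\text{obs}}, x_{\text{sim}}} = \frac{n\sum x_{\text{obs}} x_{\text{sim}} - \sum x_{\text{obs}} \sum x_{\text{sim}}}{\sqrt{\left[ n\sum x_{\text{obs}}^{2} - \left( \sum x_{\text{obs}} \right)^{2} \right]\left[ n\sum x_{\text{sim}}^{2} - \left( \sum x_{\text{sim}} \right)^{2} \right]}}\)    (10)

where \(r_{x_{\text{obs}}, x_{\text{sim}}}\) = correlation coefficient; n = number of data; \(x_{\text{obs}}\) = observed data; and \(x_{\text{sim}}\) = simulated data.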
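A short Python sketch of the calibration statistic is given below, assuming Eq. (10) is the standard Pearson correlation coefficient written in terms of sums; the values passed to it in any call are placeholders, not the study’s observations.

```python
import math
from typing import Sequence


def correlation_coefficient(x_obs: Sequence[float], x_sim: Sequence[float]) -> float:
    """Pearson correlation r between observed and simulated values, in sum form."""
    n = len(x_obs)
    sx, sy = sum(x_obs), sum(x_sim)
    sxy = sum(o * s for o, s in zip(x_obs, x_sim))
    sxx = sum(o * o for o in x_obs)
    syy = sum(s * s for s in x_sim)
    numerator = n * sxy - sx * sy
    denominator = math.sqrt((n * sxx - sx ** 2) * (n * syy - sy ** 2))
    return numerator / denominator


def r_squared(x_obs: Sequence[float], x_sim: Sequence[float]) -> float:
    """Coefficient of determination as used here: the square of r."""
    return correlation_coefficient(x_obs, x_sim) ** 2
```

Note that \(R^{2}\) computed this way measures linear association only: simulated values that are consistently biased (for example, all twice the observations) still give \(R^{2}\) = 1.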

In the calibration, \(R^{2}\) is 0.79 and 0.70 for BOD and DO, respectively. In the verification, \(R^{2}\) values of 0.87 and 0.72 for BOD and DO confirm the accuracy of the simulation model. These coefficients of determination show that the model results are in close agreement with the observations. Despite simulation errors and data limitations, the accuracy of the results is acceptable.

Results of the optimization model

In this study, a simulation–optimization model based on deterministic and stochastic approaches is presented to reduce waste load. The purpose is to find the best scenarios, that is, those that improve water quality and are beneficial to the pollution sources; the selected scenario must provide the required DO concentration in the river at a lower treatment cost. The stochastic model shows the effect of river inflow uncertainty on the model output. The Zarjub River inflows of August, April and November are used in the simulation–optimization model and represent the dry (minimum average inflow), normal (average inflow) and wet (maximum average inflow) periods, respectively. In the stochastic approach, the river inflows are generated by the LHS technique using a lognormal distribution, which has been used to represent river inflows in both wet and dry seasons (Bowers et al. 2012; Langat et al. 2019). Comparing the mean and variance of 50, 100, 200, 500 and 1000 simulated samples showed that 100 samples are adequate to run the model.
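The sample-size check described above can be sketched as follows. The study does not state its exact convergence criterion, so this Python sketch makes an assumption: it compares the LHS sample mean and variance with the analytical lognormal moments for each candidate size. The log-mean and log-standard deviation are hypothetical, not fitted to the Zarjub River inflows.

```python
import math
import random
from statistics import NormalDist, fmean, pvariance
from typing import Dict, List, Sequence, Tuple


def lhs_lognormal_1d(n: int, mu: float, sigma: float, rng: random.Random) -> List[float]:
    """One-dimensional LHS draw: one lognormal value from each of n equal-probability strata."""
    strata = list(range(n))
    rng.shuffle(strata)  # random pairing order, as in LHS (irrelevant for 1D moments)
    std_normal = NormalDist()
    values = []
    for k in strata:
        u = (k + rng.random()) / n
        u = min(max(u, 1e-12), 1.0 - 1e-12)  # keep the inverse CDF finite
        values.append(math.exp(mu + sigma * std_normal.inv_cdf(u)))
    return values


def sample_size_check(sizes: Sequence[int], mu: float, sigma: float,
                      seed: int = 0) -> Dict[int, Tuple[float, float]]:
    """Relative errors of the sample mean and variance against the lognormal moments."""
    rng = random.Random(seed)
    true_mean = math.exp(mu + sigma ** 2 / 2)
    true_var = (math.exp(sigma ** 2) - 1) * math.exp(2 * mu + sigma ** 2)
    report = {}
    for n in sizes:
        q = lhs_lognormal_1d(n, mu, sigma, rng)
        report[n] = (abs(fmean(q) - true_mean) / true_mean,
                     abs(pvariance(q) - true_var) / true_var)
    return report
```

Calling `sample_size_check([50, 100, 200, 500, 1000], mu, sigma)` returns, for each size, the relative errors of the mean and variance; the smallest size whose errors are acceptably small would then be used for the optimization runs.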

After the simulation–optimization model is run, the final results are depicted as treatment cost–DO trade-offs (Fig. 5). Each sub-figure includes three trade-offs: the upper, lower and middle bounds of DO concentration. The middle Pareto set and the corresponding decision variables are obtained through the simulation–optimization process. The upper and lower bounds are then obtained by running QUAL2Kw with these decision variables and the highest and lowest generated inflows in each period.

In sub-figures (a), (b) and (c), there is a specific point where the slope of the curve changes markedly; this is called the break point (BP). The region in which DO is below the standard concentration is not satisfactory for the EPA, so solutions such as point 1 are not acceptable. A horizontal red line separates this unacceptable region from the region where DO ≥ 5 mg/l. Comparing the uncertainty bounds shows that sub-figure (a) has the narrowest uncertainty band, owing to the closeness of the inflow values in August. The figure also shows that the upper and lower bounds converge near DO = 5 mg/l, and the closeness of the solutions (points) along the trade-off suggests that the results are consistent.

In all 3 months, the uncertainty bands are wider at low treatment costs and DO values, where the lowest treatment levels are allocated. Hence, lower treatment levels result in lower DO concentrations at the checkpoint. The large changes in the upper and lower bounds, in comparison with April and November, show the considerable influence of river inflow on DO when DO is below the standard level. The August upper and lower uncertainty bands are about −63% and −0.88% of the April bands. Comparing the wet (November) and normal (April) periods shows that, although they differ at the beginning of the band, they are similar in shape and uncertainty bounds. The results confirm that the inflows in April and November vary more than those in August (dry period), which produces a wider uncertainty band. Moreover, the Pareto fronts converge at a point in all three periods, showing that further increases in inflow cannot keep increasing DO at the same rate. The treatment levels of the optimal solutions are the influential factors, and the results show that a favorable DO can be achieved in all cases.

To analyze the results in detail, five solutions are selected from each Pareto front. Table 3 shows their DO and corresponding treatment costs for August, April and November. In addition to the points in the acceptable region (DO > 5 mg/l), the first solution is selected from the unacceptable region to examine both parties’ interests: although it is unacceptable to the EPA, it is desirable for the polluters.

Analysis of the decision variables (permitted percentage of discharged BOD) corresponding to points 2, 4 and 5 showed that the treatment levels of point and non-point sources differ between the dry (August), normal (April) and wet (November) periods (Table 4).

Point 2 in each month refers to a solution whose DO is close to the standard level, and point 5 has the highest treatment cost and DO. Point 4 is chosen to compare solutions with similar treatment costs in all periods. The results indicate that, although the BOD of the point sources is much larger than that of the non-point sources, polluters with a higher initial DO face larger BOD removal. This is because the decision variables are influenced by the aeration equation used in this study: Equation (6) shows that the oxygen added by aeration depends on the initial DO of each pollution source. Unlike point sources, non-point sources have zero or low initial DO because their dominant inflow is via groundwater. Therefore, the local conditions force point sources to treat their pollution load to a high degree. For instance, even though the BOD of the second polluter is far lower than that of the others, its pollution must still be removed. Except for the last two point sources, all point sources must treat their BOD to a great extent: the more DO a polluter has, the more BOD it has to remove. BOD reduction may seem to be the best way to meet water quality standards, but the correlation between the discharge percentages and the dischargers’ DO suggests another conclusion: aeration can serve as a subsidiary approach to help rivers maintain sufficient DO. Although higher river inflows can improve environmental health, the final treatment costs for the pollution sources affect the selected solution. It can therefore be reasonable to first determine the polluters’ priorities in each of the selected solutions. The solutions between DO = 5 mg/l and the BP are the most suitable scenarios for both the EPA and the pollution sources, because they meet the DO standard and are also more economical, so the pollution sources prefer them.

An examination of the non-point sources shows that they are allowed to release their pollution with less treatment because, in addition to their zero initial DO, diffuse sources have a greater effect on nutrient pollution concentrations.

The overlap of the results in Fig. 6 indicates that the proposed model is set up correctly, because the stochastic approach averages the DO at the control point over the river inflows and the deterministic inflow is close to the average of the probable inflows. Although the treatment costs are not very different, the results show the positive influence of maximum inflows on quality and costs. At lower costs, the stochastic model, which better reflects real conditions, gives lower DO concentrations than the deterministic model, especially for the dry month. These lower DO values increase gradually from 3.55 mg/l at the minimum cost level to 5 mg/l at a cost level of around 120,000 $. For the dry season, the main differences are at middle cost levels (80,000–130,000 $), with a maximum difference of 0.23 mg/l at a cost of about 110,000 $. The difference between the stochastic and deterministic approaches in August is smaller than in the other months. Since DO concentration is more critical in dry and normal conditions, the stochastic approach gives decision makers more realistic insight into the actual DO concentrations in the river, which supports its use. The similar inflows of April and November and the low mean annual discharge upstream cause only small changes in the results, but they still show the impact of stream inflow on the outcomes. The index Σ(BOD·Q) is used as a comparison criterion and shows that this methodology can reduce the waste load from 145.5 to 79, 107.15 and 115 units for the dry, normal and wet periods, respectively. In brief, the best range of Pareto front solutions is \({\text{DO}}_{\text{standard}} \le {\text{DO}} \le {\text{DO}}({\text{BP}})\).
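The selection rule \({\text{DO}}_{\text{standard}} \le {\text{DO}} \le {\text{DO}}({\text{BP}})\) and the waste load index Σ(BOD·Q) can be sketched in Python as follows. The paper identifies the BP visually; the rule used here (the interior Pareto point with the largest absolute change in slope of the cost–DO curve) is a hypothetical operationalization, and any front passed in is illustrative rather than taken from Fig. 5 or 6.

```python
from typing import List, Sequence, Tuple

Point = Tuple[float, float]  # (treatment cost, DO at the control point)


def break_point(front: Sequence[Point]) -> Point:
    """Hypothetical BP rule: the interior point with the largest change in slope.

    Assumes at least three Pareto points with distinct treatment costs.
    """
    pts = sorted(front)
    slopes = [(pts[i + 1][1] - pts[i][1]) / (pts[i + 1][0] - pts[i][0])
              for i in range(len(pts) - 1)]
    best = max(range(1, len(pts) - 1), key=lambda i: abs(slopes[i - 1] - slopes[i]))
    return pts[best]


def preferred_solutions(front: Sequence[Point], do_standard: float = 5.0) -> List[Point]:
    """Pareto solutions with DO_standard <= DO <= DO(BP), sorted by cost."""
    _, do_bp = break_point(front)
    return [p for p in sorted(front) if do_standard <= p[1] <= do_bp]


def waste_load_index(bod: Sequence[float], flow: Sequence[float]) -> float:
    """Waste load criterion: sum of BOD_i * Q_i over all dischargers."""
    return sum(b * q for b, q in zip(bod, flow))
```

Beyond the BP, each additional unit of cost buys much less DO, which is why the solutions between the standard and the BP are the ones both parties are most likely to accept.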

Although the LHS method is powerful, it cannot easily measure the effect of each input (inflow) parameter on the output, since it samples only a single value from each equal-probability partition. To quantify the relative effect of each input parameter on the outputs, a global MC sensitivity analysis has to be performed, in which small deviations of the input parameters around their mean values are included and the effect of this variability on the output variables is measured. Linking the aeration to the BOD treatment level and the initial DO of the pollution sources may also be a limitation, because this constraint focuses the optimization on the pollution sources with more initial DO and ignores the others.

Conclusion

Increasing waste loads from pollution sources decrease the water quality of rivers. This issue requires environmentalists and pollution sources to reach an agreement when their interests conflict. Polluters want to release their waste even though this reduces water quality and is against EPA goals. An optimization approach that addresses both parties’ interests can therefore be a suitable method. Herein, both economic and environmental issues are considered in the form of treatment cost and DO concentration along the river. The proposed methodology investigates the influence of river inflow uncertainty on the treatment cost–DO trade-off in river systems and aims to find acceptable solutions. An additional aeration process that adds DO to the treated effluent is included in the methodology, and its efficiency is investigated through a real case study of the Zarjub River.

According to the results, the proposed model achieves satisfactory water quality at a low treatment cost. Although the Pareto fronts show a conflict between environmental and economic objectives, the best solutions are the points at which the DO concentration is above the standard level and the treatment cost is lower. Considering river inflow uncertainty leads to more DO and more discharged BOD than the deterministic model. The waste load criterion of this research shows that this methodology can decrease the waste load from 257.96 to 77.61, 61.35 and 96.13 across the study months, and the treatment cost decreases from 160,000–180,000 $ to 100,000–130,000 $ while considering water quality and the dischargers’ interests. Point sources have to treat their wastewater more extensively than non-point sources.

The final results indicate that limiting the aeration according to the discharged BOD and the initial DO of the pollution sources is a flaw, because it causes the optimization to focus on the pollution sources that have more initial DO than the others. Moreover, the LHS method cannot easily measure the effect of each inflow on the output parameter, so a global MC sensitivity analysis is preferable. In such an analysis, small deviations of the inputs around their mean values are included, and the effect of this variability on the outputs can be measured. Overall, the proposed methodology is a suitable tool for finding solutions that minimize treatment cost in favor of dischargers while improving water quality.
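The MC sensitivity analysis recommended above can be illustrated with a minimal sketch of one sampling-based measure. All inputs are perturbed together with small normal deviations around their means, and each input is ranked by its Pearson correlation with the output. This is a measure of the kind compared by Manache and Melching (2008).

The following are hypothetical assumptions, not the study's setup:

- The model is a simple Streeter–Phelps DO calculation (`toy_do_model`), used as a stand-in for QUAL2Kw.
- All parameter values are illustrative.
- The 10% relative spread is illustrative.

```python
import math
import random
from statistics import fmean


def toy_do_model(q_river, bod_load, kd=0.3, ka=0.6, t=1.0,
                 do_sat=9.0, bod_river=2.0):
    """Hypothetical stand-in for QUAL2Kw: Streeter-Phelps DO (mg/L) after t days.

    q_river in m3/s, bod_load in g/s, bod_river in mg/L; the initial DO
    deficit is taken as zero and the effluent volume is neglected.
    """
    l0 = (q_river * bod_river + bod_load) / q_river  # mixed BOD, g/m3 = mg/L
    deficit = kd * l0 / (ka - kd) * (math.exp(-kd * t) - math.exp(-ka * t))
    return do_sat - deficit


def pearson(xs, ys):
    """Pearson correlation coefficient between two equal-length samples."""
    mx, my = fmean(xs), fmean(ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    return sxy / math.sqrt(sxx * syy)


def mc_sensitivity(means, rel_sd, model, n=2000, seed=0):
    """Perturb all inputs together around their means and correlate with output.

    Each input is drawn from a normal distribution with mean means[k] and
    standard deviation rel_sd * means[k]; the model is run for every draw.
    """
    rng = random.Random(seed)
    samples = {k: [] for k in means}
    outputs = []
    for _ in range(n):
        draw = {k: rng.gauss(m, rel_sd * m) for k, m in means.items()}
        for k, v in draw.items():
            samples[k].append(v)
        outputs.append(model(**draw))
    return {k: pearson(samples[k], outputs) for k in means}


# Hypothetical means: 10 m3/s river flow, 50 g/s BOD load, 10% relative spread
sens = mc_sensitivity({"q_river": 10.0, "bod_load": 50.0}, 0.1, toy_do_model)
print({k: round(v, 2) for k, v in sens.items()})
```

In this toy setting, a higher river flow dilutes the load, so `q_river` should correlate positively with downstream DO. By contrast, `bod_load` should correlate negatively. The magnitude of each coefficient gives a rough ranking of input importance that LHS sampling alone does not report directly.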

References

Aghasian K, Moridi A, Mirbagheri A, Abbaspour M (2019) A conflict resolution method for waste load reallocation in river systems. Int J Environ Sci Technol 16:79–88. https://doi.org/10.1007/s13762-018-1993-3

Anile AM, Cutello V, Nicosia G, Rascuna R, Spinella S (2005) Comparison among evolutionary algorithms and classical optimization methods for circuit design problems. IEEE Congress Evolut Comput 1:765–772. https://doi.org/10.1109/CEC.2005.1554760

Bowers MC, Tung WW, Gao JB (2012) On the distributions of seasonal river flows: lognormal or power law? Water Resour Res 48:W05536. https://doi.org/10.1029/2011WR011308

Burn DH, Lence BJ (1992) Comparison of optimization formulations for waste-load allocations. J Environ Eng 118:597–612. https://doi.org/10.1061/(ASCE)0733-9372

Chapra SC, Pelletier GJ, Tao H (2003) QUAL2K: a modeling framework for simulating river and stream water quality: Documentation and users manual. Civil and Environmental Engineering Dept., Tufts University, Medford, MA. 25: 121

Cho JH, Lee JH (2018) Automatic calibration and performance evaluation of a water quality model for a river greatly influenced by wastewater treatment plant effluent. EPiC Series Eng 3:447–455

Deb K, Agrawal S, Pratap A, Meyarivan T (2000) A fast elitist non-dominated sorting genetic algorithm for multi-objective optimization: NSGA-II. International conference on parallel problem solving from nature. Springer, Berlin, pp 849–858. https://doi.org/10.1007/3-540-45356-3_83

Eheart JW (1980) Cost-efficiency of transferable discharge permits for the control of BOD discharges. Water Resour Res 16:980–986. https://doi.org/10.1029/WR016i006p00980

Estalaki SM, Abed-Elmdoust A, Kerachian R (2015) Developing environmental penalty functions for river water quality management: application of evolutionary game theory. Environ Earth Sci 73:4201–4213. https://doi.org/10.1007/s12665-014-3706-7

Ghosh S, Mujumdar PP (2010) Fuzzy waste load allocation model: a multiobjective approach. J Hydroinform 12:83–96. https://doi.org/10.2166/hydro.2010.028

Hojjati A, Monadi M, Faridhosseini A, Mohammadi M (2018) Application and comparison of NSGA-II and MOPSO in multi-objective optimization of water resources systems. J Hydrol Hydromech 66:323–329. https://doi.org/10.2478/johh-2018-0006

IWPC (Iran’s Water and Power Resources Development Company) (2013) (Available in Persian). Technical Rep., IWPC Research Dep., Tehran, Iran

Langat PK, Kumar L, Koech R (2019) Identification of the most suitable probability distribution models for maximum, minimum, and mean streamflow. Water 11:734. https://doi.org/10.3390/w11040734

Liu D, Guo S, Shao Q, Jiang Y, Chen X (2014) Optimal allocation of water quantity and waste load in the Northwest Pearl River Delta, China. Stoch Environ Res Risk Assess 28:1525–1542. https://doi.org/10.1007/s00477-013-0829-4

Loucks DP, Revelle CS, Lynn WR (1967) Linear programming models for water pollution control. Manage Sci 14:166. https://doi.org/10.1287/mnsc.14.4.B166

Macdonald IA (2009) Comparison of sampling techniques on the performance of Monte-Carlo based sensitivity analysis. In: Eleventh international IBPSA conference, pp 992–999

Manache G, Melching CS (2004) Sensitivity analysis of a water-quality model using Latin hypercube sampling. J Water Resour Plann Manage 130:232–242. https://doi.org/10.1061/(ASCE)0733-9496(2004)130:3(232)

Manache G, Melching CS (2008) Identification of reliable regression- and correlation-based sensitivity measures for importance ranking of water-quality model parameters. Environ Model Softw 23:549–562. https://doi.org/10.1016/j.envsoft.2007.08.001

McKay MD, Beckman RJ, Conover WJ (2000) A comparison of three methods for selecting values of input variables in the analysis of output from a computer code. Technometrics 42:55–61. https://doi.org/10.1080/00401706.2000.10485979

Moridi A (2019) A bankruptcy method for pollution load reallocation in river systems. J Hydroinform 21:45–55. https://doi.org/10.2166/hydro.2018.156

Murty YS, Bhallamudi SM, Srinivasan K (2006) Non-uniform flow effect on optimal waste load allocation in rivers. Water Resour Manage 20:509–530. https://doi.org/10.1007/s11269-006-3084-3

Nikoo MR, Beiglou PH, Mahjouri N (2016) Optimizing multiple-pollutant waste load allocation in rivers: an interval parameter game theoretic model. Water Resour Manage 30:4201–4220. https://doi.org/10.1007/s11269-016-1415-6

Niksokhan MH, Kerachian R, Amin P (2009) A stochastic conflict resolution model for trading pollutant discharge permits in river systems. Environ Monitor Assess 154:219–232. https://doi.org/10.1007/s10661-008-0390-7

Pebesma EJ, Heuvelink GB (1999) Latin hypercube sampling of Gaussian random fields. Technometrics 41:303–312. https://doi.org/10.1080/00401706.1999.10485930

Pelletier GJ, Chapra SC, Tao H (2006) QUAL2Kw–A framework for modeling water quality in streams and rivers using a genetic algorithm for calibration. Environ Model Softw 21:419–425. https://doi.org/10.1016/j.envsoft.2005.07.002

Pimpunchat B, Sweatman WL, Triampo W, Wake GC, Parshotam A (2007) Modelling river pollution and removal by aeration. In: MODSIM 2007 international congress on modelling and simulation. land, water & environmental management: integrated systems for sustainability. modelling and simulation society of Australia and New Zealand, pp 2431–2437

Saberi L, Niksokhan MH (2017) Optimal waste load allocation using graph model for conflict resolution. Water Sci Technol 75:1512–1522. https://doi.org/10.2166/wst.2016.429

Verma N, Kumar B, Bajpai A, Mishra DD (2007) Lake Aeration Systems: Bhopal. Proc. Taal. 1960–1966

Yandamuri SR, Srinivasan K, Murty Bhallamudi S (2006) Multiobjective optimal waste load allocation models for rivers using nondominated sorting genetic algorithm-II. J Water Resour Plann Manage 132:133–143. https://doi.org/10.1061/(ASCE)0733-9496(2006)132:3(133)

Yazdi J, Zahraie B, Salehi Neyshabouri SA (2016) A stochastic optimization algorithm for optimizing flood risk management measures including rainfall uncertainties and nonphysical flood damages. J Hydrol Eng 21:04016006. https://doi.org/10.1061/(ASCE)HE.1943-5584.0001334

Acknowledgements

This research has been supported by research Grant No. 600/1181 funded by Shahid Beheshti University, Tehran, Iran. This research is based on the results of an MSc thesis at Shahid Beheshti University, Tehran, Iran.

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Additional information

Editorial responsibility: Samareh Mirkia.

About this article

Cite this article

Zare Farjoudi, S., Moridi, A. & Sarang, A. Multi-objective waste load allocation in river system under inflow uncertainty. Int. J. Environ. Sci. Technol. 18, 1549–1560 (2021). https://doi.org/10.1007/s13762-020-02897-5

DOI: https://doi.org/10.1007/s13762-020-02897-5