Abstract

In this paper, a secure watermarking algorithm based on chaotic embedding of a speech signal in the discrete wavelet transform (DWT) domain of cover audio is proposed. The speech signal to be embedded is compressed using the discrete cosine transform (DCT) by finding a suitable number of DCT coefficients such that the perceptual quality of the decompressed signal is preserved. A chaotic map is used to select the cover audio frames randomly instead of performing sequential embedding. The cover audio is decomposed using DWT followed by singular value decomposition (SVD), and the DCT coefficients of the speech signal are embedded in the singular matrix of the cover audio. The proposed watermarking algorithm achieves good imperceptibility with an average SNR and ODG of 46 dB and \(-1.07\), respectively. The proposed algorithm can resist various signal processing attacks such as noise addition, low-pass filtering, requantization, resampling, amplitude scaling, and MP3 compression. Experimental results show that the secret speech is reconstructed with an average perceptual evaluation of speech quality (PESQ) score of 4.26 under the no-attack condition, and above 3.0 under various signal processing attacks. Further, the correlation between the original and reconstructed secret speech signals is close to unity. In addition, the loss in the generality of the information of the reconstructed speech signal is tested and is found to be minimal even when the watermarked audio is subjected to various signal processing attacks. The proposed algorithm is also subjected to a false positive test to ensure the security of the watermarking algorithm.


1 Introduction

The security of digital multimedia data is one of the major challenges in communicating sensitive information in fields such as medical diagnosis and the military. Researchers have proposed various techniques based on cryptography, secret sharing, and information hiding to achieve secure communication. In cryptography, a secret key is used to encode the plaintext into a meaningless and unreadable format known as ciphertext. The ciphertext is decoded with a valid key that is shared with authorized persons only. The techniques proposed in [1, 2] use cryptography for secure transmission of audio.

In secret sharing technique, the secret data to be communicated are divided into multiple parts known as shares. In a (k, n) secret sharing scheme, the secret message is divided into ‘n’ shares, and at least ‘k’ shares are required to regenerate the secret message [3, 4]. Bharthi et al. [5] proposed a verifiable (n, n) secret audio sharing scheme where the audio is divided into stream of amplitudes and signs. These streams are further divided into shares and a key is embedded into them to avoid the reconstruction of original audio by unauthorized users.

Cryptography and secret sharing-based techniques have certain limitations: (1) the existence of the secret data cannot be concealed, and (2) retrieval of the secret data is difficult when an intentional signal processing attack, such as noise addition, compression, or cropping, is performed.

The process of concealing secret data in a cover medium (audio, image, or text) is known as information/data hiding, commonly referred to as watermarking or steganography. These data hiding techniques conceal the secret data and exhibit good robustness to signal processing attacks, so that the secret message can be recovered with minimum error. In addition, they ensure that the perceptual distortion introduced by embedding is minimal.

In this paper, a novel audio watermarking algorithm is proposed where both the cover medium and secret data are audio signals. The audio watermarking techniques can be classified into time domain and transform domain [6,7,8]. In time domain, the secret data can be embedded in various ways such as direct modification of amplitudes, substitution of least significant bits (LSB), and insertion of echo signals. The time-domain approaches are easier and faster for implementation, but these are not robust to the signal processing attacks [7].

In the transform domain, the cover audio is transformed using frequency transformation techniques such as the fast Fourier transform (FFT), discrete cosine transform (DCT), discrete wavelet transform (DWT), and lifting wavelet transform (LWT). The secret data are then embedded in these transformed coefficients to achieve good imperceptibility and robustness to attacks.

To the best of the authors’ knowledge, only a few works [9,10,11,12,13,14,15,16,17] have been reported for hiding secret speech in audio. Xu et al. [9] proposed a secure speech communication scheme in which the secret speech is compressed using a compressive sensing method and converted into a binary stream before embedding. The cover audio is transformed using DCT followed by LWT, and the binary bits are embedded in the LWT coefficients using the scalar Costa scheme. At the extraction side, a pre-trained K-SVD-based dictionary is used to decompress the extracted speech signal. The experimental results show that the watermarked audio achieves a segmental signal-to-noise ratio (SNR) of 32.34 dB with a mean opinion score (MOS) of 3.616. Similarly, the reconstructed speech signal achieves a segmental SNR of 13.06 dB, which is enhanced to 14.50 dB after wavelet de-noising. In addition, the results demonstrated that the scheme is robust to additive white Gaussian noise (AWGN) of 20 dB and low-pass filtering (LPF), with normalized correlation coefficients (NCC) of 0.91 and 0.99, respectively.

Shahidi et al. [10] proposed an audio steganography scheme using integer LWT. The secret speech samples are converted into binary stream and then embedded in LSB positions of second level LWT coefficients of cover audio by a dynamic stego key. The proposed scheme achieves hiding capacity of 25% of the cover audio size and SNR of watermarked audio is about 45 dB. Moreover, it shows robustness against adaptive noise up to 60 dB AWGN with NCC equal to 0.96.

Ballesteros et al. [11] proposed a steganography model in which the secret speech and the cover speech are decomposed using DWT and then the wavelet coefficients of speech signal are sorted as per the order of cover audio’s wavelet coefficients. The embedding of these sorted coefficients of speech signal is performed by modifying the first five LSB positions of wavelet coefficients of cover audio. The order in which the coefficients are sorted is used as a key at extraction side to recover the secret speech. Due to its large key size, the watermarking system achieves higher security.

Ali et al. [12] compressed the secret audio using a fractal coding technique in which the best match of the secret audio with the cover audio is computed to obtain the corresponding fractal parameters. These fractal parameters are then converted into binary and chaotically embedded in LSB positions of the cover audio. This method achieved good imperceptibility of the extracted secret speech with an average NCC equal to 0.99. The same authors proposed a modified version of this technique in [16], wherein the fractal parameters are embedded in the LWT coefficients of the cover audio using a uniform coefficient modulation scheme. That scheme extracts the secret speech with an average correlation of 0.99 under the no-attack condition. In addition, the robustness test results showed that the scheme can resist an AWGN attack of 30 dB, echo addition, and cropping. The computational time of these fractal coding-based techniques is higher because fractal coding is asymmetric: encoding is time-consuming during the range-domain matching process, while decoding is simple and faster.

Ballesteros et al. [13] proposed a technique in which the secret speech samples are scrambled such that they imitate a super-Gaussian noise signal with statistics similar to those of the secret speech. The scrambled samples of the secret speech are embedded into LSB positions of the cover audio based on an adaptive parameter. At the extraction side, the secret speech is extracted by using a key that contains the original positions of the scrambled speech signal. The scheme achieves \(99.7\%\) transparency of watermarked audio with an average SNR of 23 dB, and the secret speech is extracted with a correlation of 0.974. The authors have reported that the robustness evaluation of the scheme is left as future work.

Bharthi et al. [14] proposed an audio steganography technique in which the amplitudes and signs of the secret audio signal samples are separated and embedded in LSB positions of cover audio samples in a non-deterministic manner using a key. The experimental results showed that the secret speech is extracted with an average correlation of 0.97 under the no-attack condition.

Alsabhany et al. [15] proposed an adaptive multi-level phase coding audio steganography technique based on FFT and LSB. This method achieved perceptual transparency of 35 dB SNR of watermarked audio, and robustness to AWGN attack with an average bit error rate of 0.3.

The limitations of the techniques discussed above are as follows: The technique proposed in [9] requires a pre-trained dictionary to decompress the extracted secret speech signal. Fractal encoding in [12, 16] and speech scrambling in [13] are iterative procedures that consume more time to process the secret speech signal before embedding. In addition, the watermarking algorithms proposed in [9, 10, 14, 16] do not provide high robustness against signal processing attacks.

The authors of this paper proposed a steganography technique in [17] in which the secret speech is divided into non-overlapping frames and SVD is applied on each frame. The singular values of each secret speech frame are embedded in the singular value matrix of the DWT coefficients of the cover audio. This scheme achieves good imperceptibility and robustness to signal processing attacks, but it is a non-blind technique that requires partial information about the secret speech at the extraction stage.

To overcome the above said limitations, a watermarking algorithm for embedding the secret speech in the cover audio has been proposed that provides good imperceptibility and robustness. The major contributions of this paper are:

- DCT-based compression is used for compressing the speech signal, and a suitable number of DCT coefficients is obtained for embedding.

- Random numbers are generated for chaotic embedding of the secret data in cover audio to increase the security of watermarking.

- DWT–SVD-based watermarking is proposed in which the secret data are embedded in the singular value matrix of cover audio.

- Robustness of the proposed method is tested by computing NCC, perceptual evaluation of speech quality (PESQ) score, and the loss in generality of the information of the reconstructed speech signal.

The organization of this paper is as follows: Preliminaries are discussed in Sect. 2, proposed method of embedding and extraction are explained in Sect. 3. Experimental results are presented in Sect. 4, followed by conclusion in Sect. 5.

2 Preliminaries

2.1 Compression of Speech Signal Using DCT

In this paper, a DCT-based compression technique is used because of its energy compaction property. The information present in the higher-order DCT coefficients is negligible and can be eliminated without a significant effect on the speech signal [18]. Consider a sequence x(n) of length M; the 1-D DCT of the sequence is computed as:

\[X(k)=w(k)\sum _{n=0}^{M-1}x(n)\cos \left( \frac{\pi (2n+1)k}{2M}\right) \qquad (1)\]

where \(k=0,1,2,\dots ,M-1\) and

\[w(k)={\left\{ \begin{array}{ll}\sqrt{1/M}, &{} k=0\\ \sqrt{2/M}, &{} 1\le k\le M-1\end{array}\right. }\]

Similarly, the inverse DCT is computed as:

\[x(n)=\sum _{k=0}^{M-1}w(k)X(k)\cos \left( \frac{\pi (2n+1)k}{2M}\right) \qquad (2)\]

where \(n=0,1,2,\dots ,M-1\). Figure 1 shows a frame of speech signal of length 96 samples and its DCT coefficients. It can be observed that the energy of the signal is concentrated in the lower-order coefficients, whereas the higher-order DCT coefficients are negligible.

Therefore, a compressed version of the signal can be obtained by neglecting the higher-order DCT coefficients and retaining a suitable number of lower-order coefficients such that the correlation coefficient (CC) between the original and decompressed signals is close to unity and the PESQ score is at least 4.0. Algorithm 1 shows the procedure for finding the suitable number of DCT coefficients of secret speech for embedding in the cover audio.

In this algorithm, the compression factor (CF) is initialized to zero, and in each iteration, the CF is incremented by a step size of ‘1/l’ (\(l\le M\)) for finding the suitable number of DCT coefficients for compression. For each value of CF, PESQ score for decompressed speech signal is measured. If the PESQ \(\ge 4.0\), then the algorithm will return the corresponding CF value. As per the International Telecommunication Union—Telecommunication Standardization Sector (ITU-T) P.862 standard [19], PESQ score lies between \(-0.5\) and 4.5, where, 1 indicates poor quality and 4.5 indicates excellent.

A small value of ‘l’ terminates the algorithm quickly, whereas a large value of ‘l’ provides better resolution. So, a proper value of ‘l’ must be chosen based on the frame size M.
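The search described above can be sketched in Python as follows. This is a minimal illustration under stated assumptions, not the authors' implementation: PESQ requires an ITU-T P.862 implementation, so a plain correlation coefficient with a hypothetical threshold of 0.99 stands in as the quality measure, and the names `dct`, `idct`, `correlation`, and `find_cf` are illustrative.

```python
import math

def _w(k, M):
    # Orthonormal DCT weight: sqrt(1/M) for k = 0, sqrt(2/M) otherwise.
    return math.sqrt(1.0 / M) if k == 0 else math.sqrt(2.0 / M)

def dct(x):
    """1-D DCT of a list of samples (direct O(M^2) evaluation)."""
    M = len(x)
    return [_w(k, M) * sum(x[n] * math.cos(math.pi * (2 * n + 1) * k / (2 * M))
                           for n in range(M))
            for k in range(M)]

def idct(X):
    """Inverse of dct()."""
    M = len(X)
    return [sum(_w(k, M) * X[k] * math.cos(math.pi * (2 * n + 1) * k / (2 * M))
                for k in range(M))
            for n in range(M)]

def correlation(a, b):
    """Pearson correlation coefficient between two equal-length lists."""
    ma, mb = sum(a) / len(a), sum(b) / len(b)
    num = sum((p - ma) * (q - mb) for p, q in zip(a, b))
    den = math.sqrt(sum((p - ma) ** 2 for p in a) * sum((q - mb) ** 2 for q in b))
    return num / den

def find_cf(frame, l=8, quality=correlation, threshold=0.99):
    """Increase CF in steps of 1/l until the quality target is met.

    ASSUMPTION: `quality` is a stand-in for PESQ and `threshold` is
    hypothetical; the text uses PESQ >= 4.0 as the stopping rule.
    Returns (CF, number of retained DCT coefficients).
    """
    M = len(frame)
    X = dct(frame)
    for step in range(1, l + 1):
        keep = (M * step) // l
        truncated = X[:keep] + [0.0] * (M - keep)  # drop higher-order terms
        if quality(frame, idct(truncated)) >= threshold:
            return step / l, keep
    return 1.0, M

# Usage on a synthetic 96-sample frame (not real speech).
frame = [math.sin(2 * math.pi * 3 * n / 96) + 0.5 * math.sin(2 * math.pi * 7 * n / 96)
         for n in range(96)]
cf, kept = find_cf(frame)
```

With \(M=96\) and \(l=8\), each step adds 12 coefficients, so a returned CF of 6/8 would correspond to 72 retained coefficients.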

2.2 Chaotic Map for Random Embedding

Chaotic systems are a type of dynamic system whose seemingly random states and irregularities depend on governing equations (a difference equation termed a chaotic map) that are highly sensitive to the initial conditions. Because of these properties, they can be used as a basis for generating random numbers efficiently [1, 20]. As the chaotic system is highly sensitive to the initial conditions of the chaotic map, a very small change in the initial condition causes the output to diverge. Various 1-D chaotic maps exist in the literature for generating random numbers, such as the logistic map, tent map, Bernoulli shift, and Chen map [1].

In this paper, a series of random numbers is generated using a logistic chaotic map to choose the cover audio frames for embedding. With the chaotic map, data are embedded in randomly selected cover audio frames instead of sequentially, which in turn increases the security of watermarking.

The logistic map is one of the most popular models for discrete nonlinear dynamic systems and is analogous to the logistic equation first proposed by Pierre Francois Verhulst [21]. A logistic map is given by,

\[y_{n+1}=r\,y_n\left( 1-y_n\right) \qquad (3)\]

where \(y_n \in \left( 0,1\right) \) is the ratio of the existing population to the maximum possible population after n years (i.e., \(y_n\) is the random value after n iterations), \(y_0 \in \left( 0,1\right) \) is the initial population, and \(r \in \left( 0,4 \right] \) is the parameter that controls the rate of population growth.

The bifurcation diagram of the logistic map for \(y_0=0.052\) is shown in Fig. 2. It can be observed that the randomness in the value of y depends on the control parameter r. If \(r \in \left( 0,1 \right) \), then the value of y converges to 0 and is independent of the initial population. If \(r \in \left[ 1,3 \right) \), then the value of y approaches \((r-1)/r\) and is also independent of the initial population, and for \(r \in \left( 3.5,4 \right] \), the logistic map shows chaotic characteristics. Figure 3 shows the randomness of y distributed between zero and one for 10,000 iterations with initial value \(y_0=0.052\) and \(r=4\). The value of y always lies between 0 and 1, whereas random integers are required to choose the cover audio frames for embedding. These can be obtained by modifying the result of the logistic map as follows [22]:

where \(y_i^\prime \) is the random integer, \(y_i\) is the random number generated from Eq. (3) with the given \(y_0\) and r values, and p is the upper limit of the interval within which the random integers are to be generated.

Finally, the set of \(y_i^\prime \) are sorted in ascending order, and the repetition of integers is removed by using MATLAB functions sort() and unique(), respectively, as shown in Eq. (5).

where loc contains the random integers. These are used as frame indices of the cover audio in which the secret data is to be embedded.
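A minimal Python sketch of this key-driven frame selection is given below. The logistic recurrence follows the description above; the mapping from \(y_i\) to an integer is an assumption made for illustration (scaling by p and taking the floor), since the exact form of Eq. (4) is not reproduced here, and the function names are hypothetical.

```python
def logistic_sequence(y0, r, count):
    """Iterate y <- r * y * (1 - y) and return the first `count` values."""
    y = y0
    values = []
    for _ in range(count):
        y = r * y * (1.0 - y)
        values.append(y)
    return values

def chaotic_frame_indices(y0, r, count, p):
    """Map the chaotic values to sorted, unique frame indices in [0, p-1].

    ASSUMPTION: floor(y * p) is used as the integer mapping; the paper's
    Eq. (4) may differ. sorted(set(...)) mirrors MATLAB's unique(sort(.)).
    """
    integers = [min(int(y * p), p - 1) for y in logistic_sequence(y0, r, count)]
    return sorted(set(integers))

# The pair (y0, r) acts as the key: the same key regenerates the same indices.
loc = chaotic_frame_indices(y0=0.052, r=3.95, count=200, p=100)
```

Because the sequence is fully determined by \(y_0\) and r, the extractor only needs these two values to regenerate `loc`.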

2.3 Discrete Wavelet Transform

The DWT represents the signal’s characteristics in both the time and frequency domains using a scaling function \(\phi (t)\) and a wavelet function \(\psi (t)\), which are defined as follows [23]:

\[\phi (t)=\sum _{n}h_0(n)\sqrt{2}\,\phi (2t-n),\qquad \psi (t)=\sum _{n}h_1(n)\sqrt{2}\,\phi (2t-n)\]

where \(h_0(n)\) and \(h_1(n)\) are the coefficients of the low-pass and high-pass analysis filters, respectively.

If the signal \(f(t)\in L^2({\mathbb {R}})\), then it can be expressed as a series expansion of scaling and wavelet functions given by,

\[f(t)=\sum _{k}c(k)\,\phi _{k}(t)+\sum _{j}\sum _{k}d(j,k)\,\psi _{j,k}(t)\]

where c(k) are the low-pass coefficients, and d(j, k) are the \(j\mathrm{th}\)-level high-pass coefficients of the wavelet transform. The first term in the above expansion gives a lower-resolution approximation of the signal f(t), and the second term gives the higher-resolution details of the signal for each value of j.

Figure 4 shows the multi-level DWT decomposition of the signal f(t) employing the filter bank \(h_0(n)\) and \(h_1(n)\), where the output of the low-pass filter is called the approximate coefficients and the output of the high-pass filter the detailed coefficients. These are obtained by calculating the inner products of f(t) with \(\phi _{k}(t)\) and of f(t) with \(\psi _{j,k}(t)\), respectively, as follows:

\[c(k)=\langle f(t),\phi _{k}(t)\rangle ,\qquad d(j,k)=\langle f(t),\psi _{j,k}(t)\rangle \]

where \(\langle \cdot \rangle \) indicates inner product, \( \phi _{k}(t) = \phi (t-k)\), and \( \psi _{j,k}(t) = 2^{j/2}\psi (2^{j}t-k)\).

Similarly, the inverse DWT is computed as follows:

where \({\tilde{h}}_0(n)\) and \({\tilde{h}}_1(n)\) are the coefficients of low-pass and high-pass synthesis filter, respectively. The corresponding filter coefficients are obtained as: \({\tilde{h}}_i(n)={h}_i(L-1-n)\), where \(i=0,1\) and ‘L’ is length of the filter.

In this paper, DWT is chosen for watermarking due to the following advantages: (1) As the \(L_2\) norm is preserved in the DWT domain, the amount of distortion introduced in the high-energy approximate coefficients due to embedding is minimal. Hence, good imperceptibility of the watermarked audio can be achieved [24]. (2) Watermarking in the approximate coefficients of a signal is found to be robust against signal processing attacks [25].
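The two-level decomposition and the norm-preservation property can be illustrated with a short Python sketch. The Haar filter pair is an assumption chosen for brevity; the wavelet family used in the paper is not stated in this section, and the function names are illustrative.

```python
import math

SQRT2 = math.sqrt(2.0)

def haar_dwt(x):
    """One level of the orthonormal Haar DWT (len(x) must be even)."""
    approx = [(x[2 * i] + x[2 * i + 1]) / SQRT2 for i in range(len(x) // 2)]
    detail = [(x[2 * i] - x[2 * i + 1]) / SQRT2 for i in range(len(x) // 2)]
    return approx, detail

def haar_idwt(approx, detail):
    """Inverse of haar_dwt()."""
    x = []
    for a, d in zip(approx, detail):
        x.append((a + d) / SQRT2)
        x.append((a - d) / SQRT2)
    return x

def dwt_level2(frame):
    """Return (second-level approx, second-level detail, first-level detail)."""
    a1, d1 = haar_dwt(frame)
    a2, d2 = haar_dwt(a1)
    return a2, d2, d1

def idwt_level2(a2, d2, d1):
    return haar_idwt(haar_idwt(a2, d2), d1)

def energy(x):
    return sum(v * v for v in x)

# A synthetic 512-sample frame yields 128 second-level approximate coefficients.
frame = [math.sin(0.05 * n) + 0.1 * math.cos(1.3 * n) for n in range(512)]
a2, d2, d1 = dwt_level2(frame)
```

For an orthonormal wavelet such as Haar, `energy(frame)` equals `energy(a2) + energy(d2) + energy(d1)` up to rounding, which is the norm-preservation property referred to in advantage (1).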

2.4 Singular Value Decomposition

SVD decomposes a matrix [A] of size \(m\times m\) into a product of three matrices as shown below:

\[A=USV^{T}=\sum _{i=1}^{m}\sigma _{i}u_{i}v_{i}^{T}\]

where \(u_i\) and \(v_i\) are the columns of the U and V matrices, respectively. These \(u_i\) and \(v_i\) components are obtained by computing the eigenvectors of the matrices \(AA^T\) and \(A^{T}A\), respectively. S is known as the singular matrix; it contains the singular values \((\sigma _{i}\ge 0)\) of A arranged diagonally such that \(\sigma _{1}\ge \sigma _{2}\ge \cdots \ge \sigma _{m}\), with zeros in the non-diagonal positions.

In this paper, SVD is chosen for watermarking the cover audio signal due to its unique properties. The embedding of data in singular matrix provides good imperceptibility and robustness to the watermarked signal because the singular matrix [S] represents the energy of a signal [26]. If any data are embedded into [S] matrix, then the variation in its singular values is minimum [27].

3 Proposed Method of Watermarking and Extraction

3.1 Embedding Algorithm

The process of embedding the secret speech signal in cover audio is shown in Fig. 5, and the steps are explained as follows:

Step 1: Pre-processing

The cover audio signal is divided into \(N_c\) non-overlapping frames with a frame size of N samples. The secret speech signal is divided into \(N_s\) non-overlapping frames with a frame size of M samples, and the 1-D DCT is applied on each frame.

The DCT coefficients of each secret audio frame are further divided into ‘l’ segments to obtain the DCT coefficients for embedding in the cover audio frame, as discussed in Sect. 2.1. To select the cover audio frames chaotically for embedding the secret speech, random integers are generated using Eqs. (3), (4), and (5) with initial conditions \(y_0\) and r. These values serve as the key for the logistic chaotic map at the extraction side.

Step 2: DWT

Initially, the cover audio frames are selected chaotically from the set of random integers generated in step 1, and then second-level DWT is performed on each cover audio frame. The approximate coefficients of the corresponding cover audio frame are further divided into ‘l’ segments.

Consider that \(c_k\) represents the second-level approximate coefficients of the \(k\mathrm{th}\) cover audio frame of size ‘N’ samples.

Then, \(c_k^{i}\) represents the \(i\mathrm{th}\) segment of the \(k\mathrm{th}\) frame as follows:

where \(i=1,2,\dots ,l\). These N/4l coefficients are arranged in an \(m\times m\) matrix so that the SVD operation can be performed on it.
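As a worked illustration, assuming the parameter values reported in Sect. 4 (cover frame size \(N=512\) and \(l=8\) segments), the segment size and matrix dimension work out as follows. The value \(m=4\) is inferred here from these numbers rather than quoted from the text.

```latex
\[
\frac{N}{4}=\frac{512}{4}=128 \ \text{second-level approximate coefficients per frame},
\qquad
\frac{N}{4l}=\frac{128}{8}=16=m^{2}\;\Rightarrow\; m=4 .
\]
```

A \(4\times 4\) matrix has \(16-4=12\) non-diagonal positions, which matches the 12 DCT coefficients carried by each segment of a 96-sample speech frame (\(96/8=12\)).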

Step 3: SVD

The SVD operation is performed on the coefficient matrix \([c_k^i]_{m\times m}\) to decompose it into the \([U_k^i]\), \([S_k^i]\), and \([V_k^i]\) matrices, so that the data can be embedded in the singular matrix.

Step 4: Embedding into cover audio frame

The DCT coefficients of \(i\mathrm{th}\) segment of \(k\mathrm{th}\) secret speech frame are arranged in a matrix [W] of size \(m \times m\) such that the zeros are arranged diagonally, while the DCT coefficients in non-diagonal positions are as follows:

where \(X_1, X_2, \dots , X_{12}\) are the DCT coefficients of the secret speech signal.

The watermark is embedded into the singular matrix \([S_k^i]\) such that \([{{\hat{S}}}_k^i]=[S_k^i]+\alpha [W]\), where \(\alpha \) is a scaling parameter that ranges in between zero and one. The small value of ‘\(\alpha \)’ leads to better imperceptibility, whereas large value leads to better robustness. Hence, it must be chosen to optimize the trade-off between imperceptibility and robustness. In this paper, ‘\(\alpha \)’ is chosen empirically and is set to 0.08.

The SVD operation is again performed on the modified matrix \([{{\hat{S}}}_k^i]\) to obtain the \([{{\hat{U}}}_k^i]\) and \([{{\hat{V}}}_k^i]\) matrices, which are used during the extraction phase.

Step 5: Generation of watermarked audio frame

Inverse SVD operation is performed on \([{{\hat{S}}}_k^i]\) by multiplying with corresponding \([U_k^i]\) and \([V_k^i]^T\) matrices to obtain the modified approximate coefficients \([{{\hat{c}}}_k^i]\) of the cover audio frame. These modified coefficients are arranged into a vector form, and then second-level inverse DWT is performed to get the watermarked audio frame.

This embedding process is repeated for all the chaotically selected frames and then merged to get the watermarked audio.

3.2 Extraction Algorithm

In the extraction phase, the initial conditions of the logistic map are given as a key for finding the embedding locations in the watermarked audio to extract the secret speech. The process of extracting the secret speech signal is shown in Fig. 6. The watermarked audio is divided into non-overlapping frames, which are selected chaotically using the random integers generated from the logistic map. The \(2\mathrm{nd}\)-level DWT is applied on these selected frames, and the approximate coefficients are arranged in a matrix \([\dot{c}_k^i]\).

The SVD operation is performed on \([\dot{c}_k^i]\) to decompose it into three matrices, namely \([\dot{U}_k^i]\), \([\dot{S}_k^i]\), and \([\dot{V}_k^i]\). The secret data are extracted by performing the inverse SVD on \([\dot{S}_k^i]\) using the pre-stored matrices \([{{\hat{U}}}_k^i]\) and \([{{\hat{V}}}_k^i]\), which results in a matrix \([D_k^i]\), as follows:

\[[D_k^i]=[{{\hat{U}}}_k^i]\,[\dot{S}_k^i]\,[{{\hat{V}}}_k^i]^{T}\]

The watermark data are extracted from the matrix \([D_k^i]\) as follows:

where \(D_w\) denotes the extracted DCT coefficients of the secret speech. The above process is repeated for all l segments of the \(k\mathrm{th}\) watermarked audio frame, and then the M-point inverse DCT is performed using Eq. (2) to reconstruct the secret speech frame. Finally, all the extracted frames are merged to reconstruct the secret speech.
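The embedding and extraction steps for a single segment can be sketched with NumPy as a round trip under no attack. Several details are assumptions made for illustration: the row-major placement of the coefficients in the non-diagonal positions of [W], the use of the original singular matrix [S] as side information at the extractor, and the recovery rule \((D-S)/\alpha \); the function names are hypothetical.

```python
import numpy as np

def build_watermark(coeffs, m=4):
    """Place m*m - m coefficients off the diagonal (ASSUMED row-major order)."""
    W = np.zeros((m, m))
    W[~np.eye(m, dtype=bool)] = coeffs
    return W

def embed(C, W, alpha=0.08):
    """Embed W into the singular matrix of the coefficient matrix C."""
    U, s, Vh = np.linalg.svd(C)
    S = np.diag(s)
    S_hat = S + alpha * W                    # modified singular matrix
    U_hat, _, Vh_hat = np.linalg.svd(S_hat)  # stored for the extraction phase
    C_hat = U @ S_hat @ Vh                   # modified approximate coefficients
    return C_hat, (U_hat, Vh_hat, S)

def extract(C_dot, side_info, alpha=0.08):
    """Recover the embedded coefficients from a (possibly attacked) matrix."""
    U_hat, Vh_hat, S = side_info             # ASSUMPTION: S is available here
    s_dot = np.linalg.svd(C_dot, compute_uv=False)
    D = U_hat @ np.diag(s_dot) @ Vh_hat
    W_rec = (D - S) / alpha
    return W_rec[~np.eye(S.shape[0], dtype=bool)]

# Round trip on synthetic data (not real audio).
rng = np.random.default_rng(0)
C = rng.standard_normal((4, 4))     # stands in for one 4x4 coefficient segment
coeffs = rng.standard_normal(12)    # stands in for 12 DCT coefficients
C_hat, side_info = embed(C, build_watermark(coeffs))
recovered = extract(C_hat, side_info)
```

The round trip works because multiplying \([{{\hat{S}}}_k^i]\) by orthogonal matrices leaves its singular values unchanged, so the singular values of the watermarked segment equal those of \([{{\hat{S}}}_k^i]\), and the stored \([{{\hat{U}}}_k^i]\) and \([{{\hat{V}}}_k^i]\) rebuild it.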

4 Results and Discussions

The proposed watermarking algorithm is tested on the unified speech and audio coding (USAC) database [28], which contains five music files sampled at 48 kHz with 16-bit quantization. The secret audio is chosen from the NOIZEUS database [29], which contains 30 speech signals (15 male and 15 female voices) sampled at 8 kHz with 16-bit quantization. In this section, the compression test results on the secret audio and the performance of the proposed audio watermarking technique are discussed.

4.1 Compression of Secret Audio

In this paper, the DCT-based compression of the secret speech signal is performed with a frame size of \(M=96\), and the compression factor CF is incremented by 1/8 in each iteration. Therefore, 12 additional DCT coefficients are retained in each iteration, and the corresponding PESQ score is measured.

In the proposed algorithm, the compressed secret speech is embedded in the cover audio. Upon extraction, in addition to the PESQ score, the decompressed speech signal was also tested to verify whether the reconstructed secret speech is compatible with existing speech-to-text conversion algorithms, which may be employed in applications such as speech recognition. This is done by converting the decompressed speech signal into text using the Microsoft Azure Speech application program interface (API) [30] built using JavaScript. The original text of the speech signals is taken from [29] and compared with the results of the speech-to-text API to count the number of erroneous words.

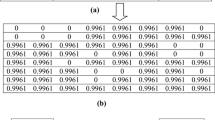

Table 1 shows the transparency test results of the secret speech signal ‘Sp13’ for various values of CF. It can be observed that for \(\mathrm{CF}=6/8\), the PESQ score is greater than 4.0 and the number of erroneous words is zero. It is also observed that the CC is close to unity and the signal-to-noise ratio (SNR) of the decompressed speech signal is 25 dB, which is an acceptable value as per the International Federation of the Phonographic Industry (IFPI) standard [31].

In a similar manner, the compression is performed on all the speech signals of the NOIZEUS database, and it is found that the compression factor \(\mathrm{CF}=6/8\) gives the best result. Therefore, 72 DCT coefficients from each speech frame are taken and then embedded into the cover audio.

4.2 Performance of Proposed Audio Watermarking Algorithm

The performance of the watermarking algorithm is evaluated by conducting 150 tests for both imperceptibility and robustness, embedding each secret speech signal into each of the cover audio signals. In the proposed watermarking algorithm, the frame size of the cover audio is chosen as 512 samples. The initial conditions for the logistic map were chosen as \(y_0=0.052\) and \(r=3.95\) to generate random numbers, and the random integers are generated using Eq. (4) with ‘p’ equal to the number of cover audio frames.

4.2.1 Imperceptibility

The imperceptibility of watermarked audio is quantified by measuring SNR as per Eq. (19),

\[\mathrm{SNR}_w=10\log _{10}\left( \frac{\sum _{n}s^{2}(n)}{\sum _{n}\left[ s(n)-s^\prime (n)\right] ^{2}}\right) \qquad (19)\]

where \(\mathrm{SNR}_w\) is the SNR of the watermarked audio, and s(n) and \(s^\prime (n)\) are the cover and watermarked audio signals, respectively. In addition to SNR, the objective difference grade (ODG) score is measured using the perceptual evaluation of audio quality (PEAQ) measurement technique [32] specified by the International Telecommunication Union Radiocommunication Sector (ITU-R) BS.1387 standard [33]. The ODG score quantifies the perceptual quality of watermarked audio as shown in Table 2.
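The SNR measure can be sketched in a few lines of Python, assuming the usual definition as the ratio of cover-signal energy to embedding-distortion energy in decibels. The function name is illustrative, and the ODG score is not covered because it requires a PEAQ implementation.

```python
import math

def snr_db(cover, watermarked):
    """SNR in dB between a cover signal and its watermarked version."""
    signal_energy = sum(s * s for s in cover)
    noise_energy = sum((s - t) ** 2 for s, t in zip(cover, watermarked))
    return 10.0 * math.log10(signal_energy / noise_energy)

# Synthetic check: a uniform 1% amplitude error corresponds to about 40 dB.
cover = [1.0] * 100
watermarked = [1.01] * 100
value = snr_db(cover, watermarked)
```

Identical signals give zero distortion energy, so a practical implementation would need to guard against division by zero in that case.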

The SNR and ODG values of the watermarked audio signals with speech signal ‘Sp01’ as secret audio are shown in Table 3. It is observed that the watermarked audio achieves an average SNR of 47 dB and an ODG score of \(-0.8\). In a similar manner, each speech signal from the NOIZEUS database is embedded in all five cover audio signals, and the \(\mathrm{SNR}_w\) and ODG score are measured as described. Table 4 shows the average \(\mathrm{SNR}_w\) and ODG scores of all five watermarked audio signals corresponding to each secret speech signal. The average SNR of all 150 watermarked audio signals is 46 dB \(\pm 0.7242\) and the average ODG score is \(-1.07 \pm 0.0070\). These results show that the distortion introduced by embedding the secret speech in the cover audio is negligible, and the perceptual quality of the watermarked audio is preserved.

The comparison of imperceptibility results of watermarked audio is shown in Table 5. It is observed that the proposed algorithm achieves better SNR than the techniques present in [9, 10, 13]. The fractal encoding-based watermarking techniques in [12, 16] achieve significantly better SNR than the proposed algorithm.

According to IFPI standard [31], the SNR of watermarked audio should be greater than 20 dB to achieve good imperceptibility. The proposed approach achieves an average SNR of 46 dB that meets the said criterion.

The imperceptibility of watermarked audio is also quantified by measuring the ODG score. From Table 2, it is observed that an ODG score in the range of \(-1\) to 0 indicates that the watermarked audio is imperceptible. From the imperceptibility test results in Table 4, the average ODG score of the watermarked audio is \(-1.07\). From these experimental results, it is observed that the proposed approach maintains the minimum criteria required for achieving good imperceptibility of watermarked audio.

4.2.2 Robustness

Robustness test measures the ability of watermarked audio to reconstruct the secret data when it is subjected to various signal processing attacks. Various signal processing attacks that are considered in this paper are mentioned below:

1. Amplitude scaling (AS): The amplitude of watermarked audio is scaled by a factor of 0.75.

2. Additive white Gaussian noise (AWGN): A white Gaussian noise of SNR 20 dB is added to the watermarked audio.

3. Low-pass filtering (LPF): A second-order low-pass filter with cut-off frequency of 24 kHz is applied to the watermarked audio.

4. Requantization (RQ): The 16-bit watermarked audio is quantized to 8 bits/sample and back to 16 bits/sample.

5. MP3 compression: Watermarked audio signal is compressed to MPEG-I layer III (MP3) format at the rate of 128 kbps and again converted back to .wav format.

6. Resampling (RS): Watermarked audio is downsampled and then upsampled to original sampling frequency.
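Three of the attacks listed above are simple enough to sketch in plain Python. This is a hedged illustration with hypothetical function names: the noise level is set from the measured signal power, and requantization is modeled by dropping the eight least significant bits of 16-bit integer samples, which is one possible reading of the attack. Filtering, MP3 compression, and resampling need a signal processing or codec library and are not shown.

```python
import math
import random

def amplitude_scale(x, factor=0.75):
    """Scale every sample by a constant factor."""
    return [factor * v for v in x]

def add_awgn(x, snr_db=20.0, seed=0):
    """Add white Gaussian noise so that the signal-to-noise ratio is snr_db."""
    rng = random.Random(seed)
    signal_power = sum(v * v for v in x) / len(x)
    sigma = math.sqrt(signal_power / (10.0 ** (snr_db / 10.0)))
    return [v + rng.gauss(0.0, sigma) for v in x]

def requantize(x16, bits=8):
    """Reduce 16-bit integer samples to `bits` bits and expand them back.

    ASSUMPTION: truncation of the low-order bits (floor rounding).
    """
    shift = 16 - bits
    return [(v >> shift) << shift for v in x16]

# Synthetic usage.
scaled = amplitude_scale([1.0, -2.0])
coarse = requantize([256, 255, -1, 1000])
tone = [1000.0 * math.sin(0.01 * n) for n in range(20000)]
noisy = add_awgn(tone, snr_db=20.0)
```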

To evaluate the robustness of watermarking algorithm, NCC, SNR and PESQ score are measured in this paper. The purpose of NCC is to find the similarity between the original and reconstructed secret speech signals which is computed as per Eq. (20).

where \(S_\mathrm{o}(i)\) and \(S_\mathrm{r}(i)\) represent the original and reconstructed secret speech signals, respectively. Similarly, the SNR and PESQ score are measured to assess the perceptual quality of the reconstructed secret speech signal. In addition to these parameters, the number of erroneous words present in the text extracted from the reconstructed secret speech is also determined.
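A short Python sketch of a normalized correlation measure is given below. The exact form of Eq. (20) is not reproduced here, so the commonly used energy-normalized cross-correlation is assumed, and the function name is illustrative.

```python
import math

def ncc(original, reconstructed):
    """Normalized cross-correlation between two equal-length signals.

    ASSUMPTION: sum(So*Sr) / sqrt(sum(So^2) * sum(Sr^2)); the paper's
    Eq. (20) may use a different normalization.
    """
    cross = sum(a * b for a, b in zip(original, reconstructed))
    norm = math.sqrt(sum(a * a for a in original) *
                     sum(b * b for b in reconstructed))
    return cross / norm

# Synthetic usage: a scaled copy of a signal is still perfectly correlated.
speech = [math.sin(0.1 * n) for n in range(200)]
same = ncc(speech, speech)
scaled = ncc(speech, [0.75 * v for v in speech])
```

Under this definition the measure is insensitive to a constant gain, so an amplitude-scaled reconstruction can still score close to unity even when its SNR is low.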

The robustness test results of reconstructed speech signals for various attacks are given in Table 6, where \(\mathrm{SNR}_\mathrm{rs}\) is the SNR of the reconstructed secret speech and EW is the number of erroneous words present in the text extracted from the secret speech. For the secret speech signal ‘Sp01,’ it is observed that the proposed algorithm is able to reconstruct the secret speech with a high correlation of 0.98 under various attacks. Even though \(\mathrm{SNR}_\mathrm{rs}\) is lower for some of the attacks, there is no loss in the generality of the information. Figure 7 shows the PESQ scores of the reconstructed speech signal ‘Sp01’ from various watermarked audio signals against the signal processing attacks. It is found that the perceptual quality of the speech signal lies in the acceptable range, and an average PESQ score of 3.81 is achieved.

In a similar manner, a total of 150 robustness tests are conducted by reconstructing each speech signal from each cover audio under various signal processing attacks. Table 6 shows the robustness test results of all thirty speech signals. It is observed that the number of erroneous words present in the text extracted from the reconstructed speech signal is zero in most cases.

The robustness test results for the LPF attack are shown in Figs. 8, 9, and 10, which show the PESQ score, \(\mathrm{SNR}_\mathrm{rs}\), and NCC values of the reconstructed speech signals, respectively. It is observed that the average PESQ score of the reconstructed speech is greater than 3.0, and the interquartile range (IQR) of the PESQ scores is the same for each box. Similarly, from Fig. 10, it is observed that the speech signal is reconstructed with an average correlation of 0.97 from each watermarked audio. These results show that the perceptual quality and correlation of the speech signal are high irrespective of the choice of cover audio signal, which indicates that the proposed watermarking technique is able to resist the LPF attack. The reason is that the watermarking is performed in the \(2\mathrm{nd}\)-level approximation coefficients of the cover audio signal, which represent its low-frequency content.
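As a rough illustration of this argument, assume ideal half-band wavelet filters and let \(f_s\) denote the sampling frequency of the cover audio (a symbol introduced here for illustration only). The level-\(j\) approximation coefficients then nominally occupy the band

\[
\left[0,\ \frac{f_s}{2^{\,j+1}}\right], \qquad \text{so that for } j=2:\quad \left[0,\ \frac{f_s}{8}\right].
\]

For example, with an assumed \(f_s = 44.1\) kHz, this band extends to about 5.5 kHz. A low-pass filter whose cutoff lies above this band would leave the embedded coefficients largely intact. Practical wavelet filters overlap between bands, so this is only an approximation.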

Table 7 shows the average values of \(\mathrm{SNR}_\mathrm{rs}\), NCC, and PESQ of the reconstructed speech signal over all 150 robustness tests on the watermarked audio. It is observed that the proposed algorithm is able to extract the speech signal with a correlation close to unity and a PESQ score of 3.78. This shows that the perceptual quality of the secret speech is retained under various signal processing attacks. Table 8 compares the SNR and correlation values between the original and reconstructed secret speech with those of the relevant watermarking algorithms. It is observed that the SNR of the reconstructed speech is lower than that of the methods proposed in [12, 14, 16]. This is because the number of DCT coefficients being embedded is limited by a factor \(\mathrm{CF}=6/8\), as discussed in Sect. 4.1. Even though \(\mathrm{SNR}_\mathrm{rs}\) is lower, the secret speech is reconstructed with a PESQ score greater than 4.0 and an NCC equal to unity.
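The effect of limiting the number of DCT coefficients can be illustrated with a minimal sketch. It assumes that \(\mathrm{CF}=6/8\) means retaining the first 6/8 of the DCT coefficients of a frame and treating the remainder as zero at reconstruction; the frame length, function names, and test signal are hypothetical, and the procedure in Sect. 4.1 may differ in detail.

```python
import math

def _scale(k, n):
    # Orthonormal DCT-II scaling factor.
    return math.sqrt(1.0 / n) if k == 0 else math.sqrt(2.0 / n)

def dct(x):
    """Orthonormal DCT-II of a list of samples."""
    n = len(x)
    return [_scale(k, n) * sum(x[i] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                               for i in range(n))
            for k in range(n)]

def idct(c):
    """Inverse of the orthonormal DCT-II above (a DCT-III)."""
    n = len(c)
    return [sum(_scale(k, n) * c[k] * math.cos(math.pi * (2 * i + 1) * k / (2 * n))
                for k in range(n))
            for i in range(n)]

def compress_frame(frame, cf=6 / 8):
    """Keep only the first cf * N coefficients (assumed meaning of CF)."""
    coeffs = dct(frame)
    keep = int(round(cf * len(coeffs)))
    return coeffs[:keep]

def decompress_frame(kept, frame_len):
    """Zero-pad the retained coefficients and invert the transform."""
    return idct(kept + [0.0] * (frame_len - len(kept)))

# Toy frame (not from the paper): 64 samples of a low-frequency sinusoid.
frame = [math.sin(2 * math.pi * 2 * n / 64) for n in range(64)]
kept = compress_frame(frame)
restored = decompress_frame(kept, len(frame))
error_energy = sum((a - b) ** 2 for a, b in zip(frame, restored))
```

Because the transform is orthonormal, the reconstruction error energy equals the energy of the discarded coefficients. Discarding coefficients therefore places an upper bound on the achievable waveform SNR even without any attack, while the perceptual quality can remain high when the discarded coefficients carry little energy.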

The performance of the proposed audio watermarking algorithm is compared with the techniques in [9, 10, 14, 16]. Table 9 compares the robustness test results of the proposed algorithm with those of the relevant techniques. It is observed that the proposed algorithm shows better robustness to AWGN and resampling attacks than the technique presented in [14]. From these experimental results, it is evident that the proposed audio watermarking technique shows good robustness to the signal processing attacks and is able to reconstruct the secret speech with a correlation close to unity and an average PESQ score of 3.78.

4.3 Security Test

To evaluate the security of the watermarked audio, two approaches were adopted here:

1. False positive test: To ensure that the watermark cannot be extracted using the U and V matrices of other watermarked audio signals, the false positive test is performed as follows. The secret speech ‘Sp01’ is embedded in two different cover audios, namely chorus and classical. At the extraction side, an attempt is made to reconstruct the secret speech ‘Sp01’ from the chorus watermarked audio by using the U and V matrices of the classical audio. From Fig. 11, it is evident that the secret speech cannot be extracted with incorrect U and V matrices. The reason is that the embedding is performed in the SVD domain of the cover audio. Since this decomposition is unique for each audio signal, it is not possible to extract the watermark using the matrices of other cover audio signals.

2. Sensitivity to initial conditions: In this paper, the secret speech is embedded chaotically to increase the security of the watermarking. A logistic chaotic map is chosen to generate random numbers with the initial condition \(y_0=0.052\) and control parameter \(r=3.95\). Figure 12 shows the effect of sensitivity to the \(y_0\) and r values. It is observed that even if an intruder guesses the value of r exactly and \(y_0\) with an error of \(10^{-10}\), the extracted speech is not intelligible when compared to the original speech.
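The sensitivity described in the second test can be sketched with the standard logistic map \(y_{n+1} = r\,y_n(1-y_n)\), using the parameter values quoted above. The mapping from chaotic values to a frame order (ranking the values) is a hypothetical choice made only for illustration and is not necessarily the selection rule used in the paper; the function names and sequence length are likewise assumptions.

```python
def logistic_sequence(y0, r, length):
    """Iterate y_{n+1} = r * y_n * (1 - y_n) and return the generated values."""
    values = []
    y = y0
    for _ in range(length):
        y = r * y * (1.0 - y)
        values.append(y)
    return values

def frame_order(y0, r, num_frames):
    """Hypothetical frame selection: visit frames in the rank order of the
    chaotic values (an illustrative rule, not the paper's)."""
    values = logistic_sequence(y0, r, num_frames)
    return sorted(range(num_frames), key=lambda i: values[i])

# Correct key versus a guess that is off by 1e-10 in y0 only.
true_key = logistic_sequence(0.052, 3.95, 200)
near_key = logistic_sequence(0.052 + 1e-10, 3.95, 200)

# Largest gap between the two trajectories, early and late in the sequence.
max_gap_early = max(abs(a - b) for a, b in zip(true_key[:10], near_key[:10]))
max_gap_late = max(abs(a - b) for a, b in zip(true_key[100:], near_key[100:]))
```

The two trajectories stay close for the first few iterations and then separate, so a frame order derived from the slightly wrong key no longer matches the one used at embedding.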

From these results, it is evident that the proposed watermarking technique is secure against the intruder attacks discussed above.

5 Conclusion

In this paper, a watermarking algorithm for chaotic embedding of a DCT-compressed speech signal using DWT and SVD is proposed. The DCT compression of the secret speech signal is achieved by finding the number of DCT coefficients required for embedding such that the PESQ score of the decompressed signal is greater than 4.0, which ensures the speech quality. To increase the security of the watermarking algorithm, a logistic map is used to generate random numbers, and the selected DCT coefficients are embedded chaotically in the cover audio by decomposing it using DWT followed by SVD. The experimental results show that the proposed watermarking algorithm achieves good imperceptibility with an average SNR and ODG of 46 dB and \(-1.07\), respectively. The robustness test results show that the secret speech signal is reconstructed with an average NCC of 0.95 while preserving its perceptual quality under various signal processing attacks. In addition, the loss in the generality of the information of the reconstructed speech signal is found to be minimal when the watermarked audio is subjected to various signal processing attacks.

References

Sathiyamurthi, P.; Ramakrishnan, S.: Speech encryption using chaotic shift keying for secured speech communication. EURASIP J. Audio Speech Music Process. 1, 20 (2017). https://doi.org/10.1186/s13636-017-0118-0

Lakshmi, C.; Ravi, V.M.; Thenmozhi, K.; Rayappan, J.B.B.; Amirtharajan, R.: Con(dif)fused voice to convey secret: a dual-domain approach. Multimed. Syst. 26, 1–11 (2020)

Ehdaie, M.; Eghlidos, T.; Aref, M.R.: A novel secret sharing scheme from audio perspective. In: 2008 International Symposium on Telecommunications, IEEE, pp. 13–18 (2008)

Wang, J.Z.; Wu, T.X.; Sun, T.Y.: An audio secret sharing system based on fractal encoding. In: 2015 International Carnahan Conference on Security Technology (ICCST), IEEE, pp. 211–216 (2015)

Bharti, S.S.; Gupta, M.; Agarwal, S.: A novel approach for verifiable \((n, n)\) audio secret sharing scheme. Multimed. Tools Appl. 77(19), 25629–25657 (2018). https://doi.org/10.1007/s11042-018-5810-2

Djebbar, F.; Ayad, B.; Meraim, K.A.; Hamam, H.: Comparative study of digital audio steganography techniques. EURASIP J. Audio Speech Music Process. 1, 25 (2012)

Hua, G.; Huang, J.; Shi, Y.Q.; Goh, J.; Thing, V.L.: Twenty years of digital audio watermarking—a comprehensive review. Signal Process. 128, 222–242 (2016)

Mishra, S.; Yadav, V.K.; Trivedi, M.C.; Shrimali, T.: Audio steganography techniques: a survey. In: Advances in Computer and Computational Sciences, pp. 581–589. Springer, Berlin (2018)

Xu, T.; Yang, Z.; Shao, X.: Novel speech secure communication system based on information hiding and compressed sensing. In: 2009 Fourth International Conference on Systems and Networks Communications, IEEE, pp. 201–206 (2009). https://doi.org/10.1109/ICSNC.2009.71

Shahadi, H.I.; Jidin, R.; Way, W.H.: Lossless audio steganography based on lifting wavelet transform and dynamic stego key. Indian J. Sci. Technol. 7(3), 323 (2014)

Ballesteros, L.D.M.; Moreno, A.J.M.: Highly transparent steganography model of speech signals using efficient wavelet masking. Expert Syst. Appl. 39(10), 9141–9149 (2012). https://doi.org/10.1016/j.eswa.2012.02.066

Ali, A.H.; George, L.E.; Zaidan, A.; Mokhtar, M.R.: High capacity, transparent and secure audio steganography model based on fractal coding and chaotic map in temporal domain. Multimed. Tools Appl. 77(23), 31487–31516 (2018). https://doi.org/10.1007/s11042-018-6213-0

Ballesteros, D.M.; Renza, D.: Secure speech content based on scrambling and adaptive hiding. Symmetry 10(12), 694 (2018). https://doi.org/10.3390/sym10120694

Bharti, S.S.; Gupta, M.; Agarwal, S.: A novel approach for audio steganography by processing of amplitudes and signs of secret audio separately. Multimed. Tools Appl. 78(16), 23179–23201 (2019). https://doi.org/10.1007/s11042-019-7630-4

Alsabhany, A.A.; Ridzuan, F.; Azni, A.H.: The adaptive multi-level phase coding method in audio steganography. IEEE Access 7, 129291–129306 (2019)

Ali, A.H.; George, L.E.; Mokhtar, M.R.: An adaptive high capacity model for secure audio communication based on fractal coding and uniform coefficient modulation. Circuits Syst. Signal Process. 39, 1–28 (2020). https://doi.org/10.1007/s00034-020-01409-7

Kasetty, P.K.; Kanhe, A.: Covert speech communication through audio steganography using DWT and SVD. In: 2020 11th International Conference on Computing, Communication and Networking Technologies (ICCCNT), IEEE, pp. 1–5 (2020). https://doi.org/10.1109/ICCCNT49239.2020.9225399

Hassan, T.A.; Al-Hashemy, R.H.; Ajel, R.I.: Speech signal compression algorithm based on the JPEG technique. J. Intell. Syst. 29(1), 554–564 (2020). https://doi.org/10.1515/jisys-2018-0127

ITU-T: Perceptual evaluation of speech quality (PESQ): an objective method for end-to-end speech quality assessment of narrow-band telephone networks and speech codecs. ITU-T Rec. P.862 (2001)

Al-Azawi, M.K.M.; Gaze, A.M.: Combined speech compression and encryption using chaotic compressive sensing with large key size. IET Signal Process. 12(2), 214–218 (2017). https://doi.org/10.1049/iet-spr.2016.0708

Weisstein, E.W.: Logistic Map from MathWorld—A Wolfram Web Resource. https://mathworld.wolfram.com/LogisticMap.html. Accessed Feb 2021 (2021)

Peng, J.; Jiang, Y.; Tang, S.; Meziane, F.: Security of streaming media communications with logistic map and self-adaptive detection-based steganography. IEEE Trans. Depend. Secure Comput. (2019). https://doi.org/10.1109/tdsc.2019.2946138

Burrus, C.; Gopinath, R.; Guo, H.: Introduction to Wavelets and Wavelet Transforms—A Primer. Prentice-Hall, New Jersey (1998)

Chen, S.T.; Huang, H.N.: Optimization-based audio watermarking with integrated quantization embedding. Multimed. Tools Appl. 75, 4735–4751 (2016)

Kaur, A.; Dutta, M.K.: An optimized high payload audio watermarking algorithm based on LU-factorization. Multimed. Syst. 24(3), 341–353 (2018). https://doi.org/10.1007/s00530-017-0545-x

Kanhe, A.; Gnanasekaran, A.: A QIM-based energy modulation scheme for audio watermarking robust to synchronization attack. Arab. J. Sci. Eng. 44(4), 3415–3423 (2019). https://doi.org/10.1007/s13369-018-3540-4

Hwang, M.; Lee, J.; Lee, M.; Kang, H.: SVD-based adaptive QIM watermarking on stereo audio signals. IEEE Trans. Multimed. 20(1), 45–54 (2018). https://doi.org/10.1109/TMM.2017.2721642

VoiceAge: Unified speech and audio database (USAC). http://www.voiceage.com/Audio-Samples-AMR-WB.html. Accessed Apr 2020 (2020)

Hu, Y.; Loizou, P.C.: Subjective comparison and evaluation of speech enhancement algorithms. Speech Commun. 49(7), 588–601 (2007). https://doi.org/10.1016/j.specom.2006.12.006

Azure, M.: Microsoft\(^{\text{TM}}\) Azure Speech Services API. https://azure.microsoft.com/en-in/services/cognitive-services/speech-to-text. Accessed Feb 2021 (2021)

Katzenbeisser, S.; Petitcolas, F.A.: Information Hiding Techniques for Steganography and Digital Watermarking, 1st edn. Artech House, Inc., Norwood (2000)

Kabal, P., et al.: An examination and interpretation of ITU-R BS.1387: perceptual evaluation of audio quality. TSP Lab Technical Report, Department of Electrical & Computer Engineering, McGill University, pp. 1–89 (2002)

ITU-R: Methods for objective measurements of perceived audio quality. ITU-R Rec. BS.1387-1 (2001)

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

About this article

Cite this article

Kumar, K.P., Kanhe, A. Secured Speech Watermarking with DCT Compression and Chaotic Embedding Using DWT and SVD. Arab J Sci Eng 47, 10003–10024 (2022). https://doi.org/10.1007/s13369-021-06431-8

DOI: https://doi.org/10.1007/s13369-021-06431-8