Abstract

Recommender systems are becoming more essential than ever as the data available online is increasing manifold. The increasing data presents us with an opportunity to build complex systems that can model the user interactions more accurately and extract sophisticated features to provide recommendations with better accuracy. To construct these complex models, deep learning is emerging as one of the most powerful tools. It can process large amounts of data to learn the structure and patterns that can be exploited. It has been used in recommender systems to solve cold-start problem, better estimate the interaction functions, and extract deep feature representations, among other facets that plague the traditional recommender systems. As big data is becoming more prevalent, there is a need to use tools that can take advantage of such explosive data. An extensive study on recommender systems using deep learning has been performed in the paper. The literature review spans in-depth analysis and comparative study of the research domain. The paper exhibits a vast range of scope for efficient recommender systems in future.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

A recommender system’s goal is to present items to a user, which are most likely to lead to conversion. Conversion might relate to different things in different contexts. For example, for e-commerce, it might mean purchasing the product, and for Netflix, it might mean viewing the content. To achieve this goal, recommender systems have to study the underlying data, consisting of items, users, and their interactions. To study these interactions, we need to extract relevant features and create a system that can learn and model such interactions.

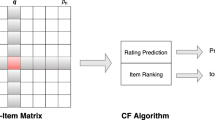

Based on the properties of the network that the system exploits, recommender systems can be categorized into content-based, collaborative filtering-based, and hybrid systems. Recommender systems based on content exploit the item’s features to find similar items that the user might like. A collaborative filtering recommender system uses the user–user interaction. The philosophy being user similar to each other might have similar choices. Systems that combine both these aspects in one or the other way are termed as hybrid systems.

Recommender systems use machine learning capabilities at its core to learn the interaction functions and predict items that are most likely to lead to conversion. As the data present online is increasing every day, and the concept of big data is harnessing attention, the dimensionality and modality of data are growing explosively. Hence, there arises a need for a tool that can exploit big data to provide better results. Deep learning is essentially an extension of machine learning that is specifically designed to manage big data. Deep learning algorithms are based on large neural networks with multiple hidden layers. These models can exploit data to learn complex interactions and feature representations. This can help us improve the accuracy of recommendations and bestow new meaning to tackle problems like cold start that plagues traditional recommender systems.

Because of the many advantages, there is a sudden boom in implementing deep learning in RS. For this reason, ACM has been holding a workshop DLRS (deep learning in recommender systems) with RecSys since the year 2016. Several deep learning techniques are implemented in recommender systems, as discussed in the paper. Some papers have used only a single technique, while others have used an amalgamation of different techniques to improve the results. Deep learning is a powerful tool and is being used to solve a plethora of problems in recommender systems. It has also proved to be effective in improving accuracy in various domains. In this paper, the research work done in RS using deep learning techniques has been explored. With the advancement in big data, practitioners will need better tools to tackle the complexities that come with it, and at the same time, exploit it for better results.

To make useful recommendations, there are various aspects to be kept in mind like the application domain, type of dataset, problem statement, kind of recommender system used, type of deep learning technique used, the side information such as gender of the user, user’s login sessions, text semantics, and much more.

This paper will report the following research questions restricted to deep learning in recommender systems, and the answers to these questions have been provided in Sect. 3:

.

RQ 1 | Which application domains have been included in the study? |

RQ 2 | What are the different pre-defined and self-generated datasets other authors have worked on in the past? |

RQ 3 | What types of recommender systems have been studied and implemented in past studies? |

RQ 4 | Which deep learning techniques have been implemented in the previous research? |

RQ 5 | Which metrics have been used to analyze the results? |

The paper has been organized as follows. Section 2 furnishes the background study of the literature survey, i.e., a short description of the recommender systems, machine learning, and deep learning. Section 3 is the Research Methodology in which the readers can understand the division of research papers studied for this survey paper. Here, the papers have been classified based on various parameters. Section 4 is the Literature Review, which consists of a detailed study of the research work and encompasses the conclusion and the research gaps observed in the mentioned field of study. The paper concludes with References.

This paper is written to explain the application of deep learning in recommender systems and discuss how recommender systems can be improved and extended by applying different deep learning techniques. To perform this survey, we conducted an extensive study of previous research done in recommender systems, employing deep learning. We surveyed top conferences and journals in deep learning in recommender systems and collated the papers relevant to our research area. The original contribution of the survey is presented in Sect. 4 focusing on a systematic flow of information. First, we explained the basic concept of deep learning models. Afterward, we explored its applications in recommender systems and provided detailed analysis of key recommender systems using those techniques.

2 Background

This section expounds on the major concepts introduced in the paper, namely recommender systems, machine learning, and deep learning.

2.1 Recommender Systems

Recommender systems use machine learning and artificial intelligence algorithms to predict items to users. In today’s world, the availability of such a vast pool of data makes it difficult for e-commerce websites to identify user-centric items [1]. If a user intends to select an item, he might not be able to find the item most suitable to him due to the presence of multiple options. To make this task easy, the concept of recommender systems was introduced. RS uses machine learning and artificial intelligence techniques to recommend relevant items to the user.

2.1.1 Content-Based Filtering

In content-based filtering, the recommendations are performed based on the previous choices of the user. As the name suggests, this type of filtering technique depends upon the various parameters of the user items. These parameters, when taken into account, reveal the granular level information about the user’s preferences. Content-based filtering aims to exploit such a detailed analysis of the user’s liking and recommends items in the present. The recommender system studies the choices user made in the past in different domains and depending upon those choices, the user is recommended items [2]. Such choices made by the user depend on various parameters or features of the items. Since these recommendations match the preferences the user made in the past, hence they are more user-centric. To explain with the help of an example of content-based recommendations, let us say that a user likes to watch movies by the director Quentin Tarantino, so the movies which will be recommended to the user will feature the same director. This was content-based filtering based on just one feature. Similarly, we can take multiple features to determine the recommendation list. Mathematically, content-based RS for multiple users can be represented using Eq. 1:

Here, \( \theta^{j} \) represents the parameter vector for user j, r(i,j) = 1 if user j has rated the movie i, else 0, xi represents the feature vector for movie i, and \( y^{{\left( {i,j} \right)}} \) represents the rating for movie i by user j.

2.1.2 Collaborative Filtering

The second type of recommender system is collaborative filtering-based Recommender System. In this type of filtering, the recommendations are affected by the neighbors of the user. It is seen that if a user’s friend buys a particular product, the user is likely to buy the same or a similar product. Based on this principle, collaborative filtering adopts a neighborhood-based approach. In this technique, the user’s neighbors’ choices are studied, and based on those choices, similar items are recommended to the user. User-based collaborative filtering is essentially a neighborhood-based approach. To explain with an example of how collaborative filtering works, let us say that user a has a neighbor user b. User b likes a particular product, say product p. Now in this filtering technique, the preference of user a will be subjected to the preferences of user b and vice versa. Hence, user a will be recommended product p and products similar to product p.

Collaborative filtering is further of two types, namely, item-based collaborative filtering and user-based collaborative filtering.

-

a)

Item-based Collaborative Filtering

In this type of collaborative filtering technique, the recommendations are based on the similarity between the items. If a user purchases product p and product q is similar to product p in one or more ways, i.e., if they share some common features, the user is recommended product q and other products similar to product q. The similarity between the items is calculated using several similarity measures. It can be cosine-based similarity, which can be calculated using Eq. 2. It is also known as vector-based similarity, and the similarity is determined by calculating the cosine of the angle between the two vectors.

Here, \( \vec{a} \) and \( \vec{b} \) are the two item vectors,

Another similarity measure that can be used to deduce the similarity between different items is Pearson correlation-based similarity. It can be given by Eq. 3.

Here, \( R_{u,a} \) denotes the rating given by user u for item a, \( \bar{R}_{a} \) denotes the average rating of item a.

Similarly, \( R_{u,b} \) denotes the rating given by user u for item b, and \( \bar{R}_{b} \) denotes the average rating of item b.

-

b)

User-Based Collaborative Filtering

In this type of collaborative filtering technique, the recommendations take place depending upon the preferences of the user’s neighbors. If user u has a neighbor user v, and user v likes product p, then user u will be recommended product p and all such products preferred by user v.

2.1.3 Hybrid Filtering

The third approach is the hybrid approach. It is an amalgamation of content-based filtering and collaborative filtering. Here, first, the social network analysis of a user’s neighborhood takes place. This step helps identify the neighbors’ preferences, and items are kept in the account to be recommended to the user. In the second step, the user’s history is studied, and depending on the items he bought previously and items having similar content or attributes as the past items; item recommendations are taken into account [3]. Finally, analyzing the results obtained in both the steps, the user is recommended the items. Authors in [4] differentiated user’s preferences in generated recommendations by using a deep hybrid recommender system. In order to explain the underlying literature of hybrid filtering, let us take the example used in the previous Sect. 2.1.2. Let us say that product p has a similar product, product q, along with one or several features. The system will apply content-based filtering to identify products similar to product p and product q. Upon selecting such products, say list l, the system will use collaborative filtering, and user b will now be recommended product p, product q, and other similar products, i.e., products from list l.

Any of the three techniques can be used to recommend items to users. Depending upon the chosen technique, the recommendations are made to the user. Hybrid recommender systems can be categorized into the following types:

-

a)

Monolithic Hybrid Design

In this type, there exists a single recommendation component that aggregates multiple recommendation methods by preprocessing and combining numerous knowledge sources. The architecture of monolithic hybrid design is represented in Fig. 1.

-

b)

Parallelized Hybrid Design

In this type, several recommender systems are run in parallel, and the output of each is combined at the later stages using an aggregation mechanism. These are further of three types, i.e., mixed, weighted, and switching. The architecture of parallelized hybrid design is shown in Fig. 2.

-

c)

Pipelined Hybrid Design

In this type, every recommender system processes the input pertaining to its recommendation mechanism. The output, hence produced, is forwarded as input to subsequent recommender systems mimicking a staged process. The architecture of the pipelined hybrid design is given in Fig. 3.

2.2 Machine Learning

Machine learning is a section in computer science that deals with computing and solving problems intelligently and analyzing the results. The system is presented with a dataset, and various machine learning algorithms can be applied to that dataset to obtain results. Due to the availability of such extensive data and the need to get predictive results, machine learning algorithms are used widely. There exist three types of machine learning techniques, which can be seen in Fig. 4.

2.2.1 Supervised Learning

In supervised machine learning algorithms, the system is presented with the dataset, and the outcome is pre-defined. For example, it is known that the outcome has to be whether a given transaction is fraudulent or genuine for identifying fraudulent bank transactions. Since the result is known beforehand, the algorithm works to guide the expected result [5]. Hence, the entire working is supervised. A few supervised learning algorithms are Logistic Regression, Neural Network, Naïve Bayes, Decision Trees, Nearest Neighbors, and others.

Semi-supervised learning is another type of technique in machine learning [6]. This technique is used when the expected result is known only for a few data points, i.e., when in a given dataset, not every data point is labeled. It helps in identifying and learning the structure of the input variables.

2.2.2 Unsupervised Learning

The second type of technique is unsupervised learning. In this type of technique, the data is not classified. The expected results are not known before its implementation. The system is presented with the dataset, and any of the unsupervised machine learning algorithms are applied to it, and the results are obtained. Since there is no supervision on the desired results, this technique is called unsupervised learning [7]. The main motive behind using this technique is to identify hidden clusters and patterns in the data. Examples of such algorithms are K-means clustering, self-organizing maps (SOM), hierarchical clustering, and others.

2.2.3 Reinforcement Learning

In reinforcement learning, the algorithm works on the concept of rewards and penalty. None of the data points in the dataset are labeled, i.e., the expected output is unknown, but the learning takes place in such a way that the system learns the environment on-the-go. The system is given an environment, and a set of actions are defined. Depending on the type of action the system takes, it is rewarded [8]. If the system’s action takes it toward the goal state, it is rewarded. However, if the system’s action takes it away from the goal state, it is charged with a penalty. To carry out this task, an objective function is determined, including all the possible action and state spaces. The main aim is to maximize this objective function, and policy is defined, which is to be followed. The most widely accepted and real-life example of reinforcement learning is how a toddler learns about the positive and negative outcomes of his actions by experiencing the rewards and penalties of those actions.

Machine learning algorithms can be employed in implementing recommender systems. Based on the type of recommender system being used, the data is collected. In collaborative filtering, the data is collected by performing social network analysis and identifying the items bought or liked by user’s neighbors. These data points or choices serve as the dataset for implementing the algorithm. If content-based filtering is used, the user’s past choices are identified as the data points. For example, for a movie recommender system, the movies user has watched in the past, the genre, director, actors, and others are taken into account, and these bits of information constitute the dataset. Further, algorithms are implemented on this dataset to recommend items. Lastly, hybrid filtering can be used for collating data using both supervised and unsupervised learning and presenting the final data points to the system.

2.3 Deep Learning

Deep learning is a discipline of machine learning algorithms that are layered. Here each layer does nonlinear processing producing different abstractions of the data. Each layer takes the output of previous layers as input, hence producing hierarchical abstractions. For example, in computer vision, the original image matrix is the data that is processed. The first layer takes this data, and it performs feature extraction and transformation in such a way that it identifies the edges. It then gives this extracted feature as an input to the next layer, which may create a new layer of abstraction by identifying the orientation of the edges detected by the previous layer. This is repeated numerous times with multiple layers.

In this paper, as depicted in Fig. 5, research work done using several deep learning techniques has been studied.

2.3.1 Why Use Deep Learning?

The web is a vast pool of data; the dimensionality and modality of data present online are very high. To work with such multimodal and complex data with extensive features requires extensive machine learning support. If we use traditional design and technology, the recommendations generated will not be of any use because the system will not exploit the information at hand, and the complex pattern analysis of data would not be done. To solve this problem, we have used deep learning, which can work on highly complex data with hidden features and a high number of training instances. Deep learning’s capability provides breakthrough results by extracting the data on a granular level and analyzing the patterns. Hence, the representation of all the details of data in a joint unified framework is made possible by using deep learning [9].

Conventional machine learning models like Matrix Factorization and others are linear models that can work efficiently on linear data, but when we have to deal with nonlinear data, the computations require complex functions like sigmoid, tanh, etc. To catch such intricate user–item interaction patterns, there arises a need for deep learning models.

The web is full of illustrative data; even for making recommendations, there is a lot of description and content attached to data. To understand this information and use it to our advantage, we have employed recommender systems. Another significant advantage of using deep learning is simplifying the process of feature engineering.

Every set of data has sophisticated features attached to it, and the task of deep learning is to understand the raw features and process them using supervised or unsupervised machine learning algorithms. Similarly, even for the content to every set of data, deep learning makes it possible for the system to use all the available data to generate expert recommendations.

Another important reason for using deep learning is sequential pattern mining. The task of sequence modeling that includes Natural Language Processing, speech recognition, etc. is usually performed by either recurrent neural network (RNN), which has internal memory to learn the next in sequence, or convolutional neural network (CNN) that performs the sequence modeling using temporal computations. We have explained this further in Sects. 4.3 and 4.6, respectively (Table 1).

3 Research Methodology

In this paper, various parameters were considered for identifying the relevant research papers to be included in the study. The broad area of consideration was deep learning in recommender systems. In this section, the research methodology adopted in this paper has been explored. Broadly, the research methods have been based upon ten attributes, i.e., publisher of the paper, year of publication, number of citations, and location of performing the study (Table 2).

3.1 Application Domain of Recommender Implementing Deep Learning

RQ 1

Which application domains have been included in the study?

There is a need for a dataset to apply machine learning or artificial intelligence algorithms to implement a recommender system. The dataset depends on the application domain of the recommender system. Table 1 presents all the application domains of the refereed papers and several studies performed in that domain.

It can be observed from the table that maximum work has been done in the domain of item recommendations, news recommendations, and movie recommendations.

3.2 Datasets Involved in the Referred Studies

RQ 2

What are the different pre-defined and self-generated datasets other authors have worked on in the past?

In this section, the datasets used in the research papers have been listed. In some papers, authors used publicly available datasets to perform the recommendation process using their proposed model. In other papers, authors scraped data from websites or applications using APIs or manually collected data to implement recommender systems. Table 2 enlists the external datasets used in previous studies, and Table 3 enlists self-generated datasets.

It is evident from Table 2 that the MovieLens dataset is extensively used for analyzing the effectiveness of implementing deep learning in recommender systems. Other widely used datasets are Amazon and Yelp.

3.3 Types of Recommender Systems Used in the Studied Papers

RQ 3

What types of recommender systems have been used in the past studies?

There are various types of recommender systems, depending on the recommendation technique used. In this section, the types of recommender systems used in the studied papers have been listed along with the studies they were used in.

It is evident from Table 4 that most of the studies have been done in content-based and collaborative filtering algorithms. This presents the readers an opportunity to work in other hybrids, context-aware, and other similar recommendation techniques.

3.4 Deep Learning Techniques Used in the papers

RQ 4

Which deep learning techniques have been used in the past research?

To efficiently implement the recommendation process, several deep learning techniques were used in the referred research work. Table 5 lists all the techniques used in the papers and hence, classifies the research work.

It can be seen from the table that the recurrent neural network (RNN) is the most used deep learning technique in recommender systems. Hence, users can try to implement other deep learning techniques and perform a comparative study.

3.5 Metrics Used for Analyzing the Results by Deploying Deep Learning in Recommender Systems

RQ 5

Which metrics have been used to analyze the results?

The result obtained by implementing the recommender systems is analyzed by using various metrics. Different papers deploy different metrics to get a multi-dimensional interpretation of the results. Table 6 enlists the metrics used in the papers. Figure 6 graphically analyzes the metrics used.

This analysis of metrics shows that the recall metric is used by maximum researchers to analyze their results. The graph also helps in analyzing the usage gaps between all the metrics (Fig. 7).

4 Related Work and Original Contribution of the Paper

Some of the significant works in deep learning in recommender Systems are covered in Table 7.

For performing the research papers’ complete study, various aspects and attributes of these studies were divided into columns, and all such columns were collated together to form a spreadsheet. Such information clusters included the problem statement, the dataset, the proposed model, deep learning technique, type of recommender system used, and others. Later in the Conclusions section, the significant research gaps were identified and reported, extracted from the studied research papers. In this section, a holistic study of all the research papers has been presented.

In this subsection, the problems addressed in different papers have been categorized into eleven clusters of deep learning techniques as presented henceforth. Every subsection expounds a detailed research work done in recommender systems for each deep learning technique. At the end of every subsection, a detailed description of the findings and open issues have been presented.

4.1 Multilayer Perceptron (MLP)

Multilayer Perceptron (MLP) is a feed-forward network with one or several computation layers and has nonlinear activations. It has at least one layer that is connected in a feed-forward manner [106]. It can also be used to transform the linear methods of recommender systems into nonlinear models. Convolutional neural networks (CNN) are also a kind of feed-forward network.

In a deep forward neural network, the information flows in one direction across multiple layers, as shown in Fig. 7. The output from one layer becomes the input into the next layer. This architecture does not consist of any cycles, and hence it is called “feed-forward.”

For a feed-forward neural network with D inputs, x = [x1,…..,xD], a layer with K hidden nodes h = [h1,…..,hK], then the output node y is given by Eq. 4:

where v = [v1,…..,vK] \( \in {\mathbb{R}}^{K} , W = \left[ {w_{1} , \ldots ..,w_{K} } \right] \in {\mathbb{R}}^{D \times K} \), f represents the nonlinear activation function.

The given Eq. 5 mathematically represents the value of every hidden node.

4.1.1 Wide & Deep Learning

The wide and deep learning model is capable of solving both regression and classification problems [107]. The wide learning element is a generalized linear model consisting of a single layer perceptron, whereas the deep learning element consists of the multilayer perceptron. The blend of the two above stated elements leads to the inclusion of both memorization and generalization. The ability of wide learning element to capture prominent features from historical data results in memorization. Whereas the ability of deep learning element to create general and abstract representations results in generalization. The amalgamation of the two results in diverse results and better performance of the recommender system.

The architecture of this model is given in Fig. 8.

This concept can be represented mathematically using Eq. 6, which represents the wide learning element, and Eq. 7, which represents the deep learning element, respectively. The final wide & deep learning model is represented by Eq. 8:

Here, \( W_{\text{wide}}^{T} \) and b represent the model parameters, x represents the raw input feature, and \( \phi \left( x \right) \) represents the transformed feature.

Here, l represents the lth layer, \( f\left( \cdot \right) \) represents the activation function, \( W_{\text{deep}}^{l} \) represents the weight term, and \( b^{l} \) represents the bias term

Here, \( \sigma \left( \cdot \right) \) represents the sigmoid function, \( \hat{r}_{ui} \) represents the binary rating label, \( a^{{l_{f} }} \) represents the final activation.

Authors in [108] used a feed-forward multilayer neural network for collaborative filtering recommender systems. Once the model learned the hidden factors and features, the system feed-forwarded the latent features to identify <user,item> pairs having NULL ratings. The authors [57] realized that the usage of hashtags in social media required additional efforts by the user. Hence, they used the deep forward neural network to recommend relevant hashtags to the users. To carry out this task, first, they performed tweet collection and data preprocessing, followed by feature engineering by vector generation. In the end, they performed training and evaluation. In another work, the authors addressed the sparsity of data by using Wide & Deep learning by training comprehensive linear models with feed-forward deep neural networks [104].

It was found out that rating prediction techniques often rely on user’s private information that is a threat to privacy. Hence, the authors came up with a feed-forward neural network-based dTrust model that used the topology of anonymous user–item interactions that combined user’s trust relations with user rating scores [55]. In another implementation of the feed-forward deep neural network, the researchers addressed the 0/1 recommendation problem by combining the ratings given by users and Natural Language Processing (NLP) of texts [29]. For using hashtags, user-defined tags usually suffer from various problems like data sparsity, redundancy, ambiguity, and others. To solve this problem, the authors [58] abstracted features that were used to make recommendations instead of raw data. The authors also addressed this problem, and they solved it by using deep neural network [59].

In another work, the authors observed that since recommender systems influence both content and user interactions to create recommendations that adapt to the users’ preferences, this can be used as a leverage to improve recommendations [27]. They proposed a Deep Space model that learned a user-independent high dimensional based on their substitutability, semantic space items were positioned, and according to the user’s past preferences, it learned user-specific transformation function to convert this space into a ranking. In news recommender systems, modeling temporal behavior, the cost of estimating the parameters also increases, making the recommendations costly. The authors in [22] used the deep neural network to address this issue. It was observed by some researchers [56] that the preference of choosing friends in social media did not always match. Hence, it became challenging to recommend friends on online social networks. Thus, they introduced a deep learning approach to learn about both user preferences and the social influence of friends for an effective recommendation. In the paper [44], as a solution to the cold-start problem in music recommenders, the authors combined text and audio information with user feedback data using deep neural network frameworks.

Some authors in [53] observed that sometimes erroneous police photo lineups resulted in the conviction of innocent suspects. To avoid this, they came up with a two leveled approach. The first one was based on the visual descriptors of the deep neural network, and the other was based on the content-based features of persons. The authors [14] proposed a model based on the deep neural network for product recommendations, which required only ratings for making recommendations. In another work [18], the authors realized that the amount of calculation for the learned model to predict all user–item pairs’ preferences was humungous. To solve this problem, they proposed a TDM attention-DNN (tree-based deep model using attention network). For an appropriate research paper recommendation to the scholars, a study [64] used the deep neural network with the paragraph vectors re-ranking method for adequate recommendations. It was observed by some researchers that side information written for business reviews were seldom taken into account for the recommendation [16]. Hence, they used an artificial neural network in a hybrid recommender with the inclusion of side information. Some authors [17] addressed the issue of cross-domain in social recommendation. To solve this problem, they introduced the model Neural Social Collaborative Ranking (NSCR), which immaculately integrated user–item interactions of the information sector and user–user social relations of the social sector.

In a study, it was observed that the existing recommender systems did not take into account the effect of distrust among users [87]. They generate recommendations pertaining to the trust relations among users. As a solution, items were recommended for both the trust and distrust relations among active users. In this paper [109], the authors proposed a novel recommender system RecDNNing that combined the user and item embeddings using a deep neural network. First, the authors created deep embedding for users and items, and later the average and concatenated values of those embeddings are given as input to the system. The deep layers generated recommendations using the forward propagation method. The authors in [110] used the hybrid of content-based and collaborative filtering approaches to create a deep classification model for generating efficient music recommendations. To carry out this implementation, they came up with the Tunes Recommendation Systems (T-RecSys) algorithm. Authors in [111] proposed a deep learning-based novel collaborative filtering algorithm. The input of the system were the normalized values of user and item rating vectors. This resulted in decreasing the time complexity as the system need not learn the features of users and items. In paper [112], the researchers applied deep learning in the domain of agriculture. They used the Twitter platform to scrape agriculture tweets and applied sentiment analysis on those tweets. This helped the authors to predict the sentiment range of agriculture tweets.

4.2 Findings and Open Issues

Deep feed-forward networks Multilayer Perceptron is used to approximate any measurable function to any given degree of accuracy. It also acts as a basis of typical advanced approaches and is widely adopted in numerous domains. Implementing Multilayer Perceptron for feature representation is very elementary and remarkably efficient, although it might not be as demonstrative as autoencoders, CNNs, and RNNs.

However, one major limitation of deep feed-forward neural networks is that they do not have memories or loops for remembering preceding computations [113].

One of the primary reasons for employing Deep Neural Networks is that they are synthesized so that multiple neural networks can be merged into one big differentiable function and trained end-to-end. This application becomes a necessity because of the abundant availability of multimodal data. The DNN framework for recommender systems typically extracts user and item feature vectors or latent and explicit features. Another significant advantage of Deep Neural Network is that it can effectively extract essential features from raw data automatically.

The effect of upper-case letters in hashtags used for hashtag recommendations can be explored, and its effect on the recommendations can be analyzed in detail. Another scope in the future is to analyze the effect of language modeling on prediction performance. Another scope is to remediate the cold-start problem for user-based Collaborative Filtering by learning the similarities between user-to-user and user-to-job. This can be implemented on a multimodal document embedding.

4.3 Multi-view Deep Neural Network (MV-DNN)

Multi-view deep neural network (MV-DNN) is excellent in modeling domain recommendations [107]. It considers users as the main view and every other domain, say Z, as a secondary view. For every secondary or auxiliary user-domain pair, there exists a specific similarity score. The loss function of MV-DNN can be computed using Eq. 9. The architecture of MV-DNN is represented in Fig. 9.

Here, \( \theta \) represents the model parameters, \( \gamma \) represents the smoothing factor, Yu represents the user’s output view, a represents active view’s index, and Rda represents view a’s input domain.

Figure 10 showcases the structure of the Deep Structured Semantic Model (DSSM) [11]. The crude textual features are fed as input in the form of a high-dimensional vector. The DSSM forwards these inputs to two neural networks separately and maps them into semantic vectors into a joint semantic space.

In Eqs. 10, 11, and 12, x represents the input vector, y represents the output vector, Wi represents the ith weight matrix, and li represents the hidden layers, such that \( \forall i \in \left\{ {1, \ldots .., N - 1} \right\} \). q represents the query and d represents the document.

The activation function is given by Eq. 13:

The semantic relevance score between a query Q and a document D is given by Eq. 14:

MV-DNN can be implemented in several domains. Conforming to the concepts of user-based collaborative filtering, as discussed in Sect. 2.1.2, users having similar preferences in one domain tend to have similar preferences in other domains as well. However, in many cases, this assumption may be rendered ineffective. Hence, the basic knowledge of the correlations between different domains is an essential aspect of MV-DNN. It is based on a Deep Structure-based Semantic Model (DSSM). In MV-DNN, the architecture of DSSM contains multiple views to map sparse features in high dimensions into the low-dimensional dense matrix. The authors observed that the online services present humungous content to the users, making this content user-centric [11]. Hence, they came up with the Deep Structured Semantic Model (DSSM), which used content-based filtering to improve the recommendations.

The authors on [30] observed that current CF-based techniques could only comprehend a single type of relations. RBM, for example, considers either user–user or item–item relations. The matrix factorization approach considers use-item relations only. To fix this problem, the authors propose a framework that first learns low-dimension user and item vectors. This captures the user–user and item–item relations. This is then passed to a multi-view deep neural net, which models the user–item interaction.

4.4 Findings and Open Issues

Initially, Multi-view deep neural network (MV-DNN) was incepted for performing cross-domain recommendations.

However, the understanding of cross-domain recommendations may not be fruitful for MV-DNN. This is said essentially because the underlying literature of generating recommendations states that if a user likes an item a and there exists an item b similar to item a, then the user will like item b as well. However, this is not always true. For the times this hypothesis fails, this assumption becomes obstructive for the implementation of MV-DNN.

4.5 Convolution Neural Network (CNN)

For bio-inspired Multilayer Perceptrons and Computer Vision, CNN are the most used deep learning models. The essential elements of CNN are the convolutional layers and the subsampling layers. The convolutional layers work as a filter for the output from previous layers. These convolutional layers hence produce filtered outputs. The subsampling layers subsample the convolution output based on their activations [114]. To create a deep CNN model, the convolutional layers and the subsample layers are added alternatively. Hence, such a deep model of CNN can learn a hierarchy of complex features. Another massive advantage of CNN is that it has fewer parameters than the traditional feed-forward neural networks. This method is based on the feed-forward deep neural network. To reduce the preprocessing, multilayered perceptrons are used in this model. The architecture of CNN is shown in Fig. 11.

Cooperative neural network (CoNN) is a model where two neural networks work in tandem [15]. To discover novel user and item features, user and item reviews are given as input to the system. A common shared layer is added on the top of the two neural networks which couples them and the user and item features are mapped together into a common feature vector space to enable the interaction among them. Figure 12 represents the architecture of CoNN. Another latent layer is added to the architecture to enable the interaction of user and item latent features.

The two neural networks, i.e., neural network for items (NNi) and neural network for users (NNu), run parallel. The item and user ratings are fed as inputs to the two neural networks, respectively. In the lookup layer, the user and item review texts are placed as matrices of word embeddings. The subsequent layers perform the functions of convolution, max pooling, and full connection, respectively. This layer also acts as a platform for computing the objective function for calculating the rating prediction error. One major drawback of Cooperative Neural Network is that it is incapable of addressing users and items which do not have ratings.

Many researchers addressed data sparsity, and they used CNN in their papers to solve this problem.

The authors in [15] observed that maximum recommender systems ignored the reviews leading to increased data sparsity problem. Thus, they proposed Deep Cooperative Neural Networks (DeepCoNN) model to learn item properties and user behaviors in the review text. Similarly, to solve the issue of the sparsity of data, authors integrated convolutional neural network (CNN) into probabilistic matrix factorization (PMF), resulting in convolutional matrix factorization (ConvMF) [48]. In the research work [92], the researchers solve the data sparsity problem by including tag and user information. First in nearest neighbors set, the most significant user impact is found using similarity metric, to process item information CNN is used. To get the results, the prediction matrix is decomposed by the probability matrix.

The profound use of CNN in the fashion industry was acknowledged in some research papers. The authors in [77] observed that not much work had been done on complicated recommendation scenarios involving knowledge transfer across multiple domains in fashion recommendations. Similarly, for online clothing shopping, current methods did not address the challenges in the cross-domain clothing retrieval scenario completely. The intra-domain and cross-domain data relations were considered together, and the numbers of matched and unmatched cross-domain pairs were imbalanced. Hence, the authors [78] proposed a deep cross-triplet embedding algorithm and a cross-triplet sampling technique to provide improved recommendations.

Some other applications of CNN have been included in the survey. For efficient venue recommendation, the authors proposed a City Melange framework that matched the interacting user to social media platforms with similar interests [80]. As a result, this approach could recommend both on- and off-the-beaten-track locations to the users. In another work, the authors proposed a hybrid music recommender system that used CNN to model real-world users’ information and high-level rendering of audio data [102]. The authors observed in [52] that finding appropriate Ukiyo (a Japanese firm) e-prints that intrigue a novice is challenging. Thus, they proposed Ukiyo-e recommendation using a deep learning-based CNN model to present recommendations. The input to the recommender system is an image provided by the user. The CNN model is used to create a classifier that takes input images and other information related to the image and outputs whether the user will like or dislike it. The authors in [93] used tag information and user information and improved the recommendation by obtaining the nearest neighbor set that significantly impacted on the target user. They named their model convolution deep learning model on Label Weight Nearest neighbors (LWNCDL). Traditionally, candidate articles were handpicked from a vast pool of articles, and hence, its recommendation was manual and laborious. To solve this problem, the Dynamic Attention Deep Model (DADM) was proposed, which used multiple neural networks [50]. As a result, the authors used the convolutional neural network to study the connection between visual and textual inputs and examine the possibility of knowledge transfer from complex domains for expert recommendations.

The researchers in [76] addressed the problem of job recommendation by analyzing semi-structured resumes of candidates. To recommend a job, they used CNN to process the entire input at once, which is important since any part of the text in a resume can affect its semantics. The authors in [115] proposed an AODR model that considers user and item rating texts to infer item aspects and user opinions by applying deep learning. Such item aspects and user opinions were later merged using collaborative filtering to learn insightful information. In paper [106], the researchers introduced an algorithm, Aspect-Based Opinion Mining (ABOM), which analyzed the user reviews extensively to learn hidden features that further improve the recommendation accuracy. To carry out this task, they used multichannel deep CNN (MCNN) and Tensor Factorization (TF) machines. In paper [116], the authors used decentralized knowledge graphs in deep recommender systems to validate the efficiency of the system. The knowledge graph was constructed by crowdsourcing. The authors in [117] created a passenger hunting recommender system. The proposed system consists of two components, i.e., offline training and online implementation. In the former component, the authors applied deep CNN, and in the latter component, the authors proposed the DL-PHRec method. Hence, the authors could generate a personalized ranking list of destinations regions for each taxi driver.

4.6 Findings and Open Issues

CNNs are efficient in handling unstructured multimedia data with convolution and pooling functions. The majority of CNN based recommender models use CNNs for performing feature extraction. CNN based models are instrumental in learning deep features to model user and item latent factors. The significant advantage of using CNN is its implementation of pooling operation for reducing training data dimensions. CNN has been used for feature extraction to efficiently model user and item representations for Recommender Systems. It is also useful in image processing.

Another new scope is to embed the user’s rating information in a matrix. A Convolution Neural Network can be trained on this matrix, treating it like an image where each data point represents a particular feature of the user. Exploiting Convolution Neural Networks for prediction of risk with medical imaging can be explored.

One major limitation is that it requires massive hyperparameter tuning to extract optimal features. In addition to this, it is also challenging to support intricate activity details. Although CNN uses feed-forwarding, it has fewer parameters than traditional deep feed-forward networks [114].

4.7 Autoencoders (AE)

Autoencoders are typically unsupervised neural networks that are trained to mirror its input as output. It is a tri-layered neural network, consisting of the input layer, hidden layer, and the output layer. The input layer is fed with the complex representations of the dataset, and in the hidden layer, such complex representations are transformed into low-dimensional representations. It essentially mirrors the working of an encoder, which encodes the complex, high-dimensional representations into low-dimensional representations. Mirroring the operations in a reverse manner, the low-dimensional representations are converted into high-dimensional representations as the data travels from hidden layer to output layer. This can also be termed as the working of a decoder. The architecture of denoising autoencoder is given in Fig. 13.

The feature extraction performed as the encoding is not robust enough. It was observed that the addition of Gaussian noise improved the problem described above [118]. Hence, to make the system more robust and training the hidden layers to identify hidden data features, denoising autoencoders were introduced. The structure of such autoencoders encodes the input while preserving the information about the input, and reduces the effect of the alteration process, applied stochastically to the input of the autoencoder. The authors in this work attempted to improve the automatic recommendations [26]. This was done by extracting the features of input data and reconstituting the input to perform recommendations. Authors in [119] extended generative models for the task of Collaborative filtering. To do this, they used Wasserstein autoencoders.

4.7.1 Stacked Denoising Autoencoders (SDAE)

A stacked autoencoder is a neural network having several layers of sparse autoencoders wherein the output of each layer is forwarded as the input of the successive layer. The hidden layers in a denoising autoencoders can be colluded to create a deep network by forwarding the output of the previous layer as the input to the next layer. Such a strong feature extraction capability makes stacked denoising autoencoder aptly suited for recommender systems. The architecture of a stacked denoising autoencoder is given in Fig. 14.

The method of generating top-N recommendations using Stacked denoising Autoencoder starts with selecting a dataset with user reviews [23]. Then, the similarity between the items liked by the user is calculated for each user. Afterward, the nearest data points with a defined length, say K, are selected. Such K-nearest datasets are combined, and the user reviewed movies are excluded from such datasets to form another dataset. The similarity between the two datasets is calculated, and the top-N similar movies among the two datasets are selected and recommended to the user.

Upon stacking the denoising autoencoder, the deep model hence created increases the capability of feature extraction. To make this task robust, Gaussian noise is added to the system.

The authors proposed a Hybrid Recommendation system with CF and Deep learning (HRCD) [101]. It explored the content features of the items learned from a deep learning neural network and applied later to the timeSVD ++ CF model. The authors in [23] addressed the problem of information overloading and data sparsity. As a solution, stacked autoencoders were used with denoising to extract low-dimensional features from the sparse user–item matrix. It was observed that personalized recommendations often led to sparse observations of users’ adoption of items. As a solution to the problem, the authors used Collaborative Topic Regression with Denoising Autoencoder or (CTR-DAE) [34]. Here, the user’s community-based preference was bridged with his topic-based preference.

Similarly, some other researchers addressed data sparsity by proposing a hybrid model that executed deep learning of users and items’ latent factors from side information and CF obtained from the rating matrix [97]. According to some authors, CF recommender systems suffer from Complete Cold-Start (CCS) problem where zero rating records are present and Incomplete Cold-Start (ICS) problems where significantly less rating records are available for new items or users in the system [35]. Hence, they used timeSVD++ models and used temporal dynamics of user preferences and item features for improvised recommendations.

4.7.2 Variational Autoencoders (VAE)

Due to the drawbacks of collaborative based filtering, the authors in [103] used variational autoencoders to include rating and content information for the recommendation. The novelty in the framework is that the latent distribution for content is learned in latent space rather than observational space. In another paper, the authors demonstrated the enhancement of modeling in CF with side information by using Variational Autoencoders-based Collaborative Filtering (VAE-CF) [92]. The authors [120] proposed a novel healthcare recommender system collaborative variational deep learning (CVDL) to eliminate the limitations of data sparsity and cold-start problem from recommender systems to generate useful recommendations. The paper [121] proposes a deep autoencoder model to learn low and high-dimensional features to remediate data sparsity and data imbalance problems in recommender systems.

To make the task of feature selection simpler and more efficient, this paper [122] proposed a fuzzy entropy-based deep learning recommender system to decrease data dimensionality and eliminating unnecessary noise from data. This paper [123] proposed an end-to-end recommender model by taking several content sources, for example, textual content, graphical content, and others. The authors used a stacked autoencoder to carry out this implementation. In this paper [124], the authors identified the limitations of side information in RS. Apart from having extensive detail about the rating, side information also contains a tremendous amount of noise. Hence, the process of feature extraction from such side information becomes challenging. So, the authors proposed a Stacked Discriminative Denoising Autoencoder by merging deep learning with matrix factorization-based RS.

4.8 Findings and Open Issues

Autoencoder is an efficient feature representation learning method that learns feature representations from user–item content features. Autoencoder can be used in recommender systems by learning low-dimensional feature representations at the outer layer or by building the interaction matrix directly in the reconstruction layer. Autoencoder models have a high capability to work with noisy data to learn the complicated and hierarchical structure from the input data. Sparse autoencoders are very efficient for low-dimensional feature extraction from input data using a supervised learning technique. Denoising autoencoders are trained by initializing layers wherein each layer produces input data for the next layer. One of its significant advantages lies in implementing recommender systems as denoising autoencoders can be stacked to decrease the processing errors.

Autoencoders suffer from certain limitations. Autoencoder based Collaborative Filtering (ACF) is incapable of incorporating non-integer ratings, and partially observed features result in low recommendation accuracy. Another major disadvantage of using deep autoencoders is that they are incapable of searching for an optimal solution, and due to high parameter tuning, the time complexity of the training process increases manifold.

4.9 Neural Matrix Factorization (NMF)

Matrix factorization (MF) is one of the most widely and commonly used Collaborative Filtering approaches. Such MF models learn the low-dimensional embeddings of items and users in common latent factor space. The system generates a user–item matrix where the ratings provided for every item by the users are captured. However, due to the problem of data sparsity, the user–item matrix is sparse. Such a sparse matrix is factorized into a dense matrix to make computations simple. This is done by taking the product of two low-rank matrices consisting of user–item embeddings. Upon learning the latent factors, the similarity between user and item is computed. In addition to this, the newly discovered preferences are considered by analyzing the user and item latent factor representations

To learn such latent user–item factors, loss function has to be calculated as given in Eq. 15:

Here, vi represents the latent factor of item i, vu represents the latent factor of user u, rui represents the rating given to item i by user u, and \( \lambda \) represents the item bias to prevent overfitting.

Factorization Machines (FM) are supervised learning models that are used in various prediction problems like regression, classification, and others. These models map random real features into low-dimensional latent feature vector space. They can determine the parameters of the model precisely given a sparse user–item matrix and train with linear complexity. Such characteristic makes FMs ideal for implementing real-world recommender systems.

4.9.1 Deep Factorization Machines (DeepFM)

DeepFM is a model wherein the capabilities of Factorization Machines are combined with deep feature learning to form a novel neural architecture, wherein Factorization Machines and Deep Neural Network (DNN) are integrated to model both low-dimensional and high-dimensional feature vectors.

As the name suggests deepFM is a dual component model, i.e., the factorization machine component and deep learning component. These components share the same input. These two components are jointly trained for the prediction model computed using Eq. 16.

Here, \( \hat{y}\varepsilon \left( {0,1} \right) \), \( y_{\text{FM}} \) represents the output of the FM component, and \( Y_{\text{DNN}} \) represents the output of the deep learning component.

The main advantages of using this model are no requirement for pre-training the model, its capability to learn both low-dimensional and high-dimensional features. The shared feature embedding of FM and deep components enables the system to avoid extensive feature engineering.

Rather than conventional push–pull methodology among the user and item pairs, the authors proposed LRML (Latent Relational Metric Learning) model to learn latent relations that described every user–item interaction [96]. The authors observed in [114] that although Weighted Matrix Factorization (WMF) can learn latent features from implicit feedback, these features are still not good enough to train a CNN model. The authors in [95] proposed a novel matrix factorization method with neural network architecture and novel use of binary cross-entropy loss function.

In another work, the authors identified that Factorization Machines (FM) are incapable of modeling the nonlinear aspect of the real-world data [104]. In [54], the authors observed that the recommendation systems primarily relied on initializing the user and item latent feature vectors. In this way, they used deep learning to estimate the initialization in MF for trust-aware social recommendations and to differentiate the neighborhood effect in the user’s trusted social circle. This was achieved with the help of the deep learning-based matrix factorization (DLMF) model.

4.10 Findings and Open Issues

Neural matrix factorization provides exemplary results for latent feature models; however, it is influenced by methods involving local graph structure.

One limitation of neural matrix factorization is that to investigate the scope of system architectures, activation functions, regularization techniques, and cross-validation strategies. There is a risk of overfitting, which may lead to erroneous or insignificant insights.

4.11 Neural Collaborative Filtering (NCF)

In recommender systems, the users and items collate together to create a mapping that helps to under the similarity between the two and further recommend items to the user. Since the phenomenon of generating recommendations in a dual process, which involves both user latent factors and item latent factors. Hence, to model this two-way interaction between user and item, Neural Collaborative Filtering (NCF) is used. The scoring function of NCF can be computed using Eq. 17:

Here, f(·) represents the multilayer perceptron, \( s_{u}^{\text{user}} \) represents the side information of the user profile, \( s_{i}^{\text{item}} \) represents the side information of the item features, and \( \theta \) represents the parameters of the network.

It becomes easier to combine the neural representation of MF with Multilayer Perceptron to create a model that incorporates the linear characteristics of matrix factorization and nonlinear characteristic of MLP. This results in improved performance of the recommender engine. The cross-entropy loss for implicit and explicit feedback is given by Eq. 18:

Here, u represents the user, i represents the item, and rui represents the rating of item i given by user u.

4.11.1 Collaborative Deep Learning (CDL)

This technique is an amalgamation of deep representation of content and collaborative filtering for the ratings. CDL is a deep learning recommender model that learns from the text of the reviews given by the users [114]. It combines the autoencoder and Click Through Rate (CTR) to model ratings.

To learn from such review texts, CDL implements Bag-Of-Words (BOW) technique. This model has certain limitations, such as it models only item review texts, limiting the capabilities of a recommender system, and due to its usage of the BOW method, it only considers the frequency of words in a text, and not the similarities between different texts. Another limitation of the CDL model is that it considers the order of words in a text which sometimes contradicts the semantics of the text. Figure 15 represents the architecture of SDAE, upon which the SDAE is built. Figure 16 represents the architecture of CDL.

The authors argued that the sole criteria for recommending items were user reviews [102]. However, this often led to data sparsity, hence degrading the recommendation quality. To avoid this, the authors came up with a collaborative deep learning (CDL) model, which simultaneously performed deep learning of content and collaborative filtering for the ratings. In another work, the authors used CDL-based Bayesian deep learning for Recommender Systems model to achieve integrated intelligence that involved both perception and inference in a principled probabilistic framework [89]. In [70], the researchers attempted to improve YouTube recommendations by using a deep collaborative filtering model that efficiently integrated various signals and added layers of depth to the interaction. Some authors in their paper [83] compared the Deep Neural Network (DNN) features with visual features for artwork recommendation and concluded that DNN features outperformed the other.

The authors in [90] presented a novel neural network model, Neighborhood-based Neural Collaborative Filtering (NNCF), which jointly characterized both user–item interactions and neighborhood information for the recommendation.

The authors in [125] indicated that the Collaborative Filtering algorithms primarily deal with low-dimensional and linear interactions between users and items. To address this issue, the authors proposed a novel deep CF recommender algorithm for service recommendations. The authors in [126] used MLP to learn the interaction function to implement the recommender system. The authors [127] proposed a deep multi-criteria collaborative filtering RS to generate accurate multi-criteria recommendations.

4.12 Findings and Open Issues

Collaborative deep learning (CDL) can perform deep learning of content and collaborative filtering for the ratings of items. This allows the model to balance the effects of side information and interaction history.

One significant limitation of the CDL model is that it cannot apprehend contextual information with word embeddings and convolutional filters.

4.13 Deep Belief Network (DBN)

It is a class of deep learning where several layers of hidden units are stacked together to form a deep net. The hidden units present in each layer are not directly connected. They can be viewed as a class of unsupervised networks like Restricted Boltzmann Machines (RBM) [128]. Authors [129] observed that classic neural networks suffer from the problem of optimization. DBN was introduced to solve this problem [130]. This technique integrates supervised and unsupervised learning by implementing a local search to get an optimized result and learn the data distribution without prior knowledge [129]. DBN employs a stacked RBM structure for extracting deep features of data [69]. Thus, it can be viewed as a generative probabilistic model [36]. In DBN, the hidden layers are trained in a bottom-up manner. This pre-training of the layers is termed as the pre-train stage, and upon the addition of the objective layer, the training of the model becomes supervised [131]. Figure 17 represents the architecture of the Deep Belief Network.

The standard RBM is given by Eq. 19

where \( \vec{u} \) is the visible unit vector and \( \vec{v} \) is the hidden unit vector. w represents the weight matrix and \( \vec{x} \) is the visible bias vector and \( \vec{y} \) is the hidden bias vector.

In video recommender systems, generating recommendations for videos becomes complicated because extracting intricate features from graphics is difficult. The workflow of such a recommender system is presented in Fig. 18. To make this task simpler, authors in [69] divided the user–item matrix into 0–1 matrices and feed them as input to the DBN model. Hence, post-training, the difference between trained features and raw features becomes prominent. Afterward, UBCF calculates the similarity between neighboring users to generate recommendations.

4.14 Findings and Open Issues

One of the most significant advantages of DBN is that Deep Belief Network has been successfully employed to perform extensive feature engineering. It is useful in extracting hidden and useful features from audio data [106]. Another significant advantage of using DBN is that it is also used for dimensionality reduction. DBN has also proved to help solve the data sparsity problem, which leads to the cold-start problem and generation of poor-quality recommendations.

However, DBN comes with several limitations as well. Due to extensive parameter initialization, the DBN model is challenging to train. Another major disadvantage of DBN is that it cannot learn the representation of features from labeled and unlabeled data[132].

The training of the Deep Belief Network model can be further optimized to improve the recommendations.

4.15 Recurrent Neural Network (RNN)

Unlike feed-forward deep neural network, the layers present in a recurrent neural network form a graph, and hence the layers interact with each other. The graphs thus formed can either be cyclic or acyclic [107]. Essentially RNN captures the sequential data temporally, which is used for training the model [133]. The RNN takes the input and computes the weighted sums as given in Eq. 20. The architecture of the recurrent neural network is presented in Fig. 19.

where nx are the input nodes and nh are the hidden nodes.

The weights between input and hidden nodes are given by wxh, and whh gives the weights between the hidden nodes. Using Eq. 21, the activation values can be calculated.

The weighted sums of the output layer can be calculated as given in Eq. 22

4.15.1 Gated Recurrent Unit for Recommender System (GRU4REC)

GRU4REC is a session-based recommender system proposed by [134]. The input given to the model is an encoding of 1 of N, where N represents the number of items. The output of the system determines the possibility of an item to move to the subsequent session. If the item is active in the current session, the coordinate is assigned value 1, otherwise 0. The architecture of GRU4REC is given in Fig. 20.

The ranking loss function for this model is computed using Eq. 23:

Here, S represents the sample size, \( \hat{r}_{si} \) represents the scores on negative item i at session s, \( \hat{r}_{sj} \) represents the scores on positive item j at session s, and \( \sigma \) represents the logistic sigmoid function.

In GRU-based recurrent neural networks (RNN), the input given to the system depicts the current state of the system, and the output of the system depicts the subsequent event of the system. Unlike in GRU4REC, where 1-of-N encoding is used, in GRU based RNN for session-based recommender systems, a weighted sum of the representations is used. In such cases, the events which occurred in the past are given as discounted when fed as an input. Hence, to make the system stable, the input vector is normalized.

When multiple feed-forward layers are added to the system, the output predicts whether the item will be included in the next session or not. The addition of multiple GRU layers uses the output of the previous layer as the input to the next layer. This deep connection of layers improves the performance of the system.

In some research studies, the side information was included to make the recommendations efficient. It was observed in [10] that recommender systems based on collaborative filtering could be enhanced by including side information like natural language reviews. To carry out this task, the authors used the bag-of-words technique along with RNN. In [67], according to the authors, current recommendation techniques using RNN only took into account the user’s past activities and did not consider the essential side information. To cater to this problem, they used contextual recurrent neural networks (CRNN) to include the side information to give recommendations. Some authors considered the temporal while making the recommendations and studied its effects. To model the temporal behavior in recommendation systems, a group of researchers [21] introduced the Temporal Deep Semantic Structured Model (TDSSM) based on RNN, where they used long-term static and short-term temporal user choices to improvise the performance of the recommender system.

Similarly, researchers in [62] observed that the conventional POI recommender systems did not consider the temporal aspect while generating the recommendation. Hence, they came up with the idea to capture sequential check-in data of users and used RNN based deep neural network to provide recommendations. Catering to the same issue, the authors in [85] observed that a notable problem with recommender systems is that they do not encompass the context of time. So, they came up with the idea of using RNN and including the temporal shift while performing recommendations.

In a few research papers, the RNN technique was applied for session recommendations. In [37], the authors used the recurrent neural network for session-based recommendations. They incorporated two procedures for improving the model. The first one was data augmentation, and the second was to observe shifts in the input data distribution. Similarly, it was observed in [38] that in the real-life recommender systems, the session-based recommendations were based solely on the clicks of a user session. To solve it, the authors introduced several parallel RNN (p-RNN) frameworks to model sessions depending on the clicks and the features of the clicked items. The researchers in [41] proposed a model for session-based recommendations, where the recommendations were made at the starting of the sessions, which avoided the cold-start problem. In [72], the authors used Long Short-Term Memory (LSTM), a neural network-based RNN technique for quotes recommendation. Similarly, to make recommendations using the data content describing the items, the authors proposed Ask Me Any Rating (AMAR), which used Long Short-Term Memory (LSTM) networks to simultaneously learn two embeddings representing the items to be recommended and user preferences [65]. The concept of LSTM was also used in [41], where the authors proposed Recurrent Recommender Networks (RRN) to incorporate dynamic recommendations using Long Short-Term Memory (LSTM) autoregressive model.

In some papers, the problem of data sparsity was addressed and rectified using RNN. The authors in [40] introduced a ReLaVaR model that addressed data sparsity by considering the network recurrent units as stochastic latent variables with a former distribution performed over them. Similarly, it was observed that personalizing Adaptive User-Interfaces (AUIs) became difficult with several interaction modules as the item-user matrix is sparse. The authors in [84] used architecture consisting of RNN that performed sequential recommendations of content and control elements to solve this problem. Several other independent research works were performed using RNN. The authors in [46] analyzed that in documents, the semantic similarities between texts were not considered for making recommendations. They were able to effectively model the content of the abstracts of the documents using linear regression based on RNN. In another work, it was observed that the evolution and drifting of features might take place over time as users interact with different items. Hence, the authors utilized the recurrent neural network to learn a representation of impact from drift, evolution, and co-evolution of user and item attributes to make recommendations [82]. To improve the performance of multi-task learning, the authors used deep RNN to encode the text sequence into a latent vector trained on the collaborative filtering procedure [49].

Performing recommendations using sentiment analysis of short texts like sentences did not yield correct results due to less contextual information. Hence, the authors used the deep learning technique recurrent neural networks to solve this problem [99]. To find relevant citations or related work, the authors came up with Neural Citation Network (NCN) model to provide a curated list of high-quality candidates from a short passage of text [63]. For generating sequential recommendations, the authors in [13] came up with user-based RNN, which allowed generating personalized suggestions. In another work, to find relevant research articles, researchers usually rely only on the keyword-based search or by following citations. In the paper, the authors analyzed user activities to provide recommendations [65]. For song recommendations, it was observed by the authors that the combination factor of lyrics and genre was not included for the recommendation [45]. Hence, they used RNN to predict the user’s next song of interest-based on the similarity factor. Some authors analyzed that the recommendation quality of rating predictions in traditional systems had scope for improvement [75]. Hence, they proposed a Neural Rating and Tips generation (NRT) framework, which could predict accurate ratings and create conceptual tips and strong linguistic quality. In another work, the authors addressed the limitation of efficient methods in the e-learning area [74].

To improve the recommendations, the authors used Gated Recurrent Unit (GRU) neural networks, a type of RNN. In another work, the authors deployed TARMF (Topical Attention Regularized Matrix Factorization) model that co-learned user and item details from ratings and customer reviews by optimizing matrix factorization and an attention-based GRU network [19].

To fully exploit and understand the user sentiments present in the review texts, the authors in this [135] paper implemented a deep learning-based neural network model, SDRA. Gated Recurrent Unit (GRU) is a type of Long Short-Term Memory (LSTM) model, which is an extension of an RNN model. GRU is a much simpler version of the LSTM model; however, the parameter setting in GRU for better accuracy is much easier as compared to LSTM [131].

4.16 Findings and Open Issues

Recurrent neural network is efficient for using sequential data. Also, for subsuming side information like time, logs, etc., RNNs are beneficial.

An important open issue is for music recommendation systems, the recommendations can be improved by performing temporal analysis of the features of music using recurrent neural network, and fascinating characteristics of music can be uncovered by interpreting automatically learned features. Another scope is to explore recurrent neural networks (RNNs) with bi interaction pooling to model sequential data. To further improve the performance of LSTM models, attention-based memory networks can be explored. The possibility of using a sequence-to-sequence (seq 2seq) learning framework can be explored, aiming to create a convincing recommendation description to aid customers in making a better purchase.

An important limitation of RNN that can also be considered as an open issue is that although applying the neighborhood approach in RNN can lead to good results, but it is also suggested that some baselines in recent research studies are not well-justified and correctly evaluated.

Gated Recurrent Unit primarily addresses the problem of the vanishing gradient. GRUs often combine several memories and gates to record sequential activities. Another advantage of this model is that it can perform rating prediction and create general tips with linguistic quality. This model efficiently stores contextual information for rendering user–item latent features into a brief sentence.

4.17 Hybrid Networks

4.17.1 word2vec and Convolutional Neural Networks

The authors in [79] worked on a blog recommendation. They stated that due to a large number of blogs surfacing each day, it was crucial to recommend the right blogs to the right users. For this, they came up with the Boosted Inductive Matrix Completion method. Hence, they used the side information of users and blogs for adequate recommendations.

4.17.2 RNN and CNN