Abstract

Deflection measurements on structures continue to be a challenge with current sensor technologies. Material degradation and changes in the mechanical properties over time (e.g. creep and shrinkage in concrete bridges) directly impact the deflections exhibited by a structure. In this article, we introduce and discuss the evaluation of a novel laser- and video-based displacement sensor prototype to monitor displacements and rotations on structures remotely. The sensor is inexpensive, using off-the-shelf components, but also accurate and practical for situations that do not allow the use of conventional displacement sensors, which require a reference base. In contrast to other image-based approaches such as digital image correlation (DIC) or Eulerian-based virtual video sensors (VVS), the digital camera of our proposed solution is located at the measurement location on the structure. The sensor was evaluated using laboratory tests to determine the practicality, accuracy, and sensitivity to lighting conditions. The accuracy of the sensor was found to be approximately ± 0.9 mm (± 0.035 in) (95% prediction limits) for a 30.5 m (100 ft) measurement distance under laboratory conditions. Finally, we applied and evaluated the sensor under real-world conditions on a concrete deck/single steel box girder pedestrian bridge under static and dynamic loading conditions as well as on a five-story steel moment-frame building under ambient conditions. The results demonstrate that the prototype offers an inexpensive yet practical and accurate solution for monitoring displacements and rotations remotely, which is essential for field applications.

1 Introduction

Deflections and rotations may be the most important variables associated with structural health since they directly correlate with the serviceability of the structure [1]. Moreover, effects such as creep, shrinkage, and prestressing losses in prestressed/post-tensioned structures directly impact deflection. The same observations can be made with regard to the effects of environmental processes on a structure (e.g. corrosion, carbonation, overall structural aging, etc.).

Although highly useful, monitoring of deflections on structures has proven to be challenging due to the shortcomings of current displacement measurement technologies, the most important one being that they require a reference base [2]. In addition to the harsh environmental conditions often surrounding bridges and civil structures such as parking garages and tall buildings, the scale of these structures often makes displacement measurements more difficult. The current commercially available technologies for measuring displacements, such as linear variable differential transformers (LVDTs) or potentiometers, GPS-based systems, accelerometers, and laser distance meters, either require the sensor to be connected to a fixed reference, are of low resolution, are unable to measure slowly varying displacements, or are expensive, respectively [3]. Thus, a cost-effective and reliable solution for monitoring slowly varying displacements on structures is needed.

A significant amount of research has been performed and published on the monitoring and evaluation of dynamic properties of structures using video-based sensors. Measurements have typically been carried out in short intervals of seconds or minutes. Results from these tests are typically compared to more traditional sensors such as accelerometers and LVDTs [4,5,6,7,8,9]. Most video-based sensor solutions to date have placed the camera at a fixed location off-structure [10,11,12,13,14,15]. The camera is then pointed at an area of interest on the structure and data are collected. Fuhr et al. proposed a methodology using diffraction gratings and CCD arrays to measure linear displacement and two angles at a remote point of interest [16].

Our proposed sensing approach instead places a digital camera at the measurement point of interest on the structure. A set of lasers is placed at a fixed location off-structure and then focused on a translucent panel attached to the sensor unit containing the camera [17]. Any deflections experienced by the structure are directly experienced by the camera [18, 19]. The movement of the camera directly corresponds to movement of the laser dot location on the translucent panel (the recorded laser dot moves in the direction opposite to the movement of the camera). A similar method was proposed by Zhao et al. in which a smartphone camera is used to record laser projection displacements on a projection panel [20]. While there are similarities between the two approaches, the main difference lies in the “packaging” of the movable part of our sensor. The proposed methodology encloses the camera inside an opaque box and measures laser dot movement against a translucent panel from inside (see Fig. 1). Also, the camera is positioned perpendicular to the translucent panel, eliminating the need for projection angle transformations (the setup used by Zhao et al. placed the panel at a 45° angle to the camera [20]). An added benefit of our novel approach is that the sensor is less sensitive to rotational effects placed on the camera itself.

In the following sections, the proposed laser and video-based displacement sensor is described in detail. Two laboratory-based studies aimed at quantifying accuracy, repeatability, and sensitivity to varying lighting conditions are presented and the results from two real-world applications are discussed.

2 Sensor design

2.1 Components, equipment, and setup

Our proposed laser- and video-based displacement sensor is comprised of two main components: a fixed part and a movable part. Figure 1 provides a general overview of these two components. The fixed part (Fig. 1a) is placed at an immovable location where it remains fixed for the entire duration of planned monitoring. It is comprised of two laser emitters secured to a fixed support. For this prototype, two inexpensive lasers (Manufacturer and model: Pinty, FBA_Pinty GLS) were utilized that emit green light, producing two dots when focused on the translucent panel of the movable part of the sensor (Fig. 1b). Appropriate protective gear needs to be used when working with lasers. One element of the system that significantly affects the operational life is the power consumption. The proposed system, including fixed and movable parts, can be powered either by (a) batteries alone, (b) batteries in conjunction with photovoltaic systems, or (c) line power, depending on availability.

The movable part of the sensor (Fig. 1b) is comprised of three main elements: a translucent panel, eight red light-emitting diodes (LEDs), and a digital video camera. The translucent panel is made of medium-weight plain white paper stock, measuring 100 mm (4 in) wide × 150 mm (6 in) high. The panel is fastened securely to the sensor case to ensure the panel remains planar and orthogonal to the camera. The red LEDs are located around the perimeter of the panel and are used to provide a reference coordinate system for calculating displacement and rotation of the movable part of the sensor during monitoring. The digital video camera used for this prototype is a GoPro Hero 3-Black Edition (GoPro, San Mateo, CA, USA). Image resolution used during single image data collection is 3000 × 4000 pixels with images being recorded in the RGB color space. Videos are recorded at 30 frames per second (fps), with a resolution of 2704 × 1536 pixels in the RGB color space. The camera is fixed to the inside of the sensor case via a 3D-printed bracket attached to the case. The camera location was chosen so that the recorded image captured the entire translucent panel and as little area beyond the panel as possible, while remaining within the focal length requirements of the lens.

The focal length of the standard lens that comes with the GoPro Hero 3-Black is 15 mm (0.591 in). This short focal length produces what is commonly referred to as a “fish eye” effect. To reduce this distortion at the camera, a 10 × magnification lens (Brand and model: Vivitar, Series 1 Close-Up Macro Lens (Sakar International, Inc., Edison, NJ, USA)) was attached to the face of the camera.

The GoPro Hero 3-Black Edition was purchased in 2014 for $400 USD. The following components were all purchased in 2019: a 64 GB SD memory card for $20 USD, two Pinty lasers for $20 USD each, and the housing for the movable part of the sensor for $40 USD (fabricated on a 3D printer). For a long-term setup, the movable part of the sensor could become more costly, requiring parts with higher resistance to environmental exposure. However, due to the nature of technological and material advancement, there would likely be a marked decrease in the cost of some of the components making up the system (e.g. video camera, lasers, data storage hardware), especially given the wide range of applications in which they are currently used.

Installation of the sensor in the field is relatively straightforward, but does require care. The movable part should be attached firmly to the structure with clamps or adhesive at the measurement location. The fixed part should be installed securely at a location that provides a direct line of sight and that does not move or vibrate notably. During installation of the fixed part, the lasers need to be oriented such that they point near the vertical midpoint of the translucent panel, with enough space between the projected laser dots for the processing software to distinguish the two individual dots. The GoPro camera housed in the movable part can be accessed via a cell phone app using a WiFi connection, which works for distances up to 100 m (330 ft) when a range extender is used. For longer distances, or when no range extender is used, a second person can stay within range of the movable part to verify that the lasers are set up appropriately and communicate this via cell phone or two-way radio.

2.2 Sensing methodology

The overall goal of the research was to develop a sensing methodology for capturing static and dynamic displacements on structures. A successful methodology is both accurate and repeatable, while minimizing data processing times. Two data processing approaches were considered for this research: centroid detection with color thresholding of the green laser dots used as reference points and cross-correlation techniques, which maximize a function describing the displacements between an image with a known location and orientation in space and an image of unknown location and orientation. Figure 2 provides a flowchart of the processing steps used in the sensing methodology evaluated in this article.

2.2.1 Distortion correction

As mentioned in Sect. 2.1, a 10 × magnification lens was attached to the face of the camera to minimize the “fish eye” effect of the small focal length of the camera. However, upon visual inspection of the captured images, it was apparent that not all of the distortion caused by the small focal length of the camera was removed. Additional post-processing of captured images to remove remaining distortion was performed prior to color thresholding procedures being applied [21,22,23,24]. Results were then compared to pre-distortion correction color thresholding results. The intent of the comparison was to determine the accuracy gain obtained by performing the distortion correction as well as the processing time required.

As part of MATLAB’s Computer Vision Toolbox [25], several tools are available to assist in correcting image distortion. The MATLAB function [J, newOrigin]=undistortImage(I, cameraParams) was used in this study, where \(J\) is the output undistorted image, newOrigin is a 2-element vector containing the output image origin, \(I\) is the M-by-N-by-3 truecolor input image, and cameraParams is the object used to store camera parameters. The camera parameters are determined using the estimateCameraParameters() function in MATLAB. This function returns an object containing estimates for the intrinsic and extrinsic parameters and distortion coefficients of a particular camera. The function is supplied with checkerboard corner points detected in several calibration images taken with the camera in question, along with the corresponding real-world corner coordinates derived from the measured size of the checkerboard squares. Eleven images were used for the calibration in this research. Checkerboard squares were measured using a digital caliper and determined to be 18 × 18 mm. Figure 3a shows one of the checkerboard images prior to processing and Fig. 3b shows the same image after processing using the distortion correction parameters determined from the estimateCameraParameters() function.
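The kind of lens model that such a correction inverts can be illustrated with a minimal sketch. It is written in Python purely for illustration and is not the MATLAB implementation used in the study. It assumes a two-coefficient radial distortion model acting on normalized image coordinates; the coefficient values `k1` and `k2` and the function names are hypothetical.

```python
def distort(x, y, k1, k2):
    """Apply a two-coefficient radial distortion to normalized coordinates."""
    r2 = x * x + y * y
    scale = 1.0 + k1 * r2 + k2 * r2 * r2
    return x * scale, y * scale


def undistort(xd, yd, k1, k2, iterations=20):
    """Invert the radial model by fixed-point iteration."""
    x, y = xd, yd  # start from the distorted point
    for _ in range(iterations):
        r2 = x * x + y * y
        scale = 1.0 + k1 * r2 + k2 * r2 * r2
        x, y = xd / scale, yd / scale
    return x, y


# Hypothetical coefficients; real values come from a checkerboard calibration.
k1, k2 = -0.25, 0.05
xd, yd = distort(0.4, -0.3, k1, k2)
xu, yu = undistort(xd, yd, k1, k2)
```

In this sketch the recovered point `(xu, yu)` returns to the original `(0.4, -0.3)`; a full image correction would apply the same inversion to every pixel and resample.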

2.2.2 Centroid detection technique

The goal of the video/image centroid detection technique is twofold. The first goal is to accurately and efficiently extract the centroid location of each green laser dot in a two-dimensional space. The second, equally important goal is to orient the centroid location extracted from each green laser dot with respect to some known “constant” location. In this study, the red LEDs located around the perimeter of the sensor case serve as the fixed location by which the green laser dots can be oriented (see Fig. 4a). The laser emitters project a set of two green dots onto the translucent panel of the sensor.

The first task when approaching this processing step is to correctly identify the centroid of each green dot within a single image/frame taken from the camera. This is accomplished using color thresholding procedures, e.g. following Huang and Wang [26]. Each image file contains information regarding the color and intensity of each pixel within the image. Color thresholding allows for the isolation of certain pixels within an image that fall within pre-defined color/intensity criteria. Certain colors, and certain color intensities, can then be isolated within an image. Kromanis et al. used a similar approach by developing a MATLAB-based application that can track the locations of “blob-like” objects based on color and brightness differences from the surrounding regions [27].

Preliminary testing was performed to determine the thresholds necessary to repeatably identify only the green dot locations. A sample image containing the two green laser dots to be isolated was recorded. The image was broken into its three primary color bands: red, green, and blue. Histograms were generated for each of these color bands to determine the location of highest intensity within each color band. Threshold boundaries were then selected to capture the most data within the green band, and the least data in the red and blue bands. Once isolated, additional pixel information within the image was removed to improve processing times.

Figure 5 displays an example of how the thresholding procedure works. The three grayscale images across the top row display the isolated red, green, and blue color bands contained in the image. Lighter, white, pixels are indicative of higher color intensity at that location. The second row of images within the figure show histograms for each color band. These identify the quantity of pixels at each intensity level.

Thresholds were then placed on each color band to mask out the undesired color ranges from each image. In the case of this study, the green laser dots have higher intensities of color within each color band. Specifically, having the following boundaries for each color band allowed for reliable and repeatable green laser dot isolation:

Red color band threshold: 200–255.

Green color band threshold: 200–255.

Blue color band threshold: 200–255.
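The thresholding step listed above can be sketched as follows. This is an illustrative Python version, not the MATLAB code used in the study, and the tiny test image and its pixel values are invented for the example. A pixel is kept only if all three bands fall within 200–255, which separates a saturated laser dot from a red LED that is bright in one band only.

```python
def threshold_mask(image, lo=200, hi=255):
    """Return a binary mask: 1 where R, G and B all lie within [lo, hi]."""
    return [
        [1 if all(lo <= band <= hi for band in pixel) else 0 for pixel in row]
        for row in image
    ]


# Hypothetical 3 x 4 RGB image (values 0-255).
dark = (20, 30, 25)     # background
led = (250, 40, 40)     # red LED: bright in the red band only
dot = (210, 255, 220)   # laser dot: bright in all three bands
image = [
    [dark, dark, dark, dark],
    [dark, dot, dot, led],
    [dark, dot, dot, dark],
]
mask = threshold_mask(image)
```

Here only the four laser-dot pixels survive in `mask`; the red LED pixel is rejected because its green and blue bands fall below the lower bound.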

Once the color band thresholds have been applied to the original image, the resulting image contains only the image data of interest. From here, a built-in MATLAB function called regionprops() is used to extract several properties from the image, such as the areas of grouped pixels containing data, the perimeter of those grouped pixels, and the centroid (center of mass) of the grouped pixels in 2-dimensional coordinates.

Figure 4 shows the programmed MATLAB thresholding procedure and centroid detection technique at its intermediate steps: Fig. 4a shows the undistorted image upon being imported into the program, Fig. 4b shows the mask resulting from the applied color band thresholding, and Fig. 4c shows the original image with the locations of centroids calculated from regionprops() superimposed in the respective locations.

Several algorithms comprise the regionprops() function, but the main interest for this research is the centroid calculation. After the thresholding procedure has cleared all pixels in the image that do not contain data relevant to the green laser dot locations, the regionprops() function is called. The function first loads the original image and converts it to black and white. For this, all pixels with data relevant to the laser location are assigned the color white (a value of “1” in the image array), and all other pixels are assigned the color black (a value of “0” in the image array). The function then fills any small holes existing in the regions of interest to ensure that a continuously filled region exists. The area of the region is then calculated based on pixels contained in each enclosed region. Working row by row, and column by column, the program determines the area contained within each row and each column of the image array. The weighted centroid is defined as the following:

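\[ X_{CEN} = \frac{\sum_{i=1}^{N_{ref}} x_i A_i}{\sum_{i=1}^{N_{ref}} A_i}, \qquad Y_{CEN} = \frac{\sum_{i=1}^{N_{ref}} y_i A_i}{\sum_{i=1}^{N_{ref}} A_i} \]

where \(X_{CEN}\) and \(Y_{CEN}\) are the coordinates of the centroid of each laser dot, \(x_i\) and \(y_i\) are the centroid coordinates of each pixel containing laser data (\(i = 1\) to \(N_{ref}\)), and \(A_i\) is the area of each pixel [28,29,30,31].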
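A minimal sketch of the centroid calculation on a binary mask is given below, in Python for illustration only. It assumes unit pixel areas (A_i = 1), 8-connected grouping of pixels, and zero-based pixel indices (MATLAB indices start at 1); the function name and the sample mask are hypothetical, and the sketch does not reproduce the hole-filling step described for regionprops().

```python
def region_centroids(mask):
    """Label 8-connected regions of a binary mask and return their centroids.

    Each centroid is (x_cen, y_cen) with x the column index and y the row
    index, computed as sum(x_i * A_i) / sum(A_i) with A_i = 1 per pixel.
    """
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    centroids = []
    for r0 in range(rows):
        for c0 in range(cols):
            if mask[r0][c0] != 1 or seen[r0][c0]:
                continue
            # Flood-fill one region, accumulating coordinate sums and area.
            stack = [(r0, c0)]
            seen[r0][c0] = True
            sum_x = sum_y = area = 0
            while stack:
                r, c = stack.pop()
                sum_x += c
                sum_y += r
                area += 1
                for dr in (-1, 0, 1):
                    for dc in (-1, 0, 1):
                        rr, cc = r + dr, c + dc
                        if (0 <= rr < rows and 0 <= cc < cols
                                and mask[rr][cc] == 1 and not seen[rr][cc]):
                            seen[rr][cc] = True
                            stack.append((rr, cc))
            centroids.append((sum_x / area, sum_y / area))
    return centroids


# Hypothetical mask containing two separate "laser dots".
mask = [
    [0, 0, 0, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 0, 0],
    [0, 1, 1, 0, 0, 1, 0],
    [0, 0, 0, 0, 1, 1, 1],
    [0, 0, 0, 0, 0, 1, 0],
]
centroids = region_centroids(mask)
```

For this mask the two regions yield centroids at (1.5, 1.5) and (5.0, 3.0), showing that the result is not restricted to whole-pixel positions.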

2.2.3 Cross-correlation techniques

Cross-correlation was used as an alternative to the centroid technique discussed in Sect. 2.2.2. Cross-correlation measures the similarity between two signals as a function of the displacement between them. Instead of attempting to locate the centroid, cross-correlation attempts to mathematically describe the difference between two signals. This concept can be incorporated into image-based analysis, where data extracted from an image (the “signal” of the image) are compared to a reference image by means of cross-correlation to determine the displacement function between the data from the two images. For two discrete two-dimensional signals \(X\) and \(H\), indexed by row \(m\) and column \(n\), the cross-correlation function is defined as follows:

\[ C(k,l) = \sum_{m}\sum_{n} X(m,n)\,\overline{H}(m-k,\,n-l) \]

where \(\overline{H}\) denotes the complex conjugate of \(H\), and \(k,l\) represent the displacement (lag) row and column indices. Cross-correlation analysis produces a value for the shift along each of the two principal axes between the two images, which corresponds to the maximum value of the cross-correlation function. The only variable portion of each image captured by the sensor is the green laser dot locations. Therefore, shifts found during cross-correlation directly correspond to movements observed in the green laser dots.

Several built-in functions within MATLAB are available to perform the desired cross-correlation procedures. For the purpose of this research, the function xcorr2(A, B) was used. The xcorr2(A, B) function returns the cross-correlation of matrices A and B with no scaling. Upon completion of the xcorr2(A,B) function, the maximum amplitude of the returned signal from cross-correlation is identified and assigned to the y principal axis. The ind2sub() function is then used to identify the index location of the signal at the max Y-axis value. The extracted X–Y coordinate corresponds to the shift between the original image signal and the image signal of interest.
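The peak search described above can be sketched with a brute-force implementation, written in Python for illustration and limited to real-valued images. It is not the MATLAB xcorr2() code itself; the function names and the small test images are hypothetical. The lag at which the correlation is largest is returned directly as a (row, column) shift.

```python
def peak_shift(x, h):
    """Return the integer (row, col) lag that maximizes the cross-correlation
    C(k, l) = sum_m sum_n x[m][n] * h[m - k][n - l] of two real images."""
    M, N = len(x), len(x[0])
    P, Q = len(h), len(h[0])
    best, best_lag = None, None
    for k in range(-(P - 1), M):
        for l in range(-(Q - 1), N):
            total = 0.0
            # Only indices where both images are defined contribute.
            for m in range(max(0, k), min(M, P + k)):
                for n in range(max(0, l), min(N, Q + l)):
                    total += x[m][n] * h[m - k][n - l]
            if best is None or total > best:
                best, best_lag = total, (k, l)
    return best_lag


def blob_image(rows, cols, top, left):
    """Hypothetical test image: a 2 x 2 bright blob on a dark background."""
    img = [[0.0] * cols for _ in range(rows)]
    for r in range(top, top + 2):
        for c in range(left, left + 2):
            img[r][c] = 1.0
    return img


reference = blob_image(6, 6, 1, 2)
current = blob_image(6, 6, 3, 3)   # blob moved 2 rows down, 1 column right
shift = peak_shift(current, reference)
```

In this example `shift` is `(2, 1)`. As with xcorr2(), the result is limited to whole pixels, and the sign must still be interpreted with the sensor geometry in mind, since the laser dot moves opposite to the camera.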

The xcorr2() function only produces results to the nearest pixel, hence an alternative cross-correlation technique was implemented that is capable of producing results with sub-pixel accuracy [32]. Instead of using a zero-padded Fast Fourier Transform (FFT) as with traditional cross-correlation techniques used in the xcorr2() function, the alternative method uses selective up-sampling by a matrix-multiply discrete Fourier transform (DFT). This approach uses all image data points to compute the up-sampled cross-correlation in a very small region near the peak of the DFT. This method has been termed single-step DFT algorithm (SSDFT) [32].
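The idea of refining the correlation peak only in a small neighbourhood can be illustrated with a simplified one-dimensional sketch in Python. It is not the two-dimensional SSDFT algorithm of [32]: it assumes periodic, well band-limited signals, uses a naive DFT, and evaluates the inverse transform directly at fractional lags. All names and the Gaussian test pulses are hypothetical.

```python
import cmath
import math


def dft(signal):
    """Naive discrete Fourier transform (O(N^2)), adequate for short signals."""
    n = len(signal)
    return [
        sum(signal[t] * cmath.exp(-2j * math.pi * k * t / n) for t in range(n))
        for k in range(n)
    ]


def subpixel_shift(reference, shifted, upsample=100):
    """Estimate the shift of `shifted` relative to `reference` to within
    1/upsample of a sample."""
    n = len(reference)
    cross = [s * r.conjugate() for s, r in zip(dft(shifted), dft(reference))]
    # Signed frequency indices so that fractional lags interpolate correctly.
    freqs = [k if k < n // 2 else k - n for k in range(n)]

    def correlation(lag):
        return sum(
            c * cmath.exp(2j * math.pi * f * lag / n)
            for c, f in zip(cross, freqs)
        ).real

    # Step 1: coarse peak at whole-sample lags.
    coarse = max(range(-n // 2, n // 2), key=correlation)
    # Step 2: evaluate the correlation only in a small window around it.
    steps = range(-int(1.5 * upsample), int(1.5 * upsample) + 1)
    return max((coarse + s / upsample for s in steps), key=correlation)


def pulse(center, n=64, width=3.0):
    """Hypothetical test signal: a Gaussian pulse."""
    return [math.exp(-0.5 * ((t - center) / width) ** 2) for t in range(n)]


estimate = subpixel_shift(pulse(30.0), pulse(32.3))
```

For these synthetic pulses the estimate lands close to the imposed shift of 2.3 samples, whereas a whole-sample search alone would return 2.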

3 Laboratory tests

3.1 Laboratory study 1: Static displacements

3.1.1 Setup and procedures

The primary goal of the first laboratory study was to characterize the sensor’s response at measurement distances, L = 3.05 m (10 ft), 15.2 m (50 ft), and 30.5 m (100 ft) under static displacements.

Figure 6a shows the fixed part of the sensor, which is comprised of a steel bracket approximately 457 mm (18 in) tall, secured to a heavy steel base. After securing each laser emitter to the fixed support, vertical and horizontal micro-adjusters located on each laser emitter were used to fine-tune laser dot locations on the translucent sensor panel prior to taking measurements. As a starting point, the green lasers were oriented such that they were located approximately at mid-height between the vertical maximum and minimum extents of the translucent panel. Figure 6b shows the configuration of the movable part of the sensor. The base of the movable part of the sensor was comprised of stacked HSS sections, welded together. A vice clamp was connected to the topmost HSS section. A high-precision digital caliper (Brand and model: Neiko (Zhejiang Kangle Group, Wenzhou, China), 01407A) was fixed between the vice clamp and a length of angle steel, which was used as a platform for the movable part of the sensor to mount against. The sensor case was affixed to the angle steel platform with a strong magnet placed on the inside of the sensor case. The angle steel created a movable platform for the sensor where precise vertical displacements could be measured via the digital caliper.

At each measurement distance, L, the movable part of the sensor was moved vertically in approximately 8 mm (0.315 in) increments until the lasers were at the extreme end of the translucent panel. Subsequently, it was moved vertically in the opposite direction, first by 4 mm (0.157 in), then subsequently in 8 mm (0.315 in) increments so that measurements were available at approximately 4 mm (0.157 in) increments across the face of the translucent panel. At each measurement location, three images were recorded. Image resolution used during image data collection was 3000 × 4000 pixels and images were recorded in the RGB color space. Processed data for each of the three images captured were also compared with each other to determine the amount of noise/variation resulting from each of the processing techniques.

3.1.2 Results and discussion

The objective of this study was to determine the sensor’s conversion factor, its accuracy depending on the used image processing technique, as well as demonstrate the improvement due to image distortion correction.

3.1.2.1 Conversion factor

Figure 7 shows correlation plots for each of the three measurement distances, L, comparing the recorded caliper reading (measured in mm) with the computed displacement in the vertical axis (measured in pixels) using the SSDFT technique. A first-order polynomial curve fit was found to be the best fit, with an average coefficient of determination \(R^2\) = 0.999 for all measurement distances. 95% prediction intervals were computed and used as a measure of accuracy of the sensor, which is discussed in detail in Sect. 3.1.2.2. The inverse of the slope of the curve fit function can be interpreted as the conversion factor, C, which was found to be independent of the measurement distance:

This conversion factor was used throughout the remainder of the laboratory tests.
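How a conversion factor follows from such a fit can be sketched in Python as below. The data are synthetic and chosen only to make the arithmetic easy to follow; they are not the measurements from this study. The sketch assumes the fit is made with caliper readings (mm) on the horizontal axis and image shifts (pixels) on the vertical axis, so that the inverse slope has units of mm per pixel.

```python
def fit_line(x, y):
    """Ordinary least-squares fit y = slope * x + intercept."""
    n = len(x)
    mean_x = sum(x) / n
    mean_y = sum(y) / n
    sxx = sum((xi - mean_x) ** 2 for xi in x)
    sxy = sum((xi - mean_x) * (yi - mean_y) for xi, yi in zip(x, y))
    slope = sxy / sxx
    return slope, mean_y - slope * mean_x


# Synthetic example: 8 mm caliper steps and the corresponding pixel shifts.
caliper_mm = [0.0, 8.0, 16.0, 24.0, 32.0]
shift_px = [1.0, 201.0, 401.0, 601.0, 801.0]

slope, intercept = fit_line(caliper_mm, shift_px)  # pixels per mm
conversion_mm_per_px = 1.0 / slope                 # mm per pixel
```

With these made-up numbers the slope is 25 pixels/mm, giving a conversion factor of 0.04 mm/pixel; a measured pixel shift is then multiplied by this factor to obtain a displacement in mm.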

3.1.2.2 Sensor accuracy

The accuracy of the sensor was taken as the 95% prediction intervals obtained for the curve fit described in Sect. 3.1.2.1 and computed for all three processing techniques for comparison. In addition, processing times between the three techniques were compared to determine overall processing cost and efficiency of each technique. Figure 8 illustrates the mean 95% prediction intervals vs. measurement distance for each of the three processing techniques and how they compare to each other.

Linear curve fitting was performed between prediction intervals and measurement distance, L for each of the three techniques. Table 1 shows the curve fitting results for each of the three processing techniques performed.

This would indicate that the single-step DFT technique is slightly better than the standard cross-correlation technique for both shorter and longer measurement distances.

The shortest processing time was observed for the centroid detection technique, averaging 0.313 s per image frame. Processing with the SSDFT and standard cross-correlation techniques took 10.6 and 278 times as long, respectively (approximately 3.3 s and 87 s per image frame).

3.1.2.3 Distortion correction

Pre- and post-distortion correction results were compared to determine the accuracy gained from the distortion correction step for the SSDFT technique. Figure 9 shows the 95% prediction intervals for pre- and post-correction processing as a function of the measurement distance, L. For L = 3.05 m (10 ft), the 95% prediction intervals decreased by 84.5%, for L = 15.2 m (50 ft), the 95% prediction intervals decreased by 44.3%, and for L = 30.5 m (100 ft), the 95% prediction intervals decreased by 24.4%. As can be observed, the distortion correction step significantly improves the accuracy of the sensor, with the greatest improvement seen for shorter measurement distances.

3.2 Laboratory study 2: effect of lighting conditions

3.2.1 Setup and procedures

The second laboratory study aimed to gather data on the sensitivity of the sensor to varying lighting conditions. Results for this study are presented and discussed in Sect. 3.2.2.

The fluorescent indoor lighting of the lab was used as the reference lighting condition. The movable part of the sensor was set up in the same manner as described in Sect. 3.1.1, using a measurement distance, L = 7.62 m (25 ft). Similar to Laboratory Study 1, image resolution used during image data collection was 3000 × 4000 pixels and images were recorded in the RGB color space. With the two laser dots focused near the center portion of the translucent panel, three images were recorded under reference conditions. Next, a bright fluorescent lamp was placed so that the entire translucent panel was completely illuminated. Three images were then recorded under these lighting conditions. The intent of this lighting condition was to simulate “direct sun” exposure of the sensor. Next, the fluorescent lamp was oriented so that only part of the translucent panel was illuminated. The lamp was oriented such that one of the green laser dots was within the illuminated portion of the panel, and one green laser dot was located within the unilluminated portion of the panel. The intent of this was to provide a “partial shade” condition. As before, three images were recorded under these conditions. For the final lighting condition, all lights within the lab, including the fluorescent lamp were turned off, with the intent to explore the functionality of the sensor at night. Figure 10 provides sample images recorded during each of the four lighting conditions, along with the exposure information for each.

3.2.2 Results and discussion

For each of the lighting conditions, the three images were processed and compared with each other to determine the level of noise contained in the image data. Since both parts of the sensor remained fixed during this study, any deviation in the calculated displacements was considered as intrinsic noise. Several factors could lead to the deviations observed in the processed data, but the most likely contributors would be small inconsistencies in the weighted average approach to calculating the centroid (centroid technique only) and minute ambient vibrations of the test setup. As discussed in Sect. 3.2.1, images recorded under normal indoor fluorescent lighting were used as the reference condition. Specifically, the locations from the three images captured under this lighting condition were used as the reference location. As would be expected, the indoor fluorescent lighting condition, when compared to the reference location, had the smallest deviation, averaging ± 0.008 mm (0.0003 in) or 0.007%. The difference between the simulated direct sun condition and the reference condition averaged ± 0.10 mm (0.0039 in) or 0.058%. Partial shade condition 1 deviation from the reference condition averaged ± 0.03 mm (0.0012 in) or 0.054%. Partial shade condition 2 deviation from the reference condition averaged ± 0.07 mm (0.0028 in) or 0.080%. Finally, the full darkness lighting condition had the largest deviation from the reference condition, averaging ± 0.19 mm (0.0075 in) or 0.164%. The results indicate that the proposed sensor has relatively minor sensitivity to varying lighting conditions.

4 Field tests

4.1 Field study 1: monitoring of a five-story building under ambient loading

4.1.1 Setup and procedures

The first field study was performed on the Engineering Building located on Portland State University’s campus. The Engineering Building has five above-grade levels and one below-grade level with an overall height of approximately 15.2 m (50 ft) above ground. The building is constructed of steel moment frames with prestressed concrete slabs at each level. The main stairwell of the building is open, providing a direct view from the lowest level to the top level. The intent of this study was to capture lateral deflections and rotation of the structure under low-moderate wind conditions. The laser emitters were fixed to the floor slab on the top level and positioned in a manner to project the lasers straight down the stairwell to the lowest level. Figure 11a shows the configured setup of the fixed part of the sensor. The movable part of the sensor was located on the lowest level and positioned with the translucent panel pointing straight up, with a direct line-of-sight to the laser emitters (see Fig. 11b).

A frame rate of 30 fps was used for all recordings. This provided a Nyquist frequency of 15 Hz, which was well above the expected fundamental natural vibration frequency of the structure. Individual frames extracted from the videos were 2704 × 1536 pixels. Three separate video recordings were taken during the study. Two of the recordings had a length of 30 s, and the final video had a length of 60 s. Upon completion, the recorded videos were imported into MATLAB for processing. Individual image frames were extracted from each video file and stored in a matrix. Similar to Laboratory Studies 1 and 2, individual image frames extracted from the videos were processed using the centroid technique and two cross-correlation techniques to determine the displacement of each green laser dot. The displacements for each laser were stored along with the frame number to create a displacement-vs-time array. A DFT was performed on each dataset to identify primary vibration frequencies captured by the sensor. The resulting spectrum was compared with the theoretical first mode of vibration of the structure obtained from current building code formulas and examined for additional frequencies present in the data.
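The frequency identification step can be sketched in Python as follows. The displacement record is synthetic (two sinusoids sampled at 30 fps) and does not represent the building data; the function name and signal parameters are hypothetical. The sketch removes the mean, evaluates a naive DFT up to the Nyquist frequency, and reports the frequency of the largest spectral peak.

```python
import cmath
import math


def dominant_frequency(samples, fs):
    """Return the frequency (Hz) of the largest DFT magnitude below Nyquist."""
    n = len(samples)
    mean = sum(samples) / n
    centered = [s - mean for s in samples]  # remove the static offset
    best_k, best_mag = 0, -1.0
    for k in range(1, n // 2 + 1):
        coeff = sum(
            centered[t] * cmath.exp(-2j * math.pi * k * t / n)
            for t in range(n)
        )
        if abs(coeff) > best_mag:
            best_k, best_mag = k, abs(coeff)
    return best_k * fs / n


fs = 30.0            # frames per second
nyquist = fs / 2.0   # 15 Hz
n = 300              # 10 s of synthetic data, 0.1 Hz frequency resolution
signal = [
    0.5 * math.sin(2 * math.pi * 1.7 * t / fs)
    + 0.1 * math.sin(2 * math.pi * 6.0 * t / fs)
    for t in range(n)
]
peak_hz = dominant_frequency(signal, fs)
```

For this synthetic record the dominant peak is found at 1.7 Hz. The frequency resolution equals the frame rate divided by the number of frames, so a 30 s recording at 30 fps resolves about 0.033 Hz.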

In addition to interpreting results for displacement in the X- and Y-directions, rotational characteristics of the data were analyzed [33,34,35]. The locations of the two green laser dots extracted from the first frame of each video were used as the reference location. The vector slope and magnitude between these initial two laser dot locations were calculated and stored. The same calculation was carried out for each subsequent image frame. The angle between the base vector and a frame of interest was calculated using the following formula:

This equation yields only positive values of the angle, θ. To determine its sign, the difference in slope between the vector connecting the two laser dots in the image frame of interest and that in the base image frame was calculated. A positive slope difference was assigned a positive θ, and a negative slope difference was assigned a negative θ. As with the data in the X- and Y-directions, a DFT was performed on each rotation dataset. These data were also compared to the theoretical fundamental natural vibration period of the structure obtained from current building code formulas.
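The rotation calculation can be sketched in Python as follows. The specific formula is an assumption: the normalized dot product (arccosine) form is used here because it is a common way to obtain an unsigned angle between two vectors and matches the description of an equation that returns only positive values. The function names and pixel coordinates are hypothetical.

```python
import math


def unsigned_angle(base_vec, frame_vec):
    """Unsigned angle (rad) between two 2-D vectors via the normalized dot product."""
    dot = base_vec[0] * frame_vec[0] + base_vec[1] * frame_vec[1]
    norm = math.hypot(*base_vec) * math.hypot(*frame_vec)
    cos_theta = max(-1.0, min(1.0, dot / norm))  # guard against round-off outside [-1, 1]
    return math.acos(cos_theta)


def signed_rotation(base_dots, frame_dots):
    """Rotation (rad) of the dot-to-dot vector relative to the base frame.

    The sign follows the slope difference between the two vectors, as described
    in the text. Assumes neither vector is vertical (non-zero x component).
    """
    (bx1, by1), (bx2, by2) = base_dots
    (fx1, fy1), (fx2, fy2) = frame_dots
    base_vec = (bx2 - bx1, by2 - by1)
    frame_vec = (fx2 - fx1, fy2 - fy1)
    theta = unsigned_angle(base_vec, frame_vec)
    slope_diff = frame_vec[1] / frame_vec[0] - base_vec[1] / base_vec[0]
    return math.copysign(theta, slope_diff)


# Hypothetical laser dot centroids in pixels: base frame and two later frames.
base = ((0.0, 0.0), (100.0, 0.0))
rotated_up = ((0.0, 0.0), (100.0, 1.0))
rotated_down = ((0.0, 0.0), (100.0, -1.0))

theta_up = signed_rotation(base, rotated_up)
theta_down = signed_rotation(base, rotated_down)
```

An alternative that returns a signed angle in one step is `math.atan2` applied to the cross and dot products of the two vectors; it also avoids the division by the x component.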

For the calculation of the theoretical natural vibration period of the structure, the approximate fundamental period method described in ASCE 7-16, Section 12.8, was utilized [36]. The following equations were used to estimate the natural vibration period and frequency of the structure:

\[{T}_{a}={C}_{t}{h}_{n}^{x}\]

\[{f}_{a}=\frac{1}{{T}_{a}}\]

where \({h}_{n}\) is the height above ground of the structure (in feet for the coefficients used here), and \({C}_{t}\) and x are coefficients taken from ASCE 7-16, Table 12.8-2 (\({C}_{t}=0.028\) and x = 0.8 for steel moment-resisting frames) [36]. Floor heights were approximated at 3.05 m (10 ft), for a total above-ground height of 15.2 m (50 ft). This results in a theoretical period of vibration, \({T}_{a}\) = 0.64 s, and a fundamental frequency, \({f}_{a}\) = 1.56 Hz.
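The arithmetic behind these values can be checked with a short Python sketch, assuming the ASCE 7-16 approximate period expression, period = C_t · h_n^x, with the height in feet. The function name is hypothetical.

```python
def approximate_period(height_ft, c_t=0.028, x=0.8):
    """Approximate fundamental period (s) from the structure height in feet.

    Default coefficients are those quoted in the text for steel
    moment-resisting frames.
    """
    return c_t * height_ft ** x


t_a = approximate_period(50.0)  # five levels at roughly 10 ft each
f_a = 1.0 / t_a                 # fundamental frequency in Hz

print(f"T_a = {t_a:.2f} s, f_a = {f_a:.2f} Hz")
```

Because the coefficient 0.028 is tied to a height in feet, passing the height in meters would give a different, incorrect period.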

4.1.2 Results and discussion

Figure 12 shows displacement vs. time for each laser in the X- and Y-directions and Fig. 13 shows rotation vs. time for the first recording taken.

First, this test demonstrates that the sensor can monitor horizontal displacements and rotation of a building, which is of interest by itself.

Moreover, a DFT was performed for each laser measurement shown in Fig. 12 in the X- and Y-directions. In addition, a DFT was performed for the angular rotation measured between the two lasers. Figure 14 shows the results of the DFT for laser 1. Key frequencies are labeled, along with the fundamental natural vibration frequency calculated per ASCE 7-16, fa = 1.56 Hz, which is marked with a vertical red bar. For laser 1, a frequency peak at 1.73 Hz can be observed, which is close to the frequency estimated using the ASCE 7-16 formula. Due to the presence of several external excitations on the structure (e.g. people moving, activity in the laboratories, mechanical machinery operations, vehicular traffic outside the building, etc.) and the relatively low wind speeds observed during testing, it could not be conclusively determined whether the fundamental frequency of the building was captured by the sensor. Additional testing under higher wind conditions or using a harmonic vibration generator may provide more conclusive results.

4.2 Field study 2: monitoring of a pedestrian bridge under dynamic loading

4.2.1 Setup and procedures

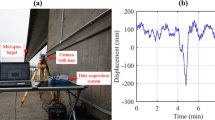

The second field study was designed to capture vertical deflections at the mid-span of a pedestrian bridge under both dynamic and static loading conditions. A pedestrian bridge located on a university campus in Portland, Oregon, was chosen for this study. The bridge has spans of 35.1 m (115 ft), 42.8 m (140 ft), and 35.1 m (115 ft), for a total length of 113.0 m (370 ft). The bridge is 3.05 m (10 ft) wide out-to-out, with a concrete deck bearing upon a single steel box girder. Figure 15 provides an overview of the structure.

The movable part of the sensor was placed at mid-span of the bridge near the railing on the west side of the structure. The fixed part, which consisted of the laser emitters affixed to a steel vise, was located just off the structure near the southwest corner to maintain a direct line-of-sight with the movable part. Figure 15a provides an overall plan view of the bridge and the test setup. The measurement distance was determined as L = 55 m (180 ft). Figure 15b provides a photo of the bridge. The conversion factor presented in Eq. (4) was used to convert pixels to displacement.

To approximate the fundamental natural vibration frequency of the structure, a basic finite element model of the bridge was built and analyzed in Midas Civil using measured spans and approximated cross-sectional properties [37]. The analysis gave a fundamental vibration frequency of approximately fa = 2.1 Hz.

Dynamic loading of the structure was accomplished by having four individuals jump in unison at the approximated fundamental natural vibration frequency of the structure determined from the finite element model. A phone-based application (Physics Toolbox by Vieyra Software) was used to capture the accelerations generated by the loading for comparison. The phone was placed face up on the surface of the bridge deck immediately next to the movable part of the sensor, aligning the Z-axis of the phone’s accelerometer with the vertical displacement component of the structure. A sampling rate of 200 Hz was used during data collection for the accelerometer.

Static loading of the structure was accomplished by having four individuals slowly walk across the structure starting at mid-span (loading), then walk off the bridge (unloading), then walk again back to mid-span (loading). Sensor readings were taken continuously during the loading by recording a video at 30 fps.

4.2.2 Results and discussion

For the dynamic loading on the pedestrian bridge, four individuals as described in Sect. 3.4 jumped simultaneously at the approximated fundamental vibration frequency of the structure determined by the finite element model, fa = 2.1 Hz. The displacement-vs-time recordings taken using the sensor are shown in Fig. 16a. Displacements with amplitudes of up to ± 11.9 mm (± 0.47 in) were observed, corresponding to a deflection ratio of approximately ± L/3000. During testing, the bridge was found to be extremely flexible, making resonance easy to achieve. In fact, once bridge vibrations were achieved, the individuals on the bridge experienced discomfort from the strong vibrations, confirming that the deflections recorded were significant.

A DFT was performed on the displacement data recorded by the sensor to identify dominant frequencies within the recorded data. Figure 16c shows the results of the DFT performed on data collected from laser 1 from the first test. A peak frequency can be observed at 1.93 Hz. Additional smaller peaks at 3.87 Hz and 5.80 Hz, along with weaker ones at higher frequencies, appear to be higher harmonics of this peak.
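Whether the smaller peaks are consistent with harmonics of the 1.93 Hz peak can be checked by comparing each one with integer multiples of that frequency. The following Python sketch uses the peak values reported above; the function name and the tolerance of 0.05 on the frequency ratio are assumptions chosen for illustration.

```python
def harmonic_orders(fundamental_hz, peaks_hz, tolerance=0.05):
    """Map each peak to its nearest integer multiple of the fundamental.

    A peak is reported only if its ratio to the fundamental lies within
    `tolerance` of an integer of 2 or higher.
    """
    orders = {}
    for peak in peaks_hz:
        ratio = peak / fundamental_hz
        order = round(ratio)
        if order >= 2 and abs(ratio - order) <= tolerance:
            orders[peak] = order
    return orders


# Peak frequencies reported for laser 1 in the dynamic bridge test.
orders = harmonic_orders(1.93, [3.87, 5.80])
print(orders)  # {3.87: 2, 5.8: 3}
```

Both ratios are approximately 2.005 and 3.005, which supports reading the peaks as the second and third harmonics.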

The same DFT procedure was performed on the data collected from the accelerometer used to take measurements during the study (Fig. 16d). A peak frequency was identified at 1.93 Hz, which agrees with the peak frequency obtained from the sensor displacement data.

The frequency of 1.93 Hz is in the range of what would be expected for a bridge of this span, construction material, and design.

Figure 17 shows the results of displacement measurements captured by our proposed sensor during the static test as described in Sect. 4.2.1. As can be observed, the bridge was constantly vibrating at an amplitude of approximately 1 mm (0.04 in) (shown in blue). Despite these vibrations, the loading process is clearly discernible. A line was fitted to the data (shown in black), representing the approximate static displacement as a function of time.
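The article does not state how the line in Fig. 17 was fitted. One simple way to separate a slowly varying static displacement from a superimposed vibration is a centered moving average, sketched below in Python on synthetic data. The signal parameters (a 2.0 Hz vibration of 1 mm amplitude on a linear drift to −1.5 mm) and the function name are assumptions for illustration only.

```python
import math


def centered_moving_average(values, window):
    """Centered moving average over an odd window; returns interior samples only.

    Output element j is the mean of values[j : j + window], i.e. it is
    centered on input index j + window // 2.
    """
    if window % 2 == 0 or window > len(values):
        raise ValueError("window must be odd and no longer than the data")
    return [sum(values[i:i + window]) / window
            for i in range(len(values) - window + 1)]


fps = 30
vibration_hz = 2.0                 # assumed so that one period spans exactly 15 frames
window = int(fps / vibration_hz)   # average over one full vibration period
n_frames = 300

# Synthetic record: slow linear drift plus a steady vibration.
static_mm = [-1.5 * i / (n_frames - 1) for i in range(n_frames)]
measured_mm = [s + 1.0 * math.sin(2 * math.pi * vibration_hz * i / fps)
               for i, s in enumerate(static_mm)]

trend_mm = centered_moving_average(measured_mm, window)
half = window // 2
max_error_mm = max(abs(t - static_mm[j + half]) for j, t in enumerate(trend_mm))
```

Averaging over a whole number of vibration periods cancels the oscillation, which is why the window is tied to the vibration frequency here. For measured data, where the period is rarely an integer number of frames, a longer window or a low-pass filter would be needed and some residual ripple should be expected.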

The results of the static loading test show a maximum static displacement of approximately 1.5 mm (0.06 in) when the four individuals were located at mid-span. This test demonstrates our sensor’s ability to capture slowly varying deflections, which cannot be measured with an accelerometer.

5 Summary and conclusions

With the rate of advancement in video-based technology and image processing software, it is likely that the accuracy, availability, and applicability of laser and video-based solutions will continue to improve.

Due to the direct correlation between deflection and overall structure serviceability, having a method of measuring deflections on structures is a high priority. Having a solution that is accurate, repeatable, and cost-effective in a wide variety of environmental conditions is crucial. Advances in video-based sensors and video processing therefore offer new opportunities in the field of structural health monitoring (SHM).

The objective of our studies was to determine the sensor’s key characteristics for the monitoring of static and dynamic displacements of structures. Two laboratory-based studies were conducted to determine the conversion factor from optical-digital (measured in pixels) to physical displacement (measured in mm), sensor accuracy using three different post-processing techniques (cross-correlation, centroid technique, and single step DFT), distortion correction necessary due to the physical properties of the camera lens used in the studies, and the effect of lighting conditions on the accuracy and sensitivity of the sensor. In addition to laboratory-based studies, two field studies were conducted (one on a five-story building under ambient loading and one on a three-span pedestrian bridge under static and dynamic loading) in an attempt to evaluate the functionality of the sensor in the field.

Based on the results presented, our proposed laser and video-based displacement sensor is a viable solution for the monitoring of static and dynamic deflections of civil structures such as buildings and bridges. The conversion factor determined during the first laboratory study was found to be independent of the measurement distance between the fixed and movable parts of the sensor, allowing the sensor to be utilized across a wide variety of applications. Three processing techniques were employed and compared to determine the most accurate and efficient methodology for tracking displacements with the sensor. Although the centroid detection technique had the greatest advantage with respect to computational efficiency, the single-step DFT (SSDFT) provided the greatest accuracy while still providing reasonably efficient processing times (3.62 s/image/frame). Overall, our sensor prototype has a resolution of approximately ± 0.9 mm (± 0.035 in) (95% prediction limits) for distances up to 30.5 m (100 ft) with frequencies up to 30 Hz. Laboratory testing under widely varying lighting conditions showed deviations in accuracy of less than 0.164% (testing in complete darkness), further broadening the range of applications in which the sensor can be utilized. To conclude, the findings presented in this article represent the foundation for the creation of a commercial sensor.

The accuracy and reliability of our proposed sensor are mainly affected by the rigidity and stability of the fixed part, and care is required to ensure the lasers do not move during the measurement. This is of particular importance for static and long-term measurements.

Further research includes characterizing the sensor’s performance for in-field measurements on a variety of structures and under a variety of environmental conditions, as well as for long-term monitoring to capture slowly varying deflections.

References

American Association of State Highway and Transportation Officials (2017) AASHTO LRFD bridge design specifications

Shan B, Wang L, Huo X, Yuan W, Xue Z (2016) A bridge deflection monitoring system based on CCD. Adv Mater Sci Eng. https://doi.org/10.1155/2016/4857373

Hoag A, Hoult N, Take W, Moreu F, Le H, Tolikonda V (2017) Measuring displacements of a railroad bridge using DIC and accelerometers. Smart Struct Syst. https://doi.org/10.12989/sss.2017.19.2.225

Khuc T, Catbas FN (2017a) Computer vision-based displacement and vibration monitoring without using physical target on structures. Struct Infrastruct Eng 13(4):505–516

Khuc T, Catbas FN (2017b) Completely contactless structural health monitoring of real-life structures using cameras and computer vision. Struct Control Health Monit 24:e1852

Zhang D, Guo J, Lei X, Zhu C (2016) A high-speed vision-based sensor for dynamic vibration analysis using fast motion extraction algorithms. Sensors 16:572

Shariati A, Schumacher T, Ramanna N (2015) Eulerian-based virtual visual sensors to detect natural frequencies of structures. J Civil Struct Health Monit 5(4):457–468. https://doi.org/10.1007/s13349-015-0128-5

Schumacher T, Shariati A (2013) Monitoring of structures and mechanical systems using virtual visual sensors for video analysis: fundamental concept and proof of feasibility. Sensors 13:16551–16564

Yu J, Yan B, Meng X, Shao X (2016) Measurement of bridge dynamic responses using network-based real-time kinematic GNSS technique. J Surv Eng. https://doi.org/10.1061/(ASCE)SU.1943-5428.0000167

Ye XW, Dong CZ, Liu T (2016) A review of machine vision-based structural health monitoring: methodologies and applications. J Sensors. https://doi.org/10.1155/2016/7103039

Tian L, Pan B (2016) Remote bridge deflection measurement using an advanced video deflectometer and actively illuminated led targets. Sensors 16:1344

Feng D, Feng MQ, Ozer E, Fukuda Y (2015) A vision-based sensor for noncontact structural displacement measurement. Sensors 15:16557–16575

Shariati A, Schumacher T (2017) Eulerian-based virtual visual sensors to measure dynamic displacements of structures. Struct Control Health Monit. https://doi.org/10.1002/stc.1977

Lee JJ, Shinozuka M (2006) A vision-based system for remote sensing of bridge displacement. NDT&E Int 39:425–431

Yoneyama S, Ueda H (2012) Bridge deflection measurement using digital image correlation with camera movement correction. Mater Trans 53:285–290

Fuhr PL, Huston D, Beliveau JG, Spillman WB, Kajenski PJ (1991) Optical noncontact dual-angle linear displacement measurements of large structures. Exp Mech 31:185–188

Vicente M, Gonzalez D, Minguez J, Schumacher T (2018) A novel laser and video-based displacement transducer to monitor bridge deflections. Sensors 18:970

Feng DM, Feng MQ (2017) Experimental validation of cost-effective vision-based structural health monitoring. Mech Syst Signal Process 88:199–211

Feng DM, Feng MQ (2018) Computer vision for SHM of civil infrastructure: from dynamic response measurement to damage detection—a review. Eng Struct 156:105–117

Zhao X, Liu H, Yu Y, Zhu Q, Hu W, Li M, Ou J (2016) Displacement monitoring technique using a smartphone based on the laser projection-sensing method. Sens Actuators A Phys 246:35–47. https://doi.org/10.1016/j.sna.2016.05.012

Wu LJ, Casciati F, Casciati S (2014) Dynamic testing of a laboratory model via vision-based sensing. Eng Struct 60:113–125

Kohut P, Holak K, Martowicz A (2012) An uncertainty propagation in developed vision based measurement system aided by numerical and experimental tests. J Theor Appl Mech 50:1049–1061

Jeong Y, Park D, Park KH (2017) PTZ camera-based displacement sensor system with perspective distortion correction unit for early detection of building destruction. Sensors 17:430

Sładek J, Ostrowska K, Kohut P, Holak K, Gaska A, Uhl T (2013) Development of a vision based deflection measurement system and its accuracy assessment. Measurement 46:1237–1249

MATLAB (2019) version 9.6.0.1174912 (R2019a). The MathWorks Inc., Natick, Massachusetts. https://www.mathworks.com/

Huang L-K, Wang M-JJ (1995) Image thresholding by minimizing the measures of fuzziness. Pattern Recognit 28(1):41–51

Kromanis R, Xu Y, Lydon D, Martinez del Rincon J, Al-Habaibeh A (2019) Measuring structural deformations in the laboratory environment using smartphones. Front Built Environ 5:44. https://doi.org/10.3389/fbuil.2019.00044

Pan B, Qian K, Xie H, Asundi A (2009) Two-dimensional digital image correlation for in-plane displacement and strain measurement: a review. Meas Sci Technol 20(6):062001

Pan B, Xie H, Xu B, Dai F (2006) Performance of sub-pixel registration algorithms in digital image correlation. Meas Sci Technol 17(6):1615

Quine BM, Tarasyuk V, Mebrahtu H, Hornsey R (2007) Determining star-image location: a new sub-pixel interpolation technique to process image centroids. Comput Phys Commun 177:700–706

Ding W, Gong D, Zhang Y, He Y (2014) Centroid estimation based on MSER detection and Gaussian Mixture Model. In: 2014 12th international conference on signal processing (ICSP), pp 774–779

Guizar-Sicairos M, Thurman ST, Fienup JR (2008) Efficient subpixel image registration algorithms. Opt Lett 33:156–158

Artese S, Achilli V, Zinno R (2018) Monitoring of bridges by a laser pointer. Dynamic measurement of support rotations and elastic line displacements: methodology and first test. Sensors 18(2):3381–3416

Park YS, Agbayani JA, Lee JH, Lee JJ (2016) Rotational angle measurement of bridge support using image processing techniques. J Sensors. https://doi.org/10.1155/2016/1923934

Artese G, Perrelli M, Artese S, Meduri S, Brogno N (2015) POIS, a low-cost tilt and position sensor: design and first tests. Sensors 15:10806–10824

American Society of Civil Engineers (2016) Minimum design loads and associated criteria for buildings and other structures (ASCE/SEI 7–16). American Society of Civil Engineers, Reston

MIDAS Information Technology Co., Ltd. (2015) midas Civil 2015, version 1.1. Gyeonggi-do, Korea. https://www.midasoft.com/bridge-library/civil/products/midascivil

Vicente M, González D, Mínguez J (2019) Spanish Patent No. ES 2684134 B2. “SISTEMA Y PROCEDIMIENTO PARA LA MONITORIZACIÓN DE ESTRUCTURAS”, Award Date: Oct. 3rd

Acknowledgements

The authors would like to extend their thanks to Portland State University for the financial support for the studies and use of its facilities and to the University of Burgos for allowing the use of the patent listed in the following section.

Patents

A Spanish patent (patent no: ES 2 684 134 B2) has been granted [38].

Ethics declarations

Conflict of interests

The authors declare that they have no conflict of interests.

Cite this article

Brown, N., Schumacher, T. & Vicente, M.A. Evaluation of a novel video- and laser-based displacement sensor prototype for civil infrastructure applications. J Civil Struct Health Monit 11, 265–281 (2021). https://doi.org/10.1007/s13349-020-00450-z

DOI: https://doi.org/10.1007/s13349-020-00450-z