Abstract

Online social networks (OSNs) are now implied as an important source of news and information besides establishing social connections. However, such information sharing is not always authentic because people, sometimes, also share their perceptions and fabricated information on OSNs. Thus, verification of online posts is important to maintain reliability over this useful communication medium. To address this concern, multiple approaches have been investigated including machine learning, natural language processing, source authentication, empirical studies, web semantics, and modeling/simulations, but the problem still persists. This research proposes an effective synergy-based rumor verification method along with a weighted-mean reputation management system to mitigate the spread of rumors over OSN. The model was formally verified through Colored Petri-Nets while its semantic behavior was analyzed through ontologies. Moreover, a third-party Facebook application was developed for proof of concept, and users’ acceptance and usability analysis was performed through Technology Acceptance Model and Self-Efficacy scale. The results indicate that the proposed approach can be used as an effective tool for the identification of rumors and it has the potential to improve the quality of users’ online experience.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Online social networks (OSNs) have attracted millions of users and are consistently growing at a high rate (Ahmad et al. 2022; Imran and Ahmad 2023). The proliferation of OSNs has increased the number of users relying on them as an important source of information, which has altered the traditional means of propagating news (Pourghomi et al. 2017). News over OSN is usually considered equally reliable as the traditional news-generating sources, and almost 86% of American adults rely on OSNs for current news (Shearer 2021). This ubiquitous reachability and sharing of news on OSN have led to the emergence of rumor dissemination (Reshi and Ali 2019) as the content hardly gets verified through any moderation, fact checking, or any other authentication (Clayton et al. 2020). This propagation is even exaggerated because of the self-interest of some users to get more visibility by mixing genuine and fabricated information (Pourghomi et al. 2017).

The spread of rumors is not a new phenomenon rather it has been a concern in traditional environments for many decades (Samreen et al. 2020); however, with OSN, information propagation occurs at a faster rate which intensifies this misleading. The incredible potential of OSN for information propagation causes an impact on the real world, within a few minutes, to millions of users (Ahmad et al. 2019). While consciously misleading news generates fake assumptions in the minds of readers, they also influence personal decisions on several matters like savings, health, online buying, travel, and recreation (Rubin et al. 2015; Muhammad and Ahmad 2021). Even, during the last couple of US presidential elections, Facebook and Twitter encountered criticisms that they might have influenced the voters’ mindset through the immense spread of fake news on their platforms (Le et al. 2019; Mills 2012). The sharing of fake news on OSNs is not only a waste of time, but, in some cases, it may lead to fatal situations (Mughaid et al. 2022), for instance, the killing of a university student by an irate mob (Hoodbhoy 2017) or killing of child abductors by a mob (Martínez 2018) due to ‘news’ spread on social media. In both of these cases, the information turned out to be false but resulted in killings before anyone could ensure their authenticity. As pointed out by the MIT Technology Review report (Rotman 2013), the spread of misinformation contributes to a general decline of Internet freedom all over the world and also leads to violent assaults on human rights activists and reporters. Moreover, anonymous structures like automatic bots (social, spam, and influential), fake profiles, pseudonyms, trolls, false social activists, and impersonators are rapidly developing new methods of disrupting democracy which are even more difficult to track and yet to be fully understood. Several countries are also considering legislative approaches to resolve this issue. For example, the European Union is fighting misinformation by creating a professional group (Funke 2017). Germany took special measures before its 2017 elections to ensure that its systems were secured from meddling. Likewise, Somalia blocked more than a dozen OSNs at some stage of its election (MIT Technology Review 2017). These initiatives highlight the intensity of the problem as different countries are forcefully deploying measures to mitigate the spread at various times and situations.

Currently, multiple methods for rumor verification are available based on different approaches of machine learning (Castillo et al. 2011; Gupta et al. 2013; Granik and Mesyura 2017), natural language processing (Wiegand and Middleton 2016; Dang 2016; Boididou et al. 2018a), source authentication (Ratkiewicz et al. 2011; Gupta et al. 2014; Shao et al. 2016), empirical studies (Ozturk et al. 2015; Chua and Banerjee 2017a; Wang et al. 2018), web semantics (Barboza et al. 2013), and modeling/simulations (Kermack and McKendrick 1939); however, most of these approaches partially address the problem. For instance, machine learning approaches rely on model training which can be defeated by fabricating the rumor and altering its characteristics. In addition, if the data are biased or skewed, it may compromise the whole decision-making process. Likewise, NLP techniques require a sample from the latest event of breaking news in case of misleading real-time content detection. Further, source authentication methods only require a fake developed website to disrupt the whole verification procedure. Though empirical studies provide interesting insights about the spread, features, and propagation of rumors, they also lack in several aspects. For example, they are usually conducted over a span of time so it cannot be used for real-time identification of rumors, and the results are continually afflicted with uncertainty so it cannot be generalized for trusted verification. Further, in the area of web semantics, the major challenges are content availability, scalability, availability of ontology, semantic web language stability, and visualization to decrease information overload. Likewise, in the domain of modeling and simulations, the key challenges are uncertainty, reuse of existing models, and computational issues.

To further complicate the issue, rumor propagation is hard to handle through a single source as rumors are circulated in various forms for instance, fake news, videos, images, etc. Therefore, this research utilizes the knowledge of multiple users as a crowd-based model to enhance positive synergy and the application of reward and punishment theory (Gray 1981; Wilson et al. 1989) through a weighted-mean reputation management approach to combat false information spread on OSNs. Crowdsourcing has solved various complicated problems in the past including economic and social activities (Gatautis and Vitkauskaite 2014), issues concerning public and private organizations (Clark et al. 2019), fundraising (Simons et al. 2019), anti-spamming (De Guerre 2007), and many other technical solutions (Becker and Bendett 2015), but is never explored for the verification of rumors. It is hypothesized in this research that synergy-based rumor verification along with weighted-mean reputation management can be used to verify published content on OSNs more proficiently compared to the existing techniques.

To acquire experimental proof of the proposed approach, the following modules were attained that can also be reflected as the main contributions of this research:

-

(a)

The consistency of the proposed synergy-based rumor verification model was proved using Petri-Nets. Simulations based on the Petri-Nets assisted in understanding the structural inconsistencies of the proposed model including the identification of dead states as well as dependencies among diverse states of the model. The overall effectiveness of the proposed model is improved as several structural problems were resolved during the simulation phase.

-

(b)

The semantics of the proposed model were validated through ontologies. The key benefit of using ontology is the identification of logical dependencies for semantic validation. This facilitated to enhance the functional behavior of the proposed model. The overall efficiency of the proposed model is improved as many logical dependencies were resolved in this phase.

-

(c)

A Facebook application named “Synews-Verification” was also developed as a proof of concept for the proposed verification model. This application serves as a reminder that the concept can be visualized in real OSNs and it has the potential to solve the problem of rumor verification.

-

(d)

Additionally, a survey was also conducted for evaluation of the developed application. The purpose of the survey was to estimate the acceptability of users regarding the Facebook third-party application as well as its usefulness as a real-time rumor verification system.

The rest of the paper is organized as follows: Sect. 2 provides an overview of the related work. Section 3 presents the proposed model in detail. Section 4 outlines the formal verification of the model using Petri-Nets. Ontology is presented in Sect. 5 to check the semantic behavior of the proposed model. In Sect. 6, implementation details are provided. Section 7 presents the results, and Sect. 8 concludes the paper along with future directions.

2 Related work

The aim of this research is the verification of rumors through crowdsourcing. Therefore, the related work for this research can be broadly classified into two major domains: (i) rumor verification in OSNs and (ii) application of crowdsourcing in other domains. This section briefly discusses research work in both of these domains.

2.1 Rumor verification in OSNs

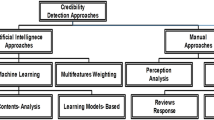

The literature on rumor verification can be categorized into six themes including (a) machine learning, (b) natural language processing, (c) source authentication, (d) empirical studies, (e) web semantics, and (f) modeling/simulation. Different research efforts in each of these themes are explored below:

2.1.1 Machine learning

Machine learning approaches permit computers to learn automatically without human assistance and modify their actions accordingly (Alpaydin 2009). These approaches excel in making predictions based on data that lead to forecasting future outcomes among several domains in a more efficient manner (Wu et al. 2013). In rumor verification, machine learning approaches predict future rumors based on the training of the model on a given data set (Wang 2017). Among various machine learning studies, Khaled et al. (2023) utilized diverse machine learning approaches for enhancing user stance prediction using conversation threads and other features related to communicating users. The experimentation was performed on Twitter dataset, and the results attained an F1-score of 0.7233. Moreover, Luvembe et al. (2023) utilized dual emotion features for fake news detection. Their classification was performed using a deep normalized attention-based approach and an adaptive genetic weight update to extract dual emotion features. During the experimentation, the model outperformed others by 14% improvement in accuracy. Likewise, Suthanthira and Karthika (2022) presented a veracity detection neural network to identify rumor-relevant Twitter posts. Their algorithm used the convolutional sentence encoder (CSE) BiLSTM model along with the pre-trained vectorization approaches. Their experimentation was performed on three different PHEME datasets, and the results achieved accuracy values of 90.56%, 86.18%, and 93.89%. Further, Choudhury and Acharjee (2023) proposed an identification approach based on Genetic algorithm (GA) and ML classifiers for the fitness function. Their experimentation was performed on different sample sizes to find an optimal solution, and results showed that their approach, achieving an accuracy of 95%, outperformed traditional ML classifiers. Moreover, Cen and Li (2022) used BiLSTM for automatic rumor verification in OSN. Primarily, text data were utilized for extracting sentiment features through multiple BiLSTM. Secondly, diverse categories of social features were extracted and two different word vectors were utilized for vectorization. Their output layer includes SoftMax for classification while the model attained an accuracy, precision, recall, and F1 value of 95%, 94.5%, 94.5%, and 94%, respectively. Similarly, Wang et al. (2021) presented a reinforcement learning approach to detect rumors. Their model is comprised of a detection model and a control model. LSTM was used to capture features and sequential properties of the OSN information. Their experimentation was performed on a PHEME dataset, and the results attained an accuracy of 81%. Moreover, Boididou et al. (2018b) presented an automatic classification of OSN posts as trustworthy or untrustworthy through different classification models trained on Twitter data. The experiments reflected an achieved accuracy of 63% in identifying fake tweets. In another study, Granik and Mesyura (2017) detected false news using the Naïve Bayes classifier on a dataset collected from Buzz Feed News. Their classifier achieved an accuracy of 74% on the test dataset. In a similar vein, Jin et al. (2016) verified news from image content on microblogs through various visual and statistical patterns. Their proposed method detected fake news with an accuracy of 83.6%. Likewise, Rubin et al. (2015) focused on the automatic detection of deceptive content in news. A vector space model was applied to gather the news of a radio show based on feature resemblance. Their results showed an accuracy of 63% on the test dataset. Besides, Gupta et al. (2013) extracted temporal patterns from a Tweet dataset for the circulation of false images around Hurricane Sandy through naive Bayes and J48 decision tree classifiers. The results demonstrated that the decision tree classifier performed better than naïve Bayes with an accuracy of 97% in differentiating false images from real ones.

Although the above-mentioned techniques have proved the application of machine learning approaches to identify rumors, there are certain shortcomings in their application for rumor verification. First, the acquisition of relevant data is a major challenge for the training of any classifier. Second, each dataset needs to be cleaned and preprocessed differently to give the right input to different algorithms. Third, machine learning techniques use trained models to predict unseen data, but if the data are biased or not representative, the whole decision-making process may suffer. Fourth, it is not always guaranteed that a machine learning algorithm will classify correctly in each case because the classifiers are trained on certain features that can be easily defeated by fabricating the rumor and altering its characteristics that eventually increase both false positive and false negative rates.

2.1.2 Natural language processing

Natural language processing (NLP) is an area of artificial intelligence, which improves the machine’s ability to analyze and understand human speech (Lytinen 2005). The major goal of NLP is to program human knowledge into computer systems so the latter can interact with the former. NLP not only empowers voice recognition and chatbots but also improves user experiences by allowing communication with machines (Young et al. 2018). In this domain, Shelke and Attar (2022) utilized BiLSTM and MLP models with various feature categories, i.e., user based, text based, lexical, and content based. Their experimentation was performed on a Twitter dataset where the results attained an accuracy of 89% on lexical features along with PCA. Further, continual model improvements assist in attaining an accuracy of 97% in detecting rumors. Sadeghi et al. (2022) presented an approach based on natural language inference (NLI) to detect fake news. Their approach utilized NLI for boosting ML models including DT, NB, RF, LR, SVM, BiGRU, KNN, and BiLSTM along with the word embeddings. The experimentation results showed an accuracy of 85.58% and 41.31% in FNID-fake news net and FIND-LIAR datasets, respectively. Further, Tu et al. (2021) utilized a CNN model for capturing source tweets' textual contents and dissemination structures of diverse tweets on a single graph. Their experimentation was performed on two diverse datasets—Twitter 15 and Twitter 16, and the results reflected an accuracy of 79.6% and 85.2%, respectively. Likewise, Kotteti et al. (2020) presented an ensemble model that used various learning models, including RNN, LSTM, and GRU to build the ensemble model. Their experimentation was performed on PHEME datasets, and the model achieved a micro-precision of 64.3%. Moreover, Guo et al. (2020) presented a deep transfer model based on a stochastic gradient descent algorithm for rumor detection. During the training phase on polarity review data, model parameters were obtained that were fed as input to the detection model. Their experimentation was performed on Yelp Polarity and Five Breaking News, and the model achieved an accuracy, precision, and F1 score of 87.28%, 79.12%, and 82.5%, respectively. Moreover, Shin et al. (2018) examined the communication of political misinformation on Twitter during the 2012 US election. According to their investigation of fact-checking sites, it was speculated that political rumors will continue to propagate in the future due to the absence of any authentication mechanism. On similar lines, Derczynski et al. (2017) presented an annotation scheme with replies and claims. They invited participants on a shared group task to analyze false information and figured out that the activity had a positive impact on rumor identification. Boididou et al. (2018a) compared three automated NLP approaches (textual patterns, information relevancy, and semi-supervised scheme) for false information classification on Twitter datasets. They also combined these methods to achieve an accuracy improvement of approximately 7%. Similarly, Wiegand and Middleton (2015) performed content-level analysis on YouTube, Twitter, and Instagram during the Paris shootings to verify content veracity and accuracy. Their results showed that trusted source attribution is a valuable indicator regarding content accuracy and temporal segmentation. Likewise, Kumar and Geethakumari (2014) assessed the propagation of false information through the concept of cognitive psychology, which was applied to a Twitter dataset for the automatic detection of rumors. The results showed an accuracy of 90%, while the false positive rate was less than 10%.

There are certain limitations in the application of NLP approaches for rumor verification. First, in case of detection of misleading real-time content, samples are required from the latest event of breaking news. Second, no current NLP technique is capable of automatically examining the real-time OSN streams. Third, due to the semantic nature of content, NLP techniques have limited accuracy and often need manual intervention to detect rumors.

2.1.3 Source authentication

Source authentication refers to the verification of received information through its claimed source (Perrig et al. 2001). It assists in ensuring the integrity of data during transmission and enhances trust by verifying the origin of a data source. The main aim of source authentication is to verify the content posted on OSN through different fact-checking sites like Snoopes.com and Factcheck.org. In the past few years, multiple research studies were also conducted in this domain. For instance, Seddari et al. (2022) presented a fake news detection system that combines linguistic as well as knowledge-based techniques to employ two diverse sets of features, i.e., linguistic features and fact verification features. Their experimentation was performed on a fake news dataset by employing both feature types where the results attained an accuracy of 94.4%. Moreover, Popat et al. (2018) proposed a system CredEye for automatic credibility assessment, which takes an input claim from the user and examines its reliability by consulting related articles from the web. Besides, Middelton et al. (2018) performed research on social computing, where they collected statements and manually checked them against records from different domain-related repositories such as Politifact and DBPedia. They concluded that real-time verification of user-generated content remains difficult to solve in the near future. In a similar vein, Jang et al. (2018) concluded that information origin and evolution patterns are important for quality information and this information can be used to overcome false news propagation over OSN. They examined this issue in the context of the US presidential elections in 2016 by collecting tweets from fake and true news stories. Moreover, several automatic systems are also proposed including Hoaxy (Shao et al. 2016) which detects and analyzes online false information after getting trained on a sample of tweets dataset, and TweetCred (Gupta et al. 2014), a tool that calculates credibility scores for a given set of tweets in real time. The drawback of such systems is that content authentication usually lags behind its propagation by 10–20 h because the information is propagated by active users, whereas fact checking is a grassroots activity. Another approach, presented in Metaxas et al. (2015), allows users to examine the dissemination of rumor, by putting it in a web system. The system then validates various dimensions associated with the rumor including its origin, burst, timeline, propagation, negation, and main actors. However, the system can only detect rumor propagation but lacks in its verification.

Though source checking is an important step in verifying information, but alone it faces difficulties in eradicating the issue, so there are certain shortcomings in its application for rumor verification. First, all the content circulated on OSNs cannot be verified through its source because the proliferation of false news is done at an unpredictable intensity. Second, there are an enormous number of available sources, which makes it practically impossible to consult every piece of information against each source. Third, authenticated news is not always initiated by trusted sources as reflected by the crowd reporting for real-time events. Fourth, the method can easily be defeated by simply developing a fabricated website as the source for the spread of rumors.

2.1.4 Empirical studies

Rumor verification through empirical studies refers to making planned observations to obtain knowledge in a systematic process (Patten 2016). Empirical studies provide data-driven insights and concrete evidence to enhance authentication (Miró-Llinares and Aguerri 2023). Through empirical research, researchers conceptualize how rumors initiate and propagate (Bordia and DiFonzo 2002). Various research studies have been conducted in this domain; for example, Pröllochs and Feuerriegel (2023) examined the effects of crowd and lifetime role in detecting true and false rumors sharing behavior in OSN. Their experimentation was performed on 126,301 Twitter cascades, and results found that the behavior of sharing is considered through lifetime and the effects of crowd describe differences in propagation of true rumors as a contrast to false rumors. They also found that a longer time span is associated with lesser sharing of activities; however, the decrease in sharing is higher for false than for true rumors. Further, Ding et al. (2022) studied the planned behavior (TPB) as well as deterrence theories (TD) for factors that affect the OSN user’s behavior to recognize rumors. They utilized the SEM model to perform testing of the model, and results showed that the severity and certainty attained a positive effect on the subjective norms. Moreover, Wang et al. (2018) emphasized users’ arguments in online discussion platforms and observed their beliefs in rumors. Information cascade and group polarization mechanisms were used to study the effect of rumors on individuals. The outcome indicated that people were more likely to consider rumors with high argument and constancy than rumors with low volume and constancy. Chua and Banerjee (2017a) examined the role of epistemic belief in affecting Internet users’ decisions to share health rumors online. Their dataset was collected from different Chinese websites, and their results showed that epistemologically naïve individuals were more likely to share online health rumors than epistemologically robust participants. Further, Chua and Banerjee (2017b) studied users’ reactions through their click speech in the form of Likes, Shares, and Comments on Facebook. Every rumor was implied as either a wish, dread, or neutral. The quantitative outcomes reflected that 78% were neutral rumors, 6% were wish rumors, and the remaining posts were verified as dread. Moreover, a study by Ehsanfar and Mansouri (2017) proposed an integrated model that combines two models of volunteer dilemma between fake news users and regular agents in OSN. They also formulated a mechanism to reward incentives for volunteering in the system. Their results showed that relatively marginal variation in joint reward has a vital effect on the propagation and eventual dominance of fake news in OSN. In another study, Oztuk et al. (2015) observed that tagging a post with a warning message of being a rumor reduces the spread of fake news. Moreover, it is suggested that OSN technologies are deliberated in such a manner that users can incapacitate the wrong data from their surroundings.

Though empirical studies provide interesting insights about the spread, features, and propagation of rumors, they also lack in several aspects. First, empirical studies cannot be used as formal proof of the authenticity of information as the hypotheses are accepted or rejected on a relative basis within a specific sample. Second, they are usually conducted over a span of time so it cannot be used for real-time identification of rumors. Third, the results are continually afflicted with uncertainty so it cannot be generalized for trusted verification.

2.1.5 Web semantics

Ontologies are used to represent knowledge in a certain area of information systems. They enhance data interoperability and enable more meaningful content (Zeshan et al. 2019). During rumor propagation, correct knowledge acquirement plays a significant role in handling the situation effectively. Inaccurate or unverifiable knowledge may lead to poor decision-making regarding particular information circulated on OSNs which results in the intensification of rumor dissemination. In this regard, the benefits of ontologies to share and reuse existing knowledge reinforced by reasoning tools to extract new knowledge are often appreciated. In the domain of rumor identification, Radhakrishnan and Sathiyanarayanan (2023) utilized a fuzzy inference system along with deep learning algorithms to detect rumors in healthcare management. Their experimentation was performed on a COVID-19 dataset, and the results reflected an improvement of 0.6%, 0.7%, and 1% in accuracy, recall, and precision, respectively. Another research presented in Negm et al. (2018) introduced a news credibility measure, utilizing ontologies and semantic weighing schemes, which use weighing semantic approaches along with ontology to evaluate news authenticity. The model was applied to RSS, a set of news organizations, which illustrated that it can measure the partial trustworthiness of news. Similarly, another ontology was developed to reveal the annotation scheme of rumors on OSN in Zubiaga et al. (2015). The model, which exclusively emphasizes tweets, was generated over the PROTON ontology and later extended to the annotation scheme model for OSN. Likewise, Barboza et al. (2013) developed a framework to enclose news reporting of disreputable issues by utilizing different corpus extraction approaches, SPARQL query, and ontology engineering.

Despite their effectiveness, web semantics lack in several aspects; for instance, currently there is little semantic web content available. Existing content needs to be upgraded on the semantic web content that includes dynamic content, web services, and multimedia. In addition, the availability of ontology becomes an important aspect as it explicitly allows semantic web content semantics. Lastly, standardization efforts need to be performed urgently in this evolving area in order to permit the creation of the required technology that can assist web semantics.

2.1.6 Modeling/simulation

Modeling can assist in understanding the structural dependencies, inconsistencies, and relationships between various modules of a complex system at an early phase. Traditional approaches to model rumor propagation are based on graph theory and the epidemic model. The former (graph theory) focuses on the structure of OSNs (Fountoulakis and Panagiotou 2013) and inspects the interaction among nodes to analyze rumor propagation on the basis of network structures like holes, bridges, clusters, and pivotal nodes (Clementi et al. 2013). The latter (epidemiology) was proposed to predict the outburst and dispersal of diseases (Kermack and McKendrick 1939) through tools like differential equations. The epidemic model splits people into numerous compartments and manipulates differential equations to exemplify the change of people in every compartment. This epidemic model has been altered to various situations, for example, to attain broader influence through fewer connections. In this regard, Ojha et al. (2023) developed a model based on the epidemic method to examine and control false information propagation in OSN. Their model was derived based on differential equations that compute the reproduction number which is a significant indication to examine rumor propagation. Further, Wang et al. utilized the 2SI2R model for simulating rumor dissemination (Wang et al. 2014) of continuous time epidemic model. Likewise, Li et al. presented the rumor phenomenon for SIS epidemic model utilizing singular perturbation theory (Li et al. 2014). Similarly, Nekovee et al. proposed a new model in the domain of epidemiology (Moreno et al. 2004) that categorizes people into three types: Ignorant, Spreader, and Stifler, rather than the traditional Susceptible, Infected, and Recover (S, I, R) classification. Moreover, Philip tried to modify the epidemic model with the Markov chain Monte Carlo approach (O’Neill and D. Philip 2002). These epidemic models have simulated rumor dissemination from several perceptions. There are also studies aiming at the association between the epidemic model and graph theory which tried to demonstrate the dissemination behaviors on networks and then map the epidemic model onto a graph (Pastor and Vespignani 2001; Grassberger 1983). These studies partially resolve the collision between intuition and ease of simulation. The domain of modeling and simulations lacks in several aspects such as uncertainty, reuse of existing models, and computational issues.

The verification of rumors has been done through different methods in literature including machine learning, natural language processing, source authentication, empirical studies, web semantics, and simulation/modeling. Although crowdsourcing has not been explored yet in this domain, the method has been researched in other domains. It is an emerging domain that is predominantly driven by involved OSN technologies.

2.2 Application of crowdsourcing in other domains

Crowdsourcing permits flexible and high-scale invocation of human contribution to gather data and its analysis. The research in the application of crowdsourcing has proved to be quite effective in various domains over the past years. Crowdsourcing is an act of taking a challenge faced by society and despite asking experts to solve the problem invites an open call to individuals (Majchrzak and Malhotra 2013). Some of the well-known initiatives in the area of crowdsourcing include Wikipedia, Yahoo Answers, YouTube, and many others. Wikipedia is a collection of online encyclopedias written collaboratively by internet users. The writings on Wikipedia are distributed in nature, and millions of users are contributing to improve these articles. Wikipedia usually gets more than 18 billion page views per month which makes it one of the most visited websites on the globe (Anderson et al. 2016). Yahoo! Answers is another crowd-sourced question answering platform to provide a collection of human reviewed data on the internet. The company claims to have 227.8 million monthly active users with around 26 billion emails sent on a daily basis (Lunden 2018). Moreover, Crowdsourcing has been successfully used in anti-spamming, to verify the authenticity of users, information sharing systems, and credibility of the content. A methodology to use crowdsourcing for the problem of spam propagation was presented by Ahmad et al. (2020), where reputation was maintained for individual senders as well as the SMTP servers and image spams dealt with diagonal hashing for optimization. The crowdsourcing at the receiver side was deployed to enhance the performance of the anti-spamming, and it proved to be quite effective to mitigate spam. A research study done by Chen (2016) proposed a real-time anti-spamming system for identifying spammers. The approach works by investigating behavioral properties such as response time and answering sequence in the Baidu crowdsourcing platform. Likewise, Saxton et al. (2013) analyzed 103 renowned crowdsourcing sites by using content analysis approaches and hermeneutic reading standards. Based on their analysis, a taxonomic of crowdsourcing was developed by unifying empirical variants in nine different forms of crowdsourcing models. Similarly, another research by Shi and Xie (2013) proposed a reputation-based collaborative method for filtration of spam. The reputation evaluation method was built with a shared repository and weightage of fingerprints. On the basis of users’ feedback, an email is considered spam by calculating a score against every fingerprint. Their results reflected to attain better performance as compared to existing spam filtering approaches on various email corpora. Furthermore, some well-known crowd-based information sharing platforms have highlighted the significance of the general users’ contributions. Another research proposed a system ‘Truthy’ (Ratkiewicz et al. 2011), which is a web service to track political memes and false information on Twitter. The system gathers tweets, detects memes, and provides a web interface that allows users to annotate memes that they consider as ‘truthy.’ Another application of crowdsourcing methodology in anti-spamming is Vipul’s Razor (De Guerre 2007), which is an anti-spamming tool that works through collaborative human intelligence. The reputation of community members is constantly rated and embedded with the automated system of message fingerprinting.

Many businesses use different crowdsourcing platforms for performing various tasks that need human intelligence to find relevant information. Traditional approaches in rumor verification usually involve experts from different domains, but this is often time-consuming and expensive. Crowdsourcing promotes the usage of heterogeneous contextual knowledge from volunteers and allocates different processes to small shares of efforts from various contributors.

3 Synews: synergy-based rumor verification model

The proposed synergy-based rumor verification model is developed as a knowledge-based building model (Saxton et al. 2013), which combines human intelligence and prior knowledge on a particular topic. Knowledge-based building models were revealed by “Wikis” as a constructive method for knowledge building. In synergy-based models, the knowledge generation process is outsourced to the community of users, and various sorts of incentive measures are used to encourage the participation and addition of valuable information. The Synews model triggers useful potential of the crowd to accomplish the desired goal, by assuming that the collective intelligence of the crowd is greater than a defined group of experts’ intellects.

The Synews model is comprised of two components: (a) News Verification and (b) User Reputation. The purpose of the former is to combat fake news spread on OSNs based on the synergy of the crowd. The latter component monitors the repute of the users based on their past decisions in order to assign weightage to their future decisions. Details about these components are provided below:

3.1 News verification

News verification is a process of discerning whether certain news is based on truth (Rubin et al. 2015). The news verification component of the proposed model combats false information on OSNs through crowdsourcing. Users are allowed to put ratings on OSN posts shared with them where the majority vote casting is a decision-based rule (Schmitz and Troger 2012). If the community decides a post as ‘authentic,’ it is declared as news, and if the post is rated as ‘unauthentic’ by the community, it is declared as rumor. The result of the rating is computed after some duration which can vary for different implementations (i.e., after 100 votes (Tschiatschek et al. 2017), or after one week, or re-evaluate after every pre-defined cycle).

The news verification component is further decomposed into three modules, namely (a) News Evaluator, (b) Rating Authenticator, and (c) Majority Voting Calculator. These modules are explained below in detail:

3.1.1 News Evaluator

When a publisher posts some online content, it is shown to his/her social circle depending on the privacy settings. Afterward, the News Evaluator module allows the users to put a rating on the published content upon viewing it based on its authenticity. Authenticity refers to how a user of published content evaluates the publisher’s believability. Users' belief in the published content is the most accepted way for news evaluation. The basic premise underlying this module is that if the majority of users consider a certain post as authentic, others will most likely consider it to be correct, and vice versa. Further, the News Evaluator module stores the ratings provided by all the users and keeps track of its authentic/unauthentic cycle by aggregating all the ratings on a post by different users. This evaluation cycle activates after a pre-defined criterion as mentioned above.

3.1.2 Rating Authenticator

The Rating Authenticator module is responsible for monitoring the ratings on an OSN post. It gets the ratings from the News Evaluator module and performs the analysis by considering each rating as a ‘vote.’ These ratings, in the form of −1 and + 1, reflect the level of authenticity, where −1 indicates unauthentic while + 1 reflects authentic. This module stores the provided ratings in a database and maintains a chain of access and modifications. These authenticated ratings are further passed on to the Majority Voting Calculator module to decide about the authenticity of an OSN post.

3.1.3 Majority Voting Calculator

This module is responsible for making the decision about the authenticity of an OSN post by computing the majority vote casting. It verifies two cases before deciding the status of a post:

(a) Check positive ratings: Let v1, v2, v3, …, vn be a set of voters, where vi: ⋀ → Ω, where ⋀ is OSN post authenticity and Ω was a set of finite voters. Each vote can be either + 1 or −1; if the average of the votes against a post is positive and the sum exceeds a threshold value (φ), then the Majority Voting Calculator assigns a positive label to the OSN post as it is supported by majority of the voters. Therefore, the post is proclaimed as news.

In this equation, (∑Ω/n > 0) signifies the condition that the average of votes against the post is positive, and (∑Ω > φ) represents the condition that the vote sum exceeds the threshold φ. If both these conditions are fulfilled, the label assigned to the post is + 1, signifying that the OSN post is considered as news.

(b) Check negative ratings: Let v1, v2, v3, …, vn be a set of voters, where vi: ˄ → Ω, where ˄ is OSN post authenticity and Ω is a set of finite votes. If the average of the votes against a post is negative or the sum of votes is less than the threshold value, it suggests that there is a negative majority sentiment on the OSN post. The Majority Voting Calculator assigns a negative label (− 1) to the OSN post, and the post is declared as a rumor.

In this equation, (∑Ω/n < 0) signifies the condition that the average of votes against the post is negative, and (∑Ω < φ) represents the condition that the sum of ratings provided by all the users is less than the threshold. If one of these conditions is fulfilled, the post is declared as a rumor.

Moreover, this module re-computes the decision when the votes on an OSN post reach a threshold value. This value can vary for different implementations and is kept dynamic in the proposed model (for the implementation of the proposed model, the cycle repeats after every 100 votes. Details are provided in Sect. 4).

Algorithm 1 performs the post verification by identifying a post either as news or rumor. The algorithm takes a list of post scores and the system decision. If the post score meets the threshold value and its publishers have a good reputation, then the algorithm declares it as news. Details of the algorithm are presented below.

The overall working of the News Verification component is provided in Fig. 1.

The news verification component of the proposed model verifies the authenticity of posts on OSN. When this component reaches some decision on a post and classifies it either as news or rumor, the second component (user reputation) evaluates the raters and either rewards or penalizes them based on their early decision. The correctness of the rating depends on the final classification of posts, and it is determined once a decision has been reached regarding the post. This reward or penalty is executed by improving or reducing his/her score (details are given in subsequent sections).

3.2 User reputation

Crowdsourcing is a significant method to combat deceitful online information; however, it presents a crucial challenge of intentionally false or biased ratings. Just like the creator of fraudulent content as well as their publisher, the voters can put false ratings, either intentionally or unknowingly. Therefore, in order to address this challenge, it is important to maintain some reputation of the raters, based on the truthfulness of their ratings. Hence, the second component of the proposed model deals with this demand by monitoring the repute of users. The significance of this component is to imply a reputed scheme to attract quality ratings on OSN posts.

The idea of maintaining the repute is vitally significant for online involvement because online reputation is considered an asset in online communities (Javanmardi et al. 2010). This component maintains the repute of users by keeping track of their provided ratings on OSN posts as well as the actual authenticity of the posts. Further, it deals with the users’ bias and penalizes them so wrong judgments can be avoided in the future. Roles are assigned to maintain the repute of users to make better decisions regarding OSN posts.

Empirical evaluation is conducted by using three cutoff values for defining the score band (values range defined for role categorization). Before selecting these bands, market professionals were engaged to remark on the outcomes of score bands. With their consensus, the categorization bands were decided along with the roles of users. These roles (Welser et al. 2011) are classified as three positive roles (i.e., Expert, Reviewer, and Contributor), three negative roles (i.e., Inexpert, Ignoramus, and Neglecter), and one neutral role (Freshman). The following rules are used in Protégé for inferencing user posts.

- Rule- 1::

-

User(?p) ^ Rating(?p, ?score) ^ swrlb:greaterThan(?score, 0.84) → Role(?p, “Expert”)

- Rule- 2: :

-

User(?p) ^ Rating(?p, ?score) ^ swrlb:greaterThan(?score, 0.74) ^ swrld: lessThan(?score, 0.85) → Role(?p, “Reviewer”)

- Rule- 3::

-

User(?p) ^ Rating(?p, ?score) ^ swrlb:greaterThan(?score, 0.59) ^ swrld: lessThan(?score, 0.75) → Role(?p, “Contributor”)

- Rule- 4::

-

User(?p) ^ Rating(?p, ?score) ^ swrlb:equal(?score, 0.60) → Role(?p, “Freshman”)

- Rule- 5::

-

User(?p) ^ Rating(?p, ?score) ^ swrlb:greaterThan(?score, 0.50) ^ swrld: lessThan(?score, 0.60) → Role(?p, “Inexpert”)

- Rule- 6::

-

User(?p) ^ Rating(?p, ?score) ^ swrlb:greaterThan(?score, 0.39) ^ swrld: lessThan(?score, 0.50) → Role(?p, “Ignoramus”)

- Rule- 7::

-

User(?p) ^ Rating(?p, ?score) ^ swrld: lessThan(?score, 0.40) → Role(?p, “Neglecter”)

When the news verification component reaches some decision on a post and classifies it either as news or rumor, the user reputation component evaluates all the ratings placed by all the raters and either rewards or penalizes them. The proposed system has seven pre-defined roles that can categorized as one neutral role (Freshman), three positive roles (Contributor, Reviewer, and Expert), and three negative roles (Inexpert, Ignoramus, and Neglecter). These roles have rating weightage associated with them, where a higher role reflects a higher weightage for the next ratings. Initially, each user is assigned a role of freshman and a rating weightage of 1. This role and thus rating weightage are later adjusted based on their overall performance for the authentication of OSN posts. These roles are considered to sustain the users' repute and impact on the verification of a given OSN post.

The user reputation component is further divided into three modules: (a) Score Updater, (b) Roles Assigner, and (c) Roles Range Categorizer. These modules are explained below:

3.2.1 Score Updater

The correctness of the rating is reliant on the final classification of posts and is initiated after a decision is reached on the post. Once a post is declared as news or rumor, all the users’ ratings and decisions are passed to the user reputation component, where they are further processed by this module. The Score Updater modernizes the values of users’ reputation scores after the final decision is computed on an OSN post. This module promotes users for their truthful feedback by upgrading their reputation score as well as demotes users for their untruthful feedback by downgrading their reputation score. If the user reputation component rewards a rater, it improves his/her score by 0.01. On the other hand, if the user reputation component penalizes a rater, it reduces his/her score by 0.02. This score is continuous in nature and is further associated with the roles that are assigned to each user by the Roles Assigner module.

Algorithm 2 modifies the reputation score of raters according to their submitted ratings and the system decision on the OSN post. The algorithm takes a list of posts, user ratings, the system decision, and the raters. First, the user rating is checked against a particular post; then, the system decision is retrieved for that post. If the user’s provided rating and system decision are similar, then the algorithm upgrades the rater’s reputation score; otherwise, it degrades it. There are four possible cases considered by this module which are discussed in Algorithm 2.

This module computes and updates the user reputation score after execution of the above algorithm. Primarily, the reputation Score Updater module assumes that all the users have an initial reputation score of 1 when they start putting ratings on OSN posts. Afterward, this module modifies their reputation score based on the correctness of their votes on previous OSN posts. These reputation scores are further linked to the roles assigned to each user which are managed by the Roles Assigner module.

3.2.2 Roles Assigner

The Roles Assigner module is accountable for assigning roles to users who have performed ratings on an OSN post. This module checks user reputation score (assigned to them based on their correct and incorrect verification of OSN posts) and uses it for role assigning. These roles are designed to maintain the repute of users and are altered based on the users’ performance to verify OSN posts. The Roles Assigner module has seven pre-defined roles which are categorized as three positive roles (Contributor, Reviewer, and Expert), one neutral role (Freshman), and three negative roles (Inexpert, Ignoramus, and Neglecter).

These roles affect the rating weightage of each user and reflect the trustworthiness of a user. Initially, each user is assigned a role of freshman and a rating weightage of 1. This role and its reputation score are further modified based on his/her performance for the authentication of OSN posts. These roles are considered to sustain the users' repute and impact on the verification of a given OSN post. For instance, if the same user having a rating weightage of 1 puts feedback on a post that turns out to be genuine, his/her reputation score will be upgraded to 1.01 by the Score Updater. Once a user attains a reputation score of 1.2, he becomes a contributor. Now when a contributor puts feedback on a post, the rating weightage will be 1.2 which reflects that he has more authority and trustworthiness in the system. This way, bias ratings will be penalized and the system will encourage genuine ratings. Various roles and their rating weightage are handled by the Roles Range Categorizer module.

3.2.3 Roles Range Categorizer

The Roles Range Categorizer module classifies roles based on the reputation score of users. For defining these score bands for roles categorization, empirical evaluation was conducted by using three cut-off values (20–100, 30–100, and 40–100) for defining the score band (values range defined for roles categorization). Several professionals were requested to remark on the outcomes of score bands (20–100, 30–100, and 40–100), and with their consensus (40–100) band was decided.

Algorithm 3 assigns the roles to raters according to their correct and incorrect assessment of OSN posts. First, the list of raters is attained who have submitted ratings on an OSN post, and the reputation scores are taken for role categorization. The assignment of roles to users is mentioned in Algorithm 3.

The overall working of the user reputation component is provided in Fig. 2.

OSN users can be biased about their opinions which may lead to justification of rumors based on wishful thinking. This leads to a high risk of proliferating biased or incorrect ratings. To address this challenge, the second component of the proposed model monitors the repute of users to draw quality ratings on OSN posts. For this purpose, the users are assigned roles based on the correctness of their votes. These roles are designed to encourage users for their honest feedback as well as to demoralize them for their biased feedback. The idea of maintaining the repute of users is imperatively significant for online involvement as users usually do not have any other tangible online stake (Javanmardi et al. 2010).

4 Simulation using Colored Petri-Nets

Modeling can assist in understanding the structural dependencies, relations, and inconsistencies between diverse components at the initial phase of a complex system. Among various techniques for model simulation, Colored Petri-Nets (CPNs) (Jensen 2013) are one of the most popular one. The formal semantics of CPN permit a graphical approach for simulation, execution, and validation of a proposed design. Simulation through Petri-Nets assists in eliminating modeling errors through an understanding of system behavior that can preserve a lot of resources. Some execution rules are followed for the execution of Petri-Net-based simulation, which includes the fulfillment of various pre- and post-conditions to enable a transition. The tokens' existence in every place depicts the system's local state, where multiple local states are assimilated to form a global state. Each modeled system in Petri-Nets has its initial marking that is further followed by the successor states. A system can change state from its initial marking M to a new marking M’ through the firing of tokens T from places P by a transition t.

The hierarchical CPN top-level view is shown in Fig. 3, which signifies an abstract form of Synews. The ellipses are places for the top-level CPN model and illustrate various objects of the system, while rectangles represent the transitions. The state of the modeled system is represented by the tokens (data values) in places that are called the initial marking of the system. There are seven events in the top-level module, which are OSN post, News Evaluator, Rating Authenticator, Majority Voting Calculator, Score Updater, Roles Range Categorizer, and Roles Assigner.

4.1 Analysis report

To validate the CPN model, a state space report was also generated through the simulation of the model. The formal model is evaluated through state space analysis to illustrate how predefined behavioral properties can be verified. State space analysis was performed using full state spaces that contain useful information regarding the behavioral properties including boundedness, liveness, and fairness properties.

The full state space statistics demonstrate that occurrence graph (O-graph) and Scc (strongly connected component) graph have 34,506 nodes and 111,132 arcs, so the CPN model has finite occurrence sequences. Upper and lower bound properties represent maximum and minimum limits of tokens that can reside in the place. There is no home marking, which verifies that the system is not reversible, and no dead transition instance in state space conveys that every transition is executable in the system. There are 6953 dead markings that represent the termination of the simulation and guarantee the reachability of the final state of the system. Further, it is interpreted that once the simulation terminates, it ends as per the required specifications. The fairness properties show that there are no infinite occurrence sequences because some transition is blocked by the guard. The detail of the simulation report is provided in Appendix I.

5 Ontology

Ontologies are used to represent knowledge in a certain area of information systems (Zeshan et al. 2019). The ontology layer comprises of hierarchical distribution of significant concepts in the domain and describes ontology concepts, relations, and their constraints. It provides a metadata scheme together with a vocabulary of concepts that are used for annotation and their conversion into semantic annotations (Ahmad et al. 2017). During rumor propagation, correct knowledge acquirement plays a significant role in handling the situation effectively, while inaccurate knowledge can lead to poor decision-making regarding a particular information circulated in OSNs that results in intensification of rumor dissemination. In this concern, the benefits of ontologies to share and reuse existing knowledge reinforced by reasoning tools to extract new knowledge are appreciated.

An ontology of Synews was developed by adapting METHONTOLOGY methodology, as in Ahmad et al. (2017) and (Khalil et al. 2021), because it provides a step-by-step guide for building new ontologies by reusing the existing ontologies. To fulfill the rumor ontology requirements, entities were extracted by consulting the domain experts along with the study of a number of user manuals, operating guides, and case studies. To reduce the number of entities to a meaningful set, a well-known weighting technique (Jain and Singh 2013) (on each repetition of concept, the score was increased by 1) was used for the entities (concept) selection.

\({\text{AvgConceptScore}}=\) Σ concept Score ̸ Σ concepts.

The ontograph of the developed ontology, as presented in Fig. 4, shows the visual dependencies between different entities and their instances in the ontology. Further, different types of object properties and relations are displayed using ontograph.

5.1 Axioms

Axioms are utilized in ontology to provide a suitable manner for adding rational expressions. Axioms are designed to improve the relationships mapping to different objects and their instances. They are implemented in ontologies in such a way that they are always true. Figure 5 shows the list of logical axioms of our ontology.

5.2 Consistency checking

Consistency checking of ontology is important as it identifies duplicate instances as well as those instances that are assembled based on their sources in similar ontology that might reduce the ontology's effectiveness. There are various tools for consistency checking such as Pellet, FACT + + , and Racer (for verifying the consistency of an OWL ontology). In this research, FACT + + was utilized for the evaluation of the proposed ontology owing to its comparatively user-friendly interface with Protégé. Figure 6 shows the consistency checking of the developed ontology.

SPARQL query language was used to compare and pull values from different known and unknown relationships of Synews ontology as it provides a rich set of functions. Moreover, it supports the OWL ontology because of the existence of OWL to RDF mapping while the Description Logic (DL) query has a limited set of operators and cannot use variables, so the values cannot be compared from different relationships. Initially, the query is directed toward the ontology reasoner to check its consistency and is only processed further if there is no inconsistency present. SPARQL query returns the decision on an OSN post, either as news or rumor, and also displays the user’s role as depicted in Fig. 7.

6 Model implementation

Most of the popular OSNs provide a developer’s platform that allows users to develop and integrate third-party applications. Likewise, Facebook platform offers a set of services and tools that enable Facebook third-party developers to create their own applications. This platform integrates Facebook to access the “social graph” of diverse businesses and other social media platforms. Facebook developers platform offers php SDK that can be utilized to perform Facebook API calls. Facebook apps can retrieve the information from members’ profiles and can post the messages. Facebook developers can use the Facebook Query Language (FQL) which is designed to retrieve the information from the databases. The developers can use the Facebook Markup Language (FBML) to create applications that get integrated into the Facebook platform.

The proposed Synews model was also implemented by using Facebook APIs. PHP language was selected for the development of this application as it enables the developers to implement a rich set of functionalities on the server side. Facebook API calls are used to retrieve user data through access tokens i.e., a string that identifies an application and is used for making graph API calls. The token contains information about which app can generate the token and when the token will expire. Access tokens can be generated by making the object of Facebook API’s helper class. The function getAccessToken() is called to generate access tokens for a user, and the function getLongLivedAccessToken() is used for every login session of a user to get the access token. Moreover, user login is validated through getOAuth2Client() and OAuth 2.0 client handler class. A database was created for storing the information, and various functions were developed to interact with the database, i.e., getrole(), get_user_weight(), update_weightage(), etc. PHP script files were uploaded to the online server, and a database was also deployed through phpMyAdmin, while HTML5 was used for designing the front-end forms. The class diagram, as shown in Fig. 8, reflects different objects, their attributes, their operations, and the relationships among them.

The users of the “Synews Verification” application need to give multiple permissions to the application that including reading the username, email, and Facebook posts before logging into the application. The users can see the page after granting the required permissions. Figure 9a shows the home screen that contains the information of a user. The role of every user is also shown on the home page of the application. Different posts on the wall of the user are displayed in Fig. 9b, which also allows them to put ratings. After clicking on the ‘Rating’ button, a Facebook post is displayed to the user as reflected in Fig. 9c, where he/she is allowed to put a rating on the post as ‘authentic’ or ‘unauthentic.’ All the feedback from different users on each post is collected, and a final decision is taken to declare whether the post is a rumor or news as shown in Fig. 9d.

7 Testing

The proposed system was evaluated through two methods: (a) user acceptance testing and (b) closeness of application results to the human experts’ classification. The details of these evaluations are discussed in this section.

7.1 User acceptance testing

In the current era of innovations and technologies, various technologies are proposed to enhance the quality of users’ experience of OSNs on a daily basis. The exponential upsurge of user behavior information on OSNs brings a promising opportunity to uncover user preferences in a more effective manner (Gao et al. 2023). In this regard, a major issue often faced by researchers is the applications’ acceptance and effectiveness for the users. Therefore, to attain this goal, a survey was designed to evaluate users’ acceptance of the Facebook “Synews Verification” application. The developed application was presented to a group of 297 users from different age groups and genders. Before presenting the application, it was assured that all the participants had Facebook accounts and had an understanding of its usage. The participants were introduced to the working of the application and were asked to install it as a third-party Facebook application. Also, an online survey using Google Forms was designed and offered to all the participants. The questionnaire for the survey was designed on a Likert Scale of seven by following the relevant studies of Technology Acceptance Model (TAM) (Lai 2017) and Self-Efficacy scale (Sherer et al. 1982). The questionnaire contains five variables, i.e., Perceived Ease of Use (PE) (Samreen et al. 2020), Perceived Usefulness (PU) (Samreen et al. 2020), Intension to Use (IU) (Muhammad and Ahmad 2021), Usage Behavior (UB), and Self-Efficacy (SE), where each variable has four items, as shown in Appendix II.

The online questionnaire form was filled out by 195 users (response rate 65.65%), and the results were analyzed through SPPS. The consistency of the data was evaluated through Cronbach’s Alpha, where an average value of 0.835 suggests high internal reliability. Table 1 shows the inter-variable correlation matrix among all the five variables used in the questionnaire.

The values of mean, standard deviation, and Cronbach’s alpha are calculated against all five variables as shown in Table 2, to check the reliability of each variable.

Figure 10 shows the average of users’ responses against each of the variables. The comparison of means is useful as it summarizes differences in descriptive statistics among five variables.

7.2 Comparison with human experts

To find whether the proposed technique provides results close to human perception, a t-test was performed in SPSS. In many disciplines, the investigator looks for 0.05 of a significance level which means there is only a 5% probability of error. The t-test was applied to calculate the rating mean of two variables. The difference computed through the t-test determines whether the average differences are significantly dissimilar from each other. The null hypothesis Ho and alternative hypothesis H1 were defined before executing the t-test as:

H1

The output of the proposed technique is different from the experts’ rating.

Ho

The output of the proposed technique is near to the experts’ rating.

To perform the statistical analysis, a group of 120 OSN posts (n = 120) (VanVoorhis and Morgan 2007) was taken on a random basis from a pool of five hundred OSN posts. The purpose was to investigate whether the proposed technique actually improves the accuracy of classification. The truthfulness of OSN posts is investigated by requesting 10 experts to be involved in the process of verification. The experts were assigned a set of OSN posts to verify independently, and later, their judgments were compared for inter-rater reliability. Results found a high level of inter-rater reliability that shows experts have similar opinions on most OSN posts which assists in minimizing the potential biases that may arise if there are significant discrepancies among the experts.

The test statistics, as shown in Table 3, reflect that the mean of the proposed technique is 87.008% while the mean of human understanding is 87.882%, while Table 4 shows, along with other details, the Sig. (2-tailed) value, which is 0.505 and > 0.05; hence, the null hypothesis (Ho) could not be rejected. This leads to the conclusion that the proposed technique computes results near to the human perception.

8 Conclusion and future work

Online social networks (OSNs) have become an essential part of our lives, and their usage is increasing at an astonishing rate. OSNs are implied as an important source of news as the content includes up-to-date news and trending topics from all over the world. The problem appears when users share their vision and fabricated information instead of actual news, and it gets really hard for the audience to decide which content is real and which is not. Therefore, it is important to verify OSN content before they can be considered as valid.

To address this challenge, this research proposed an efficient yet effective approach that contributes to the literature in the following manners:

-

It presented an efficient synergy-based model for rumor verification along with a weighted-mean reputation management system to lessen the propagation of rumors on OSN.

-

Petri-Nets were formulated to verify structural inconsistences of the proposed model and ontology was designed to check its semantic behavior.

-

The implementation of the proposed model as a Facebook third-party application was done for proof of concept.

-

Usability analysis of the developed Facebook application was performed using Technology Acceptance Model (TAM) and Self-Efficacy scale. Moreover, the classification of the system is compared with human experts to show its effectiveness.

-

The results indicate that the model can be used as an effective tool for the classification of news and rumor, and it has the potential to enhance the quality of user’s online behavior.

This research not only establishes a better understanding of rumor verification but also assists future research ideas and application developments in the domain of crowdsourcing and weighted-mean reputation management systems. There is still scope for improvement in the proposed approach in terms of attaining better accuracy. For instance, the proposed model monitors the rating of users based on their votes, but it can also monitor the rating of publishers who post fabricated news. Moreover, other parameters can also be used for the authenticity of OSN posts that may include the demographic information of a user’s account and their behavioral analysis. The credibility and repute of the publisher of false content can also be considered as another future direction. Further, the monitoring and management of user reputation will be useful to reduce personal biases. We believe that the proposed approach can be used as an effective tool for rumor verification over OSN. Approaches, such as the proposed one, have the ability to improve the overall quality of users’ online behavior by differentiating true and false information.

Data availability

Data will be provided on demand. Please contact the corresponding author.

References

[Online]. Available: https://stats.idre.ucla.edu/spss/faq/what-does-cronbachs-alpha-mean/.

[Online]. Available: https://www.wikipedia.org/.

[Online]. Available: https://www.yahoo.com/.

Ahmad A, Whitworth B, Zeshan F, Bertino E, Friedman R (2017) Extending social networks with delegation. Comput Secur 70:546–564

Ahmad A, Whitworth B, Zeshan F, Janczewski L, Ali M, Chaudary MH, Friedman R (2019) A relation-aware multiparty access control. J Intell Fuzzy Syst 37(1):227–239

Ahmad A, Azhar A, Naqvi S, Nawaz A, Arshad S, Zeshan F, Salih AO (2020) A methodology for sender-oriented anti-spamming. J Intell Fuzzy Syst 38(3):2765–2776

Ahmad A, Whitworth B, Bertino E (2022) A framework for the application of socio-technical design methodology. Ethics Inf Technol 24(4):46

Alpaydin E (2009) Introduction to machine learning, MIT press

Anderson M, Hitlin P, Atkinson M (2016) Wikipedia at 15: millions of readers in scores of languages. 14 January 2016. [Online]. Available: https://www.pewresearch.org/fact-tank/2016/01/14/wikipedia-at-15/.

Barboza FC, Jeong H, Kobayashi K, Shiramatsu S (2013) An ontology-based computational framework for analyzing public opinion framing in news media. In: 2013 IEEE Int Conf Syst Man Cybern (pp 2420–2426). IEEE

Becker D, Bendett S (2015) Crowdsourcing solutions for disaster response: examples and lessons for the US government. Procedia Eng 107:27–33

Boididou C, Middleton SE, Jin Z, Papadopoulos S, Dang-Nguyen DT, Boato G, Kompatsiaris Y (2018a) Verifying information with multimedia content on twitter: a comparative study of automated approaches. Multimed Tools Appl 77:15545–15571

Boididou C, Papadopoulos S, Zampoglou M, Apostolidis L, Papadopoulou O, Kompatsiaris Y (2018b) Detection and visualization of misleading content on twitter. Int J Multimed Inf Retr 7:71–86

Bordia P, DiFonzo N (2002) When social psychology became less social: Prasad and the history of rumor research. Asian J Soc Psychol 5(1):49–61

Castillo C, Mendoza M, Poblete B (2011) Information credibility on twitter. In: Proceedings of the 20th international conference on world wide web, pp 675–684

Cen J, Li Y (2022) A rumor detection method from social network based on deep learning in big data environment. Comput Intell Neurosci

Chen X (2016) A real time anti-spamming system in crowdsourcing platform. In: Software engineering and service science (ICSESS), 2016 7th IEEE international conference on, IEEE, pp 981–984

Choudhury D, Acharjee T (2023) A novel approach to fake news detection in social networks using genetic algorithm applying machine learning classifiers. Multimed Tools Appl 82:9029–9045

Chua AY, Banerjee S (2017a) To share or not to share: the role of epistemic belief in online health rumors. Int J Med Inf 108:36–41

Chua AY, Banerjee S (2017b) Rumor verifications on facebook: click speech of likes, comments and shares. In: 2017 Twelfth international conference on digital information management (ICDIM), pp 257–262

Clark BY, Zingale N, Logan J, Brudney J (2019) A framework for using crowdsourcing in government. In: Social entrepreneurship: concepts, methodologies, tools, and applications, IGI Global, pp 405–425

Clayton K, Blair S, Busam JA, Forstner S, Glance J, Green G, Nyhan B (2020) Real solutions for fake news? Measuring the effectiveness of general warnings and fact-check tags in reducing belief in false stories on social media. Polit Behav 42:1073–1095

Clementi A, Crescenzi P, Doerr C, Fraigniaud P, Pasquale F, Silvestri R (2013) Rumor spreading in random evolving graphs. In: European symposium on algorithms pp 325–336

Dang A, Smit M, Moh'd A, Minghim R, Milios E (2016) Toward understanding how users respond to rumours in social media. In: Advances in social networks analysis and mining (ASONAM), 2016 IEEE/ACM international conference on, IEEE, pp 777–784

De Guerre J (2007) The mechanics of Vipul’s Razor technology. Netw Secur 2007:15–17

Derczynski L, Bontcheva K, Liakata M, Procter R, Hoi GWS, Zubiaga A (2017) SemEval-2017 task 8: RumourEval: determining rumour veracity and support for rumours. arXiv preprint arXiv:1704.05972

Ding X, Zhang X, Fan R, Xu Q, Hunt K, Zhuang J (2022) Rumor recognition behavior of social media users in emergencies. J Manag Sci Eng 7:36–47

Ehsanfar A, Mansouri M (2017) Incentivizing the dissemination of truth versus fake news in social networks. In: 2017 12th system of systems engineering conference (SoSE), pp 1–6

Fountoulakis N, Panagiotou K (2013) Rumor spreading on random regular graphs and expanders. Random Struct Algorithm 43(2):201–220

Funke D (2017) 13 Nov 2017. [Online]. Available: https://www.poynter.org/fact-checking/2017/the-eu-is-asking-for-help-in-its-fight-against-fake-news/.

Gao H, Wu Y, Xu Y, Li R, Jiang Z (2023) Neural collaborative learning for user preference discovery from biased behavior sequences. IEEE Transact Comput Soc Syst

Gatautis R, Vitkauskaite E (2014) Crowdsourcing application in marketing activities. Procedia Soc Behav Sci 110:1243–1250

Granik M, Mesyura V (2017) Fake news detection using naive Bayes classifier. In: Electrical and computer engineering (UKRCON), 2017 IEEE first Ukraine conference on, IEEE, pp 900–903

Grassberger P (1983) On the critical behavior of the general epidemic process and dynamical percolation. Math Biosci 63:157–172

Gray JA (1981) A critique of Eysenck’s theory of personality. In: A model for personality (pp 246–276). Springer Berlin Heidelberg

Guo M, Xu Z, Liu L, Guo M, Zhang Y (2020) An adaptive deep transfer learning model for rumor detection without sufficient identified rumors. Math Probl Eng

Gupta A, Kumaraguru P, Castillo C, Meier P (2014) Tweetcred: real-time credibility assessment of content on twitter. In: International conference on social informatics, Springer, pp 228–243

Gupta A, Lamba H, Kumaraguru P, Joshi A (2013) Faking sandy: characterizing and identifying fake images on twitter during hurricane sandy. In: Proceedings of the 22nd international conference on world wide web, ACM, pp 729–736

Hoodbhoy P (2017) Why they lynched Mashal Khan, 29 April 2017. [Online]. Available: https://www.dawn.com/news/1329909.

Imran M, Ahmad A (2023) Enhancing data quality to mine credible patterns. Inf Syst Front 49:544–564

Jain V, Singh M (2013) Ontology development and query retrieval using protege tool. Int J Intell Syst Appl 9:67–75

Jang SM, Geng T, Li J-YQ, Xia R, Huang C-T, Kim H, Tang J (2018) A computational approach for examining the roots and spreading patterns of fake news: evolution tree analysis. Comput Human Behav Elsevier 84:103–113

Javanmardi S, Lopes C, Baldi P (2010) Modeling user reputation in wikis. Stat Anal Data Min ASA Data Sci J 3:126–139

Jensen K (2013) Coloured petri nets: basic concepts, analysis methods and practical use, vol 1. Springer Science & Business Media

Jin Z, Cao J, Zhang Y, Zhou J, Tian Q (2016) Novel visual and statistical image features for microblogs news verification. IEEE Trans Multimed 19(3):598–608

Kermack WO, McKendrick AG (1939) Contributions to the mathematical theory of epidemics: V. analysis of experimental epidemics of mouse-typhoid; a bacterial disease conferring incomplete immunity. Epidemiol Infect 39(3):271–288

Khaled K, ElKorany A, Ezzat CA (2023) Enhancing prediction of user stance for social networks rumors. Int J Electr Comput Eng (IJECE) 13:6609–6619

Khalil U, Ahmad A, Abdel-Aty AH, Elhoseny M, El-Soud MWA, Zeshan F (2021) Identification of trusted IoT devices for secure delegation. Comput Electr Eng 90:106988

Kotteti CMM, Dong X, Qian L (2020) Ensemble deep learning on time-series representation of tweets for rumor detection in social media. Appl Sci 10:7541

Kumar KK, Geethakumari G (2014) Detecting misinformation in online social networks using cognitive psychology. Human-Centric Comput Inf Sci SpringerOpen 4:14

Lai P (2017) The literature review of technology adoption models and theories for the novelty technology. JISTEM-J Inf Syst Technol Manag 14:21–38

Le H, Boynton G, Shafiq Z, Srinivasan P (2019) A postmortem of suspended Twitter accounts in the 2016 US presidential election. In: 2019 IEEE/ACM international conference on advances in social networks analysis and mining (ASONAM)

Li C, Li J, Ma Z, Zhu H (2014) Canard phenomenon for an SIS epidemic model with nonlinear incidence. J Math Anal Appl 420:987–1004

Lunden I (2018) Yahoo Mail aims at emerging markets and casual users, launches versions for mobile web and Android Go [Online]. Available: https://techcrunch.com/2018/06/19/yahoo-mail-go/.

Luvembe AM, Li W, Li S, Liu F, Xu G (2023) Dual emotion based fake news detection: a deep attention-weight update approach. Inf Process Manag 60(4):103354

Lytinen SL (2005) Artificial intelligence: natural language processing. Van nostrand's scientific encyclopedia

Majchrzak A, Malhotra A (2013) Towards an information systems perspective and research agenda on crowdsourcing for innovation. J Strateg Inf Syst Elsevier 22(4):257–268

Martínez M (2018) Burned to death because of a rumour on WhatsApp. 12 November 2018. [Online]. Available: https://www.bbc.com/news/world-latin-america-46145986.

Metaxas PT, Finn S, Mustafaraj E (2015) Using twittertrails. com to investigate rumor propagation. In: Proceedings of the 18th ACM conference companion on computer supported cooperative work \& social computing, pp 69–72

Middleton SE, Papadopoulos S, Kompatsiaris Y (2018) Social computing for verifying social media content in breaking news. IEEE Internet Comput 22(2):83–89

Mills S (2012) How twitter is winning the 2012 US election. October 2012. [Online]. Available: https://www.theguardian.com/commentisfree/2012/oct/16/twitter-winning-2012-us-election.

Miró-Llinares F, Aguerri JC (2023) Misinformation about fake news: a systematic critical review of empirical studies on the phenomenon and its status as a ‘threat.’ Eur J Criminol 20(1):356–374