Abstract

The study aims to implement a convolutional neural network framework that uses 18F-FDG PET brain imaging to distinguish multiple stages of dementia, namely Early Mild Cognitive Impairment (EMCI), Late Mild Cognitive Impairment (LMCI) and Alzheimer’s disease (AD), from Cognitively Normal (CN) subjects, and to assess the results. 18F-FDG PET brain images were obtained from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) repository. The ResNet50V2 model layers were used for feature extraction, with the final convolutional layers fine-tuned for this dataset’s multi-class classification objective. Multiple metrics and feature maps were used to evaluate the model quantitatively and qualitatively. The multi-class model achieved an overall accuracy of 98.44% and an area under the receiver operating characteristic curve of 95% on the testing set. Feature maps helped in understanding finer aspects of the model’s operation. From 18F-FDG PET brain images, the framework classified the subtypes of Mild Cognitive Impairment (MCI), EMCI and LMCI, together with the AD and CN groups, and achieved an overall sensitivity of 94% and specificity of 95%.

Introduction

Alzheimer’s disease (AD) is a neurodegenerative disorder that is becoming increasingly prevalent in aging populations [1]. AD is the most common form of dementia; it slowly destroys neurons and thereby impairs the cognitive abilities of affected individuals. At present, a wide range of treatments and medications is available to reduce the severity of the disease and delay its gradual onset. However, there is no definitive cure, or even a well-defined understanding of the pathogenesis of this disorder [2].

In 2007, a study was conducted to predict the future prevalence of AD, taking into account the increasing number of cases seen in the elderly population [3]. The study concluded that by the year 2050, 1 in 85 persons would be affected by the disorder, with 43% of the cases needing an advanced level of care. It was also estimated that, if future medical interventions were able to delay the disorder by even 1 year, the number of persons affected in the year 2050 would decrease drastically. It can be concluded from this and other similar studies [4,5,6] that AD poses a grave threat to global health. Therefore, advanced methods are needed to diagnose AD in its early stages so that the right treatment can be administered to delay the progression of the disease.

The greatest risk factor for AD is age. Individuals aged 65 and above are at significant risk of developing AD, and the risk keeps increasing as they grow older. Other factors such as Down syndrome, genetics and family history also increase the risk of AD. Research conducted in the past two decades has shown that head injuries, smoking, depression and even exposure to aluminium may make a person more vulnerable to AD [7,8,9,10,11].

Diagnosis of AD relies heavily on clinical methods. The most fundamental characteristics of the disease are the presence of beta-amyloid plaques in the neocortex regions and neurofibrillary tangles. However, their presence is indicated only in later stages of the disease, and there are no laboratory tests that can confirm or predict its onset in early stages. In 1984, standard criteria for the clinical diagnosis of AD were established [12]. However, research conducted in subsequent years uncovered many previously unknown clinical facts. In 2009, updated criteria were published that highlighted the complex mechanisms that form the backbone of AD pathogenesis [13].

Recent research has identified a transitional phase between healthy aging and dementia, known as mild cognitive impairment (MCI), which can be further divided into two stages, early MCI (EMCI) and late MCI (LMCI) [14]. Patients with EMCI and LMCI were found to be at risk of progressing to AD dementia, with the risk being greater for patients with LMCI [15]. MCI is characterized by greater cognitive impairment and memory loss than normal aging, and must therefore be identified and treated in its early stages to postpone the onset of AD/dementia.

Because early diagnosis of AD in clinics is complicated, computer-based methods used in conjunction with medical professionals have a significant role in identifying and diagnosing AD. Deep learning and machine learning classifiers have attracted the interest of many researchers in recent years for this task, with impactful outcomes. Since most studies have concentrated on structural brain-imaging techniques [16, 17], we employed 2-deoxy-2-[fluorine-18]fluoro-D-glucose (18F-FDG) Positron Emission Tomography (PET) as the imaging modality for our study.

Although neuropsychological tests detect AD, pathophysiological changes in the brain are predominantly diagnosed through imaging. 18F-FDG-PET is a minimally invasive procedure used to assess cerebral glucose metabolism. One of the most prominent features of AD is the substantial reduction of glucose metabolic activity in specific regions of the brain, as demonstrated by 18F-FDG-PET. Neurodegeneration is mainly attributed to cerebral glucose hypometabolism. Metabolically active areas in the brain show higher 18F-FDG uptake. AD patients have significant reductions in glucose metabolism in the temporoparietal regions, posterior cingulate cortex and frontal cortex. These reductions in metabolic activity indicate the early preclinical stages of AD. Hence 18F-FDG PET is a promising modality for identifying diagnostic patterns in the pre-clinical evaluation of AD and for diagnosing neurocognitive disorder due to AD. It is mainly used for early prediction of AD and has the potential to distinguish the patterns of AD earlier than MRI in MCI subjects. 18F-FDG-PET is therefore considered a predominant tool for pre-symptomatic diagnosis of AD, providing better sensitivity and accuracy.

Since the patterns of cerebral hypometabolism are strongly linked with the various categories of cognitive dysfunction and neurodegeneration, 18F-FDG-PET is particularly useful as a marker for diagnosing the stages of dementia [18]. Progress in deep learning research encourages the development of better deep learning methods for 18F-FDG-PET analysis and assessment, which might aid early clinical treatment of AD.

Artificial intelligence has had a growing impact on healthcare in recent times and has attracted considerable attention from researchers and scientists for its effective implementation in medical applications. Deep learning models have been successfully implemented in the past decade for diagnosis from medical images. The field of AD research is widely explored, and there are several works in the literature on the application of artificial intelligence to AD diagnosis, specifically with 18F-FDG-PET. Several of these studies have used images from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) database, an initiative that aims to study whether the combination of imaging and clinical biomarkers along with neuropsychological evaluation may be used to estimate MCI and early AD.

Due to uncertainties surrounding the clinical biomarkers of the disease, considerable research continues to be directed at identifying imaging biomarkers that detect the disease in its early stages. Imaging is now being used in the diagnosis of AD to support the clinical diagnosis. Amyloid deposits, which are characteristic of AD, can now also be visualised through amyloid imaging [19]. Recent works in the literature have shown that imaging can identify structural cerebral anomalies that assist in identifying AD in its early stages of cognitive impairment [20].

Initially, structural MRI was employed to detect progressive cerebral atrophy as a characteristic of neural degeneration, which would imply the onset of AD [21]. However, this approach lacked molecular specificity and was unable to identify the two hallmark pathological signs of AD i.e. amyloid plaques and neurofibrillary tangles. Functional MRI (fMRI) studies focussed on the activation of the hippocampus region of the brain. Decreased hippocampal activity during encoding of new information was seen to be consistently associated with AD [22, 23]. One major disadvantage of this technique is that it is highly sensitive to motion of the head. Diffusion tensor imaging of the hippocampal region has also yielded significant results [24]. However, just like fMRI, further longitudinal studies are needed to validate the concept.

Positron Emission Tomography (PET) with 2-deoxy-2-[fluorine-18] fluoro-d-glucose and integrated with CT (FDG-PET) is highly recognized for its powerful imaging capabilities [25]. FDG-PET has been shown to have a high specificity as well as sensitivity in the detection of AD. It is also possible to differentiate between the PET scans of individuals with AD and other forms of dementia such as Lewy Body Disease (LBD), Parkinson Disease (PD) and Frontotemporal Dementia (FTD) [26]. It is also possible to differentiate AD from other forms of dementia during the MCI stages using FDG-PET. Chen et al. developed a hypo-metabolic convergence index (HCI) based on known AD voxel regions and were successful in identifying which patients with MCI would progress to AD in the next 18 months [27]. Therefore, it can be concluded that FDG-PET is a promising imaging modality in the diagnosis of AD during its early stages as well as in the prediction of the progression of the disease.

Choi et al. [28] were the first to use an unsupervised learning method to recognise abnormal features in FDG-PET images of the brain. A neural network based on a variational autoencoder (VAE) was trained on PET scans of normal subjects (those without cognitive impairment). The trained model’s output was an abnormality index, which quantified the difference between the input image and a normal FDG-PET image. The model proved successful in recognising abnormal patterns in AD from 18F-FDG-PET images.

Wee et al. [29] incorporated the cortical geometry of T1-weighted MRI images to classify controls (CN), EMCI, LMCI and AD. A spectral graph-CNN was trained on images from the ADNI-2 cohort. The graph-CNN method achieved an accuracy of 85.8% for the classification of AD versus CN. Further, this model was tested on two other populations (the ADNI-1 cohort and an Asian cohort), which yielded accuracies of 89.4% and 90% respectively. Thus, the graph-CNN model was found to be efficient as well as reproducible on different populations. Ding et al. [30] implemented the Inception V3 deep learning architecture in the diagnosis of AD and MCI based on FDG-PET images from the ADNI database. The Inception V3 architecture achieved an area under the ROC curve (AUC) of 0.98.

Liu et al. [31] implemented a combination of CNN and RNN framework to classify AD versus CN and MCI versus CN images from the ADNI database. Their approach was based on decomposition of 3D FDG-PET into 2D slices, from which the RNN framework would learn inter-slice and intra-slice features for classification. Their combined framework led to an AUC of ROC of 95.3% for AD versus CN and 83.9% for MCI versus CN. Singh et al. [32] proposed a method in which probabilistic principal component analysis (PCA) was carried out for feature reduction followed by binary classification using a multilayer perceptron neural network. The study utilised AD, CN, EMCI, LMCI images from the ADNI database. Their network achieved high precision, F1-score and recall scores in the classification of AD versus CN, AD versus MCI, CN versus MCI, AD versus EMCI and AD versus LMCI.

Ramzan et al. [33] used the ResNet-18 architecture for the multiclass classification and automated diagnosis of AD, SMCI, EMCI, LMCI, MCI and CN. They utilised fMRI images from the ADNI database and compared the performance of ResNet-18, when it is trained from scratch with randomly initialised parameters versus the transfer learning approach. Under the transfer learning approach, they further experimented with two different methods: (i) where only the last layer is replaced with a custom layer that is adapted to the current task (off-the-shelf method) and (ii) where more than one layer is replaced and trained for the new task (fine tuning (FT) method). The ResNet architecture was found to be highly suitable for the classification of AD and its various stages. All the different training methods used in this study yielded excellent results, but the highest result was attained by the off-the-shelf method, with an AUC of ROC value of 0.99.

Non-invasive biomarkers such as PET are commonly used for the detection of AD. 18F-FDG-PET is the most extensively used type of PET imaging. The feature most commonly derived from the 18F-FDG-PET biomarker is cellular glucose metabolism. 18F-FDG PET quantifies the reduction in the regional cerebral metabolic rate of glucose and provides a reliable biomarker in AD patients. In clinical practice, identifying the stage of progression from MCI to AD dementia is the most challenging task for the medical community [34, 35]. To address this challenge, researchers worldwide have access to public databases such as the ADNI neuroimaging datasets (http://adni.loni.usc.edu), which include multi-modal imaging data along with demographic, genetic and cognitive measurements of healthy, MCI and AD subjects. These datasets are compared and analysed to perform automated detection and classification of AD, CN and MCI progression [36, 37] using deep learning techniques such as convolutional neural networks.

Early diagnosis of AD is crucial for the clinical recommendation of prompt treatment. Predicting the progression from MCI to AD and differentiating MCI from healthy controls, so that patients receive appropriate treatment, is a very challenging task. Hence there is a need for a multi-class classification system that distinguishes AD and its various stages from healthy controls. Recent advancements in neuroimaging techniques pave the way for integrating high-dimensional multimodal neuroimaging data with deep learning techniques for efficient classification of the various stages of AD.

Our study proposes a novel automated method for the diagnosis of the various stages of AD. In this study, we adopted a transfer learning approach to train a variant of the ResNet architecture known as ResNet-50. The ADNI repository’s 18F-FDG PET brain images were used as the dataset for this study; they consist of images from CN subjects and from multiple stages of AD/dementia (EMCI, LMCI and AD). Our aim was to classify the various stages of AD/dementia using the proposed architecture, and to compare the performance of our transfer-learned model with that of other pre-trained CNN models. Further, we visualised the model’s results using feature maps, and analysed the results using statistical metrics.

Methodology

Dataset

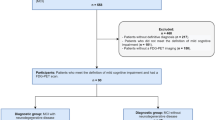

The imaging data used in the study were retrieved from the Alzheimer’s Disease Neuroimaging Initiative (ADNI) repository. For two decades, ADNI research has included collaborations with highly reputed scientific communities including the National Institute on Aging (NIA), the National Institute of Biomedical Imaging and Bioengineering (NIBIB), multidisciplinary biomedical non-profit organisations, and commercial pharmaceutical companies. Details about this wide data collection in different image modalities and formats have been made available on the website [38]. ADNI’s main goal has been to study the evolution of cognitive symptoms in different categories of participants, including normal subjects, early mild cognitive impaired individuals (EMCIs), late mild cognitive impaired individuals (LMCIs), and AD patients. Figure 1 shows the different stages of dementia and AD. For our study, 18F-FDG PET brain images acquired between January 2006 and December 2013 were collected. A total of 1130 subjects across the AD, CN, EMCI and LMCI groups were collected for training our deep learning algorithm. The ground truth image labels were used as the reference to improve the classification accuracy for AD. Details regarding the data collected for training and testing are included in Table 1. The overall block diagram of the proposed work is given in Fig. 2.

Image pre-processing

The images were originally acquired in DICOM format from the ADNI database. They were pre-processed into a simpler format for the deep learning pipeline using the grid method [30], implemented with Python’s SciPy library. This method resamples the image from 2-mm isotropic voxels to a grid covering a volume of 200 × 200 × 180 mm³. Finally, the images were exported as 2-D matrices of size 224 × 224 in PNG format.

Deep learning models identify important features from neuroimaging data automatically and can determine the optimal set of features during the training stage itself. The pre-trained CNN models generate unsupervised features and then combine them with a more refined decision layer. This kind of architecture facilitates the modelling of complex decision boundaries for multi-class classification problems. Owing to this inherent property of deep learning models, efficient prediction of MCI is possible, even though distinguishing EMCI and LMCI is challenging in Alzheimer’s disease due to the limited differences between the four groups.

Deep learning techniques use pre-processed neuroimaging data such as 18F-FDG PET to generate features. The features are obtained from the intensity measurements of glucose metabolism. The most relevant features are selected based on component analysis to represent the nearest axial perspective of the image. In the proposed work, automated feature extraction was performed using pre-trained CNN models, with hyperparameters fine-tuned to achieve better classification accuracy. The feature activation map provides salient details on how the pertinent layers extract fine details from the image that could not be discerned by the naked eye.

Contrast limited adaptive histogram equalization

To enhance the visibility of the grayscale images, the Contrast Limited Adaptive Histogram Equalization (CLAHE) transformation is applied. It is a modification of adaptive histogram equalization in which excessive amplification of contrast is limited. The CLAHE algorithm operates on small image regions (called tiles) rather than on the entire image, performing histogram equalization within each tile under a contrast limit. The artificial boundaries between tiles are removed by combining neighbouring tiles using bilinear interpolation. After this process, noise is still present in the enhanced image. To suppress it, double-level thresholding is performed on the enhanced image using a histogram-based thresholding method. Finally, the Otsu thresholding technique is applied to obtain the best enhanced image.
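The contrast-limiting step at the core of CLAHE can be illustrated on a single tile. The following is a minimal sketch, not the implementation used in the study: the function name `clipped_equalize` and the absolute `clip_limit` (a maximum count per histogram bin) are assumptions made for illustration, and the bilinear blending between neighbouring tiles and the subsequent thresholding steps are omitted.

```python
def clipped_equalize(tile, clip_limit=4, levels=256):
    """Contrast-limited histogram equalization of one tile.

    tile: list of rows of integer gray levels in [0, levels).
    clip_limit: hypothetical absolute cap on the count of any histogram bin.
    """
    pixels = [p for row in tile for p in row]

    # Histogram of gray levels within the tile.
    hist = [0] * levels
    for p in pixels:
        hist[p] += 1

    # Clip each bin at clip_limit and spread the excess uniformly over all
    # bins, so the histogram still sums to the number of pixels.
    excess = sum(max(h - clip_limit, 0) for h in hist)
    hist = [min(h, clip_limit) + excess / levels for h in hist]

    # The cumulative distribution of the clipped histogram is the lookup table.
    total = float(len(pixels))
    lut, running = [], 0.0
    for h in hist:
        running += h
        lut.append(round((levels - 1) * running / total))

    return [[lut[p] for p in row] for row in tile]


tile = [[10, 10, 10, 200],
        [10, 10, 10, 200]]
limited = clipped_equalize(tile, clip_limit=2)
unlimited = clipped_equalize(tile, clip_limit=8)  # limit never reached here
```

With the lower clip limit, the dominant gray level is stretched less than under plain equalization, which is the behaviour the contrast limit is meant to enforce.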

Data augmentation

To predict test samples as accurately as possible, deep learning demands a vast quantity of labelled sample data. By producing multiple variants of the samples in a dataset, data augmentation expands the size and variety of labelled training sets. Data augmentation approaches are often employed to alleviate class imbalance, minimize overfitting and facilitate convergence, all of which contribute to improved outcomes.

Collecting such a large number of medical images is an expensive and exhausting process. Our study involved 1130 training samples, which underwent augmentation so that our model could learn the samples with suitable variations. The augmentation procedure was carried out using Python’s Albumentations library [39], which provides a wide variety of augmentation techniques. Figure 3 depicts the eight augmentation techniques involved, while Table 2 lists and explains the augmentation techniques used for model training.

Transfer learning

Transfer learning works on the idea of training a base network on a larger, more varied dataset and then reusing the learned features or “weights” when training on a second dataset. Pre-trained models, trained on the ImageNet dataset, which has around 1.2 million images across 1000 labels, have been repurposed for several classification tasks and thus contribute actively to research. A substantial amount of time is saved when parameters and hyperparameters are updated or fine-tuned for the task of interest, with only the last few layers being trained from scratch.

Residual network

ResNet is short for Residual Network, described in Fig. 4. To overcome the vanishing and exploding gradient problem that occurs during backpropagation, the idea of residual learning was introduced [40]. The building block of residual learning comprises the identity branch and the residual mapping function, which together make up the identity shortcut connection. The core idea behind residual learning is this “identity shortcut connection”, which skips one or more layers.

Mathematically, this may be explained as follows. Rather than having the stacked layers fit the desired underlying mapping directly,

\[ H(z), \]

the additional layers are made to fit another mapping, the residual:

\[ F(z) = H(z) - z, \]

which means \(H(z)\) gets transformed to:

\[ H(z) = F(z) + z. \]

This was accomplished by the use of “shortcut connections.”

Figure 4 gives the diagrammatic representation of the identity shortcut connections which make up the building block of residual learning.
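The shortcut connection described in this section, in which a block outputs its learned residual function plus its unchanged input, can be sketched in a few lines. This is an illustrative toy using small fully connected layers on plain Python lists; the helper names `relu`, `linear` and `residual_block` are hypothetical, and a real ResNet block uses convolutions and batch normalisation instead.

```python
def relu(v):
    """Element-wise rectified linear unit."""
    return [max(0.0, x) for x in v]


def linear(weights, v):
    """Matrix-vector product; weights is a list of rows."""
    return [sum(w * x for w, x in zip(row, v)) for row in weights]


def residual_block(z, w1, w2):
    """Return relu(F(z) + z), where F(z) = w2 * relu(w1 * z).

    The '+ z' term is the identity shortcut: the layers only have to
    learn the residual F(z) rather than the full mapping.
    """
    f = linear(w2, relu(linear(w1, z)))
    return relu([fi + zi for fi, zi in zip(f, z)])


z = [1.0, 2.0]
zero = [[0.0, 0.0], [0.0, 0.0]]
identity_out = residual_block(z, zero, zero)
```

When the residual weights are all zero, the block passes its (non-negative) input through unchanged, which is why adding such blocks does not make it harder for the network to represent the identity.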

Training the ResNet model

ResNet allows deep neural networks to be trained without vanishing/exploding gradient problems while also keeping computational time and classification accuracy in check for the task at hand. The authors of [40] offered different variants of ResNet with 50, 101 and 152 layers. For our multi-class classification task, ResNet-50 was chosen for its moderate model size and its effective generalization and classification with fewer errors. The hyperparameters used for fine-tuning the ResNet-50 model are given in Table 3. ResNet-50 filters follow two architecture criteria, as shown in Table 4. First, layers have the same number of filters when the input and output feature map sizes are the same. Second, halving the feature map size doubles the number of filters. Convolutional layers with a stride of 2 perform down-sampling, and batch normalisation occurs right after each convolution and before the ReLU activation. A global average pooling layer with a 7 × 7 window wraps up the network. As ResNet-50 was originally designed for the ImageNet dataset containing 1000 categories, only the last (i.e. fifth) convolutional block and the final classification layer were trained from scratch on our dataset, while the other layers were kept frozen (non-trainable). Figure 5 illustrates the basic architecture of our ResNet-50 model. Detailed analysis of the output layers, shape, filters and channel size is shown in Table 4.

Hyperparameter tuning

Considering the computational constraints and the need for model uniformity across the dataset, the model was tuned using the hyperparameters listed in Table 3. The values for the learning rate and exponential decay factor were chosen with the Adam optimizer in mind. Weights were initialised using the default Glorot uniform kernel initializer. The callbacks chosen to prevent overfitting were ReduceLROnPlateau (reduce the learning rate on plateau) and ModelCheckpoint (save the model with the associated weights). Categorical cross-entropy was used as the loss to track the training of the multi-class classification problem.

The loss is computed as

\[ \text{Loss} = -\sum_{c=1}^{K} y_{o,c} \log\left(p_{o,c}\right) \]

Here, \(K\) denotes the number of classes (4 classes in our case), \(y_{o,c}\) is the binary indicator of whether class \(c\) is the correct label for observation \(o\), and \(p_{o,c}\) is the predicted probability that observation \(o\) belongs to class \(c\).
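As a worked illustration of the categorical cross-entropy described above, the sketch below computes the loss for one-hot labels and predicted class probabilities, averaged over observations. The function name `categorical_cross_entropy` and the clipping constant `eps` are assumptions for this example; deep learning frameworks provide their own implementations.

```python
import math


def categorical_cross_entropy(y_true, p_pred, eps=1e-12):
    """Mean over observations of -sum_c y[o][c] * log(p[o][c]).

    y_true: one-hot rows, one per observation.
    p_pred: predicted class probabilities, same shape as y_true.
    eps: small floor (an assumption here) that avoids log(0).
    """
    total = 0.0
    for y_row, p_row in zip(y_true, p_pred):
        total -= sum(y * math.log(max(p, eps)) for y, p in zip(y_row, p_row))
    return total / len(y_true)


# One observation whose true class is the first of four classes
# and which the model assigns probability 0.5.
loss = categorical_cross_entropy([[1, 0, 0, 0]], [[0.5, 0.2, 0.2, 0.1]])
```

Only the probability assigned to the correct class contributes, so the loss here equals \(-\log 0.5 = \log 2\), and it falls to zero as that probability approaches 1.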

Performance metrics

We employ the most frequently used deep learning performance metrics, namely sensitivity (recall), specificity, precision, F1-score, ROC-AUC and the confusion matrix, to assess the performance of our models. Each of the scalar metrics lies in the range [0, 1]; the greater the value, the better the model’s performance. In the definitions below, TP stands for true positive, FP for false positive, TN for true negative, and FN for false negative.

Recall/Sensitivity: A statistical metric that measures how many accurate positive predictions were made out of all actual positive cases. It is determined by dividing the number of true positives by the sum of true positives and false negatives (i.e. the true positive rate).

\[ \text{Sensitivity} = \frac{TP}{TP + FN} \]

Specificity: A statistical metric that measures how many accurate negative predictions were made out of all actual negative cases. It is determined by dividing the number of true negatives by the sum of true negatives and false positives (i.e. the true negative rate).

\[ \text{Specificity} = \frac{TN}{TN + FP} \]

Precision: A statistical metric that measures how many of the positive predictions were correct. It is determined by dividing the number of true positives by the sum of true positives and false positives.

\[ \text{Precision} = \frac{TP}{TP + FP} \]

F1-score: The harmonic mean of precision and recall; it provides a more accurate estimate of misclassified cases than the accuracy metric.

\[ \text{F1} = \frac{2 \cdot \text{Precision} \cdot \text{Recall}}{\text{Precision} + \text{Recall}} \]

Accuracy: The proportion of true positives and true negatives among all of the examined cases. The mathematical expression to calculate accuracy is given by

\[ \text{Accuracy} = \frac{TP + TN}{TP + TN + FP + FN} \]
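For a multi-class problem such as the four-class task here, these metrics are usually computed per class in a one-vs-rest fashion from the confusion matrix. The sketch below shows one way to do this. The function name `per_class_metrics`, the row/column convention (rows are true classes, columns are predicted classes) and the example counts are assumptions for illustration; they are not results from the study.

```python
def per_class_metrics(cm):
    """One-vs-rest metrics from a square confusion matrix.

    cm[i][j] = number of samples of true class i predicted as class j.
    Returns (list of per-class metric dicts, overall accuracy).
    """
    n = len(cm)
    total = sum(sum(row) for row in cm)
    results = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                        # class k, predicted as other
        fp = sum(cm[i][k] for i in range(n)) - tp   # other, predicted as class k
        tn = total - tp - fn - fp
        sens = tp / (tp + fn) if tp + fn else 0.0
        spec = tn / (tn + fp) if tn + fp else 0.0
        prec = tp / (tp + fp) if tp + fp else 0.0
        f1 = 2 * prec * sens / (prec + sens) if prec + sens else 0.0
        results.append({"sensitivity": sens, "specificity": spec,
                        "precision": prec, "f1": f1})
    accuracy = sum(cm[k][k] for k in range(n)) / total
    return results, accuracy


# Made-up two-class counts, used only to exercise the function.
metrics, accuracy = per_class_metrics([[8, 2],
                                       [1, 9]])
```

Averaging the per-class values gives the macro-averaged sensitivity and specificity often reported as "overall" figures.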

Results

Following the methodology outlined in the previous section, with only 50 epochs, a training accuracy of 96.23% and a testing accuracy of 98.44% were achieved, as visualised in Fig. 6. Figure 6a shows the training and validation accuracy curves for 50 epochs of the ResNet-50 model. The curves show that the training and validation accuracy gradually increase as the number of epochs increases and reach their maximum at the 50th epoch. Figure 6b depicts the training and validation loss of the ResNet-50 model. As indicated in the graph, the training loss decreases as the number of epochs increases.

Table 5 shows the performance metrics of the ResNet-50 model on the test set. It reports the selected statistical metrics for the test set, with and without augmentation. From Table 5, it was inferred that accuracy and precision on the test set were higher with augmented images than without them.

A confusion matrix, sometimes called a contingency table, is a statistical method that is used to properly assess paired observations. Rows denote an expected class, while columns reflect an actual class, or vice versa. For our multi-class classification problem, the confusion matrix was used to assess the efficiency of the model in classifying the different stages of the disease. A Receiver Operating Characteristic (ROC) curve is a technique for determining the effectiveness of a test. It is a graph that plots the true positive rate (i.e. sensitivity) against the false positive rate (i.e. 1 − specificity) as the decision threshold varies. Figure 7a shows the confusion matrix for the multi-class classification of AD, CN, EMCI and LMCI, and Fig. 7b shows the ROC curve for the testing set used in our model.
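To make the construction of the ROC curve concrete, the sketch below sweeps a decision threshold over predicted scores for a single class against the rest and integrates the resulting curve with the trapezoidal rule. The function names `roc_points` and `auc`, and the example labels and scores, are hypothetical. The sketch assumes both positive and negative samples are present; for the four-class case it would be applied once per class.

```python
def roc_points(labels, scores):
    """Return ROC points (false positive rate, true positive rate).

    labels: 1 for the positive class, 0 for the rest.
    scores: predicted probability of the positive class.
    """
    pos = sum(labels)
    neg = len(labels) - pos
    ranked = sorted(zip(scores, labels), key=lambda t: -t[0])
    points = [(0.0, 0.0)]
    tp = fp = 0
    i = 0
    while i < len(ranked):
        threshold = ranked[i][0]
        # Samples with tied scores cross the threshold together.
        while i < len(ranked) and ranked[i][0] == threshold:
            if ranked[i][1] == 1:
                tp += 1
            else:
                fp += 1
            i += 1
        points.append((fp / neg, tp / pos))
    return points


def auc(points):
    """Area under a piecewise-linear curve by the trapezoidal rule."""
    return sum((x1 - x0) * (y0 + y1) / 2
               for (x0, y0), (x1, y1) in zip(points, points[1:]))


# Made-up scores: one negative sample is ranked above one positive sample.
area = auc(roc_points([1, 0, 1, 0], [0.9, 0.8, 0.7, 0.1]))
```

The area equals the fraction of positive-negative pairs that the scores rank correctly, which is three out of four pairs in this example.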

Visualising the activation layers

The feature maps of the first two layers of our trained model are shown in Fig. 8. Figures 8a and 8b each contain 64 images organized in an 8 by 8 grid. As the number of parameters grows rapidly in the later layers of the model, only the first two layers are presented. The activation map provides salient details on how the pertinent layers extract fine details from the image that could not be discerned by the naked eye.

Figure 8a, b visualises the first convolution layer and the max pooling layer using feature maps.

Comparison with other models

We compared our proposed method with other pre-trained deep learning models on the same dataset, under similar hyper-parameter settings (Fig. 9), over a range of 35–50 epochs. Test accuracy, test loss and training time (Table 6) were examined to identify the best model for deployment in practice.

The ResNet50V2 and MobileNetV2 models were trained in Google Colab for 13 and 14.4 h respectively, whereas Xception and DenseNet121 took 18.8 and 18.6 h respectively. ResNet50V2 was thus trained in less time than the other networks. Its test loss was also lower than that of the other pre-trained models. The maximum training time with maximum loss was observed for Xception. The pre-trained models were run in the Google Colab cloud environment from a machine with an Intel Core i5 processor and 8 GB RAM. The computational complexity could be reduced further by optimization, such as pruning weights and quantizing the network.

Discussion

According to current reports, AD may rank third (after heart disease and cancer) as a cause of death among the elderly [45]. According to several studies, recognizing Alzheimer’s disease in its early stages and preventing it from developing further has become essential. A great deal of research has been done on Alzheimer’s disease and its early phases, including MCI, using various imaging modalities such as MRI, functional MRI and FDG-PET [46,47,48,49]. Liu et al. proposed a combination of convolutional and recurrent neural networks to classify AD vs CN and MCI vs CN subjects from FDG-PET images, achieving AUC scores of 95.3% and 83.9% respectively [48]. Carlos et al. used an ensemble of SVM and RF models and achieved an overall accuracy of 66.78% for the AD, CN and MCI stages [49]. In the case of MCI individuals, the subtypes, which comprise the early and late phases of MCI development, are seldom discussed in the literature but are relevant to early therapy. Neuroimaging-based metrics have demonstrated great sensitivity in monitoring the stages of AD over time, and have therefore been proposed as prospective biomarkers to assess AD incidence, progression and responsiveness to treatment. There is a rising emphasis on using 18F-FDG-PET to diagnose and predict mild cognitive impairment in people with AD and those at risk of MCI. This has spurred interest in studying and classifying the EMCI and LMCI cohorts of the MCI group.

As a concrete effort towards designing a clinically relevant deep learning algorithm, we sought to build a generic model that incorporated brain images of the different conditions (stages of AD) that are representative of a clinical context. The ADNI repository made it simple to study the relationships between deep learning and image features, and solved the challenge of obtaining an ample amount of neuroimaging data in our chosen modality. To our knowledge, this is the first study of its kind to examine the multiple stages (AD, EMCI, LMCI, CN) of Alzheimer’s disease using the 18F-FDG-PET imaging technique and to achieve an overall AUC score of 95% by fine-tuning the ResNet50 model. Ding et al. performed a comparable study in which they used the InceptionV3 architecture to classify three groups (AD, MCI, CN) using the same imaging technique and obtained an AUC score of 98% [30]. Ford et al. evaluated suspected AD and frontotemporal lobar degeneration (FTLD) on FDG-PET images using machine learning techniques [50]. The authors used a random forest model for the classification of AD, FTLD, non-AD and non-FTLD and attained an accuracy of 63%. Their random forest model achieved AUCs of 0.82 and 0.89 using Z-score features from cerebellar and whole brain cerebellar regions to distinguish AD from non-AD patients. In the proposed study, the ResNet model produced a testing accuracy of 98.4% in the classification of AD, CN, EMCI and LMCI patients. The AUC for the ResNet-50 model was 0.95 on the testing dataset for the classification of the various stages of AD. Hence the ResNet-50 model outperforms the random forest classifier in discriminating the various stages of AD.

We further investigated the subtypes of MCI along with the AD and control groups, and enlarged the training set using image augmentation so that our deep learning model could classify the four categories with higher sensitivity and accuracy. Using feature maps, we visualised the filters and inner workings of the model. However, it remains a challenge to distinguish the various stages with the naked eye. With additional fine-tuning approaches and model visualisation methods, this framework may be useful for investigation in a clinical environment and could contribute significantly to the field of radiology. The limitations of the study are as follows. In clinical practice in the US, Medicare will typically only reimburse for FDG-PET if the question is to distinguish AD from frontotemporal dementia. In addition, ResNet50V2 does not appear to be optimized to distinguish between different subtypes of dementia.

Conclusion

The study investigated the potential effectiveness of the 18F-FDG PET imaging modality in classifying multiple stages of AD using a deep residual neural network. Using transfer learning and hyper-parameter tuning, a ResNet50 model was implemented to classify four AD categories. Our modified ResNet50 model classified the four categories with an overall sensitivity, specificity and ROC-AUC score of 94%, 95% and 95%, respectively, on the testing set. The implemented method was compared with other cutting-edge deep learning models: MobileNetV2, Xception, DenseNet121 and VGG16. The experiments show that our fine-tuned ResNet50 model outperforms these models in accuracy. The capability of the proposed technique to attain high classification accuracy (98.44% on our test set) using only the FDG-PET modality and the classification model makes it practically valuable for potential use during screening in radiological and clinical trials. By aiding the detection and localisation of irregular patterns at multiple stages of the disease from this imaging modality, we demonstrated that our model could be coupled with experts’ visual interpretation in existing clinical practice.
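For readers who wish to reproduce summary figures of this kind, the following sketch shows one common way to derive overall sensitivity and specificity for a four-class problem: compute each class's one-vs-rest rates from the confusion matrix and average them. The macro-averaging choice, the function names and the confusion matrix are hypothetical and are not taken from this study's results.

```python
from typing import List, Sequence, Tuple


def per_class_rates(cm: Sequence[Sequence[int]]) -> List[Tuple[float, float]]:
    """Return (sensitivity, specificity) for each class, treating that class
    as positive and all others as negative. cm[i][j] counts samples whose
    true class is i and predicted class is j."""
    n = len(cm)
    total = sum(sum(row) for row in cm)
    rates = []
    for k in range(n):
        tp = cm[k][k]
        fn = sum(cm[k]) - tp                       # true k, predicted otherwise
        fp = sum(cm[r][k] for r in range(n)) - tp  # predicted k, true otherwise
        tn = total - tp - fn - fp
        sensitivity = tp / (tp + fn) if (tp + fn) else 0.0
        specificity = tn / (tn + fp) if (tn + fp) else 0.0
        rates.append((sensitivity, specificity))
    return rates


def macro_average(rates: Sequence[Tuple[float, float]]) -> Tuple[float, float]:
    """Unweighted mean of the per-class sensitivities and specificities."""
    n = len(rates)
    return (sum(r[0] for r in rates) / n, sum(r[1] for r in rates) / n)


def accuracy(cm: Sequence[Sequence[int]]) -> float:
    """Fraction of all samples that lie on the diagonal."""
    total = sum(sum(row) for row in cm)
    return sum(cm[k][k] for k in range(len(cm))) / total


if __name__ == "__main__":
    # Hypothetical confusion matrix; rows are true classes, columns are
    # predictions, in the assumed order AD, CN, EMCI, LMCI.
    cm = [
        [9, 1, 0, 0],
        [0, 10, 0, 0],
        [0, 1, 8, 1],
        [0, 0, 2, 8],
    ]
    sens, spec = macro_average(per_class_rates(cm))
    print(f"sensitivity={sens:.3f} specificity={spec:.3f} accuracy={accuracy(cm):.3f}")
```

In a multi-class setting specificity is usually high because every class has many true negatives, so it is most informative when reported alongside per-class sensitivity.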

Data availability

The data that support the findings of this study are available on request from the corresponding author.

References

Coyle JT, Price DL, DeLong MR (1983) Alzheimer’s disease: a disorder of cortical cholinergic innervation. Science 219:1184–90. https://doi.org/10.1126/science.6338589

Stutzmann GE (2007) The pathogenesis of alzheimers disease—is it a lifelong “calciumopathy”? Neuroscientist 13:546–559. https://doi.org/10.1177/1073858407299730

Brookmeyer R, Johnson E, Ziegler-Graham K, Arrighi H (2007) Forecasting the global burden of Alzheimer’s disease. Alzheimer’s Dement 3:186–191. https://doi.org/10.1016/j.jalz.2007.04.381

Sloane P, Zimmerman S, Suchindran C, Reed P, Wang L, Boustani M, Sudha S (2002) The public health impact of Alzheimer’s disease, 2000–2050: potential implication of treatment advances. Ann Rev Public Health 23:213–31. https://doi.org/10.1146/annurev.publhealth.23.100901.140525

Tobias M, Yeh LC, Johnson E (2008) Burden of Alzheimer’s disease: population-based estimates and projections for New Zealand, 2006–2031. Aust N Z J Psychiatry 42:828–36. https://doi.org/10.1080/00048670802277297

Vickland V, McDonnell G, Werner J, Draper B, Low LF, Brodaty H (2010) A computer model of dementia prevalence in Australia: foreseeing outcomes of delaying dementia onset, slowing disease progression, and eradicating dementia types. Dement Geriatr Cogn Disord 29:123–130. https://doi.org/10.1159/000272436

Jorm AF (2002) History of depression as a risk factor for dementia: an updated review. Aust N Z J Psychiatry 35:776–781. https://doi.org/10.1046/j.1440-1614.2001.00967.x

Flaten TP (2001) Aluminium as a risk factor in Alzheimer’s disease, with emphasis on drinking water. Brain Res Bull 55:187–196. https://doi.org/10.1016/S0361-9230(01)00459-2

Ferreira PC, Piai KA, Takayanagui AMM, Segura-Muñoz SL (2008) Aluminum as a risk factor for Alzheimer’s disease. Rev lat Am Enfermagem 16:151–157. https://doi.org/10.1590/S0104-11692008000100023

Fleminger S, Oliver D, Lovestone S, Rabe-Hesketh S, Giora A (2003) Head injury as a risk factor for Alzheimer’s disease: the evidence 10 years on; a partial replication. J Neurol Neurosurg Psychiatry 74:857–862. https://doi.org/10.1136/jnnp.74.7.857

Chosy EJ, Gross N, Meyer M, Liu CY, Edland SD, Launer LJ, White LR (2019) Brain injury and later-life cognitive impairment and neuropathology: the Honolulu-Asia aging study. J Alzheimer’s Dis 73:317–325. https://doi.org/10.3233/JAD-190053

Mckhann G, Drachman D, Folstein M, Katzman R, Price D, Stadlan EM (1984) Clinical diagnosis of Alzheimer’s disease: report of the NINCDS–ADRDA work group under the auspices of department of health and human services task force on Alzheimer’s disease. Neurology 34:939–44. https://doi.org/10.1212/wnl.34.7.939

Jack C, Albert M, Knopman D, McKhann G, Sperling R, Carrillo M, Thies B, Phelps C (2011) Introduction to the recommendations from the national institute on aging and the Alzheimer’s association workshop on diagnostic guidelines for Alzheimer’s disease. Alzheimer’s Dement 7:257–262. https://doi.org/10.1016/j.jalz.2011.03.004

Subramanyam AA, Singh S (2016) Mild cognitive decline: concept, types, presentation, and management. J Geriatr Mental Health 3:10–20. https://doi.org/10.4103/2348-9995.181910

Jessen F, Wolfsgruber S, Wiese B, Bickel H, Mösch E, Kaduszkiewicz H, Pentzek M, Riedel-Heller S, Luck T, Fuchs A, Weyerer S, Werle J, van den Bussche H, Scherer M, Maier W, Wagner M (2013) AD dementia risk in late MCI, in early MCI, and in subjective memory impairment. Alzheimer’s Dement 10:76–83. https://doi.org/10.1016/j.jalz.2012.09.017

Ahmed S, Choi KY, Lee JJ, Kim BC, Kwon GR, Lee KH, Jung HY (2019) Ensembles of patch-based classifiers for diagnosis of Alzheimer diseases. IEEE Access 7:73373–73383. https://doi.org/10.1109/ACCESS.2019.2920011

Al-Khuzaie F, Duru A (2021) Diagnosis of Alzheimer disease using 2D MRI slices by convolutional neural network. Appl Bionics Biomech. https://doi.org/10.1155/2021/6690539

Chételat G, Arbizu J, Barthel H (2020) Amyloid-PET and 18F-FDG-PET in the diagnostic investigation of Alzheimer’s disease and other dementias. Lancet Neurol 19:951–962. https://doi.org/10.1016/S1474-4422(20)30314-8

Shah A, Niaz K, Ahmed M, Bunyan R (2019) Diagnosis of Alzheimer’s disease using brain imaging: state of the art. In: Ashraf G, Alexiou A (eds) Biological, diagnostic and therapeutic advances in Alzheimer’s disease. Springer, Singapore

Duara R, Loewenstein DA, Greig MT, Potter E, Barker W, Raj A, Schinka J, Borenstein A, Schoenberg M, Wu Y, Banko J, Potter H (2011) Pre-MCI and MCI: neuropsychological, clinical, and imaging features and progression rates. Am J Geriatr Psychiatry 19:951–960. https://doi.org/10.1097/JGP.0b013e3182107c69

Scahill RI, Schott JM, Stevens JM, Rossor MN, Fox NC (2002) Mapping the evolution of regional atrophy in Alzheimer’s disease: unbiased analysis of fluid-registered serial MRI. Proc Natl Acad Sci USA 99:4703–4707. https://doi.org/10.1073/pnas.052587399

Rombouts SA, Barkhof F, Veltman DJ, Machielsen WC, Witter MP, Bierlaagh MA, Lazeron RH, Valk J, Scheltens P (2000) Functional MR imaging in Alzheimer’s disease during memory encoding. Am J Neuroradiol 21:1869–1875

Kato T, Knopman D, Liu H (2001) Dissociation of regional activation in mild AD during visual encoding: a functional MRI study. Neurology 57:812–816. https://doi.org/10.1212/wnl.57.5.812

Fellgiebel A, Yakushev I (2011) Diffusion tensor imaging of the hippocampus in MCI and early Alzheimer’s disease. J Alzheimer’s Dis. https://doi.org/10.3233/JAD-2011-0001

Almuhaideb A, Papathanasiou N, Bomanji J (2011) 18 F-FDG PET/CT imaging in oncology. Ann Saudi Med 31:3–13. https://doi.org/10.4103/0256-4947.75771

Chew J, Silverman DHS (2013) FDG-PET in early AD diagnosis. Med Clin North Am 97:485–94. https://doi.org/10.1016/j.mcna.2012.12.016

Chen K, Ayutyanont N, Langbaum JBS, Fleisher AD, Reschke C, Lee W, Liu X, Bandy D, Alexander GE, Thompson PM, Shaw L, Trojanowski JQ, Jack CR, Landau SM, Foster NL, Harvey DJ, Weiner MW, Koeppe RA, Jagust WJ, Reiman EM, The Alzheimer’s Disease Neuroimaging Initiative (2011) Characterizing Alzheimer’s disease using a hypometabolic convergence index. Neuroimage 56:52–60. https://doi.org/10.1016/j.neuroimage.2011.01.049

Choi H, Ha S, Kang H, Lee H, Lee DS (2019) Deep learning only by normal brain PET identify unheralded brain anomalies. EBioMedicine 43:447–453. https://doi.org/10.1016/j.ebiom.2019.04.022

Wee CY, Liu C, Lee A, Poh JS, Ji H, Qiu A (2019) Cortical graph neural network for AD and MCI diagnosis and transfer learning across populations. NeuroImage Clin 23:101929. https://doi.org/10.1016/j.nicl.2019.101929

Ding Y, Sohn JH, Kawczynski MG, Trivedi H, Harnish RM, Jenkins NW, Lituiev D, Copeland TP, Aboian MS, Aparici CM, Behr SC, Flavell RR, Huang SY, Zalocusky KA, Nardo L, Seo Y, Hawkins RA, Pampaloni MH, Hadley D, Franc BL (2018) A deep learning model to predict a diagnosis of Alzheimer disease by using 18 F-FDG PET of the brain. Radiology 290:456–464. https://doi.org/10.1148/radiol.2018180958

Liu M, Cheng D, Yan W (2018) Classification of Alzheimer’s disease by combination of convolutional and recurrent neural networks using FDG-PET images. Front Neuroinform 12:35. https://doi.org/10.3389/fninf.2018.00035

Singh S, Srivastava A, Mi L, Caselli RJ, Chen K, Goradia D, Reiman EM, Wang Y (2017) Deep learning based classification of FDG-PET data for Alzheimer’s disease categories. Proc SPIE Int Soc Opt Eng. https://doi.org/10.1117/12.2294537

Ramzan F, Khan MUG, Rehmat A, Iqbal S, Saba T, Rehman A, Mehmood Z (2020) A deep learning approach for automated diagnosis and multi-class classification of Alzheimer’s disease stages using resting-state fMRI and residual neural networks. J Med Syst 44:37. https://doi.org/10.1007/s10916-019-1475-2

Hinrichs C, Singh V, Xu G, Johnson SC (2011) Predictive markers for AD in a multi-modality framework: an analysis of MCI progression in the ADNI population. Neuroimage 55(2):574–589. https://doi.org/10.1016/j.neuroimage.2010.10.081

Zhang D, Shen D (2012) Multi-modal multi-task learning for joint prediction of multiple regression and classification variables in Alzheimer’s disease. Neuroimage 59(2):895–907. https://doi.org/10.1016/j.neuroimage.2011.09.069

Pellegrini E, Ballerini L, del Valdes Hernandez MC, Chappell FM, González-Castro V, Anblagan D et al (2018) Machine learning of neuroimaging for assisted diagnosis of cognitive impairment and dementia: a systematic review. Alzheimer’s Dement Diagn 10:519–535

Grueso S, Viejo-Sobera R (2021) Machine learning methods for predicting progression from mild cognitive impairment to Alzheimer’s disease dementia: a systematic review. Alzheimer’s Res Ther 13:162. https://doi.org/10.1186/s13195-021-00900-w

Alzheimer’s Disease Neuroimaging Initiative PET technical procedures manual. http://adni.loni.usc.edu/wp-content/uploads/2010/09/PET-Tech_Procedures_Manual_v9.5.pdf. Published 2006. Accessed July 30, 2021

Buslaev A, Iglovikov VI, Khvedchenya E, Parinov A, Druzhinin M, Kalinin AA (2020) Albumentations: fast and flexible image augmentations. Information 11:125. https://doi.org/10.3390/info11020125

He K, Zhang X, Ren S, Sun J (2015) Deep residual learning for image recognition. arXiv:1512.03385

Sandler M, Howard A, Zhu M, Zhmoginov A, Chen LC (2018) MobileNetV2: inverted residuals and linear bottlenecks. IEEE Conf Comput Vision Pattern Recognit (CVPR) 2018:4510–4520. arXiv:1801.04381

Chollet F (2017) Xception: deep learning with depthwise separable convolutions. IEEE Conf Comput Vision Pattern Recognit (CVPR) 2017:1800–1807. https://doi.org/10.1109/CVPR.2017.195

Huang G, Liu Z, van der Maaten L, Weinberger KQ (2017) Densely connected convolutional networks. IEEE Conf Comput Vision Pattern Recognit (CVPR) 2017:2261–2269. https://doi.org/10.1109/CVPR.2017.243

Simonyan K, Zisserman A (2014) Very deep convolutional networks for large-scale image recognition. arXiv:1409.1556

Koh KH, Parekh AK (2018) Toward a united states of health: implications of understanding the US burden of disease. JAMA 319:1438–1440. https://doi.org/10.1001/jama.2018.0157

Gao F, Yoon H, Xu Y, Goradia D, Luo Ji WT, Su Y (2020) AD-NET: age-adjust neural network for improved MCI to AD conversion prediction. NeuroImage: Clin 27:102290. https://doi.org/10.1016/j.nicl.2020.102290

Parmar H, Nutter B, Long L, Antani S, Mitra S (2020) Spatiotemporal feature extraction and classification of Alzheimer’s disease using deep learning 3D-CNN for fMRI data. J Med Imaging 7:056001. https://doi.org/10.1117/1.JMI.7.5.056001

Liu M, Cheng D, Yan W (2018) Classification of Alzheimer’s disease by combination of convolutional and recurrent neural networks using FDG-PET images. Front Neuroinform 12:35. https://doi.org/10.3389/fninf.2018.00035

Cabral C, Morgado P, Costa D, Silveira M (2015) Predicting conversion from MCI to AD with FDG-PET brain images at different prodromal stages. Comput Biol Med 58:101–109. https://doi.org/10.1016/j.compbiomed.2015.01.003

Ford JN, Sweeney EM, Skafida M, Glynn S, Amoashiy M, Lange DJ, Lin E, Chiang GC, Osborne JR, Pahlajani S, de Leon MJ (2021) Heuristic scoring method utilizing FDG-PET statistical parametric mapping in the evaluation of suspected Alzheimer disease and frontotemporal lobar degeneration. Am J Nucl Med Mol Imaging 11:313

Funding

The authors did not receive support from any organization for the submitted work.

Author information

Contributions

MT, US: made substantial contributions to the conception or design of the work; or the acquisition, analysis, or interpretation of data; or the creation of new software used in the work; MT, US: drafted the work or revised it critically for important intellectual content; US: approved the version to be published.

Ethics declarations

Conflicts of interest

The authors Mahima and U. Snekhalatha declare that they have no conflicts of interest or competing interests.

Ethical approval

Not applicable.

Consent to participate

Informed consent was obtained from all the participants in the study.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Thakur, M., Snekhalatha, U. Multi-stage classification of Alzheimer’s disease from 18F-FDG-PET images using deep learning techniques. Phys Eng Sci Med 45, 1301–1315 (2022). https://doi.org/10.1007/s13246-022-01196-2

DOI: https://doi.org/10.1007/s13246-022-01196-2