Abstract

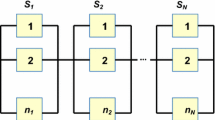

This study examines several reliability aspects of a series–parallel system. The system is made up of three subsystems, A, B, and C, that are interconnected in a series–parallel manner. Subsystem B has two units, whereas subsystems A and C each have a single unit. System reliability measures such as reliability, availability, and mean time to failure are evaluated using the Laplace transformation and a Markov process. The study also minimizes the system cost, with system reliability as a constraint, using a metaheuristic algorithm, Particle Swarm Optimization (PSO). Lastly, a numerical example and graphical representations show that the proposed methods are effective and efficient for evaluating the reliability measures and solving the cost problem.

1 Introduction

Reliability is vitally important at every stage in all types of industries, and it has become a major part of day-to-day life. Users expect a device or system to always operate as intended (Li, 2012). The series–parallel configuration underlies many systems and is used extensively throughout the world, for instance in refrigerators, air-conditioners, and geysers. Hence, a well-designed series–parallel configuration is necessary for a system to be highly reliable. Today, there is a strong emphasis on complex systems that are both highly reliable and inexpensive.

Reliability theory has become a highly popular subject in the literature and has spread across technical disciplines. In engineering and mathematics, several authors have worked on system reliability, and various techniques have been used to analyze the behavior of series–parallel systems and determine their reliability measures. Markov models are beneficial when a decision problem entails risk that is continuous over time, when the timing of events is crucial, and when decisive events may occur more than once; they can be used to capture the transition probabilities as changes occur. Some extensively used techniques are the Markov chain, the Markov process, and the regenerative point technique. Levitin et al. (2013) presented a technique for assessing the reliability and performance distribution of complicated non-repairable multi-state systems (MSS). Zhou et al. (2014) studied a model based on the Markov process, motivated by the high reliability required of onboard computer systems that are exposed to single-event disruptions. Their work provides a system reliability prediction technique and identifies the main module of system reliability using the Markov model; graph theory is used to forecast the system's reliability. Montoro-Cazorla and Pérez-Ocón (2018) studied a reliability system that is susceptible to shocks, internal breakdowns, and audits. The system is governed by a Markov process under some assumptions, and shocks cause several units to fail or be damaged at the same time. Qiu and Ming (2019) discussed an MSS in which each component behaves randomly and must meet its demand with shared bus performance. If a unit performs better than its demand, the excess can be distributed to other units in the network that have a shortfall. Jiang et al. (2019) optimized the loading of MSSs from the standpoint of cumulative performance and evaluated a collection of integrals to obtain the cumulative performance at failure or at a specific time. Kvassay et al. (2019) considered the system topology to determine the structure-function characteristics that may be estimated in both static and dynamic cases. The authors also demonstrate the effective use of modular decomposition for these computations. Kumar and Kumar (2020) determined the reliability of a wireless communication system using mean time to failure under varying failure rates, sensitivity analysis, the Markov process, and mathematical modelling. Xie et al. (2020) presented a method to study the effects of cascading failures in systems.

Once the reliability has been evaluated, the system may be further optimized using heuristic and metaheuristic methods. The search for the best solution in a complex space is known as an optimization problem, which is common in many engineering fields. Numerical approaches may be useful when a problem cannot be solved analytically, or can be solved but takes too much time, although there is no guarantee that the solution will be globally optimal (Şenel et al., 2019). Wang and Singh (2007) optimized the configuration of an autonomous hybrid generating system with a variety of power sources, including photovoltaics, storage batteries, and wind turbine generators. Tavakkoli-Moghaddam et al. (2008) proposed a Genetic Algorithm (GA) approach for a problem whose intricacy makes it extremely challenging to solve optimally with conventional optimization tools. Their research shows the effectiveness of GA for problems of this nature, and a discussion of the algorithm's robustness follows the presentation of computational results for a representative case. Ouzineb et al. (2008) recommended a Tabu Search (TS) heuristic to determine the lowest-cost system design under availability constraints. In most cases, the suggested TS outperforms GA alternatives in terms of solution quality and execution time. Aghaei et al. (2014) used nonlinear formulations to offer a multiobjective technique for Multi Stage Distribution Expansion Planning (MDEP) when DGs are present. Cost reduction, undistributed energy, active power losses, and a voltage stability index based on short-circuit capacity make up the MDEP's objective functions. The efficacy of the proposed method is evaluated on a typical data set, and results for the 33-bus test system are reported. The evolution of PSO and its applications to optimization are briefly covered in the chapter by Pant et al. (2017). Mellal and Zio (2019) addressed the optimization of a multi-objective system, for example maximizing reliability while decreasing cost, weight, and volume, with the aid of PSO. Peiravi et al. (2020) developed an exact Markov-based methodology. It is a strong and reliable instrument that has the added benefit of quick computation. The model is solved using GA, and according to the findings, the suggested Markov model produces better solutions with greater reliability values. Saghih and Modares (2021) proposed a newer model for the redundancy allocation problem (RAP) that takes warm standby, mixed strategies, and model dynamics into consideration. PSO and GA are used to find a solution, and an example demonstrates the efficacy of the proposed strategy. Ling et al. (2021) studied the best subsystem grouping approach to increase system reliability and demonstrated the combined shock process. In terms of major optimization order, several component allocation policies are examined. Marouani (2021) presented an upgraded and improved PSO method to solve reliability-redundancy allocation problems in series, parallel, and complex systems. The results reveal that, for all three evaluated scenarios, the total system reliability is significantly higher than that of various systems proposed in earlier studies.

In this article, the authors use Transition State Probabilities (TSP) to determine the upstate and downstate probabilities. The authors also optimize the system cost using PSO, taking reliability as a constraint, and examine the system's reliability characteristics graphically.

The remaining sections are organized as follows: Sect. 2 gives the mathematical details of the system. Section 3 explains the methodology used to evaluate the reliability of the series–parallel system. Section 4 discusses some reliability measures. Section 5 covers cost optimization with a metaheuristic algorithm. Section 6 discusses the results of the whole article. Lastly, Sect. 7 concludes the study and outlines future work.

2 System details

This section provides the notations, description, and state-transition diagram of the series–parallel system, which are then used to assess its performance characteristics such as availability, reliability, and MTTF.

2.1 Notations

All the notations used in the modeling of the system are described below.

| Notation | Description |
| --- | --- |
| \(t\) | Time variable in years |
| \(s\) | Variable of Laplace transformation |
| \(\gamma\) | System repair rate from a malfunctioning condition to a functional state |
| \({\delta }_{A}, {\delta }_{B}, {\delta }_{C}\) | Failure rates for subsystems A, B, C |
| \({p}_{i}(t)\) | Transition state probability, where i = 0 to 7 |
| \({p}_{up}\) | System upstate probability |
| \({p}_{down}\) | System downstate probability |
| \(\overline{p}(s)\) | Laplace transformation of p(t) |
| \({p}_{i}(q,t)\) | The probability of the failed state \({p}_{i}\), where i = 8 to 11 |

2.2 System description

Consider a series–parallel system that contains three subsystems: A, B, and C. Subsystems A and C are single units, while B is a double unit (B1 and B2), as shown in Fig. 1. If subsystems A and B fail, the whole system remains in a partially working condition with subsystem C. Similarly, after the failure of C, the system remains in a partially working condition with subsystems A and B. Failure of A and C, or of B and C, results in overall system failure.

The state-transition diagram of the system has three types of states: good, degraded, and failed. There are twelve states in total, of which one is the good state, seven are degraded states, and four are complete failure states. Figure 2 shows the state-transition diagram of the proposed model, and the related states are described in Table 1.

3 Model construction and solution

The following set of differential equations describes the model mathematically. The differential equations for states s0 to s7, which are the good and degraded states, are given by Eqs. (1–9). Equations (10–13) are the differential equations for states s8 to s11, which are the completely failed states.

Boundary conditions

Initial condition

The Laplace transform is one of the most significant methods for solving linear differential equations. In contrast to Fourier series methods, the Laplace transform produces nonperiodic solutions: the Fourier series of a nonperiodic function is always a periodic series, so solutions of differential equations obtained from such series are also periodic. The Laplace transform is applied to time-domain problems for t ≥ 0 and takes the initial condition into account directly. Taking the Laplace transformation of Eqs. (1–13) and using Eq. (14), we have
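The step relies on the standard differentiation property of the Laplace transform, recalled here for a generic state probability \(p_i(t)\). This is a textbook identity given for orientation only; it is not one of the numbered equations of this article.

\[
\mathcal{L}\left\{\frac{d p_i(t)}{dt}\right\} = s\,\overline{p}_i(s) - p_i(0), \qquad \overline{p}_i(s) = \int_0^{\infty} e^{-st}\, p_i(t)\, dt
\]

Each time derivative therefore becomes an algebraic term in \(s\). With the system starting in the good state, so that \(p_0(0) = 1\) and every other state probability is zero at \(t = 0\), only the transformed equation for state s0 picks up a constant term.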

Boundary conditions

Solving Eqs. (15)–(23) with the help of Eqs. (24)–(27), we obtain the transition state probabilities as

The probabilities of the system's up-state and down-state are determined as

4 Some measures

4.1 Availability determination

The availability of a system reflects both its reliability and its maintenance (Ram and Singh, 2014). Substituting the failure rates \({\delta }_{A}=0.10\), \({\delta }_{B}=0.004\), \({\delta }_{C}=0.08\) and the repair rate \(\gamma =1\) in Eq. (28) and taking the inverse Laplace transformation (ILT), one obtains the system's availability in terms of t as

Varying the time t from 0 to 50 in steps of 2 in Eq. (29) shows how the availability of the complex system behaves in the long run. The results are given in Table 2 and the related Fig. 3.
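The same kind of time sweep can be illustrated on the simplest repairable model, a single unit with constant failure rate \(\delta\) and repair rate \(\gamma\) that starts in the up state, for which the point availability is the well-known closed form \(A(t) = \frac{\gamma}{\delta+\gamma} + \frac{\delta}{\delta+\gamma}e^{-(\delta+\gamma)t}\). The sketch below is only an illustration under that single-unit assumption; it is not the twelve-state model of this article and does not reproduce Table 2. The function names are hypothetical, and \(\delta = 0.10\), \(\gamma = 1\) are borrowed from the text as sample values.

```python
import math


def availability(t, failure_rate, repair_rate):
    """Point availability A(t) of ONE repairable unit that is up at t = 0.

    Assumption: two-state (up/down) Markov model with constant rates,
    not the twelve-state model of the article.
    """
    total = failure_rate + repair_rate
    steady_state = repair_rate / total
    return steady_state + (failure_rate / total) * math.exp(-total * t)


def availability_table(failure_rate, repair_rate, t_max=50, step=2):
    """Evaluate A(t) for t = 0, step, 2*step, ..., t_max."""
    return [(t, availability(t, failure_rate, repair_rate))
            for t in range(0, t_max + 1, step)]


if __name__ == "__main__":
    # Sample values taken from the text: delta = 0.10, gamma = 1.
    for t, a in availability_table(0.10, 1.0):
        print(f"{t:2d}  {a:.6f}")
```

In this simplified model the availability starts at 1 and decays toward the steady-state value \(\gamma/(\delta+\gamma)\), which is the qualitative long-run behaviour such a sweep is meant to expose.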

4.2 MTTF determination

The mean time to failure (MTTF) of a technological product is the average operating time before a non-repairable failure. A system's MTTF can be found from its average time between failures (Goyal et al., 2017a). Letting the Laplace variable s tend to zero and setting the repair rate \(\gamma =0\) in Eq. (28), one obtains the MTTF as

Varying \({\delta }_{A}\), \({\delta }_{B}\), and \({\delta }_{C}\) in turn over the values 0.1, 0.2, 0.3, 0.4, 0.5, 0.6, 0.7, 0.8, 0.9, with the remaining failure rates set to \({\delta }_{A}=0.10\), \({\delta }_{B}=0.004\), and \({\delta }_{C}=0.08\), one can find the variation of the MTTF with respect to the failure rates. The results are shown in Table 3 and Fig. 4.
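The limit used above to obtain the MTTF follows from a standard identity: for a non-repairable system, the MTTF is the area under the reliability curve, which equals the Laplace transform of the reliability evaluated at \(s = 0\). In the sketch below, \(\overline{p}_{up}(s)\) is shorthand (an assumed symbol, not defined in the notation list) for the transformed up-state probability of Eq. (28).

\[
\text{MTTF} = \int_0^{\infty} R(t)\, dt = \lim_{s \to 0} \overline{R}(s) = \lim_{s \to 0} \overline{p}_{up}(s)\Big|_{\gamma = 0}
\]

As a one-line check on a single unit with constant failure rate \(\delta\): \(R(t) = e^{-\delta t}\), so \(\overline{R}(s) = 1/(s+\delta)\) and the MTTF is \(1/\delta\), which decreases as the failure rate grows.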

4.3 Reliability determination

Reliability is the probability that a device will function as intended for a certain amount of time under predetermined conditions. Reliability is always a function of time (Goyal et al., 2017b). Fixing the failure rates at \({\delta }_{A}=0.10\), \({\delta }_{B}=0.004\), and \({\delta }_{C}=0.08\), setting the repair rate to zero in Eq. (28), and taking the ILT, the authors get

Varying the time t from 0 to 50 in steps of 2 in Eq. (32) gives Table 4 and Fig. 5, which show the variation of the system's reliability.

5 Cost optimization of series–parallel system with PSO

The Particle Swarm Optimization (PSO) algorithm is one of the most effective optimization algorithms and is frequently referenced in the literature. Owing to its ease of use, small number of parameters, and quick convergence rate, PSO has been successfully applied to numerous optimization problems. The authors chose this algorithm because it performs well on a variety of problems from various domains.

PSO is a population-based metaheuristic optimization approach (Kennedy and Eberhart, 1995a; Eberhart and Kennedy, 1995b). It takes its cues from the way fish school or birds flock together to find food. In PSO, the initial population is generated at random within the search domain. Each particle stores its own best position, and the best position of the swarm is also stored. As the swarm iterates, the position of each particle is updated by the following relation:

Here, i indexes the particles in the swarm and d denotes the iteration step. The random numbers r1 and r2 lie in the range [0, 1], x is the position vector, v is the velocity vector, and w is the inertia weight. The coefficients c1 and c2 are the optimization parameters and should be non-negative. The best position found by the ith particle is pi (local best), and pg is the global best position of the swarm.
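With the symbols defined above, the widely used inertia-weight form of the PSO update can be written as follows. This is the textbook form, and it is an assumption that it matches the exact variant used in this study.

\[
v_i^{d+1} = w\, v_i^{d} + c_1 r_1 \left(p_i - x_i^{d}\right) + c_2 r_2 \left(p_g - x_i^{d}\right), \qquad x_i^{d+1} = x_i^{d} + v_i^{d+1}
\]

The first term keeps part of the previous velocity, the second pulls the particle toward its own best position, and the third pulls it toward the best position found by the swarm.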

With a small probability, the PSO method replaces the new position and velocity of a particle with a random position within the search space instead of accepting them. The purpose of this operation is to escape from local minima. The process runs until an optimal value is obtained or a predefined maximum number of iterations is reached (Şenel et al., 2019). The flowchart of the PSO algorithm is shown in Fig. 6.

The authors use PSO to reduce the system cost while maintaining the required reliability.

The reliability (R) and cost (C) of the series–parallel system are given by (Negi et al., 2021; Tillman et al., 1970)

To obtain the result, the following non-linear programming problem is solved.

Minimize C.

Subject to the constraint

where K1 = 200, K2 = 250, K3 = 150, and αi = 0.6 for i = 1, 2, 3.

To obtain the minimum cost of the system, PSO is run with 200 random particles, cognitive constant c1 = 20.9, social constant c2 = 2.03, and 1000 iterations. With these values, the resulting system cost is 481.97.

For the cost optimization of the series–parallel system, the PSO algorithm has been implemented in MATLAB using a simple penalty function method to handle the constraints, and the result is plotted in Fig. 7.
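The penalty-function idea can be sketched in a few lines of Python. Everything below is an illustration under stated assumptions and not the authors' MATLAB implementation: the cost is assumed to have the Tillman-style form \(C = \sum_i K_i r_i^{\alpha_i}\) with the \(K_i\) and \(\alpha_i\) quoted above, while the structure function `system_reliability`, the required reliability `R_MIN`, the bounds, and the PSO settings (`w`, `c1`, `c2`, swarm size, iteration count) are hypothetical choices made for the example. The sketch is therefore not expected to reproduce the reported cost of 481.97.

```python
import random

K = (200.0, 250.0, 150.0)   # cost coefficients K1..K3 quoted in the text
ALPHA = (0.6, 0.6, 0.6)     # exponents alpha_i quoted in the text
R_MIN = 0.90                # hypothetical required system reliability
BOUNDS = (0.5, 0.999)       # hypothetical bounds on each reliability r_i


def cost(r):
    # Assumed Tillman-style cost: sum of K_i * r_i ** alpha_i.
    return sum(k * ri ** a for k, ri, a in zip(K, r, ALPHA))


def system_reliability(r):
    # Hypothetical structure: A in series with a two-unit parallel B and C.
    ra, rb, rc = r
    return ra * (1.0 - (1.0 - rb) ** 2) * rc


def penalized(r, penalty=1e4):
    # Simple static penalty: add a charge proportional to the shortfall.
    shortfall = max(0.0, R_MIN - system_reliability(r))
    return cost(r) + penalty * shortfall


def pso(n_particles=30, n_iter=200, w=0.7, c1=1.5, c2=1.5, seed=0):
    rng = random.Random(seed)
    lo, hi = BOUNDS
    dim = len(K)
    x = [[rng.uniform(lo, hi) for _ in range(dim)] for _ in range(n_particles)]
    v = [[0.0] * dim for _ in range(n_particles)]
    pbest = [p[:] for p in x]
    pbest_f = [penalized(p) for p in x]
    g = min(range(n_particles), key=pbest_f.__getitem__)
    gbest, gbest_f = pbest[g][:], pbest_f[g]
    history = [gbest_f]                     # best fitness per iteration
    for _ in range(n_iter):
        for i in range(n_particles):
            for j in range(dim):
                r1, r2 = rng.random(), rng.random()
                v[i][j] = (w * v[i][j]
                           + c1 * r1 * (pbest[i][j] - x[i][j])
                           + c2 * r2 * (gbest[j] - x[i][j]))
                # Clamp the new position to the search bounds.
                x[i][j] = min(hi, max(lo, x[i][j] + v[i][j]))
            f = penalized(x[i])
            if f < pbest_f[i]:
                pbest[i], pbest_f[i] = x[i][:], f
                if f < gbest_f:
                    gbest, gbest_f = x[i][:], f
        history.append(gbest_f)
    return gbest, gbest_f, history


if __name__ == "__main__":
    best, best_f, hist = pso()
    print("best reliabilities:", [round(ri, 4) for ri in best])
    print("penalized cost:", round(best_f, 2))
    print("system reliability:", round(system_reliability(best), 4))
```

Plotting `history` against the iteration index gives a convergence curve of the same kind as a fitness-versus-iteration figure. The static penalty keeps the optimizer unconstrained at the price of one extra tuning parameter, which is why it is often chosen as the simplest way to handle constraints.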

6 Result discussion

In this paper, the system's reliability, availability, and MTTF are evaluated using a Markov process, and the cost is minimized using the PSO technique. The following observations were made during the study.

- From Table 2 and the corresponding Fig. 3, it is observed that the designed system's availability gradually declines as time goes on. The availability graph remains constant between 46 and 48 years, after which it declines again with time.

- The MTTF of the system has been evaluated with respect to the various failure rates shown in Table 3, which shows that as the failure rates \({\delta }_{A}, {\delta }_{B}, {\delta }_{C}\) increase, the system's MTTF decreases (Fig. 4).

- Table 5 shows how the passage of time affects the system's reliability. As time goes on, the system's reliability decreases in a curvilinear and uniform manner, as illustrated in Fig. 5.

- Figure 7 shows the relation between the number of iterations and the fitness function; the system's cost decreases as the iterations increase, and the optimized cost of the system is 481.97.

7 Conclusion

The current work focuses on the reliability measures and cost optimization of a system that consists of three subsystems connected in a series–parallel manner. The Markov process is used to evaluate the system's availability, MTTF, and reliability. The PSO technique is used to optimize the cost.

A large body of literature exists on series–parallel systems and the Markov process (Levitin et al., 2013; Tsumura et al., 2013; Zhou et al., 2014), but metaheuristics have not been used in that work. The study shows that the availability and reliability of the series–parallel system decrease as time passes. The MTTF results show that the MTTF of the system decreases as the failure rates increase, and the MTTF graph shows that failure rate \({\delta }_{B}\) has a higher degree of variation than failure rates \({\delta }_{A}\) and \({\delta }_{C}\). A metaheuristic algorithm has been used to optimize the cost of the proposed system with reliability as a constraint, and the cost is reduced efficiently with this technique. In future work, the authors intend to analyse other parameters such as MTTR and dependability, and a hybrid algorithm could be used to optimize the cost.

References

Aghaei J, Muttaqi KM, Azizivahed A, Gitizadeh M (2014) Distribution expansion planning considering reliability and security of energy using a modified PSO (particle swarm optimization) algorithm. Fac Eng Inform Sci 65:398–411

Eberhart R, Kennedy J (1995b) Particle swarm optimization. In: Proceedings of the IEEE international conference on neural networks (Vol. 4, pp. 1942–1948)

Goyal N, Ram M, Kaushik A (2017a) Performability of solar thermal power plant under reliability characteristics. Int J Syst Assur Eng Manag 8:479–487

Goyal N, Ram M, Amoli S, Suyal A (2017b) Sensitivity analysis of a three-unit series system under k-out-of-n redundancy. Int J Qual Reliab Manag 34(6):770–784

Jiang T, Liu Y, Zheng YX (2019) Optimal loading strategy for multi-state systems: cumulative performance perspective. Appl Math Model 74:199–216

Kennedy J, Eberhart RC (1995a) Particle swarm optimization. In: Proceedings of the IEEE international conference on neural networks IV (pp. 1942–1948). Piscataway: IEEE

Kumar A, Kumar P (2020) Application of Markov process/mathematical modelling in analysing communication system reliability. Int J Qual Reliab Manag 37(2):354–371

Kvassay M, Rusnak P, Rabcan J (2019) Time-dependent analysis of series-parallel multistate systems using structure function and markov processes. Advances in system reliability engineering. Elsevier, pp 131–165. https://doi.org/10.1016/B978-0-12-815906-4.00005-1

Levitin G, Xing L, Ben-Haim H, Dai Y (2013) Reliability of series-parallel systems with random failure propagation time. IEEE Trans Reliab 62(3):637–647

Li ZS (2012) Reliability engineering, 2nd edition. J Qual Technol 44(4):394–395. https://doi.org/10.1080/00224065.2012.11917908

Ling X, Zhang Y, Gao Y (2021) Reliability optimization in series-parallel and parallel-series systems subject to random shocks. Proc Instit Mech Eng Part O J Risk Reliab 235(6):998–1008

Marouani H (2021) Optimization for the redundancy allocation problem of reliability using an improved PSO algorithm. J Optim 2021:1–9

Mellal MA, Zio E (2019) An adaptive PSO method for multi-objective system reliability optimization. Proc Instit Mech Eng Part O J Risk Reliab 233(6):990–1001

Montoro-Cazorla D, Pérez-Ocón R (2018) Constructing a markov process for modelling a reliability system under multiple failures and replacements. Reliab Eng Syst Saf 173:34–47

Negi G, Kumar A, Pant S, Ram M (2021) Optimization of complex system reliability using hybrid grey wolf optimizer. Decis Making Appl Manag Eng 4(2):241–256

Ouzineb M, Nourelfath M, Gendreau M (2008) Tabu search for the redundancy allocation problem of homogenous series-parallel multi-state systems. Reliab Eng Syst Saf 93(8):1257–1272

Pant S, Kumar A, Ram M (2017) Reliability optimization: a particle swarm approach. In: Ram M, Davim JP (eds) Advances in reliability and system engineering. Springer International Publishing, Cham, pp 163–187. https://doi.org/10.1007/978-3-319-48875-2_7

Peiravi A, Ardakan MA, Zio E (2020) A new Markov-based model for reliability optimization problems with a mixed redundancy strategy. Reliab Eng Syst Saf 201:106987

Qiu S, Ming HX (2019) Reliability evaluation of multi-state series-parallel systems with common bus performance sharing considering transmission loss. Reliab Eng Syst Saf 189:406–415

Ram M, Singh V (2014) Modeling and availability analysis of internet data center with various maintenance policies. Int J Eng 27(4):599–608

Saghih AMF, Modares A (2021) A new dynamic model to optimize the reliability of the series-parallel systems under warm standby components. J Ind Manag Optim 19(1):376–401

Şenel FA, Gökçe F, Yüksel AS, Yiğit T (2019) A novel hybrid PSO–GWO algorithm for optimization problems. Eng Comput 35(4):1359–1373

Tavakkoli-Moghaddam R, Safari J, Sassani F (2008) Reliability optimization of series-parallel systems with a choice of redundancy strategies using a genetic algorithm. Reliab Eng Syst Saf 93(4):550–556

Tillman FA, Hwang CL, Fan LT, Lai KC (1970) Optimal reliability of a complex system. IEEE Trans Reliab 19(3):95–100

Wang L, Singh C (2007) Compromise between cost and reliability in optimum design of an autonomous hybrid power system using mixed-integer PSO algorithm. In: 2007 International conference on clean electrical power. IEEE, Capri, Italy. pp. 682–689

Xie L, Lundteigen MA, Liu Y (2020) Reliability and barrier assessment of series–parallel systems subject to cascading failures. Proc Instit Mech Eng Part O J Risk Reliab 234(3):455–469

Zhou G, Guo B, Gao X, Zhao D, Yan Y (2014) Research on the system reliability modeling based on the Markov process and reliability block diagram. Intelligent data analysis and its applications, vol II. Springer, Cham, pp. 545–553

Acknowledgements

One of the authors, Miss Shivani Choudhary, is thankful to Graphic Era Deemed to be University, Dehradun, India, for providing a Ph.D. fellowship for this research work.

Funding

No funding was required for the completion of this study.

Ethics declarations

Conflict of interest

There is no conflict of interest for the publication of this manuscript.

Human and animal rights

No research involving humans or animals was carried out in this work.

Appendix

Boundary Conditions

Initial Condition.

In the good condition, \(p_{0} = 1\) and all other state probabilities are zero.

From Eq. (34)

Neglecting higher-order terms of \(\Delta t\), since \(\Delta t\) is very small, we get

Dividing both sides by \(\Delta t\)

Similarly, from Eq. (35) to (41)

From Eq. (42), by a Taylor expansion

Since \(\Delta q \approx \Delta t\), we get

Similarly, from Eq. (43) to (45)

About this article

Cite this article

Choudhary, S., Ram, M., Goyal, N. et al. Reliability and cost optimization of series–parallel system with metaheuristic algorithm. Int J Syst Assur Eng Manag 15, 1456–1466 (2024). https://doi.org/10.1007/s13198-023-01905-4

DOI: https://doi.org/10.1007/s13198-023-01905-4