Abstract

In recent years, image inpainting approaches have shown remarkable improvements by employing encoder-decoder-based convolutional neural networks (CNNs). An important issue is that texture information is lost during the convolution procedure. Previous works addressed this problem by employing skip connection strategies, which deliver encoder features to a symmetrical decoder, neglecting the fact that the shallow encoder layers contain both zeros and noise. In this paper, we propose a novel strategy that applies a residual partial module (RePM) to divide the feature maps into two branches (i.e., identity and residual branches) to replace skip connections. The identity branch retains the texture information, and the residual branch eliminates noise. Moreover, we propose a mask discriminator that judges the ground truth of the missing area. Experiments on benchmark datasets show the effectiveness of our method in producing coherent images, with our approach performing favourably against existing approaches.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

Image inpainting involves filling in missing areas in damaged images given a corresponding mask [5]. This task can be used in scenes like real-world object removal or image editing. Compared to traditional image inpainting methods [4, 5, 9, 10], which propagate uncorrupted image contents to the corrupted regions through patch-based matching, deep neural networks have greatly advanced image inpainting by inferring the image’s semantics to fill incomplete regions. These methods [17, 28] utilize features learned from convolutions in different convolution layers to produce more meaningful and consistent results.

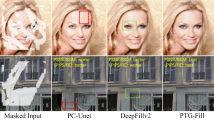

The encoder-decoder framework is commonly used in convolutional neural network (CNN)-based inpainting approaches [17, 21, 28, 36, 37]. However, simply employing skip connections in the encoder-decoder architecture for inpainting has limited effects because shallow encoder layers may have zeros (pixel value = 0) or noise in hole regions. Thus, in practice, the synthesis image may be disturbed by the shallow layer encoder features that are directly connected to the decoder. A useful solution to address this issue is employing a two-stage encoder-decoder architecture. This method [24, 27, 32, 34, 35, 39, 40] typically generates a coarse result in the first stage and leverages the second-stage network to refine the coarse image. However, this method consumes additional memory and training time than traditional approaches. Moreover, the refining stage will deteriorate if the coarse component fails to train adequately. For example, EdgeConnect (edge) [27] and contextual attention (CA) [39] are typical two-stage methods. As shown in Fig. 1c, the images repaired by the edge method are blurred in the hole regions. Furthermore, the visual effect of the image restored by the CA method is very distorted, especially in the center of the mask area (Fig. 1g). Other methods that attempted to solve this problem, such as Partial Conv (PC) [22] and Shift-Net [36], could not successfully address this issue. The PC method has notable artificial traces at the edge of the mask area (Fig. 1b), and Shift-Net distorts the features in the repaired image (Fig. 1h).

In this paper, we propose a novel inpainting strategy to handle insufficient skip connections in one-stage encoder-decoder networks. The shallow layer features from the encoder, which contain zeros and noise in hole regions, may disrupt the semantic features recovered by the deep layers if they connect directly to the corresponding decoder. Thus, we propose residual partial connections to replace skip connections. This strategy consists of two branches: the identity branch and the residual partial branch. The identity branch maintains the original information of the feature map extracted by the encoder, and the residual partial branch eliminates noise and infers zeros in hole regions based on the uncorrupted regions. The identity branch directly outputs the feature map, and the residual partial branch consists of 3 partial convolutions. After the zeros and noise are eliminated by the residual partial branch, we propose a feature equalization method to integrate the two branches. The proposed feature fusion strategy consists of feature concatenation and feature channel fusion. We concatenate two features and employ a \(1\times 1\) kernel convolution to fuse the feature channels [16]. Then, we connect the features produced by the residual partial module to those of the decoder. We input the repaired images produced by the generator into two discriminators: a global discriminator and a mask discriminator. The global discriminator ensures the global authenticity of the image, and the mask discriminator determines the authenticity of the mask regions.

Overview of the rest of paper In Sect. 2, we will introduce the related work of image inpainting and their limitation. And the next section, we will present our method. In Sect. 4, we demonstrate the superiority of our method over other methods by quantitative and qualitative. Also, an ablation study in this section’s last part reflects the effectiveness of our model. The summary of the article will be shown in Sect. 5.

Experiments on benchmark datasets show that the proposed method performs favourably against state-of-the-art approaches. Our contributions can be summarized as follows:

-

We propose the residual partial network (RePNet), a generic approach that replaces skip connections with a residual partial convolution module (RePM) to address the issue of incomplete information passing from the encoder to the decoder.

-

We propose a mask discriminator, which works with the global discriminator to determine the authenticity of the overall image and restored parts of the image.

-

The experimental results demonstrate that our approach performs better than other methods in terms of both objective and subjective metrics.

2 Related works

Traditional Image Inpainting Traditional image inpainting methods can be categorized as patch-based patch-based [4, 6, 7, 13] or diffusion-based [3, 5, 6, 9] methods. Diffusion-based methods propagate neighbouring regions to the target area to synthesize textures. However, these methods address small holes by considering the pixels surrounding target holes and thus cannot generate meaningful structures. In contrast to diffusion-based methods, patch-based approaches complete hole areas by finding and transferring similar image patches from the remaining image region. Although these methods perform well in filling small holes in background inpainting tasks, they cannot generate semantically meaningful results. Therefore, these methods cannot adequately deal with large missing regions.

Deep image inpainting Recently, deep learning-based methods [1, 2, 11, 14, 26, 30, 31, 33] have been utilized to solve many tasks, like object detection, style transfer, semantic segmentation, etc. It is also famous for the image inpainting task. They typically utilize the generative adversarial network [12]. A significant advantage of these methods is that they have the ability to infer the semantics from the mask regions. Pathak et al. [28] first attempt to employ an encoder-decoder architecture with adversarial training for image inpainting. However, the results lack global consistency and contain visual artefacts. Iizuka et al. [17] adopt global and local discriminators and full convolutions networks to solve this problem. Yu et al. [39] found that using only convolutions is insufficient to address long-range dependencies. They proposed a contexture attention mechanism to solve this problem. Yan et al. [35] presented a shift-net module to find similar patches in uncorrupted regions to obtain better predictions. Liu et al. [22] introduced a partial convolution, where the mask area of the window normalizes convolution weights. This technique effectively prevents the convolution filters from capturing too many zeros when they recover the hole regions. Following the [22], Yu et al. [40] proposed a gate convolution that learns the mask-update strategy. Nazeri et al. [27], and Ren et al. [32] generated reasonable structures by using additional prior information. EdgeConnect [27] and StructureFlow [32] recover edge maps and structure maps, respectively, and then fill in the details in the target regions. However, the methods proposed in [27] and [32] separate structure and texture synthesis into two stages and thus do not sufficiently fuse the features, generating visual distortions. Liu et al. [24] proposed semantic coherent semantic attention considering the feature coherency of hole regions. Liu et al. [23] presented a feature fuse strategy. Peng et al. [29] employ a three-stage technique in which the latent space present of structure and texture are studied first, and the structure and texture are inpainted in the second and third stages, respectively. However, [23, 24, 29] consume considerable memory, and the results are limited.

3 Method

We first present an overview of the proposed RePGAN model and how this model restores masked images. Figure 2 shows the two components of our model: the RePNet generator G (Fig. 2a) and two discriminators (global discriminator \(D_g\) and mask discriminator \(D_m\) in Fig. 2c). Given a mask image M(as shown in Fig. 3) and ground truth image \(I_g\), we first generate its masked image input \(I_{in} = I_g \times (1-M)\). Then, G takes \(I_{in}\) as input and generates the repaired result \(I_{out}\). \(D_g\) and \(D_m\) determine the authenticity of \(I_{out}\) and \(M_{out}\), respectively. The details of G and the discriminators are given following.

3.1 RePNet generator

Our RePNet generator G is an encoder-decoder-based convolutional network. An overview of the model architecture is illustrated in Fig. 2a. G is an end-to-end image generator, with six convolutional layers in the encoder and six convolutional layers in the decoder. In addition, four residual blocks [27] with dilated convolutions [38] are set between the encoder and decoder. Dilated convolutions utilize inflated kernels to reduce the computational resource and increase the size of the receptive field thereby allowing the network better to perceive the encoder features. The blue block in Fig. 2a represents our proposed ReP module, which is discussed in more detail in Sect. 3.2.

Sample of masks, the left image is center mask and other images are irregular mask proposed by [22]

Let \(I_g\) be a ground truth image and M be a mask image (1 represents missing areas, 0 represents known regions). The mask images are categorized as the center mask image and irregular mask images, as shown in Fig. 3. The center mask image is \(128\times 128\) square holes at the center of a \(256 \times 256\) image, and irregular mask images are a dataset of approximately 12k mask images provided in [22]. The input to the RePNet generator is the masked image \(I_{in} = I_g \times (1-M)\), and the generator outputs a color image \(I_{out}\), with the missing hole regions filled in. The output image has the same scale as the input image:

To describe the process of G in more detail, we separate G into four parts: the encoder En, the middle blocks (residual blocks) Mid, the ReP Module Re, and the decoder De. Formally, given the ith encoder layer feature \(En_i\), after traversing the ReP Module (as described in Sect. 3.2), we input the feature into the corresponding decoder:

\(De_i\) is shown in light orange, Mid is shown in yellow, Eni is shown in green, and Rei is shown in blue in Fig. 2(a).

3.2 Rep-module

The methods in [14, 33] lose some feature information as the network depth increases. These approaches copy the encoder features and crop them based on the corresponding decoder through skip connections. Unfortunately, skip connections are inefficient in image inpainting tasks because the features contain zero points and noise. Thus, directly copying and cropping this wrong information to the decoder may affect the ability of the generator to synthesize complete images. Therefore, to address this issue, we propose our ReP module, which is based on skip connections, as illustrated in Fig. 2b. In this module, we design two branches (i.e., an identity branch and a residual partial convolution branch), which retain and propagate the original information to the hole regions. The identity branch is equivalent to the skip connection, which enriches the original information and zero points of the features. The residual partial convolution branch eliminates the zeros and noise in the hole regions through partial convolutions [22]. Assuming that \(F_i\) (namely \(En_i\) in Sect. 3.1) is a feature from the ith convolutional layer. Then, we obtain two feature maps, \(F_{i1}\) and \(F_{i2}\) from the identity branch and partial convolution branch, respectively. \(F_{i1}\) contains the original feature map information, including zero points and noise in the hole regions. \(F_{i2}\) eliminates the hole regions and thus loses some of the original information. We then combine these two feature branches to utilize their different advantages.

We use feature equalization to integrate these two branches. This process is formulated as follows:

where f(.) denotes the feature equalizetion process. Assuming that \(F_{i1} \in \mathbb {R}^{c\times h\times w}\) and \(F_{i2} \in \mathbb {R}^{c\times h\times w}\), and \(F_ir\) is the output of this module. We concatenate these features: \((F_{i1}\bigoplus F_{i2})\in \mathbb {R}^{2c\times h\times w}\). Then, a convolution with a kernel size of 1 is used to simply fuse these features and compress the channels, which is denoted as: \(f = Conv((F_{i1}\bigoplus F_{i2}))\in \mathbb {R}^{c\times h\times w}\). Then, We pool the feature in the channel dimension to obtain the temporary variable \(F_t\in \mathbb {R}^{c\times 1\times 1}\). This process is formulated as follows:

We use the squeeze and excitation [16] \(F_t\) after two linear layers, \(FC_1\) and \(FC_2\). \(FC_1\) compresses the features and \(FC_2\) restores them. We then apply \(FC_1(pool(F_c))\in \mathbb {R}^{c/r \times 1\times 1}\), where r is the reduction rate. The output of \(FC_2\) is denoted as \(\sigma\), and the final formula is \(\sigma =FC_2(FC_1(F_t))\in \mathbb {R}^{c\times 1\times 1}\). \(\sigma\) is the importance of every channel in synthesize the final output, where \(F_ir=\sigma \times f\).

Different ReP modules extract distinct encoder layer features and transmit these features to the corresponding decoder layer.

3.3 Mask discriminator

Most image inpainting methods implement only a global discriminator. Although these methods can discern whether an image is real or restored, the global discriminator focuses on the global semantics and neglects the local details. Moreover, the unmasked region is the actual content, which improves the score of the fake image. To address these issues, we propose a mask discriminator to determine the authenticity of the hole regions. We use a global discriminator and a mask discriminator to jointly determine the authenticity of the images. The networks are based on convolution neural networks, which compress the image into a value. The outputs of the networks are two values output by the global and mask discriminators, which are added together. An overview of the networks is shown in Fig. 2c.

The global discriminator takes a \(256\times 256\) pixel image as input. This discriminator consists of five convolutional layers and two linear layers. The convolutional layers extract the image features, and the linear layers compress these features to a value. All convolution layers utilize a stride of \(2\times 2\) pixels to decrease the image resolution. Spectral normalization and activation functions are set after every convolutional layer. We selected LeakyReLU(\(\alpha\)=0.2) as the activation function of the convolutional layers and the sigmoid function as the activation function of the final linear layer. The sigmoid function restricts the final output value to the range of [0, 1]. This value represents the probability that the image is real rather than retorted.

The mask discriminator follows the same architecture, except that the input is a \(256 \times 256\) image that contains areas without valid pixels, and the remaining pixels in the area are set to 0. The output value represents the likelihood of the truth of the mask region.

Finally, the loss functions of the global and mask discriminators are calculated separately, and their outputs are combined.

3.4 Loss function

We denote several loss functions to measure the differences between completed image and ground truth image including pixel reconstruction loss, style loss, perceptual loss, and GAN loss during training.

Pixel reconstruction loss We measure the pixel-difference by calculating the similarity between the ground truth and the finally predicted image by the network. It can be written as follows:

where Iout and Igt represent the network output and ground truth respectively.

Perceptual loss We utilize the perceptual loss [18] to simulate human perception of images quality and capture the high-level semantics. The perceptual loss defined on the ImageNet-pretrained VGG-16 feature backbone:

where \(\varPhi _i\) is the feature map from the i-th layer of the pretrained VGG-16 backbone. In our work, \(\varPhi _i\) was extracted from layers ReLU1-1, ReLU2-1, ReLU3-1, ReLU4-1 and ReLU5-1.

Style loss The deconvolutions from the decoder will bring artifacts that resemble checkerboard. To moderate this effect, we introduce the style loss. Given feature maps of size \(C_j\times H_j\times W_j\), we compute their Gram matrix (\(G_j\)) and then compute the style loss as follows:

where \(G_j^{\varPhi }\) is a \(C_j\times C_j\) Gram matrix constructed from the selected \(\varPhi _i\), namely these feature maps are the same as those used in the perceptual loss.

GAN loss We adopt Relativistic Average LS [19] adversarial loss for our global and mask discriminators. For the generator, the adversarial loss is defined as:

where \(D_{ra}(xr,xf)=sigmoid(C_{x_r}-\mathbb {E}_{x_f} [C_{x_f}])\) and C(.) indicates the local or global discriminator without the last sigmoid function. To this end, real and fake data pairs \((x_r,x_f)\) are sampled from the ground-truth and output image.

Total losses The whole function of the proposed network can be written as:

where \(\lambda _r\), \(\lambda _p\), \(\lambda _s\), \(\lambda _{adv}\) are the hyper parameters. In our implementation, we empirically set \(\lambda _r\) = 1, \(\lambda _p\) = 0.1, \(\lambda _s\) = 250, \(\lambda _{adv}\) = 0.2.

4 Experiments

We evaluate the proposed network through comparisons with several state-of-the-art methods in terms of both quantitative and qualitative metrics. The details of the experimental settings are provided in Sect. 4.1, the experimental results are given in Sect. 4.2, and an ablation study is presented in Sect. 4.3 to prove our module’s effectiveness.

4.1 Experimental settings

We conduct experiments on three datasets, Places2 [41], CelebA-HQ [25] and Paris Street View [8] to evaluate our method. These three datasets contain 10k images, 30k images, and 19k images, respectively. We use the original train, test, and validation splits for these three datasets. Several data augmentation strategies, such as flipping, are adopted during training. Our model is optimized by the Adam algorithm [20] with a learning rate of \(2\times 10^{-4}\) and \(\beta _1=0.5\). We trained our model on a single NVIDIA 1080Ti (11GB) with a batch size of 1. We stopped training the model on the Places2, CelebA-HQ, and Paris Street View after 100, 30, and 70 epoches, respectively. All images and masks were resized to \(256\times 256\) for training and testing. We compare our methods with five state-of-the-art methods:

-

CA: Contextual Attention, proposed by Yu et al. [39]

-

SH: Shift-Net, proposed by Yan et al. [36]

-

PC: Partial Conv, proposed by Liu et al. [22]

-

Edge: Edge-Connect, proposed by Nazeri et al. [27]

-

HVAE: Hierarchical VQ-VAE, proposed by Peng et al. [29]

We evaluate the methods based on both centring masks and irregular holes [39]. Irregular masks are classified based on different hole-to-image area ratios (e.g., 10–20 (%), 20–30 (%)). We compare our method with the CA and SH models through centre mask experiments because these two methods perform better on centre masks than on irregular masks. We compare our method with the PC, Edge, CA, and HVAE models through irregular mask experiments because these four methods perform well on irregular masks.

4.2 Experimental results

Quantitative comparisons The image inpainting task lacks absolute metrics to judge the final result. To compare the results as accurately as possible, we use metrics that were applied in previous works to evaluate the methods. The metrics can be divided into two types: distortion measurement metrics and perceptual quality measurement metrics. The L1-distance (L1), peak signal-to-noise ratio (PSNR), and structural similarity index (SSIM) assume that the results are the same as those of the target image. These metrics are used to assess the distortion of the results. The Frechet inception distance (FID) [15] calculates the Wasserstein-2 distance between two distributions. This metric indicates the perceptual quality of the results. The final evaluation results of the irregular mask experiments are reported in Table 1, which shows that our method achieves better performance than all baseline models in filling irregular holes. Table 2 shows that our method outperforms the other approaches in filling centre holes. Moreover, our method obtains clear improvements in all metrics, which demonstrates the effectiveness of our method. The number of parameters and the inference time shown in Table 3 indicate that the effectiveness of RePGAN does not increase the complexity.

Qualitative comparisons Figures 4 and 5 show visual comparisons between our method and five state-of-the-art methods. Figure 4 visually compares our method with Edge, PC, and HVAE in irregular hole experiments. The Edge method fails to generate satisfactory textures. Although this method can produce appropriate content, the results in the hole regions are considerably more blurry than the results in other areas. Because Edge is a typical two-stage encoder–decoder network without skip connections, substantial information is lost during the convolutional process. The PC results contain unrepaired edges and noise in the mask areas. The main reason for this result is that the PC model utilizes skip connections, which transfer noise and zero points from the encoder to the decoder. Although HVAE generates images with better quality and textures than the abovementioned methods, the mask boundaries have clear traces and local blurring. In contrast, our method produces more realistic textures. The centre hole filling results are shown in Fig. 5. The images produced by CA are distorted and look unnatural. Shift-Net generates natural images, but the centre of the hole regions is not sufficiently coherent. These methods utilize standard convolutions, which generate noise in the hole regions. In contrast, our model produces more coherent content. In summary, RePGAN generates more meaningful semantics and clearer textures than existing approaches.

User study We also performed a subjective study to assess the visual quality of our results. Approximately 30 volunteers answered 20 questions each. Volunteers chose the most realistic image among 3 results generated by different methods (CA, SH, and ours). We summed the results and show the statistics in Table 4. Our model achieves the best results among the comparison methods on the benchmark datasets.

4.3 Ablation study

To demonstrate the effectiveness of the ReP module and mask discriminator, we replaced the ReP module with the simple skip connections with centre masks in the Places2 dataset. The objective results are reported in Table 5, and the subjective results are shown in Fig. 6. Without the ReP module, the results become blurry and distorted and contain noise. In addition, we used only the global discriminator during training in the centre mask experiments on the Paris Street View dataset. The objective results are reported in Table 6, and the subjective results are shown in Fig. 6. The results demonstrate the effectiveness of our proposed ReP module and mask discriminator in image inpainting tasks.

5 Conclusion

In this paper, we present the RePGAN network for image inpainting. In detail, we propose a residual partial module to replace the skip connections used in traditional methods. The ReP module includes an identity branch and a residual partial branch. The identity branch delivers the encoder’s information to the decoder, and the residual partial branch eliminates the noise generated by the encoder. We also propose a mask discriminator to judge the authenticity of the mask area in the images during training. The experimental results demonstrate that our method performs better than state-of-the-art approaches in terms of both quantitative and qualitative aspects.

References

Alzubi JA, Jain R, Kathuria A, Khandelwal A, Saxena A, Singh A (2020) Paraphrase identification using collaborative adversarial networks. J Intell Fuzzy Syst 39(1):1021–1032

Alzubi JA, Jain R, Nagrath P, Satapathy S, Taneja S, Gupta P (2021) Deep image captioning using an ensemble of cnn and lstm based deep neural networks. J Intell Fuzzy Syst 40(4):5761–5769

Ballester C, Bertalmio M, Caselles V, Sapiro G, Verdera J (2001) Filling-in by joint interpolation of vector fields and gray levels. IEEE Trans Image Process 10(8):1200–1211

Barnes C, Shechtman E, Finkelstein A, Goldman DB (2009) Patchmatch: a randomized correspondence algorithm for structural image editing. ACM Trans Graph 28(3):24

Bertalmio M, Sapiro G, Caselles V, Ballester C (2000) Image inpainting. In: Proceedings of the 27th annual conference on Computer graphics and interactive techniques, pp 417–424

Criminisi A, Pérez P, Toyama K (2004) Region filling and object removal by exemplar-based image inpainting. IEEE Trans Image Process 13(9):1200–1212

Darabi S, Shechtman E, Barnes C, Goldman DB, Sen P (2012) Image melding: combining inconsistent images using patch-based synthesis. ACM Trans Graph (TOG) 31(4):1–10

Doersch C, Singh S, Gupta A, Sivic J, Efros A (2012) What makes paris look like paris? ACM Transactions on Graphics 31(4)

Efros AA, Freeman WT (2001) Image quilting for texture synthesis and transfer. In: Proceedings of the 28th annual conference on computer graphics and interactive techniques, pp 341–346

Efros AA, Leung TK (1999) Texture synthesis by non-parametric sampling. In: Proceedings of the seventh IEEE international conference on computer vision, vol 2, pp 1033–1038. IEEE

Gheisari M, Panwar D, Tomar P, Harsh H, Zhang X, Solanki A, Nayyar A, Alzubi JA (2019) An optimization model for software quality prediction with case study analysis using matlab. IEEE Access 7:85123–85138

Goodfellow I, Pouget-Abadie J, Mirza M, Xu B, Warde-Farley D, Ozair S, Courville A, Bengio Y (2014) Generative adversarial nets. Adv Neural Inf Process Syst 27

Hays J, Efros AA (2007) Scene completion using millions of photographs. ACM Trans Graph (ToG) 26(3):4-es

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 770–778

Heusel M, Ramsauer H, Unterthiner T, Nessler B, Hochreiter S (2017) Gans trained by a two time-scale update rule converge to a local nash equilibrium. Adv Neural Inf Process Syst 30

Hu J, Shen L, Sun G (2018) Squeeze-and-excitation networks. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 7132–7141

Iizuka S, Simo-Serra E, Ishikawa H (2017) Globally and locally consistent image completion. ACM Trans Graph (ToG) 36(4):1–14

Johnson J, Alahi A, Fei-Fei L (2016) Perceptual losses for real-time style transfer and super-resolution. In: European conference on computer vision, pp 694–711. Springer, New York

Jolicoeur-Martineau A (2018) The relativistic discriminator: a key element missing from standard gan. arXiv:1807.00734

Kingma DP, Ba J (2014) Adam: a method for stochastic optimization. arXiv:1412.6980

Li Y, Liu S, Yang J, Yang MH (2017) Generative face completion. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 3911–3919

Liu G, Reda FA, Shih KJ, Wang TC, Tao A, Catanzaro B (2018) Image inpainting for irregular holes using partial convolutions. In: Proceedings of the European conference on computer vision (ECCV), pp 85–100

Liu H, Jiang B, Song Y, Huang W, Yang C (2020) Rethinking image inpainting via a mutual encoder-decoder with feature equalizations. In: Computer vision–ECCV 2020: 16th European Conference, Glasgow, UK, August 23–28, 2020, Proceedings, Part II 16, pp 725–741. Springer

Liu H, Jiang B, Xiao Y, Yang C (2019) Coherent semantic attention for image inpainting. In: Proceedings of the IEEE/CVF International Conference on Computer Vision, pp. 4170–4179

Liu Z, Luo P, Wang X, Tang X (2015) Deep learning face attributes in the wild. In: Proceedings of the IEEE international conference on computer vision, pp 3730–3738

Movassagh AA, Alzubi JA, Gheisari M, Rahimi M, Mohan S, Abbasi AA, Nabipour N (2021) Artificial neural networks training algorithm integrating invasive weed optimization with differential evolutionary model. J Ambient Intell Hum Comput, pp 1–9

Nazeri K, Ng E, Joseph T, Qureshi FZ, Ebrahimi M (2019) Edgeconnect: Generative image inpainting with adversarial edge learning. arXiv:1901.00212

Pathak D, Krahenbuhl P, Donahue J, Darrell T, Efros AA (2016) Context encoders: feature learning by inpainting. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 2536–2544

Peng J, Liu D, Xu S, Li H (2021) Generating diverse structure for image inpainting with hierarchical vq-vae. In: Proceedings of the IEEE/CVF conference on computer vision and pattern recognition (CVPR), pp 10775–10784

Redmon J, Farhadi A (2018) Yolov3: an incremental improvement. arXiv:1804.02767

Ren S, He K, Girshick R, Sun J (2015) Faster r-cnn: towards real-time object detection with region proposal networks. Adv Neural Inf Process Syst 28

Ren Y, Yu X, Zhang R, Li TH, Liu S, Li G (2019) Structureflow: image inpainting via structure-aware appearance flow. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 181–190

Ronneberger O, Fischer P, Brox T (2015) U-net: convolutional networks for biomedical image segmentation. In: International conference on medical image computing and computer-assisted intervention, pp 234–241. Springer

Song Y, Yang C, Lin Z, Liu X, Huang Q, Li H, Kuo CCJ (2018) Contextual-based image inpainting: infer, match, and translate. In: Proceedings of the European conference on computer vision (ECCV), pp 3–19

Song Y, Yang C, Shen Y, Wang P, Huang Q, Kuo CCJ (2018) Spg-net: segmentation prediction and guidance network for image inpainting. arXiv:1805.03356

Yan Z, Li X, Li M, Zuo W, Shan S (2018) Shift-net: image inpainting via deep feature rearrangement. In: Proceedings of the European conference on computer vision (ECCV), pp 1–17

Yeh RA, Chen C, Yian Lim T, Schwing AG, Hasegawa-Johnson M, Do MN (2017) Semantic image inpainting with deep generative models. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5485–5493

Yu F, Koltun V (2015) Multi-scale context aggregation by dilated convolutions. arXiv:1511.07122

Yu J, Lin Z, Yang J, Shen X, Lu X, Huang TS (2018) Generative image inpainting with contextual attention. In: Proceedings of the IEEE conference on computer vision and pattern recognition, pp 5505–5514

Yu J, Lin Z, Yang J, Shen X, Lu X, Huang TS (2019) Free-form image inpainting with gated convolution. In: Proceedings of the IEEE/CVF international conference on computer vision, pp 4471–4480

Zhou B, Lapedriza A, Khosla A, Oliva A, Torralba A (2017) Places: a 10 million image database for scene recognition. IEEE Trans Pattern Anal Mach Intell 40(6):1452–1464

Acknowledgements

This work was supported in part by the National Natural Science Foundation of China under Grant 62072169 and Changsha Science and Technology Research Plan under Grant KQ2004005.

Author information

Authors and Affiliations

Corresponding author

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

L. Sun is a graduate student at Hunan University.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Sun, L., Jiang, B., Yang, C. et al. RePGAN: image inpainting via residual partial connection and mask discriminator. Int. J. Mach. Learn. & Cyber. 14, 3193–3203 (2023). https://doi.org/10.1007/s13042-023-01827-4

Received:

Accepted:

Published:

Issue Date:

DOI: https://doi.org/10.1007/s13042-023-01827-4