Abstract

The accelerated pace of digital technology development and adoption, and the ensuing digital disruption, challenge established business models at many levels, particularly by invalidating traditional value proposition logics. Therefore, technology and information system (IS) adoption and implementation processes are crucial to organizations striving to survive in complex digitalized environments. In these circumstances, organizations should be aware of, and minimize, the risk that an IS goes unused. The user involvement perspective may help organizations face this issue. Involving users in IS implementation through activities, agreements, and behaviors during system development (what the literature refers to as situational involvement) may be an effective way to increase users' psychological identification with the system, achieving what the literature describes as intrinsic involvement, a state that ultimately helps to increase the adoption rate. Nevertheless, the influence of situational involvement on intrinsic involvement is still not well understood. Thus, the paper explores how situational and intrinsic involvement relate through a fractional factorial experiment with engineering undergraduate students. The resulting model explains 57.79% of the variability in intrinsic involvement and supports the theoretical premise that including users in activities that nurture a sense of responsibility contributes to system implementation success. For practitioners, the authors suggest that convenient, low-cost hands-on activities may contribute significantly to IS implementation success in organizations. The study also contributes to adoption and diffusion theory by exploring the concept of user involvement, which is usually recognized as necessary for IS adoption but is not fully contemplated in the key adoption and diffusion models.

1 Introduction

Successful Information System (IS) implementation is fundamental for achieving a better value proposition in current digitalized and complex competitive environments (Verhoef et al. 2019), and organizations should strive to minimize the risk that users boycott or do not use an IS. This can be achieved by promoting user involvement through convenient and low-cost activities during the IS implementation process, as user involvement is directly related to IS adoption success (Guimarães et al. 1996; Tait and Vessey 1988). The process of IS implementation comprises three phases, each associated with specific activities (Díez and McIntosh 2009): pre-implementation (e.g., system development), implementation (e.g., system use), and post-implementation (e.g., evaluation activities). In the IS adoption literature, several authors have argued that user involvement during IS development (Díez and McIntosh 2009; Mukti and Rawani 2016; Matende and Ogao 2013) and implementation (Bergier 2010; Leclercq 2007) contributes to system adoption success.

However, although previous research corroborates the need to involve users in different phases of an IS implementation, there are still unanswered questions about what user involvement means from a theoretical and a practical perspective. The definition of user involvement has expanded over time. For Ives and Olson (1984), it refers to user “participation during the system’s development process”, but they fail to explain what characterizes it. Later, Hartwick and Barki (1994) divided it into two constructs: intrinsic involvement and situational involvement. Intrinsic involvement refers to a user’s psychological state of identification with the system, which is understood as both crucial and personally relevant and is expressed, for instance, as the sense of ownership toward IS and the motivation to comprehend an IS as personally meaningful. Situational involvement deals with the execution of practical activities by users during the IS development. According to Hartwick and Barki (1994) and Barki and Hartwick (2001), these activities can be classified according to user types (senior user, manager, and end-user) and dimensions (e.g., ‘hands-on activities’, ‘overall responsibility’, ‘communication activities’, and ‘User-IS relationship’). For example, ‘overall responsibility’ encompasses activities and assignments that reflect overall leadership and accountability during IS development projects (e.g., cost estimations, making system decisions), while ‘hands-on activities’ are characterized by development tasks such as ‘system layout development’, ‘input and output forms development’, and ‘application of training to users’, essential activities of any IS implementation project.

User involvement is covered in the participatory decision-making and organizational change literature, where it leads to commitment, system acceptance, behavioral intention, use, and satisfaction with the system (Alavi and Joachimsthaler 1992; Amoako-Gyampah and White 1993; Barki and Hartwick 1989; Jackson et al. 1997). However, user involvement has seldom been considered a construct of theoretical models in the traditional IS adoption literature (Lai 2017). More specifically, few studies have investigated situational and intrinsic involvement as relevant variables for IS adoption (Venkatesh et al. 2003; Lai 2017). Some studies proposed modifications to adoption models and analyzed the relationship between intrinsic involvement and specific parts of those models (Turan et al. 2015), while few included both types of involvement (e.g., Amoako-Gyampah 2007; Paré et al. 2006). Notably, studies adapting the technology acceptance model (TAM) to incorporate user involvement have presented positive and significant, although somewhat discrepant, results that demand further exploration.

Notwithstanding the limited literature addressing the relationship between situational and intrinsic involvement, there is empirical evidence of a direct influence of the former on the latter (e.g., Baroudi et al. 1986; Kappelman and McLean 1991; Wu and Wang 2008). Situational involvement activities lead users to believe that the system is necessary and relevant, and thus they become intrinsically involved (Hartwick and Barki 1994). Moreover, Fakun and Greenough (2004) and Venkatesh and Bala (2008) indicated that situational involvement might lead users to develop a sense of identification and satisfaction with the system, which are essential characteristics of intrinsic involvement. However, it is still necessary to understand the influence of situational involvement on intrinsic involvement, as well as the elements that compose situational involvement, since the contribution of each situational involvement dimension (proposed by Hartwick and Barki 1994 and Barki and Hartwick 2001) remains uncertain.

Studying the relationship between situational and intrinsic involvement is essential for integrating user involvement into IS adoption theory and for exploring practical implications. In particular, hands-on activities positively and significantly influence intrinsic involvement (e.g., Leso and Cortimiglia 2021; Li et al. 2015; Aedo et al. 2010; Amoako-Gyampah 2007; Venkatesh and Bala 2008), but the relative relevance of these activities and the magnitude of their effects remain unknown. Understanding how hands-on activities impact IS adoption is significant from a practical perspective, as these activities tend to be relatively simple and easy to introduce in IS development projects. Thus, this paper aims to answer the following research question: how do hands-on activities of situational involvement impact employees’ intrinsic involvement during IS development? To explore this question, we used a design of experiments technique (a fractional factorial experiment), which resulted in a model that explains 57.79% of the variability in intrinsic involvement. The results support the theoretical premise that including users in activities that nurture a sense of responsibility contributes to intrinsic involvement and, ultimately, to system implementation success. The remainder of the article is structured in five sections: (2) theoretical background, (3) methodological procedures, (4) results, (5) discussion, and (6) conclusion.

2 Theoretical Background

This section explores the fundamentals and main definitions of user involvement and its constituent constructs: intrinsic and situational involvement. It describes what previous research (including research on technology adoption models) has uncovered about how user participation in situational involvement activities can develop a state of intrinsic involvement. Finally, the hands-on dimension of situational involvement is analyzed in depth.

Several authors (e.g., Mukti and Rawani 2016; Díez and McIntosh 2009; Matende and Ogao 2013) argue that user involvement in IS development activities is essential for system adoption success, as it alleviates user resistance and increases (1) the quality of the relationships established between system and users, (2) the quality of functional requirements, (3) user satisfaction, and (4) system acceptance. Additionally, Matende and Ogao (2013) state that user involvement makes it possible to gain knowledge of specific critical factors related to human aspects and domain expertise, while Ahmad et al. (2012) suggest that including users in the development process simplifies processes that could otherwise become complex, avoids the creation of unnecessary features, creates commitment, and minimizes rejection (Fakun and Greenough 2004).

Nevertheless, according to Baroudi et al. (1986), the user involvement concept can be imprecise and is not infrequently mistaken for a synonym of ‘user participation’ and ‘user engagement’. It is therefore necessary to clarify these terms, since they express different ideas. According to Barki and Hartwick (1989) and Hartwick and Barki (1994), user involvement comprises two constructs: intrinsic involvement and situational involvement. The former refers to a particular attitude characterized as a state of psychological identification with something considered personally important and relevant. There is consistent literature supporting the influence of intrinsic involvement on a system’s perceived usefulness, as users who sense that a system is organizationally and personally relevant are more inclined to perceive it as useful for performing their work (Amoako-Gyampah 2007). Venkatesh and Bala (2008) add that users who participate in IS development activities are more prone to forming judgments about the system’s relevance, quality, and result demonstrability (important determinants of perceived usefulness). This view is also shared by Paré et al. (2006) in their study of psychological ownership of the system, which is linked to users’ beliefs about the system and has a positive effect on perceived usefulness. Segal and Morris (2011) provide evidence of what happens when users are neither intrinsically nor situationally involved during development: users were reluctant to use the system and tended to reject it because it lacked specific functionalities.

Situational involvement can be characterized by all activities, agreements, and behaviors performed by users during the system development process (Hartwick and Barki 1994). In the literature, situational involvement typically corresponds to what is meant by ‘user participation’, and according to some studies, it can lead to intrinsic involvement. According to Lynch and Gregor (2004), situational involvement depends on which users are involved and on the degree of their involvement. Nevertheless, other variables found in the literature must also be considered, such as the occurrence of participation (Kappelman and McLean 1991; Díez and McIntosh 2009) or the type of technology used (Hartwick and Barki 1994).

Allingham and O’Conner (1992) proposed a classification that distinguishes users according to organizational hierarchy: senior users (strategic and planning functions), managers (managerial and controlling activities), and end-users (operational activities). However, how participation occurs is more important than user type (Barki and Hartwick 2001). In this line, Baroudi et al. (1986) indicate that users must participate substantively, through activities that effectively influence the development of the IS structure; otherwise, users will experience participation as symbolic rather than substantive. Hartwick and Barki (1994) proposed three dimensions of participation, and Barki and Hartwick (2001) later added a fourth:

- Overall responsibility, which encompasses activities and assignments that reflect overall leadership and accountability for the IS development project, including responsibility for overall system success and responsibility resulting from being a project team leader, estimating costs, being in charge of choosing technology, having to raise funds, or making system decisions;
- User-IS relationship, which refers to activities that reflect interactions with the system, such as initial evaluation and approval of requirements, being updated on the system’s evolution, and evaluating and approving work on the system;
- Hands-on activities, which include the performance of specific physical design and implementation tasks, such as contributing to layout and report format definitions and creating procedures, manuals, and training programs; and
- Communication activities, which relate to user engagement in informal discussions about the system, as well as exchanges of facts, opinions, and visions about the project with system specialists or managers.

According to Barki and Hartwick (2001), the four dimensions are linked to intrinsic involvement, as greater user participation in each dimension will result in a more substantial influence on project management and system development.

2.1 User Involvement in Adoption Models

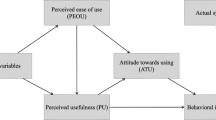

Hartwick and Barki (1994) suggested specific activities for each dimension of situational involvement and proposed a modified Theory of Reasoned Action (TRA) adoption model to test how the ‘hands-on activities’, ‘overall responsibility’, and ‘user-IS relationship’ dimensions affect the ‘intrinsic involvement’ and ‘attitude toward use’ constructs. Their results suggest that ‘overall responsibility’ strongly influences intrinsic involvement, while the other two dimensions do not influence it. Later, Barki and Hartwick (2001) added a fourth situational involvement dimension, ‘communication activities’, which led Paré et al. (2006) to test ‘hands-on activities’, ‘overall responsibility’, and ‘communication activities’ in order to understand physicians’ sense of ownership toward clinical IS use. ‘Communication activities’ exerted the greatest influence, while ‘hands-on activities’ were not significant in generating a sense of ownership. Bagchi et al. (2003) tested three different models to examine ERP system use. In two of them, the authors tested direct relationships between (1) ‘hands-on activities’ and ‘user-IS relationship’, (2) ‘user-IS relationship’ and ‘overall responsibility activities’, and (3) ‘overall responsibility activities’ and intrinsic involvement. Although the model’s quality parameters fell below the thresholds required for a confirmatory factor analysis, their results indicated that relationships (2) and (3) are positive.

Fakun and Greenough (2004) tested the influence of the ‘user-IS relationship’ dimension on a system’s ‘perceived usefulness’ and ‘perceived ease of use’. The authors verified the relationship in two scenarios of different complexity. The ‘user-IS relationship’ dimension was not significant for ‘perceived ease of use’ in either scenario, but it significantly affected ‘perceived usefulness’ in the higher-complexity environment. Jackson et al. (1997) tested the causal effects of ‘situational involvement’ and ‘intrinsic involvement’ on the ‘behavioral intention’, ‘perceived usefulness’, and ‘attitude toward use’ variables, but did not test the relationship between the two involvement types.

McKeen and Guimarães (1997) and Yoon et al. (1995) explored the idea that the greater the participation in hands-on development activities (e.g., establishing objectives, determining and approving requirements, defining forms/screens, identifying sources of information, and defining report formats), the greater the user’s satisfaction with the system. However, intrinsic involvement was not measured.

Among the eight studies reviewed above, the effect of ‘hands-on activities’ on intrinsic involvement was tested only twice: one study found evidence of influence, but the other did not. This is particularly puzzling, as such activities represent an important part of any IS implementation project. Some authors claim that ‘hands-on activities’ should have a positive and significant influence on intrinsic involvement, since they provide users with a sense of ownership (e.g., Li et al. 2015; Aedo et al. 2010; Amoako-Gyampah 2007; Venkatesh and Bala 2008). According to these authors, such activities increase optimism, perceived pleasure, objective usability, use intention, and the belief that the system is good, important, personally relevant, and functional, while decreasing system-related discomfort and anxiety. The literature suggests the ‘hands-on activities’ listed in Table 1.

2.2 Hands-On Dimension of Situational Involvement

According to Haider (2008), IS adoption depends on (1) users’ perception of the system’s structural aspects and (2) users’ competencies to operate the system. In this context, previous research reveals some parallels between the hands-on activities of situational involvement (described in Table 2) and characteristics of psychological inclination.

Jaspers and Khajouei (2008) indicate that significant attention must be paid to users’ participation in the development of the system’s layout (LAY), namely, the definition of the screens and interfaces for system operation. According to the authors, ensuring that users’ specific needs are met can significantly improve the system’s ease of use and enhance adoption. As for input–output forms (INP), Rajan et al. (2016) indicate that excluding users from the input–output requirements definition process can lead to low system adoption, if the system lacks functionalities that users consider essential, and can distance users from the process. For Yusof et al. (2007), system flexibility is one of the most critical factors for IS adoption, and it is achieved through user participation in the definition of requirements. The lack of user involvement in requirements definition tends to produce inefficient systems that are not adopted and used as intended (Yusof 2015). According to Haider (2008), a system requirements definition that involves all stakeholders raises user confidence in what the system can provide and ultimately increases adoption rates.

Regarding report format definition (REP), Mertins and White (2016) suggest that report format is an essential factor in managing business performance and that it must meet certain criteria, such as the definition of tables and graphs and the presence of summary measures, which influence users’ perception of report usefulness. Furthermore, according to Kelly et al. (2010), the user manual also demands attention: manuals may not work as intended and, thus, may not allow work processes to evolve. Van Loggem (2014) also indicates that material quality influences manual use; however, achieving such quality may be costly. Therefore, including users in manual preparation (MAN) can result in more appropriate documents.

The literature linking user participation to hands-on activities is particularly extensive regarding ‘user training’. Most studies address users’ participation in training as the subjects of the training itself. However, results show that user participation in user training program design (TRN) is essential to avoid inadequate training programs, which are a cause of failures in system use (Salahuddin and Ismail 2015). User participation in training program design (TRN) is crucial because it makes training appropriate and emphasizes procedural knowledge and appropriate techniques for interacting with the system (Yusof 2015). Similarly, involving users as instructors or facilitators of training activities (APP) has been highlighted as an important success factor for IS adoption (Yusof et al. 2007). There is evidence in previous literature that training facilitates IS acceptance among those who participate in it. Although there is no consensus on the best user training methods (Edwards et al. 2012), many studies have identified a positive relationship between user training and adoption success and have investigated particularities of instruction type and training duration (Yusof 2015; Sahu and Singh 2016; Yusof et al. 2007).

3 Methodological Procedures

We executed a randomized fractional factorial experiment with seven factors and no repetitions to study how hands-on activities of situational involvement impact employees’ intrinsic involvement. The procedures employed in the experiment are presented next.

3.1 Scenario

The experiment was conducted with final-year BSc students from four classes of an advanced Industrial Engineering course on information systems. Students were invited to participate in the development of an IS that all students would use during the semester to support learning processes, group work, and communication between students and instructors. Students were thus characterized as end-users. IS development took place in the first 3 weeks of the semester, and student participation during the development phase was voluntary (although participants were rewarded with extra course credits in their final grade as an incentive). System functions included student registration, task delivery and execution management, presentation management, asynchronous communication, and course assessment. The system was developed on an online platform on which an implementation process could be simulated through parameter customization according to environment, context, and process requirements.

An experienced expert in project management and IS development conducted the implementation process, which was structured around the seven types of situational involvement hands-on activities described in Table 2. Each activity was characterized by its essential aspects, objectives, and limits (Table 3). The experiment activities were not carried out during class hours. At the end of the IS development phase, all students enrolled in the course took part in a training program on basic and advanced system functions. After this training session, students who had participated in IS development activities answered a questionnaire (the research instrument) about their perception of the experiment, aimed at measuring their level of intrinsic involvement.

Whether to carry out this experiment with students instead of professionals is an open question (Feldt et al. 2018), but several points support our choice. First, using students in research is consistent with system adoption studies (e.g., Kramer 2007; Kumar and Benbasat 2006; Wang and Benbasat 2005; Zhang et al. 2011). Second, obtaining an appropriate professional sample was impractical and expensive (following Falessi et al. 2018). Using students was therefore an alternative, as they could dedicate themselves to the experiment in a way that would be difficult for professionals.

Regarding participants’ qualifications, the platform used for system development is an online tool whose concepts of use and interface are aligned with newer technologies (e.g., Trello, Facebook) for managing processes, projects, and knowledge. It can be argued that undergraduate students may be more skilled in this type of technology than professionals who have not recently used it. This argument is consistent with evidence that students can perform similarly to professionals in experiments (Höst et al. 2000) and that experimenting with professionals is not always better (Falessi et al. 2018). Moreover, the experiment did not require prior knowledge of IS development; it was independent of technical skills and addressed a need that students would have throughout the semester. In this respect, students resemble users who are not expected to have technical development knowledge but to generate value by performing their jobs. Finally, the students involved in the research were in the final stage of the course, and most had professional experience at the internship level, which ensures an understanding of the importance of, and need for, an adequate information system.

3.2 Design of the Experiment

The design of experiments is a critical tool for improving the development of new processes (Montgomery 2001). It is used to develop theories when we wish to understand and draw significant conclusions from data, resulting in a better understanding of a complex system involving the interaction of multiple elements of different types and levels (Cobb et al. 2003). According to Echeveste and Ribeiro (1999), it is an efficient statistical technique for studying the effects of several factors on a process with a small number of tests. This study’s design was prepared using the Minitab 18 software. Among others, we followed Nanni and Ribeiro’s (1987) guidelines for developing the design of the experiment, presented next in six steps.

3.2.1 Design of Experiment Objectives Definition

The experiment’s objective was to determine a set of situational involvement hands-on activities that optimize the response variable, intrinsic involvement, and identify how main factors and two-factor interactions affect the response variable.
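
For two-level factors, the effect of a main factor or of a two-factor interaction is commonly estimated as the difference between the mean response at the high and low levels of its column, where an interaction column is the product of its factors' columns. The Python sketch below illustrates that calculation on a hypothetical 2² example. The function name `effect` and the response values are illustrative assumptions, not the study's data or its Minitab workflow.

```python
from math import prod

def effect(runs, y, idx):
    """Estimate a main effect or interaction for -1/+1 coded factors.

    runs -- list of tuples with factor levels coded -1 (low) or +1 (high)
    y    -- response value for each run, in the same order
    idx  -- indices of the factors forming the effect, e.g. (0,) or (0, 1)
    """
    # The column of an interaction is the product of its factors' columns
    col = [prod(run[i] for i in idx) for run in runs]
    high = [yi for c, yi in zip(col, y) if c == 1]
    low = [yi for c, yi in zip(col, y) if c == -1]
    # Effect = mean response at +1 minus mean response at -1
    return sum(high) / len(high) - sum(low) / len(low)

# Hypothetical 2^2 example: factors A and B, one response per run
runs = [(-1, -1), (1, -1), (-1, 1), (1, 1)]
y = [3, 5, 4, 8]
print(effect(runs, y, (0,)), effect(runs, y, (1,)), effect(runs, y, (0, 1)))
# prints 3.0 2.0 1.0
```

With -1/+1 coding, the corresponding coefficient in the regression model of Section 3.2.7 equals half of each effect.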

3.2.2 Independent Variables and Response Variable

Independent variables, also called controllable factors, are represented by the seven hands-on activities (Table 1). All controllable factors have two fixed levels: low (the student did not participate in the activity) and high (the student participated in the activity). Participation was evidenced by the student’s presence in the activity and the physical record of responses to the tasks performed.

The response variable is the average value of the items of the intrinsic involvement construct. It was obtained through a questionnaire designed using constructs, items, and scales empirically validated in previous studies (Hartwick and Barki 1994; Barki and Hartwick 2001). Respondents rated their agreement with each item's statement on a seven-point Likert-type scale, where 1 indicates complete disagreement and 7 indicates full agreement.
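
The response variable described above can be sketched as a per-participant mean of the construct's items. The Python snippet below is a minimal illustration under stated assumptions: the function name and the example ratings are hypothetical, and the number of items is left open because it depends on the instrument in Table 2.

```python
from statistics import mean

def intrinsic_involvement(responses):
    """Response variable: mean of one participant's intrinsic-involvement items.

    responses -- Likert ratings on the 1-7 scale
                 (1 = complete disagreement, 7 = full agreement)
    """
    if not responses:
        raise ValueError("at least one item is required")
    if any(not 1 <= r <= 7 for r in responses):
        raise ValueError("ratings must lie on the 1-7 Likert scale")
    return mean(responses)

# Hypothetical ratings for a four-item construct
score = intrinsic_involvement([6, 5, 7, 4])
print(score)  # 5.5
```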

Because the questionnaire was designed and answered in Brazilian Portuguese, it was first translated from English, adapted, and validated with three experts (Fachel 2000). The instrument was administered via an online tool immediately after each student participated in the training program at the end of the experiment. Table 2 presents the construct, its items, and their respective types.

3.2.3 Experimental Restrictions

The experiment was conducted over 5 days, during which each of the seven situational involvement activities was run several times, so participants had different scheduling options. Each activity was designed to last less than 45 min, considering its content and the way it should be performed.

Since we carried out a simulation of system development, the system itself was already partially developed before the experiment started; participants, however, were not aware of this. Nevertheless, there was room for modifications to improve the system based on inputs from the experiment. Each activity had its own exclusive materials, developed according to its needs. Table 3 depicts the tasks for each activity.

We planned four training sessions for all participants involved in the experiment. Users who participated in APP activities conducted these sessions together with the project management expert, each of them being responsible for part of the training.

3.2.4 Sample Size

The maximum number of participants was limited to the 105 students enrolled in the four classes. Since seven factors had to be tested, a full factorial experiment was not feasible, as it would require a \(2^{7}\) design demanding 128 runs. Therefore, we opted for a randomized fractional factorial experiment without repetitions, \(2^{7-1}\), which requires 64 observations. According to Mason et al. (2003), fractional factorial experiments are effective alternatives to full factorial experiments when budgetary, time, or experimental constraints preclude running the full design. We randomized the experiment to reduce the impact of bias on the results. Following Ribeiro and Caten (2001), this type of experiment does not require running all variable combinations, since the subset of runs yields almost the same relevant information for modeling the response. Additionally, according to Emanuel and Palanisamy (2000), in experiments with six or seven factors, interaction effects of three or more factors should be neglected to avoid wasting resources on irrelevant information, such as interactions among several factors that have little influence on the response variable.
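
A \(2^{7-1}\) design of this kind can be built by writing out the full \(2^{6}\) design in six factors and setting the seventh column to the product of the other six. The Python sketch below assumes the generator G = ABCDEF (defining relation I = ABCDEFG), a common choice for this half fraction. The source does not report the generator, and the labels A to G are hypothetical placeholders rather than a mapping onto the activity codes.

```python
from itertools import product
from math import prod

def half_fraction_2_7():
    """Return the 64 runs of a 2^(7-1) design with generator G = ABCDEF.

    Levels are coded -1 (did not participate) and +1 (participated);
    positions 0..6 stand for hypothetical factors A..G.
    """
    runs = []
    for base in product((-1, 1), repeat=6):  # full 2^6 design in A..F
        runs.append(base + (prod(base),))     # G = A*B*C*D*E*F
    return runs

design = half_fraction_2_7()
print(len(design), 2 ** 7)  # prints 64 128: half the runs of the full design
```

In an actual study, the run order would then be randomized, as described above.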

Because this is a fractional factorial experiment with seven factors and no repetitions, some effects are confounded with others. Nevertheless, main effects and two-factor interactions can still be analyzed. In this experiment, main (one-factor) effects were confounded with six-factor interaction effects, two-factor interaction effects with five-factor interaction effects, and three-factor interaction effects with four-factor interaction effects. In practice, the experiment generates an experimental matrix with 64 combinations of activities. Each combination can be understood as a profile indicating the activities a participant should join. For example, one combination could comprise activities LAY and PRI, while another could comprise all the activities.
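
The confounding pattern stated above follows from the defining relation of a half fraction. Assuming the same hypothetical generator G = ABCDEF (so that I = ABCDEFG), the Python sketch below rebuilds the 64 runs and checks that a main-effect column is identical to its six-factor partner, a two-factor column to its five-factor partner, and a three-factor column to its four-factor partner, while distinct low-order effects remain separable.

```python
from itertools import product
from math import prod

# Hypothetical 2^(7-1) design: generator G = ABCDEF, i.e. I = ABCDEFG.
# Indices 0..6 stand for placeholder factors A..G.
design = [base + (prod(base),) for base in product((-1, 1), repeat=6)]

def column(run, idx):
    """Level of the effect column formed by the factors in idx for one run."""
    return prod(run[i] for i in idx)

def aliased(idx_a, idx_b):
    """True when two effect columns coincide in every run of the design."""
    return all(column(r, idx_a) == column(r, idx_b) for r in design)

print(aliased((0,), (1, 2, 3, 4, 5, 6)))   # A vs. BCDEFG -> True
print(aliased((0, 1), (2, 3, 4, 5, 6)))    # AB vs. CDEFG -> True
print(aliased((0, 1, 2), (3, 4, 5, 6)))    # ABC vs. DEFG -> True
print(aliased((0,), (1,)))                 # A vs. B -> False
```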

Five days before the experiment, each participant was randomly assigned to one of the 64 activity combinations. We also informed participants of the activity schedule so that each could choose the most convenient time. The research instrument was delivered to participants immediately after the training session. Figure 1 explains the experiment flow and its procedures.
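
The random allocation of participants to design runs can be sketched as follows. This is a hypothetical procedure, not the one reported by the authors: it assumes every one of the 64 profiles is used once before any profile is repeated, so that the 41 remaining students (of 105) receive redundant profiles, consistent with the redundant combination profiles mentioned under threats to validity. The function name and student IDs are illustrative.

```python
import random

def assign_profiles(participants, n_profiles=64, seed=None):
    """Randomly map participants to profile indices 0..n_profiles-1.

    Hypothetical scheme: each profile is used once (in random order) before
    extra participants receive randomly chosen, repeated profiles.
    """
    if len(participants) < n_profiles:
        raise ValueError("not enough participants to cover every profile")
    rng = random.Random(seed)
    profiles = list(range(n_profiles))
    rng.shuffle(profiles)
    extra = [rng.randrange(n_profiles) for _ in range(len(participants) - n_profiles)]
    people = list(participants)
    rng.shuffle(people)
    return dict(zip(people, profiles + extra))

students = [f"student_{i:03d}" for i in range(105)]  # hypothetical IDs
assignment = assign_profiles(students, seed=42)
print(len(assignment), len(set(assignment.values())))  # prints 105 64
```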

3.2.5 Threats to Validity and Non-Controllable Variables

As threats to the internal validity of the present study, we can indicate the following non-controllable variables, which may be responsible for experimental noise and experimental error (residual variability):

1. Participants’ personality and motivation characteristics, as well as previous experience with information system development;
2. Number of participants in the room during the activities;
3. Time of day of the activity (morning, afternoon, night);
4. Participants’ age.

We also had no control over the number and selection of participants, and the limited number of students (105) required an experiment without replication (a limitation partially addressed through redundant combination profiles to reduce possible noise). In addition, compensation for participation (through extra course credits) can be seen as a threat to validity (Falessi et al. 2018), although it can be argued that extra course credits motivate students in a way similar to the demands of ordinary courses.

3.2.6 Factors Held Constant

The factors held constant were: (1) materials available for each activity (consistency in content and format); (2) environmental conditions; and (3) the lecturer conducting the activities.

3.2.7 Statistical Model

The model results from a multiple linear regression, which verifies the relationship between the response variable and multiple input variables. The regression results were assessed through a combination of measures (P-value, R² and adjusted R²) and graphical analysis of the relationships between variables (data distribution charts and a Pareto chart of standardized effects) (Greenland et al. 2015).

In regression models, the significance of each term is assessed by its P-value (values below 0.05 usually indicate that a factor is significant), while model fit is summarized by the coefficient of determination (R²) and the adjusted coefficient of determination (adjusted R²). These values indicate the percentage of response variable variability explained by the linear regression equation; adjusted R² additionally penalizes the number of terms in the model. Generally, the greater the coefficient of determination, the better the model fits the data (Nagelkerke 1991). A related measure, predicted R², indicates how well a regression model predicts responses for new observations: it is determined by removing each observation from the data set, estimating the regression equation, and determining how well the model predicts the removed observation.
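All three measures can be computed directly from a least-squares fit. The sketch below uses NumPy; this is an assumption, since the study itself used Minitab. It obtains predicted R² from the PRESS statistic, which by the standard hat-matrix identity equals the sum of squared leave-one-out prediction errors, so no refitting is needed. A literal refitting loop is included for comparison, and the data in the usage example are simulated.

```python
import numpy as np


def r2_measures(X, y):
    """R², adjusted R² and predicted R² for an OLS fit; X must include the intercept column."""
    n, p = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    sse = float(resid @ resid)
    sst = float(np.sum((y - y.mean()) ** 2))
    # Leverages h_ii: diagonal of the hat matrix X (X'X)^-1 X'
    leverage = np.einsum("ij,jk,ik->i", X, np.linalg.inv(X.T @ X), X)
    press = float(np.sum((resid / (1.0 - leverage)) ** 2))
    return {
        "r2": 1.0 - sse / sst,
        "adj_r2": 1.0 - (sse / (n - p)) / (sst / (n - 1)),
        "pred_r2": 1.0 - press / sst,
    }


def press_by_refitting(X, y):
    """PRESS computed literally: drop each observation, refit, and predict it."""
    press = 0.0
    for i in range(len(y)):
        keep = np.arange(len(y)) != i
        beta, *_ = np.linalg.lstsq(X[keep], y[keep], rcond=None)
        press += float((y[i] - X[i] @ beta) ** 2)
    return press


# Usage with simulated data (not the study's data)
rng = np.random.default_rng(0)
X = np.column_stack([np.ones(40), rng.choice([-1.0, 1.0], size=(40, 3))])
y = 5.0 + X[:, 1:] @ np.array([0.4, -0.2, 0.1]) + rng.normal(0.0, 0.5, 40)
measures = r2_measures(X, y)
```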

These analyses were restricted to main factors and two-factor interactions. Because no replications were run in this experiment, interactions of three or more factors were neglected and pooled into the error term estimate, as higher-order interactions are generally difficult to interpret (Ribeiro and Caten 2001).

4 Results

Of the 105 participants, 75 fully participated in the experiment (that is, they took part in the activities assigned to them, attended the training session, and responded to the intrinsic involvement questionnaire). All 64 experimental combinations were completed. Next, we present the experiment results, including the final model derived from the experiment, and show which factors and interactions exert the most significant influence on intrinsic involvement (the response variable).

4.1 Design of the Experiment Results

4.1.1 Controllable Factors Analysis

The response variable (“II”, Intrinsic Involvement) is the arithmetic mean of the responses to the nine items of the research instrument (Table 2), and it was modeled through regression analysis using Minitab 18 software. Since the experimental matrix did not include replications, the error was estimated from the three-, four-, five-, six-, and seven-factor interactions. In addition, after each iteration, main effects or two-factor interactions that were not significant were removed from the analysis and pooled into the error.
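The iterative pooling described above can be sketched as backward elimination. The procedure fits all main effects and two-factor interactions, drops the least significant term, and refits until every remaining term is significant at the 0.05 level. For a single term, the partial F-test is equivalent to the squared t-test. This is an illustrative sketch with NumPy and SciPy, not Minitab's algorithm. It ignores model hierarchy, assumes a half fraction in which PRI is the product of the other six factors (a hypothetical generator), and runs on simulated responses.

```python
from itertools import combinations, product

import numpy as np
from scipy import stats

FACTORS = ("LAY", "INP", "REP", "MAN", "TRN", "APP", "PRI")


def candidate_columns():
    """Coded main-effect and two-factor interaction columns of a 2^(7-1) design (PRI generated)."""
    base = np.array(list(product((-1, 1), repeat=6)))
    design = np.column_stack([base, base.prod(axis=1)])
    cols = {f: design[:, i].astype(float) for i, f in enumerate(FACTORS)}
    for a, b in combinations(FACTORS, 2):
        cols[f"{a}*{b}"] = cols[a] * cols[b]
    return cols


def ols_pvalues(X, y):
    """Coefficients and two-sided t-test p-values of an OLS fit (X includes the intercept)."""
    n, p = X.shape
    beta, *_ = np.linalg.lstsq(X, y, rcond=None)
    resid = y - X @ beta
    df = n - p
    mse = float(resid @ resid) / df  # error mean square, including pooled terms
    se = np.sqrt(mse * np.diag(np.linalg.inv(X.T @ X)))
    return beta, 2 * stats.t.sf(np.abs(beta / se), df)


def backward_eliminate(cols, y, alpha=0.05):
    """Repeatedly drop the term with the largest p-value until all remaining p-values <= alpha."""
    terms = list(cols)
    while terms:
        X = np.column_stack([np.ones(len(y))] + [cols[t] for t in terms])
        _, pvals = ols_pvalues(X, y)
        worst = int(np.argmax(pvals[1:]))  # ignore the intercept
        if pvals[1 + worst] <= alpha:
            break
        terms.pop(worst)  # the dropped term's variation joins the error term
    return terms


# Simulated responses for illustration only
rng = np.random.default_rng(42)
cols = candidate_columns()
y = (5.0 + 0.30 * cols["REP*APP"] + 0.25 * cols["LAY*REP"] - 0.20 * cols["REP"]
     + rng.normal(0.0, 0.3, 64))
kept = backward_eliminate(cols, y)
```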

The statistical analysis showed that the model was significant at the 0.05 level, since the regression F-value is above the critical F-value for the characteristics of this experiment. The factors not included in the model presented F-values below the critical F. After several iterative rounds, the final model was defined with the highest attainable values of R² (57.79%) and adjusted R² (50.75%). Table 4 presents the main factors and two-factor interactions in the final model, which together explain 57.79% of the response variable variance. Since we model human behavior, which has high inherent variability, these R² and adjusted R² values were deemed sufficient for the analysis.
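The reported fit statistics can be cross-checked with the standard formulas for adjusted R² and the overall regression F-test. The sketch assumes n = 64 observations (one per experimental combination) and k = 9 model terms (the three main factors and six two-factor interactions reported as significant). With these values, the adjusted R² reproduces the reported 50.75% to within rounding, which supports the assumption. The resulting F is about 8.2 on (9, 54) degrees of freedom. SciPy is used only for the critical value and P-value.

```python
from scipy import stats


def adjusted_r2(r2, n, k):
    """Adjusted R² for k predictors (plus intercept) and n observations."""
    return 1 - (1 - r2) * (n - 1) / (n - k - 1)


def overall_f(r2, n, k):
    """Overall regression F statistic expressed through R²."""
    return (r2 / k) / ((1 - r2) / (n - k - 1))


n, k, r2 = 64, 9, 0.5779  # assumed n and k; R² as reported
adj = adjusted_r2(r2, n, k)
f_value = overall_f(r2, n, k)
f_critical = stats.f.ppf(0.95, k, n - k - 1)  # critical F at the 0.05 level
p_value = stats.f.sf(f_value, k, n - k - 1)
print(f"adjusted R2 = {adj:.4f}, F = {f_value:.2f}, F crit = {f_critical:.2f}, p = {p_value:.2g}")
```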

When a regression equation is calculated to model data, the coefficient of each predictor variable is estimated from the sample (Table 4). The coefficient (‘Coeff’ in Table 4) of a term represents the change in the mean response associated with a change in that term while the other terms in the model are held constant. In turn, the T-value is used to calculate the P-value, which tests whether the coefficient differs significantly from 0. Three main factors and six two-factor interactions present a P-value equal to or below 0.05 and are therefore significant. The interactions with the largest coefficients are REP*APP (0.3349) and LAY*REP (0.2531), followed by MAN*PRI (−0.1817), INP*TRN (0.1677), REP*MAN (−0.1569), and REP*TRN (0.1445); their effects differ in direction (positive and negative). Other terms have some importance, although their effects are not significant. Based on the model, the following equation is used to explain intrinsic involvement (II):
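Assuming the −1/+1 coding used in the interaction plots, a coefficient is half of the corresponding effect. Moving a term from its low to its high coded value changes the predicted response by twice the coefficient, with the other terms held constant. The short sketch below applies this to the interaction coefficients reported in the text. It shows that a positive interaction coefficient raises the prediction when both activities are jointly present or jointly absent, and lowers it when only one is present. The intercept is not reported in this section, so only changes relative to it are computed.

```python
# Interaction coefficients as reported in the text (coded -1/+1 units)
INTERACTIONS = {
    "REP*APP": 0.3349,
    "LAY*REP": 0.2531,
    "MAN*PRI": -0.1817,
    "INP*TRN": 0.1677,
    "REP*MAN": -0.1569,
    "REP*TRN": 0.1445,
}


def effect_from_coefficient(coef):
    """Change in the prediction when a term's coded value goes from -1 to +1."""
    return 2 * coef


def interaction_contribution(coef, level_a, level_b):
    """Contribution coef * x_a * x_b of a two-factor interaction for coded levels in {-1, +1}."""
    return coef * level_a * level_b


for term, coef in sorted(INTERACTIONS.items(), key=lambda item: abs(item[1]), reverse=True):
    cells = {(a, b): interaction_contribution(coef, a, b) for a in (-1, 1) for b in (-1, 1)}
    print(f"{term}: coef={coef:+.4f}, effect={effect_from_coefficient(coef):+.4f}, cells={cells}")
```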

The model shows which factors or interactions have the strongest influence on intrinsic involvement, that is, on developing the belief that the system is good, important, personally relevant, and functional. Each significant factor or interaction is discussed in detail in Sect. 5. Next, we present charts of the effects and their interactions, which illustrate the model and facilitate its comprehension.

4.1.2 Charts of Significant Factors and Interactions

The Pareto chart in Fig. 2 depicts the magnitude and importance of each significant factor and interaction in the model. In this chart, the bars indicate the magnitude of each standardized effect, and effects whose bars cross the reference line are statistically significant. The chart shows the effects in absolute value and illustrates which of them are significant; however, it does not show which effects increase or decrease the response variable (this can be verified in Fig. 3). As can be seen, the most important effects in the model are the REP*APP and LAY*REP interactions.
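In a Pareto chart of standardized effects, each bar is typically |t| = |coefficient| / SE, and the reference line is the two-sided critical t-value at α = 0.05 for the error degrees of freedom. In an orthogonal two-level design, all coded coefficients share the same standard error. Ranking terms by absolute coefficient therefore gives the same order as the chart. The sketch uses the coefficients reported in the text and assumes 54 error degrees of freedom (64 observations minus the intercept and nine terms). Standard errors are not reported here, so the bars themselves are not reproduced.

```python
from scipy import stats

# Coefficients of the nine significant terms as reported in the text (coded units)
COEFFICIENTS = {
    "REP": -0.1733, "APP": 0.1388, "INP": 0.1356,
    "REP*APP": 0.3349, "LAY*REP": 0.2531, "MAN*PRI": -0.1817,
    "INP*TRN": 0.1677, "REP*MAN": -0.1569, "REP*TRN": 0.1445,
}
ERROR_DF = 54  # assumption: 64 observations - 10 estimated parameters


def pareto_order(coefs):
    """Terms sorted by absolute coefficient (equal SEs make this the |t| order)."""
    return sorted(coefs, key=lambda term: abs(coefs[term]), reverse=True)


reference_line = stats.t.ppf(1 - 0.05 / 2, ERROR_DF)  # two-sided critical t
for term in pareto_order(COEFFICIENTS):
    print(f"{term:8s} |coef| = {abs(COEFFICIENTS[term]):.4f}")
print(f"reference line (|t|) = {reference_line:.3f}")
```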

The normal probability plot of standardized effects (Fig. 3) presents the effects relative to a fitted distribution line that represents the case in which all effects are zero. The standardized effects test the null hypothesis that each effect is zero. Positive effects increase the response when factor settings change from the low to the high value, whereas negative effects decrease the response when settings change from the low to the high value.

Effects farther from zero on the x-axis have a higher magnitude and are more statistically significant. Both negative and positive significant effects are found; nonetheless, the positive effects have a higher magnitude, and they correspond to the REP*APP and LAY*REP interactions.

The combination of controllable factor levels that optimizes intrinsic involvement (that is, the set of hands-on activities indicated by the model as yielding the highest psychological involvement of users) was found with the Minitab 18 Response Optimizer, which identifies the combination of input variable settings that optimizes a single response or a set of responses. It is depicted in Fig. 4. At a 95% confidence level, the optimal combination encompasses the LAY, INP, REP, TRN, APP, and PRI activities and yields a predicted intrinsic involvement (II) value of 6.4653. Thus, the only activity excluded is user procedures manual development (MAN).

Similarly, we estimated the configuration that would minimize the response variable. Figure 5 shows this configuration, which includes the INP, REP, MAN, and PRI activities. The smallest predicted value for the response variable is 3.701.
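Because the seven factors have only two levels each, the optimization can be reproduced by evaluating all \(2^{7} = 128\) settings. The sketch assumes that the final model contains exactly the nine significant terms, with the coefficients reported in this paper and the REP*MAN coefficient taken as negative. The intercept is not reported here, so the sketch returns predictions relative to it. Under these assumptions, the search recovers the activity sets reported for Figs. 4 and 5.

```python
from itertools import product

FACTORS = ("LAY", "INP", "REP", "MAN", "TRN", "APP", "PRI")

# Assumed final model: the nine significant terms and their reported coefficients (coded units)
COEFFICIENTS = {
    ("REP",): -0.1733,
    ("APP",): 0.1388,
    ("INP",): 0.1356,
    ("REP", "APP"): 0.3349,
    ("LAY", "REP"): 0.2531,
    ("MAN", "PRI"): -0.1817,
    ("INP", "TRN"): 0.1677,
    ("REP", "MAN"): -0.1569,
    ("REP", "TRN"): 0.1445,
}


def predict_relative(setting):
    """Predicted II minus the (unreported) intercept for a dict of coded levels."""
    total = 0.0
    for term, coef in COEFFICIENTS.items():
        x = 1
        for factor in term:
            x *= setting[factor]
        total += coef * x
    return total


def search():
    """Exhaustively score all 128 settings; return the (value, setting) maximum and minimum."""
    scored = []
    for levels in product((-1, 1), repeat=len(FACTORS)):
        setting = dict(zip(FACTORS, levels))
        scored.append((predict_relative(setting), setting))
    best = max(scored, key=lambda s: s[0])
    worst = min(scored, key=lambda s: s[0])
    return best, worst


def active(setting):
    """Activities present (+1) in a setting."""
    return {f for f, level in setting.items() if level == 1}


(best_value, best_setting), (worst_value, worst_setting) = search()
print(sorted(active(best_setting)), round(best_value, 4))
print(sorted(active(worst_setting)), round(worst_value, 4))
```

Exhaustive search is used instead of a numerical optimizer because the factor space is tiny and discrete, so the result is exact under the stated model.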

5 Discussion

The resulting model presents reasonable statistical parameters that enable an analysis of the relationship between situational involvement (in the hands-on activities dimension) and intrinsic involvement. This analysis can be divided into two parts: analysis of main effects and analysis of interaction effects.

Table 5 shows the activities’ characteristics. It indicates whether each factor is significant and, if so, whether its effect is positive or negative. It also shows the total duration of the activities (approximate times: long duration is around 45 min, moderate duration around 30 min, and short duration approximately 15 min). The column ‘Objective’ refers to the activity’s functional objective (whether the objective was to design the system’s functionalities or to diffuse knowledge about the system). ‘Approach’ shows whether the activity had a theoretical approach (in which participants answered questions as if taking a test) or a practical approach (in which participants took part in a discussion group or presented something). Finally, ‘Execution’ indicates whether the activity was performed individually or in a group.

5.1 Interactions Analysis

Among the interactions that include at least one main factor with a significant effect (five out of six), the activity REP was part of four significant interactions: with LAY, MAN, TRN, and APP. REP presented a positive effect in some of these interactions and a negative effect in others. In three interactions, the combination showed a significant positive effect. When REP interacted with APP and LAY, the interactions presented the highest and second-highest effects in the model, respectively (Table 4). Further on, we discuss a possible complementary pattern between REP and other activities. In contrast with these positive relations, REP presented a negative effect when interacting with MAN, which leaves room for further analysis of this factor.

In Table 6, we summarize the analysis of the six significant interactions, and in the next subsections we discuss each one with the help of an interaction surface plot. This is a three-dimensional wireframe graph showing how the response changes when two factors vary between their levels (presence ‘1’ or absence ‘−1’) while the other factors are held fixed at their low level (absence).

5.1.1 Interaction Between Report Format Definition (REP) and Users’ Training Activity (APP)

The first interaction analyzed was between REP and APP (level of significance below 0.001; coefficient 0.3349). These factors are also analyzed individually in Sect. 5.2. This interaction draws attention because it combines the two most significant main effects, which separately act in opposite directions. Nevertheless, instead of canceling each other out, they form the two-factor interaction with the largest positive effect.

This interaction relates a moderate-duration and a short-duration activity. The two activities have entirely different characteristics and together give participants a perception of the whole experience. Because the interaction effect is opposite in sign to the effect of REP in isolation, we assume that REP complements the effect of APP. The interaction surface chart (Fig. 6) shows that the response variable varies more when APP varies (with REP kept constant at a low level) than when REP varies with APP kept at a high level.

Therefore, the results suggest that the negative effect of participating in REP in isolation is suppressed, situationally, by the effect of APP: when isolated, REP does not create conditions that influence involvement positively; in fact, it discourages involvement. Nevertheless, when combined with another activity, it helps direct user perception toward involvement. Broadly, REP can be understood as an activity that extends participation in the experiment, broadening and complementing the effect of another activity.

5.1.2 Interaction Between Report Format Definition (REP) and System Layout Development (LAY)

In the interaction between LAY and REP (level of significance below 0.001; coefficient 0.2531), REP behaves as it does in the REP * APP interaction: its variation complements the effect of LAY (Fig. 7). When LAY is kept constant at a high level, the effect of REP becomes significant, which does not occur when REP acts alone. Moreover, when LAY ranges from low to high in interaction with REP, the results resemble those of REP * APP: the interaction with LAY changes the direction of REP’s effect. Thus, this interaction creates conditions that influence the response variable (II) positively.

This behavior can be explained in two ways. The first explanation echoes the argument used for the REP * APP interaction: REP reinforces user perception of the experience by extending the time spent in the process (even though the two activities share similar characteristics: theoretical approach, individual execution, and a focus on the system’s functionalities). The second explanation is a qualitative argument about the activities themselves. REP uses definitions delineated in the LAY activity. Therefore, participants who took part in both activities better understand the context of the definitions on which decisions in REP are based, which allows a deeper assimilation of the system and, consequently, stronger feelings of ownership toward it.

5.1.3 Interaction Between Report Format Definition (REP) and User Training Program Design (TRN)

For the interaction between REP and TRN (level of significance below 0.05; coefficient 0.1445), intrinsic involvement varies considerably when TRN ranges from low to high while REP is kept high (Fig. 8). This is in line with the two previous interactions: REP alone does not provide the conditions necessary for intrinsic involvement, but the situation changes when it is associated with another activity. In this specific case, the effect is consistent with previous research highlighting the importance of user participation in training development (Salahuddin and Ismail 2015; Yusof et al. 2015). TRN addresses training development; it is characterized by long duration, a theoretical approach, and individual execution, and it aims to diffuse knowledge. Based on the results, this factor is likely to reverse the negative effect that REP has in isolation.

Nevertheless, when TRN is kept high and REP switches from absence to presence, the response variable does not change considerably. That is, REP does not act as a complementary factor as in the interactions discussed previously. The activities’ characteristics (Table 5) can explain this. While REP consists of defining the report format, TRN addresses what training will cover and how it will be delivered. Therefore, we find no evidence that these activities are complementary (as in the relationship between REP and LAY) or that they directly promote a sense of responsibility for multiplying knowledge (as in the relationship between REP and APP), even though TRN is a diffusion activity.

5.1.4 Interaction Between Report Format Definition (REP) and User Procedures Manual Development (MAN)

The interaction between REP and MAN presents a negative effect, with a coefficient of −0.1569 at a significance level below 0.03. In terms of the activities’ characteristics, this interaction is very similar to REP * TRN, although here participants develop the system manual instead of training sessions. This essential difference may indicate that MAN is not very relevant to the process.

When MAN is kept constant at a high level, the results show that combining these two activities is not recommended, since adding REP causes a considerable drop in the response variable (Fig. 9). The same behavior occurs when REP is kept high and the presence of MAN varies. This interaction shows that not every activity combined with REP modifies its behavior, which can be explained by how participants perceive MAN.

The manual creation activity may have been perceived as (1) a stressful task that requires meticulousness. This is in line with Van Loggem (2014), who indicates that manual usage depends considerably on the manual’s quality, which can be demanding to achieve since the process involves research and a large amount of work. Additionally, manual creation may have been perceived as (2) an activity that does not promote a significant sense of responsibility toward knowledge diffusion (Kelly et al. 2010), as the effective use of IS manuals is widely questioned. According to Pogue (2017), people are increasingly used to new technologies (which become ever more comfortable to use), and answers to technology use questions are generally found in online communities, such as YouTube tutorials, due to their ease of access and convenience. For all practical purposes, the results suggest that combining only MAN and REP must be avoided.

5.1.5 Interaction Between User Procedures Manual Development (MAN) and System Access Priorities and Data Access Privileges Definition (PRI)

The interaction between MAN and PRI must also be avoided, given that their effects cancel each other out (level of significance below 0.02; coefficient −0.1817), according to the interaction surface chart in Fig. 10. The chart indicates that the response is always higher when only one of the two factors is present: both joint presence and joint absence lead to lower user involvement. The characteristics are similar to those of the previous relationship between REP and MAN. The PRI factor is a short activity aimed at system configuration. Participation in this activity is individual and theoretical and aims at defining users’ roles in the system. Unlike other system development activities, PRI carries the responsibility of indicating who will be able to do what in the system’s functions.

What happens when MAN varies and PRI is kept high is similar to the relationship between MAN and REP. This reinforces the previous interpretation that participating in manual creation activities discourages intrinsic involvement. Thus, when PRI characteristics interact with manual creation, the interaction effect may be disastrous and should be avoided.

5.1.6 Interaction Between Input–Output Forms Development (INP) and User Training Program Design (TRN)

The last relationship analyzed (level of significance below 0.02; positive coefficient of 0.1677) is the interaction with the longest combined duration (comprising activities of long and moderate duration). INP and TRN have complementary characteristics, and the INP * TRN interaction shows positive effects. This interaction is similar to REP * APP, except that neither INP nor TRN presents negative effects when isolated. The system requirement definition activity is cited in the literature as a relevant factor for designing systems in line with users’ routines and practices (Rajan et al. 2016), while training development is also considered relevant for appropriate knowledge sharing. Therefore, this result is in line with the literature, showing a significant effect of these activities on involvement.

When TRN is absent (Fig. 11), varying INP does not change intrinsic involvement; however, when TRN is kept high, the presence of INP maximizes the interaction effect. This interaction is therefore qualitatively essential since, on the one hand, it enables users to internalize system definitions and, on the other hand, it enables them to indicate which aspects of the system demand training. This combination creates a sense of ownership in participants and, consequently, involvement.

5.1.7 Optimal Configuration

The optimal configuration, with a predicted intrinsic involvement of 6.4653, does not include user procedures manual development (MAN). This is essential, as it corroborates the argument that manual design activities should be avoided: MAN had significant negative effects in both of its interactions. Thus, what could be considered an opportunity to improve manual quality has become a factor that should be left out of the process, even though MAN alone does not present a significant effect.

5.2 Main Factors

The main factor with the largest effect is report format definition (REP), which has a negative effect on intrinsic involvement. This activity aims to capture users’ perceptions about which system-related information (defined in INP) should be presented and how it can be condensed, summarized, and disclosed in reports. At a level of significance below 0.05, the results show a negative coefficient of −0.1733 when REP is considered in isolation.

REP is one of the activities with the shortest duration, and it addresses the informational design of system functionalities. This activity is conducted individually and has a theoretical approach. Its objective was to identify the best way to present information. However, the information to be made available in the reports was defined in another activity (INP), which was not necessarily performed by the same participant. Participants may not have perceived their participation in REP as substantial and relevant because of the tasks involved. From this context, three possibilities arise:

1. Participants may not have had the opportunity to feel they were part of the development process;
2. Participants may not have agreed that the available information was indeed the most appropriate, or they may have disagreed with the quality of the material;
3. The choices made by participants were not included in the final system version.

The first two possibilities are in line with the adoption literature that discusses the substantive and influential nature of participation (Ahmad et al. 2012; Barki and Hartwick 2001), while the third concerns an unmet expectation (Díez and McIntosh 2009). In sum, we propose that participants did not perceive their participation as relevant and therefore developed a feeling of discomfort and dissatisfaction.

The main factor with the second-largest effect is the users’ training activity (APP) (level of significance below 0.05; coefficient 0.1388). This result is consistent with the extensive literature on the importance of training for both trainees and instructors, since the knowledge multiplication function has been indicated as a success factor in generating involvement in IS implementation (Yusof et al. 2007). Unlike REP, APP lasts longer because it occurs at two moments: (1) while participants comprehend the system functionalities that must be explained, and (2) while they explain those functionalities in training sessions. Additionally, this activity is collective, has a practical rather than theoretical approach, and aims at diffusing knowledge about the system. We argue that this activity enables participants to feel like a significant part of the process. Since they can understand the system deeply and are partly responsible for their classmates’ understanding, the activity provides a sense of ownership of what has been developed, in addition to the feeling that the system is good, important, personally relevant, and functional, which consequently diminishes the discomfort and anxiety related to the system (Li et al. 2015; Lim 2003; Aedo et al. 2010; Amoako-Gyampah 2007; Monnickendam et al. 2008; Venkatesh and Bala 2008).

Similarly, the results for input–output forms development (INP) are in line with the literature (level of significance below 0.05; coefficient 0.1356). Our results also highlight the importance of including users in deciding which information is relevant to the system (Rajan et al. 2016), so that no functionality is forgotten and the system does not become inflexible with respect to users’ work routines (Yusof et al. 2007). This is a collective activity of moderate duration with a more practical than theoretical orientation, focused on the system’s functionalities. Thus, the INP findings agree with almost all aspects of the APP analysis regarding opportunities to develop a sense of ownership in users. Nevertheless, INP differs from APP in that it develops a sense of ownership by treating users’ experience and routines as a fundamental part of the system.

The other main factors do not present significant effects that help explain the variance in intrinsic involvement. These variables share common aspects, such as individual execution and a theoretical approach.

6 Conclusion

This study aimed to investigate experimentally which situational involvement elements explain variation in intrinsic involvement, through a fractional factorial experiment with final-year students from an engineering course. Based on 64 observations, we explored seven factors related to situational involvement in hands-on activities. Our model explains 57.79% of the response variable variance (which is considered satisfactory when modeling human behavior) and enables the analysis of main effects and two-factor interactions.

The model identified that report format definition (REP), user’s training (APP), and input and output requirements definition (INP) had the largest significant effects. However, the first has a negative effect, while the other two have positive effects. Additionally, we found that the interactions with the greatest significant effects were between APP and REP and between REP and LAY (system layout development), in which REP, surprisingly, has a significantly positive effect.

This case clearly shows the importance of the experimental design: had the two-factor interactions not been analyzed, REP could arguably have been rejected as a type of user participation activity that hinders intrinsic involvement. However, our results suggest that involving users in REP in combination with another activity is potentially valuable and relevant, and it should indeed be done since, from an operational point of view, it is one of the simplest, briefest, and least elaborate activities.

Another critical observation derived from the experiment is that ‘User procedures manual development’ (MAN) should be avoided. Although MAN was not significant as a main factor, its interactions are disastrous, and we suggest excluding user participation in this activity from the IS development process to increase intrinsic involvement. This is also evident in the factor settings that optimize the response variable. We suggest that IS implementation professionals avoid including users in the design of the instruction manual. It may even be worth reflecting on whether a “design of instruction manual” step is necessary in the system development process at all. If it is, more agile mechanisms for knowledge appropriation and for dealing with questions should be adapted to this activity.

Therefore, we conclude that end-user inclusion is essential and relevant during any system development process, as long as this participation generates feelings of ownership and relevance, causing users to feel useful and necessary. Meaningless participation may have the opposite effect on intrinsic involvement, putting IS implementation success at risk due to potential non-adoption.

Regarding future research, our results on end-user involvement variables corroborate previous research and reinforce the importance of including these variables in adoption models, although they are typically neglected. We therefore strongly advise using these variables as constructs in IS adoption models while always analyzing the relevance of their elements; for example, we recommend examining whether manual design is needed as an activity.

References

Aedo I, Díaz P, Carroll JM, Convertino G, Rosson MB (2010) End-user oriented strategies to facilitate multi-organizational adoption of emergency management & information systems. Inf Process Manag 46:11–21

Ahmad R, Kyratsis Y, Holmes A (2012) When the user is not the chooser: learning from stakeholder involvement in technology adoption decisions in infection control. J Hosp Infect 81:163–168

Alavi M, Joachimsthaler E (1992) Revisiting DSS implementation research: a meta-analysis of the literature and suggestions for researchers. MIS Q 16(1):95

Allingham P, O’Connor M (1992) MIS success: Why does it vary among users? J Inf Technol 7:160–168. https://doi.org/10.1177/026839629200700305

Amoako-Gyampah K, White KB (1993) User involvement and user satisfaction. Inf Manag 25:1–10. https://doi.org/10.1016/0378-7206(93)90021-k

Amoako-Gyampah K (2007) Perceived usefulness, user involvement and behavioral intention: an empirical study of ERP implementation. Comput Hum Behav 23(3):1232–1248. https://doi.org/10.1016/j.chb.2004.12.002

Bagchi S, Kanungo S, Dasgupta S (2003) Modeling use of enterprise resource planning systems: a path analytic study. Europ J Inf Syst 12:142–158

Barki H, Hartwick J (1989) Rethinking the concept of user involvement. MIS Q 13(1):53–63

Barki H, Hartwick J (2001) Communications as a dimension of user participation. IEEE Transact Prof Commun 44(1):21–35

Baroudi JJ et al (1986) An empirical study on the impact of user involvement on system usage and information satisfaction. Commun ACM 29(3):232–238

Bergier B (2010) Users’ involvement may help respect social and ethical values and improve software quality. Inf Syst Front 12(4):389–397

Cobb P, Confrey J, diSessa A, et al (2003) Design experiments in educational research. Educ Res 32:9–13. https://doi.org/10.3102/0013189x032001009

Díez E, McIntosh BS (2009) A review of the factors which influence the use and usefulness of information systems. Environ Model Softw 24:588–602. https://doi.org/10.1016/j.envsoft.2008.10.009

Echeveste M, Ribeiro JL (1999) Planejando a otimização de processos. PPGEP/UFRGS, Porto Alegre

Edwards G, Kitzmiller RR, Breckenridge-Sproat S (2012) Innovative health information technology training. CIN: Comput Inf Nurs 30:104–109. https://doi.org/10.1097/ncn.0b013e31822f7f7a

Emanuel JT, Palanisamy M (2000) Sequential experimentation using two-level fractional factorials. Qual Eng 12(3):335–346

Fachel JMG, Camey S (2000) Avaliação psicométrica: a qualidade das medidas e o entendimento dos dados. In: Cunha JA (ed) Psicodiagnóstico V. Artmed, Porto Alegre, pp 158–170

Fakun D, Greenough RM (2004) An exploratory study into whether to or not to include users in the development of industrial hypermedia applications. Requir Eng 9:57–66

Falessi D, Juristo N, Wohlin C, Turhan B, Münch J, Jedlitschka A, Oivo M (2018) Empirical software engineering experts on the use of students and professionals in experiments. Empir Softw Eng. https://doi.org/10.1007/s10664-017-9523-3

Feldt R et al (2018) Four commentaries on the use of students and professionals in empirical software engineering experiments. Empir Softw Eng 23(6):3801–3820. https://doi.org/10.1007/s10664-018-9655-0

Greenland S et al (2015) Statistical tests, P values, confidence intervals, and power: a guide to misinterpretations. Europ J Epidemiol 31(4):337–350

Guimaraes T, Yoon Y, Clevenson A (1996) Factors important to expert systems success a field test. Inf Manag 30(3):119–130

Haider A (2008) Fallacies of technological determinism – lessons for asset management. First international conference on infrastructure systems and services: building networks for a brighter future (INFRA), pp 1–6

Hartwick J, Barki H (1994) Explaining the role of user participation in information system use. Manag Sci 40(4):440–465

Höst M (2000) Using students as subjects – a comparative study of students and professionals in lead-time impact assessment. Empir Softw Eng 5(3):201–214

Ives B, Olson MH (1984) User involvement and MIS success: a review of research. Manag Sci 30(5):586–603

Jackson CM, Chow S (1997) Toward an understanding of the behavioral intention to use an information system. Decis Sci 28(2):357–389

Jaspers MW, Khajouei R (2008) CPOE system design aspects and their qualitative effect on usability. Stud Health Technol Inform 136:309–314

Kappelman L, McLean E (1991) The respective roles of user participation and user involvement in information systems implementation success. In: International conference on information systems, New York, pp 339–348

Kelly MP, Richardson J, Corbitt B, Lenarcic J (2010) The impact of context on the adoption of health informatics in Australia. In: BLED 2010 Proceedings

Kramer T (2007) The effect of measurement task transparency on preference construction and evaluations of personalized recommendations. J Mark Res 44(2):224–233

Kumar N, Benbasat I (2006) The influence of recommendations and consumer reviews on evaluations of websites. Inf Syst Res 17(4):425–429

Lai PC (2017) The literature review of technology adoption models and theories for the novelty technology. J Inf Syst Technol Manag 14(1):21–38

Leclercq A (2007) The perceptual evaluation of information systems using the construct of user satisfaction. ACM SIGMIS Database 38(2):27

Leso BH, Cortimiglia MN (2021) The influence of user involvement in information system adoption: an extension of TAM. Cogn Technol Work. https://doi.org/10.1007/s10111-021-00685-w

Li J, Ji H, Qi L, Li M, Wang D (2015) Empirical study on influence factors of adaption intention of online customized marketing system in China. Int J Multimed Ubiquit Eng 10(6):365–378

Lim JA (2003) A conceptual framework on the adoption of negotiation support systems. Inf Softw Technol 45:469–477

Mason RL et al (2003) Statistical design and analysis of experiments: with applications to engineering and science. Wiley, New York

Matende S, Ogao P (2013) Enterprise resource planning (ERP) system implementation: a case for user participation. Proced Technol 9:518–526

McKeen JD, Guimaraes T (1997) Successful strategies for user participation in systems development. J Manag Inf Syst 14(2):133–150

Mertins L, White LF (2016) Presentation formats, performance outcomes, and implications for performance evaluations. In: Epstein MJ, Malina MA (eds) Advances in management accounting. Emerald, Bingley, pp 1–34

Monnickendam M, Savaya R, Waysman M (2008) Targeting implementation efforts for maximum satisfaction with new computer systems: results from four human service agencies. Comput Hum Behav 24:1724–1740

Montgomery DC (2001) Design and analysis of experiments, 4th edn. Wiley, Hoboken

Mukti SK, Rawani AM (2016) ERP system implementation issues and challenges in developing nations. ARPN J Eng Appl Sci 11:7989–7996

Nagelkerke NJD (1991) A note on a general definition of the coefficient of determination. Biometrika 78:691–692. https://doi.org/10.1093/biomet/78.3.691

Nanni LF, Ribeiro JL (1987) Planejamento e avaliação de experimentos. Porto Alegre: CPGEC/UFRGS, Caderno Técnico, p 193

Paré G, Sicotte C, Jacques H (2006) The effects of creating psychological ownership on physicians’ acceptance of clinical information systems. J Am Med Inform Assoc 13(2):197–205

Pogue D (2017) What happened to user manuals? Sci Am 316(4):30. https://doi.org/10.1038/scientificamerican0417-30

Rajan JV et al (2016) Understanding the barriers to successful adoption and use of a mobile health information system in a community health center in São Paulo, Brazil: a cohort study. BMC Med Inform Decis Mak 16(1):1–12

Ribeiro JLD, Caten CS (2001) Projeto de experimentos. Porto Alegre: FEENGE/UFRGS, Série Monográfica Qualidade, p 130

Sahu GP, Singh M (2016) Green information system adoption and sustainability: a case study of select Indian banks. In: Social media: the good, the bad, and the ugly. Springer, Cham, pp 292–304

Salahuddin L, Ismail Z (2015) Classification of antecedents towards safety use of health information technology: a systematic review. Int J Med Inform 84(11):877–891

Segal J, Morris C (2011) Scientific end-user developers and barriers to user/customer engagement. J Organ End User Comput 23:51–63. https://doi.org/10.4018/joeuc.2011100104

Tait P, Vessey I (1988) The effect of user involvement on system success: a contingency approach. MIS Q 12(1):91

Turan A, Tunç AÖ, Zehir C (2015) A theoretical model proposal: personal innovativeness and user involvement as antecedents of unified theory of acceptance and use of technology. Procedia Soc Behav Sci 210:43–51

Van Loggem BE (2014) ‘Nobody reads the documentation’: true or not? In: Proceedings of ISIC, the Information Behaviour Conference, Leeds: Part 1. http://InformationR.net/ir/19-3/isic/isic03.html. Accessed 13 May 2021

Venkatesh V, Bala H (2008) Technology acceptance model 3 and a research agenda on interventions. Decis Sci 39(2):273–315

Venkatesh V, Morris MG, Davis GB, Davis FD (2003) User acceptance of information technology: toward a unified view. MIS Q 27(3):425–478

Verhoef PC, Broekhuizen T, Bart Y et al (2019) Digital transformation: a multidisciplinary reflection and research agenda. J Bus Res 122:889–901. https://doi.org/10.1016/j.jbusres.2019.09.022

Wang W, Benbasat I (2005) Trust in and adoption of online recommendation agents. J Assoc Inf Syst 6(3):72–101

Wu JH, Wang YM (2008) Measuring ERP success: the ultimate users’ view. Int J Oper Prod Manag 26(8):882–903

Yoon Y, Guimaraes T, O’Neal Q (1995) Exploring the factors associated with expert systems success. MIS Q 19(1):83–106

Yusof MM (2015) A case study evaluation of a critical care information system adoption using the socio-technical and fit approach. Int J Med Inform 84(7):486–499

Yusof MM, Stergioulas L, Zugic J (2007) Health information systems adoption: findings from a systematic review. Stud Health Technol Inform 129:262–266

Zhang TC, Agarwal R, Lucas HC (2011) The value of IT-enabled retailer learning: personalized product recommendations and customer store loyalty in electronic markets. MIS Q 35(4):859–881

Acknowledgements

We thank the following Brazilian agencies for their financial support of this research: Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) and Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq).

Additional information

Accepted after 1 revision by Óscar Pastor.

About this article

Cite this article

Leso, B.H., Cortimiglia, M.N. & ten Caten, C.S. The Influence of Situational Involvement on Employees’ Intrinsic Involvement During IS Development. Bus Inf Syst Eng 64, 317–334 (2022). https://doi.org/10.1007/s12599-021-00719-7

DOI: https://doi.org/10.1007/s12599-021-00719-7