Abstract

There is no lack of studies dealing with the consistency of evaluations performed by pairwise comparison in the decision-making literature. Mostly, these studies offer algorithms for reducing the inconsistency of evaluations and indices to measure the evaluation’s consistency degree. The focus on these two research fronts does not cover all the gaps associated with the inconsistent evaluation problem. The existing algorithms are difficult to implement and do not preserve the original evaluations since the original evaluation matrix is replaced with a new matrix. Furthermore, the inconsistency of pairwise comparison has been associated with the specialist’s bounded rationality only at the theoretical-conceptual level. This research investigates the relationship between the lack of specialist knowledge and the inconsistency of evaluations, and introduces an approach that ensures the evaluation’s consistency by reducing the specialist’s cognitive stress when comparing a high number of alternatives. The results reveal that the specialist’s limited knowledge about the decision topic does not affect the consistency of the evaluations in the way that was expected. The inconsistency degree of the evaluations is 59% lower when the specialist has no knowledge about the decision topic but has theoretical knowledge and experience in evaluating alternatives by pairwise comparison. This is a remarkable contribution with a high degree of universality and applicability because instructing decision-makers on the inconsistency problem is a cheaper, easier way to increase the evaluation’s consistency degree without altering the original information. Furthermore, the introduced approach reduces the number of evaluations and evaluation time by 8.0 and 7.8 times, respectively.

1 Introduction

Composite indicators are one-dimensional representations resulting from the mathematical aggregation of multiple sub-indicators associated with a multidimensional phenomenon [26, 27, 38]. Studies on composite indicators have been gaining importance in the operational research literature and are already found in prominent journals in the area [43, 2, 11, 55, 7]. Composite indicators and operational research have a close relationship as they both address problems of a multi-criteria nature (El Gibari et al. [23]). Moreover, the construction of composite indicators involves mathematical operations widely explored in the operational research literature: normalization [34], weighting [46], and aggregation [26], as well as measuring the uncertainty that each of these operations introduces into the results [13].

Weighting is one of the most critical mathematical operations in building composite indicators [39, 3]. The sub-indicator weights are the same in the Equal Weights weighting scheme, extracted from the data in the Data-Driven weighting scheme, and derived from specialist opinion in the Participatory weighting scheme [28]. This research is focused on the Participatory weighting scheme and introduces an approach to overcome the problem of inconsistency when specialists evaluate alternatives by pairwise comparison.

Evaluation by pairwise comparison is considered highly effective because it decomposes the problem of evaluating all alternatives simultaneously into sub-problems in which only two alternatives are compared at a time [24]. However, such simplification introduces redundancy, since some comparisons can also be inferred from intermediate comparisons [8]. Thus, any problem that involves comparing three or more alternatives is subject to inconsistent evaluations [33].

For a better understanding of the problem of evaluation inconsistency, it is possible to use the example of the Cost of Doing Business Index (CDBI), detailed in Sect. 3.1. In this example, the CDBI consists of only three sub-indicators: \({x}_{1}\) costs of starting a business; \({x}_{2}\) import costs; \({x}_{3}\) building license costs. The pairwise comparison implies that the specialist must carry out \(n(n-1)/2\) evaluations, that is, three evaluations. Suppose that the specialist first evaluates that the sub-indicator \({x}_{1}\) has a much lower weight in the CDBI than the sub-indicator \({x}_{2}\) and a lower weight in the CDBI than the sub-indicator \({x}_{3}\), that is, \({x}_{1}<{x}_{3}<{x}_{2}\). In a second moment, the specialist evaluates that the sub-indicator \({x}_{2}\) has a lower weight in the CDBI than the sub-indicator \({x}_{3}\), that is, \({x}_{2}<{x}_{3}\). It is easy to see from this example that the second evaluation is inconsistent with the first evaluation, which indicates that \({x}_{2}>{x}_{3}\).
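The contradiction in this example can be detected mechanically. The sketch below is only an illustration and not part of the research: it treats each judgment "a has a lower weight than b" as a directed edge and reports an inconsistency when the judgments form a cycle. The function name `is_ordinally_consistent` and the tuple encoding of judgments are assumptions made for this example.

```python
from graphlib import CycleError, TopologicalSorter


def is_ordinally_consistent(judgments):
    """Return True if the judgments (lighter, heavier) admit a single ranking."""
    graph = {}
    for lighter, heavier in judgments:
        # Each node maps to the set of nodes that must come before it.
        graph.setdefault(heavier, set()).add(lighter)
        graph.setdefault(lighter, set())
    try:
        # Iterating the order raises CycleError when the judgments contradict.
        list(TopologicalSorter(graph).static_order())
        return True
    except CycleError:
        return False


# First evaluation: x1 < x3 < x2.
first = [("x1", "x3"), ("x3", "x2")]
# Second evaluation: x2 < x3, which contradicts x3 < x2.
second = [("x2", "x3")]

print(is_ordinally_consistent(first))           # consistent on its own
print(is_ordinally_consistent(first + second))  # contradiction detected
```

This only checks the order of the judgments; it says nothing about the intensity of the preferences, which is what the consistency indices discussed later measure.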

The issue of inconsistency in evaluations is crucial in the decision-making process, as the reliability of the priority vectors of the pairwise comparison matrices depends on the inconsistency degree of the evaluations [8]. Although a certain inconsistency degree is expected, highly inconsistent evaluations signal a lack of competence and attention in this process and poor reliability of the results [9].

Researchers indicate that specialists must revise their evaluations to reach an acceptable inconsistency degree when the number of alternatives to be evaluated by pairwise comparison is greater than six [1]. Furthermore, the number of alternatives must be less than ten for the comparisons to be performed acceptably [49, 28].

There is no lack of studies on the consistency of evaluations performed by pairwise comparison in the decision-making literature. However, this literature focuses on only two lines. One line develops algorithms capable of improving the consistency of the evaluations [33, 24, 4, 5, 9, 58]. Another line proposes indices that measure the consistency of the evaluations performed by pairwise comparison [48, 17, 31, 50, 1]. These two lines of research offer solutions to the problem of inconsistency of evaluations in pairwise comparison, but they do not cover all the gaps associated with the problem.

Researchers indicate that existing algorithms are difficult to implement and do not preserve the original comparison information, since they replace the original matrix with a new matrix (Ergu et al. [24]). Besides, current studies are not concerned with a deeper understanding of the causes of evaluation inconsistency. These studies suggest that the problem occurs due to limitations in knowledge about the decision topic [24] and due to the cognitive stress of specialists when evaluating many alternatives [29, 30, 28, 8].

The objective of this research is twofold. First, to investigate the relationship between the inconsistency of the evaluations and the specialists’ knowledge of the decision topic. Second, to introduce a simple approach capable of reducing cognitive stress on specialists in evaluating the weights of sub-indicators of a composite indicator.

This research intends to contribute to advancing the existing literature in three ways. Firstly, expanding and deepening the current literature that relates the specialist’s knowledge about the decision topic to the inconsistency of evaluations in pairwise comparison, as this relationship has only been explored from a theoretical-conceptual point of view. Secondly, exploring how two types of specialist knowledge, about the decision topic and about the problem of inconsistency in pairwise comparison, affect the inconsistency degree of the evaluations. This study is therefore a pioneer in providing empirical evidence of the relationship between the evaluation inconsistency degree and two different knowledge types. Thirdly, filling a gap in the current literature by offering an approach that reduces cognitive stress on specialists when the number of alternatives to be evaluated by pairwise comparison is high. This approach has high applicability and universality as it can be used in multi-criteria decision-making problems involving pairwise comparison. This contribution has important practical implications for operational research, especially for composite indicators, as it reconciles simplicity and rigor in the weighting process of sub-indicators based on specialist opinion.

The remaining parts of this research are structured as follows. The second section discusses the inconsistency of evaluations performed by pairwise comparison, the cognitive stress exerted on specialists during this evaluation process, and the theoretical-conceptual foundations of the approach introduced to solve this problem. The third section presents the materials and methods used to investigate the impact of limited specialist knowledge on the inconsistency degree in evaluations and the implementation of the proposed approach. The fourth and fifth sections present and analyze the results and highlight the main findings and contributions of the research. Finally, the conclusions are presented in section six.

2 Theoretical background

Specialists express opinions about alternatives in decision-making problems in different ways. In the specialized literature, these ways of expressing opinions are known as preference formats. There is a considerable number of studies on preference formats. The works performed by Chiclana et al. [14, 15], Pedrycz et al. [41], Ramalho et al. [44], and Ekel et al. [20] provide abundant information on the main theoretical, methodological, and practical aspects of the topic, supporting the following discussion.

The most commonly used preference formats are Ordering of Alternatives \(\left(\mathbf{O}\mathbf{A}\right)\), Utility Values \(\left(\mathbf{U}\mathbf{V}\right)\), Multiplicative Relations \(\left(\mathbf{M}\mathbf{R}\right)\), Hesitant Linguistic Preference Relation \(\left(\mathbf{H}\mathbf{L}\mathbf{P}\mathbf{R}\right)\), Probabilistic Linguistic Preference Relation \(\left(\mathbf{P}\mathbf{L}\mathbf{P}\mathbf{R}\right)\), Distribution Linguistic Preference Relation \(\left(\mathbf{D}\mathbf{L}\mathbf{P}\mathbf{R}\right)\), Fuzzy Estimations \(\left(\mathbf{F}\mathbf{E}\right)\), and Fuzzy Relations, which can be Additive Reciprocal \(\left(\mathbf{R}\mathbf{R}\right)\) or Non-Reciprocal \(\left(\mathbf{R}\mathbf{N}\right)\). The decision on which format to use is associated with the level of information uncertainty, the psychological disposition of the specialist, the specialist’s command of the topic, and the nature of the information, e.g., qualitative or quantitative [41].

No preference format is free from limitations. However, the operationalization of the \(\mathbf{O}\mathbf{A}\) format is considered simpler than that of the other formats. Decision-makers find it more challenging to evaluate alternatives quantitatively than to rank them. First, decision-makers have more confidence in the rankings of alternatives than in their respective values. Second, decision-makers often struggle to express how much better one alternative is than another. Third, the evaluation effectiveness is impaired due to differences between the specialists’ numerical interpretation of linguistic terms and the numerical representation of the model. Fourth, the quantitative evaluation of many alternatives exerts cognitive stress on decision-makers, resulting in inconsistent evaluations. This problem is aggravated when specialists evaluate alternatives by pairwise comparison through the \(\mathbf{M}\mathbf{R}\), \(\mathbf{H}\mathbf{L}\mathbf{P}\mathbf{R}\), \(\mathbf{P}\mathbf{L}\mathbf{P}\mathbf{R}\), \(\mathbf{D}\mathbf{L}\mathbf{P}\mathbf{R}\), \(\mathbf{F}\mathbf{E}\), \(\mathbf{R}\mathbf{R}\), and \(\mathbf{R}\mathbf{N}\) formats (Zhou et al. [58]). This cognitive stress is greater in the pairwise comparison, as evaluating \(n\) alternatives requires \(n(n-1)/2\) evaluations.

Pairwise comparison of alternatives is a robust and reliable form of evaluation, as its quality can be verified through indices that estimate the consistency of evaluations [8, 58]. Numerous indices that estimate the inconsistency degree of evaluations by pairwise comparison are reported in the literature: Consistency Index and the Consistency Ratio [48], Consistency Measure [31, 50], and Geometric Consistency Index [17]. In short, these indices associate a pairwise comparison matrix with a real number representing the evaluation’s inconsistency degree [9, 1]. A threshold value is applied to these indices to indicate whether the evaluation’s inconsistency degree is acceptable. In particular, the Consistency Ratio (\(\mathbf{C}\mathbf{R}\)) used in this research considers that a pairwise comparison matrix is consistent and suitable for use when \(\mathbf{C}\mathbf{R}\) < 0.10 [48].

The evaluation of a high number of alternatives increases the cognitive stress on specialists and the chances of the inconsistency degree of the evaluations exceeding the acceptance thresholds of the index. In these situations, evaluating alternatives using the \(\mathbf{O}\mathbf{A}\) format is a simpler and faster strategy to obtain a ranking of alternatives.

However, many methods of multi-criteria decision-making problems require the scores of alternatives. The weighting of sub-indicators is not performed from the sub-indicators ordered by importance. The weights are scores representing the relative importance of the sub-indicators in the composite indicator and cannot be represented by a ranking. Furthermore, the \(\mathbf{O}\mathbf{A}\) format is problematic when it is necessary to aggregate orderings performed by different decision-makers in a collective ordering, as the literature considers this operation very controversial (Bustince et al. [10]).

These two limitations substantially reduce the range of problems that can be solved by applying the \(\mathbf{O}\mathbf{A}\) format. For example, the evaluation by \(\mathbf{O}\mathbf{A}\) does not offer a viable solution for defining the weights of the sub-indicators of the composite indicator. So, how can the sub-indicators be weighted while taking advantage of the simplicity and speed of evaluating alternatives using the \(\mathbf{O}\mathbf{A}\) format?

The literature on preference format transformation functions provides some indications to answer this question. Employing different preference formats offers psychological comfort to the decision-maker in evaluating alternatives, but simultaneously, it results in heterogeneous information. Several studies in the operational research field offer the means for treating heterogeneous information. In particular, the studies by Zhang et al. [56, 57]; Chen et al. [12]; Li et al. [35]; Figueiredo et al. [25]; Wu and Liao [53]; Libório et al. [36, 37] show that it is possible to homogenize evaluations in different formats applying transformation functions.

Initially conceived to homogenize evaluations performed in different formats, the transformation functions allow the conversion of evaluations in \(\mathbf{O}\mathbf{A}\) formats to other formats, enabling the definition of weights from the converted evaluations. This approach allows taking advantage of the ease and speed of evaluating alternatives using the \(\mathbf{O}\mathbf{A}\) format to define the sub-indicator weights from the evaluations converted to other formats. However, this approach is not limited to simplifying the process of defining the weights of the sub-indicators, as its application guarantees the consistency of the evaluations [14, 44].

3 Application example: weighting of sub-indicators based on specialist opinion

The development of this research is divided into two parts. The first part investigates the impact of specialist knowledge on the inconsistency of the evaluations. The second part describes the approach introduced for reducing the cognitive stress on specialists when comparing a large number of alternatives by pairwise comparison. These two parts are associated with assigning weights to the 17 sub-indicators of the composite indicator Cost of Doing Business Index.

3.1 Cost of Doing Business Index (CDBI)

The CDBI was proposed by Bernardes et al. [6] as an alternative to the Ease of Doing Business Index (EDBI). The EDBI was first published in 2003 by the World Bank to investigate the relationship between the effect of costs arising from excessive regulation on economic development and its impact on the poorest [19]. The index aggregates sub-indicators that reflect the ease of doing business in 190 countries. It is widely used to analyze the quality of the business environment, its relationship with economic growth [32], and foreign direct investment [16]. Although very popular in the business literature, the EDBI has several flaws and limitations that are widely known: unreliable data, constructs with low explanatory power, and the absence of sub-indicator weights [42, 6]. These flaws and limitations were recognized by the World Bank itself in 2021, which decided to end the index disclosure [52].

The theoretical and operational framework of the CDBI offers three solutions to the limitations and failures of the EDBI. First, it uses only sub-indicators whose data are controlled by governments [21]. Second, the construct resulting from these data exceeds Cronbach’s alpha test acceptance threshold of 0.50 (Bernardes et al. [6]). Third, it considers the relative importance of the sub-indicators in the index [36, 37]. This research is dedicated particularly to the improvement of this last solution.

The CDBI provides information on the quality of the institutional and business environment (Bernardes et al. [6]), serving as a measure of economic transaction costs, which strongly correlate with the Gross Domestic Product of countries [22]. In addition, CDBI offers the possibility of identifying which costs most impact the business environment and which reforms governments should prioritize to boost economic development [21].

The index aggregates 17 sub-indicators that reflect the following cost groups: construction permits, enforcing contracts, getting electricity, paying taxes, resolving insolvency, registering property, starting a business, and trading across borders [6]. This large number of sub-indicators makes the CDBI an appropriate example for this research because the evaluation inconsistency is directly and positively related to the number of alternatives. The data was collected from the World Bank DataBank: Doing Business [51].

3.2 Impact of specialist knowledge on the inconsistency of evaluations

The development of this part of the research consists of selecting specialists with two types of knowledge. The first type of knowledge is associated with the decision topic: the cost of doing business. The second type of knowledge is associated with the problem of inconsistency of evaluations in pairwise comparison.

Two groups of specialists were formed based on these two types of knowledge. Group A was formed by five specialists with knowledge about the costs of doing business, with more than five years of professional experience in the business field and an academic background of a bachelor’s degree in business or higher. Group B was formed by five specialists without knowledge about the costs of doing business but with theoretical knowledge about the problem of inconsistency of evaluations in pairwise comparison, as well as practical experience in evaluating alternatives using the \(\mathbf{M}\mathbf{R}\) format.

First, the specialists from Groups A and B evaluated the 17 CDBI sub-indicators using the \(\mathbf{M}\mathbf{R}\) format through a matrix \({MR}_{ n\times n}\) of positive and reciprocal preference relationships \(m\left({x}_{k},{x}_{l}\right), k, l=1,\dots ,n\), where \(m\left({x}_{k},{x}_{l}\right)\) is the intensity of preference for the alternative \({x}_{k}\) over \({x}_{l}\) according to Saaty’s [47] scale. Numerically, considering that the pairwise comparison of \(n\) alternatives requires \(n(n-1)/2\) evaluations and that \(n\)=17, each specialist of Groups A and B performed 136 evaluations.
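The evaluation count quoted above follows directly from the formula \(n(n-1)/2\). A minimal sketch of the arithmetic, where the function name `pairwise_evaluations` is chosen only for this illustration:

```python
def pairwise_evaluations(n: int) -> int:
    """Number of distinct pairs among n alternatives: n(n-1)/2."""
    return n * (n - 1) // 2


# Three sub-indicators, as in the introductory example.
print(pairwise_evaluations(3))
# The 17 CDBI sub-indicators evaluated by each specialist.
print(pairwise_evaluations(17))
```

The count grows quadratically, which is why the number of alternatives weighs so heavily on the specialist’s workload.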

Secondly, the individual weights were obtained by normalizing the evaluation matrices and taking the arithmetic mean of each row of the normalized matrix. Then, the consistency ratio \(\mathbf{C}\mathbf{R}\) was calculated from these averages by the following expression:

\[CR=\frac{\left({\lambda }_{max}-n\right)/\left(n-1\right)}{RI} \qquad (1)\]

where \({\lambda }_{max}\) is the maximum eigenvalue of the matrix \({MR}_{ n\times n}\), and \(RI\) is the random index associated with the number of alternatives \(n\). For \(n\)=17, the \(RI\) value is equal to 1.59.
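The calculation described above can be sketched in a few lines. This is a hedged illustration rather than the authors’ implementation: the function name `consistency_ratio` is hypothetical, and \({\lambda }_{max}\) is approximated here by the average of the ratios \((MR\cdot w)_{i}/{w}_{i}\), a common shortcut that is an assumption of this sketch. The 3×3 matrix is an invented, deliberately inconsistent example, and 0.58 is the random index for \(n\)=3 used later in the text.

```python
def consistency_ratio(matrix, random_index):
    """Approximate Saaty's consistency ratio of a reciprocal matrix."""
    n = len(matrix)
    # Normalize each column so that it sums to one.
    col_sums = [sum(matrix[i][j] for i in range(n)) for j in range(n)]
    normalized = [[matrix[i][j] / col_sums[j] for j in range(n)]
                  for i in range(n)]
    # Weights: arithmetic mean of each row of the normalized matrix.
    weights = [sum(row) / n for row in normalized]
    # Ratios (A w)_i / w_i; their average approximates lambda_max (assumption).
    ratios = [sum(matrix[i][j] * weights[j] for j in range(n)) / weights[i]
              for i in range(n)]
    lambda_max = sum(ratios) / n
    consistency_index = (lambda_max - n) / (n - 1)
    return consistency_index / random_index


# Invented cyclic judgments: x1 over x2, x2 over x3, x3 over x1.
inconsistent = [
    [1.0, 2.0, 0.5],
    [0.5, 1.0, 2.0],
    [2.0, 0.5, 1.0],
]
cr = consistency_ratio(inconsistent, random_index=0.58)
print(cr > 0.10)  # the matrix fails the 0.10 acceptance threshold
```

For a perfectly consistent matrix the ratios all equal \(n\), so the function returns a value that is zero up to floating-point error.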

Finally, the collective weights were obtained by aggregating the individual weights of each sub-indicator by the arithmetic mean. From these weights, the individual \({\mathbf{C}}^{\mathbf{I}}\) and group \({\mathbf{C}}^{\mathbf{G}}\) consensus degree was obtained through the following expressions:

where \({O}^{G}\left({X}_{k}\right)\) corresponds to the position of the \(k\)-th sub-indicator of the collective opinion, \({O}^{{E}_{i}}\left({X}_{k}\right)\) corresponds to the \(k\)-th position of the sub-indicator of the specialist opinion \({E}_{i}\).

3.3 Approach to reducing cognitive stress on specialists by comparing a large number of alternatives

The development of this part of the research consists of converting the evaluations performed in the \(\mathbf{O}\mathbf{A}\) format to the \(\mathbf{M}\mathbf{R}\) format and defining the sub-indicator weights by processing the evaluations in \(\mathbf{M}\mathbf{R}\) format. This approach was implemented by ten specialists with knowledge about the costs of doing business and who met the same requirements as Group A.

The ten specialists from the so-called Group C evaluated the sub-indicators using the \(\mathbf{O}\mathbf{A}\) format. The \(\mathbf{O}\mathbf{A}\) format is expressed through a vector \(O=\left[o\left({x}_{1}\right),o\left({x}_{2}\right),\dots , o\left({x}_{k}\right)\dots ,o\left({x}_{n}\right)\right]\), where \(o\left({x}_{k}\right)\) is a permutation function that indicates the position of the sub-indicator \({x}_{k}\) among the integer values \(\{1, 2, 3, . . . , n\}\). Among these integer values, 1 corresponds to the sub-indicator that most impacts the costs of doing business, and \(n\) to the sub-indicator with the least impact. The ten evaluations in the \(\mathbf{O}\mathbf{A}\) format were converted into the \(\mathbf{M}\mathbf{R}\) format through the transformation function:

\[\mathbf{M}\mathbf{R}\left({X}_{k}{X}_{l}\right)={m}^{\frac{{O}_{l}-{O}_{k}}{n-1}} \qquad (4)\]

where \({X}_{k}{X}_{l}\) is the preference relationship between \({X}_{k}\) and \({X}_{l}\), \({O}_{l}\) and \({O}_{k}\) are the order of the \(l\)-th and \(k\)-th alternative, \(m=9\), corresponding to the upper limit of the scale proposed by Saaty [47], p. 246 for the construction of the multiplicative relationship matrix \(\mathbf{M}\mathbf{R}\left({X}_{k}{X}_{l}\right)\).
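A minimal sketch of such a conversion is given below. It assumes the exponential form \({m}^{({O}_{l}-{O}_{k})/(n-1)}\), which is commonly used to turn orderings into multiplicative relations and agrees with the symbols defined above; the function name `oa_to_mr` and the list encoding of positions are hypothetical choices for this illustration.

```python
def oa_to_mr(positions, m=9.0):
    """Convert an ordering into a multiplicative preference matrix.

    positions[k] is the rank of alternative k (1 = most important).
    Assumed form: MR[k][l] = m ** ((O_l - O_k) / (n - 1)).
    """
    n = len(positions)
    return [[m ** ((positions[l] - positions[k]) / (n - 1))
             for l in range(n)]
            for k in range(n)]


# Ranks of x1, x2, x3 when x2 is first, x3 second, and x1 third.
mr = oa_to_mr([3, 1, 2])
for row in mr:
    print([round(value, 4) for value in row])
```

Because every entry is a power of the same base, the product of two entries \(MR[k][l]\cdot MR[l][j]\) equals \(MR[k][j]\), which is why the converted matrices are reciprocal and transitive by construction.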

Let us now see the application of (4) in the conversion of the vector \(\{ {x}_{2}, {x}_{3}, {x}_{1}\}\) to the \(\mathbf{M}\mathbf{R}\) format in the construction of the matrix \({MR}_{ n\times n}\):
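As a hedged illustration of this conversion, assume that the vector lists the sub-indicators from most to least important, so that \({O}_{2}=1\), \({O}_{3}=2\), and \({O}_{1}=3\), and that (4) has the exponential form \({m}^{({O}_{l}-{O}_{k})/(n-1)}\) with \(m=9\) and \(n=3\). Under these assumptions, which the surviving text does not confirm, the entries and the resulting matrix would be:

```latex
\[
\mathbf{MR}(X_1X_2)=9^{\frac{1-3}{2}}=\tfrac{1}{9},\qquad
\mathbf{MR}(X_1X_3)=9^{\frac{2-3}{2}}=\tfrac{1}{3},\qquad
\mathbf{MR}(X_2X_3)=9^{\frac{2-1}{2}}=3
\]
\[
MR_{3\times 3}=
\begin{pmatrix}
1 & 1/9 & 1/3\\
9 & 1 & 3\\
3 & 1/3 & 1
\end{pmatrix}
\]
```

The entries below the diagonal are the reciprocals of those above it, and \(\mathbf{MR}(X_1X_2)=\mathbf{MR}(X_1X_3)\cdot \mathbf{MR}(X_3X_2)\), so the matrix is transitive.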

This conversion allows obtaining ten matrices \({\mathbf{M}\mathbf{R}}_{ n\times n}\) of reciprocal preference relations, with a consistency ratio equal to zero, since the transitivity of the evaluations is preserved by the transform function [14, 20].

The \(\mathbf{C}\mathbf{R}\) of the matrices obtained through (4) is calculated in three steps. First, the components \({w}_{i}\) of the eigenvector, which are the sub-indicator weights, are calculated. They are obtained from the average of each row of the normalized matrix (7):

Then, each eigenvalue \({\lambda }_{i}\) is obtained by multiplying the \(i\)-th row of the matrix (7) by the eigenvector of (8) and dividing the result by the component \({w}_{i}\), as follows:

Finally, the consistency ratio \(\mathbf{C}\mathbf{R}\) is calculated considering the maximum eigenvalue (\({\lambda }_{max}\)=3) of (9), the number of sub-indicators (\(n\)=3), and the random index (\(RI\)=0.58) as follows:
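The three steps can be traced by hand. The derivation below is a sketch, not a reproduction of the original displays: it assumes the 3×3 matrix with rows \((1, 1/9, 1/3)\), \((9, 1, 3)\), and \((3, 1/3, 1)\), which is what the exponential form of (4) would give for the ordering in which \({x}_{2}\) comes first, \({x}_{3}\) second, and \({x}_{1}\) third.

```latex
% Step 1: the column sums are 13, 13/9 and 13/3, so every normalized
% column equals (1/13, 9/13, 3/13) and the row averages are
\[
w=\left(\tfrac{1}{13},\ \tfrac{9}{13},\ \tfrac{3}{13}\right)
\]
% Step 2: each row of the matrix times w, divided by w_i
\[
\lambda_1=\frac{1\cdot\frac{1}{13}+\frac{1}{9}\cdot\frac{9}{13}+\frac{1}{3}\cdot\frac{3}{13}}{\frac{1}{13}}=3,\qquad
\lambda_2=\frac{9\cdot\frac{1}{13}+1\cdot\frac{9}{13}+3\cdot\frac{3}{13}}{\frac{9}{13}}=3,\qquad
\lambda_3=\frac{3\cdot\frac{1}{13}+\frac{1}{3}\cdot\frac{9}{13}+1\cdot\frac{3}{13}}{\frac{3}{13}}=3
\]
% Step 3: consistency ratio with lambda_max = 3, n = 3, RI = 0.58
\[
CR=\frac{\left(\lambda_{max}-n\right)/\left(n-1\right)}{RI}=\frac{(3-3)/(3-1)}{0.58}=0
\]
```

All three ratios coincide with \(n\), which is the signature of a perfectly consistent matrix.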

The transformation function (4) was also chosen so that the results of the two evaluation formats are fully comparable. Besides, the \(\mathbf{M}\mathbf{R}\) format is associated with one of the most popular multi-criteria decision-making methods in the literature: the Analytic Hierarchy Process [48].

Figure 1 offers an overview of the operational framework developed in the research. It includes a description of the knowledge of the experts in each group, shows the preference format used to evaluate the sub-indicators weights by the specialists in each group, and defines the moment for converting the evaluations performed in \(\mathbf{O}\mathbf{A}\) to the \(\mathbf{M}\mathbf{R}\) format. Figure 1 also presents the parameters used to analyze the results: time spent to carry out the evaluations, consistency index of the matrices, sub-indicator weights, and consensus degree on the weights.

4 Presentation and analysis of the results

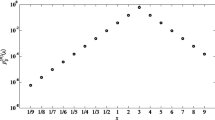

The research’s first set of results provides evidence of the direct and positive relationship between the lack of specialized knowledge about pairwise comparisons and the inconsistency of the evaluations. The evaluations made by the five specialists who know about business costs and do not know about pairwise comparisons are highly inconsistent. The \(\mathbf{C}\mathbf{R}\) of the five evaluation matrices varies between 0.22 and 0.75, with an average of 0.40. The least inconsistent evaluations were performed by Specialist 5. These assessments have a \(\mathbf{C}\mathbf{R}\) = 0.22, much higher than the threshold of 0.10.

The analysis of the time spent on the evaluations also brings to light aspects that had not been explored until then about the relationship between the specialist’s knowledge of pairwise comparisons and evaluation inconsistency. Two specialists needed more than forty minutes to complete the 136 evaluations in \(\mathbf{M}\mathbf{R}\) format. This high amount of time specialists spend in evaluations indicates that cognitive stress is a significant source of inconsistency in evaluations.

Table 1 presents the \(\mathbf{C}\mathbf{R}\) and the evaluation time of each of the five specialists in Group A, together with the consensus degree among the specialists and the weights of the seventeen sub-indicators obtained from the evaluations performed in \(\mathbf{M}\mathbf{R}\) format.

Table 2 presents the results of the evaluations performed by specialists from Group B, revealing that knowledge about the consistency of the evaluations allows obtaining matrices with a lower consistency ratio than those obtained by specialists from Group A. This is compelling empirical evidence of the direct and positive effect of the specialist’s lack of knowledge on the inconsistency of the evaluations carried out by pairwise comparison.

A comparison of the results presented in Tables 1 and 2 reveals a direct and positive relationship between the evaluation time and the evaluation’s inconsistency. The consistency ratio of the matrices and the time spent on the evaluations are higher in Group A, which comprises specialists without any knowledge of the problem of inconsistent evaluations in pairwise comparison. Specialists from Group A spend an average of 31 min performing the evaluation, generating matrices with \(\mathbf{C}\mathbf{R}\) = 0.40. Specialists from Group B performed the evaluations in 20 min on average, generating matrices with \(\mathbf{C}\mathbf{R}\) = 0.23.

Finally, Tables 1 and 2 show that the consensus degree positively relates to the specialist’s knowledge of business costs. Specialists with knowledge about business costs (Group A) present 1.23 times more convergent evaluations than specialists without this knowledge (Group B).

The research’s second set of results provides evidence of the advantages of evaluating the sub-indicators through the \(\mathbf{O}\mathbf{A}\) format and obtaining the weights by converting the evaluations for the \(\mathbf{M}\mathbf{R}\) format. Table 3 shows the order of importance of sub-indicators in the cost of doing business according to the ten specialists of Group C.

The results in Table 3 indicate the order of importance of the sub-indicators in constructing the Cost of Doing Business Index. However, this ordering does not allow calculating the index, as it is built from aggregating weighted (normalized) sub-indicators.

At this point, it is relevant to highlight the advantages of evaluating the sub-indicators using the \(\mathbf{O}\mathbf{A}\) format and converting these evaluations to the \(\mathbf{M}\mathbf{R}\) format to obtain the weights of the sub-indicators. First, the evaluation in \(\mathbf{O}\mathbf{A}\) format requires less cognitive effort from specialists. Group C specialists spend an average of 4 min 11 s to perform the evaluations. This evaluation time represents a drastic reduction of more than 15 min in relation to the evaluations carried out in the \(\mathbf{M}\mathbf{R}\) format in Groups A and B, which spend an average of 31 min 24 s and 21 min 00 s, respectively. Second, the matrices of the evaluations converted from the \(\mathbf{O}\mathbf{A}\) to the \(\mathbf{M}\mathbf{R}\) format through the transformation function have a consistency ratio equal to zero. Third, obtaining weights indirectly does not affect the convergence of opinions among specialists. The consensus degree among specialists in Group C is 0.78.

Table 4 presents the sub-indicator weights obtained from converting the evaluations from the \(\mathbf{O}\mathbf{A}\) format to \(\mathbf{M}\mathbf{R}\), the time specialists spent evaluating the sub-indicators in the \(\mathbf{O}\mathbf{A}\) format, and the consensus degree among the ten specialists in Group C.

5 Research findings and contributions

The simplification of the evaluation process that converts the sub-indicators ordered by importance into weights leads to four main findings. First, converting evaluations in the \(\mathbf{O}\mathbf{A}\) format to the \(\mathbf{M}\mathbf{R}\) format eliminates the need to review inconsistent evaluations. Second, the transformation function that converts the evaluations in the \(\mathbf{O}\mathbf{A}\) format to the \(\mathbf{M}\mathbf{R}\) format yields matrices with a consistency ratio equal to zero, and therefore below the 0.10 acceptance threshold. Third, the number of evaluations drops significantly when opting for the \(\mathbf{O}\mathbf{A}\) format over the \(\mathbf{M}\mathbf{R}\) format. The \(\mathbf{O}\mathbf{A}\) format requires 17 evaluations, while the \(\mathbf{M}\mathbf{R}\) format requires 136 evaluations. Fourth, the evaluation process is simplified when specialists evaluate alternatives in the \(\mathbf{O}\mathbf{A}\) format. On average, the ten specialists who ranked the sub-indicators by importance evaluated the 17 alternatives in 4 min.

The transformation of evaluations in the \(\mathbf{O}\mathbf{A}\) format to the \(\mathbf{M}\mathbf{R}\) format reduces by eight times the number of evaluations needed to assign weights to the sub-indicators. This reduction allows Group C specialists to evaluate the 17 alternatives in less time than Group A and Group B specialists. Group C specialists spend 7.5 and 4.8 times less time evaluating alternatives than Group A and Group B specialists, respectively. The lower cognitive stress on the specialists in Group C, resulting from simplifying the evaluation process, allows for obtaining a satisfactory consensus degree.

Besides, the research’s main findings summarized in Table 5 indicate that simplifying the evaluation process does not influence the consensus degree among specialists. The consensus degree among groups of specialists who have knowledge about the costs of doing business is \({\mathbf{C}}^{\mathbf{G}}\)=0.78 for Group C and \({\mathbf{C}}^{\mathbf{G}}\)=0.81 for Group A. However, it is necessary to consider that Group C has twice as many specialists as Group A and that the evaluations of the specialists of Group A are inconsistent. Besides, the consensus degree is higher than 0.70 for the seventeen sub-indicators in Group C and the sixteen sub-indicators in Group A. The consistency ratio of the matrices generated by the evaluations of the specialists in Group A is, on average, 0.39, exceeding the acceptance threshold of 0.10.

The evaluations performed in the \(\mathbf{M}\mathbf{R}\) format take 26.7 min on average. However, the specialists of Group A took 1.57 times as long to evaluate the 17 sub-indicators as the specialists of Group B. The consistency ratio of the matrices in Group B is, on average, 59% lower than in Group A. These results suggest that the consistency ratio depends more on knowledge about the problem of inconsistency of evaluations in pairwise comparison than on knowledge about the cost of doing business. However, the results also show that knowledge about the problem of inconsistency of evaluations in pairwise comparison is not enough to obtain matrices with acceptable consistency ratios when the number of pairwise comparisons is high.
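
For readers who want to reproduce the kind of check discussed above, the following is a minimal sketch of a consistency ratio calculation in the style of Saaty [47, 48], namely CR = CI / RI with CI = (λmax − n) / (n − 1). It is not the authors' code. The function names, the two 3×3 example matrices, and the use of power iteration to estimate the principal eigenvalue are assumptions made for illustration, and the random index table is limited to the widely quoted values for orders 1 to 10.

```python
# Widely quoted random index (RI) values for matrix orders 1..10.
# Values for larger orders (such as n = 17) differ between sources,
# so they are deliberately left out of this sketch.
RANDOM_INDEX = {1: 0.0, 2: 0.0, 3: 0.58, 4: 0.90, 5: 1.12,
                6: 1.24, 7: 1.32, 8: 1.41, 9: 1.45, 10: 1.49}


def principal_eigenvalue(a, iterations=200):
    """Estimate the largest eigenvalue of a positive matrix by power iteration."""
    n = len(a)
    v = [1.0 / n] * n          # start from a vector whose entries sum to 1
    lam = float(n)
    for _ in range(iterations):
        w = [sum(a[i][j] * v[j] for j in range(n)) for i in range(n)]
        lam = sum(w)           # because v sums to 1, sum(A v) estimates lambda_max
        v = [x / lam for x in w]
    return lam


def consistency_ratio(a):
    """Return CR = CI / RI for a reciprocal pairwise comparison matrix."""
    n = len(a)
    if n < 3:
        return 0.0             # matrices of order 1 or 2 are always consistent
    ci = (principal_eigenvalue(a) - n) / (n - 1)
    return ci / RANDOM_INDEX[n]


# Hypothetical example matrices (not data from the study).
consistent = [[1, 2, 4], [1 / 2, 1, 2], [1 / 4, 1 / 2, 1]]
intransitive = [[1, 3, 1 / 3], [1 / 3, 1, 3], [3, 1 / 3, 1]]  # A > B, B > C, yet C > A

print(abs(consistency_ratio(consistent)) < 1e-9)   # fully transitive judgments
print(consistency_ratio(intransitive) > 0.10)      # exceeds the 0.10 threshold
```

The second matrix encodes a preference cycle, which is the kind of intransitivity that pushes the ratio above the 0.10 acceptance threshold.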

Furthermore, the lack of knowledge about the cost of doing business drastically reduces the consensus degree. The consensus degree among specialists in Group B is only 0.67. The consensus degree among Group B specialists, who do not know the cost of doing business field, is 14% lower than in Group C and 17% lower than in Group A. These results show that knowing the cost of doing business increases the consensus degree.
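
These percentages follow from the reported consensus degrees when the difference is expressed relative to the group being compared against (0.78 for Group C and 0.81 for Group A), which is the reading assumed in the worked arithmetic below.

\[
\frac{0.78-0.67}{0.78}\approx 0.14, \qquad \frac{0.81-0.67}{0.81}\approx 0.17 .
\]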

The results presented in this research strongly indicate that transferring knowledge about the problem of inconsistency of evaluations in pairwise comparison to the decision maker is a strategy with a high potential for reducing the consistency index of the matrices. However, this reduction may not be enough to reach the threshold of 0.10, in which case a second strategy is required. This second strategy can be either to invite the specialists for a second round of evaluations or to implement the approach proposed in this research.

However, inviting the specialists for a second round of evaluations does not guarantee that the consistency index of the matrices will reach the acceptance limit, as it does not reduce the cognitive stress of evaluating a high number of alternatives. This situation makes the proposed approach the natural strategy for solving the problem, as it reduces the number of evaluations. In particular, transformation functions make it possible to convert the 17 evaluations in the \(\mathbf{O}\mathbf{A}\) format into 136 evaluations in the \(\mathbf{M}\mathbf{R}\) format and then to obtain the sub-indicators’ weights quickly, consistently, and with a satisfactory consensus degree. In short, the results show that the application of the proposed approach has three important advantages in decision-making problems involving the evaluation of a large number of alternatives:

- Obtaining fully consistent evaluations regardless of the number of alternatives, resulting from the application of a transformation function that guarantees the transitivity of the evaluations;

- Drastic reduction of cognitive effort on experts when evaluating a large number of alternatives, represented by the time spent in the evaluation;

- The simplification of the evaluation process of alternatives does not interfere with the convergence of opinion among specialists, reflected in a high degree of consensus among specialists.
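
The first advantage can be illustrated with a short sketch. The transformation function used in this research is defined in an earlier section and is not reproduced here, so the code below assumes one form in the spirit of the transformation functions discussed in the literature cited in this article [14, 15]: a_ij = 9^((o_j − o_i)/(n − 1)), where o_i is the position of alternative i in the ordering (1 = most important). The function names, the four-item ordering, and the use of row geometric means to derive weights are hypothetical choices for illustration only.

```python
import math


def ordering_to_multiplicative(ranks, scale=9.0):
    """Map rank positions (1 = most important) to a reciprocal matrix.

    Assumed transformation: a_ij = scale ** ((o_j - o_i) / (n - 1)).
    """
    n = len(ranks)
    if n < 2:
        return [[1.0]]
    return [[scale ** ((ranks[j] - ranks[i]) / (n - 1)) for j in range(n)]
            for i in range(n)]


def geometric_mean_weights(matrix):
    """Derive weights as row geometric means, normalized to sum to 1."""
    gm = [math.prod(row) ** (1.0 / len(row)) for row in matrix]
    total = sum(gm)
    return [g / total for g in gm]


def is_transitive(matrix, tol=1e-9):
    """Check a_ij * a_jk == a_ik for every triple (full consistency)."""
    n = len(matrix)
    return all(
        abs(matrix[i][j] * matrix[j][k] - matrix[i][k]) <= tol * matrix[i][k]
        for i in range(n) for j in range(n) for k in range(n)
    )


ranks = [2, 1, 4, 3]  # hypothetical ordering of four sub-indicators
matrix = ordering_to_multiplicative(ranks)
weights = geometric_mean_weights(matrix)

print(is_transitive(matrix))
print([round(w, 3) for w in weights])
```

Under this assumed function, every product a_ij · a_jk equals a_ik by construction, so the resulting matrix is fully consistent whatever the number of alternatives. Calling `ordering_to_multiplicative(list(range(1, 18)))` fills all 136 upper-triangle comparisons of a 17×17 matrix from only 17 rank positions.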

Besides, the research results offer two important novelties for the decision-making literature:

- The process of evaluating alternatives must include a stage of knowledge transfer about the problem of intransitivity of evaluations;

- A third justification for the problem of matrix inconsistency is the specialist’s lack of knowledge about the problem of intransitivity of evaluations when these are performed by pairwise comparison.

Transferring knowledge about the problem of intransitivity of evaluations has great potential to reduce the need to reassess alternatives or to apply algorithms to reduce matrix inconsistency. This finding is an important novelty for the multicriteria decision-making literature, which has focused exclusively on solving the problem of inconsistency of matrices after evaluating alternatives. This finding expands the literature that discusses possible causes of inconsistencies in evaluations performed by pairwise comparisons [24, 41, 20]. This discovery makes this research a pioneer in pointing out that the inconsistency of evaluations is not caused solely by the cognitive stress of evaluating many alternatives [29, 30, 28, 8], adding the lack of knowledge about the intransitivity of evaluations by pairwise comparison as a relevant cause of the inconsistency of the evaluations.

The approach that defines the weights of the sub-indicators based on their ordering is also a point of originality and an important novelty for the composite indicators literature, as it offers a new way of evaluating sub-indicator weights that is especially useful when the number of alternatives and the chance of matrix inconsistency are high.

6 Conclusions

The results of this research permit us to conclude that knowledge about the consistency of evaluations allows obtaining matrices with a lower consistency ratio (\(\mathbf{C}\mathbf{R}\)=0.23) than knowledge about the problem of the cost of doing business (\(\mathbf{C}\mathbf{R}\)=0.39). This knowledge does not prevent the consistency ratio of the matrices from exceeding the acceptance threshold of 0.10. However, transferring the knowledge about the consistency of evaluations to specialists who do not have this knowledge is a helpful strategy to reduce the consistency ratio of matrices.

The simplification of the evaluation process in which the alternatives ordered by importance are converted into weights has the advantage of obtaining reliable matrices in a shorter time and with a satisfactory consensus degree. The transformation of evaluations from the \(\mathbf{O}\mathbf{A}\) format to the \(\mathbf{M}\mathbf{R}\) format has as one of its main properties the maintenance of consistency of evaluations. Specialists who know the cost of doing business field need 31 min and 24 s to evaluate the seventeen alternatives in the \(\mathbf{M}\mathbf{R}\) format and 4 min and 11 s in the \(\mathbf{O}\mathbf{A}\) format. The consensus degree reached by this simplified process is satisfactory, considering the time taken for the evaluations and the number of specialists who evaluated the alternatives. The consensus degree among the ten specialists in Group C who evaluated the seventeen alternatives in 4 min and 11 s was \({\mathbf{C}}^{\mathbf{G}}\)=0.78. This result is 0.03 lower than that of the five specialists in Group A, who needed 26 min and 47 s longer to evaluate the same seventeen alternatives. In addition, all seventeen sub-indicators of Group C present a consensus degree higher than 0.70. This number drops to sixteen sub-indicators for Group A and six sub-indicators for Group B.

The contributions of this research have a high degree of universality and applicability in decision-making problems involving pairwise comparisons and a high potential for appropriation by researchers and practitioners in the operational research field. The decision maker’s lack of knowledge about the problem of inconsistency in pairwise comparisons strongly impacts the consistency index of evaluations. Instructing the decision maker about this problem increases the consistency of their evaluations and is an alternative to inconsistency reduction algorithms, which are challenging to implement and modify the original evaluation matrix. Reducing the inconsistency of evaluations through knowledge transfer is extremely easy yet remarkably important for weighting sub-indicators by specialist opinion because it allows for more accurate evaluations and reduces the need for reevaluations.

The benefit of instructing the decision-maker on the problem of inconsistent evaluations is limited in scope. Problems involving a large number of alternatives increase the chances that the acceptance threshold of the inconsistency index will be exceeded. These situations are especially well suited to the approach introduced in this research, which makes it possible to obtain the sub-indicator weights by ordering the alternatives and converting the ordering into pairwise comparison matrices. The possibilities of implementing this approach in the weighting of sub-indicators are extensive and relevant. Composite indicators are usually constructed with over ten sub-indicators (e.g., Ease of Doing Business Index, Sustainable Development Goals Index, and Global Innovation Index). In addition, composite indicator scores are highly sensitive to weights, and even slight differences in weights change countries’ positions in the ranking.

The results of this research open an avenue for further investigations with a high potential to contribute to the multicriteria decision-making and composite indicators literature. The transfer of knowledge about the intransitivity problem of pairwise comparison evaluations will require a deeper analysis of the maximum number of alternatives that specialists can evaluate without significant problems. Along the same line, it is interesting to investigate to what extent the transfer of knowledge about intransitivity in pairwise comparison evaluations can reduce cognitive stress on experts.

The research results also indicate the possibility of using multiple preference formats to evaluate the sub-indicators’ weights in the composite indicator. This possibility stimulates the development of new preference format transformation functions. The budget allocation, for example, is a preference format widely used to define sub-indicator weights in the composite indicators literature [18]. Transformation functions from the budget allocation format to other formats have not been identified in the specialized literature [14, 15, 41, 20], so developing them would be a novelty with high application power within the multicriteria decision-making literature.

Data Availability

Silva, LML, Libório, MP (2022) A new Ease of Doing Business Index for G20 countries: a consensus-based approach to weighting individual indicators. Mendeley Data, V2, doi: https://doi.org/10.17632/kwss6jyfxk.2.

Notes

Koczkodaj’s [31] Consistency Measure is calculated from a given element of the matrix and not on the characteristics of the global matrix as an eigenvalue. Salo and Hämäläinen’s [50] Consistency Measure is invariant to the scale and is calculated after transforming inconsistent responses into a non-empty set of viable priorities.

References

Amenta, P., Lucadamo, A., Marcarelli, G.: On the transitivity and consistency approximated thresholds of some consistency indices for pairwise comparison matrices. Inf. Sci. 507, 274–287 (2020)

Bampatsou, C., Halkos, G., Astara, O.H.: Composite indicators in evaluating tourism performance and seasonality. Oper. Res. Int. J., 1–24 (2020)

Becker, W., Saisana, M., Paruolo, P., Vandecasteele, I.: Weights and importance in composite indicators: closing the gap. Ecol. Ind. 80, 12–22 (2017)

Benítez, J., Delgado-Galván, X., Izquierdo, J., Pérez-García, R.: Achieving matrix consistency in AHP through linearization. Appl. Math. Model. 35(9), 4449–4457 (2011)

Benítez, J., Delgado-Galván, X., Izquierdo, J., Pérez-García, R.: Improving consistency in AHP decision-making processes. Appl. Math. Comput. 219(5), 2432–2441 (2012)

Bernardes, P., Ekel, P.I., Rezende, S.F.L., Pereira Júnior, J.G., dos Santos, A.C.G., da Costa, M.A.R., Libório, M.P.: Cost of doing business index in Latin America. Qual. Quant. 56(4), 2233–2252 (2022)

Boggia, A., Fagioli, F.F., Paolotti, L., Ruiz, F., Cabello, J.M., Rocchi, L.: Using accounting dataset for agricultural sustainability assessment through a multi-criteria approach: an Italian case study. Int. Trans. Oper. Res. (2022)

Bortot, S., Brunelli, M., Fedrizzi, M., Pereira, R.M.: A novel perspective on the inconsistency indices of reciprocal relations and pairwise comparison matrices. Fuzzy Sets Syst. (2022)

Bozóki, S., Fülöp, J., Poesz, A.: On reducing inconsistency of pairwise comparison matrices below an acceptance threshold. Cent. Eur. J. Oper. Res. 23(4), 849–866 (2015)

Bustince, H., Bedregal, B., Campión, M.J., Silva, D.I., Fernandez, J., Induráin, E., Santiago, R.H.: Aggregation of individual rankings through fusion functions: criticism and optimality analysis. IEEE Trans. Fuzzy Syst. 30(3), 638–648 (2020)

Camanho, A.S., Stumbriene, D., Barbosa, F., Jakaitiene, A.: The assessment of performance trends and convergence in education and training systems of European countries. Eur. J. Oper. Res. (2022)

Chen, X., Zhang, H., Dong, Y.: The fusion process with heterogeneous preference structures in group decision making: a survey. Inform. Fusion 24, 72–83 (2015)

Cherchye, L., Moesen, W., Rogge, N., Van Puyenbroeck, T., Saisana, M., Saltelli, A., Tarantola, S.: Creating composite indicators with DEA and robustness analysis: the case of the technology achievement index. J. Oper. Res. Soc. 59(2), 239–251 (2008)

Chiclana, F., Herrera, F., Herrera-Viedma, E.: Integrating three representation models in fuzzy multipurpose decision making based on fuzzy preference relations. Fuzzy Sets Syst. 97(1), 33–48 (1998)

Chiclana, F., Herrera, F., Herrera-Viedma, E.: Integrating multiplicative preference relations in a multipurpose decision-making model based on fuzzy preference relations. Fuzzy Sets Syst. 122(2), 277–291 (2001)

Corcoran, A., Gillanders, R.: Foreign direct investment and the ease of doing business. Rev. World Econ. 151, 103–126 (2015)

Crawford, G., Williams, C.: A note on the analysis of subjective judgment matrices. J. Math. Psychol. 29(4), 387–405 (1985)

Dialga, I., Giang, T.H., L: Highlighting methodological limitations in the steps of composite indicators construction. Soc. Indic. Res. 131, 441–465 (2017)

Djankov, S.: The doing business project: how it started: correspondence. J. Econ. Perspect. 30(1), 247–248 (2016)

Ekel, P., Pedrycz, W., Pereira, J., Jr.: Multicriteria Decision-Making Under Conditions of Uncertainty: A Fuzzy Set Perspective. John Wiley & Sons, Hoboken (2019)

Ekel, P., Bernardes, P., Vale, G.M.V., Libório, M.P.: South American business environment cost index: Reforms for Brazil. Int. J. Bus. Environ. 13(2), 212–233 (2022a)

Ekel, P., Bernardes, P., Laudares, S., Libório, M.P.: Evidence of the negative relationship between transaction costs and economic performance in G7 + BRICS countries. Technol. Audit Prod. Reserves. 5(67), 37–42 (2022b)

El Gibari, S., Gómez, T., Ruiz, F.: Building composite indicators using multicriteria methods: a review. J. Bus. Econ. 89(1), 1–24 (2019)

Ergu, D., Kou, G., Peng, Y., Shi, Y.: A simple method to improve the consistency ratio of the pair-wise comparison matrix in ANP. Eur. J. Oper. Res. 213(1), 246–259 (2011)

Figueiredo, L.R., Frej, E.A., Soares, G.L., Ekel, P.Y.: Group decision-based construction of scenarios for multicriteria analysis in conditions of uncertainty on the basis of quantitative and qualitative information. Group Decis. Negot. 30(3), 665–696 (2021)

Fusco, E.: Enhancing non-compensatory composite indicators: a directional proposal. Eur. J. Oper. Res. 242(2), 620–630 (2015a)

Fusco, E.: Potential improvements approach in composite indicators construction: the multi-directional benefit of the doubt model. Socio-Economic Plann. Sci. 85, 101447 (2023)

Greco, S., Ishizaka, A., Tasiou, M., Torrisi, G.: On the methodological framework of composite indices: a review of the issues of weighting, aggregation, and robustness. Soc. Indic. Res. 141(1), 61–94 (2019)

Herrera-Viedma, E., Herrera, F., Chiclana, F., Luque, M.: Some issues on consistency of fuzzy preference relations. Eur. J. Oper. Res. 154(1), 98–109 (2004)

Ishizaka, A., Lusti, M.: An expert module to improve the consistency of AHP matrices. Int. Trans. Oper. Res. 11(1), 97–105 (2004)

Koczkodaj, W.W.: A new definition of consistency of pairwise comparisons. Math. Comput. Model. 18(7), 79–84 (1993)

Kuc-Czarnecka, M., Piano, S.L., Saltelli, A.: Quantitative storytelling in the making of a composite indicator. Soc. Indic. Res. 149(3), 775–802 (2020)

Lamata, M.T., Peláez, J.I.: A method for improving the consistency of judgements. Int. J. Uncertain. Fuzziness Knowledge-Based Syst. 10(06), 677–686 (2002)

Lee, S.K., Yu, J.H.: Composite indicator development using utility function and fuzzy theory. J. Oper. Res. Soc. 64(8), 1279–1290 (2013)

Li, G., Kou, G., Peng, Y.: A group decision making model for integrating heterogeneous information. IEEE Trans. Syst. Man Cybern.: Syst. 48(6), 982–992 (2016)

Libório, M.P., da Silva, L.M.L., Ekel, P.I., Figueiredo, L.R., Bernardes, P.: Consensus-based sub-indicator weighting approach: constructing composite indicators compatible with expert opinion. Soc. Indic. Res. 164, 1073–1099 (2022a)

Libório, M.P., Ekel, P.Y., Martinuci, O.D.S., Figueiredo, L.R., Hadad, R.M., Lyrio, R.D.M., Bernardes, P.: Fuzzy set based intra-urban inequality indicator. Qual. Quant. 56(2), 667–687 (2022b)

Libório, M.P., de Abreu, J.F., Ekel, P.I., Machado, A.M.C.: Effect of sub-indicator weighting schemes on the spatial dependence of multidimensional phenomena. J. Geogr. Syst. 25(2), 185–211 (2023)

Munda, G., Nardo, M.: Constructing Consistent Composite Indicators: The Issue of Weights. Institute for the Protection and Security of the Citizen, Italy (2005)

Pearson, K.: On lines and planes of closest fit to systems of points in space. Lond. Edinb. Dublin Philos. Mag. J. Sci. 2(11), 559–572 (1901)

Pedrycz, W., Ekel, P., Parreiras, R.: Fuzzy Multi-Criteria Decision-Making: Models, Methods and Applications. John Wiley & Sons, Hoboken (2011)

Pinheiro-Alves, R., Zambujal-Oliveira, J.: The ease of doing Business Index as a tool for investment location decisions. Econ. Lett. 117(1), 66–70 (2012)

Proudlove, N.C., Goff, M., Walshe, K., Boaden, R.: The signal in the noise: robust detection of performance “outliers” in health services. J. Oper. Res. Soc. 70(7), 1102–1114 (2019)

Ramalho, F.D., Ekel, P.Y., Pedrycz, W., Júnior, J.G.P., Soares, G.L.: Multicriteria decision making under conditions of uncertainty in application to multiobjective allocation of resources. Inform. Fusion. 49, 249–261 (2019)

Ramalho, F.D., Silva, I.S., Ekel, P.Y., da Silva Martins, C.A.P., Bernardes, P., Libório, M.P.: Multimethod to prioritize projects evaluated in different formats. MethodsX. 8, 101371 (2021)

Rogge, N.: Composite indicators as generalized benefit-of-the-doubt weighted averages. Eur. J. Oper. Res. 267(1), 381–392 (2018)

Saaty, T.L.: A scaling method for priorities in hierarchical structures. J. Math. Psychol. 15(3), 234–281 (1977)

Saaty, T.: Multicriteria Decision Making: The Analytic Hierarchy Process. McGraw-Hill, New York (1980)

Saisana, M., Tarantola, S.: State-of-the-art Report on Current Methodologies and Practices for Composite indicator Development. European Commission, Joint Research Centre, Institute for the Protection and the Security of the Citizen, Technological and Economic Risk Management Unit, Ispra, Italy (2002)

Salo, A.A., Hämäläinen, R.P.: On the measurement of preferences in the analytic hierarchy process. J. Multi-Criteria Decis. Anal. 6(6), 309–319 (1997)

The World Bank Group: DataBank: Doing Business 2020. Retrieved on May 5, 2022 (2020). From: https://databank.worldbank.org/source/doing-business

World Bank Group: World Bank Group to Discontinue Doing Business Report. Retrieved on April 25, 2023 (2021). From: https://www.worldbank.org/en/news/statement/2021/09/16/world-bank-group-to-discontinue-doing-business-report

Wu, Z., Liao, H.: A consensus reaching process for large-scale group decision making with heterogeneous preference information. Int. J. Intell. Syst. 36(9), 4560–4591 (2021)

Xia, M., Xu, Z., Chen, J.: Algorithms for improving consistency or consensus of reciprocal [0, 1]-valued preference relations. Fuzzy Sets Syst. 216, 108–133 (2013)

Zamani, K., Omrani, H.: A complete information PCA-imprecise DEA approach for constructing composite indicator with interval data: an application for finding development degree of cities. Int. J. Oper. Res. 44(4), 522–549 (2022)

Zhang, Q., Chen, J.C., Chong, P.P.: Decision consolidation: criteria weight determination using multiple preference formats. Decis. Support Syst. 38(2), 247–258 (2004)

Zhang, B., Dong, Y., Herrera-Viedma, E.: Group decision making with heterogeneous preference structures: an automatic mechanism to support consensus reaching. Group Decis. Negot. 28(3), 585–617 (2019)

Zhou, M., Hu, M., Chen, Y.W., Cheng, B.Y., Wu, J., Herrera-Viedma, E.: Towards achieving consistent opinion fusion in group decision making with complete distributed preference relations. Knowl. Based Syst. 236, 107740 (2022)

Funding

This work was carried out with the support of the National Council for Scientific and Technological Development of Brazil (CNPq) - productivity grant 311922/2021-0 and Junior postdoctoral fellowship 151518/2022-0.

Ethics declarations

Conflict of interest

The authors declare they have no financial interests.

Human and/or animals rights

No human participants and/or animals are involved in this research.

About this article

Cite this article

Libório, M.P., Ekel, P.I., Bernardes, P. et al. Specialists’ knowledge and cognitive stress in making pairwise comparisons. OPSEARCH 61, 51–70 (2024). https://doi.org/10.1007/s12597-023-00689-2

DOI: https://doi.org/10.1007/s12597-023-00689-2

Keywords

- Pairwise comparison

- Matrix consistency

- Preference formats

- Transformation functions

- Composite indicator

- Group decision-making