Abstract

In lossless or near-lossless image compression, the compression ratio cannot be very high if loss of information is to be avoided, which matters especially for remote sensing and medical images. Because of this restriction, lossless image compression techniques consume more storage space than lossy processes. In this article, we propose a second level of storage space optimization that applies equally to all lossless image compression techniques. It can be implemented as a preprocessing or postprocessing step in addition to the researcher’s own method, enhancing storage space optimization to a satisfactory level. Initially, the compressed image (for postprocessing) or the original image (for preprocessing) is modulated using an amplitude diffraction grating in the optical frequency domain. Due to this modulation, the image is converted into three spectra (one central spectrum and two side spectra). We consider one of the side spectra (circular-shaped) for further processing. An Inverse Fourier Transform along with a low pass filter is applied to extract the image information. It has been observed that this circular-shaped spectrum consumes less storage space than the compressed image. In the case of multiple images, the chosen spectra are placed on a canvas whose size is also less than that of the series of compressed images. This optical system is applicable to any lossless image compression method. We show that this second level of compression applies to all lossless and near-lossless techniques in terms of storage space optimization while maintaining good output quality.

Introduction

Lossless or near-lossless image compression techniques are especially applicable to medical or remote-sensing images, where too much information loss is not allowed. These images carry a lot of information, and the actual images cannot be recovered after lossy compression. For this reason, lossy compression is not used for medical or remote-sensing images, because the detailed analysis needed for further processing would not be possible. This makes storage space consumption a major issue and a challenging problem for lossless compression techniques. We studied a few very recent image compression methods (from the last 5 years) and applied their output to our method.

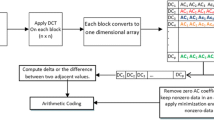

The application of vector quantization with principal component analysis is one of the latest techniques used for lossless compression of JPEG images [1]. Neural networks are also used for lossless compression of remote-sensing images, improving the PSNR of the output [2, 3]. A combination of DWT and a residual network provides a compression ratio of 2.5 for JPEG images [4, 5]. Deep learning is applied to video surveillance in underground mines [6], performing lossless compression as well as object detection. Lifting wavelet lossless image compression improves storage space optimization through a high compression ratio (sometimes more than 7) [7]. The DICOM system helps to maintain a very high PSNR value (> 80) for medical images [8]. DCT is also used for near-lossless compression, especially for dental images [9]. A 3D version of it is effectively used for endoscopic images [10].

Researchers have applied a grating system for multiplexing and compression of a series of images as well as video [11, 12]. Using either an amplitude or a phase grating, multiple images are modulated at different angles using variable spatial frequencies. A part of the final spectrum is transmitted for storage space optimization. The main drawback of this system is that the number of images is limited. A modified version overcomes this problem: the selected spectra are placed sequentially on a canvas, which is then transmitted over a long distance [13, 14].

In this article, selected images are modulated one by one along the x-axis using an amplitude grating. A high spatial frequency is maintained to avoid the aliasing problem. As a result of this modulation, three spectra are generated. One is the centre spectrum, which is the direct Fourier Transform of the image. The others are the side lobes generated by the modulation. Both side lobes contain the same information, hence only one is considered for further processing. The Inverse Fourier Transform together with a low pass filter is used to extract the images. During this process, a pixel intensity graph is drawn along the x-axis, and the intensity at the central point and at the periphery is measured, which is useful for determining the cut-off frequency. Lastly, the PSNR value of the extracted images is measured.

Methodology

Let us assume that the selected image is denoted by \({k}_{1}(x,y)\).

Image \({k}_{1}(x,y)\) is modulated using the amplitude grating \(g\left(x\right)\) of Eq. (1),

where \({b}_{0}\) is the spatial frequency (lines/cm) used for modulation.

As we consider the 1st order only, \(m=1\).

So, Eq. (1) is written as

Due to the modulation of the image \({k}_{1}(x,y)\) with the grating \(g\left(x\right)\), the resultant object is

So, its Fourier Transform is

\(\frac{1}{2}{K}_{1}\left(u,v\right)\) denotes the centre spectrum and \({K}_{1}\left(u\pm {b}_{0},v\right)\) denotes the side spectra. Both side spectra contain the same information, hence only one of them is sufficient for the next step. We considered the spectrum \({K}_{1}(u+{b}_{0},v)\) in our research work. The main reason for selecting only the ±1st order is the very poor diffraction efficiency of the ±2nd order and beyond, so it is not possible to reconstruct the images from those side bands.
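The relations described in this section can be sketched as follows. This is a hedged reconstruction, assuming a cosine amplitude grating with unit modulation depth. Only the centre term \(\frac{1}{2}{K}_{1}(u,v)\) is stated in the text, so the grating expression, the side-band coefficient \(\frac{1}{4}\), and the symbols \(s\) and \(S\) are assumptions introduced here for illustration.

```latex
\begin{align}
g(x)   &= \frac{1}{2}\left[1 + \cos\left(2\pi b_0 x\right)\right] \\
s(x,y) &= k_1(x,y)\, g(x) \\
S(u,v) &= \frac{1}{2} K_1(u,v)
        + \frac{1}{4} K_1(u - b_0, v)
        + \frac{1}{4} K_1(u + b_0, v)
\end{align}
```

Under this assumption the two side terms are shifted copies of the same spectrum \(K_1\), which is why retaining only \(K_1(u+b_0,v)\) loses no information.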

By applying the Inverse Fourier Transform and a low pass filter with a suitable cut-off frequency, we recovered the image information.
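The modulation and extraction steps can be illustrated numerically with a minimal NumPy sketch. It is not the authors' implementation: it assumes a cosine amplitude grating, expresses the carrier in cycles per image width rather than lines/cm, and the function names `modulate`, `extract_side_band` and `reconstruct` are hypothetical. The factor 4 in the rescaling follows from the assumed side-band coefficient of 1/4.

```python
import numpy as np


def modulate(image, carrier_cycles):
    """Multiply the image by a cosine amplitude grating varying along x."""
    cols = image.shape[1]
    x = np.arange(cols)
    # Assumed grating: transmittance between 0 and 1, carrier in cycles per width.
    grating = 0.5 * (1.0 + np.cos(2.0 * np.pi * carrier_cycles * x / cols))
    return image * grating[np.newaxis, :]


def extract_side_band(modulated, carrier_cycles, radius):
    """Crop the side band centred at u = -carrier_cycles and keep a disc."""
    rows, cols = modulated.shape
    spectrum = np.fft.fftshift(np.fft.fft2(modulated))
    cy, cx = rows // 2, cols // 2 - carrier_cycles
    # The window must fit inside the spectrum: carrier_cycles + radius <= cols // 2.
    window = spectrum[cy - radius:cy + radius, cx - radius:cx + radius]
    yy, xx = np.ogrid[-radius:radius, -radius:radius]
    low_pass = (xx ** 2 + yy ** 2) <= radius ** 2  # circular low pass filter
    return window * low_pass


def reconstruct(side_band, original_shape):
    """Inverse transform of the cropped side band, rescaled to image units."""
    n_rows, n_cols = side_band.shape
    small = np.fft.ifft2(np.fft.ifftshift(side_band))
    # Undo the 1/4 side-band weight and the change of FFT size.
    scale = 4.0 * (n_rows * n_cols) / (original_shape[0] * original_shape[1])
    return np.real(small) * scale


# Small synthetic example: a smooth blob on a 128 x 128 grid.
yy, xx = np.mgrid[0:128, 0:128]
image = np.exp(-((xx - 64) ** 2 + (yy - 64) ** 2) / (2 * 10.0 ** 2))
side = extract_side_band(modulate(image, 40), 40, 16)
recovered = reconstruct(side, image.shape)
print(recovered.shape)  # (32, 32)
```

Only the cropped side band is stored, so the recovered image has side length `2 * radius`. The carrier has to be large enough that the disc around the side band does not overlap the centre spectrum.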

Result

In this research work, we have chosen five different images used by the researchers in the referenced works. As these images have different pixel resolutions, we resized them to 512 × 512 for comparative study and analysis of our results. These images are displayed in Fig. 1(a-e).

Figure 2 shows an enlarged view of the amplitude grating with a high spatial frequency. The high spatial frequency is used to avoid aliasing, in which the spectra overlap with each other and proper reconstruction becomes impossible. We used a spatial frequency of 1000 lines/cm, which is sufficient to avoid overlap.

Figure 3(a) shows the modulated spectra (three spectra on the same canvas). The centre spectrum is the direct Fourier Transform of the image, whereas the other spectra represent \({K}_{1}\left(u\pm {b}_{0},v\right)\). As both side spectra contain the same information, only one is enough for further processing. We selected \({K}_{1}\left(u+{b}_{0},v\right)\), which is shown in Fig. 3(b).

Figure 4(a) and Fig. 4(b) illustrate the extraction process from the spectrum \({K}_{1}\left(u+{b}_{0},v\right)\). First, we measure the intensity at the central point and at the periphery of the spectrum. These two points are marked as A and B, and the distance between them is denoted by R. It has been observed that the intensity at the periphery (point B) is just half of the intensity at the centre (point A). It has also been observed that as the diameter increases, the size of the reconstructed images also increases, hence more space is required to store the recovered images. So, to optimize the storage space, this particular value is selected.

Next, the value of R (a pixel distance) is calculated; it is the cut-off value of the low pass filter. With this cut-off, it is possible to extract almost 99% of the image information.

This intensity-based cut-off selection is useful when coding the system. The cut-off frequency varies from image to image. Hence, for any image, the point where the intensity is half of the centre-point intensity gives the maximum value of R, and this pixel distance indicates the cut-off frequency.

In our research work, a value of R = 130 is observed. This is the final value used for the cut-off frequency. The intensity graph is displayed in Fig. 5.
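The half-intensity rule for choosing R can be sketched as a short routine. This is an illustrative assumption rather than the authors' code: the function name `half_intensity_radius` is hypothetical, the input is taken to be a 2D intensity map with the spectrum centre (point A) at the middle of the array, and whether the intensity is the raw magnitude or a scaled display value is not stated in the text.

```python
import numpy as np


def half_intensity_radius(intensity):
    """Return the pixel distance R from the centre (A) to the first point (B)
    along the +x direction where the intensity is at most half the centre value."""
    intensity = np.asarray(intensity, dtype=np.float64)
    cy, cx = intensity.shape[0] // 2, intensity.shape[1] // 2
    profile = intensity[cy, cx:]            # intensity graph along the x-axis
    below = np.nonzero(profile <= 0.5 * profile[0])[0]
    # If the intensity never falls to half, fall back to the edge of the profile.
    return int(below[0]) if below.size else profile.size - 1
```

The returned pixel distance would then serve as the radius of the circular low pass filter.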

Figure 5(a-e) displays the extracted images with a pixel resolution of 260 × 260.

Using three tables, we highlight the compression ratio (Table 1), the PSNR value of the output images (Table 2), and the improved compression ratio compared with the existing methods (Table 3).
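As a rough check of the reported figures, the ratio of pixel counts between a 512 × 512 input and a 260 × 260 extracted image can be computed directly. This sketch assumes that the compression ratio is simply the pixel-count ratio at equal bit depth, which may differ from how the tabulated values were obtained; the function name `pixel_count_ratio` is hypothetical.

```python
def pixel_count_ratio(original_side, extracted_side):
    """Ratio of pixel counts for square images (assumes equal bit depth)."""
    return (original_side ** 2) / (extracted_side ** 2)


ratio = pixel_count_ratio(512, 260)
print(round(ratio, 2))  # 3.88
```

The extracted side length of 260 pixels is twice the cut-off value R = 130, and the resulting ratio is consistent with a compression ratio of almost 4.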

Special features of this method

1. The compression ratio is almost 4 for a 512 × 512 image. In existing lossless or near-lossless methods, the compression ratio is nearly 3. So, by applying this method in addition to their own, researchers can further optimize storage space.

2. The PSNR value is greater than 38 in all cases, which indicates a satisfactory result (a PSNR above 30 is generally regarded as good quality output).

3. The method is especially applicable to remote-sensing and medical images, where too much information loss is not allowed, and it is suitable for all types of images, whereas a few existing methods are suitable for JPEG images only.
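The PSNR figure quoted above can be computed with the standard definition. The sketch below assumes 8-bit images (peak value 255) and that the two images have already been brought to the same size; how the reference and extracted images were matched in size is not stated in the text, and the function name `psnr` is chosen here for illustration.

```python
import numpy as np


def psnr(reference, test, peak=255.0):
    """Peak signal-to-noise ratio in dB between two images of equal shape."""
    reference = np.asarray(reference, dtype=np.float64)
    test = np.asarray(test, dtype=np.float64)
    if reference.shape != test.shape:
        raise ValueError("images must have the same shape")
    mse = np.mean((reference - test) ** 2)   # mean squared error
    if mse == 0:
        return float("inf")                  # identical images
    return 10.0 * np.log10(peak ** 2 / mse)
```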

Conclusion

In this communication, we propose a preprocessing or postprocessing near-lossless compression step which can be used in addition to researchers’ own compression methods. This near-lossless process provides a compression ratio of 3.9, which in practice amounts to more than 20 times (Table 3) for any lossless method. The PSNR value of the output image is greater than 38, which indicates an excellent result. This system is also applicable to colour images. In that case, the entire operation is performed on each plane of the colour image, and the planes are then reconstructed together.
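The per-plane treatment of colour images can be sketched as a small wrapper. This is an assumption-laden illustration: `process_colour` and its `process_plane` argument are hypothetical names, and `process_plane` stands for whatever single-plane modulation and extraction routine is used.

```python
import numpy as np


def process_colour(image, process_plane):
    """Apply a single-plane routine to each colour plane and restack the results."""
    planes = [process_plane(image[..., c]) for c in range(image.shape[-1])]
    return np.stack(planes, axis=-1)
```

Each plane is handled independently, so the storage saving per plane carries over to the colour image as a whole.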

References

D. Báscones, C. González, D. Mozos, Hyperspectral image compression using vector quantization, PCA and JPEG2000. Remote Sens. 10, 907 (2018)

J. Li, Z. Liu, Multispectral transforms using convolution neural networks for remote sensing multispectral image compression. Remote Sens. 11, 759 (2019)

F. Zhang, Z. Xu, W. Chen, Z. Zhang, H. Zhong, J. Luan, C. Li, An image compression method for video surveillance system in underground mines based on residual networks and discrete wavelet transform. Electronics 8, 1559 (2019)

D. Chen, Y. Li, H. Zhang, W. Gao, Invertible update-then-predict integer lifting wavelet for lossless image compression. EURASIP J. Adv. Signal Process. 2017, 8–17 (2017)

W. Li, W. Sun, Y. Zhao, Z. Yuan, Y. Liu, Deep image compression with residual learning. Appl. Sci. 10, 4023 (2020)

S. Yamagiwa, Y. Wenjia, K. Wada, Adaptive lossless image data compression method inferring data entropy by applying deep neural network. Electronics 11, 504 (2022)

T. Gandor, J. Nalepa, First gradually, then suddenly: understanding the impact of image compression on object detection using deep learning. Sensors 22, 1104 (2022)

Y. Pourasad, F. Cavallaro, A novel image processing approach to enhancement and compression of X-ray images. Int. J. Environ. Res. Public Health 18, 6724 (2021)

S. Krivenko, V. Lukin, O. Krylova, L. Kryvenko, K. Egiazarian, A fast method of visually lossless compression of dental images. Appl. Sci. 11, 135 (2021)

J. Xue, L. Yin, Z. Lan, M. Long, G. Li, Z. Wang, X. Xie, A 3D DCT based image compression method for the medical endoscopic application. Sensors 21, 1817 (2021)

A. Patra, A. Saha, K. Bhattacharya, Efficient storage and encryption of 32-slice CT scan images using phase grating. Arab. J. Sci. Eng. 47(6) (2022)

A. Patra, A. Saha, Multiplexing and encryption of images using phase grating and random phase mask. Opt. Eng. 59(3), 033105 (2020)

A. Patra, A. Saha, High-resolution image multiplexing using amplitude grating for remote sensing applications. Opt. Eng. 60(7), 073104 (2021)

A. Patra, A. Saha, Compression of high-resolution video using phase grating. J. Indian Soc. Remote Sens. 51(10), 2057–2066 (2023)

Ethics declarations

Conflict of interest

None of the authors have a conflict of interest to disclose.

About this article

Cite this article

Patra, A., Saha, A. & Bhattacharya, K. Second level storage space optimization for lossless image compression using diffraction grating. J Opt (2024). https://doi.org/10.1007/s12596-024-01919-6
