Abstract

Unmanned aerial vehicle (UAV) imaging has been increasingly applied for environmental monitoring due to its high spatial resolution. However, the digital number representations of UAV images cannot precisely represent the true radiance of ground objects due to the complexity of solar radiation transmission through the atmosphere. Nonetheless, previous studies have not considered how atmospheric effects under different meteorological conditions alter the radiometric information of UAV images. This is addressed in the present work by proposing an atmospheric correction algorithm for UAV imagery based on Gordon’s standard near-infrared (NIR) atmospheric correction applied to water color remote sensing. First, the atmospheric path radiance (Lpath) and the diffuse transmittance (t) in each spectral band of UAV images are obtained from measurements of the radiance of clear water bodies. Then, the obtained values of Lpath and t are employed in the atmospheric correction processing of UAV imagery. As such, the proposed method is generally applicable to areas with clear water bodies. The atmospheric correction performance of the proposed method is compared with that of dark pixel atmospheric correction. The vegetation radiance corrected by the proposed correction (Lv'(λi)) was closer to the measurement than that corrected by dark pixel correction (Lv''(λi)). Three vegetation indices (ExG, NGRDI, and NDVI) were used to further compare the performance of the two atmospheric correction methods. The average percentage differences of ExG (12.41%, 19.15%, and 10.18%) obtained from the UAV images corrected using the proposed algorithm (AC_W) were less than those (48.18%, 18.75%, and 58.9%) obtained from the UAV images corrected using the dark pixel method (AC_D). The same held for NGRDI and NDVI. The proposed method is demonstrated to perform distinctly better.
Despite some error (5–7%), the proposed method provides an alternative for applying an atmospheric correction to UAV imagery under different meteorological conditions. The proposed method is also demonstrated to be simpler and more operable than the atmospheric aerosol optical thickness method.

Introduction

Since its emergence, remote sensing technology has been applied widely for the periodic monitoring of natural and human environments under various spectral, spatial, and temporal resolutions, and its use has expanded greatly in recent decades. However, the spectral, spatial, and temporal resolutions required are increasing day by day with rapid developments in technology and the needs of economies and societies worldwide. Presently, the rapid development of unmanned aerial vehicle (UAV) technology has led to its greatly expanding use for capturing low altitude imagery due to its high spatial resolution, flexibility, relatively low cost, and timely nature (Mohamar et al., 2014). The advantages of this technology enable the capture of UAV images at virtually any time with a wide selection of wavelengths and flight altitudes. Thus, UAV-based environmental studies have become an essential supplement to studies based on remote sensing technologies employing satellite and conventional aircraft platforms. Moreover, developments in photogrammetry and associated image-processing techniques have extended the use of UAV imaging platforms to a wide variety of application fields, such as disaster monitoring (Nugroho et al., 2015), topography (Roosevelt, 2014; Yucel & Turan, 2016), archeology (Themistocleous et al., 2015), glaciers (Bliakharskii & Florinsky, 2018), soil erosion (D'Oleire-Oltmanns et al., 2012), precision agriculture (Zhang & Kovacs, 2012), and resource management (Mozhdeh et al., 2014).

The application of geographical registration techniques to the processing of UAV imagery has been widely explored because of the inherent geometric deformations in UAV images (Doucette et al. 2013). However, UAV images are also subject to distortions owing to sensor characteristics, lighting geometry, flight attitude, atmospheric conditions, etc. As a result, digital number (DN) representations of UAV images cannot represent the true radiance of ground objects. Nonetheless, previous studies have not considered how atmospheric effects under different meteorological conditions alter the radiometric information of UAV images. Conducting advanced spectral analyses of DN data requires additional information regarding the radiometric quality of UAV imagery, particularly when applying spectral indices in vegetation monitoring (Dbrowski & Jenerowicz, 2015). For example, a QA index was developed to assess the radiometric quality of multispectral UAV imagery data by considering the influence of lighting and atmospheric conditions, camera noise, and topographic conditions (Wierzbicki et al., 2019). While this index was demonstrated to be helpful in this regard, it could not distinguish noise from useful signal data and therefore could not expand the range of UAV imaging applications. We also note that multiple atmospheric correction techniques have been developed for satellite imagery, such as Moderate Resolution Transmission (MODTRAN) (Berk, Bernstein, & Robertson, 1989), Low Resolution Transmission (LOWTRAN) (Van & Alley, 1990), Second Simulation of the Satellite Signal in the Solar Spectrum (6S) (Hu et al., 2014), and Atmospheric Correction and Haze Reduction (ATCOR) (Middleton et al., 2012). However, these techniques are inherently ill-suited to UAV imagery because the atmospheric optical thickness differs markedly between satellite and UAV flight altitudes. Accordingly, standardized atmospheric correction techniques appropriate for UAV imagery are urgently required.

This issue has been addressed to some extent in past studies. For example, an atmospheric correction technique based on the radiation transfer model was developed according to an analysis of the effect of atmospheric attenuation on UAV image data obtained at different altitudes and was implemented using near-infrared (NIR) transmittance measurements (Yu et al., 2016). The developed technique was effective but limited because its successful application required several preconditions to be met. First, the technique was only usable on sunny days. Second, the NIR atmospheric transmittance had to be very close to 1. Therefore, clean air with a low concentration of suspended solid or liquid particles forming an aerosol was essential. While particle size distribution samplers and photometers would undoubtedly reduce the limitations of the method by providing NIR transmittance and aerosol particle size distributions synchronously, their use is laborious and would also introduce unavoidable experimental and instrumental errors. The results of that method demonstrated the usefulness of atmospheric correction based on attenuation. However, the development of alternatives to NIR transmittance measurements is preferred.

The atmospheric attenuation obtained in the UAV imaging process at low altitude includes absorption by atmospheric gases and Rayleigh and Mie scattering. The absorption of visible light (Vis) by molecular nitrogen (N2) is negligible, while absorption caused by moisture (H2O), carbon dioxide (CO2), and molecular oxygen (O2) is mainly concentrated at NIR wavelengths rather than at Vis wavelengths, and these can be avoided by the application of a selective atmosphere window (NIRAW). Thus, the atmospheric attenuation of the Vis band at low altitude is mainly the result of Rayleigh and Mie scattering, and only attenuation due to these sources needs to be considered and measured, so as to be removed from the DN representations of UAV imagery. The Rayleigh scattering intensity is calculated in a straightforward manner based on the inverse correlation between itself and the wavelength to the fourth power (Kahle, 2012). Therefore, aerosol single scattering and aerosol multiple scattering represent the focus of atmospheric correction for UAV imagery. However, this is quite challenging considering the impact of changing meteorological conditions and the incompletely understood relationship between aerosol multiple scattering and atmospheric diffuse transmittance.
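The inverse fourth-power dependence of Rayleigh scattering on wavelength can be illustrated with a short sketch. The function name `rayleigh_relative` is hypothetical, the band centers are those used later in this paper, and the pure λ⁻⁴ scaling is a simplification that ignores refractive-index and depolarization corrections.

```python
# Relative Rayleigh scattering strength under the simple lambda^-4 scaling.
# Band centers (nm) follow the wavelengths quoted in this paper; the helper
# name and the choice of 840 nm as reference are illustrative assumptions.
BANDS_NM = {"blue": 475.0, "green": 560.0, "red": 670.0,
            "red_edge": 720.0, "nir": 840.0}


def rayleigh_relative(wavelength_nm, reference_nm=840.0):
    """Return scattering strength at wavelength_nm relative to reference_nm."""
    if wavelength_nm <= 0 or reference_nm <= 0:
        raise ValueError("wavelengths must be positive")
    return (reference_nm / wavelength_nm) ** 4


if __name__ == "__main__":
    for name, wl in BANDS_NM.items():
        print(f"{name:>8s} ({wl:.0f} nm): {rayleigh_relative(wl):.2f} x NIR")
```

Under this scaling the blue band scatters roughly an order of magnitude more strongly than the NIR band, which is why the Rayleigh component dominates the path radiance at Vis wavelengths.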

The present work addresses the above-discussed issues associated with developing a standardized atmospheric correction technique appropriate for UAV imagery by employing the unique reflectance spectra characteristics of clear water according to Gordon’s standard NIR empirical atmospheric correction algorithm originally developed for water color remote sensing (Gordon & Wang, 1994). Here, we note that the NIR reflectance of clear water is very close to zero. Accordingly, the DN values of an image of clear water are derived strictly from atmospheric path radiation and therefore consist of a Rayleigh scattering component (Lr), an aerosol single scattering component (La), and an aerosol multiple scattering component (Lra). Therefore, the proposed algorithm first obtains the atmospheric path radiation (Lpath) and the diffuse transmittance (t) of clear water bodies in each spectral band of UAV images by measurements of their radiance. Then, the obtained values of Lpath and t are employed in the atmospheric correction processing of UAV imagery. As such, the proposed method is generally applicable to areas with clear water bodies. The atmospheric correction performance of the proposed method is compared with that of dark pixel atmospheric correction, and the proposed method is demonstrated to perform distinctly better based on evaluations of the errors in corrected radiance values and the values of vegetation indices relative to measured values. Despite some error, the proposed method provides an alternative for applying an atmospheric correction to UAV imagery under different meteorological conditions. The proposed method is also demonstrated to be simpler and more operable than the atmospheric aerosol optical thickness method.

Materials and Methods

Study Area and Data Acquisition

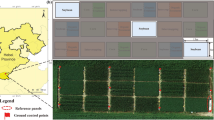

The study area is located in Dongmotang village, Yantai city, Shandong Province (121°21′10′′–121°21′30′′ E, 37°23′42′′–37°24′02′′ N), which is indicated by the star in Fig. 1. The region is flat and covered with low crops, with isolated trees and shrubs. The study area is characterized by a low degree of urbanization, with single-family houses, roadways, and a water reservoir. Our flights were conducted on May 30, 2019, which was a fine, windless day.

Hyperspectral UAV images were captured with an EcoDrone UAS-8 Pro platform equipped with eight brushless electric motors. The platform was equipped with a high-resolution digital camera (Snapshot) providing multispectral images in the Vis and NIR range (500–900 nm). The navigation system included GPS/GLONASS and an optical positioning system. The UAV platform was steered with an integrated autonomous dual hot backup flight controller operating on the 0.84 GHz frequency. A RedEdge-MX sensor with five spectral channels was mounted on a three-axis stabilized gimbal. The acquired images were georeferenced using the on-board GPS and then combined into a mosaic. Image data were acquired at altitudes of 50 m, 100 m, and 200 m. The low atmosphere radiation process model employed for UAV imagery is illustrated in Fig. 2.

The proposed atmospheric correction algorithm was calibrated and validated by measuring the reflectance spectra of vegetation using a FieldSpec Pro Dual Vis to NIR (VNIR) spectroradiometer (Analytical Spectral Devices, Inc., Boulder, CO, USA), a hyperspectral radiometer capable of collecting measurements over a range from 350 to 1050 nm with a spectral resolution of 3 nm. A near-Lambertian reflectance plaque (Labsphere, Inc., North Sutton, NH, USA) was used as a reference standard to adjust and optimize the incoming irradiation measurements of the spectroradiometer. The radiance and reflectance spectra of water were subsequently measured to obtain Lc(λ) = La(λ) + Lra(λ) and t at a given wavelength λ. Here, we adopted Tang’s method for measuring the radiance of water because the radiative transport process of water differs from that of vegetation (Tang et al. 2004). Savitzky–Golay smoothing was used to reduce the noise of the measured spectral curves of water and vegetation. The values of Lc(λ) and t(λ) were based on an average of three water measurements. Similarly, the radiance and reflectance spectra of vegetation were based on an average of three measurements. Examples of radiance and reflectance spectra are presented in Fig. 3a and b, respectively, for vegetation (i.e., crop) and water areas.

Theoretical Basis of Atmospheric Correction for UAV Imagery

The goal of atmospheric correction for water color remote sensing is to retrieve the actual radiance Lw(λ) produced by an area of water at a center wavelength λ from the total radiance Lt(λ) received by the various sensors mounted on the associated platforms (Gordon & Wang, 1994). For this purpose, Lt(λ) is defined as follows:

$$L_t(\lambda) = L_{path}(\lambda) + T(\lambda)\,L_g(\lambda) + t(\lambda)\,L_{wc}(\lambda) + t(\lambda)\,L_w(\lambda) \tag{1}$$

where Lpath(λ) is the component of radiance derived from the scattering of atmospheric molecules and aerosols along the path of light transmission, Lg(λ) is the radiance generated from sun glitter, which is the specular reflection of direct sunlight from the water surface, T(λ) is the direct atmospheric transmittance, t(λ) is the atmospheric diffuse transmittance, and Lwc(λ) is the radiance arising from whitecaps on the water surface. Here, Lg(λ) and Lwc(λ) can be ignored for inland water areas with small undulations and waves. Accordingly, (1) can be rewritten under these conditions as follows:

$$L_t(\lambda) = L_{path}(\lambda) + t(\lambda)\,L_w(\lambda) \tag{2}$$

Based on (2) above, the value of Lpath(λ) can be obtained from the signal Lt(λ) received by the sensor at NIR wavelengths λnir because Lw(λnir) is very close to zero. From fundamental analysis, we know that Lpath(λnir) can be given as follows:

$$L_{path}(\lambda_{nir}) = L_r(\lambda_{nir}) + L_a(\lambda_{nir}) + L_{ra}(\lambda_{nir}) \tag{3}$$

Here, the Rayleigh scattering component Lr(λnir) is relatively stable on a global scale and depends on the altitude (Wang et al., 2012). The details of calculating Lr(λnir) are presented in the following subsection. Accordingly, the sum Lc(λnir) = La(λnir) + Lra(λnir) can now be obtained as Lc(λnir) = Lpath(λnir) − Lr(λnir). Further, we can obtain the total aerosol scattering reflectance ρc(λnir) = πLc(λnir)/(F0(λnir)cos(θ0)), where F0(λnir) is the NIR solar irradiance at the top of the atmosphere, which is obtained online (http://oceancolor.gsfc.nasa.gov/), and the solar zenith angle θ0 is calculated based on date, time, and local latitude and longitude. Then, we denote ρc(λnir) = K(λnir)ρas(λnir) according to Gordon’s finding, where K(λ) is an empirical constant, and ρas(λ) is the aerosol single scattering reflectance (Gordon & Wang, 1994).
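The conversion between the aerosol radiance Lc and the aerosol reflectance ρc given above can be sketched as follows. This is a minimal illustration rather than the authors' code: the function names are hypothetical, and the caller is assumed to supply Lc and F0 in mutually consistent units (radiance per steradian and irradiance over the same spectral interval).

```python
import math


def radiance_to_reflectance(radiance, f0, solar_zenith_deg):
    """rho = pi * L / (F0 * cos(theta0)); units of L and F0 must be consistent."""
    mu0 = math.cos(math.radians(solar_zenith_deg))
    if mu0 <= 0:
        raise ValueError("sun must be above the horizon")
    return math.pi * radiance / (f0 * mu0)


def reflectance_to_radiance(reflectance, f0, solar_zenith_deg):
    """Inverse of radiance_to_reflectance, used after extrapolating rho_c."""
    mu0 = math.cos(math.radians(solar_zenith_deg))
    if mu0 <= 0:
        raise ValueError("sun must be above the horizon")
    return reflectance * f0 * mu0 / math.pi


if __name__ == "__main__":
    # Placeholder values for illustration only, not measurements from the paper.
    rho = radiance_to_reflectance(radiance=1.5, f0=100.0, solar_zenith_deg=30.0)
    print(f"rho_c = {rho:.4f}")
```

The inverse function is included because the procedure moves from radiance to reflectance at the NIR bands and back to radiance at the remaining bands.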

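We can now calculate the atmospheric correction parameter ε = ρas(λi)/ρas(λj) at two NIR wavelengths λi and λj based on the values of K and ρc obtained at those wavelengths as follows (Wang & Shi, 2007):

$$\varepsilon(\lambda_i, \lambda_j) = \frac{\rho_{as}(\lambda_i)}{\rho_{as}(\lambda_j)} = \frac{K(\lambda_j)\,\rho_c(\lambda_i)}{K(\lambda_i)\,\rho_c(\lambda_j)} \tag{4}$$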

In practice, the value of K(λj)/K(λi) is very close to 1 for maritime, coastal, and tropospheric aerosol models with different relative humidities (Gordon & Voss, 1999). Accordingly, (4) is rewritten as ε(λi, λj) = ρc(λi)/ρc(λj). In addition, ε can be expressed at the two NIR wavelengths λi and λj (in nm) as follows (Gordon & Wang, 1994):

$$\varepsilon(\lambda_i, \lambda_j) = \exp\!\left[\frac{c\,(\lambda_j - \lambda_i)}{1000}\right] \tag{5}$$

Accordingly, the constant c in (5) can be obtained based on the solution obtained in (4) as c = ln[ε(λi, λj)]/((λj − λi)/1000). Then, ρc(λ) can be calculated at the remaining wavelengths based on (4) and (5). Similarly, Lc(λ) can be calculated at the remaining wavelengths. Because Lc(λ) is now known at each λ, the value of Lpath(λ) can be obtained from (3) based on the calculated values of Lr(λ). Accordingly, t(λ)Lw(λ) can then be obtained at each λ based on (2). Finally, t(λ) can be obtained with the measured value of Lw(λ).
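The chain of steps just described (ε from two NIR bands, the constant c, extrapolation of ρc to shorter wavelengths, and t from the water measurement) can be sketched in a few lines. This is an illustrative sketch under stated assumptions: K(λj)/K(λi) is taken as exactly 1, wavelengths are in nm, all function names are hypothetical, and the numerical inputs are placeholders rather than data from this study.

```python
import math


def epsilon_from_nir(rho_c_i, rho_c_j):
    """epsilon(lambda_i, lambda_j) = rho_c(lambda_i) / rho_c(lambda_j), K ratio = 1."""
    if rho_c_i <= 0 or rho_c_j <= 0:
        raise ValueError("aerosol reflectances must be positive")
    return rho_c_i / rho_c_j


def exponent_c(eps, lam_i_nm, lam_j_nm):
    """c = ln(epsilon) / ((lambda_j - lambda_i) / 1000), wavelengths in nm."""
    return math.log(eps) / ((lam_j_nm - lam_i_nm) / 1000.0)


def extrapolate_rho_c(rho_c_j, c, lam_nm, lam_j_nm):
    """rho_c(lambda) = epsilon(lambda, lambda_j) * rho_c(lambda_j)."""
    return rho_c_j * math.exp(c * (lam_j_nm - lam_nm) / 1000.0)


def diffuse_transmittance(l_t, l_path, l_w):
    """t = (L_t - L_path) / L_w, from L_t = L_path + t * L_w."""
    if l_w <= 0:
        raise ValueError("measured water radiance must be positive")
    return (l_t - l_path) / l_w


if __name__ == "__main__":
    rho_720, rho_840 = 0.012, 0.010  # placeholder NIR aerosol reflectances
    eps = epsilon_from_nir(rho_720, rho_840)
    c = exponent_c(eps, 720.0, 840.0)
    for lam in (475.0, 560.0, 670.0):
        print(f"{lam:.0f} nm: rho_c = {extrapolate_rho_c(rho_840, c, lam, 840.0):.5f}")
```

Because the extrapolation is exponential in wavelength, small errors in the two NIR reflectances are amplified toward the blue band, which is consistent with the Vis-band error discussed later in the paper.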

Although the above derivation was applied to water bodies, the obtained t(λ) at each wavelength also holds for other objects nearby, as does the obtained Lc(λ). Thus, the values of t(λ) and Lc(λ) can be employed in the atmospheric correction of UAV imagery for vegetation monitoring, which is presented as follows.

The DN values of pixels in UAV images originate from the ground irradiance (H), ground surface reflectance (R), the atmospheric diffuse transmittance t(λ), and the atmospheric path radiance (Dpath) (Li, Chi, & Yi, 1993):

$$DN = \frac{C\,H\,R\,t(\lambda)}{\pi} + D_{path} \tag{6}$$

where C is the inherent photoelectric conversion coefficient of the associated sensor and H = πLref(λ)/ρref(λ). Here, Lref(λ) and ρref(λ) denote the measured radiance and the pre-calibrated reflectance of the reference plaque, respectively. The value of Dpath is given as C(Lc(λ) + Lr(λ)). The value of Dpath for a water body is equal to that for other objects nearby, as is the value of t(λ). Then, R is finally obtained based on the known DN values.
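The final inversion for R can be sketched as below. The sketch assumes a linear model of the form DN = C·H·R·t/π + Dpath, which follows from treating the surface as Lambertian with H = πLref/ρref; the exact form should be checked against the source equation. All function names and the numerical inputs are hypothetical.

```python
import math


def ground_irradiance(l_ref, rho_ref):
    """H = pi * L_ref / rho_ref, from the reference plaque measurement."""
    if rho_ref <= 0:
        raise ValueError("plaque reflectance must be positive")
    return math.pi * l_ref / rho_ref


def forward_dn(reflectance, c_gain, h, t, d_path):
    """Assumed forward model: DN = C * H * R * t / pi + D_path."""
    return c_gain * h * reflectance * t / math.pi + d_path


def surface_reflectance(dn, c_gain, h, t, d_path):
    """Invert the assumed forward model for R."""
    denom = c_gain * h * t
    if denom <= 0:
        raise ValueError("C, H, and t must all be positive")
    return math.pi * (dn - d_path) / denom


if __name__ == "__main__":
    # Placeholder inputs for illustration only.
    h = ground_irradiance(l_ref=40.0, rho_ref=0.99)
    dn = forward_dn(reflectance=0.30, c_gain=2.0, h=h, t=0.9, d_path=15.0)
    print(f"recovered R = {surface_reflectance(dn, 2.0, h, 0.9, 15.0):.3f}")
```

Keeping the forward model next to its inverse makes it easy to confirm that the two are consistent before applying the inversion to image data.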

Calculation of Atmospheric Transmittance and Dpath

The DN values of water bodies in UAV imagery are transformed into radiance based on the inherent radiometric calibration function of the RedEdge-MX sensor. The obtained NIR radiance is the value of Dpath(NIR) for a water body because the NIR radiance of water is zero. This enables Lr(λnir) to be obtained as follows (Wang et al., 2013):

where Toz(λnir) is the single Rayleigh scattering albedo, τR(λnir) is the optical thickness of the ozone layer, and Pr(ψ↓) and Pr(ψ↑) are the Rayleigh phase functions of incident light and reflected light, respectively. Similarly, ρ(μ) and ρ(μ0) are the Fresnel reflectivity, where μ and μ0 are the cosine of the sensor zenith angle and the cosine of the solar zenith angle, respectively. Owing to the low altitude of UAVs, τR(λnir) = 1 due to the lack of an ozone layer. Similarly, μ was considered to be 1 due to the low altitude and the vertical photography of UAVs (Fig. 2).

We now present explicit calculations involving (4) and (5) above. Because Lr(λnir) was obtained, Lc(λnir) can be obtained by subtracting Lr(λnir) from Lpath(λnir), and ρc(λnir) is obtained from Lc(λnir). Once ρc(λnir) is obtained, we can calculate ε by substituting ρc(λnir) in (4) at NIR wavelengths of 720 and 840 nm as follows (Gordon & Voss, 1999):

$$\varepsilon(720, 840) = \frac{\rho_c(720)}{\rho_c(840)} \tag{8}$$

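The undetermined constant c is then obtained based on the solution of (8) in conjunction with (5) as c = ln[ε(720, 840)]/(0.84 − 0.72). Finally, ε(λi, 840) at the remaining wavelengths λi (in nm) can be obtained as follows (Chen, 2007):

$$\varepsilon(\lambda_i, 840) = \exp\!\left[c\left(0.84 - \frac{\lambda_i}{1000}\right)\right] \tag{9}$$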

The values of ρc(λi) at the remaining wavelengths of UAV images can then be iteratively calculated from (8) and (9). Then, Dpath(λi) can be obtained. The obtained value of Dpath(λi) and the measured water reflectance Ri are employed to calculate t(λi) using (6). The values of Lt(λi), Lr(λi), Lc(λi), Lw(λi), and t(λi) obtained according to the above-outlined process (Fig. 4) are given in Fig. 5 for a representative body of water. Although the preceding example was applied to a water body, the obtained values of Dpath(λi) and t(λi) are applicable to other objects nearby because they are subject to the same meteorological condition.

We note from the figure that Lr(λi) decreased slightly from 6.84 to 6.71 (μW·dm−1·sr−1·nm−1) with increasing altitude, and the ratio of Lr(λi) to Lpath ranged from 91 to 50% at 50 m, 77% to 25% at 100 m, and 61% to 14% at 200 m. We also note that Lpath was dominated by the Lr(λi) component (i.e., Rayleigh scattering) at Vis wavelengths. In contrast, Lc(λi) increased from 0.4 to 2.6 with increasing altitude, and Lpath was dominated by the Lc(λi) component (i.e., Mie scattering) at NIR wavelengths. Here, Lc(λi) increased significantly from the lower atmosphere to the upper atmosphere due to the exponential decay of the aerosol extinction coefficient (Xuan et al., 2016). The ratio of Lc(λi) to Lpath observed here is greater than those obtained by satellite remote sensing atmospheric correction methods, which may be due to the fact that aerosol concentrations in the lower atmosphere are much greater than those in the upper atmosphere (Xing-xing et al., 2018). Meanwhile, the results in Fig. 5d indicate that the obtained values of t(λi) increased with increasing wavelength, which verifies that NIR wavelengths are less affected by atmospheric molecules and aerosols than Vis wavelengths. In addition, t(λi) significantly decreased with increasing altitude owing to the changing meteorological conditions.

Evaluation of Atmospheric Correction

While no customized atmospheric correction algorithm exists for UAV imagery, conventional dark pixel atmospheric correction may be expected to function appropriately to some extent according to its operating principle (Bian, 2013). Therefore, the performance of the atmospheric correction algorithm proposed herein was compared with that of dark pixel atmospheric correction, where the minimum radiance of each wavelength band was used as the radiance of the dark pixel. Performance comparisons were based on the following three UAV-derived vegetation indices (VIs):

$$ExG = 2G - R - B \tag{10}$$

$$NGRDI = \frac{G - R}{G + R} \tag{11}$$

$$NDVI = \frac{NIR - R}{NIR + R} \tag{12}$$

Here, NIR, R, G, and B denote the reflectance values of R obtained at the wavelengths 840, 670, 560, and 475 nm, respectively.
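The dark pixel baseline and the three indices can be sketched as follows. The index formulas used here are the commonly cited definitions (ExG = 2G − R − B, NGRDI = (G − R)/(G + R), NDVI = (NIR − R)/(NIR + R)) and are an assumption about the exact variants used in this study; the function names and sample values are hypothetical.

```python
def dark_pixel_correct(band_values):
    """Subtract the band minimum (taken as the dark pixel) from every pixel."""
    if not band_values:
        raise ValueError("band must contain at least one pixel")
    dark = min(band_values)
    return [v - dark for v in band_values]


def exg(r, g, b):
    """Excess green index, 2G - R - B (unnormalized form assumed)."""
    return 2.0 * g - r - b


def ngrdi(r, g):
    """Normalized green-red difference index."""
    return (g - r) / (g + r)


def ndvi(nir, r):
    """Normalized difference vegetation index."""
    return (nir - r) / (nir + r)


if __name__ == "__main__":
    # Placeholder reflectances at 840, 670, 560, and 475 nm for one pixel.
    nir, r, g, b = 0.50, 0.10, 0.30, 0.05
    print(f"ExG={exg(r, g, b):.3f} NGRDI={ngrdi(r, g):.3f} NDVI={ndvi(nir, r):.3f}")
    print(dark_pixel_correct([3.0, 1.0, 2.0]))
```

Note that ExG is a difference while NGRDI and NDVI are ratios, so an additive path-radiance error affects them differently, which is one reason the three indices respond unequally to the two corrections.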

Results

The proposed atmospheric correction algorithm and dark pixel atmospheric correction were applied to UAV images obtained at 50, 100, and 200 m, and the Lt(λi) and Lv(λi) values obtained at these altitudes are presented in Fig. 6a, b, and c, respectively, with respect to wavelength. Here, Lv(λi) is the measured vegetation radiance, and the values of Lv'(λi) and Lv''(λi) represent the vegetation radiance corrected by the proposed correction and dark pixel correction methods, respectively. In addition, the values of ExG, NGRDI, and NDVI obtained from the measurement, and the corresponding values obtained from the uncorrected images and the images corrected using the proposed atmospheric correction algorithm and dark pixel atmospheric correction are presented in Fig. 6d, where the unprimed, primed, and double-primed values are for images captured at 50, 100, and 200 m, respectively.

Fig. 6 Values of Lt(λi), Lv(λi), Lv'(λi), and Lv''(λi) obtained with respect to wavelength for UAV images captured at altitudes of 50 m (a), 100 m (b), and 200 m (c). In addition, the measured values of ExG (Ex), NGRDI (NG), and NDVI (ND), and the corresponding values obtained from the uncorrected images (UC) and the images corrected using the proposed atmospheric correction algorithm (AC_W) and dark pixel atmospheric correction (AC_D) are presented in (d), where the unprimed, primed, and double-primed values are for images captured at 50, 100, and 200 m, respectively.

We note from Fig. 6a–c that Lt(λi) increased with increasing altitude, indicating that the atmospheric path radiation was indeed affected by altitude. The average percentage differences ((Lt(λi) − Lv(λi))/Lv(λi)) between the values of Lt(λi) and Lv(λi) at altitudes of 50, 100, and 200 m were 10%, 14%, and 19.7%, respectively. Accordingly, atmospheric correction was essential. We also note that Lv′(λi) was closer to Lv(λi) than Lv′′(λi) and exhibited a similar trend to that of Lv(λi) at each altitude. Here, the average percentage differences ((Lv(λi) − Lv′(λi))/Lv(λi)) between the values of Lv(λi) and Lv′(λi) at altitudes of 50, 100, and 200 m were 6%, 5%, and 7%, while those of Lv′′(λi) were 11%, 13%, and 14.8%, respectively. The proposed atmospheric correction algorithm therefore performed better than dark pixel atmospheric correction. We also note that the obtained Lv′(λi) values were less than those of Lv(λi), particularly at 475 nm (i.e., blue) and 560 nm (i.e., green). The main reason was the overestimation of ε for the Vis band resulting from the use of the exponential function in (9) (Chen, 2007). This error is common and considered admissible in water color remote sensing atmospheric correction (Chen, 2007). In addition, the Lv′(λi) and Lv′′(λi) values were very close to Lv(λi) at 720 nm (i.e., red edge) and 840 nm (i.e., NIR) because both methods rely on the similar premise that Lw(λnir) is very close to 0.
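The band-averaged percentage difference used in this comparison can be computed with a short helper. This is a sketch only: the function name is hypothetical, the use of absolute values before averaging is an assumption (the text gives signed expressions), and the sample spectra are placeholders rather than the measured data.

```python
def mean_percent_difference(reference, estimate):
    """Average of |reference - estimate| / reference over bands, in percent."""
    if len(reference) != len(estimate) or not reference:
        raise ValueError("inputs must be non-empty and of equal length")
    diffs = [abs(r - e) / r * 100.0 for r, e in zip(reference, estimate)]
    return sum(diffs) / len(diffs)


if __name__ == "__main__":
    # Placeholder five-band radiances (measured vs. corrected), illustration only.
    measured = [10.0, 20.0, 15.0, 30.0, 40.0]
    corrected = [9.0, 22.0, 15.0, 30.0, 40.0]
    print(f"{mean_percent_difference(measured, corrected):.2f} %")
```

Averaging absolute differences prevents positive and negative band errors from cancelling, which would otherwise understate the residual error of a correction.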

The results presented in Fig. 6d are compared in Table 1 according to the percentage differences ((VI_corrected − VI_measured)/VI_measured) between the VI values obtained from the corrected UAV images captured at the three altitudes and the corresponding measured VI values. Here, the errors in the VI values obtained from the UAV images corrected using the proposed algorithm and the dark pixel method are denoted as AC_W and AC_D, respectively. The results indicate that the proposed atmospheric correction algorithm provided far more accurate VI values than dark pixel atmospheric correction for nearly all VIs and altitudes. The only exception is observed for the NGRDI index obtained at an altitude of 100 m, where AC_D is slightly less than AC_W. We also note that the accuracies obtained under both correction methods when determining NDVI, which relies heavily on the NIR band, were much better than those obtained for the other two VIs, verifying that the NIR band was less affected by atmospheric path radiation than the Vis band (Nishizawa et al., 2004).

Discussion

As discussed, most studies have ignored the effect of atmospheric path radiation on UAV imagery because of the very low flight altitudes involved. Moreover, few studies have considered the effect of aerosol multiple scattering. The above-discussed results clearly indicate that atmospheric path radiation was prevalent in our tests and indeed affected the DN values of UAV images. As such, atmospheric path radiation would limit the further application of UAV imagery into areas presently restricted to remote sensing based on satellite and aircraft platforms. The results also showed that Lt(λi) increased with increasing altitude, indicating that the atmospheric path radiation was indeed affected by altitude. The average percentage differences between the values of Lt(λi) and Lv(λi) at altitudes of 50 m, 100 m, and 200 m were 10%, 14%, and 19.7%, respectively. Atmospheric correction was therefore essential.

Lv′(λi) at each band presented a similar trend to that of Lv(λi) at each altitude (50 m, 100 m, and 200 m), as did Lv′′(λi). However, Lv′(λi) was closer to Lv(λi) than Lv′′(λi). We used three VIs (ExG, NGRDI, and NDVI) to further compare the performance of the two atmospheric correction methods. The average percentage differences of ExG (12.41%, 19.15%, and 10.18%) obtained from the UAV images corrected using the proposed algorithm (AC_W) were less than those (48.18%, 18.75%, and 58.9%) obtained from the UAV images corrected using the dark pixel method (AC_D). The same held for NGRDI and NDVI. The proposed atmospheric correction algorithm provided far more accurate VI values than dark pixel atmospheric correction for nearly all VIs and altitudes. Moreover, the accuracies obtained under both correction methods when determining NDVI, which relies heavily on the NIR band, were much better than those obtained for the other two VIs, verifying that the NIR band was less affected by atmospheric path radiation than the Vis band.

Moreover, the method also avoids the need for the laborious evaluation of aerosol particle size distributions because the values of Lpath and t(λi) can be obtained easily under different meteorological conditions regardless of the aerosol optical thickness, resulting in improved accuracy and operability for the atmospheric correction of UAV imagery. Therefore, the proposed algorithm can be expected to be generally useful for various imaging applications subject to varying meteorological conditions.

While the proposed algorithm has been demonstrated to provide superior correction performance, the measured radiance still exhibited some error in the range of 5%–7%, which was mainly derived from the obtained value of Lc(λi) in the Vis band owing to the use of the exponential function in (5). However, this method has been generally employed in atmospheric correction for water color remote sensing with an admissible degree of error and is expected to be reasonable for use in the present context under the assumption that the NIR reflectance of clear water is very close to zero. As such, the proposed algorithm requires a clear water body without eutrophication. Under conditions where the optical characteristics of water bodies are affected by chlorophyll, suspended matter, and/or yellow matter, the NIR wavelengths adopted in this paper should be replaced by shortwave infrared (SWIR) wavelengths because the backscattering coefficients of chlorophyll and yellow matter in the SWIR band are very close to zero unless the concentrations of chlorophyll and suspended matter are extremely high (Tian et al., 2010).

Conclusions

This study proposed an atmospheric correction algorithm for UAV imagery based on the working principles of Gordon’s empirical NIR atmospheric correction algorithm, which was originally developed for water color remote sensing. Atmospheric path radiance (Lpath(λi)) and atmospheric diffuse transmittance (t(λi)) were first obtained at different wavelengths of the Vis and NIR bands for UAV imagery of clear water bodies based on the general assumption that the NIR reflectance of clear water is very close to zero. The obtained values of Lpath(λi) and t(λi) for the water body were then used in the atmospheric correction processing of UAV imagery for nearby vegetation because both the water and vegetation were imaged under equivalent meteorological conditions. The atmospheric correction performance of the proposed method was compared with that of dark pixel atmospheric correction based on evaluations of the errors in corrected radiance values and the values of three VIs (ExG, NGRDI, and NDVI) relative to measured values. The proposed algorithm was demonstrated to perform better than the dark pixel method in terms of corrected radiance values and the values of the three VIs relative to the measured values for images captured at each of three altitudes (50 m, 100 m, and 200 m). Among the three VIs, the accuracies obtained under both correction methods when determining NDVI were much better than those obtained for the other two VIs at each altitude. Despite some error, the proposed method was demonstrated to provide an alternative for applying an atmospheric correction to UAV imagery under different meteorological conditions. The proposed method was also demonstrated to be simpler and more operable than the atmospheric aerosol optical thickness method.

References

Berk, A., Bernstein, L. S., & Robertson, D. C. (1989). MODTRAN: A moderate resolution model for LOWTRAN. Air Force Geophysical Laboratory Technical Report.

Bian, J. (2013). Back analysis of aerosol optical thickness on Bohai Gulf based on MISR data. Journal of Applied Optics, 34(1), 74–78.

Bliakharskii, D., & Florinsky, I. (2018). Unmanned aerial survey for modelling glacier topography in Antarctica: First results. In Paper presented at the 4th International Conference on Geographical Information Systems Theory, Applications and Management (GISTAM 2018).

Chen, J. (2007). Atmospheric correction of MODIS image for turbid coastal waters. Journal of Atmospheric & Environmental Optics, 2(4), 306–311.

Dbrowski, R., & Jenerowicz, A. (2015). Portable imagery quality assessment test field for UAV sensors. ISPRS International Archives of the Photogrammetry, Remote Sensing & Spatial Information Sciences, XL-1/W4, 117–122.

D’Oleire-Oltmanns, S., Marzolff, I., Peter, K. D., & Ries, J. B. (2012). Unmanned aerial vehicle (UAV) for monitoring soil erosion in Morocco. Remote Sensing, 4, 3390–3416.

Doucette, P., Antonisse, J., Braun, A., Lenihan, M., & Brennan, M. (2013). Image georegistration methods: A framework for application guidelines. In: 2013 IEEE Applied Imagery Pattern Recognition Workshop (AIPR), 2013, Washington, DC, USA, pp. 1–14. https://doi.org/10.1109/AIPR.2013.6749317.

Gordon, H. R., & Voss, K. J. (1999). MODIS normalized water-leaving radiance algorithm theoretical basis document. Contract Number NAS5-31363, NASA Goddard Space Flight Center, Greenbelt, MD, USA, pp. 1–2.

Gordon, H. R., & Wang, M. (1994). Retrieval of water-leaving radiance and aerosol optical thickness over the oceans with SeaWiFS: A preliminary algorithm. Applied Optics, 33(3), 443–452.

Hu, Y., Liu, L., Liu, L., Peng, D., Jiao, Q., & Zhang, H. (2014). A Landsat-5 atmospheric correction based on MODIS atmosphere products and 6S model. IEEE Journal of Selected Topics in Applied Earth Observations & Remote Sensing, 7(5), 1609–1615.

Kahle, A. B. (2012). Intensity of radiation from a Rayleigh-scattering atmosphere. Journal of Geophysical Research, 73(23), 7511–7518.

Li, X., Chi, T., & Yi, L. (1993). Inverse computation of ground albedo from remotely sensed data. Remote Sensing of Environment, 8(4), 306–314.

Middleton, M., Nahi, P., Arkimaa, H., Hyvonen, E., Kuosmanen, V., Treitz, P., & Sutinen, R. (2012). Ordination and hyperspectral remote sensing approach to classify peatland biotopes along soil moisture and fertility gradients. Remote Sensing of Environment, 124, 596–609.

Mohamar, M. O., Aurore, D., Debouche, C., & Lisein, J. (2014). The evaluation of unmanned aerial system-based photogrammetry and terrestrial laser scanning to generate DEMs of agricultural watersheds. Geomorphology, 214, 339–355.

Mozhdeh, S., Théau, J., & Ménard, P. (2014). Recent applications of unmanned aerial imagery in natural resource management. GIScience & Remote Sensing, 51, 339–365.

Nishizawa, T., Asano, S., Uchiyama, A., & Yamazaki, A. (2004). Seasonal variation of aerosol direct radiative forcing and optical properties estimated from ground-based solar radiation measurements. Journal of the Atmospheric Sciences, 61(1), 57–72.

Nugroho, G., Satrio, M., Rafsanjani, A. A., & Sadewo, R. R. T. (2015). Avionic system design Unmanned Aerial Vehicle for disaster area monitoring. Paper presented at the 2015 International Conference on Advanced Mechatronics, Intelligent Manufacture, and Industrial Automation (ICAMIMIA).

Roosevelt, C. H. (2014). Mapping site-level microtopography with Real-Time Kinematic Global Navigation Satellite Systems (RTK GNSS) and Unmanned Aerial Vehicle Photogrammetry (UAVP). Open Archaeology, 1(1), 29–53.

Tang, J., Guo, T., Wang, X., Wang, X., & Song, Q. (2004). The methods of water spectra measurement and analysis I: Above-water method. Journal of Remote Sensing, 8(1), 37–44.

Themistocleous, K., Agapiou, A., Cuca, B., & Hadjimitsis, D. G. (2015). Unmanned aerial systems and spectroscopy for remote sensing applications in archaeology. International Archives of the Photogrammetry Remote Sensing & S XL-7/W3, 7, 1419–1423.

Tian, L. Q., Jian Zhong, Lu., Chen, X. L., Zhi Feng, Yu., Xiao, J. J., Qiu, F., & Zhao, Xi. (2010). Atmospheric correction of HJ-1A/B CCD images over Chinese coastal waters using MODIS-Terra aerosol data. SCIENCE CHINA Technological Sciences, 53(s1), 191–195.

Van den Bosch, J. M., & Alley, R. E. (1990). Application of LOWTRAN 7 as an atmospheric correction to Airborne Visible/Infrared Imaging Spectrometer (AVIRIS) data. Paper presented at the International Geoscience & Remote Sensing Symposium.

Wang, M., Ahn, J. H., Jiang, L., Shi, W., Son, S. H., Park, Y. J., & Ryu, J. H. (2013). Ocean color products from the Korean Geostationary Ocean Color Imager (GOCI). Optics Express, 21(3), 3835.

Wang, M., & Shi, W. (2007). The NIR-SWIR combined atmospheric correction approach for MODIS ocean color data processing. Optics Express, 15(24), 15722–15733.

Wang, M., Shi, W., & Jiang, L. (2012). Atmospheric correction using near-infrared bands for satellite ocean color data processing in the turbid western Pacific region. Optics Express, 20(2), 741.

Wierzbicki, D., Kedzierski, M., Grochala, A., Fryskowska, A., & Siewert, J. (2019). Influence of lower atmosphere on the radiometric quality of unmanned aerial vehicle imagery. Remote Sensing, 11(10), 1214–1237.

Xing-xing, G., Yan, C., Lei, Z., & Wu, Z. (2018). Vertical distribution of seasonal aerosols and their optical properties over Northern China. Journal of Lanzhou University: Natural Sciences, 54(3), 395–403.

Xuan, L., Bin, Z., Liang, Y., Xuefeng, G., Xinjiang Meteorological Service Center, Key Laboratory of Meteorological Disaster, Ministry of Education, et al. (2016). Characteristics of Aerosol Vertical Distribution in Eastern China Based on CALIPSO Satellite Data. Desert & Oasis Meteorology.

Yu, X., Liu, Q., Liu, X., Liu, X., & Wang, Y. (2016). A physical-based atmospheric correction algorithm of unmanned aerial vehicles images and its utility analysis. International Journal of Remote Sensing, 38(8–10), 3101–3112.

Yucel, M. A., & Turan, R. Y. (2016). Areal change detection and 3D modeling of mine lakes using high-resolution unmanned aerial vehicle images. Arabian Journal for Science & Engineering, 41(12), 4867–4878.

Zhang, C., & Kovacs, J. M. (2012). The application of small unmanned aerial systems for precision agriculture: A review. Precision Agriculture, 13(6), 693–712.

Acknowledgements

This research was funded by the Shandong Society of Soil and Water Conservation Innovation Project (Grant No. 2019004), the National Science Foundation of China-Shandong United (Grant No. U1706220), the National Science Foundation of China (Grant Nos. 41901006 and 41471005), the Shandong Provincial Natural Science Foundation (Grant Nos. ZR2020MD082 and ZR2019BD005), the Science and Technology Support Plan for Youth Innovation of Colleges and Universities of Shandong (Grant Nos. 2019KJH009 and 2020KJH002), and the Key Research and Development Plan of Shandong (Grant Nos. 2018GSF117001 and 2018GSF117021). We thank LetPub (www.letpub.com) for its linguistic assistance during the preparation of this manuscript.

About this article

Cite this article

Ma, L., Liu, Y., Yu, X. et al. The Utility of Gordon’s Standard NIR Empirical Atmospheric Correction Algorithm for Unmanned Aerial Vehicle Imagery. J Indian Soc Remote Sens 49, 2891–2901 (2021). https://doi.org/10.1007/s12524-021-01434-2
