Abstract

This paper presents new zooarcheological data examining the relative abundances of artiodactyl and leporid remains from Holocene-aged sites in the Bonneville basin. Prior scholarship derived largely from sheltered sites suggests favorable climate conditions during the late Holocene increased foraging efficiency and supported a focus on hunting high-value artiodactyls. Using theoretical rationale from foraging theory and empirical data, we re-evaluate the trade-offs between the risk of hunting failure and energetic returns associated with the procurement of artiodactyls and leporids, the two most common prey groups found in the regional zooarcheological record. The trade-offs between risk and energy show that while small in body size, leporids are a low-risk, reliable food source rather than an inefficient resource targeted only when high-ranked prey are unavailable. We present faunal data from more than 80 open contexts in the Bonneville basin dating to the late Holocene that show a relatively stable exploitation strategy centered on leporids, especially hares (Lepus sp.). Additional data from open and sheltered sites in neighboring areas show a similar pattern. The prehistoric reliance on small game is consistent with divergent labor patterns observed in the ethnographic and historic records of the area. We advocate for the evaluation of the trade-offs between risk and energy of different sized prey within a regional context, and the use of zooarcheological data derived from a large number of sites and different site types to infer prey exploitation patterns.

Introduction

Guided by theoretical rationale derived from the prey choice model (PreyCM), the relative abundances of large to small-sized prey in zooarcheological assemblages are often used as important tools to measure relative prehistoric foraging efficiency (e.g., Broughton 1994, 2002; Butler and Campbell 2004; Wolverton 2005; Codding et al. 2010; Broughton et al. 2011). The PreyCM predicts dietary choice from an array of available resources ranked on a single dimension of profitability—the post-encounter return rate (kilocalories (kcals) obtained per unit of handling time)—and is generally viewed as a robust model for predicting resource choices among contemporary foragers (e.g., Winterhalder 1981; Hawkes and O’Connell 1985; Hill et al. 1987; Smith 1991). Working under the assumption that a forager’s goal is to maximize efficiency, resources fall in and out of the diet in rank order depending on the encounter rate(s) with high-value resources. The model provides an important theoretical context for interpreting the abundances of different prey in zooarcheological assemblages because body size is routinely viewed as a proxy measure for the post-encounter return rate of prey (e.g., Broughton et al. 2011). This assumption is based on empirical research showing that large-sized animals are often, but not always, higher ranked than those that are smaller in body size (e.g., Alvard 1993; Hill and Hawkes 1983; but see Madsen and Schmitt 1998; Bird et al. 2013).

The value of the PreyCM to zooarcheological interpretations is particularly well-demonstrated in the Great Basin where assemblages are often composed of artiodactyl and leporid remains. These prey types are commonly cast as reflecting opposing ends of the diet breadth with artiodactyls representing the highest value prey and leporids viewed as the lowest ranked prey. Accordingly, prehistoric increases in the abundances of small relative to larger-sized prey are considered to be signs of diminishing foraging efficiency arising from reduced encounter rates with high-ranked resources and linked to resource depression and/or habitat reduction from climate change (e.g., Szuter and Bayham 1989; Janetski 1997; Cannon 2003; Byers and Broughton 2004). Conversely, decreases in the abundances of small relative to large prey are often viewed as indicators of increasing environmental productivity as encounter rates with high-value prey rise (e.g., Broughton and Bayham 2003; Broughton et al. 2011).

Despite the obvious appeal of the PreyCM as an interpretive and predictive tool, an increasing number of empirical studies show that resource choice among ethnographic subsistence hunters is not always predicted solely by energetic returns and is often based on the trade-offs between risk and energy (e.g., Winterhalder 1981; Hawkes et al. 1991; Smith 1991, 2013; Lupo and Schmitt 2005; Lupo 2007; Bird et al. 2009, 2012; Codding et al. 2011). Risk is defined here as the probability of failure to acquire the target prey after it is encountered relative to other alternative resources. Important constraints arising from prey behavioral and physiological characteristics, such as mobility, predator avoidance, and defense responses, can appreciably increase the risk associated with pursuing particular prey (Stiner et al. 2000; Stiner and Munro 2002; Lyman 2003; Koster 2007:98; Jones et al. 2008; Bird et al. 2009, 2012; Speth 2012; Wolverton et al. 2012; Lupo and Schmitt 2016). Furthermore, some prey, especially those that are mobile, have high pursuit costs which not only can increase the costs of pursuing the animal but also can lead to high failure rates. Often, the same prey with characteristics that make them difficult to pursue are larger-sized and presumably high-ranked. But failed and/or prolonged pursuits increase the costs of handling those prey and, by definition, decrease the post-encounter return rates associated with those animals (see Lupo and Schmitt 2016). Depending on the available hunting technology and pursuit strategy, large-sized prey with characteristics that make them difficult or expensive to pursue may be less efficient to acquire than smaller-bodied but lower-risk prey.

The ethnographic record shows that differences in prey characteristics can influence human predation. Hunters sometimes deliberately avoid pursuing certain high-value prey because of the difficulty associated with their acquisition (see Lee 1979:231-234; Smith 1980:302-303; Yost and Kelly 1983:205-206; Lupo and Schmitt 2016). Conversely, hunters sometimes specifically target prey that are difficult to capture or that have a high risk of hunting failure relative to other available opportunities. Hunters may target these prey to enhance prestige, build social and/or political alliances, or gain mating opportunities (Hawkes et al. 1991, 2010; Sosis 2000; Hawkes and Bliege Bird 2002; Wiessner 2002; Smith 2004; Bird et al. 2009, 2012; Lupo and Schmitt 2016). These empirical observations do not invalidate the use of the PreyCM or the use of body size as a proxy for resource rankings, but show that the elevated risks and costs associated with the acquisition of certain prey can have an appreciable effect on prey rank. Clearly, these observations invite further questions about the ecological and social circumstances that might support the pursuit of high-risk prey.

Recently, Codding et al. (2011) (see also Bliege Bird et al. 2009) identified important ecological circumstances influencing the trade-offs between energetic returns and risk and how these articulate with the foraging goals of men and women. In circumstances characterized by unpredictable high-value resources or associated with high levels of daily variance, men who are more risk-prone than women may target high-risk prey with the goal of social provisioning. When men target high-risk prey, women often focus on more predictable resources with lower daily variances in return (or lower risk of failure) with the goal of provisioning (the so-called divergent strategies). Conversely, in biomes where many different high-value resources are predictably available and have a low risk of failure, the goals of men and women can overlap and result in coordinated acquisition strategies (the so-called convergent strategies). Mitigating factors include population densities, the availability of alloparents and social support, and the value of social networks, alliances, and prestige (also see Elston et al. 2014).

Leporids, artiodactyls, and foraging strategies in the Great Basin

Great Basin ethnographic and historic records show that indigenous hunter-gatherers had divergent foraging patterns in which men targeted high-risk large prey and women focused on reliable low-risk resources that comprised the bulk of the diet (e.g., Elston et al. 2014). Large-bodied prey densities and, by extension, encounter rates were generally low (albeit geographically variable) throughout the region and smaller-sized prey, especially leporids, were a common prey item targeted by all segments of the population. The prehistoric paleoenvironmental record for the Great Basin, however, is characterized by dramatic changes in temperature and precipitation that influenced overall productivity and presumably prey abundances. Following the early Holocene, the middle Holocene (ca. 9000–4500 cal BP) was characterized by warmer temperatures and reduced precipitation that greatly reduced prey abundances and increased human population mobility (Madsen et al. 2001; Broughton and Bayham 2003; Byers and Broughton 2004; Madsen 2007; Broughton et al. 2011; Grayson 2011; Jones and Beck 2012). With the onset of the late Holocene approximately 4500 cal BP, cooler and moister conditions returned and likely increased environmental productivity and possibly the encounter rates with artiodactyls. Abundances of artiodactyl fecal pellets (measured as pellets per liter of sediment) from Homestead Cave, for example, show that the highest densities occurred some 3690–3330 cal BP (Hunt et al. 2000:52-53). Broughton et al. (2008, 2011) use these data, in concert with data from Hogup and Camels Back caves (Fig. 1), to argue for wetter summers and drier winters during the late Holocene that increased artiodactyl populations and fueled an increase in big game hunting and hunting efficiency in the Bonneville basin and much of the western USA. 
Hockett (2015), however, found that zooarcheological assemblages from Bonneville Estates Rockshelter and other cave sites showed sustained and stable artiodactyl hunting from the middle through late Holocene. He notes that artiodactyl hunting, as reflected by faunal abundances, remained stable through other notable climate perturbations, including the Neopluvial (3500–2650 cal BP), late Holocene drought (2600–1650 cal BP), and the Little Ice Age (650–100 cal BP) (e.g., Grayson 2006, 2011). He concludes that artiodactyls were always part of a very broad and diverse subsistence regime which varied with regional opportunities (Hockett 2015). In addition to climatic change, the late Holocene witnessed changes in hunting technology and pursuit strategies that may have influenced prey handling costs, risks, and social and economic values associated with artiodactyl hunting. These included the advent and spread of the bow and arrow some 2000–1400 years ago (Codding et al. 2010; Grayson 2011; Smith et al. 2013) and an increase in cooperative hunts/drives after about 5000 years ago associated with changes in sociopolitical organization and processes (Hockett 2005; Hockett et al. 2013).

While most researchers agree that localized conditions offered different sets of resource opportunities and constraints to prehistoric populations throughout the Great Basin, there is little consensus on the extent or scale of late Holocene increases in hunting productivity. It is also not clear if increases in artiodactyl hunting and foraging efficiency in the latest Holocene had an appreciable influence on the diet breadth and/or subsistence labor patterns of men and women. Increased big-game productivity during the late Holocene should lead to a more convergent labor pattern focused on a narrower diet breadth with a decreased exploitation of smaller-sized and lower value prey than observed in the ethnographic and historic records. Here we consider how the trade-offs between risk and energy influence classic Great Basin prey rankings and targets. Zooarcheological data from a large sample of Bonneville basin open contexts are considered in light of these trade-offs and together with additional data from neighboring Holocene-aged sites reveal a relatively stable pattern of artiodactyl and leporid exploitation.

Determining the trade-offs between risk and energy

The energetic values and post-encounter return rates for many of the different wild resources exploited in the ethnographic record of the Great Basin are well-established in the published literature. The most widely used of these sources is Simms’ (1984, 1985, 1987) pioneering data on the handling costs (pursuit and processing times) and benefits (as measured by kcals) of different resources. To determine the pursuit costs for artiodactyl encounter hunting, he used interviews with contemporary hunters who reported that pursuit varied from a few minutes to approximately 1 h (Simms 1984). For simplicity, Simms applied the same pursuit costs for deer (Odocoileus sp.), mountain sheep (Ovis canadensis), and pronghorn antelope (Antilocapra americana). Processing costs were estimated to be 1.5 h for deer and mountain sheep and 1 h for antelope. For smaller-sized prey, such as leporids (hares (Lepus cf. californicus) and rabbits (Sylvilagus sp.)), he used the best estimates possible from limited ethnographic and wildlife literature and assumed 2–3 min pursuit after the animal was encountered. Processing costs were estimated to be 5 min for hares and 3 min for rabbits. As Simms (1987) points out, for artiodactyls pursuit costs would have to be considerably higher to appreciably change the post-encounter rankings for these prey because of the high cost of processing large-sized carcasses. Accordingly, prey ranking is largely based on processing time which varies as a function of prey body size. Most notably, processing costs for artiodactyls are nearly the same as pursuit costs. Simms (1987:91) notes that doubling the pursuit for deer to 2 h only lowers the return rate from 17,971 to 12,580 kcal/h. Compared with a considerably lower ranked prey, such as duck, doubling the pursuit time changes the return rate from 1508 to 1231 kcal/h. This exercise demonstrates just how dramatic the effect of differences in pursuit times can be on post-encounter return rates. 
A doubling of pursuit times results in a much larger change in post-encounter return rates for artiodactyls than it does for ducks (> 5000 versus < 300 kcal/h). Furthermore, given the limited nature of available data, Simms’ values do not include failed pursuits and the influence of risk from hunting failure on post-encounter return rates.
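The size of these changes can be checked with simple arithmetic. The sketch below uses only the four return rates quoted above from Simms (1987:91); the function and variable names are illustrative and not part of the original analysis.

```python
# Return rates (kcal/h) quoted in the text from Simms (1987:91),
# before and after a doubling of pursuit time.
RATES = {
    "deer": (17971, 12580),
    "duck": (1508, 1231),
}


def absolute_drop(before, after):
    """Loss in post-encounter return rate, in kcal/h."""
    return before - after


def percent_drop(before, after):
    """Loss expressed as a percentage of the original return rate."""
    return (before - after) / before * 100


for prey, (before, after) in RATES.items():
    print(
        f"{prey}: -{absolute_drop(before, after)} kcal/h "
        f"({percent_drop(before, after):.1f}% of the original rate)"
    )
```

On these figures the deer rate falls by 5391 kcal/h (about 30%) and the duck rate by 277 kcal/h (about 18%), so the loss is larger for deer in both absolute and proportional terms.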

In traditional applications of the PreyCM to zooarcheological assemblages, similarly sized prey such as artiodactyls are assumed to have homogeneous handling costs, including the probability of failure, and usually are treated as a group (e.g., Janetski 1997; Byers and Broughton 2004; Ugan 2005; Broughton et al. 2011). However, while all the species that comprise artiodactyls (and leporids) are mobile, they often occupy different (albeit sometimes overlapping) habitats, move at different speeds, and, more importantly, have very different predator defense mechanisms (Table 1). Among common artiodactyls in the Bonneville basin, for example, pronghorns are the fastest animal in the Western Hemisphere reaching speeds of over 100 km/h and are known for their superlative aerobic capacity that allows for prolonged long-distance running up to 5 or 6 km before becoming exhausted (e.g., Lindstedt et al. 1991; Lubinski and Herren 2000). In contrast, mountain sheep can reach about 50 km/h on flat ground but only 15 km/h on broken terrain and escape predators by using landscape obstacles such as steep and rocky cliff faces (Valdez and Krausman 1999; Shackleton 1985). Similarly, the pursuit costs of leporids can greatly differ given their antipredator responses and preferred habitats (Table 1). These differences in mobility, predator defense strategies, and other features could potentially translate into vastly different risks of hunting failure associated with the respective prey.

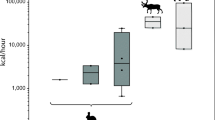

While it is challenging to know how the elevated pursuit costs and failure rates of all of the different animals that comprise artiodactyls and lagomorphs influenced prehistoric hunter success, recent analyses of a large set of empirical data derived from contemporary subsistence hunters identify several important trends (Lupo and Schmitt 2016). Among subsistence hunters using a wide range of traditional hunting technologies and pursuit techniques including spears, bows and poisoned arrows, and bow guns, hunting success (as measured by the number of times a hunter kills and acquires a carcass divided by the number of times the prey was encountered and pursued on the landscape) is negatively correlated with prey body size (Fig. 2). The pursuit of smaller-sized prey is generally (but not always) associated with higher hunter success than larger-bodied prey which often have longer pursuit times and higher hunting failure rates. In this sample, large-sized prey includes fleet artiodactyls and other animals that are dangerous or simply difficult to kill with traditional technologies (see Lupo and Schmitt 2016).

Unfortunately, the pursuit costs and specific risks of failure associated with different prey in the Great Basin are unavailable. Sparse ethnographic and ethnohistoric descriptions are illustrative of the range of techniques used to pursue certain species, such as antelope and jackrabbits, but few report quantitative data on the costs or failure rate of these pursuits (see Lubinski and Herren 2000; McCabe et al. 2010). For example, large numbers of antelope and jackrabbits could be taken in communal drives that were held seasonally, required a large organized labor force, and likely involved large investments of time and effort (e.g., Hockett et al. 2013). Far less information is available on encounter hunting of individual animals. In the case of antelope, this could involve substantial time investments in wearing a disguise and/or stalking. Lowie (1909:185) described mounted northern Shoshoni pursuing antelope and reported that 40 or 50 mounted hunters could spend half of a day to kill 2 or 3 animals. Another common but high-cost pursuit method involved persistence hunting, which could last 2 days before the animal was dispatched (McCabe et al. 2010:61). Similarly, hares could be taken by many different methods, including communal drives with and without nets, snares, hand capture, clubs, arrows, and rabbit throwing sticks.

Even less information is available on hunting failure rates related to the pursuit of prey. In a novel attempt to estimate the risk of hunting failure, Broughton et al. (2011) cited survey data collected from contemporary firearm hunters in South Carolina and Kentucky pursuing cottontails (Sylvilagus sp.) and from white-tailed deer (Odocoileus virginianus) hunters in Ontario and South Carolina. As they acknowledge, these data are clearly not directly comparable with the success rates of prehistoric hunters but are only illustrative of the potential degree of risk associated with pursuing different prey. Contemporary hunters use a variety of different modern weaponry (rifles, improved bow and arrows, trained dogs, etc.), hunt in designated areas, and have a single-prey focus as dictated by tags/licenses, and much of the available quantitative data are derived from self-reported surveys which are often inaccurate (e.g., Lukacs et al. 2011). Broughton et al. (2011) cite data reporting a modest success rate of 56% (42–62%) based on the number of reported rabbits seen jumping by hunters and number that were subsequently killed. By this measure, rabbit hunting appears to be a very high-risk pursuit. However, the number of rabbits jumped in these surveys does not represent the number of animals actively pursued by hunters and reflects only the densities of rabbits on the landscape. For artiodactyls, they cite reports on overall hunting success of 79–80% for O. virginianus with an estimated failure rate of 20% from an experimental hunt conducted in an enclosed and heavily managed hunting club. These values are based on the general hunter success rate and not success as a function of the number of animals killed from those encountered and seem to show that hunting large-sized artiodactyls is a low-risk strategy in comparison with pursuing rabbits.

Here we follow Broughton et al. (2011) and dig a bit deeper into the published literature on hunter success and failure as reported in the wildlife literature. Two different measures can provide insights into hunting success and the risk of failure. In most of the published wildlife literature, overall hunting success is based on measures of whether or not a hunter made a kill at some point during a given interval, irrespective of how many animals were encountered. More accurate measures of hunting success should include data on how often the hunter dispatches an animal after it is encountered (number of animals dispatched/number of animals encountered and pursued). However, measurements of hunting success based on the number of animals killed given the number pursued are very rare in the available literature. The closest approximation can be made from available data on wounding or crippling rates, which provide some insight into the risk of hunting failure given the number of prey encountered. Wounding rates measure how many animals were shot by the hunter but escaped, and either recovered or eventually died of their wounds without the carcasses ever being found. Data from the South Carolina and Kentucky hunting surveys mentioned above show relatively low rabbit wounding rates of approximately 2%. This is because the rabbits were dispatched with guns which inflict traumatic injury, but the low wounding rates also suggest that hunters did not often miss their target after it was selected. In a separate controlled study targeting European rabbits (Oryctolagus cuniculus), Hampton et al. (2015) report a high success rate of 79% and found that of the animals targeted by hunters, about 12% were wounded and another 9% escaped unharmed. Comparable accurate wounding rates for deer are difficult to find but are reportedly much higher (between 40 and 60%), especially for bow hunters (Croft 1963; Downing 1971; Garland 1972; Stormer et al. 1979; McPhillips et al. 1985; Boydston and Gore 1987; Ditchkoff et al. 1998). Lower wounding rates of 7–18% are reported, but these are either associated with highly modified bows and/or enclosed hunting areas such as managed clubs or islands where numerous hunters participated in organized hunts (Severinghaus 1963; Gladfelter et al. 1983; Krueger 1995; Ruth and Simmons 1999; Pedersen et al. 2008). Despite the shortfalls in these data, lower failure rates are associated with hunting leporids in comparison with artiodactyls.
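The difference between the two measures of hunting success can be made concrete with a small sketch. The hunter records below are entirely hypothetical and are not drawn from any of the surveys cited; they only illustrate how a high overall success rate can coexist with a high per-encounter failure rate.

```python
from dataclasses import dataclass


@dataclass
class HunterSeason:
    pursued: int  # animals encountered and pursued during the interval
    killed: int   # carcasses actually acquired


def overall_success(records):
    """Share of hunters who made at least one kill during the interval."""
    return sum(r.killed > 0 for r in records) / len(records)


def per_encounter_success(records):
    """Animals dispatched divided by animals encountered and pursued."""
    return sum(r.killed for r in records) / sum(r.pursued for r in records)


# Hypothetical season for four hunters (illustrative values only).
season = [
    HunterSeason(pursued=10, killed=1),
    HunterSeason(pursued=8, killed=1),
    HunterSeason(pursued=12, killed=2),
    HunterSeason(pursued=5, killed=0),
]

overall = overall_success(season)              # 3 of 4 hunters were successful
per_encounter = per_encounter_success(season)  # 4 kills out of 35 pursuits
print(f"overall: {overall:.2f}, per encounter: {per_encounter:.2f}")
```

In this invented example, three of four hunters count as successful under the overall measure, yet only 4 of 35 pursuits ended in a kill, which is why the overall measure says little about the risk of failure after an encounter.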

More quantitative data are available for overall hunting success rates for contemporary hunters of large artiodactyls and leporids. Here, we use overall hunting success rates of gun hunters in California spanning some 12 years (Fig. 3). These data show that hunters who pursued cottontail rabbits and hares were fairly successful over this interval and significantly more successful than those reported by Broughton et al. (2011). Although the values simply reflect whether a hunter was successful irrespective of the number of animals they encountered and pursued, the values are strikingly different. In general, these gross measures show that deer hunters are far less successful than those targeting cottontails and jackrabbits. While all these taxa are mobile and leporids are likely more abundant on the landscape than artiodactyls, leporids also present a much smaller-sized target and would presumably be more difficult to hit than deer, especially with modern weaponry.

Overall hunting success rates for cottontails, jackrabbits, and deer in California, 1996, 1999–2008, and 2010 (California Department of Fish and Upland Game/Waterfowl Program (https://www.wildlife.ca.gov/hunting/harvest-statistics))

To evaluate how risk of hunting failure could potentially influence the rankings of different prey, we recalculated the post-encounter return rates as reported by Simms (1984) using overall hunting success. We follow the modification suggested by Ugan and Simms (2012) of discounting the post-encounter return rate by the probability of a failed pursuit (Fig. 4). When post-encounter return rates are discounted by failure rates derived from contemporary hunters, the ranking of prey changes (see Lupo and Schmitt 2016) and smaller-sized prey with lower risks of hunting failure become more efficient choices. Clearly there are circumstances where the high risk of failure can make smaller-sized and low-risk prey more efficient than large-sized prey. While it is impossible to know the actual risks of failure faced by prehistoric hunters, these data can shed light on subsistence patterns that appear to run contrary to general predictions of the PreyCM.
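The effect of this discounting on prey rank can be sketched as follows. This is a minimal illustration, assuming the simplest reading of the adjustment (return rate multiplied by the probability of a successful pursuit); it does not reproduce the exact formulation of Ugan and Simms (2012) or the values plotted in Fig. 4. The deer return rate is the figure quoted above from Simms (1987:91); the hare return rate and both success probabilities are hypothetical placeholders.

```python
def discounted_return(return_rate_kcal_h, p_success):
    """Post-encounter return rate discounted by the risk of a failed pursuit.

    Assumes a failed pursuit yields nothing, so the expected return is the
    undiscounted rate multiplied by the probability of success.
    """
    if not 0.0 <= p_success <= 1.0:
        raise ValueError("p_success must lie between 0 and 1")
    return return_rate_kcal_h * p_success


# (return rate in kcal/h, probability of success)
prey = {
    "deer": (17971, 0.20),  # rate from Simms (1987:91); probability hypothetical
    "hare": (13000, 0.80),  # both values hypothetical
}

undiscounted_rank = sorted(prey, key=lambda p: prey[p][0], reverse=True)
discounted_rank = sorted(
    prey, key=lambda p: discounted_return(*prey[p]), reverse=True
)

print("undiscounted:", undiscounted_rank)
print("discounted:  ", discounted_rank)
```

Under these placeholder values the larger prey ranks first on energetic return alone but second once the risk of failure is taken into account. The direction of the result depends entirely on the success probabilities supplied.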

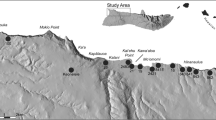

Prehistoric Bonneville basin environs and human subsistence

A general overview of regional basin and range topography shows that Great Basin habitats are characterized by elevational zonation (e.g., Grayson 1993, 2011; Harper 1986). These include sparsely vegetated xerophytic scrub communities located on valley floors and lower piedmonts, pygmy forests of juniper (Juniperus sp.) and pinyon pine (Pinus edulis) on the lower mountain slopes, and subalpine forests of aspen (Populus tremuloides) and limber pine (Pinus flexilis) at higher elevations. In the eastern Great Basin, the Bonneville basin is a massive Pleistocene lake basin that covers portions of southern Idaho, eastern Nevada, and much of western Utah, including the Great Salt Lake and Great Salt Lake Desert (e.g., Madsen 2000) (Fig. 1). Piedmonts, extensive alluvial fans, and broad valleys comprise most of the region, which currently supports open xerophytic plant communities, and regional paleoecological studies of floral and faunal remains agree that these contexts supported similarly open and arid habitats throughout most of the Holocene (Grayson 2000, 2011; Madsen et al. 2001; Louderback and Rhode 2009; Schmitt and Lupo 2012, 2016; Rhode 2016). Among other species, these vast open tracts provided ideal environments for leporids and artiodactyls, at times including bison (Lupo 1996; Grayson 2006), as well as excellent hunting opportunities for regional peoples (Schmitt et al. 2004). Jackrabbits are particularly well-suited for low-elevation arid habitats and are common in most areas; pronghorn also favor open brush/grass communities on valley floors and lower foothills and co-occur with hares; and deer often occur in valleys and along mid-level slopes and canyons with sage and forest communities that include both hares and cottontails (e.g., Hall 1946; O’Gara 1978).

To investigate regional prehistoric subsistence pursuits, zooarcheological data from various groups of sites in the southern Great Salt Lake Desert are examined. First, Table 2 presents jackrabbit and artiodactyl assemblages as quantified by the number of identified specimens (NISP) from excavated and dated cultural features across the region. Overall, 22 dated contexts with associated food residues are reported and include hearths and/or occupation surfaces at Buzz-Cut Dune (Madsen and Schmitt 2005), Camels Back Cave (Schmitt and Madsen 2005), Playa View Dune (Simms et al. 1999), and a late prehistoric/protohistoric occupation at 42To567 (Rhode et al. 2011) (Fig. 1). Note that most assemblages contain considerably more jackrabbit bones than those of artiodactyls. Cumulatively these collections mark hare processing episodes that span more than 8000 years with a number of contexts dating between about 5600 and 4400 cal BP. With the exception of an ephemeral hearth (Feature 66) in Camels Back Cave and subsequent living surface (Feature 25, ~ 750 cal BP) where appreciable numbers of identified artiodactyl and artiodactyl-sized specimens were deposited (Schmitt and Lupo 2005), bone assemblages from these various cultural contexts are dominated by jackrabbits with artiodactyl remains being few, or in 11 cases, entirely absent (Table 2).

Second, and less well known, are numerous surface assemblages documented in regional archeological surveys that provide further evidence for the presence and recurrent dominance of hares in local subsistence systems. Table 3 presents presence-absence data on observed jackrabbit and artiodactyl remains in 65 open sites recorded in survey projects along the southern margins of the Great Salt Lake Desert. Most survey areas (AFUA, Loiter, TAE, T&T, and White Sage) largely encompassed dune deposits along the toes of alluvial fans, but a few (Tess 1, 5, and 7) were atop the flat, sparsely vegetated cap of regressive phase lacustrine fines in the bottom of Dugway Valley (Madsen et al. 2015), and one (BSP; Fig. 1) incorporated dunes and deflated alkali mudflats (Page et al. 2014). To control for taphonomic and associated site formational issues, we note that the bone was typically found in direct association with fire-altered rock that includes both discrete concentration loci and eroded scatters. Furthermore, given the potential presence of occasional on-site jackrabbit natural death assemblages and especially fragmentary hare remains deposited in carnivore scatological droppings (e.g., Schmitt and Juell 1994), only severely burned (carbonized and/or calcined) specimens were considered human food residues (cf. Byers and Broughton 2004). The observed assemblages ranged from a couple of charred bones to hundreds of carbonized and calcined fragments. In a number of occurrences, the charred bone and fire-altered rock clusters contained pieces of woody charcoal and/or oxidized sediment and likely served as cooking features or refuse dumps. 
Some burned bones were observed in association with small artifact assemblages (e.g., a few flakes and one or two tools) that likely manifest briefly occupied task sites and camps, and others were found in large scatters containing ground stone, ceramics, and large and diverse assemblages of lithic tools and detritus (Table 4) that doubtless mark prolonged stays by family groups. Although these site data only afford surface expressions, 84 of the 85 loci with associated human food refuse contain jackrabbit/jackrabbit-sized bones (Table 3), and in all but two contexts, jackrabbits are the only species present.

Twenty-one of these surface bone assemblages occur with temporally diagnostic projectile points and/or ceramics, and there are radiocarbon age estimates on charcoal from associated fire-cracked rock features at eight Tess 1 sites (Schmitt et al. 2010) (Table 4). Overall, the types of associated artifacts and the results of radiocarbon assay mark occupations dating to the past ~ 2000 years and include a large number of sites dating to the Fremont Period (~ 1800–500 cal BP; e.g., Madsen 1989; Madsen and Simms 1998; Simms 2008:185–228) and extending into Late Prehistoric times. Together with the dated excavations (Table 2), there are 106 episodes of jackrabbit processing along the margins of the southern Great Salt Lake Desert, with one site containing only artiodactyl remains and 94 (89%) marking hare-only processing events.
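These totals follow from the counts given for the excavated and surface assemblages, as the short tally below shows. The counts are those stated in the text; the assumption that every one of the 22 dated excavated contexts contains jackrabbit remains is inferred from the reported total of 106 rather than stated outright, and the variable names are illustrative.

```python
# Dated excavated contexts (Table 2).
excavated_contexts = 22
excavated_hare_only = 11   # contexts in which artiodactyl remains are absent

# Surface loci with associated human food refuse (Table 3).
surface_loci = 85
surface_with_hare = 84     # loci containing jackrabbit/jackrabbit-sized bone
surface_hare_only = surface_loci - 2  # "in all but two contexts"

# Assumption: all 22 excavated contexts contain jackrabbit remains.
hare_episodes = excavated_contexts + surface_with_hare
hare_only_episodes = excavated_hare_only + surface_hare_only
hare_only_share = hare_only_episodes / hare_episodes * 100

print(hare_episodes, hare_only_episodes, f"{hare_only_share:.0f}%")
```

The tally returns 106 jackrabbit processing episodes, of which 94 (about 89%) are hare-only, matching the figures reported.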

Fifty-one contexts with chronological data illustrate the continued use of hares over the last ~ 8300 cal years, including a mass collecting event(s) near Camels Back Cave ~ 7400–7250 cal BP (Table 2; Schmitt et al. 2004). Importantly, clusters of dated use episodes occur during two very disparate climatic events, with the first increase in hare processing features dating to ~ 5600–4400 cal BP during the later years of middle Holocene desertification (e.g., Madsen 2000; Grayson 2011). This cluster includes occupations at Buzz-Cut Dune (Madsen and Schmitt 2005) and Playa View Dune (Simms et al. 1999) and multiple visits to Camels Back Cave (Schmitt and Madsen 2005). While the pursuit of jackrabbits during this arid interval may suggest resource intensification, hares dominated regional human refuse aggregates prior to this time, and it is more plausible that these occurrences mark procurements of a low-risk dietary staple.

The second concentration of dated contexts is during Fremont times, when foragers and farmers commonly took hares during a climatic cycle marked by increases in summer moisture that included monsoonal storms and the associated expansion of grassland habitats (Madsen 2000; Wigand and Rhode 2002; Grayson 2011). These novel grasslands provided propitious forage for both hares and large herbivores, including bison, whose populations expanded significantly in some areas as a result of these improved environmental conditions (Lupo 1996; Grayson 2006; Broughton et al. 2008). In fact, a number of Lepus-only/dominant Fremont assemblages in the southern Great Salt Lake Desert were deposited while neighboring foragers and farmers in lake margins along the Wasatch Front were taking significant numbers of large mammals (e.g., Lupo and Schmitt 1997; Grayson 2006 and references therein), as were the inhabitants of Oranjeboom Cave (Buck et al. 2002). Thus, during a time when regional subsistence pursuits should have forsaken hares and focused on artiodactyl prey, many people continued to rely on jackrabbits as a food resource (e.g., Hockett 1998). During the Fremont Period at Camels Back Cave, it appears that artiodactyls and hares were pursued in tandem (Table 2), as there was a marked increase in fragmentary large mammal remains that included bison and bighorn associated with Lepus bones processed by human hunters (Schmitt and Lupo 2005).

In a final and more far-reaching look at regional subsistence strategies, we incorporate temporal and quantitative data on leporids and artiodactyls from neighboring eastern and central Great Basin sites with the southern Great Salt Lake Desert data (Online Resource 1). Included are skeletal abundances in stratigraphic aggregates from Swallow (Dalley 1976; Swanson 2011) and James Creek (Grayson 1990) shelters and Hogup (Durrant 1970; Martin et al. 2017) and Danger (Grayson 1988) caves (Fig. 1), and bone collections from open Fremont residential sites along the Great Basin’s easternmost edge (e.g., Sharrock and Marwitt 1967; Marwitt 1970). Each of these sites occurs in habitats that support both leporids and artiodactyls and we do not include the aforementioned artiodactyl-rich Fremont and Late Prehistoric sites unique to the wetlands along the margins of the Great Salt and Utah lakes.

With the addition of these assemblages, there are now 119 dated bone aggregates from 51 sites. The context of each assemblage and its age and abundance index are presented in Online Resource 1, and a scatterplot of the abundance indices through time is presented in Fig. 5. Our use of mean age estimates and calculations of abundance indices follow previous measures used by researchers across the region (e.g., Broughton 1994; Janetski 1997; Byers and Broughton 2004). In a few instances, multiple stratigraphic bone aggregates were bracketed by widely distributed age estimates and were assigned mean ages based on the number and position of stratigraphic horizons and the span of the bracketing dates. Except for minimum number of individuals (MNI) counts on the Hogup Cave specimens (Durrant 1970), all temporal bins were quantified using NISP, and the abundance indices represent artiodactyl indices (e.g., Szuter and Bayham 1989) calculated as Σartiodactyls/(Σartiodactyls + Σlagomorphs) to track differences in the ratio of large-bodied herbivores to smaller hares and rabbits. While the data suggest a very slight increase in artiodactyl abundances through time, the relationship is not significant (Pearson’s correlation coefficient; r = 0.092, p = 0.320, df = 117) and the low and flat trendline (Fig. 5) illustrates the persistence, indeed importance, of hares and rabbits in regional prehistoric subsistence systems as they dominate most assemblages (mean of artiodactyl index values = 0.24). In fact, 39 assemblages contain only leporid remains and 92 of the 119 aggregates (77.3%) contain more leporid bones than artiodactyl bones.
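The index calculation and the correlation test described above can be sketched in a few lines of Python. This is a minimal illustration under stated assumptions, not the authors' actual workflow: the function names and the four sample assemblages are hypothetical placeholders, and only r = 0.092 and df = 117 are taken from the text. The sign of r depends on how the time axis is coded (for example, years before present versus calendar order).

```python
import math


def artiodactyl_index(artiodactyl_count, lagomorph_count):
    """Artiodactyl index: artiodactyls / (artiodactyls + lagomorphs).

    Returns None when both counts are zero, since the ratio is undefined.
    """
    total = artiodactyl_count + lagomorph_count
    if total == 0:
        return None
    return artiodactyl_count / total


def pearson_r(xs, ys):
    """Pearson product-moment correlation coefficient for paired values."""
    n = len(xs)
    if n != len(ys) or n < 3:
        raise ValueError("need at least three paired observations")
    mean_x = sum(xs) / n
    mean_y = sum(ys) / n
    sxy = sum((x - mean_x) * (y - mean_y) for x, y in zip(xs, ys))
    sxx = sum((x - mean_x) ** 2 for x in xs)
    syy = sum((y - mean_y) ** 2 for y in ys)
    if sxx == 0 or syy == 0:
        raise ValueError("correlation is undefined for constant input")
    return sxy / math.sqrt(sxx * syy)


def t_statistic(r, df):
    """t value used to test r against zero, with df = n - 2."""
    if abs(r) >= 1:
        raise ValueError("t is undefined when |r| = 1")
    return r * math.sqrt(df / (1 - r * r))


# Hypothetical assemblages (NOT from Online Resource 1):
# (mean age in cal BP, artiodactyl NISP, lagomorph NISP)
sample = [(8000, 2, 98), (5000, 0, 150), (1500, 30, 70), (900, 10, 40)]
ages = [age for age, _, _ in sample]
indices = [artiodactyl_index(a, l) for _, a, l in sample]

print("indices:", [round(i, 2) for i in indices])
print("r for the hypothetical sample:", round(pearson_r(ages, indices), 3))

# With the values reported in the text, t is close to 1, which is far
# below conventional significance thresholds at 117 degrees of freedom.
print("t for r = 0.092, df = 117:", round(t_statistic(0.092, 117), 3))
```

A t value near 1 at 117 degrees of freedom is consistent with the non-significant result reported above.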

Summary and discussion

A review of the trade-offs between the energy and risk associated with the acquisition of artiodactyls and leporids suggests that hunting failure can be a significant factor influencing post-encounter return rates and traditional prey rankings (also see Lupo and Schmitt 2016). It might be argued that the published post-encounter return rates that underlie resource rankings are generalized estimates that represent most circumstances of capture and processing and are not meant to address all possible outcomes. But published post-encounter values do not encompass the costs of hunting failure, and as demonstrated here and elsewhere, when these values are discounted to account for failure, the rank ordering of different-sized prey is significantly altered. Arguably, the use of modern hunting success/failure rates as estimates for discounting post-encounter return rates is a very blunt tool, and more exacting data on the pursuit costs of different prey and failure rates are needed. But these data illustrate the salient point that measurements of risk need to be incorporated into the prey rankings that are commonly used to interpret archeological data.
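The effect of discounting on prey rank can be illustrated with a short sketch. It assumes the simplest possible discount, multiplying a post-encounter return rate by the probability that a pursuit succeeds; this is an assumption made for illustration and may differ from the discounting procedure used in the analysis. The function names and all numerical values below are hypothetical placeholders, not estimates from this study or from the published literature.

```python
def discounted_return_rate(post_encounter_kcal_per_hr, success_probability):
    """Post-encounter return rate scaled by the chance that a pursuit succeeds."""
    if not 0.0 <= success_probability <= 1.0:
        raise ValueError("success_probability must lie between 0 and 1")
    return post_encounter_kcal_per_hr * success_probability


def rank_by_discounted_rate(prey):
    """Return (name, discounted rate) pairs sorted from highest to lowest rate."""
    rates = [
        (name, discounted_return_rate(rate, p_success))
        for name, rate, p_success in prey
    ]
    return sorted(rates, key=lambda pair: pair[1], reverse=True)


# Hypothetical values only: (prey, kcal per hour after encounter, P(success)).
HYPOTHETICAL_PREY = [
    ("artiodactyl", 20000.0, 0.2),
    ("hare", 9000.0, 0.8),
]

# Ranking on the undiscounted rate alone puts the larger animal first.
undiscounted = sorted(HYPOTHETICAL_PREY, key=lambda row: row[1], reverse=True)
print("undiscounted order:", [name for name, _, _ in undiscounted])

# Once failure is accounted for, the order reverses in this example.
for name, rate in rank_by_discounted_rate(HYPOTHETICAL_PREY):
    print(f"{name}: {rate:.0f} kcal/hr (discounted)")
```

With these placeholder numbers the smaller prey outranks the larger one after discounting, which is the kind of rank reversal the paragraph above describes. Different success probabilities would give a different ordering.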

The PreyCM is a robust tool for predicting subsistence choices, but some of the original empirical applications of foraging theory among hunters and gatherers produced quantitative evidence challenging the underlying logic of the model (Hames 1979; Winterhalder 1981; Hill et al. 1987; Lupo 2007). Early applications of the model to the ethnographic record identified the potential influence of nonenergetic currencies on resource choice. Hill et al. (1987), for example, presented data showing that energetic efficiency does not always predict resource choice among the Aché, and that differences in macronutritional composition between resources might be an important factor guiding food choice. Additionally, numerous ethnographic observations demonstrate that some hunters consistently pursue high-risk, costly, or seemingly wasteful hunting opportunities even when less costly and more reliable alternatives are available (e.g., Hawkes et al. 1991; Bliege Bird et al. 2001; Lupo and Schmitt 2002; Bird et al. 2009). These pursuits are viewed as costly signals aimed at enhancing non-consumptive benefits such as attracting political alliances, friendships, and other sociopolitical advantages. Moreover, there are a variety of different circumstances where hunter-gatherers intentionally alter their environments (e.g., using fire) in ways that enhance or maintain productivity of certain resources, including those that would be considered low ranked (e.g., Winterhalder and Lu 1997; Smith and Wishnie 2000; Bird et al. 2005).

The prehistoric acquisition of artiodactyls and leporids in the Great Basin provided additional benefits beyond energy that potentially influenced the valuation and subsequent rankings of these prey. In ethnographic and historic records of indigenous peoples, artiodactyls and leporids are frequently mentioned as prey exploited for protein, hide, and other products such as bone and teeth (e.g., Simpson 1876; Steward 1938; Lowie 1939; Downs 1966; Fowler 1992). Among these, hides and pelts may have been the most important non-consumptive resource, especially for their use in garments and coverings. Beyond their value as a low-risk consumable resource, hares seasonally provided pelts that were used to manufacture essential clothing, robes, and/or blankets used by native peoples throughout the Great Basin and elsewhere. Jackrabbit drives to procure meat and pelts also figured prominently into fall festivals that often coincided with the harvest of other important resources, but also had a substantial social component(s) as participation in these hunts was likely an entrée into these larger events (Steward 1938). Artiodactyls were used for the same products and in similar contexts. Cooperative antelope drives, for example, were highly organized events associated with festivals that provided opportunities to gain hunting prestige. However, the value and use of the skin for garments differed between artiodactyls, such as deer, and jackrabbits. Steward (1941:245) reports that a high social value was placed on tailored skin clothing such as hide leggings and shirts made from larger-sized skins. For men, these items were an “advertisement of the man’s industry and skill as a hunter, thus affording slight prestige value” (also see Lowie 1924:217–218; Kelly 1932:106). 
For women, Steward (1941:245) states, “The most pretentious woman’s garment was a long gown made of two skins…which represented affluence and was preferred in the winter.” Larger-sized and high-quality artiodactyl skins were highly valued, especially in parts of the Great Basin where they were difficult to find (Kelly 1964:45; Steward 1938:45). In contrast, jackrabbit pelts were twined and fabricated into blankets and robes, which were considered essential items for everyday life (Footnote 2; see Palmer 1897:68; Gilmore 1953). Interestingly, Steward (1941:245) noted that “poor or unlucky hunters only wore breechclouts but still had a robe.” Twined jackrabbit ropes made from pelts were used as a type of currency and could be sold for cash or exchanged for fine buckskins (Footnote 3; Steward 1938:45). Rabbit skin robes or blankets were usually custom-made, curated, and highly valued by their owners as a versatile garment (Palmer 1897). Experimental studies show that rabbit pelts have superlative insulative and thermal properties that likely made them indispensable during the winter months (Yoder et al. 2005). Thus, while deer and jackrabbits both yielded skins, buckskin clothing was often considered a marker of prestige, while jackrabbit robes were viewed as essential garments.

In this analysis, we show that hare bones dominate prehistoric human subsistence detritus even when large-bodied ungulates were available and their encounter rates were ostensibly increasing. Beginning with the Lepus bone refuse deposited by the initial late Pleistocene human foragers at Bonneville Estates Rockshelter (Hockett 2007, 2015), hares dominated Bonneville basin subsistence assemblages and were a common and fundamental part of people’s lives. If hunting high-risk artiodactyls was a pursuit that conferred prestige and low-risk leporids provided a more predictable return, these circumstances would support a diversified labor system similar to the organization of labor reported in the ethnographic record. In the ethnographic and historic records of the Great Basin, women are described as hunting/collecting a variety of small prey as part of their subsistence regime (e.g., Ferris 1940:267; Fowler 1986; Fowler and Walter 1985; Leonard 1904:119). For example, Northern Paiute and Uintah Ute women, as well as children and adolescents, trapped small mammals (Fowler 1989; Steward 1970:138–139). Hares and other small mammals were not targeted only by women and children: the ethnographic record also mentions men hunting small game, and communal drives in which large numbers of hares (and other animals) were synchronously acquired often employed all available workers (e.g., Lowie 1939; Steward 1970; Fowler 1986). Conversely, artiodactyl hunting was largely pursued by men, with the exception of communal hunts in which women, children, and the elderly assisted in capturing and especially processing and transporting carcasses (see Kelly 1964; Stewart 1941). While it is difficult to ascribe task group composition to particular archeological remains, some of the sites and jackrabbit remains reported here may have been the result of hunting by women and children. 
As noted above, some of the open sites contained ground stone and ceramics that were likely related to women’s subsistence activities, but it is also probable that many of these site assemblages represent the acquisition of a staple resource by any and all members of the population.

Finally, current approaches to interpreting prehistoric prey acquisition focus on estimating the encounter rates with (and relative abundances of) high-value prey such as artiodactyls from limited fecal and skeletal data collected from sheltered contexts (see Grayson 2011). All zooarcheological assemblages are influenced to some degree by taphonomic processes and many of the well-dated and available assemblages have not undergone systematic taphonomic analysis. Even more problematic is the fact that, with few exceptions, most of the available sites with well-dated faunal assemblages are from sheltered contexts where the abundances of prey bones may be tied to the frequency and degree to which those sites were used by people, but may not reflect the abundances of the prey on the landscape (Grayson 2011; also see Speth 2012). Speth (2012) has noted that the frequent disparities in faunal abundances between synchronously occupied cave and open sites may be revealing different aspects of the diet breadth. A good example of this phenomenon is reflected in the Fremont Period subsistence residues from Camels Back Cave; while burned and broken jackrabbit bones are present, the assemblage contains abundant artiodactyl bones (Table 2), whereas neighboring open sites dating to the period contain only the remains of hares. In the Bonneville basin, virtually all interpretations of artiodactyl abundances are based on cave sites, especially Hogup and Homestead caves. One of the few exceptions is from the Little Boulder Basin sites with occupations spanning the last 3000 years in the Humboldt River drainage, which is located some 300 km away from the Bonneville basin (Broughton et al. 2011). Data from open contexts reported here greatly expand the available data set on faunal abundances and reveal a very different dimension of subsistence and the diet breadth.

Conclusions

In the prehistoric Bonneville basin, jackrabbits were exploited throughout the Holocene and were a dominant meat source during middle Holocene desertification and particularly during the more mesic Fremont Period, when they provided nutrition to expanding populations (e.g., Madsen and Simms 1998). Considering the longitudinal pattern of exploitation spanning the last 8300 years, the wealth of hare-rich Fremont faunal assemblages reflects the continuation of a stable exploitation pattern and not necessarily declines in foraging efficiency. Rather, the hare was an integral part of everyday life for regional peoples that provided food, adornment, and vital warmth, and that doubtless served as the center of many conversations and familial ties.

Abundances of artiodactyls and leporids are often interpreted within the context of the PreyCM, a useful quantitative and highly flexible analytic tool with demonstrable explanatory power. The PreyCM is one of several broad, evolutionary-based models that allow researchers to change goals, constraints, and currencies (Bird and O’Connell 2006; Lupo 2007; Codding and Bird 2015). As such, the model has the potential to illuminate many different aspects of human foraging behavior. We believe that future applications of the model to the zooarcheological record require additional data. These data should be generated through experimentation and/or simulation modeling on the risks and pursuit and processing costs of acquiring large and small game using different hunting technologies and techniques. Comprehensive ecological models could also provide temporal views of taxonomic abundances on regional landscapes. Moreover, and while some assumptions necessarily remain, zooarcheologists need to carefully consider taphonomic processes and quantitative methods (e.g., Schmitt and Lupo 1995; Hockett 1996; Cannon 2013; Fisher 2018) and examine age profiles and human processing patterns to infer the capture technique(s) (see especially Jones 2006). It is time for researchers to revisit exactly what is being measured in these applications and how those measurements are derived.

Notes

“Rabbits jumped” is the number of rabbits seen, not necessarily the number targeted. Some rabbits were not targeted because they jumped when the hunters let their dogs run for exercise. Others were not pursued because of thick brush coverage (i.e., poor shot advantage) and other variables (South Carolina rabbit hunter study, Department of Natural Resources 2011–2012:5). See also South Carolina rabbit hunter studies, Department of Natural Resources (2009–2010, 2018–2019).

Gilmore (1953:152–153) states, “Natural hemp, made from the bark of plants, was used as thread in sewing these small strips of fur together. A single blanket has been known to have required...one-hundred skins in its making. However, most blankets were custom-made, being made to reach from the ground to the top of the head of the person for whom the blanket was being made. Rabbit blankets lasted many years and continued to be warm as long as they were kept clean. Rabbit fur was so valuable… that it was frequently used instead of coin in purchasing articles and for wagering at gambling…”

Steward (1938:45) reports that 20 lengths of roped or twined rabbit pelt sold for about $5 and could be used to purchase a buckskin.

References

Alvard MS (1993) Testing the “ecologically noble savage” hypothesis: interspecific prey choice by Piro hunters of Amazonian Peru. Hum Ecol 21:355–387

Best T (1996) Lepus californicus. Mammalian Species 530:1–10

Bird DW, Bliege Bird R, Codding BF (2009) In pursuit of mobile prey: Martu hunting strategies and archaeofaunal interpretation. Am Antiq 74:3–29

Bird DW, Bliege Bird R, Parker CH (2005) Aboriginal burning regimes and hunting strategies in Australia’s western desert. Hum Ecol 33:443–464

Bird DW, Codding BF, Bliege Bird R, Zeanah DW (2012) Risky pursuits: Martu hunting and the effects of prey mobility: reply to Ugan and Simms. Am Antiq 77:186–194

Bird DW, Codding BF, Bliege Bird R, Zeanah DW, Taylor CJ (2013) Megafauna in a continent of small game: archaeological implications of Martu camel hunting in Australia’s western desert. Quat Int 297:155–166

Bird DW, O’Connell JF (2006) Behavioral ecology and archaeology. J Archaeol Res 14:143–188

Bliege Bird R, Smith EA, Bird DW (2001) The hunting handicap: costly signaling in male foraging strategies. Behav Ecol Sociobiol 50:9–19

Bliege Bird R, Codding BF, Bird DW (2009) Determinants of gendered foraging and production inequalities among Martu. Hum Nat 20:105–129

Boydston GA, Gore HG (1987) Archery wounding loss in Texas. Texas Parks and Wildlife Department, Austin

Broughton JM (1994) Late Holocene resource intensification in the Sacramento Valley, California: the vertebrate evidence. J Archaeol Sci 21:501–514

Broughton JM (2002) Prey spatial structure and behavior affect archaeological tests of optimal foraging models: examples from the Emeryville Shellmound vertebrate fauna. World Archaeol 34:60–83

Broughton JM, Bayham FE (2003) Showing off, foraging models, and the ascendance of large game hunting in the California middle Archaic. Am Antiq 68:783–789

Broughton JM, Byers DA, Bryson RA, Eckerle W, Madsen DB (2008) Did climatic seasonality control Late Quaternary artiodactyl densities in western North America? Quat Sci Rev 27:1916–1937

Broughton JM, Cannon MD, Bayham FE, Byers DA (2011) Prey body size and ranking in zooarchaeology: theory, empirical evidence, and applications from the northern Great Basin. Am Antiq 76:403–428

Buck P, Hockett B, Graf K, Goebel T, Griego G, Perry L, Dillingham E (2002) Oranjeboom Cave: a single component Eastgate site in northeastern Nevada. Utah Archaeol 15:99–112

Butler VL, Campbell SK (2004) Resource intensification and resource depression in the Pacific Northwest of North America: a zooarchaeological review. J World Prehistory 18:327–405

Byers D, Broughton JM (2004) Holocene environmental change, artiodactyl abundances, and human hunting strategies in the Great Basin. Am Antiq 69:235–255

California Department of Fish and Game, Upland Game/Waterfowl Program. Report of the [1996, 1999–2008, 2010] game take hunter survey, Sacramento. https://nrm.dfg.ca.gov/

Cannon MD (2003) A model of central place forager prey choice and an application to faunal remains from the Mimbres Valley, New Mexico. J Anthropol Archaeol 22:1–25

Cannon MD (2013) NISP, Bone fragmentation, and the measurement of taxonomic abundance. J Archaeol Method Theory 20:397–419

Carbyn LN, Trottier T (1988) Descriptions of wolf attacks on bison calves in Wood Buffalo National Park. Arct 41:297–302

Chapman JA, Willner GR (1978) Sylvilagus audubonii. Mammalian Species 106:1–4

Codding BF, Bird DW (2015) Behavioral ecology and the future of archaeological science. J Archaeol Sci 56:9–20

Codding BF, Bliege Bird R, Bird DW (2011) Provisioning offspring and others: risk–energy trade-offs and gender differences in hunter–gatherer foraging strategies. Proc Royal Soc B 278:2502–2509

Codding BF, Porcasi JF, Jones TL (2010) Explaining prehistoric variation in the abundance of large prey: a zooarchaeological analysis of deer and rabbit hunting along the Pecho Coast of central California. J Anthropol Archaeol 29:47–61

Creel S, Winnie J Jr, Maxwell B, Hamlin K, Creel M (2005) Elk alter habitat selection as an antipredator response to wolves. Ecol 86:3387–3397

Croft RL (1963) A survey of Georgia bowhunters. Proc Southeast Assoc of Game and Fish Comm 17:155–163

Dalley GF (1976) Swallow shelter and associated sites. Anthropological Papers no. 96. University of Utah Press, Salt Lake City

Ditchkoff SS, Welch ER Jr, Lochmiller RL, Masters RE, Starry WR, Dinkines WC (1998) Wounding rates of white-tailed deer with traditional archery equipment. Proc Annu Conf Southeast Assoc Fish and Wildl Agencies 52:244–248

Downing RL (1971) Comparison of crippling losses of white-tailed deer caused by archery, buckshot, and shotgun slugs. Proc Annu Conf Southeast Assoc Game and Fish Comm 25:77–82

Downs JF (1966) The two worlds of the Washo: an Indian tribe of California and Nevada. Holt, Rinehart and Winston, New York

Durrant SD (1970) Faunal remains as indicators of neothermal climates at Hogup Cave. In: Aikens CM Hogup Cave. Anthropological Papers no. 93. University of Utah Press, Salt Lake City, pp 241–245

Elston RG, Zeanah DW, Codding BF (2014) Living outside the box: an updated perspective on diet breadth and sexual division of labor in the prearchaic Great Basin. Quat Int 352:200–211

Ferris WA (1940) Life in the Rocky Mountains: a diary of wanderings on the sources of the rivers Missouri, Columbia and Colorado from February 1830 to November 1831. Old West Publishing, Denver

Fisher JL (2018) Influence of bone survivorship on taxonomic abundance measures. In: Giovas CM, LeFebvre MJ (eds) Zooarchaeology in practice: case studies in methodology and interpretation in archaeofaunal analysis. Springer, Cham, pp 127–149

Fowler CS (1986) Subsistence. In: d’Azevedo WG (ed) Handbook of North American Indians, Great Basin, vol 11. Smithsonian Institution, Washington DC, pp 64–97

Fowler CS (1989) Willard Z. Park’s ethnographic notes on the Northern Paiute of western Nevada, 1933–1944. Anthropological Papers no. 114. University of Utah Press, Salt Lake City

Fowler CS (1992) In the shadow of Fox Peak: an ethnography of the cattail-eater Northern Paiute people of Stillwater Marsh. U.S. Fish and Wildlife Service Cultural Resource Series no. 5. U.S. Government Printing Office, Washington DC

Fowler CS, Walter NP (1985) Harvesting pandora moth larvae with the Owens Valley Paiute. J Calif Gt Basin Anthropol 7:155–165

Garland LE (1972) Bowhunting for deer in Vermont: some characteristics of the hunters, the hunt, and the harvest. Vermont Fish and Game Department, Waterbury

Garland T Jr (1983) The relation between maximal running speed and body mass in terrestrial mammals. J Zool 199:157–170

Geist V (1998) Deer of the world: their evolution, behavior, and ecology. Stackpole Books, Mechanicsburg, Pennsylvania

Gilmore HW (1953) Hunting habits of the early Nevada Paiutes. Am Anthropol 55:148–153

Gladfelter HL, Kienzler JM, Koehler KJ (1983) Effects of compound bow use on deer hunter success and crippling rates in Iowa. Wildl Soc Bull 11:7–12

Grayson DK (1983) The paleontology of Gatecliff Shelter: small mammals. In: Thomas DH The Archaeology of Monitor Valley 2, Gatecliff Shelter. Anthropological Papers 59(1). American Museum of Natural History, New York, pp 99–126

Grayson DK (1988) Danger Cave, Last Supper Cave, and Hanging Rock Shelter: the faunas. Anthropological Papers 66(1). American Museum of Natural History, New York

Grayson DK (1990) The James Creek Shelter mammals. In: Elston RG, Budy EE (eds) The archaeology of James Creek Shelter. Anthropological Papers no. 115. University of Utah Press, Salt Lake City, pp 87–98

Grayson DK (2000) Mammalian responses to middle Holocene climatic change in the Great Basin of the western United States. J Biogeogr 27:181–192

Grayson DK (2006) Holocene bison in the Great Basin, western USA. The Holocene 16:913–925

Grayson DK (2011) The Great Basin: a natural prehistory. University of California Press, Berkeley

Hall ER (1946) Mammals of Nevada. University of California Press, Berkeley

Hames RB (1979) A comparison of the efficiencies of the shotgun and the bow in neotropical forest hunting. Hum Ecol 7:219–252

Hampton JO, Forsyth DM, Mackenzie D, Stuart I (2015) A simple quantitative method for assessing animal welfare outcomes in terrestrial wildlife shooting: the European rabbit as a case study. Animal Welfare 24:307–317

Harper KT (1986) Historical environments. In: d’Azevedo WG (ed) Handbook of North American Indians, Great Basin, vol 11. Smithsonian Institution, Washington DC, pp 51–63

Hawkes K, Bliege Bird R (2002) Showing off, handicap signaling, and the evolution of men’s work. Evol Anthropol 11:58–67

Hawkes K, O’Connell JF (1985) Optimal foraging models and the case of the !Kung. Am Anthropol 87:401–405

Hawkes K, O’Connell JF, Blurton Jones N (1991) Hunting income patterns among the Hadza: big game, common goods, foraging goals and the evolution of the human diet. Philos Trans Royal Soc B 334(1270):243–251

Hawkes K, O’Connell JF, Coxworth JE (2010) Family provisioning is not the only reason men hunt: a comment on Gurven and Hill. Curr Anthropol 51:259–264

Hill K (1988) Macronutrient modifications of optimal foraging theory: an approach using indifference curves applied to some modern foragers. Hum Ecol 16:157–197

Hill K, Hawkes K (1983) Neotropical hunting among the Ache of eastern Paraguay. In: Hames RB, Vickers WT (eds) Adaptations of native Amazonians. Academic Press, Orlando, pp 139–188

Hill K, Kaplan H, Hawkes K, Hurtado AM (1987) Foraging decisions among Ache hunter-gatherers: new data and implications for optimal foraging models. Ethol Sociobiol 8:1–36

Hockett B (1996) Corroded, thinned and polished bones created by golden eagles (Aquila chrysaetos): taphonomic implications for archaeological interpretations. J Archaeol Sci 23:587–591

Hockett B (1998) Sociopolitical meaning of faunal remains from Baker Village. Am Antiq 63:289–302

Hockett B (2005) Middle and late Holocene hunting in the Great Basin: a critical review of the debate and future prospects. Am Antiq 70:713–731

Hockett B (2007) Nutritional ecology of late Pleistocene to middle Holocene subsistence in the Great Basin: zooarchaeological evidence from Bonneville Estates Rockshelter. In: Graf KE, Schmitt DN (eds) Paleoindian or paleoarchaic? Great Basin human ecology at the Pleistocene-Holocene transition. University of Utah Press, Salt Lake City, pp 204–230

Hockett B (2015) The zooarchaeology of Bonneville Estates Rockshelter: 13,000 years of Great Basin hunting strategies. J Archaeol Sci: Rep 2:291–301

Hockett B, Creger C, Smith B, Young C, Carter J, Dillingham E, Crews R, Pellegrini E (2013) Large-scale trapping features from the Great Basin, USA: the significance of leadership and communal gatherings in ancient foraging societies. Quat Int 297:64–78

Hunt JM, Rhode D, Madsen DB (2000) Homestead Cave flora and non-vertebrate fauna. In: Madsen DB Late Quaternary paleoecology in the Bonneville basin. Bulletin 130. Utah Geological Survey, Salt Lake City, pp 47–58

Janetski JC (1997) Fremont hunting and resource intensification in the eastern Great Basin. J Archaeol Sci 24:1075–1088

Jones EL (2006) Prey choice, mass collecting, and the wild European rabbit (Oryctolagus cuniculus). J Anthropol Archaeol 25:275–289

Jones GT, Beck C (2012) The emergence of the desert archaic in the Great Basin. In: Bousman CB, Vierra BJ (eds) From the Pleistocene to the Holocene: human organization and cultural transformations in prehistoric North America. Texas A&M University Press, College Station, pp 105–124

Jones TL, Porcasi JF, Gaeta J, Codding BF (2008) The Diablo Canyon fauna: a coarse-grained record of trans-Holocene foraging from the central California coast. Am Antiq 73:289–316

Kelly IT (1932) Ethnography of the Surprise Valley Paiute. University of California Publications in American Archaeology and Ethnology 31(3). University of California Press, Berkeley, pp 67–210

Kelly IT (1964) Southern Paiute ethnography. Anthropological Papers no. 69. University of Utah Press, Salt Lake City

Koster JM (2007) Hunting and subsistence among the Mayangna and Miskito of Nicaragua’s Bosawas Biosphere Reserve. Ph.D. dissertation, Pennsylvania State University, State College

Krausman PR, Bowyer RT (2003) Mountain sheep (Ovis canadensis and O. dalli). In: Feldhamer GA, Thompson BC, Chapman JA (eds) Wild mammals of North America: biology, management, and conservation, 2nd edn. Johns Hopkins University Press, Baltimore, pp 1095–1115

Krueger WJ (1995) Aspects of wounding of white-tailed deer by bowhunters. Ph.D. dissertation, West Virginia University, Morgantown

Lee RB (1979) The !Kung San: men, women, and work in a foraging society. Cambridge University Press, Cambridge

Leonard Z (1904) Leonard’s narrative: adventure of Zenas Leonard, fur trader and trapper, 1831–1836. Wagner WF (ed). Burrows Brothers, Cleveland

Lindstedt SL, Hokanson JF, Wells DJ, Swain SD, Hoppeler H, Navarro V (1991) Running energetics in the pronghorn antelope. Nat 353(6346):748

Louderback LA, Rhode D (2009) 15,000 years of vegetation change in the Bonneville basin: the Blue Lake pollen record. Quat Sci Rev 28:308–326

Lowie RH (1909) The Northern Shoshone. Anthropological Papers 2(2). American Museum of Natural History, New York, pp 165–306

Lowie RH (1924) Notes on Shoshonean ethnography. Anthropological Papers 20(3). American Museum of Natural History, New York, pp 185–324

Lowie RH (1939) Ethnographic notes on the Washo. Publications in American Archaeology and Ethnology 36. University of California, Berkeley, pp 301–352

Lubinski PM, Herren V (2000) An introduction to pronghorn biology, ethnography and archaeology. Plains Anthropol 45:3–11

Lukacs PM, Gude JA, Russell RE, Ackerman BB (2011) Evaluating cost-efficiency and accuracy of hunter harvest survey designs. Wildl Soc Bull 35:430–437

Lupo KD (1996) The historical occurrence and demise of bison in northern Utah. Utah Hist Q 64:168–180

Lupo KD (2007) Evolutionary foraging models in zooarchaeological analysis: recent applications and future challenges. J Archaeol Res 15:143–189

Lupo KD, Schmitt DN (1997) On late Holocene variability in bison populations in the northeastern Great Basin. J Calif Gt Basin Anthropol 19:50–69

Lupo KD, Schmitt DN (2002) Upper Paleolithic net-hunting, small prey exploitation, and women’s work effort: a view from the ethnographic and ethnoarchaeological record of the Congo Basin. J Archaeol Method Theory 9:147–179

Lupo KD, Schmitt DN (2005) Small prey hunting and zooarchaeological measures of taxonomic diversity and abundance: ethnoarchaeological evidence from Central African forest foragers. J Anthropol Archaeol 24:335–353

Lupo KD, Schmitt DN (2016) When bigger is not better: the economics of hunting megafauna and its implications for Plio-Pleistocene hunter-gatherers. J Anthropol Archaeol 44:185–197

Lyman RL (2003) Pinniped behavior, foraging theory, and the depression of metapopulations and nondepression of a local population on the southern northwest coast of North America. J Anthropol Archaeol 22:376–388

Madsen DB (1989) Exploring the Fremont. Occasional Publication No. 8. Utah Museum of Natural History, Salt Lake City

Madsen DB (2000) Late Quaternary paleoecology in the Bonneville basin. Bulletin 130. Utah Geological Survey, Salt Lake City

Madsen DB (2007) The paleoarchaic to archaic transition in the Great Basin. In: Graf KE, Schmitt DN (eds) Paleoindian or paleoarchaic? Great Basin human ecology at the Pleistocene-Holocene transition. University of Utah Press, Salt Lake City, pp 3–20

Madsen DB, Oviatt CG, Young DC, Page D (2015) Old River Bed delta geomorphology and chronology. In: Madsen DB, Schmitt DN, Page D (eds) The paleoarchaic occupation of the Old River Bed delta. Anthropological Papers no. 128. University of Utah Press, Salt Lake City, pp 30–60

Madsen DB, Rhode D, Grayson DK, Broughton JM, Livingston SD, Hunt JM, Quade J, Schmitt DN, Shaver MW III (2001) Late Quaternary environmental change in the Bonneville basin, western USA. Palaeogeogr Palaeoclimatol Palaeoecol 167:243–271

Madsen DB, Schmitt DN (1998) Mass collecting and the diet breadth model: a Great Basin example. J Archaeol Sci 25:445–455

Madsen DB, Schmitt DN (2005) Buzz-Cut Dune and Fremont foraging at the margin of horticulture. Anthropological Papers no. 124. University of Utah Press, Salt Lake City

Madsen DB, Simms SR (1998) The Fremont complex: a behavioral perspective. J World Prehistory 12:255–336

Martin EP, Coltrain JB, Codding BF (2017) Revisiting Hogup Cave, Utah: insights from new radiocarbon dates and stratigraphic analysis. Am Antiq 82:301–324

Marwitt JP (1970) Median Village and Fremont culture regional variation. Anthropological Papers no. 95. University of Utah Press, Salt Lake City

McCabe RE, Reeves HM, O’Gara BW (2010) Prairie ghost: pronghorn and human interaction in early America. University Press of Colorado, Boulder

McPhillips KB, Linder RL, Wentz WA (1985) Nonreporting, success, and wounding by South Dakota deer bowhunters - 1981. Wildl Soc Bull 13:395–398

Mitchell GJ (1971) Measurements, weights, and carcass yields of pronghorns in Alberta. J Wildl Manag 35:76–85

O’Gara BW (1978) Antilocapra americana. Mammalian Species 90:1–7

Orr RT (1940) The rabbits of California. Occasional papers 19. California Academy of Sciences, San Francisco

Page D, Schmitt DN (2012) A class III cultural resource inventory of 2,693 acres in support of training area expansion, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Page D, Schmitt DN (2014a) A class III cultural resource inventory and site protection measures in support of testing and training, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Page D, Schmitt DN (2014b) A class III cultural resource inventory of Air Force use areas, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Page D, Schmitt DN (2014c) A class III cultural resource inventory in support of phase 7 of the PD-Tess range modernization project, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Page D, Schmitt DN, Rhode D (2014) A class III cultural resource inventory of the Baker Strong Point parcel in support of testing and training, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Palmer TS (1897) The jack rabbits of the United States. U.S. Department of Agriculture Bulletin no. 8. Government Printing Office, Washington DC

Pedersen MA, Berry SM, Bossart JC (2008) Wounding rates of white-tailed deer with modern archery equipment. Proc Annu Conf Southeast Assoc Fish Wildl Agencies 62:31–34

Quimby DC, Johnson DE (1951) Weights and measurements of Rocky Mountain elk. J Wildl Manag 15:57–62

Rhode D (2016) Quaternary vegetation changes in the Bonneville basin. In: Oviatt CG, Shroder JF (eds) Lake Bonneville: a scientific update. Developments in Earth Surface Processes 20. Elsevier, Amsterdam, pp 420–441

Rhode D, Page D, Schmitt DN (2011) Archaeological data recovery at site 42To0567 in the Wig Mountain training area, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Rhode D, Page D, Schmitt DN (2014) A class III cultural resource inventory of the White Sage training area, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Ruth CR, Simmons H (1999) Answering questions about guns, ammo, and man’s best friend. Proc Annu Meet Southeast Deer Study Group 22:28–29

Schmitt DN (2016) Faunal analysis. In: Beck RK (ed) Archaeological investigations for the Sigurd to Red Butte No. 2–345KV transmission project in Beaver, Iron, Millard, Sevier, and Washington Counties, Utah. Volume II: prehistoric synthesis. Bureau of Land Management, Cedar City, pp 9-1–9-16

Schmitt DN, Juell KE (1994) Toward the identification of coyote scatological faunal accumulations in archaeological contexts. J Archaeol Sci 21:249–262

Schmitt DN, Lupo KD (1995) On mammalian taphonomy, taxonomic diversity, and measuring subsistence data in zooarchaeology. Am Antiq 60:496–514

Schmitt DN, Lupo KD (2005) The Camels Back Cave mammalian fauna. In: Schmitt DN, Madsen DB Camels Back Cave. Anthropological Papers no. 125. University of Utah Press, Salt Lake City, pp 136–176

Schmitt DN, Lupo KD (2012) The Bonneville Estates Rockshelter rodent fauna and changes in late Pleistocene-middle Holocene climates and biogeography in the northern Bonneville basin, USA. Quat Res 78:95–102

Schmitt DN, Lupo KD (2016) Changes in late Quaternary mammalian biogeography in the Bonneville basin. In: Oviatt CG, Shroder JF (eds) Lake Bonneville: a scientific update. Developments in Earth Surface Processes 20. Elsevier, Amsterdam, pp 352–370

Schmitt DN, Madsen DB (2005) Camels Back Cave. Anthropological Papers no. 125. University of Utah Press, Salt Lake City

Schmitt DN, Madsen DB, Lupo KD (2004) The worst of times, the best of times: jackrabbit hunting by middle Holocene human foragers in the Bonneville basin of western North America. In: Mondini M, Muñoz S, Wickler S (eds) Colonization, migration, and marginal areas: a zooarchaeological approach. Oxbow Books, Oxford, pp 86–95

Schmitt DN, Page D (2011) A class III cultural resource inventory in support of phase 5 of the PD-Tess range modernization project, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Schmitt DN, Page D, Memmott M (2010) A class III cultural resource inventory for phase 1 of the PD-TESS project, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Schmitt DN, Page D, Wazaney B, Dalldorf G (2012) A class III cultural resource inventory of the UAS Loiter area north of MAAF, U.S. Army Dugway Proving Ground, Tooele County, Utah. Desert Research Institute, Reno

Severinghaus CW (1963) Effectiveness of archery in controlling deer abundance on the Howland Island Game Management Area. NY Fish Game J 10:186–193

Shackleton DM (1985) Ovis canadensis. Mammalian Species 230:1–9

Sharrock FW, Marwitt JP (1967) Excavations at Nephi, Utah, 1965–1966. Anthropological Papers no. 88. University of Utah Press, Salt Lake City

Simms SR (1984) Aboriginal Great Basin foraging strategies: an evolutionary analysis. Ph.D. dissertation, University of Utah, Salt Lake City

Simms SR (1985) Acquisition cost and nutritional data on Great Basin resources. J Calif Gt Basin Anthropol 7:117–126

Simms SR (1987) Behavioral ecology and hunter-gatherer foraging: an example from the Great Basin. BAR International Series no. 381. British Archaeological Reports, Oxford

Simms SR (2008) Ancient peoples of the Great Basin and Colorado Plateau. Left Coast Press, Walnut Creek, California

Simms SR, Schmitt DN, Jensen K (1999) Playa View Dune: a mid-Holocene campsite in the Great Salt Lake Desert. Utah Archaeol 12:31–49

Simpson JH (1876) Report of explorations across the Great Basin of the territory of Utah for a direct wagon-route from Camp Floyd to Genoa, in Carson Valley, in 1859. U.S. Government Printing Office, Washington, DC

Smith EA (1980) Evolutionary ecology and the analysis of human foraging behavior: an Inuit example from the east coast of Hudson Bay. Ph.D. dissertation, Cornell University, Ithaca

Smith EA (1991) Inujjuamiut foraging strategies: evolutionary ecology of an arctic hunting economy. Aldine de Gruyter, Hawthorne, New York

Smith EA (2004) Why do good hunters have higher reproductive success? Hum Nat 15:343–364

Smith EA (2013) Agency and adaptation: new directions in evolutionary anthropology. Annu Rev Anthropol 42:103–120

Smith EA, Wishnie M (2000) Conservation and subsistence in small-scale societies. Annu Rev Anthropol 29:493–524

Smith GM, Barker P, Hattori EM, Raymond A, Goebel T (2013) Points in time: direct radiocarbon dates on Great Basin projectile points. Am Antiq 78:580–594

Sosis R (2000) Costly signaling and torch fishing on Ifaluk atoll. Evol Hum Behav 21:223–244

South Carolina Rabbit Hunter Study [2009-2010, 2011-2012, 2018-2019]. South Carolina Department of Natural Resources, Columbia https://dc.statelibrary.sc.gov/

Speth JD (2012) The paleoanthropology and archaeology of big-game hunting: protein, fat, or politics? Springer, New York

Steward JH (1938) Basin-plateau aboriginal sociopolitical groups. Bureau of American Ethnology Bulletin 120. Smithsonian Institution, Washington DC

Steward JH (1941) Culture element distributions, XIII: Nevada Shoshone. Anthropological Records 4(2). University of California, Berkeley, pp 209–360

Steward JH (1970) The foundations of basin-plateau Shoshonean society. In: Swanson EH (ed) Languages and cultures of western North America: essays in honor of Sven S. Liljeblad. Idaho State University Press, Pocatello, pp 113–151

Stewart OC (1941) Culture element distributions, XIV: Northern Paiute. Anthropological Records 4(3). University of California, Berkeley, pp 361–446

Stiner MC, Munro ND (2002) Approaches to prehistoric diet breadth, demography, and prey ranking systems in time and space. J Archaeol Method Theory 9:181–214

Stiner MC, Munro ND, Surovell TA (2000) The tortoise and the hare: small-game use, the broad-spectrum revolution, and Paleolithic demography. Curr Anthropol 41:39–73

Stormer FA, Kirkpatrick CM, Hoekstra TW (1979) Hunter-inflicted wounding of white-tailed deer. Wildl Soc Bull 7:10–16

Swanson R (2011) A comprehensive analysis of the Swallow Shelter (42BO268) faunal assemblage. Master’s thesis, Washington State University, Pullman

Szuter CR, Bayham FE (1989) Sedentism and prehistoric animal procurement among desert horticulturalists of the North American southwest. In: Kent S (ed) Farmers as hunters: the implications of sedentism. Cambridge University Press, Cambridge, pp 80–95

Ugan A (2005) Climate, bone density, and resource depression: what is driving variation in large and small game in Fremont archaeofaunas? J Anthropol Archaeol 24:227–251

Ugan A, Simms S (2012) On prey mobility, prey rank, and foraging goals. Am Antiq 77:179–185

Valdez R, Krausman PR (eds) (1999) Mountain sheep of North America. University of Arizona Press, Tucson

Wiessner P (2002) Hunting, healing, and hxaro exchange: a long-term perspective on !Kung (Ju/’hoansi) large-game hunting. Evol Hum Behav 23:407–436

Wigand PE, Rhode D (2002) Great Basin vegetational history and aquatic systems: the last 150,000 years. In: Hershler R, Madsen DB, Currey DR (eds) Great Basin aquatic systems history. Smithsonian Contributions to Earth Sciences 33. Smithsonian Institution Press, Washington, DC, pp 309–367

Winterhalder B (1981) Foraging strategies in the boreal forest: an analysis of Cree hunting and gathering. In: Winterhalder B, Smith EA (eds) Hunter-gatherer foraging strategies: ethnographic and archaeological analyses. University of Chicago Press, Chicago, pp 66–98

Winterhalder B, Lu F (1997) A forager-resource population ecology model and implications for indigenous conservation. Conserv Biol 11:1354–1364

Wolverton S (2005) The effects of the Hypsithermal on prehistoric foraging efficiency in Missouri. Am Antiq 70:91–106

Wolverton S, Nagaoka L, Dong P, Kennedy JH (2012) On behavioral depression in white-tailed deer. J Archaeol Method Theory 19:462–489

Yoder D, Blood J, Mason R (2005) How warm were they? Thermal properties of rabbit skin robes and blankets. J Calif Gt Basin Anthropol 25:55–68

Yost JA, Kelly P (1983) Shotguns, blowguns and spears: the analysis of technological efficiency. In: Hames RB, Vickers WT (eds) Adaptive responses of native Amazonians. Academic Press, Orlando, pp 189–224

Acknowledgments

Thanks to two anonymous reviewers who provided useful critiques and suggestions and to Britt Starkovich and Tiina Manne for inviting us to participate in the 2019 Albuquerque symposium and this special issue.

Additional information

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This article is part of the Topical Collection on Do good things come in small packages?

Electronic supplementary material

ESM 1

(DOCX 20.4 kb)

Cite this article

Lupo, K.D., Schmitt, D.N. & Madsen, D.B. Size matters only sometimes: the energy-risk trade-offs of Holocene prey acquisition in the Bonneville basin, western USA. Archaeol Anthropol Sci 12, 160 (2020). https://doi.org/10.1007/s12520-020-01146-7

DOI: https://doi.org/10.1007/s12520-020-01146-7