Abstract

The prediction of foundation pit deformation is of great significance for ensuring the safety of neighboring structures and the efficiency of construction. The back-propagation neural network (BPNN) is one of the most successful models implemented for data prediction. However, because the measured data of foundation pits are small in quantity and highly noisy, an accurate mapping relationship cannot be constructed by applying neural networks directly. In the present study, the main idea of the residual network (ResNet) was introduced into prediction, and the genetic algorithm (GA) and back propagation (BP) were coupled to develop a hybrid GA-ResNN training algorithm with global search capability. The dimension of the input data is doubled by overlapping and encoding the input data. The dependence of the neural network on the initial model can be reduced using GA and ResNet. Cases reported in the literature are used to demonstrate the effectiveness and accuracy of the proposed model.


Introduction

With the rapid development of urban construction, the number and scale of underground projects have risen sharply, and so has the number of accidents in deep foundation pits. Problems such as damage to foundation pits, casualties, economic losses, damage to surrounding buildings, and even collapse occur frequently, and they have caused unpredictable effects and losses to society. Therefore, deformation prediction during the construction of deep foundation pits is of great significance for ensuring the safety of neighboring structures and the efficiency of construction. Deformation prediction for foundation pit projects covers many items, and monitoring of, e.g., surrounding ground surface settlement, surrounding pipeline displacement, deformation of the enclosure structure, and bottom heave should be carried out in practice. Mathematical models for deformation prediction of deep foundation pits established by traditional theory suffer from low accuracy, which makes it difficult for them to meet the prediction accuracy requirements of deep foundation pit projects.

Currently, many methods are available for processing and predicting deformation data in the geosciences, including applications to foundation pits. Time series (Shen et al. 2013), gray system (Guo et al. 2015), regression statistical analysis (Arthur et al. 2015), Kalman filtering model (Boukharouba 2013), back propagation neural network (BPNN) (Lv et al. 2020), artificial neural network (ANN) (Riahi-Madvar and Seifi 2018; Riahi-Madvar et al. 2021a; Ghorbani et al. 2020, 2021), and adaptive network-based fuzzy inference system (ANFIS) (Riahi-Madvar et al. 2021b; Li and Cheng 2021) have been used in most cases to date. Among the various neural network models used for predicting deformation in foundation pits, the BPNN can map complex nonlinear problems and unknown relationships with a simple structure, and it has good nonlinear fitting and self-learning abilities (Wang et al. 2015). The weights and biases of a BPNN are calculated by gradient descent, which is a local search algorithm. BPNN has been widely used in practice, although drawbacks such as slow convergence and trapping in local extrema still exist (French et al. 1992). These drawbacks arise because the initial synaptic weights are generated randomly before the network is trained. Each time the training is repeated, the BP algorithm arrives at different final weights, so the trained networks differ in prediction performance and convergence speed.
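The dependence of BP training on its random starting point can be illustrated with a minimal sketch. It assumes a toy sine series (not the paper's data), a hypothetical function name `train_bp`, and a one-hidden-layer network with a tanh hidden layer and linear output trained by per-sample gradient descent. The same network trained on the same samples from different random initial weights ends with different training errors.

```python
import math
import random


def train_bp(samples, n_hidden=4, lr=0.05, epochs=300, seed=0):
    """Train a 1-hidden-layer BPNN (tanh hidden, linear output) by per-sample
    gradient descent from random initial weights; return the final training MSE."""
    rng = random.Random(seed)  # the seed fixes the random initial weights
    n_in = len(samples[0][0])
    W1 = [[rng.uniform(-1.0, 1.0) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    W2 = [rng.uniform(-1.0, 1.0) for _ in range(n_hidden)]
    b2 = rng.uniform(-1.0, 1.0)

    def forward(x):
        h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
        return h, sum(w * hj for w, hj in zip(W2, h)) + b2

    for _ in range(epochs):
        for x, y in samples:
            h, out = forward(x)
            err = out - y  # gradient of 0.5 * err**2 with respect to the output
            for j in range(n_hidden):
                delta = err * W2[j] * (1.0 - h[j] ** 2)  # error back-propagated to hidden unit j
                W2[j] -= lr * err * h[j]
                b1[j] -= lr * delta
                for i in range(n_in):
                    W1[j][i] -= lr * delta * x[i]
            b2 -= lr * err
    return sum((forward(x)[1] - y) ** 2 for x, y in samples) / len(samples)


# Hypothetical toy series: predict the next value from the previous three.
series = [math.sin(0.5 * t) for t in range(14)]
samples = [(series[t:t + 3], series[t + 3]) for t in range(len(series) - 3)]
for seed in range(3):
    print(seed, train_bp(samples, seed=seed))
```

Only the seed changes between runs, so any spread in the printed errors comes from the random initialization alone, which is the inconsistency that GA-based initialization aims to reduce.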

It is therefore necessary to improve the performance and global convergence of BPNN so as to minimize this inconsistency. Several nature-inspired algorithms that search for the global optimum have been hybridized with artificial intelligence models to improve their performance (Niu et al. 2018). For example, Cui et al. (2021) applied the particle swarm optimization (PSO) algorithm to optimize and improve the GM(1, 1) model and the BP network model, and Madvar et al. (2020) proposed an ANN hybridized with PSO and cat swarm optimization (CSO) algorithms. The genetic algorithm (GA), a gradient-free global search technique inspired by evolutionary processes, and the residual network, a framework that reformulates layers as learning residual functions with reference to the layer inputs instead of learning unreferenced functions, can both be used to improve the network structure of BPNN. Asadi et al. (2013) proposed a hybrid intelligent model for runoff prediction by combining genetic algorithms and feed-forward neural networks. Irani and Nasimi (2011) used a hybrid genetic algorithm–neural network strategy to predict the permeability of the Mansuri Bangestan reservoir. Khandelwal and Armaghani (2016) proposed a hybrid genetic algorithm (GA)–artificial neural network to estimate the drilling rate index (DRI). He et al. (2016) proposed deep residual learning for image recognition and won 1st place in the ImageNet detection task. Zhang et al. (2017) used residual learning in denoising convolutional neural networks (DnCNNs), bringing progress in very deep architectures, learning algorithms, and regularization methods into image denoising.

However, no hybrid method combining GA and a residual network has been reported for predicting the deformation of deep foundation pits. In this work, a hybrid model using a GA-optimized residual neural network (the GA-ResNN training algorithm) was established to predict foundation pit deformation. Cases reported in the literature are used to demonstrate the effectiveness and accuracy of the proposed model. The present work can provide accurate guidance and suggestions for the construction of foundation pits.

A hybrid GA-Res neural network

Introduction of residual network (ResNet)

The concept of the residual network was first proposed by He et al. (2016), and ResNet won 1st place in the ILSVRC 2015 classification task. The residual network can alleviate the problems of gradient vanishing (dispersion) and gradient explosion that arise as the number of network layers increases. If the later layers of a deep network were identity mappings, the model would reduce to a shallow network; however, it is difficult for a stack of nonlinear layers to fit an identity mapping A(x) = x directly. If the network is instead designed as A(x) = F(x) + x, as shown in Fig. 1, the task becomes learning the residual function F(x) = A(x) − x, and an identity mapping A(x) = x is obtained simply by driving F(x) to 0.
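The residual formulation A(x) = F(x) + x can be sketched in a few lines. This is an illustrative pure-Python sketch, not the authors' code: F is assumed to be a dense, ReLU, dense transform, the names `dense`, `relu`, and `residual_block` are hypothetical, and the activation that He et al. apply after the addition is omitted so that the identity case is exact.

```python
def dense(W, b, x):
    """Affine layer W x + b, with W given as a list of rows."""
    return [sum(w * xj for w, xj in zip(row, x)) + bi for row, bi in zip(W, b)]


def relu(v):
    return [max(0.0, t) for t in v]


def residual_block(x, W1, b1, W2, b2):
    """A(x) = F(x) + x, where F(x) = dense(relu(dense(x)))."""
    f = dense(W2, b2, relu(dense(W1, b1, x)))
    return [fi + xi for fi, xi in zip(f, x)]  # shortcut connection adds the input back


x = [0.5, -1.2, 2.0]
zeros_W = [[0.0] * 3 for _ in range(3)]
zeros_b = [0.0] * 3
# With all weights of F at zero, F(x) = 0 and the block reduces to the identity A(x) = x.
print(residual_block(x, zeros_W, zeros_b, zeros_W, zeros_b))  # [0.5, -1.2, 2.0]
```

The point of the design is that "do nothing" is the easiest thing for the block to represent, so extra layers need not degrade a shallower solution.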

Flowchart of residual learning (He et al. 2016)

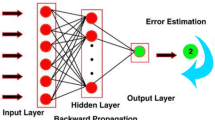

Introduction of GA-BP algorithm

The back-propagation (BP) algorithm, introduced by Rumelhart et al. (1986), is one of the most successful methods implemented for network optimization. A BP neural network (BPNN) model is composed of layers (input, hidden, and output layers), neurons, and the weights between neurons. The genetic algorithm (GA) is a computational model that simulates natural selection, as described by Darwin's theory of evolution, together with the biological mechanisms of genetics. The theory and method of GA were first proposed by Holland (1975). Individuals with better fitness are retained through processes including training, optimization of the initial population, and selection, crossover, and mutation of genes.

Figure 2 shows the flowchart of the GA-optimized BPNN model, which can be divided into three parts: determination of the BPNN structure, the GA optimization process, and BPNN prediction. Better initial weights and thresholds of the network can be acquired through GA optimization, which is also the basis of GA-ResNN. The basic idea is to let each individual encode the initial weights and thresholds of the network and to use the prediction error of the BPNN as the fitness value. The optimal individual, i.e., the optimal set of initial weights and thresholds, is found through selection, crossover, and mutation.

The optimal initial weights and thresholds are then substituted into the BPNN for training, and the calculation does not terminate until the global error is less than the specified error limit. By integrating the GA optimization, the BPNN can converge quickly while avoiding falling into a local minimum, and its calculation speed and accuracy can be improved.
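The GA-BP coupling rests on encoding all weights and thresholds of the network in one chromosome and scoring it by the network's prediction error. A minimal sketch, assuming a three-layer network with a tanh hidden layer and linear output, real-valued genes, and total absolute error as the fitness (the text only says "prediction errors"); all function names are hypothetical:

```python
import math


def chromosome_length(n_in, n_hidden, n_out):
    """Genes = input-hidden weights + hidden thresholds + hidden-output weights + output thresholds."""
    return n_in * n_hidden + n_hidden + n_hidden * n_out + n_out


def decode(chrom, n_in, n_hidden, n_out):
    """Split a flat real-valued chromosome into (W1, b1, W2, b2)."""
    if len(chrom) != chromosome_length(n_in, n_hidden, n_out):
        raise ValueError("chromosome length does not match the network structure")
    genes = iter(chrom)
    W1 = [[next(genes) for _ in range(n_in)] for _ in range(n_hidden)]
    b1 = [next(genes) for _ in range(n_hidden)]
    W2 = [[next(genes) for _ in range(n_hidden)] for _ in range(n_out)]
    b2 = [next(genes) for _ in range(n_out)]
    return W1, b1, W2, b2


def predict(params, x):
    """Forward pass: tanh hidden layer, linear output layer."""
    W1, b1, W2, b2 = params
    h = [math.tanh(sum(w * xi for w, xi in zip(row, x)) + b) for row, b in zip(W1, b1)]
    return [sum(w * hj for w, hj in zip(row, h)) + b for row, b in zip(W2, b2)]


def fitness(chrom, samples, n_in, n_hidden, n_out):
    """Total absolute prediction error of the encoded network (lower is better)."""
    params = decode(chrom, n_in, n_hidden, n_out)
    return sum(abs(predict(params, x)[0] - y) for x, y in samples)


# A 3-4-1 network needs 3*4 + 4 + 4*1 + 1 = 21 genes.
print(chromosome_length(3, 4, 1))
```

After the GA returns its best chromosome, `decode` would supply the starting weights for ordinary BP training, which then continues until the error limit is met.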

Introduction of a hybrid GA-Res neural network

This paper combines the idea of residual training with the GA-BP algorithm and proposes a hybrid GA-ResNN model, in which the data residuals are fitted by the GA-BP algorithm to achieve the expected results. The implementation process is described in the following section.
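The text does not spell out how the data residual is formed. One plausible reading, by analogy with A(x) = F(x) + x, is that the network fits the change relative to the last observed value, and the forecast is that value plus the fitted residual. The sketch below assumes this interpretation only; the function names are hypothetical and this is not confirmed as the authors' formulation.

```python
def to_residual_samples(samples):
    """Replace each target y with its residual relative to the last input value (assumed scheme)."""
    return [(window, y - window[-1]) for window, y in samples]


def from_residual(window, predicted_residual):
    """Prediction = last observed value + fitted residual."""
    return window[-1] + predicted_residual


pairs = [([1.0, 2.0, 3.0], 5.0)]
print(to_residual_samples(pairs))  # [([1.0, 2.0, 3.0], 2.0)]
```

Under this reading, a network whose residual output is zero simply repeats the last value, which plays the same role as the identity mapping in ResNet.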

Deformation predicting model of foundation pit

Training samples for foundation pit data

The primary task in building a neural network prediction model of foundation pit deformation is to select the training samples reasonably. Training samples need to be selected according to the following characteristics of the data:

(1) Small amount of data. The prediction results of the model will be inaccurate if the number of training samples is insufficient. Therefore, data grouping is used in this prediction model to expand the amount of data reasonably on the original basis. Three consecutive data points are taken as a group, the prediction made from the group is compared with the fourth data point, and the resulting error is used as the fitness value in the GA, as shown in the flowchart in Fig. 3.

(2) Diversified types of data. The level indicators include self-parameters (SP), supporting structure (SS), excavation scheme (ES), dewatering (DW), safety monitoring (SM), peripheral influence (PI), structure status of the existing tunnels (SET), and deformation monitoring of tunnels in construction period (DMT). The characteristics of foundation pit deformation cannot be revealed by relying only on the factors that have an obvious effect on the results; that is, selecting only a subset of factors as the model input is not sufficient.

Therefore, the prediction model in this paper can predict any secondary indicator: the user first selects the level indicator and then the secondary indicator to be predicted. The classified indicators affecting the deformation of the foundation pit are shown in Table 1. During data screening, indicators with no reference value, i.e., those whose data did not change over time, were removed.

(3) Data suitable for model training and prediction. The deformation of the foundation pit exhibits trend, randomness, and volatility, which makes it suitable for training and predicting with the model. Meanwhile, the limitations imposed by a fixed regression model in time series analysis can be overcome by the GA-optimized BPNN algorithm.

(4) Multiple data training and prediction methods. Three methods are available: predicting a single indicator using the data under the same level indicator; predicting a single indicator using all data from all level indicators; and predicting a single indicator using only its own data.

The second method is the slowest of the three because it involves all the data, and the correlation between indicators under different level indicators is weaker than that between indicators under the same level indicator. The third method uses only the data of the single indicator for training and prediction, which leads to low efficiency and inaccurate results when the amount of data is small for nonlinear fitting. Therefore, the first method is used in this paper.
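The data grouping in item (1), where three consecutive values predict the fourth, is a sliding-window construction. A minimal sketch, assuming a plain list of daily readings (the numbers below are hypothetical, not the paper's data) and a hypothetical function name `make_windows`; with 14 daily readings per indicator it yields 11 training pairs.

```python
def make_windows(series, width=3):
    """Group a series into (inputs, target) pairs: `width` consecutive values -> the next value."""
    if len(series) <= width:
        raise ValueError("series must be longer than the window width")
    return [(series[i:i + width], series[i + width]) for i in range(len(series) - width)]


daily = [2.1, 2.4, 2.2, 2.9, 3.1]  # hypothetical readings
for inputs, target in make_windows(daily):
    print(inputs, "->", target)
```

Overlapping windows reuse each reading in several samples, which is how a short record is expanded into more training pairs without inventing data.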

Model implementation

To train the proposed GA-ResNN model, the GA-ResNN method was programmed in MATLAB (the main code structure is shown in Fig. 4). The optimization residual of the BPNN is taken as the initial value of the GA, and the expected results are output after the operations of selection, crossover, mutation, and fitness calculation.

Rationality verification of GA-ResNN method

Introduction of engineering case

A square foundation pit in Xi’an, together with its measured data (Zhang 2017), is taken as the example. The foundation pit was located on the east side of a high-speed railway station and on the south side of a coach station, and a national highway passes along the west side of the square. The project mainly served as an underground garage and an underground shopping mall. Its north and south parts were arranged symmetrically on both sides of a subway shield tunnel section, and the two parts were connected by three connecting channels above the shield section.

There are two main groups of risk factors for the construction of a deep foundation pit near an existing tunnel: the condition of the existing tunnel, and the scale, stability, and environmental impact of the deep foundation pit itself. Based on the analysis by Zhang (2017) of the deformation and failure modes of the deep foundation pit, the deformation mode of the existing tunnel, and the safety level of the deep foundation pit using gray correlation degree, the influencing parameters of the deep foundation pit during construction are summarized in Table 1.

Design of topological structure in GA-ResNN

Number of input layer nodes

The number of input layer nodes equals the number of data types multiplied by the number of data points in each group, which can be written in code as follows (Rumelhart et al. 1986): inputnum = m*num_g.
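The relation inputnum = m*num_g, together with the first training method (predicting one secondary indicator from all indicators under the same level indicator), can be sketched as follows. This assumes each indicator is a list of daily values of equal length and that each input vector concatenates one num_g-day window per indicator; `build_level_samples` is a hypothetical name.

```python
def build_level_samples(indicators, target_index, num_g=3):
    """Predict one secondary indicator from all indicators under the same level indicator."""
    m = len(indicators)
    n_days = len(indicators[0])
    if any(len(s) != n_days for s in indicators):
        raise ValueError("all indicators must cover the same days")
    inputnum = m * num_g  # number of input-layer nodes
    samples = []
    for t in range(n_days - num_g):
        # Concatenate the num_g-day window of every indicator into one input vector.
        x = [v for s in indicators for v in s[t:t + num_g]]
        y = indicators[target_index][t + num_g]  # next-day value of the chosen indicator
        samples.append((x, y))
    return inputnum, samples


inputnum, samples = build_level_samples([[1, 2, 3, 4], [10, 20, 30, 40]], target_index=0)
print(inputnum, samples)  # 6 [([1, 2, 3, 10, 20, 30], 4)]
```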

Number of hidden layer nodes

The number of hidden layer nodes is generally determined by an empirical formula, based on the experience researchers have accumulated over long use of the BPNN model. The formula can be expressed as follows (Zhang 2017):

$$w = \sqrt{m + n} + a$$

where m is the number of input layer nodes, n is the number of output layer nodes, w is the number of hidden layer nodes, and a is a constant usually taken in [1, 10]; in this paper, a was set to 1 after several trial calculations.
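A minimal sketch of the hidden-layer sizing, assuming the common empirical form w = sqrt(m + n) + a and rounding the square root to the nearest integer (the rounding rule is an assumption; `hidden_nodes` is a hypothetical name):

```python
import math


def hidden_nodes(m, n, a=1):
    """Empirical hidden-layer size w = sqrt(m + n) + a, with sqrt rounded to the nearest integer."""
    return round(math.sqrt(m + n)) + a


# e.g. 3 indicators x 3 values = 9 input nodes, 1 output node, a = 1
print(hidden_nodes(9, 1))  # 4
```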

Number of output layer nodes

There is only one node in the output layer, which means that each calculation produces results for only one indicator.

The topological structure of GA-ResNN is shown in Fig. 5. Users can enter any desired indicator within the edited range (SP, SS, ES, DW, SM, PI, SET, or DMT) in the input layer according to their needs, and after the GA-ResNN calculation, the prediction results of that indicator are output. Take the soil strength of the foundation pit (see Table 1) as an example. It is one of the secondary indicators of self-parameters (SP), so the procedure first calls the SP model and uses the known time series of soil strength as input variables; the output is the same variable at future times, which in our case are the next two days.

Training samples

There are 39 secondary indicators in the measured data, 5 of which remained constant during the measurement period. The data from the remaining 34 secondary indicators are used in this paper, and each indicator has 14 measurements taken over 14 days. The training samples are constructed as described in “Training samples for foundation pit data.” All measured data used in this paper are given in the appendix.

Parameters setting of GA

While the GA-ResNN prediction model is running, GA determines the optimal initial weights for the BPNN without changing the topological structure of the network. The GA helps prevent the neural network from falling into local extrema and improves the training speed. The number of GA iterations is controlled by a specified maximum. In this paper, the GA parameters were tuned based on the research of Li (2007): the maximum number of iterations of each optimization process is 50, the population size is 30, the crossover probability is 0.3, and the mutation probability is 0.2.
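A GA loop using these parameter values (50 generations, population 30, crossover probability 0.3, mutation probability 0.2) can be sketched as below. The operators are common textbook choices assumed for illustration and are not confirmed as those in the authors' MATLAB code: roulette-wheel selection on 1/(1 + error), arithmetic crossover applied per pair, replacement of one random gene as mutation, elitism, and a gene range of [-3, 3]. The objective is assumed non-negative; a toy sphere function stands in for the BPNN prediction error.

```python
import random
from dataclasses import dataclass


@dataclass
class GAConfig:
    max_generations: int = 50   # maximum iterations of each optimization
    population_size: int = 30
    crossover_prob: float = 0.3
    mutation_prob: float = 0.2
    lower: float = -3.0         # assumed gene range (not given in the text)
    upper: float = 3.0


def run_ga(objective, n_genes, cfg=None, seed=0):
    """Minimize a non-negative objective over real-valued chromosomes; return (best, error)."""
    if cfg is None:
        cfg = GAConfig()
    rng = random.Random(seed)
    pop = [[rng.uniform(cfg.lower, cfg.upper) for _ in range(n_genes)]
           for _ in range(cfg.population_size)]
    best = list(min(pop, key=objective))
    for _ in range(cfg.max_generations):
        # Roulette-wheel selection: smaller error gives a larger weight 1 / (1 + error).
        weights = [1.0 / (1.0 + objective(ind)) for ind in pop]
        pop = [list(ind) for ind in rng.choices(pop, weights=weights, k=len(pop))]
        # Arithmetic crossover on consecutive pairs with probability crossover_prob.
        for i in range(0, len(pop) - 1, 2):
            if rng.random() < cfg.crossover_prob:
                a = rng.random()
                p, q = pop[i], pop[i + 1]
                pop[i] = [a * u + (1 - a) * v for u, v in zip(p, q)]
                pop[i + 1] = [(1 - a) * u + a * v for u, v in zip(p, q)]
        # Mutation: redraw one random gene with probability mutation_prob.
        for ind in pop:
            if rng.random() < cfg.mutation_prob:
                ind[rng.randrange(n_genes)] = rng.uniform(cfg.lower, cfg.upper)
        # Elitism: the best individual found so far replaces the current worst.
        worst = max(range(len(pop)), key=lambda k: objective(pop[k]))
        pop[worst] = list(best)
        candidate = min(pop, key=objective)
        if objective(candidate) < objective(best):
            best = list(candidate)
    return best, objective(best)


def sphere(v):
    """Toy objective standing in for the BPNN prediction error."""
    return sum(g * g for g in v)


best, err = run_ga(sphere, n_genes=4)
print(err)
```

In a GA-BP setting, `objective` would be a fitness function that decodes a chromosome into network weights and returns its prediction error, and `n_genes` would equal the number of weights and thresholds.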

Results on GA-ResNN prediction model

There are 34 sets of data in total, one for each secondary indicator. For clarity of display, only 30 sets are randomly selected for analysis and testing, and they are divided into groups of 10. Because our model is a time-series regression model with the same input and output variables, the data of each secondary indicator serve as the variables of the model. The data of the known time series are used for model training, and predictions for the next two days are output. The results for the two days are therefore analyzed separately.
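With a single output node, the text does not state how the second-day value is produced. One common option is recursive multi-step forecasting, in which each one-step prediction is fed back into the input window. The sketch below assumes that scheme, which is not necessarily the authors'; `recursive_forecast` and the stand-in model `extrapolate` are hypothetical.

```python
def recursive_forecast(predict_next, history, width=3, horizon=2):
    """Forecast `horizon` steps with a one-step model by feeding each prediction back as input."""
    window = list(history[-width:])
    forecasts = []
    for _ in range(horizon):
        y = predict_next(window)
        forecasts.append(y)
        window = window[1:] + [y]  # slide the window forward by one day
    return forecasts


def extrapolate(window):
    """Stand-in one-step model: linear extrapolation of the last two values."""
    return window[-1] + (window[-1] - window[-2])


print(recursive_forecast(extrapolate, [1.0, 2.0, 3.0]))  # [4.0, 5.0]
```

Because the day-2 input contains the day-1 prediction, day-2 errors tend to be larger, which is one reason to analyze the two days separately.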

Tables 2, 3, 4, 5, 6, and 7 show the measured data, the values predicted by BPNN and by GA-ResNN, and the error measure E between them. Tables 2 and 3 show the first 10 sets of data for the first and second predicted days, respectively; Tables 4 and 5 show the second 10 sets; and Tables 6 and 7 show the last 10 sets. E is calculated as:

where Pb is the value predicted by BPNN, Pa is the value predicted by GA-ResNN, and R is the measured data. A positive E means that GA-ResNN is more accurate than BPNN, and the larger E is, the greater the advantage of GA-ResNN.

According to Tables 2 and 3, E is positive for all of the first 10 sets of data, with an average of 4.17%. According to Tables 4 and 5, E is positive for all of the second 10 sets, with an average of 91.47%. According to Tables 6 and 7, E is positive for all of the last 10 sets except “Change rate of groundwater level,” with an average of 180.27%. GA-ResNN thus shows clear advantages over the traditional BP model in predicting these data.

According to Tables 2, 3, 4, 5, 6, and 7, results can be obtained as follows:

(1) The GA-ResNN predictions agree well with the field measurements, and over 98.3% of the E values are positive. The prediction accuracy is assessed by comparing the predictions of the two models (BP and GA-ResNN).

(2) Unstable results occur occasionally (E is − 0.38% in Table 7); the quality of prediction depends on the dispersion of the input values. The rest of the data give relatively accurate and stable results, with an average E of 91.97%.

(3) The prediction accuracy of the BP model is poor when the input values are negative, whereas the results of GA-ResNN are more accurate than those of the BP model.

(4) GA-ResNN shows its advantages when the input data are small in amount and change with small gradients.

Figure 6 shows the ratio curves of the prediction errors of three models, BPNN, GA-BPNN, and GA-ResNN, relative to the measured data. GA-BPNN is the GA-ResNN algorithm without residual training. The predicted values for 2 days on 30 indicators give 60 model results in total, which are divided into six groups of 10 for clarity, named groups A, B, C, D, E, and F in order. The standard statistical errors RMSE (root mean squared error) and MAE (mean absolute error) are also used for evaluation:

$$\mathrm{RMSE} = \sqrt{\frac{1}{n}\sum_{i=1}^{n}\left(x_i - y_i\right)^2}$$

$$\mathrm{MAE} = \frac{1}{n}\sum_{i=1}^{n}\left|x_i - y_i\right|$$

where x_i is the actual value, y_i is the predicted value, and n is the number of data points.
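The two error statistics can be computed directly from their definitions (RMSE as the square root of the mean squared difference, MAE as the mean absolute difference). A minimal sketch with hypothetical values; `rmse` and `mae` are illustrative names.

```python
import math


def rmse(actual, predicted):
    """Root mean squared error between measured values x and predictions y."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return math.sqrt(sum((x - y) ** 2 for x, y in zip(actual, predicted)) / len(actual))


def mae(actual, predicted):
    """Mean absolute error between measured values x and predictions y."""
    if not actual or len(actual) != len(predicted):
        raise ValueError("inputs must be non-empty and of equal length")
    return sum(abs(x - y) for x, y in zip(actual, predicted)) / len(actual)


measured = [1.0, 2.0, 3.0]   # hypothetical values
predicted = [1.0, 2.0, 5.0]
print(rmse(measured, predicted), mae(measured, predicted))
```

Because RMSE squares each difference, a single large miss (here 2.0) raises RMSE above MAE; comparing both shows whether a model's errors are uniform or dominated by a few outliers.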

The calculation results of the three models are given in Fig. 6; from top to bottom, the three groups of results correspond to the BP, GA-BP, and GA-ResNN models, respectively.

The analysis yields the following results:

(1) The network trained with the proposed GA-ResNN algorithm correlates better with the measured data than the existing BP and GA-BP models (the BP and GA-BP curves lie above the GA-ResNN curve for most results). The GA-ResNN model also has the lowest RMSE and MAE values. Hence, more accurate and reliable predictions can be obtained using the GA-ResNN prediction model.

(2) GA-BP also has strong predictive ability: when the GA-BP results are very close to the measured data, the GA-ResNN results are similarly close (the GA-BP and GA-ResNN curves overlap in Fig. 6(c) and (d)). In certain samples, GA-ResNN even performs worse than GA-BP.

(3) A suitable prediction model needs to be selected based on the characteristics of the data samples. GA-ResNN performs better on small data than the other two models.

Conclusions

The hybrid algorithm (GA-ResNN), which integrates the back propagation neural network (BPNN) with the genetic algorithm (GA) and the idea of residual training, can predict the trend of risk indicators of foundation pits relatively accurately. More accurate predictions and higher operating efficiency are obtained when the data are classified by correlation during pre-processing. Through optimization with GA and ResNet, the BPNN model converges quickly and avoids falling into local extrema. The calculation speed and accuracy of ResNet are improved by integrating it with GA.

The hybrid GA-ResNN model gives more accurate prediction results than the GA-BP and BPNN models. In summary, the GA-ResNN model is feasible for predicting foundation pit deformation in both practical engineering and scientific research. The inaccuracy of GA-ResNN when the initial data are negative needs further correction, and the choice of training model should follow an analysis of the data features.

Data availability

Some or all data, models, or codes that support the findings of this study are available from the corresponding author upon reasonable request.

References

Arthur CK, Temeng VA, Ziggah YY (2015) Novel approach to predicting blast-induced ground vibration using Gaussian process regression. Eng Comput 36(1):29–42. https://doi.org/10.1007/s00366-018-0686-3

Asadi S, Shahrabi J, Abbaszadeh P, Tabanmehr S (2013) A new hybrid artificial neural networks for rainfall-runoff process modeling. Neurocomputing 121:470–480. https://doi.org/10.1016/j.neucom.2013.05.023

Boukharouba K (2013) Annual stream flow simulation by ARMA processes and prediction by Kalman filter. Arab J Geosci 6(7):2193–2201. https://doi.org/10.1007/s12517-012-0529-2

Cui D, Zhu C, Li Q, Huang Q, Luo Q (2021) Research on deformation prediction of foundation pit based on PSO-GM-BP model. Adv Civ Eng 1:1–17. https://doi.org/10.1155/2021/8822929

French MN, Krajewski WF, Cuykendall RR (1992) Rainfall forecasting in space and time using a neural network. J Hydrol 137(1–4):1–31. https://doi.org/10.1016/0022-1694(92)90046-X

Ghorbani B, Arulrajah A, Narsilio G, Horpibulsuk S, Bo MW (2020) Shakedown analysis of pet blends with demolition waste as pavement base/subbase materials using experimental and neural network methods. Transp. Geotech. https://doi.org/10.1016/j.trgeo.2020.100481

Ghorbani B, Arulrajah A, Narsilio G, Horpibulsuk S, Bo MW (2021) Dynamic characterization of recycled glass-recycled concrete blends using experimental analysis and artificial neural network modeling. Soil Dyn Earthq Eng 142(7):106544. https://doi.org/10.1016/j.soildyn.2020.106544

Guo X, Liu S, Wu L, Gao Y, Yang Y (2015) A multi-variable grey model with a self-memory component and its application on engineering prediction. Eng Appl Artif Intell 42:82–93. https://doi.org/10.1016/j.engappai.2015.03.014

He K, Zhang X, Ren S, Sun J (2016) Deep residual learning for image recognition. 2016 IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 770–778. https://doi.org/10.1109/CVPR.2016.90

Holland JH (1975) Adaptation in natural and artificial systems. University of Michigan Press, Ann Arbor

Irani R, Nasimi R (2011) Evolving neural network using real coded genetic algorithm for permeability estimation of the reservoir. Expert Syst Appl 38(8):9862–9866. https://doi.org/10.1016/j.eswa.2011.02.046

Khandelwal M, Armaghani DJ (2016) Prediction of drillability of rocks with strength properties using a hybrid GA-ANN technique. Geotech Geol Eng 34(2):605–620. https://doi.org/10.1007/s10706-015-9970-9

Li M (2007) Application research on quality evaluation of urban human settlements based on the BP neural network improved by GA, Dissertation, Liaoning Normal University. (In Chinese)

Li ZC, Cheng PF (2021) A study on the prediction of displacement in the accelerated deformation stage of the creep bedding rock landslides. Arab J Geosci 14(2):1–11. https://doi.org/10.1007/s12517-020-06404-5

Lv Y, Liu T, Ma J, Wei SD, Gao CL (2020) Study on settlement prediction model of deep foundation pit in sand and pebble strata based on grey theory and BP neural network. Arab J Geosci 13(23):1238. https://doi.org/10.1007/s12517-020-06232-7

Madvar HR, Dehghani M, Memarzadeh R, Salwana E, Mosavi A, Shahab S (2020) Derivation of optimized equations for estimation of dispersion coefficient in natural streams using hybridized ANN with PSO and CSO algorithms. IEEE Access 8:156582–156599. https://doi.org/10.1109/ACCESS.2020.3019362

Niu WJ, Feng ZK, Cheng CT, Zhou JZ (2018) Forecasting daily runoff by extreme learning machine based on quantum-behaved particle swarm optimization. J Hydrol Eng 23(3):04018002. https://doi.org/10.1061/(ASCE)HE.1943-5584.0001625

Riahi-Madvar H, Seifi A (2018) Uncertainty analysis in bed load transport prediction of gravel bed rivers by ANN and ANFIS. Arab J Geosci 11(21):1–20. https://doi.org/10.1007/s12517-018-3968-6

Riahi-Madvar H, Dehghani M, Memarzadeh R, Gharabaghi B (2021a) Short to long-term forecasting of river flows by heuristic optimization algorithms hybridized with ANFIS. Water Resour Manag 35(4):1149–1166. https://doi.org/10.1007/s11269-020-02756-5

Riahi-Madvar H, Mahsa G, Bahram G, Seyed MS (2021b) A predictive equation for residual strength using a hybrid of subset selection of maximum dissimilarity method with Pareto optimal multi-gene genetic programming. Geosci Front 12(5):101222. https://doi.org/10.1016/j.gsf.2021.101222

Rumelhart DE, Hinton G, Williams RJ (1986) Learning representations by back-propagating errors. Nature 323(6088):533–536. https://doi.org/10.1038/323533a0

Shen SL, Ma L, Xu YS, Yin ZY (2013) Interpretation of increased deformation rate in aquifer IV due to groundwater pumping in Shanghai. Can Geotech J 50(11):1129–1142. https://doi.org/10.1139/cgj-2013-0042

Wang L, Zeng Y, Chen T (2015) Back propagation neural network with adaptive differential evolution algorithm for time series forecasting. Expert Syst Appl 42(2):855–863. https://doi.org/10.1016/j.eswa.2014.08.018

Zhang Y (2017) Study on safety risk assessment and control of deep foundation pit construction adjacent to existing subway, Dissertation, Xi’an University of Architecture and Technology. (In Chinese)

Zhang K, Zuo W, Chen Y, Meng D, Zhang L (2017) Beyond a Gaussian denoiser: residual learning of deep CNN for image denoising. IEEE Trans Image Process 26(7):3142–3155. https://doi.org/10.1109/TIP.2017.2662206

Funding

This work was supported by the Young Experts of Taishan Scholar Project of Shandong Province (No. tsqn202103163), the National Natural Science Foundation of China (No. 52078278, No. 51778345), the Key Research and Development Foundation of Shandong Province of China (No. 2019GSF109006), and the program of Qilu Young Scholars of Shandong University. Great appreciation goes to the editorial board and the reviewers of this paper.

Author information


Contributions

All authors participated in drafting the article and agreed to submit it for publication. Wei Cui was project supervisor and was responsible for study conception. Chunyu Cui was responsible for data analysis, the revision, and writing the manuscript. Shanwei Liu and Bin Ma were responsible for the proofreading and reviewing the manuscript.


Ethics declarations

Competing interests

The authors declare that they have no competing interests.

Additional information

Responsible Editor: Zeynal Abiddin Erguler

Supplementary Information

Below is the link to the electronic supplementary material.


About this article

Cite this article

Cui, Cy., Cui, W., Liu, Sw. et al. An optimized neural network with a hybrid GA-ResNN training algorithm: applications in foundation pit. Arab J Geosci 14, 2443 (2021). https://doi.org/10.1007/s12517-021-08775-9
