Abstract

Trust is an important factor in human-robot interaction; it plays an important role in improving human acceptance of robots and in building human-robot relationships. Today, as robots become more intelligent and human-robot relationships become more intimate, the social attributes of robots and the interaction scenarios are important factors affecting human-robot trust. Altruistic behaviour is a typical social behaviour, and reciprocity is a typical social interaction scenario. This study therefore investigates the effects of reciprocity and robots’ altruistic behaviours on cognitive trust, emotional trust and behavioural trust, as well as the mediating role of perceived intelligence. An experiment involving 42 participants was conducted. The virtual robots used in the experiment exhibited three different behaviours (altruistic, selfish and control), and the experiment included two interaction scenarios (reciprocity and non-reciprocity). Participants played an adapted Repeated Public Goods Game and the Trust Game with a robot in both scenarios. The results indicate that robots’ altruistic behaviours significantly influence participants’ cognitive trust, emotional trust and behavioural trust, whereas reciprocity significantly influences only emotional trust and behavioural trust. Both robots’ altruistic behaviours and reciprocity positively influence perceived intelligence. Perceived intelligence mediates the effects of robots’ altruistic behaviours on cognitive and emotional trust and the effect of reciprocity on emotional trust. Implications for future work are discussed.

1 Introduction

Robots are increasingly used in social situations, where they act as human partners such as co-workers or home assistants; in these roles they are expected to display human-like behaviours and reactions such as understanding tasks, sharing knowledge and providing help. These human-like features allow people to interact naturally with robot partners and to perceive the robot’s ability to cooperate with them. However, designing complex human-like behaviours presents many challenges. In addition to technical reliability, developers must consider which robot behaviours support appropriate human-robot relationships. Trust is an important factor that underpins the establishment and maintenance of the relationship between humans and robots [1] and facilitates human acceptance of robots [2]. Some scholars have already studied human-robot trust. However, with the development of artificial intelligence technology, the human-robot relationship has changed: human-robot interactions have become more intelligent, and human-robot trust has changed accordingly. A more in-depth exploration of human-robot trust is therefore required. As human-robot interaction becomes more intelligent and more interpersonal, the theories and methods developed for human-human trust can be applied to the study of human-robot trust.

Some related work has used economic trust games to measure interpersonal trust and even human-robot trust [2, 3]. In addition to traditional questionnaire measures, these studies use behavioural data from trust games to measure trust, that is, behavioural trust: the amount of money participants allocate to their partners in the experiment is treated as trust behaviour. However, behavioural trust in the trust game is limited to situations involving monetary gains and losses and cannot provide a comprehensive picture of trust; it is therefore also necessary to measure subjective trust with questionnaires [4].
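To make the notion of behavioural trust concrete, the sketch below assumes the classic investment-style trust game: the trustor (participant) sends part of an endowment, the amount is multiplied, and the trustee (robot) returns a share of the pot. The endowment of 10, the multiplier of 3, and the names `TrustGameRound` and `behavioural_trust` are illustrative assumptions, not the parameters or code of the experiment reported here.

```python
from __future__ import annotations

from dataclasses import dataclass


@dataclass
class TrustGameRound:
    """One round of a hypothetical investment-style trust game."""
    endowment: float        # money the trustor (participant) starts with
    sent: float             # amount the trustor transfers to the trustee (robot)
    returned_share: float   # fraction of the multiplied pot sent back by the trustee
    multiplier: float = 3.0  # assumed multiplication factor

    def payoffs(self) -> tuple[float, float]:
        """Return (trustor payoff, trustee payoff) for this round."""
        if not 0 <= self.sent <= self.endowment:
            raise ValueError("sent must lie between 0 and the endowment")
        if not 0 <= self.returned_share <= 1:
            raise ValueError("returned_share must lie in [0, 1]")
        pot = self.sent * self.multiplier
        returned = pot * self.returned_share
        trustor = self.endowment - self.sent + returned
        trustee = pot - returned
        return trustor, trustee


def behavioural_trust(rounds: list[TrustGameRound]) -> float:
    """Mean proportion of the endowment sent; higher values mean more behavioural trust."""
    return sum(r.sent / r.endowment for r in rounds) / len(rounds)


rounds = [TrustGameRound(10, 5, 0.5), TrustGameRound(10, 10, 0.5)]
print(rounds[0].payoffs())        # (12.5, 7.5)
print(behavioural_trust(rounds))  # 0.75
```

Normalizing by the endowment keeps the index comparable across rounds with different stakes; the questionnaire measures discussed above are still needed for the subjective side of trust.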

Previous studies have often used a single-dimensional scale to measure subjective human-robot trust and have not explored it in depth. Research on interpersonal trust, by contrast, often emphasizes its dual dimensions and assumes that trust comprises cognitive trust and emotional trust [5]. Emotional trust is based on the emotional relationship that develops when people interact with robot partners. Cognitive trust is a reasoned, objective assessment of the robot’s ability, reliability, integrity and other characteristics. Previous research on human-robot trust has focused on people’s trust in robot partners with different behavioural skills, cognitive methods and physical characteristics, and has examined trust in robot capabilities and reliability, which corresponds to the cognitive dimension of interpersonal trust. However, for human-robot collaborative teams, the development of emotional relationships between team members is also worth exploring in addition to the cognitive dimension of trust. Such exploration would help identify the key factors in the development of trust and those that affect the psychological state of the human-robot team [6], which is similar to the concept of emotional trust in interpersonal trust. Therefore, this study uses the trust-game paradigm to measure behavioural human-robot trust, draws on the dual-dimension concept of interpersonal trust to measure subjective human-robot trust, and thereby explores the dual structure of human-robot trust in depth.

Reciprocity is the cornerstone of human social interaction [7] and an important factor in interaction in human society. Interpersonal cooperation, persuasion and mutual trust all depend largely on reciprocity. Some studies have suggested that reciprocity also exists between humans and robots, and a small number of studies have suggested that cooperation and persuasion between humans and intelligent robots may also be affected by human-robot reciprocity. For example, Fogg and Nass [8] found that people prefer to interact with computers that have helped them in previous human-computer cooperation. Lee and Liang [9] found that robots that provided assistance significantly increased the likelihood that participants would comply with them compared with robots that did not provide assistance. However, the intelligence and sociality of current robots are still limited, which constrains their social attributes and social behaviours and thereby affects human-robot interaction. The impact of human-robot reciprocity on trust may therefore differ from that of interpersonal reciprocity, and this issue needs further exploration. Yet research on human-robot reciprocity and its influence on trust is still lacking. This study explores the influence of reciprocity on human-robot trust, including behavioural trust, cognitive trust and emotional trust.

In human-robot interaction research, studies of how robot behaviour influences people have often centred on human-like behaviour. Current work focuses mostly on simple human-like behaviours such as gestures and gaze [10, 11], while the influence of complex behaviours such as cooperation and persuasion [9] has not been sufficiently researched. In long-term interactions, however, people quickly lose interest in robots that can only gesture and gaze, which reduces their attention and weakens the effects of human-robot interaction. People are more interested in robots that exhibit social, human-like behaviours such as cooperation and persuasion. Altruistic behaviour is a typical social, human-like behaviour: it allows people to experience their robot partners as attentive to their needs and interests and as having generous and considerate attitudes, thereby affecting human-robot trust and sustaining interaction over longer periods.

Current robots still lack the social skills needed to participate fully in human-robot collaborative work. As robots are gradually integrated into people’s lives, robots with higher social intelligence need to be developed. The level of robot intelligence is related to human-robot trust and reciprocity as well as to robots’ altruistic behaviour. When a robot demonstrates human-like behaviour, people spontaneously judge its intelligence level [12] and show different interactive behaviours and levels of trust accordingly. Therefore, this study also explores the role of perceived intelligence in human-robot trust.

In summary, this study aims to investigate the influence of reciprocity and robots’ altruistic behaviour on emotional, cognitive and behavioural trust during human-robot interaction and to explore the role of perceived intelligence in these effects. Following previous work [13, 14], the study combines objective experimental records with subjective reports: it uses a game-theoretic experimental paradigm, measures cognitive and emotional trust through questionnaire data, and measures behavioural trust through objective data recorded during the experiment.

2 Literature Review

2.1 HRI Altruistic Behaviour

Altruistic behaviour is a form of prosocial behaviour, i.e., voluntary, intentional behaviour that benefits others. Its motivation is a genuine desire to benefit others without gaining any personal benefit [15]. However, many researchers argue that altruistic behaviours are often driven by self-interested (non-altruistic) motives such as the desire for reputation and status or the avoidance of inner sadness and guilt [16].

Most previous studies have sought to understand the mechanisms of altruistic behaviour, such as homophily [17], dynamic social networks [18] and social influence [19], with the aim of providing strategies to promote it. Past studies have shown that homophily promotes altruistic behaviour. Homophily is the tendency to connect with others who exhibit similar traits or behaviours; individuals who behave altruistically tend to make friends with people who behave in similar ways [17]. Rand et al. [18] studied how individual behaviour in dynamic social networks is affected by the behaviour of others and showed that dynamic social networks encourage altruistic behaviour because people have more opportunities to establish and maintain interactions with generous partners and to terminate interactions with selfish partners. Social influence also affects altruistic behaviour [19]; that is, altruistic behaviour is affected not only by people who interact directly with an individual but also by people who interact with that individual indirectly. Fowler and Christakis [20] reported a reanalysis of a previous study in which altruism was measured with a public goods game. The analysis showed that people’s contributions were affected by the contributions of their partners: participants who had previously interacted with a generous partner subsequently contributed more. Crucially, people’s contributions were also influenced by the contributions of people with whom they had never interacted directly, so generosity could spread beyond the original pair.
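The public goods game used to measure altruism can be illustrated with the standard linear payoff: each player keeps whatever they do not contribute and receives an equal share of the multiplied common pool. The sketch below assumes four players, an endowment of 20 and a multiplier of 2; these values and the name `pgg_payoffs` are illustrative assumptions, not parameters from Fowler and Christakis [20] or from the present experiment.

```python
def pgg_payoffs(contributions, endowment=20.0, multiplier=2.0):
    """Linear public goods game payoffs for one round.

    Each player i earns: endowment - c_i + multiplier * sum(c) / n,
    i.e. what they kept plus an equal share of the multiplied pool.
    """
    n = len(contributions)
    if n == 0:
        raise ValueError("need at least one player")
    for c in contributions:
        if not 0 <= c <= endowment:
            raise ValueError("each contribution must lie in [0, endowment]")
    share = multiplier * sum(contributions) / n
    return [endowment - c + share for c in contributions]


payoffs = pgg_payoffs([20, 10, 0, 10])
print(payoffs)  # [20.0, 30.0, 40.0, 30.0]
```

Because the multiplier is smaller than the group size, the free rider (third player) earns the most in a single round, yet every player would earn 40 if all contributed fully; this tension is what makes a player's contribution a usable index of altruism.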

Homophily and social influence are both mechanisms for interpersonal altruistic behaviour, and both occur in social networks. There is evidence that emotions, thoughts, and behaviours can be spread through social network ties [21, 22], and people’s behaviour will be affected by their connections in social networks. In static networks, people’s interaction objects are relatively fixed. When humans interact repeatedly in relatively fixed social networks, one of the main goals may be to successfully cooperate with others because mutual cooperation is preferable to mutual betrayal [18, 19]. In a dynamic network, humans can control their interactive partners and can freely cut off old connections and form new connections. In such a rapidly updated social network, it may not be possible to predict future changes in a person’s behaviour. We draw on the mechanism of human altruistic behaviour and explore the impact of robot altruistic behaviour on human-robot interaction and human-robot trust in human-robot interaction scenarios. The results show that in this kind of human-like interaction scenario, robot altruistic behaviour has the same effect on humans as human altruistic behaviour. This means that in future research, the human-like behaviour of robots can be simulated based on the deep motivation of human behaviour, and the impact of robot behaviours generated in this way on humans is comparable to the impact of human behaviours.
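The contrast between static and dynamic networks can be illustrated with a toy tie-update rule in the spirit of Rand et al. [18]: in a static network a player's ties never change, whereas in a dynamic network the player may cut ties to partners who defected in the previous round. The function `update_ties` and its deterministic cut-all-defectors policy are hypothetical simplifications; a fuller model would update only some ties per round and would also let players form new ties.

```python
def update_ties(ties, last_action, dynamic):
    """Return a player's ties after one round (hypothetical simplified rule).

    ties: set of partner ids currently connected to the player
    last_action: dict mapping partner id -> "C" (cooperated) or "D" (defected)
    dynamic: if False (static network), ties are kept unchanged;
             if True (dynamic network), ties to partners who defected are cut.
    Partners with no recorded action are kept.
    """
    if not dynamic:
        return set(ties)
    return {p for p in ties if last_action.get(p) != "D"}


ties = {"p1", "p2", "p3"}
actions = {"p1": "C", "p2": "D", "p3": "C"}
print(sorted(update_ties(ties, actions, dynamic=False)))  # ['p1', 'p2', 'p3']
print(sorted(update_ties(ties, actions, dynamic=True)))   # ['p1', 'p3']
```

Under the static rule a selfish partner stays in the network, so repeated interaction with the same partners makes direct reciprocity the main route to cooperation; under the dynamic rule selfish partners are simply dropped.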

Based on the motivation of human behaviour, robots may also produce altruistic behaviours through homophily, social networks and social influence; however, because of their behavioural motivation and the intelligence level that humans attribute to them, the mechanism of influence may differ from that of altruistic behaviour in interpersonal relationships. Current research offers three hypotheses about how human altruism in human-robot cooperation differs from interpersonal altruism [25,26,27]. In the first hypothesis, people believe that robots will always value human well-being. Under this assumption, the robot companion shows no untrustworthy behaviours such as betrayal and cheating, so altruism towards the robot companion carries no risk of betrayal. Therefore, people may show altruistic tendencies [25]. The second hypothesis is that robots are not humans, their social attributes are not equivalent to those of humans, and altruistic behaviour that benefits robots cannot improve the well-being of other “people”. Therefore, when cooperating with robots, people will consider their own interests more and thus show a selfish tendency [26]. In the third hypothesis, people expect positive reciprocity and want to understand the altruistic behaviour of robot companions. This interest in others’ altruistic behaviour is called social curiosity, that is, the desire to understand other people’s altruistic tendencies [27]. From the viewpoint of social curiosity, people can satisfy their social curiosity by learning about the altruistic behaviour of their partners whether they cooperate with humans or with robot companions; thus, altruistic behaviour does not differ between the two kinds of cooperative partners.

Research on the mechanism by which robots’ altruistic behaviour exerts its influence is still lacking. A comparison of the three hypotheses above shows that the first two assume a low level of robot intelligence when explaining the motivation behind robots’ altruistic behaviour; i.e., the robot has only one preset behavioural tendency, which is either to “pay attention” or “not pay attention” to human well-being [26]. The third hypothesis assumes a higher perceived robot intelligence than the first two: robots are regarded as social entities with their own ideas about altruistic behaviour, so both humans and robots are expected to engage in reciprocal altruism. The intelligence level of the robot is thus one presumed mechanism through which robots’ altruistic behaviour exerts its influence. In addition, some scholars have proposed anthropomorphism and mentalizing as further mechanisms. De Kleijn et al. [28] evaluated the degree of anthropomorphism of a robot opponent in an experiment and found that proposals made in the ultimatum game by a highly anthropomorphic robot were unlikely to be rejected. Nishio et al. [29] argue that another mechanism affecting people’s altruistic behaviour is mentalizing, which usually refers to the developmental process of recognizing other people’s minds; however, the term also applies to non-human agents. Stimulating mentalizing affects human altruistic behaviour in different ways depending on the robot’s anthropomorphic appearance. Duffy [30] argues that perceived intelligence plays a role in how anthropomorphism affects human-robot interaction, emphasizing the influence of appearance and sound on judgements of robot intelligence.
The use of anthropomorphic design in the physical design and behaviour of robots can influence human-robot interaction through perceived intelligence. In this study, the robots are virtual and cannot draw on the advantages of physical design, and such advantages do not last long in any case: once people have the opportunity to observe a robot’s behaviour, they are drawn to that behaviour [12]. Therefore, this research focuses on the role of perceived intelligence in the influence of robots’ altruistic behaviour.

2.2 Reciprocity and HRI

Reciprocity involves mutual action between two parties, and it can refer to situations in which people act only after others have done something for them. Gouldner [7] proposed the “reciprocity norm”, which is defined as “compulsion behaviour that returns help or gifts in interpersonal relationships”; that is, reciprocity embodies feedback behaviour. Fehr and Gächter [31] put forward a more complete definition of reciprocity: “Reciprocity means that when people respond to friendly behaviours, they tend to be friendlier and more cooperative than the self-interested model predicts; conversely, when responding to hostile behaviours, people can often behave in more hostile and even cruel ways.” This definition explains reciprocity in terms of feedback on both friendly and hostile behaviours; that is, individual behavioural responses can reflect individual perceptions of reciprocity.

Based on the theory of interpersonal reciprocity, previous studies argue that a similar reciprocal relationship exists between humans and robots [32,33,34] and follows the basic principle of interpersonal reciprocity; that is, people respond in kind to robots’ favours or hostile behaviours. Muscolo et al. [35] pointed out that the benefit of the reciprocal relationship between humans and robots is that it makes the robot’s behaviour more intuitive and can improve the performance of social robot companions. Sandoval et al. [36] state that in human-robot cooperation, a lack of reciprocity may devalue the services provided by robots. They argue that reciprocity is necessary for people to pay attention to robots: if the robot does not do enough to attract the user’s attention, the user will lose interest in it because it is perceived as insufficiently functional or non-functional. In the healthcare field, Broadbent et al. [37] found that robots that do not interact with people have no significant impact on patients’ quality of life, depression or compliance, whereas interaction between patients and robots may help improve their lives.

At present, because robots’ intelligence and sociality are still limited, people may think that robots, not being human, do not have social attributes equivalent to those of humans, and that reciprocal behaviours such as providing benefits to robots will not improve the robots’ well-being. Therefore, reciprocal behaviour in human-robot relationships may differ from reciprocal behaviour in interpersonal relationships, and the mechanism of human reciprocity in human-robot interaction needs to be explored. Kahn et al. [38] found that reciprocity depends on the interaction partner: children responded more positively to AIBO robots, which engage in activities, behaviours and language stimulation, than to toy dogs, and this difference was due to the reciprocal interaction made possible by the AIBO robots. Nishio et al. [29] argue that the appearance of robots affects reciprocity. In an ultimatum game involving reciprocity, they found that people’s attitude changes depended on the agent’s appearance: whether the proposer was a robot, a human or a computer influenced the number of rejected proposals. Kiesler et al. [39] argue that the performance of robots in different situations affects individual reciprocity behaviour; they found that in the prisoner’s dilemma, individuals who have the opportunity to interact intensively with robots are more likely to cooperate. In a very specific study, Fogg and Nass [8] found that reciprocity arises from mutual help between humans and computers: users tend to interact with computers that have helped them before. Sandoval et al. [40] found that robots’ interaction strategies affect reciprocity.
Participants tend to reciprocate more towards a robot that starts the game and uses a pure reciprocal strategy than towards robots using other combined strategies (tit for tat, inverse tit for tat, reciprocal offer and inverse reciprocal offer).
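Two of the reciprocal strategies compared by Sandoval et al. [40] can be sketched for a repeated cooperate/defect game. The sketch covers only tit for tat (copy the partner’s previous move) and inverse tit for tat, which is assumed here to mean doing the opposite of the partner’s previous move. The opening move, the move labels and the function names are illustrative assumptions; the offer-based strategies are omitted because their exact rules are not given in the text.

```python
def tit_for_tat(partner_history, first_move="C"):
    """Open with first_move, then repeat the partner's previous move."""
    if not partner_history:
        return first_move
    return partner_history[-1]


def inverse_tit_for_tat(partner_history, first_move="C"):
    """Assumed rule: open with first_move, then do the opposite of the partner's previous move."""
    if not partner_history:
        return first_move
    return "D" if partner_history[-1] == "C" else "C"


def play(strategy, partner_moves):
    """Robot moves produced by `strategy` against a fixed sequence of partner moves.

    The robot's move in round t depends only on the partner's moves before round t.
    """
    return [strategy(partner_moves[:t]) for t in range(len(partner_moves))]


partner = ["C", "D", "D", "C"]
print(play(tit_for_tat, partner))          # ['C', 'C', 'D', 'D']
print(play(inverse_tit_for_tat, partner))  # ['C', 'D', 'C', 'C']
```

The `first_move` parameter captures whether the robot “starts the game” cooperatively, which is one of the factors Sandoval et al. [40] report as increasing reciprocation.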

Researchers have done some work on the mechanism of reciprocity in human-robot interaction and its influence on human-robot relationships. On the one hand, prior research on human-robot reciprocity has mainly focused on persuasion [9, 41] and negotiation [42]. On the other hand, related research has mainly focused on the influence of robot behaviour on human-robot reciprocity; for example, when a robot provides good service or help, people in turn help the robot [43]. However, human-robot reciprocity has not been fully explored, and little research has examined the influence of situational factors on it. This research draws on the mechanism of human altruistic behaviour and compares the altruistic behaviour of robots in dynamic and static social networks. Compared with dynamic social networks, static social networks allow the altruistic behaviour of robots to trigger reciprocity in repeated interactions (direct reciprocity) [23]. Therefore, this study explores the impact of two scenarios, non-reciprocity (a dynamic social network) and reciprocity (a static social network), on human-robot interaction.

2.3 Human-robot Trust

As the basis of all interpersonal relationships, trust has received extensive attention from researchers. Dunn et al. [44] define trust as a state of mind in which the trusting party is willing to place himself or herself in a situation that makes that party vulnerable to harm by the trusted party, based on the trusted party’s ability and personality and on the emotional connection between the two parties. A large body of research has examined interpersonal trust. Trust is also one of the important factors affecting human-robot interaction. Billings et al. [45] argued that human trust in robots is crucial: it can affect the success of human-robot cooperation, determine the future use of robots and influence the outcomes of human-robot interaction.

Scholars in the field of human-robot interaction have studied the factors affecting human-robot trust in different situations. Some researchers have evaluated and quantified the impact of human, robot and environmental factors on human-robot trust and found that factors related to the robot itself, especially its performance, have the highest correlation with trust, while environmental factors have a moderate correlation [1]. Hancock et al. [1] further conclude that robot performance is the most influential factor in human-robot trust. Other researchers have successfully used ability-based robot trust models in research on robot decision-making [46] and to evaluate the effectiveness of human-robot teams [47].

Although research on interpersonal trust has made great progress and trust is generally believed to contain multiple dimensions, research on human-robot trust still mainly treats trust as a single-dimensional construct; only a few researchers have explored human-robot trust theoretically in more depth, and related empirical research is relatively scarce. Among studies that examine interpersonal trust from a multidimensional perspective, many scholars adopt the viewpoint of McAllister [5] and assume that trust has two dimensions: cognitive trust and emotional trust. Cognitive trust depends on a reasoned and objective evaluation of the characteristics of others, including ability, reliability, integrity and honesty [6]. Emotional trust comes from the development of emotional relationships and the degree to which the person who trusts feels safe and comfortable [48]. At present, empirical research on human-robot trust that uses a one-dimensional structure mainly addresses cognitive trust, that is, the influence of robot appearance and ability on human-robot trust. For example, van den Brule et al. [49] explored the impact of robot performance and behaviour on people’s trust and found that trust arises not only from whether a task is completed but also from how it is performed. Antos et al. [50] found that when a robot’s emotional expressions convey information, they affect people’s perception of trust.

Among the few studies that examine human-robot trust from a multidimensional perspective, Schaefer [51] theoretically proposed four dimensions of human-robot trust: trust tendency, emotion-based trust, cognition-based trust and trustworthiness. This research showed that emotion-based and cognition-based human-robot trust are dynamic and change with interaction, whereas trust tendency is a stable trait and trustworthiness, which is attributed to the characteristics of the robot, also remains relatively stable. Therefore, although Schaefer’s [51] research shows that human-robot trust has four dimensions, its dynamics resemble those of interpersonal trust: only cognitive trust and emotional trust develop over time during human-robot interaction, while the other two dimensions remain relatively stable [52]. This means that empirical research on human-robot interaction should pay more attention to cognitive and emotional trust. McAllister [5] emphasized the importance of measuring both dimensions of trust in empirical research and stated that cognitive trust contributes to the development of emotional trust. It is therefore necessary to explore the dual structure of trust in the dynamic process of human-robot interaction, which helps to clarify how robot and environmental factors influence human-robot trust.

In addition, in terms of subjective versus objective indicators, both cognitive trust and emotional trust are forms of subjective trust, whereas many studies use trust-related experimental paradigms to obtain objective indicators of trust. Such studies measure objective trust, that is, behavioural trust, by collecting data directly from experiments; this compensates for the drawback that subjective trust may be affected by factors other than the intended experimental manipulation [14]. For example, Haring et al. [53] used trust games to compare how people in different countries perceive and trust robots. Paeng et al. [54] used the coin entrustment game to analyse people’s cooperation with and trust in robots. Deligianis et al. [55] had participants play a cooperative visual tracking game with robots and measured human-robot trust by whether participants accepted the answers given by the robots.

To explore human-robot trust more comprehensively and to reduce the shortcomings of single dimensions and indicators, this research also focuses on different dimensions and evaluation indicators of human-robot trust and explores the relationship between human-robot trust and reciprocity and robot’s altruistic behaviour based on three aspects: emotional trust, cognitive trust, and behavioural trust.

2.4 Robot Intelligence

To make human-robot interaction more meaningful, robots must have the intellectual skills to support smooth interaction with humans. Although rapid advances in robotic hardware and artificial intelligence give robots rich functions, unless robots show sufficient intelligence, people will neither accept them as partners nor let them participate in human work and life. If robots are not intelligent enough to attend to and value human needs and expectations, they will not cooperate effectively with humans [56].

The intelligence of robots is usually defined as social intelligence. Albrecht [57] defines robot social intelligence as the ability to get along with others while winning their cooperation. He argues that robotic social intelligence requires social awareness, sensitivity to human needs and interests, a generous and considerate attitude, and a set of practical skills for interacting successfully with people in any setting. In human-robot interaction, once the robot shows human-like behaviour, such as human-like language, people pay more attention to its social intelligence than to its physical attractiveness [30]. Robots with a certain level of social intelligence can respond appropriately in social environments [58]. This allows people to feel that the robot understands their needs and that the interaction is natural and smooth, thereby reassuring them that the robot is capable of cooperating with them.

Observing robots’ behaviour is one way to judge their social intelligence. For example, by observing a robot’s behaviour, people can judge whether it understands their intentions and whether they can continue to cooperate with it. Duffy [30] emphasized the influence of appearance and voice/language on judgements of robot intelligence. Robots with a high degree of anthropomorphism, i.e., those that exhibit human-like behaviours such as gestures and facial expressions, can gain status in human-robot interactions. Mirnig et al. [59] deliberately programmed faulty behaviours into a robot and had participants build Lego bricks with robots that did or did not make mistakes; they found that participants preferred the robots that made mistakes. Ragni et al. [60] used reasoning tasks and number-memorization tasks in competitive game scenarios and provided further evidence on the perceived intelligence of robots that make mistakes on purpose: such robots aroused more positive emotions. Churamani [61] explored the impact of a companion robot’s personalized interaction ability on its perceived intelligence. Using a learning scenario in which users could hold personalized conversations before teaching a robot to recognize different objects, the study found that people perceived robots with personalized interaction functions as smarter and more likeable.

This study evaluates the intelligence that people perceive in robots with different altruistic behaviours across different reciprocity scenarios, and further explores the role of perceived intelligence in how robots’ altruistic behaviours and reciprocity affect human-robot trust.

3 Hypothesis

Compared with interpersonal trust, in the process of interacting with robots, human-robot trust is affected not only by reciprocity and altruism but also by the intelligence of robots. Therefore, this study explores the influence of reciprocity and robots’ altruistic behaviour on human-robot trust, including emotional trust, cognitive trust, and behavioural trust, and it explores the mediating role of perceived intelligence in these influences. This study constructs models of reciprocity, robots’ altruistic behaviour, human-robot trust and perceived intelligence and proposes seven hypotheses. The model frame is shown in Fig. 1.
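A mediating role of perceived intelligence can be illustrated with the product-of-coefficients approach: path a regresses the mediator on the predictor, path b regresses the outcome on the mediator while controlling for the predictor, and the indirect effect is a × b, usually tested with a bootstrapped confidence interval. The sketch below is a generic illustration on simulated data, not the analysis or data of this study; the function names, the 0/1 condition coding and the simulated coefficients are assumptions. It also treats observations as independent, so repeated measures within participants would call for a multilevel model instead.

```python
import numpy as np


def ols(y, *predictors):
    """Return OLS coefficients [intercept, b1, b2, ...] for y regressed on the predictors."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def indirect_effect(x, m, y):
    """Product-of-coefficients indirect effect a * b."""
    a = ols(m, x)[1]     # path a: predictor -> mediator
    b = ols(y, x, m)[2]  # path b: mediator -> outcome, controlling for the predictor
    return a * b


def bootstrap_ci(x, m, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = np.random.default_rng(seed)
    n = len(x)
    estimates = []
    for _ in range(n_boot):
        idx = rng.integers(0, n, size=n)  # resample observations with replacement
        estimates.append(indirect_effect(x[idx], m[idx], y[idx]))
    low, high = np.quantile(estimates, [alpha / 2, 1 - alpha / 2])
    return float(low), float(high)


# Simulated example: x = condition (0 = selfish, 1 = altruistic robot),
# m = perceived intelligence, y = trust score. These are NOT real data.
rng = np.random.default_rng(42)
x = rng.integers(0, 2, size=200).astype(float)
m = 0.8 * x + rng.normal(size=200)
y = 0.5 * m + 0.2 * x + rng.normal(size=200)
print(indirect_effect(x, m, y))  # true simulated value is 0.8 * 0.5 = 0.4
print(bootstrap_ci(x, m, y))
```

A confidence interval that excludes zero is the usual criterion for a significant indirect effect; the direct effect of the predictor is the coefficient on x in the path-b regression.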

Subjective trust takes two forms: cognitive trust and emotional trust [5]. The former is based on the individual’s belief in the partner’s reliability, and the latter is based on mutual care and concern. When a robot exhibits altruistic behaviour, people may consider it reliable, which increases cognitive trust; they may also see it as caring about them, that is, as not wanting them to gain too little, which increases emotional trust. Therefore, hypotheses H1a and H1b, that the robot’s altruistic behaviour affects emotional trust and cognitive trust, are proposed. In a fixed social network, the reciprocity created by a robot’s repeated altruistic behaviour may be intuitively regarded as reliability, thereby increasing cognitive trust. In addition, people may prefer to cooperate in such networks and thereby strengthen their emotional relationships with others, which increases emotional trust. Therefore, hypotheses H2a and H2b, that reciprocity affects emotional trust and cognitive trust, are proposed. In the dynamic process of human-robot interaction, human trust depends on the robot’s behaviour and on situational factors, and the robot’s altruistic behaviour and reciprocity are expected to increase people’s positive behavioural responses, that is, behavioural trust. Therefore, hypotheses H1c and H2c, that the altruistic behaviour of robots and reciprocity affect behavioural trust, are proposed.

H1

Robots’ altruistic behaviours will increase people’s trust in robots.

H1a

Robots’ altruistic behaviours will increase people’s emotional trust in robots.

H1b

Robots’ altruistic behaviours will increase people’s cognitive trust in robots.

H1c

Robots’ altruistic behaviours will increase people’s behavioural trust in robots.

H2

Cooperation that follows reciprocity will increase people’s trust in robots.

H2a

Cooperation that follows reciprocity will increase people’s emotional trust in robots.

H2b

Cooperation that follows reciprocity will increase people’s cognitive trust in robots.

H2c

Cooperation that follows reciprocity will increase people’s behavioural trust in robots.

Cognitive trust requires less time investment than emotional trust [61], and cognitive trust provides a basis for emotional trust. When people first work with robots, they do not know them and may focus on the robots’ behaviours. Once an impression of reliability (cognitive trust) is established through these behaviours, a positive perception of the robots (emotional trust) follows. In other words, in the dynamic process of human-robot interaction, a robot that meets people’s expectations of reliability supports the development of emotional bonds between humans and robots. Therefore, hypothesis H3, that cognitive trust affects emotional trust, is proposed.

H3

In the process of cooperation, people’s cognitive trust in robots significantly affects emotional trust.

When interacting with robots, humans tend to unconsciously attribute human characteristics to them. When people discover that robots can speak or show human-like behaviour, they seem to value the robots’ intelligence more than their attractiveness. In this study, when the robot partner exhibits altruistic behaviour, that is, human-like behaviour, humans are expected to rate the robot’s intelligence more highly. In addition, people also carry characteristics of their work environment over to human-robot interaction scenarios. When people repeatedly interact in a relatively fixed social network, one of their main goals may be to cooperate successfully with others; thus, in a reciprocity scenario, people may prefer to cooperate with robots and believe that the robots have a higher level of intelligence. Therefore, hypotheses H4 and H5, regarding the influence of reciprocity and robots’ altruistic behaviour on perceived intelligence, are proposed.

H4

People perceive robots as more intelligent in a reciprocity scenario.

H5

People perceive robots as more intelligent when the robots exhibit altruistic behaviour.

In human-robot interaction, once a robot exhibits human-like behaviour, people re-evaluate its intelligence, which in turn affects the interaction; thus, a robot partner must show a certain degree of intelligence in its physical appearance or language behaviour. Altruistic behaviour is a typical social, human-like behaviour. Once a robot exhibits this behaviour during an interaction, people will infer its motivation and inadvertently attribute a certain level of intelligence to it, which may shape the effect of robots’ altruistic behaviour on human-robot trust. Given the characteristics of static social networks, in reciprocity scenarios people expect to achieve cooperative goals and a win-win outcome through repeated interactions with robots, so they may believe that robots in reciprocity scenarios possess more of the social intelligence required for intelligent interaction. This may in turn shape the influence of reciprocity on human-robot trust. Such meaningful interaction contributes to the development of cognitive trust, emotional trust, and behavioural trust. Therefore, hypotheses H6 and H7 regarding the mediating role of perceived intelligence are proposed.

H6

Perceived intelligence mediates the effects of robots’ altruistic behaviours on human-robot trust.

H6a

Perceived intelligence mediates the effects of robots’ altruistic behaviours on emotional trust.

H6b

Perceived intelligence mediates the effects of robots’ altruistic behaviours on cognitive trust.

H6c

Perceived intelligence mediates the effects of robots’ altruistic behaviours on behavioural trust.

H7

Perceived intelligence mediates the effects of reciprocity on human-robot trust.

H7a

Perceived intelligence mediates the effects of reciprocity on emotional trust.

H7b

Perceived intelligence mediates the effects of reciprocity on cognitive trust.

H7c

Perceived intelligence mediates the effects of reciprocity on behavioural trust.

4 Method

4.1 Participants

In this study, 42 participants, all graduate students at Beijing University of Chemical Technology, were recruited through questionnaires. Of these, 26 were female (62%) and 16 were male (38%); 38 (90%) were aged 18–25 and 4 (10%) were aged 26–30. The participants’ social relationships were also assessed with 4 questions: being able to receive support and help from friends, having been alone in the past year, participating in activities of group organizations (unions, student organizations), and receiving support and care from peers. These questions were measured on a five-point Likert scale, where 1 = strongly disagree and 5 = strongly agree. According to the descriptive statistics, most participants believed that they had enough close friends who could provide support and help (M = 3.95, SD = 0.73), had rarely been alone in the past year (M = 2.31, SD = 1.13), often participated in activities organized by a group (M = 3.36, SD = 1.19), and could obtain support and care from their peers (M = 4.21, SD = 0.64). Detailed information is shown in Table 1.

All participants were divided into two groups of 21. The numbers of female participants in the two groups were 15 and 11, respectively. According to the t-test results, there were no significant differences between the two groups in friend relationships, life status, group activity experience, or friend support, and according to a chi-square test there was no significant difference in gender ratio (all p > 0.05). Detailed information is shown in Table 1.

4.2 Measures

This study has two independent variables: robots’ altruistic behaviour and reciprocity. Robots’ altruistic behaviour is a within-subjects variable (three levels: selfish, altruistic, control), and reciprocity is a between-subjects variable (two levels: reciprocity and non-reciprocity). The study includes three dependent variables, cognitive trust, emotional trust and behavioural trust, and one mediating variable, perceived intelligence.

This study uses the trust questionnaire developed by Komiak and Benbasat [47] to measure cognitive trust and emotional trust. In the original questionnaire, the cognitive trust and emotional trust scales had 5 and 3 items, respectively. Because this study does not involve robot knowledge or deception, two cognitive trust items, “This RA has good knowledge about products” and “This RA is honest”, were deleted. The resulting scale has 6 items: 3 for cognitive trust and 3 for emotional trust. This study also changed the descriptions of the recommendation agent (RA) in the Komiak and Benbasat scales to descriptions of the robot’s behaviour in the experimental tasks. For example, “I consider this RA to be of integrity” was changed to “I consider Mike to be of integrity in the investment game”. Behavioural trust was measured objectively through the experiment, as the total amount of money the participants shared in the trust game and the amount allocated to each robot. Participants entered these amounts into the experimental program, which recorded them automatically.

Perceived intelligence is measured with the intelligence scale of the Godspeed questionnaire [11]. The original scale has five items: Incompetent/Competent, Ignorant/Knowledgeable, Irresponsible/Responsible, Unintelligent/Intelligent, and Foolish/Sensible. Because this study does not involve or measure robot capabilities, the item “Incompetent/Competent” was deleted, leaving 4 items in the perceived intelligence scale.
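To make the measures concrete, a minimal scoring sketch follows. It assumes that each subscale score is the mean of its items (the text does not say whether item means or sums were used), and the item keys (`ct1`, `et1`, `pi1`, and so on) and the function name `score_participant` are hypothetical. Behavioural trust is read directly from the recorded trust-game allocations, as described above.

```python
from statistics import mean

# Hypothetical item keys; the study's actual variable names are not reported.
SUBSCALES = {
    "cognitive_trust": ["ct1", "ct2", "ct3"],                # 3 retained Komiak-Benbasat items
    "emotional_trust": ["et1", "et2", "et3"],                # 3 Komiak-Benbasat items
    "perceived_intelligence": ["pi1", "pi2", "pi3", "pi4"],  # 4 retained Godspeed items
}


def score_participant(responses: dict[str, int], allocations: dict[str, float]) -> dict[str, float]:
    """Average each subscale's 1-5 ratings (assumed scoring rule) and
    derive behavioural trust from the trust-game allocations."""
    scores: dict[str, float] = {}
    for scale, items in SUBSCALES.items():
        values = [responses[item] for item in items]
        if any(not 1 <= v <= 5 for v in values):
            raise ValueError(f"{scale}: ratings must lie on the 1-5 scale")
        scores[scale] = float(mean(values))
    # Behavioural trust: the amount allocated to each robot and the total shared.
    for robot, amount in allocations.items():
        scores[f"allocated_{robot}"] = float(amount)
    scores["total_shared"] = float(sum(allocations.values()))
    return scores


example = score_participant(
    {"ct1": 4, "ct2": 3, "ct3": 5, "et1": 2, "et2": 3, "et3": 4,
     "pi1": 3, "pi2": 4, "pi3": 4, "pi4": 5},
    {"altruistic": 20, "control": 10, "selfish": 5},
)
print(example["cognitive_trust"], example["total_shared"])  # 4.0 35.0
```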

In addition, this study includes 6 control variables: friend relationships, life status, activity experience, friend support, and the anthropomorphism and likability of the robots. The anthropomorphism and likability of the robots were measured after the experiment, and the remaining 4 variables were measured before it.

Apart from age and gender, all subjective scales used a 5-point Likert scale: 1 = strongly disagree; 5 = strongly agree. Age was measured on a 6-point scale: 1 = below 18 years old, 2 = 18–25, 3 = 26–30, 4 = 31–40, 5 = 41–50, 6 = over 60.

4.3 Experimental Design

This study developed a human-robot interaction program, investment game.exe. The program contains a virtual robot, shown in Fig. 2. The robot can interact with the participants by speaking; the program displays information to the participants through a computer interface and obtains their feedback from the information they enter, thereby achieving human-robot interaction. The interactive interface is shown in Fig. 3.

In the experiment, the participants completed an investment game with virtual robots and then a trust game based on their experience in the investment game. In the investment game, each participant played the role of an entrepreneur who, together with three robot entrepreneurs, invested in 5 public environment renovation projects to improve the competitiveness of the city. Before each investment, the participant and each robot entrepreneur received a 10 RMB investment fund, and the participant decided how much of it to invest. For every 1 RMB invested, the city returns 1.6 RMB. These returns are paid out as rebates and distributed evenly among the participant and the robot entrepreneurs.
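The payoff rule of the investment game can be made concrete with a short sketch. It assumes, as the description implies, a group of four (the participant plus three robot entrepreneurs), a 10 RMB endowment per round, and a pooled return of 1.6 RMB per RMB invested that is split evenly among the four players. The function name `round_payoffs` is illustrative and is not taken from investment game.exe; the robot amounts in the example are the opening investments of the altruistic (8 RMB), selfish (3 RMB) and control (5 RMB) robots.

```python
ENDOWMENT = 10.0   # RMB each player receives before a round
MULTIPLIER = 1.6   # RMB returned to the group per RMB invested


def round_payoffs(contributions: list[float]) -> list[float]:
    """Payoff of each player in one round of the public goods game.

    contributions[0] is the participant; the remaining entries are robots.
    Payoff = amount kept + equal share of the multiplied public pool.
    """
    for c in contributions:
        if not 0 <= c <= ENDOWMENT:
            raise ValueError(f"contribution {c} outside [0, {ENDOWMENT}]")
    share = MULTIPLIER * sum(contributions) / len(contributions)
    return [ENDOWMENT - c + share for c in contributions]


# Participant invests 5; robots: altruistic 8, selfish 3, control 5.
payoffs = round_payoffs([5, 8, 3, 5])
print([round(p, 2) for p in payoffs])  # [13.4, 10.4, 15.4, 13.4]
```

Because the pool is shared four ways, each RMB a player invests returns only 1.6 / 4 = 0.4 RMB to that player, so within a round the lowest contributor earns the most even though every contribution raises the group total. This social dilemma is what lets investment levels signal altruistic or selfish behaviour.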

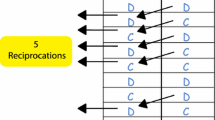

All participants were divided into two groups of 21 people: a reciprocity group and a non-reciprocity group. The robot entrepreneurs faced by the two groups were different. In the reciprocity group, the participants faced the same three robot entrepreneurs in every round of investment. In the non-reciprocity group, the participants faced different entrepreneurs in different rounds, which the system randomly selected from a list of 30 robot entrepreneurs (the 30 robots differed only in their names). This difference in the robot entrepreneurs faced by the two groups constitutes the two levels of the independent variable reciprocity.

In each investment round, the three robot entrepreneurs invest different amounts: one invests a higher amount, one a lower amount, and one a medium amount. These are the three levels of the independent variable robots’ altruistic behaviour. The robot with the higher investment is considered altruistic (altruistic robot), the robot with the lower investment is considered selfish (selfish robot), and the robot with the medium investment serves as the control (control robot). The investment game draws on the classic multi-round Public Goods Game paradigm, in which most participants’ contributions decline as the experiment progresses [62, 63]. Therefore, at the beginning of the investment game, the altruistic robot invests 8 RMB, the selfish robot 3 RMB, and the control robot 5 RMB. As the rounds progress, the investments of all three robots decline, eventually dropping to 6 RMB for the altruistic robot, 1 RMB for the selfish robot, and 4 RMB for the control robot.
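The text gives only each robot’s opening and closing investment. The sketch below shows one way such a declining schedule could be generated; it assumes five rounds (one per renovation project) and a straight-line decline between the two end points, both of which are illustrative assumptions rather than the study’s reported per-round values. `linear_schedule` is a hypothetical helper.

```python
def linear_schedule(start: float, end: float, rounds: int) -> list[float]:
    """Evenly spaced investments from `start` (first round) to `end` (last round).

    Hypothetical: the study reports only the start and end values.
    """
    if rounds < 2:
        raise ValueError("need at least two rounds to interpolate")
    step = (end - start) / (rounds - 1)
    return [start + step * i for i in range(rounds)]


# Altruistic robot: 8 RMB in the first round, 6 RMB in the last.
print(linear_schedule(8, 6, 5))  # [8.0, 7.5, 7.0, 6.5, 6.0]
```

A real schedule might instead round to whole RMB or decline non-linearly.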

After the investment game, the participants completed the trust game. Before it started, each participant received a fund of 50 RMB that could be invested in the three robot entrepreneurs. The participants decided how to divide money from the 50 RMB fund among the three robots, without exceeding the fund. Each robot actually receives 3 times the amount allocated by the participant and then decides whether to return a rebate to the participant. To obtain higher returns, the participants had to predict each robot’s rebate from their experience in the investment game. In the reciprocity group, the participants faced the same robot entrepreneurs in the trust game as in the investment game. In the non-reciprocity group, the entrepreneurs in the trust game were the robots that had invested the most, a medium amount, and the least in the investment game.
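The trust-game settlement can be sketched as follows. The tripling of allocations and the 50 RMB fund come from the text; the rule that total allocations may not exceed the fund, and the `rebate_share` fractions standing in for the robots’ unpublished rebate decisions, are assumptions. `settle_trust_game` is a hypothetical name. The returned `total_shared` corresponds to the behavioural-trust measure.

```python
FUND = 50.0             # RMB each participant can allocate
TRUST_MULTIPLIER = 3.0  # each allocated RMB is tripled for the receiving robot


def settle_trust_game(allocations: dict[str, float],
                      rebate_share: dict[str, float]) -> dict[str, float]:
    """Settle one trust game.

    allocations: RMB the participant gives each robot (the behavioural-trust data).
    rebate_share: hypothetical fraction of its tripled amount each robot returns;
    the robots' actual rebate rule is not published.
    """
    total = float(sum(allocations.values()))
    if any(a < 0 for a in allocations.values()) or total > FUND:
        raise ValueError("allocations must be non-negative and fit within the fund")
    received = {robot: TRUST_MULTIPLIER * a for robot, a in allocations.items()}
    returned = sum(rebate_share.get(robot, 0.0) * amount for robot, amount in received.items())
    return {"total_shared": total, "kept": FUND - total,
            "returned": returned, "final_balance": FUND - total + returned}


result = settle_trust_game({"altruistic": 20, "control": 10, "selfish": 5},
                           {"altruistic": 0.5, "control": 0.25, "selfish": 0.0})
print(result)  # {'total_shared': 35.0, 'kept': 15.0, 'returned': 37.5, 'final_balance': 52.5}
```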

4.4 Experimental Setup

Each participant took part in the experiment individually. Before the experiment, the participants signed an informed consent form, read the experiment description and game background, and completed the pre-experiment questionnaire, which covered friend relationships, life status, group activity experience, friend support, the anthropomorphism and likability of the robots, and three questions about the experimental rules. These three questions checked whether the participants understood the rules of the game: “In the investment game, will your return of each round be affected by the robot partner?”, “In the investment game, will the amount of your investment affect the return of the robot partner?”, and “If there is an impact, which part of the income of the other party is mainly affected by your investment amount?”. The participants’ answers did not differ significantly from the correct answers, which indicates that they understood the rules of the game. After confirming that the participants had completed the questionnaire, the experimenter opened the experimental interface of investment game.exe, shown in Fig. 4a. The three robots greeted the participants in turn, as shown in Fig. 4b.

The experimenter then left, the game started, and the participants played each round of the investment game, followed by the trust game. In each investment round, the participants first entered their investment for the project in the program, as shown in Fig. 4c and d. They then saw the investment amounts of the robot entrepreneurs, as shown in Fig. 4e, and their income for the round, as shown in Fig. 4f. In the trust game, the participants allocated money to the three robots, as shown in Fig. 5a, and received feedback on the robots’ rebates, as shown in Fig. 5b.

At the end of the game, the participants completed a post-experiment questionnaire, which included questions addressing aspects such as cognitive trust, emotional trust, perceived intelligence, and the anthropomorphism and likability of robots.

5 Results

The data analysis of this study consists of three steps. First, we compute descriptive statistics, analyse the correlations between variables, and check whether the two groups differ. Second, we examine the influence of reciprocity and robots’ altruistic behaviour on trust. Third, we examine how perceived intelligence mediates the effects of robots’ altruistic behaviours and reciprocity on cognitive trust, emotional trust and behavioural trust.

5.1 Manipulation Check

According to the t-test results, there were no significant differences between the two groups in friend relationships, life status, group activity experience, friend support, or the anthropomorphism and likability of the robots (all p > 0.05), as shown in Table 1. According to the chi-square test, the sex ratio also did not differ significantly between the two groups (p = 0.341). These results indicate that the two groups were comparable and that further data analysis could be carried out.
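The gender-ratio test can be checked from the group counts given in Section 4.1 (15 and 11 women in two groups of 21). The sketch below computes a 2×2 Pearson chi-square test; the text does not state whether a continuity correction was used, so both variants are shown. With Yates’ correction the statistic is about 0.91 and p ≈ 0.34, in line with the reported p = 0.341; without it, p ≈ 0.20. The function name is illustrative.

```python
import math


def chi_square_2x2(table: list[list[int]], yates: bool = True) -> tuple[float, float]:
    """Pearson chi-square for a 2x2 table; returns (statistic, p-value) with 1 df."""
    n = sum(sum(row) for row in table)
    row_totals = [sum(row) for row in table]
    col_totals = [table[0][j] + table[1][j] for j in range(2)]
    stat = 0.0
    for i in range(2):
        for j in range(2):
            expected = row_totals[i] * col_totals[j] / n
            diff = abs(table[i][j] - expected)
            if yates:
                diff = max(diff - 0.5, 0.0)  # Yates' continuity correction
            stat += diff ** 2 / expected
    # For 1 degree of freedom, P(chi2 > x) = erfc(sqrt(x / 2)).
    return stat, math.erfc(math.sqrt(stat / 2))


# Rows: the two groups; columns: [female, male].
corrected = chi_square_2x2([[15, 6], [11, 10]])
uncorrected = chi_square_2x2([[15, 6], [11, 10]], yates=False)
print(round(corrected[1], 2), round(uncorrected[1], 2))  # 0.34 0.2
```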

The reliability test results show that the Cronbach’s alpha values of perceived intelligence, cognitive trust, and emotional trust are 0.83, 0.62, and 0.77, respectively. All exceed 0.6, indicating that the internal consistency of the three scales is acceptable [64].
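For reference, Cronbach’s alpha for a k-item scale is α = k/(k − 1) · (1 − Σσᵢ² / σ²_total), where σᵢ² are the item variances and σ²_total is the variance of respondents’ summed scores. The sketch below computes it with sample variances from the Python standard library; the data layout (one list of ratings per item) is an assumption, and the toy inputs are not study data.

```python
from statistics import variance


def cronbach_alpha(items: list[list[float]]) -> float:
    """Cronbach's alpha; items[i][r] is respondent r's rating on item i."""
    k = len(items)
    if k < 2:
        raise ValueError("alpha needs at least two items")
    n = len(items[0])
    if n < 2 or any(len(item) != n for item in items):
        raise ValueError("every item needs the same number (>= 2) of respondents")
    totals = [sum(item[r] for item in items) for r in range(n)]
    item_variance_sum = sum(variance(item) for item in items)
    return k / (k - 1) * (1 - item_variance_sum / variance(totals))


# Toy data: three items that rank four respondents identically.
print(round(cronbach_alpha([[1, 2, 3, 4], [2, 3, 4, 5], [1, 2, 3, 4]]), 3))  # 1.0
```

Alpha depends on the number of items as well as on their intercorrelations, so short three-item scales such as the trust subscales here tend to yield lower values than longer scales with the same inter-item correlations.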

According to the correlation test results, perceived intelligence, cognitive trust, emotional trust and behavioural trust are significantly correlated with one another (all p < 0.05), as shown in Table 2. These results indicate that further data analysis can be carried out.

5.2 The Effects of Reciprocity and Robots’ Altruistic Behaviours on Trust

According to the descriptive statistics, the mean perceived intelligence in every condition is greater than 3.1, indicating that the participants perceive a certain level of intelligence in the robots. For emotional trust and cognitive trust, when the robot is selfish the means are 2.51 and 2.62, respectively, which are below the scale midpoint of 3, indicating numerically that participants do not trust selfish robots. In the other conditions, the means are greater than 3.1, above the midpoint, indicating numerically that participants trust the robots. For behavioural trust, the mean allocation to the selfish robot is 6.77 RMB (the amount of the 50 RMB trust-game fund that participants allocated to that robot), and the mean allocation to the altruistic robot is 15.54 RMB, indicating that altruistic robots are numerically more trusted. In the reciprocity scenario, the mean is 31.14 RMB (the total amount allocated to the three robots from the 50 RMB fund in the trust game), and in the non-reciprocity scenario it is 19.29 RMB, which means that participants’ behavioural trust is numerically higher in the reciprocity scenario. Detailed descriptive statistics are shown in Table 3.

Analysis of variance was used to test the influence of the robots’ altruistic behaviours and reciprocity on the participants’ trust. There was no interaction effect between the robots’ altruistic behaviours and reciprocity on emotional or cognitive trust (both p > 0.05), so only the main effects are considered. The robots’ altruistic behaviours and reciprocity both have a significant effect on emotional trust (F = 7.593, p < 0.05; F = 9.525, p < 0.05). The robots’ altruistic behaviours have a significant effect on cognitive trust (F = 8.906, p < 0.05), whereas reciprocity has no significant effect on cognitive trust (p > 0.05). The robots’ altruistic behaviours and reciprocity both have a significant effect on behavioural trust (F = 46.265, p < 0.05; F = 388.760, p < 0.05). Detailed results are shown in Table 4.

To control the inflation of the Type I error rate caused by multiple comparisons, the post hoc p-values were Bonferroni-corrected. According to the corrected results, the participants’ emotional trust was significantly higher in the non-reciprocity scenario than in the reciprocity scenario (M = 3.462, SD = 0.077; M = 3.13, SD = 0.077; t = 0.325, p = 0.006). The participants’ behavioural trust in the non-reciprocity scenario was lower than in the reciprocity scenario (M = 19.29, SD = 6.68; M = 31.14, SD = 6.79; t = −0.05, p < 0.001). There was no difference in the participants’ cognitive trust between the two scenarios (M = 3.25, SD = 0.56; M = 3.20, SD = 0.53; t = 1.71, p = 0.559).
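The Bonferroni step is simple enough to state as code. For the three-level within-subjects factor there are three pairwise comparisons (altruistic-selfish, altruistic-control, control-selfish), so each raw p-value is multiplied by 3 and capped at 1; equivalently, each raw p-value is compared against α/3. The raw p-values in the example are invented for illustration and are not taken from Table 5.

```python
def bonferroni(p_values: list[float]) -> list[float]:
    """Bonferroni-adjusted p-values: multiply by the number of tests, cap at 1."""
    m = len(p_values)
    return [min(1.0, p * m) for p in p_values]


raw = [0.004, 0.02, 0.4]  # hypothetical raw p-values for three pairwise tests
adjusted = bonferroni(raw)
print([round(p, 3) for p in adjusted])  # [0.012, 0.06, 1.0]
```

At α = 0.05, only the first of these hypothetical comparisons remains significant after adjustment.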

The participants’ emotional trust in altruistic robots was higher than in selfish robots (t = 0.257, p < 0.001), and control robots did not differ significantly from the other two levels. The participants’ cognitive trust in altruistic robots was higher than in robots at the other two levels (altruistic-selfish, t = 0.239, p = 0.042; altruistic-control, t = 1.075, p < 0.001), and there was no significant difference between control robots and selfish robots (p > 0.05). The participants’ behavioural trust in altruistic robots was higher than in robots at the other two levels (altruistic-selfish, t = 0.109, p < 0.001; altruistic-control, t = 0.139, p < 0.001), and there was no significant difference between control robots and selfish robots (p > 0.05). Detailed results are shown in Table 5.

5.3 The Mediating Role of Perceived Intelligence and the Relationship Between Cognitive Trust and Emotional Trust

Regression analysis was used to examine the relationship between cognitive trust and emotional trust and the mediating role of perceived intelligence. Cognitive trust has a significant positive effect on emotional trust (B = 0.820, p < 0.05). For emotional trust, Model 1 regresses the mediator, perceived intelligence, on the independent variables; both reciprocity and the robots’ altruistic behaviours are significant predictors (reciprocity: B = −0.211, p < 0.05; robots’ altruistic behaviours: B = 0.264, p < 0.05). Model 2 regresses emotional trust on the independent variables; both are significant predictors (reciprocity: B = 0.267, p < 0.05; robots’ altruistic behaviours: B = 0.499, p < 0.05). Model 3 regresses emotional trust on the independent variables and the mediator together; reciprocity, the robots’ altruistic behaviours and perceived intelligence are all significant (all p < 0.05). Thus, perceived intelligence partially mediates the effects of the robots’ altruistic behaviours and reciprocity on emotional trust.

For cognitive trust, Model 1 (perceived intelligence regressed on the independent variables) is the same as above: both reciprocity and the robots’ altruistic behaviours are significant predictors (reciprocity: B = −0.211, p < 0.05; robots’ altruistic behaviours: B = 0.264, p < 0.05). In Model 2, cognitive trust is significantly predicted by the robots’ altruistic behaviours (B = 0.475, p < 0.05) but not by reciprocity (p > 0.05). In Model 3, cognitive trust is significantly predicted by the robots’ altruistic behaviours and by the mediator, perceived intelligence (all p < 0.05). Therefore, perceived intelligence partially mediates the effect of the robots’ altruistic behaviours on cognitive trust but does not mediate the effect of reciprocity on cognitive trust.
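The three regression models described above follow the classic causal-steps logic for mediation. The sketch below fits them with ordinary least squares using NumPy on synthetic data. For brevity, it uses a single independent variable, whereas the study’s models entered reciprocity and altruistic behaviour together, and it omits the significance tests the study reports. Function names and the simulated effect sizes are illustrative only.

```python
import numpy as np


def ols_coefficients(y: np.ndarray, *predictors: np.ndarray) -> np.ndarray:
    """Ordinary least squares with an intercept; returns [intercept, b1, b2, ...]."""
    X = np.column_stack([np.ones(len(y)), *predictors])
    coef, *_ = np.linalg.lstsq(X, y, rcond=None)
    return coef


def three_step_mediation(x: np.ndarray, m: np.ndarray, y: np.ndarray) -> dict[str, float]:
    a = ols_coefficients(m, x)[1]              # Model 1: mediator on IV
    c = ols_coefficients(y, x)[1]              # Model 2: outcome on IV (total effect)
    _, c_prime, b = ols_coefficients(y, x, m)  # Model 3: outcome on IV and mediator
    return {"a": float(a), "c": float(c), "c_prime": float(c_prime),
            "b": float(b), "indirect": float(a * b)}


# Synthetic data (not the study's): binary condition x, mediator m, outcome y.
rng = np.random.default_rng(0)
n = 5000
x = rng.integers(0, 2, size=n).astype(float)
m = 0.5 * x + rng.normal(size=n)
y = 0.3 * x + 0.6 * m + rng.normal(size=n)
result = three_step_mediation(x, m, y)
print({k: round(v, 2) for k, v in result.items()})
```

In an OLS fit on the same sample, the total effect decomposes exactly as c = c′ + a·b. A drop from c to a c′ that remains significant corresponds to the partial mediation reported above, whereas c′ ≈ 0 would indicate full mediation.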

For behavioural trust, the coefficient of perceived intelligence in Model 3 did not reach significance, so the Sobel test was used to further check for mediation. According to the test results (reciprocity: p = 0.364; robots’ altruistic behaviours: p = 0.766), perceived intelligence does not mediate the effects of reciprocity or robots’ altruistic behaviours on behavioural trust. Detailed results are shown in Table 6.
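The Sobel test compares the indirect effect a·b with its first-order standard error, z = a·b / √(b²·SE_a² + a²·SE_b²), and refers z to the standard normal distribution. A minimal sketch follows; the coefficients in the example are hypothetical, not the values in Table 6.

```python
import math


def sobel_test(a: float, se_a: float, b: float, se_b: float) -> tuple[float, float]:
    """First-order Sobel test; returns (z, two-sided p).

    a: effect of the independent variable on the mediator (standard error se_a)
    b: effect of the mediator on the outcome, controlling for the IV (standard error se_b)
    """
    se_ab = math.sqrt(b ** 2 * se_a ** 2 + a ** 2 * se_b ** 2)
    z = a * b / se_ab
    p = math.erfc(abs(z) / math.sqrt(2))  # equals 2 * (1 - Phi(|z|))
    return z, p


z, p = sobel_test(a=0.5, se_a=0.1, b=0.4, se_b=0.1)
print(round(z, 2), p < 0.01)  # 3.12 True
```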

6 Discussion

H1 is verified: people’s emotional trust, cognitive trust and behavioural trust are significantly higher for altruistic robots than for selfish robots, and their cognitive and behavioural trust are also significantly higher for altruistic robots than for control robots. This is consistent with findings on interpersonal collaboration: among partners with different levels of altruism, people trust more generous partners and distribute money to the most selfless group members [65]. The most altruistic members may attract the best or the most partners [62].

H2 is only partially verified. In the reciprocity scenario, the participants’ behavioural trust was higher than in the non-reciprocity scenario, whereas their emotional trust was lower and their cognitive trust did not differ between the scenarios. The findings on behavioural trust are consistent with those of previous studies: people in a reciprocity scenario prefer to cooperate with robots to obtain more benefits through long-term interaction, and this incentive leads to higher behavioural trust [62]. Little previous research has explored the relationship between emotional trust and reciprocity. In the reciprocity scenario, the participant’s robot partners are fixed. Hancock et al. [1] pointed out that people tend to neglect a robot when they work with it for a long time, and that neglect tolerance should be adjusted to the robot’s ability and the degree of human-robot trust. Too much neglect makes it difficult for individuals to regain situation awareness after redirecting attention back to the robot; too little neglect means that people do not attend to their own tasks, which leads to poor outcomes for the human-robot interaction as a whole. In the non-reciprocity scenario, the participant’s robot partners change constantly, so trust is less likely to be reduced by neglecting the robot, and the participant’s emotional trust can be maintained at a relatively stable level. Thus, in the reciprocity scenario, the participants’ emotional trust is lower than in the non-reciprocity scenario; however, the specific mechanism requires further verification. Reciprocity has no effect on cognitive trust, which is consistent with the research of Gompe et al. [51]. Emotional trust develops faster than cognitive trust in the early stages of human-robot interaction, whereas cognitive trust develops faster than emotional trust in the later stages. In this study, the human-robot interaction in the experiment was short, so emotional trust had developed but cognitive trust had not been fully established; thus, cognitive trust did not show the influence of the experimental variables.

H3 is verified: people’s cognitive trust in robots significantly affects their emotional trust, which is consistent with previous studies on interpersonal interaction. For example, Punyatoya [61] proposed that consumers’ cognitive trust and emotional trust in online retailers are positively correlated, and Johnson et al. [66] reported that customers’ cognitive trust in service providers significantly affects their emotional trust.

H4 and H5 are verified; that is, reciprocity and robots’ altruistic behaviours affect perceived intelligence. Robots can convey their intelligence through speech and behaviour. Once a robot shows human-like characteristics (such as speech and language), humans unconsciously evaluate its intelligence [11]; for example, Correia et al. [67] suggested that robots’ emotions affect people’s perceptions of robots and that people prefer robots that display group emotions. The robots’ altruistic behaviours in this study are typical social, human-like behaviours [68], and the participants judged altruistic robots to be more intelligent. In addition, according to the principle of reciprocity in social interaction, people hope to achieve cooperation and a win-win outcome through repeated interactions. In this study, participants in the reciprocity scenario were more likely to attribute social intelligence to the robot because of its capacity for repeated interaction, and thus rated its intelligence more highly.

H6 and H7 are partially verified. Perceived intelligence mediated the effects of robots’ altruistic behaviours on cognitive and emotional trust and the effect of reciprocity on emotional trust, but it did not mediate the effect of reciprocity on cognitive trust or the effects of either factor on behavioural trust. Behavioural trust mainly depends on the robots’ investment behaviour, and this type of investment behaviour does not reflect sensitivity to human needs and interests [56]; that is, it does not reveal the robots’ level of social intelligence. Thus, perceived intelligence did not mediate the effects of reciprocity and robots’ altruistic behaviours on behavioural trust; however, the specific mechanism requires further research.

The results of this study show that reciprocity and robots’ altruistic behaviours affect human-robot trust, which is broadly consistent with research on interpersonal trust. Van Den Bos et al. [69] argued that the reciprocity of trust is very important in social interactions and that reciprocity motivation affects mutual trust between individuals. In this study, human-robot interaction is likewise affected by reciprocity, which in turn affects human-robot trust. Dohmen [70] further examined the relationship between trust and reciprocity and found that trust is weakly related to positive reciprocity and negatively related to negative reciprocity; whether the same pattern holds in human-robot interaction requires future study. Barclay [62] showed that people tend to trust altruistic individuals and that competitive altruism helps maintain cooperative behaviour. This study likewise shows that people are more inclined to trust altruistic robots and that such robots can maintain a certain degree of cooperation and trust. Drawing on theories of dyadic interpersonal trust, this study measured emotional trust and cognitive trust and found that in the human-robot relationship, too, cognitive trust has a significant effect on emotional trust, which is consistent with existing conclusions about interpersonal trust [61, 66]. This suggests that research conclusions on interpersonal reciprocity, altruism and trust can be transferred to the context of human-robot interaction, providing a basis for the future development of human-robot interaction theory.

In addition, this study measured objective trust, that is, behavioural trust. The results show that robots’ altruistic behaviour affects subjective trust (emotional and cognitive trust) and objective trust (behavioural trust) consistently: when robots are altruistic, human trust is higher. Reciprocity, by contrast, affects subjective and objective trust inconsistently: cooperation following reciprocity increases participants’ behavioural trust but does not increase emotional trust or cognitive trust. One possible explanation for this inconsistency is that, compared with subjective trust, behavioural trust depends more on participants’ intuitive experience in the experiment. In the reciprocity scenario, when humans and robots interact repeatedly in a fixed social network, one of the main goals may be to cooperate successfully with others [17, 18], with the aim of obtaining more returns in the trust game; therefore, behavioural trust is higher than in the non-reciprocity scenario. Subjective trust, in addition to the intuitive experience in the experiment, also depends on participants’ judgements of the robot’s reliability and of their emotional relationship with it. At present, the intelligence and sociality of robots remain limited. People may recognize that robots are not human and that their social attributes are not equivalent to those of humans. Therefore, changes in reciprocity alone cannot significantly increase people’s subjective trust.

Finally, this research was carried out in a laboratory environment, drawing on established trust experiment paradigms and abstracting complex human-robot interaction scenarios into simpler experimental tasks. Future research will consider more complex tasks and scenarios as well as longer-term human-robot interaction, providing more empirical evidence for research on human-robot interaction and human-robot trust. In addition, this study focused on robots' altruistic behaviour. Future studies could examine other social behaviours of robots to explore how robot anthropomorphism affects human-robot trust, and how that trust resembles and differs from interpersonal trust, in order to support the development of reliable and acceptable intelligent robot products. This study examined how robot behaviour and reciprocity influence human-robot trust; future research could extend this work to the influence of human factors. Previous studies have more often treated human-robot trust as a unidimensional variable, and their results suggest that human-related factors, such as personality characteristics, demographic characteristics (culture, educational background) [71] and self-construal [72], may affect human-robot trust, although the influence is small [1]. This study analyses human-robot trust in more depth, examining it along emotional, cognitive and behavioural dimensions, and finds that, viewed along these dimensions, the influences on human-robot trust differ from previous research conclusions. Future research can therefore further explore how human factors influence multi-dimensional human-robot trust.

7 Conclusions

The social attributes and anthropomorphic behaviour of robots are among the most important factors affecting human-robot interaction. Altruistic behaviour is a typical social behaviour, and reciprocity is a typical norm of social interaction. This study investigated the influence of reciprocity and robots' altruistic behaviour on human-robot trust along multiple dimensions (emotional, cognitive and behavioural trust) and examined how perceived intelligence mediates these effects. Based on the classic trust game paradigm, this study designed a human-robot interaction experiment involving 42 participants. The results show that robots' altruistic behaviour has a significant impact on emotional, cognitive and behavioural trust: participants' emotional, cognitive and behavioural trust in altruistic robots was significantly higher than in the selfish and control robots. Reciprocity significantly affects only emotional trust and behavioural trust and has no significant effect on cognitive trust. In the non-reciprocity scenario, people's emotional trust in robots is higher than in the reciprocity scenario, whereas behavioural trust shows the opposite pattern. Perceived intelligence mediates the effects of robots' altruistic behaviours on emotional and cognitive trust and the effect of reciprocity on emotional trust, but it does not mediate the effects on behavioural trust.
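The mediation result summarised above can be illustrated with a minimal sketch of one common procedure, the product-of-coefficients indirect effect (a·b) with a percentile bootstrap confidence interval. This section does not state which mediation procedure the study used, so the sketch is an assumption and not the authors' analysis: it treats one binary-coded predictor X (e.g., control vs. altruistic robot), one mediator M (perceived intelligence) and one outcome Y (a trust score), fits ordinary least squares, and ignores the repeated-measures structure of the actual experiment. All function names and the synthetic data are hypothetical.

```python
import random


def slope(x, y):
    """OLS slope of y on x (intercept included); path a when y is the mediator."""
    n = len(x)
    mx, my = sum(x) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    return sxy / sxx


def two_predictor_coefs(x, m, y):
    """OLS coefficients (c_prime, b) of y on x and m, intercept included.

    Solves the 2x2 normal equations on mean-centred data.
    """
    n = len(x)
    mx, mm, my = sum(x) / n, sum(m) / n, sum(y) / n
    sxx = sum((xi - mx) ** 2 for xi in x)
    smm = sum((mi - mm) ** 2 for mi in m)
    sxm = sum((xi - mx) * (mi - mm) for xi, mi in zip(x, m))
    sxy = sum((xi - mx) * (yi - my) for xi, yi in zip(x, y))
    smy = sum((mi - mm) * (yi - my) for mi, yi in zip(m, y))
    det = sxx * smm - sxm ** 2
    c_prime = (smm * sxy - sxm * smy) / det  # direct effect of x, controlling for m
    b = (sxx * smy - sxm * sxy) / det        # effect of m on y, controlling for x
    return c_prime, b


def indirect_effect(x, m, y):
    """Product-of-coefficients indirect effect a * b of x on y through m."""
    a = slope(x, m)
    _, b = two_predictor_coefs(x, m, y)
    return a * b


def bootstrap_ci(x, m, y, n_boot=2000, alpha=0.05, seed=0):
    """Percentile bootstrap confidence interval for the indirect effect."""
    rng = random.Random(seed)
    n = len(x)
    estimates = []
    while len(estimates) < n_boot:
        idx = [rng.randrange(n) for _ in range(n)]  # resample cases with replacement
        try:
            estimates.append(indirect_effect([x[i] for i in idx],
                                             [m[i] for i in idx],
                                             [y[i] for i in idx]))
        except ZeroDivisionError:
            continue  # skip degenerate resamples (e.g., all x values identical)
    estimates.sort()
    lower = estimates[round(alpha / 2 * n_boot)]
    upper = estimates[round((1 - alpha / 2) * n_boot) - 1]
    return lower, upper


if __name__ == "__main__":
    # Hypothetical synthetic data, not the study's data: x codes robot condition
    # (0 = control, 1 = altruistic), m stands in for perceived intelligence,
    # y for a trust score.
    gen = random.Random(42)
    x = [i % 2 for i in range(42)]
    m = [2.0 * xi + gen.gauss(0, 0.5) for xi in x]
    y = [1.5 * mi + gen.gauss(0, 0.5) for mi in m]
    print("indirect effect:", round(indirect_effect(x, m, y), 3))
    print("95% bootstrap CI:", bootstrap_ci(x, m, y))
```

Under this approach, mediation is usually inferred when the bootstrap interval for a·b excludes zero; a within-subjects design such as the one described here would normally call for a repeated-measures or multilevel variant instead of plain OLS.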

According to the results, human-robot trust should be explored from a multi-dimensional perspective, because different dimensions of trust may be affected differently by scenario and robot factors. The mechanism by which human-robot trust is generated resembles that of interpersonal trust; for example, both reciprocity and robots' altruistic behaviours affect human-robot trust. In particular, this study shows that people are more inclined to trust altruistic robots, which is highly consistent with research on interpersonal trust. In addition, drawing on the two-dimensional theory of interpersonal trust, this study measured emotional trust and cognitive trust and found that, in the human-robot relationship, cognitive trust also has a significant impact on emotional trust, again consistent with conclusions about interpersonal trust. Moreover, in human-robot interaction scenarios, reciprocity can be adjusted to improve the emotional relationship between humans and robot partners. The study further suggests that robots can carry out meaningful activities with people only when they display a certain degree of social intelligence, and that robots' altruistic tendencies significantly affect human-robot trust. These findings have implications for the design of future human-robot collaboration mechanisms and may help researchers develop more reliable and trustworthy robot companions and design more efficient human-robot interaction environments.

References

Hancock PA, Billings DR, Schaefer KE, Chen JY, De Visser EJ, Parasuraman R (2011) A meta-analysis of factors affecting trust in human-robot interaction. Hum Factors 53(5):517–527. https://doi.org/10.1177/0018720811417254

Lee JJ, Knox B, Baumann J, Breazeal C, DeSteno D (2013) Computationally modeling interpersonal trust. Front Psychol 4:893. https://doi.org/10.3389/fpsyg.2013.00893

Haring KS, Matsumoto Y, Watanabe K (2013) How do people perceive and trust a lifelike robot. In: Proceedings of the world congress on engineering and computer science, San Francisco, USA, pp 1–6

Salem M, Lakatos G, Amirabdollahian F, Dautenhahn K (2015) Would you trust a (faulty) robot? Effects of error, task type and personality on human-robot cooperation and trust. In: 10th ACM/IEEE international conference on human-robot interaction (HRI), IEEE, pp 1–8

McAllister DJ (1995) Affect- and cognition-based trust as foundations for interpersonal cooperation in organizations. Acad Manag J 38(1):24–59. https://doi.org/10.5465/256727

Schaubroeck J, Lam SS, Peng AC (2011) Cognition-based and affect-based trust as mediators of leader behavior influences on team performance. J Appl Psychol 96(4):863. https://doi.org/10.1037/a0022625

Gouldner AW (1960) The norm of reciprocity: a preliminary statement. Am Sociol Rev 25(2):161–178. https://doi.org/10.2307/2092623

Fogg BJ, Nass C (1997) How users reciprocate to computers: an experiment that demonstrates behavior change. In: CHI'97 extended abstracts on Human factors in computing systems, New York, NY, USA, pp 331–332. https://doi.org/10.1145/1120212.1120419

Lee SA, Liang Y (2016) The role of reciprocity in verbally persuasive robots. Cyberpsychol Behav Soc Netw 19(8):524–527. https://doi.org/10.1089/cyber.2016.0124

Salem M, Rohlfing K, Kopp S, Joublin F (2011) A friendly gesture: investigating the effect of multimodal robot behavior in human-robot interaction. In: 2011 RO-MAN, IEEE, pp 247–252. https://doi.org/10.1109/ROMAN.2011.6005285

Häring M, Eichberg J, André E (2012) Studies on grounding with gaze and pointing gestures in human-robot-interaction. In: International conference on social robotics, Springer, Singapore, pp 378–387. https://doi.org/10.1007/978-3-642-34103-8_38

Bartneck C, Croft E, Kulic D (2008) Measuring the anthropomorphism, animacy, likeability, perceived intelligence and perceived safety of robots. In: Proceedings of the metrics for human-robot interaction workshop in affiliation with the 3rd ACM/IEEE international conference on human-robot interaction (HRI 2008), Technical Report 471, Amsterdam, pp 37–44. https://doi.org/10.6084/m9.figshare.5154805

Powers A, Kiesler S, Fussell S, Torrey C (2007) Comparing a computer agent with a humanoid robot. In: Proceedings of the ACM/IEEE international conference on Human-robot interaction, pp 145–152. https://doi.org/10.1145/1228716.1228736

Bainbridge WA, Hart JW, Kim ES, Scassellati B (2011) The benefits of interactions with physically present robots over video-displayed agents. Int J Soc Robotics 3(1):41–52. https://doi.org/10.1007/s12369-010-0082-7

Batson CD, Powell AA (2003) Altruism and prosocial behavior. Handb Psychol. https://doi.org/10.1002/0471264385.wei0519

Penner LA, Dovidio JF, Piliavin JA, Schroeder DA (2005) Prosocial behavior: multilevel perspectives. Annu Rev Psychol 56:365–392. https://doi.org/10.1146/annurev.psych.56.091103.070141

Leider S, Möbius MM, Rosenblat T, Do Q-A (2009) Directed altruism and enforced reciprocity in social networks. Q J Econ 124(4):1815–1851. https://doi.org/10.1162/qjec.2009.124.4.1815

Jordan JJ, Rand DG, Arbesman S, Fowler JH, Christakis NA (2013) Contagion of cooperation in static and fluid social networks. PLoS ONE 8(6):e66199. https://doi.org/10.1371/journal.pone.0066199

Christakis NA, Fowler JH (2009) Connected: The surprising power of our social networks and how they shape our lives. Little, Brown Spark

Fowler JH, Christakis NA (2010) Cooperative behavior cascades in human social networks. Proc Natl Acad Sci 107(12):5334–5338. https://doi.org/10.1073/pnas.0913149107

Hill AL, Rand DG, Nowak MA, Christakis NA (2010) Emotions as infectious diseases in a large social network: the SISa model. Proc Royal Soc B: Biol Sci 277(1701):3827–3835. https://doi.org/10.1098/rspb.2010.1217

Hill AL, Rand DG, Nowak MA, Christakis NA (2010) Infectious disease modeling of social contagion in networks. PLoS Comput Biol 6(11):e1000968. https://doi.org/10.1371/journal.pcbi.1000968

Keser C, Van Winden F (2000) Conditional cooperation and voluntary contributions to public goods. Scand J Econ 102(1):23–39. https://doi.org/10.1111/1467-9442.00182

Kocher MG, Cherry T, Kroll S, Netzer RJ, Sutter M (2008) Conditional cooperation on three continents. Econ Lett 101(3):175–178. https://doi.org/10.1016/j.econlet.2008.07.015

Imre M, Oztop E, Nagai Y, Ugur E (2019) Affordance-based altruistic robotic architecture for human-robot collaboration. Adapt Behav 27(4):223–241. https://doi.org/10.1177/1059712318824697

Correia F, Mascarenhas SF, Gomes S et al (2019) Exploring prosociality in human-robot teams. In: 14th ACM/IEEE international conference on human-robot interaction (HRI), IEEE, pp 143–151. https://doi.org/10.1109/HRI.2019.8673299

Yasumatsu Y, Sono T, Hasegawa K, Imai M (2017) I can help you: Altruistic behaviors from children towards a robot at a kindergarten. In: Proceedings of the companion of the 2017 ACM/IEEE international conference on human-robot interaction, pp 331–332. https://doi.org/10.1145/3029798.3038305

De Kleijn R, van Es L, Kachergis G, Hommel B (2019) Anthropomorphization of artificial agents leads to fair and strategic, but not altruistic behavior. Int J Hum Comput Stud 122:168–173. https://doi.org/10.1016/j.ijhcs.2018.09.008

Nishio S, Ogawa K, Kanakogi Y, Itakura S, Ishiguro H (2018) Do robot appearance and speech affect people’s attitude? Evaluation through the ultimatum game. In: Geminoid Studies, Springer, Singapore, pp 263–277. https://doi.org/10.1007/978-981-10-8702-8_16

Duffy BR (2003) Anthropomorphism and the social robot. Robot Auton Syst 42(3–4):177–190. https://doi.org/10.1016/S0921-8890(02)00374-3

Fehr E, Gächter S (1998) Reciprocity and economics: the economic implications of homo reciprocans. Eur Econ Rev 42(3–5):845–859. https://doi.org/10.1016/S0014-2921(97)00131-1

Hsieh TY, Chaudhury B, Cross ES (2020) Human-Robot Cooperation in prisoner dilemma games: people behave more reciprocally than prosocially toward robots. In: companion of the 2020 ACM/IEEE international conference on human-robot interaction, pp 257–259. https://doi.org/10.1145/3371382.3378309

Luria M (2018) Designing robot personality based on fictional sidekick characters. In: companion of the 2018 ACM/IEEE international conference on human-robot interaction, pp 307–308. https://doi.org/10.1145/3173386.3176912

Fraune MR, Oisted BC, Sembrowski CE, Gates KA, Krupp MM, Šabanović S (2020) Effects of robot-human versus robot-robot behavior and entitativity on anthropomorphism and willingness to interact. Comput Hum Behav 105:106220. https://doi.org/10.1016/j.chb.2019.106220

Muscolo GG, Recchiuto CT, Campatelli G, Molfino R (2013) A robotic social reciprocity in children with autism spectrum disorder. In: 5th international conference on social robotics, ICSR, pp 574–575.

Sandoval EB, Brandstetter J, Obaid M, Bartneck C (2016) Reciprocity in human-robot interaction: a quantitative approach through the prisoner's dilemma and the ultimatum game. Int J Soc Robotics 8(2):303–317. https://doi.org/10.1007/s12369-015-0323-x

Broadbent E, Peri K, Kerse N, Jayawardena C, Kuo I, Datta C, MacDonald B (2014) Robots in older people’s homes to improve medication adherence and quality of life: a randomised cross-over trial. In: International conference on social robotics, Springer, Cham, pp 64–73. https://doi.org/10.1007/978-3-319-11973-1_7

Kahn PH, Friedman B, Perez-Granados DR, Freier NG (2006) Robotic pets in the lives of preschool children. Interact Stud 7(3):405–436. https://doi.org/10.1145/985921.986087

Kiesler S, Sproull L, Waters K (1996) A prisoner’s dilemma experiment on cooperation with people and human-like computers. J Pers Soc Psychol 70(1):47. https://doi.org/10.1037//0022-3514.70.1.47

Sandoval EB, Brandstetter J, Yalcin U, Bartneck C (2020) Robot likeability and reciprocity in human robot interaction: using ultimatum game to determinate reciprocal likeable robot strategies. Int J Soc Robot. https://doi.org/10.1007/s12369-020-00658-5