Abstract

In recent years there has been an increasing interest in deploying robotic systems in public environments that are able to interact effectively with people. To work properly in the wild, such systems should be robust and able to deal with complex and unpredictable events that seldom happen in controlled laboratory conditions. Moreover, having to deal with untrained users adds further complexity to the problem and makes the task of defining effective interactions especially difficult. In this work, a Cognitive System that relies on planning is extended with adaptive capabilities and embedded in a Tiago robot. The result is a system able to help a person complete a predefined game by offering various degrees of assistance. The robot may decide to change the level of assistance depending on factors such as the state of the game or the user’s performance at a given time. We conducted two days of experiments during a public fair. Users were selected at random and each interacted with the robot only once. We show that, despite the short-term nature of the human–robot interactions, the robot can effectively adapt its way of providing help, leading to better user performance than with a robot that does not provide this degree of flexibility.

1 Introduction

Nowadays, Human–Robot Interaction (HRI) can be considered one of the major open research areas in the Robotics field. HRI is essential for the upcoming generation of robots that will have to directly interact with people, assistive and social robots [6] among them. Robots will be programmed to assist the elderly or people with disabilities [21], support military forces in search-and-rescue missions [17] or perform services in social environments [1].

The latest advances concerning robots in real-world environments have brought a series of challenges. In fact, taking robots out of the laboratory ecosystem is a complex task, as it introduces new problems related to unpredictable scenarios [11] in which the machines must act autonomously and irrespective of changes in their surroundings [23]. The minimal requirement for a robot to be interesting and accepted as useful is that it be able to catch the users’ attention and behave in a way that can be easily interpreted. It has to be robust enough to deal with a number of unexpected events and, as an essential and non-negotiable characteristic, it has to act safely and in a reassuring manner. Alongside these, a system of this kind must aim for the highest level of flexibility, adaptability, modularity and re-programmability.

A fundamental requirement for robots that need to interact briefly with many different people is quick adaptation. Long-term HRI (LTHRI) focuses on social robots able to interact with the same person over long periods of time to effect persistent behavioural changes. In contrast, here we are concerned with short-term HRI (STHRI), which focuses on social robots that continually interact with new individuals without prior information about them. STHRI can be applied in several contexts where the robot could assume different roles such as receptionist, shopping assistant, tour guide or, as in our case, helper while playing a game.

We define the main aspects of STHRI in our context following [24]:

- the user does not have any training on how to interact with the robot and he/she is not fully aware of the robot capabilities;
- each session of interaction involves a different user (with different age, background, personality, etc);
- in each session, the robot starts learning from scratch and adapts to that user over time;
- interactions must be effective, i.e., help the user to complete the game.

In this paper, we present the results of a two-day experiment at the Barcelona Maker Faire 2018 (Footnote 1). Regarding the set-up, each participant visiting our booth was asked to play a puzzle game, consisting of composing the name of a Nobel Prize Winner with the assistance of the robot (see Fig. 1). The game was designed to be ambiguous enough to require assistance in order to be solved. For each user, we measured not only the time to complete the game but also the partial reaction time for each token to be placed. Finally, a post-questionnaire was administered to the user to evaluate his/her overall experience during the game.

Concerning STHRI, we introduced a Cognitive System embedded in a robot able to provide encouragement and several levels of assistance to help the user during the game. The Cognitive System, enhanced with an adaptive module, is able to adapt to the tracked user behaviour and, at each stage of the game, select the most suitable assistive action. In parallel, a safety checker analyzes every unsafe gesture made by the user and, when necessary, reacts to it to ensure that no physical contact with the robot occurs.

In the STHRI context, we address the following research question:

- Can a robot rapidly adapt to a specific user behaviour and have an impact on his/her overall performance?

Proposing an answer to this question could help clarify the role of assistive robots in improving users’ performance in cognitive training exercises, especially in situations in which the robot has no prior information about the user and the number of interactions is limited.

This work has been carried out in the framework of the European Project SOCRATES (Footnote 2), which focuses on Robotics in Eldercare. The project aims to address the issues related to Interaction Quality (IQ) [5] in Social Robotics. Our role in the project is to develop a Cognitive System embedded in a robot that can be employed by a caregiver to administer cognitive exercises to people affected by Mild Cognitive Impairment or Alzheimer’s Disease, adapting the kind of assistance to the individual user’s needs. For this purpose, as an interim stage before testing the robot with elderly people and patients, we decided to validate our Cognitive System in a real-world environment. At the same time, there is a need to investigate whether the robot could meet the users’ expectations. This kind of context is undoubtedly more complex and challenging than the laboratory one, and it has been chosen as a test scenario so as to expose the robot to untrained and nontechnical participants, who can offer valuable feedback and potentially highlight the weaknesses of the system.

2 Related Work

Deploying robot applications in public spaces is still a challenging task. However, there are some relevant examples in the literature of robotic platforms operating in museums, exhibition halls, shopping malls and so on.

Chen et al. [9] present a shopping mall service robot, called Kejia, which is designed for customer guidance, providing information and entertainment in a real environment. Kanda et al. [18] develop a robotic guide for a shopping mall, designed to interact with people and provide shopping information. Bennewitz et al. [4] present a robotic system, called Alpha, that makes use of visual perception, sound source localisation, and speech recognition to detect, track and interact with potential users. Tonkin et al. [27] conduct experiments to validate a robot system in a shopping centre. In their work, they compare the performance of a robot and a human in promoting food samples and analyse the effects of the type of engagement used to achieve this goal.

With the aforementioned works, we share the idea of validating the robot in real-world environments, where it is more exposed to unexpected events and to a wide variety of users. In contrast to those works, we consider the robot as a social companion that provides assistance for completing a cognitive task rather than as a tool providing a service.

The majority of works on social assistive robots (SAR) employed for task learning and training use techniques that require the acquisition of a fairly large amount of data before they start providing the desired behaviour. Tsiakas et al. [28] propose a framework based on Interactive Reinforcement Learning (IRL) that combines task performance and engagement to achieve personalization in the context of cognitive training. The robot is able to select the type of feedback most tailored to the user to assist him/her in completing the game. Gao et al. [13] present a robot tutor to assist users in completing a grid-based logic puzzle. The Reinforcement Learning (RL) framework presented allows the robot to select verbal supportive behaviours to maximise the user’s task performance and positive attitude during the game. The appropriate robot behaviour for the user is selected using a Multi-Armed Bandit (MAB) approach. Leite et al. [20] present an empathic social robot that aims to interact with children in the context of a chess game scenario. Although they focus on long-term interaction, while we are interested in the short-term one, their system is able to provide different levels of assistance based on the positive or negative valence of the user’s feelings. In their work they use a MAB algorithm to select the strategy that maximises the reward. Hemminghaus and Kopp [15] explore how a social robot can learn and adapt from a task-oriented interaction with a user, through different social behaviours. The RL-based approach is implemented in a memory game scenario, in which the Furhat robot assists the user by guiding his attention. Gordon et al. [14] present a Tega robot able to provide personalised tutoring to children learning a second language through gaming on a tablet. Children’s valence and engagement are combined into a reward signal used by an RL algorithm that selects personalised motivation strategies for a given user. Chan and Nejat [8] developed a robot called Brian 2.0, which is able to act as a social motivator providing assistance, encouragement and celebration in a memory game scenario. The adaptive behaviour of Brian is based on a Hierarchical Reinforcement Learning (HRL) technique that provides the robot with the ability to adapt to new people and learn assistive behaviours.

In line with the aforementioned works, we believe in the potential employment of a robotic system for cognitive training, stimulation and learning. However, the presented approaches based on RL algorithms don’t fit our requirements. RL usually requires a considerable amount of data before converging to a reasonable solution. In our context, the number of possible interactions is not enough to be used in an RL framework, so a different approach needs to be evaluated. To deal with that, we propose to use planning to model HRI. Using planning, we are able to embed our own adaptive function directly in the planner cost function and provide effective adaptability already from the first interactions.

An interesting approach that doesn’t require a large amount of data is introduced by Tapus et al. [25]. In their work, they present an adaptive robotic system that provides personalised assistance through encouragements and companionship to individuals suffering from Mild Dementia (MD) and Alzheimer’s Disease (AD) while they are playing a song-discovery game.

Although in the current paper our Cognitive System interacts with the general public, our final goal is to have an experienced and robust system able to provide cognitive training to patients suffering from MD and AD. Inspired by the system presented in [25], we take into account in our adaptive algorithm not only the reaction time and the number of mistakes but also the levels of assistance provided and the game complexity. Unlike their system, we don’t run a supervised learning process to calibrate the levels of assistance for a given user; we learn this information directly during the interactions with the user. Moreover, the levels of assistance we developed are more complex (we combine speech with more complex robot motions), and the game we propose is different in that it is a board game.

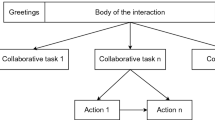

Differing from the related work presented so far, here we are interested in STHRI. One approach to deal with STHRI is to use automated planning to manage the interactions between the robot and the user. Using planning has several advantages in terms of the compactness of the representation and the correctness of the solution provided in a given state. There are few works that use planning for modelling HRI. For example, a Human-Aware Planning system is presented by Tomic et al. [26]. The system permits modelling and planning with social constraints. In the same direction is the work of Nardi and Iocchi [22], where the returned plan is modified such that social norms expressed as rules can be satisfied. A relevant paper on planning is presented by Alami et al. [2]. They develop a robotic framework that is able to manage the interaction with a human, which means that it is able not only to accomplish its task but also to produce behaviour that supports engagement with the user. To do so, they integrate the management of human interaction as part of the robot architecture. Lallement et al. [19] present a Human Aware Task Planner (HATP), which is based on hierarchical task planning. According to [19], HATP offers a user-friendly domain representation language inspired by popular programming languages, so that it can be used even by people with no specific skills in automated planning. An interesting work on the usage of planning for STHRI is the one presented by Sanelli et al. [24]. In their paper, they describe a method and an implementation of a robotic system using conditional planning for generating short-term interactions by a robot deployed in a public space.

Although we decided to use classical planning without directly including the user’s actions in the domain, we share with [24] the idea of using planning for modelling STHRI, since we consider it an effective approach to deal with non-expert, untrained users.

3 System Platform Design

In this section, we introduce the Cognitive System, a framework embodied in a robot that is able to perceive, adapt and react to user behaviour. The architecture is presented in Fig. 2. As shown, there are three main layers.

- Perception layer: it is responsible for providing the Cognitive System with information on the game state, by detecting and recognizing the tokens on the board, and on the user actions, by tracking his/her hands during the game.
- Behaviour layer: it is the core layer that manages the information coming from the perception layer. The perceived environment is translated into a symbolic representation that is used to model HRI through high-level planning. This layer contains the Adaptive Module that selects the most suited action of assistance for a given user based on their performance and the game state. Moreover, a Safety Checker is implemented to react to unexpected unsafe user actions.
- Robotic layer: it is the lowest level, in charge of translating the actions dispatched by the planner into low-level motions of the robot, possibly combined with speech.

3.1 Perception Layer

The perception system is based on an RGB-D camera together with algorithms that monitor in real time the state of the board and the user’s hand movements.

The board state is defined as a set \(\left\{ L_{1}, L_{2},..., L_{20} \right\} \) where each location \(L_i\) might contain a token k. Each token k is labelled with a given letter (where k \(\in \) {“A”, “B”, “C”, “D”, “E”, “G”, “I”, “O”, “R”, “U”}).

The token shape is detected using Circle Hough Transform (CHT) [29]. The main idea behind this technique is to find circles in imperfect image input based on a “voting” in the Hough parameter space and then select the local maxima. Once a token is detected, we use an Adaptive Template-Matching algorithm to recognize the different letters. This technique provides an acceptable trade-off between speed and accuracy and it is robust to changes in orientation, which is the most frequent issue that can occur in our scenario. The developed algorithm doesn’t tackle the problem that could arise if tokens appeared at different scales, since the distance between the camera and the board is fixed.
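The voting idea behind the Circle Hough Transform can be illustrated with a minimal sketch. It is not the detector used in the system: it assumes a single, known token radius and a list of edge points that has already been extracted, and the function name and the `n_angles` parameter are hypothetical.

```python
import math
from collections import Counter


def hough_circle_centre(edge_points, radius, n_angles=72):
    """Vote for circle centres of a known radius; return (centre, votes).

    edge_points: iterable of (x, y) edge coordinates.
    radius and n_angles are illustrative parameters, not values from the text.
    """
    votes = Counter()
    for x, y in edge_points:
        # An edge point lies at distance `radius` from the centre of its
        # circle, so it votes for every candidate centre around itself.
        for k in range(n_angles):
            theta = 2.0 * math.pi * k / n_angles
            a = round(x - radius * math.cos(theta))
            b = round(y - radius * math.sin(theta))
            votes[(a, b)] += 1
    if not votes:
        return None
    # The accumulator cell with the most votes is taken as the centre.
    # A full detector would keep every local maximum above a threshold.
    return votes.most_common(1)[0]
```

Votes from points on the same circle pile up in the accumulator cell at the true centre, while votes from noise or partial edges spread over many cells, which is why the method tolerates imperfect input.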

The user’s hand movement is detected using the depth sensor of the RGB-D camera. To simplify perception, the camera is mounted above the board looking downwards. Thus, with a simple threshold on the depth we can remove the background (board, tokens, and everything else below them). In this way, we can define a shape detector with a few geometrical features in order to detect an arm and a hand. This set-up greatly reduces the possibility of false positives (see Fig. 1), first because of the way the camera is oriented and second because, naturally, only hands or arms are going to enter the workspace. Nonetheless, false positives due to other detected shapes, noise caused by the inaccuracy of the camera, and spots are detected and removed with a simple filter based on size and shape.
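A minimal sketch of the depth-threshold step followed by a size filter is given below. It is an illustration under stated assumptions rather than the implemented detector: the depth image is a plain list of rows, a reading of 0 is assumed to mean an invalid measurement, only the size part of the filter is shown, and all names and thresholds are hypothetical.

```python
from collections import deque


def foreground_mask(depth, max_depth):
    """True where a pixel is closer to the overhead camera than max_depth.

    A reading of 0 is treated as invalid (an assumption about the sensor).
    """
    return [[0 < d < max_depth for d in row] for row in depth]


def connected_blobs(mask):
    """Group foreground pixels into 4-connected blobs of (row, col) pairs."""
    if not mask:
        return []
    rows, cols = len(mask), len(mask[0])
    seen = [[False] * cols for _ in range(rows)]
    blobs = []
    for r in range(rows):
        for c in range(cols):
            if not mask[r][c] or seen[r][c]:
                continue
            seen[r][c] = True
            queue, blob = deque([(r, c)]), []
            while queue:  # breadth-first flood fill from the seed pixel
                y, x = queue.popleft()
                blob.append((y, x))
                for ny, nx in ((y - 1, x), (y + 1, x), (y, x - 1), (y, x + 1)):
                    if (0 <= ny < rows and 0 <= nx < cols
                            and mask[ny][nx] and not seen[ny][nx]):
                        seen[ny][nx] = True
                        queue.append((ny, nx))
            blobs.append(blob)
    return blobs


def hand_candidates(depth, max_depth, min_area):
    """Keep only blobs large enough to be an arm or a hand (size filter only)."""
    mask = foreground_mask(depth, max_depth)
    return [b for b in connected_blobs(mask) if len(b) >= min_area]
```

With the camera looking straight down, everything at board depth or below falls outside the mask, so isolated noise pixels and small spots are the main remaining false positives, and an area threshold removes them.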

3.2 Behavioural Layer

The behavioural layer is the core of our framework. In our previous work [3], we already demonstrated the possibility of modelling an HRI problem using the Planning Domain Definition Language (PDDL 2.1 [12]). In that work, FF-Metric [16] was used as the planner and evaluated for managing the interactions between the robot and the user. Here we use the same logic formalism (PDDL) to define the entire game domain. PDDL can be considered a standard language for encoding classical planning tasks. To automate the planning process we used ROSPlan [7]. This framework supports PDDL activity planning, consisting of a domain defining the actions used for planning, and a problem file that contains a description of the initial state and goal(s) of the plan. ROSPlan supports different planning solvers; here we use the temporal planner POPF [10]. The Adaptive Module is integrated into ROSPlan and provides reasoning capabilities to the entire framework. Given the current representation of the state (user action history and status of the game), the planner is able to select the most suitable action of assistance. Since the problem of finding the most adequate action is modelled as an optimisation problem, at each step t of the game the POPF planner looks for the path with the minimum cost.

The cost \(A'(s)\) of performing the action of assistance s is defined as:

where A(s) is the cost at the previous iteration, C(s) is the total time cost, including the time for the robot to perform an action of assistance, the reaction time of the user and, where applicable, the time for the robot to move a token back to its initial location, and the \(\gamma \) and \(\alpha \) parameters are used to tune the algorithm’s action selection. The \(\gamma \) value defines how much significance is assigned to the outcome of the action of assistance s at a given step. If this parameter is close to 0 then the system will consider mainly the global cost C(s), while if it is close to 1 then the system will give more importance to the outcomes of the previous actions in the estimation of the next state. The \(\alpha \) value defines to what extent the newly acquired information will replace the previous one. If this parameter is close to 0 then the system will make very little use of the information about the previous actions s, while if \(\alpha \) is close to 1, the system will mostly consider the most recent actions.

A key role is played by the reward function R(s), which defines the amount of reward or penalty given to an action of assistance s after a user performs a move. R(s) can be seen as a reward in the case the user performs the correct move or, on the contrary, as a penalty in the case the user makes the wrong move. This function balances the reward/penalty taking into account the current game difficulty and the level of assistance provided. To gain a better intuition of R(s) we can consider two different scenarios.

In the first scenario, the user is at the very early stages of the game. Suppose the robot provides the user with encouragement (Level 1, Table 1), and then he moves the correct token. In this case, his reward will be considerably high, given the complexity of the game (maximum at the very beginning) and the lowest level of assistance provided. On the contrary, if the user makes a mistake he will not be penalized much, since in the very early stages a mistake is acceptable.

In the second scenario, the user is at the very final stages of the game. Suppose the robot provides the user with a suggestion (Level 2, Table 1), and then he moves the correct token. In this case, his reward will be quite small, given the complexity of the game (easy at the very end) and the level of assistance provided. On the contrary, if the user moves the wrong token, the penalization will be quite high, since now the game is easier and the assistance provided is enough for the user to succeed.

It is important to highlight that the Adaptive Module does not have any prior knowledge of the user and it can start from the level of assistance defined by the experimenter. In this specific context, we set it to the intermediate level (Level 2, Table 1). During the interactions with the user, the robot learns from his behaviour which actions are really effective for his performance and uses them to assist him.

In addition to the Adaptive Module, a Safety Checker has been included in the behavioural layer to guarantee that all the actions executed by the robot will be safe for the user. Using the information from the perception layer, hands are detected and tracked when the robot is moving. As soon as hands are detected in the workspace of the robot, it asks the user to remove them so that its actions can be executed without the risk of hitting him. The robot will first attempt to persuade the user to behave more safely, but if he persists in his unsafe behaviour during the interactions, it can decide to stop the game and ask the experimenter to intervene. For the sake of completeness, we included this module since we are working on a more general Cognitive System that will interact with older adults with mental impairments. In this specific context, the Safety Checker never stopped the robot actions during the experiments, since the participants understood the timing of the robot actions and the risks that can arise from contact with the robot.
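The escalation logic described for the Safety Checker (warn first, stop if the behaviour persists) can be sketched as a small state holder. This is only an illustration: the class, its method and the `max_warnings` threshold are hypothetical, since the text does not say how many warnings precede a stop.

```python
class SafetyChecker:
    """Warn while hands are in the workspace; stop after too many warnings."""

    def __init__(self, max_warnings=3):
        # max_warnings is a hypothetical threshold; the text gives no number.
        self.max_warnings = max_warnings
        self.warnings = 0

    def update(self, robot_moving, hands_in_workspace):
        """Return 'proceed', 'warn' (ask the user to remove hands) or 'stop'."""
        # Hands only matter while the robot is moving.
        if not (robot_moving and hands_in_workspace):
            return "proceed"
        self.warnings += 1
        if self.warnings > self.max_warnings:
            return "stop"  # end the game and ask the experimenter to intervene
        return "warn"
```

In such a sketch `update` would be called once per perception frame while an arm motion is pending, and the motion would be dispatched only when it returns `'proceed'`.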

Fig. 3 Example of the robot’s assistive actions. In (a) the robot suggests a subset of solutions, moving its arm horizontally and pointing over the tokens. In (b) the robot suggests where the correct token is, pointing its arm in the direction of the token. In (c) the robot picks the correct token and offers it to the user

3.3 Robotics Layer

PDDL actions are mapped directly to ROSPlan action components that refine the actions into low-level commands. This layer is responsible for the low-level actions of the robot. It implements the speech and the gestures related to the assistive actions. As reported in Table 1, the actions of assistance that the robot can provide to the user during the interactions are:

- Level 1 (only speech):
  - Neutral: the robot tells the user that it is his turn.
  - Encouragement: the robot tries to provide hints in order for the user to perform the correct move.
- Level 2 (speech with gestures):
  - Suggest subset: combining voice and gestures, the robot points to an area of the board where the correct token is. By default, the number of tokens covered by this action is three. In Fig. 3a, the robot suggests a subset of solutions. Here the correct token is “U” and the robot moves its arm horizontally from left to right over letters “G”, “U”, and “B”.
- Level 3 (speech with gestures):
  - Suggest solution: combining voice and gestures, the robot points to the exact location where the correct token is. In Fig. 3b, the robot points with its arm in the direction of the correct token “I”.
  - Fully assistive: the robot picks the correct token and offers it to the user. In Fig. 3c, the robot tells the user that it will pick the correct token (“A” in this case) and will offer it to him.

As can be seen in Table 1, the robot has different ways of applying the same level of assistance. Once a level has been selected, the robot randomly selects one of the alternatives. For long-term experiments, we envisage gathering user preferences and using them for the selection.
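The two-step choice (a level first, then a random alternative within it) can be sketched as follows. The action identifiers are hypothetical labels for the assistive actions listed above, and the level itself is assumed to have been chosen elsewhere by the Adaptive Module.

```python
import random

# Alternatives per level, following the list of assistive actions above.
# The string identifiers are illustrative names, not taken from the system.
ASSISTANCE = {
    1: ["neutral", "encouragement"],
    2: ["suggest_subset"],
    3: ["suggest_solution", "fully_assistive"],
}


def pick_action(level, rng=random):
    """Pick uniformly at random among the alternatives of the given level."""
    return rng.choice(ASSISTANCE[level])
```

Passing a seeded `random.Random` instance as `rng` makes a session reproducible, and replacing the uniform choice with a preference-weighted one would be one way to use the user preferences envisaged for long-term experiments.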

Besides the assistive actions, the robot can greet the user if a correct move has been performed, or move the token back to its original location if the performed move is incorrect, as reported in the last two rows of Table 1.

4 Research Questions and Hypotheses

In this study, we are interested in evaluating whether and to what extent an adaptive robot would affect the participants’ performance and their overall experience while they are playing a puzzle board game. We explore how the participants perceive the robot and how well the selected robot’s actions of assistance are suited to a user, given a state of the game. Moreover, we aim to validate how the robot can adapt not only to different users but also to the game complexity. As secondary research questions, we are interested in: (i) evaluating the impact of interaction modalities on user performance, and (ii) comparing the performance of people of different ages and backgrounds. In order to evaluate our research questions, we test the following hypotheses in an experimental setting created at an international fair, in an uncontrolled environment with untrained people:

- Hypothesis 1: Participants who receive assistance from an adaptive robot will perform better than participants who do not.
- Hypothesis 2: In a very noisy environment, the multimodality of the robot’s interaction has an impact. Participants not equipped with an external headset will perform worse than those who receive speech assistance via a headset device.
- Hypothesis 3: Participants with engineering and HRI background can perform better than participants with a different background.
- Hypothesis 4: Younger participants can perform faster than older ones.

5 Experimental Design

5.1 Game Design: Puzzle Game

The Nobel Prize Winner puzzle game consists of a board of 20 cells and 10 tokens, each with a different letter as shown in Fig. 1. The tokens are randomly located in the last two rows of the board. The objective of the game is to compose a 5-letter Nobel Prize Winner name using the tokens available on the board while making as few mistakes as possible and minimizing the robot intervention. In each turn, the user chooses one of the letters and places it on one of the squares available in the first row, where the name has to be composed. The name needs to be formed sequentially, starting from the first letter. If the token is placed correctly, the robot greets the user and lets her/him continue. Otherwise, it picks the wrongly placed token and moves it back to its initial location while giving the user additional assistance. The participant then has another chance to complete the task. For each user’s attempt, only one hint at a time is provided by the robot. In our scenario, there are only two possible solutions: Curie and Dirac. The maximum number of attempts available for each token is 4. If the participant fails for the fourth time, the robot then moves the correct token on her/his behalf. To speed up interactions and shorten the game duration, the robot will provide further assistance if the user performs no action within 15 s.
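The attempt rule can be made concrete with a short sketch. It models only the four-attempt limit per token and who ends up placing each letter; the 15 s inactivity timeout and the hints themselves are not modelled, and the function names are hypothetical.

```python
MAX_ATTEMPTS = 4  # attempts allowed per token, as stated in the rules above


def resolve_token(correct, guesses):
    """Return (attempts_used, placed_by) for one letter of the name.

    guesses: the letters the user tries, in order.
    placed_by is 'user' or 'robot'.
    """
    for attempt, guess in enumerate(guesses, start=1):
        if guess == correct:
            return attempt, "user"
        # Wrong move: the robot returns the token and gives one more hint.
        if attempt == MAX_ATTEMPTS:
            return attempt, "robot"  # the robot places the correct token
    raise ValueError("turn not finished: too few guesses supplied")


def play(solution, guesses_per_letter):
    """Summarise a session: total mistakes and who placed each letter."""
    mistakes, placed_by = 0, []
    for correct, guesses in zip(solution, guesses_per_letter):
        attempts, who = resolve_token(correct, guesses)
        # Every attempt before a correct one is a mistake; when the robot
        # takes over, all four attempts were mistakes.
        mistakes += attempts - 1 if who == "user" else attempts
        placed_by.append(who)
    return mistakes, placed_by
```

For instance, a user who places “C” at the second try uses two attempts and makes one mistake, while four wrong tries on a letter hand that letter over to the robot.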

For each game session, we store in a database the total number of mistakes and the elapsed time. On top of that, for each token we record the number of attempts and the user’s reaction time, that is, the time needed for the user to pick a token and place it on a different square. The game has been designed with enough complexity to require assistance for its resolution. The degree of complexity comes mostly from the 10 letters available, which generate ambiguity about the word to compose. Other factors that make the game more challenging are the names to guess (CURIE or DIRAC), which are not so well known to the general public, and the fact that there was only one possible solution for the task (one of the two Nobel Prize names was set by the experimenter as the goal for each particular session). In other words, the chance for the user to select the correct token without any help is very low, especially at the very early stages.
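A rough sense of how low this chance is can be obtained from a simplified model. The sketch below assumes that an unassisted user picks uniformly at random among the tokens still on the board and that correctly placed tokens leave the pool. Real users are not uniform guessers, so the figures are only indicative, and the function name is hypothetical.

```python
from fractions import Fraction


def p_flawless(n_tokens=10, word_length=5):
    """Probability of placing every letter correctly at the first try,
    under uniform random guessing among the remaining tokens."""
    p = Fraction(1)
    for placed in range(word_length):
        # One token fewer is left after each correct placement.
        p *= Fraction(1, n_tokens - placed)
    return p
```

Under these assumptions the first letter is guessed with probability 1/10, and a mistake-free game has probability 1/(10·9·8·7·6) = 1/30240.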

5.2 Participants Analysis

The study was conducted during the MakerFaire 2018 in Barcelona, an event for makers, scientific and technological research groups and companies that promotes interaction among people from different areas and the integration of Science and Technology. We were assigned a booth where we set up our scenario as shown in Fig. 4. The attendees varied greatly in age (many middle-aged people and a considerable number of young people were present, as reported in Table 2). Most people spoke Spanish, with a small representation from other European countries. A total of 40 participants played the Puzzle game with the robot. Of these experiments, only 29 are reported in this study. Among the 11 that were excluded, in 4 cases the participants decided to quit before the end of the game and in the other 7 cases there were problems related to the robotic platform. Pre-questionnaires were distributed among all participants in order to understand whether or not they had any prior experience with this kind of experiment, specifically with robotic systems. The report is shown in Table 3. Participants were recruited in accordance with the goal of our study (see Sect. 4), keeping numbers balanced based on age and background.

As we expected, the variance between the participants is quite high and we can consider the population participating in the experiment a representative sample to validate our system.

5.3 Procedure

The experiments were conducted in a booth with uncontrolled light conditions and in a noisy environment where hundreds of visitors were wandering around. Upon arrival, each participant was welcomed by the experimenter, who provided her/him with the basic guidelines. Each participant was requested to fill out an informed consent form. Next, the experimenter explained the objective of the game and the kind of movements the robot was able to perform, without going into details. Participants were told to respect any delays of the robot, such as the time waiting for each move, feedback and, where appropriate, assistance. Furthermore, the participants were requested to complete the game with the lowest number of mistakes possible while minimizing their reaction time for each move.

Next, participants were asked to sit at a table with a board on top already set up to start the game (see Fig. 1). A Tiago robot was placed opposite them and they were told it would take care of providing information about the game. The entire game session was filmed, as the participants had already given permission for their session to be video recorded.

5.4 Post-Experiment Questionnaire Analysis

After each session, we asked the participant to fill in a questionnaire about their perceived interaction with the robot. The questionnaire contains the following questions:

- Interacting with the robot in the game was likeable
- Interacting with the robot in the game was distractful
- Interacting with the robot in the game was comfortable
- Interacting with the robot in the game was useful
- Which modality have you preferred the most?

The objective of those questions was to evaluate the overall experience of the user during the game. All questionnaire measures are on a 6-point scale {Strongly Disagree (1), Disagree (2), Somewhat Disagree (3), Somewhat Agree (4), Agree (5), Strongly Agree (6)}.

6 Experimental Conditions

In this section, we define under which conditions the experiments have been conducted.

In order to control for the possible impact of the tokens’ initial locations on the user’s performance, we decided to always use the same initial token distribution. As can be observed in Fig. 1, the sequence of the tokens is as follows: “G”, “A”, “U”, “B”, “E” (third row), “C”, “D”, “I”, “O”, “R” (fourth row). In this way, the initial conditions are identical for all participants.

Between subjects, we changed three variables: (i) robot adaptability, (ii) robot speech setting, and (iii) game solution.

In the NO ADAPTABILITY condition, a group of participants plays the game with a robot that always provides a constant level of assistance (Level 2 in Table 1). In contrast, in the ADAPTABILITY condition, a group of participants plays with an adaptive robot that selects assistance using Eq. 1.

In NO HEADSET condition, a group of participants plays the game with no external support for listening to the robot voice. In contrast, in WITH HEADSET condition, a group of participants plays the game wearing a headset to better understand the robot instructions.

Finally, in the GAME SOLUTION condition, the participants can play the game with two possible outcomes. In the first case, the solution is “CURIE” while in the other case the solution is “DIRAC”. More details on how these conditions are evaluated will be provided in Sect. 7.

7 Experimental Results

In this section, we summarize and analyze the results of the experiments so as to address the research questions presented in Sect. 4.

We performed two experiments. In the first one (Sect. 7.1), the goal was to address our main research question, that is, whether and to what extent an adaptive robot could affect the users’ performance. In the second one, we tackled hypothesis 2 (Sect. 7.2), that is, we evaluated how interaction modalities could affect the users’ performance in an extremely noisy environment. Using the data collected in the first experiment, we also attempted to address the remaining hypotheses: (i) establishing whether having a background in Engineering/HRI resulted in better performance (Sect. 7.3) and (ii) evaluating if and how younger subjects could complete the game faster than older subjects (Sect. 7.4).

7.1 Hypothesis 1:

Participants that receive assistance from an adaptive robot will perform better than participants that receive assistance from a non-adaptive robot.

The first experiment was designed to evaluate the robot’s adaptability (ADAPTABILITY/NO ADAPTABILITY condition).

We recruited 24 participants and split them into two groups. In the first group, namely A, 12 participants played the game in which the solution was CURIE (GAME SOLUTION condition). Similarly, in the second group, namely B, 12 participants played the game in which the solution was DIRAC (GAME SOLUTION condition). All participants were wearing a headset to better listen to the robot instructions (WITH HEADSET condition). The groups’ setting is reported in Table 4.

In order to have a baseline for comparing results, we additionally split groups A and B into two sub-groups each (see Table 4). Groups A1 and B1 interacted with a robot which always provided the same level of assistance (Level 2 of Table 1), regardless of the user performance (NO ADAPTABILITY condition). Instead, groups A2 and B2 interacted with a robot which was enhanced with adaptive capabilities (ADAPTABILITY condition), as presented in Sect. 3.2. In this case, the robot is able to shape its behaviour around the user’s responses by dynamically altering the level of assistance. The objective was to compare the results in terms of human performance between groups A1/B1 and A2/B2, specifically, reaction time and percentage of mistakes for each move.

Figure 5a shows the average reaction time for each correct move of group A1 (mean reaction time 10.88 s) and A2 (mean reaction time 6.22 s), while Fig. 5c shows the average percentage of mistakes for the same groups (A1 mean percentage of mistakes 46% and A2 mean percentage of mistakes 21.38%). Participants of group A2 performed better on each single move (average reaction time and average percentage of mistakes) and completed the game in less time compared to group A1. For our initial hypothesis to be valid, we need to demonstrate that the variation in reaction time for each move between the two groups is significant and that it is due to the different levels of assistance provided by the robot rather than to randomness. To do so, we perform an analysis of variance (ANOVA), since the data are normally distributed (Shapiro-Wilk test \(p=0.954\)). The result obtained in this case for a significance level of \(\alpha = 0.05\) is \(F_{0.05} [1,10] = 4.96\) and \(p=0.025\). Thus, our hypothesis is validated by the results.
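The test described above can be illustrated with a minimal sketch of a one-way ANOVA on two groups. The reaction times below are hypothetical values invented for illustration (they are not the study data), and the function name `one_way_anova_f` is an assumption; only the critical value 4.96 for \(F_{0.05}[1,10]\) is taken from the text.

```python
from statistics import mean


def one_way_anova_f(*groups):
    """Return (F, df_between, df_within) for a one-way ANOVA."""
    k = len(groups)
    n_total = sum(len(g) for g in groups)
    grand_mean = sum(sum(g) for g in groups) / n_total
    # Variation explained by group membership.
    ss_between = sum(len(g) * (mean(g) - grand_mean) ** 2 for g in groups)
    # Residual variation inside each group.
    ss_within = sum((x - mean(g)) ** 2 for g in groups for x in g)
    df_between = k - 1
    df_within = n_total - k
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# Hypothetical mean reaction times in seconds, one value per participant.
non_adaptive = [11.0, 10.0, 12.0, 9.0, 13.0, 11.0]
adaptive = [6.0, 7.0, 5.0, 8.0, 6.0, 7.0]

F_CRITICAL = 4.96  # F_{0.05}[1,10], as quoted in the text

f_stat, df_b, df_w = one_way_anova_f(non_adaptive, adaptive)
significant = f_stat > F_CRITICAL
print(f"F({df_b},{df_w}) = {f_stat:.2f}, exceeds critical value: {significant}")
```

With two groups of six participants the degrees of freedom come out as (1, 10), which matches the critical value quoted above; the null hypothesis is rejected when the computed F exceeds it.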

Figure 5b, d show the results for group B1 (mean reaction time 10.62 s and mean percentage of mistakes 46%) and B2 (mean reaction time 8.74 s and mean percentage of mistakes 33.25%). The same considerations are valid here. The results collected during the interaction with the robot, in terms of reaction time, again validate our hypothesis: for \(\alpha = 0.05\), \(F_{0.05}[1,10] = 4.96\), \(p=0.05\) (Shapiro-Wilk test \(p=0.8851\)). We can conclude that an adaptive robot can be effective in game scenarios where complexity forces users to require assistance to complete the game successfully.

In Fig. 6 we report the average percentage of times each level of assistance has been provided to the user in order to move the correct token. It is worth noting that if a move does not appear on one of the levels, the robot has not provided that level of assistance for that token. An interesting trend observable in the plots in Fig. 5a, b is that the average user reaction time for the third token (group A2) and the fourth token (group B2), respectively, is higher than we expected.

For the participants in group A2, this behaviour is visible with letter “R”. We believe that this can be due to the decreasing level of assistance provided by the robot. As can be observed in Fig. 6a for the second (orange bar) and third move (yellow bar), the percentage of times Level 3 is provided starts decreasing in favour of Level 2, which, on the contrary, is increasing. This is mainly because the game is becoming easier and the user performed well during the previous interactions. Moreover, due to the tokens’ initial locations, it seems to be challenging for the participants to understand, when Level 2 is provided (which suggests a subset of solutions), which letter among “I”, “O” and “R” is the one to choose.

Participants in group B2 display an increase in reaction time on letter “A”. We believe the reason can be related to the tokens’ initial distribution on the board. In fact, when the third token has to be moved (letter “R”) (Fig. 6b yellow bar), the subset of solutions given by Level 2 consists of “O” and “R”, since the letter “I” has already been moved (second move “I”). On the contrary, with letter “A” (Fig. 6b violet bar), if the robot decides to provide a suggestion (Level 2), it takes a bit longer for the participant to select the correct move, given that the choice now is between three tokens. Analogously to the case of group A2, it can also be due to the levels of assistance provided. In the final stages, when most of the tokens have already been moved, the robot tends to provide a lower degree of assistance. In other words, during this stage, the majority of support comes from Levels 1 and 2 and only partially from Level 3, as can be observed in Fig. 6b.

As a last remark, it is worth noting that in Fig. 5c and 5d, the average percentage of mistakes is very similar for the last token (“E” for CURIE and “C” for DIRAC), independently of the robot adaptive logic. This is an expected scenario: when the game becomes easier, no matter which assistance the robot provides, the probability of guessing the correct move is high. On top of that, the robot might be on the same level of assistance (Level 2) during this time.

Although most people enjoyed playing with the robot, there is a considerable difference between groups A1/B1 and groups A2/B2 as regards the overall experience during the game.

The post-questionnaires handed to the participants after the game confirm our initial hypothesis. As reported in Tables 5 and 6, participants playing with the adaptive robot had a better experience overall. In particular, groups A2 and B2 appreciated interacting with the robot more, as they thought the robot assistance was useful for completing the game.

To evaluate the system more precisely, we compare the results of groups A2 and B2 to confirm that the effectiveness of the robot adaptability is evident not only when comparing it with their baselines (groups A1 and B1) but also when there is a difference in the difficulty of the game. As can be seen in Fig. 7a, b, there is a difference, in terms of average reaction time and percentage of mistakes on each move, between the two groups that play the game supported by adaptive assistance. The participants from group A2 perform faster and with fewer mistakes.

We believe this may be due to the word to guess. The Nobel prize winner Marie Skłodowska Curie seems to be better known than Paul Adrien Maurice Dirac. When the word to guess is Curie, some participants are able to guess the name before moving the last token.

In Fig. 6 we report the average percentage of times a level of assistance has been provided during a game session for groups A2 and B2, respectively. As can be observed, the levels of assistance provided are quite different. The robot’s different behaviours are due not only to the evident difference in the number of mistakes (Fig. 7b), but also to the reaction time of the participants (Fig. 7a). The time a participant takes to perform a move has a considerable impact on the selection of the robot’s next helping action. For instance, suppose we have two users (Bill and Bob) who perform equally, except that Bill takes more time to move (higher reaction time) than Bob. In the case of Bill, the robot is more willing to change its levels of assistance than with Bob. Although this seems a reasonable behaviour, it sometimes does not appear to be the most effective solution. For example, if a user plays badly but quite fast, the system needs a few steps before deciding to engage with him using additional support.
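The interplay between mistakes and reaction time in the Bill and Bob example can be made concrete with a toy rule. The sketch below is purely illustrative and is not the selection rule of Eq. 1: the weights, the thresholds, the saturation at three mistakes and all names (`MoveOutcome`, `struggle_score`, `next_level`) are assumptions. Only the three assistance levels and the 15 s timeout come from the text.

```python
from dataclasses import dataclass

TIMEOUT_S = 15.0             # move timeout mentioned in the text
MIN_LEVEL, MAX_LEVEL = 1, 3  # assistance levels referred to in the text


@dataclass
class MoveOutcome:
    mistakes: int            # wrong attempts before the correct move
    reaction_time_s: float   # time taken for the correct move


def struggle_score(outcome, w_mistakes=0.5, w_time=0.5):
    """Hypothetical score in [0, 1]; higher means the user struggled more."""
    time_term = min(outcome.reaction_time_s / TIMEOUT_S, 1.0)
    mistake_term = min(outcome.mistakes / 3, 1.0)  # assumed saturation point
    return w_mistakes * mistake_term + w_time * time_term


def next_level(current, outcome, raise_above=0.6, lower_below=0.3):
    """Raise, keep or lower the assistance level (assumed thresholds)."""
    score = struggle_score(outcome)
    if score > raise_above:
        return min(current + 1, MAX_LEVEL)
    if score < lower_below:
        return max(current - 1, MIN_LEVEL)
    return current


# Same number of mistakes, different reaction times.
bill = MoveOutcome(mistakes=2, reaction_time_s=14.0)
bob = MoveOutcome(mistakes=2, reaction_time_s=4.0)

print("Bill:", next_level(2, bill))
print("Bob:", next_level(2, bob))
```

Under these assumed weights, Bill's slow move pushes the score over the raising threshold while Bob's fast move leaves the level unchanged, which mirrors the observation that a user who plays badly but quickly may need several moves before receiving additional support.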

As reported in Fig. 6, the levels of assistance received by the participants of groups A2 (see Fig. 6a) and B2 (see Fig. 6b) are quite different. As shown, for group A2 the levels of assistance are constant until the third move (see Fig. 6a yellow bar) when the robot starts decreasing the assistance applied (decreasing Level 3 bars, increasing Level 2 bars) and this trend continues during the next moves. It can be appreciated how the robot starts introducing the lowest level of assistance in response to a better and faster performance by the participants due to less complex conditions (see Fig. 6a violet and green bars).

The robot shows a different assistive behaviour for participants in group B2. Most of the participants require more assistance (Level 3) to succeed in the task, as compared to A2. Since users struggle to guess the correct token in the first two moves, the robot provides increasing assistance (see Fig. 6b blue and orange bars). Then, after the second correct move, the robot starts switching its assistance in favour of a less supportive action (Level 1 yellow bar, Fig. 6b).

This last comparison between group A2 (game with Curie) and B2 (game with Dirac) shows how a robotic system is able to adapt not only to the user’s performance but also to the game complexity.

7.2 Hypothesis 2:

In a very noisy environment, the multimodality of the robot interaction has an impact. Participants not equipped with an external headset will perform worse than the ones that receive speech assistance via a headset device.

In this second experiment, we aimed to assess to what extent the interaction modalities help the user throughout the game when the robot instructions are not clear because of poor sound conditions. We divided the participants into two groups: the first one, namely C, with 5 participants and the second one, namely D, with 6 participants (note that group D is the same as group A2 in the previous experiment), as reported in Table 7. Both groups interacted with an adaptive robot (ADAPTABILITY condition) and played a game whose solution is CURIE (GAME SOLUTION condition). Participants of group C interacted with the robot without wearing an external headset (NO HEADSET condition), while participants of group D interacted with the robot with an external headset (WITH HEADSET condition). We expected, since the environment was very noisy, that users of group C would experience difficulties in understanding the instructions and the assistance provided by the robot, and that this would have consequences on their performance.

In Fig. 8a, we compare the average reaction time of groups C and D to guess the correct letter. In the case of participants in group C (see Fig. 8a blue line, mean reaction time 8.862 s), the time they take to perform a correct move is greater than the time taken by users with a headset (mean reaction time 6.22 s). Also in this case, data are normally distributed (Shapiro-Wilk test \(p=0.9564\)), so we can apply ANOVA. The result obtained in this case for \(\alpha = 0.05\) is \(F_{0.05}[1,9] = 5.12\) and \(p=0.09\). Thus, our hypothesis is validated by the results. Along the same line, during the first move, the timeout (15 s) elapsed several times for the subjects in group C. This is caused mainly by their lack of understanding of the robot instructions. Only in the following moves, when they started getting used to the robot and its gestures, did their performance improve, eventually reaching almost the same reaction time (4th and 5th moves). In Fig. 8b, we compare the average percentage of mistakes for each move made by the two groups (mean percentage of mistakes for group C is 32.76% and for group D is 21.38%). Here it is clear that, in the first move, having the headset on or not does not affect the number of mistakes produced. However, the situation completely changes in the next moves, when participants wearing an external headset make fewer mistakes (Fig. 8b red line) than the participants of group C (Fig. 8b blue line).

Figure 9 compares the percentage of times a level of assistance has been provided for each move to group C (Fig. 9a) and group D (Fig. 9b), respectively.

The trends are quite different for the two groups. The failure rate of participants in group C is greater, as we have already seen in Fig. 8b (blue line), and they take more time to perform the correct move (Fig. 8a blue line). This results in a need for constant support with the highest level of assistance (Level 3 Fig. 9a). For the same reason, the lowest level of assistance was never initiated for group C. Group D presents a different picture. In this case, the percentage of times the highest level of assistance has been given decreases from the first letter to the fifth one. Observe that, in the fourth and the fifth moves (Fig. 9 violet and green bars), as the game becomes easier and the subject performs better, the robot applies the lowest level of assistance more frequently (Level 1 red bar).

The post-questionnaire filled in by the participants of groups C and D also confirms our initial hypothesis. In Tables 8 and 9 we report the results: the users that were able to listen properly to the robot thanks to the headsets had a better experience with the robot (see Table 9). When asked which interaction type they liked the most, the participants of group C expressed a clear preference for gesture (see Table 8). That was the expected result given the indoor noise contamination. Conversely, participants of group D did not express a clear preference (see Table 9).

7.3 Hypothesis 3:

Participants with engineering and HRI background can perform better than others with different backgrounds.

In order to verify this hypothesis, we analysed all the data collected in the first experiment and grouped the results by the participants’ background. By doing so, we aimed to evaluate whether and to what extent participants with a technical background can perform better than the others. This assumption is based on the kind of experiment we proposed. Each participant could play the game only once. This limitation makes it impossible for the user to learn about the robot behaviour. Analysing the performance of the members of the two groups can lead us to further insights, such as a deeper understanding of how the assistance provided is perceived and/or whether the system in play is logically understood by each user. We report in Table 10 the different groups split by the participants’ conditions described in Sect. 6. It is important to say first that grouping the participants only by their background might lead to wrong evaluations, since other conditions can affect the results. However, we can see that each sub-group \(E_{i}\) is balanced with the corresponding \(F_{i}\), and also that the total number of participants in group E is equal to that in group F. From the results reported in Table 10, we can already notice that, as far as average completion time and number of mistakes are concerned, there are very few differences between sub-groups \(E_{i}\) and \(F_{i}\). As the trend is clear, we decided to merge the sub-groups as reported in Table 11. We thus divided the participants into two groups: the first one, namely E, with 12 subjects with an HRI/Engineering background and the second one, namely F, with 12 subjects with other backgrounds. Note that, in this experiment, we excluded the 5 participants (group C of Sect. 7.2) that played the game under the NO HEADSET condition.

The results of this experiment are summarized in Fig. 10. As expected, the participants of group E could perform better, but mainly in the first stages of the game. However, after a few interactions, the average reaction time and the percentage of mistakes of group F (mean reaction time 8.94 s and mean percentage of mistakes 44.2%) approach those of group E (mean reaction time 8.65 s and mean percentage of mistakes 41.92%). The results are shown to be significant by an analysis of variance in terms of average reaction time for each move. The probability of the null hypothesis for \(\alpha = 0.05\), \(F_{0.05}[1,22] = 4.3\) is \(p=0.006\).

In conclusion, the two groups performed almost the same, with the most significant difference during the initial stages. We can conclude, from the collected data, that the diversity in the participants’ backgrounds does not contribute to a significant difference in the interaction with the robot, due to the natural behaviour expressed by the latter during the interactions.

7.4 Hypothesis 4:

Younger participants can perform faster than older ones.

In this section, as in Sect. 7.3, we analyse the data collected in the first experiment. The objective was to evaluate whether age has an impact on the user’s performance expressed as time and number of mistakes. In Table 12, we show each sub-group divided by the conditions under which the participants played the game. As in the previous section, since the trend across the sub-groups is clear and their sizes are balanced, we merged them based on age range. Therefore, we divided the participants into two groups: group G with 11 participants aged between 18 and 39 and group H with 13 participants aged between 40 and 60 (as reported in Table 13).

The results in Fig. 11a confirm our initial hypothesis: group G (mean reaction time 6.42 s) is faster than group H (mean reaction time 8.025 s). Finally, to validate our assumptions, we ran an analysis of variance (ANOVA). The probability of the null hypothesis being true for \(\alpha = 0.05\), \(F_{0.05}[1,22] = 4.30\) is \(p=0.005\).
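The degrees of freedom quoted alongside the F values throughout Sect. 7 can be recovered from the group sizes stated in the text. As a worked check, assuming the standard one-way ANOVA definitions with \(k\) groups and \(N\) participants in total (the symbols \(k\) and \(N\) are introduced here for illustration only):

\[ df_{\text{between}} = k - 1, \qquad df_{\text{within}} = N - k \]

\[ \text{Hypothesis 1: } k=2,\ N=12 \Rightarrow [1,10]; \qquad \text{Hypothesis 2: } k=2,\ N=5+6=11 \Rightarrow [1,9] \]

\[ \text{Hypothesis 3: } k=2,\ N=12+12=24 \Rightarrow [1,22]; \qquad \text{Hypothesis 4: } k=2,\ N=11+13=24 \Rightarrow [1,22] \]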

An interesting result, which emerges when comparing the percentage of mistakes (see Fig. 11b), is that users of group H (mean percentage of mistakes 32%) made fewer mistakes than those of group G (mean percentage of mistakes 45%). We believe this could be due to the interaction routine deployed by the robot. The participants of group G were more inclined to move tokens without waiting for the robot assistance. On the contrary, the participants of group H pondered a bit more on the next move to perform, so as to take maximum advantage of the support provided by the robot.

8 Lessons Learned

Aside from the user study, we also aimed to evaluate our robotic platform in a real environment while exposed to the general public in very short-term experiments, so as to avoid user adaptation to the robot. The most relevant lessons learned are:

- Prioritise the interaction modalities. The gesture interaction modality of the robot is sometimes perceived as too slow and time-consuming compared to speech. Even if it does not affect the user performance, since the time is computed from the moment the robot’s supportive action ends, the participants preferred faster ways of interaction.

- Synchronization between speech and gestures matters. Speech and gesture interactions need to be perfectly synchronized, otherwise the strength provided by combining these two modalities may be undermined. Moreover, this is an essential requirement to provide the user with the impression of a natural interaction.

- Perception system needs to be reliable. If the perception system relies on vision, it needs to be tested in more extreme conditions in the laboratory, since these conditions are the most common in the real world. A false positive in the detection has a huge impact on the entire system and that is not acceptable in applications that are supposed to work autonomously and deal with people. For instance, in the proposed board gaming context, the recognition of a wrong token can lead the system to a completely different representation of the current state of the environment and, consequently, the robot will behave differently than expected. In the proposed experiment, to prevent this kind of failure we fine-tuned the parameters of the algorithms presented in Sect. 3.1 to guarantee the constant detection and recognition of all the tokens.

- Robustness to catch unexpected user behaviour. The Cognitive System needs the ability to deal with unexpected events. For instance, moving more than one token at a time is not allowed by the system and by the rules of the game, but some people are eager to complete the game as fast as possible, sometimes just to challenge the system or to validate its reliability. Moreover, if the system were able to understand the intention of the user, it might decide not to provide any assistance when the participant decides to move another token just after making a move. In other words, the robot should not interfere with the user; on the contrary, it should be able to recognize when the user needs help because he or she is not able to make the correct move.

9 Conclusions and Future Work

In this work we have presented a Cognitive System, embedded in a robot, which aims at supporting, encouraging and socially interacting with users while they are playing a game in the context of a general public venue. We believe that the deployment of robots in real-world environments is an essential requirement for researchers in order to better understand the weaknesses and the limitations of their systems. Experimental results and especially the lessons learned in those scenarios are what leads to technological advancement in HRI.

We exposed the entire system to a two-day-long experimentation in a real-world environment, where the robot interacted with several untrained participants. The importance of STHRI in the context of board gaming has thus been investigated with a special focus on its effectiveness for improving user performance and overall experience during the game.

The results on STHRI adaptability provide evidence on how the robot can adapt to the learned user behaviour during only the interactions of one game session and be effective to improve user performance (Hypothesis 1, Sect. 7.1). Moreover, we obtained some interesting insights from our experimental results that can be used as a starting point for future work. First, the interaction modalities can make the difference in improving users’ performance when the environment is very noisy and the instructions to the user are not provided clearly (Hypothesis 2, Sect. 7.2). Second, people with different backgrounds can have almost the same performance as people with HRI and engineering background, meaning that the robot levels of assistance are not only effective but also easy to understand by a generic untrained user (Hypothesis 3, Sect. 7.3). Third, younger participants can perform moves faster but with worse results than older participants (Hypothesis 4, Sect. 7.4).

We intend to carry out future work in two directions. On the robotics side, we will improve our system by addressing the lessons learned during the experiments and reported in Sect. 8. On the user-study side, we are preparing the evaluation of the current robotic system in an extended user study with patients affected by MD and AD. This longitudinal study will allow us to eventually provide a useful tool for therapists and caregivers working in health-care facilities. In this way, we aim to reduce the caregivers’ burden and workload. Furthermore, the long-term user study will allow us to extend and evaluate the adaptability of our system in long-term interaction. In this context, the robot needs to take into account the entire history of user’s actions and be able to quickly react to the user’s performance changes over time. Also, user’s engagement is an essential requirement for long-term interaction. To this end, we aim to extend the robot with the capability to learn the user’s preferences on each level of assistance.

In conclusion, this work is an effort at developing an effective embodied assistive system in a real scenario, trying to address the issues that arise in an uncontrolled environment and with untrained users.

References

[1] Aaltonen I, Arvola A, Heikkilä P, Lammi H (2017) Hello Pepper, may I tickle you?: children’s and adults’ responses to an entertainment robot at a shopping mall. In: Proceedings of the companion of the 12th ACM/IEEE international conference on human–robot interaction. NY, USA, pp 53–54

[2] Alami R, Clodic A, Montreuil V, Sisbot E, Chatila R (2005) Task planning for human–robot interaction. In: Proceedings of the joint conference on smart objects and ambient intelligence: innovative context-aware services: usages and technologies, pp 81–85

[3] Andriella A, Alenyà G, Hernández-Farigola J, Torras C (2018) Deciding the different robot roles for patient cognitive training. Int J Hum Comput Stud 117:20–29

[4] Bennewitz M, Faber F, Joho D, Schreiber M, Behnke S (2005) Towards a humanoid museum guide robot that interacts with multiple persons. In: Proceedings of the 5th IEEE-RAS international conference on humanoid robots, pp 418–423

[5] Bensch S, Jevtić A, Hellström T (2017) On interaction quality in human–robot interaction. In: Proceedings of the 9th international conference on agents and artificial intelligence, vol 2. pp 182–189

[6] Breazeal C, Dautenhahn K, Kanda T (2016) Social robotics. In: Siciliano B, Khatib O (eds) Springer handbook of robotics. Springer, Cham, pp 1935–1972

[7] Cashmore M, Fox M, Long D, Magazzeni D, Ridder B, Carrera A, Palomeras N, Hurtos N, Carreras M (2015) ROSPlan: planning in the robot operating system. In: Proceedings of international conference on AI planning and scheduling, pp 333–341

[8] Chan J, Nejat G (2012) Social intelligence for a robot engaging people in cognitive training activities. Int J Adv Robot Syst 9:1–13

[9] Chen Y, Wu F, Shuai W, Chen X (2017) Robots serve humans in public places-KeJia robot as a shopping assistant. Int J Adv Robot Syst 14(3):1–20

[10] Coles AJ, Coles A, Fox M, Long D (2010) Forward-chaining partial-order planning. In: 20th international conference on automated planning & scheduling, pp 42–49

[11] Dayoub F, Morris T, Corke P (2015) Rubbing shoulders with mobile service robots. IEEE Access 3:333–342

[12] Fox M, Long D (2003) PDDL2.1: an extension to PDDL for expressing temporal planning domains. J Artif Intell Res 20:61–124

[13] Gao Y, Barendregt W, Obaid M, Castellano G (2018) When robot personalisation does not help: insights from a robot-supported learning study. In: RO-MAN 2018 - 27th IEEE international symposium on robot and human interactive communication, vol 1. pp 705–712

[14] Gordon G, Spaulding S, Westlund JK, Lee JJ, Plummer L, Martinez M, Das M, Breazeal C (2016) Affective personalization of a social robot tutor for children’s second language skills. In: Proceedings of the 30th conference on artificial intelligence, pp 3951–3957

[15] Hemminghaus J, Kopp S (2017) Towards adaptive social behavior generation for assistive robots using reinforcement learning. In: Proceedings of the 12th ACM/IEEE international conference on human–robot interaction. ACM Press, New York, USA, pp 332–340

[16] Hoffmann J (2003) The metric-FF planning system: translating “ignoring delete lists” to numeric state variables. J Artif Intell Res 20:291–341

[17] Hong A, Igharoro O, Liu Y, Niroui F, Nejat G, Benhabib B (2019) Investigating human–robot teams for learning-based semi-autonomous control in urban search and rescue environments. J Intell Robot Syst 94(3):669–686

[18] Kanda T, Shiomi M, Miyashita Z, Ishiguro H, Hagita N (2009) An affective guide robot in a shopping mall. In: Proceedings of the 4th ACM/IEEE international conference on human robot interaction, pp 173–180

[19] Lallement R, de Silva L, Alami R (2014) HATP: an HTN planner for robotics. arXiv:1405.5345

[20] Leite I, Castellano G, Pereira A, Martinho C, Paiva A (2014) Empathic robots for long-term interaction: evaluating social presence, engagement and perceived support in children. Int J Soc Robot 6(3):329–341

[21] Matarić MJ, Scassellati B (2016) Socially assistive robotics. Springer Handb Robot 6:1973–1994

[22] Nardi L, Iocchi L (2014) Representation and execution of social plans through human–robot collaboration. In: Fifth international conference on social robotics, pp 266–275

[23] Sabanovic S, Michalowski MP, Simmons R (2006) Robots in the wild: observing human–robot social interaction outside the lab. In: 9th IEEE international workshop on advanced motion control, pp 596–601

[24] Sanelli V, Cashmore M, Magazzeni D, Iocchi L (2017) Short-term human–robot interaction through conditional planning and execution. In: Proceedings of international conference on automated planning and scheduling, pp 540–548

[25] Tapus A, Ţapus C, Matarić MJ (2009) The use of socially assistive robots in the design of intelligent cognitive therapies for people with dementia. In: IEEE international conference on rehabilitation robotics, pp 924–929

[26] Tomic S, Pecora F, Saffiotti A (2014) Too cool for school: adding social constraints in human aware planning. In: Proceedings of the 9th international workshop on cognitive robotics, CogRob (ECAI)

[27] Tonkin M, Vitale J, Ojha S, Williams MA, Fuller P, Judge W, Wang X (2017) Would you like to sample? Robot engagement in a shopping centre. In: 26th IEEE international symposium on robot and human interactive communication, pp 42–49

[28] Tsiakas K, Abujelala M, Makedon F (2018) Task engagement as personalization feedback for socially-assistive robots and cognitive training. Technologies 6(2):49

[29] Yuen HK, Illingworth J, Kittler J (1988) Ellipse detection using the Hough transform. In: Proceedings of the Alvey vision conference, vol 8. pp 1–41

Funding

This project has been partially funded by the European Union’s Horizon 2020 research and innovation programme under the Marie Sklodowska-Curie grant agreement SOCRATES (No 721619), by the Spanish Ministry of Science and Innovation HuMoUR (TIN2017-90086-R), and by the Spanish State Research Agency through the María de Maeztu Seal of Excellence to IRI (MDM-2016-0656).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Ethical Standard

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Cite this article

Andriella, A., Torras, C. & Alenyà, G. Short-Term Human–Robot Interaction Adaptability in Real-World Environments. Int J of Soc Robotics 12, 639–657 (2020). https://doi.org/10.1007/s12369-019-00606-y

DOI: https://doi.org/10.1007/s12369-019-00606-y