Abstract

Humans show their emotions with facial expressions. In this paper, we investigate the effect of a humanoid robot’s head position on imitating human emotions. In an Internet survey using an animation of the robot, we asked participants to adjust the head position of a robot to express six basic emotions: anger, disgust, fear, happiness, sadness, and surprise. We found that humans expect a robot to look straight down when it is angry or sad, to look straight up when it is surprised or happy, and to look down and to its right when it is afraid. We also found that when a robot is disgusted some humans expect it to look straight to its right and some expect it to look down and to its left. We found that humans expect the robot to use an averted head position for all six emotions. In contrast, other studies have shown approach-oriented emotions (anger and joy) being attributed to direct gaze and avoidance-oriented emotions (fear and sadness) being attributed to averted gaze.

1 Introduction

Humans convey information about their emotional state with facial expressions. Emotions provide information about the inner self of an individual, about the action that preceded the facial expression of emotion, and about the action that is most likely to follow it [12]. Therefore, a humanoid robot capable of making facial expressions of emotion provides information to its surrounding environment about its most probable next action. Nadel et al. [23] showed emotional resonance (i.e., automatic facial feedback during an emotional display) to robotic facial expressions. This suggests that it is important for a robot to use artificial facial expressions when interacting with a human.

The human face uses more than 44 muscles combined in non-trivial ways to produce many different facial expressions [28]. Humanoid robot technology is not yet at the point where it can produce all human facial expressions. However, humanoid robots can imitate basic emotions with artificial facial expressions. Ekman and Friesen [13, 14] proposed that humans use facial expressions to convey six basic emotions: anger, disgust, fear, happiness, sadness, and surprise. In an earlier study [17], we used LED patterns around the eyes of the Aldebaran Nao robot [3] as artificial facial expressions to imitate human emotions. Each eye of the Nao robot consists of eight partitions, each partition containing a red, green, and blue LED. In a pre-test, the colors that people associate with these basic emotions were determined and implemented in the robot. Most emotions were correctly recognized, but none of the LED patterns we used in that study were recognized as Disgust. One possible explanation for this is that in our study the robot was looking directly at the participant, and research has shown that humans tend to express approach-oriented emotions, such as anger, joy, and love, more with a direct gaze, while they express avoidance-oriented emotions, such as disgust, embarrassment, and sorrow, more with an averted gaze [2, 21]. Thus, it would seem that simply adding direct and averted head positions to approach-oriented and avoidance-oriented emotions, respectively, would disambiguate them and restore recognition.

In addition to disambiguating avoidance-oriented emotions (e.g., Disgust), utilizing head position to express interpersonal attitudes and emotions is a motivation for this work [4]. For example, Ham et al. [16] observed that gazing alone increased the persuasive power of the Nao robot; and it was even more convincing when gestures were utilized with gazing. They also found that gestures without gazing made it less convincing.

In this paper, we investigated the effect of a humanoid robot’s head position on imitating human emotions. In an Internet survey through animation, we asked participants to adjust the head position of the Nao robot to express the six basic emotions: anger, disgust, fear, happiness, sadness, and surprise. We expected to find differences in head position between the six emotions.

2 Related Work

Some studies have shown that imitating human emotions is essential for a robot to be accepted by humans [5, 22]. Other studies have shown that imitating human emotions can have the opposite effect [25].

Regarding the imitation of emotions in robots, a number of authors have utilized gaze and head movements to emulate human emotions. Robots portray artificial facial expressions differently depending on their morphology. At one end of the morphology spectrum is the Geminoid F robot [8]. The Geminoid F robot is modeled to resemble a female human’s outer appearance to the finest detail. Its advanced facial mechanics allow it to express emotions closely resembling those of humans.

Probo is an example of a robot with a non-human morphology [27]. It is a huggable elephant-like robot with a 20 degrees-of-freedom head which can make facial expressions and eye contact.

Kismet [10] has less functionality than Geminoid F, but still uses facial expression to imitate emotion. Kismet is a robot head about 50% larger than an adult human head. Its eye gaze and head orientation each have three degrees-of-freedom. Its eyelids, eyebrows, lips, and ears have another 15 degrees-of-freedom. Similarly, BERT2 is a robot with a face that has eyebrows, eyelids, eyeballs, and a mouth with thirteen degrees-of-freedom [6].

An example with even fewer capabilities is EMYS [26]. This robot has eleven degrees-of-freedom. The head can move from side-to-side; however, the eyes cannot gaze the other way. EMYS also does not have any lower eyelids. The mouth can only open and close. This and the lack of a lower eyelid made it difficult to express some emotions.

Other robots with limited facial expression capabilities, like the Nao, are AMI [20] and Maggie [15]. AMI communicated nonverbally with gesture and posture. Maggie has only two black eyes and movable eyelids, a two degrees-of-freedom head, and mouth shape with colored lights synchronized with speech to imitate emotions.

Bethel [9] considered how to imitate emotions in search-and-rescue and military robots with limited expressive potential. She focused on utilizing multi-modal expression to communicate the robot’s emotional state and empathic behavior.

More closely related to our work with the Nao robot, Beck, Cañamero, and Bard [7] overcame the Nao’s incapability to communicate facial expressions by using body language. The KSERA project also imbued Nao with artificial emotions utilizing a combination of body posture, hand gestures, voice, and eye LED patterns [18, 19].

3 Methods

3.1 Design

This study used a repeated measures factorial design. Emotion was the independent variable: anger, disgust, fear, happiness, sadness, and surprise [13]. Head-movement direction and head-movement duration were the dependent variables. We defined head-movement duration as the time it took the robot to move its head from a neutral position to the position depicting the emotion. Thus, for a given head displacement, movement duration is inversely related to head velocity, i.e., longer movement durations correspond to slower movements.

Although we used animations of the Nao robot in our experiment, we believe the results are applicable to any humanoid robot with the capability of changing the direction of its gaze either through head movement or eye movement.

3.2 Participants

There were 44 participants (33 males and 11 females) from the Netherlands with an age range of 15–66 (mean = 29.8, SD = 15.5). Participants took part in the study willingly with a chance of winning a lottery in which there were 20 prizes of

3.3 Apparatus

The experiment was conducted as an internet survey which ran on a server at the Eindhoven University of Technology. The site used PHP v5.2 and MySQL v5.5. Participants needed an internet browser capable of playing Flash 11 objects. The internet survey was available in both Dutch and English. Statistics about which language was used were not collected.

3.4 Procedure

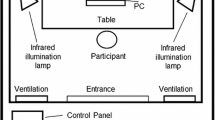

The internet survey started with a general questionnaire asking for gender, age, and email address. After the general questionnaire, the participant was presented with an explanation of how the interface worked and what they needed to do. The explanation did not inform them about the purpose of the study or mention any specific emotion. After the explanation, they were shown the image in Fig. 1 and asked to choose the head direction and movement duration that they thought matched the Nao experiencing a specific emotion.

All six emotions were presented three times in random order, with no consecutive emotions the same. The question was: “Choose the gaze so that you think the Nao experiences emotion?”, where emotion was replaced by one of the six emotions. The participant then chose the movement duration with a slider and the direction with a keypad, as shown in Fig. 1.
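The trial ordering described above (six emotions, three repetitions each, no emotion twice in a row) can be sketched as follows. This is a minimal illustration using rejection sampling, not the survey's actual PHP implementation, which the text does not describe; the function name `make_trial_order` and the fixed seed are hypothetical.

```python
import random

EMOTIONS = ["anger", "disgust", "fear", "happiness", "sadness", "surprise"]


def make_trial_order(emotions=EMOTIONS, repetitions=3, rng=None):
    """Return a shuffled trial list in which no emotion appears twice in a row.

    Rejection sampling: reshuffle until no two neighbouring trials match.
    This is an assumed approach; the original survey code is not published here.
    """
    rng = rng or random.Random()
    trials = list(emotions) * repetitions
    while True:
        rng.shuffle(trials)
        # Accept the shuffle only if every neighbouring pair differs.
        if all(a != b for a, b in zip(trials, trials[1:])):
            return list(trials)


# Hypothetical seed, used only to make the example reproducible.
order = make_trial_order(rng=random.Random(0))
print(order)
```

Rejection sampling keeps the sketch short and gives every valid ordering the same probability; for 18 trials it typically needs only a handful of reshuffles.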

The movement duration slider ranged from 0.5 to 4 s with a default value of 2 s. Once the participant clicked one of the directions on the keypad, the robot’s head moved to the selected direction in the time indicated by the movement duration slider. The direction keypad controlled the yaw (i.e., left and right direction) and pitch (i.e., up and down direction) of the robot’s head as shown in Fig. 2. The participants could change the values of the movement duration and direction until they were satisfied that the direction and movement duration were what they would expect the robot to use when it imitated the specified emotion. The direction and movement duration were recorded when they clicked on the “Next” button at the bottom of the image.

The participants could replay the movement resulting from their chosen combination of movement duration and head direction before moving to the next screen. This let them watch the robot actually make the movement in the selected time, so they could verify that the head direction and movement duration they had selected matched their expectation.

4 Results

The results of the participants’ selections for direction and movement duration are reported separately.

4.1 Direction of Head: Yaw and Pitch

Figure 3 contains bubble charts for each emotion where the size of each bubble reflects the number of times the participants selected a given direction. The exact number is given in Table 1, with the predominant head direction bolded. Disgust had two equally predominant head directions. Figure 4 shows the corresponding postures of the Nao for the most predominant head direction as chosen by the participants for each emotion. Figure 3 and Table 1 also show that the participants’ preferences for the head direction for the emotions disgust and fear were much more equally distributed across head directions than for the other emotions.

Fig. 3 Bubble charts for each emotion showing the number of times the participants selected each direction. The number represented by each bubble is given in Table 1

Table 2 shows the mean and SE (n = 132) of the yaw and pitch angles for each emotion. From Table 2 it is clear that the mean yaw angle is effectively zero for all emotions, whereas happiness and surprise are associated with looking up and sadness with looking down. To test this, we performed a multivariate ANOVA with yaw and pitch as dependent variables and emotion as the independent variable. This revealed a significant effect of emotion on pitch (F(5,786) = 120.607, \(p<0.001\)), but not on yaw (F(5,786) = 0.827, \(p=0.53\)). A post hoc test using Tukey’s HSD showed that the mean pitch angle differed between most emotions (absolute difference \(>\,8.1^{\circ }, p<0.001\)). Only for anger and fear (\(\Delta \hbox {m}=-5.1^{\circ }, p=0.11\)), and happiness and surprise (\(\Delta \hbox {m}=-1.3^{\circ }, p=0.987\)) was the pitch angle not significantly different (see Table 2).
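As a consistency check on the sample size and degrees of freedom, and assuming (the text does not state this explicitly) that every one of the 44 participants contributed all three repetitions of each emotion as a separate observation, the numbers work out as:

\[n = 44 \times 3 = 132 \text{ per emotion},\qquad N = 6 \times 132 = 792,\qquad df_{1} = 6-1 = 5,\qquad df_{2} = N-6 = 786.\]

These values agree with the reported n = 132 and F(5,786).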

4.2 Distribution of Head Direction: Circular Statistics

From the bubble charts (Fig. 3) it seems that the head directions chosen for fear and disgust are much more uniformly distributed than those chosen for, say, anger. To assess this, we determined the movement direction for each of the eight movements of the robot’s head. We defined up as \(0^{\circ }\), right-up as \(45^{\circ }\), right as \(90^{\circ }\), and so on. Note that for the straight-ahead direction (central panel in Fig. 2) there is no movement, so these data are not included. Using circular statistics, the mean movement direction \(\bar{\theta }\) can be calculated as follows. First, the average sine and average cosine values are computed from the sampled direction angles:

\[\bar{s}=\frac{1}{n}\sum _{i=1}^{n}\sin \theta _i,\qquad \bar{c}=\frac{1}{n}\sum _{i=1}^{n}\cos \theta _i,\]

where \(\theta _i \) is the ith sample of the direction angle selected by participants of the study and \(n\) is the number of samples. From the average sine and cosine values, the “average” direction angle \(\bar{\theta } \) is determined:

\[\bar{\theta }=\mathrm {atan2}\left( \bar{s},\bar{c}\right) .\]

The standard deviation in degrees is given by:

\[\mathrm {SD}=\frac{180}{\pi }\sqrt{2\left( 1-\sqrt{\bar{s}^{2}+\bar{c}^{2}}\right) }.\]

For a unimodal distribution, the SD represents the width of the peak and \(\bar{\theta }\) its location. Table 3 gives the mean movement direction and standard deviation for each emotion. Table 3 confirms the findings from Fig. 3 that anger, fear and sadness are associated with looking down, whereas happiness, surprise and disgust are associated with looking upward. To check whether participants preferred some directions over others, we tested whether the distribution of chosen directions deviated significantly from a flat distribution, i.e., no preference. Using a Chi-squared goodness-of-fit test, we found that the distribution of directions selected by the participants differed significantly from a uniform distribution for every emotion; the least significant result was for fear (\(\chi ^{2}(7) = 26.248, p<0.001\)). Nonetheless, fear and disgust are close to uniform, whereas the other emotions are unimodal. This can be seen from the SD in Table 3: both fear and disgust are close to the theoretical limit of SD\(_\mathrm{max}=\sqrt{2}\cdot 180/\pi \approx 81^{\circ }\), which corresponds to a uniform distribution. The unimodality of the other emotions is clearly visible in Fig. 5, where the frequency of each movement direction is plotted for each emotion.
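The circular mean and standard deviation described above can be sketched in Python as follows. This is a minimal illustration, not the authors’ analysis code. It assumes the SD is defined as \(\sqrt{2(1-R)}\) in radians (with \(R\) the length of the mean sine/cosine vector), converted to degrees, because that definition reproduces the stated limit of \(\sqrt{2}\cdot 180/\pi \approx 81^{\circ }\) for a uniform distribution. The function name `circular_mean_sd` and both sample lists are hypothetical and are not data from the study.

```python
import math


def circular_mean_sd(angles_deg):
    """Return (mean direction, SD), both in degrees, for direction angles in degrees.

    Convention from the text: up = 0, right-up = 45, right = 90, and so on.
    """
    n = len(angles_deg)
    # Average sine and cosine of the sampled direction angles.
    s_bar = sum(math.sin(math.radians(a)) for a in angles_deg) / n
    c_bar = sum(math.cos(math.radians(a)) for a in angles_deg) / n
    # Quadrant-aware mean direction, mapped into [0, 360).
    mean_deg = math.degrees(math.atan2(s_bar, c_bar)) % 360.0
    # Mean resultant length: 1 for identical angles, 0 for a uniform spread.
    r = math.hypot(s_bar, c_bar)
    # Assumed SD definition: sqrt(2 * (1 - R)) radians, expressed in degrees.
    sd_deg = math.degrees(math.sqrt(2.0 * max(0.0, 1.0 - r)))
    return mean_deg, sd_deg


# Hypothetical samples, for illustration only (not taken from Table 1).
mostly_down = [180] * 10 + [135] * 2 + [225] * 2
uniform = [0, 45, 90, 135, 180, 225, 270, 315]

print(circular_mean_sd(mostly_down))  # narrow peak around "down"
print(circular_mean_sd(uniform))      # SD near the 81 degree limit
```

For the uniform sample the mean direction itself is not meaningful, since the mean sine and cosine are both effectively zero; only the SD is informative in that case.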

4.3 Direction of Head: Direct versus Averted

In order to compare our results with the approach-avoidance theory [1, 2], we analyzed whether the selected head directions were averted (in any direction) or not. Figure 6 compares the head direction in terms of direct (i.e., yaw and pitch are both \(0^{\circ }\)) and averted (i.e., not direct). There is a significant effect of emotion on the ratio of averted to direct head positions. We then tested this ratio against chance. Since there were 8 target positions for averted and only 1 for direct, we expected a probability of 1/9 = 11.11% for direct and a probability of 8/9 = 88.89% for averted if participants chose one movement target at random. We tested for each emotion whether the frequency of choosing a direct or averted position differed significantly from random using a Chi-squared test: anger, happiness, sadness and surprise significantly deviated from chance (\(\chi ^{2}(1)> 5.761, p<0.016\)), where the direct head position was chosen more often than chance for anger, happiness and surprise, and less often for sadness. Head position aversion did not differ from chance for disgust (\(\chi ^{2}(1) = 1.031, p = 0.31\)) and fear (\(\chi ^{2}(1)=0.009, p=0.926\)). So, in combination with the fact that for fear and disgust the distribution of positions was relatively uniform (see Fig. 3), these results indicate that, for these two emotions, people mostly selected head positions at random. For the other emotions head positions have a unimodal distribution, so there is a clear directional preference, as was already shown in Tables 1 and 2.
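The comparison against chance described above can be sketched in Python as follows. This is a minimal illustration rather than the authors’ analysis code: the function name `chi2_direct_vs_averted` and the example counts are hypothetical and are not taken from Table 1. With one degree of freedom, the Chi-squared p-value can be computed exactly from the complementary error function, so only the standard library is needed.

```python
import math


def chi2_direct_vs_averted(n_direct, n_averted, p_direct=1 / 9):
    """Chi-squared goodness-of-fit test (1 df) of direct vs. averted choices.

    p_direct is the chance probability of the direct position: one of the
    nine selectable head positions, as stated in the text.
    """
    n = n_direct + n_averted
    exp_direct = n * p_direct
    exp_averted = n * (1.0 - p_direct)
    chi2 = ((n_direct - exp_direct) ** 2 / exp_direct
            + (n_averted - exp_averted) ** 2 / exp_averted)
    # For 1 df, P(X > chi2) = erfc(sqrt(chi2 / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2.0))
    return chi2, p_value


# Hypothetical counts out of 132 trials (illustration only, not study data).
near_chance = chi2_direct_vs_averted(15, 117)    # close to the expected 14.7 direct
more_direct = chi2_direct_vs_averted(30, 102)    # direct chosen about twice as often

print(near_chance)
print(more_direct)
```

Testing against the 1/9 versus 8/9 split, rather than against 50/50, is what separates a genuine preference for averted positions from the simple fact that eight of the nine selectable positions are averted.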

4.4 Movement Duration for Head Direction to Change

Figure 7 shows the mean movement duration selected by the participants for each of the six emotions. A repeated measures ANOVA test was performed on the data with the six emotions as fixed factors and movement duration as the dependent variable. We found a significant effect of emotion (\(F(5, 215) = 4.225, p=0.0011, \eta ^{2}=0.089\)).

There were 40 out of 44 participants who at least once selected something other than the default value (2 s). Since it is difficult to know whether the other four participants selected the default value intentionally or not, we show the mean movement duration without those four included in Fig. 8. A univariate ANOVA test was performed on this data with movement duration as the dependent variable and the six emotions as fixed factor. The effect of emotion is still highly significant (\(F(5, 195) = 4.257, p = 0.0011,\eta ^{2}=0.098\)). A post-hoc analysis using Tukey’s HSD reveals that the movement durations are significantly different for almost all comparisons (\(p<0.045\)). Only the movement duration for surprise does not differ significantly from happiness (\(p=0.67\)), fear (\(p=0.44\)) and anger (\(p=0.35\)). Fear and anger also do not differ significantly from each other (\(p=0.88\)).
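As a consistency check on the reported degrees of freedom, and assuming (the text does not state this) that the three repetitions per emotion were averaged for each participant and analyzed with \(k=6\) emotions as a within-subject factor over \(n\) participants, the expected values are:

\[df_{1}=k-1=5,\qquad df_{2}=(k-1)(n-1)=\begin{cases}5\times 43=215, &amp; n=44,\\ 5\times 39=195, &amp; n=40.\end{cases}\]

Both agree with the reported \(F(5, 215)\) for all 44 participants and \(F(5, 195)\) for the 40 participants who changed the default value at least once.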

5 Discussion

In this study we investigated whether people prefer a certain head position to accompany different emotional expressions, and whether movements to these head positions should be fast or slow. In addition, we looked at whether the approach-avoidance theory applies to robotic head shifts.

5.1 Direction of Head

Participants mostly had a clear preference for a head direction. They preferred the robot to look straight down for both anger and sadness, and to look straight up for both surprise and happiness. We found that for these emotions the movement direction had a unimodal distribution, the SD of which was about \(35^{\circ }\). Their preferences for the head direction of fear and disgust were different. For these two emotions, the distribution of chosen head directions was close to uniform, indicating no clear preference.

Starting with a straight-ahead direction and asking the participant to choose a direction shift that exhibits a given emotion might have led the participants to move the robot’s head rather than leave it looking straight ahead. However, the results in Table 1 show that this probably is not the case, because for each of the six emotions some participants chose the straight-ahead direction. In fact, it was the second most preferred direction for anger, happiness, and surprise.

5.2 Averted Gaze Versus Direct Gaze

According to Adams and Kleck [1, 2], approach-oriented emotional dispositions (anger and joy) are attributed to direct-gaze faces and avoidance-oriented emotional dispositions (fear and sadness) are attributed to averted-gaze faces. To compare our results with those of Adams and Kleck, we analyzed for which emotions an averted head position was selected and for which a direct head position. We found that, in general, an averted position was chosen in more than 74% of the trials. Indeed, we find that the emotions differ in the selected pitch, with happiness and surprise associated with an upward position, and anger and sadness with a downward position. Fear and disgust have no clear pitch associated with them; for these emotions we found an almost uniform distribution of head directions. So our results (see Fig. 6) partially contradict the results of Adams and Kleck [1, 2] in the sense that anger and joy (happiness) are averted, and fear is not averted. A deeper look at our work and theirs provides a number of reasons why this might be the case. First, their experiments were with human faces and ours were with a robot face. It is possible that humans regard direct and averted head positions of a robot differently from the gaze direction of a human. Although this explanation cannot be excluded completely, we think it is unlikely because it has been shown that people perceive head directions and eye contact of the Nao robot similarly to those of humans [11]. Second, an averted gaze in their experiments was constrained to yaw; in our experiments an averted position could be a combination of yaw and pitch. Looking down, for instance, is often associated with sadness, so it might be that a direct gaze in their experiments corresponds to a pitch-down or pitch-up response in our experiment. This could explain why anger and joy (happiness) were not averted in their studies, as non-zero pitch angles were not included.
However, it does not explain fear, as we found no directional preference for this emotion. Third, in our experiments the emotion was given and the participant chose the matching direction, whereas in their experiments the direction was given and the participant chose the matching emotion. Their approach measures a distribution of emotions for each head direction, whereas ours measures a distribution of gaze directions for each emotion. This will only make a difference if the probability distributions are multimodal, but we found only unimodal and uniform distributions. So it seems that the approach-avoidance theory is not really supported for humanoid robots. For sadness we observe avoidance, but we also observe non-zero pitch for anger, happiness and surprise. The latter could have been missed in the earlier studies because we only found significant effects for the pitch angle and not the yaw angle. Therefore, it would be interesting to repeat Adams and Kleck’s studies for human faces with varying pitch angles included.

One possible confound in the reasoning above is that our task may have introduced a bias towards averted directions, because the initial position of the Nao was always straight ahead. Participants may therefore have been biased towards choosing an averted direction, also because choosing a movement duration does not make much sense otherwise. That is why we also compared the percentage of averted/direct choices to what one would obtain if participants chose randomly, i.e., 89%/11%. We then find that the percentage of averted/direct choices does not differ from random selection for fear and disgust, is more direct for anger, happiness and surprise, and is more averted for sadness. This pattern of results is consistent with the approach-avoidance theory in the sense that anger and joy (happiness) are direct and sadness is averted. Only fear is not consistent with this theory, because it is neither averted nor direct.

5.3 Movement Duration for Head Direction to Change

The differences in movement duration between the six emotions were statistically significant (see Fig. 7), with means varying between 1.32 s (happiness) and 1.95 s (sadness). Ordered from longest to shortest, the participants’ preferred movement durations were for sadness, disgust, anger, fear, surprise, and happiness. This suggests a preference for longer movement durations for the negative emotions and shorter movement durations for the positive emotions. Interestingly, the two opposite emotions of sadness and happiness are at the two ends of the movement duration range.

6 Conclusion and Areas for Future Study

Our experiments show that the direction of a robot’s head position can be used to support artificial emotional expressions. Based on our results, a robot should look straight down when it is angry or sad, and look straight up when it is surprised or happy. For fear and disgust the direction does not seem to matter much. This behavior pattern is not consistent with Adams and Kleck’s [1, 2] approach-avoidance theory. One possible cause is that Adams and Kleck did not vary the pitch angle, whereas we found a significant effect of emotion on pitch angle. It would be worthwhile to repeat their study with variation of pitch included. So it seems the approach-avoidance theory does not apply to robotic emotional expressions. Instead we find slower, downward movements for negative emotions and faster, upward movements for positive emotions. On the other hand, if we compare our results to random selection, we find partial support for the approach-avoidance theory, as anger and joy are more direct, and sadness is more averted, than random selection. To rule out any experimental bias, it would therefore be very interesting to do an experiment where participants are shown pictures of robot faces in various positions and are asked which emotion they think the robot is trying to imitate. A second future experiment is to perform the animations with another humanoid robot to determine how universal these results are. Finally, based on our results, it is not possible to conclude whether LED color or head direction alone is sufficient to communicate artificial emotional expressions in a non-ambiguous manner. Thus, a third future experiment would be to combine the LED color combinations we found in our first study [17] with the head directions we found in this study to determine whether the combination is adequate to convey artificial emotions clearly. A fourth experiment would be to test whether people are able to identify the emotions from the gaze behaviors derived from this research alone. A fifth experiment would involve real-time direct interaction with a Nao robot where the interlocutors stand at different eye-level heights during the human–robot interaction.

It is also possible that variations in head direction reflect differences in the valence of emotions instead of the approach/avoidance theory. We found similar behaviors for happiness and surprise, and for anger and sadness. According to the circumplex model of affect of Olson et al. [24], anger, happiness, and sadness are high valence emotions and surprise has low valence. Because happiness and surprise elicited similar head directions despite differing in valence, our data do not support the idea that the valence of an emotion drives behavior.

Limitations of this study are that no data were collected about the cultural or ethnic background of the participants and that all the participants were from the Netherlands, hence probably representing the same culture. Cultural differences can have a profound impact on the way in which people display, perceive, and experience emotions.

References

Adams RB, Kleck RE (2003) Perceived gaze direction and the processing of facial displays of emotion. Psychol Sci 14(6):644–647

Adams RB, Kleck RE (2005) Effects of direct and averted gaze on the perception of facially communicated emotion. Am Psychol Assoc 5(1):3–11. https://doi.org/10.1037/1528-3542.5.1.3

Aldebaran Robotics (2012) Nao key features. www.aldebaran-robotics.com/en/Discover-NAO/Key-Features/hardware-platform.html. Access 17 July 2012

Argyle M, Cook M (1976) Gaze and mutual gaze. Cambridge University Press, Oxford

Bartneck C, Reichenbach J, Breemen VA (2004) In your face, robot! The influence of a character’s embodiment on how users perceive its emotional expressions. In: Proceedings of the design and emotion. Ankara, Turkey, pp 32–51

Bazo D, Vaidyanathan R, Lentz A, Melhuish C (2010) Design and testing of a hybrid expressive face for a humanoid robot. In: 2010 IEEE/RSJ international conference on intelligent robots and systems (IROS), pp 5317–5322

Beck A, Cañamero L, Bard K (2010) Towards an affect space for robots to display emotional body language. In: Ro-man, 2010 IEEE. IEEE, pp 464–469

Becker-Asano C, Hiroshi I (2011) Evaluating facial displays of emotion for the android robot Geminoid F. In: 2011 IEEE workshop on affective computational intelligence (WACI), pp 1–8

Bethel CL (2009) Robots without faces: non-verbal social human–robot interaction. University of South Florida

Breazeal C (2003) Emotion and sociable humanoid robots. Int J Hum Comput Stud 59:119–155

Cuijpers RH, van der Pol David (2013) Region of eye contact of humanoid Nao robot is similar to that of a human. In: Herrmann G, Pearson MJ, Lenz A, Bremner P, Spiers A, Leonards U (eds) Lecture notes in computer science: vol 8239: social robotics. Springer, Berlin, pp 280–289. https://doi.org/10.1007/978-3-319-02675-6_28

Ekman P (1999) Basic emotions. In: Dalgleish T, Power M (eds) Handbook of cognition and emotion, vol ch. 3. Wiley, Sussex, pp 45–60

Ekman P, Friesen WV (1969) The repertoire of nonverbal behavior: categories, origins, usage, and coding. Semiotica 1:49–98

Ekman P, Friesen WV (1978) Manual for facial action coding system. Consulting Psychologists Press, Palo Alto

Gorostiza JF, Barber R, Khamis AM, Pacheco MMR, Rivas R, Corrales A, Salichs MA (2006) Multimodal human–robot interaction framework for a personal robot. In: The 15th IEEE international symposium on robot and human interactive communication, 2006 (ROMAN 2006). IEEE, pp 39–44

Ham J, Bokhorst R, Cuijpers RH, van der Pol D, Cabibihan JJ (2011) Making robots persuasive: the influence of combining persuasive strategies (gazing and gestures) by a storytelling robot on its persuasive power. In: Social robotics. Springer, Berlin, pp 71–83

Johnson DO, Cuijpers RH, van der Pol D (2013) Imitating human emotions with artificial facial expressions. Int J Soc Robot 5(4):503–513

Johnson DO, Cuijpers RH, Juola JF, Torta E, Simonov M, Frisiello A, Beck C (2014) Socially assistive robots: a comprehensive approach to extending independent living. Int J Soc Robot 6(2):195–211

Johnson DO, Cuijpers RH, Pollmann K, van de Ven AA (2016) Exploring the entertainment value of playing games with a humanoid robot. Int J Soc Robot 8(2):247–269

Jung HW, Seo YH, Ryoo MS, Yang HS (2004) Affective communication system with multimodality for a humanoid robot, AMI. In: 2004 4th IEEE/RAS international conference on humanoid robots, vol 2. IEEE, pp 690–706

Kleinke CL (1986) Gaze and eye contact: a research review. Psychol Bull 100(1):78

Leite I, Pereira A, Martinho C, Paiva A (2008) Are emotional robots more fun to play with? In: The 17th IEEE international symposium on robot and human interactive communication, 2008 (RO-MAN 2008). IEEE, pp 77–82

Nadel J, Simon M, Canet P, Soussignan R, Blancard P, Canamero L, Gaussier P (2006) Human responses to an expressive robot. In: Proceedings of the sixth international workshop on epigenetic robotics. Lund University cognitive studies, vol 128, pp 79–86

Olson DH, Russell CS, Sprenkle DH (1980) Circumplex model of marital and family systems II: empirical studies and clinical intervention. Adv Fam Interv Assess Theory 1:129–179

Petisca S, Dias J, Paiva A (2015) More social and emotional behaviour may lead to poorer perceptions of a social robot. In: International conference on social robotics. Springer, Cham, pp 522–531

Ribeiro T, Paiva A (2012) The illusion of robotic life: principles and practices of animation for robots. In: Proceedings of the seventh annual ACM/IEEE international conference on human–robot interaction. ACM, pp 383–390

Saldien J, Goris K, Vanderborght B, Vanderfaeillie J, Lefeber D (2010) Expressing emotions with the social robot probo. Int J Soc Robot 2(4):377–389

Wu T, Butko NJ, Ruvulo P, Bartlett MS, Movellan JR (2009) Learning to make facial expressions. In: 8th International conference on development and learning, pp 1–6

Acknowledgements

We would also like to thank Jaap Stelma, Colin Lambrechts, Maurice Houben, Krystian Trninic, and Marijn de Graaf for their contributions to this work.

Funding

This study was funded by the 7th Framework Programme (FP7) for Research and Technological Development (Grant Number 2010-248085).

Ethics declarations

Conflict of interest

The authors declare that they have no conflict of interest.

Cite this article

Johnson, D.O., Cuijpers, R.H. Investigating the Effect of a Humanoid Robot’s Head Position on Imitating Human Emotions. Int J of Soc Robotics 11, 65–74 (2019). https://doi.org/10.1007/s12369-018-0477-4

DOI: https://doi.org/10.1007/s12369-018-0477-4