Abstract

A survey of the principles of chemical kinetics (the “science of change”) is presented here. This discipline plays a key role in the molecular sciences; however, the debate on its foundations has remained open for the 130 years since the Arrhenius equation was formulated on admittedly purely empirical grounds. The great success of this equation in the development of experimental research has motivated the need to clarify its relationship with the foundations of thermodynamics on the one hand and, especially, with those of statistical mechanics (the “discipline of chances”) on the other. The advent of quantum mechanics in the Twenties and the molecular-beam scattering experiments of the second half of the last century have validated collisional mechanisms for reactive processes, probing images of single microscopic events. Molecular dynamics computational techniques have been successfully applied to interpret and predict phenomena occurring in a variety of environments. The focus here is on a key aspect, the effect of temperature on chemical reaction rates, which in cold environments show departures from the Arrhenius law and so, arguably, from Maxwell–Boltzmann statistics. Modern developments use venerable mathematical concepts arising from “criteria for choices” dating back to Jacob Bernoulli and Euler. A formulation of recent progress is detailed in Aquilanti et al. 2017.

1 Introduction

This article concludes a series of papers devoted to recent progress in our understanding of the “world of molecules” from the viewpoint of quantum mechanics. It broadens the scope by discussing how what happens microscopically serves to model macroscopic phenomena, especially chemical reactions and their rates.

1.1 Background

Here, we offer some remarks on the principles of chemical kinetics, a discipline that represents an important aspect of research in the molecular sciences but is based on assumptions whose justification has stimulated many discussions over the past 130 years. The birth of chemical kinetics as a science can be traced back to 1889, with the empirical formulation of the Arrhenius equation, which, despite many decades of investigation, is still under scrutiny with respect to the interpretation of its two parameters A and Ea (see Sect. 2.2).

The great success this equation has had in the development of experimental research draws our attention in particular to its relationship with the foundations of both chemical thermodynamics (Lewis and Randall 1923) and statistical mechanics (Fowler and Guggenheim 1949). Twentieth-century experimental results and their simulations in terms of classical and quantum molecular dynamics have definitively substantiated the collisional interpretation of reactive processes: computational techniques have been applied with notable success in a large variety of scenarios to investigate reaction mechanisms at the microscopic level, both to interpret and to predict reactive processes, greatly extending our knowledge of a key feature, the dependence of chemical reactivity on temperature. This dependence is the subject of this article. We will consider the progress made towards a rigorous formulation of the fundamentals of chemical kinetics in terms of statistical mechanics, beyond Arrhenius’s introduction of the concept of activation energy and the ideas developed by Polanyi and Wigner (1928) and others, among whom Henry Eyring (Glasstone et al. 1941) played a leading role. These authors based their treatments of the evolution of chemical reactions on the identification of an intermediate complex (the transition state) in metastable equilibrium with the reactants. This paper revisits ideas developed essentially in two crucial decades, the Twenties and Thirties of the last century. A recent perspective is presented in Aquilanti et al. 2017, and a formulation of transition-state theory that uniformly includes tunneling as a quantum mechanical effect can be found in a previous study (Carvalho-Silva et al. 2017).

1.2 Motivation and plan of the paper

To understand and control the physical chemistry of materials in the biosphere and in the large variety of environments encountered in basic and applied research, information is needed on the kinetics of the elementary processes involved, particularly on their rates, often over a wide range of conditions and specifically as a function of temperature. At the end of the Nineteenth Century, the acquisition of an increasingly broad phenomenology led to its compaction in the Arrhenius equation; in the mid-1930s various formulations were introduced, the most successful being transition-state theory, which satisfactorily described the state of knowledge of chemical kinetics at the time (Polanyi and Wigner 1928; Eyring 1935; see also Kramers 1940; Truhlar and Garrett 1984; Marcus 1993). In the present age of nanotechnology, experimental progress has extended mainly to the range of low temperatures: important milestones have been achieved that have led to the emergence of new disciplines (astrochemistry, astrobiology, …), which motivate a panorama of alternative paradigms.

Modern computational progress is based on a variety of approaches: the royal road has been to explore rigorous methods of quantum mechanics, while also developing classical or semiclassical approximations. Applications of transition-state theory, which will be briefly discussed in Sect. 3.2, use methods of quantum chemistry and statistical mechanics in which most of the system’s degrees of freedom are “frozen” except for a few, usually only one: precisely the one corresponding to motion along the reaction path, which must find a pass in the landscape of the potential energy surface, much as a trail crosses a mountain range. Knowledge of potential energy surfaces is, therefore, fundamental, and is nowadays pursued through the ample body of theories and computational information provided by quantum chemistry, a discipline that uses advanced codes to calculate the electronic structure of molecular systems. The discussion will allow us in Sect. 5 to outline the modern view of a quantity, the apparent activation energy Ea, by specifically defining its reciprocal, the transitivity γ, and expanding the latter as a power series in β, the reciprocal of the absolute temperature (in energy units, β = 1/kBT) and the fundamental parameter of statistical thermodynamics. Based on this development, we elaborate a formulation beyond those of Arrhenius and Eyring that extends their applicability to low temperatures, as described in Sects. 5 and 6. Conclusions are in Sect. 7.

2 Chemistry, the science of change

2.1 The other side of chemistry

Chemistry is the science of the structure of matter and of its transformations: it is two-faced, and its two faces, that of shapes and that of processes, are reflected in two visions, that of thermodynamics and that of kinetics. Both as a basic and as an applied science, chemistry plays the fundamental role of establishing a bridge between the macroscopic and the microscopic (molecular) world. While the former is perceived directly in our daily experience, the latter is still often regarded as inscrutable. Yet, contrary to what was thought until a century ago, when atoms were infinitely small compared with our ability to observe them, single atoms (or single molecules) can now be “seen” in the laboratory using spectroscopic and imaging techniques that follow them in space and time. We can manipulate molecules in their internal states and design and create materials that, although functional to macroscopic applications, are increasingly small, so that molecular dynamics in many cases allows us to complete the path connecting the simulation of elementary processes to the modeling of complex systems. The word that emerges here is “change”: this concept is elaborated elsewhere (Kasai et al. 2014), together with the vocation of chemistry, in the direction of reaction kinetics, as a science of change (Fig. 1). This is a thread for a discourse on the foundations of chemistry, whose philosophy in the East is oriented towards the science of change, while in the West chemistry is essentially perceived as the science of structures.

From the conceptual point of view, establishing the details of this connection is the task of statistical mechanics, which makes use of the progress of atomic and molecular physics and, even when detailed knowledge of individual processes is impossible, of little meaning, or of no interest, indicates how to take appropriate averages and make probabilistic estimates by which microscopic behavior is projected onto the macroscopic scale. It is, therefore, very timely to develop the debate on the foundations of chemistry, extending it to chemical kinetics beyond the thermodynamic aspects. This theme has proven very fertile, leading to considerable developments but also encountering inherent difficulties, since this task turns out to be harder than that of placing thermodynamics on solid bases. Chemistry widely exploited thermodynamics, which had been born as the science of thermal machines, essentially towards the end of the nineteenth century, following the work of many, notably van’t Hoff (1852–1911), Nobel Laureate in Chemistry in 1901. And, as is well known, although van’t Hoff inspired Arrhenius (Nobel Laureate in Chemistry in 1903) in the formulation of his equation, it is to the latter that we owe the concept of activation energy.

Left (adapted from Kasai et al. 2014): in Chinese and Japanese, chemistry is written with characters that literally mean “science of change”. The two main variants are shown: traditional (化學), as used in Taiwan, Hong Kong and Macau; and simplified (化学), as used in Mainland China and Singapore and also in Japanese “kanji”. In both cases, the first character, 化, means “change”, symbolized by a man moving from standing to sitting. The second character, 學 in traditional or 学 in simplified script, means “study” or “science”; it suggests a child in a school turning the pages of a book. Right: the main cover of the second edition (1929) of the remarkable book by Hinshelwood (1926) on the foundations of modern chemical kinetics. For the subsequent, fully rewritten treatise (Hinshelwood 1940), see Fig. 7.

2.2 The apparent activation energy (Ea) according to Tolman and Laidler

At the beginning of the Preface of their influential textbook, Glasstone et al. 1941 remark:

One of the major unsolved problems of physical chemistry has been that of calculating the rates of chemical reactions from first principles, utilizing only such fundamental properties as the configurations, dimensions, interatomic forces, etc. of the reacting molecules. During the decade that has just ended the application of the methods of quantum mechanics and of statistical mechanics has resulted in the development of what has become known as the “theory of absolute reaction rates,” and this has brought the aforementioned problem markedly nearer solution. It cannot be claimed that the solution is complete, but one of the objects of this book is to show how much progress has been made. It may be pointed out, incidentally, that the theory of absolute reaction rates is not merely a theory of the kinetics of chemical reactions; it is one that can, in principle, be applied to any process involving a rearrangement of matter….

while the incipit of the Introduction states:

The modern development of the theory of reaction rates may be said to have come from the proposal made by S. Arrhenius to account for the influence of temperature on the rate of inversion of sucrose: he suggested that an equilibrium existed between inert and active molecules of the reactants and that the latter only were able to take part in the inversion process. By applying the reaction isochore to the equilibrium between inert and active species, it can be readily shown that the variation of the specific rate of the reaction with temperature should be expressed by an equation of the form:

$$\ln k = \ln A - E_{a}/RT,$$
where Ea is the difference in heat content between the activated and inert molecules and A is a quantity that is independent of or varies relatively little with temperature. Subsequently, the Arrhenius equation was written in the equivalent form:

$$k = A\,e^{-E_{a}/RT},$$
and it is now generally accepted that a relationship of this kind represents the temperature dependence of the specific rates of most chemical reactions, and even of certain physical processes; provided that the temperature range is not large, the quantities A and Ea can be taken constant. For reasons that will appear later, the factor A has sometimes been called the “collision number,” but a more satisfactory term, which will be used throughout this book, is the “frequency factor.” The quantity Ea (Glasstone et al. 1941) is termed the “heat of activation” or “energy of activation” of the reaction; it represents the energy that the molecule in initial state of the process must acquire before it can take part in the reaction, whether it be physical or chemical. In its simplified form, therefore, the problem of making absolute calculations of reaction rates involves two independent aspects: these are the derivation of the energy of activation and of the frequency factor, respectively.
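In practice, the two parameters of the equation above are read off a plot of ln k against 1/T: the slope is −Ea/R and the intercept is ln A. The following is a minimal sketch of that linear least-squares fit; the function name `arrhenius_fit` and the synthetic, noise-free data (generated from assumed values A = 10^13 s^-1 and Ea = 80 kJ/mol) are illustrative only and do not come from the text.

```python
import math

R = 8.314462618  # molar gas constant, J mol^-1 K^-1


def arrhenius_fit(temps, rates):
    """Least-squares fit of ln k = ln A - Ea/(R T); returns (A, Ea) with Ea in J/mol."""
    xs = [1.0 / t for t in temps]        # abscissa: 1/T
    ys = [math.log(k) for k in rates]    # ordinate: ln k
    n = len(xs)
    x_mean = sum(xs) / n
    y_mean = sum(ys) / n
    sxx = sum((x - x_mean) ** 2 for x in xs)
    sxy = sum((x - x_mean) * (y - y_mean) for x, y in zip(xs, ys))
    slope = sxy / sxx                    # equals -Ea/R
    intercept = y_mean - slope * x_mean  # equals ln A
    return math.exp(intercept), -slope * R


# Hypothetical, noise-free data generated from assumed parameters
A_true, Ea_true = 1.0e13, 80_000.0       # s^-1 and J/mol
temps = [300.0, 325.0, 350.0, 375.0, 400.0]
rates = [A_true * math.exp(-Ea_true / (R * t)) for t in temps]

A_fit, Ea_fit = arrhenius_fit(temps, rates)
print(f"A = {A_fit:.3e} s^-1, Ea = {Ea_fit / 1000:.2f} kJ/mol")
```

With real data the points scatter and, as the quotation warns, A and Ea can be treated as constants only over a limited temperature range; systematic curvature of the plot is the signature of the deviations discussed later in the paper.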

The turn of the Forties marked the decisive years that saw the publication of the main treatises (Hinshelwood 1940; Glasstone et al. 1941), on which we base our discussion and which summarize numerous original articles. In discussing these developments and highlighting the contributions of key personalities, we note that Richard Tolman entered the scene and soon exited from it: his basic texts Tolman 1920 and Tolman 1927 are barely mentioned in Glasstone et al. 1941. Tolman perceived that the fundamental scientific revolution, the advent of quantum mechanics, had made his work, based on the Bohr–Sommerfeld “old” quantum theory, outdated. Tolman’s achievements and personality are further described by the Canadian Laidler (1916–2003), a student of Eyring, co-author of the treatise Glasstone et al. 1941 and for decades a witness of the development of the theories of reactivity: today’s accepted formulation of Ea (Fig. 2) is inspired by Tolman (Laidler and King 1983; Laidler 1996).

Tolman (1920, 1927) showed that the logarithmic derivative of the rate constant with respect to β, taken with a minus sign, equals the difference between the average energy of the molecules that actually react and the average energy of all the molecules: according to the International Union of Pure and Applied Chemistry, this is now taken as the definition of the activation energy Ea (Laidler 1996).
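Tolman’s definition, Ea = −d ln k/dβ, can be checked numerically. A minimal sketch, assuming a hypothetical modified-Arrhenius rate law k = A T^n exp(−E0/RT) with illustrative parameters A, n and E0 that are not taken from the text: since ln T = −ln(Rβ), differentiation gives Ea = E0 + nRT, so the apparent activation energy drifts with temperature even though E0 is fixed. Energies are molar here, so β is taken as 1/(RT).

```python
import math

R = 8.314462618  # J mol^-1 K^-1; molar energies, so beta = 1/(R T) here


def ln_k_modified(beta, A=1.0e11, n=1.5, E0=40_000.0):
    """ln k for a hypothetical modified-Arrhenius law k = A T^n exp(-E0/(R T))."""
    T = 1.0 / (R * beta)
    return math.log(A) + n * math.log(T) - E0 * beta


def apparent_Ea(ln_k, T, rel_step=1e-6):
    """Tolman/IUPAC activation energy Ea = -d ln k / d beta, by a central difference."""
    beta = 1.0 / (R * T)
    db = rel_step * beta
    return -(ln_k(beta + db) - ln_k(beta - db)) / (2.0 * db)


for T in (200.0, 300.0, 600.0):
    # numerical Ea printed next to the analytical result E0 + n R T
    print(T, apparent_Ea(ln_k_modified, T), 40_000.0 + 1.5 * R * T)
```

Only for n = 0 does the apparent activation energy become temperature independent, which is why Ea is best regarded as a function of β rather than as a constant.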

3 The science of chance

3.1 Probability theory and statistical mechanics

One of the tasks of statistical mechanics is to provide the connection between the mechanics of systems at the atomic and molecular level and the observed macroscopic behavior, so as to arrive at a microscopic theory of, e.g., the thermodynamic functions. One thus faces the challenge posed by one of Hilbert’s famous problems on the state of mathematics, namely the sixth, which concerns the application of mathematics to physics. In a recent update (Ruggeri 2017), it is argued that mathematicians developed the theory of limits of functions to derive the laws of continuous systems starting from the atomic level. Hilbert’s sixth problem, presented at the International Congress of Mathematicians in Paris (1900), deals with the “Mathematical Treatment of the Axioms of Physics” and refers to the deficiencies of Boltzmann’s H-theorem:

Important investigations by physicists on the foundations of mechanics are at hand; I refer to the writings of Mach, Hertz, Boltzmann and Volkmann. It is therefore very desirable that the discussion of the foundations of mechanics be taken up by mathematicians also. Thus Boltzmann’s work on the principles of mechanics suggests the problem of developing mathematically the limiting processes, there merely indicated, which lead from the atomistic view to the laws of motion of continua. Conversely one might try to derive the laws of the motion of rigid bodies by a limiting process from a system of axioms depending upon the idea of continuously varying conditions of a material filling all space continuously, these conditions being defined by parameters. For the question as to the equivalence of different systems of axioms is always of great theoretical interest (Hilbert 1902).

Many aspects have been tackled since then, but several are still open and were posed anew as challenges by the Scripps Institute in the year 2000. The line of thought of statistical mechanics that leads towards thermodynamics is based on the elaboration of microscopic models which, through a process of averaging, lead to the state functions, in particular entropy, thereby continuing Boltzmann’s line of thought: celebrated is the work of Shannon, who in 1948 provided an interpretation of entropy in terms of information theory. Regarding the “work in progress” in statistical mechanics, further milestones were set by Onsager (Nobel Prize in 1968) and by Prigogine (Nobel Prize in 1977), who are credited with developing the thermodynamics of irreversible processes. Returning to the foundations of chemical kinetics, we recall that a predictive theory of chemical reaction rates became concrete only in the Thirties. Some aspects remained to be clarified, in particular a precise mathematical formulation of the limiting process connecting the microscopic world to its macroscopic counterpart. In Aquilanti et al. 2017, and later in this paper, we outline some developments whose roots, however, lie in eighteenth-century mathematics (Figs. 3, 4).

The book by Jacob Bernoulli, Ars Conjectandi (The Art of Conjecturing), published posthumously, is considered to have laid the mathematical foundations of probability theory. In it, Bernoulli develops the theory of interest rates, leaving open the explicit summation of a series, later obtained by Euler; see text and Fig. 5.

3.2 From Arrhenius to Eyring

Arrhenius wrote his famous equation starting from a limited set of experimental observations on the rates of chemical reactions and on their temperature dependence. The challenge of giving substance to the characterization of its two parameters remained open. Only in the Thirties were expressions given that provided chemists with the tools for calculating them explicitly from reliable models. The characterization of the activation energy Ea became increasingly accurate from those years on, thanks to the development of quantum mechanics, which, at least in principle, allowed the calculation of potential energy surfaces and therefore the quantitative confirmation of how realistic it was to assume that a chemical process basically takes place when reactants acquire, through collision, a kinetic energy higher than that needed to overcome an energy barrier. Once the key role of collisions was assessed, it became evident that it is a kinetic scenario (and not a thermodynamic one) that leads to the exponential dependence of the Arrhenius formula for the reaction rate, and we will see in the later discussion that, from a mathematical point of view, the treatment is essentially founded on Euler’s limit, which can be perceived in Fig. 5. It should be emphasized that it was a source of pride for Eyring (Glasstone et al. 1941) to have been able to give an interpretation of the pre-exponential factor A alternative to the mechanistic approach, which expresses it in terms of the frequency of collisions (Trautz 1916; Lewis 1918; Hinshelwood 1940). Eyring’s formulation rests essentially on statistical mechanics, assuming that a metastable equilibrium is established in the course of a chemical reaction between the reactants and an intermediate complex (the transition state): it leads to prescriptions that allow explicit calculations and provides the basis for understanding many phenomena; see Sect. 3.3.
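For comparison, the collisional interpretation of A mentioned above can be sketched in its simplest textbook form. This is a swapped-in illustration, not the authors’ method: it assumes hard spheres and the line-of-centers criterion, in which only collisions whose relative kinetic energy along the line of centers exceeds a threshold E0 react, giving k = πd² ⟨v_rel⟩ exp(−E0/kBT). The function names and the numerical parameters are hypothetical.

```python
import math

K_B = 1.380649e-23       # Boltzmann constant, J/K
AMU = 1.66053906660e-27  # atomic mass unit, kg


def mean_relative_speed(T, mu):
    """Mean relative speed sqrt(8 k_B T / (pi mu)) of a thermal pair, in m/s."""
    return math.sqrt(8.0 * K_B * T / (math.pi * mu))


def collision_rate_constant(T, d, mu, E0):
    """Hard-sphere, line-of-centers estimate k = pi d^2 <v_rel> exp(-E0/(k_B T)).

    d: collision diameter (m); mu: reduced mass (kg); E0: threshold energy per
    molecule (J). Returns k in m^3 s^-1 per molecule pair.
    """
    sigma = math.pi * d ** 2  # hard-sphere cross section
    return sigma * mean_relative_speed(T, mu) * math.exp(-E0 / (K_B * T))


# Illustrative (hypothetical) parameters: d = 3.5 angstrom, mu = 14 amu, E0 = 0.5 eV
d, mu, E0 = 3.5e-10, 14.0 * AMU, 0.5 * 1.602176634e-19
for T in (300.0, 600.0, 1200.0):
    print(T, collision_rate_constant(T, d, mu, E0))
```

In this picture the pre-exponential factor grows only as T^(1/2) through the mean relative speed; Eyring’s statistical argument offered an alternative interpretation of the same factor.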

Left: frontispiece of the book by Leonhard Euler, Introductio in Analysin Infinitorum (Introduction to the Analysis of the Infinite). Working on the theory of interest rates long after the death of Jacob Bernoulli, Euler obtained the exponential function as the limit of a sequence (i stands for infinity in the quotation from Euler’s book given in the right panel).

The justification for using the tools of the thermodynamics of equilibrium systems, which underlies Eyring’s formulation, has raised lively discussions: see recent papers (Truhlar 2015; Warshel and Bora 2016) on molecular dynamics simulations of enzymatic catalysis. The question is very timely, because modern experimental advances show a wide range of behaviors at low temperatures, especially under conditions such as those of interstellar space and ultra-cold condensation. Deviations from the Arrhenius law at low temperatures also show that particles often depart from the behavior predicted by classical mechanics, leading to the manifestation of quantum phenomena [tunneling (Bell 1980), resonances] that can be described either exactly (Aquilanti et al. 2005, 2012) or by semiclassical theories (see Chapman et al. 1975; Aquilanti 1994; Aquilanti et al. 2017).
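As one simple illustration of how tunneling bends the Arrhenius plot at low temperature, the sketch below uses Wigner’s lowest-order correction factor, κ = 1 + (1/24)(hν‡/kBT)², where ν‡ is the magnitude of the imaginary frequency at the barrier top. This standard textbook formula is a swapped-in example, not the exact or semiclassical treatments cited above; the function name and the 1000 cm^-1 barrier frequency are hypothetical.

```python
C2 = 1.438776877  # second radiation constant h c / k_B, in cm K


def wigner_kappa(imag_wavenumber_cm, T):
    """Wigner's lowest-order tunneling factor 1 + (1/24) (h nu / k_B T)^2.

    imag_wavenumber_cm: magnitude of the imaginary barrier frequency, in cm^-1.
    Meaningful only while the correction stays small (weak tunneling).
    """
    u = C2 * imag_wavenumber_cm / T  # h nu / (k_B T), dimensionless
    return 1.0 + u * u / 24.0


# Hypothetical barrier with an imaginary frequency of 1000 cm^-1
for T in (1000.0, 500.0, 300.0, 200.0):
    print(T, round(wigner_kappa(1000.0, T), 3))
```

Because κ grows as 1/T², the corrected rate falls off more slowly than the Arrhenius exponential as the temperature decreases. At 200 K the factor already exceeds 3, beyond the range where a lowest-order correction can be trusted, which is precisely the regime where the exact and semiclassical methods are needed.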

3.3 Beyond the quasi-equilibrium hypothesis

The theoretical developments of the mid-Thirties, when quantum mechanics had already been formulated, were effectively implemented for calculations of atomic and molecular properties only several years later, with the advent of powerful computers, which were introduced massively only in the second half of the last century. Statistical mechanical approaches reached sufficient maturity much earlier for thermodynamics than for kinetics: even in the approach of Fowler and Guggenheim 1939, who had brought remarkable rigor to Tolman’s treatment, the conceptual difficulty was noted of regarding a chemical process as determined by an entity not easily defined operationally. For this entity, the transition state, the expedient was to resort to the thermodynamic hypothesis of an equilibrium with the reactants for all the degrees of freedom involved, except for one translational degree of freedom that constitutes the road to products. In this way, Eyring was right to argue that a decisive leap forward had taken place: Arrhenius’s idea that a reaction is governed by a barrier (and, therefore, the concept of activation energy) is augmented by an interpretation of the so-called pre-exponential factor in terms of quasi-equilibrium between the transition state and the initial states (Fig. 6). In more advanced versions, reference is made to a dividing surface between reactants and products and to the calculation of the flux of “trajectories” that cross it (Miller 1993).

This has led naturally to the formulation of theories that can be used effectively in realistic calculations of atomic and molecular properties and, as such, have been enormously successful, including efforts to modify them in order to apply reaction-rate theory to a whole series of experiments under extreme conditions, especially at low temperatures. In facing these themes, however, one must take into account that the Arrhenius equation still lacks a satisfactory philosophical justification. Indeed, the quasi-equilibrium hypothesis mentioned above cannot be considered founded on a rigor comparable to that typical of thermodynamics.
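The practical content of Eyring’s quasi-equilibrium argument can be illustrated with the standard Eyring–Polanyi expression k = κ (kBT/h) exp(ΔS‡/R) exp(−ΔH‡/RT). A minimal sketch, assuming a transmission coefficient κ = 1 and hypothetical activation parameters (ΔH‡ = 60 kJ/mol, ΔS‡ = −20 J mol^-1 K^-1); it also applies Tolman’s definition, Ea = RT² d ln k/dT, which for this form gives Ea = ΔH‡ + RT.

```python
import math

K_B = 1.380649e-23   # Boltzmann constant, J/K
H = 6.62607015e-34   # Planck constant, J s
R = 8.314462618      # molar gas constant, J mol^-1 K^-1


def eyring_rate(T, dH, dS, kappa=1.0):
    """Eyring-Polanyi form k = kappa (k_B T / h) exp(dS/R) exp(-dH/(R T)), in s^-1.

    dH: activation enthalpy (J/mol); dS: activation entropy (J mol^-1 K^-1);
    kappa: transmission coefficient, set to 1 by assumption.
    """
    return kappa * (K_B * T / H) * math.exp(dS / R) * math.exp(-dH / (R * T))


def apparent_Ea(rate, T, rel_step=1e-6):
    """Ea = R T^2 d ln k / dT, evaluated by a central difference."""
    dT = rel_step * T
    return R * T * T * (math.log(rate(T + dT)) - math.log(rate(T - dT))) / (2.0 * dT)


def k_example(T):
    """Hypothetical reaction with dH = 60 kJ/mol and dS = -20 J mol^-1 K^-1."""
    return eyring_rate(T, 60_000.0, -20.0)


T = 298.15
print("k_B T / h =", K_B * T / H, "s^-1")
print("Ea =", apparent_Ea(k_example, T), "vs dH + R T =", 60_000.0 + R * T)
```

The universal frequency kBT/h (about 6.2 × 10^12 s^-1 near room temperature) replaces the collision frequency as the natural scale of the pre-exponential factor, while the explicit T in the prefactor is what makes Ea exceed ΔH‡ by RT.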

The need, therefore, arises to tackle the problem by examining which fundamental tools have been successful in founding thermodynamics but remain unexplored in kinetics. First of all, when we use a statistical theory, the motivation for compacting the information arises not only because we cannot describe the individual behavior of each particle of the system, but also because knowing the individual behavior of a myriad of events is often of no interest. In statistical mechanics, for example, which was born as an evolution of the kinetic theory of gases, one decides from the outset to ignore the individual motions of atoms and molecules and aims instead to describe their “average” collective behavior. We, therefore, need to establish at the outset which processes are of interest to us and which are not: this amounts to making a choice, that is, establishing the criterion by which we discern what to include and what to exclude, and a criterion for defining such an average behavior.

4 “Provando e riprovando”: the choice

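Quel sol che pria d’amor mi scaldò’l petto,

di bella verità m’avea scoverto,

provando e riprovando, il dolce aspetto

(That sun which first warmed my breast with love had revealed to me, by proving and disproving, the sweet aspect of fair truth; Dante Alighieri, Divina Commedia, Paradiso, Canto III, vv. 1–3)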

The kinetic (non-thermodynamic) treatment of the Arrhenius exponential dependence of reaction rates, although it leaves much to be desired from the point of view of mathematical rigor, recalls Euler’s solution to Jacob Bernoulli’s problem of bank interest rates: remarkably, it was introduced by Hinshelwood in the 1940 edition of his book (Hinshelwood 1940) (Fig. 7), but is absent from the earlier versions of the Twenties, seen in Fig. 2.

Crucial from the conceptual point of view is the shift in perspective from distributions of accessible states to the probability that an event takes place, a concept at the root of the statistical theories introduced in science essentially in the Eighteenth Century by Jacob Bernoulli and known through Bernoulli’s urns (Fig. 4). Before him, there had been very little research from this point of view, since it implied a break with the Aristotelian scientific tradition, according to which the world had to be interpreted a priori, starting from first principles given by divine revelation. Even Newton was hesitant to take this step; not so Galileo a century earlier.

The guidelines and some details of the mathematical formulations were developed in analogy with the distribution initially formulated by Bernoulli to solve the banking problem of how the interest accrued on an account evolves over time; the solution had to wait many years, until the young Euler greatly surprised Jacob Bernoulli’s descendants by showing that the limit involved an exponential. The exponential trend is the one that frequently appears, for example, in equilibrium thermodynamics, notably in the derivation by Maxwell and Boltzmann of the distribution of molecular velocities and kinetic energies, which is exponential in the energy. It should be remembered that in the alternative route indicated by Boltzmann, the correspondence with thermodynamic properties is associated with the logarithm of the probabilities of the distribution of events. Note that the two approaches are conceptually very different. Although Eyring himself gave only a formal thermodynamic version of his theory, it should be fruitful to return to Maxwell [or rather to the accurate account of Maxwell’s ideas given by Sir James Jeans (Jeans 1913)].
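Euler’s answer to Bernoulli’s compounding problem is the limit (1 + x/n)^n → e^x as n → ∞. The sketch below (function names hypothetical) evaluates it for 100% annual interest compounded n times (x = 1). It also evaluates the case x = −1, where the same expression reads as the probability that no event occurs in n independent Bernoulli trials, each of probability 1/n: as n grows, the discrete sequence tends to the continuous exponential.

```python
import math


def compound(rate, periods):
    """Growth factor (1 + rate/periods)**periods: interest compounded `periods` times."""
    return (1.0 + rate / periods) ** periods


def survival(x, trials):
    """Probability of no event in `trials` Bernoulli trials, each of probability x/trials."""
    return (1.0 - x / trials) ** trials


for n in (1, 2, 12, 365, 10_000, 1_000_000):
    print(f"n = {n:>9}: compound = {compound(1.0, n):.8f}, survival = {survival(1.0, n):.8f}")
print(f"limits:           e = {math.e:.8f}, 1/e = {math.exp(-1.0):.8f}")
```

The convergence is slow (the error decreases roughly as 1/n), which is a reminder that the exponential is an idealization reached only in the limit of infinitely many small steps.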

Shortly after the appearance of Jeans’s book, Tolman tried to find a statistical theory of chemical kinetics, a project which, as we have seen above, he later abandoned. There were attempts to move along this line (Eyring 1935; Uhlenbeck and Goudsmit 1935; Condon 1938; Walter et al. 1944) that deserve particular attention now that experimental instruments (for example, molecular beams and laser-induced reactions) are available to follow reactions on very short time scales (femtoseconds, attoseconds, etc.), imaging the temporal progression of processes. Among the interpretative tools, we have an advanced quantum mechanical theory and its computational implementation (Aquilanti et al. 2005, 2012), as well as classical mechanics simulations (which in some cases suffice to deal with increasingly complex systems).

Therefore, although we are close to completing the description of the passage between the microscopic and the macroscopic world, it is crucial to realize that it is impossible, and actually not even necessary, to know in detail the motion of individual atoms and molecules: we must resort to a description of the average behavior and then make predictions about macroscopic behavior. A rigorous foundation of the statistical mechanics of chemical kinetics becomes decisive when deviations from the quasi-equilibrium behavior that can be treated by thermodynamics become relevant.

5 The thermodynamic limit and thermometers

From the previous discussion emerges the need for a guiding principle complementary to the so-called thermodynamic limit, which allows the transition from a microscopic gas model to the limit in which the number of particles and the volume of the system become larger and larger at fixed density (Van Vliet 2008), permitting, at high temperature, the introduction of the concept of the ideal-gas thermometer. In chemical kinetics specifically, an essential step is the formulation of a macroscopic process in terms of sequences of Bernoulli operations (as seen previously in the Hinshelwood quote and in the spirit of Hilbert’s sixth problem): the mathematical tool is the Euler limit, which allows the passage from the discrete to the continuous domain. Aquilanti et al. 2017 describe how to treat these behaviors in a unified manner, including both quantum statistics, that is, both the Bose–Einstein and the Fermi–Dirac cases, which are known to differ in the occupation of allowed quantum states: with no limitation (bosons) or with at most one particle per state (Pauli exclusion principle, fermions). Other crucial effects arise at low temperatures: quantum tunneling across barriers can take place, and systems may also undergo a phase transition that “freezes” the reactivity. It has been found convenient to introduce the concept of transitivity, which in chemical kinetics is essentially the reciprocal of the reaction activation energy, \(\gamma = 1/E_{a}\). This quantity generalizes the concept of the microcanonical rate constant and related quantities, such as the cumulative reaction probability (Miller 1993), and can be related directly to the integral reaction cross section, a quantity that can now be measured experimentally by molecular beam scattering or calculated directly in simple cases.

Remarkably, the introduction of this quantity, which allows us to circumvent the conceptual difficulty of assuming a critical configuration (the transition state) in metastable equilibrium with the reactants, permits a statistical treatment that describes deviations from Arrhenius behavior and uniformly accounts for properties such as quantum tunneling, transport properties and diffusion in the vicinity of phase transitions (Aquilanti et al. 2017).
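Operationally, the transitivity is minus the reciprocal of the slope of ln k versus β. The following minimal sketch (all names hypothetical) estimates it from tabulated values by central differences for two synthetic data sets. The first is a pure Arrhenius law, whose transitivity is constant and equal to 1/Ea. The second, adopted only as an illustrative assumption, is a law whose transitivity is a power series in β truncated after the linear term, γ(β) = 1/E0 − dβ; integrating d ln k/dβ = −1/γ gives k = A(1 − dE0β)^(1/d). The values E0 = 20 kJ/mol and d = 0.02 mol/kJ are arbitrary choices, not values from the text.

```python
import math

R = 8.314462618e-3  # kJ mol^-1 K^-1, so beta = 1/(R T) is in mol/kJ


def transitivity(betas, ln_ks):
    """gamma(beta) = -1 / (d ln k / d beta) from tabulated data, by central differences.

    betas must be uniformly spaced and increasing; only interior points are returned.
    """
    out = []
    for i in range(1, len(betas) - 1):
        slope = (ln_ks[i + 1] - ln_ks[i - 1]) / (betas[i + 1] - betas[i - 1])
        out.append((betas[i], -1.0 / slope))
    return out


def ln_k_linear_gamma(beta, A=1.0e12, E0=20.0, d=0.02):
    """Hypothetical law whose transitivity is gamma = 1/E0 - d*beta.

    Integrating d ln k / d beta = -1/gamma gives k = A (1 - d E0 beta)**(1/d),
    which tends to the Arrhenius form A exp(-E0 beta) as d -> 0.
    """
    return math.log(A) + math.log(1.0 - d * E0 * beta) / d


step = 1.0e-3
betas = [0.2 + i * step for i in range(801)]              # 0.2 to 1.0 mol/kJ
arrhenius = [math.log(1.0e12) - 20.0 * b for b in betas]  # Ea = 20 kJ/mol
deformed = [ln_k_linear_gamma(b) for b in betas]

print(f"T range: {1 / (R * betas[-1]):.0f} K to {1 / (R * betas[0]):.0f} K")
gamma_arr = transitivity(betas, arrhenius)  # constant: 1/20 = 0.05 mol/kJ
gamma_def = transitivity(betas, deformed)   # decreases linearly with beta
print(gamma_arr[0][1], gamma_arr[-1][1])
print(gamma_def[0][1], gamma_def[-1][1])
```

A transitivity that changes with β is how a curved Arrhenius plot appears when read through this quantity; as d → 0 the assumed law reduces to the Arrhenius form, which is once more Euler’s limit.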

To establish the foundations of chemical kinetics, one has to take statistical thermodynamics, essentially a theory of equilibrium states, as the point of departure. We can start from the “zero principle” as common background and postulate the possibility of measuring a “temperature” by a kinetic device. Interestingly, the first mention of the “zero principle” appears to be due to Fowler and Guggenheim (1939, p. 56; see Fig. 8). In the same book, the long Chapter XII is devoted to chemical kinetics, although it opens with the sentence: “The subject of chemical kinetics strictly lies outside the province of this book.”

Fowler (1889–1944), professor at Cambridge, did not receive the Nobel Prize, but distinguished himself as the thesis supervisor of many famous students, three of whom received it: the giant of theoretical physics Dirac in 1933, Mott in 1977 for the theory of metals, and the astrophysicist Chandrasekhar in 1983. A founder of modern statistical mechanics, Fowler, together with Guggenheim, formulated the zero principle of thermodynamics in the 1939 book at p. 56. Among Fowler's other students, we mention here Lennard-Jones and Slater: the latter, in his 1959 book on unimolecular reactions, called Tolman's discussion of Ea a “theorem”.

We accept the postulate of the existence of the Lagrange parameter, \(\beta = 1/k_{B}T\), and look for a definition of a thermometer adapted to the present context. For a specific situation, it is convenient to establish a reference value of β, indicated as \(\beta_{0}\). The “attitude to perform a transition” can be measured and will in general take a “gamma of values”: it will be denoted, as anticipated, by \(\gamma \left( \beta \right)\), the transitivity, which at \(\beta_{0}\) takes the value \(\alpha\),

\[ \gamma(\beta_{0}) = \alpha \tag{1} \]

and is nearly constant in a small enough neighborhood of \(\beta_{0}\). In general, \(\gamma \left( \beta \right)\) will be assumed sufficiently well behaved to admit a Laurent expansion

\[ \gamma(\beta) = \sum_{n=-\infty}^{\infty} c_{n}\,(\beta - \beta_{0})^{n} \tag{2} \]

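From an operational viewpoint, the rate constant k of practical chemical kinetics is measured as a function of β, and the transitivity can be “measured” from the plot of its logarithm versus β. Assuming that \(k\left( \beta \right)\) is measured and that its derivative \(k'\left( \beta \right)\) exists, the definition \(E_{a} = -\,d\ln k/d\beta\) gives

\[ \gamma(\beta) = \frac{1}{E_{a}(\beta)} = -\frac{k(\beta)}{k'(\beta)} \tag{3} \]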
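A minimal numerical sketch of this operational definition follows, assuming rate constants sampled on a grid of β values (energies in eV, β in eV⁻¹) and using NumPy finite differences for the derivative. The function name `transitivity_from_samples` and the synthetic A and E are hypothetical placeholders, not data or code from the cited works.

```python
import numpy as np


def transitivity_from_samples(beta, k):
    """Estimate gamma(beta) = 1/E_a(beta) from rate constants k sampled at beta.

    E_a(beta) = -d ln k / d beta is approximated with numpy.gradient
    (central differences inside the grid, one-sided at the two ends).
    """
    beta = np.asarray(beta, dtype=float)
    ln_k = np.log(np.asarray(k, dtype=float))
    dlnk_dbeta = np.gradient(ln_k, beta)
    return -1.0 / dlnk_dbeta


# Synthetic Arrhenius data with hypothetical parameters A and E (E in eV).
A, E = 1.0e13, 0.5
beta = np.linspace(20.0, 40.0, 21)          # beta = 1/(k_B T) in 1/eV
k = A * np.exp(-E * beta)

gamma = transitivity_from_samples(beta, k)
print(gamma)  # every entry is close to 1/E (constant transitivity)
```

For real, noisy measurements the finite-difference derivative amplifies scatter, so in practice one would smooth or fit ln k before differentiating; the sketch ignores this.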

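For a constant transitivity, \(\gamma(\beta) = \alpha\), integration of \(d\ln k/d\beta = -1/\gamma\) obviously recovers the Arrhenius relationship:

\[ k(\beta) = A\,e^{-\beta/\alpha}, \qquad E_{a} = 1/\alpha \tag{4} \]

For \(\beta_{0} = 0\) and limiting to only two terms of the Taylor–McLaurin series (Eq. 2),

\[ \gamma(\beta) = \frac{1}{E} - d\,\beta + O(\beta^{2}) \tag{5} \]

where E represents an energetic obstacle at high temperatures and \(O(\beta^{2})\) indicates neglect of terms of order higher than one. In (2), \(c_{0} = 1/E\) and the first-order coefficient is \(c_{1} = -d\), where d is defined as the deformation parameter. Since \(d\ln k/d\beta = -1/\gamma\), Eq. (5) is a first-order differential equation in the variable β and is easily integrated, yielding the deformed Arrhenius equation (d-Arrhenius)

\[ k(\beta) = A\,(1 - d\,E\,\beta)^{1/d} \tag{6} \]

which reduces to \(A\,e^{-E\beta}\) in the limit \(d \to 0\).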
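The step from the linear transitivity of Eq. (5) to the d-Arrhenius form of Eq. (6) can be checked numerically. The sketch below is an illustration under assumed, hypothetical values of A, E and d (not fitted to any system): it evaluates the d-Arrhenius rate constant, recovers the transitivity by finite differences, compares it with 1/E − dβ, and confirms that the Arrhenius exponential is approached as d → 0. The helper names are hypothetical.

```python
import numpy as np


def k_d_arrhenius(beta, A, E, d):
    """Deformed Arrhenius rate constant k(beta) = A (1 - d E beta)^(1/d).

    d = 0 falls back to the Arrhenius form A exp(-E beta). For d > 0 the
    formula is real only for beta < 1/(d E), i.e. above T_d = d E / k_B.
    """
    beta = np.asarray(beta, dtype=float)
    if d == 0.0:
        return A * np.exp(-E * beta)
    base = 1.0 - d * E * beta
    if np.any(base <= 0.0):
        raise ValueError("need 1 - d*E*beta > 0 (beta < 1/(d*E) when d > 0)")
    return A * base ** (1.0 / d)


def transitivity_linear(beta, E, d):
    """First-order transitivity of Eq. (5): gamma(beta) = 1/E - d*beta."""
    return 1.0 / E - d * np.asarray(beta, dtype=float)


# Hypothetical parameters: E in eV, beta in 1/eV, super-Arrhenius d > 0.
A, E, d = 1.0, 0.5, 0.1
beta = np.linspace(1.0, 10.0, 9001)
ln_k = np.log(k_d_arrhenius(beta, A, E, d))
gamma_numeric = -1.0 / np.gradient(ln_k, beta)

# Interior points use central differences, so they should match Eq. (5) closely.
err = np.max(np.abs(gamma_numeric[1:-1] - transitivity_linear(beta[1:-1], E, d)))
print(f"max deviation from 1/E - d*beta: {err:.2e}")

# As d -> 0 the deformed form approaches A exp(-E beta).
print(k_d_arrhenius(2.0, A, E, 1e-6), np.exp(-E * 2.0))
```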

In addition to the formulation of a specific kinetic limit, complementary to the thermodynamic one, we note the opportunity of an analogous formulation of the zero principle, i.e., of the definition of temperature, which in a kinetic context needs an amended form, alternative to the Fowler principle (see Fig. 8). We already noted the line of thought developed in thermodynamics, but also partly influenced by kinetics, that is due to Jeans 1913, then to Uhlenbeck and Goudsmit 1935 (the discoverers of electron spin), and then to Kennard 1938 and Condon 1938, and that is also somewhat anticipated by Eyring 1935. Especially in this last reference, the possibility is hinted at of defining temperature by means of a particle thermometer, without resorting to a thermostat that exchanges heat with the system. It is natural to suggest that an “instrument” for measuring temperature from kinetic measurements can be based on a system in a range where the Arrhenius law is verified to hold. In this way, in the same spirit in which the equation of state of ideal gases establishes the relationship between average kinetic energy and temperature (the ideal gas thermometer), an ideal reaction can be defined as one that obeys the Arrhenius law. By contrast, at low temperatures, where deviations from the Arrhenius law are observed, the corresponding behavior demands consideration of an alternative limit. Some details are given in the next section.

6 Remarks on the kinetic limit

6.1 Perfection and ideality

The distinction between the perfect gas (that of the kinetic theory) and the ideal gas (the one that obeys the well-known equation of state) finds a counterpart in the Arrhenius equation as the link between the microscopic (molecular) and the macroscopic regimes. This is also interesting with regard to enzymatic processes: perfect enzymes are defined as those whose catalytic behavior is limited only by diffusion (the catalyst has to meet the site where it performs its function). Emphasis is placed not only on the concept that a microscopic perfect model describes an ideal macroscopic process, but also on the fact that deviations from perfection can be supported by a microscopic model and exploited to explain their macroscopic manifestations.

6.2 Deviations at low temperatures

This remark leads us to hint at the phenomenology that has accumulated in recent decades, originating both from laboratory experiments and from computational molecular dynamics. These results are expanding the understanding of rate processes at low temperatures, where Arrhenius plots reveal deviations from linearity (Che et al. 2008; Aquilanti et al. 2010; Silva et al. 2013; Cavalli et al. 2014; Coutinho et al. 2015a, b, 2016, 2018; Carvalho-Silva et al. 2017; Sanches-Neto et al. 2017). In the references cited, the Arrhenius equation has been modified for applications in various areas of molecular science: the dependence of Tolman's activation energy on the reciprocal of the absolute temperature defines a parameter d that uniformly covers the cases of quantum-mechanical tunneling (d < 0) and of classical deviations (d > 0), where it can be shown to correspond to the statistical weight of Tsallis (Tsallis 1988) in his non-extensive formulation of thermodynamics.

In the limit of low temperatures, relaxing the thermodynamic limit yields statistical weights given by a binomial or a negative binomial distribution, formally corresponding to Fermion or Boson statistics, respectively (the Boltzmann–Gibbs weight is recovered for d = 0, see Aquilanti et al. 2017). The new paradigm and the current state of the art are illustrated for: (i) super-Arrhenius kinetics, for example where transport mechanisms accelerate processes as temperature increases; (ii) sub-Arrhenius kinetics, where quantum-mechanical tunneling favors low-temperature reactivity; (iii) anti-Arrhenius kinetics, where processes with no energetic obstacles are limited by molecular reorientation requirements. Examined cases concern, for example, (i) the treatment of diffusion and viscosity, (ii) the formulation of a transition rate theory for chemical kinetics involving quantum tunneling, and (iii) the stereodirectional specificity in the dynamics of reactions that are severely hampered by an increase in temperature.

For d < 0 (sub-Arrhenius behavior), the explicit identification of the parameter d with the characteristics of the potential energy barrier yields a deformed exponential formula for the description of quantum tunneling (Silva et al. 2013; Cavalli et al. 2014). For d > 0 (super-Arrhenius behavior), we obtain an interesting uniform generalization in the classical-mechanical case, where, however, the Boltzmann–Gibbs distribution is deformed precisely in the sense of the Pareto–Tsallis statistics (here the identification d = 1 − q is necessary; see Tsallis 1988; Biró et al. 2014). It has been shown elsewhere that the deformed distribution can be interpreted along the lines of thought initiated by Maxwell and developed much later: it applies when the thermodynamic limit of an infinite number of particles (as in Van Vliet 2008) does not hold, and corresponds to an incomplete filling of the available degrees of freedom. In Aquilanti et al. 2017, we present a formulation that describes the deviation from the thermodynamic limit as due to the interruption of a discrete temporal sequence of events, which amounts to avoiding the hypothesis of a continuous time variable. In Fig. 7, we have seen how Hinshelwood (1940) gave an elementary and illuminating hint on how the exponential expression that pervades all of physical chemistry can be specialized to provide the Arrhenius dependence of reaction rate constants on temperature.
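The identification d = 1 − q can be illustrated with the Tsallis q-exponential, e_q(x) = [1 + (1 − q)x]^{1/(1−q)}, which reduces to exp(x) for q = 1. The sketch below, assuming hypothetical values of E and β, only checks that the d-Arrhenius factor (1 − dEβ)^{1/d} coincides with e_q(−Eβ) when q = 1 − d. It is an algebraic check, not a derivation of the distribution, and the function names are hypothetical.

```python
import numpy as np


def q_exponential(x, q):
    """Tsallis q-exponential e_q(x) = [1 + (1 - q) x]^(1/(1 - q)).

    For q == 1 it is the ordinary exponential. For q < 1 the usual cutoff
    sets e_q(x) = 0 where 1 + (1 - q) x <= 0; for q > 1 the function
    diverges at x = 1/(q - 1), so that region is rejected.
    """
    x = np.asarray(x, dtype=float)
    if q == 1.0:
        return np.exp(x)
    base = 1.0 + (1.0 - q) * x
    if q > 1.0 and np.any(base <= 0.0):
        raise ValueError("e_q(x) diverges for x >= 1/(q - 1) when q > 1")
    out = np.zeros_like(base)
    positive = base > 0.0
    out[positive] = base[positive] ** (1.0 / (1.0 - q))
    return out


def d_arrhenius_factor(beta, E, d):
    """k/A = (1 - d E beta)^(1/d) for the d-Arrhenius law (d != 0)."""
    return (1.0 - d * E * np.asarray(beta, dtype=float)) ** (1.0 / d)


E = 0.5                              # hypothetical barrier parameter, eV
beta = np.linspace(0.1, 1.5, 15)     # hypothetical grid, 1/eV
for d in (0.3, -0.3):                # super- and sub-Arrhenius deformations
    q = 1.0 - d
    same = np.allclose(q_exponential(-E * beta, q), d_arrhenius_factor(beta, E, d))
    print(f"d = {d:+.1f}, q = {q:.1f}: identical -> {same}")
```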

6.3 Approaching the kinetic limit

The recently accumulated evidence on low-temperature deviations can be summarized by propensities that depend on the sign of d, which in Silva et al. 2013 we defined as classical (super-Arrhenius, d > 0) and quantum (sub-Arrhenius, d < 0). We remark that the anti-Arrhenius case occurs under special conditions, involving stereodynamical and roaming effects (Coutinho et al. 2015a, 2016, 2017, 2018). In essence, for super-Arrhenius behavior described by formula (6), the low-temperature limit \(T_{d}\) occurs at \(dE\beta \to 1\), defining \(\beta_{d} = 1/dE\) or \(T_{d} = dE/k_{B}\). According to Eq. 5, the transitivity connects the two limits linearly, decreasing from 1/E at β = 0 to zero at \(\beta_{d}\). Such an admittedly simple universality has an approximate counterpart in the d < 0 (quantum) behavior: formula (6) with d < 0 uniformly covers the so-called Arrhenius and moderate quantum-mechanical tunneling regimes, while the ultimate regime obeys the Wigner limit; an intermediate deep-tunneling regime, possibly dominated by resonances, is currently being explored.
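As a worked example of the limiting temperature, the sketch below evaluates \(T_{d} = dE/k_{B}\) and checks that the first-order transitivity 1/E − dβ vanishes at \(\beta_{d} = 1/(dE)\). The values d = 0.1 and E = 0.5 eV are hypothetical, chosen only for illustration; \(k_{B}\) is taken in eV/K, and the helper names are hypothetical.

```python
K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K (CODATA value, 10 significant digits)


def regime(d):
    """Label the propensity by the sign of the deformation parameter d."""
    if d > 0:
        return "super-Arrhenius (classical)"
    if d < 0:
        return "sub-Arrhenius (quantum tunneling)"
    return "Arrhenius"


def limiting_temperature(d, E_eV):
    """T_d = d E / k_B, defined for super-Arrhenius behavior (d > 0)."""
    if d <= 0:
        raise ValueError("T_d is defined only for d > 0")
    return d * E_eV / K_B_EV


def transitivity(beta, E_eV, d):
    """First-order transitivity gamma(beta) = 1/E - d*beta, in 1/eV."""
    return 1.0 / E_eV - d * beta


d, E = 0.1, 0.5                      # hypothetical deformation and barrier (eV)
beta_d = 1.0 / (d * E)               # 1/eV
print(regime(d))
print(f"T_d = {limiting_temperature(d, E):.1f} K")   # about 580 K
print(transitivity(beta_d, E, d))                    # zero up to rounding
```

For d < 0 no finite \(T_{d}\) exists in this first-order picture, which is why the function refuses that case; the approach to the Wigner limit lies outside the sketch.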

7 Conclusions

7.1 Retrospect

It has been known for a long time (Perlmutter-Hayman 1976) that, because the activation energy and the pre-exponential factor are interdependent, if Ea is temperature dependent and the exponential form of the Arrhenius equation is to be maintained, then A must be temperature dependent as well. The way out, consistent with the IUPAC definition of Ea given in Laidler 1996, is to regard the Tolman expression as the definition of a differential equation for k, which opens the alternative of replacing the exponential form by its “deformation” (Aquilanti et al. 2010). In presenting progress in reaction kinetics, it has been instructive to underline the unifying role of the treatment of a different type of rate, namely the interest rates of bank accounts. The description of the rates of chemical reactions is a topic in which the main problem is to understand how systems deviate from equilibrium states and how they approach new ones: rates typically depend exponentially on the inverse of temperature (Arrhenius behavior), analogously to Euler's solution to the problem posed by Jacob Bernoulli in calculating compound interest (the last paragraph in Fig. 4). The key is Euler's formula, established as the continuous limit of a discrete sequence of events: in essence, the description of reactivity at low temperature is based on the same elementary mathematical language. See also the Wikipedia page “Aquilanti–Mundim Deformed Arrhenius Model”.
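Bernoulli's compound-interest problem and its Euler limit can be made concrete in a few lines. The sketch below is illustrative only: it shows (1 + r/n)^n approaching e^r as the number n of compounding periods grows, and that the d-Arrhenius factor (1 − dEβ)^{1/d} has exactly this form with r = −Eβ and n = 1/d, so that the Arrhenius exponential appears as the continuous (n → ∞) limit. The values of E and β and the name `compound_factor` are hypothetical.

```python
import math


def compound_factor(rate, n):
    """Bernoulli's problem: growth factor after one period at the given rate,
    compounded n times, (1 + rate/n)^n. Euler: the limit n -> inf is exp(rate)."""
    return (1.0 + rate / n) ** n


# Unit interest rate: the sequence approaches e = 2.71828...
for n in (1, 2, 12, 365, 10**6):
    print(n, compound_factor(1.0, n))
print("e =", math.e)

# d-Arrhenius factor as a finite number n = 1/d of "compounding" steps, rate -E*beta.
E, beta = 0.5, 2.0                   # hypothetical values, E*beta = 1
for n in (2, 10, 1000):
    d = 1.0 / n
    k_over_A = (1.0 - d * E * beta) ** (1.0 / d)
    print(n, k_over_A, compound_factor(-E * beta, n))
print("Arrhenius limit:", math.exp(-E * beta))
```

For d < 0 the same expression corresponds formally to a negative number of steps, n = 1/d, so the analogy with compounding is only formal in the sub-Arrhenius case.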

7.2 Summary

In this presentation, we have attempted to give an account of the perspectives that have emerged in recent years for a strict formulation of the foundations of chemical kinetics in terms of statistical mechanics, relatively “liberated” from thermodynamics. These perspectives go well beyond the ingenious introductions, basic for a theory of chemical reaction rates, of the concept of activation energy in Arrhenius's time (ca. 1889) and of the transition state in Eyring's time (ca. 1935). Analyzing those achievements from the perspective of modern molecular dynamics, we have argued that it is crucial to relax the exponential dependence of the Arrhenius equation in favor of a flexible power-law functionality. Recent advances are uniformly consistent with non-extensive thermodynamics (in classical-mechanical situations) and account for Bose or Fermi statistics and for quantum tunneling in quantum-mechanical situations: the inclusion of the role of tunneling in transition-state theory is described in Carvalho-Silva et al. 2017. The focus of the presentation has been on the advances of concepts developed mainly in the years 1920–1940, while for the present status reference is made to Aquilanti et al. 2017.

7.3 Epilogue

An anonymous English proverb can effectively conclude this presentation: “To have a change, take the chance of making a choice”. This proverb seems to indicate how to follow the famous thesis of Marx, reported in his epitaph: “The philosophers so far have interpreted the world in different ways, now it is about changing it”: in retrospect, in Sect. 2 we posed the problem of facing the construction of a theory of reactivity of chemical processes, “the science of change” (Fig. 1); in Sect. 3, it was stated that it is decisive to establish a statistical procedure by “the discipline of chances” (chance and opportunity, see Fig. 3); finally, in Sect. 4, we have seen how it is crucial to establish a criterion of choice (“provando e riprovando”), thus conditioning the length of the sequence of favorable or unfavorable encounters, exemplified by Bernoulli trials.

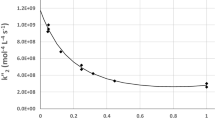

As illustrated in Fig. 9, along the downward path, from macroscopic to microscopic (molecular) approaches, the analysis of chemical kinetics experiments or, e.g., of molecular dynamics simulations [the science of change] provides rate coefficients k(T) as a function of the absolute temperature T. When heuristically reported as Arrhenius plots, they yield Ea (the apparent activation energy) as minus the logarithmic derivative of k with respect to the reciprocal of T, or specifically with respect to β, the Lagrange parameter of statistical mechanics [the theory of chance]: the dependence is that of the Tolman theorem and is typical of partition functions, see Condon 1938. A simple definition leads to the concept of transitivity, γ = 1/Ea, a function related, e.g., to the integral reaction cross section and to the cumulative reaction probability, and thus to choices for microscopic events to be ‘favorable’ or not at the molecular level. The upward path is pursued in theoretical calculations of rates, when quantum-mechanical or empirical knowledge of the interactions and exact or approximate simulations of the dynamics are the tools for theoretically generating the rate coefficients. The lower left graph shows a typical illustration of transitivity: either constant as a function of β in the Arrhenius regime, or linear in β in the d-Arrhenius regime, increasing for sub-Arrhenius and decreasing for super-Arrhenius cases. The rarer anti-Arrhenius case is considered in Coutinho et al. 2015a.

Graphical view of the sequence of steps from phenomenological rate coefficients to the transitivity function (downward along the main diagonal) and vice versa (upward). In the first-order formulation discussed in Sect. 5, the transitivity is linear in β and \(k(\beta) = A(1 - dE\beta)^{1/d}\), which tends to \(A e^{-E\beta}\) as \(d \to 0\). For d > 0 (super-Arrhenius, the case of classically originated deviations), the transitivity goes to zero as \(T \to T_{d} = dE/k_{B}\). For d < 0 (sub-Arrhenius, the propensity case of quantum tunneling), the rate constant tends to the Wigner limit as \(T \to 0\).
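The downward path just described can be sketched numerically: from k(T) to β, then to Ea and γ = 1/Ea, and finally a straight-line fit γ ≈ 1/E − dβ that returns E and d. This is a minimal sketch assuming noise-free synthetic data generated from the d-Arrhenius law with hypothetical parameters (a sub-Arrhenius case, d < 0); the name `downward_path` is hypothetical, and with real data the derivative step would need smoothing.

```python
import numpy as np

K_B_EV = 8.617333262e-5  # Boltzmann constant in eV/K


def downward_path(T, k):
    """From k(T) to Ea(beta), gamma(beta) and a linear fit gamma = 1/E - d*beta.

    Returns (beta, Ea, gamma, E, d). Derivatives use numpy.gradient on ln k.
    """
    beta = 1.0 / (K_B_EV * np.asarray(T, dtype=float))   # 1/eV
    order = np.argsort(beta)                              # sort by increasing beta
    beta = beta[order]
    ln_k = np.log(np.asarray(k, dtype=float))[order]
    Ea = -np.gradient(ln_k, beta, edge_order=2)           # apparent activation energy, eV
    gamma = 1.0 / Ea                                      # transitivity, 1/eV
    slope, intercept = np.polyfit(beta, gamma, 1)         # gamma ~ intercept + slope*beta
    return beta, Ea, gamma, 1.0 / intercept, -slope


# Hypothetical sub-Arrhenius data: A in arbitrary units, E in eV, d < 0.
A, E_true, d_true = 1.0e-11, 0.3, -0.05
T = np.linspace(200.0, 1000.0, 801)                       # K
beta_true = 1.0 / (K_B_EV * T)
k = A * (1.0 - d_true * E_true * beta_true) ** (1.0 / d_true)

beta, Ea, gamma, E_fit, d_fit = downward_path(T, k)
print(f"E = {E_fit:.4f} eV, d = {d_fit:.4f}")           # close to the assumed 0.3 and -0.05
```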

Notes

Svante Arrhenius (1859–1927) was a Swedish chemist and physicist, awarded the Nobel Prize in Chemistry in 1903 for his theory of electrolytic dissociation, which attributes the passage of electricity through solutions to ions. Arrhenius therefore did not get the Nobel Prize for his contribution to chemical kinetics. Indeed, the official website of the Nobel Prize reports that in 1903 he had been nominated for both chemistry and physics, and that the Chemistry Committee had proposed to give him half of each prize, but the Physics Committee did not accept the idea. Arrhenius is also famous for having introduced not only the controversial, but currently revisited, concept of "panspermia" (possible extraterrestrial origin of life), but above all, already a century ago, the concept of global warming by the “greenhouse” effect generated by human activity: curiously, this effect, today documented and feared, appeared to Arrhenius as a hope of easing Sweden's difficulties in facing cold winters.

Tolman (1881–1948) was an American chemist, physicist and mathematician. The program of applying a "new science", statistical mechanics, to chemical kinetics was developed by Tolman from 1920 (Tolman 1920). The quotation given above concludes his voluminous compendium (Tolman 1927), where he re-elaborates the article Tolman 1920 and subsequent ones. We will see later that his optimism was frustrated. He abandoned the field of chemical kinetics, but gained fame in other areas of science. He not only made no further contributions to it, but did not even mention it in his monumental treatise, which since 1938 has been the standard reference on the foundations of statistical mechanics (Tolman 1938). According to Laidler and King 1983, Tolman considered obsolete both the use of the old quantum theory and some hypotheses on the role of radiation in the activation of the chemical process. However, as Fig. 2 shows, the relevance of what is now called "Tolman's theorem" is well evidenced by the quantum-mechanical perspective provided by Fowler and Guggenheim 1939. As shown in Fig. 8, they dedicated a long chapter to chemical kinetics in their influential statistical thermodynamics treatise.

Henry Eyring (1901–1981) was a Mexican-born American chemist. He worked in physical chemistry, making his major contribution to the study of the rates of chemical reactions and of reaction intermediates. He received the National Medal of Science in 1966 and the Wolf Prize in Chemistry in 1980 for developing the theory of absolute reaction rates (left panel), one of the most important twentieth-century developments in chemistry. The failure to award the Nobel Prize to Eyring came as a surprise to many. As stated on the Nobel Prize website, the members of the committee evidently did not promptly understand the importance of the theory.

“Enlighted by the hot sun of my lover/I was first shown the road of truth’s grand beauty/approving or disproving, to discover”. Dante, Paradiso, incipit of the third canto. The phrase "provando e riprovando" dates back to Dante and should be interpreted as "approving and rejecting", saying yes and saying no. Galileo insisted on this filter; in fact, the phrase is often considered a Galilean statement and was used by his school and then in the Accademia del Cimento. Dante can thus be placed at the end of the Middle Ages and at the beginning of humanism: to say that he somehow anticipated modern science is perhaps excessive, but some signals can certainly be recognized. Galileo definitely crosses the border and proposes the experiment as the discriminant between what is true and what is false. Galileo tells his daughter Virginia: “The aim of science is not to open a door to infinite wisdom, but to set a limit to infinite error” (Bertolt Brecht, Life of Galileo, ca. 1940, ninth scene). Keeping this attitude may suggest an interesting anticipation of Popper's theory, which emphasizes falsifiability, rather than verifiability, as central, thus leading us to the scientific debate of the 20th century.

References

Aquilanti V (1994) Storia e fondamenti della chimica. In: Marino G (ed) Rendiconti Accademia Nazionale delle Scienze, Rome

Aquilanti V, Cavalli S, De Fazio D et al (2005) Benchmark rate constants by the hyperquantization algorithm. The F + H2 reaction for various potential energy surfaces: features of the entrance channel and of the transition state, and low temperature reactivity. Chem Phys 308:237–253

Aquilanti V, Mundim KC, Elango M et al (2010) Temperature dependence of chemical and biophysical rate processes: phenomenological approach to deviations from Arrhenius law. Chem Phys Lett 498:209–213

Aquilanti V, Mundim KC, Cavalli S et al (2012) Exact activation energies and phenomenological description of quantum tunneling for model potential energy surfaces. The F + H2 reaction at low temperature. Chem Phys 398:186–191

Aquilanti V, Coutinho ND, Carvalho-Silva VH (2017) Kinetics of low-temperature transitions and reaction rate theory from non-equilibrium distributions. Philos Trans R Soc London A 375:20160204

Bell RP (1980) The tunnel effect in chemistry. Chapman and Hall, London

Biró T, Ván P, Barnaföldi G, Ürmössy K (2014) Statistical power law due to reservoir fluctuations and the universal thermostat independence principle. Entropy 16:6497–6514

Carvalho-Silva VH, Aquilanti V, de Oliveira HCB, Mundim KC (2017) Deformed transition-state theory: deviation from Arrhenius behavior and application to bimolecular hydrogen transfer reaction rates in the tunneling regime. J Comput Chem 38:178–188

Cavalli S, Aquilanti V, Mundim KC, De Fazio D (2014) Theoretical reaction kinetics astride the transition between moderate and deep tunneling regimes: the F + HD case. J Phys Chem A 118:6632–6641

Chapman S, Garrett BC, Miller WH (1975) Semiclassical transition state theory for nonseparable systems: application to the collinear H + H2 reaction. J Chem Phys 63:2710–2716

Che D-C, Matsuo T, Yano Y et al (2008) Negative collision energy dependence of Br formation in the OH + HBr reaction. Phys Chem Chem Phys 10:1419–1423

Condon EU (1938) A simple derivation of the Maxwell–Boltzmann law. Phys Rev 54:937–940

Coutinho ND, Sanches-Neto FO, Carvalho-Silva VH, de Oliveira HCB, Ribeiro LA, Aquilanti V (2018) Kinetics of the OH + HCl → H2O + Cl reaction: rate determining roles of stereodynamics and roaming and of quantum tunneling. J Comput Chem. https://doi.org/10.1002/jcc.25597

Coutinho ND, Silva VHC, de Oliveira HCB et al (2015a) Stereodynamical origin of anti-Arrhenius kinetics: negative activation energy and roaming for a four-atom reaction. J Phys Chem Lett 6:1553–1558

Coutinho ND, Silva VHC, Mundim KC, de Oliveira HCB (2015b) Description of the effect of temperature on food systems using the deformed Arrhenius rate law: deviations from linearity in logarithmic plots vs. inverse temperature. Rend Lincei 26:141–149

Coutinho ND, Aquilanti V, Silva VHC et al (2016) Stereodirectional origin of anti-Arrhenius kinetics for a tetraatomic hydrogen exchange reaction: Born–Oppenheimer molecular dynamics for OH + HBr. J Phys Chem A 120:5408–5417

Coutinho ND, Carvalho-Silva VH, de Oliveira HCB, Aquilanti V (2017) The HI + OH → H2O + I reaction by first-principles molecular dynamics: stereodirectional and anti-Arrhenius kinetics. In: Lecture notes in computer science. Computational Science and Its Applications – ICCSA. Springer, Trieste

Coutinho ND, Aquilanti V, Sanches-Neto FO et al (2018) First-principles molecular dynamics and computed rate constants for the series of OH–HX reactions (X = H or the halogens): non-Arrhenius kinetics, stereodynamics and quantum tunnel. In: Lecture notes in computer science. Computational Science and Its Applications – ICCSA. Springer, Melbourne

Eyring H (1935) The Activated Complex in Chemical Reactions. J Chem Phys 3:107–115

Fowler RH, Guggenheim EA (1939) Statistical thermodynamics: a version of statistical mechanics for students of physics and chemistry. Macmillan, London

Fowler RH, Guggenheim EA (1949) Statistical thermodynamics. Cambridge University Press, London

Glasstone S, Laidler KJ, Eyring H (1941) The theory of rate processes: the kinetics of chemical reactions, viscosity, diffusion and electrochemical phenomena. McGraw-Hill, New York

Hilbert D (1902) Mathematical problems. Bull Am Math Soc 8:437–479

Hinshelwood CN (1940) The kinetics of chemical change. Clarendon Press, Oxford

Jeans J (1913) The Dynamical Theory of Gases. Dover Publications Incorporated, Mineola

Kasai T, Che D-C, Okada M et al (2014) Directions of chemical change: experimental characterization of the stereodynamics of photodissociation and reactive processes. Phys Chem Chem Phys 16:9776–9790

Kennard EH (1938) Kinetic theory of gases: with an introduction to statistical mechanics. McGraw-Hill, New York City

Kramers HA (1940) Brownian motion in a field of force and the diffusion model of chemical reactions. Physica 7:284–304

Laidler KJ (1996) A glossary of terms used in chemical kinetics, including reaction dynamics. Pure Appl Chem 68:149–192

Laidler KJ, King MC (1983) Development of transition-state theory. J Phys Chem 87:2657–2664

Lewis WCM (1918) Studies in catalysis. Part IX. The calculation in absolute measure of velocity constants and equilibrium constants in gaseous systems. J Chem Soc 113:471–492

Lewis GN, Randall M (1923) Thermodynamics and the free energy of chemical substance. McGraw-Hill Book Company, New York

Marcus RA (1993) Electron transfer reactions in chemistry. Theory and experiment. Rev Mod Phys 65:599–610

Miller WH (1993) Beyond transition-state theory: a rigorous quantum theory of chemical reaction rates. Acc Chem Res 26:174–181

Perlmutter-Hayman B (1976) On the temperature dependence of Ea. In: Lippard SJ (ed) Progress in inorganic chemistry. Wiley, New York, pp 229–297

Polanyi M, Wigner E (1928) Über die Interferenz von Eigenschwingungen als Ursache von Energieschwankungen und chemischer Umsetzungen. Z Phys Chem Abt A 139:439–452

Ruggeri T (2017) Lecture notes frontiere. Accademia Nazionale dei Lincei, Rome

Sanches-Neto FO, Coutinho ND, Carvalho-Silva VH (2017) A novel assessment of the role of the methyl radical and water formation channel in the CH3OH + H reaction. Physical Chemistry Chemical Physics 19:24467–24477

Silva VHC, Aquilanti V, De Oliveira HCB, Mundim KC (2013) Uniform description of non-Arrhenius temperature dependence of reaction rates, and a heuristic criterion for quantum tunneling vs classical non-extensive distribution. Chem Phys Lett 590:201–207

Slater NB (1959) Theory of unimolecular reactions. Cornell University Press, Ithaca

Tolman RC (1920) Statistical mechanics applied to chemical kinetics. J Am Chem Soc 42:2506–2528

Tolman RC (1927) Statistical mechanics with applications to physics and chemistry. The Chemical Catalog Company, New York

Tolman RC (1938) The principles of statistical mechanics. Oxford University, London

Trautz M (1916) Das Gesetz der Reaktionsgeschwindigkeit und der Gleichgewichte in Gasen. Z Anorg Allg Chem 96:1–28

Truhlar DG (2015) Transition state theory for enzyme kinetics. Arch Biochem Biophys 582:10–17

Truhlar DG, Garrett BC (1984) Variational transition state theory. Annu Rev Phys Chem 35:159–189

Tsallis C (1988) Possible generalization of Boltzmann–Gibbs statistics. J Stat Phys 52:479–487

Uhlenbeck GE, Goudsmit S (1935) Statistical energy distributions for a small number of particles. In: Zeeman Verhandelingen. Martinus Nijhoff, The Hague, pp 201–211

Van Vliet CM (2008) Equilibrium and non-equilibrium statistical mechanics. World Scientific, Singapore

Walter JE, Eyring H, Kimball GE (1944) Quantum Chemistry. Wiley, New York

Warshel A, Bora RP (2016) Perspective: defining and quantifying the role of dynamics in enzyme catalysis. J Chem Phys 144:180901

Acknowledgements

The authors acknowledge the Brazilian funding agencies Coordenação de Aperfeiçoamento de Pessoal de Nível Superior (CAPES) and Conselho Nacional de Desenvolvimento Científico e Tecnológico (CNPq) for financial support. Valter H. Carvalho-Silva thanks PrP/UEG for research funding through the PROBIP and PRÓ-PROJETO programs, and also thanks CNPq for research funding through the Universal 2016 (Faixa A) program. Ernesto P. Borges acknowledges the National Institute of Science and Technology for Complex Systems (INCT-SC). Vincenzo Aquilanti acknowledges financial support from the Italian Ministry for Education, University and Research (MIUR), grant no. SIR2014 (RBSI14U3VF), and thanks Elvira Pistoresi for her assistance in the preparation of the manuscript.

Additional information

Dedicated to the memory of professor Maria Suely Pedrosa Mundim, physicist (1956–2017).

This contribution is the written peer-reviewed version of a paper presented at the International Conference “Molecules in the World of Quanta: From Spin Network to Orbitals” held at Accademia Nazionale dei Lincei in Rome, April 27 and 28, 2017.

Cite this article

Aquilanti, V., Borges, E.P., Coutinho, N.D. et al. From statistical thermodynamics to molecular kinetics: the change, the chance and the choice. Rend. Fis. Acc. Lincei 29, 787–802 (2018). https://doi.org/10.1007/s12210-018-0749-9

DOI: https://doi.org/10.1007/s12210-018-0749-9