Abstract

The Social Emotional Health Survey–Secondary (SEHS-S) was developed to assess adolescents’ core mindsets that are associated with positive psychosocial development. The present study extended SEHS–S research by examining its use with a nonwestern sample of Korean adolescents (Grades 7–12; N = 686) and examined the invariance of the SEHS–S factor structure for males and females. Factor analyses were conducted in two stages. In stage 1, using a split-half of the total sample (S1), confirmatory factor analysis (CFA) was employed to test the fit of the previously known factor structure of the SEHS–S. In stage 2, using S2, structural equation modeling was used to test two alternative SEHS–S factor structures and invariance across gender groups in a series of multigroup CFAs. Results of the CFAs supported the SEHS–S measurement model. The multigroup invariance analysis found that the SEHS–S higher-order structure had full invariance across gender groups, which indicated that the measured latent traits have similar meaning across groups and that the students responded to the items in similar ways. Latent means analysis found that females more strongly (small effect size) endorsed belief-in-others items than males. An SEM analysis also found that the SEHS–S measurement model, including the second-order covitality latent trait, was a significant positive predictor of subjective wellbeing. Finally, students with higher levels of covitality reported better school achievement and fewer depressive symptoms. Implications for the applied use of the SEHS–S in Korea to assess complete mental health are discussed.

1 Introduction

There is an emerging recognition that the mental health needs of South Korean students are not being addressed and this shortcoming contributes to substantial levels of student psychological distress. According to the Korean Youth Risk Behavior Web-based Survey (Korean Center for Disease Control 2013) involving 72,435 middle and high school students ages 13 to 18 years, 25 % of boys and 37 % of girls reported having depressive symptoms during the previous 12 months. About 17 % of students reported suicidal ideation and 4 % reported suicidal attempts. Although bullying (13 %) and victimization (12 %) were lower in 2012 compared to previous years, students reported experiencing more harmful and complex types of school violence (Korean Foundation for Preventing Youth Violence 2013). In recognition of this national youth mental health challenge and with the goal of improving student mental health, in 2012 the Korean government expanded its mental health screening (“Student Emotional and Behavioral Traits test”) to the entire school population from elementary to high school. The most recent national youth mental health screening in 2013 found that 7 % of students were classified as an at-risk group that might need counseling and 2 % as a high-risk group requiring immediate mental health services (Korean Ministry of Education 2013). Although laudable in its efforts to address the mental health needs of Korean youth, this national mental health screening did not provide information about the mental health status of almost 90 % of students. Although the majority of the students in this national screening did not report substantial levels of psychological distress, this survey did not include questions about students’ wellbeing and other quality of life indicators, which are part of a balanced dual-factor complete mental health paradigm (Suldo and Shaffer 2008).

In addition to students who experience emotional or behavioral distress, some students have diminished subjective wellbeing and psychosocial functioning; that is, their functioning is at much lower levels than expected given that they do not concurrently report significant psychopathology or risk behaviors. These “vulnerable” students traditionally go unrecognized when a risk-oriented screening survey is used. For example, a study by Antaramian et al. (2010) showed that these vulnerable students (i.e., low wellbeing combined with low psychopathology) are similar to the more easily identified group of troubled students (i.e., low wellbeing with high psychopathology) in that both groups were equally at risk of academic and behavior problems. In addition, the vulnerable group has been found to have low stability in terms of group membership. One study by Kelly et al. (2012) reported that among all students the vulnerable students had the highest probability of switching to the troubled group within 5 months. That is, without prevention, vulnerable students are at higher risk of getting worse over time, not better, which emphasizes the potential value of a balanced complete mental health screening process over the traditional psychopathology symptom-based screening process.

1.1 Dual-Factor Mental Health Model

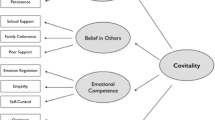

One framework for complete mental health screening is the dual-factor mental health model, which simultaneously examines positive psychological dispositions and negative psychological distress indicators (Greenspoon and Saklofske 2001; Keyes 2005; Suldo and Shaffer 2008). Most school-based surveillance measures, however, address only or primarily negative indicators or symptoms, based on a unidimensional model that regards absence of psychological distress as equivalent to psychological wellbeing (Keyes 2007). To further explore the viability of the dual-factor mental health paradigm, Furlong and colleagues (2014c) developed a school survey based on positive indicators of youth development to measure psychological mindsets or dispositions that are associated with thriving subjective wellbeing. The Social and Emotional Health Survey–Secondary (SEHS-S) measures core psychological dispositions associated with positive youth development and draws a single, latent meta-construct, “covitality,” from them. As a counterpart of comorbidity, covitality is the synergistic effect of positive mental health resulting from the interplay among multiple positive psychological mindsets (Furlong et al. 2014c). The SEHS–S conceptual model has 12 subscales that form four first-order domains (belief-in-self, belief-in-others, emotional competence, engaged living), which in turn contribute to the second-order meta-construct, covitality (see conceptual model in Fig. 1).

1.2 Social Emotional Health Survey–Secondary Research

To date, the SEHS-S has been validated in three studies using independent samples. The preliminary validation study involved 4,189 secondary students (Grades 8, 10, 12) and confirmatory factor analyses, invariance analysis, and latent means supported the SEHS–S theoretical model (Furlong et al. 2014c). The second-order covitality model was the most appropriate model regardless of gender. The level of covitality was a strong predictor of subjective wellbeing and associated with higher academic achievement, higher perceptions of school safety, lower substance use, and lower depressive symptoms. A second validation study with 2,240 students (Grades 9–12) reported similar results (You et al. 2014a). There was complete factorial invariance across four groups according to sex and age (ages 13–15, 16–18) and covitality negatively predicted psychological distress measured by the BESS (Behavioral and Emotional Screening System; Kamphaus and Reynolds 2007). A third validation study involved a sample of more than 14,000 California students and extended the SEHS–S’s validity evidence by showing that it had full factorial invariance for students from five sociocultural groups of U.S. students (White, Black, Latino/a, Asian, and blended sociocultural backgrounds; You et al. 2014b).

1.3 Study Purpose: Need for Strength Focus Survey in Korea

In Korea, there are currently few strength-based screening measures for student mental health. Lee and Jo’s (2005) Resilience Scale might be one of the few for this purpose. These authors developed an instrument addressing internal assets and external resources (four dimensions each) similar to the California Resilience Youth Development Module (Furlong et al. 2009; Hanson and Kim 2007). The Resilience Scale has 16 subscales (three items each), which compose eight dimensions; however, its conceptual model has had inconsistent support from confirmatory factor analyses (Lee and Jo 2006; Moon 2012). Consequently, most Korean researchers have reported the Resilience Scale total score in their studies; however, no study has tested the validity of the second-order, latent-factor model. Nevertheless, the Resilience Scale has been widely used by Korean researchers to measure the overall level of resilience. More recently, another instrument called the Resilience Scale for Adolescents (RSA; Ju and Lee 2011) was developed in an attempt to address the conceptual and statistical weaknesses of Lee and Jo’s Resilience Scale. Although the RSA was validated with better methodology and obtained multigroup equality across three age groups (ages 13–14, 15–16, and 17–18), it has a first-order structure with only four factors (27 items). Its factor structure is simple, but it appears too limited in scope to address resilience or positive youth development sufficiently.

Similar to the aforementioned approaches, there are other efforts to measure indicators of positive youth development based on the ideas of the Search Institute’s 40 developmental assets model (Scales and Leffert 1998). However, such instruments have methodological problems. Some researchers have used the 58 items of the Developmental Asset Profile (DAP; Search Institute 2005) without any psychometric validation process. Others developed shorter versions more appropriate for Korean youth (38 items by Hwang and Jo 2004; 46 items by Jung 2010). They utilized the same factor structure as the DAP, with four external assets (social support, empowerment, boundaries and expectations, and constructive use of time) and four internal assets (commitment to learning, positive values, social competencies, and positive identity). However, the psychometric properties of these instruments need to be further examined. Another limitation of these instruments is that they have only been used in research studies.

At present, it seems both researchers and practitioners in Korea do not have conceptually and technically well-validated instruments to measure indicators of positive youth development or social and emotional mental health. The aim of assessing Korean youths’ mental wellbeing would be enhanced with access to an easy-to-use, school-based measure that provides integrative information on key psychological mindsets associated with social and emotional health and their synergistic effects. The availability of a valid social emotional health measure would contribute substantially to the understanding of Korean students’ complete mental health and the development and evaluation of effective prevention strategies. Therefore, the aim of the present study was to evaluate the validity of the Social and Emotional Health Survey–Secondary (SEHS–S) for middle and high school students by examining the fit of the SEHS–S theoretical model for Korean youths and the associations of their responses with other quality of life outcome variables. Such invariance analyses are required prior to using the SEHS–S in research and applied contexts (Borsboom 2006).

2 Method

2.1 Participants

The participants in this study were 716 Korean students from six middle schools and seven high schools in South Korea. These schools are located in Seoul, the capital of South Korea (four schools), and four other big cities (nine schools). The median annual family incomes in these five cities range from $38,480 to $48,100 (National Youth Policy Institute 2013). According to participating teachers, the schools all serve students whose families experienced middle class socioeconomic circumstances. Originally, survey packets were distributed to 909 students whose classroom teachers expressed interest in participating in this study. Informed consent was obtained from 716 students (consent rate of 79 %). As part of the initial review of the quality of student responses, data from 30 students (4 %) were dropped because the responses were not missing at random. These students completed less than half of the items or stopped before the end of the questionnaire. Therefore, the final usable sample comprised 686 students, of which 301 were middle school students (44 %) and 385 were high school students (56 %). There were 387 girls (56 %) and 299 boys (44 %). The mean age of the sample was 15.9 years (SD = 1.3, range = 13–18 years). The 30 students removed from the analysis were not different from the usable sample in terms of sex and age.

For the confirmatory factor analyses described later, two randomly split subsamples (using the SPSS Version 20 case selection random sample utility) were created, with subsample 1 (S1) and subsample 2 (S2) both having 343 students. The subsamples’ gender proportions were comparable (S1: 59 % female, 41 % male; S2: 54 % female, 46 % male). The students in both subsamples ranged in age from 13 to 18 years and both subsamples had the same mean age of 15.9 years (SD = 1.3).
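The split-half step can be illustrated with a minimal Python sketch. This is not the SPSS procedure the study used; the function name `split_half`, the seed, and the ID numbers are hypothetical and serve only to show how 686 cases can be randomly partitioned into two non-overlapping subsamples of 343.

```python
import random


def split_half(ids, seed=0):
    """Randomly partition ids into two equal-sized, non-overlapping halves."""
    rng = random.Random(seed)      # fixed seed so the split is reproducible
    shuffled = list(ids)
    rng.shuffle(shuffled)
    half = len(shuffled) // 2
    return shuffled[:half], shuffled[half:]


# Hypothetical ID numbers standing in for the 686 usable cases.
student_ids = range(1, 687)
s1, s2 = split_half(student_ids, seed=2013)
print(len(s1), len(s2))
```

Fixing the seed is a design choice that lets the same S1 and S2 be recreated later, which matters when one half is used for model testing and the other for validation.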

2.2 Measures

Social and Emotional Health Survey–Secondary (SEHS-S)

The SEHS–S (Furlong et al. 2014c) was translated into Korean by one of the authors (Lee) and three bilingual Korean graduate students (each had lived in the U.S. for more than 15 years) who worked independently to translate the SEHS–S items. First, one student translated the SEHS–S items into Korean. Next, another student translated the Korean version back into English. The third student translated the back-translated English version into Korean again. Finally, the lead author and the three graduate students met and discussed any translation discrepancies and used consensus to agree upon a final item wording that maintained semantic equivalence of the Korean items and the original SEHS–S items. This process was successfully completed for 33 of the 36 SEHS–S items. However, because of difficulties in identifying separate Korean words for three gratitude subscale items (grateful, thankful, appreciative), the Korean version used alternate items (three highest-loading items: 1, 2, and 4) taken from the Gratitude Questionnaire-6 (GQ-6; McCullough et al. 2002). Therefore, the gratitude scale of the Korean version asked about general gratitude attitudes rather than more immediate feelings of gratitude (since yesterday) as in the SEHS–S. Except for this change in the gratitude subscale, the Korean version was identical to the original SEHS–S. For the total sample used in the present study, the internal consistency reliabilities of the four first-order latent traits were: belief-in-self (α = 0.84), belief-in-others (α = 0.85), emotional competence (α = 0.82), and engaged living (α = 0.88). The alpha for the combined 36-item total covitality score was 0.94, which compares to 0.92 (Furlong et al. 2014c) and 0.95 (You et al. 2014b) reported in previous studies. All subscales use a four-point response scale except for the gratitude and zest subscales, which use a five-point response scale. Table 1 shows the structure of the original SEHS–S and response options for each of the 12 subscales.
A copy of the Korean version of the SEHS–S is available from the authors.
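For readers unfamiliar with the internal consistency coefficients reported above, the following is a minimal sketch of how Cronbach’s α is computed from item-level responses. The function name `cronbach_alpha` and the toy data are assumptions for illustration; the sketch assumes complete cases and is not the software routine used in the study.

```python
from statistics import variance


def cronbach_alpha(rows):
    """Cronbach's alpha for complete-case data.

    rows: list of respondents, each a list of k item scores.
    """
    k = len(rows[0])
    # Sample variance of each item across respondents.
    item_vars = [variance([r[j] for r in rows]) for j in range(k)]
    # Sample variance of each respondent's summed score.
    total_var = variance([sum(r) for r in rows])
    return (k / (k - 1)) * (1 - sum(item_vars) / total_var)


# Toy data (hypothetical): three items answered identically by each
# respondent, so the items are perfectly consistent.
toy = [[1, 1, 1], [2, 2, 2], [3, 3, 3], [4, 4, 4]]
alpha = cronbach_alpha(toy)
print(round(alpha, 2))
```

With real survey data the items are not perfectly consistent, so α falls below 1; the values of 0.82 to 0.94 reported here would conventionally be read as good to excellent reliability.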

Subjective Wellbeing

Similar to the Furlong et al. (2014c) study, subjective wellbeing was measured with the combination of general life satisfaction and recent affective experiences. Life satisfaction was measured with the five items Furlong et al. (2014c) selected from the Student Life Satisfaction Scale (SLSS; Huebner 1991, 1995). The five items were translated into Korean through the back-translation procedure described previously. Instead of the six-point scale of the original SLSS, the Korean version used a five-point response scale that ranged from 1 (not at all like me) to 5 (very much like me) to make the response format easier for Korean adolescents to understand. The internal consistency (Cronbach’s α) of the five-item SLSS was 0.81 in Furlong et al. (2014c) and 0.70 in this study. The 10-item Positive and Negative Affect Schedule for Children (PANAS; Ebesutani et al. 2012) was used to assess the students’ recent emotional experiences. It asks them to rate their feelings or mood during the last 1 month on a scale of 1 (not at all) to 5 (extremely). We excluded one PANAS positive item (lively) that corresponded too closely to the SEHS–S zest subscale, and the translation was complemented with the Korean-version PANAS (Lee et al. 2003). The internal consistencies for positive affect and negative affect were 0.86 and 0.82 in Ebesutani et al. (2012) and for this study, they were 0.86 and 0.77, respectively.

Quality of Life Indicators

To examine concurrent validity, we used two brief measures of life indicators. One of the measures was a single item on self-reported school course grades during the previous 12 months (response options: 5 = very good [within top 20 %], 4 = good [top 21–40 %], 3 = average [41–60 %], 2 = poor [low 21–40 %], 1 = very poor [low 1–20 %]). The other measure consisted of a single item on depressive symptoms from the U.S. Youth Risk Behavior Surveillance Survey (YRBS; Eaton et al. 2012): During the past 12 months, did you ever feel so sad or hopeless almost every day for two weeks or more in a row that you stopped doing some usual activities? (response options: 0 = no, 1 = yes).

2.3 Procedure

Approval to conduct this study was obtained from the Institutional Review Board of Ewha Womans University in July 2013. The data were collected using a convenience sampling method from July to November 2013. One of the authors explained the nature and purpose of the study to teachers or school administrators. Teachers who decided to participate helped the author begin the process of acquiring parent consent at school. Both parent written consent and student assent were required for participation in this study. Classroom teachers administered the survey, which took about 15 min to complete, during one of their class periods.

2.4 Overview of the Statistical Analyses

Figure 1 shows our conceptual factor structure for the SEHS–S. In the initial analysis, 12 measured variables (mean scores) were computed using 36 items (three items each for measured variables). Initial confirmatory factor analyses using a third-order factor model did not converge due to model complexity. Instead, to test this conceptual model, we employed several steps. First, in stage 1, we tested the measurement model using the 36 measured variables shown in Table 1. This measurement model provides a link between scores on a measuring instrument (i.e., three items each for 12 subfactors; total 36 items) and the underlying constructs (i.e., 12 covitality subfactors) they are designed to measure. After confirming that the 36 items represent the 12 underlying constructs well, we then computed 12 variables using the corresponding three items that comprised each subfactor. Based on the stage 1 analysis, in stage 2, we used the 12 subfactors as measured variables.

Structural equation modeling was used to test two alternative factor structures of the SEHS–S and invariance across gender groups in a series of multigroup CFAs. The invariance testing process involved several steps in which increasingly restrictive levels of measurement invariance were explored. To assess measurement invariance, we followed the analysis steps suggested by Chen et al. (2005). Configural invariance is the first, but weakest type of invariance because it only tests whether the basic latent trait model structure (i.e., the pattern of salient and nonsalient factor loadings) is invariant across groups. Metric invariance is the second step in evaluating measurement invariance and it evaluates if the loadings of latent factors are equal across groups using the baseline model. If a latent factor has equal loadings across groups, this can be interpreted as evidence that each group responds to the items in the same way and that the latent factors can be compared across groups. Scalar invariance, the third step of invariance testing, evaluates if the intercepts of measurement items are equal across groups. In summary, our analyses examined five levels of measurement invariance in the following order: (a) configural equivalence; (b) first-order factor coefficient equivalence; (c) first- and second-order factor coefficient equivalence; (d) first- and second-order factor coefficient and intercepts of measured variables equivalence; and (e) first- and second-order factor coefficient, intercepts of measured variables, and first-order factors equivalence.

In the present study, for all analyses, the degree of model fit was assessed using several criteria, the combination of which informed judgments about the overall adequacy of the model fit: the Satorra-Bentler scaled statistic, comparative fit index (CFI; Bentler 1990), non-normed fit index (NNFI; Bentler and Bonett 1980), standardized root-mean-square residual (SRMR), and root-mean-square error of approximation (RMSEA; Steiger and Lind 1980) with a 90 % confidence interval. The covitality items are ordinal variables that violate the assumption of multivariate normality, and preliminary examination of students’ responses showed that these data were multivariately kurtotic (Mardia’s multivariate kurtosis coefficient = 178.76, p < 0.01). Therefore, maximum likelihood (ML) estimation with robust statistics was conducted with the EQS (V6.1) structural equation modeling program (Bentler 2006). The Satorra-Bentler scaled statistic (S-B χ 2) was used because it provides a correction to the test statistics and standard errors when data are non-normally distributed. Specifically, SRMR was examined first, with a value lower than 0.08 desired. In addition to an acceptable SRMR, values lower than 0.06 for the RMSEA and values close to 0.95 for the NNFI and CFI were used to determine a good-fitting model (Hu and Bentler 1999). In reporting on evidence of invariance, two criteria must be met. The first criterion is that the multigroup model exhibits an adequate fit to the data. The second criterion, following Cheung and Rensvold (2002), is that the CFI decreases by no more than 0.01 from one model to the next, more constrained model (i.e., ∆CFI ≥ −0.01), which indicates that the null hypothesis of invariance should not be rejected. Further, Lagrange multiplier (LM) test modification indices were examined to find which equality constraints were untenable.
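The decision rules described above can be summarized in a short Python sketch. The function names `meets_cutoffs` and `invariance_tenable` are hypothetical, and treating “close to 0.95” as “at least 0.95” is a simplifying assumption made only for this illustration; in practice these cutoffs inform a combined judgment rather than a mechanical pass/fail test.

```python
def meets_cutoffs(srmr, rmsea, cfi, nnfi):
    """Compare fit indices against the Hu and Bentler (1999) guidelines."""
    return {
        "SRMR < 0.08": srmr < 0.08,
        "RMSEA < 0.06": rmsea < 0.06,
        # Assumption: "close to 0.95" is operationalized as >= 0.95.
        "CFI >= 0.95": cfi >= 0.95,
        "NNFI >= 0.95": nnfi >= 0.95,
    }


def invariance_tenable(cfi_less_constrained, cfi_more_constrained, tol=0.01):
    """Cheung and Rensvold (2002) rule: CFI may drop by at most `tol`."""
    delta_cfi = cfi_more_constrained - cfi_less_constrained
    return delta_cfi >= -tol


# Fit indices reported for the stage 1 (S1) measurement model.
s1_checks = meets_cutoffs(srmr=0.069, rmsea=0.062, cfi=0.968, nnfi=0.958)
for rule, passed in s1_checks.items():
    print(rule, passed)

# Hypothetical CFI values for two nested invariance models.
print(invariance_tenable(0.980, 0.975))
```

Applied to the S1 values, every rule passes except the RMSEA cutoff, which matches the pattern the Results section describes as acceptable overall fit with a less than ideal RMSEA.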

2.5 Missing Data

As in many studies using survey methods, our dataset contained several missing responses. In order to obtain unbiased estimates of the parameters of interest, despite the incompleteness of the data, this study employed EM ML estimation (Yuan and Bentler 2000).

3 Results

3.1 Construct Validity

Confirmatory Factor Analysis

Using S1 (n = 343), a CFA was conducted to test the fit of the previously known SEHS–S factor structure. Using the 36 items shown in Table 1 as measured variables, we tested the 12-factor model. Specifically, three items were associated with each of the 12 latent constructs (e.g., self-efficacy, self-awareness, etc.). The model adequately fit the data, S-B χ 2 = 1337.62, df = 582, p < 0.05; SRMR = 0.069; NNFI = 0.958; CFI = 0.968; RMSEA = 0.062, 90 % CI [0.058, 0.067]. The RMSEA value was not ideal but other fit indices were acceptable. As expected, each item showed a high factor loading on its corresponding subfactor. All parameter estimates were found to be statistically significant (p < 0.01, see Table 1).

Next, the CFA was repeated with S2 (n = 343) to validate the factor structure identified in the S1 analysis. As with any measure, additional research is needed to verify the factor structure of the SEHS–S; therefore, several alternative factor structures were tested to identify plausible models that could explain the relations among the items. Recognizing that the four core positive psychology constructs were significantly correlated, Model 1 tested a fully-correlated model. It was found that all of the items loaded on to their respective latent constructs: belief-in-self, belief-in-others, emotional competence, and engaged living (loadings range: 0.51–0.94) and the model adequately fit the data, S-B χ 2 = 151.18, df = 48, p < 0.05; SRMR = 0.052; NNFI = 0.948; CFI = 0.958; RMSEA = 0.081, 90 % CI [0.066, 0.095]. The RMSEA value was not ideal but other fit indices were acceptable. Model 2 extended the analysis by testing a second-order latent factor model with the four first-order latent constructs loading onto a general latent factor, which we labeled covitality. The model suggested an adequate fit to the data, χ 2 = 156.98, df = 50, p < 0.05, SRMR = 0.054; NNFI = 0.978; CFI = 0.979; RMSEA = 0.081, 90 % CI [0.066, 0.095]. The RMSEA value was not ideal but other fit indices were acceptable. Both models yielded close fit to the data; therefore, we made a decision based on both statistical criteria and conceptual grounding. Since parsimony is one of the criteria for model selection and the four first-order SEHS–S constructs loaded significantly on the second-order latent construct, covitality, the second-order latent factor model was selected over the correlated four-factor model as the preferred solution. Therefore, there was sufficient support for the hypothesized SEHS-S second-order factor model and it was then used in the following validity analyses. Finally, to improve the model fit, we added four error covariances between first-order factors based on the modification indices (i.e., factor 1 and 2, factor 1 and 3, factor 2 and 3, and factor 2 and 4). This addition is theoretically reasonable; thus, the model was respecified. The respecified model yielded an excellent fit to the data, S-B χ 2 = 56.82, df = 46, p < 0.05, SRMR = 0.055; NNFI = 0.988; CFI = 0.992; RMSEA = 0.026, 90 % CI [0.000, 0.047].
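As a rough check on how the RMSEA values relate to the reported test statistics, the sketch below applies the common point-estimate formula, the square root of max(χ 2 − df, 0) divided by df(N − 1). The function name `rmsea` is hypothetical, and the exact computation used by EQS for the Satorra-Bentler scaled statistic may differ in detail, so this is an approximation under stated assumptions rather than a reproduction of the software output.

```python
from math import sqrt


def rmsea(chi2, df, n):
    """Point estimate of RMSEA from a chi-square statistic.

    Uses sqrt(max(chi2 - df, 0) / (df * (n - 1))); some programs divide
    by n instead of n - 1, which changes the result only slightly.
    """
    return sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


# Stage 1 measurement model (S1, n = 343).
rmsea_s1 = rmsea(1337.62, 582, 343)
print(round(rmsea_s1, 3))  # 0.062, in line with the reported value

# Respecified second-order model (S2, n = 343).
rmsea_respecified = rmsea(56.82, 46, 343)
print(round(rmsea_respecified, 3))  # 0.026, in line with the reported value
```

The formula makes clear why the respecified model has a much smaller RMSEA: its χ 2 exceeds its degrees of freedom by only about 11 points, whereas the S1 model’s χ 2 is more than double its degrees of freedom.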

Multigroup Invariance Testing

As previously mentioned, measurement invariance testing was performed in five steps using the entire sample. The second-order factor model was first examined separately for each gender group. Both groups showed an adequate fit to the data: (a) males: S-B χ 2 = 45.18, df = 46, p < 0.05; SRMR = 0.049; NNFI = 0.961; CFI = 0.973; RMSEA = 0.001, 90 % CI [0.001, 0.051]; (b) females: S-B χ 2 = 45.66, df = 46, p < 0.05; SRMR = 0.053; NNFI = 0.990; CFI = 0.990; RMSEA = 0.001, 90 % CI [0.000, 0.048]. All fit indices were acceptable. For each of these groups, the factor loadings were all of satisfactory magnitude and were statistically significant at the p < 0.01 level. Because the same factor structure was tenable across the gender groups, configural invariance was ascertained.

Table 2 shows the model fit indices for invariance testing. Model 2 constrained all of the first-order factor loadings to be equal across groups. Comparing Model 1 and Model 2, ∆CFI was zero, indicating that the scores on the SEHS-S items showed the same amount of change across groups for the same amount of change on the first-order factors (Vandenberg and Lance 2000). Model 3 constrained all first- and second-order factor loadings to be equal across groups. Comparing Model 2 and Model 3, ∆CFI was less than 0.01, indicating that the first-order SEHS–S factors had the same amount of change across the two groups for the same amount of change on the latent second-order covitality factor. Model 4 was equivalent to Model 3 except that the first-order dimensions’ measurement intercepts were set to be equal across groups. Comparing Model 3 and Model 4, ∆CFI was less than 0.01, indicating a common zero point across groups for each of the SEHS–S first-order factors. The final model (Model 5) tested for equivalence of intercepts of the first-order SEHS–S factors. This model yielded a good fit to the data and ∆CFI was zero. This result established a common zero point on the second-order factor across groups. In sum, the results suggested that the second-order factor model showed sufficient invariance across the two gender groups. Therefore, it was appropriate to carry out additional analyses to compare latent means across groups.

Test of Latent Mean Differences

Given that the assumptions of invariance were satisfied, the next step tested for latent mean differences. We examined mean differences with males set as the reference group (see Table 3). Given the large sample and to avoid over-interpreting trivial differences, we interpreted moderate and larger effect sizes only (d ≥ 0.50; Cohen 1992). Results showed that none of the mean gender group differences for the covitality index and the four first-order factors were in the moderate or higher effect size range. The only cross-gender comparison in the small effect size range was that females more strongly endorsed belief-in-others items than males.

3.2 Predictive Validity

Test of Path Model for Subjective Wellbeing

Using S2, we examined the associations among the four first-order latent constructs (or positive mental health domains), the hypothesized second-order latent construct (covitality), and adolescents’ subjective wellbeing used as an outcome within a structural path model analysis. The means, standard deviations, and correlations among the latent traits included in the path model are shown in Table 4. As hypothesized, the analysis revealed significant, strong positive relations between the four positive-mental-health domains and adolescents’ covitality, as well as between covitality and the subjective wellbeing indicator (see Fig. 2). Moreover, the overall structural model proved to be a good fit to the data, S-B χ 2 = 271.22, df = 85, p < 0.05, SRMR = 0.062; NNFI = 0.956; CFI = 0.966; RMSEA = 0.082, 90 % CI [0.071, 0.093]. The RMSEA value was not ideal but other fit indices were acceptable.

3.3 Concurrent Validity

To further examine the conceptual validity and practical utility of the higher-order SEHS–S covitality construct, we first calculated the covitality composite scores for each student by summing each of the total scores of the 12 SEHS–S subscales. When examining the distribution of the covitality scores within the present sample, we found the variable was normally distributed (range = 51–150, M = 102.31, SD = 17.47, skewness = −0.01, kurtosis = −0.08). Next, the covitality scores, transformed to z-scores, were used to create four covitality groups: very low (scores < −1.0 SD, n = 103), low (scores −1.0 to 0 SD, n = 238), high (scores 0 to 1.0 SD, n = 212), and very high (scores > 1.0 SD, n = 113).
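The grouping rule can be sketched in Python as follows. The function name `covitality_groups` and the toy scores are hypothetical. Two details are assumptions because the text does not specify them: the sample standard deviation (n − 1 denominator) is used for standardizing, and a z-score of exactly 0 is assigned to the high group.

```python
from statistics import mean, stdev


def covitality_groups(scores):
    """Assign each composite score to one of four z-score bands."""
    m, s = mean(scores), stdev(scores)  # assumption: sample SD (n - 1)
    labels = []
    for x in scores:
        z = (x - m) / s
        if z < -1.0:
            labels.append("very low")
        elif z < 0.0:
            labels.append("low")
        elif z <= 1.0:          # assumption: z == 0 falls in "high"
            labels.append("high")
        else:
            labels.append("very high")
    return labels


# Toy composite scores (hypothetical), not data from the study.
toy_scores = [60, 90, 100, 110, 140]
toy_labels = covitality_groups(toy_scores)
print(list(zip(toy_scores, toy_labels)))
```

Because the bands are defined relative to the sample mean and standard deviation, group membership is sample-dependent; the same raw score could fall in a different band in a different sample.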

Self-reported school grades were significantly higher as the covitality level increased, F (3, 662) = 22.74, p < 0.001. The very high covitality group had the highest school grades (M = 3.68, SD = 1.14), followed by the high covitality group (M = 3.34, SD = 1.06), the low covitality group (M = 3.08, SD = 1.05), and very low covitality group (M = 2.53, SD = 1.01). Tukey post hoc analyses indicated all group differences were significant. Next, a chi-square test of association was conducted to test the relation between the covitality level and the presence of depressive symptoms experienced during the previous 12 months. Results indicated significant group differences on the presence of depressive symptoms, χ 2 (3, N = 667) = 21.90, p < 0.001. Post hoc comparisons using a Bonferroni correction indicated both the very low (26.2 %) and low (21.4 %) covitality groups had a higher proportion of students who experienced depressive symptoms than students in the high (10.4 %) and very high (8.8 %) covitality groups.
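The one-way ANOVA on school grades can be approximated from the reported summary statistics alone. The sketch below is a hedged illustration, not the original analysis: the function name `anova_from_summary` is hypothetical, and it assumes that the group sizes given for the four covitality groups (103, 238, 212, 113) are the sizes that entered the grades analysis. Because the means and standard deviations are rounded to two decimals, the result should land near, but not exactly on, the reported F value.

```python
def anova_from_summary(groups):
    """One-way ANOVA F statistic from (n, mean, sd) summaries per group."""
    n_total = sum(n for n, _, _ in groups)
    grand_mean = sum(n * m for n, m, _ in groups) / n_total
    # Between-groups sum of squares: group means around the grand mean.
    ss_between = sum(n * (m - grand_mean) ** 2 for n, m, _ in groups)
    # Within-groups sum of squares recovered from each group's SD.
    ss_within = sum((n - 1) * sd ** 2 for n, _, sd in groups)
    df_between = len(groups) - 1
    df_within = n_total - len(groups)
    f_stat = (ss_between / df_between) / (ss_within / df_within)
    return f_stat, df_between, df_within


# (n, M, SD) for self-reported grades, ordered very low to very high.
grade_summaries = [
    (103, 2.53, 1.01),
    (238, 3.08, 1.05),
    (212, 3.34, 1.06),
    (113, 3.68, 1.14),
]
f_stat, df_b, df_w = anova_from_summary(grade_summaries)
print(df_b, df_w, round(f_stat, 2))
```

Under these assumptions the degrees of freedom come out as 3 and 662, the same as those reported, and the F statistic is of the same order as the reported value, which supports the internal consistency of the reported group statistics.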

4 Discussion

Chen (2008) argued that research documenting that an instrument measures the same constructs across diverse samples of youths is a prerequisite for its use in other cultural contexts and for comparative research. Following from this suggestion, this study built on previous studies that examined the SEHS–S’s hierarchical factor structure that includes a second-order covitality factor (Furlong et al. 2014c; You et al. 2014b) and extended previous research by showing that the SEHS–S measurement model was replicated with students in an Asian cultural context. The one caveat is that the Gratitude Adjective Checklist items used in the English U.S. version could not be translated to the Korean linguistic context, which is consistent with research that has reported differences in the gratitude preferences expressed in the Korean and U.S. sociocultural contexts (Park and Lee 2012). This required us to explore, successfully, the use of items from the GQ–6. Although we have not yet fully tested the GQ-6 in U.S. samples, we anticipated that these items would fit the measurement model with the English version as well; nonetheless, this assumption will need to be evaluated empirically. In general, however, the SEHS–S was translated effectively into Korean, replicated the constructs and reliability found for the English version, and provided preliminary evidence supporting its use in cross-national comparative research and for use as a strength-based assessment with Korean secondary students.

Specifically, consistent with previous studies (Furlong et al. 2014c; You et al. 2014a), confirmatory factor analyses indicated that all 36 items loaded well onto their respective 12 subscales. Item loadings ranged from 0.51 to 0.94, which is similar to previous findings (0.58–0.94 in Furlong et al. 2014c; 0.52–0.90 in You et al. 2014a). As with previous SEHS–S studies, cross-factor loading was not a problem with the Korean data. The results of the confirmatory factor analyses also supported the statistical model of the SEHS–S, with the 12 subscales loading well onto the four first-order latent traits (belief-in-self, belief-in-others, emotional competence, and engaged living), which, in turn, contributed to the second-order covitality latent construct.

Furthermore, results of measurement invariance testing indicated that this second-order latent factor model applies equally to males and females. This means the SEHS–S can be used as a measure of Korean adolescents’ covitality regardless of gender. Such measurement invariance allows meaningful comparisons of latent means across gender groups. So far, gender differences have been reported in Korea for social and emotional health-related variables such as self-efficacy (Kim and Lee 2011), empathy (Lee 2014), and social support (Cho 2005; Eom et al. 2008). However, these group differences were obtained mostly through ANOVA, which does not account for measurement error. In this study, latent mean differences were minimal between genders in terms of the covitality index as well as the four first-order factors. On the other hand, Korean females had higher scores on belief-in-others (i.e., combination of family coherence, school support, and peer support) than males, although the effect size was small. This finding is consistent with previous studies. In particular, in a validation study comparing five U.S. sociocultural groups (You et al. 2014b), Asian and Latina females endorsed more belief-in-others items than their counterparts (d ≥ 0.50). You et al. interpreted this finding in relation to cultural norms for females that value social cohesion and interdependence. Similarly, other studies have found that Korean female adolescents perceived more peer support (Cho and Bang 2003; Eom et al. 2008) and parental support (Cho 2005) than males.

The construct validity of the Korean-version SEHS–S was also supported in that the higher-order structural model including covitality significantly predicted subjective wellbeing. Furthermore, higher levels of the covitality index were positively associated with self-reported academic achievement and negatively related to depressive symptoms. These findings were consistent with the results of previous studies (Furlong et al. 2014c; You et al. 2014a), which supported the use of the covitality index as a general indicator of youths’ positive social-emotional development.

Overall, the findings of the present study supported the validity of the SEHS–S Korean version as a strength-based instrument that provides information on core psychological mindsets along with the covitality score as an overall indicator of social emotional health. The introduction of the SEHS–S to Korea could be beneficial in that it would fill the void of instruments that measure indicators of positive youth development or social and emotional health. As described earlier, in Korea, there are few strength-based assessments with robust statistical qualities available for research and for universal mental health screening. For example, instruments measuring resilience (e.g., Ju and Lee 2011; Lee and Jo 2005) or developmental assets (e.g., Hwang and Jo 2004; Jung 2010) are valuable in terms of their emphasis on personal strengths and positive development. However, these measures currently need additional psychometric validation and their use has been limited only to research. At present, Korean teachers and school mental health professionals are not using strength-focused assessments with children and adolescents. This might be the case because available measures focus on risk factors, lack a more comprehensive whole-child focus, and are not easy to use in school settings. Therefore, the SEHS–S Korean version could serve as a strength-based measure with a comprehensive theoretical background, robust statistical qualities, and proven practicality for use in universal school-based screening (Dowdy et al. 2014; Furlong et al. 2014a).

4.1 Importance of the SEHS–S in the Korean Educational Context

Additional research is needed to examine how a dual-factor screening paradigm might provide information not just for interventions involving the students in greatest need of therapy and other supports, but also for early prevention involving vulnerable youths, and for programs that foster higher levels of psychological thriving in all youths. For example, the 2012 Programme for International Student Assessment (PISA; 2013), which assesses the skills and knowledge of 15-year-old students internationally, also included items that asked students to rate their level of overall happiness and sense of belonging at school. Korean students ranked 5th out of 64 countries overall in academic achievement, but had the lowest percentage of students reporting that they agreed or strongly agreed that they felt happy (60 %) and could make friends (79 %) at school, and were second lowest (65 %) in reporting that they were satisfied with their school. These 2012 PISA results tap into key markers related to the social emotional development of Korean students. While Korean students do very well on standardized academic assessments, this appears to come at some cost to other quality of life indicators. Although most Korean students are happy and satisfied at school and report that they have school friends, there is a sizable subgroup of students (30–40 %) who report less than optimal school belonging and engagement. In addition, stresses related to educational expectations are not just internal to the students, as shown by the 2012 PISA report finding that more than 80 % (ranked highest among all countries) of Korean parents expect their children to complete a college degree.
Given the incidence of Korean students’ internal distress and high external expectations, traditional mental distress screeners, such as the one adopted by the Korean Ministry of Education, have value in supporting efforts to reduce the suffering of the 3–10 % of students with more substantial mental distress symptoms. However, these assessments are not designed to provide the nuanced information needed to understand the broader social and psychological needs of the 20–25 % of vulnerable students who could be identified with assessments such as the SEHS–S. This is an important consideration because the 2012 PISA report showed that high academic achievement does not necessarily come at the cost of diminished happiness at school and other quality of life indicators. For example, the Shanghai-China students were ranked #1 in academics and more than 80 % of the students reported that they were happy at school.

4.2 How Might the SEHS–S Support Social Emotional Learning?

The PISA-happiness information naturally turns the discussion to how the SEHS–S might be used to understand more about the social emotional development of Korean students and which universal and targeted prevention programs might build the psychological dispositions that lead to higher levels of subjective wellbeing and that foster other quality of life indicators. Dowdy and colleagues (2014) suggested that combining the SEHS–S with a traditional risk-oriented survey would provide a more balanced perspective on students’ complete mental health. The information on the 12 psychological mindsets might also support the development of intervention strategies that include: (a) implementing a dual-factor complete mental health screening in schools (Suldo and Shaffer 2008) and (b) using the universal positive screening system to implement programs and services that foster school engagement and belonging, such as psychoeducational programs that build gratitude (Furlong et al. 2014b).

In Korea, with the increase in adolescents’ problem behaviors such as suicide and school violence and the finding that Korean students are the second-highest in terms of intellectual performance, but the second-lowest in terms of social interaction abilities among 36 countries (Kim 2011), there is an increasing awareness that social and emotional competence needs to be directly fostered by schools. However, the subject of social emotional learning has not yet been fully recognized or actively studied in Korea (Shin 2011). Instead, character education has been strongly emphasized and included as a part of school-related policies only since 2010. The introduction of the SEHS–S would be very opportune in that the Korean school system needs concrete information about its students’ social and emotional health status and how to promote such competence more effectively at school. The finding that students with higher covitality levels reported better school achievement could be persuasive to many Korean parents who value academic excellence over mental health. Therefore, combining a strength-based assessment such as the SEHS–S with the risk-oriented survey currently being used in Korea would be an ideal option for promoting both school success and students’ wellbeing in Korean schools.

4.3 Study Limitations

A primary limitation of the present study is that although a sample from 13 schools was used to establish the preliminary validity of the Korean version of the SEHS–S, the results cannot be generalized to all Korean youths ages 13–18. However, the primary purpose of this investigation was to explore and evaluate whether an adaptation of the SEHS–S for Korean schools had sufficient validity to warrant further investigation. The results of this study were a first step in the ongoing process of establishing and refining the validity of the Korean SEHS–S. Although the CFA analyses provided sufficient evidence to warrant additional research with the SEHS–S with Korean students, the results of this study need verification with other independent samples because of the less-than-optimal RMSEA fit indicators. Future investigations will need to examine student responses derived from regional and nationally representative samples and to further explore whether any item modifications could produce more optimal overall fit statistics.

A second limitation is that the preliminary concurrent validity analyses were all based on student self-report data. Future research will need to establish that student SEHS–S responses are related to other sources of information such as teacher and parent reports, actual student academic achievement, and even observable school behaviors, such as participation in class activities. In addition, future research will need to extend the range of quality of life indicators used to establish concurrent and predictive validity. For example, the present study compared the covitality score with the single YRBS depression item, and future research will need to examine this relation using a more robust measure of student psychological distress.

Finally, although in the U.S. the SEHS–S has been used successfully as part of a universal dual-factor complete mental health screening process, we still need to establish how the SEHS–S might be used most effectively with the Korean national mental health screening assessment. Here the goal should be how to use these measures not just for general surveillance purposes, but to inform school-based student care coordination teams about which specific students are in the greatest need of mental health services and how the school as a whole can implement care coordination to foster the happiness and wellbeing of all Korean students.

5 Conclusion

Information from the SEHS–S is needed because just knowing if students have high or low subjective wellbeing is insufficient to know how to help them. The current study provided preliminary construct and concurrent validity evidence for the SEHS–S social emotional health covitality model for Korean students. Efforts are now needed to implement pilot investigations to document and provide the evidence base on how best to implement culturally sensitive and responsive complete mental health assessment in Korean schools.

References

Antaramian, S. P., Huebner, E. S., Hills, K. J., & Valois, R. F. (2010). A dual-factor model of mental health: toward a more comprehensive understanding of youth functioning. American Journal of Orthopsychiatry, 80, 462–472.

Bentler, P. M. (1990). Comparative fit indices in structural models. Psychological Bulletin, 107, 238–246. doi:10.1037/0033-2909.107.2.238.

Bentler, P. M. (2006). EQS 6 structural equations program manual. Encino: Multivariate Software.

Borsboom, D. (2006). When does measurement invariance matter? Medical Care, 44, 176–181.

Chen, F. (2008). What happens if we compare chopsticks with forks? The impact of making inappropriate comparisons in cross-cultural research. Journal of Personality & Social Psychology, 95, 1005–1018. doi:10.1037/a0013193.

Chen, F. F., Sousa, K. H., & West, S. G. (2005). Testing measurement invariance of second order factor models. Structural Equation Modeling, 12, 471–492.

Cheung, G. W., & Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Structural Equation Modeling, 9, 233–255. doi:10.1207/S15328007SEM0902_5.

Cho, A. (2005). The mediating effect of social support in the relationship between adolescents’ stress and academic achievement. Korean Journal of Educational Research, 9(43), 137–155.

Cho, A., & Bang, H. (2003). The effect of parent, teacher, and friend social support on adolescents’ game addiction. Korean Journal of Youth Studies, 10(1), 249–275.

Cohen, J. (1992). A power primer. Psychological Bulletin, 112, 155–159.

Dowdy, E., Furlong, M. J., Raines, T. C., Price, M., Murdock, J., & Bovery, B. (2014). Enhancing school-based mental health services with a preventive and promotive approach to universal screening for complete mental health. Journal of Educational and Psychological Consultation, 4, 2014. doi:10.1080/10474412.2014.929951. First published online November.

Eaton, D. A., Kann, L., Kinchen, S., Shanklin, S., Flint, K. H., Hawkins, J., … Wechsler, H. (2012). Youth risk behavior surveillance—United States, 2011. MMWR, Morbidity and Mortality Weekly Report, 61(4).

Ebesutani, C., Regan, J., Smith, A., Reise, S., Higa-McMillan, C., & Chorpita, B. F. (2012). The 10-item positive and negative affect schedule for children, child and parent shortened versions: application of item response theory for more efficient assessment. Journal of Psychopathology and Behavioral Assessment, 34, 191–203.

Eom, T., Kang, M., & Cho, J. (2008). Gender differences of the stress, hopelessness, depression, suicidal ideation, and social support in adolescents. Journal of Human Studies, 22, 5–30.

Furlong, M. J., Ritchey, K. M., & O’Brennan, L. M. (2009). Developing norms for the California resilience youth development module: internal assets and school resources subscales. California School Psychologist, 14, 35–46. doi:10.1007/BF03340949.

Furlong, M. J., Dowdy, E., Carnazzo, K., Bovery, B., & Kim, E. (2014a). Covitality: Fostering the building blocks of complete mental health. NASP Communiqué, (June issue). Also available from www.readperiodicals.com/201406/3346560221.html

Furlong, M., Froh, J., Muller, M., & Gonzalez, V. (2014b). The role of student engagement in engaged living and psychological and social wellbeing: The centrality of connectedness/relatedness. In D. J. Shernoff & J. Bempechat (Eds.), National Society for the Study of Education yearbook—Engaging youth in schools: Empirically-based models to guide future innovations (Vol. 13, No. 1). New York: Columbia Teachers College.

Furlong, M. J., You, S., Renshaw, T. L., Smith, D. C., & O’Malley, M. D. (2014c). Preliminary development and validation of the social and emotional health survey for secondary students. Social Indicators Research, 117, 1011–1032. doi:10.1007/s11205-013-0373-0.

Greenspoon, P. J., & Saklofske, D. H. (2001). Toward an integration of subjective wellbeing and psychopathology. Social Indicators Research, 54, 81–108. doi:10.1023/A:1007219227883.

Hanson, T. L., & Kim, J. O. (2007). Measuring resilience and youth development: The psychometric properties of the Healthy Kids Survey. (Issues & Answers Report, REL 2007–No. 034). Washington, DC: U.S. Department of Education, Institute of Education Sciences, National Center for Education Evaluation and Regional Assistance, Regional Educational Laboratory West. Retrieved from http://ies.ed.gov/ncee/edlabs

Hu, L., & Bentler, P. (1999). Cutoff criteria for fit indexes in covariance structure analysis: conventional criteria versus new alternatives. Structural Equation Modeling, 6, 1–55. doi:10.1080/10705519909540118.

Huebner, E. S. (1991). Initial development of the Students’ life satisfaction scale. School Psychology International, 12, 231–243. doi:10.1177/0143034391123010.

Huebner, E. S. (1995). The students’ life satisfaction scale: an assessment of psychometric properties with black and white elementary school students. Social Indicators Research, 34, 315–323. doi:10.1007/BF01078690.

Hwang, M. S., & Jo, H. S. (2004). The relationship between developmental assets for young people and health risk behaviors. The Korean Journal of Health Psychology, 9(1), 85–98.

Ju, S., & Lee, Y. (2011). Development and validation of the resilience scale for adolescents (RSA). Korean Journal of Youth Studies, 18, 103–139.

Jung, C. W. (2010). Effects and relationships among developmental assets, locus of control, stress coping styles, and stress on the basis of adolescents’ perception. (Unpublished doctoral dissertation). Seoul, Korea: Chungnam National University.

Kamphaus, R. W., & Reynolds, C. R. (2007). BASC-2 Behavioral and Emotional Screening System manual. Bloomington: Pearson.

Kelly, R. M., Hills, K. J., Huebner, E. S., & McQuillin, S. D. (2012). The longitudinal stability and dynamics of group membership in the dual-factor model of mental health: psychosocial predictors of mental health. Canadian Journal of School Psychology, 27, 337–355. doi:10.1177/0829573512458505.

Keyes, C. L. M. (2005). Mental illness and/or mental health? Investigating axioms of the complete state model of health. Journal of Consulting and Clinical Psychology, 73, 539–548. doi:10.1037/0022-006X.73.3.539.

Keyes, C. L. M. (2007). Promoting and protecting mental health as flourishing: a complementary strategy for improving national mental health. American Psychologist, 62, 95–108. doi:10.1037/0003-066X.62.2.95.

Kim, K. H. (2011). 2010 report of diagnosing Korean youth key competence. Seoul: National Youth Policy Institute.

Kim, E. A., & Lee, S. Y. (2011). The roles of empathy, self-efficacy, and beliefs in classroom norm in defending behaviors among middle school students. The Korean Journal of Developmental Psychology, 24(1), 59–77.

Korean Center for Disease Control. (2013). The Ninth Youth Risk Behavior Web-based Survey report. Seoul, Korea: Author.

Korean Foundation for Preventing Youth Violence. (2013). 2012 National survey report on school violence in Korea. Seoul, Korea: Author.

Korean Ministry of Education. (2013). 2012 Report on national student mental health screening. Seoul, Korea: Author.

Lee, S. Y. (2014). The roles of empathy, social-self-efficacy, and perceived norms on defending behaviors to bullying among middle school students. The Korean Journal of Developmental Psychology, 27(3), 89–109.

Lee, H., & Jo, H. (2005). A study for development of the resilience scale of Korean adolescents. Studies on Korean Youth, 16, 161–206.

Lee, H., & Jo, H. (2006). A validation study of the resilience scale in Korean adolescents. The Korean Journal of Counseling and Psychopathology, 18, 353–371.

Lee, H. H., Kim, E. J., & Lee, M. K. (2003). A validation study of Korea positive and negative affect schedule: the PANAS scales. The Korean Journal of Clinical Psychology, 22(4), 935–946.

McCullough, M. E., Emmons, R. A., & Tsang, J. (2002). The grateful disposition: a conceptual and empirical topography. Journal of Personality and Social Psychology, 82, 112–127. doi:10.1037/0022-3514.82.1.112.

Moon, K. (2012). Effects of stress, self-resilience, and depression on suicide ideation among teenagers. Seoul: Korea University.

National Youth Policy Institute. (2013). Korea Children and Youth Panel Study (KCYPS)’s middle school first-grade third-year panel data (2012). Seoul, Korea: Author. Retrieved from http://archive.nypi.re.kr

Park, H. S., & Lee, H. E. (2012). Cultural differences in “thank you.”. Journal of Language and Social Psychology, 31, 138–156. doi:10.1177/0261927X12438536.

Programme for International Student Assessment. (2013). PISA 2012 results in focus: What 15-year-olds know and what they do with what they know. Paris, France: Organisation for Economic Co-operation and Development. www.oecd.org/pisa/keyfindings/pisa-2012-results-overview.pdf

Scales, P. C., & Leffert, N. (1998). Developmental assets: A synthesis of the scientific research on adolescent development. Minneapolis, MN: Search Institute.

Shin, H. S. (2011). An inquiry into the necessity and sustainability of social and emotional learning in schools pursuing academic excellence. The Korean Journal of School Psychology, 8, 175–197.

Steiger, J. H., & Lind, A. (1980). Statistically based tests for the number of common factors. Paper presented at the annual meeting of the Psychometric Society, Iowa City, IA.

Suldo, S. M., & Shaffer, E. J. (2008). Looking beyond psychopathology: The dual-factor model of mental health in youth. School Psychology Review, 37, 52–68.

Vandenberg, R. J., & Lance, C. E. (2000). A review and synthesis of the measurement invariance literature: Suggestions, practices, and recommendations for organizational research. Organizational Research Methods, 3, 4–70. doi:10.1177/109442810031002.

You, S., Furlong, M. J., Dowdy, E., Renshaw, T. L., Smith, D. C., & O’Malley, M. D. (2014a). Further validation of the social and emotional health survey for high school students. Applied Research in Quality of Life, 9, 997–1015. doi:10.1007/s11482-013-9282-2.

You, S., Furlong, M. J., Felix, E. D., & O’Malley, M. D. (2014b). Validation of the Social and Emotional Health Survey for five sociocultural groups: Multigroup invariance and latent mean analyses. Psychology in the Schools.

Yuan, K. H., & Bentler, P. M. (2000). Three likelihood-based methods for mean and covariance structure analysis with nonnormal missing data. Sociological Methodology, 30, 165–200. doi:10.1111/0081-1750.00078.

Electronic supplementary material

Below is the link to the electronic supplementary material.

ESM 1

(PDF 92 kb)

Lee, Sy., You, S. & Furlong, M.J. Validation of the Social Emotional Health Survey–Secondary for Korean Students. Child Ind Res 9, 73–92 (2016). https://doi.org/10.1007/s12187-014-9294-y