Abstract

Purpose of the Review

The review aims to help clinicians understand the current landscape of artificial intelligence (AI) tools for atrial fibrillation (AF). It explores the readiness of these tools for clinical use and identifies areas requiring further research.

Recent Findings

AI has increasingly played a crucial role in supporting AF care across the continuum of screening, diagnosis, and management. First, AI optimizes AF screening by refining patient selection through AF risk prediction. While most of these models have undergone substantial validation, their performance and feasibility for large-scale implementation vary. Secondly, AI has demonstrated its effectiveness in enhancing the diagnostic capabilities of ECGs, mobile cardiac monitors, and wearables by providing accurate, real-time detection. Thirdly, in the management of AF patients, emerging causal machine learning models have been developed to personalize treatment choices, yet rigorous evaluation in routine clinical practice remains pending. Within these three domains, AI also holds promise for stroke risk stratification, clinical decision support, patient education, and enhancing patients’ and clinicians’ adherence to guideline-recommended care, but such areas are either underdeveloped or have not yet shown a significant impact.

Summary

AI holds promise in supporting the screening, diagnosis, and management of AF. AI has been most reliably applied to streamlining AF diagnosis and is ready for clinical use in selecting screening populations. However, there is still significant progress required before it can be used to tailor treatment decisions for individual patients.


Introduction

Traditionally, the management of atrial fibrillation (AF) seeks to address two primary goals – symptom control and stroke prevention. Over the past decade, the therapeutic armamentarium for AF management has expanded. For stroke prevention, direct oral anticoagulants (DOACs) have replaced warfarin as the first-line therapy for most AF patients; [5, 13, 40, 11] and percutaneous left atrial appendage closure (LAAO) has emerged as a viable option for patients who are not suitable for oral anticoagulation (OAC) [37]. For symptom control, while medical rate or rhythm control options remain, catheter ablation has been increasingly used as the first-line therapy and some data indicated the role of ablation in reducing AF progression and improving clinical outcomes [38, 29, 43].

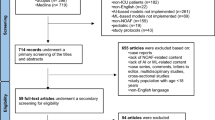

Advancements in AF management and a recognition that much of AF goes undiagnosed have sparked a growing interest in screening and early diagnosis. Artificial intelligence (AI) plays a pivotal role by enhancing population-based screening, targeting specific patient subgroups beyond traditional risk factors. AI also streamlines diagnosis, alleviating clinician cognitive load when analyzing ECGs. Furthermore, AI has the potential to personalize treatment decisions. In this review, we will examine the role of AI in screening, diagnosis, and management of AF to help clinicians understand how to best leverage AI in each of the stages of clinical decision making (Fig. 1).

Screening – AI May Tip the Balance of Benefit and Harm

AF screening essentially needs to address two questions – whom to screen and how to screen. Current guidelines vary in their recommendations regarding these questions. The 2023 American College of Cardiology (ACC)/American Heart Association (AHA)/American College of Clinical Pharmacy (ACCP)/Heart Rhythm Society (HRS) guideline did not specify a population eligible for screening or the screening approaches [34]. The US Preventive Services Task Force (USPSTF) concluded that the current evidence is insufficient to assess the benefits and harms of AF screening [7]. By contrast, the 2020 European Society of Cardiology (ESC) guidelines recommend opportunistic screening for AF by pulse taking or ECG rhythm strip in patients ≥ 65 years of age as a Class I, Level B recommendation, indicating evidence and/or general agreement that screening is beneficial, useful, and effective, supported by data from a single randomized controlled trial (RCT) or large non-randomized studies [14].

Thus, whether and how best to implement AF screening remains unclear for frontline clinicians and health systems. Clinicians and guideline writers alike wrestle with the fundamental assessments of the benefits and harms and the quality of the evidence supporting them. The potential benefits of AF screening include the opportunity for stroke prevention, the opportunity for early treatment and possible slowing or reversal of electrical and mechanical atrial remodeling, and the reduction of other AF-related morbidity and mortality [34, 39]. The harms might include additional healthcare costs, the burden of monitoring and receiving additional healthcare, anxiety caused by abnormal or ambiguous results, and the harm from treatments.

AI to Refine Screening Population

AI may help tip the balance between the benefits and harms of AF screening by refining the screening population – so-called AI-guided targeted screening. This approach may reduce the number of patients needed to be screened, thus increasing the diagnostic yield while limiting the potential harm to a smaller population. As shown in the ESC guidelines and prior AF screening RCTs, the current selection of the screening population is often based on advanced age and a few comorbidities [44, 14, 45]. However, considering the aging population and the fact that screening is often not a one-time task but a repeated or continuous task, this relatively unfocused strategy is not sustainable.

Refining the screening population depends on the stratification of the general population based on the predicted risk of AF. The most widely known risk prediction model is CHARGE-AF (Cohorts for Heart and Aging Research in Genomic Epidemiology model for AF), which relies on age, race, height, weight, blood pressure (BP), smoking, diabetes and myocardial infarction (MI) [1]. Another well-known model is the C2HEST score (coronary artery disease or chronic obstructive pulmonary disease [1 point each]; hypertension [1 point]; elderly [age ≥ 75 years, 2 points]; systolic heart failure [HF] [2 points]; thyroid disease [hyperthyroidism, 1 point]), which was derived and validated in Asian cohorts [23]. Lubitz et al. also developed a genetic risk score [27].
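As a minimal sketch of how a point-based score of this kind could be computed, the Python function below sums the C2HEST points exactly as listed above. The `Patient` class, its field names, and the function name are hypothetical and chosen only for illustration; in practice each input would have to be abstracted from clinical data.

```python
from dataclasses import dataclass


@dataclass
class Patient:
    """Hypothetical container for the C2HEST inputs (illustration only)."""
    age: int
    coronary_artery_disease: bool = False
    copd: bool = False
    hypertension: bool = False
    systolic_heart_failure: bool = False
    hyperthyroidism: bool = False


def c2hest_score(p: Patient) -> int:
    """Sum the C2HEST points as listed in the text (range 0 to 8)."""
    score = 0
    score += 1 if p.coronary_artery_disease else 0  # C: coronary artery disease
    score += 1 if p.copd else 0                     # C: COPD
    score += 1 if p.hypertension else 0             # H: hypertension
    score += 2 if p.age >= 75 else 0                # E: elderly (age >= 75 years)
    score += 2 if p.systolic_heart_failure else 0   # S: systolic heart failure
    score += 1 if p.hyperthyroidism else 0          # T: thyroid disease
    return score


# Hypothetical patient: 78 years old with hypertension and COPD.
example = Patient(age=78, hypertension=True, copd=True)
print(c2hest_score(example))  # 1 (COPD) + 1 (hypertension) + 2 (age) = 4
```

The sketch assumes every input is already a clean yes/no value, which is the very assumption that is hard to meet when the score is computed automatically from health records.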

When applied to population-based screening, these traditional risk prediction models have a few limitations. First, outcome adjudication often relied on diagnosis codes and thus identified patients who came to clinical attention in routine practice, while missing many of the patients whom screening programs are trying to catch. Second, the models generally predict AF over a fairly long term, e.g., 5 or 10 years. It is uncertain whether clinicians should order a short-term diagnostic test once the model has been run, or when and how often such diagnostic tests should be ordered.

Another important limitation of traditional prediction models is the difficulty of operationalizing them within the electronic health record (EHR). While clinicians can manually compute a risk score or use an online calculator by entering a patient’s medical history, modern medicine increasingly relies on computerized clinical decision support (CDS) systems to alert clinicians and patients at scale. This shift necessitates automated abstraction of EHR data to power the calculation. Unfortunately, some patients in the EHR lack complete or recent data such as age, race, height, weight, and BP. Additionally, for medical history, such as MI and HF, no EHR offers a definitive binary indicator (yes or no); rather, one would need to abstract diagnosis and procedure codes, lab and imaging results, medication prescriptions, and clinical notes, and then use an algorithm to decide whether a patient has a condition. Although the accuracy of adjudicating a condition might increase with more data and more complex algorithms, abstracting too much data and running overly complex algorithms would require the health system to acquire additional computational power and would slow down real-time calculation at the point of care. Furthermore, the accuracy of the algorithms that abstract medical history varies depending on the coding and documentation patterns of local health systems. More importantly, medical history data are frequently missing, and the missingness is not random. Patients who have established primary care, have routine follow-up, do not switch providers, and receive most care from a single health system are less likely to have missing or misclassified conditions, but such patients differ from others in terms of social determinants of health.

Similarly, genetic testing for AF risk is uncommonly performed in routine practice and, thus, not readily available in EHR. Since the primary motivation for refining the screening population is to avoid screening the entire general population, prediction models based on genetic data, largely missing in the general population, are not likely to drive targeted screening at scale. However, it is possible that once genetic testing is widely available to inform a variety of clinical decisions, AI models will scour these data to drive screening of the population at a large scale.

One of the more promising AF prediction approaches involves deriving insights from ECGs already performed in routine clinical practice for various existing indications [3]. For example, an AI-ECG tool flags patients who are at a high risk of having unrecognized AF based on normal sinus rhythm ECGs. In other words, the patient was predicted to have AF, but it did not show up in the current 10-second, 12-lead ECG; as a result, a continuous cardiac monitoring device is recommended. In a prospective study, the AI-ECG outperformed CHARGE-AF for the identification of incident AF [32]. Several other AI-ECG algorithms have also been developed in a variety of populations and settings [18, 16].

In addition to better performance, these AI-ECG algorithms are easier to implement within EHR. First, they are based on data more widely available than genetic materials. In general, AF screening should prioritize patients with risk factors in the CHA2DS2-VASc in order to identify patients in whom a new diagnosis would change clinical management (i.e., initiating stroke prevention therapies). Those patients are predominantly older adults, most of whom have previously undergone a 12-lead ECG. ECGs captured from consumer wearable devices have also been increasingly available to health systems for AI applications [2]. Second, as we explained above about the challenges in implementing CHARGE-AF, the AI-ECG is based on a single data source, and the performance is less likely to be subject to a local health system’s coding and documentation patterns.

When the AI-ECG model is applied to identify which patients should undergo more intensive cardiac monitoring, its performance might be lower than what was reported in the original study that developed the algorithm. This discrepancy arises for several reasons. First, the prevalence of AF is likely higher in the original retrospective study, which included patients who underwent multiple ECGs for clinical indications rather than for screening purposes. Second, when developing AI algorithms, investigators tend to use a diverse cohort including both older and younger adults, with and without comorbidities. However, when applying the algorithm in routine practice, clinicians and health systems will likely only alert patients with a high CHA2DS2-VASc score, considering the resources needed and the burden of monitoring. In other words, screening will be prioritized for patients for whom a new diagnosis of AF would change management. For instance, screening is not typically conducted for a 40-year-old asymptomatic individual without stroke risk factors. By contrast, patients with a high CHA2DS2-VASc score are more homogeneous in terms of advanced age and comorbidities. Moreover, the risk factors in CHA2DS2-VASc are largely also risk factors for AF, further reducing the AI algorithm’s discriminative ability. As a result, when the AI-ECG algorithm is applied in such populations, its performance might be lower than originally reported, but the AI-ECG remains a good approach for targeting a subgroup for more intensive cardiac monitoring.
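The first of these reasons, the dependence on AF prevalence, can be illustrated with a short calculation. The sketch below applies Bayes' rule to show how the positive predictive value of a fixed test changes with prevalence. The sensitivity, specificity, and prevalence values are hypothetical and are not taken from any of the cited studies, and the sketch does not capture the second reason (reduced discrimination in a more homogeneous population).

```python
def positive_predictive_value(sensitivity: float, specificity: float,
                              prevalence: float) -> float:
    """PPV = P(disease | positive test), computed with Bayes' rule."""
    true_positive_rate = sensitivity * prevalence
    false_positive_rate = (1.0 - specificity) * (1.0 - prevalence)
    denominator = true_positive_rate + false_positive_rate
    if denominator == 0.0:
        raise ValueError("No positive tests are possible with these inputs.")
    return true_positive_rate / denominator


# Hypothetical values: the same test applied in a higher-prevalence
# development cohort and in a lower-prevalence screening population.
for prevalence in (0.10, 0.03):
    ppv = positive_predictive_value(0.80, 0.80, prevalence)
    print(f"prevalence={prevalence:.2f} -> PPV={ppv:.3f}")
```

With sensitivity and specificity held fixed, the share of flagged patients who truly have AF falls as prevalence falls, so more monitoring is needed per case found.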

AI to Reduce Harm in Screening-Detected AF

AI can also tip the balance between the benefits and harms of screening by selecting patients with screening-detected AF who might most benefit from stroke prevention. For a general asymptomatic population, the promise of early AF diagnosis lies in initiating OAC to prevent stroke; however, patients with screen-detected AF generally have a lower risk of stroke than patients whose AF was brought to clinical attention without screening. If the stroke risk is low, the benefit from stroke prevention would be low, and thus, the benefit of a population-based screening program might not outweigh the harm. The level of stroke risk depends on clinical characteristics and AF burden [28]. Recent analyses of ARTESiA suggest that patients with subclinical AF might require a higher threshold in terms of clinical risk factors to benefit from OAC, i.e., a CHA2DS2-VASc ≥ 5 in subclinical AF versus ≥ 2–3 in clinical AF [24]. It is reasonable to expect that future AI models will outperform CHA2DS2-VASc in predicting the risk of stroke in subclinical AF by incorporating clinical risk factors and AF burden and leveraging complex interactions and higher-order terms.

In summary, AI models have shown promise in addressing the two primary AF screening questions – whom to screen and how to screen. A number of AI algorithms have been developed to help increase the diagnostic yield by selecting patients at high risk of unrecognized AF who might benefit from more intensive monitoring. AI models will likely facilitate screening itself by allowing the automatic detection of AF by various monitoring devices and perhaps improving the efficiency of AF monitoring by reducing the monitoring time needed [10]. An ongoing randomized controlled trial (RCT) is testing whether AI-identified high-risk patients benefit from intermittent ECG recording using an Apple Watch [50].

Diagnosis – Streamline to Increase Efficiency and Accuracy

Compared to the predictive analytics required for targeted screening efforts in patients without known AF, diagnosing AF from a direct cardiac recording is more straightforward. The traditional approach to AF diagnosis typically involves tagging ECG features or fiducial points (e.g., QRS complex or R waves) and then detecting irregular R-R intervals [8]. More sophisticated models may involve detecting P waves to differentiate frequent premature atrial contractions (PACs), wandering atrial pacemakers, or sinus arrhythmia from AF.
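To make the traditional approach concrete, the sketch below takes R-peak timestamps that are assumed to have been located already, computes the R-R intervals, and flags a rhythm as irregular when the coefficient of variation of those intervals exceeds a cutoff. The function names and the 0.10 cutoff are hypothetical and for illustration only; this is not the method of any cited study, and it does not examine P waves, so it cannot separate AF from other irregular rhythms.

```python
from statistics import mean, pstdev


def rr_intervals(r_peak_times_s):
    """Differences between consecutive R-peak times, in seconds."""
    return [later - earlier
            for earlier, later in zip(r_peak_times_s, r_peak_times_s[1:])]


def rr_coefficient_of_variation(r_peak_times_s):
    """Standard deviation of the R-R intervals divided by their mean."""
    intervals = rr_intervals(r_peak_times_s)
    if len(intervals) < 2:
        raise ValueError("Need at least three R peaks.")
    return pstdev(intervals) / mean(intervals)


def looks_irregular(r_peak_times_s, cv_threshold=0.10):
    """Flag an irregular rhythm; the threshold is an illustrative assumption."""
    return rr_coefficient_of_variation(r_peak_times_s) > cv_threshold


# Hypothetical R-peak times in seconds.
regular_rhythm = [0.0, 0.8, 1.6, 2.4, 3.2]
irregular_rhythm = [0.0, 0.5, 1.6, 2.0, 3.1]
print(looks_irregular(regular_rhythm))    # evenly spaced beats
print(looks_irregular(irregular_rhythm))  # widely varying intervals
```

A rule like this depends entirely on accurate R-peak detection, which is where noisy recordings cause trouble.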

AI has now demonstrated superior performance in diagnosing AF through the analysis of ECG, particularly from mobile cardiac monitors and wearable devices, where poor signals may make detection based on fiducial points more challenging. AI algorithms, employing various analytical frameworks and network architectures, have been developed to enhance AF detection from multiple data sources.

Several studies have shown that AI models can diagnose AF directly from a 12-lead ECG, which typically provides clean signals and excellent performance for arrhythmia diagnosis. Cai et al. developed a deep neural network to detect AF from 12-lead ECGs, achieving high performance with an accuracy of 99.35%, sensitivity of 99.19%, and specificity of 99.44% in the test dataset, indicating that AI can accurately diagnose AF from ECG waveforms [4]. Yang et al. introduced a component-aware transformer model that segments the ECG waveform into its components (P-wave, QRS complex, T-wave) and uses this information for AF diagnosis [46]. This model demonstrated superior performance compared to conventional deep learning techniques, with high accuracy in both single- and 12-lead ECG signals. Kong et al. proposed an IRBF-RVM model combining integrated radial basis function and relevance vector machine for AF diagnosis using 12-lead ECGs [21]. Their model achieved a classification rate of 98.16%, showcasing the effectiveness of AI in diagnosing AF. Collectively, these studies highlight the capability of AI models to diagnose AF directly from 12-lead ECGs with high accuracy, sensitivity, and specificity.
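For readers less familiar with the metrics quoted above, the following sketch shows how accuracy, sensitivity, and specificity are derived from a confusion matrix. The counts are hypothetical and do not come from any of the cited studies.

```python
def diagnostic_metrics(tp: int, fp: int, tn: int, fn: int) -> dict:
    """Compute standard diagnostic metrics from confusion-matrix counts."""
    return {
        # Share of true AF recordings that the model labels as AF.
        "sensitivity": tp / (tp + fn),
        # Share of non-AF recordings that the model labels as non-AF.
        "specificity": tn / (tn + fp),
        # Share of all recordings that the model labels correctly.
        "accuracy": (tp + tn) / (tp + fp + tn + fn),
    }


# Hypothetical test set: 100 AF recordings and 100 non-AF recordings.
metrics = diagnostic_metrics(tp=90, fp=5, tn=95, fn=10)
for name, value in metrics.items():
    print(f"{name}: {value:.3f}")
```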

Clinical-grade mobile cardiac monitors provide continuous ECG monitoring, transmitting ECG data to AI systems that analyze the data in real-time, facilitating early detection and intervention. AI models implemented on wearable ECG devices have demonstrated high accuracy in detecting AF, with some achieving sensitivity and specificity rates above 98% [25, 30]. Insertable cardiac monitors can be used to follow patients longitudinally to detect incident AF in patients deemed to be high risk, such as those with strokes of undetermined source. Pürerfellner et al. demonstrated an ECG algorithm that detects P waves to differentiate AF from frequent PACs [41]. This algorithm improves AF detection in insertable cardiac monitors by incorporating P-wave identification. The original detection algorithm relies on R-R interval patterns over a 2-minute period to identify AF. The improved algorithm reduces the likelihood of AF detection if P waves are present, thus distinguishing AF from PACs more effectively. Validation using Holter data, which included 8442 h of recordings from 206 patients, showed that the algorithm correctly identified 97.8% of the total AF duration and 99.3% of the total sinus or non-AF rhythm duration. It detected 85% of all AF episodes ≥ 2 min in duration, with a significant reduction in inappropriate AF detection episodes and duration by 46% and 55%, respectively, while maintaining high sensitivity for true AF detection. This study highlights the efficacy of incorporating P-wave detection into AF algorithms to improve diagnostic accuracy, particularly in distinguishing AF from frequent PACs.

In contrast to the reliable, high-quality signals from 12-lead ECGs and clinical-grade monitors, wearable devices such as smartwatches and fitness trackers often use photoplethysmography (PPG) to monitor heart rhythms. Here, the algorithms rely on pulse waveforms and have less precision, relying mostly on irregular heartbeat detection. AI algorithms applied to PPG data can detect irregular heart rhythms indicative of AF, achieving high sensitivity and specificity, making them effective for large-scale screening and continuous monitoring [22, 26]. For example, the Fitbit Heart Study showed that a PPG-based algorithm could identify AF with a positive predictive value of 98.2% [26]. Manetas-Stavrakakis et al. conducted a systematic review and meta-analysis focusing on the diagnostic accuracy of AI-based technologies for AF detection, reporting pooled sensitivity and specificity of 95.1% and 96.2% for PPG and 92.3% and 96.2% for single-lead ECG, respectively [29]. This study highlights the high diagnostic accuracy of AI models in detecting AF using various technologies.

In summary, AI enhances the diagnostic capabilities of ECGs, mobile cardiac monitors, and wearables by providing accurate, real-time detection of AF. These advancements hold promise for improving AF screening, early diagnosis, and management, which, with time, hopefully will translate to better patient outcomes.

Treatment – Support Clinical Decision Making and Care Delivery

AI to Personalize Treatment Selection

Over the past decade, the medical field has seen the approval and integration of a number of new AF treatments into standard care. The advancement in AF management requires new approaches to determine which patients are most likely to benefit from specific treatments—essentially, understanding heterogeneous treatment effects (HTE) to tailor personalized therapy choices. For instance, in the pivotal RCTs, DOACs all had a similar risk of stroke and systemic embolism, but a lower risk of intracranial bleeding than vitamin K antagonist (VKA), and some DOACs had a lower risk of major bleeding [5, 13, 40, 11]. The population-level treatment benefit has been clear from the RCTs and subsequent real-world data [47, 48], but it is uncertain whether there is any HTE. In the standard subgroup analyses of RCTs, no HTE was found. However, the traditional one-factor-at-a-time subgroup analyses can be subject to false negatives, i.e., failing to identify HTE, due to underpowered subgroups, and can miss HTE induced by large complex interactions beyond a single factor [17]. They are also subject to false positives, i.e., mistakenly identifying HTE when it does not exist, due to multiple testing. Consequently, AI offers a promising methodological improvement in predicting HTE, enabling clinicians and patients to personalize treatment selection beyond what traditional subgroup analyses can reveal.

Here, we would like to call out a specific class of machine learning methods – causal machine learning [9]. The machine learning methods described in the previous screening and diagnosis sections aim to predict a single outcome. For example, AI used for screening predicts whether a patient has unrecognized AF. However, when AI is used to predict HTE, it is not predicting the probability of a single outcome, but rather the difference between outcomes under different treatment options, e.g., the difference in outcomes between warfarin and a DOAC, to determine the selection of treatment for an individual [31].
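The distinction can be sketched in code. The example below estimates, within each subgroup, the difference in event risk between two treatment arms, which is the quantity of interest in HTE analysis. It is a deliberately naive stratified estimate on hypothetical data and assumes treatment was randomized within each subgroup. It is not a causal forest or any other causal machine learning method from the cited work, which is designed to handle many covariates and confounding.

```python
from collections import defaultdict


def subgroup_risk_differences(records):
    """Return {subgroup: risk(B) - risk(A)} from (subgroup, arm, event) rows.

    `arm` is "A" or "B"; `event` is 1 if the outcome occurred, else 0.
    """
    # For each subgroup and arm, track [number of events, number of patients].
    counts = defaultdict(lambda: {"A": [0, 0], "B": [0, 0]})
    for subgroup, arm, event in records:
        counts[subgroup][arm][0] += event
        counts[subgroup][arm][1] += 1

    differences = {}
    for subgroup, arms in counts.items():
        if arms["A"][1] == 0 or arms["B"][1] == 0:
            continue  # no comparison is possible without both arms
        risk_a = arms["A"][0] / arms["A"][1]
        risk_b = arms["B"][0] / arms["B"][1]
        differences[subgroup] = risk_b - risk_a
    return differences


# Hypothetical data: four patients per arm in each subgroup.
toy_records = (
    [("older", "A", e) for e in (1, 1, 0, 0)]
    + [("older", "B", e) for e in (1, 0, 0, 0)]
    + [("younger", "A", e) for e in (1, 0, 0, 0)]
    + [("younger", "B", e) for e in (1, 0, 0, 0)]
)
print(subgroup_risk_differences(toy_records))
```

In this toy data, treatment B lowers risk only in the older subgroup, which is the kind of pattern an HTE analysis looks for. With real data and many patient characteristics, simple stratification quickly runs out of patients per cell.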

Traditionally, OAC decision-making relies on risk scores to predict stroke risk (e.g., CHA2DS2-VASc) and bleeding risk (e.g., HAS-BLED). This is because there was only one option for OAC – vitamin K antagonist (VKA), and the choice was merely OAC versus no OAC. Therefore, the key to this decision was to balance the risks of stroke and bleeding. Over the past decade, with more options such as DOACs with a lower risk of bleeding, only the roughly 10% of AF patients who have a low risk of stroke would not benefit from OAC [35, 20]. Therefore, the recent guidelines use CHA2DS2-VASc to identify patients with a low risk of stroke, and for the remaining 90% of patients (i.e., men with CHA2DS2-VASc ≥ 2 and women with CHA2DS2-VASc ≥ 3), OAC is indicated as a Class I, Level A recommendation [34]. Guidelines also recognize the limited role of bleeding risk scores in OAC decision making [34]. For example, European guidelines state that bleeding risk scores should be used to identify modifiable risk factors and to enable early and more frequent follow-up rather than to withhold anticoagulation [14].
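The threshold rule described above can be written as a short sketch. The sex-specific cutoffs come from the text. The score components follow the standard published CHA2DS2-VASc definition, which this review does not enumerate, and the function and parameter names are hypothetical.

```python
def cha2ds2_vasc(age: int, female: bool, heart_failure: bool = False,
                 hypertension: bool = False, diabetes: bool = False,
                 prior_stroke_or_tia: bool = False,
                 vascular_disease: bool = False) -> int:
    """CHA2DS2-VASc score per its standard definition (range 0 to 9)."""
    score = 0
    score += 1 if heart_failure else 0                    # C: congestive heart failure
    score += 1 if hypertension else 0                     # H: hypertension
    score += 2 if age >= 75 else (1 if age >= 65 else 0)  # A2 (>= 75) or A (65 to 74)
    score += 1 if diabetes else 0                         # D: diabetes
    score += 2 if prior_stroke_or_tia else 0              # S2: stroke/TIA/thromboembolism
    score += 1 if vascular_disease else 0                 # V: vascular disease
    score += 1 if female else 0                           # Sc: sex category (female)
    return score


def oac_class_i_indicated(score: int, female: bool) -> bool:
    """Thresholds from the text: men >= 2, women >= 3."""
    return score >= (3 if female else 2)


# Hypothetical patient: 70-year-old woman with hypertension.
score = cha2ds2_vasc(age=70, female=True, hypertension=True)
print(score, oac_class_i_indicated(score, female=True))
```

As in the earlier scoring sketch, the hard part in practice is not the arithmetic but obtaining reliable yes/no inputs from health record data.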

Because the guidelines already recommend OAC for the majority of patients with clinical AF, the treatment decision now is about which OAC to use or whether LAAO should be used instead of lifelong medication therapies [33]. Some of the early machine learning work using causal forest did not identify any patient subgroup that benefited more from warfarin than from DOACs, which is reassuring evidence in support of current guidelines [31]. There seemed to be some subgroups of patients who might benefit more or less from one DOAC versus another, and there has been ongoing work on causal machine learning to identify HTE for LAAO versus DOACs [49].

Personalizing treatment decisions for rhythm control is challenging due to the absence of outcomes like AF recurrence, symptoms, and quality of life in large administrative databases. These endpoints are typically gathered in ablation trials, which are not ideal for developing machine learning models due to the trials’ inclusion and exclusion criteria, leading to homogenous populations that differ from those in routine clinical practice. Moreover, AI algorithm training usually requires a much larger patient cohort than those in the trials. Future research is needed to pool multiple trials and registries or utilize surrogate outcomes for AF occurrence in large administrative datasets complemented by validation at the local health system level.

AI to Address Clinicians’ Non-Adherence to Guidelines

Another application of AI in AF management is to improve clinicians’ adherence to guideline-recommended therapies via CDS tools. For example, despite the availability of DOACs, some patients still do not receive OACs – some clinicians might not prescribe the medications, and some patients might not adhere to the prescriptions [47, 48, 51]. In prior studies, computerized CDS consistently increased the prescription rate of OACs [42, 6]. AI can further enhance such CDS by better characterizing patients and their previous treatments. For example, to alert clinicians to prescribe OAC, the CDS needs to identify patients with AF and calculate CHA2DS2-VASc from the EHR. The challenge is that the EHR has no clear binary variable for whether a patient had AF or stroke; rather, it contains structured and unstructured data such as diagnoses, prescriptions, procedures, and notes. Computerized CDS depends on rules or algorithms to abstract data from the EHR to characterize patients, and advances in AI can help enhance the performance of such algorithms and thus reduce inaccurate alerts to clinicians due to the misclassification of patients [36]. Furthermore, in the future, AI and large language models (LLMs) could be used to abstract from clinical notes whether OAC was already discussed and the reasons for declining it, either to avoid an unnecessary alert or to suggest a different action such as discussing LAAO.

AI to Enhance Patient Education and Adherence

Numerous interventions have been developed and examined to improve patients’ adherence to medications across diseases [19]. There is hope that AI tools, such as a chatbot, can help improve adherence. In the Nudge trial, approximately 10,000 patients with cardiovascular and related diseases were randomized into one of the four study arms: usual care (no intervention), generic nudge (a generic text reminder), optimized nudge (a behavioral nudge text), and optimized nudge plus interactive AI chatbot [12]. The initial findings indicated the AI chatbot did not further improve medication adherence beyond text messages [15].

Conclusion

In conclusion, we reviewed the role of AI in advancing AF care across the screening, diagnosis, and management domains. First, AI optimizes AF screening by refining patient selection through ML models that predict AF risk. These models have been largely well validated, but their performance and feasibility for large-scale implementation within the EHR vary. Second, AI has been increasingly applied to enhance the diagnostic capabilities of ECGs, mobile cardiac monitors, and wearables by providing accurate, real-time detection. Lastly, for the management of AF patients, emerging causal machine learning models have been developed to personalize treatment choices, yet rigorous evaluation in routine clinical practice remains pending. Within these three domains, AI also holds promise for stroke risk stratification, patient education, and enhancing patients’ and clinicians’ adherence to guideline-recommended care, but such areas are either underdeveloped or have not shown a significant impact (Table 1). The full transformative potential of AI in AF care awaits further exploration.

Data Availability

No datasets were generated or analysed during the current study.

Abbreviations

- AI: Artificial intelligence
- ACC: American College of Cardiology
- ACCP: American College of Clinical Pharmacy
- AHA: American Heart Association
- AF: Atrial fibrillation
- BP: Blood pressure
- CDS: Clinical decision support
- ECG: Electrocardiogram
- EF: Ejection fraction
- EHR: Electronic health records
- HF: Heart failure
- HRS: Heart Rhythm Society
- LAAO: Left atrial appendage closure
- LHS: Learning health system
- LLM: Large language models
- MI: Myocardial infarction
- OAC: Oral anticoagulation
- PAC: Premature atrial contraction
- RCT: Randomized controlled trial
- USPSTF: US Preventive Services Task Force

References

Alonso A, et al. Simple risk model predicts incidence of atrial fibrillation in a racially and geographically diverse population: the CHARGE-AF consortium. J Am Heart Assoc. 2013;2(2):e000102.

Attia ZI, et al. Prospective evaluation of smartwatch-enabled detection of left ventricular dysfunction. Nat Med. 2022;28(12):2497–503.

Attia ZI, et al. An artificial intelligence-enabled ECG algorithm for the identification of patients with atrial fibrillation during sinus rhythm: a retrospective analysis of outcome prediction. Lancet. 2019;394(10201):861–7.

Cai W, et al. Accurate detection of atrial fibrillation from 12-lead ECG using deep neural network. Comput Biol Med. 2020;116:103378.

Connolly SJ, et al. Dabigatran versus warfarin in patients with atrial fibrillation. N Engl J Med. 2009;361(12):1139–51.

Cox J, et al. Computerized clinical decision support to improve stroke prevention therapy in primary care management of atrial fibrillation: a cluster randomized trial. Am Heart J. 2024;273:102–10.

Davidson KW, et al. Screening for Atrial Fibrillation: US Preventive Services Task Force Recommendation Statement. JAMA. 2022;327(4):360–7.

Duan J, et al. Accurate detection of atrial fibrillation events with R-R intervals from ECG signals. PLoS One. 2022;17(8):e0271596.

Feuerriegel S, et al. Causal machine learning for predicting treatment outcomes. Nat Med. 2024;30(4):958–68.

Gadaleta M, et al. Prediction of atrial fibrillation from at-home single-lead ECG signals without arrhythmias. NPJ Digit Med. 2023;6(1):229.

Giugliano RP, et al. Edoxaban versus warfarin in patients with atrial fibrillation. N Engl J Med. 2013;369(22):2093–104.

Glasgow RE, et al. The NUDGE trial pragmatic trial to enhance cardiovascular medication adherence: study protocol for a randomized controlled trial. Trials. 2021;22(1):528.

Granger CB, et al. Apixaban versus warfarin in patients with atrial fibrillation. N Engl J Med. 2011;365(11):981–92.

Hindricks G, et al. 2020 ESC Guidelines for the diagnosis and management of atrial fibrillation developed in collaboration with the European Association for Cardio-Thoracic Surgery (EACTS): The Task Force for the diagnosis and management of atrial fibrillation of the European Society of Cardiology (ESC) Developed with the special contribution of the European Heart Rhythm Association (EHRA) of the ESC. Eur Heart J. 2021;42(5):373–498.

Ho PM, Bull S. Personalized patient data and behavioral nudges to improve adherence to chronic cardiovascular medications (The Nudge Study); 2023. Retrieved 6/22/2024, from https://dcricollab.dcri.duke.edu/sites/NIHKR/KR/GR-Slides-11-17-23.pdf.

Hygrell T, et al. An artificial intelligence-based model for prediction of atrial fibrillation from single-lead sinus rhythm electrocardiograms facilitating screening. Europace. 2023;25(4):1332–8.

Kent DM, et al. Personalized evidence based medicine: predictive approaches to heterogeneous treatment effects. BMJ. 2018;363:k4245. https://www.bmj.com/content/363/bmj.k4245

Khurshid S, et al. ECG-Based Deep Learning and Clinical Risk Factors to Predict Atrial Fibrillation. Circulation. 2022;145(2):122–33.

Kini V, Ho PM. Interventions to Improve Medication Adherence: A Review. JAMA. 2018;320(23):2461–73.

Komen JJ, et al. Oral anticoagulants in patients with atrial fibrillation at low stroke risk: a multicentre observational study. Eur Heart J. 2022;43(37):3528–38.

Kong D, et al. A novel IRBF-RVM model for diagnosis of atrial fibrillation. Comput Methods Programs Biomed. 2019;177:183–92.

Lee S, et al. Artificial Intelligence for Detection of Cardiovascular-Related Diseases from Wearable Devices: A Systematic Review and Meta-Analysis. Yonsei Med J. 2022;63(Suppl):S93–S107.

Li YG, et al. A Simple Clinical Risk Score (C(2)HEST) for Predicting Incident Atrial Fibrillation in Asian Subjects: Derivation in 471,446 Chinese Subjects, With Internal Validation and External Application in 451,199 Korean Subjects. Chest. 2019;155(3):510–8.

Lopes RD, et al. Apixaban versus aspirin according to CHA2DS2-VASc score in subclinical atrial fibrillation: Insights from ARTESiA. J Am Coll Cardiol. 2024;84(4):354–64.

Lown M, et al. Machine learning detection of Atrial Fibrillation using wearable technology. PLoS One. 2020;15(1):e0227401.

Lubitz SA, et al. Detection of Atrial Fibrillation in a Large Population Using Wearable Devices: The Fitbit Heart Study. Circulation. 2022;146(19):1415–24.

Lubitz SA, et al. Genetic Risk Prediction of Atrial Fibrillation. Circulation. 2017;135(14):1311–20.

Mahajan R, et al. Subclinical device-detected atrial fibrillation and stroke risk: a systematic review and meta-analysis. Eur Heart J. 2018;39(16):1407–15.

Manetas-Stavrakakis N, et al. Accuracy of artificial intelligence-based technologies for the diagnosis of atrial fibrillation: A systematic review and meta-analysis. J Clin Med. 2023;12(20):6576.

Marsili IA, et al. Implementation and validation of real-time algorithms for atrial fibrillation detection on a wearable ECG device. Comput Biol Med. 2020;116:103540.

Ngufor C, et al. Identifying treatment heterogeneity in atrial fibrillation using a novel causal machine learning method. Am Heart J. 2023;260:124–40.

Noseworthy PA, et al. Artificial intelligence-guided screening for atrial fibrillation using electrocardiogram during sinus rhythm: a prospective non-randomised interventional trial. Lancet. 2022a;400(10359):1206–12.

Noseworthy PA, et al. Percutaneous left atrial appendage occlusion in comparison to non-vitamin K antagonist oral anticoagulant among patients with atrial fibrillation. J Am Heart Assoc. 2022b;11(19):e027001.

Writing Committee Members, Joglar JA, et al. 2023 ACC/AHA/ACCP/HRS Guideline for the Diagnosis and Management of Atrial Fibrillation: A Report of the American College of Cardiology/American Heart Association Joint Committee on Clinical Practice Guidelines. J Am Coll Cardiol. 2024;83(1):109–279. https://doi.org/10.1016/j.jacc.2023.08.017

O’Brien EC, et al. Effect of the 2014 atrial fibrillation guideline revisions on the proportion of patients recommended for oral anticoagulation. JAMA Intern Med. 2015;175(5):848–50.

Ong CJ, et al. Machine learning and natural language processing methods to identify ischemic stroke, acuity and location from radiology reports. PLoS One. 2020;15(6):e0234908.

Osmancik P, et al. 4-Year Outcomes After Left Atrial Appendage Closure Versus Nonwarfarin Oral Anticoagulation for Atrial Fibrillation. J Am Coll Cardiol. 2022;79(1):1–14.

Packer DL, et al. Effect of Catheter Ablation vs Antiarrhythmic Drug Therapy on Mortality, Stroke, Bleeding, and Cardiac Arrest Among Patients With Atrial Fibrillation: The CABANA Randomized Clinical Trial. JAMA. 2019;321(13):1261–74.

Paludan-Müller C, et al. Atrial fibrillation: age at diagnosis, incident cardiovascular events, and mortality. Eur Heart J. 2024;45(24):2119–29.

Patel MR, et al. Rivaroxaban versus warfarin in nonvalvular atrial fibrillation. N Engl J Med. 2011;365(10):883–91.

Pürerfellner H, et al. P-wave evidence as a method for improving algorithm to detect atrial fibrillation in insertable cardiac monitors. Heart Rhythm. 2014;11(9):1575–83.

Sennesael A-L, et al. Do computerized clinical decision support systems improve the prescribing of oral anticoagulants? A systematic review. Thromb Res. 2020;187:79–87.

Sohns C, et al. Catheter Ablation in End-Stage Heart Failure with Atrial Fibrillation. N Engl J Med. 2023;389(15):1380–9.

Steinhubl SR, et al. Effect of a Home-Based Wearable Continuous ECG Monitoring Patch on Detection of Undiagnosed Atrial Fibrillation: The mSToPS Randomized Clinical Trial. JAMA. 2018;320(2):146–55.

Svennberg E, et al. Clinical outcomes in systematic screening for atrial fibrillation (STROKESTOP): a multicentre, parallel group, unmasked, randomised controlled trial. Lancet. 2021;398(10310):1498–506.

Yang MU, et al. Automated diagnosis of atrial fibrillation using ECG component-aware transformer. Comput Biol Med. 2022;150:106115.

Yao X, et al. Effect of adherence to oral anticoagulants on risk of stroke and major bleeding among patients with atrial fibrillation. J Am Heart Assoc. 2016a;5(2):e003074.

Yao X, et al. Effectiveness and safety of dabigatran, rivaroxaban, and apixaban versus warfarin in nonvalvular atrial fibrillation. J Am Heart Assoc. 2016b;5(6).

Yao X, et al. Machine learning identified subset of AF patients who benefit from left atrial appendage occlusion versus NOAC. J Am Coll Cardiol. 2022;79(9_Supplement):29.

Yao X, et al. Realtime diagnosis from electrocardiogram artificial intelligence-guided screening for atrial fibrillation with long follow-up (REGAL): Rationale and design of a pragmatic, decentralized, randomized controlled trial. Am Heart J. 2024a;267:62–9.

Yao X, et al. Ten-year trend of oral anticoagulation use in postoperative and nonpostoperative atrial fibrillation in routine clinical practice. J Am Heart Assoc. 2024b;13(13):e035708.

Author information

Contributions

X.Y. and P.A.N. wrote the manuscript text; P.A.N. prepared Fig. 1; X.Y. prepared Table 1. All authors reviewed and revised the manuscript.

Ethics declarations

Human and Animal Rights and Informed Consent

This article does not contain any studies with human or animal subjects performed by any of the authors.

Competing Interests

P.A.N. and Mayo Clinic have filed patents related to the application of AI to the ECG for diagnosis and risk stratification.

About this article

Cite this article

Yao, X., Noseworthy, P.A. Artificial Intelligence Across the Continuum of Atrial Fibrillation Screening, Diagnosis, and Treatment. Curr Cardiovasc Risk Rep (2024). https://doi.org/10.1007/s12170-024-00747-4
