Abstract

Accurate prediction of the water level helps prevent overexploitation of groundwater and supports the control of water resources. However, water level prediction is a highly dynamic and non-linear process that depends on complex factors. Developing models that predict water levels is therefore essential for optimizing water resources management in the reservoir. This work applies several supervised machine learning algorithms to predict water levels from their correlation with groundwater levels. The predictive models are Linear Regression (LR), Support Vector Machines (SVM), Gaussian Processes Regression (GPR), and Neural Network (NN). The study includes four scenarios: the first scenario (SC1) uses lag 1; the second (SC2) uses lag 1 and lag 2; the third (SC3) uses lag 1, lag 2, and lag 11; and the fourth (SC4) uses lag 1, lag 2, lag 11, and lag 12. These scenarios were determined using the autocorrelation function (ACF), and the lags represent months. The results showed that for SC1, SC2, and SC4, the GPR models performed well, with the highest R equal to 0.71 in SC1, 0.78 in SC2, and 0.73 in SC4 using the Matern 5/2 GPR model. For SC3, the Stepwise LR model gave a better result with an R of 0.79. It can be concluded that the Matern 5/2 GPR model is a reliable model for predicting water level, as it performed well in every scenario (except SC3) with a relatively fast training time. The NN model performed worse than the other three models, with the highest MAE and RMSE values and the lowest R in almost all four scenarios of input combinations. The results of this study serve as a benchmark for future water level prediction using GPR and LR with the four scenarios created.


Introduction

A dam is an artificial structure that stores water on a large scale and operates as a water reservoir (Maimunah et al. 2019). Dams are essential because some provide hydroelectric power production and river navigation for better water management. They also provide flood mitigation: a dam can store extra precipitation for future use (in the drought season) or release it under controlled conditions to replenish the irrigation water supply downstream. A further benefit of a dam reservoir is that it holds clean water, which provides freshwater for domestic and municipal purposes. The most significant influence on water levels is hydrology: precipitation, evaporation, and groundwater. Because it acts as a large water reserve beneath the Earth's surface (Famiglietti 2014), groundwater is a significant contributor to changes in the dam reservoir's water level. More than one-third of the water consumed comes from underground (Famiglietti 2014). However, climate change is expected to accelerate the water cycle, as rising global temperature increases the evaporation rate worldwide (Trenberth 2011). The impacts of higher evaporation and precipitation rates can be seen in many Malaysian areas, and they are estimated to increase in intensity over this century as the climate warms (Trenberth 2011). As a result, climate change-related unstable factors have an impact on groundwater systems. Climate change can affect the amount of soil infiltration and deeper percolation, and hence groundwater recharge. At the same time, rising temperatures increase evaporative demand over land (Berg et al. 2016), limiting the amount of water available to refill groundwater (Wu et al. 2020).
In addition, operating a reservoir usually affects the characteristics of surface flow and its relation to groundwater. For example, changes in feeding-discharge relations trigger changes in the water table level, particularly through changes in the amount of water used by humankind (ÇELİK, R. 2018). The direct effect of dams on groundwater is expected to occur between upstream and downstream (ÇELİK, R. 2018). As a result, reliable prediction models and an understanding of the impact of groundwater factors on water levels have become critical for executing water supply management systems and maintaining water use quality. More accurate prediction of the water level will help prevent overexploitation of groundwater and help control water resources. However, water level forecasting is a highly dynamic and non-linear process that depends on several complex factors (Chang and Chang 2006). Developing models that predict water levels accurately is therefore essential for optimizing water resources management in the reservoir.

Researchers have used numerous mathematical methods in recent decades to estimate the water level. These methodologies have been developed to match the complexity of hydrological factors, given the multiplicity of input and structural parameters, multiple calibrations, and long-term efficiency (Karami et al. 2018). Over the last few years, more recent studies have used soft computing models for water-related prediction, especially of water quality (Kaya et al. 2018) and lake level (Gong et al. 2016). Machine Learning (ML) algorithms have been used extensively for this problem because ML shifts from a knowledge-driven approach to a data-driven approach that learns from large amounts of data and draws conclusions from the results. Artificial neural networks (ANN) and support vector machines (SVM) have been popular since the 1990s and are primary tools in ML. ANN has also been used in numerous studies of hydrological factors, such as groundwater forecasting (Karami et al. 2018; Kaya et al. 2018; Gong et al. 2016; Daliakopoulos et al. 2005), rainfall forecasting (Hung et al. 2009; Canchala et al. 2020; Lee et al. 2018) and streamflow forecasting (Adhikary et al. 2018; Reza et al. 2018; Wang et al. 2006). One approach using ML algorithms for reservoir water level prediction was presented by Siegelmann and Sontag (1995): a study using ANN and a neuro-fuzzy system for short-term water level prediction, which showed that both algorithms performed well and were accurate compared to linear statistical models. Chang and Chang (2006) developed two adaptive network-based fuzzy inference system (ANFIS) models with an emphasis on the input variables: both used typhoon and rainfall data, one with human decision as an input and one without. The comparison clearly showed superior performance with human decision as an input. It also showed that ANFIS gave high precision and reliability for the reservoir water level in the following three hours.
The time series (TS) regression model was also considered by Khai et al. (2019) alongside SVM for estimating the daily water level at the Klang Gate dam, using historical inflows and water level as input. The TS model provided a better result than the SVM. In conclusion, the results differ depending on the input-output variables and the ML algorithms used. Most ML models can be applied to hydrological events.

This study expands on a previous study (Sapitang et al. 2020), which aimed to predict the reservoir water level but with different input variables. The previous study comprised several scenarios and time horizons with four learning algorithms: Boosted Decision Tree Regression (BDTR), Decision Forest Regression (DFR), Bayesian Linear Regression (BLR) and Neural Network Regression (NNR). Its results show that BLR and BDTR outperformed the other two models. The reservoir water level can be predicted from various predictive variables (input data) (Phanindra et al. 2020) because it is affected by other hydrological factors. Hence, the purpose of this study is to predict the monthly reservoir water level by finding the relationship between monthly historical groundwater level and water level as inputs, using different machine learning algorithms, namely Linear Regression (LR), Support Vector Machines (SVM), Gaussian Processes Regression (GPR), and Neural Network (NN), to find the model that best mimics the actual water level values and the best input combination. The autocorrelation function (ACF) is used to decide on the best combination of lags of groundwater and water level. The model performances are compared using various statistical performance indices. The motivation of this study is the need to control the release and storage of water for better water management at the dam reservoir and for groundwater recharge. The novelty of the water level prediction lies in comparing the machine learning models. Hence, this study can serve modellers and decision-makers in addressing site-specific and real-time water level prediction and management issues.

Methodology

Input Selection using Autocorrelation Function (ACF)

One of the main tasks in Machine Learning (ML) is to choose the input parameters that affect the output parameters. This requires focus and a thorough interpretation of the underlying physical mechanism, based on causal factors and on statistical analysis of potential inputs and outputs (Ahmed et al. 2019). In this research, the autocorrelation function (ACF) is used for input selection. The ACF is the degree of correlation of the same variable between two successive time intervals: it compares the lagged version of a variable's value in a time series with the original version (Berne et al. 1966). The standard ACF estimator in Eq. 1 is one of the most widely studied in the literature and commonly used in computer programmes (Zieba and Ramza 2011).

where \({r}_{k}\) is the estimator at lag \(k\), \({x}_{i}\) is the analyzed data, \(\overline{x}\) is the mean of the data and \(n\) is the sample size. The main applications of the ACF are to assess statistical correlations between observations in a single data series and to test the validity of models. A significant advantage of the ACF is that it measures the level of linear dependency between outcomes of a time series separated by a lag \(k\) (Parmar and Kinjal Mistree 2017).
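The estimator described above can be sketched in a few lines. This is a minimal illustration that assumes the common textbook form \({r}_{k}=\sum_{i=1}^{n-k}({x}_{i}-\overline{x})({x}_{i+k}-\overline{x})/\sum_{i=1}^{n}{({x}_{i}-\overline{x})}^{2}\); the function name `acf` and the synthetic 12-month series are hypothetical and are not the study's data.

```python
import numpy as np

def acf(x, max_lag):
    """Sample autocorrelation r_k for k = 0..max_lag (assumed standard estimator)."""
    x = np.asarray(x, dtype=float)
    n = x.size
    d = x - x.mean()                      # deviations from the series mean
    denom = np.sum(d ** 2)                # lag-0 sum of squares
    return np.array([np.sum(d[: n - k] * d[k:]) / denom
                     for k in range(max_lag + 1)])

# Hypothetical monthly series with a 12-month cycle (illustration only).
months = np.arange(60)
series = 10 + 2 * np.cos(2 * np.pi * months / 12)
r = acf(series, 12)
```

For a series like this one, the autocorrelation is strongly negative near lag 6 and high again near lag 12, which is the kind of seasonal dependency that motivates choosing lags such as 1, 2, 11 and 12.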

Figure 1a and 1b show the lags for water level and groundwater level, respectively. Both show actual data corresponding to 60 lags, covering the 60 months from January 2013 to December 2017. The sixty lags correspond to the same number of months: lags 1 to 12 correspond to 2013, lags 13 to 24 to 2014, lags 25 to 36 to 2015, lags 37 to 48 to 2016, and lags 49 to 60 to 2017. Lags 1, 13, 25, 37, and 49 correspond to January; lags 2, 14, 26, 38, and 50 to February; lags 11, 23, 35, 47, and 59 to November; and lags 12, 24, 36, 48, and 60 to December. For water level (Fig. 1a), January, February, November, and December show dependencies each year. Several years also show dependencies on March and October values, while other months show no such dependencies. Hence, in several years the rise in water level begins in October and ends in March. Groundwater level (Fig. 1b) shows dependencies in January, February, November, and December.

Following the ACF results, four different scenarios were defined for predicting the monthly water level, listed in Eqs. 2 to 5.

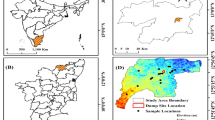

where \({WL}_{t}\) is the historical water level at month t, which is the output for all scenarios. As input, Eq. 2, \({GW}_{t}+{\left(GW+WL\right)}_{t-1}\), represents lag 1 (starting January) as scenario 1 (SC1); Eq. 3, \({GW}_{t}+{\left(GW+WL\right)}_{t-1}+{\left(GW+WL\right)}_{t-2}\), represents lags 1 and 2 (starting January, February) as scenario 2 (SC2); Eq. 4, \({GW}_{t}+{\left(GW+WL\right)}_{t-1}+{\left(GW+WL\right)}_{t-2}+{\left(GW+WL\right)}_{t-11}\), represents lags 1, 2, and 11 (starting January, February, November) as scenario 3 (SC3); and Eq. 5, \({GW}_{t}+{\left(GW+WL\right)}_{t-1}+{\left(GW+WL\right)}_{t-2}+{\left(GW+WL\right)}_{t-11}+{\left(GW+WL\right)}_{t-12}\), represents lags 1, 2, 11, and 12 (starting January, February, November, December) as scenario 4 (SC4). All scenarios use the historical groundwater level and water level. The primary statistical parameters of the inputs and output are presented in Table 1. The data are secondary data: a total of 100 monthly historical records of groundwater level, based on an average over 10 observation boreholes (OH), and of water level, from 2012 until 2019 in Terengganu, Malaysia.
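The four input combinations can be assembled as lagged feature matrices. The sketch below assumes one reading of Eqs. 2 to 5, namely that each scenario's inputs are \({GW}_{t}\) plus the groundwater and water level values at every listed lag, with \({WL}_{t}\) as the target. The helper `build_scenario` and the synthetic `gw` and `wl` series are hypothetical and stand in for the study's data.

```python
import numpy as np

# Lags per scenario, as described in the text.
SCENARIO_LAGS = {"SC1": [1], "SC2": [1, 2], "SC3": [1, 2, 11], "SC4": [1, 2, 11, 12]}

def build_scenario(gw, wl, lags):
    """Return (X, y): X holds GW_t plus GW and WL at each lag; y is WL_t."""
    gw = np.asarray(gw, dtype=float)
    wl = np.asarray(wl, dtype=float)
    start = max(lags)                     # first month with every lag available
    t = np.arange(start, len(wl))
    cols = [gw[t]]                        # current-month groundwater level
    for k in lags:
        cols.append(gw[t - k])            # groundwater level k months earlier
        cols.append(wl[t - k])            # water level k months earlier
    return np.column_stack(cols), wl[t]

# Hypothetical 100-month series (illustration only, not the study's data).
rng = np.random.default_rng(0)
gw = 5.0 + rng.normal(scale=0.5, size=100)
wl = 140.0 + rng.normal(scale=1.0, size=100)

X1, y1 = build_scenario(gw, wl, SCENARIO_LAGS["SC1"])
X4, y4 = build_scenario(gw, wl, SCENARIO_LAGS["SC4"])
```

Under this reading, longer lags cost rows: with 100 months, SC1 leaves 99 usable samples with 3 inputs, while SC4 leaves 88 samples with 9 inputs.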

Machine Learning Algorithms

Various machine learning algorithms exist, as mentioned in the introduction. This study uses Linear Regression (LR), Support Vector Machines (SVM), Gaussian Processes Regression (GPR), and Neural Network (NN). LR is a statistical model that describes the relationships among various factors (Caral et al. 2019). The basic principle of linear regression is depicted in Eq. 6, which shows the relationship between a dependent (response) variable \(y\) and one or more independent (predictor) variables \({x}_{1},\dots ,{x}_{n}\) (Browne 1975). \({\beta }_{0}\) is the \(y\)-intercept, \({\beta }_{1}\) is the slope (or regression coefficient) and \(\varepsilon\) is the error. Three types of regression were used in this study: Linear Regression (LR), Robust Linear Regression (RLR) and Stepwise Linear Regression (SLR). RLR is less susceptible to outliers than standard LR. In standard LR, if the distribution of errors is asymmetric or prone to outliers, the model assumptions are invalidated, and parameter estimates, confidence intervals and other derived statistics become unreliable (Hampel et al. 2011). RLR, which assigns a weight to each data point using an iteratively reweighted least squares method, is less sensitive than standard LR to substantial changes in small parts of the data (Hampel et al. 2011; Ronchetti et al. 1997). SLR is a technique for systematically adding and removing terms from a multilinear model based on their statistical significance in a regression. The technique begins with an initial model and then compares the explanatory power of incrementally larger and smaller models (Zhou et al. 2012).
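The difference between standard and robust linear regression can be illustrated with a short sketch of iteratively reweighted least squares. The Huber weight function, the tuning constant `c = 1.345`, the MAD-based scale estimate, and the synthetic data with three injected outliers are all assumptions made for illustration; the study does not state which weight function its RLR model used.

```python
import numpy as np

def fit_ols(X, y):
    """Ordinary least squares for y = b0 + b1*x1 + ... (intercept added here)."""
    A = np.column_stack([np.ones(len(y)), X])
    beta, *_ = np.linalg.lstsq(A, y, rcond=None)
    return beta

def fit_robust(X, y, c=1.345, n_iter=20):
    """Iteratively reweighted least squares with Huber weights (assumed choice)."""
    A = np.column_stack([np.ones(len(y)), X])
    w = np.ones(len(y))                   # first pass is plain OLS
    for _ in range(n_iter):
        sw = np.sqrt(w)
        beta, *_ = np.linalg.lstsq(A * sw[:, None], y * sw, rcond=None)
        resid = y - A @ beta
        # Robust scale from the median absolute deviation of the residuals.
        scale = np.median(np.abs(resid - np.median(resid))) / 0.6745
        u = np.abs(resid) / (c * max(scale, 1e-12))
        w = np.where(u <= 1.0, 1.0, 1.0 / u)   # down-weight large residuals
    return beta

# Hypothetical data: true line y = 1 + 2x, with three gross outliers.
rng = np.random.default_rng(0)
x = np.linspace(0.0, 10.0, 50)
y = 1.0 + 2.0 * x + rng.normal(scale=0.1, size=50)
y[-3:] += 50.0
true_beta = np.array([1.0, 2.0])

beta_ols = fit_ols(x, y)
beta_rob = fit_robust(x, y)
```

In this example the three outliers pull the ordinary least squares slope well away from 2, while the reweighted fit stays much closer to the true coefficients.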

An SVM is a mathematical entity that maximizes a particular mathematical function (kernel) with respect to a given data collection, as shown in Fig. 2. The support vectors (dots) define the hyperplane that maximizes the margin between two classes. The method finds the best hyperplane for patterns that can be separated linearly and, by transforming the original data, maps other patterns into a new space (Phanindra et al. 2020). The four basic SVM concepts are the separating hyperplane, the maximum margin hyperplane, the soft margin, and the kernel function (Meyer et al. 2003; Noble 2006). A major drawback of the basic SVM classifier is that it handles only binary classification; multi-class problems require training multiple one-versus-all classifiers. Nevertheless, SVM has proved to solve problems quite fast, even when given thousands of data sets (Noble 2006). Another disadvantage is that SVM requires plenty of training data to estimate the underlying function, and its accuracy needs to be improved (Gao et al. 2018). The three types of SVM regression used in this study are Fine Gaussian SVM (FG-SVM), Medium Gaussian SVM (MG-SVM) and Coarse Gaussian SVM (CG-SVM). They differ in how finely they discriminate the data: FG-SVM makes finely detailed distinctions, MG-SVM makes fewer distinctions than FG-SVM, and CG-SVM makes coarse distinctions (Ali et al. 2019).

A GPR is expressive, interpretable, avoids over-fitting, and has shown impressive predictive performance in many thorough empirical comparisons (Rasmussen 1997). Gaussian process regression developed out of neural network research, from the behaviour of networks as the number of hidden units approaches infinity, and this technique became the cornerstone of subsequent Gaussian process models (Neal 2012; Wilson et al. 2011). It is a nonparametric kernel-based probabilistic model in which any finite set of random variables has a multivariate distribution (Gao et al. 2018). Three types of GPR were used in this study: Squared Exponential GPR (SE-GPR), Matern 5/2 GPR (M5/2-GPR) and Rational Quadratic GPR (RQ-GPR). SE-GPR is the function space representation of a radial basis function regression model with an infinite number of basis functions. One benefit of this algorithm is that it is unlikely to cause substantial errors when dealing with massive data sets. The M5/2-GPR kernel is obtained from Fourier transforms of the spectral densities of the stationary RBF kernel, whereas the RQ-GPR kernel can represent data at various scales (Zhang et al. 2018). The covariance (kernel) function is a fundamental component of GPR because it encodes the similarity among data points; Eqs. 7 to 9 describe the covariance function of each algorithm used in this study (Gao et al. 2018).
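For reference, the three covariance functions can be written as functions of the distance \(r\) between two inputs. The sketch below uses the commonly cited textbook forms and is an assumption about what Eqs. 7 to 9 contain; the parameter names `ell` (length scale), `sf` (signal standard deviation) and `alpha` (scale-mixture parameter) are illustrative.

```python
import numpy as np

def sq_exp(r, ell=1.0, sf=1.0):
    """Squared exponential kernel: sf^2 * exp(-r^2 / (2 ell^2))."""
    return sf ** 2 * np.exp(-0.5 * (r / ell) ** 2)

def matern52(r, ell=1.0, sf=1.0):
    """Matern 5/2 kernel: sf^2 * (1 + s + s^2/3) * exp(-s), s = sqrt(5) r / ell."""
    s = np.sqrt(5.0) * r / ell
    return sf ** 2 * (1.0 + s + s ** 2 / 3.0) * np.exp(-s)

def rat_quad(r, ell=1.0, sf=1.0, alpha=1.0):
    """Rational quadratic kernel: sf^2 * (1 + r^2 / (2 alpha ell^2))^(-alpha)."""
    return sf ** 2 * (1.0 + r ** 2 / (2.0 * alpha * ell ** 2)) ** (-alpha)

r = np.linspace(0.0, 3.0, 7)              # example distances between inputs
k_se, k_m52, k_rq = sq_exp(r), matern52(r), rat_quad(r)
```

All three equal `sf**2` at zero distance and decay as points move apart. The rational quadratic kernel approaches the squared exponential as `alpha` grows, which is one way to see why it can represent variation at several length scales.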

NN regression is a form of artificial intelligence that imitates the function of the human brain and nervous system. It is widely used because of its capability to learn the mapping from a given input to an output and so simulate large-scale complex non-linear problems (Chau et al. 2005; Rumelhart et al. 1994). It can be defined as a chain of linear operations interleaved with non-linear activation functions (Tan et al. 2017). The network is organized as an input layer, a hidden layer, and an output layer (Damian 2019), as shown in Fig. 3. Even though an NN is a flexible and efficient mapping tool, incorrectly allocated weights and biases can result in local convergence (Chau et al. 2005). Three types of NN regression were used in this study: Narrow NN (N-NN), Medium NN (M-NN), and Wide NN (W-NN). The ability of an NN model to readily capture interactions and nonlinearities may also be a disadvantage, because it may result in overfitting the training data set and poor performance on external test data sets (Tu 1996).
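The "chain of linear operations interleaved with non-linear activations" can be made concrete with a single-hidden-layer forward pass. This is only a structural sketch: the ReLU activation, the random untrained weights, and the hidden-layer widths used for the narrow, medium and wide variants are hypothetical, since the study does not report them here.

```python
import numpy as np

def init_mlp(n_in, n_hidden, rng):
    """Random (untrained) weights for input -> hidden -> output."""
    return {
        "W1": rng.normal(scale=0.1, size=(n_in, n_hidden)),
        "b1": np.zeros(n_hidden),
        "W2": rng.normal(scale=0.1, size=(n_hidden, 1)),
        "b2": np.zeros(1),
    }

def forward(params, X):
    """Linear map, ReLU non-linearity, then a linear output for regression."""
    h = np.maximum(0.0, X @ params["W1"] + params["b1"])
    return (h @ params["W2"] + params["b2"]).ravel()

rng = np.random.default_rng(0)
X = rng.normal(size=(5, 3))               # 5 samples, 3 inputs (as in an SC1-style setup)

# Hypothetical hidden-layer widths for the three variants.
WIDTHS = {"N-NN": 10, "M-NN": 25, "W-NN": 100}
outputs = {name: forward(init_mlp(3, width, rng), X) for name, width in WIDTHS.items()}
```

A wider hidden layer adds parameters and flexibility, which is also what raises the overfitting risk noted above when only about 100 monthly samples are available.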

Model Performance Indicators

Model performance indicators were used to assess how successfully a trained model replicates the actual values of the output parameter.

i) Mean Absolute Error, MAE (Hyndman and Koehler 2006), signifies the degree of absolute error between the actual and predicted data, as in Eq. 10.

$$MAE=\frac{1}{n}\sum _{i=1}^{n}\left|{MSL}_{p}-{MSL}_{o}\right|$$(10)

ii) Root Mean Square Error, RMSE (Hyndman and Koehler 2006), measures the distance between the actual and predicted values for each model, that is, how spread out the residuals are, as in Eq. 11.

$$RMSE=\sqrt{\frac{\sum _{i=1}^{n}{\left({MSL}_{p}-{MSL}_{o}\right)}^{2}}{n}}$$(11)

iii) Coefficient of determination, R (Nagelkerke 1991), demonstrates the predicting model's performance, where zero means the model is random while 1 means a perfect fit. As written in Eq. 12, it takes the form of the correlation between the observed and predicted values.

$$R=\frac{\sum _{i=1}^{n}\left({MSL}_{o}-{\overline{MSL}}_{o}\right)\left({MSL}_{p}-{\overline{MSL}}_{p}\right)}{\sqrt{\sum _{i=1}^{n}{\left({MSL}_{o}-{\overline{MSL}}_{o}\right)}^{2}\sum _{i=1}^{n}{\left({MSL}_{p}-{\overline{MSL}}_{p}\right)}^{2}}}$$(12)

Here the subscripts \(p\) and \(o\) denote the predicted and observed (actual) values, an overbar denotes the mean, and \(n\) is the number of data points.

In short, a model performs better when the value of R is close to one and when the values of RMSE and MAE are close to zero (Cheng et al. 2015).
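The three indicators in Eqs. 10 to 12 translate directly into code. The sketch below is a minimal illustration; the function names and the four-point example arrays are hypothetical and are not values from the study.

```python
import numpy as np

def mae(obs, pred):
    """Mean absolute error (Eq. 10)."""
    return np.mean(np.abs(pred - obs))

def rmse(obs, pred):
    """Root mean square error (Eq. 11)."""
    return np.sqrt(np.mean((pred - obs) ** 2))

def corr_r(obs, pred):
    """R in the form of Eq. 12: correlation of observed and predicted values."""
    d_obs = obs - obs.mean()
    d_pred = pred - pred.mean()
    return np.sum(d_obs * d_pred) / np.sqrt(np.sum(d_obs ** 2) * np.sum(d_pred ** 2))

# Hypothetical observed and predicted values (illustration only).
obs = np.array([1.0, 2.0, 3.0, 4.0])
pred = np.array([1.5, 2.5, 2.5, 4.5])

scores = {"MAE": mae(obs, pred), "RMSE": rmse(obs, pred), "R": corr_r(obs, pred)}
```

Because every error in this toy example has magnitude 0.5, MAE and RMSE coincide; in general RMSE is at least as large as MAE and penalizes large individual errors more heavily.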

Uncertainty Analysis

Uncertainty analysis (UA) aims to quantify the variation in output caused by input variability. It is performed to identify the range of potential outcomes given the input uncertainty and to investigate the effect of lack of knowledge or errors in the model. The measure considered is the percentage of measured data bracketed by the 95% Prediction Uncertainty (95PPU), as defined by Abbaspour et al. (2007). The 95PPU is calculated at the 2.5% (XL) and 97.5% (XU) levels of an output variable, which discards the 5% of very poor simulations.

where k represents the total number of actual data points in the test phase. Based on Eq. 13, the value of "Bracketed by 95PPU" is highest (100%) when all measured data in the testing stage fall between XL and XU. If the measured data are of outstanding quality, 80% or more of them should be within the 95PPU. If data are lacking in a few areas, 50% of data within the 95PPU will suffice (Noori et al. 2010). The d-factor is used to approximate the average width of the uncertainty band, with a value less than one indicating the best value (Noori et al. 2010), as presented in Eq. 14.

where \({\sigma }_{x}\) represents the standard deviation of the actual data x and \({\overline{d}}_{x}\) is the average distance between the upper and lower bands (Noori et al. 2015), as in Eq. 15.
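The two uncertainty measures can be sketched from an ensemble of simulated outputs. The sketch assumes that the bracketed percentage is the share of observations lying between the 2.5th and 97.5th percentiles, and that the d-factor is the mean band width divided by the standard deviation of the observations. How the ensemble is generated is not described here, so the `ensemble` array is hypothetical, as is the use of the sample standard deviation (`ddof=1`).

```python
import numpy as np

def ppu95(obs, ensemble):
    """Return (% of observations bracketed by the 95PPU, d-factor).

    obs:      shape (k,)    observed values in the test phase
    ensemble: shape (m, k)  m simulated series for the same k time steps
    """
    x_l = np.percentile(ensemble, 2.5, axis=0)    # lower band, XL
    x_u = np.percentile(ensemble, 97.5, axis=0)   # upper band, XU
    bracketed = 100.0 * np.mean((obs >= x_l) & (obs <= x_u))
    d_bar = np.mean(x_u - x_l)                    # average band width
    d_factor = d_bar / np.std(obs, ddof=1)        # assumed: sample std of obs
    return bracketed, d_factor

# Hypothetical example: simulations spread evenly within +/-1 of each observation.
obs = np.array([0.0, 10.0, 20.0, 30.0, 40.0])
offsets = np.linspace(-1.0, 1.0, 201)
ensemble = obs[None, :] + offsets[:, None]

bracketed, d_factor = ppu95(obs, ensemble)
```

A narrow band that still brackets most observations gives a high bracketed percentage together with a small d-factor, which is the combination the text describes as desirable.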

Figure 4 depicts the study model development flow diagram.

Results and discussion

This study aimed to estimate the water level at time t as closely as possible to the actual values, using various ML algorithms for all scenarios. Mean Absolute Error (MAE), Root Mean Square Error (RMSE), and Coefficient of Determination (R) were the indices used to validate the performance of each model. The detailed findings are described in the subsequent sections.

Models Performance for Each Scenario

Tables 2, 3, 4 and 5 summarise the metrics of each machine learning algorithm for each scenario. The evaluations give different results, reflecting how well each model can learn the correlation between the input and output variables. Column 1 presents the scenario, column 2 the model used, columns 3 to 4 the model performance, column 5 the training time for each model, and column 6 the three best models. As summarised in Tables 2, 3, 4 and 5, performance varies from model to model.

For SC1, the Gaussian Processes Regression models perform well in predicting the water level, with an average R of 0.70 and RMSE and MAE values close to 0. The results show that Matern 5/2 outperformed the other models, with MAE (1.0304), RMSE (1.499), and R (0.71) and a training time of 0.98308 s. Compared with the other models, the GPR models perform better in terms of R. The closer R is to 1, the better the model's accuracy, and the closer the MAE and RMSE are to 0, the more accurate and reliable the model.

For SC2 in Table 3, R shows that performance increases. The GPR models are still the best models for predicting the water level in SC2, since an R-value close to 1 indicates a perfect fit. The Matern 5/2 GPR model gives the best performance in predicting water level compared to the other ML algorithms. In terms of R, Matern 5/2 GPR has the highest value for training (MAE, RMSE, R = 0.87603, 1.3394, 0.78) with a training time of 0.67311 s, followed by Squared Exponential GPR (0.89877, 1.3436, 0.7) with a training time of 0.73902 s and Rational Quadratic GPR (0.89908, 1.3459, 0.77) with a training time of 0.80871 s.

For SC3 in Table 4, the three model families that best mimic the actual values are Linear Regression, Gaussian Processes Regression, and Support Vector Machines. All three give acceptable values of R, but Stepwise Linear Regression outperforms the other two, with the best result (MAE, RMSE, R) of 0.92367, 1.2701, and 0.79 and a training time of 12.166 s. By comparison, the GPR results, Exponential GPR (1.0291, 1.5301, 0.69) and Rational Quadratic GPR (1.049, 1.602, 0.66), are much lower than those of the Linear Regression models.

Lastly, for SC4 in Table 5, the Gaussian Processes Regression models again outperformed the other models, with the best being Matern 5/2 GPR (1.0106, 1.4078, 0.73), followed by Rational Quadratic GPR (1.0166, 1.4343, 0.72) and Squared Exponential GPR (1.0169, 1.435, 0.72). It can be concluded that the Matern 5/2 GPR model is the most reliable model for predicting water level, as it performed well in every scenario (except SC3) with a relatively fast training time. The NN model performed the worst compared to the other three models because it has the highest MAE and RMSE values and the lowest R-value in almost all four scenarios of input combinations. It mostly shows negative values of R, indicating that the models overfit the training data set and perform poorly on external data sets.

A response plot shows the predicted response against the observations, with the errors drawn as vertical lines. Figure 5a to 5d shows the response plots of the actual and predicted values. The red lines indicate the error between the actual (blue dot) and predicted (orange dot) values. The error shows a comparable pattern for each scenario (SC1, SC2, SC3, and SC4), which is consistent with their R values lying between 0.71 and 0.79.

A predicted versus actual plot can be used to evaluate model performance. The model's predicted response is plotted against the actual, true response. An ideal regression model would have a predicted response identical to the observed values, so all of the points would lie on the diagonal line. As this does not occur in practice, the target is for the points to be as close to the diagonal line as possible and scattered roughly symmetrically around it. Figures 6a to 6d show the scatter plot of each scenario's best model. In SC2 and SC3 the points lie closer to the diagonal line than in SC1 and SC4. In Fig. 6b, the Matern 5/2 GPR model for SC2 gives a good fit in predicting the water level in the reservoir. Similar performance is seen for SC3 when Stepwise Linear is used. These findings suggest that, with the SC2 and SC3 inputs, both models can predict changes in water levels.

Fig. 7a to 7d illustrates the residuals plots of the four best-fitted models from Tables 2, 3, 4 and 5. A fitted model's residuals are defined as the discrepancies between the response data and the fit to the response data at each predicted value (residual = data - fit). If the residuals behave randomly, the model adequately matches the data. However, if the residuals exhibit a systematic trend, the model does not adequately match the data. Figure 7 shows residuals that behave randomly, indicating that the models describe the data well.

Uncertainty Analysis of Best Models

The best models, Matern 5/2 GPR for SC1, SC2, and SC4 and Stepwise Linear for SC3, were assessed using the 95PPU and the d-factor. Table 6 presents the UA results of the best model for each scenario in predicting water level.

Table 6 shows that the 95PPU brackets 96.69%, 96.42%, and 95.85% of the data for the best GPR in SC1, SC2, and SC4, and 95.89% of the data for the best LR in SC3. The d-factor has values of 0.026410, 0.028430, 0.036327 and 0.035352 for SC1, SC2, SC3, and SC4, respectively. According to the uncertainty analysis results, the suggested models have high precision in predicting the water level. All 95PPU values for the four scenarios were greater than 80%, and the d-factor values were highly satisfactory, falling below 1.

Taylor Diagram

A Taylor diagram is a method of plotting three statistics on a 2-D graph that illustrates how closely a pattern matches actual data in terms of their correlation, their root-mean-square (RMS) difference and the variance ratio (Taylor 2001). Equation 16 shows the theoretical basis for the diagram, where four statistics, the correlation coefficient \(R\), the RMS difference \({E}^{\prime}\), and the standard deviations \({\sigma }_{f}\) and \({\sigma }_{r}\), are the key to constructing the Taylor diagram (Taylor 2001). These statistics and direct visual comparisons make it simple to distinguish how much of the overall RMS difference in patterns is due to variance and how much is due to poor pattern correlation. Figure 8 shows the comparison of the simulated values, where GPR denotes Gaussian Processes Regression, LR denotes Linear Regression, SVM denotes Support Vector Machines, NN denotes Neural Network and Actual denotes the observed monthly water level. From the diagram, it can be concluded that GPR shows the closest correspondence between the modelled and observed behaviour, except for SC2, where the LR models were in relatively good agreement with observations.
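The relation underlying the Taylor diagram can be checked numerically. The sketch assumes the identity given by Taylor (2001), \({E}^{\prime 2}={\sigma }_{f}^{2}+{\sigma }_{r}^{2}-2{\sigma }_{f}{\sigma }_{r}R\), where \({E}^{\prime}\) is the centred RMS difference and the standard deviations are population values. The function name and the synthetic forecast and reference series are hypothetical.

```python
import numpy as np

def taylor_stats(f, r):
    """Return (R, E', sigma_f, sigma_r) for a forecast f and a reference r."""
    f = np.asarray(f, dtype=float)
    r = np.asarray(r, dtype=float)
    df, dr = f - f.mean(), r - r.mean()
    sigma_f, sigma_r = f.std(), r.std()           # population standard deviations
    corr = np.mean(df * dr) / (sigma_f * sigma_r)
    e_prime = np.sqrt(np.mean((df - dr) ** 2))    # centred RMS difference
    return corr, e_prime, sigma_f, sigma_r

# Hypothetical reference (observed) and forecast (modelled) series.
rng = np.random.default_rng(0)
reference = 140.0 + rng.normal(scale=2.0, size=100)
forecast = reference + rng.normal(scale=1.0, size=100)

corr, e_prime, sigma_f, sigma_r = taylor_stats(forecast, reference)
lhs = e_prime ** 2
rhs = sigma_f ** 2 + sigma_r ** 2 - 2.0 * sigma_f * sigma_r * corr
```

Because this has the form of the law of cosines, the three statistics can be drawn on one polar plot: the radius is a standard deviation, the angle encodes the correlation, and the distance to the reference point is the centred RMS difference.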

Conclusions

This study focuses on predicting water levels from groundwater level data under different input scenarios, using ML algorithms, namely Linear Regression (LR), Support Vector Machines (SVM), Gaussian Processes Regression (GPR), and Neural Network (NN), to identify the optimal prediction method. The autocorrelation function (ACF) was used to determine the input scenarios based on the two monthly historical variables (groundwater and water level) for 100 monthly data sets from 2012 to 2019. From the ACF, four scenarios were created (using the lag behaviour), and each scenario was then run through the ML algorithms. The results showed that for SC1, SC2, and SC4, the GPR models performed well, with the highest R equal to 0.71 in SC1, 0.78 in SC2, and 0.73 in SC4 using the Matern 5/2 GPR model. For SC3, the Stepwise LR model gave a better result with an R of 0.79. It can be concluded that the Matern 5/2 GPR model is the most reliable model for predicting water level, as it performed well in every scenario (except SC3) with a relatively fast training time. The NN model performed worse than the other three models, with the highest MAE and RMSE values and the lowest R in almost all four scenarios of input combinations. The 95PPU and the Taylor diagram were also used to assess the uncertainty and to better understand the best models by comparing the observed and predicted values in terms of three statistics: correlation coefficient, RMSE and standard deviation. The uncertainty analysis results show that the suggested models have high precision in predicting the water level. All 95PPU values for the four scenarios were greater than 80%, and the d-factor values were highly satisfactory, falling below 1. The results of this study serve as a benchmark for future water level prediction using GPR and LR with the four scenarios created.
Hence, this study can assist modellers and decision-makers in addressing real-time water level prediction. Because many methods have been proposed to predict water level, further work can compare the performance and robustness of parametric and nonparametric methods under different scenarios and study the uncertainty of the identified models.

References

Aakash P, Kinjal Mistree MS (2017) Machine learning techniques for rainfall prediction: a review. Int Conf Innov Inf Embed Commun Syst Vol 3

Abbaspour KC, Yang J, Maximov I, Siber R, Bogner K, Mieleitner J, Zobrist J, Srinivasan R (2007) Modelling hydrology and water quality in the pre-alpine/alpine thur watershed using SWAT. J Hydrol 333(2–4):413–430. https://doi.org/10.1016/j.jhydrol.2006.09.014

Adhikary SK, Muttil N, Yilmaz AG (2018) Improving streamflow forecast using optimal rain gauge network-based input to artificial neural network models. Hydrol Res 49:1559–1577. https://doi.org/10.2166/nh.2017.108

Ahmed AN, Othman FB, Afan HA, Ibrahim RK, Fai CM, Hossain MS, Ehteram M, El-Shafie A (2019) Machine learning methods for better water quality prediction. J Hydrol 578:124084. https://doi.org/10.1016/j.jhydrol.2019.124084

Ali MZ, Shabbir MNSK, Liang X, Zhang Y, Hu T (2019) Machine learning-based fault diagnosis for single- and multi-faults in induction motors using measured stator currents and vibration signals. IEEE Trans Ind Appl 55(3):2378–2391. https://doi.org/10.1109/TIA.2019.2895797

Berg A, Findell K, Lintner B, Giannini A, Seneviratne SI, Van Den Hurk B, Lorenz R, Pitman A, Hagemann S, Meier A, Cheruy F, Ducharne A, Malyshev S, Milly PCD (2016) Land-atmosphere feedbacks amplify aridity increase over land under global warming. Nat Clim Chang 6(9):869–874. https://doi.org/10.1038/nclimate3029

Berne BJ, Boon JP, Rice SA (1966) On the calculation of autocorrelation functions of dynamical variables. J Chem Phys 45(4):1086–1096. https://doi.org/10.1063/1.1727719

Browne MW (1975) Predictive validity of a linear regression equation. Br J Math Stat Psychol 28(1):79–87. https://doi.org/10.1111/j.2044-8317.1975.tb00550.x

Canchala T, Alfonso-Morales W, Carvajal-Escobar Y, Cerón WL, Caicedo-Bravo E (2020) Monthly rainfall anomalies forecasting for southwestern colombia using artificial neural networks approaches. Water (Switzerland) 12(9):2628. https://doi.org/10.3390/w12092628

Caral C, Ece K, Arslan B, Akbulut A (2019) Benchmarking of regression algorithms and time series analysis techniques for sales forecasting. Balk J Electr Comput Eng 7(1):20–26. https://doi.org/10.17694/bajece.494920

ÇELİK R (2018) Impact of dams on groundwater static water level changes: a case study kralkızı and dicle dam watershed. Uluslararası Muhendis. Arastirma ve Gelistirme Derg 10(2):119–126. https://doi.org/10.29137/umagd.442483

Chang FJ, Chang YT (2006) Adaptive neuro-fuzzy inference system for prediction of water level in reservoir. Adv Water Resour 29(1):1–10. https://doi.org/10.1016/j.advwatres.2005.04.015

Chau KW, Wu CL, Li YS (2005) Comparison of several flood forecasting models in Yangtze River. J Hydrol Eng 10(6):485–491. https://doi.org/10.1061/(asce)1084-0699(2005)10:6(485)

Cheng CT, Feng ZK, Niu WJ, Liao SL (2015) Heuristic methods for reservoir monthly inflow forecasting: a case study of xinfengjiang reservoir in Pearl River, China. Water (Switzerland) 7(8):4477–4495. https://doi.org/10.3390/w7084477

Daliakopoulos IN, Coulibaly P, Tsanis IK (2005) Groundwater level forecasting using artificial neural networks. J Hydrol 309(1–4):229–240. https://doi.org/10.1016/j.jhydrol.2004.12.001

Damian DC (2019) A critical review on artificial intelligence models in hydrological forecasting how reliable are artificial intelligence Models. Int J Eng Tech Res 8:365–378.

Famiglietti JS (2014) The global groundwater crisis. Nat Clim Change 4(11):945–948. https://doi.org/10.1038/nclimate2425

Gao W, Karbasi M, Hasanipanah M, Zhang X, Guo J (2018) Developing GPR model for forecasting the rock fragmentation in surface mines. Eng Comput 34(2):339–345. https://doi.org/10.1007/s00366-017-0544-8

Gong Y, Zhang Y, Lan S, Wang H (2016) A comparative study of artificial neural networks, support vector machines and adaptive neuro fuzzy inference system for forecasting groundwater levels near Lake Okeechobee, Florida. Water Resour Manag 30(1):375–391. https://doi.org/10.1007/s11269-015-1167-8

Hampel FR, Ronchetti EM, Rousseeuw PJ, Stahel WA (2011) Robust statistics: the approach based on influence functions. John and Wiley Sons, Vol 196

Hung NQ, Babel MS, Weesakul S, Tripathi NK (2009) An artificial neural network model for rainfall forecasting in Bangkok, Thailand. Hydrol Earth Syst Sci 13(8):1413–1425. https://doi.org/10.5194/hess-13-1413-2009

Hyndman RJ, Koehler AB (2006) Another look at measures of forecast accuracy. Int J Forecast 22(4):679–688. https://doi.org/10.1016/j.ijforecast.2006.03.001

Khai WJ, Alraih M, Ahmed AN, Fai CM, El-Shafie A (2019) Daily forecasting of dam water levels using machine learning. Int J Civ Eng Technol 10(6):314–323

Karami H, Mousavi SF, Farzin S, Ehteram M, Singh VP, Kisi O (2018) Improved krill algorithm for reservoir operation. Water Resour Manag 32(10):3353–3372. https://doi.org/10.1007/s11269-018-1995-4

Kaya YZ, Üneş F, Demirci M (2018) Groundwater level prediction using artificial neural network and m5 tree models. Air and Water Components Environ Conf Proc 195–201. https://doi.org/10.24193/awc2018_23

Lee J, Kim CG, Lee JE, Kim NW, Kim H (2018) Application of artificial neural networks to rainfall forecasting in the Geum River Basin, Korea. Water (Switzerland) 10(10):1448. https://doi.org/10.3390/w10101448

Maimunah, Yeni M, Kumala D (2019) The influence of water level fluctuation reservoir stability of the earth dam. IOP Conference Series: Mater Sci Eng 506:012032. https://doi.org/10.1088/1757-899X/506/1/012032

Meyer D, Leisch F, Hornik K (2003) The support vector machine under test. Neurocomputing 55(1–2):169–186. https://doi.org/10.1016/S0925-2312(03)00431-4

Nagelkerke NJD (1991) A Note on a General Definition of the Coefficient of Determination. Biometrika 78(3):691–692

Neal RM (2012) Bayesian learning for neural networks, vol 118. Springer Science and Business Media

Noble WS (2006) What is a support vector machine? Nat Biotechnol 24(12):1565–1567. https://doi.org/10.1038/nbt1206-1565

Noori R, Hoshyaripour G, Ashrafi K, Araabi BN (2010) Uncertainty analysis of developed ANN and ANFIS models in prediction of carbon monoxide daily concentration. Atmos Environ 44(4):476–482. https://doi.org/10.1016/j.atmosenv.2009.11.005

Noori R, Yeh HD, Abbasi M, Kachoosangi FT, Moazami S (2015) Uncertainty analysis of support vector machine for online prediction of five-day biochemical oxygen demand. J Hydrol 527:833–843. https://doi.org/10.1016/j.jhydrol.2015.05.046

Phanindra BR, Pralhad RN, Raj AAB (2020) Machine learning based classification of ducted and non-ducted propeller type quadcopter. Int Conf Adv Comput Commun Syst 1296–1301. https://doi.org/10.1109/ICACCS48705.2020.9074307

Rasmussen CE (1997) Evaluation of gaussian processes and other methods for non-linear regression. Dissertation, University of Toronto, Canada

Reza M, Harun S, Askari M (2018) Streamflow forecasting in Bukit Merah watershed by using ARIMA and ANN. Portal J Tek Sipil 9(1). https://doi.org/10.30811/portal.v9i1.612

Ronchetti E, Field C, Blanchard W (1997) Robust linear model selection by cross-validation. J Am Stat Assoc 92(439):1017–1023. https://doi.org/10.1080/01621459.1997.10474057

Rumelhart DE, Widrow B, Lehr MA (1994) The basic ideas in neural networks. Commun ACM 37(3):87–93

Sapitang M, Ridwan WM, Kushiar KF, Ahmed AN, El-Shafie A (2020) Machine learning application in reservoir water level forecasting for sustainable hydropower generation strategy. Sustain 12 (15):6121. https://doi.org/10.3390/su12156121

Siegelmann HT, Sontag ED (1995) On the computational power of neural nets. J Comput Syst Sci 50(1):132–150. https://doi.org/10.1006/jcss.1995.1013

Tan LK, McLaughlin RA, Lim E, Abdul Aziz YF, Liew YM (2017) Fully automated segmentation of the left ventricle in cine cardiac MRI using neural network regression. J Magn Reson Imaging 48(1):140–152. https://doi.org/10.1002/jmri.25932

Taylor KE (2001) Summarizing multiple aspects of model performance in a single diagram. J Geophys Res 106(D7):7183–7192. https://doi.org/10.1029/2000JD900719

Trenberth KE (2011) Changes in precipitation with climate change. Clim Res 47(1–2):123–138. https://doi.org/10.3354/cr00953

Tu JV (1996) Advantages and disadvantages of using artificial neural networks versus logistic regression for predicting medical outcomes. J Clin Epidemiol 49(11):1225–1231. https://doi.org/10.1016/S0895-4356(96)00002-9

Wang W, Van Gelder PHAJM, Vrijling JK, Ma J (2006) Forecasting daily streamflow using hybrid ANN models. J Hydrol 324(1–4):383–399. https://doi.org/10.1016/j.jhydrol.2005.09.032

Wilson AG, Knowles DA, Ghahramani Z (2011) Gaussian Process Regression Networks. In: Proceedings of the 29th International Conference on Machine Learning, ICML 2012, pp 599–606

Wu WY, Lo MH, Wada Y, Famiglietti JS, Reager JT, Yeh PJF, Ducharne A, Yang ZL (2020) Divergent effects of climate change on future groundwater availability in key mid-latitude aquifers. Nat Commun 11(1):1–9.https://doi.org/10.1038/s41467-020-17581-y

Zhang N, Xiong J, Zhong J, Leatham K (2018) Gaussian process regression method for classification for high-dimensional data with limited samples. Int Conf Inf Sci Technol 358–363

Zieba A, Ramza P (2011) Standard deviation of the mean of autocorrelated observations estimated with the use of the autocorrelation function estimated from the data. Metrol Meas Syst 18(4):529–542. https://doi.org/10.2478/v10178-011-0052-x

Zhou N, Pierre JW, Trudnowski D (2012) A stepwise regression method for estimating dominant electromechanical modes. IEEE Trans Power Syst 27(2):1051–1059. https://doi.org/10.1109/TPWRS.2011.2172004

Acknowledgements

This research was supported by the Ministry of Education (MOE) through the Fundamental Research Grant Scheme (FRGS/1/2020/TK0/UNITEN/02/16).

Author information

Contributions

Data curation, Michelle Sapitang and Wanie M. Ridwan; Formal analysis, Michelle Sapitang and Wanie M. Ridwan; Methodology, Ali Najah Ahmed, Chow Ming Fai, and Ahmed El-Shafie; Writing – original draft, Michelle Sapitang; Writing – review & editing, Michelle Sapitang and Ali Najah Ahmed.

Ethics declarations

Conflicts of interest

The authors declare no conflict of interest.

Additional information

Communicated by: H. Babaie

Publisher’s note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

About this article

Cite this article

Sapitang, M., Ridwan, W.M., Ahmed, A.N. et al. Groundwater level as an input to monthly predicting of water level using various machine learning algorithms. Earth Sci Inform 14, 1269–1283 (2021). https://doi.org/10.1007/s12145-021-00654-x

DOI: https://doi.org/10.1007/s12145-021-00654-x