Abstract

Geo-environmental information is an important basis for geohazard analysis and the integration of geo-environmental data is crucial in the construction of urban emergency management systems. In existing urban spatial information systems, the integration of geo-environmental data is neither intuitive nor efficient enough to support the analysis of geohazards well. On the basis of Web virtual globe, this paper proposes a comprehensive framework for the integration and analysis of geo-environmental data. This framework can effectively integrate geological data with a 3D geological model as a carrier, seamlessly connect geographic data, dynamically load real-time monitoring data, and build 3D visualisation and analysis scenes of urban full-space temporal information in the browser. The application example shows that the proposed framework can provide good geo-environmental data and practical data analysis functions for geohazard early warning and decision making, and improve the efficiency of government departments’ response to geohazards.


Introduction

With the continuous development of industrialisation, human activities have intensified urban geological changes, resulting in frequent geohazards, such as landslides, mudslides, ground fissures, soil erosion, land desertification, earthquakes and volcanoes. In modern cities, due to the relative concentration of enterprises and populations, sudden geohazards usually have very serious negative effects on society. The scientific prevention and management of geohazards have gradually become important issues in urban safety work. Their solution is inseparable from the support of multi-source and heterogeneous geo-environmental data, including geological survey data of basic geology, engineering geology, hydrogeology and mineral resources, as well as dynamic monitoring data of real-time traffic and human flows in the city. When geohazards occur, if geological experts can quickly analyse the hazard situation and generate rigorous response plans based on intuitive urban geo-environmental information, they can effectively reduce urban economic and ecological losses and protect people’s lives and properties.

In the past few decades, countries around the world have launched urban geological surveys related to geohazards. It is estimated that the total amount of static data and dynamic data obtained in each large and medium-sized city can reach terabyte levels. In order to integrate the multi-element geo-environmental data effectively, scholars have conducted a huge number of program designs and technical studies. The existing integration modes of urban geo-environmental data can be broadly classified into three types. The first type is the integration mode based on an Information Management System (IMS). The authors developed an urban-scale geological IMS based on the Browser/Server (B/S) structure (Zhou 2019). The authors proposed a spatial database-based geological data integration method (Peters et al. 2018). However, IMS focuses on the storage, retrieval and management of original geo-environmental data and lacks data rendering performance, which makes it difficult to provide intuitive data visualisation effects (Blodgett et al. 2016; Gray 2016; Liu et al. 2019; Ortolano et al. 2015; Turganbaev et al. 2015; Wu et al. 2020). The second integration mode is based on a single machine version of a geographic information system (GIS). The authors proposed a BIM/GIS-based integration method for urban infrastructure data (Kang and Hong 2015). The authors designed a GIS-based approach for surface/subsurface data integration and geological modelling in order to study the geology of the Ravenna Coastal Plain (Scarelli et al. 2017). Although the single machine version of GIS has powerful data expression capabilities, it usually needs to be deployed as standalone software in a specific operating system and the loaded data are often limited to local data. Frequent software maintenance and data updates are not conducive to multi-user collaboration (Aksoy and San 2019; Carrino et al. 2018; Chen et al. 2018b; Yousif and Sracek 2016). 
The third integration mode is based on a Web Geographic Information System (WebGIS). The authors developed a visualisation system for spatial information and monitoring data based on WebGIS (Qi et al. 2013). The authors designed a WebGIS-based big geological data integration system (Yong-Peng et al. 2018). Traditional WebGIS is an extension and development of GIS, which has the advantages of wide access, simple operation, easy sharing and no platform limitations. However, the back-end data are usually only reviewed and released by the administrator, and the browser’s multiple requests and rendering of large-scale data prolong the system response time, so this mode is not good at meeting the demand for massive data and timeliness during geohazard management (Kim et al. 2012; Lee et al. 2018; Wang et al. 2018).

In view of the limitations of the above modes, this paper proposes a WebGL-based urban geo-environmental data integration and analysis framework to support the demand for geo-environmental data in the process of geohazard management. To solve the problems in the IMS mode, this paper builds a 3D full-space virtual scene at the urban scale based on the Cesium virtual globe (https://cesium.com/cesiumjs/) to realise the spatial–temporal perspective and analysis of geo-environmental big data. To solve the problems in the GIS mode, this paper designs the system architecture based on the proposed framework as the B/S structure and builds a cloud-based geo-environmental thematic database so that users do not need to worry about software maintenance and data updates, thus improving team collaboration. To solve the problems in the WebGIS mode, this paper improves the data transmission and response speed by ‘light browser/heavy server’ and ‘front-end and back-end separation’, and increases the user’s authority to release data in the cloud-based database independently to expand the back-end data sources and data volume. Through the above methods, be it at the geohazard site or the command room, and regardless of whether the terminal is a tablet or a PC, the expert team can quickly perform 3D visual analysis of the full-space temporal data related to a geohazard through the browser to assist in the management of geohazards.

The paper is structured as follows. Chapter 2 introduces the general architecture of the proposed framework and the key technologies used. Chapter 3 introduces the organisation, management and sharing of data in the thematic database based on the cloud mode. Chapter 4 introduces the preprocessing method of original geo-environmental data. Chapter 5 introduces the visualisation technology of multi-source and heterogeneous geo-environmental data based on the Cesium virtual globe. Chapter 6 verifies the effectiveness of the framework proposed in this paper by taking the Shenzhen geohazard emergency management system as an example. Chapter 7 summarises and analyses the proposed framework and proposes goals for future improvement.

General architecture

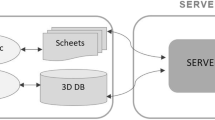

The system architecture based on the comprehensive framework proposed in this paper consists of a client-side application layer, a server-side logic layer and a data source layer. In order to overcome the limitations of traditional WebGIS (single form of back-end data release and long response time of browser), this paper provides a more detailed classification and construction on the basis of these three layers, as shown in Fig. 1.

Client side

The web application is created in the Vue.js framework (https://vuejs.org/index.html) using HTML5, CSS and JavaScript. The open-source 3D virtual globe framework Cesium.js is introduced to integrate pre-processed and released multi-source and heterogeneous geo-environmental data. Through these steps, users can open a browser in a mobile phone, tablet or laptop and enter the system access address to perform 3D visual analysis of geohazard-related full-space temporal data.

Server side

The static resources (such as HTML/JavaScript/CSS/IMG) are deployed on Web servers such as Nginx and back-end applications (data query functions) are deployed on application servers such as Tomcat. The user terminal can access the Web server in the wide area network, while the application server can only be accessed through the Web server in the local area network. With the advantages of separation of dynamic and static files, reverse proxy and load balancing, Nginx completes the tasks of requesting and forwarding data between the browser and the server. In addition, because the geo-environmental data related to geohazards involves massive spatial information, it is necessary to set up a dedicated geographical data server so that users can call WMTS, WMS, WFS, sensor observation services and other service interfaces that conform to OGC standards (https://www.ogc.org/).

Data side

A cloud-based geo-environmental thematic database is built, consisting of geological survey files and open-source data. The geological survey files contain image, vector, raster and 3D data, which need to be continuously collected and processed. The open-source data can be downloaded on demand from NASA (https://www.nasa.gov/), Amap (https://lbs.amap.com/), BIGEMAP (http://www.bigemap.com/) and other sources. The above data can be released by the administrator or users separately to expand the data volume and data sources of the database. In addition, this paper also studies the disaster-tolerant backup function of the database, which regularly transfers data to other servers so that a database failure or other unexpected event does not cause data loss.

In the above architecture, the browser is only responsible for data display and analysis, and the server is only responsible for data processing, querying and forwarding. The two form a ‘light browser/heavy server’ system. In traditional Web applications, the front end is usually equated with the browser and the back end with the server. However, this paper adds Node.js to the server and places it under the responsibility of the front-end developers, who can write functional modules according to the business requirements to enhance the reusability of the interfaces in different terminals. Node.js (https://nodejs.org/en/) acts as a bridge between the browser and the server to realise the ‘front-end and back-end separation’ during project development, and is suitable for scenarios with high concurrency and intensive Input/Output. Not only can it receive and collect multiple consecutive requests from the browser and then forward them to the server together, it can also preprocess the requested JavaScript Object Notation (JSON) data and then asynchronously return them to the browser. In this way, the number of HTTP connections established between the user terminal and the server can be decreased, the pressure on the browser to process data can be relieved and the system response delay can be reduced, allowing the browser to focus more on data rendering.
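The request-merging role described for the middle layer can be sketched independently of any framework. The following is a minimal illustration written in Python rather than Node.js; the class `RequestBatcher`, its methods `submit` and `flush`, and the `fake_backend` function are hypothetical names introduced here and are not part of the system described in this paper. It only shows the idea of queuing several browser requests, forwarding them in one call, and trimming the returned JSON-like records before handing them back.

```python
from typing import Callable, Dict, List


class RequestBatcher:
    """Collects browser requests and forwards them to the backend in one call.

    Hypothetical sketch: `backend` stands in for the application server and
    receives a list of query keys, returning a dict of key -> record.
    """

    def __init__(self, backend: Callable[[List[str]], Dict[str, dict]]):
        self._backend = backend
        self._pending: List[str] = []
        self.backend_calls = 0  # counts round trips to the application server

    def submit(self, key: str) -> None:
        # Queue a request instead of forwarding it immediately;
        # duplicates are dropped so the same dataset is fetched only once.
        if key not in self._pending:
            self._pending.append(key)

    def flush(self) -> Dict[str, dict]:
        # Forward all queued requests together, then trim each record
        # (a stand-in for preprocessing JSON before returning it).
        if not self._pending:
            return {}
        keys, self._pending = self._pending, []
        self.backend_calls += 1
        raw = self._backend(keys)
        return {k: {"id": k, "value": raw[k]["value"]} for k in keys}


def fake_backend(keys: List[str]) -> Dict[str, dict]:
    # Simulated application server returning verbose records.
    return {k: {"value": len(k), "debug": "unused field"} for k in keys}


batcher = RequestBatcher(fake_backend)
for key in ["borehole", "traffic", "borehole", "weather"]:
    batcher.submit(key)
result = batcher.flush()
print(result)
print("backend calls:", batcher.backend_calls)
```

In this sketch four browser requests result in a single backend round trip, which is the effect the paragraph above attributes to the middle layer.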

Thematic database based on cloud mode

Data characteristics are an important foundation in a database construction scheme. Geo-environmental data have three basic characteristics. First, there is the large scale. In recent years in particular, the amount of image data has increased geometrically with the use of satellites and drones. The second basic characteristic is the strong level of professionalism involved. The data related to geology, remote sensing, geophysical prospecting and monitoring, and so on, are usually held by a few professional individuals. The third characteristic is the diversity of the data. In terms of storage form, the data can be divided into paper documents, spreadsheets and image and video files. In terms of type, the data can be divided into attribute data, vector data, raster data and three-dimensional data. Therefore, the construction of a geo-environmental database needs to solve the following problems: ① data redundancy and storage structure confusion, which in turn lead to low retrieval efficiency and long system response time, and ② in the case of geohazards, the difficulty faced by the server administrator in collecting data comprehensively and releasing data quickly (Xiong et al. 2011).

In order to overcome the above limitations, a thematic database based on the cloud mode is necessary. First, the multi-element geo-environmental data are classified and stored according to the hierarchical model. Second, the data in the database no longer rely solely on the server administrator to review and update, and users can release data independently through the client.

Logical model of cloud-based database

Data are usually stored in a database according to a certain logical model, such as a hierarchical model, a network model or a relational model. Given the basic characteristics of geo-environmental data, this paper builds the database according to the organisational structure of a hierarchical model. As shown in Fig. 2, the data are first classified by data type into attribute data, vector data, raster data and 3D data, and then by data specialisation. Attribute data include real-time traffic, weather, hot news, underground borehole data and sensor monitoring data. Vector data include, for example, building groups and the city boundary line. Raster data include remote sensing imagery, geological maps and traffic maps, while 3D data include digital elevation models, building information models and 3D geological models.

Compared with network and relational models, the way of storing geo-environmental data in a hierarchical model makes the data structure simple and clear, with no complex interrelationships. The developers can easily analyse the relationships between data and achieve some operations of the database through simple programming to improve the efficiency of database storage and retrieval.
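A hierarchical classification of this kind can be illustrated with a small lookup table. The sketch below is an assumption made for illustration only: the category names are taken from the classification described for Fig. 2, while the nested-dictionary layout, the `HIERARCHY` constant and the `find_path` helper are hypothetical and do not describe the actual database schema.

```python
from typing import Dict, List, Optional

# Two-level hierarchy: data type -> datasets. Each dataset has exactly
# one parent, which is what keeps retrieval a simple top-down walk.
HIERARCHY: Dict[str, List[str]] = {
    "attribute": ["real-time traffic", "weather", "hot news",
                  "borehole", "sensor monitoring"],
    "vector": ["building groups", "city boundary"],
    "raster": ["remote sensing imagery", "geological maps", "traffic maps"],
    "3d": ["digital elevation models", "building information models",
           "3D geological models"],
}


def find_path(dataset: str) -> Optional[str]:
    """Return the 'type/dataset' storage path, or None if unknown."""
    for data_type, datasets in HIERARCHY.items():
        if dataset in datasets:
            return f"{data_type}/{dataset}"
    return None


print(find_path("geological maps"))
print(find_path("3D geological models"))
```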

Data update and sharing

The existing method of updating back-end data generally follows the steps of collecting, organising, processing and releasing data, all performed by the server administrator, making the data used by users relatively fixed. Especially when managing sudden geohazards, this is not conducive to the rapid convergence of relevant data. In order to solve this problem, this paper changes the approach from one in which only the server administrator operates the database to one that increases the user’s authority to release and share data independently in order to build a cloud-based geo-environmental database. The basic data (such as remote sensing imagery, geological maps, traffic maps and terrain elevation data) are uploaded to the common storage space by the administrator or system-level users, allowing them to be commonly used by all those who analyse geohazards and improving the uniformity of the urban spatial reference. The rest of the specific business data are uploaded directly to the private storage space by users from different departments through the client and the access rights of other users are set to realise data sharing.

The cloud-based data updating and sharing are shown in Fig. 3. After the user’s login is authenticated through a ‘three-way handshake’, a control connection is established between the user and the server interface. This connection is a dedicated channel for transmitting FTP commands. It is tuned for ‘minimising delay’, but its purpose is very limited: it only implements simple permission verification, file viewing and file deletion. To improve the efficiency of data transmission between the browser and the server, this paper establishes a separate data connection channel for each data transmission task. Since this channel is only used for data transmission, it can achieve ‘maximising throughput’. In addition, the transmission of large amounts of data increases the probability of interruption (‘break’) events. When the network connection is abnormal, the transmission breakpoint (the byte length of the part already transmitted) is recorded. When the network returns to normal, the FTP connection is re-established and the data after the breakpoint continue to be transmitted on the data connection channel. With the above method, the main responsibility of the server administrator changes to permission review and allocation of storage space, assisting users in data scheduling management. Users can apply for their own storage space from the administrator in order to use functions such as data uploading, data downloading, data queries and breakpoint transmission. Moreover, users who upload data can set the sharing permissions for their own data. The shared objects include single users, user groups and all users. The access rights include inaccessible, free access and access licence applications. Users who are granted the appropriate permissions can use the data or models of others via a key (sharing code).
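The breakpoint mechanism can be sketched with an in-memory simulation. The code below is an illustration under stated assumptions, not the system's FTP implementation: the `transfer` function and its `fail_after` parameter are hypothetical, the network is replaced by a `BytesIO` source, and the breakpoint is taken to be the number of bytes already stored at the receiver.

```python
import io


def transfer(source: io.BytesIO, target: bytearray, chunk_size: int,
             fail_after: int = -1) -> int:
    """Copy from `source` into `target`, resuming at len(target).

    Returns the breakpoint (number of bytes stored so far). `fail_after`
    is a hypothetical knob that simulates a dropped connection after that
    many chunks; a negative value means no failure.
    """
    source.seek(len(target))  # resume from the recorded breakpoint
    chunks_sent = 0
    while True:
        if fail_after >= 0 and chunks_sent == fail_after:
            break  # simulated network interruption
        chunk = source.read(chunk_size)
        if not chunk:
            break  # end of file reached
        target.extend(chunk)
        chunks_sent += 1
    return len(target)


payload = bytes(range(256)) * 4   # 1024 bytes of sample data
received = bytearray()

# First attempt is interrupted after three chunks of 100 bytes.
breakpoint_offset = transfer(io.BytesIO(payload), received, 100, fail_after=3)
print("interrupted at byte", breakpoint_offset)

# Second attempt reopens the source and continues after the breakpoint.
final_offset = transfer(io.BytesIO(payload), received, 100)
print("completed at byte", final_offset)
```

Only the bytes after the recorded offset are sent on the second attempt, so the cost of an interruption is bounded by one chunk rather than the whole file.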

Preprocessing of geo-environmental data

Geo-environmental data related to geohazards specifically comprise historical remote sensing images, terrain data, geological maps, traffic maps, geological models, urban building information, borehole data, real-time traffic and sensor data of various monitoring sites. For the integrated display of the above data, there are the following difficulties: ① the large volume of city-level data and low rendering efficiency; ② the need for multiple processing tools and integration interfaces to deal with data in diverse storage formats; ③ the fact that the data are isolated from each other and the difficulty of seamlessly connecting the spatial location of the model with the geographic data; and ④ the need to associate the attribute data with entities in an intuitive form. In order to solve the above-mentioned difficulties, this chapter divides the original geo-environmental data into attribute data, 2D map data, 3D model data and terrain data, and then introduces the respective preprocessing methods, including the steps of format conversion, error correction and attribute editing.

Processing of attribute data

The attribute data contain static data and dynamic data, and the preprocessing method is shown in Fig. 4. The static data mainly comprise geological survey data stored in XLS or TXT files, and the dynamic data mainly comprise sensor monitoring data. The static data need to be organised in a standard format: the first column of the table is the location information, the second column is the time, the third column is the research goal and the fourth column is the value. The transmission of dynamic data relies on 52°North (https://52north.org/), an open-source Web framework that supports the sensor observation service. The data interface of the sensor is bound to the server IP, and the data transmission is realised through Socket (Kussul et al. 2009). The data obtained through the above steps are converted into an array or JSON format, and Echarts.js (http://echarts.apache.org/en/index.html) is used to associate the attribute data with the model in a graphical form on the virtual globe.
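The conversion of a standardised static table into JSON can be sketched as follows. This is a minimal example under assumptions: the input is taken to be tab-separated text without a header line, the field names in `COLUMNS` and the helper `table_to_records` are hypothetical, and the sample rows are invented for illustration rather than taken from real survey data.

```python
import csv
import io
import json
from typing import Dict, List

# Column order as stated in the text: location, time, research goal, value.
COLUMNS = ["location", "time", "goal", "value"]


def table_to_records(text: str) -> List[Dict[str, object]]:
    """Parse tab-separated rows (no header line) into JSON-ready records."""
    reader = csv.reader(io.StringIO(text), delimiter="\t")
    records: List[Dict[str, object]] = []
    for row in reader:
        if len(row) != len(COLUMNS):
            continue  # skip blank or malformed lines
        record: Dict[str, object] = dict(zip(COLUMNS, row))
        record["value"] = float(row[3])  # numeric value for charting
        records.append(record)
    return records


# Invented sample rows: "lon,lat", ISO time, monitored quantity, reading.
sample = (
    "114.05,22.54\t2020-06-01T08:00\tgroundwater level\t3.2\n"
    "114.05,22.54\t2020-06-01T09:00\tgroundwater level\t3.5\n"
)
records = table_to_records(sample)
print(json.dumps(records, indent=2))
```

A list of records in this shape can be passed to a charting library as a time series for one monitoring site.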

Processing of 2D maps

2D maps, including remote sensing imagery, geological maps and traffic maps, are usually stored in .tif format. Integration of such maps on a virtual globe requires spatial adjustment. The map is imported into ArcMap and projected under the EPSG:4326 (WGS84 coordinate system code) geographic coordinate system. Then, 10–20 evenly distributed points on the map are marked with latitude and longitude information, so that the whole map has the world coordinate property and can be loaded onto the actual location of the virtual globe surface.

To express the urban information in more detail, including streets, buildings, parking lots, highways, hydropower stations and gardens, the images or maps need to be high resolution and high precision, and will therefore occupy a large amount of storage. Usually, the data volume of an urban remote sensing image with an accuracy of five metres is above 6 GB. If all the details of city-level maps and images are rendered on a Web browser simultaneously, the smoothness of user interaction cannot be guaranteed. Therefore, it is necessary to slice the maps by resolution level and region and store them in different hierarchical folders. As an example, a common slicing rule is shown in Fig. 5, in the form of a quadtree, in which a square image tile is sliced into four small tiles, and each small tile is sliced into four smaller tiles. Slicing makes it convenient for the browser to use the index directory to load only the maps within the current visible range. This approach realises the gradual loading of city-level maps and reduces the waste of CPU and GPU resources.
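The quadtree slicing rule can be made concrete with a short index calculation. The sketch below assumes a geographic (EPSG:4326) tiling scheme with two root tiles at level 0 and rows counted from the north; this layout, the `Tile` alias and the functions `children` and `tile_at` are assumptions for illustration and may differ from the scheme actually used by the slicing tool.

```python
from typing import List, Tuple

Tile = Tuple[int, int, int]  # (level, x, y)


def children(tile: Tile) -> List[Tile]:
    """Four child tiles obtained by halving a tile in both directions."""
    level, x, y = tile
    return [(level + 1, 2 * x + dx, 2 * y + dy)
            for dy in (0, 1) for dx in (0, 1)]


def tile_at(lon: float, lat: float, level: int) -> Tile:
    """Tile containing a lon/lat point, assuming two root tiles at level 0
    and y counted from the north pole."""
    size = 180.0 / (2 ** level)  # tile edge length in degrees
    x = min(int((lon + 180.0) / size), 2 ** (level + 1) - 1)
    y = min(int((90.0 - lat) / size), 2 ** level - 1)
    return (level, x, y)


# Approximate position of Shenzhen, used only as sample input.
lon, lat = 114.06, 22.54
for level in range(4):
    print(tile_at(lon, lat, level))
```

Because each level's index follows from the previous one by doubling, the folder path `level/x/y` is enough for the browser to request exactly the tiles in view.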

Processing of 3D models

The 3D model has strong advantages in display effect; in particular, the 3D geological model provides the most intuitive way of showing the underground 3D spatial structure of a hazard site. Consequently, the processing of 3D models is important. The 3D models include the building vector model, building information model and 3D geological model. The large area and complex geology of a city, the large number of buildings and their various internal structures make it more difficult to render a 3D geological model and building model in the browser. To solve this problem, this paper uniformly converts a multi-format 3D model into 3D Tiles (https://www.ogc.org/standards/3DTiles), a spatial data specification created on the basis of a hierarchical level of detail. The 3D Tiles model consists of a tree structure comprising a number of tiles in the format b3dm, with the organisation defined by root and children in the tileset.json. The tree structure can be a quadtree similar to that used for 2D maps, or a more complex k-d tree or octree. Each node in the tree represents a 3D tile and contains the geometricError property, which defines the geometric error introduced when that tile is displayed instead of its more detailed children. The root node of the top layer represents the roughest model with the largest geometric error, and the leaf nodes of the bottom layer represent the complete model with the smallest geometric error. The geometric error of the model is converted on the 2D screen into a virtual screen space error. The deeper the level of detail is, the smaller the virtual screen space error value is. When the virtual error value of the loaded 3D tiles in a model is greater than the default threshold, deeper 3D tiles in the tileset.json should be searched and loaded by depth-first or breadth-first search to make the model’s current virtual screen space error less than the default threshold. 
Through the above method, the hierarchical loading of the 3D model is realised, which can effectively improve the rendering speed of the city-level 3D model in the browser (Xu et al. 2020).
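The refinement rule can be sketched as a small traversal. The example below is a simplification under assumptions: it uses the usual perspective-camera projection of a geometric error to pixels, a single camera distance for every tile (a real engine measures the distance to each tile's bounding volume), and a threshold of 16 pixels, which is commonly cited as the CesiumJS default. The `Tile` class and the functions `screen_space_error` and `select_tiles` are hypothetical names.

```python
import math
from dataclasses import dataclass, field
from typing import List


@dataclass
class Tile:
    name: str
    geometric_error: float  # metres, as stored in tileset.json
    children: List["Tile"] = field(default_factory=list)


def screen_space_error(geometric_error: float, distance: float,
                       screen_height: int, fovy: float) -> float:
    """Project a geometric error (metres) to pixels for a perspective camera."""
    return (geometric_error * screen_height) / (
        distance * 2.0 * math.tan(fovy / 2.0))


def select_tiles(root: Tile, distance: float, screen_height: int = 1080,
                 fovy: float = math.radians(60),
                 threshold: float = 16.0) -> List[str]:
    """Depth-first refinement: descend while a tile's error exceeds the threshold."""
    selected: List[str] = []
    stack = [root]
    while stack:
        tile = stack.pop()
        sse = screen_space_error(tile.geometric_error, distance,
                                 screen_height, fovy)
        if sse > threshold and tile.children:
            stack.extend(reversed(tile.children))  # refine to children
        else:
            selected.append(tile.name)  # detailed enough, or a leaf
    return selected


root = Tile("root", 100.0, [
    Tile("a", 10.0, [Tile("a1", 0.0), Tile("a2", 0.0)]),
    Tile("b", 10.0),
])
for distance in (10000.0, 1000.0, 100.0):
    print(distance, select_tiles(root, distance))
```

As the camera moves closer, the same tree yields progressively deeper tiles, which is the hierarchical loading behaviour described above.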

The preprocessing process of the 3D model is shown in Fig. 6. This process requires the use of Cesiumlab software (https://www.cesiumlab.com/). The building vector model stored in .shp and .kml formats can be directly converted to 3D Tiles, while the building information model stored in .rvt, .dwg, .cgr, etc., and the 3D geological model stored in .fbx, .gltf, etc., are first converted to the intermediate formats .clm or .obj, and then to 3D Tiles. In the process of converting the above models, information such as model position, texture and scale can be modified. For the gap between the model and the terrain in particular, the spatial coordinate or elevation data can be imported into the model conversion process in the form of attachments to achieve the seamless connection of terrain, surface building model and subsurface geological model, which significantly enhances the display effect of the city-level full-space 3D model.

Processing of terrain data

In a manner similar to the slicing and storage of 2D maps, the original terrain data can be converted and sliced into quantised-mesh terrain data by selecting the storage type, triangulation algorithm, GZIP compression and water surface vector in the Cesiumlab toolbox. The processed terrain data are stored in a terrain folder, which contains multi-layer subfolders for storing terrain tiles of different levels and areas (Chen et al. 2018a). The terrain tile is a binary file, which consists of header data, vertex data, index data and extension data. The header data store the coordinates of the tile’s centre point, the bounding sphere and the horizon occlusion point. Following the header data, the vertex data store the number of vertices, vertex coordinates and elevation information. Two adjacent tiles are connected by vertex data. The rightmost vertex of the left tile is the leftmost vertex of the right tile, and they share a set of coordinate values and elevation values. Following the vertex data, the index data are responsible for connecting all vertices in a tile to render a complete tile. Following the index data, the extension data supply additional information about the tile, such as terrain lighting and water surface effects.
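The binary layout of a terrain tile can be illustrated by reading its header. The sketch below follows the quantized-mesh 1.0 layout as commonly documented (an 88-byte little-endian header followed by a 32-bit vertex count, with vertex arrays stored as zig-zag delta values); it should be checked against the specification before use. It assumes the tile has already been decompressed, and the function names `parse_header` and `zigzag_delta_decode` as well as the synthetic sample bytes are hypothetical.

```python
import struct
from typing import Dict, List

# centre (3 doubles), min/max height (2 floats),
# bounding sphere centre + radius (4 doubles),
# horizon occlusion point (3 doubles); little-endian, no padding.
HEADER_FORMAT = "<3d2f4d3d"
HEADER_SIZE = struct.calcsize(HEADER_FORMAT)


def parse_header(data: bytes) -> Dict[str, object]:
    """Read the header fields and the vertex count that follows them."""
    v = struct.unpack_from(HEADER_FORMAT, data, 0)
    (vertex_count,) = struct.unpack_from("<I", data, HEADER_SIZE)
    return {
        "center": v[0:3],
        "min_height": v[3],
        "max_height": v[4],
        "bounding_sphere_center": v[5:8],
        "bounding_sphere_radius": v[8],
        "horizon_occlusion_point": v[9:12],
        "vertex_count": vertex_count,
    }


def zigzag_delta_decode(encoded: List[int]) -> List[int]:
    """Decode a zig-zag, delta-encoded array (u, v or height values)."""
    decoded, value = [], 0
    for n in encoded:
        value += (n >> 1) ^ -(n & 1)  # undo zig-zag, then accumulate delta
        decoded.append(value)
    return decoded


# Synthetic bytes standing in for a decompressed tile (not real terrain).
sample = struct.pack(HEADER_FORMAT, *[float(i) for i in range(1, 13)])
sample += struct.pack("<I", 4)
header = parse_header(sample)
print(HEADER_SIZE, header["vertex_count"], header["center"])
print(zigzag_delta_decode([0, 6, 1]))
```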

Visualisation method

This chapter will introduce Cesium, a 3D virtual globe framework that is widely used in the field of spatial information visualisation, and describe how it can be used to present geohazard-related urban geo-environmental data.

Cesium

Cesium is a JavaScript-based open-source Web virtual globe framework, and WebGL is used in the bottom layer to accelerate the rendering of the 3D scene, so that it can run smoothly in common systems and browsers. Cesium supports the multi-scale display of massive amounts of full-space 3D models, map data, elevation data and vector data, etc., which can be used for tasks such as building a 3D digital earth, creating virtual scenes and visualising spatial information. In addition, entities such as polylines, polygons, billboards and labels can be drawn in Cesium virtual globe, the camera can follow a specified path and animation effects can be realised through a timeline (Zhu et al. 2019). From the perspective of geohazard visualisation and analysis, Cesium is a good carrier for integrating and analysing geo-environmental data.

To create the Cesium virtual globe, the two basic files Cesium.js and widgets.css in the Cesium source package are first introduced. Then, a div element with the id cesiumContainer is created to mount the viewer. Finally, a token is requested on the Cesium official website and used to create the viewer visualisation object.

Visualisation method based on cesium

The visualisation method of geo-environmental data based on the Cesium virtual globe is shown in Fig. 7. The object describing a 2D map consists of attributes such as the map storage path, minimum display layer, maximum display layer, background transparency and projection coordinate system. To visualise the 2D map, a UrlTemplateImageryProvider instance containing the map object is first declared, and the instance is then added to the imageryProvider property of the viewer object to form a map collection, indicating the type of map layers and the order of layering. The object describing the 3D terrain is composed of properties such as terrain storage paths and water effects. To visualise the 3D terrain, a CesiumTerrainProvider instance containing the terrain object is first declared, and the instance is then added to the terrainProvider property of the viewer object to compose the terrain collection. The object describing the 3D model consists of properties such as the model storage path, show and model matrix. To visualise the 3D model, an instance of Cesium3DTileset containing the model object is first declared, and the instance is then added to the primitives property of the viewer object to form a model collection. The 3D model consists of a number of tiles, each with a unique id value, so that the colour, position and state of each tile can be controlled individually. 
The visualisation of spatial attribute data can be done with the third-party open-source library Echarts.js and Cesium’s own widgets. For example, Echarts.js displays static statistics and dynamic sensor data in the form of visual charts; particles create weather effects such as rain and snow; billboards mark emergency events on the ground; heatmaps express real-time crowd gathering; and entities draw polyhedrons of different heights within each district to represent the comparative relationship of regional population totals and other data.

Application

Taking the Shenzhen geohazard emergency management system as an example, we use the framework proposed in this paper to integrate a large amount of urban geo-environmental data and build a real-time geohazard analysis scene with full-space 3D visualisation for this system. The overall system interface is shown in Fig. 8.

Background

Shenzhen is a sub-provincial city under the jurisdiction of Guangdong Province, China. It is located in the south of Guangdong, with Daya Bay and Dapeng Bay in the east, the Pearl River Estuary and Lingding Ocean in the west, and the Shenzhen River in the south, which connects it to Hong Kong. The city has nine districts, with a total area of 1997.47 square kilometres. Its particular geographical location makes Shenzhen vulnerable to typhoons and heavy rains, which can lead to geohazards such as landslides and mudslides. In addition, the rapid development of Shenzhen's infrastructure industry in recent years has seriously damaged the originally stable urban geological structure, resulting in the frequent occurrence of geohazards such as ground fissures, ground subsidence and water inrushing into tunnels (Jiang et al. 2018). The geo-environmental data integration and analysis framework proposed in this paper is applied to the geohazard management work in Shenzhen in order to test the feasibility and effectiveness of this framework.

Detailed application

As shown in Fig. 9, we simulate the analysis and management scene of a landslide under heavy rainfall in the system. The yellow-green buildings in Fig. 9a represent houses that may be affected by the landslide and the blue buildings represent the surrounding houses that are not affected. Users can click on the house model with the left mouse button, and view the building’s construction date, number of floors, area and category in the dialogue box. The red double arrow in Fig. 9b represents the trend of the landslide, the wall-like mound below is the simulated landslide fence and the yellow circles represent the areas that may be affected by the landslide. The green, saffron and orange single arrows represent the clear, crowded and more crowded channels in the evacuation routes, which are calculated by the real-time traffic path planning APIs of Amap (https://lbs.amap.com/) and Baidu Maps (http://lbsyun.baidu.com/). Fig. 9c shows a visual chart drawn by Echarts.js in a canvas, which enables dynamic visualisation of the groundwater monitoring data at the geohazard site on the virtual globe.

In addition to natural geohazards, such as landslides and mudslides, in order to effectively respond to geohazards caused by human activities, such as ground subsidence and ground fissures, we integrate geo-environmental data as shown in Fig. 10 and provide corresponding analysis tools. Figure 10a shows the segmentation rule of the Shenzhen geological model that is built by means of multi-point statistics (Chen et al. 2020, 2018c). We achieve dynamic loading based on the scheduling algorithm, as well as the underground view, sectioning and explosion of a certain subsurface 3D geological model. As early as the early twenty-first century, in order to alleviate the residential pressure caused by urbanisation, the Shenzhen government implemented a coastal land reclamation project in Qianhai Bay. Figure 10b shows the surface changes of Qianhai Bay. The continuous remote sensing images with timestamps are released through GeoServer and bound to the timeline in Cesium so as to switch between historical remote sensing images over time. In Fig. 10c, the subsurface borehole column in Qianhai Bay is simulated. By using the ‘transparent surface’ and ‘underground mode’ functions, the borehole can be observed and analysed. Each small column in a borehole is generated by the column instance of the entity class, and its spatial position and thickness are set according to real data. Figure 10d shows the simulated tunnel excavation and tunnel roaming for the geological body; the roaming function is implemented by calculating the pitch, yaw and roll angles of two consecutive points in the centreline of the tunnel, and then changing the camera orientation and flight path. Figure 10e shows the heatmap drawn on the surface of the remote sensing image based on real-time crowd gathering; red represents the densely populated area and green represents the sparsely populated area.
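The camera orientation used for tunnel roaming can be illustrated with a small angle calculation. The sketch below is an assumption about one way to do this, not the system's actual code: points are taken to be in a local east-north-up frame in metres, yaw is expressed as a heading measured clockwise from north, roll is fixed at zero, and the function `heading_pitch` and the sample centreline are hypothetical.

```python
import math
from typing import Tuple

Point = Tuple[float, float, float]  # local (east, north, up) in metres


def heading_pitch(p0: Point, p1: Point) -> Tuple[float, float]:
    """Heading (clockwise from north) and pitch (up positive), in degrees,
    for a camera at p0 looking towards p1. Roll is assumed to be zero."""
    de, dn, du = (b - a for a, b in zip(p0, p1))
    heading = math.degrees(math.atan2(de, dn)) % 360.0
    pitch = math.degrees(math.atan2(du, math.hypot(de, dn)))
    return heading, pitch


# Invented centreline: a level segment heading east, then a segment
# heading north while descending at 45 degrees.
centreline = [(0.0, 0.0, -20.0), (10.0, 0.0, -20.0), (10.0, 10.0, -30.0)]
for a, b in zip(centreline, centreline[1:]):
    print(heading_pitch(a, b))
```

Evaluating the angles for each consecutive pair of centreline points gives the sequence of orientations the camera passes through while flying along the tunnel.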

The surface and subsurface geo-environmental data and analysis tools. (a) the segmentation and analysis of the 3D geological model; (b) the changes of remote sensing images in Qianhai Bay; (c) the visualisation of borehole; (d) the simulated tunnel excavation and tunnel roaming; (e) the real-time crowd heatmap
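For the borehole view in panel (c), the placement of the stacked columns can be sketched as below. The sketch assumes that each layer is recorded as a thickness measured downwards from the borehole collar and that the rendering primitive is positioned by its centre point; the lithology names and numbers are made-up sample values, and `borehole_columns` is a hypothetical helper.

```python
def borehole_columns(collar_elevation, layers):
    """Convert a list of (lithology, thickness) layers into stacked columns.

    Layers are ordered from the surface downwards. Each returned column
    carries its top, bottom and centre elevation and its length, which is
    what a cylinder-like primitive needs to be placed in the scene.
    """
    columns = []
    top = collar_elevation
    for lithology, thickness in layers:
        if thickness <= 0:
            raise ValueError("layer thickness must be positive")
        bottom = top - thickness
        columns.append({
            "lithology": lithology,
            "top": top,
            "bottom": bottom,
            "centre": (top + bottom) / 2.0,
            "length": thickness,
        })
        top = bottom  # the next layer starts where this one ends
    return columns


# Made-up sample borehole: collar at 5 m elevation, three layers.
sample = borehole_columns(5.0, [("fill", 3.0), ("clay", 4.0), ("granite", 10.0)])
```

Each dictionary in `sample` corresponds to one small column; colouring by lithology is left to the rendering code.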

Conclusions

In this paper, we propose a comprehensive framework for the integration and analysis of geo-environmental data for geohazards. First, the framework is delivered as a Web application, so users do not need to be concerned with its deployment and maintenance, which makes collaboration among user groups easier. At the same time, the combination of the ‘light browser/heavy server’ mode and ‘front-end and back-end separation’ not only reduces the data response delay but also improves the browser rendering speed. Second, the cloud-based database allows both administrators and users to preprocess and publish data, which expands the back-end data sources and data types. Finally, the Cesium virtual globe is used to create a 3D visualisation and simulation scene that integrates the full-space temporal data related to geohazards, with the urban geological model as a carrier. Verification with the Shenzhen geohazard emergency management system shows that the proposed framework can effectively improve the efficiency of aggregating, storing, sharing and integrating geo-environmental data, and can realise the 3D visualisation and analysis of geohazards, thereby assisting the judgement and decision making of geological experts.

However, the framework proposed in this paper lacks the ability to proactively detect geohazard locations. Future work will focus on how to actively discover potential geohazard sites based on the existing geo-environmental data integration framework, combined with hydrological monitoring, remote sensing and machine-learning technologies, to fully realise early warning and analysis of geohazards, and to enhance the integrity and practicality of the system.

References

Aksoy E, San BT (2019) Geographical information systems (GIS) and Multi-Criteria Decision Analysis (MCDA) integration for sustainable landfill site selection considering dynamic data source. Bull Eng Geol Env 78:779–791. https://doi.org/10.1007/s10064-017-1135-z

Blodgett D, Lucido J, Kreft J (2016) Progress on water data integration and distribution: a summary of select US Geological Survey data systems. J Hydroinf 18:226–237. https://doi.org/10.2166/hydro.2015.067

Carrino TA, Crosta AP, Toledo CLB, Silva AM (2018) Hyperspectral remote sensing applied to mineral exploration in southern Peru: a multiple data integration approach in the Chapi Chiara gold prospect. Int J Appl Earth Obs Geoinf 64:287–300. https://doi.org/10.1016/j.jag.2017.05.004

Chen QY, Liu G, Ma XG, Mariethoz G, He ZW, Tian YP, Weng ZP (2018a) Local curvature entropy-based 3D terrain representation using a comprehensive Quadtree. ISPRS J Photogramm Remote Sens 139:30–45. https://doi.org/10.1016/j.isprsjprs.2018.03.001

Chen QY, Liu G, Ma XG, Yao Z, Tian YP, Wang HL (2018b) A virtual globe-based integration and visualization framework for aboveground and underground 3D spatial objects. Earth Sci Inf 11:591–603. https://doi.org/10.1007/s12145-018-0350-x

Chen QY, Mariethoz G, Liu G, Comunian A, Ma XG (2018c) Locality-based 3-D multiple-point statistics reconstruction using 2-D geological cross sections. Hydrol Earth Syst Sci 22:6547–6566. https://doi.org/10.5194/hess-22-6547-2018

Chen QY, Liu G, Ma XG, Li XC, He ZW (2020) 3D stochastic modeling framework for Quaternary sediments using multiple-point statistics: a case study in Minjiang Estuary area, southeast China. Comput Geosci 136:14. https://doi.org/10.1016/j.cageo.2019.104404

Gray DJ (2016) Integration of historic groundwater data into the continent scale geochemistry initiative. Aust J Earth Sci 63:427–451. https://doi.org/10.1080/08120099.2016.1218932

Jiang X, Song JS, Lin YY, Gong YX (2018) A practical approach to constructing hierarchical networks for urban hazard mitigation planning using GIS: the case of Futian, Shenzhen. Int J Disaster Risk Reduct 28:629–639. https://doi.org/10.1016/j.ijdrr.2018.01.014

Kang TW, Hong CH (2015) A study on software architecture for effective BIM/GIS-based facility management data integration. Autom Constr 54:25–38. https://doi.org/10.1016/j.autcon.2015.03.019

Kim SA, Shin D, Choe Y, Seibert T, Walz SP (2012) Integrated energy monitoring and visualization system for Smart Green City development designing a spatial information integrated energy monitoring model in the context of massive data management on a web based platform. Autom Constr 22:51–59. https://doi.org/10.1016/j.autcon.2011.07.004

Kussul N, Shelestov A, Skakun S (2009) Grid and sensor web technologies for environmental monitoring. Earth Sci Inf 2:37–51. https://doi.org/10.1007/s12145-009-0024-9

Lee PC, Wang YH, Lo TP, Long DB (2018) An integrated system framework of building information modelling and geographical information system for utility tunnel maintenance management. Tunn Undergr Space Technol 79:263–273. https://doi.org/10.1016/j.tust.2018.05.010

Liu X, Hao LN, Yang WN (2019) BiGeo: a Foundational PaaS framework for efficient storage, visualization, management, analysis, service, and migration of geospatial big data-a case study of Sichuan Province, China. ISPRS Int J Geo-Inf 8:449. https://doi.org/10.3390/ijgi8100449

Ortolano G, Cirrincione R, Pezzino A, Tripodi V, Zappala L (2015) Petro-structural geology of the Eastern Aspromonte Massif crystalline basement (southern Italy-Calabria): an example of interoperable geo-data management from thin section - to field scale. J Maps 11:181–200. https://doi.org/10.1080/17445647.2014.948939

Peters SE, Husson JM, Czaplewski J (2018) Macrostrat: a platform for geological data integration and deep-time earth crust research. Geochem Geophys Geosyst 19:1393–1409. https://doi.org/10.1029/2018gc007467

Qi Y, Fang M, Zheng Z (2013) Webgis-based system of “monitoring and preventing geological disaster rely on the masses”. In: 2013 IEEE International Geoscience and Remote Sensing Symposium-IGARSS. IEEE, pp 664–667

Scarelli FM, Barboza EG, Cantelli L, Gabbianelli G (2017) Surface and subsurface data integration and geological modelling from the little ice age to the present, in the Ravenna coastal plain, northwest Adriatic Sea (Emilia-Romagna, Italy). Catena 151:1–15. https://doi.org/10.1016/j.catena.2016.12.005

Turganbaev E, Beldeubayeva Z, Rakhmetullina S, Krivykh V (2015) Information system of efficient data management of groundwater monitoring the Republic of Kazakhstan. In: 2015 9th International Conference on Application of Information and Communication Technologies. IEEE, pp 72–75

Wang CB, Ma XG, Chen JG (2018) Ontology-driven data integration and visualization for exploring regional geologic time and paleontological information. Comput Geosci 115:12–19. https://doi.org/10.1016/j.cageo.2018.03.004

Wu ZN, Shen YX, Wang HL, Wu MM (2020) An ontology-based framework for heterogeneous data management and its application for urban flood disasters. Earth Sci Inf 13:377–390. https://doi.org/10.1007/s12145-019-00439-3

Xiong PC, Chi Y, Zhu SH, Moon HJ, Pu C, Hacigumus H (2011) Intelligent management of virtualized resources for database systems in cloud environment. In: IEEE 27th International Conference on Data Engineering. IEEE, pp 87–98

Xu Z, Zhang L, Li H, Lin YH, Yin S (2020) Combining IFC and 3D tiles to create 3D visualization for building information modeling. Autom Constr 109:102995. https://doi.org/10.1016/j.autcon.2019.102995

Yong-Peng F, Yi-Yong L, Lei W, Ling-Feng Z, Xue-Ping L (2018) Design and implementation of geological environment survey big data platform for Danjiangkou reservoir water source area based on WebGIS. In: 2018 4th Annual International Conference on Network and Information Systems for Computers (ICNISC). IEEE, pp 203–207

Yousif M, Sracek O (2016) Integration of geological investigations with multi-GIS data layers for water resources assessment in arid regions: El Ambagi Basin, Eastern Desert, Egypt. Environ Earth Sci 75:1–5. https://doi.org/10.1007/s12665-016-5456-1

Zhou CY et al (2019) Key technologies of a large-scale urban geological information management system based on a browser/server structure. IEEE Access 7:135582–135594. https://doi.org/10.1109/access.2019.2941348

Zhu LF, Chen XL, Li ZW (2019) Multiple-view geospatial comparison using web-based virtual globes. ISPRS J Photogramm Remote Sens 156:235–246. https://doi.org/10.1016/j.isprsjprs.2019.08.016

Acknowledgements

This research is funded by the National Natural Science Foundation of China (U1711267), the Natural Science Foundation of Hubei Province (2020CFB507), the Fundamental Research Funds for the Central Universities (CUGL180823) and the Open Funding of Hubei Provincial Key Laboratory of Intelligent Geo-Information Processing (KLIGIP-2018B15). The authors thank the anonymous reviewers for their valuable comments.

Ethics declarations

Conflict of interest

The authors declare that there is no conflict of interest regarding the publication of this paper.

Additional information

Communicated by: H. Babaie

About this article

Cite this article

Zhang, X., Zhang, J., Liu, G. et al. Comprehensive framework for the integration and analysis of geo-environmental data for urban geohazards. Earth Sci Inform 14, 2387–2399 (2021). https://doi.org/10.1007/s12145-021-00642-1

DOI: https://doi.org/10.1007/s12145-021-00642-1