Abstract

Burnout has been studied extensively in work-related contexts and more recently investigated in academic settings. The present study aimed to analyze the psychometric properties of the student version of the Oldenburg Burnout Inventory (OLBI-S; Reis et al., 2015) on an Italian sample of university students (n = 339, Mage = 22.67 ± 4.10), and its measurement invariance with a USA sample (n = 553, Mage = 23.47 ± 5.96). Separate sample analyses provided psychometric support for 14 but not all 16 of the original OLBI-S items. Cross-sectional model comparisons supported partial metric invariance for the 14-item OLBI-S when comparing the Italian and USA samples. However, scalar invariance was not supported. Finally, we found good internal reliability, and convergent, concurrent, and discriminant validity for the 14-item Italian OLBI-S. Hence, results supported using the 14-item OLBI-S in future studies of academic burnout in Italian and USA samples, and as a possible screening instrument to identify students experiencing burnout, especially during academic periods associated with higher stress. However, the 14-item OLBI-S should not be used to compare levels of burnout factors between Italian and USA students due to the lack of scalar invariance. Future work could entail further scale revisions or the development of burnout measures specifically related to the academic context rather than adapting work-related burnout scales to the study area.

Burnout and its antecedents have been widely studied in the work-related context since the 1960s (Manzano García & Ayala, 2017; Sun et al., 2022; Zhen et al., 2022; Tomczak, 2023). However, only in recent decades has there been increasing interest in the analysis of burnout in academic settings (Akungu et al., 2021; Lee et al., 2013; Wickramasinghe et al., 2023; Zhang et al., 2007; Zhu et al., 2023).

Maslach and Leiter (2017) described burnout as encompassing three major dimensions: “an overwhelming exhaustion, feelings of cynicism and detachment from the job, and a sense of ineffectiveness and lack of accomplishment” (p. 37). Although often it is measured with a scale developed by Maslach and Jackson (1981), some have identified conceptual and psychometric limitations with the scale (e.g., Demerouti et al., 2010; Halbesleben and Demerouti, 2005). Therefore, the deficiencies shown by traditional burnout measures have created the necessity of developing alternative evaluation instruments. Some of these measures are the Spanish Burnout Inventory (Gil Monte & Manzano-García, 2015), the Stanford Professional Fulfilment Index (PFI; Trockel et al., 2018), the Burnout Assessment Tool (BAT; Schaufeli et al., 2020), and the Oldenburg Burnout Inventory (OLBI; Demerouti et al., 2010). One of the proposed scales that has achieved greater relevance in academic settings is the student version of the Oldenburg Burnout Inventory (OLBI-S; see Campos et al., 2012a and Reis et al., 2015). There are also other scales for evaluating burnout among students, such as the student version of the MBI (MBI-SS; Schaufeli et al., 2009), the Copenhagen Burnout Inventory – Student version (Campos et al., 2012b), and the student version of the BAT (Popescu et al., 2023).

Demerouti et al. (2010) highlighted that the Maslach Burnout Inventory-General Survey (MBI-GS; Maslach et al., 1996) is characterized by a main psychometric shortcoming: the items within each subscale are all framed in the same direction; more specifically, exhaustion and cynicism items are phrased negatively, while professional efficacy items are phrased positively. Moreover, they pointed out that the psychometric literature has reported that one-sided scales are weaker than scales that include both positively and negatively worded items. Therefore, Demerouti et al. (2010) addressed this issue by proposing a measure that evaluates exhaustion and disengagement through positive and negative items. Thus, the OLBI exhaustion scale includes items referring to vigor, while the OLBI disengagement scale includes items about dedication. Finally, Demerouti et al.’s (2010) scale addresses the affective dimension of exhaustion (like the MBI-GS), as well as physical and cognitive features.

Therefore, given the strengths of the OLBI (Demerouti et al., 2010), the current study examines the psychometric properties of its student version (OLBI-S; see Campos et al., 2012a and Reis et al., 2015) on Italian youths, and we used samples in Italy and the USA to analyze measurement invariance.

The OLBI-S measures two burnout dimensions also reflected in the original OLBI. The exhaustion dimension refers to emotional, physical, and cognitive fatigue due to academic demands and the disengagement dimension is defined as “an attitude of withdrawal and detachment from one’s studies” (Reis et al., 2015, p. 10). The two factors align with the job demands resources model (Demerouti et al., 1999) in which exhaustion is linked to students’ study characteristics and disengagement can be linked to limited or absent resources for students. Because college students confront numerous academic pressures combined with anticipatory stress of seeking employment or post-graduate training after undergraduate studies, it is important to have measures sensitive to detecting academic burnout in students.

Several different language versions of the OLBI-S have been developed. Campos et al. (2012a) revised the phrasing of the original OLBI items to measure burnout for students (OLBI-S). They created a Portuguese language version by first translating and back translating the English OLBI items (Halbesleben & Demerouti, 2005). They also evaluated item content validity and conducted a series of factor analyses of item responses based on a large sample of students from Brazil and Portugal. Although most items performed well with relatively high factor loadings and the hypothesized two-factor structure was supported, some items had low loadings or were inconsistent in their loadings between samples. Indeed, based on measurement invariance tests, Campos et al. (2012a) were unable to cross-validate the factor loadings in sample comparisons of students from the two countries. Reis et al. (2015) began with the English language version of the original OLBI items and then, similar to Campos et al. (2012a), revised those items to be consistent with stressful, burnout-related experiences of students, translated those items to Greek and German, then conducted back-translation to English to confirm content equivalence. In factor analyses, the hypothesized two-factor model was supported with only minor issues detected (i.e., one item in one sample had a low factor loading). Measurement invariance analyses supported metric invariance when comparing students and workers in the subsamples, as well as when comparing subsamples of students from Germany and Greece. Scalar invariance (tests of item intercepts) was not supported in either set of analyses, suggesting potential item response bias. Although scalar invariance is a prerequisite for comparing factor means between groups, recent research using the OLBI-S has not examined invariance models prior to conducting such comparisons (e.g., Molodynski et al., 2021). This is unfortunate because one group of interest in the current study, Italian students, was part of this recent multinational study, but inferences regarding similarities or differences between students in different countries must first be supported by confirming comparable psychometrics between groups.

In the current study, we translated the English language version of the OLBI-S developed by Reis et al. (2015) into Italian then back-translated that scale to English. We also translated and back-translated a second indicator of burnout developed by Humphrey (2013) to be used in convergent validity analyses. Measurement invariance analyses were then planned to extend confidence in the factor structure of the OLBI-S derived from the Italian sample. To that end, in addition to the Italian sample, a comparison sample in the USA also completed the OLBI-S. Both countries are western democracies but with differences in terms of individualism, uncertainty avoidance, and long-term orientation (Hofstede Insights, 2021) that suggest country level differences in the potential for burnout. Thus, similar to findings in other studies, we anticipated metric invariance (similar factor loadings) for the two countries. Although we had no specific reasons for expecting directional item-level bias between the groups, we tested rather than assumed scalar invariance because any such factor mean comparisons are predicated on first documenting scalar invariance at the item level (Chen, 2008). Going further, we expected that practical implementation of measures of academic burnout would more likely involve measured, raw scores based on the scales rather than factor means analyses requiring sophisticated statistical modeling. Therefore, if scalar invariance could be confirmed, we anticipated evaluating residual invariance prior to testing country differences in structural parameters (variances, covariances, and factor means) (Chen, 2008; Schmitt & Ali, 2015).

Next, we gathered data on the OLBI-S, the additional indicator of burnout, and other measures to be used for concurrent and discriminant validity analyses in Italy. Specifically, we evaluated the association between the OLBI-S and (a) academic work engagement among students (Schaufeli & Bakker, 2004), (b) positive and negative affect (Watson et al., 1998), and (c) intention to drop out of school (Hardre & Reeve, 2003). Work engagement is a construct similar to the converse of burnout and reflects different ways in which students convey their enthusiasm, involvement, and dedication for their academic pursuits. Aspects of emotional functioning have long been implicated in burnout, hence the focus on positive and negative affect. Withdrawing from work in one way or another (e.g., absenteeism, quitting) has also been a longstanding concern in burnout research, and the relevant corresponding construct for students would be intention to drop out of their studies.

Method

Participants and procedure

Table 1 shows the descriptive statistics of the Italian and USA (convenience) samples. In Italy, a total of 339 college students (86.4% women) were recruited from Italian universities before the coronavirus disease (COVID-19) outbreak. Participants were volunteers recruited from an undergraduate psychology course (no credits given for their participation in the research) and from student groups on Facebook. On average, Italian students were 22.67 years old (SD = 4.10, range 18–53). The percentages of students in their first to fifth year of study were 6.5%, 8.6%, 64.0%, 8.3%, and 10.5%, along with 2.1% in their sixth year of a medical degree program. Half of the sample (51%) was majoring in psychology and the other half represented majors in education, languages, and medicine.

In the USA, a total of 553 students (68.7% women) were recruited from a university research subject pool. On average, the USA students were 23.47 years old (SD = 5.96, range 18–66). The distribution of year of study was 1.6% freshmen, 11.4% sophomores, 37.6% juniors, and 49.4% seniors. About half were pursuing a business-related major (32.8%) or majoring in one of the social and behavioral sciences (18%). The other half represented majors in the arts, education, health-related professions, and sciences.

Measures

Oldenburg burnout inventory - student version (OLBI-S; Reis et al., 2015)

The 16-item OLBI-S was used to measure academic burnout in its two main dimensions: exhaustion (e.g., “Over time, one can become disconnected from this type of study”) and disengagement (e.g., “I always find new and interesting aspects in my studies”). Each subscale is made up of 8 items. Item responses use a rating scale ranging from 1 (strongly agree) through 4 (strongly disagree). The Italian version of the scale was created through a back-translation process.

One-item school/work burnout scale (Humphrey, 2013; Rohland et al., 2004)

The single-item was adapted by Humphrey (2013) from Rohland et al.’s (2004) one-item measure of burnout aiming to be used with students. This scale asks participants to classify their level of burnout by selecting one of the five options provided. For scoring purposes, the higher the number of the option selected (i.e., “I feel completely burned out and often wonder if I can go on. I am at the point where I may need some changes or may need to seek some sort of help”) the higher the level of burnout. Because “burnout” is a word that in the Italian language is usually used with a more general meaning (i.e., as a synonym of being stressed at very high levels), we provided the Italian students with the following definition of burnout to be used as reference for their classification: “Burnout is a psychological condition characterized by high stress, negative attitudes about work/study, and loss of confidence in one’s abilities, that can reduce work/academic performance and satisfaction.” Next, we presented the Italian translation (reached through a back-translation process) of the five options.

Although single-item indicators have limitations, as Allen et al. (2022) pointed out, they can be justified provided the item addresses a unidimensional construct and other evidence exists supporting psychometric features of the item. Response options and prior research suggest the item taps the emotional exhaustion aspect of burnout. For example, Rohland et al. (2004) found a 0.64 correlation between the one-item measure and Maslach et al.’s (1996) emotional exhaustion factor, similar to the correlation reported by Hansen and Girgis (2010). Dolan et al. (2015) found substantially higher correlations between emotional exhaustion and the one-item score in the upper 0.70 range across five different and large samples. The one-item score also was sensitive to positive change in a stress intervention study (Humphrey, 2013).

Utrecht work engagement scale for students (UWES-9 S; Schaufeli & Bakker, 2004)

The UWES-9 S is a 9-item instrument that measures three components of study engagement: Vigor (e.g., “When I’m doing my work as a student, I feel bursting with energy”), Dedication (e.g., “I am enthusiastic about my studies”), and Absorption (e.g., “I feel happy when I am studying intensely”). Participants respond to each item using a 7-point scale ranging from 0 (Never) to 6 (Always, Everyday). We administered the Italian version (Loscalzo & Giannini, 2019a) of the scale, which has good psychometric properties. Loscalzo and Giannini (2019a) reported Cronbach’s coefficients alpha as 0.82 (Vigor), 0.88 (Dedication), 0.76 (Absorption), and 0.90 (total score). In the present sample, the alpha values were, respectively, 0.82, 0.91, 0.74, and 0.90.

Positive and negative affect schedule (PANAS; Watson et al., 1998)

The state version of the PANAS is a 20-item scale that measures recent positive (e.g., “determined”) and negative (e.g., “afraid”) affect. Participants respond to items using a 5-point scale ranging from 1 (Very slightly or not at all) to 5 (Extremely). We administered the Italian version (Terracciano et al., 2003) of the PANAS. Terracciano et al. (2003) reported good Cronbach’s coefficients alpha for both negative (0.85) and positive (0.83) affect. In the current sample, the alpha values were 0.94 for negative affect and 0.89 for positive affect.

Intention to drop out of university (Hardre & Reeve, 2003)

Three items were used to measure dropout intention. The response format is a 5-point scale ranging from 1 (Never) to 5 (Very often). We used the Italian translation (Alivernini & Lucidi, 2008) of Hardre and Reeve’s (2003) items with the minor change in wording (“university” instead of “school”) supported by Loscalzo and Giannini (2019b). An example item is “I sometimes consider dropping out of university.” Validity was supported by Vallerand et al. (1997) who reported that prior participant responses consistent with intention to drop out predicted subsequent drop out one year later. In the present sample, the alpha value is 0.85.

Data analysis

IBM SPSS Version 28 (2022) and Mplus Version 8.6 (Muthén & Muthén, 1998–2021) were used in analyses. Measurement models were tested using confirmatory factor analyses (CFAs) with the robust MLR estimator in Mplus. Full information maximum likelihood was used to address missingness and to obtain unbiased parameter estimates. Effects coding constraints were used in the measurement models such that factor loadings for each factor would average to 1.0 and intercepts would average to 0 (Brown, 2015; Little et al., 2006).
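The effects-coding constraints can be written out as a short worked statement. This is a sketch in conventional CFA notation, which is an assumption here (the symbols are not taken from the article): item $i$ on factor $k$ has loading $\lambda_{ik}$ and intercept $\tau_{ik}$, and factor $k$ has $p_k$ indicators.

```latex
% Measurement model for item i loading on factor k (notation assumed)
x_{ik} = \tau_{ik} + \lambda_{ik}\,\xi_k + \varepsilon_{ik}

% Effects-coding identification constraints, applied per factor:
\frac{1}{p_k}\sum_{i=1}^{p_k} \lambda_{ik} = 1,
\qquad
\frac{1}{p_k}\sum_{i=1}^{p_k} \tau_{ik} = 0
```

Under these constraints no single item serves as the marker, so the factor's variance and mean are expressed on a scale that reflects the average of its indicators.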

Evaluating measurement invariance involves a series of model comparisons. Initially, separate analyses are undertaken to evaluate fit for both samples and to make any possible modifications to the measurement model. Configural invariance is tested by estimating a model that allows for freely estimated parameters between samples. Metric invariance is tested by constraining item-to-factor loadings to be invariant between samples and evaluating the extent to which those constraints worsen fit from the configural model. Metric invariance is important because it confirms that the unit of measurement is consistent between samples. Furthermore, metric invariance is necessary for confidence that strengths of association can be compared between groups. Scalar invariance is tested by constraining item intercepts as well as factor loadings to be equivalent between groups. Confidence in inferences based on factor mean comparisons between samples is justified when scalar invariance is supported. Residual invariance is tested by constraining measurement errors associated with the items to be equivalent between groups. Residual invariance is needed for confidence in group differences when tested based on raw scores. Although invariance might be considered as a pass-fail evaluation, often researchers consider partial invariance models in which a relatively small subset of freed parameters is permitted along with other constrained parameters.
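The sequence of nested models described above can be summarized compactly. The notation is an assumption for illustration only: $\Lambda^{(g)}$, $\tau^{(g)}$, and $\Theta^{(g)}$ denote the loading matrix, intercept vector, and residual covariance matrix in group $g$.

```latex
\begin{align*}
x^{(g)} &= \tau^{(g)} + \Lambda^{(g)}\xi^{(g)} + \varepsilon^{(g)},
  \qquad \Theta^{(g)} = \operatorname{Cov}\!\big(\varepsilon^{(g)}\big),
  \qquad g \in \{\text{Italy}, \text{USA}\} \\[4pt]
\text{Configural:} &\quad \text{same item-to-factor pattern; }
  \Lambda^{(g)},\ \tau^{(g)},\ \Theta^{(g)} \text{ free in each group} \\
\text{Metric:} &\quad \Lambda^{(\text{Italy})} = \Lambda^{(\text{USA})} \\
\text{Scalar:} &\quad \text{metric constraints and } \tau^{(\text{Italy})} = \tau^{(\text{USA})} \\
\text{Residual:} &\quad \text{scalar constraints and } \Theta^{(\text{Italy})} = \Theta^{(\text{USA})}
\end{align*}
```

A partial invariance model relaxes the equality for a small subset of elements (for example, two loadings) while keeping the remaining constraints in place.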

Evaluation of model fit was based on Brown (2015) and Hu and Bentler (1999). Generally, good to excellent fit corresponds to a Comparative Fit Index (CFI) in the 0.90-0.95 range, the Root Mean Square Error of Approximation (RMSEA) in the 0.06-range, and the Standardized Root Mean Square Residual (SRMR) in the 0.08-range. However, it should be noted there are no gold standards with regard to fit and inconsistency in fit indices is relatively common (Lai & Green, 2016). Thus, we expected to explore reasons for differences in fit statistics and possible alternative models.

Several sources provided guidance specific to invariance tests. Possible non-invariance might be revealed when comparing more and less constrained models if there is a significant difference based on the Yuan-Bentler chi-square difference test. However, that test also is susceptible to significance with larger sample sizes. As alternatives, Cheung and Rensvold (2002) concluded that differences in CFI could be consistent with non-invariance and Kang et al. (2016) concluded that McDonald’s noncentrality index (MNCI; McDonald & Marsh, 1990) was especially sensitive to measurement noninvariance under a variety of study conditions. Therefore, we also considered that a decrease of more than 0.010 in CFI (Cheung & Rensvold, 2002) or in MNCI (Kang et al., 2016), that is, a ΔCFI or ΔMNCI more negative than −0.010, might detect non-invariance in the model comparisons. In instances when some indices support invariance and others suggest non-invariance, we weighted ΔMNCI over the other statistics (Kang et al., 2016).
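The ΔMNCI decision rule can be illustrated with a minimal Python sketch. It assumes the commonly cited form of McDonald's index, exp(−0.5 × (χ² − df) / (N − 1)); software may differ in details such as the sample-size term or robust scaling. The function names and the fit values below are hypothetical and are not the values reported in Table 2.

```python
import math


def mnci(chi2: float, df: int, n: int) -> float:
    """McDonald's noncentrality index (assumed form using N - 1)."""
    return math.exp(-0.5 * (chi2 - df) / (n - 1))


def flags_noninvariance(delta: float, cutoff: float = -0.010) -> bool:
    """True when the drop in the index is larger than the cutoff allows."""
    return delta < cutoff


# Hypothetical chi-square values for two nested models (illustration only).
configural = mnci(chi2=467.10, df=152, n=892)
metric = mnci(chi2=520.00, df=164, n=892)
delta_mnci = metric - configural  # constrained minus less constrained

print(f"MNCI configural = {configural:.3f}, metric = {metric:.3f}")
print(f"Delta MNCI = {delta_mnci:.3f}, flagged = {flags_noninvariance(delta_mnci)}")
```

The same comparison applies to ΔCFI by substituting the CFI values of the two nested models for the MNCI values.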

Finally, for the Italian sample, we evaluated the internal consistency of the OLBI-S subscales and their concurrent, convergent and discriminant validity with Pearson and Spearman correlations.

Results

Preliminary analyses

The USA students were significantly older than the Italian students, t(881) = 2.16, p = .031, although the effect size for that difference was small, d = 0.15. There were proportionally more women in the Italian sample than in the USA sample, χ2 (1, N = 888) = 35.69, p < .001. We also examined missingness at the item level for the OLBI-S. Little’s Missing Completely at Random test (Little, 1988) was not significant, χ2 (105, N = 892) = 103.87, p = .513. Thus, the missing data mechanism was at least missing at random and possibly missing completely at random.
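As a rough check on the age comparison, the effect size can be approximated from the summary statistics reported for the two samples. This sketch assumes the pooled-standard-deviation form of Cohen's d and uses the full sample sizes; the reported degrees of freedom suggest a few participants had missing ages, so the result is only approximate. The function name is illustrative.

```python
import math


def cohens_d(m1: float, sd1: float, n1: int, m2: float, sd2: float, n2: int) -> float:
    """Cohen's d for two independent groups using the pooled SD."""
    pooled_var = ((n1 - 1) * sd1**2 + (n2 - 1) * sd2**2) / (n1 + n2 - 2)
    return (m2 - m1) / math.sqrt(pooled_var)


# Age summaries from the Participants section (Italy first, then USA).
d = cohens_d(m1=22.67, sd1=4.10, n1=339, m2=23.47, sd2=5.96, n2=553)
print(f"d = {d:.2f}")
```

The value agrees with the small effect size reported above when rounded to two decimals.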

Separate sample analyses of the measurement model

Prior to measurement invariance analyses, the original measurement structure for the 16-item, two-factor OLBI-S was initially tested separately for the Italian and USA samples. Model fit was not uniformly strong across indices for the Italian sample: χ2 (103, N = 339) = 317.27, p < .0001, CFI = 0.851, RMSEA = 0.078 (0.069, 0.088), and SRMR = 0.068. Likewise, model fit indices for the USA sample also revealed inconsistencies: χ2 (103, N = 553) = 397.13, p < .0001, CFI = 0.838, RMSEA = 0.072 (0.064, 0.079), and SRMR = 0.063. In reviewing model parameter estimates, the same Disengagement indicator (“Questo è l’unico campo di studi che posso immaginare di studiare” – “This is the only field of study that I can imagine myself doing”) had a conspicuously low standardized factor loading in both samples (0.12 for the Italian sample and 0.19 for the USA sample). Remaining items had standardized factor loadings ranging from approximately 0.40 to 0.70 in both samples.
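The degrees of freedom and RMSEA point estimates reported for these models can be reproduced with simple arithmetic. The sketch below rests on stated assumptions: a simple-structure CFA with a mean structure, no correlated residuals, and two effects-coding constraints per factor; and the conventional RMSEA formula sqrt(max(χ² − df, 0) / (df × (N − 1))). Software may use N rather than N − 1 or apply robust corrections, so small discrepancies are possible. Function names are illustrative.

```python
import math


def cfa_df(n_items: int, n_factors: int = 2) -> int:
    """Degrees of freedom for a simple-structure CFA with means (assumed setup)."""
    # Observed moments: unique variances/covariances plus item means.
    moments = n_items * (n_items + 1) // 2 + n_items
    # Loadings, intercepts, and residual variances (one of each per item).
    free = 3 * n_items
    # Factor variances, factor covariances, and factor means.
    free += n_factors + n_factors * (n_factors - 1) // 2 + n_factors
    # Effects coding removes one loading and one intercept per factor.
    free -= 2 * n_factors
    return moments - free


def rmsea(chi2: float, df: int, n: int) -> float:
    """Conventional RMSEA point estimate."""
    return math.sqrt(max(chi2 - df, 0.0) / (df * (n - 1)))


print(cfa_df(16), cfa_df(15), cfa_df(14))
print(round(rmsea(317.27, 103, 339), 3))  # Italian sample, 16 items
print(round(rmsea(397.13, 103, 553), 3))  # USA sample, 16 items
```

The same functions can be applied to the reduced 15-item and 14-item models to check their reported degrees of freedom and RMSEA values.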

Although prior studies on college students did not identify problems with that item, in a study of Portuguese and Brazilian employees, Sinval et al.’s (2019) psychometric analyses also failed to support that item. Our interpretation of the item phrasing is that students (or workers) experiencing high Disengagement could reasonably rate strong agreement or disagreement with that item and that a high agreement might also indicate high engagement (instead of disengagement) for some workers/students who are fully convinced about the choice of their job/major. Thus, we tested an alternative measurement model after excluding that item from the analyses. For the Italian sample, fit for the alternative model still revealed a relatively lower CFI, but RMSEA and SRMR were more reasonable: χ2 (89, N = 339) = 277.25, p < .0001, CFI = 0.865, RMSEA = 0.079 (0.069, 0.090), and SRMR = 0.066. The USA sample had similar results regarding fit indices: χ2 (89, N = 553) = 324.64, p < .0001, CFI = 0.864, RMSEA = 0.069 (0.061, 0.077), and SRMR = 0.061.

To further investigate possible misfit, we examined correlation residuals within each sample based on the 15-item measurement model (Kline, 2016). Although several items in both samples had relatively high correlation residuals, one of the items stood out from the others in both samples: “Dopo una lezione o aver studiato, generalmente mi sento sfinito e stanco” – “After a class or after studying, I usually feel worn out and weary” (Exhaustion indicator). Modification indices (MIs) based on the separate sample analyses suggested that fit could be improved by allowing a large number of correlations between residuals involving that item with other items. Because we could generate no clear or testable theory for allowing those correlated residuals, we took a more parsimonious approach and tested another model that excluded that item. Fit results for the Italian sample were: χ2 (76, N = 339) = 196.51, p < .0001, CFI = 0.901, RMSEA = 0.068 (0.057, 0.080), and SRMR = 0.060. Fit for the USA sample was: χ2 (76, N = 553) = 270.59, p < .0001, CFI = 0.871, RMSEA = 0.068 (0.059, 0.077), and SRMR = 0.056. After exploring several explanations for the low CFI compared with other indices, and with generally good factor loadings and fit results for the two factors across samples, it seemed less likely that model misfit was the reason for inconsistency in indices and instead the causes might be attributable to data distributions and the fit function (Lai & Green, 2016). Therefore, the 14-item version of the OLBI-S was advanced for measurement invariance analyses.

Measurement invariance

Fit statistics for the different measurement invariance models appear in Table 2. As anticipated from the separate sample analyses, the configural invariance or baseline model did not provide a uniformly good fit for the data across indices (e.g., CFI = 0.884, RMSEA = 0.068). The metric invariance model revealed a worsening of fit compared with the configural model (e.g., ΔMNCI = -0.012). We compared the unstandardized factor loadings for the two groups based on the configural model. Two items had conspicuously different loadings between the samples: “Mi sento sempre più impegnato nei miei studi” – “I feel more and more engaged in my studies” (reverse Disengagement indicator) and “Dopo una lezione o aver studiato, ho abbastanza energie per le mie attività di svago” – “After a class or after studying, I have enough energy for my leisure activities” (reverse Exhaustion indicator). Because modification indices reported by Reis et al. (2015) also detected those items as targets for adjustment in their scalar invariance test, we tested a partial invariance model in which loadings for those items were allowed to be freely estimated between the samples. Partial metric invariance was supported with that minor adjustment (see Table 2). Scalar invariance was not supported after constraining item intercepts for the two samples, with the exception of the two items with freed loadings in the partial metric model (see Table 2). Based on a comparison of intercepts, the largest discrepancies between the samples occurred for a reverse Disengagement item: “Mi sento sempre più impegnato nei miei studi” – “I feel more and more engaged in my studies,” and for a reverse Exhaustion item: “Dopo una lezione o aver studiato, ho abbastanza energie per le mie attività di svago” – “After a lesson or studying, I have enough energy for my leisure activities.” In both cases, the Italian sample tended to rate those items higher than did the USA sample. 
After allowing those intercepts to be freely estimated, partial scalar invariance still could not be supported (i.e., ΔMNCI = -0.074). Four other intercepts indicated a systematic tendency for the USA sample to rate the items higher than the Italian sample. However, even after allowing those intercepts to be freely estimated, partial scalar invariance still could not be supported (i.e., ΔMNCI = -0.019). Invariance testing was discontinued at that point. The results regarding scalar invariance meant that factor mean comparisons should not be conducted.

Because results supported partial metric invariance, we could evaluate the similarity of correlations (covariances) between the Disengagement and Exhaustion factor for the two groups. Based on the partial metric invariance model, the estimated factor correlations between Disengagement and Exhaustion for the Italian sample (ρ = 0.693) and the USA sample (ρ = 0.689) were highly similar. In general, students’ ratings of disengagement also predicted their ratings for exhaustion, though the two factors were not isomorphic. Additional tests of a single factor measurement structure for the OLBI-S, or of a model in which the factor correlation was constrained to 1.0, produced significantly worse-fitting models than when allowing for the two-factor structure and an estimated correlation between factors.

Internal reliability and concurrent, convergent and discriminant validity in Italian students

First, we calculated the internal reliability of the Italian OLBI-S subscales, and we found good Omega values for both the 7-item subscales: 0.80 (Disengagement) and 0.81 (Exhaustion).
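For readers unfamiliar with omega, a minimal sketch of one common formulation is given here. It assumes a unidimensional congeneric model with standardized loadings, uncorrelated residuals, and a factor variance of 1, so that omega = (Σλ)² / ((Σλ)² + Σθ). The loadings below are hypothetical and are not the estimates from this study; the article does not state which omega variant was computed.

```python
def mcdonald_omega(loadings: list[float]) -> float:
    """Omega from standardized loadings (residual variance = 1 - loading^2)."""
    sum_loadings_sq = sum(loadings) ** 2
    residuals = sum(1.0 - lam**2 for lam in loadings)
    return sum_loadings_sq / (sum_loadings_sq + residuals)


# Hypothetical 7-item subscale with equal standardized loadings of 0.60.
example_loadings = [0.60] * 7
omega = mcdonald_omega(example_loadings)
print(f"omega = {omega:.3f}")
```

With loadings in the 0.40 to 0.70 range, a 7-item subscale can plausibly reach values near 0.80 under this formula, which is in the neighborhood of the coefficients reported above.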

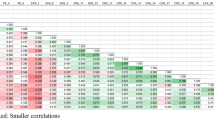

We analyzed convergent validity with Humphrey’s single-item measure of burnout. Based on Spearman correlations, the association with both OLBI-S subscales was strong and positive: rs = 0.47 for Disengagement and 0.57 for Exhaustion. To evaluate concurrent and discriminant validity, we used Pearson correlations between the two 7-item OLBI-S subscales, the UWES-9 S subscales and total score, the PANAS scales, and drop-out intention. More specifically, we used the UWES-9 S and the PANAS positive subscale to evaluate discriminant validity, while we used the PANAS negative subscale and drop-out intention to test concurrent validity. Table 2 shows the results of these analyses. In sum, both OLBI-S subscales showed good concurrent and discriminant validity, as they were negatively correlated with all the UWES-9 S subscales, the UWES-9 S total score, and PANAS positive affect (absolute values ranging between 0.45 and 0.65). Moreover, the OLBI-S subscales were significantly and positively correlated with PANAS negative affect and drop-out intention (values ranging between 0.49 and 0.57).

Discussion

Burnout is a construct that has been widely analyzed in the work context; however, in recent years, burnout also has received attention in the academic field. There also has been increasing attention to cross-cultural aspects of burnout, which might be especially relevant for comparisons between countries with different emphases on, and structures for, education. Therefore, to add to these research agendas, we aimed to analyze the psychometric properties and cross-cultural invariance of the student version of the Oldenburg Burnout Inventory (OLBI-S; Reis et al., 2015), a measure of two academic burnout dimensions: exhaustion and disengagement.

As a first step, we determined that the original 16-item 2-factor structure did not fit uniformly well in either the Italian or the USA sample. In particular, one item seemed to be a very poor indicator of disengagement, in line with Sinval et al.’s (2019) study in which that item was a problematic indicator for Portuguese and Brazilian employees and with our speculation that this might be an ambiguous item, which might be associated with higher scores for both highly disengaged and engaged workers/students. Deleting this item from the measurement model led to an improvement for both the Italian and the USA samples. An indicator of the exhaustion factor also had some problematic psychometric features – as modification indices suggested allowing many correlations between residuals involving that item with other items – and overall model fit was improved by eliminating that item as well. Although we were reluctant to drop an indicator that refers to feeling “worn out and weary” for an exhaustion factor, results in this study did not suggest that eliminating that item resulted in dire consequences for the exhaustion factor. The remaining exhaustion items seemed to adequately represent the construct psychometrically (good factor loadings and reasonable model fit) and conceptually. As examples, several remaining items referred to energy levels or used other terms to capture a sense of fatigue consistent with burnout, such as students feeling “emotionally drained” while studying, or needing “more time than in the past in order to relax and feel better” after having a class or studying. Based on these results, we recommend using a 14-item OLBI-S version for both the Italian and the USA samples. Our results also highlight the importance of not assuming that the original structure of a scale will perform as intended across all sample comparisons. Therefore, future studies should continue to evaluate the OLBI-S items in cross-cultural comparisons.

That 14-item measurement structure, with another minor adjustment, performed well in the metric invariance tests, providing some confidence in its use for future Italian-USA comparative research on student burnout. Based on other findings, such research could reasonably compare strengths of associations between predictors and outcomes of burnout in the two countries. However, because of the failure to support scalar invariance, unless newer methods are applied (e.g., alignment method; Asparouhov & Muthén, 2014), comparisons of burnout levels should not yet be undertaken in such studies. Instead, additional research might examine potential factors contributing to bias in item responses between the samples. For example, there seemed to be the potential for item content issues and possibly item phrasing issues that might produce systematic differences in the pattern of response ratings between the samples. The two largest gaps in intercepts were for items that suggested Italians may manage their cognitive and energy resources better than Americans when engaged in academic work. However, the next set of large differences between item intercepts occurred for a set of reverse-coded items, with no clear pattern in the content that might help explain the discrepancies. Indeed, with one exception, the reverse-coded items revealed a systematic tendency to elicit lower ratings from the Italians compared with the Americans. Perhaps such findings support other calls to avoid the use of reverse-worded items, especially in cross-cultural studies involving translated scales (Weijters et al., 2013; Wong et al., 2003), even if one of the strengths of the OLBI-S – as reported by Demerouti et al. (2010) – is precisely the use of items that are phrased both positively and negatively.

We extended the psychometric analysis of the Italian OLBI-S by analyzing the internal reliability and the convergent, concurrent, and discriminant validity of the 14-item version. Interestingly, in line with previous studies (Dolan et al., 2015; Hansen & Girgis, 2010; Rohland et al., 2004), Humphrey’s single-item measure correlated more strongly with the OLBI-S Exhaustion scale than with the Disengagement scale. Hence, from a methodological standpoint, we suggest using the OLBI-S to analyze both aspects of burnout. However, if scholars are interested only in emotional exhaustion and have concerns about survey length, they might consider using Humphrey’s single-item measure of burnout.

From a preventive standpoint, the single-item scale might be used as a brief periodic screener of students’ level of burnout, with a subsequent administration of the OLBI-S in case of high scores on the screener. This might be especially important during academic periods associated with high stress, such as examinations or the completion of thesis requirements. Moreover, considering the adverse health and academic effects that many students experienced during the Covid-19 pandemic (Loscalzo & Giannini, 2021; Loscalzo et al., 2021), it might be valuable to screen college students for the possible presence of academic burnout, aiming to provide them with interventions to reduce burnout, restore their well-being, and facilitate a return to their academic path.
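The two-stage screening idea described above can be sketched in code. This is only an illustration under stated assumptions: the cutoff value, the function names, and the example data are hypothetical, and the present study does not report a validated cutoff for the single-item screener.

```python
from typing import Dict, List

# Hypothetical cutoff for the single-item screener; the study reports no cutoff.
DEFAULT_CUTOFF = 3


def needs_full_olbi_s(screener_score: int, cutoff: int = DEFAULT_CUTOFF) -> bool:
    """Return True when the screener score meets or exceeds the cutoff."""
    return screener_score >= cutoff


def select_for_follow_up(
    screener_scores: Dict[str, int], cutoff: int = DEFAULT_CUTOFF
) -> List[str]:
    """Return the sorted IDs of students who should receive the full OLBI-S."""
    return sorted(
        student_id
        for student_id, score in screener_scores.items()
        if needs_full_olbi_s(score, cutoff)
    )


if __name__ == "__main__":
    # Made-up screener responses, for illustration only.
    scores = {"s01": 1, "s02": 4, "s03": 3, "s04": 2}
    print(select_for_follow_up(scores))
```

Keeping the cutoff as a parameter reflects that any threshold would need to be established empirically before such a procedure is used in practice.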

Among the limitations of this study, we evaluated convergent validity using Humphrey’s (2013) single-item indicator of burnout and did not include longer burnout scales. Hence, future studies could deepen the analysis of convergent and discriminant validity by including other burnout measures. Moreover, there is a higher prevalence of female and third-year psychology students in the Italian sample, and a low representation of first- and second-year students in the USA sample. The cross-sectional design of the current study also limits understanding directions of effects and provides no information about trajectories of change in burnout over time. To address such limitations and because academic burnout is a risk factor for student retention, we suggest future studies focus on students early in the first years of their academic studies and conduct subsequent follow-up evaluations of the students. Doing so would have the practical benefit of early detection of at-risk students and the methodological and substantive benefits of tracking student burnout across multiple years of academic experiences.

Despite the current study’s limitations, we presented a thorough analysis of the factor structure of the OLBI-S in both Italian and US students, highlighting a 14-item, 2-factor model for both samples. Model fit and related psychometric results suggested removing one item from the scoring of each subscale: an item from the Disengagement subscale (“This is the only field of study that I can imagine myself doing”) and an item from the Exhaustion subscale (“After a class or after studying, I usually feel worn out and weary”). Fortunately, the OLBI-S had enough other items measuring those two dimensions that breadth of construct representation did not suffer, and psychometrics improved, with that minor revision. Although results supported the use of the 14-item OLBI-S in correlation- or regression-based comparisons between Italian and USA students, in line with the results from measurement invariance analyses, results did not support the use of factor means to compare levels of academic burnout between Italian and USA students. Moreover, concerning Italy, our convergent validity findings revealed expected associations between the two burnout factors based on the 14-item OLBI-S and Humphrey’s one-item burnout scale. In fact, those analyses could also be interpreted as supporting the single-item indicator as a reasonable alternative to measure academic burnout (emotional exhaustion), especially in screening efforts and in larger projects involving many variables and scales.

In conclusion, our study provided evidence for using the OLBI-S in future studies aiming to analyze academic burnout in Italian and US students. These studies are critical, considering the academic pressures college students face, which might harm their well-being, academic performance, and eventual ability to contribute meaningfully to the workforce. Moreover, the OLBI-S might be used to screen students for their levels of burnout in order to identify those needing psychological counseling interventions to reduce their burnout and improve their well-being and academic success. It should be noted, however, that while our results supported the use of the 14-item OLBI-S for Italian-USA comparative research regarding burnout predictors and outcomes, we did not find support for comparing burnout levels across the two countries. Hence, we recommend that future studies further examine the factor structure and invariance of the 14-item and possibly other OLBI-S item sets across different countries.

Finally, we also suggest that analyzing burnout in the academic context might require developing an operationalization of the construct specific to student life instead of adapting the concept (and instruments) from the work context to the study area. As a similar example, Loscalzo and Giannini (2017, 2018) pointed out the importance of acknowledging the differences between the work and academic contexts and cautioned against transferring workaholism concepts and measures to the academic area. However, to gather preliminary evidence concerning academic burnout in Italian and US students, the reduced 14-item, 2-factor version might be helpful because, to the best of our knowledge, it is currently the only instrument cross-validated in these two countries. Moreover, further factor-analytic work on the OLBI-S might also indicate which items/facets of organizational burnout should be deleted or modified when referring to academic burnout.

Data Availability

The datasets are available from the corresponding author on reasonable request.

References

Akungu, O. A., Chien, K. P., & Chen, S. (2021). The longitudinal interaction of adolescents’ interest in physical education, school burnout, and disturbed sleep related to social media and phone use. Current Psychology, 42, 3725–3722. https://doi.org/10.1007/s12144-021-01709-4.

Alivernini, F., & Lucidi, F. (2008). The academic motivation scale (AMS): Factorial structure, invariance, and validity in the Italian context. TPM-Testing Psychometrics Methodology in Applied Psychology, 15(4), 211–220.

Allen, M. S., Iliescu, D., & Greiff, S. (2022). Single item measures in psychological science: A call to action. European Journal of Psychological Assessment, 38(1), 1–5. https://doi.org/10.1027/1015-5759/a000699.

Asparouhov, T., & Muthén, B. (2014). Multiple-group factor analysis alignment. Structural Equation Modeling, 21(4), 495–508. https://doi.org/10.1080/10705511.2014.919210.

Brown, T. A. (2015). Confirmatory factor analysis for applied research (2nd ed.). The Guilford Press.

Campos, J. A. D. B., Carlotto, M. S., & Marôco, J. (2012a). Oldenburg Burnout Inventory–student version: Cultural adaptation and validation into Portuguese. Psicologia: Reflexão e Crítica, 5, 709–718. https://doi.org/10.1590/S0102-79722012000400010.

Campos, J. A. D. B., Marôco, J., & Carlotto, M. S. (2012b). Copenhagen Burnout Inventory – student version: Adaptation and transcultural validation for Portugal and Brazil. Psicologia: Reflexão e Crítica, 26(1), 87–97. https://doi.org/10.1590/S0102-79722013000100010.

Chen, F. F. (2008). What happens if we compare chopsticks with forks? The impact of making inappropriate comparisons in cross-cultural research. Journal of Personality and Social Psychology, 95(5), 1005–1018. https://doi.org/10.1037/a0013193.

Cheung, G. W., & Rensvold, R. B. (2002). Evaluating goodness-of-fit indexes for testing measurement invariance. Structural Equation Modeling: A Multidisciplinary Journal, 9(2), 233–255. https://doi.org/10.1207/S15328007SEM0902_5.

Demerouti, E. (1999). Burnout: Eine Folge konkreter Arbeitsbedingungen bei Dienstleistungs- und Produktionstätigkeiten [Burnout: A consequence of specific working conditions among human service and production tasks]. Frankfurt/Main: Lang.

Demerouti, E., Mostert, K., & Bakker, A. B. (2010). Burnout and work engagement: A thorough investigation of the independency of both constructs. Journal of Occupational Health Psychology, 15(3), 209–222. https://doi.org/10.1037/a0019408.

Dolan, E. D., Mohr, D., Lempa, M., Joos, S., Fihn, S. D., Nelson, K. M., & Helfrich, C. D. (2015). Using a single item to measure burnout in primary care staff: A psychometric evaluation. Journal of General Internal Medicine, 30(5), 582–587. https://doi.org/10.1007/s11606-014-3112-6.

Gil-Monte, P. R., & Manzano-García, G. (2015). Psychometric properties of the Spanish Burnout Inventory among staff nurses. Journal of Psychiatric and Mental Health Nursing, 22(10), 756–763. https://doi.org/10.1111/jpm.12255.

Halbesleben, J. R. B., & Demerouti, E. (2005). The construct validity of an alternative measure of burnout: Investigating the English translation of the Oldenburg Burnout Inventory. Work & Stress, 19(3), 208–220. https://doi.org/10.1080/02678370500340728.

Hansen, V., & Girgis, A. (2010). Can a single question effectively screen for burnout in Australian cancer care workers? BMC Health Services Research, 10, 341–344. https://doi.org/10.1186/1472-6963-10-341.

Hardre, P. L., & Reeve, J. (2003). A motivational model of rural students’ intentions to persist in, versus drop out of, high school. Journal of Educational Psychology, 95(2), 347–356. https://doi.org/10.1037/0022-0663.95.2.347.

Hofstede Insights (2021). Country comparison: Italy and the United States. Downloaded from https://www.hofstede-insights.com/country-comparison/italy,the-usa/.

Hu, L. T., & Bentler, P. M. (1999). Cutoff criteria for fit indexes in covariance structure analysis: Conventional criteria versus new alternatives. Structural Equation Modeling: A Multidisciplinary Journal, 6(1), 1–55. https://doi.org/10.1080/10705519909540118.

Humphrey, K. R. (2013). Using a student-led support group to reduce stress and burnout among BSW students. Social Work with Groups, 36(1), 73–84. https://doi.org/10.1080/01609513.2012.712905.

Kang, Y., McNeish, D. M., & Hancock, G. R. (2016). The role of measurement quality on practical guidelines for assessing measurement and structural invariance. Educational and Psychological Measurement, 76(4), 533–561. https://doi.org/10.1177/0013164415603764.

Kline, R. B. (2016). Principles and practice of structural equation modeling (4th ed.). Guilford Press.

Lai, K., & Green, S. B. (2016). The problem with having two watches: Assessment of fit when RMSEA and CFI disagree. Multivariate Behavioral Research, 51(2–3), 220–239. https://doi.org/10.1080/00273171.2015.1134306.

Lee, J., Puig, A., Lea, E., & Lee, S. M. (2013). Age-related differences in academic burnout of Korean adolescents. Psychology in the Schools, 50(10), 1015–1031. https://doi.org/10.1002/pits.21723.

Little, R. J. A. (1988). A test of missing completely at random for multivariate data with missing values. Journal of the American Statistical Association, 83(404), 1198. https://doi.org/10.1080/01621459.1988.10478722

Little, T. D., Slegers, D. W., & Card, N. A. (2006). A non-arbitrary method of identifying and scaling latent variables in SEM and MACS models. Structural equation modeling, 13(1), 59–72. https://doi.org/10.1207/s15328007sem1301_3.

Loscalzo, Y., & Giannini, M. (2017). Studyholism or study addiction? A comprehensive model for a possible new clinical condition. In A. M. Columbus (Ed.), Advances in psychology research, volume 125 (pp. 19–37). New York: Nova Publishers.

Loscalzo, Y., & Giannini, M. (2018). Response to: Theoretical and methodological issues in the research on study addiction with relevance to the debate on conceptualising behavioural addictions: Atroszko (2018). Psychiatria i Psychologia Kliniczna, 18(4), 426–430. https://doi.org/10.15557/PiPK.2018.0051.

Loscalzo, Y., & Giannini, M. (2019a). Study engagement in Italian University students: A confirmatory factor analysis of the Utrecht Work Engagement scale – student version. Social Indicators Research, 142, 845–854. https://doi.org/10.1007/s11205-018-1943-y.

Loscalzo, Y., & Giannini, M. (2019b). Heavy Study Investment in Italian College students. An analysis of Loscalzo and Giannini’s (2017) Studyholism comprehensive model. Frontiers in Psychiatry, 10, 489. https://doi.org/10.3389/fpsyt.2019.00489.

Loscalzo, Y., & Giannini, M. (2021). Covid-19 outbreak: What impact of the lockdown on college students’ academic path and attitudes toward studying? BPA - Applied Psychology Bulletin (Bollettino Di Psicologia Applicata), 291, 63–74. https://doi.org/10.26387/bpa.291.5.

Loscalzo, Y., Ramazzotti, C., & Giannini, M. (2021). Studyholism e Study Engagement in relazione alle conseguenze sullo studio dovute alla pandemia da Covid-19. Uno studio pilota quali-quantitativo su studenti universitari [Studyholism and Study Engagement in relation to the consequences of the Covid-19 pandemic on studying. A quali-quantitative pilot study on university students]. Counseling, 14(2), 79–91. https://doi.org/10.14605/CS1422106.

Manzano-García, G., & Ayala, J. C. (2017). Insufficiently studied factors related to burnout in nursing: Results from an e-Delphi study. PLoS ONE, 12(4), e0175352. https://doi.org/10.1371/journal.pone.0175352.

Maslach, C., & Jackson, S. E. (1981). The measurement of experienced burnout. Journal of Occupational Behavior, 2(2), 99–113. https://doi.org/10.1002/job.4030020205.

Maslach, C., Jackson, S. E., & Leiter, M. P. (1996). Burnout inventory manual (3rd ed.). Palo Alto, CA: Consulting Psychologist Press.

Maslach, C., & Leiter, M. P. (2017). Understanding burnout: New models. In C. L. Cooper, & J. C. Quick (Eds.), The handbook of stress and health: A guide to research and practice (pp. 36–56). Wiley Blackwell. https://doi.org/10.1002/9781118993811.ch3.

McDonald, R. P., & Marsh, H. W. (1990). Choosing a multivariate model: Noncentrality and goodness of fit. Psychological Bulletin, 107(2), 247–255. https://doi.org/10.1037/0033-2909.107.2.247.

Molodynski, A., Lewis, T., Kadhum, M., Farrell, S. M., Lemtiri Chelieh, M., Falcão De Almeida, T., Masri, R., Kar, A., Volpe, U., Moir, F., Torales, J., Castaldelli-Maia, J. M., Chau, S. W. H., Wilkes, C., & Bhugra, D. (2021). Cultural variations in wellbeing, burnout and substance use amongst medical students in twelve countries. International Review of Psychiatry, 33(1–2), 37–42. https://doi.org/10.1080/09540261.2020.1738064

Muthén, L. K. & Muthén, B. O. (1998–2021). Mplus user’s guide (8th ed.). Los Angeles, CA: Muthén & Muthén.

Popescu, B., Maricutoiu, L. P., & De Witte, H. (2023). The student version of the Burnout assessment tool (BAT): Psychometric properties and evidence regarding measurement validity on a Romanian sample. Current Psychology. Online advanced publication. https://doi.org/10.1007/s12144-023-04232-w.

Reis, D., Xanthopoulou, D., & Tsaousis, I. (2015). Measuring job and academic burnout with the Oldenburg Burnout Inventory (OLBI): Factorial invariance across samples and countries. Burnout Research, 2, 8–18. https://doi.org/10.1016/j.burn.2014.11.001.

Rohland, B. M., Kruse, G. R., & Rohrer, J. E. (2004). Validation of a single-item measure of burnout against the Maslach Burnout Inventory among physicians. Stress and Health: Journal of the International Society for the Investigation of Stress, 20(2), 75–79. https://doi.org/10.1002/smi.1002.

Schaufeli, W. B., & Bakker, A. (2004). Utrecht Work Engagement Scale: Preliminary manual. Utrecht: Occupational Health Psychology Unit, Utrecht University.

Schaufeli, W. B., Desart, S., & De Witte, H. (2020). Burnout Assessment Tool (BAT) – development, validity, and reliability. International Journal of Environmental Research and Public Health, 17(24), 9495. https://doi.org/10.3390/ijerph17249495.

Schaufeli, W. B., Leiter, M. P., & Maslach, C. (2009). Burnout: 35 years of research and practice. The Career Development International, 14(3), 204–220. https://doi.org/10.1108/13620430910966406.

Schmitt, N., & Ali, A. A. (2015). The practical importance of measurement invariance. In C. E. Lance & R. J. Vandenberg (Eds.), More statistical and methodological myths and urban legends (pp. 327–346). New York, NY, US: Routledge/Taylor & Francis Group.

Sinval, J., Queirós, C., Pasian, S., & Marôco, J. (2019). Transcultural adaptation of the Oldenburg Burnout Inventory (OLBI) for Brazil and Portugal. Frontiers in Psychology, 10, 338. https://doi.org/10.3389/fpsyg.2019.00338.

Sun, R., Yang, H. M., Chau, C. T. J., Cheong, I. S., & Wu, A. M. S. (2022). Psychological empowerment, work addiction, and burnout among mental health professionals. Current Psychology. Online advanced publication. https://doi.org/10.1007/s12144-022-03663-1.

Terracciano, A., McCrae, R. R., & Costa, P. T. Jr. (2003). Factorial and construct validity of the Italian positive and negative affect schedule (PANAS). European Journal of Psychological Assessment, 19(2), 131–141. https://doi.org/10.1027/1015-5759.19.2.131.

Tomczak, M. T., & Kulikowski, K. (2023). Toward an understanding of occupational burnout among employees with autism - the Job Demands-Resources theory perspective. Current Psychology. Online advanced publication. https://doi.org/10.1007/s12144-023-04428-0.

Trockel, M., Bohman, B., Lesure, E., Hamidi, M. S., Welle, D., Roberts, L., & Shanafelt, T. (2018). A brief instrument to assess both burnout and Professional Fulfillment in Physicians: Reliability and validity, including correlation with self-reported medical errors, in a sample of Resident and practicing Physicians. Academic Psychiatry, 42(1), 11–24. https://doi.org/10.1007/s40596-017-0849-3.

Vallerand, R. J., Fortier, M. S., & Guay, F. (1997). Self-determination and persistence in a real-life setting: Toward a motivational model of high school dropout. Journal of Personality and Social Psychology, 72, 1161–1176. https://doi.org/10.1037//0022-3514.72.5.1161.

Watson, D., Clark, L. A., & Tellegen, A. (1998). Development and validation of brief measure of positive and negative affect: The PANAS scales. Journal of Personality and Social Psychology, 54, 1063–1070. https://doi.org/10.1037/0022-3514.54.6.1063.

Weijters, B., Baumgartner, H., & Schillewaert, N. (2013). Reversed item bias: An integrative model. Psychological Methods, 18(3), 320–334. https://doi.org/10.1037/a0032121.

Wickramasinghe, N. D., Dissanayake, D. S., & Abeywardena, G. S. (2023). Student burnout and work engagement: A canonical correlation analysis. Current Psychology, 42, 7549–7556. https://doi.org/10.1007/s12144-021-02113-8.

Wong, N., Rindfleisch, A., & Burroughs, J. E. (2003). Do reverse-worded items confound measures in cross-cultural consumer research? The case of the material values Scale. Journal of Consumer Research, 30(1), 72–91. https://doi.org/10.1086/374697.

Zhang, Y., Gan, Y., & Cham, H. (2007). Perfectionism, academic burnout and engagement among Chinese college students: A structural equation modeling analysis. Personality and Individual Differences, 43(6), 1529–1540. https://doi.org/10.1016/j.paid.2007.04.010.

Zhen, B., Yao, B., & Zhou, X. (2022). Acute stress disorder and job burnout in primary and secondary school teachers during the COVID-19 pandemic: The moderating effect of sense of control. Current Psychology. Online advanced publication. https://doi.org/10.1007/s12144-022-03134-7.

Zhu, Q., Cheong, Y., Wang, C., & Tong, C. (2023). The impact of maternal and parental parenting styles and parental involvement on Chinese adolescents’ academic engagement and burnout. Current Psychology, 42, 2827–2840. https://doi.org/10.1007/s12144-021-01611-z.

Funding

The authors have no relevant financial or non-financial interests to disclose.

Author information

Contributions

All authors contributed to the study conception and design. Data analyses were performed by Yura Loscalzo and Kenneth G. Rice. The first draft of the manuscript was written by Yura Loscalzo and Kenneth G. Rice and all authors commented on previous versions of the manuscript. All authors read and approved the final manuscript.

Ethics declarations

Ethics approval and consent to participate

The study was approved by the research ethics committees of the University of Florence and Georgia State University, and it was performed in accordance with the ethical standards laid down in the 1964 Declaration of Helsinki and its later amendments or comparable ethical standards.

Informed consent

Informed consent was obtained from all individual participants included in the study.

About this article

Cite this article

Loscalzo, Y., Rice, K.G. & Giannini, M. Psychometric properties of the Italian oldenburg burnout inventory (student version) and measurement invariance with the USA. Curr Psychol 43, 8241–8251 (2024). https://doi.org/10.1007/s12144-023-05020-2

DOI: https://doi.org/10.1007/s12144-023-05020-2