Abstract

Film clips are widely used to evoke emotional responses in the laboratory. We found, however, that different fields tend to select emotional film clips using different approaches: psychologists focus more on the discreteness of emotions, whereas computer scientists focus more on their valence and arousal. These different concerns lead to distinct film selection methods, which may challenge the validity of emotional databases and hinder communication between disciplines. In recent years, a hybrid discrete–dimensional model of emotion has been developed. Building on this hybrid theory, we attempted to synthesize the diverse approaches and to develop a possible unified criterion for emotional film selection across disciplines. Seventy participants evaluated 28 film clips intended to elicit four basic emotions (i.e., anger, sadness, fear, and happiness). We examined both discrete and dimensional indicators and applied a new integrative film selection criterion. Compared with the discrete model or the dimensional model alone, the hybrid model yielded the most reasonable film clip selection outcomes, and 12 film clips were recommended for inducing strong and discrete emotions. These findings may inform further research on emotion both theoretically and methodologically.


Emotion, as a psychophysiological state (Cacioppo & Gardner, 1999), has long been a popular research topic in psychology and human development (Deng et al., 2017). Over the past two decades, various emotional stimulus databases have been developed to elicit emotion in laboratory settings. Films offer several advantages for inducing emotions (Gerrards-Hesse et al., 1994; Westermann et al., 1996): they capture both auditory and visual attention (Rottenberg et al., 2007; Simons et al., 2003; Uhrig et al., 2016), the emotions they elicit have high ecological validity (Deng et al., 2017; Gross & Levenson, 1995; Zupan & Eskritt, 2020), and they make it possible to explore the time course of an emotional state (Carvalho et al., 2012; Kring & Gordon, 1998). Consequently, a large number of emotional film databases have been developed in various fields, including psychology (Gilman et al., 2017), biomedicine, and computer science (Wang et al., 2022).

Multidisciplinary studies contribute to a deeper understanding of emotion. Nonetheless, the standards for constructing emotional film databases have not been unified across disciplines. The two predominant approaches to modeling emotion are discrete and dimensional (Harmon-Jones et al., 2017). We have found that different fields tend to conceptualize emotion and select film clips using different approaches: psychologists tend to pay more attention to the discreteness of the target emotion, whereas biomedical and computer scientists are more concerned with its valence and arousal. These different concerns have led to distinct film selection methods and data presentation norms, which may challenge the validity of emotional databases and hinder communication between disciplines. In this study, we synthesized these diverse approaches and developed a possible unified criterion for building emotional film databases across disciplines.

Emotional film databases in different fields

Current research includes hundreds of individual studies on the construction or application of emotional film databases (see reviews in Fernández-Aguilar et al., 2019; Wang et al., 2021), and the number of such studies continues to increase (Behnke et al., 2022; Correa et al., 2021; Saganowski et al., 2022; Zupan & Eskritt, 2020). Although more recent studies (Behnke et al., 2022; Saganowski et al., 2022) have included more psychological and physiological signals in their datasets, they have neither discussed nor improved the method of emotional film selection. Most studies in psychology have aimed to build more databases that contain a richer range of emotions and are based on larger samples, whereas most studies in computer science have been more concerned with including physiological indicators in film databases. Meanwhile, the method of constructing emotional film databases, in particular the selection of film clips, which largely determines whether emotions are effectively elicited, has not kept pace with the development of emotion research.

Development of emotional film databases in psychology

Gross and Levenson (1995) first standardized the process of building a film database from a psychological perspective. To evaluate the film clips, they combined two criteria, the hit rate and the intensity, into a success index. On the basis of this index, they developed a set of 16 film clips that elicited eight emotional states: amusement, contentment, anger, disgust, fear, sadness, neutrality, and surprise. This film set has been widely used in emotion-related studies (Dunn & Schweitzer, 2005; Herring et al., 2011; Nasoz et al., 2004; Rottenberg et al., 2002).

Following Gross and Levenson's approach, much progress has been made in subsequent studies, such as eliciting more categories of positive emotion (Ge et al., 2019; Schaefer et al., 2010a, b) or mixed emotion (Samson et al., 2016), developing more effective neutral film clips (Jenkins & Andrewes, 2012), making the stimuli suitable for different age-groups or people with different cultural backgrounds (Deng et al., 2017; Hewig et al., 2005), establishing a continuously updated database (Zupan & Eskritt, 2020), and conducting research online (Gilman et al., 2017; Wang et al., 2021).

With the development of physiological measurement in recent decades, an increasing number of emotion studies have emphasized the importance of physiological indicators in the construction of emotional databases (Carvalho et al., 2012; Deng et al., 2017; Ge et al., 2019; Zupan & Eskritt, 2020) because psychophysiological responses are primary components of the emotional response (McHugo et al., 1982; Rottenberg et al., 2007; Scott, 1930; Sternbach, 1962; Witvliet et al., 1995). Carvalho et al. (2012) first added physiological indicators (skin conductance response [SCR] and heart rate [HR]) when developing an emotional film database, thereby extending previous studies. Deng et al. (2017) also measured physiological responses (HR and respiration rate [RR]) when developing a new emotional film database for Chinese participants. However, the authors of both studies acknowledged that these simple physiological indicators were not sufficient to represent the complex physiological patterns of the nervous system. More advanced physiological measurements, such as event-related potentials (ERPs) or functional magnetic resonance imaging (fMRI), would be needed to measure emotion (Carvalho et al., 2012; Ge et al., 2019).

Development of emotional film databases in computer science

With the development of artificial intelligence (AI) in the twenty-first century, emotion has become a key topic in many fields, especially computer science and biomedical engineering. Many emotional databases contain not only the emotional stimuli and self-reported data but also the peripheral or central physiological data induced by the stimuli. Examples developed in computer science include DEAP (Database for Emotion Analysis Using Physiological Signals; Koelstra et al., 2011), MAHNOB-HCI (a multimodal database for affect recognition and implicit tagging; Soleymani et al., 2011), DECAF (a MEG-based Multimodal Database for Decoding Affective Physiological Responses; Abadi et al., 2015), and AMIGOS (a Dataset for Multimodal Research of Affect, Personality Traits, and Mood on Individuals and Groups; Correa et al., 2021). The DEAP database contains the electroencephalogram (EEG), galvanic skin response (GSR), blood volume pressure, respiration rate, skin temperature (ST), and electrooculogram (EOG) recordings of 32 viewers watching 40 one-minute music video clips that induced different emotions (Koelstra et al., 2011). The MAHNOB-HCI database synchronizes recordings of face video, audio signals, eye gaze data, and physiological signals (electrocardiogram [ECG], GSR, respiration amplitude [RA], ST, and EEG) from 27 participants who watched 20 emotional movie or online clips in one experiment and 28 images and 14 short videos in another (Soleymani et al., 2011). The DECAF database comprises the affective responses of 30 participants to 36 emotional movie clips and 40 one-minute music video segments (those used in DEAP), with synchronously recorded magnetoencephalogram (MEG), near-infrared (NIR) facial videos, and horizontal electrooculogram (hEOG), ECG, and trapezius electromyogram (tEMG) peripheral physiological responses (Abadi et al., 2015). The AMIGOS database investigates the affect, personality traits, and mood of individuals and groups using short and long emotional videos, with physiological signals (including EEG, ECG, and GSR) recorded by wearable sensors (Correa et al., 2021). These databases thus provide a wide range of physiological indicators.

Diverse film selection methods in different fields

Despite psychologists' strong interest in advanced physiological indicators, they rarely use emotional film databases from computer science (Abdulrahman & Baykara, 2021; Rahman et al., 2021). One possible reason is that the criteria for including and excluding emotional film clips differ between psychology and computer science, so the validity of an emotional film database is not guaranteed across disciplines. These differences in film selection methods might also stem from the disparate ways in which researchers conceptualize emotion (Gabert-Quillen et al., 2015), namely, through the discrete or the dimensional approach, the two predominant ways of modeling emotion (Harmon-Jones et al., 2017).

Film selection based on the discrete model of emotion

The discrete model of emotion holds that the emotional space is composed of a circumscribed set of distinct emotions (Barrett et al., 2007) and that basic emotions are recognized universally (Ekman & Friesen, 1975). The most popular discrete model is the six basic emotions model, which categorizes emotions into fear, anger, disgust, sadness, happiness, and surprise (Ekman & Friesen, 1975). Most emotional film databases in psychology are based on discrete frameworks (Gabert-Quillen et al., 2015; Ge et al., 2019; Jenkins & Andrewes, 2012; Liang et al., 2013; Philippot, 1993; Schaefer et al., 2010a, b; Xu et al., 2010).

Within discrete frameworks, the intensity and the discreteness of the elicited response are critical indicators for selecting effective emotional film clips (Gross & Levenson, 1995). Intensity is operationalized as the mean rating of the target emotion, and discreteness is operationalized by an idiographic hit rate index: the percentage of participants who reported feeling the target emotion at least one point more intensely than every nontarget emotion. A success index is obtained by adding the normalized scores of intensity and discreteness, which allows the most successful film clip for a particular target emotion to be identified (Ge et al., 2019; Gilman et al., 2017; Gross & Levenson, 1995).
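The discrete indicators just described can be sketched in a few lines of Python. This is a minimal illustration under our own assumptions, not the exact computation used by Gross and Levenson (1995) or any later study: each participant's ratings for one clip are stored as a dictionary from emotion name to score, "at least one point more intensely" is read as a difference of at least 1 from every nontarget rating, hit rates are returned as proportions rather than percentages, and "normalized" is taken to mean z-scores computed across the clips that share a target emotion. The function names are hypothetical.

```python
from statistics import mean, pstdev


def hit_rate(ratings, target):
    """Proportion of participants whose target rating exceeds every
    nontarget rating by at least one point (idiographic hit rate)."""
    hits = 0
    for person in ratings:  # person: dict mapping emotion -> rating
        others = [score for emo, score in person.items() if emo != target]
        if all(person[target] - score >= 1 for score in others):
            hits += 1
    return hits / len(ratings)


def intensity(ratings, target):
    """Mean rating of the target emotion across participants."""
    return mean(person[target] for person in ratings)


def z_scores(values):
    """Standardize values across clips; all zeros if there is no spread."""
    m, s = mean(values), pstdev(values)
    return [0.0 if s == 0 else (v - m) / s for v in values]


def success_index(clips, target):
    """clips: dict clip name -> list of per-participant rating dicts,
    all targeting the same emotion. Returns clip name -> success index."""
    names = list(clips)
    z_hit = z_scores([hit_rate(clips[n], target) for n in names])
    z_int = z_scores([intensity(clips[n], target) for n in names])
    return {n: h + i for n, h, i in zip(names, z_hit, z_int)}
```

Sorting the clips of one target category by their `success_index` values, from largest to smallest, gives the within-category ranking used to identify the most successful clip.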

Film selection based on the dimensional model of emotion

In contrast to the discrete model, the dimensional model of emotion assumes that emotions are organized along generic, continuous dimensions (Davidson, 1992, 1993; Lang et al., 1993; Plutchik, 1980; Russell, 1980; Watson et al., 1988). Arousal (the degree to which an emotion feels active) and valence (the degree to which an emotion feels pleasant) are the two dimensions most commonly used in emotion studies (Bradley & Lang, 2000; Gray, 2001). Film clip databases based on the dimensional model are less common in psychology but popular in computer science. Affective computing (endowing computers with human-like capabilities to observe, interpret, and generate affect), in particular, relies on such databases, and several have been established for this purpose (Abadi et al., 2015; Baveye et al., 2013, 2015; Correa et al., 2021; Erdem et al., 2015; Koelstra et al., 2011; Malandrakis et al., 2011; Soleymani et al., 2011).

Within dimensional frameworks, emotional film clips are selected according to arousal and valence. In DECAF, for example, all valence (V) and arousal (A) annotations were normalized, outliers were discarded, the mean (μ) and standard deviation (σ) of the inlier V-A ratings were computed for each film clip, and the clips were plotted in a scatter diagram; the film clips close to the corners of each quadrant of the V-A two-dimensional space were then selected (Abadi et al., 2015).

Film selection based on the hybrid discrete–dimensional model of emotion

As emotion research has advanced, a hybrid discrete–dimensional model has been developed (Barrett, 2006; Christie & Friedman, 2004; Gabert-Quillen et al., 2015; Harmon-Jones et al., 2017; Russell, 2003). Although no unified definition has yet emerged, the core idea of the hybrid framework is to combine the discrete and dimensional models. By analogy with the wave–particle duality of light, we speculate that emotion has a dimensional–discrete duality. Harmon-Jones and colleagues (2017) used birds as an analogy: birds can be categorized discretely into species but can also be described along dimensions (e.g., body size or the distance they fly), and both descriptions provide valuable information. Likewise, both the discrete and the dimensional perspectives are valuable because each contributes new scientific evidence. Christie and Friedman (2004) located discrete emotions within dimensional affective space using both self-report and autonomic nervous system (ANS) variables, and their results supported the hybrid model. Additionally, some recent studies (Mihalache & Burileanu, 2021; Trnka et al., 2021) unified the discrete and continuous paradigms of emotion by directly linking them through a mapping. Evaluating both discrete and dimensional indicators makes communication possible between researchers who conceptualize emotion differently and could facilitate work with varying research agendas (Gilman et al., 2017). Thus, for closer interdisciplinary communication and deeper exploration of emotion, database norms based on the hybrid discrete–dimensional framework should be promoted.

Current study

To the best of our knowledge, although some previous studies have reported both discrete and dimensional indicators (Gabert-Quillen et al., 2015; Ge et al., 2019), these two kinds of indicators have not yet been combined to select emotional film clips. In this study, we constructed an integrated method of emotional film selection that provides new indicators and comprehensive selection criteria. We drew on both Abadi and colleagues' (2015) film clip selection method, which is based on valence and arousal, and Gross and Levenson's (1995) film clip comparison method, which is based on intensity and discreteness, and we made three improvements to Abadi and colleagues' method. First, they normalized all valence and arousal annotations and took zero as the neutral state. However, this zero corresponds to a truly neutral state only when the film clips as a whole are evenly distributed in the V-A two-dimensional space, which is usually not guaranteed. We therefore used an absolute neutral point to avoid bias introduced by the composition of the initial film set. Second, they treated participants whose ratings were less similar to those of others as outliers and discarded all of their data, whereas we regarded individual differences as normal and identified outliers only on the basis of unexpected problems that arose while conducting the experiment. Third, they selected film clips in the V-A space subjectively, without clear, quantitative selection criteria; we introduced a new indicator, absolute emotional distance (AED), which combines valence and arousal into a single quantitative index. Additionally, whereas Gross and Levenson (1995) used nine-point Likert scales, we used continuous scales to improve measurement resolution.
We believe this study is the first to apply a hybrid discrete–dimensional model of emotion in practice to the selection of emotional materials, and it thus extends this theory of emotion.
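The first of these improvements, using an absolute neutral point, can be made concrete with a small numerical sketch. Each clip is summarized by hypothetical mean valence and arousal ratings on the 1–9 scales. Centering on the mean of the current clip set stands in for normalization around zero; dividing by a positive standard deviation would not change any signs, so it is omitted. The quadrant labels assume valence on the horizontal axis and arousal on the vertical axis. All clip names and values are illustrative, not data from DECAF or from this study.

```python
from statistics import mean


def quadrant(v, a):
    """Quadrant of a point in V-A space (valence on x, arousal on y)."""
    if v > 0 and a > 0:
        return "I"
    if v < 0 and a > 0:
        return "II"
    if v < 0 and a < 0:
        return "III"
    if v > 0 and a < 0:
        return "IV"
    return "on an axis"


def relative_quadrants(clips):
    """Center on the mean of the current clip set, so 'zero' depends on
    which clips happen to be included."""
    mv = mean(v for v, _ in clips.values())
    ma = mean(a for _, a in clips.values())
    return {name: quadrant(v - mv, a - ma) for name, (v, a) in clips.items()}


def absolute_quadrants(clips, neutral=(5.0, 5.0)):
    """Center on the fixed midpoint of the 1-9 scales."""
    nv, na = neutral
    return {name: quadrant(v - nv, a - na) for name, (v, a) in clips.items()}


# Hypothetical mean (valence, arousal) ratings for a positively skewed set.
clip_set = {"calm clip": (6.0, 3.0), "happy clip": (8.0, 6.5)}
print(relative_quadrants(clip_set))  # calm clip lands in III (unpleasant, low arousal)
print(absolute_quadrants(clip_set))  # calm clip stays in IV (pleasant, low arousal)
```

Because this small set is skewed toward pleasant clips, its mean valence (7.0) sits above the scale midpoint, and the mildly pleasant calm clip appears unpleasant under relative centering. The absolute neutral point does not move when clips are added or removed.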

Along with the proposed integrated film selection method, we provide an example of hybrid discrete–dimensional film selection by examining a film clip set from the field of computer science, DECAF (Abadi et al., 2015). This study may be among the first to contribute to interdisciplinary work on emotional film database construction and to promote the formation of multidisciplinary norms for database construction.

To evaluate the effectiveness of the film clips in more detail, we also examined variables that may affect the perception of stimuli (i.e., gender, familiarity, liking, and avoidance behavior). Because the effectiveness and efficiency of materials for eliciting emotional responses can be influenced by culture and language (Liang et al., 2013; Mesquita & Haire, 2004), this study also extends prior work at the cultural level by examining a Western emotional film database in a Chinese sample.

Methods

Participants

We recruited participants online from the school social forum through convenience sampling. A total of 70 undergraduate and graduate students (35 males and 35 females) whose average age was 22.7 years (range = 17–30, SD = 2.5) took part in this experiment. The inclusion criteria of the participants were as follows: (a) normal color vision and normal or corrected-to-normal visual acuity; (b) no identified hearing impairment; (c) native Chinese speakers; and (d) no history of neurological or mental disorders. All participants provided signed informed consent before the formal experiment. They were told that their responses were completely anonymous and that they could drop out of the experiment whenever they wanted. Participants were compensated with 20 yuan (about US$3) for their time.

Materials

In this study, we used the film clip set from DECAF, with authorization from the DECAF authors. We selected this set because DECAF is a typical database widely used in computer science (see review in Wang et al., 2022) and because its authors also attempted to classify the film clips by discrete emotion (Abadi et al., 2015). DECAF includes 36 movie clips and two practice clips, which reportedly elicit nine different emotions: amusement, fun, happiness, excitement, anger, disgust, fear, sadness, and shock (Abadi et al., 2015). The emotion category of each film clip was determined by the emotion tag most frequently reported by participants. These tags, however, do not reveal how effectively the film clips induced these specific emotions.

We did not include all 36 DECAF movie clips. We focused only on basic emotions, because these emotions have been recognized across cultures (Ekman & Friesen, 1975; Laukka & Elfenbein, 2021; Sauter et al., 2010) and because these primary states are often encountered in everyday interactions (Nowicki & Duke, 1994; Rothman & Nowicki Jr., 2004; Zupan & Babbage, 2017). According to the six basic emotions model (Ekman & Friesen, 1975), the basic emotions are happiness, anger, sadness, fear, disgust, and surprise. For ethical reasons, disgust was not included. In addition, no DECAF film clip targets surprise; although DECAF includes shock, this emotion is not the same as surprise. We therefore included 24 DECAF film clips: nine happy, four angry, seven sad, and three fearful film clips, plus one practice film clip.

Because DECAF contains no neutral clips and has far fewer angry and fearful clips than happy and sad ones, we also added four film clips from Gabert-Quillen et al. (2015): two neutral film clips, one angry film clip, and one fearful film clip. Neutral film clips (i.e., calmness) are considered to carry no valence and to represent a state of relaxation (Hewig et al., 2005).

Thus, we included a total of 28 film clips in this study. The clips ranged from 51 to 191 s in length, with an average length of 97 s. They were excerpted from feature-length films in English with Mandarin subtitles and were commercially available for purchase. See Table 1 for detailed descriptions of the clips.

Measures

In this study, we used two main scales: the Self-Assessment Manikin and the Emotion Assessment Scale.

In the Self-Assessment Manikin (SAM; Lang, 1980), valence (from an unhappy to a happy figure) and arousal (from a calm figure to an excited heart-exploding figure) are presented in graphic images. Participants are asked to rate the two aspects on a continuous scale from 1 to 9 according to their feelings elicited by each film clip. SAM has been widely used in research on emotion and also has been regarded as the main method of validation for emotional databases (Backs et al., 2005; Carvalho et al., 2012).

The Emotion Assessment Scale assesses the intensity of different emotions after each film clip. It was modified from the emotion evaluation scale of Ge et al. (2019) and follows a traditional self-report approach to measuring emotion (Ekman et al., 1980; Gross & Levenson, 1995; Philippot, 1993). This study included four items from the scale: happiness, sadness, anger, and fear. Participants rated each item on a continuous scale from 1 (“very weak and almost absent”) to 9 (“very strong”). Ratings on this scale served as the basis for analyzing the intensity and discreteness of the emotions elicited by the film clips and for calculating the success index (Gross & Levenson, 1995; Liang et al., 2013).

We also collected demographic information, including gender, age, and grade. Following previous studies (Carvalho et al., 2012; Gabert-Quillen et al., 2015) and the Post Film Questionnaire (Rottenberg et al., 2007), we also included items measuring familiarity, liking, and avoidance behavior. Familiarity was rated on a continuous scale from 1 (“not familiar at all and have never watched it”) to 9 (“so familiar with the film clip that I know its exact source”), and liking on a continuous scale from 1 (“dislike very much”) to 9 (“like very much”). For avoidance behavior, participants answered a yes/no question for each film clip: “Have you closed your eyes or looked away during the clip presentation?” Participants who reported avoidance behavior may have missed a vital part of the film clip.

Procedure

We conducted the experiment in groups in behavioral laboratories in November 2021. Each group included one to three participants, who were seated in separate small rooms. During the experiment, participants wore headphones, watched the film clips, and rated their emotional states on computers. They could neither hear nor see one another and responded independently. Participants performed the task under dim lighting at a comfortable temperature and were seated at a 90-degree angle facing the screen. The experimental program was written in Python 3.8 with PyQt5 and packaged as an executable (.exe) file.

Before the experiment, participants were told in general terms that this was a study on emotion and that a series of video clips would be displayed, and they were asked to watch the videos attentively. They were informed that some film clips might contain potentially shocking scenes and that, if they found the films too distressing or felt uncomfortable, they could look away, close their eyes, or withdraw from the experiment at any time (Deng et al., 2017). They were also informed that their names would not be recorded, to ensure anonymity, and that the data would be used for scientific research only. After providing written informed consent, participants watched a video of the experimental instructions, which had been synthesized by computer in advance to avoid experimenter effects. As instructed, participants reported their emotional reactions to each film clip by completing the questionnaire immediately after watching it. They responded by dragging a slider for each item, with the left end scoring 1 and the right end scoring 9; the current score was displayed to the right of the item in real time. Participants were encouraged to answer all questions according to their actual feelings rather than what they expected their feelings or general mood should be when watching the video (Philippot, 1993; Schaefer et al., 2010a, b).

In the formal trials, 27 film clips (nine eliciting happiness, five anger, four fear, seven sadness, and two calmness) were divided into two comparable groups to avoid the fatigue that can result from excessive experimental time (Samson et al., 2016). Group A included 14 film clips (four eliciting happiness, three anger, two fear, three sadness, and two calmness), and group B included 15 film clips (five eliciting happiness, two anger, two fear, four sadness, and two calmness). The calm film clips (the baseline videos) and the practice film clip were presented in both groups. The grouping of videos was completed before participants were recruited; the specific grouping is shown in Table 2. Participants were randomly assigned to one of the two groups.
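One simple way to combine random assignment with the gender balance reported for each group is stratified randomization: shuffle participants within each gender and alternate them between the two groups. The sketch below is an assumption about how this could be done, not a description of the authors' actual assignment procedure; the participant IDs and the `assign_groups` helper are hypothetical.

```python
import random


def assign_groups(participants, seed=None):
    """participants: list of (participant_id, gender) tuples.
    Returns a dict participant_id -> 'A' or 'B' with genders balanced."""
    rng = random.Random(seed)
    by_gender = {}
    for pid, gender in participants:
        by_gender.setdefault(gender, []).append(pid)

    assignment = {}
    for ids in by_gender.values():
        rng.shuffle(ids)  # random order within this gender stratum
        first = rng.choice("AB")  # randomize which group gets any odd participant
        second = "B" if first == "A" else "A"
        for i, pid in enumerate(ids):
            assignment[pid] = first if i % 2 == 0 else second
    return assignment
```

Within each gender, the two groups differ in size by at most one participant. Because recruitment is usually sequential, a real study might instead fill gender-specific slots as participants arrive; the alternating scheme above is only one option.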

Participants first viewed the practice film clip. The formal film clips were then presented in a pseudo-random order, such that clips of the same category were never presented consecutively, to avoid potential order effects. In each trial, participants viewed a film clip lasting roughly 1–3 min and then had 30 s for self-report. To reduce carryover from one film clip to the next, participants completed two arithmetic questions as a distraction task after the questionnaire, followed by 30 s of rest. Each film clip was viewed by more than 30 participants, a number similar to that in previous studies (Ge et al., 2019; Gross & Levenson, 1995), which we used to determine our sample size. Participant gender was balanced in each group.
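The ordering constraint (no two consecutive clips of the same category) can be met by simple rejection sampling: shuffle, and reshuffle until no adjacent pair shares a category. This is one possible implementation, assumed for illustration; the source does not say how the pseudo-random orders were generated. The clip labels below follow group A's composition but are hypothetical identifiers.

```python
import random


def pseudo_random_order(clips, rng=None, max_tries=10_000):
    """clips: list of (clip_id, category) tuples.
    Returns a shuffled copy in which no two consecutive clips share a category."""
    if rng is None:
        rng = random.Random()
    order = list(clips)
    for _ in range(max_tries):
        rng.shuffle(order)
        if all(a[1] != b[1] for a, b in zip(order, order[1:])):
            return order
    raise RuntimeError("no valid order found; one category may dominate the set")


# Group A composition: 4 happiness, 3 anger, 2 fear, 3 sadness, 2 calmness.
counts = {"happiness": 4, "anger": 3, "fear": 2, "sadness": 3, "calmness": 2}
group_a = [(f"{cat}_{i + 1}", cat) for cat, n in counts.items() for i in range(n)]
order = pseudo_random_order(group_a, rng=random.Random(42))
```

Because every shuffle is uniform and invalid ones are simply discarded, each valid ordering is equally likely. With group A's category counts, valid orders are common enough that only a handful of shuffles are usually needed.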

Statistical analysis

We conducted the data analysis in five stages using SPSS 25 (IBM).

Stage 1: Screen for outliers. If, in a given trial, a participant reported avoidance behavior and the scale items remained at the default value of 1, we concluded that the participant had not watched the film clip and treated the trial data as outliers. By this criterion, we discarded the data from three trials. Because of computer problems, two participants completed half of the trials from group A and half from group B rather than one complete group; their data appeared similar to those of other participants and were therefore retained.
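The Stage 1 rule is a conjunction of two conditions, which a short filter makes explicit. We assume here that "at the default value of 1" means that every scale item in the trial was left untouched at 1; the trial dictionary layout and the field names `avoided` and `ratings` are hypothetical.

```python
def is_unwatched_trial(trial, default=1.0):
    """Stage 1 rule (as we read it): the participant reported looking away
    or closing their eyes AND every rating was left at the slider default."""
    return trial["avoided"] and all(r == default for r in trial["ratings"])


def screen_trials(trials):
    """Split trials into (kept, discarded) according to the Stage 1 rule."""
    kept, discarded = [], []
    for trial in trials:
        (discarded if is_unwatched_trial(trial) else kept).append(trial)
    return kept, discarded
```

Avoidance alone does not remove a trial: a participant who looked away briefly but still rated the clip is retained, which matches the stated aim of treating individual differences as normal rather than as outliers.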

Stage 2: Examine the effectiveness of emotional film clips based on the dimensional model. We calculated the mean (M) and standard deviation (SD) of valence and arousal for each film clip and then computed (M − 5)/SD for valence (V) and arousal (A). This transformation standardizes the two coordinates and moves the absolute neutral point (5, 5) of the raw scales to the origin (0, 0) of the V-A two-dimensional space. We drew the labeled V-A diagram using MATLAB R2020a. To assess how effectively each film clip induced emotion, we calculated the absolute emotional distance (AED), that is, the distance between each clip's point in the V-A diagram and the origin (0, 0).
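In formula form, V = (M_V − 5)/SD_V, A = (M_A − 5)/SD_A, and AED = √(V² + A²). A minimal Python sketch follows. It assumes the sample standard deviation (n − 1 denominator, the usual SPSS default) and takes per-participant ratings for a single clip as plain lists; the function names are illustrative, since the study itself used SPSS and MATLAB.

```python
from math import hypot
from statistics import mean, stdev

NEUTRAL = 5.0  # absolute neutral point: midpoint of the 1-9 SAM scales


def standardized_coordinate(ratings):
    """(M - 5) / SD for one dimension (valence or arousal) of one clip."""
    return (mean(ratings) - NEUTRAL) / stdev(ratings)


def absolute_emotional_distance(valence_ratings, arousal_ratings):
    """Return (V, A, AED): the clip's standardized coordinates and their
    Euclidean distance from the origin (0, 0) of the V-A space."""
    v = standardized_coordinate(valence_ratings)
    a = standardized_coordinate(arousal_ratings)
    return v, a, hypot(v, a)


v, a, aed = absolute_emotional_distance([6, 7, 8], [4, 5, 6])
```

For the toy ratings in the last line, valence has M = 7 and SD = 1, and arousal sits exactly at the neutral midpoint, so V = 2, A = 0, and AED = 2. Because each coordinate is divided by its SD, a clip rated consistently lies farther from the origin than a clip with the same mean but more disagreement among viewers.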

Stage 3: Examine and rank the emotional film clips based on the discrete model. For each film clip, we calculated the mean and standard deviation of the intensity of each emotion, as well as the hit rate. For each film clip, we performed a within-subject repeated measures analysis of variance (ANOVA) on the intensity ratings, with emotion as a four-level factor (happiness, anger, fear, and sadness). We tested the differences between target and nontarget emotional intensities with Bonferroni-corrected post hoc comparisons. The level of statistical significance was set at p < 0.05. Finally, within each group of film clips sharing the same target emotion, we calculated each clip's success index by summing its normalized hit rate and its normalized target emotion intensity, which ranked the clips from the discrete perspective.
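For readers reproducing Stage 3 outside SPSS, the F statistic of a one-way repeated measures ANOVA can be computed directly from the partition of sums of squares. The sketch below is our own minimal version: it returns F and its degrees of freedom but not the p value (which comes from the F distribution, e.g., in SPSS or SciPy), and it applies no sphericity correction, since the source does not state whether one was used. The Bonferroni helper multiplies each p value by the number of comparisons and caps the result at 1.

```python
from statistics import mean


def rm_anova_f(data):
    """One-way repeated measures ANOVA.
    data: list of rows, one per participant, each with one rating per
    condition (e.g., happiness, anger, fear, sadness for a single clip).
    Returns (F, df_condition, df_error)."""
    n, k = len(data), len(data[0])
    grand = mean(x for row in data for x in row)
    cond_means = [mean(row[j] for row in data) for j in range(k)]
    subj_means = [mean(row) for row in data]

    ss_total = sum((x - grand) ** 2 for row in data for x in row)
    ss_cond = n * sum((m - grand) ** 2 for m in cond_means)
    ss_subj = k * sum((m - grand) ** 2 for m in subj_means)
    ss_error = ss_total - ss_cond - ss_subj  # condition x participant residual

    df_cond, df_error = k - 1, (k - 1) * (n - 1)
    f = (ss_cond / df_cond) / (ss_error / df_error)
    return f, df_cond, df_error


def bonferroni(p_values, m=None):
    """Bonferroni-adjusted p values; m defaults to the number of tests."""
    m = len(p_values) if m is None else m
    return [min(1.0, p * m) for p in p_values]
```

With four emotions there are six pairwise comparisons in total, so `bonferroni(p, m=6)` would mirror a correction over all pairs, whereas `m=3` would correct only the three target-versus-nontarget contrasts; which family the original analysis used is not stated.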

Stage 4: Combine the discrete and dimensional indicators to select film clips. A clip was recommended only if it met all of the following criteria: (a) an AED greater than 1, a threshold derived from the results of this study; (b) a hit rate above 25% (i.e., the chance probability of hitting the target emotion among four emotions); (c) a target emotion intensity greater than 5 (i.e., the midpoint of the nine-point scale); and (d) intensities of all nontarget emotions below 3, as recommended by previous studies (e.g., Ge et al., 2019).
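Because the four Stage 4 criteria are simple thresholds, they can be written as a single filter. The sketch below is a hypothetical encoding: the `ClipSummary` record and its field names are ours, hit rates are proportions (0.25 = 25%), and the function reports which criteria a clip fails so that each exclusion can be traced back to a dimensional or a discrete indicator.

```python
from dataclasses import dataclass
from typing import List, Tuple


@dataclass
class ClipSummary:
    name: str
    aed: float                   # absolute emotional distance (Stage 2)
    hit_rate: float              # proportion of hits, 0-1 (Stage 3)
    target_intensity: float      # mean target-emotion rating, 1-9
    nontarget_intensities: Tuple[float, ...]  # means of nontarget emotions


def failed_criteria(clip: ClipSummary) -> List[str]:
    """Return the Stage 4 criteria the clip fails; empty means recommended."""
    failed = []
    if not clip.aed > 1.0:
        failed.append("AED <= 1")
    if not clip.hit_rate > 0.25:
        failed.append("hit rate <= 25%")
    if not clip.target_intensity > 5.0:
        failed.append("target intensity <= 5")
    if not all(x < 3.0 for x in clip.nontarget_intensities):
        failed.append("a nontarget intensity >= 3")
    return failed
```

A clip is recommended only when `failed_criteria` returns an empty list. The criteria apply to emotional clips; neutral clips, which are meant to sit near the origin, would need a separate rule.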

Stage 5: Investigate the influence of demographic variables. For each film clip, we conducted univariate between-subjects ANOVAs with gender as the independent variable and arousal, valence, target emotion intensity, and hit rate as dependent variables. We also calculated, for each film clip, the Pearson correlations of familiarity and liking with arousal, valence, target emotion intensity, and hit rate.
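Both Stage 5 statistics reduce to short formulas. The sketch below computes a Pearson correlation and a one-way between-subjects F; with two gender groups, this F equals the square of the pooled-variance t statistic. It is a standard-library-only illustration with hypothetical function names; p values are not computed here and would come from standard software such as SPSS.

```python
from math import sqrt
from statistics import mean


def pearson_r(x, y):
    """Pearson correlation between two equal-length sequences."""
    mx, my = mean(x), mean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / sqrt(sxx * syy)


def oneway_between_f(groups):
    """One-way between-subjects ANOVA. groups: list of lists of scores
    (e.g., [male_ratings, female_ratings]). Returns (F, df_between, df_within)."""
    all_scores = [x for g in groups for x in g]
    grand = mean(all_scores)
    group_means = [mean(g) for g in groups]
    ss_between = sum(len(g) * (m - grand) ** 2 for g, m in zip(groups, group_means))
    ss_within = sum((x - m) ** 2 for g, m in zip(groups, group_means) for x in g)
    df_between = len(groups) - 1
    df_within = len(all_scores) - len(groups)
    f = (ss_between / df_between) / (ss_within / df_within)
    return f, df_between, df_within
```

For one clip, `pearson_r(familiarity, valence)` would correlate each participant's familiarity rating with the same participant's valence rating, and `oneway_between_f([male_arousal, female_arousal])` would test the gender effect on arousal.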

Results

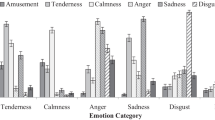

Dimensional indicators: valence, arousal, and AED

The means (M) and standard deviations (SD) of valence and arousal for each film clip are given in Table 3, and a V-A two-dimensional scatterplot is shown in Fig. 1. Quadrant I, high in both arousal and valence, included four films that elicited happiness. Quadrant II, high in arousal but low in valence, included unpleasant film clips eliciting fear, sadness, and anger. Quadrant III included only one film, which elicited anger (Gentleman’s Agreement) and was rated as unpleasant and slightly low in arousal. Quadrant IV, low in arousal but pleasant in valence, included the two neutral films (calmness) and four films that elicited happiness.

Fig. 1 Representation of the 28 film clips in the V-A two-dimensional space. Note: The numbers next to the dots match the IDs of the film clips in Table 1.

The AEDs, that is, the distances of the film clips from the neutral point (0, 0), were all larger than 0.5 except for the practice film clip. The smaller the AED, the closer the emotion induced by a film clip is to the neutral state. On the basis of the scatterplot, we conservatively took an AED larger than 1 to indicate that the elicited emotion was relatively strong. By this criterion, five film clips (Sad 3 [Up (part II)]; Happy 2 [August Rush], 7 [Life Is Beautiful (part I)], 8 [Slumdog Millionaire], and 9 [House of Flying Daggers]) are not recommended when intense emotions with strong subjective and physiological responses are required. Searching for Bobby Fischer (Neutral 2) was much closer to a neutral state than Pride and Prejudice (Neutral 1).

Discrete indicators: intensity, discreteness, and success index

The intensity of each emotion and the hit rate for each film clip are shown in Table 4. Repeated-measures ANOVAs with Bonferroni-corrected post hoc comparisons showed that, for all film clips, the intensity of the target emotion was significantly higher than that of the nontarget emotions (p < 0.001). The hit rates of all film clips were well above the 25% chance level (1/4), meaning that each film clip induced its target emotion more often than would be expected by chance. The target emotion intensities were all larger than 5, meaning that each film clip could elicit a strong target emotion. For eight film clips (Sad 3 [Up (part II)], 4 [Life Is Beautiful (part II)], and 7 [Prestige]; Anger 2 [Lagaan], 4 [Crash], and 5 [Gentleman’s Agreement]; and Fear 3 [Psycho] and 4 [The Ring]), however, the intensity of at least one nontarget emotion was higher than 3, suggesting that these film clips elicited mixed emotions and thus had a low level of discreteness. The neutral film clips appeared to induce a relatively high level of happiness.
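The two discrete checks described above (a target intensity above 5 with no nontarget emotion above 3, and a hit rate above the 25% chance level) can be sketched as follows. This section does not restate how the hit rate was scored, so the sketch assumes a common operationalization: a participant counts as a hit when their rating of the target emotion is strictly higher than their ratings of the other three emotions, with ties counted as misses. The data layout and function names are hypothetical.

```python
from statistics import fmean

EMOTIONS = ("anger", "sadness", "fear", "happiness")
CHANCE = 1 / len(EMOTIONS)  # 25% for four emotion categories


def hit_rate(ratings, target):
    """Share of participants whose target rating strictly exceeds every other rating.

    `ratings` is a list of dicts (one per participant) mapping emotion -> intensity.
    Ties with a nontarget emotion count as misses (an assumption).
    """
    hits = sum(
        all(r[target] > r[e] for e in EMOTIONS if e != target) for r in ratings
    )
    return hits / len(ratings)


def discreteness_flags(ratings, target, target_min=5.0, nontarget_max=3.0):
    """Flag a clip as strong (target mean > 5) and/or mixed (any nontarget mean > 3)."""
    means = {e: fmean(r[e] for r in ratings) for e in EMOTIONS}
    strong = means[target] > target_min
    mixed = any(means[e] > nontarget_max for e in EMOTIONS if e != target)
    return {"strong": strong, "mixed": mixed, "means": means}


# Hypothetical ratings for one clip that targets sadness.
clip = [
    {"anger": 1, "sadness": 7, "fear": 2, "happiness": 0},
    {"anger": 4, "sadness": 6, "fear": 1, "happiness": 0},
    {"anger": 6, "sadness": 6, "fear": 0, "happiness": 0},
    {"anger": 5, "sadness": 5, "fear": 1, "happiness": 1},
]
print(hit_rate(clip, "sadness"), hit_rate(clip, "sadness") > CHANCE)
print(discreteness_flags(clip, "sadness"))
```

In this made-up example, the clip passes the intensity and chance checks but is flagged as mixed because mean anger exceeds 3, which is the pattern reported for the eight mixed-emotion clips.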

The success index, which combines intensity and discreteness, was used to rank the film clips within each group. Among the film clips targeting happiness, Up (part I) and Wall-E were the top two; among the film clips targeting sadness, My Girl and Bambi were the top two; among the film clips targeting anger, Gandhi and My Bodyguard were the top two; and among the film clips targeting fear, Black Swan and The Shining were the top two.
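This section does not restate the success-index formula. As a hedged illustration only, the sketch below assumes an index equal to the mean of two within-group z-scores, one for mean target intensity and one for hit rate (used here as the discreteness measure); the index defined in the Methods may weight or define these components differently. Clip names and values are hypothetical.

```python
from statistics import fmean, pstdev


def z_scores(values):
    """Standardize values within a group (population SD)."""
    mean, sd = fmean(values), pstdev(values)
    return [(v - mean) / sd for v in values]


def success_index(clips):
    """clips: dict name -> (mean target intensity, hit rate). Returns name -> index.

    Hypothetical definition: the average of the two within-group z-scores.
    """
    names = list(clips)
    z_intensity = z_scores([clips[n][0] for n in names])
    z_hit = z_scores([clips[n][1] for n in names])
    return {n: (zi + zh) / 2 for n, zi, zh in zip(names, z_intensity, z_hit)}


def rank_clips(clips):
    """Clip names sorted from highest to lowest success index."""
    index = success_index(clips)
    return sorted(index, key=index.get, reverse=True)


# Hypothetical group of clips targeting one emotion.
group = {"clip A": (6.4, 0.85), "clip B": (5.9, 0.70), "clip C": (6.1, 0.95)}
print(rank_clips(group))

# A uniformly weaker copy of the same group ranks identically.
weaker = {n: (i - 3, h / 2) for n, (i, h) in group.items()}
print(rank_clips(weaker))
```

Because every clip is scored relative to its own group, shifting or rescaling all clips in a group leaves the ranking unchanged. Under this assumed definition, the index can order clips but cannot by itself show that any of them is effective.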

Recommended film clips

Applying the film selection criteria described for Stage 4 (see Methods) to both the dimensional and the discrete indicators, we identified 12 film clips that elicit strong and discrete emotions: four happy film clips (1 [Up (part I)], 3 [Truman Show], 4 [Wall-E], and 6 [Remember the Titans (part I)]), four sad film clips (1 [My Girl], 2 [Bambi], 5 [Remember the Titans (part II)], and 6 [Titanic]), two angry film clips (1 [Gandhi] and 3 [My Bodyguard]), and two fearful film clips (1 [The Shining] and 2 [Black Swan]).

We identified eight film clips for use in research on mixed emotions: three sad film clips (3 [Up (part II)], 4 [Life Is Beautiful (part II)], and 7 [Prestige]), three angry film clips (2 [Lagaan], 4 [Crash], and 5 [Gentleman’s Agreement]), and two fearful film clips (3 [Psycho] and 4 [The Ring]). The happy film clip 8 [Slumdog Millionaire] was so close to a neutral state in the V-A two-dimensional space that it was not recommended. The four happy film clips 2 [August Rush], 5 [Love Actually], 7 [Life Is Beautiful (part I)], and 9 [House of Flying Daggers] were in Quadrant IV. Because they were pleasant in valence but low in arousal, a profile that may not be consistent with the definition of happiness, they were considered to elicit other positive emotions, such as tenderness, rather than happiness.
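The recommendation categories above can be summarized as a single decision rule. The sketch below is a reconstruction from the thresholds reported in this Results section (AED above 1, target intensity above 5, no nontarget emotion above 3, and happy clips required to fall in the high-arousal half of the V-A plane), not the authors' Stage 4 procedure verbatim. The order of the checks, the category labels, and the function name are assumptions, and valence and arousal are assumed to be centered so that (0, 0) is neutral.

```python
import math


def classify_clip(target, valence, arousal, target_intensity, max_nontarget,
                  aed_min=1.0, target_min=5.0, nontarget_max=3.0):
    """Assign a clip to a recommendation category (hypothetical check order).

    valence, arousal: centered mean ratings; target_intensity: mean rating of the
    target emotion; max_nontarget: highest mean rating among nontarget emotions.
    """
    if target_intensity <= target_min:
        return "weak target emotion"
    if max_nontarget > nontarget_max:
        return "mixed emotions"
    if target == "happiness" and arousal < 0:
        # Pleasant but low arousal: treated as another positive emotion (e.g., tenderness).
        return "other positive emotion"
    if math.hypot(valence, arousal) <= aed_min:
        return "too close to neutral"
    return "strong and discrete"


# Hypothetical clips: (target, valence, arousal, target intensity, max nontarget).
examples = [
    ("sadness", -1.5, 1.2, 6.0, 2.0),
    ("anger", -1.4, 1.0, 5.8, 3.6),
    ("happiness", 0.9, -0.3, 5.5, 1.0),
    ("happiness", 0.6, 0.2, 5.5, 1.0),
]
for clip in examples:
    print(clip[0], "->", classify_clip(*clip))
```

Placing the mixed-emotion check before the AED check lets a clip such as Up (part II), which the text lists both below the AED threshold and among the mixed-emotion clips, land in the mixed category; other orderings are defensible.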

Demographic factors: gender, liking, and familiarity

Gender differences were not significant for the majority of film clips. The eight exceptions were one angry film clip (2 [Lagaan]), two sad film clips (1 [My Girl] and 6 [Titanic]), one happy film clip (5 [Love Actually]), and all four fearful film clips (1 [The Shining], 2 [Black Swan], 3 [Psycho], and 4 [The Ring]). In response to the angry film clip Lagaan, women reported more anger [F(1, 31) = 4.489, p = 0.042] and a higher level of arousal [F(1, 31) = 5.725, p = 0.023] than men. In response to the two sad film clips, women reported more sadness [F(1, 30) = 7.659, p = 0.010] and a higher level of arousal [F(1, 35) = 4.637, p = 0.038] for My Girl but had a lower hit rate [F(1, 35) = 4.339, p = 0.045] for Titanic. In response to the happy film clip Love Actually, women reported more happiness [F(1, 32) = 5.299, p = 0.028] and more pleasure [F(1, 32) = 11.948, p = 0.002] and had a higher hit rate [F(1, 32) = 5.792, p = 0.022] than men. In response to the four fearful film clips, women reported higher levels of fear [F(1, 31) = 6.942, p = 0.013] and more unpleasantness [F(1, 31) = 6.630, p = 0.015] for The Shining; more unpleasantness [F(1, 33) = 7.451, p = 0.010] for Black Swan; higher levels of fear [F(1, 35) = 4.164, p = 0.049] for Psycho; and more unpleasantness [F(1, 32) = 11.836, p = 0.002], a higher level of arousal [F(1, 32) = 8.126, p = 0.008], and more fear [F(1, 32) = 5.313, p = 0.028] for The Ring.

Liking was significantly correlated with emotional responses for the majority of film clips, the exceptions being one angry film clip (2 [Lagaan]), two sad film clips (1 [My Girl] and 6 [Titanic]), and one fearful film clip (3 [Psycho]). The Pearson correlations of familiarity with arousal, valence, target emotional intensity, and hit rate were not significant for the majority of film clips, the exceptions being three sad film clips (3 [Up (part II)], 4 [Life Is Beautiful (part II)], and 5 [Remember the Titans (part II)]), two fearful film clips (1 [The Shining] and 4 [The Ring]), and five happy film clips (1 [Up (part I)], 3 [Truman Show], 4 [Wall-E], 8 [Slumdog Millionaire], and 9 [House of Flying Daggers]). For more details, see Supplementary Material 1.
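The per-clip correlations in Stage 5 are ordinary Pearson correlations. A minimal pure-Python sketch is shown below, together with the usual t statistic (df = n minus 2) for testing whether the population correlation is zero; `scipy.stats.pearsonr` returns the coefficient and a p-value directly. The function names and the familiarity and arousal values are hypothetical.

```python
import math
from statistics import fmean


def pearson_r(x, y):
    """Pearson product-moment correlation between two equal-length samples."""
    if len(x) != len(y) or len(x) < 3:
        raise ValueError("need two equal-length samples with at least 3 pairs")
    mx, my = fmean(x), fmean(y)
    sxy = sum((a - mx) * (b - my) for a, b in zip(x, y))
    sxx = sum((a - mx) ** 2 for a in x)
    syy = sum((b - my) ** 2 for b in y)
    return sxy / math.sqrt(sxx * syy)


def t_for_r(r, n):
    """t statistic with n - 2 degrees of freedom for H0: rho = 0 (requires |r| < 1)."""
    return r * math.sqrt((n - 2) / (1 - r ** 2))


# Hypothetical familiarity and arousal ratings for one clip.
familiarity = [1, 2, 2, 3, 4, 5, 5, 6]
arousal = [6, 6, 5, 5, 4, 4, 3, 2]
r = pearson_r(familiarity, arousal)
print(round(r, 3), round(t_for_r(r, len(familiarity)), 3))
```

A negative r in this made-up example would correspond to the pattern described in the Discussion, where familiarity dampens responses to negative clips.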

Discussion

The current study developed an integrative emotional film selection method based on the hybrid discrete–dimensional model (Barrett, 2006; Christie & Friedman, 2004; Harmon-Jones et al., 2017) and examined the validity of this method using film clips from previous emotional film databases, mainly DECAF (Abadi et al., 2015; Gabert-Quillen et al., 2015). We provided both discrete and dimensional indicators of emotional responses to the film clips and considered the influence of demographic factors, making it easier for researchers to select emotional films according to their specific needs. A set of emotional film clips was recommended on the basis of both perspectives.

A major contribution of the present work was to demonstrate the validity of the integrative method for selecting emotional film clips. The selection of film clips was based not on the discrete or dimensional indicators alone but on both, and our results showed why this matters. Had we selected the film clips based only on the dimensional indicators (arousal and valence), we would have had to exclude five film clips that were close to the neutral state, and the remaining film clips would all have come from Quadrant I and Quadrant II. Similarly, most researchers in computer science have classified each video into one of the four quadrants of the V-A space, that is, HVHA, HVLA, LVHA, and LVLA (with H, L, A, and V standing for high, low, arousal, and valence, respectively) (e.g., Correa et al., 2021; Koelstra et al., 2011; Soleymani et al., 2011), and have then analyzed the videos from the same quadrant as a single group. Our results showed that the film clips that elicited sadness, anger, and fear were all in the same quadrant. These three emotions are distinct from each other not only in subjective feelings but also in peripheral physiological activity (Ekman et al., 1983; Levenson et al., 1990; Rainville et al., 2006) and central nervous system responses (Schmidt & Trainor, 2001; Vytal & Hamann, 2010). For example, Rainville et al. (2006) found inhibitory excitation in the parasympathetic nervous system when sadness or fear was induced but not when anger was generated. If the different emotions in the same quadrant were analyzed as a whole, much information would be lost.
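The information loss described above can be made concrete. The sketch below assigns each clip the four-way label used in much of the computer-science literature (HVHA, HVLA, LVHA, LVLA) and then lists which target emotions end up sharing a label. It assumes centered ratings split at 0; the function names are hypothetical, and the coordinates are made up, chosen only to mimic the pattern in which the sad, angry, and fearful clips share a quadrant.

```python
from collections import defaultdict


def va_label(valence, arousal):
    """Four-way V-A label, splitting each centered axis at 0 (assumed neutral midpoint)."""
    v = "HV" if valence >= 0 else "LV"
    a = "HA" if arousal >= 0 else "LA"
    return v + a


def emotions_per_label(clips):
    """clips: iterable of (target emotion, valence, arousal).

    Returns label -> set of target emotions that fall under that label.
    """
    groups = defaultdict(set)
    for target, valence, arousal in clips:
        groups[va_label(valence, arousal)].add(target)
    return dict(groups)


# Hypothetical clip means.
clips = [
    ("sadness", -1.5, 1.0),
    ("anger", -1.8, 1.4),
    ("fear", -2.0, 2.1),
    ("happiness", 1.6, 1.2),
]
for label, emotions in sorted(emotions_per_label(clips).items()):
    print(label, sorted(emotions))
```

Pooling the LVHA group treats three physiologically distinct emotions as one class, and an absent label (here LVLA) also exposes gaps in coverage, such as the near-empty Quadrant III.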

If, however, we had selected the film clips relying only on the discrete indicators, all nine happy film clips could have been considered to elicit happiness successfully. In that case, we would not have found that these film clips, initially targeting happiness, need to be further subcategorized into different positive emotions based on high and low arousal. In addition, without considering the V-A two-dimensional space, we would have overlooked the near absence of emotional film clips in Quadrant III, which shows that the current film clip set is biased. This bias has been found in many existing emotional film databases (Deng et al., 2017; Gabert-Quillen et al., 2015; Ge et al., 2019), and attention should be paid to this limitation when using them. Additionally, the success index cannot test the effectiveness of the emotional film clips; it can only rank them. Even in a low-quality film clip set in which none of the film clips elicited strong and discrete emotions, one film clip would still have the highest success index.

This study was the first psychological study to examine an emotional film database from computer science. In computer science, many other emotional databases include both subjective and physiological responses (Wang et al., 2022). Their emotion-eliciting materials need to be retested and expanded in more detail. This study proposed an integrated selection method for performing such a detailed retest and gave an example of the process. This method can be used to develop emotional databases recognized by both psychology and computer science, which may facilitate interactions between the two fields.

On the basis of the results and the film selection criteria, we provided emotional film recommendations. Among the 25 film clips that targeted specific emotions in this study, 12 film clips that met all of the selection criteria were recommended to induce strong and discrete emotions, and eight film clips in which at least one nontarget emotion exceeded the threshold were considered to elicit mixed negative emotions. Four film clips initially targeting happiness showed high valence and low arousal, suggesting that they evoke other positive emotions, such as tenderness, rather than happiness, and one happy film clip was too close to a neutral state to be recommended. Consistent with previous studies (Ge et al., 2019; Gross & Levenson, 1995; Philippot, 1993; Xu et al., 2010), we found that anger and sadness often co-occurred. Sad and angry film clips overlapped in the V-A space, and the discrete indicators also showed a mixture of anger and sadness. Several film clips, however, still induced sadness and anger separately. A rough content analysis suggested that mixed emotions were induced when both the victim and the persecutor were depicted, whereas discrete emotions were induced when only one side was shown. This observation may guide the selection of other film clips that elicit discrete or mixed emotions. As for happiness, some researchers have not regarded it as a discrete emotion because it is not specific enough, does not show a discrete subjective experience pattern, and may be associated with several positive feelings simultaneously (Herring et al., 2011). Joy, which refers to an emotion accompanied by a sense of well-being, success, or good fortune (Fredrickson, 2013), may better describe the discrete happiness identified in this study. Researchers have held a relatively consistent view of joy as a discrete positive emotion (Izard, 1977; Shiota et al., 2004). Other positive emotions, such as tenderness (Kalawski, 2010), still need more exploration.

Our study also examined the influence of demographic factors, specifically gender, liking, and familiarity. The results indicated no gender differences for most film clips, but gender differences existed for one angry film clip, two sad film clips, one happy film clip, and all of the fearful film clips. For these film clips, women reported a higher intensity of the target emotion, higher arousal, or more extreme valence than men. These results are consistent with other findings (Gross & Levenson, 1995; Schaefer et al., 2010a, b) that women often report more intense emotional experiences than men. This may be explained by neurobiological differences in emotional systems between genders (Cahill et al., 2004) or by the influence of sociocultural stereotypes about gender roles (Brody & Hall, 2000; Feldman-Barrett et al., 1998; Kelly & Hutson-Corneaux, 1999), but it may also be due to the content of certain scenes (e.g., sexual assault of a woman by a man; Gabert-Quillen et al., 2015). Thus, gender-relevant content should be considered carefully when selecting stimulus materials. Strong correlations between liking and emotional responses were found in our study. This finding was not surprising, as liking a film generally accompanies more positive emotions. Similar to previous studies (Gabert-Quillen et al., 2015), we also found an impact of familiarity on participant responses. For positive film clips, familiarity strengthened the positive reactions, perhaps because participants recalled previous pleasant viewing experiences. For negative film clips, familiarity weakened the negative responses, as participants had already seen the clips and could prepare for the negative events. Hence, participants’ familiarity should also be taken into consideration when the emotion-eliciting materials are popular or widely seen.

Limitations

It is important to note several limitations of the current study. First, like much research in the behavioral sciences (Gilman et al., 2017), our study relied heavily on a college sample. The restricted age range and high education level of the sample may limit the generalizability of the results (Ge et al., 2019). Future studies should increase the sample size and include, for instance, participants with a wider range of ages, educational backgrounds, and socioeconomic statuses.

Second, all of the data presented were based on participants’ self-reports, which could be affected by stereotypes or cultural expectations (Zupan & Eskritt, 2020). To avoid measurement bias caused by subjective factors, more behavioral or physiological measurements are needed. Nonetheless, we believe that identifying a series of materials (whether film clips or others) that produce the desired profile of self-reported emotion remains useful for other kinds of measurement. Because film clips offer an advantage in understanding the time course of an emotional state, rating dials (Gottman et al., 1998) or throttles (Fayn et al., 2022) would be a promising way to obtain continuous self-reports during emotional evaluation, and these reports could also be synchronized with changes in physiological responses.

Third, the current study focused only on four basic emotions: anger, sadness, fear, and happiness. Other basic emotions, more types of positive emotions, and mixed emotions are also of great interest to researchers. Although the current study did not analyze other emotions, it can serve as a guide for examining other emotional film clips within a hybrid discrete–dimensional framework.

Fourth, only the two most common emotional dimensions (i.e., valence and arousal) were used in this analysis. Other dimensions, such as dominance and motivation (Deng et al., 2017), should also be considered.

Fifth, this validation study was conducted in a specific cultural context (Chinese-speaking Asians). Because the film clip set was originally built in Western studies, our findings extend this prior work. As cultural differences among countries may affect responses, more comparative studies using these stimuli are needed, as is the identification of additional materials that extend the choice of stimuli to various cultures.

Implications

This study is an important piece of methodological research in the field of emotional film databases. We found that relying only on discrete or dimensional indicators led to biased results that could go unnoticed. The integrated film selection method proposed in this study helps avoid this blind spot and offers a possible unified criterion for building emotional film databases across disciplines, including psychology, AI, biomedicine, and other human development areas. Effective emotional film databases not only contribute to the investigation of emotion itself but also facilitate applied research on topics such as computer-based emotion recognition (Hasnul et al., 2021; Saganowski et al., 2022), parent–child interaction (Ravindran et al., 2021), and clinical conditions such as dementia (Fernández-Aguilar et al., 2021), depression (Bylsma, 2021), and internet addiction (Hsieh & Hsiao, 2016).

By comparing and selecting film clips, we identified 12 film clips that effectively induce strong and discrete emotions, providing a reference for subsequent research in selecting emotional videos. In addition, these film clips also successfully elicited discrete emotions in studies conducted in other countries (Abadi et al., 2015; Gabert-Quillen et al., 2015). Thus, the recommended video materials appear to show cross-cultural consistency, which supports the feasibility of building an international, universal video database.

Finally, this study made the first attempt to apply the hybrid discrete–dimensional model of emotion in practice, in the context of emotional material selection. We proposed that emotion has a dimensional–discrete duality, similar to the wave-particle duality of light: dimensionality corresponds to the wave nature, discreteness corresponds to the particle nature, and both are aspects of the nature of emotion. Our results supported this proposal by demonstrating the importance of combining discrete and dimensional indicators. Nonetheless, this study makes only a preliminary attempt, and the hybrid discrete–dimensional model of emotion deserves further discussion and investigation.

Conclusions

Emotion is an extremely complex psychological concept. The debate on the structure of emotion (i.e., the discrete model versus the dimensional model) has lasted more than a century, and the topic has been explored by numerous researchers in various fields. This study emphasized the importance of both the discrete and dimensional approaches. Following previous studies, we developed and tested an integrated emotional film selection method based on the hybrid discrete–dimensional model. The results showed the value of this integrated approach. Although the current study analyzed a limited selection of film clips, the findings provide theoretical and methodological guidance for follow-up studies across disciplines that seek to further understand emotion.

References

Abadi, M. K., Subramanian, R., Kia, S. M., Avesani, P., Patras, I., & Sebe, N. (2015). DECAF: MEG-based multimodal database for decoding affective physiological responses. IEEE Transactions on Affective Computing, 6(3), 209–222. https://doi.org/10.1109/TAFFC.2015.2392932

Abdulrahman, A., & Baykara, M. (2021). A comprehensive review for emotion detection based on EEG signals: Challenges, applications, and open issues. Traitement du Signal, 38(4), 1189–1200. https://doi.org/10.18280/ts.380430

Backs, R. W., da Silva, S. P., & Han, K. (2005). A comparison of younger and older adults’ self-assessment manikin ratings of affective pictures. Experimental Aging Research, 31, 421–440.

Barrett, L. F. (2006). Solving the emotion paradox: Categorization and the experience of emotion. Personality and Social Psychology Review, 10(1), 20–46. https://doi.org/10.1207/s15327957pspr1001_2

Barrett, L. F., Mesquita, B., Ochsner, K. N., & Gross, J. J. (2007). The experience of emotion. Annual Review of Psychology, 58(1), 373–403.

Baveye, Y., Dellandrea, E., Chamaret, C., & Chen, L. (2015). LIRIS-ACCEDE: A video database for affective content analysis. IEEE Transactions on Affective Computing, 6(1), 43–55.

Baveye, Y., Bettinelli, J. N., Dellandréa, E., Chen, L., & Chamaret, C. (2013). A large video database for computational models of induced emotion. In 2013 Humaine Association Conference on Affective Computing and Intelligent Interaction (pp. 13–18). IEEE.

Behnke, M., Buchwald, M., Bykowski, A., Kupiński, S., & Kaczmarek, L. D. (2022). Psychophysiology of positive and negative emotions, dataset of 1157 cases and 8 biosignals. Scientific Data, 9(1), 1–15.

Bradley, M. M., & Lang, P. J. (2000). Measuring emotion: Behavior, feeling, and physiology. In R. D. Lane & L. Nadel (Eds.), Cognitive neuroscience of emotion (pp. 242–276). Oxford University Press.

Brody, L. R., & Hall, J. A. (2000). Gender, emotion, and expression. In M. Lewis & J. M. Haviland-Jones (Eds.), Handbook of emotions: Part IV: Social/personality issues (2nd ed., pp. 325–414). Guilford Press.

Bylsma, L. M. (2021). Emotion context insensitivity in depression: Toward an integrated and contextualized approach. Psychophysiology, 58(2), e13715.

Cacioppo, J. T., & Gardner, W. L. (1999). Emotion. Annual Review of Psychology, 50, 191–214. https://doi.org/10.1146/annurev.psych.50.1.191

Cahill, L., Uncapher, M., Kilpatrick, C., Alkire, M. T., & Turner, J. (2004). Sex-related hemispheric lateralization of amygdala function in emotionally influenced memory: An fMRI investigation. Learning & Memory, 11, 261–266.

Carvalho, S., Leite, J., Galdo-Álvarez, S., & Gonçalves, Ó. F. (2012). The emotional movie database (EMDB): A self-report and psychophysiological study. Applied Psychophysiology and Biofeedback, 37(4), 279–294.

Christie, I. C., & Friedman, B. H. (2004). Autonomic specificity of discrete emotion and dimensions of affective space: A multivariate approach. International Journal of Psychophysiology, 51, 143–153. https://doi.org/10.1016/j.ijpsycho.2003.08.002

Correa, J. A. M., Abadi, M. K., Sebe, N., & Patras, I. (2021). AMIGOS: A dataset for affect, personality and mood research on individuals and groups. IEEE Transactions on Affective Computing, 12, 479–493.

Davidson, R. J. (1992). A prolegomenon to the structure of emotion: Gleanings from neuropsychology. Cognition and Emotion, 6, 245–268.

Davidson, R. J. (1993). Parsing affective space: Perspectives from neuropsychology and psychophysiology. Special section: Neuropsychological perspectives on components of emotional processing. Neuropsychology, 7, 464–475.

Deng, Y., Yang, M., & Zhou, R. (2017). A new standardized emotional film database for Asian culture. Frontiers in Psychology, 8, 1941. https://doi.org/10.3389/fpsyg.2017.01941

Dunn, J. R., & Schweitzer, M. E. (2005). Feeling and believing: The influence of emotion on trust. Journal of Personality and Social Psychology, 88(5), 736–748. https://doi.org/10.1037/0022-3514.88.5.736

Ekman, P., & Friesen, W. V. (1975). Unmasking the face: A guide to recognizing emotions from facial clues. Prentice-Hall.

Ekman, P., Friesen, W. V., & Ancoli, S. (1980). Facial signs of emotional experience. Journal of Personality and Social Psychology, 39(6), 1125–1134. https://doi.org/10.1037/h0077722

Ekman, P., Levenson, R. W., & Friesen, W. V. (1983). Autonomic nervous system activity distinguishes among emotions. Science, 221(4616), 1208–1210. https://doi.org/10.1126/science.6612338

Erdem, C. E., Turan, C., & Aydin, Z. (2015). BAUM-2: A multilingual audio-visual affective face database. Multimedia Tools and Applications, 74(18), 7429–7459.

Fayn, K., Willemsen, S., Muralikrishnan, R., Castaño Manias, B., Menninghaus, W., & Schlotz, W. (2022). Full throttle: Demonstrating the speed, accuracy, and validity of a new method for continuous two-dimensional self-report and annotation. Behavior Research Methods, 54(1), 350–364. https://doi.org/10.3758/s13428-021-01616-3

Feldman-Barrett, L., Robin, L., Pietromonaco, P. R., & Eyssel, K. M. (1998). Are women the more emotional sex? Evidence from emotional experiences in social context. Cognition and Emotion, 12, 555–578.

Fernández-Aguilar, L., Navarro-Bravo, B., Ricarte, J., Ros, L., & Latorre, J. M. (2019). How effective are films in inducing positive and negative emotional states? A meta-analysis. PLoS ONE, 14(11), e0225040. https://doi.org/10.1371/journal.pone.0225040

Fernández-Aguilar, L., Lora, Y., Satorres, E., Ros, L., Melendez, J. C., & Latorre, J. M. (2021). Dimensional and discrete emotional reactivity in Alzheimer’s disease: Film clips as a research tool in dementia. Journal of Alzheimer’s Disease, 82(1), 349–360.

Fredrickson, B. L. (2013). Positive emotions broaden and build. Advances in Experimental Social Psychology, 47, 1–53.

Gabert-Quillen, C. A., Bartolini, E. E., Abravanel, B. T., & Sanislow, C. A. (2015). Ratings for emotion film clips. Behavior Research Methods, 47(3), 773–787.

Ge, Y., Zhao, G., Zhang, Y., Houston, R. J., & Song, J. (2019). A standardised database of Chinese emotional film clips. Cognition and Emotion, 33(5), 976–990.

Gerrards-Hesse, A., Spies, K., & Hesse, F. W. (1994). Experimental inductions of emotional states and their effectiveness: A review. British Journal of Psychology, 85(1), 55–78.

Gilman, T. L., Shaheen, R., Nylocks, K. M., Halachoff, D., Chapman, J., Flynn, J. J., Matt, L. M., & Coifman, K. G. (2017). A film set for the elicitation of emotion in research: A comprehensive catalog derived from four decades of investigation. Behavior Research Methods, 49(6), 2061–2082.

Gottman, J. M., Coan, J., Carrere, S., & Swanson, C. (1998). Predicting marital happiness and stability from newlywed interactions. Journal of Marriage and the Family, 5–22.

Gray, J. R. (2001). Emotional modulation of cognitive control: Approach-withdrawal states double-dissociate spatial from verbal two-back task performance. Journal of Experimental Psychology: General, 130, 436–452.

Gross, J. J., & Levenson, R. W. (1995). Emotion elicitation using films. Cognition and Emotion, 9(1), 87–108.

Harmon-Jones, E., Harmon-Jones, C., & Summerell, E. (2017). On the importance of both dimensional and discrete models of emotion. Behavioral Sciences, 7(4), 66. https://doi.org/10.3390/bs7040066

Hasnul, M. A., Aziz, N. A. A., Alelyani, S., Mohana, M., & Aziz, A. A. (2021). Electrocardiogram-based emotion recognition systems and their applications in healthcare—a review. Sensors, 21(15), 5015.

Herring, D. R., Burleson, M. H., Roberts, N. A., & Devine, M. J. (2011). Coherent with laughter: Subjective experience, behavior, and physiological responses during amusement and joy. International Journal of Psychophysiology, 79(2), 211–218.

Hewig, J., Hagemann, D., Seifert, J., Gollwitzer, M., Naumann, E., & Bartussek, D. (2005). A revised film set for the induction of basic emotions. Cognition and Emotion, 19(7), 1095–1109. https://doi.org/10.1080/02699930541000084

Hsieh, D. L., & Hsiao, T. C. (2016). Respiratory sinus arrhythmia reactivity of internet addiction abusers in negative and positive emotional states using film clips stimulation. Biomedical Engineering Online, 15(1), 1–18.

Izard, C. E. (1977). Human emotions. Plenum Press.

Jenkins, L. M., & Andrewes, D. G. (2012). A new set of standardised verbal and non-verbal contemporary film stimuli for the elicitation of emotions. Brain Impairment, 13(2), 212–227.

Kalawski, J. P. (2010). Is tenderness a basic emotion? Motivation and Emotion, 34(2), 158–167.

Kelly, J. R., & Hutson-Corneaux, S. L. (1999). Gender emotion stereotypes are context specific. Sex Roles, 40, 107–120.

Koelstra, S., Muhl, C., Soleymani, M., Lee, J.-S., Yazdani, A., Ebrahimi, T., Pun, T., Nijholt, A., & Patras, I. (2011). DEAP: A database for emotion analysis; using physiological signals. IEEE Transactions on Affective Computing, 3(1), 18–31.

Kring, A. M., & Gordon, A. H. (1998). Sex differences in emotion: Expression, experience, and physiology. Journal of Personality and Social Psychology, 74(3), 686–703. https://doi.org/10.1037/0022-3514.74.3.686

Lang, P. J. (1980). Behavioral treatment and bio-behavioral assessment: Computer applications. In J. B. Sidowski, J. H. Johnson, & T. A. Williams (Eds.), Technology in mental health care delivery systems (pp. 119–137). Ablex Publishing.

Lang, P. J., Greenwald, M. K., Bradley, M. M., & Hamm, A. O. (1993). Looking at pictures: Affective, facial, visceral, and behavioral reactions. Psychophysiology, 30(3), 261–273. https://doi.org/10.1111/j.1469-8986.1993.tb03352.x

Laukka, P., & Elfenbein, H. A. (2021). Cross-cultural emotion recognition and in-group advantage in vocal expression: A meta-analysis. Emotion Review, 13(1), 3–11.

Levenson, R. W., Ekman, P., & Friesen, W. V. (1990). Voluntary facial action generates emotion-specific autonomic nervous system activity. Psychophysiology, 27, 363–384. https://doi.org/10.1111/j.1469-8986.1990.tb02330.x

Liang, Y.-C., Hsieh, S., Weng, C.-Y., & Sun, C.-R. (2013). Taiwan corpora of Chinese emotions and relevant psychophysiological data - standard Chinese emotional film clips database and subjective evaluation normative data. Chinese Journal of Psychology, 55(4), 601–621.

Malandrakis, N., Potamianos, A., Evangelopoulos, G., & Zlatintsi, A. (2011). A supervised approach to movie emotion tracking. Paper presented at the IEEE International Conference on Acoustics, Speech and Signal Processing, Prague, Czech Republic.

McHugo, G. J., Smith, C. A., & Lanzetta, J. T. (1982). The structure of self-reports of emotional responses to film segments. Motivation and Emotion, 6(4), 365–385.

Mesquita, B., & Haire, A. (2004). Emotion and culture. Encyclopedia of Applied Psychology, 1, 731–737.

Mihalache, S., & Burileanu, D. (2021). Dimensional models for continuous-to-discrete affect mapping in speech emotion recognition. University Politehnica of Bucharest Scientific Bulletin, Series C, 83(4), 137–148.

Nasoz, F., Alvarez, K., Lisetti, C. L., & Finkelstein, N. (2004). Emotion recognition from physiological signals using wireless sensors for presence technologies. Cognition, Technology & Work, 6(1), 4–14.

Nowicki, S., Jr., & Duke, M. P. (1994). Individual differences in the nonverbal communication of affect: The Diagnostic Analysis of Nonverbal Accuracy Scale. Journal of Nonverbal Behavior, 18(1), 9–35.

Philippot, P. (1993). Inducing and assessing differentiated emotion-feeling states in the laboratory. Cognition and Emotion, 7(2), 171–193.

Plutchik, R. (1980). Emotion: A psychobioevolutionary synthesis. Harper & Row.

Rahman, M. M., Sarkar, A. K., Hossain, M. A., Hossain, M. S., Islam, M. R., Hossain, M. B., … & Moni, M. A. (2021). Recognition of human emotions using EEG signals: A review. Computers in Biology and Medicine, 136, 104696.

Rainville, P., Bechara, A., Naqvi, N., & Damasio, A. R. (2006). Basic emotions are associated with distinct patterns of cardiorespiratory activity. International Journal of Psychophysiology, 61(1), 5–18. https://doi.org/10.1016/j.ijpsycho.2005.10.024

Ravindran, N., Zhang, X., Green, L. M., Gatzke-Kopp, L. M., Cole, P. M., & Ram, N. (2021). Concordance of mother-child respiratory sinus arrhythmia is continually moderated by dynamic changes in emotional content of film stimuli. Biological Psychology, 161, 108053.

Rothman, A. D., & Nowicki, S., Jr. (2004). A measure of the ability to identify emotion in children’s tone of voice. Journal of Nonverbal Behavior, 28(2), 67–92.

Rottenberg, J., Kasch, K. L., Gross, J. J., & Gotlib, I. H. (2002). Sadness and amusement reactivity differentially predict concurrent and prospective functioning in major depressive disorder. Emotion, 2(2), 135–146.

Rottenberg, J., Ray, R. D., & Gross, J. J. (2007). Emotion elicitation using films. In J. A. Coan & J. J. B. Allen (Eds.), Handbook of emotion elicitation and assessment (pp. 9–28). Oxford University Press.

Russell, J. A. (1980). A circumplex model of affect. Journal of Personality and Social Psychology, 39, 1161–1178.

Russell, J. A. (2003). Core affect and the psychological construction of emotion. Psychological Review, 110(1), 145–172. https://doi.org/10.1037/0033-295x.110.1.145

Saganowski, S., Komoszyńska, J., Behnke, M., Perz, B., Kunc, D., Klich, B., … & Kazienko, P. (2022). Emognition dataset: Emotion recognition with self-reports, facial expressions, and physiology using wearables. Scientific Data, 9(1), 1–11.

Samson, A. C., Kreibig, S. D., Soderstrom, B., Wade, A. A., & Gross, J. J. (2016). Eliciting positive, negative and mixed emotional states: A film library for affective scientists. Cognition and Emotion, 30(5), 827–856. https://doi.org/10.1080/02699931.2015.1031089

Sauter, D. A., Eisner, F., Ekman, P., & Scott, S. K. (2010). Cross-cultural recognition of basic emotions through nonverbal emotional vocalizations. Proceedings of the National Academy of Sciences of the United States of America, 107(6), 2408–2412. https://doi.org/10.1073/pnas.0908239106

Schaefer, A., Nils, F., Sanchez, X., & Philippot, P. (2010a). Assessing the effectiveness of a large database of emotion-eliciting films: A new tool for emotion researchers. Cognition and Emotion, 24(7), 1153–1172.

Schaefer, A., Nils, F., Sanchez, X., & Philippot, P. (2010b). Assessing the effectiveness of a large database of emotion-eliciting films: A new tool for emotion researchers. Cognition and Emotion, 24(7), 1153–1172. https://doi.org/10.1080/02699930903274322

Schmidt, L. A., & Trainor, L. J. (2001). Frontal brain electrical activity (EEG) distinguishes valence and intensity of musical emotions. Cognition and Emotion, 15(4), 487–500. https://doi.org/10.1080/02699930126048

Scott, J. C. (1930). Systolic blood-pressure fluctuations with sex, anger, and fear. Journal of Comparative Psychology, 10(2), 97–114.

Shiota, M. N., Campos, B., Keltner, D., & Hertenstein, M. J. (2004). Positive emotion and the regulation of interpersonal relationships. In P. Philippot & R. S. Feldman (Eds.), The regulation of emotion (pp. 127–155). Erlbaum.

Simons, R. F., Detenber, B. H., Cuthbert, B. N., Schwartz, D. D., & Reiss, J. E. (2003). Attention to television: Alpha power and its relationship to image motion and emotional content. Media Psychology, 5(3), 283–301. https://doi.org/10.1207/S1532785XMEP0503

Soleymani, M., Lichtenauer, J., Pun, T., & Pantic, M. (2011). A multimodal database for affect recognition and implicit tagging. IEEE Transactions on Affective Computing, 3(1), 42–55.

Trnka, M., Darjaa, S., Ritomský, M., Sabo, R., Rusko, M., Schaper, M., & Stelkens-Kobsch, T. H. (2021). Mapping discrete emotions in the dimensional space: An acoustic approach. Electronics, 10(23), 2950.

Uhrig, M. K., Trautmann, N., Baumgartner, U., Treede, R.-D., Henrich, F., Hiller, W., & Marschall, S. (2016). Emotion elicitation: A comparison of pictures and films. Frontiers in Psychology, 7(180), 1–12. https://doi.org/10.3389/fpsyg.2016.00180

Vytal, K., & Hamann, S. (2010). Neuroimaging support for discrete neural correlates of basic emotions: A voxel-based meta-analysis. Journal of Cognitive Neuroscience, 22(12), 2864–2885. https://doi.org/10.1162/jocn.2009.21366

Wang, T., Zhao, Y., Xu, Y., & Zhu, Z. (2021). Comparison of response to Chinese and Western videos of mental-health-related emotions in a representative Chinese sample. PeerJ, 9, e10440. https://doi.org/10.7717/peerj.10440

Wang, Y., Song, W., Tao, W., Liotta, A., Yang, D., Li, X., … & Zhang, W. (2022). A systematic review on affective computing: Emotion models, databases, and recent advances. Information Fusion, 83, 19–52. https://doi.org/10.48550/arXiv.2203.06935

Watson, D., Clark, L. A., & Tellegen, A. (1988). Development and validation of brief measures of positive and negative affect: The PANAS scales. Journal of Personality and Social Psychology, 54(6), 1063–1070.

Westermann, R., Spies, K., Stahl, G., & Hesse, F. W. (1996). Relative effectiveness and validity of mood induction procedures: A meta-analysis. European Journal of Social Psychology, 26(4), 557–580.

Witvliet, C. V. O., & Vrana, S. R. (1995). Psychophysiological responses as indices of affective dimensions. Psychophysiology, 32, 436–443.

Xu, P., Huang, Y., & Luo, Y. (2010). Establishment and assessment of native Chinese affective video system. Chinese Mental Health Journal, 24(7), 551–554+561.

Zupan, B., & Babbage, D. R. (2017). Film clips and narrative text as subjective emotion elicitation techniques. The Journal of Social Psychology, 157(2), 194–210. https://doi.org/10.1080/00224545.2016.1208138

Zupan, B., & Eskritt, M. (2020). Eliciting emotion ratings for a set of film clips: A preliminary archive for research in emotion. The Journal of Social Psychology, 160(6), 768–789.

Acknowledgements

We thank all the participants in the study. We thank LetPub (www.letpub.com) for its linguistic assistance during the preparation of this manuscript.

Author information

Contributions

XYW and SLC designed the study, XYW analyzed the data and wrote the manuscript, HLZ helped with the writing of the introduction, XYW, HLZ, ZBZ, WCJ, JWF, WCX and YFX prepared the experimental materials and collected the data, ZBZ wrote the code of the experimental program, JWF contacted the DECAF staff and obtained authorization, SLC and HC supervised the study and revised the manuscript.

Ethics declarations

Ethical approval and consent to participate

The study was approved by the Institutional Review Board (IRB) of the Department of Psychology and Behavioral Science, Zhejiang University (IRB approval code: [2022]059). Informed consent was obtained from all individual participants included in the study.

Conflict of interest

The authors have no relevant financial or non-financial interests to disclose. The film clip set (DECAF; Abadi et al., 2015) examined in this study was authorized to be used by the DECAF authors.

Additional information

Publisher's note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Wang, X., Zhou, H., Xue, W. et al. The hybrid discrete–dimensional frame method for emotional film selection. Curr Psychol 42, 30077–30092 (2023). https://doi.org/10.1007/s12144-022-04038-2

DOI: https://doi.org/10.1007/s12144-022-04038-2