Abstract

The correspondence between facial expressions and emotions has been widely examined in psychology. However, studies have yet to record spontaneous facial expressions under well-controlled circumstances; thus, the characteristics of these expressions remain unclear. Therefore, we compared the morphological and dynamic properties of spontaneous and posed facial expressions related to four different emotions: surprise, amusement, disgust, and sadness. First, we secretly recorded participants’ spontaneous facial expressions as they watched films chosen to elicit these four target emotions. We then recorded participants’ posed facial expressions when they were asked to intentionally express each emotion. Subsequently, we conducted a detailed analysis of both the spontaneous and posed expressions by using the Facial Action Coding System (FACS). We found different dynamic sequences between spontaneous and posed expressions for surprise and amusement. Moreover, we confirmed specific morphological aspects for disgust (the prevailing expressions of which encompassed other emotions) and posed negative emotions. This study provides new evidence of the characteristics of genuinely spontaneous and posed facial expressions corresponding to these emotions.


Introduction

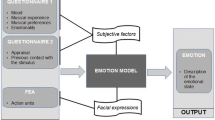

The correspondence between facial expression and experienced emotion is one of the most researched themes in psychology (e.g., Ekman 1980; Izard 1977). The best-known theory about this correspondence is basic emotion theory (BET; Ekman 1994), which assumes universal coherence between specific combinations of facial movement and basic emotions such as anger, surprise, disgust, fear, happiness, and sadness (Ekman 1993, 2003; Ekman et al. 2002). This theory has been supported by many previous studies (Ekman et al. 1980; Levenson et al. 1992; Mauss et al. 2005; Rosenberg and Ekman 1994). Ekman et al. (2002) probed these relationships using the Facial Action Coding System (FACS; Ekman and Friesen 1978). FACS is an objective, comprehensive system based on anatomy that has been often used to describe visible facial movements (e.g., Ekman and Rosenberg 2005; Sato and Yoshikawa 2007). Facial movements are identified in FACS by Action Units (AUs). For example, movement of the zygomatic major muscle to pull the corners of the lips (AU12) and contraction of the outer orbicularis oculi muscle, which raises the cheeks (AU6), imply happiness in BET (Ekman et al. 2002).

Although BET has been widely accepted, several studies have emphasized two problems of previous BET studies (e.g., Tcherkassof et al. 2007; Krumhuber and Scherer 2011). First, the dynamic aspects of facial expressions for various emotions have been ignored. The importance of dynamic aspects of facial expression has been underscored in previous studies of facial mimicry, facial recognition, and neuroimaging (e.g., Sato and Yoshikawa 2007; Wehrle et al. 2000; Mühlberger et al. 2011). For example, in a neuroimaging study, Mühlberger et al. (2011) compared how areas of the brain responded to the starting and stopping of the same emotional expression. It was indicated that the onset and endpoint of emotional facial expressions involved different activity in the brain networks, highlighting the importance of temporal characteristics of facial expressions for social communication. Thus, in order to comprehensively understand the characteristics of facial expressions, it is important to examine their dynamic aspects.

Second, although there are significant differences between spontaneous and posed facial expressions (Buck and VanLear 2002), most studies based in BET have relied on posed facial movements (e.g., Kaiser and Wehrle 2001; Matsumoto 1992), while few studies have investigated spontaneous movements (e.g., Dimberg et al. 2000; van der Schalk et al. 2011). The movements inherent to posed facial expressions display an emotion an expresser ostensibly intends to convey, whereas spontaneous facial expressions correspond to an expresser’s actual, unmitigated emotional experiences. Johnston et al. (2010) found that static spontaneous smiles were evaluated more positively than posed smiles. This result indicates that the evaluation of morphological aspects of posed and spontaneous facial expressions can differ significantly. Although several studies have found actors’ expressions to be relatively similar to spontaneous expressions (e.g., Carroll and Russell 1997; Gosselin et al. 1995; Krumhuber and Scherer 2011; Scherer and Ellgring 2007), actors’ expressions are designed to emphasize a message, and should be regarded as pseudo-spontaneous displays that involve intentional and strategic manipulation (Buck and VanLear 2002). That is, the artificial facial expressions generated by professional actors are different from the spontaneous expressions made in our daily lives.

Two studies have examined the morphological aspects of spontaneous facial expressions of athletes (Matsumoto and Willingham 2009) and those of blind and sighted children aged 8 to 11 (Galati et al. 2003). However, because the facial expressions in these studies were recorded with another person present, these study designs may have inhibited participants’ natural facial expressions. Social intention is related to emotion itself in these facial expressions (Hess and Kleck 1997; Kunzmann et al. 2005). Moreover, these previous studies did not examine the participants’ actual experiences of emotion and the dynamic aspects of facial expressions. To understand the correspondence between facial expressions and experienced emotions, we need to record spontaneous facial expressions under well-controlled circumstances (i.e., in the absence of other persons), so as to obtain spontaneous facial expressions that reflect only emotional aspects. We need to confirm participants’ experiences of the target emotions and the dynamic aspects of the face’s movements.

There have been several studies on the dynamic aspects of spontaneous facial expressions (e.g., Hess and Kleck 1997; Schmidt et al. 2006; Weiss et al. 1987); however, these studies had methodological issues and experimental limitations. The findings of Weiss et al. (1987) were limited in scope, as they employed a hypnotically induced affect approach in a restricted sample of only three female participants. Schmidt et al. (2006) only explored movement differences between posed and spontaneous smiling using tracking points. Hess and Kleck (1997) attempted to distinguish dynamic aspects of facial expressions, but only measured duration and did not report specific facial actions. In summary, several studies have examined the dynamic aspects of facial expressions, but the characteristics of spontaneous facial expressions of emotion remain unclear.

This study investigates the morphological and dynamic aspects of spontaneous facial expressions that reflect emotional experiences and excludes the confounding effects of social factors. We compared spontaneous and posed facial expressions of emotion in terms of the types of AUs displayed and the sequence of AUs using FACS. We defined the emotional facial expressions displayed intentionally by participants as posed, and those demonstrated by participants as they watched films that tended to elicit particular emotions as spontaneous. Many previous studies have used films as stimuli to examine the correspondence between emotions and facial expressions (e.g., Ekman et al. 1980; Fernández-Dols et al. 1997; Rosenberg and Ekman 1994). Films are recognized as appropriate material for experiments because of their predictable arousal of emotion, ecological validity, and ease of use (Gross and Levenson 1995). Following the example of these previous studies, we used films as stimuli to elicit specific emotions. We asked participants to watch the films alone to rule out any confounding effect of social factors as well as to ensure that well-controlled, spontaneous facial expressions are elicited. Furthermore, to prevent the conscious awareness of being on camera that has contributed to confounding factors in past studies, we made the recordings without the awareness of the participants.

We first recorded spontaneous facial expressions of emotion: surprise, amusement, disgust, and sadness. Next, we recorded posed facial expressions of emotion, instructing participants to express the target emotions. Then, we coded the facial expressions recorded in this study and analyzed each sequence. Finally, we compared the morphological and dynamic properties of spontaneous and posed facial expressions of emotion.

Method

Participants

Thirty-one undergraduate students (13 males; M age = 20.19, SD = 1.37, range = 18–24) at Hiroshima University participated in this experiment on a voluntary basis and were given monetary compensation of ¥500 ($4.56). All participants were native Japanese speakers with normal or corrected-to-normal vision. There was no evidence of neurological or psychiatric disorders. Written informed consent was obtained from each participant before the investigation, in line with protocols set and approved by the Ethical Committee of the Graduate School of Education, Hiroshima University.

Stimuli

From the films developed by Gross and Levenson (1995), we selected one film for each emotion: When Harry Met Sally, Pink Flamingos, The Champ, and Capricorn to represent amusement, disgust, sadness, and surprise, respectively. These films had been confirmed as eliciting the desired emotional experiences in Japanese participants (Sato et al. 2007). As a neutral film, we used a sample video (Wildlife in HD) preloaded onto the Windows 7 operating system. After we had collected data from 10 participants, we noticed that When Harry Met Sally elicited unintended negative emotions (e.g., confusion and embarrassment) more than the target emotion of amusement. Therefore, we replaced this film with another one, Trololo Cat. The clip lengths were as follows: 30 s for neutral, 51 s for amusement, 30 s for disgust, 171 s for sadness, and 49 s for surprise. All of the films had sound. Japanese subtitles were added in the films intended to elicit amusement and sadness.

For the emotional assessment of each film, we implemented the following methods used by Sato et al. (2007).

Discrete Emotions

For discrete emotional assessment, we used a 16-item emotion self-report inventory. The items included amusement, anger, arousal, confusion, contempt, contentment, disgust, embarrassment, fear, happiness, interest, pain, relief, sadness, surprise, and tension on a 9-point scale, ranging from 0 (not at all) to 8 (the strongest in my life). These discrete terms of emotion were presented in a randomized sequence for each participant.

Valence and Arousal

For broad emotional assessment, we used an affect grid to estimate emotional state in terms of valence and arousal (Russell et al. 1989). For valence, the scale ranged from 1 (unpleasant) to 9 (pleasant); for arousal, the scale ranged from 1 (sleepiness) to 9 (high arousal).

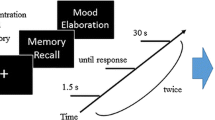

Procedure

The participants were tested individually. Participants were seated facing a personal computer screen (VPCF14AFJ, SONY). They were instructed to watch the films presented on the PC screen and to evaluate the emotions elicited by the films. Participants were also instructed to clear their mind of all thoughts, emotions, and memories between film presentations. After the instructions, participants were left alone in the room and initiated the presentation of each film stimulus by clicking the mouse button. After the neutral film was presented as practice, the other four films followed. The order of the films was varied using incomplete counterbalancing. After viewing each film, participants assessed their emotional state using the 16-item emotion self-report inventory and the affect grid for rating valence and arousal. Half of the participants completed the 16-item emotion self-report inventory before completing the affect grid, whereas the other half did so in the reverse order. As they were watching each film, we secretly recorded their facial expressions via the camera embedded in the PC screen. The recordings were then used to analyze their spontaneous emotional facial expressions.

In debriefing sessions, participants were informed about the previously undisclosed recording of their facial expressions by embedded camera and were given the choice to either sign a second consent form permitting analysis of the recorded facial expressions or have us delete the data. If we did not obtain consent, the experiment was terminated at that point. If we obtained consent, we then continued with the recording of posed facial expressions. We instructed the participants “to express the target emotions” while gazing at the center of the PC screen until they felt they gave their best take. We recorded the resulting posed facial expressions according to the procedure of Takahashi and Daibo (2008). The order of the emotion categories was randomized across participants. We confirmed that none of the participants noticed that they were being recorded by camera during sessions.

Emotional Experiences of Participants

To confirm the validity of the stimuli intended to induce spontaneous emotional facial expressions, we examined the emotional experiences of participants when they were viewing the films. Figure 1 shows the mean intensity of the discrete emotion ratings. To investigate whether each film elicited the target emotion more strongly than the other emotions, planned comparisons were carried out according to the procedure of Sato et al. (2007) using Steel’s one-tailed t-test for each target emotion (e.g., the surprise ratings on the surprise film vs. the other ratings on the surprise film). For clarity, we have not shown the complete affect grid. More detailed results are available upon request.

For amusement film 2, amusement was rated significantly higher than the other emotions (ts(40) > 2.29, ps < 0.05, gs > 0.69) except for happiness and interest (ts(40) > 1.82, ps < 0.10, gs > 0.55). As noted by a previous study (Sato et al. 2007), amusement, interest, and happiness can be synonymous; therefore, we considered the high ratings for these three emotions reasonable. Consequently, amusement film 2 was considered effective in eliciting the target emotion. For the surprise film, the surprise rating was significantly higher than that of the other emotions (ts(60) > 2.80, ps < 0.01, gs > 0.70) except for arousal (t(60) = 1.56, p = 0.11, g = 0.39). The co-occurrence of surprise with arousal was considered reasonable. Consequently, the surprise film was regarded as successful in eliciting the target emotion of surprise. For the disgust film, the target emotion of disgust was rated significantly higher than the other emotions (ts(60) > 2.27, ps < 0.05, gs > 0.57). Among the nontarget emotions, the differences for contempt and embarrassment were only marginally significant (ts(60) > 1.83, ps < 0.10, gs > 0.46), while the rating for surprise was not significantly different from that for disgust (t(60) = −0.35, p = 0.37, g = 0.09). This film thus elicited not only disgust but also surprise. With regard to the high-activation state, the content of the film, which depicts a woman eating dog feces, elicited strong negative emotions and feelings of surprise from participants, in accord with the previous study (Sato et al. 2007). Therefore, the co-occurrence of disgust and surprise was reasonable. For the sadness film, the target emotion of sadness was rated significantly higher than the other emotions (ts(60) > 2.85, ps < 0.001, gs > 0.71). The sadness film was therefore considered appropriate for the intended purpose.

In summary, some films elicited nontarget emotions as well as the target emotion, but the particular nontarget emotions experienced in this study seemed reasonable and consistent with the target emotion. Therefore, we assume that three of the stimuli (i.e., amusement film 2, the surprise film, and the sadness film) used in the present study could elicit the desired target emotion as well as those used in the previous study (Sato et al. 2007), whereas the disgust film as stimulus elicited both the desired target emotion and unrelated nontarget emotions.

Selection of Apex for Spontaneous Emotional Expressions

To detect the onset and apex of each spontaneous facial expression, eight people unaffiliated with the experiment were designated as evaluators (four males; M age = 21.75, SD = 0.71, range = 21–23; Fig. 2). The recorded facial expressions to be evaluated were presented without sound so that the audio accompanying the film would not affect the ratings. Each evaluator viewed and rated all 106 videos of spontaneous facial expressions, viewing each one only once. The length of each video was the same as that of the emotion elicitation films (30 fps). The order of the videos was randomized. The onset point was defined as the moment when any individual facial action appeared, whereas the apex point was determined according to the evaluators’ ratings. These apex points can be described as the perceptual apex. However, no facial movements of sadness were found, and hence we could not detect the onset and apex for spontaneous facial expressions of sadness. Therefore, the following analysis does not include spontaneous expressions of sadness.

Facial Coding

We coded the facial expressions recorded in the spontaneous and posed sessions using FACS. We used facial expressions only from the neutral status to the apex of the target expression and did not examine the period after the apex because overt endpoints were vague and hard to identify. During the debriefing session, one participant refused to permit the use of her facial expressions. In addition, the spontaneous facial expressions of one participant and the posed facial expressions of two participants were not available due to problems with the camera. Furthermore, as discussed above, we replaced the amusement film after initiating the study. For these reasons, 19 participants’ spontaneous facial expressions of amusement, 29 participants’ spontaneous expressions of surprise and disgust, and 28 participants’ posed facial expressions of all four emotions were available for the following analyses.

A trained FACS coder scored all expressions frame by frame (30 fps) and recorded all AUs until each apex was reached. We excluded blinking (AU45), head movement, and gaze direction (AUs 50 to 66) because we were focusing on emotional facial movements.

To check reliability, a second trained FACS coder coded the same dataset frame by frame. Mean agreement regarding the presence of AUs (Cohen’s κ = 0.83) and reliability of FACS (.84; Ekman and Friesen 1978) were high enough to suggest intercoder reliability.
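As an illustration of the agreement statistic reported above, Cohen’s κ for two coders can be sketched as follows. This is a minimal sketch only: the function name `cohens_kappa` and the presence/absence codes are hypothetical and are not the study’s data, and the sketch does not reproduce the FACS agreement index of Ekman and Friesen (1978).

```python
from collections import Counter

def cohens_kappa(coder_a, coder_b):
    """Cohen's kappa for two equal-length sequences of categorical codes."""
    if len(coder_a) != len(coder_b) or not coder_a:
        raise ValueError("need two non-empty sequences of equal length")
    n = len(coder_a)
    # Proportion of items on which the two coders gave the same code.
    observed = sum(a == b for a, b in zip(coder_a, coder_b)) / n
    # Agreement expected by chance from each coder's marginal frequencies.
    freq_a, freq_b = Counter(coder_a), Counter(coder_b)
    expected = sum(freq_a[c] * freq_b[c] for c in freq_a) / (n * n)
    if expected == 1.0:
        return 1.0  # both coders used one identical category throughout
    return (observed - expected) / (1 - expected)

# Hypothetical presence (1) / absence (0) codes for one AU across ten clips.
coder_1 = [1, 1, 0, 0, 1, 0, 1, 1, 0, 0]
coder_2 = [1, 1, 0, 0, 1, 0, 1, 0, 0, 0]
kappa = cohens_kappa(coder_1, coder_2)
print(round(kappa, 2))
```

In this toy example the coders agree on nine of ten clips, and chance agreement is 0.5, so κ is 0.8.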

Statistical Analysis

To examine the differences in morphological aspects between spontaneous and posed facial expressions, we tested the frequency of appearance of each AU individually by following the method used in previous studies (Carroll and Russell 1997; Galati et al. 2003; Gosselin et al. 1995).

In order to determine the dynamic aspects of the expressions, there must be at least two kinds of movement in the face to permit inspection of sequences (Krumhuber and Scherer 2011). Moreover, if we used the most frequent occurrences of four or more types of AUs, the proportion of participants in which these AUs co-occurred decreased, and hence we were not able to examine dynamic aspects substantially. Therefore, we used the most frequent occurrences of three kinds of AUs, as observed at the apex of each emotional portrayal, to compare the differences in the sequential occurrence of AUs between spontaneous and posed expressions.
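The selection step described above can be sketched as follows, assuming each participant’s apex is represented as a set of AU numbers. The data and the helper names (`top_aus_at_apex`, `participants_showing_all`) are hypothetical; the sketch shows why adding more AUs shrinks the pool of participants whose sequences can be compared.

```python
from collections import Counter

def top_aus_at_apex(apex_codes, k=3):
    """Return the k AUs displayed at apex by the most participants."""
    counts = Counter(au for aus in apex_codes for au in set(aus))
    # Sort by descending frequency, breaking ties by AU number.
    ranked = sorted(counts.items(), key=lambda item: (-item[1], item[0]))
    return [au for au, _ in ranked[:k]]

def participants_showing_all(apex_codes, aus):
    """Indices of participants whose apex contains every AU in `aus`."""
    wanted = set(aus)
    return [i for i, codes in enumerate(apex_codes) if wanted <= set(codes)]

# Hypothetical apex codings for five participants (not the study's data).
apex = [{1, 2, 5}, {5, 25}, {1, 2, 5, 25}, {1, 2}, {5}]
top3 = top_aus_at_apex(apex, k=3)
top4 = top_aus_at_apex(apex, k=4)
print(top3, participants_showing_all(apex, top3))
print(top4, participants_showing_all(apex, top4))
```

With these toy data, two participants display all of the three most frequent AUs, but only one displays all four, which mirrors the trade-off that motivated restricting the sequence analysis to three AUs.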

Results

Comparison between Spontaneous and Posed Expressions: Morphological Aspects

To compare the AUs between the posed and spontaneous expressions, we conducted McNemar’s test. Figure 3 shows the results of the comparisons between the two types of expression for each emotion. Table 1 shows the frequency of occurrence of each AU at apex for surprise. Five AUs were more frequent in posed than in spontaneous expressions: the Eyebrow Raiser (AU1 and AU2), the Upper Lid Raiser (AU5), the Lips Part (AU25), and the Jaw Drop (AU26) (χ²s(1, N = 28) > 4, ps < 0.05, ORs > 4.55).

Fig. 3 Results from comparing the frequencies of spontaneous and posed facial expressions. We obtained direct permission from the participants to report their spontaneous expressions. The red outer frame indicates the characteristics of spontaneous expressions, and the green outer frame indicates the characteristics of posed expressions

With regard to amusement, each AU analyzed yielded no significant differences between spontaneous and posed expressions (Table 2).

With regard to disgust, the Upper Lip Raiser (AU10) and the Lip Corner Puller (AU12) occurred more often in spontaneous than in posed expressions (χ²s(1, N = 28) > 4.17, ps < 0.04, ORs > 11.5), whereas the Chin Raiser (AU17) appeared significantly more often in posed than in spontaneous expressions (χ²(1, N = 28) = 5.14, p = 0.02, OR = 13.5; Table 3).
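The paired comparisons in this section rely on McNemar’s test, which uses only the discordant pairs, that is, participants who displayed an AU in one condition but not in the other. The following is a minimal sketch with hypothetical counts. Whether the reported statistics used a continuity correction is not stated in the text, so the correction is left as an option here, and the function name `mcnemar` is an assumption of this sketch.

```python
import math

def mcnemar(b, c, correction=False):
    """McNemar's chi-square test (1 df) from the two discordant counts.

    b: participants showing the AU only in the posed expression
    c: participants showing the AU only in the spontaneous expression
    """
    n = b + c
    if n == 0:
        raise ValueError("no discordant pairs; the test is undefined")
    diff = abs(b - c)
    if correction:
        diff = max(diff - 1, 0)  # optional continuity correction
    chi2 = diff * diff / n
    # Upper-tail probability of a chi-square variable with 1 df.
    p = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p

# Hypothetical example: 10 participants show the AU only when posing,
# 2 only spontaneously; concordant participants do not enter the test.
chi2, p = mcnemar(10, 2)
print(round(chi2, 2), round(p, 3))
```

Because concordant participants drop out, an AU that nearly everyone displays in both conditions can yield a small effective sample even when N = 28.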

Sequences of Spontaneous and Posed Expressions: Dynamic Aspects

Figure 4 shows the three most frequent types of AUs at apex. In the following analysis, an extended Fisher’s exact test was performed on the sequential model of each emotional portrayal of surprise and amusement. An exact binomial test was performed on the sequential model of disgust since the AUs at apex differed between spontaneous and posed expressions.
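For readers unfamiliar with the exact binomial test mentioned above, the following sketch computes a two-sided exact p-value for the number of participants showing one particular ordering of AUs. The counts and the null probability of 0.5 are assumptions made for illustration, and the function name `binom_test_two_sided` is hypothetical; the text does not specify the null proportion used in the study.

```python
from math import comb

def binom_test_two_sided(k, n, p=0.5):
    """Two-sided exact binomial p-value for k successes in n trials.

    Sums the probabilities of all outcomes that are no more likely
    than the observed one under the null success probability p.
    """
    if not 0 <= k <= n:
        raise ValueError("k must lie between 0 and n")
    pmf = [comb(n, i) * p**i * (1 - p) ** (n - i) for i in range(n + 1)]
    threshold = pmf[k] * (1 + 1e-7)  # small tolerance for float ties
    return min(1.0, sum(q for q in pmf if q <= threshold))

# Hypothetical example: 6 of 8 participants show one AU before another.
p_value = binom_test_two_sided(6, 8)
print(round(p_value, 3))
```

With only eight participants, even six consistent orderings do not reach significance under this assumed null, which illustrates how small subsamples limit the sequence analyses.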

For surprise, the most frequently co-occurring facial actions were raising the eyebrows and opening the eyes (AU1, AU2, and AU5; Fig. 4). AU5 occurred earlier in sequence in spontaneous surprise than in posed surprise, whereas AUs 1 + 2 or AUs 1 + 2 + 5 occurred earlier in sequence in posed than in spontaneous surprise (spontaneous n = 6, posed n = 15, p = 0.02; Fig. 5).

For amusement, smiling with an open mouth and raised cheeks comprised the most commonly observed AUs (AU6, AU12, and AU25; Fig. 4). In spontaneous amusement, AU12 and AUs 6 + 12 occurred earlier in sequence than AU25, whereas AU25 occurred earlier than AU6 and AU12 in sequences of posed expressions. There was a significant difference in the sequential model of AUs between spontaneous and posed amusement (spontaneous n = 12, posed n = 13, p = 0.02; Fig. 5).

For disgust, the three most frequently observed AUs for spontaneous disgust were squinting the eyes and raising the upper lip (AU6, AU7, and AU10; Fig. 4). Although AU6 and AU10 occurred earlier than AU7, there was no significant sequential difference (n = 8, p = 0.18). For posed disgust, the most frequently co-occurring facial actions were a glare and raising the chin (AU4, AU7, and AU17; Table 3). We could not identify a consistent sequential model of posed disgust, because only four participants displayed all three of these AUs. However, a general tendency for AU4 or AUs 4 + 7 to occur earlier than the other AUs was observed. Although AU25 tied for third place among spontaneous expressions (13 of 29, 45 %) and was third among posed expressions (8 of 28, 29 %), we excluded it because this AU offered less dynamic information (e.g., in the posed sessions, four participants left their mouths open from the beginning of the recording).

For posed sadness, AU4 (6 of 28, 21 %), AU7 (3 of 28, 11 %), and AU17 (10 of 28, 36 %) were the three most frequently observed AUs (Fig. 4). However, as only three participants displayed more than two AUs, there was minimal information from which to construct a sequence. Thus, we did not test any statistical hypothesis.

Discussion

The present study investigated the characteristics of spontaneous facial expressions of emotion, focusing on both morphological and dynamic properties. First, we compared the frequency of each AU between spontaneous and posed expressions. Then, to examine the sequences, we identified the three most frequent AUs at apex for each emotion. Finally, to examine the dynamic aspects of the expressions, we compared the sequences between spontaneous and posed expressions or confirmed the specific sequences of individual emotions.

Surprise

We observed significant morphological differences in the frequency of each AU between spontaneous and posed expressions at apex. The Eyebrow Raiser (AU1 and AU2), the Upper Lid Raiser (AU5), the Lips Part (AU25), and the Jaw Drop (AU26) were less frequently observed in spontaneous than in posed expressions (Fig. 3). These AUs are regarded as main components of surprise in BET (Ekman et al. 2002). One might suspect that this difference in frequency could be the consequence of a relative failure to observe spontaneous expressions of surprise. However, the percentage (11 %) of co-occurrences of AU1, AU2, AU5, and AU25 in spontaneous surprise in this study is greater than that in previous studies that examined the co-occurrence of these AUs, in which the percentage of participants exhibiting the same set of AUs in response to surprising events ranged from 0 % to 7 % (e.g., Reisenzein et al. 2006; Vanhamme 2003; Wang et al. 2008). The results of this study thus identify the existence of a spontaneous surprise expression more clearly than previous studies, and they also support the claim that posed facial expressions are more exaggerated than spontaneous facial expressions (Hess and Kleck 1994).

There was a difference between spontaneous and posed expressions for the dynamic aspect of surprise (Fig. 5). In spontaneous surprise, AU5 was displayed first, followed by AUs 1 + 2. This spontaneous sequence accords with sequences rooted in perceptual expectations of observers (Jack et al. 2014). However, in posed surprise, AUs 1 + 2 preceded AU5, or AUs 1 + 2 + 5 were simultaneously expressed. We thus determined that the sequential pattern of spontaneous surprise among participants was different from that in posed expressions. Considering that someone who is surprised wants to search for information to understand an unexpected situation, the opening of the eyes may be an adaptive response for gathering more visual information (Darwin 1872). Susskind et al. (2008) also provided evidence of enhanced visual-field size in situations of fear and explained that faster eye movements are important for locating the source of a surprising event. Our study supports these claims that adaptive functions in eye movements occur because of spontaneous surprise.

Overall, the results for the morphological aspects of surprise were in accord with previous studies (Ekman et al. 2002; Reisenzein et al. 2006), but with regard to the dynamic aspects, the sequential pattern of spontaneous surprise differed from that of posed surprise. This result shows the importance of the appearance order of AUs when judging facial expressions.

Amusement

We did not find any significant differences in the frequency of each AU between spontaneous and posed expressions of amusement. Frank et al. (1993) pointed out that the presence of the Cheek Raiser (AU6) in spontaneous expressions of amusement was considered the distinguishing factor from posed expressions. However, we did not observe this distinction. As AU6 in posed expressions of amusement has been observed in previous studies (Carroll and Russell 1997; Krumhuber and Scherer 2011), it is possible that AU6 is, in fact, not a consistent distinguishing factor.

As Fig. 4 and Table 2 show, although the three most commonly observed AUs at apex were the same in both spontaneous and posed expressions: AU6, the Lip Corner Puller (AU12), and the Lips Part (AU25), there was a difference in the dynamic sequences (Fig. 5). In the sequential model for amusement, sequences of spontaneous expressions were characterized by AU12 and AU6, followed by AU25. On the other hand, in posed expressions AU25 usually preceded AU12, followed by AU6. In posed expressions, the preceding activity of opening the mouth might represent the preparatory state of a more exaggerated smile and thus might reflect a strategic motivation, whereas a spontaneous sequence displays smiles that indicate authentic pleasure (Ekman 2003).

These results indicate that differences between spontaneous and posed expressions of amusement were observed not in terms of the frequency of facial actions but in the order of facial actions.

Disgust

We found significant differences in the frequency of each AU between spontaneous and posed expressions of disgust. The Upper Lip Raiser (AU10) and the Lip Corner Puller (AU12) occurred more often in spontaneous than in posed expressions (Fig. 3). AU10 has been regarded as the central component of disgust in BET (Ekman et al. 2002). The hypothesized origin of disgust as a rejection impulse to avoid disease through toxic or contaminated food suggests that AU10 has evolved as a response to something revolting (Darwin 1872; Rozin et al. 2008). Thus, AU10 might represent disgust in negative experiences (Galati et al. 2003). AU12 in spontaneous disgust showed the possibility that elicited emotions might be not only restricted to disgust but also other negative emotions. For example, in our film depicting a woman consuming fecal matter, AU12 might play a role in the emotional elicitation of contempt or embarrassment. Alternatively, to decrease stress by producing strong negative emotions including surprise, this action might have been developed for adaptation (Kraft and Pressman 2012). Meanwhile, the Chin Raiser (AU17) appeared significantly more often in posed than in spontaneous expressions (Fig. 3). Kaiser and Wehrle (2001) indicated that AU17 was observed in posed negative emotions. Thus, AU17 might reflect more of a posed negative expression than other AUs.

As for the AUs of disgust expressions at apex (Fig. 4 & Table 3), in spontaneous expressions, the Cheek Raiser (AU6), the Lid Tightener (AU7), and AU10 were the three most frequently expressed AUs. As already noted, AU10 is anticipated in expressions of disgust according to BET (Ekman et al. 2002). AU7 has been frequently observed in fear situations (Galati et al. 2003), and the eye constriction associated with AU6 and AU7 has been found in infant cry-faces to convey distress (Messinger et al. 2012). AU6 and AU7 are consistent with visual sensory rejection, and disgust has been shown to dampen perception (Susskind et al. 2008). Therefore, this study would provide a different view of the typical facial expressions used to represent disgust. In the case of posed expressions, the Brow Lowerer (AU4), AU7, and AU17 were the three most frequently observed AUs (Fig. 4). Kaiser and Wehrle (2001) proposed that the combination of AUs 4 + 7 + 17 represents posed sadness or hot anger. Nevertheless, these AUs were observed in posed expressions of disgust in this study, suggesting that posed expressions of disgust, sadness, and hot anger might have similar morphological aspects.

For the sequential model of the emotional portrayal of disgust, we could not establish a consistent sequence model for either type of expression. It is possible that each facial action is produced without regard to order in these cases.

Given this inconsistent evidence, no specific sequence could be observed with regard to dynamic aspects of disgust. However, the results present the possibility that posed expressions might differ substantially from spontaneous expressions across various negative emotions in terms of their morphological aspects.

Sadness

For morphological properties of sadness, spontaneous facial expressions were not observed. One possible reason for this finding is that the film used in this study was too short or too unconnected to the viewer to elicit visible expressions of sadness; it could be argued that the nature of sadness requires a longer-term experience or one to which the individual feels personally linked (Bonanno and Keltner 1997; Ekman 2003). In posed expressions, AU4, AU7, and AU17 were most frequently observed (Fig. 4 & Table 4). As stated above, the combination of AUs 4 + 7 + 17 was observed in posed sadness, hot anger (Kaiser and Wehrle 2001), and posed disgust in this study. This result suggests the possibility that these AUs, especially AU17, are involved in the intentional display of negative emotional content.

As for the dynamic aspects of posed sadness, only three participants expressed the co-occurrence of more than two AUs; thus, no sequence could be observed.

As a whole, the fact that no visible facial movements were identified in spontaneous facial expressions of sadness could simply indicate that the film used was not suitable to elicit strong feelings of sadness. Similarly, the inconsistencies in the properties of posed expressions of sadness may demonstrate that this expression is difficult for nonprofessional actors to express intentionally.

Limitations and Future Studies

Although the present study has revealed new morphological characteristics and dynamic aspects that may help to identify the spontaneous facial expressions corresponding to surprise, amusement, and disgust, several limitations must be noted. First, some methodological issues were present. We reasoned that generating posed expressions invokes some degree of conscious awareness, because cameras and observers in prior experiments were shown to change participants’ facial expressions (Barr and Kleck 1995; Ekman et al. 1980; Bainum et al. 1984; Matsumoto and Willingham 2006). Therefore, the order of generation was held constant in this study, with spontaneous expressions always recorded first. As a result, beginning with the spontaneous expressions might have affected the subsequent posed expressions in undetermined ways. Moreover, the present films elicited not only the desired target emotions but also nontarget emotions. Thus, it might be difficult to conclude that a specific facial expression is related to a single emotion, because the expression may be associated with several emotions. The comparison between spontaneous and posed facial expressions may be problematic because of these differences. To investigate the characteristics of facial expressions that correspond more closely to the pure experience of a single emotion, future studies should incorporate a method that more effectively separates target emotions from nontarget emotions.

Generalizing our observed sequences of various portrayals of emotions to daily life is difficult because we analyzed only the non-social sequences of participants who expressed the three most observed AUs for each emotion. Thus, future studies will be necessary to carefully distinguish social spontaneous facial expressions from non-social ones. In addition, as the results of this study are based on small samples of young Japanese participants, generalization to other groups is uncertain.

Although we wished to investigate the dynamic properties of facial expressions, the duration and endpoint of each facial movement were not measured in this study. We considered only the one apex after onset. For example, a few expressions returned to a neutral state until the end of the video clip. Therefore, this study focused on facial expressions from no facial actions to apex based on perceptual ratings. However, several studies have shown that suddenness of onset and ending of facial motions, along with overall duration, can be important in distinguishing between spontaneous and posed expressions (e.g., Hess and Kleck 1997; Schmidt et al. 2006). To obtain better information about the differences between spontaneous and posed facial expressions, further examination of these cues will be necessary. Furthermore, our limitations in dynamic analysis underscore the need for a more systematic means of interfacing with data in this domain. It could be useful for future research to employ automated methods for detecting and recognizing facial expressions (Corneanu et al. 2016). Such methods might entail 3D face trackers that create wireframe models and extract appearance feature geometry (Cohen et al. 2003; Zeng et al. 2009). Multimodal approaches may enable more precise ways to represent and capture the elusive dynamic dimensions of facial expressions.
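
As a rough illustration of the timing cues mentioned above (onset, ending, and overall duration), the following Python sketch derives them from a per-frame intensity trace of a single AU. Everything here is an assumption for illustration: the function name `movement_timing`, the numeric intensity scale, the frame rate, and the threshold are hypothetical and were not part of the present study, which did not measure these quantities.

```python
def movement_timing(intensity, fps=30.0, threshold=0.0):
    """Estimate onset, offset, and total duration (in seconds) for one AU.

    intensity: per-frame numeric intensity values (hypothetical scale).
    fps: video frame rate (assumed, not taken from the study).
    threshold: values above this count as visible movement (assumed).
    Returns None if the AU never exceeds the threshold.
    """
    active = [i for i, value in enumerate(intensity) if value > threshold]
    if not active:
        return None
    onset = active[0]    # first frame with visible movement
    offset = active[-1]  # last frame with visible movement
    # First frame at which the maximum intensity is reached.
    apex = max(range(onset, offset + 1), key=lambda i: intensity[i])
    return {
        "onset_duration_s": (apex - onset) / fps,
        "offset_duration_s": (offset - apex) / fps,
        "total_duration_s": (offset - onset + 1) / fps,
    }


# Made-up trace sampled at 10 frames per second (illustration only).
trace = [0, 0, 1, 2, 3, 3, 2, 1, 0, 0]
print(movement_timing(trace, fps=10.0))
```

This sketch treats the whole trace as a single movement with one apex; expressions with several apexes or intermittent activity would need to be segmented first.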

Summary and Conclusion

This study provides evidence of differences between spontaneous and posed facial expressions of emotions. With regard to surprise, there were differences in both morphological and dynamic aspects; with regard to amusement, there were differences in dynamic aspects; with regard to disgust, there were differences in morphological aspects. Moreover, we confirmed specific morphological aspects for posed negative emotions. Research on emotions has depended heavily on the use of posed and static facial expressions that have been based on BET. However, the dynamic sequences of facial movements associated with actual emotional experiences have been ignored (e.g., Ekman and Friesen 1976; Elfenbein and Ambady 2002; Russell 1994). Through well-controlled investigation of the characteristics of spontaneous facial expressions, research in this area can be more productive in capturing how emotions affect facial expressions.

References

Bainum, C. K., Lounsbury, K. R., & Pollio, H. R. (1984). The development of laughing and smiling in nursery school children. Child Development, 1946–1957. doi:10.2307/1129941.

Barr, C. L., & Kleck, R. E. (1995). Self-other perception of the intensity of facial expressions of emotion: do we know what we show? Journal of Personality and Social Psychology, 68(4), 608–618. doi:10.1037/0022-3514.68.4.608.

Bonanno, G. A., & Keltner, D. (1997). Facial expressions of emotion and the course of conjugal bereavement. Journal of Abnormal Psychology, 106(1), 126–137. doi:10.1037/0021-843X.106.1.126.

Buck, R., & VanLear, C. A. (2002). Verbal and nonverbal communication: distinguishing symbolic, spontaneous, and pseudo-spontaneous nonverbal behavior. Journal of Communication, 52(3), 522–541. doi:10.1111/j.1460-2466.2002.tb02560.x.

Carroll, J. M., & Russell, J. A. (1997). Facial expressions in Hollywood’s portrayal of emotion. Journal of Personality and Social Psychology, 72(1), 164–176. doi:10.1037/0022-3514.72.1.164.

Cohen, I., Sebe, N., Garg, A., Chen, L. S., & Huang, T. S. (2003). Facial expression recognition from video sequences: temporal and static modeling. Computer Vision and Image Understanding, 91(1), 160–187. doi:10.1016/S1077-3142(03)00081-X.

Corneanu, C. A., Oliu, M., Cohn, J. F., & Escalera, S. (2016). Survey on RGB, 3D, thermal, and multimodal approaches for facial expression recognition: history, trends, and affect-related applications. IEEE Transactions on Pattern Analysis and Machine Intelligence, 99. doi:10.1109/TPAMI.2016.2515606.

Darwin, C. (1872). The expression of emotion in man and animals. New York: Oxford University Press.

Dimberg, U., Thunberg, M., & Elmehed, K. (2000). Unconscious facial reactions to emotional facial expressions. Psychological Science, 11(1), 86–89. doi:10.1111/1467-9280.00221.

Ekman, P. (1980). The face of man. New York: Garland Publishing, Inc.

Ekman, P. (1993). Facial expression and emotion. American Psychologist, 48(4), 384–392. doi:10.1037/0003-066X.48.4.384.

Ekman, P. (1994). All emotions are basic. In P. Ekman & R. J. Davidson (Eds.), The nature of emotion: Fundamental questions (pp. 15–19). New York: Oxford University Press.

Ekman, P. (2003). Emotions revealed. New York: Times Books.

Ekman, P., & Friesen, W. V. (1976). Pictures of facial affect. Palo Alto: Consulting Psychologists Press.

Ekman, P., & Friesen, W. V. (1978). Facial action coding system. Palo Alto: Consulting Psychologists Press.

Ekman, P., & Rosenberg, E. (2005). What the face reveals (2nd ed.). New York: Oxford University Press.

Ekman, P., Friesen, W. V., & Ancoli, S. (1980). Facial signs of emotional experience. Journal of Personality and Social Psychology, 39(6), 1125–1134. doi:10.1037/h0077722.

Ekman, P., Friesen, W. V., & Hager, J. C. (2002). Facial action coding system (2nd ed.). Salt Lake City: Research Nexus eBook.

Elfenbein, H. A., & Ambady, N. (2002). On the universality and cultural specificity of emotion recognition: a meta-analysis. Psychological Bulletin, 128(2), 203–235. doi:10.1037/0033-2909.128.2.203.

Fernández-Dols, J. M., Sanchez, F., Carrera, P., & Ruiz-Belda, M. A. (1997). Are spontaneous expressions and emotions linked? An experimental test of coherence. Journal of Nonverbal Behavior, 21(3), 163–177. doi:10.1023/A:1024917530100.

Frank, M. G., Ekman, P., & Friesen, W. V. (1993). Behavioral markers and recognizability of the smile of enjoyment. Journal of Personality and Social Psychology, 64(1), 83–93. doi:10.1037/0022-3514.64.1.83.

Galati, D., Sini, B., Schmidt, S., & Tinti, C. (2003). Spontaneous facial expressions in congenitally blind and sighted children aged 8-11. Journal of Visual Impairment and Blindness, 97(7), 418–428.

Gosselin, P., Kirouac, G., & Doré, F. Y. (1995). Components and recognition of facial expression in the communication of emotion by actors. Journal of Personality and Social Psychology, 68(1), 83–96. doi:10.1037/0022-3514.68.1.83.

Gross, J. J., & Levenson, R. W. (1995). Emotion elicitation using films. Cognition & Emotion, 9(1), 87–108. doi:10.1080/02699939508408966.

Hess, U., & Kleck, R. E. (1994). The cues decoders use in attempting to differentiate emotion-elicited and posed facial expressions. European Journal of Social Psychology, 24(3), 367–381. doi:10.1002/ejsp.2420240306.

Hess, U., & Kleck, R. (1997). Differentiating emotion elicited and deliberate emotional facial expressions. In P. Ekman & E. L. Rosenberg (Eds.), What the face reveals (2nd ed., pp. 271–288). New York: Oxford University Press.

Izard, C. E. (1977). Human emotions. New York: Plenum Press.

Jack, R. E., Garrod, O. G., & Schyns, P. G. (2014). Dynamic facial expressions of emotion transmit an evolving hierarchy of signals over time. Current Biology, 24(2), 187–192. doi:10.1016/j.cub.2013.11.064.

Johnston, L., Miles, L., & Macrae, C. N. (2010). Why are you smiling at me? Social functions of enjoyment and non-enjoyment smiles. British Journal of Social Psychology, 49(1), 107–127. doi:10.1348/014466609X412476.

Kaiser, S., & Wehrle, T. (2001). Facial expressions as indicators of appraisal processes. In K. R. Scherer, A. Schorr, & T. Johnstone (Eds.), Appraisal processes in emotion: Theory, methods, research (pp. 285–300). New York: Oxford University Press.

Kraft, T. L., & Pressman, S. D. (2012). Grin and bear it: the influence of manipulated facial expression on the stress response. Psychological Science, 23(11), 1372–1378. doi:10.1177/0956797612445312.

Krumhuber, E. G., & Scherer, K. R. (2011). Affect bursts: dynamic patterns of facial expression. Emotion, 11(4), 825–841. doi:10.1037/a0023856.

Kunzmann, U., Kupperbusch, C. S., & Levenson, R. W. (2005). Behavioral inhibition and amplification during emotional arousal: a comparison of two age groups. Psychology and Aging, 20(1), 144–158. doi:10.1037/0882-7974.20.1.144.

Levenson, R. W., Ekman, P., Heider, K., & Friesen, W. V. (1992). Emotion and autonomic nervous system activity in the Minangkabau of west Sumatra. Journal of Personality and Social Psychology, 62(6), 972–988. doi:10.1037/0022-3514.62.6.972.

Matsumoto, D. (1992). American-Japanese cultural differences in the recognition of universal facial expressions. Journal of Cross-Cultural Psychology, 23(1), 72–84. doi:10.1177/0022022192231005.

Matsumoto, D., & Willingham, B. (2006). The thrill of victory and the agony of defeat: spontaneous expressions of medal winners of the 2004 Athens Olympic games. Journal of Personality and Social Psychology, 91(3), 568–581. doi:10.1037/0022-3514.91.3.568.

Matsumoto, D., & Willingham, B. (2009). Spontaneous facial expressions of emotion of congenitally and noncongenitally blind individuals. Journal of Personality and Social Psychology, 96(1), 1–10. doi:10.1037/a0014037.

Mauss, I. B., Levenson, R. W., McCarter, L., Wilhelm, F. H., & Gross, J. J. (2005). The tie that binds? Coherence among emotion experience, behavior, and physiology. Emotion, 5(2), 175–190. doi:10.1037/1528-3542.5.2.175.

Messinger, D. S., Mattson, W. I., Mahoor, M. H., & Cohn, J. F. (2012). The eyes have it: making positive expressions more positive and negative expressions more negative. Emotion, 12(3), 430–436. doi:10.1037/a0026498.

Mühlberger, A., Wieser, M. J., Gerdes, A. B., Frey, M. C., Weyers, P., & Pauli, P. (2011). Stop looking angry and smile, please: start and stop of the very same facial expression differentially activate threat- and reward-related brain networks. Social Cognitive and Affective Neuroscience, 6(3), 321–329. doi:10.1093/scan/nsq039.

Reisenzein, R., Bördgen, S., Holtbernd, T., & Matz, D. (2006). Evidence for strong dissociation between emotion and facial displays: the case of surprise. Journal of Personality and Social Psychology, 91(2), 295–315. doi:10.1037/0022-3514.91.2.295.

Rosenberg, E. L., & Ekman, P. (1994). Coherence between expressive and experiential systems in emotion. Cognition & Emotion, 8(3), 201–229. doi:10.1080/02699939408408938.

Rozin, P., Haidt, J., & McCauley, C. R. (2008). Disgust. In M. Lewis, J. M. Haviland-Jones, & L. F. Barrett (Eds.), Handbook of emotions (3rd ed., pp. 757–776). New York: Guilford Press.

Russell, J. A. (1994). Is there universal recognition of emotion from facial expressions? A review of the cross-cultural studies. Psychological Bulletin, 115(1), 102–141. doi:10.1037/0033-2909.115.1.102.

Russell, J. A., Weiss, A., & Mendelsohn, G. A. (1989). Affect grid: a single-item scale of pleasure and arousal. Journal of Personality and Social Psychology, 57(3), 493–502. doi:10.1037/0022-3514.57.3.493.

Sato, W., & Yoshikawa, S. (2007). Spontaneous facial mimicry in response to dynamic facial expressions. Cognition, 104(1), 1–18. doi:10.1016/j.cognition.2006.05.001.

Sato, W., Noguchi, M., & Yoshikawa, S. (2007). Emotion elicitation effect of films in a Japanese sample. Social Behavior and Personality: An International Journal, 35(7), 863–874. doi:10.2224/sbp.2007.35.7.863.

Scherer, K. R., & Ellgring, H. (2007). Are facial expressions of emotion produced by categorical affect programs or dynamically driven by appraisal? Emotion, 7(1), 113–130. doi:10.1037/1528-3542.7.1.113.

Schmidt, K. L., Ambadar, Z., Cohn, J. F., & Reed, L. I. (2006). Movement differences between deliberate and spontaneous facial expressions: Zygomaticus major action in smiling. Journal of Nonverbal Behavior, 30(1), 37–52. doi:10.1007/s10919-005-0003-x.

Susskind, J. M., Lee, D. H., Cusi, A., Feiman, R., Grabski, W., & Anderson, A. K. (2008). Expressing fear enhances sensory acquisition. Nature Neuroscience, 11(7), 843–850. doi:10.1038/nn.2138.

Takahashi, N., & Daibo, I. (2008). The results of researches on facial expressions of the Japanese: its issues and prospects. Institute of Electronics, Information, and Communication Engineers, 108(317), 9–10.

Tcherkassof, A., Bollon, T., Dubois, M., Pansu, P., & Adam, J. M. (2007). Facial expressions of emotions: a methodological contribution to the study of spontaneous and dynamic emotional faces. European Journal of Social Psychology, 37(6), 1325–1345. doi:10.1002/ejsp.427.

Van Der Schalk, J., Fischer, A., Doosje, B., Wigboldus, D., Hawk, S., Rotteveel, M., et al. (2011). Convergent and divergent responses to emotional displays of in-group and outgroup. Emotion, 11(2), 286–298. doi:10.1037/a0022582.

Vanhamme, J. (2003). Surprise … surprise. An empirical investigation on how surprise is connected to customer satisfaction (No. ERS-2003-005-MKT). ERIM Report Series Research in Management, Erasmus Research Institute of Management (ERIM). Retrieved from http://hdl.handle.net/1765/273.

Wang, N., Marsella, S., & Hawkins, T. (2008). Individual differences in expressive response: a challenge for ECA design. Proceedings of the 7th International Joint Conference on Autonomous Agents and Multiagent Systems, Portugal, 3, 1289–1292.

Wehrle, T., Kaiser, S., Schmidt, S., & Scherer, K. R. (2000). Studying the dynamics of emotional expression using synthesized facial muscle movements. Journal of Personality and Social Psychology, 78(1), 105–119. doi:10.1037/0022-3514.78.1.105.

Weiss, F., Blum, G. S., & Gleberman, L. (1987). Anatomically based measurement of facial expressions in simulated versus hypnotically induced affect. Motivation and Emotion, 11(1), 67–81. doi:10.1007/BF00992214.

Zeng, Z., Pantic, M., Roisman, G. I., & Huang, T. S. (2009). A survey of affect recognition methods: audio, visual, and spontaneous expressions. IEEE Transactions on Pattern Analysis and Machine Intelligence, 31(1), 39–58. doi:10.1109/TPAMI.2008.52.

Ethics declarations

Funding

This research was supported by the Center of Innovation Program of the Japan Science and Technology Agency (JST) and by the Japan Society for the Promotion of Science (JSPS) KAKENHI Grants 26285168 and 25870467.

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the Ethical Committee of the Graduate School of Education, Hiroshima University.

Informed Consent

Written informed consent was obtained from all individual participants before and after the investigation.

About this article

Cite this article

Namba, S., Makihara, S., Kabir, R.S. et al. Spontaneous Facial Expressions Are Different from Posed Facial Expressions: Morphological Properties and Dynamic Sequences. Curr Psychol 36, 593–605 (2017). https://doi.org/10.1007/s12144-016-9448-9

DOI: https://doi.org/10.1007/s12144-016-9448-9