Abstract

Introduction

The Sniffin’ Sticks test battery is currently considered the best alternative for the measurement of olfactory threshold, discrimination and identification capabilities. These tests still suffer from limitations, however. Most noticeably, the olfactory threshold test is an intensive task which requires participants to smell a large number of olfactory stimuli. This proves especially problematic when measuring olfactory performance of elderly patients or screening research subjects, as sensory adaptation plays an important role in olfactory perception.

Methods

In the current study, we have determined that the cause of this limitation lies with the test’s single-staircase procedure (SSP). Consequently, we have devised an alternative ascending limits procedure (ALP). We compared data obtained using both procedures, following a within-subject design with 40 participants. Olfactory threshold scores as well as the number of trials required to complete the two procedures were investigated.

Results

The results show that the ALP provides reliable and correct olfactory threshold ratings, as the values showed a good correlation with those obtained using the SSP and mean values did not differ significantly. Task duration, however, did show a highly significant difference: completing the SSP required over 40% more trials than completing the ALP.

Discussion

The methodological improvement presented here can save time and, more importantly, reduce participants’ cognitive and sensory strain, which is not only more comfortable but also limits the influence of adaptation, making any measured data more reliable.

Implications

Improving standard screening methods can directly enhance the reliability of any future study using this procedure.

Introduction

Methods of psychophysics have been continually improving for over one and a half centuries (Fechner 1860). No matter the modality of interest, all psychophysical methods, and research in general, are forced to make a trade-off between accuracy and measurement time. In the visual domain this trade-off is relatively small, as functional paradigms can be designed using a short stimulus time as well as a short inter-stimulus interval, easily allowing the presentation of over 1000 trials per subject (Zizlsperger et al. 2012; Zizlsperger et al. 2014). Using such paradigms to investigate olfactory perception is impossible due to multiple factors including the type of stimulus required and relatively strong sensory adaptation and saturation processes (Dalton 2000; Jacob et al. 2003).

The Connecticut Chemosensory Clinical Research Center (CCCRC) test (Cain et al. 1988) plays an important role in the development of olfactory threshold testing, forming a basis for current and future threshold testing methods. This test used plastic squeeze bottles containing an n-butanol solution in up to 11 different concentrations (4% at highest concentration, diluted by a factor of three per step). The test was performed following a one-directional, two-alternative forced choice ascending limits procedure. The test ended upon four consecutive correct answers at the same concentration level. A major limitation of this two-alternative procedure is the relatively high chance level for correct answers: with a criterion of four sequential correct answers, the probability of establishing an incorrect threshold by guessing alone is (1/2)^4 = 6.25% per concentration level. Hummel et al. (1997) described further limitations of this test and similar alternatives, regarding task specificity, limited reusability and cost-effectiveness. Consequently, Hummel et al. (1997) introduced the “Sniffin’ Sticks” test which, among others, includes a threshold testing method. Their test also uses n-butanol as a target odour, but the authors replaced the squeeze bottles with plastic odour-filled pens. The test uses 16 concentration steps (4% at highest concentration, diluted by a factor of two per step) and a three-alternative forced choice paradigm and follows a single-staircase procedure (Doty 1991). This test has become a widely accepted standard for both clinical and research use.

Although the Sniffin’ Sticks threshold test has significantly improved olfactory research methodology, it introduces a new limitation. By combining a three-alternative forced choice task with a staircase procedure with a potential total of 16 intensity levels, the number of stimuli that a patient or research subject must smell to determine their olfactory threshold increases drastically. This additional attentional load is especially problematic when the test is conducted on elderly or diseased patients or when the threshold task or, alternatively, the full olfactory testing battery for threshold, discrimination and identification (TDI) performance (Hummel et al. 1997; Wolfensberger 2000) is used as a screening method before further research participation. To reduce the number of trials and time required to obtain olfactory threshold scores, we propose and verify a testing method that uses the Sniffin’ Sticks stimulation method and follows an ascending limits procedure.

Materials and Methods

Participants

Forty-two physically healthy participants (26 females) aged between 19 and 53 years (mean (M) age = 28 years; standard deviation (SD) = 8.5 years) took part in the study. Participants were screened for known olfactory deficits or limitations. Data of two subjects, who reported losing attention during one or both tasks, were excluded from further analysis (mean age after exclusion = 28 years, SD = 8.6 years, 24 females).

Sniffin’ Sticks

The Sniffin’ Sticks describe the olfactory testing materials first used by Kobal et al. (1996) and Hummel et al. (1997). These materials consist of felt tip plastic pens containing 4 ml of liquid odourant solutions. To present an odour, pen caps were removed shortly before presenting each pen and reattached directly after to prevent the pens from drying out or releasing more odours into the room. The Sniffin’ Sticks set used here to measure olfactory thresholds consists of 16 target pens filled with n-butanol diluted in aqua conservata (purified water with 0.075% methyl-4-hydroxybenzoate and 0.025% propyl-4-hydroxybenzoate), adhering to the standards of the German Medicinal Materials Codex (Deutscher Arzneimittel-Codex, DAC). The pen with the highest concentration contained 4% n-butanol; each subsequent level was diluted by a factor of two. Additionally, the set contained 32 blanks (two per target), containing just the solvent.

Testing Procedure

Participants were instructed to not use strong smelling shampoo, cologne or perfume and to not smoke, eat or drink anything except water up to 1 h before testing. Olfactory stimuli were presented using three Sniffin’ Sticks per trial. Two Sniffin’ Sticks per triplet contained only the relatively odourless solvent; the third contained the n-butanol solution. Each Sniffin’ Stick was presented at approximately 2 cm from the subject’s nostrils, alternating between them twice over a total presentation duration of, approximately, 4 s. A break of 6 s was given between the presentations of each single pen to allow the participant’s nose to clear. A break of at least 20 s was assured between triplet presentations. As such, the minimum time needed per triplet equals 44 s. Participants completed the tasks twice, using both a single-staircase and an ascending limits procedure. The two procedures were separated by a 15-min break during which the participants filled out a sociodemographic questionnaire. Testing order was pseudorandomised between participants. During the explanation of the task, participants were presented the highest intensity pen to familiarise them with the target odour and to exclude the possibility of an anosmia towards this odourant.
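The minimum triplet duration stated above follows directly from the presentation and break times. The following is a minimal sketch of that arithmetic; the constant and function names are illustrative and not part of the original protocol.

```python
# Durations in seconds, taken from the testing procedure described above.
PEN_PRESENTATION_S = 4         # approximate presentation time per pen
BREAK_BETWEEN_PENS_S = 6       # pause between single pens within a triplet
BREAK_BETWEEN_TRIPLETS_S = 20  # minimum pause before the next triplet
PENS_PER_TRIPLET = 3


def min_triplet_duration_s() -> int:
    """Minimum time for one triplet: three pens, two short breaks, one long break."""
    presentations = PENS_PER_TRIPLET * PEN_PRESENTATION_S
    short_breaks = (PENS_PER_TRIPLET - 1) * BREAK_BETWEEN_PENS_S
    return presentations + short_breaks + BREAK_BETWEEN_TRIPLETS_S


print(min_triplet_duration_s())
```

This gives 12 s of presentation, 12 s of short breaks and one 20 s break, for 44 s in total.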

The single-staircase procedure (SSP) was randomised to start with a triplet of the lowest or second lowest intensity level. Incorrectly identifying the n-butanol pen would increase the testing intensity by two concentration steps. This procedure was repeated until the participant correctly identified the n-butanol pen of a single intensity level twice in a row; this triggered a reversal of the staircase, reducing the intensity level by one, continuing until the next incorrect answer. This would again trigger a reversal of the staircase, though the intensity level increased by just one. This procedure was then repeated until the seventh reversal. The final threshold score was determined by averaging the last four reversals.
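The staircase logic described above can be sketched as follows. This is an illustration under stated assumptions, not the implementation used in the study: levels are indexed upward from the lowest concentration (1) to the highest (16), two consecutive correct answers are assumed to be required for every downward step (not only for the first reversal), and `respond`, `max_trials` and the other names are hypothetical.

```python
from statistics import mean


def single_staircase(respond, start_level=1, n_levels=16,
                     n_reversals=7, n_averaged=4, max_trials=200):
    """Run a single-staircase procedure.

    respond(level) -> bool says whether the target pen was identified.
    Returns (threshold, trials); threshold is None if the staircase did
    not reach n_reversals within max_trials (a guard added for this sketch).
    """
    level = start_level
    direction = "up"   # the staircase starts by ascending
    streak = 0         # consecutive correct answers at the current level
    trials = 0
    reversals = []     # levels at which the direction changed

    while len(reversals) < n_reversals and trials < max_trials:
        trials += 1
        if respond(level):
            streak += 1
            if streak == 2:                 # two correct in a row: step down
                if direction == "up":
                    reversals.append(level)
                    direction = "down"
                level = max(1, level - 1)
                streak = 0
        else:                               # one incorrect answer: step up
            if direction == "down":
                reversals.append(level)
                direction = "up"
            # Steps of two before the first reversal, steps of one afterwards.
            step = 2 if not reversals else 1
            level = min(n_levels, level + step)
            streak = 0

    if len(reversals) < n_reversals:
        return None, trials
    return mean(reversals[-n_averaged:]), trials


# Idealised observer who detects every level at or above level 6.
threshold, trials = single_staircase(lambda level: level >= 6)
print(threshold, trials)
```

The deterministic observer in the usage line is only a check of the control flow; real responses near threshold are probabilistic, which is why the staircase averages several reversals.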

The ascending limits procedure (ALP) started with a triplet of the lowest intensity level. An incorrect answer increased the intensity level by one step; correct answers were followed by a triplet of the same intensity. The task ended when a participant correctly identified the target at a single intensity level four times in a row; the threshold score equals this last intensity level. In the scenario where a participant correctly identified the n-butanol pen three times in a row before providing an incorrect answer, an additional trial was presented at the same intensity level. Correctly identifying the n-butanol pen in this additional trial did not end the task. Instead, the responses to these additional trials were logged for later analysis. These additional trials were conducted to account for potential accidental misses of above-threshold intensities, which could artificially increase the ALP threshold scores.
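The ALP rules above, including the logged additional trial, can be sketched as follows. This is a minimal illustration rather than the study's own code: levels are indexed upward from the lowest concentration, the return value `None` for a run that exhausts all levels is an assumption, and `respond` and `extra_log` are hypothetical names.

```python
def ascending_limits(respond, n_levels=16, criterion=4):
    """Run an ascending limits procedure.

    respond(level) -> bool says whether the target pen was identified.
    Returns (threshold_level, trials, extra_log). extra_log holds the
    outcome of each additional trial given after three correct answers
    followed by a miss; those trials count towards the trial total but
    never end the task.
    """
    level, streak, trials = 1, 0, 0
    extra_log = []

    while level <= n_levels:
        trials += 1
        if respond(level):
            streak += 1
            if streak == criterion:        # four correct in a row: done
                return level, trials, extra_log
        else:
            if streak == criterion - 1:    # three correct, then a miss
                trials += 1
                extra_log.append((level, respond(level)))
            streak = 0
            level += 1                     # one step up after any miss

    return None, trials, extra_log         # assumption: no threshold found


# Idealised observer who detects every level at or above level 5.
print(ascending_limits(lambda level: level >= 5))
```

Because the level only ever moves upward, the number of trials grows with the level at which the criterion is met, which is the structural link between trial count and ALP score noted in the Results.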

In addition to obtaining threshold scores, the number of triplets presented (trials) for each test was documented.

Data Analysis

Data were analysed using IBM SPSS Statistics software Version 22 (Armonk, New York, United States) and MATLAB (The MathWorks, Inc., Natick, Massachusetts, United States). Data obtained through both procedures were compared and verified using multiple statistical tests. First, in order to identify covariates and variables of interest, we ran a linear mixed model with maximum likelihood estimation for “Test score” (measured olfactory threshold). This yielded an optimal model including the repeated fixed variable “Test type” (defining which of the procedures was used) and the fixed variable “Test order” (defining whether the procedure was performed first or second); gender and age were added as covariates. Additionally, the interaction term “Test type” × “Test order” was created, allowing us to explore whether the effect of test order differed between testing procedures. Post hoc tests were subjected to Bonferroni corrections to account for multiple dependent comparisons.

In order to verify the data obtained using the ALP, we calculated Pearson’s r correlation between the SSP and ALP test scores (ScoreSSP and ScoreALP). To help interpret differences in correlations compared to previous studies, mean absolute error (MAE) values were calculated for our data through \( \frac{\sum_{i=1}^n\left|{\mathrm{Score}}_{\mathrm{SSP},i}-{\mathrm{Score}}_{\mathrm{ALP},i}\right|}{n} \) and for data obtained by Albrecht et al. (2008), which were made available to us, at four time points, separated by 35 min, 105 min, and 35.1 days, through \( \frac{\sum_{i=1}^n\left|{\mathrm{Score}}_{\mathrm{Time}\ \mathrm{point}\ 1,i}-{\mathrm{Score}}_{\mathrm{Time}\ \mathrm{point}\ x,i}\right|}{n} \), where i indexes the n subjects and x represents each follow-up measurement. An MAE approach was chosen over the mean squared error alternative to avoid differential influences of outliers resulting from differential sample sizes between data sets.
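The MAE defined above is the mean of the per-subject absolute score differences. A minimal sketch follows; the function name and the score values are hypothetical and serve only to show the calculation, they are not data from the study.

```python
def mean_absolute_error(scores_a, scores_b):
    """Mean absolute difference between paired scores (one pair per subject)."""
    if len(scores_a) != len(scores_b):
        raise ValueError("both score lists must have one entry per subject")
    if not scores_a:
        raise ValueError("at least one subject is required")
    return sum(abs(a - b) for a, b in zip(scores_a, scores_b)) / len(scores_a)


# Hypothetical paired threshold scores for four subjects (not study data).
score_ssp = [8.5, 7.0, 9.25, 6.0]
score_alp = [8, 9, 8, 6]
print(mean_absolute_error(score_ssp, score_alp))
```

The same function applies to the test-retest comparison by passing the scores of time point 1 and of a follow-up time point x.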

To compare the duration of the procedures, we ran a linear mixed model, similar to the one for “Test score”, for the measure “Trials”, which defines the number of Sniffin’ Sticks triplets that had to be presented in order to obtain an olfactory threshold.

Results

With regard to threshold scores, no significant effects of age (F(1, 40) = 0.000, p = .995) or gender (F(1, 40) = 0.391, p = .536) were found. Mean threshold scores, 8.2 (SD = 2.17) for SSP and 8.0 (SD = 2.38) for ALP, did not differ significantly (F(1, 40) = 0.488, p = .489). The main effect of testing order did not approach significance either (F(1, 40) = 0.014, p = .908). Interestingly, test type and test order did show a significant interaction (F(1, 40) = 6.79, p = .013). Post hoc t tests indicate that subjects who performed the ALP first, obtained higher scores for both the SSP (mean difference = 1.58, p = .017) and the ALP (mean difference = 1.50, p = .041) compared to subjects who performed the SSP first (Fig. 1).

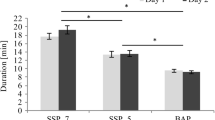

Fig. 1 Mean olfactory threshold scores and trial numbers. Mean olfactory threshold scores (error bars represent SD), separated by test type (a) and test order (b). Neither of the effects approached significance. (c) Interaction between test order and test type. Scores obtained using the SSP were higher when the test was performed second; ALP scores were higher when the test was performed first. (d) Number of trials required to complete the SSP and ALP. Each trial consists of three Sniffin’ Sticks. The SSP took over 40% more trials to complete, compared to the ALP. Statistical significance is indicated by one asterisk for p < .05 and two asterisks for p < .001

Examining the difference in threshold scores between the two procedures further, we found a strong, positive correlation (r(38) = .591, p < .001, Fig. 2) between ScoreSSP and ScoreALP. The MAE for our current data was 1.60. For the data obtained by Albrecht et al. (2008), the MAEs for time points 2, 3 and 4, each compared to the score at time point 1, equal 0.92, 0.92 and 1.79, respectively.

When comparing test duration through the number of trials, we found a strong, significant effect of test type (F(1, 74) = 48.8, p < .001); on average, participants were required to smell an additional 6.7 (range = −7 to 17) triplets in order to complete the SSP (M = 23.25 trials, SD = 3.88) compared to the ALP (M = 16.55 trials, SD = 5.09). Assuming a minimum trial duration of 44 s (three stimuli, two short breaks and one long break before the next triplet), this translates to a reduction in the time required of, on average, 5 min, ranging up to 12 min. No significant effects were found for age (F(1, 74) = 2.26, p = .140) and gender (F(1, 74) = 0.773, p = .382). Similar to the result for threshold score, no significant main effect for test order was established (F(1, 74) = 0.611, p = .437), yet a significant interaction was found with test type (F(1, 75) = 4.60, p = .035). Neither of the post hoc comparisons reached significance (p = .260 and p = .073 for SSP and ALP, respectively), indicating a relatively weak interaction. Note that, due to the structure of the procedure, the number of trials required to complete the ALP correlated significantly with ScoreALP (r(38) = −0.839, p < .001), while this was not the case for the SSP and ScoreSSP (r(38) = −0.108, p = .507), which would explain this effect.
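The conversion from trial counts to time saved can be sketched as follows, using the mean trial numbers and the 44 s minimum triplet duration reported above. The variable and function names are illustrative assumptions.

```python
TRIPLET_DURATION_S = 44     # minimum duration of one triplet, in seconds

MEAN_TRIALS_SSP = 23.25     # reported mean number of triplets, SSP
MEAN_TRIALS_ALP = 16.55     # reported mean number of triplets, ALP
MAX_EXTRA_TRIALS = 17       # upper end of the reported range


def extra_minutes(extra_trials, triplet_s=TRIPLET_DURATION_S):
    """Minimum additional testing time, in minutes, for extra triplets."""
    return extra_trials * triplet_s / 60


mean_extra_trials = MEAN_TRIALS_SSP - MEAN_TRIALS_ALP
relative_increase = mean_extra_trials / MEAN_TRIALS_ALP

print(f"mean extra trials: {mean_extra_trials:.1f}")
print(f"relative increase: {relative_increase:.1%}")
print(f"mean extra time:   {extra_minutes(mean_extra_trials):.1f} min")
print(f"max extra time:    {extra_minutes(MAX_EXTRA_TRIALS):.1f} min")
```

Under these inputs the SSP needs roughly 40% more triplets, which corresponds to a little under 5 min on average and about 12.5 min at the upper end of the range. These are lower bounds, since 44 s is the minimum time per triplet.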

Discussion

Regarding threshold levels, our results indicate that using our iteration of an ALP yields olfactory threshold values similar to those obtained through the SSP which is commonly used for the Sniffin’ Sticks olfactory threshold test. Subjects’ age did not influence their respective olfactory threshold scores. While Hummel et al. (1997) did report a significant age effect, this is, most likely, related to the higher mean value and wider range of age (M = 49.5, range = 18–84 years). For example, Albrecht et al. (2008), whose subjects’ age (M = 27.9) resembled our sample (M = 28), also failed to show an effect of age. Furthermore, mean threshold scores did not differ between genders or testing orders.

Comparing the total number of trials required to complete each of the threshold tasks, we find that the SSP took over 40% more trials than the ALP did. Reducing the time required to complete the task by an average of 5 min is a small yet useful benefit; the main improvement is, however, seen in the reduced sensory and cognitive strain placed on research participants or patients, limiting the influence of adaptation or exhaustion. Note that in the current ALP iteration, we included additional trials when participants provided three correct answers followed by an incorrect answer. Initially, we were concerned that “accidental misses” would artificially lower the mean threshold scores, since these would have a larger influence on the ALP than on the SSP; this was, however, not the case. As a result, these additional trials remain unused by the actual task and could thus, theoretically, be subtracted from the total number of trials required, further increasing the average difference between trial numbers of both tasks to 7.1, rendering the SSP 44% longer than the ALP. For statistical correctness, however, they were included in all analyses as we cannot, with absolute certainty, state that these additional trials did not affect the measurement.

The interaction between test order and test type revealed that participants who performed the ALP first obtained higher scores, indicating higher olfactory sensitivity, on both procedures. We have considered multiple scenarios which might explain this effect; in both hypothetical cases of participant fatigue and a learning effect, we would expect to see a main effect rather than an interaction, effectively ruling out these explanations. In another hypothesised scenario where only the test with the longer duration (SSP) induces fatigue, we would expect to see an interaction with a single significant slope rather than two anti-correlated slopes, as is currently the case. Having ruled out these causes, we conclude that this small effect is the result of between-subject differences, caused by several subjects who scored relatively high or low on both tasks; these differences are, however, not harmful to our data as our analyses of interest rely on within-subject differences.

To verify the reliability of the ALP scores, we correlated them with the SSP scores, yielding a significant Pearson’s r of 0.59. Having tested each subject twice in short succession, we expect to have limited the influence of any factors besides procedure type as much as possible (Stevens et al. 1988). While olfactory thresholds are relatively variable, any remaining factors are expected to have affected both procedures equally. Several studies have conducted test-retest reliability measures for the SSP (Albrecht et al. 2008; Hummel et al. 1997; Oleszkiewicz et al. 2017), as commonly used with the Sniffin’ Sticks, in addition to multiple suggested variations on this procedure. Results of several of these tests range from r = 0.20 to r = 0.85. For the initial development of the Sniffin’ Sticks olfactory threshold test, Hummel et al. (1997) calculated test-retest reliability and also compared threshold scores obtained using the Sniffin’ Sticks to those obtained using a variation on the CCCRC threshold task (Cain et al. 1988). For the Sniffin’ Sticks, the authors report a test-retest reliability of 0.61. For the CCCRC variation, a test-retest reliability of 0.34 was reported. Scores obtained through both tests showed a correlation of 0.36. Using the Sniffin’ Sticks, Albrecht et al. (2008) obtained relatively high test-retest reliability values when comparing tests performed within 30 to 105 min of each other (r(62) = 0.79–0.85); these values dropped drastically when test results were correlated with those obtained several weeks later (r(62) = 0.43–0.54). As we have calculated mean absolute errors (MAE) for both our data and those obtained by Albrecht et al. (2008), we can quantify this difference in variation; in the current study, threshold scores obtained using the different procedures showed a mean absolute difference of 1.60 intensity levels. The olfactory threshold as measured by Albrecht et al. (2008) at the first time point showed a mean absolute difference of 0.92 intensity levels compared to the second and third measurements; this difference increased to 1.79 intensity levels when compared to the fourth measurement per subject. This increase in variance, of a maximum of 0.68 intensity levels, is well within the acceptable range considering the benefits offered by this procedure, especially when considering the lower test-retest reliability reported by the other studies discussed here.

In a recent study, Oleszkiewicz et al. (2017) showed that increasing the chemical complexity of odours used improved measurement reliability for an SSP. Using standard n-butanol solutions, presented with Sniffin’ Sticks, the authors report a test-retest reliability of just 0.31. This value dropped to a non-significant correlation of 0.2 when using a phenyl ethyl alcohol (PEA) solution, presented in glass bottles. Combined with the findings of previous studies (Zernecke et al. 2011; Zernecke et al. 2010) that failed to show any correlation between threshold scores obtained using n-butanol, PEA and isoamyl butyrate in normosmic subjects, this indicates that n-butanol provides the most reliable threshold values. When presenting mixtures of three or six odours, however, reliability of threshold scores increased to 0.57 and 0.56, respectively. Combining these findings with our own data raises the idea of maximising reliability by measuring olfactory thresholds using odours of high chemical complexity, tested using Sniffin’ Sticks following an ALP.

By exchanging the SSP for an ALP, the current study altered only a single methodological variable compared to the psychophysical testing procedure used by Hummel et al. (1997). On a larger scale, future improvements should explore all variables involved in these methods. Two main variables, only briefly touched upon in the current study, are the number of alternatives per trial and the pre-set, threshold-defining limits. While an increase to four or more stimuli per trial becomes problematic for olfaction, a reduction to two, similar to Cain et al. (1988), can be considered. This significant increase in chance level should, however, be compensated by a change in the threshold-defining limit; increasing the number of correct sequential answers from four to six brings the influence of chance to a level similar to that in the current study. Similarly, reducing the number of turning points in the SSP reduces the number of trials required at the cost of accuracy; the size of this effect would have to be verified in a future study.
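The chance levels behind this comparison can be checked with a short calculation: with m alternatives and a criterion of k consecutive correct answers, pure guessing meets the criterion with probability (1/m)^k. The sketch below uses an illustrative function name and exact fractions.

```python
from fractions import Fraction


def chance_of_guessing_criterion(n_alternatives, n_consecutive):
    """Probability of n_consecutive correct answers in a row by guessing alone."""
    return Fraction(1, n_alternatives) ** n_consecutive


designs = {
    "two alternatives, four in a row": (2, 4),    # as in Cain et al. (1988)
    "three alternatives, four in a row": (3, 4),  # ALP in the current study
    "two alternatives, six in a row": (2, 6),     # suggested compensation
}

for label, (m, k) in designs.items():
    p = chance_of_guessing_criterion(m, k)
    print(f"{label}: {p} = {float(p):.2%}")
```

The three cases evaluate to 1/16 (6.25%), 1/81 (about 1.2%) and 1/64 (about 1.6%), so six consecutive correct answers in a two-alternative task bring the chance level close to that of the three-alternative, four-answer criterion.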

Additionally, more complex methods can be considered; Linschoten et al. (2001) provided a thorough comparison of an ALP, a one-up-two-down staircase method (Wetherill and Levitt 1965), and a maximum-likelihood parameter estimation by sequential testing (ML-PEST) method (Hall 1968), using two-alternative forced choice (AFC) tasks. Rather than comparing results of the different procedures, the authors applied Monte Carlo simulations to estimate predetermined threshold values using each of the three methods. Being a fully adaptive method, ML-PEST sacrifices a certain level of simplicity offered by SSP and, especially, ALP methods for a significant reduction in prediction error. Psychophysiological accuracy will always be limited by what is feasible within the measured modality, what the patient or participant can endure and, at a more basic level, what time and resources are available. This trade-off between accuracy and efficiency should be considered by each researcher on a case-by-case basis; patients have different needs than research participants and a screening serves a different purpose than olfactory sensitivity as a variable. The use of Monte Carlo simulations is an ideal way to bypass the impracticality of comparing a multitude of variables by testing numerous participants; we therefore highly recommend that future studies use this approach to verify the statistical basis of their procedures. It is, however, important that the practicality of any devised method is verified by testing actual participants using actual stimuli, as a theoretical model cannot fully recognise all limitations of a study. Two important, difficult-to-model factors are, for example, participant fatigue and adaptation.
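To illustrate what such a Monte Carlo check might look like for an ALP, the sketch below simulates an observer with a predetermined threshold and repeatedly runs a simplified ALP (without the additional logged trials). Everything here is an assumption made for illustration: the logistic psychometric function, its slope, the level indexing from lowest to highest concentration, and all names. It is not the simulation used by Linschoten et al. (2001).

```python
import math
import random
from statistics import mean


def p_correct(level, threshold, slope=1.0, n_alternatives=3):
    """Assumed psychometric function: guessing floor plus logistic detection."""
    guess = 1 / n_alternatives
    detect = 1 / (1 + math.exp(-slope * (level - threshold)))
    return guess + (1 - guess) * detect


def simulate_alp(threshold, rng, n_levels=16, criterion=4):
    """One simulated ALP run; returns (estimated_level or None, trials)."""
    level, streak, trials = 1, 0, 0
    while level <= n_levels:
        trials += 1
        if rng.random() < p_correct(level, threshold):
            streak += 1
            if streak == criterion:
                return level, trials
        else:
            streak = 0
            level += 1
    return None, trials


def monte_carlo_alp(threshold, n_runs=2000, seed=0):
    """Repeat the simulated ALP and summarise estimates and trial counts."""
    rng = random.Random(seed)  # fixed seed for reproducible results
    runs = [simulate_alp(threshold, rng) for _ in range(n_runs)]
    estimates = [lvl for lvl, _ in runs if lvl is not None]
    return {
        "mean_estimate": mean(estimates) if estimates else None,
        "mean_trials": mean(t for _, t in runs),
        "n_completed": len(estimates),
    }


print(monte_carlo_alp(threshold=8.0))
```

Comparing `mean_estimate` with the predetermined threshold gives the bias of the procedure under the assumed observer model, and `mean_trials` gives its expected cost. Fatigue and adaptation are not modelled, in line with the caveat above.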

As for the current study, considering the relatively strong correlation and small MAE between thresholds obtained using the ALP and the proven SSP, we can state that olfactory sensitivity scores as measured with Sniffin’ Sticks following the ALP described here are a reliable alternative to the current SSP standard. Above all, this alternative requires a participant to smell 21 fewer odour-filled pens, rendering the test more comfortable and suitable for participants, in clinical as well as research settings, and researchers alike.

Authorship Contributions

Rik Sijben designed and performed the study, analysed the data and wrote the manuscript. Claudia Panzram prepared, tested and maintained olfactory stimuli and assisted with obtaining and analysing of the data. Rea Rodriguez-Raecke assisted in data analysis and reviewed the manuscript. Thomas Haarmeier provided analytic tools required for data analysis and interpretation, obtained funding for the project and reviewed the manuscript. Jessica Freiherr designed the study, assisted in data analysis, obtained funding for the project and reviewed the manuscript.

References

Albrecht J, Anzinger A, Kopietz R, Schöpf V, Kleemann AM, Pollatos O, Wiesmann M (2008) Test–retest reliability of the olfactory detection threshold test of the Sniffin’Sticks. Chem Senses 33(5):461–467. https://doi.org/10.1093/chemse/bjn013

Cain WS, Goodspeed RB, Gent JF, Leonard G (1988) Evaluation of olfactory dysfunction in the Connecticut chemosensory clinical research center. Laryngoscope 98(1):83–88. https://doi.org/10.1288/00005537-198801000-00017

Dalton P (2000) Psychophysical and behavioral characteristics of olfactory adaptation. Chem Senses 25(4):487–492. https://doi.org/10.1093/chemse/25.4.487

Doty RL (1991) Olfactory system. Smell and taste in health and disease. Raven Press, New York

Fechner GT (1860) Elemente der Psychophysik vol 1. Breitkopf und Härtel, Leipzig

Hall J (1968) Maximum-likelihood sequential procedure for estimation of psychometric functions. The Journal of the Acoustical Society of America 44(1):370–370. https://doi.org/10.1121/1.1970490

Hummel T, Sekinger B, Wolf SR, Pauli E, Kobal G (1997) ‘Sniffin’ Sticks’: olfactory performance assessed by the combined testing of odor identification, odor discrimination and olfactory threshold. Chem Senses 22(1):39–52. https://doi.org/10.1093/chemse/22.1.39

Jacob TJ, Fraser C, Wang L, Walker V, O’Connor S (2003) Psychophysical evaluation of responses to pleasant and mal-odour stimulation in human subjects: adaptation, dose response and gender differences. International Journal of Psychophysiology: Official Journal of the International Organization of Psychophysiology 48(1):67–80. https://doi.org/10.1016/S0167-8760(03)00020-5

Kobal G, Hummel T, Sekinger B, Barz S, Roscher S, Wolf S (1996) “Sniffin’sticks”: screening of olfactory performance. Rhinology 34(4):222–226

Linschoten MR, Harvey LO, Eller PM, Jafek BW (2001) Fast and accurate measurement of taste and smell thresholds using a maximum-likelihood adaptive staircase procedure. Attention Perception Psychophysics 63(8):1330–1347. https://doi.org/10.3758/BF03194546

Oleszkiewicz A, Pellegrino R, Pusch K, Margot C, Hummel T (2017) Chemical complexity of odors increases reliability of olfactory threshold testing. Sci Rep 7:39977. https://doi.org/10.1038/srep39977

Stevens JC, Cain WS, Burke RJ (1988) Variability of olfactory thresholds. Chem Senses 13(4):643–653. https://doi.org/10.1093/chemse/13.4.643

Wetherill G, Levitt H (1965) Sequential estimation of points on a psychometric function. Br J Math Stat Psychol 18(1):1–10. https://doi.org/10.1111/j.2044-8317.1965.tb00689.x

Wolfensberger M (2000) Sniffin’Sticks: a new olfactory test battery. Acta Otolaryngol 120(2):303–306. https://doi.org/10.1080/000164800750001134

Zernecke FT, Haegler K, Albrecht J, Bruckmann H, Wiesmann M (2011) Correlation analyses of detection thresholds of four different odorants. Rhinology 49:331–336

Zernecke et al (2010) Comparison of two different odorants in an olfactory detection threshold test of the Sniffin’Sticks. Rhinology 48:368–373

Zizlsperger L, Sauvigny T, Haarmeier T (2012) Selective attention increases choice certainty in human decision making. PLoS One 7(7):e41136. https://doi.org/10.1371/journal.pone.0041136

Zizlsperger L, Sauvigny T, Händel B, Haarmeier T (2014) Cortical representations of confidence in a visual perceptual decision. Nat Commun 5:3940. https://doi.org/10.1038/ncomms4940

Ethics declarations

Funding

The project was funded by a grant from the Interdisciplinary Centre for Clinical Research within the Faculty of Medicine at the RWTH Aachen University (IZKF Project N7-9).

Conflict of Interest

The authors declare that they have no conflicts of interest.

Ethical Approval

All procedures performed in studies involving human participants were in accordance with the ethical standards of the institutional and/or national research committee and with the 1964 Helsinki declaration and its later amendments or comparable ethical standards.

The study was approved by the ethics board of the Medical Faculty of the University Hospital, RWTH Aachen University.

Informed Consent

Informed consent was obtained from all individual participants included in the study.

Cite this article

Sijben, R., Panzram, C., Rodriguez-Raecke, R. et al. Fast Olfactory Threshold Determination Using an Ascending Limits Procedure. Chem. Percept. 11, 35–41 (2018). https://doi.org/10.1007/s12078-017-9239-1

DOI: https://doi.org/10.1007/s12078-017-9239-1