Abstract

An RGB YCbCr Processing method (RYPro) is proposed for underwater images, which commonly suffer from low contrast and poor color quality. The degradation in image quality may be attributed to absorption and backscattering of light by suspended underwater particles. Moreover, as the depth increases, different colors are absorbed by the surrounding medium depending on their wavelengths. In particular, blue or green dominates the underwater ambience, an effect known as color cast. Enhancement therefore remains an essential preprocessing operation before any further processing of the image. Color equalization is a widely adopted approach for underwater image enhancement, and traditional methods normally apply blind color equalization to the image under test. In the present work, the processing sequence of the proposed method comprises noise removal using linear and non-linear filters, followed by adaptive contrast correction in the RGB and YCbCr color planes. Performance of the proposed method is evaluated and compared with three golden methods, namely Gray World (GW), White Patch (WP) and Adobe Photoshop Equalization (APE), and with a recently developed method entitled “Unsupervised Color Correction Method (UCM)”. In view of its simplicity and computational ease, the proposed method is recommended for real-time applications. Suitability of the proposed method is validated by real-time implementation during the testing of the Autonomous Underwater Vehicle (AUV-150) developed indigenously by CSIR-CMERI.

1 Introduction

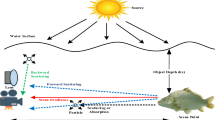

It is well known that underwater image processing differs extensively from visual image processing, primarily due to three major underwater channel impairments, i.e. absorption, scattering and refraction [1–5]. These factors, responsible for introduction of noise, color cast, low contrast and lower brightness, motivate the development of suitable algorithms to nullify these effects without any manual supervision. Similar is the issue of color fading, whereby colors like red and yellow almost disappear with increasing depths [6], which is the reason for domination of either the blue or the green color. Numerous spatial domain methods have been developed to filter out the aforementioned image quality impairments.

To address the color cast problem, Iqbal et al [7] proposed a color equalization method to enhance the quality of underwater images. The method involves calculation of the scale factor of the dominating color plane in the RGB color space for equalizing the remaining colors. However, blind color equalization [7–9] also degrades the color quality of the image, which is highly undesirable. Another method to reduce color cast is based on Beer’s law, which is commonly employed to correct the pixel intensity by calculating the amount of light absorption in water. In this method, missing wavelengths are calculated assuming that all the objects in the scene are at the same depth. Though this approach improves the quality of the image, the assumptions of a homogeneous medium and equal depth of all objects in a scene might not be valid in all situations. Moreover, the color components depend upon various factors like depth of the scene, size of water molecules, density of water medium and local temperature [10], which necessitates precise calibration of the enhancement parameters.

As mentioned earlier, absorption of light by the water is one of the major causes of image quality degradation. In order to compensate for light absorption, underwater vehicles illuminate the ambience with artificial lights during image acquisition, though non-uniform artificial illumination generates shadows in the scene [11]. To counteract these problems, methods based on the physics of image formation have been developed to neutralize the detrimental effects of absorption and backscattering. Schechner & Karpel [3] proposed an algorithm that uses a group of images acquired through a polarizer at different angles to nullify the physical effects of image quality degradation. Improvement in lighting and sensing systems [12] for illuminating an object before capturing the image is an alternative approach among those methods. Another prominent method of addressing the enhancement aspect is to use a lens filter [13], whereby enhancement of the image is ensured by the reduction in absorption and backscattering effects. Since the lens filter absorbs a portion of the illumination, the approach is more appropriate for terrestrial image enhancement [3; 12; 14].

Statistical methods [15] are also employed for color correction using a Markov random field that correlates the prior color information with the current one. In these methods, the underlying assumption is that the neighboring scene is almost similar to that of the current image. In practice, effective utilization of on-board electrical energy is highly important for increased mission endurance of AUVs, and for this reason, on-board lights are typically flashed only at the time of image capture, leading to insufficient information about the neighboring scene. Prior information may also not be similar to the current one in a heterogeneous underwater ambience, which might render enhancement based on statistical methods infeasible. Researchers have also worked on color image enhancement using various thresholding schemes [16], which might not be applicable globally, as predefined threshold values may not be appropriate for images affected by color cast.

In spite of the availability of a wide variety of methods, color cast remains an issue in the context of underwater image enhancement. In this work, to address the color cast aspect, and subsequently improve the contrast and brightness of underwater images, a hybrid transform domain enhancement method has been proposed. The purpose of the proposed RGB YCbCr Processing (RYPro) is to improve the visual quality of the image by mitigating color cast with subsequent contrast enhancement. In the proposed method, instead of conventional blind color equalization, development of an automatic quality enhancement technique has been attempted involving noise reduction using linear and non-linear filters, color enhancement in the RGB color space and lastly enhancement in the YCbCr color space. Performance of the proposed algorithm is evaluated and compared with four prominent methods.

The rest of the paper is organized as follows: Section 2 discusses the contributions of our work. The proposed RYPro method is presented in Section 3. Section 4 presents the performance analysis, and comparison with four prominent methods reported in literature. Section 5 describes the methodology adopted for online implementation. Section 6 concludes the paper with a few inferences and remarks on the RYPro method, and the scope of future work.

2 Key contributions of the work

The basic objective of the present work is to enhance the quality of underwater images in real time. As discussed in Section 1, a wide variety of methods are available for this requirement, each with its inherent advantages and disadvantages. Not all of them can therefore be implemented in real time, at least in the underwater context. In this regard, the authors have perceived a strong need for a suitable algorithm for real-time imaging in the underwater ambience.

The method proposed in this work differs from the traditional color equalization techniques in several aspects. In Iqbal et al [7], the dominant color plane is selected by comparing the maximum intensity values from each of the R, G and B planes; corresponding multiplicative factors are then calculated for color equalization. Owing to the inhomogeneous intensity distribution of underwater imagery, a few pixels may possess larger intensities in the least dominant plane as well. Image enhancement based on correction factors derived from the dominant plane may therefore end up deteriorating image quality. The proposed RYPro method addresses the issue by using contrast stretching and Contrast Limited Adaptive Histogram Equalization (CLAHE) in place of blind color equalization in the less dominant color planes. Judged on five recently developed image quality assessment parameters (described in Section 4), the proposed method outperforms three conventional golden methods and one recently developed method. Current results indicate improved contrast and color enhancement while leaving the visual information of the imagery almost untouched. Furthermore, the time required to execute RYPro is reasonably low, which might justify its usage in real-time image enhancement applications. The use of the proposed RYPro on real-life underwater images captured by AUV-150 demonstrates its real-time suitability.

3 Proposed RYPro method

The present section describes the proposed automatic image quality enhancement technique. As may be seen in figure 1, the basic processing steps of the proposed method are: noise reduction using linear and non-linear filters (to nullify the effect of noise introduced by scattering), adaptive contrast correction in the RGB color space (to neutralize the low contrast and color cast effects due to scattering and refraction, and absorption, respectively) and enhancement of the luminance component (Y) of the YCbCr color space (to diminish the effects of low brightness due to absorption). The following subsections describe the sequence of operations for the proposed algorithm in detail.

3.1 Noise reduction using linear and non-linear filters

It is well known that backscattering of light in the underwater channel effectively introduces noise in the captured image. The effect of such noise is typically mitigated by employing a suitable linear and/or non-linear filter. In this work, a two-dimensional (2D) Weierstrass transform [17], i.e. Gaussian smoothing, having a kernel size of 13×13 and standard deviation (σ) of 0.5 is adopted for linear noise reduction (i.e. noise reduction using linear filters). However, noise reduction with linear filters results in blurring of the noisy edge pixels. To alleviate the backscattering noise (associated with region pixels), anisotropic and median filters are employed in the proposed algorithm (i.e. noise reduction using non-linear filters). Anisotropic diffusion [18] is defined as

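\[ \frac{\partial I}{\partial t} = \operatorname{div}\bigl(c(x,y,t)\,\nabla I\bigr) = c(x,y,t)\,\Delta I + \nabla c \cdot \nabla I \tag{1} \]

where I denotes the image intensity, Δ denotes the Laplacian, ∇ denotes the gradient, div(⋅) is the divergence operator applied to the argument in the parentheses and c(x,y,t) is the diffusion coefficient. The term c(x,y,t) controls the rate of diffusion and is usually chosen as a function of the image gradient so as to preserve the edges of the image under test.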

Perona & Malik [18] and Gerig et al [19] proposed two functions for the diffusion coefficient, which are defined as follows

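\[ c\bigl(\lVert \nabla I \rVert\bigr) = \exp\!\left(-\left(\frac{\lVert \nabla I \rVert}{K}\right)^{2}\right) \tag{2} \]

and

\[ c\bigl(\lVert \nabla I \rVert\bigr) = \frac{1}{1 + \left(\frac{\lVert \nabla I \rVert}{K}\right)^{2}} \tag{3} \]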

It needs to be mentioned here that considering the fact that brightness values and conduction coefficients are linked with the vertices and arcs, respectively [18], the proposed method applies the concept of anisotropic diffusion by discretizing (1) on a square lattice.

In the proposed algorithm, one of the basic objectives is to remove noise using non-linear filters rather than to smooth the image edges. Therefore, the diffusion coefficient defined in (2) (with the diffusion constant K set to unity) is used for correcting the region pixels rather than the boundary points, thereby allowing (2) to decay more rapidly.
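The discretization on a square lattice can be illustrated with a minimal sketch of one explicit diffusion step. It assumes the exponential Perona-Malik coefficient c = exp(−(‖∇I‖/K)²) for (2), a four-neighbour lattice and replicated borders; the function name `perona_malik_step` and the step size `lam` are hypothetical and are not taken from the original implementation.

```python
import math

def perona_malik_step(img, K=1.0, lam=0.25):
    """One explicit anisotropic diffusion step on a 4-neighbour square lattice.

    img is a list of rows of floats. lam is an assumed step size; values up
    to 0.25 keep the explicit 4-neighbour scheme stable.
    """
    rows, cols = len(img), len(img[0])
    out = [row[:] for row in img]
    for y in range(rows):
        for x in range(cols):
            flow = 0.0
            for dy, dx in ((-1, 0), (1, 0), (0, -1), (0, 1)):
                ny = min(max(y + dy, 0), rows - 1)  # replicate border pixels
                nx = min(max(x + dx, 0), cols - 1)
                grad = img[ny][nx] - img[y][x]      # difference along one arc
                c = math.exp(-(grad / K) ** 2)      # conduction coefficient on that arc
                flow += c * grad
            out[y][x] = img[y][x] + lam * flow
    return out
```

With K = 1 the coefficient is practically zero across a strong edge, so region pixels are smoothed while edges are left almost untouched, which matches the intent described above.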

In underwater imaging, light is reflected not only from the target but also from the suspended particles. These reflected rays interfere with the line-of-sight rays, thus introducing the backscattering phenomenon [3]. This constructive/destructive interference ends up as granular noise in the captured underwater image, which is commonly referred to as “speckle”. Such granular noise is also a common feature of synthetic aperture radar (SAR) and ultrasonic imagery, where it is typically suppressed using adaptive or non-adaptive median filters [20; 21]. Motivated by this, as a final step a median filter is used for reduction of speckle and salt-and-pepper noise as defined in (4).
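As an illustration of the median filtering step, the following sketch replaces each pixel by the median of its neighbourhood. The 3×3 window and the replicated borders are assumptions made for the example; the window size used by the authors is not stated here, and `median_filter` is a hypothetical name.

```python
from statistics import median

def median_filter(img, size=3):
    """Replace every pixel by the median of its size x size neighbourhood."""
    r = size // 2
    rows, cols = len(img), len(img[0])
    out = [[0] * cols for _ in range(rows)]
    for y in range(rows):
        for x in range(cols):
            window = [
                img[min(max(y + dy, 0), rows - 1)][min(max(x + dx, 0), cols - 1)]
                for dy in range(-r, r + 1)
                for dx in range(-r, r + 1)
            ]
            out[y][x] = median(window)  # odd window size, so the result is an input value
    return out

# A single "salt" pixel in a uniform patch is removed.
patch = [[10, 10, 10], [10, 255, 10], [10, 10, 10]]
print(median_filter(patch))
```

Because the median is an order statistic, an isolated outlier never reaches the output, which is why the filter suits speckle and salt-and-pepper noise better than a linear average.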

3.2 Enhancement in RGB color space

Underwater images naturally have low contrast and low sharpness, and tend to be dominated by one color. These channel impairments can be reduced to a great extent via methodical processing in the RGB color space after the noise removal discussed in Subsection 3.1. Figure 2 depicts the sequence of operations in the RGB color space adopted in the proposed method for this purpose.

3.2.1 Contrast stretching

Due to low contrast of underwater images, the dynamic range of the histogram is quite low. Contrast stretching is therefore adopted to redistribute the pixel values between 0 and 255. A contrast stretching algorithm [7] uses a linear scaling function of the pixel values as

\[ I_N(x,y) = \bigl(I(x,y) - I_{Min}\bigr)\,\frac{I_{dMax} - I_{dMin}}{I_{Max} - I_{Min}} + I_{dMin} \tag{5} \]

where \(I_N(x,y)\) = normalized pixel intensity value after contrast stretching;

\(I(x,y)\) = pixel intensity value before contrast stretching;

\(I_{Min}\) = lowest intensity of the parent image;

\(I_{Max}\) = highest intensity of the parent image;

\(I_{dMin}\) = minimum pixel intensity in the desired range;

\(I_{dMax}\) = maximum pixel intensity in the desired range.

A contrast stretching operation assumes sufficient dynamic range in the image signal, which is not the case for underwater imagery. For this reason, the minimum and maximum pixel intensity values are obtained from the parent image in the proposed method. It is observed that on applying the contrast stretching algorithm to the less dominant color planes (estimated by comparing the average pixel intensity values of the R, G and B planes) of an underwater color image, the low contrast problem is adequately addressed, producing an image suitable for further processing.
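The selection of the less dominant planes and their linear stretching can be sketched as follows. This is a minimal illustration, assuming that the dominant plane is the one with the largest mean intensity and that the desired range is 0 to 255; the names `stretch` and `stretch_less_dominant` are hypothetical.

```python
def stretch(plane, d_min=0, d_max=255):
    """Linear contrast stretch: (I - I_min) * (d_max - d_min) / (I_max - I_min) + d_min."""
    lo = min(min(row) for row in plane)
    hi = max(max(row) for row in plane)
    if hi == lo:                      # flat plane: nothing to stretch
        return [row[:] for row in plane]
    scale = (d_max - d_min) / (hi - lo)
    return [[(v - lo) * scale + d_min for v in row] for row in plane]

def stretch_less_dominant(r, g, b):
    """Stretch every plane except the one with the largest mean intensity."""
    planes = {"R": r, "G": g, "B": b}
    means = {k: sum(map(sum, p)) / (len(p) * len(p[0])) for k, p in planes.items()}
    dominant = max(means, key=means.get)
    stretched = {k: (p if k == dominant else stretch(p)) for k, p in planes.items()}
    return stretched, dominant

r = [[10, 20], [30, 40]]
g = [[100, 120], [140, 160]]   # green-dominated toy image
b = [[50, 60], [70, 80]]
planes, dominant = stretch_less_dominant(r, g, b)
print(dominant, planes["R"])
```

Taking the minimum and maximum from the image itself, rather than from fixed limits, is what lets the stretch adapt to the narrow histograms typical of underwater imagery.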

3.2.2 Contrast limited adaptive histogram equalization

As mentioned earlier, color cast is a major reason for degraded underwater image quality. Having performed contrast stretching, histogram equalization is considered as the next step. The distribution of pixel intensities is typically non-homogeneous in underwater imagery, and for this reason global histogram equalization [22] is not suitable for addressing the color cast problem. In the proposed approach, the well-known Contrast Limited Adaptive Histogram Equalization (CLAHE) algorithm [23] is adopted for this purpose. The CLAHE algorithm is suitable for images with light as well as dark portions. It equalizes the histogram of different sections of an image and exploits the intensity normalized pixels to redistribute lightness values, thus improving the local contrast of the image to extract the hidden information. The procedure adopted for the CLAHE operation is shown in figure 3.

As described in Pizer et al [23], if \(P_k\) denotes the frequency of the occurrence of a pixel’s intensity value that is associated with a bin for each possible intensity, then \(P_k\) is defined as

where \(n_k\) is the number of pixels associated with the \(k\)th intensity value.

As a next step, the cumulative distribution function (cdf) of each of the intensity values is estimated as

where x = 1, 2, 3, …, M (number of rows) and y = 1, 2, 3, …, N (number of columns). The histogram equalized image \(I'(x,y)\) is given as

where \(cdf_{min}\) is the minimum cdf value of the corresponding section.
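The histogram, cdf and remapping steps for a single section can be sketched as below. The sketch assumes the common textbook form of histogram equalization, in which each intensity is mapped to (cdf − cdf_min) / (number of pixels − cdf_min) scaled to the available grey levels; the exact normalization used in the paper may differ, and `equalize` is a hypothetical name.

```python
def equalize(tile, levels=256):
    """Histogram-equalize one section given as rows of integer intensities."""
    hist = [0] * levels
    for row in tile:
        for v in row:
            hist[v] += 1                       # n_k: pixels with intensity k

    cdf, running = [], 0
    for count in hist:
        running += count
        cdf.append(running)                    # cumulative count up to intensity k

    total = cdf[-1]
    cdf_min = next(c for c in cdf if c > 0)    # smallest non-zero cdf value
    if total == cdf_min:                       # single-valued section
        return [row[:] for row in tile]

    return [
        [round((cdf[v] - cdf_min) * (levels - 1) / (total - cdf_min)) for v in row]
        for row in tile
    ]

print(equalize([[52, 55], [61, 59]]))  # -> [[0, 85], [255, 170]]
```

Subtracting cdf_min ensures that the darkest occupied bin of the section maps to 0, so the output always spans the full range.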

After testing several images with different combinations of parameters, the following procedure is adopted for the CLAHE operation in the proposed algorithm. First, the entire image is subdivided into 2×2 sized tiles and the individual histograms are equalized, with a clip limit of 0.05 to avoid over-amplification of contrast. As a last step, the tiles are seamlessly joined via interpolation: region pixels are bilinearly interpolated, whereas the boundary pixels are linearly interpolated. A comparison of the results obtained by applying the conventional histogram equalization method and CLAHE to an underwater image is shown in figure 4. In figure 4, the x-axis of the histograms (original and enhanced images) ranges from 0 to 255. As expected, CLAHE generates visually better images than the conventional histogram equalization method.
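The effect of the clip limit can be shown in isolation with a minimal sketch. It assumes the simplest redistribution scheme, in which the counts above the limit are removed and spread uniformly over all bins; here `limit` is an absolute bin count, and how the normalized value 0.05 maps to such a count depends on the CLAHE implementation and is not specified here. The name `clip_histogram` is hypothetical.

```python
def clip_histogram(hist, limit):
    """Clip bin counts at `limit` and spread the excess uniformly over all bins."""
    excess = sum(max(h - limit, 0) for h in hist)
    share = excess / len(hist)
    return [min(h, limit) + share for h in hist]

# One strongly peaked bin is flattened while the pixel count is preserved.
clipped = clip_histogram([10, 0, 0, 0], limit=4)
print(clipped, sum(clipped))
```

Limiting the peak of the histogram limits the slope of the resulting cdf, which is what prevents the over-amplification of contrast (and of noise) in nearly uniform tiles.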

3.3 Color preserving processing in YCbCr color space

It is well known that by processing only the luminance component of an image and leaving the chromatic components undisturbed, one can preserve colors in the enhanced image. One may note that contrast enhancement of the luminance component improves the brightness of an image. For this purpose, the popular YCbCr color model used extensively in digital video and photography has been considered for transform domain operation in the proposed method (as shown in figure 5). In this model, Y, Cb and Cr represent the luminance; the difference between the blue component and a reference value; and the difference between the red component and a reference value, respectively.

By virtue of the property of the YCbCr model, enhancing the luminance component automatically enhances the brightness of the image, leaving Cb and Cr untouched. This assures improvement in the quality of underwater images, which commonly suffer from both low and non-uniform brightness. It is for this reason that the RGB image is first transformed into the YCbCr domain using (9) for subsequent operation in the transform domain.

After transformation into the YCbCr plane, the contrast stretching method (as in (5)) is applied to the luminance component. In order to alleviate the noise introduced in the process, if any, median and Laplacian filters are subsequently employed for image smoothing and edge sharpening, respectively. After the enhancement of the luminance component in the YCbCr plane, the enhanced image is transformed back to the RGB domain using (10), which concludes the proposed method.
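The forward and inverse color transforms can be sketched as follows. The coefficients are those of the full-range ITU-R BT.601 (JPEG/JFIF) variant, which is an assumption: the paper's (9) and (10) may use a different variant, for example the studio-range one. The helper `scale_luminance` is hypothetical and only demonstrates that changing Y leaves Cb and Cr unchanged.

```python
def rgb_to_ycbcr(r, g, b):
    """Full-range BT.601 RGB -> YCbCr (assumed variant)."""
    y = 0.299 * r + 0.587 * g + 0.114 * b
    cb = 128 - 0.168736 * r - 0.331264 * g + 0.5 * b
    cr = 128 + 0.5 * r - 0.418688 * g - 0.081312 * b
    return y, cb, cr

def ycbcr_to_rgb(y, cb, cr):
    """Inverse of rgb_to_ycbcr, up to the rounding of the coefficients."""
    r = y + 1.402 * (cr - 128)
    g = y - 0.344136 * (cb - 128) - 0.714136 * (cr - 128)
    b = y + 1.772 * (cb - 128)
    return r, g, b

def scale_luminance(pixel, gain):
    """Change only Y and convert back; the result may need clipping to 0..255."""
    y, cb, cr = rgb_to_ycbcr(*pixel)
    return ycbcr_to_rgb(min(y * gain, 255.0), cb, cr)

print(rgb_to_ycbcr(200, 100, 50))
print(scale_luminance((200, 100, 50), 1.1))
```

In this sketch a change in Y shifts R, G and B by the same amount, which is why the chromatic information survives the enhancement.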

Figure 6 depicts the stepwise results obtained by applying RYPro on Hammer (a1–h1) and Wheel (a2–h2) images captured from 20.574 cm. Figures 7 and 8 demonstrate the results and performance of the proposed image enhancement algorithm for different distances. The next section evaluates and discusses the performance of the RYPro method.

Figure 6. Stepwise images: (a1,a2) Original image; (b1,b2) After noise removal; (c1,c2) After contrast stretch; (d1,d2) After applying CLAHE; (e1,e2) RGB to YCbCr transformed image; (f1,f2) After contrast stretching of luminance component; (g1,g2) After noise removal of luminance component; (h1,h2) YCbCr to RGB transformed final image.

4 Performance evaluation and discussion

The present section details the performance analysis of the proposed image enhancement algorithm. As mentioned in Section 3, the basic idea of the method is to alleviate the color cast problem via contrast stretch and subsequent CLAHE operations. Understandably, such an improvement can be assessed by visual inspection and by quantifying typical enhancement parameters. In this work, performance evaluation is based on four standard parameters, namely,

- (a) Statistical measures from the histogram: Relative Contrast Enhancement Factor (RCEF) and Measure of Entropy (MOE).
- (b) Measure of Enhancement (EME).
- (c) Perceptual Quality Metric (PQM).
- (d) Color Enhancement Factor (CEF).

More precisely, performance of the proposed method (in terms of the above performance metrics) is compared with those of four methods, namely, Gray World (GW) [7–9], White Patch (WP) [7; 9], Adobe Photoshop Equalization (APE) [7] and Unsupervised Color Correction method (UCM) [7].

For the present analysis, images are acquired with an underwater camera (with a standard resolution of 640×480), manufactured by Kongsberg Simrad, Model No. OE14-110/111. In order to demonstrate the suitability of the proposed method for various circumstances, images were captured from different measured distances between the object and the camera as indicated in figure 7 and figure 8.

Since the proposed method was to be employed for an online application, an exercise of measuring the required computational time was also taken up towards performance evaluation. Section 5 details the issues in online implementation of the proposed method with AUV-150 [24; 25].

Performance of the proposed RYPro method is better explained by considering any one of the columns of figures 7 and 8. For instance, in the first column of figure 7 (Hammer), the images in the rows 1 through 6 depict the original, GW enhanced, WP enhanced, APE enhanced, UCM and RYPro enhanced images, respectively. The figures in the seventh row depict the original out-of-water (in air) images captured by a high resolution general purpose camera. Further, individual columns of figures 7 and 8 indicate the distances between the object and the camera in a descending order. The original underwater images at six different distances are hereafter referred to as H1–H6 and W1–W6 in figure 7 and figure 8, respectively.

Visual inspection of figures 7 and 8 suggests that the proposed method offers better enhancement and higher visual quality than the existing methodologies reported in the literature. The claim is further validated by defining two quantitative parameters related to contrast enhancement and to the average information contained in the enhanced images, namely, Relative Contrast Enhancement Factor (RCEF) and Measure of Entropy (MOE). These parameters have been calculated from the respective histograms of the original and enhanced images, as explained in the following subsection.

4.1 Statistical measures from histogram

Histogram of an image is a tool which is used to analyze the performance of an enhancement algorithm by comparing the tonal distribution of original and enhanced images. Conventionally, a wider histogram indicates an image with good visual quality. Two quantitative parameters RCEF and MOE are calculated from the histograms of images after being enhanced using proposed and other conventional methods.

4.1.1 Relative contrast enhancement factor (RCEF)

In general, the width of the histogram of underwater images decreases with an increase in depth, which may be attributed to increasing color cast. The RCEF metric signifies the dynamic range of the histogram and is calculated using the global variance (σ²) and mean (μ) of the enhanced and original images [26], as defined in (11). In order to verify the contrast enhancement, RCEF values of the images enhanced using the proposed and four existing methods are evaluated relative to the original images and tabulated in table 1.

where \(\sigma_E\) and \(\sigma_O\) are the standard deviations of the enhanced and original images, respectively; \(\mu_E\) and \(\mu_O\) are their respective means.

In the proposed method, contrast stretching of the less dominant color planes followed by CLAHE in the RGB domain contributes significantly to enhancing the RCEF values of the image under test. This may be observed from the RCEF values of the proposed method (table 1), which are distinctly greater than those of the other methods at all the specified distances. This in turn indicates better contrast enhancement, thereby signifying substantial improvement in the visual quality of the images.
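A possible computation of RCEF is sketched below. It assumes that the factor is the ratio of the contrast indices σ²/μ of the enhanced and original images, which is one common reading of a measure built from the global variance and mean; the exact form of (11) should be checked against [26]. The names `contrast_index` and `rcef` are hypothetical.

```python
from statistics import fmean, pvariance

def contrast_index(pixels):
    """Global variance divided by global mean of a flat list of intensities."""
    return pvariance(pixels) / fmean(pixels)

def rcef(original, enhanced):
    """Assumed form: (sigma_E^2 / mu_E) / (sigma_O^2 / mu_O)."""
    return contrast_index(enhanced) / contrast_index(original)

original = [100, 110, 120, 130]   # narrow histogram
enhanced = [0, 85, 170, 255]      # same pixels after a linear stretch
print(rcef(original, enhanced))
```

Under this assumption a value greater than 1 indicates that the enhanced image has a wider histogram relative to its mean than the original image.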

4.1.2 Measure of entropy (MOE)

In image processing, entropy, a statistical parameter, represents the randomness in the texture of an image. The Shannon information entropy H(I) of an image is defined as

where P(i,j) is the probability of occurrence of a particular pixel’s intensity value with spatial coordinate (i,j).

Clearly, the greater the randomness in texture, the greater the variation in pixel intensity. Owing to the color cast problem, however, an underwater image exhibits little randomness in intensity, which results in lower entropy. Intuitively, once an underwater image is enhanced, intensity variation would increase, which in turn results in higher average information. In order to verify this average information characteristic of an underwater image, the entropies of the images enhanced using the proposed method and the four existing methods are evaluated and compared in table 2. It may be noted that the average information content in the images enhanced using RYPro is higher than in those enhanced using the other methods. An intuitive reason for the underperformance of the GW and APE methods could be that these methods are primarily based on blind color equalization. The WP and UCM methods, in spite of the additional operations that follow color equalization, are also unable to outperform the proposed algorithm in the MOE calculation, so that the RYPro enhanced images show relatively higher information content.
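The entropy measure can be illustrated with a short sketch that estimates the probabilities from the intensity histogram and uses a base-2 logarithm, so that the result is in bits per pixel. Both choices are assumptions about how (12) is evaluated in practice, and `entropy` is a hypothetical name.

```python
import math
from collections import Counter

def entropy(pixels):
    """Shannon entropy (bits per pixel) of a flat list of intensities."""
    n = len(pixels)
    counts = Counter(pixels)
    return -sum((c / n) * math.log2(c / n) for c in counts.values())

print(entropy([0, 0, 255, 255]))    # two equally likely levels -> 1.0
print(entropy([0, 85, 170, 255]))   # four equally likely levels -> 2.0
```

An image whose pixels crowd into a few intensity levels, as happens under color cast, therefore scores low, while an enhanced image that occupies more levels scores higher.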

4.2 Measure of enhancement (EME)

Weber’s contrast based measure of enhancement (EME) [27] is another useful “no-reference image quality assessment” (NR-IQA) metric for quantifying the contrast enhancement obtained in the image. Since the EME value signifies the improvement in contrast quality after enhancement, it is used in the present context as a quantitative NR-IQA parameter. The EME value is defined as [27],

where

Here the image is divided into \(k_1 \times k_2\) blocks, and \(I_{max:i,j}^{w}\) and \(I_{min:i,j}^{w}\) are the maximum and minimum pixel intensity values, respectively, in any particular block w.

In order to compute EME, the enhanced gray image is first divided into 3×3 sections (\(k_1 = k_2 = 3\)) and the enhancement index of each section is then evaluated using (14). Finally, the contrast based enhancement indices of the sections are averaged to obtain the final EME value using (13). For the present analysis, EME values of the images enhanced using the proposed method and the four mentioned conventional methods are evaluated. As may be observed from table 3, the EME values obtained using the proposed RYPro method are the highest when compared to the four existing methods, which in turn signifies the necessity of contrast enhancement in the RGB domain.
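The block-wise procedure can be sketched as follows. The per-block index is assumed to be 20·ln(I_max/I_min), a commonly quoted form of EME; the base of the logarithm and the small constant `eps` that guards against a zero minimum are assumptions, and the image must have at least `k1` rows and `k2` columns. The name `eme` is hypothetical.

```python
import math

def eme(gray, k1=3, k2=3, eps=1e-4):
    """Average of 20*ln(I_max / I_min) over a k1 x k2 grid of blocks."""
    rows, cols = len(gray), len(gray[0])
    total = 0.0
    for i in range(k1):
        for j in range(k2):
            block = [
                gray[y][x]
                for y in range(i * rows // k1, (i + 1) * rows // k1)
                for x in range(j * cols // k2, (j + 1) * cols // k2)
            ]
            total += 20 * math.log((max(block) + eps) / (min(block) + eps))
    return total / (k1 * k2)

flat = [[80] * 6 for _ in range(6)]
striped = [[50 if x % 2 == 0 else 100 for x in range(6)] for _ in range(6)]
print(eme(flat), eme(striped))
```

A uniform image scores zero because every block has I_max = I_min, whereas local intensity variation inside the blocks raises the score.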

4.3 Perceptual quality metric (PQM)

Another NR-IQA parameter, perceptual quality metric (PQM) [28], is used for evaluating the perceptual quality of enhanced images using the proposed and other comparative methods. It is reported [26] that the quality of an enhanced image may be termed as good if the value of PQM is close to 10.

A comparative study of PQM measures is tabulated in table 4. From the values in table 4, it is clear that the PQM values of the images enhanced using the proposed RYPro method are closer to 10 than those of the other existing methods. The PQM of an image depends mainly on three factors, namely contrast, colorfulness and brightness, which are properly addressed via processing in both the RGB and YCbCr domains in the proposed algorithm, resulting in higher PQM values.

4.4 Color enhancement factor (CEF)

Color enhancement is also desirable along with the improvement in contrast quality. For this purpose, an NR metric called the colorfulness metric (CM) is used in [28]. The metric CM, as given in (15), denotes the post-enhancement color improvement, taking into account all three color planes R, G and B [28]. The metric is defined as

where α = R − G, β = (R + G)/2 − B, \(\sigma_\alpha\) and \(\sigma_\beta\) are the standard deviations of α and β, respectively, and \(\mu_\alpha\) and \(\mu_\beta\) are the respective means.

For a particular method, CM is calculated for the original and enhanced images. Finally, color enhancement factor (CEF) is obtained as the ratio of CM of the enhanced image to that of the original image. The tabulated CEF values (table 5) indicate that the proposed RYPro method provides greater color enhancement than all the four compared methods, signifying improved post-enhancement visual color quality.
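The computation of CM and CEF can be sketched as below. The combination sqrt(σ_α² + σ_β²) + 0.3·sqrt(μ_α² + μ_β²) is the widely used form of the colorfulness metric and is assumed here to correspond to (15); the names `colorfulness` and `cef` are hypothetical. CEF is undefined for a perfectly gray original image, for which CM is zero.

```python
import math
from statistics import fmean, pstdev

def colorfulness(pixels):
    """Assumed CM: sqrt(sa^2 + sb^2) + 0.3 * sqrt(ma^2 + mb^2) over (R, G, B) tuples."""
    alpha = [r - g for r, g, b in pixels]
    beta = [(r + g) / 2 - b for r, g, b in pixels]
    spread = math.hypot(pstdev(alpha), pstdev(beta))
    offset = math.hypot(fmean(alpha), fmean(beta))
    return spread + 0.3 * offset

def cef(original, enhanced):
    """Color enhancement factor: CM of the enhanced image over CM of the original."""
    return colorfulness(enhanced) / colorfulness(original)

original = [(60, 100, 120), (70, 110, 130)]    # bluish, nearly uniform colors
enhanced = [(120, 100, 60), (30, 110, 200)]    # wider spread of colors
print(colorfulness(original), cef(original, enhanced))
```

A CEF above 1 indicates that the enhanced image is more colorful than the original according to this metric.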

4.5 Computation time of algorithm

As mentioned earlier, the proposed image enhancement method was in principle targeted at online AUV applications. As far as online implementation was concerned, the basic objective was to acquire improved quality images within a stipulated time. The computational time required for the proposed method, as well as for the compared methods, has been measured and compared. To that end, a general purpose computer with an Intel Core i3 processor running at 2.20 GHz, 4 GB RAM and the 64-bit Windows 7 operating system was used. The computational times for the GW, WP and RYPro methods are tabulated in table 6. The execution time required for APE based enhancement is excluded from the comparison, as that enhancement is carried out offline using the Adobe Photoshop CS4 software.

From table 6, it may be observed that the GW method consumes the largest execution time, as it enhances the less dominant color planes by multiplication with the necessary correction factors. On the other hand, the WP method only computes the maximum intensity value in the RGB plane, and hence needs the least execution time. Though the WP method is computationally the fastest among all compared methods, it exhibits lower performance in terms of enhancement and visualization than the proposed RYPro method (as illustrated in Sections 4.1 through 4.4). From the figures and the performance of the RYPro approach as compared to four prominent methods, it can be concluded that the proposed method is satisfactory in terms of both execution time and enhancement, making it suitable for online applications.

4.6 Comparison of RYPro enhanced and out-of-water images

In this subsection, the qualitative as well as quantitative performance of RYPro enhanced images is compared with that of images acquired out of water (with a Microsoft make L2 LifeCam HD 6000 Notebooks Win USB camera, model: 7PD-00011). Generally, the background of the image plays a large role in the evaluation of performance parameters, and more so in the present scenario, as the environments of image acquisition and the cameras used are completely different. For this reason, the performance analysis is carried out on objects (Hammer and Wheel) extracted from the RYPro enhanced images and from the original out-of-water images (see figure 9 and table 7). Both sets of images provide almost the same information, which is reflected in the quantitative findings. However, it is observed that the colors of the RYPro enhanced underwater images and the original out-of-water images (for both hammer and wheel) are distinctly different, which is also reflected in the corresponding Colorfulness Metric (CM) values in table 7. The difference in the CMs of RYPro enhanced underwater and out-of-water images is primarily due to dissimilar camera specifications, the different environmental conditions of imaging, and the color enhancement that RYPro applies to the underwater images.

5 Real-time implementation of RYPro with AUV-150

The proposed method has been tested rigorously over several images obtained at the shallow basin facility of CSIR-CMERI. As was the case with the Hammer and Wheel images in Section 4, it is once again noted from the qualitative and quantitative analysis of the online images that the performance of RYPro is indeed acceptable in terms of all the parameters, including computational time. In view of its suitability for online applications (see Note 1), the proposed method has been implemented online with AUV-150 [24; 25].

Images in figure 10 were captured by another underwater camera that was deployed to observe the motion of AUV during mission. In figure 10, (a1, b1) are the original images captured by the external camera and (a2, b2) are their corresponding RYPro enhanced images.

During online implementation, video is captured by the on-board camera residing in the nose module of the AUV. The frame grabber attached to the on-board host PC acquires video at a rate of 30 frames per second with a standard resolution of 640×480. For enhancing the captured images using the proposed method, images are extracted online from the captured video at an interval of 1 s. A few of the online extracted frames (a1 to d1) and their corresponding RYPro enhanced images (a2 to d2) are shown in figure 11.
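The frame extraction schedule follows directly from the stated rates: at 30 frames per second and an interval of 1 s, every 30th frame is passed to the enhancement stage. A minimal sketch of this arithmetic is given below; the function name `sampled_frame_indices` is hypothetical and no particular frame grabber API is implied.

```python
def sampled_frame_indices(total_frames, fps=30, interval_s=1.0):
    """Indices of the frames picked when sampling a video every interval_s seconds."""
    step = max(1, round(fps * interval_s))
    return list(range(0, total_frames, step))

print(sampled_frame_indices(100))  # -> [0, 30, 60, 90]
```

Sampling at this interval leaves up to one second of processing time per extracted frame before the next one arrives.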

Performance analysis of the algorithm has also been carried out on some of the online images obtained at a depth of 5 m. The analysis is reported for one of the online images, shown in figure 11 (b1). As before, the proposed algorithm was compared with the existing methods for visual improvement (figure 12). The performance parameters were also evaluated for this image and are tabulated in table 8. Once again, table 8 shows that the improvement in the performance metrics is largest for the proposed method, which supports its suitability for online enhancement of underwater images.

6 Conclusion

Enhancement of underwater images is essential for further processing, since segmentation, object recognition and the calculation of relevant parameters are otherwise considerably more difficult. In this paper, a multi-step image enhancement algorithm (RYPro) has been developed, which not only removes undesirable underwater noise, but also enhances the contrast, luminance and visual quality of the image under test without loss of visual information. The proposed RYPro method provides better enhancement than the existing algorithms considered. A comparative study of the assessment parameters also indicates that the results of the proposed method are suitable for further post-processing [29]. Execution time analysis indicates that the proposed method is suitable for real-time applications. With suitable modifications, the computational cost can be reduced further for direct application of RYPro to real-time video enhancement.

Notes

1 In general, vision (e.g. camera) aided navigation requires on-board real-time enhancement of images acquired by the AUV.

References

Chiang J Y and Chen Y-C 2012 Underwater image enhancement by wavelength compensation and dehazing. IEEE Trans. Image Process. 21 (4): 1756–1769

Pegau W S, Gray D and Zaneveld J R V 1997 Absorption and attenuation of visible and near-infrared light in water: Dependence on temperature and salinity. Appl. Opt. 36 (24): 6035–6046

Schechner Y Y and Karpel N 2005 Recovery of underwater visibility and structure by polarization analysis. IEEE J. Oceanic Eng. 30 (3): 570–587

Sedlazeck A and Koch R 2011 Simulating deep sea underwater images using physical models for light attenuation, scattering, and refraction. Vision, Modeling, and Visualization Workshop, pp 49–56

Trucco E and Olmos-Antillon A T 2006 Self-tuning underwater image restoration. IEEE J. Oceanic Eng. 31 (2): 511–519

Iqbal K, Salam R A, Osman A and Talib A Z 2007 Underwater image enhancement using an integrated colour model. IAENG Int. J. Comput. Sci. 32 (2): 239–244

Iqbal K, Odetayo M, James A, Salam R A and Talib A 2010 Enhancing the low quality images using unsupervised colour correction method. IEEE Int. Conf. Syst. Man Cybern. (SMC), pp 1703–1709

Kwok N, Wang D, Jia X, Chen S, Fang G and Ha Q 2011 Gray world based color correction and intensity preservation for image enhancement. Int. Congress Image Signal Process. (CISP) 2: 994–998

Provenzi E, Gatta C, Fierro M and Rizzi A 2008 A spatially variant white-patch and gray-world method for color image enhancement driven by local contrast. IEEE Trans. Pattern Anal. Mach. Intell. 30 (10): 1757–1770

Ahlen J, Sundgren D, Lindell T and Bengtsson E 2005 Dissolved organic matters impact on colour reconstruction in underwater images. Image Anal.: pp 1148–1156

Chikane V and Fuh C-S 2006 Automatic white balance for digital still cameras. J. Inf. Sci. Eng. 22 (3): 497–509

Gupta M, Narasimhan S G and Schechner Y Y 2008 On controlling light transport in poor visibility environments. IEEE Conference on Computer Vision and Pattern Recognition pp 1–8

Bier E, Stone M and Pier K 1997 Enhanced illustration using magic lens filters. IEEE Comput. Graph. Appl. 17 (6): 62–70

Tan K and Oakley J P 2001 Physics-based approach to color image enhancement in poor visibility conditions. J. Opt. Soc. Am. A 18 (10): 2460–2467

Torres-Mendez L A and Dudek G 2005 A statistical learning-based method for color correction of underwater images. In: Gelbukh A and Monroy R (Eds.) Research on computer science Vol. 17, Advances in artificial intelligence theory, pp 151–160

Lam C 2011 Image enhancement using thresholding techniques and histogram equalization. PhD thesis, University of California, Irvine

Karunakaran V and Venugopal T 1998 The Weierstrass transform for a class of generalized functions. J. Math. Anal. Appl. 220 (2): 508–527

Perona P and Malik J 1990 Scale-space and edge detection using anisotropic diffusion. IEEE Trans. Pattern Anal. Mach. Intell. 12 (7): 629–639

Gerig G, Kubler O, Kikinis R and Jolesz F A 1992 Nonlinear anisotropic filtering of MRI data. IEEE Trans. Med. Imaging 11 (2): 221–232

Loupas T, McDicken W N and Allan P L 1989 An adaptive weighted median filter for speckle suppression in medical ultrasonic images. IEEE Trans. Circuits Syst. 36 (1): 129–135

Qiu F, Berglund J, Jensen J R, Thakkar P and Ren D 2004 Speckle noise reduction in SAR imagery using a local adaptive median filter. GISci. Remote Sensing 41 (3): 244–266

Singhai J and Rawat P 2007 Image enhancement method for underwater, ground and satellite images using brightness preserving histogram equalization with maximum entropy. International Conference on Computational Intelligence and Multimedia Applications 3: 507–512

Pizer S M, Amburn E P, Austin J D, Cromartie R, Geselowitz A, Greer T, ter Haar Romeny B, Zimmerman J B and Zuiderveld K 1987 Adaptive histogram equalization and its variations. Comput. Vis. Graph. Image Process. 39 (3): 355–368

Shome S N, Nandy S, Das S K, Pal D, Mahanty B, Kumar V, Ray R and Banerji D 2010 Autonomous underwater vehicle for 150 m depth: development phases and hurdles faced. In: Vadakkepat P et al (Eds.) Trends in Intelligent Robotics, pp 49–56

Shome S N, Nandy S, Pal D, Das S K, Vadali S R K, Basu J and Ghosh S 2012 Development of modular shallow water AUV: Issues & trial results. J. Inst. Eng. (India): Series C 93 (3): 217–228

Jha R K, Chouhan R, Biswas P K and Aizawa K 2012 Internal noise-induced contrast enhancement of dark images. IEEE International Conference on Image Processing (ICIP), pp 973–976

Agaian S S, Panetta K and Grigoryan A M 2000 A new measure of image enhancement. In: IASTED International Conference on Signal Processing & Communication, pp 19–22

Mukherjee J and Mitra S K 2008 Enhancement of color images by scaling the DCT coefficients. IEEE Trans. Image Process. 17 (10): 1783–1794

Banerjee J, Ray R, Vadali S R K, Layek R K and Shome S N 2013 Shape recognition based on shape-signature identification and condensibility: Application to underwater imagery. IEEE National Conference on Computer Vision, Pattern Recognition, Image Processing and Graphics (NCVPRIPG), pp 1–4

Acknowledgements

The authors would like to thank CSIR and the Ministry of Earth Sciences, Govt. of India, for providing financial assistance to carry out the work. The authors wish to express sincere thanks to all Robotics & Automation Group members for their help and support. We are thankful to Dr. Rajlaxmi Chouhan of Computer Vision Laboratory, Dept. of E & ECE, Indian Institute of Technology, Kharagpur, for her valuable insights in preparing the manuscript.

Cite this article

BANERJEE, J., RAY, R., VADALI, S.R.K. et al. Real-time underwater image enhancement: An improved approach for imaging with AUV-150. Sadhana 41, 225–238 (2016). https://doi.org/10.1007/s12046-015-0446-7
