Abstract

This research aims to formalize the fundamental relationship between user experience, interaction, and metaphors. By doing so, we provide a tool and a methodology to enable the classification of interactive products and consequently propose some guidelines for designing interactive products. In complement, we make explicit the notion of metaphors in interaction design through three dimensions: the environment, the product (target), and the perceived reference (source). We finally define a theoretical model of user experience and interaction (experiential interaction) containing human affective and cognitive processes. This research establishes a taxonomy of interactive products based on a survey in which 176 participants evaluated 50 interactive products. This research can be of interest for researchers in interactive products from disciplines of user experience design, human factors, computer science, artificial intelligence, and design science.

1 Introduction

Research on user experience and interaction leads to a deeper consideration of subjective perception rather than objective dimensions. This point of view has been broadly promoted by research on experience design [1], human perception [2, 3] and, more recently, metaphors [4]. This separation between objective properties and subjective perception led us to consider the link between physical and digital interactions. The paper starts with a state of the art (Sect. 2) on user experience, interaction, and metaphors. It results in both a theoretical model of the design information that user experience and interaction encompass (noted as \(^{(\mathrm{i})}\)) and a three-dimensional framework (noted as \(^{(\mathrm{d})}\)), which differentiates interactive products on the basis of the physical and digital paradigm. The state of the art led our research toward a question presented in Sect. 3, focusing on the impact of the three dimensions\(^{(\mathrm{d})}\) (environment, target, and source) on the different design information\(^{(\mathrm{i})}\) that a user experiences when interacting with a product (affective and cognitive responses). Section 4 presents the experimentation, performed through a survey in which 176 participants assessed 50 interactive products. Finally, Sect. 5 concludes with our results and future opportunities.

2 Interaction and user experience

This section offers a state of the art to improve the understanding of the concepts of interaction and metaphor in user experience. The literature review helps to define both a theoretical model of the design information\(^{(\mathrm{i})}\) to consider in early design and a taxonomy based on three dimensions\(^{(\mathrm{d})}\) (environment, target, and source) for discriminating interactive products in user experience.

2.1 User experience

The way a user experiences a product has been studied for several years. Many models and frameworks focus on user experience [1, 5, 6, 7, 8, 9]. They all present user experience as a multifaceted phenomenon that involves manifestations such as subjective feelings [10], behavioral reactions, expressive reactions, and physiological reactions [6]. These manifestations are direct consequences of attachment, which reflects the relationship that can exist between a user and a product. Furthermore, authors emphasize that the user experience is determined by many factors interacting with each other in complex ways. According to Ortiz Nicolas & Aurisicchio [11] and later Gentner [1], the user experience is composed of a user, an artifact, a context, and the interaction that occurs. According to Desmet and Hekkert [6], the user is the one living the experience. Furthermore, Parrish [12] emphasizes that an experience is highly contextual.

This understanding allows us to define the user experience as a dynamic phenomenon originating in user and artifact characteristics that interact in a specific context.

2.2 User experience and interaction: experiential interaction

Some recent works have formalized the concrete link between user experience and interaction [1, 13], pointing out that interaction is the action of a user and an artifact on each other that influences or modifies the user’s motor, perceptive, cognitive, and affective systems [14]. Interaction can be physical (driving a car) or non-physical (contemplating a car) [15]. Interaction binds user, artifact, and even context [1]. Furthermore, according to Desmet and Hekkert [6], interaction refers to instrumental (function), non-instrumental (no function), and even non-physical interaction (no touch), because each of these forms can generate physiological, motivational, or motor responses [16, 17]. Therefore, we consider interaction to be a dialogue between user and artifact in a particular context, and this dialogue does not specifically depend on the use of advanced technology. In a given context, a product can play a critical role in the user’s cognitive and affective responses [18]. Indeed, according to Rasmussen [19], Atkinson and Shiffrin [20] and Helander & Khalid [21], the user creates personal and specific responses from his or her sensory inputs. These sensory registers are visual, auditory, haptic (...) skills. Through cognitive and affective processes, the user creates responses that allow decision-making, control (...) and the materialization of mental processes through accurate responses. This interactive approach proposes an alternative way of designing interactions through affective and cognitive responses.

Thus, six crucial elements can be highlighted: interaction is the core of the user experience; interaction is a multidimensional phenomenon composed of abstract and concrete design information; interaction is subjective, because it depends on the user’s affective and cognitive processes; the notion of temporality is decisive in understanding how interaction occurs; interaction is not characterized by the materiality of the product but by the effect and affect of the product on the user; finally, interaction can be instrumental, non-instrumental, and even non-physical, as long as there is feedback (changes in the user or changes in the product).

By defining interaction within the scope of user experience, we pointed out the activities of designing and making decisions in product development and manufacturing. This process of designing interactions in the human-artifact system can be defined as the interactive approach. It encompasses the different activities of the design process (activities of Information, Generation, Evaluation and Communication presented by Bouchard 2003) based on a human-centered approach. This interactive approach uses tools and methodologies for designing products by focusing on human affective and cognitive responses.

2.3 Physical and digital interactions

This research aims to focus on the physical and digital paradigm in human-product interaction, to propose a taxonomy that classifies interactions from the user-experience approach based on different dimensions\(^{(\mathrm{d})}\). Developments in material science, fabrication processes, and electronic miniaturization have dramatically altered the types of objects and environments we can construct [22] and interact with: augmented reality products, virtual environments, tangible interfaces, mixed reality, immersion...

Thus, developing a taxonomy (‘interactive taxonomy’) could strengthen our understanding of these interactive products. The originality of this research lies in the fact that this taxonomy is user-experience centered. It is therefore focused not only on the objective reality of the product, but also on how the user perceives physical and digital attributes when interacting, according to cognitive science researchers. Some taxonomies of interactive products already exist [23, 24, 25, 26]. Nevertheless, even if they provide a great understanding of interactive products, they do not address the subjective perception of an artifact by a user. However, two elements can already be extracted from these works to feed our taxonomy. Following Milgram and Colquhoun [23], we acknowledge the relevance of ‘environment’ when considering such a taxonomy. In their work, they proposed an understanding of environment ranging from the real to the virtual environment. Furthermore, following Pine [26], we acknowledge the notion of product properties that he defines as ‘matter’ (atom) and ‘no matter’ (bits). Nevertheless, what Pine [26] proposes is an objective understanding of product properties. Following our state of the art on user experience and cognitive psychology, we can also define this axis from the user perception point of view. Following the ‘Sign Theory,’ a major theory of product perception [2, 3], we can acknowledge the notion of ‘perceived product properties.’ Thus, the user can perceive physical properties (atom) or digital properties (bits) when interacting. This contrast between what the product objectively is and what the user perceives when interacting led us to approach another major field of research: the metaphor in product design.
Indeed, before closing the state of the art of the three axes highlighted previously (environment, objective properties, and perceived properties), we could consider the metaphor in research, which proposes an understanding of target and source that can be linked to our objective and perceived properties.

2.4 Metaphors

One central definition of ‘metaphor’ has been proposed by Lakoff and Johnson [27]. In their work on linguistics, they defined metaphors as ‘understanding and experiencing one kind of thing in terms of another.’ Although Lakoff and Johnson highlighted this notion of metaphors through the linguistic approach, it has been shown recently by Hekkert & Cila [4] that ‘metaphorical thinking is an innate capability we all possess.’ Thus, this understanding of metaphor has been increasingly extended toward different research fields: Gibbs [28] in cognitive psychology; Forceville [29] in advertising; Cienki & Muller [30] and Chung [31] on gestures; Hekkert and Cila [4] in design science. Among these growing territories of research where metaphors are used, a common understanding is shared: a metaphor is an association of a target and a source. The definition of metaphor and its constituents (target and source) might slightly change. Nevertheless, as defended by several authors, metaphors underlie how people think, reason, and imagine in everyday life [27, 28, 32, 33]. Recently, the fields of design and engineering approached this notion of metaphor [4, 29, 31]. By doing so, they highlighted the link between linguistics (language) and form (design). Indeed, according to Forceville [29], metaphor can be used in product design. Recently Hekkert & Cila [4] defined metaphor as ‘any kind of product whose design intentionally references the physical properties of another entity for precise, expressive purposes.’ They described the target as the product, whose shape alludes to a more or less disparate entity. On the other hand, they defined the source as the remote entity whose characteristics are associated with the target to assign a particular meaning to it.
Nevertheless, although the understanding of metaphor shares common elements across the different research fields, there are also some differences. Comparing metaphor in linguistics with metaphor in product design, we can highlight that in product metaphors, source and target are physically incorporated into a single element (the product). According to Forceville [29], we can call those ‘integrated metaphors,’ whereas Carroll [34] calls this ‘homospatiality.’ This difference from linguistics highlights a notion of merging the target and the source that can explain a new complexity for the product design field of research. Additionally, another significant difference lies in the modality through which users can perceive metaphors. Indeed, while metaphor in linguistics is mono-modal [4], metaphor in a product can be multimodal.

Even if studies have been conducted on interaction design and metaphor, research that proposes a definition of metaphor and interaction within the scope of user experience is still lacking. Thus, we propose the following definition, as a draft, based on the definition of product design and metaphor [4]: interaction metaphor relates to any kind of artifact, whose interactive perception intentionally references another entity, to raise more meaningful interaction and user experience. Thus, interaction metaphor also consists of an ‘association’ between two entities: a target (artifact properties whose design alludes to a more or less disparate entity) and a source (the remote entity whose perceived characteristics are associated with the target to assign new and more meaningful experiences). The right association of a target and a source should raise meaningful interactions and user experiences. This meaningfulness can be defined as the qualitative and quantitative impact of affective and cognitive responses users can live.

Furthermore, approaching metaphor through the interaction design field led us to explore the notion of time in metaphor. For example, Krippendorff [36] linked narrative process to metaphors and design by making clear the central role of language in making artifacts meaningful. Indeed, what can explain the difference between metaphor in product design and metaphor in interaction design is this notion of temporality. Temporality implies ideas of step by step, of sequence, story, and narration in everyday uses of products. According to Neale and Carroll [34], metaphors can help map familiar to unfamiliar knowledge, and help users reflect on and learn new domains based on their previous experiences. Using this understanding within the interaction design scope can facilitate learning, by supporting the transformation of existing knowledge to improve the comprehension of novel situations, as defended by Alty, Knott, Anderson & Smyth [35] for example. Indeed, using metaphorical schemas in interaction leads to easily understandable ‘use cues’ or ‘affordance’ [37]. It can also facilitate active learning [38], because it bridges perception, action, and higher verbal and non-verbal representations [38]. These representations that metaphorical interactions raise in the human cognitive system have been demonstrated by Hurtienne [38] to operate automatically and beneath conscious awareness. These natural links enable the selection and application of existing models of familiar objects and experiences to comprehend novel situations or artifacts [35, 36]. Thus, metaphors are present in every human-product interaction, whether or not the user consciously perceives them.
These metaphorical schemas can be useful for different reasons: they can help users approach new technologies through familiar interactions (through cues, for example); or, by contrast, they can be used to break with products that are too familiar and too predictable (which can be linked to a notion of contrast in perceptual expectations [39]).

Finally, the notion of target is very close to the understanding of objective properties, and the source can be related to perceived properties. By using the vocabulary of research on metaphor rather than that of existing models and taxonomies [26], we construct a taxonomy based on a more experiential approach, closer to sign theory and other theories of perception. Thus, what the understanding of target\(^{(\mathrm{d})}\) and source\(^{(\mathrm{d})}\) brings to our research is a strong interdependency summarized in one single word: metaphor.

2.5 Three-dimensional\(^{(\mathrm{d})}\) taxonomy

Following the previous understanding of both metaphors and the physical and digital paradigm, we grouped the product properties and the perceived product properties under the terms target\(^{(\mathrm{d})}\) and source\(^{(\mathrm{d})}\). Additionally, we used the notion of environment\(^{(\mathrm{d})}\) proposed by Pine [26]. These terms (environment\(^{(\mathrm{d})}\), target\(^{(\mathrm{d})}\), and source\(^{(\mathrm{d})}\)) structure the taxonomy as three dimensions\(^{(\mathrm{d})}\) that are presented more precisely hereafter.

2.5.1 The environment\(^{(\mathrm{d})}\)

Under the term environment, we gathered various lines of research. Among them, Wang & Schnabel [40] approached the notion of environment under the name of Reality, defining the real and physical world as a realm of elements within the world that exists. According to Wang & Schnabel [40], reality offers high sensory engagement because of the factual existence of the elements. However, in the real environment, only a low level of abstraction can be experienced. Furthermore, and in contrast to the virtual environment, a strictly real-world environment is constrained by the laws of physics (gravity, time, and material properties) according to Milgram [41]. On the other hand, several researchers have presented the complete virtual environment as an entirely computer-simulated environment. The commonly held view of a virtual environment is one in which the participant observer is totally immersed in an entirely synthetic world, which may more or less mimic the properties of a real-world environment, either existing or fictional. The virtual environment may also exceed the bounds of physical reality by creating a world in which the physical laws governing gravity, time, and material properties no longer hold [40, 41, 42].

Thus, based on their understanding we decided to use the term environment\(^{(\mathrm{d})}\), as defended by Pine [26], from real to virtual as advocated by Wang & Schnabel [40].

2.5.2 The target\(^{(\mathrm{d})}\)

We use the word ‘target’ to encompass physical and digital properties that researchers are defending [43,44,45]. This target approaches the properties of the artifact pointed out from the user interaction system.

2.5.3 The source\(^{(\mathrm{d})}\)

The term ‘source’ has been chosen to cover the perceived characteristics associated with the target, which give rise to a more meaningful experience. The term is borrowed from the field of metaphor, although our use of it is strongly influenced by sign theory research. One fundamental problem in defining the source is choosing an adequate form of reference (physical or digital). Physical sources are not limited to physical targets: as soon as the interface references physical notions, it constitutes a physical source for the user. For example, when interacting with an electro-vibration system (Disney Research), the user interacts with a digital target (the data) but reads a physical source, because he or she can feel different textures through the screen. This digital target is thus combined with physical sources. On the other hand, the source may also reference digital principles. According to the community, a source is digital when it breaks with physical properties [46] (directness of effort, locality of effort, visibility of state) or when it uses computational language: windows, menus, or icons, materialized through Graphical User Interfaces thanks to the Xerox 8010 Star System [47] and characterized as ‘the fluidity of Bits’ by Ishii [44]. For example, interacting with a shape that changes its structure and reacts like pixels [44], or performing a surgical intervention from across the world, are sources that reference the digital world in interaction.
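To make the distinction between target and source more concrete, the following is a minimal Python sketch of how a product could be recorded along the three dimensions. The class name `InteractiveProduct`, the method `is_cross_paradigm`, and the reduction of each dimension to two labels are assumptions made for illustration; they are not part of the framework itself. The environment assigned to the electro-vibration example is also an assumption, since the text only states its target and source.

```python
from dataclasses import dataclass

# Two-pole labels are a simplification assumed for this sketch.
ENVIRONMENTS = ("real", "virtual")
ATTRIBUTES = ("physical", "digital")


@dataclass(frozen=True)
class InteractiveProduct:
    """Hypothetical record of a product along the three dimensions."""
    name: str
    environment: str  # "real" or "virtual"
    target: str       # objective properties: "physical" or "digital"
    source: str       # perceived reference: "physical" or "digital"

    def __post_init__(self):
        # Reject labels outside the two poles of each dimension.
        if self.environment not in ENVIRONMENTS:
            raise ValueError(f"unknown environment: {self.environment}")
        if self.target not in ATTRIBUTES or self.source not in ATTRIBUTES:
            raise ValueError("target and source must be 'physical' or 'digital'")

    def is_cross_paradigm(self) -> bool:
        """True when the perceived source differs from the target."""
        return self.target != self.source


# Electro-vibration screen from the text: digital target, physical source.
# The "real" environment is an assumption; the text does not state it.
screen = InteractiveProduct("electro-vibration screen", "real", "digital", "physical")
print(screen.is_cross_paradigm())
```

Keeping target and source as separate fields mirrors the point made above: the same digital target can be paired with either a physical or a digital source.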

Finally, these three dimensions\(^{(\mathrm{d})}\) can be of value to discriminate the physical and digital paradigm of interactive products through the user experience perspective. By using these three dimensions\(^{(\mathrm{d})}\) as axes, we can already highlight eight areas\(^{(\mathrm{a})}\) that compose the direct orthonormal system. Thus, in this paper, we will call the eight spaces delimited by the three axes\(^{(\mathrm{d})}\) the areas\(^{(\mathrm{a})}\).
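The count of eight areas follows from combining the two poles of each of the three axes (2 × 2 × 2). A minimal Python sketch of this enumeration is given below, assuming each dimension is reduced to its two poles; the names `DIMENSIONS` and `enumerate_areas` are illustrative only.

```python
from itertools import product

# Poles of each dimension as named in the text; treating each axis
# as binary is a simplifying assumption made for this sketch.
DIMENSIONS = {
    "environment": ("real", "virtual"),
    "target": ("physical", "digital"),
    "source": ("physical", "digital"),
}


def enumerate_areas(dimensions=DIMENSIONS):
    """Return every combination of poles, one dict per area."""
    names = list(dimensions)
    return [dict(zip(names, combo)) for combo in product(*dimensions.values())]


areas = enumerate_areas()
for index, area in enumerate(areas, start=1):
    print(index, area)
```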

Furthermore, the research community acknowledges another notion that can be used for a finer understanding of interactive products: the image schema vocabulary, briefly presented in the discussion of metaphor. It is used as a metalanguage for designing interfaces [37], although it originated with Johnson [48] from a philosophical perspective. Image schemas can be useful as a list to be used in an empirical study. Nevertheless, according to Johnson [48], one danger of such inventories of image schemas is that they are never complete. Applied to the interaction design scope, we can use the word ‘principle,’ which is more related to ‘action enablement,’ that is, to what the product allows the user to do. Thus, this user experience approach to image schemas leads us to use the word ‘principle’\(^{(\mathrm{p})}\) in this research.

2.6 State of the art summary

From this state of the art, we can highlight four key elements: design information\(^{(\mathrm{i})}\), three dimensions\(^{(\mathrm{d})}\), eight areas\(^{(\mathrm{a})}\), and principles\(^{(\mathrm{p})}\).

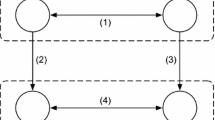

First of all, our user experience and interaction design approach led us to create a model: the design information model\(^{(\mathrm{i})}\) (see Fig. 1). This model has been developed based on a model defended by Gentner [1]. Furthermore, a precise definition of every term presented in this model is given in Mahut [49]. This model represents a vision of interaction from the user experience field. It maps the user, the artifact, and the environment. It is based on two axes: the vertical axis sorts the different design information from abstract design information (top) to concrete design information (bottom); the horizontal axis emerges out of the user-artifact system highlighted in the state of the art. At the core of the user experience, we can see the interaction space (dotted). The user, through his or her characteristics (such as personality, senses, etc.), may perceive stimuli and react to them, according to affective and cognitive processes. These reactions affect the perception of the artifact by creating artifact meaning.

This model maps the different components of the user experience, highlights the scope of interaction, and points out the different design information\(^{(\mathrm{i})}\) that we have to consider in the interactive approach when designing or evaluating user experiences and interactions in early phases. It simply helps to consider all the design information\(^{(\mathrm{i})}\) related to user experience and interaction, from a human-centered approach. Thus, this model presents and highlights the design information related to the Interactive Design Approach. By using this model, designers can either consider the listed design information\(^{(\mathrm{i})}\) when designing during early phases, or assess products by measuring their impact on both the interactive and the user experience scopes.

The second highlighted element is a way to classify interactive products (‘interactive taxonomy,’ see Fig. 2) according to the physical and digital paradigm. To make explicit this understanding of interactive products from the user experience point of view, we used the three dimensions\(^{(\mathrm{d})}\): the environment\(^{(\mathrm{d})}\) (from real to virtual), the target\(^{(\mathrm{d})}\) (from physical to digital attributes), and the source\(^{(\mathrm{d})}\) (from physical to digital perceived attributes).

The third element ensues from the three-dimensional representation\(^{(\mathrm{d})}\). It consists of eight areas\(^{(\mathrm{a})}\) where interactive artifacts can be placed according to the different dimensions\(^{(\mathrm{d})}\) (see Fig. 2). This principle of interactive taxonomy could strengthen the understanding of interactive products and improve the design process when merged with design methods.

The last highlighted element is the notion of principles\(^{(\mathrm{p})}\) that can be used to better define and understand users’ perception of interactive products.

These four elements are summarized in Table 1. They all support the design process in early design phases. These elements can be considered as tools that can be used for both evaluating and creating interactive products. In this paper, we will propose a method as a way to implement these tools within a design process. Nevertheless, we encourage the research community to implement these tools in new and different design processes.

3 Research question

Following our state of the art, four insights have been raised. The first one is the understanding of interaction as the core of the user experience. This approach proposes to focus on the interactive design information\(^{(\mathrm{i})}\) (affective and cognitive responses) from the model [49] (see Fig. 1), rather than on the product materiality, when evaluating or designing user experiences. The second insight corresponds to the three dimensions\(^{(\mathrm{d})}\) proposed through the taxonomy of interactive products. According to our experiential approach to the physical and digital paradigm, the three dimensions of environment\(^{(\mathrm{d})}\), target\(^{(\mathrm{d})}\), and source\(^{(\mathrm{d})}\) have been highlighted as a way to classify interactive products. The third insight is that this three-dimensional\(^{(\mathrm{d})}\) approach allows us to highlight the eight areas\(^{(\mathrm{a})}\) that compose the taxonomy. Finally, the last insight is the principle-based approach\(^{(\mathrm{p})}\). It relates to what the interactive product enables the user to do.

Nevertheless, even though we have highlighted this interactive design information\(^{(\mathrm{i})}\) and these three dimensions\(^{(\mathrm{d})}\) structured through eight areas\(^{(\mathrm{a})}\), we could not find, in the research community, knowledge on the impact of such dimensions\(^{(\mathrm{d})}\) on the interactive design information\(^{(\mathrm{i})}\) (users’ affective and cognitive responses). This lack of information leads us to raise the following question:

How do the three dimensions\(^{(\mathrm{d})}\) (environment, target & source) influence the different design information\(^{(\mathrm{i})}\) from the model (users’ affective and cognitive responses) when a user is interacting with an artifact?

Our hypothesis is that a better understanding of the impact of the three dimensions\(^{(\mathrm{d})}\) on the different interactive design information\(^{(\mathrm{i})}\) could improve the way we design interaction from a user experience perspective. This interactive approach could be valuable for researchers needing insight on interactive products and user experience, and for designers who are sensitive to the perception of interactive products and their impact on users’ affective and cognitive responses. The following experimentation tests this hypothesis.

4 Experimentation

The objective of the experimentation is to test the influence of the three dimensions\(^{(\mathrm{d})}\) (environment, target, and source) on the different design information\(^{(\mathrm{i})}\) that the scope of interaction covers in the theoretical model (see Fig. 1). The objective is to highlight how the dimensions\(^{(\mathrm{d})}\) impact the design information\(^{(\mathrm{i})}\) (boxes underlined in the theoretical model). By doing so, we could identify, for each area\(^{(\mathrm{a})}\) (the eight areas proposed by the three-dimensional mapping\(^{(\mathrm{d})}\)), its influence on the design information\(^{(\mathrm{i})}\) and the different design principles\(^{(\mathrm{p})}\) that it tackles and could address in the future.

This experimentation is divided into three parts. The first part presents an understanding of our objectives. The second part defines a method to achieve them. Finally, the third part shows the results.

4.1 Objective

The experimentation tests the hypothesis by studying the link between the three dimensions\(^{(\mathrm{d})}\) (environment, target, and source) and their eight areas\(^{(\mathrm{a})}\), the different design information presented through the theoretical model\(^{(\mathrm{i})}\) (see Fig. 1), and the principles\(^{(\mathrm{p})}\) that interactive products use.

Our goal is to formalize the link between the dimensions\(^{(\mathrm{d})}\), the different design information\(^{(\mathrm{i})}\) (users’ affective and cognitive responses), the areas\(^{(\mathrm{a})}\) and the principles\(^{(\mathrm{p})}\), and to quantify this impact in order to propose a tool that guides and supports designers’ choices in early phases.

By doing so, this experimentation aims to provide three outputs:

1. A better understanding of the interactive taxonomy and its constituents based on the three dimensions\(^{(\mathrm{d})}\): This experimentation aims to classify the range of interactive products through the three dimensions\(^{(\mathrm{d})}\). By doing so, it can improve our understanding of the three dimensions\(^{(\mathrm{d})}\) and their eight areas\(^{(\mathrm{a})}\) with definitions and examples.

2. Formalizing the dimensions’\(^{(\mathrm{d})}\) impact on the design information\(^{(\mathrm{i})}\): By doing so, we should also be able to point out the impact of each interactive product area\(^{(\mathrm{a})}\) on the different design information\(^{(\mathrm{i})}\). It could highlight whether some areas\(^{(\mathrm{a})}\) are more meaningful and impactful regarding users’ affective and cognitive responses.

3. Highlighting principles\(^{(\mathrm{p})}\) for new design challenges: This experimentation aims to highlight guidelines, new design challenges, and opportunities for interaction design. To do so, this experimentation states the different possible principles\(^{(\mathrm{p})}\) of interactive products. These principles\(^{(\mathrm{p})}\) consist of some keywords, mostly related to what the interactive product enables the user to do.
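As an illustration of what quantifying the impact of each area\(^{(\mathrm{a})}\) on the design information\(^{(\mathrm{i})}\) could look like, the following Python sketch averages ratings per area and per design-information item. It is only a sketch under stated assumptions: the area labels, the item names, the rating scale, and all scores are hypothetical placeholders, not survey results, and the function name `mean_score_by_area` is invented for this example. The analysis actually used in the study may differ.

```python
from collections import defaultdict
from statistics import mean

# Hypothetical ratings: (area label, design-information item, score).
# Labels, items, and scores are placeholders, not data from the survey.
ratings = [
    ("real/physical/physical", "affective", 4),
    ("real/physical/physical", "cognitive", 3),
    ("real/digital/physical", "affective", 5),
    ("real/digital/physical", "affective", 3),
    ("virtual/digital/digital", "cognitive", 2),
]


def mean_score_by_area(rows):
    """Average the scores for each (area, item) pair."""
    grouped = defaultdict(list)
    for area, item, score in rows:
        grouped[(area, item)].append(score)
    return {key: mean(values) for key, values in grouped.items()}


summary = mean_score_by_area(ratings)
for (area, item), value in sorted(summary.items()):
    print(f"{area:28s} {item:10s} {value:.2f}")
```

Grouping by the pair (area, item) keeps affective and cognitive responses separate, so that areas can be compared on each kind of response independently.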

4.2 Method

The experimentation uses a particular evaluation architecture, a step-by-step sequence of questions based on a self-evaluation method, to assess an interactive product. The interactive products were evaluated on the basis of 45-second videos. We did not use real products for simple reasons: using video-based products allows us to evaluate more interactive products in less time; it also allows us to evaluate products that are prospective and concept-based, and that therefore do not yet exist. Furthermore, it allows us to test, simply and quickly, products that usually require complex material and complex technological support. Finally, this experimentation does not expect precise affective and cognitive responses from the evaluations, but rather clues and tendencies that can help us to understand the impact of the three dimensions on users’ affective and cognitive responses. Thus, 50 products have been selected to cover the widest possible spectrum of the three dimensions of the tested framework.

This study was organized as an online survey in which participants were asked to imagine, through human empathy [50], that they were the actors interacting in the video. This section is divided into three parts. The first part focuses on the protocol used to evaluate the videos. The second part is dedicated to the procedure. Finally, the last part proposes solutions for analyzing the gathered data.

4.2.1 Protocol

This protocol has been divided into two parts. First of all, we organized a preliminary experimentation that aimed to highlight a list of interactive product principles. These interactive product principles have been used as inputs for the final experimentation. This final experimentation aimed to evaluate 50 videos of interactive products and the answers to several questions to score each video.

Preliminary Experimentation: Principles Oriented

The preliminary experimentation aimed to create an exhaustive list of product principles\(^{(\mathrm{p})}\) that will be used in the main experimentation. It started with 12 videos of interactive products that four experts in interaction design discussed.

The input data

For this preliminary experimentation, two inputs have been used: the 12 videos and the question submitted to the panel.

-

The 12 videos: We selected 12 videos based on their originality and to cover the widest possible range of interactive products. We collected videos of existing products, of prospective and futuristic products such as those in science fiction movies (fake interactive products), and of research-based interactive products. These videos were edited down to 30 seconds.

-

The question: “After watching the video X, what principle(s) or ability(ies) do this interactive product use, according to you?”

4.2.2 Procedure of the preliminary experimentation

This procedure details both the panel of participants that joined this preliminary experimentation and the different steps followed.

Panel: We asked four interaction design and user experience experts to take part in this experimentation. They are Toyota Motor Europe members, working in research and development.

The different steps: To conduct this preliminary experimentation, the four participants performed 12 loops, based on the four following steps:

-

Step 1:

Watch one video: First of all, participants were asked to watch the first video. This step lasted 30 seconds.

-

Step 2:

Answers to the question: After the video, participants were asked to answer the question presented previously: “After watching the video X, what principle(s) or ability(ies) do this interactive product use, according to you?”. Each participant had 90 seconds to work on it independently.

-

Step 3:

Present to others: Each participant was asked to present the principles they had highlighted to the others. This time was also used for discussion, principle improvement, and new principle creation.

-

Step 4:

Principles highlighted and discussed were then displayed on Post-its on a wall.

Finally, this four-step loop was reproduced for each video (12 times).

-

Final step:

At the end, we took 45 minutes to discuss, gather, and organize every Post-it presented on the wall.

4.2.3 Results of the preliminary experimentation

The procedure presented above allowed us to collect 17 principles to use in the final experimentation. These principles were clustered into six boxes.

These words, presented as principles of interactive products, were used as input for the final experimentation described hereafter (see Table 2).

Final Experimentation

4.2.4 The input data and panel

The experimentation aims to evaluate videos of interactive products. To evaluate interactive products, we decided to assess four elements presented through a three-part survey: the three dimensions\(^{(\mathrm{d})}\), the eight areas\(^{(\mathrm{a})}\) that ensue, the subjective perception of the interactive product based on the design information\(^{(\mathrm{i})}\) of the theoretical model in Fig. 1, and the different principles\(^{(\mathrm{p})}\) extracted from the preliminary experimentation. To succeed, we used 50 videos (to be evaluated), an architecture of questions (to evaluate), and the internet (as a framework to evaluate).

-

The videos: We selected 50 videos of 45 seconds each, based on their originality and to cover the widest possible range of interactive products and the best possible spectrum of the three dimensions\(^{(\mathrm{d})}\). Researchers in cognitive neuroscience and cognitive psychology have shown this methodology of watching videos of people interacting to be valuable. According to them, neural systems allow users who watch someone performing an action to feel the physiological mechanism of the perception/action coupling [51]. The neuroscientific community argued that the neurons “mirror” the behavior of the other, as though the observer were acting himself just by looking at the other, according to Rizzolatti [50]. Based on a video, users can understand goals and intentions [52], feel empathy [51, 53], understand actions and intentions [54], and infer another person’s mental state (beliefs and desires) from experience of their behavior [55, 56]. We assumed that using real products could lead to more accurate evaluations. Nevertheless, collecting clues and tendencies could also lead us to conclusions on the three dimensions’ impact on users’ affective and cognitive responses. Table 3 presents some examples of those 50 videos.

-

The architecture of questions: The questions were organized in a three-part architecture. It started with an introduction, focusing on participants’ personal information (Table 4). Part 1 was composed of questions to position the interactive product according to the three dimensions\(^{(\mathrm{d})}\) (see Part 1 in Table 4). Part 2 focused on the understanding of the metaphor and its interactive principles\(^{(\mathrm{p})}\). For this part, the 17 principles\(^{(\mathrm{p})}\) extracted from the preliminary experimentation were used. On Likert scales from 0 to 6, participants were asked to rate how well each video corresponded to each principle\(^{(\mathrm{p})}\). Furthermore, an empty box was displayed during the survey for participants who wanted to add a new principle\(^{(\mathrm{p})}\) not already in the list (see Part 2 in Table 4). Part 3 was based on several questions that together captured an overall perception of the interaction, by considering the design information\(^{(\mathrm{i})}\) boxes highlighted by the theoretical model (see Part 3 in Table 4). This part was the most subjective one because it involved questions related to affective and cognitive responses.

-

The internet survey: These questions and videos were presented through an internet survey. We used the Typeform (www.typeform.com) web service to collect answers and to present the videos, which were hosted on the YouTube platform. Using an online system made it possible to send the survey to different types of participants, not only Toyota Motor Europe employees, and to collect the data easily.

-

Panel: The videos were evaluated by two different panels of participants: experts and novices. For the experts, we asked 26 engineers and designers working on product development to take part in this experimentation. They completed every question related to Parts 1 to 3 (see Table 4). For them, the 50 videos were separated into two surveys of 25 videos. The novices only completed the most subjective part (Part 3 in Table 4). They were Europeans from diverse backgrounds. For them, the 50 videos were separated into five surveys of 10 videos. In total, 150 novice surveys were completed (30 participants per survey).

4.2.5 Data analysis and processing

The procedure presented above allowed us to collect 176 fully completed surveys.

Each video was assessed by 13 expert participants and 30 novices. Table 5 summarizes these elements.
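The panel figures above can be cross-checked with a few lines of arithmetic. The sketch below only restates numbers given in the text; the one assumption is that the 26 experts were split evenly across the two expert surveys, which is consistent with the 13 experts per video reported above.

```python
# Panel figures taken from the text above.
EXPERTS = 26
EXPERT_SURVEYS = 2          # 25 videos each
NOVICE_SURVEYS = 5          # 10 videos each
NOVICES_PER_SURVEY = 30
VIDEOS = 50

# Both splits must cover the same 50 videos.
assert EXPERT_SURVEYS * 25 == VIDEOS
assert NOVICE_SURVEYS * 10 == VIDEOS

# Assumption: experts are split evenly across the two expert surveys.
experts_per_video = EXPERTS // EXPERT_SURVEYS
novice_surveys_completed = NOVICE_SURVEYS * NOVICES_PER_SURVEY
total_surveys = EXPERTS + novice_surveys_completed
ratings_per_video = experts_per_video + NOVICES_PER_SURVEY

print(experts_per_video, novice_surveys_completed, total_surveys, ratings_per_video)
```

Under this reading, the 176 completed surveys are the 26 expert surveys plus the 150 novice surveys.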

4.3 Results

Following the experimentation’s objectives, we present our results hereafter in four parts:

-

4.3.1 (d)/(a): Focuses on the link between the different dimensions\(^{(\mathrm{d})}\) and the areas\(^{(\mathrm{a})}\) that emerge from the classification

-

4.3.2 (p)/(a): Highlights the different principles\(^{(\mathrm{p})}\) that have been associated with the different areas\(^{(\mathrm{a})}\)

-

4.3.3 (d)/(i): Formalizes the influence of the dimensions\(^{(\mathrm{d})}\) on the design information\(^{(\mathrm{i})}\)

-

4.3.4 (a)/(i): Compares the areas\(^{(\mathrm{a})}\) to the design information\(^{(\mathrm{i})}\) in order to highlight their impact.

The results are summarized in Table 6.

4.3.1 (d)/(a): interactive taxonomy

Participants scored the 50 videos on a seven-point scale. These scores allow us to map the videos according to the three dimensions\(^{(\mathrm{d})}\).

The three dimensions are illustrated visually as a 3D cube consisting of eight areas\(^{(\mathrm{a})}\) (see Fig. 2). The cube allows us to locate each interactive product according to the scores (along the axes) that participants gave to each video (see Table 6, column ‘Part 1’). According to an ANOVA (analysis of variance; see Table 7), the 50 videos scored along the three dimensions\(^{(\mathrm{d})}\) of ‘environment’, ‘target’, and ‘source’ can be split into several groups according to their differences: the ‘environment\(^{(\mathrm{d})}\)’ axis can be split into two groups (real and virtual), the ‘target\(^{(\mathrm{d})}\)’ axis into four groups (one is clearly what we described as ‘digital’, whereas the ‘physical’ part was separated by the analysis into three groups, as three nuances of the physical target), and the ‘source\(^{(\mathrm{d})}\)’ axis into nine groups (as nine significant nuances from physical sources to digital ones).
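To make the cube concrete, the following minimal sketch maps a video's mean scores on the three axes to one of the eight areas. Several points are assumptions made for illustration and are not stated in the paper: the scale directions (0 = real or physical, 6 = virtual or digital), the use of the scale midpoint as the split on every axis, and the assignment of areas 1 to 4 to the real environment and 5 to 8 to the virtual one. The target/source pattern of the numbering (for example, area 1 as physical target with physical source, areas 4 and 8 as digital target with digital source) follows the descriptions given later in the results and discussion.

```python
from typing import NamedTuple

SCALE_MIDPOINT = 3.0  # assumption: the midpoint of the 0-6 scale splits each axis


class Scores(NamedTuple):
    environment: float  # assumed direction: 0 = real ... 6 = virtual
    target: float       # assumed direction: 0 = physical ... 6 = digital
    source: float       # assumed direction: 0 = physical ... 6 = digital


def classify(scores: Scores) -> int:
    """Return a hypothetical area number (1-8) for a video's mean axis scores."""
    virtual = scores.environment > SCALE_MIDPOINT
    digital_target = scores.target > SCALE_MIDPOINT
    digital_source = scores.source > SCALE_MIDPOINT
    # Within each environment the pattern is:
    # +0 physical/physical, +1 physical target/digital source,
    # +2 digital target/physical source, +3 digital/digital.
    return 1 + 4 * virtual + 2 * digital_target + digital_source


# Hypothetical video: real environment, physical target, digital source.
print(classify(Scores(environment=1.2, target=0.8, source=5.1)))
```

A hard midpoint split is the simplest possible choice; the ANOVA groups reported above suggest that finer splits along the target and source axes would also be defensible.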

This ANOVA showed that significant differences can be highlighted among the 50 videos. It also showed that the environment\(^{(\mathrm{d})}\) is real or virtual, that the target\(^{(\mathrm{d})}\) is digital or physical (with three sub-categories of physicality), and finally that the source\(^{(\mathrm{d})}\) can be separated into nine small groups of videos. Additionally, we calculated the variance of the answers to determine whether participants agreed with one another, which gave one result per axis. The closer the score is to zero, the more participants agreed; conversely, the closer it is to six, the more participants’ answers differed. The degrees of variance were the following: environment\(^{(\mathrm{d})}\) = 1.06; target\(^{(\mathrm{d})}\) = 0.92; source\(^{(\mathrm{d})}\) = 0.73. Thus, the variance decreased as the number of groups highlighted by the ANOVA increased: even though the source\(^{(\mathrm{d})}\) was composed of nine sub-categories, participants mostly agreed on their answers.
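As an illustration of the kind of computation involved, the sketch below runs a one-way ANOVA on one axis, treating each video as a group of participant ratings. The paper does not specify the ANOVA design, the post-hoc procedure used to form the groups, or how the "degree of variance" was computed, so this is only one plausible reading: the within-group mean square is the pooled variance of ratings inside each video (lower means more agreement), and the F statistic compares it with the spread between videos. The ratings and video names are invented for the example.

```python
from statistics import mean


def one_way_anova(groups: dict[str, list[float]]) -> tuple[float, float, float]:
    """Return (ms_between, ms_within, F) for a one-way ANOVA over the groups."""
    all_scores = [x for scores in groups.values() for x in scores]
    grand_mean = mean(all_scores)
    k, n = len(groups), len(all_scores)
    # Spread of the group means around the grand mean.
    ss_between = sum(len(s) * (mean(s) - grand_mean) ** 2 for s in groups.values())
    # Spread of individual ratings around their own group mean.
    ss_within = sum((x - mean(s)) ** 2 for s in groups.values() for x in s)
    ms_between = ss_between / (k - 1)
    ms_within = ss_within / (n - k)
    return ms_between, ms_within, ms_between / ms_within


# Hypothetical ratings (0-6) of three videos on a single axis.
ratings = {
    "video_a": [0, 1, 1, 0, 1],
    "video_b": [3, 2, 4, 3, 3],
    "video_c": [6, 5, 6, 6, 5],
}
ms_between, ms_within, f_stat = one_way_anova(ratings)
print(f"between={ms_between:.2f} within={ms_within:.2f} F={f_stat:.1f}")
```

A large F with a small within-group mean square corresponds to the situation described above for the source axis: many distinguishable groups of videos, yet good agreement between participants on each video.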

Nevertheless, because this typology of interactive products is based on user perception, it reveals an interesting property of the three dimensions\(^{(\mathrm{d})}\): whether users consider an environment\(^{(\mathrm{d})}\) to be real or virtual is closely related to how they perceive the link between the interface\(^{(\mathrm{d})}\) and the environment\(^{(\mathrm{d})}\). Indeed, a linear regression shows a close link between the environment\(^{(\mathrm{d})}\) score (from real to virtual) and the answer to the question about interface and environment (from separated to merged). The more an environment\(^{(\mathrm{d})}\) appears to be merged with the interface, the more real it appears to the user, because there is no separation between the user’s reality and the interactive product. Conversely, if the interactive product and the environment\(^{(\mathrm{d})}\) look separate, the environment is perceived as more virtual, because the separation introduces something that feels ‘fake’ or ‘created.’ For example, the Minority Report video (No. 31) and the Iron Man video (No. 22) are very close in terms of interactions. In video No. 31, however, the data is displayed on a screen, separate from the environment, whereas video No. 22 displays the data through a holographic system that convinces the user that the environment\(^{(\mathrm{d})}\) is merged with the interactive product. This resulted in a more appreciated interaction. Thus, the way we design the product affects the perception of reality through this notion of merging between the environment\(^{(\mathrm{d})}\) where the user interacts and the interactive product itself.
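The relationship described above can be illustrated with an ordinary least-squares fit. In this sketch the data points are invented, and the scale directions are assumptions (0 = separated to 6 = merged for the interface question, 0 = real to 6 = virtual for the environment); with those directions, the reported tendency corresponds to a negative slope.

```python
from statistics import mean


def least_squares(xs: list[float], ys: list[float]) -> tuple[float, float, float]:
    """Return (slope, intercept, Pearson r) of the least-squares line y = a*x + b."""
    mx, my = mean(xs), mean(ys)
    sxx = sum((x - mx) ** 2 for x in xs)
    syy = sum((y - my) ** 2 for y in ys)
    sxy = sum((x - mx) * (y - my) for x, y in zip(xs, ys))
    slope = sxy / sxx
    intercept = my - slope * mx
    r = sxy / (sxx * syy) ** 0.5
    return slope, intercept, r


# Hypothetical per-video mean scores (not data from the study).
merged = [0.0, 1.0, 2.0, 3.0, 4.0, 5.0, 6.0]        # assumed: 0 = separated, 6 = merged
environment = [5.5, 5.0, 4.2, 3.1, 2.4, 1.2, 0.8]   # assumed: 0 = real, 6 = virtual

slope, intercept, r = least_squares(merged, environment)
print(f"slope={slope:.2f} intercept={intercept:.2f} r={r:.2f}")
```

A negative slope with a correlation close to -1 would express the finding that the more the interface appears merged with the environment, the more real the environment is perceived to be.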

4.3.2 (p)/(a): interactive principles

This part is related to the different principles\(^{(\mathrm{p})}\) that the interactive metaphors provide. Based on the preliminary experimentation, we extracted 17 principles\(^{(\mathrm{p})}\) covering both physical and digital properties. Through the ‘Principles part’ presented previously (‘Principles part’ in Table 4), participants had the opportunity to link each video to one or more principles\(^{(\mathrm{p})}\). This resulted in a score for each video, and the scores were then averaged over the videos of each area to characterize the eight areas\(^{(\mathrm{a})}\) previously highlighted.
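The averaging step can be sketched as follows. The video identifiers, area assignments, and scores are hypothetical; only the principle names 'movable' and 'programmable' come from the study, and the exact aggregation used by the authors may differ.

```python
from collections import defaultdict
from statistics import mean


def area_profiles(
    video_area: dict[str, int],
    video_scores: dict[str, dict[str, float]],
) -> dict[int, dict[str, float]]:
    """Average each principle's score over the videos that belong to each area."""
    by_area = defaultdict(list)
    for video, area in video_area.items():
        by_area[area].append(video_scores[video])
    return {
        area: {p: mean(scores[p] for scores in score_dicts) for p in score_dicts[0]}
        for area, score_dicts in by_area.items()
    }


# Hypothetical inputs: which area each video falls in, and its mean 0-6 score per principle.
video_area = {"v1": 1, "v2": 1, "v3": 4}
video_scores = {
    "v1": {"movable": 6, "programmable": 0},
    "v2": {"movable": 4, "programmable": 2},
    "v3": {"movable": 1, "programmable": 5},
}

profiles = area_profiles(video_area, video_scores)
print(profiles)
```

Each area then carries one profile of 17 averaged principle scores, which is the kind of table a PCA can take as input.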

The highlighted interactive principles\(^{(\mathrm{p})}\) provide a richer and finer understanding of the eight interactive areas\(^{(\mathrm{a})}\) (Table 8). Furthermore, we performed a PCA (Principal Component Analysis; see Fig. 3) to see how the ‘interactive principles\(^{(\mathrm{p})}\)’ could be organized on a plane. The plane accounts for 75% of the total variance. Both the notion of ‘target\(^{(\mathrm{d})}\)’ (vertical axis) and that of ‘source\(^{(\mathrm{d})}\)’ (horizontal axis) are represented on this PCA. Nevertheless, we removed areas\(^{(\mathrm{a})}\) 5 and 6 because they were not adequately represented (only one video fell into each of these areas\(^{(\mathrm{a})}\)).

It appeared that there is a clear opposition between physical sources\(^{(\mathrm{d})}\) and digital ones\(^{(\mathrm{d})}\) (horizontal axis). Nevertheless, these physical sources\(^{(\mathrm{d})}\) cover the whole vertical axis, from physical to digital targets\(^{(\mathrm{d})}\). This means that the videos used very physical sources\(^{(\mathrm{d})}\) with physical targets\(^{(\mathrm{d})}\), like ‘transformable\(^{(\mathrm{p})}\)’ or ‘movable\(^{(\mathrm{p})}\)’. But it also shows that physical sources\(^{(\mathrm{d})}\) could be used for digital targets\(^{(\mathrm{d})}\).

For example, the terms ‘manipulable\(^{(\mathrm{p})}\)’, ‘graspable\(^{(\mathrm{p})}\)’ and ‘malleable\(^{(\mathrm{p})}\)’, which were considered physical sources\(^{(\mathrm{d})}\), were mainly used to characterize digital targets\(^{(\mathrm{d})}\). This observation is also valid for digital sources\(^{(\mathrm{d})}\): ‘programmable\(^{(\mathrm{p})}\)’, ‘connectable\(^{(\mathrm{p})}\)’ or ‘voidable\(^{(\mathrm{p})}\)’ were considered digital sources\(^{(\mathrm{d})}\) and were mainly used in the videos to support a physical target\(^{(\mathrm{d})}\). Thus, it showed that, independently from real or virtual notions, physical or digital sources\(^{(\mathrm{d})}\) could be used by and through both physical and digital targets\(^{(\mathrm{d})}\).
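The share of variance quoted for the PCA above can be understood through the following minimal sketch, which computes the fraction of total variance carried by the first two principal components. It assumes that the reported percentage refers to the two plotted axes, which the paper does not state explicitly. The implementation uses power iteration with deflation on the covariance matrix rather than a statistics library, and the input table is invented.

```python
def covariance_matrix(rows: list[list[float]]) -> list[list[float]]:
    """Sample covariance matrix of the columns of `rows`."""
    n, d = len(rows), len(rows[0])
    means = [sum(r[j] for r in rows) / n for j in range(d)]
    centered = [[r[j] - means[j] for j in range(d)] for r in rows]
    return [
        [sum(c[i] * c[j] for c in centered) / (n - 1) for j in range(d)]
        for i in range(d)
    ]


def top_eigenpair(matrix: list[list[float]], iterations: int = 500):
    """Dominant eigenvalue and eigenvector of a symmetric matrix (power iteration)."""
    d = len(matrix)
    # Arbitrary non-uniform start vector; it must not be orthogonal to the
    # dominant eigenvector, which holds for the example data below.
    vec = [float(i + 1) for i in range(d)]
    for _ in range(iterations):
        nxt = [sum(matrix[i][j] * vec[j] for j in range(d)) for i in range(d)]
        norm = sum(x * x for x in nxt) ** 0.5
        if norm == 0.0:
            return 0.0, vec
        vec = [x / norm for x in nxt]
    value = sum(vec[i] * matrix[i][j] * vec[j] for i in range(d) for j in range(d))
    return value, vec


def explained_variance_ratios(rows: list[list[float]], components: int = 2) -> list[float]:
    """Fraction of total variance carried by each of the first `components` axes."""
    cov = covariance_matrix(rows)
    d = len(cov)
    total = sum(cov[i][i] for i in range(d))
    ratios = []
    for _ in range(components):
        value, vec = top_eigenpair(cov)
        ratios.append(value / total)
        # Deflation: remove the component just found before looking for the next one.
        cov = [[cov[i][j] - value * vec[i] * vec[j] for j in range(d)] for i in range(d)]
    return ratios


# Hypothetical table: one row per area, one column per principle (mean 0-6 scores).
table = [
    [6.0, 5.0, 1.0],
    [5.0, 4.0, 2.0],
    [2.0, 1.0, 5.0],
    [1.0, 2.0, 6.0],
]
ratios = explained_variance_ratios(table, components=2)
print(f"first two axes carry {sum(ratios):.1%} of the total variance")
```

In practice a dedicated statistics package would be used for this step; the sketch only shows what a statement such as "75% of the total variance" measures.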

4.3.3 (d)/(i): influence of dimensions\(^{(\mathrm{d})}\) on design information\(^{(\mathrm{i})}\)

The aim of this part is to use the evaluation of the interactive products to compare the three dimensions\(^{(\mathrm{d})}\) from the taxonomy with the design information\(^{(\mathrm{i})}\) from the model (see Fig. 1). To do so, we took the results of the ANOVA (see Table 7) for each dimension\(^{(\mathrm{d})}\) and related them to the six central design information\(^{(\mathrm{i})}\) boxes from the model (see Fig. 1) through a linear regression. The results are presented in Fig. 4.

It appears that each dimension\(^{(\mathrm{d})}\) affected the design information\(^{(\mathrm{i})}\) extracted from the theoretical model differently. As we showed in the state of the art, an interactive experience combines the environment\(^{(\mathrm{d})}\), the target\(^{(\mathrm{d})}\), and the source\(^{(\mathrm{d})}\) into one whole experience. Thus, rather than observing the differences between dimensions\(^{(\mathrm{d})}\), the following part takes the interactive artifact’s point of view by analyzing each area\(^{(\mathrm{a})}\) extracted from the taxonomy. Therefore, by averaging the video results for each of the eight areas\(^{(\mathrm{a})}\) highlighted by the mapping of the three dimensions\(^{(\mathrm{d})}\), we represented on the theoretical model the varying impact on the design information\(^{(\mathrm{i})}\), expressed as a percentage. These results are summarized in Table 6 (part ‘results design information’).

4.3.4 (a)/(i): eight areas\(^{(\mathrm{a})}\) and their impact on the design information\(^{(\mathrm{i})}\)

This part compares the eight areas\(^{(\mathrm{a})}\) highlighted by the three-dimensional\(^{(\mathrm{d})}\) taxonomy with the video results on the different design information\(^{(\mathrm{i})}\) of the model (see Fig. 1). To better see how these interactive areas\(^{(\mathrm{a})}\) affected both user responses and user perception\(^{(\mathrm{i})}\) of the interactive product, we used a PCA (see Figure XX). For this PCA, we only used areas 1\(^{(\mathrm{a})}\), 2\(^{(\mathrm{a})}\), 3\(^{(\mathrm{a})}\), 4\(^{(\mathrm{a})}\), 7\(^{(\mathrm{a})}\), and 8\(^{(\mathrm{a})}\). Areas 5\(^{(\mathrm{a})}\) and 6\(^{(\mathrm{a})}\), each represented by only one video, were ignored because of their weak representation. Two elements were correlated: the retained areas\(^{(\mathrm{a})}\) (each composed of an average of its videos in Table 3), and the six design information\(^{(\mathrm{i})}\) boxes highlighted by the theoretical model (see Fig. 1). Thus, we obtained the following representation (see Fig. 5). Based on this PCA (total variance: 88.80%), we created two new axes to better understand it. The first one differentiates positive from negative results. Based on this axis, we can clearly see that areas 2\(^{(\mathrm{a})}\), 3\(^{(\mathrm{a})}\) and 7\(^{(\mathrm{a})}\) were considered more meaningful (in terms of the qualitative and quantitative impact on the affective and cognitive responses users experience) than areas 8\(^{(\mathrm{a})}\), 4\(^{(\mathrm{a})}\) and 1\(^{(\mathrm{a})}\). What areas 2\(^{(\mathrm{a})}\), 3\(^{(\mathrm{a})}\) and 7\(^{(\mathrm{a})}\) have in common is that they combine both physical and digital elements, independently from the notion of real or virtual (a physical target\(^{(\mathrm{d})}\) with a digital source\(^{(\mathrm{d})}\), or a digital target\(^{(\mathrm{d})}\) with a physical source\(^{(\mathrm{d})}\)).
Thus, it appears that areas\(^{(\mathrm{a})}\) combining both the physical and the digital were more appreciated and more related to meaningful experiential interactions. Areas 8\(^{(\mathrm{a})}\), 4\(^{(\mathrm{a})}\) and 1\(^{(\mathrm{a})}\), independently from the notion of environment\(^{(\mathrm{d})}\) (real or virtual), are either completely physical (physical target\(^{(\mathrm{d})}\) and physical source\(^{(\mathrm{d})}\): area 1\(^{(\mathrm{a})}\)) or completely digital (digital target\(^{(\mathrm{d})}\) and digital source\(^{(\mathrm{d})}\): areas 4\(^{(\mathrm{a})}\) and 8\(^{(\mathrm{a})}\)).

Finally, based on the evaluation of 50 videos by 176 participants, a taxonomy of eight areas\(^{(\mathrm{a})}\) of interactive artifacts has been highlighted. We pointed out how participants characterized the impact of these eight interactive areas\(^{(\mathrm{a})}\) through the model of design information\(^{(\mathrm{i})}\) (Fig. 1). These different elements are presented in Table 6.

4.4 Discussion

In this part, we discuss the results presented above in five sections:

-

4.4.1 (d)/(a): the first part approaches the notion of taxonomy, which we pointed out through the three dimensions\(^{(\mathrm{d})}\)

-

4.4.2 (a)/(i): the second part discusses the different areas\(^{(\mathrm{a})}\) through the notion of design information\(^{(\mathrm{i})}\)

-

4.4.3 (a)/(p): the third part discusses future design challenges, based on the results for the principles\(^{(\mathrm{p})}\)

-

4.4.4: the fourth part discusses metaphor and interaction design through the angle of temporality to improve the definition of metaphor

-

4.4.5: finally, the last part presents the limitations.

These sections aim to transform the previous analysis into a description of possible methodologies.

4.4.1 (d)/(a): the interactive taxonomy: a three-dimensional\(^{(\mathrm{d})}\) view

The proposed taxonomy is one way to differentiate interactive products based on the human perception of physical and digital properties. Eight areas\(^{(\mathrm{a})}\) have been highlighted according to three dimensions\(^{(\mathrm{d})}\) (environment, target, and source). This taxonomy could be made even more precise by differentiating more groups within the eight areas\(^{(\mathrm{a})}\), as the ANOVA highlighted (see Table 7). Nevertheless, starting with only eight areas\(^{(\mathrm{a})}\) to classify interactive products is already challenging because the classification is not static: according to our analysis, interactive products can be assessed differently depending on people’s knowledge, culture, society, and many more parameters that have been highlighted by the theoretical model of design information\(^{(\mathrm{i})}\) (see Fig. 1). Furthermore, as shown in the results, we collected several rules. For example, according to Fig. 4, a real environment seems to be more impactful regarding ‘semantics.’ Designers can use these rules as methods and clues to design new interactive products. Thus, the three dimensions become parameters the designer can play with to influence users’ affective and cognitive responses\(^{(\mathrm{i})}\).

Both designers and researchers can use this taxonomy and its analytical results to see and understand the impact of different design choices, such as the environment, the target (product properties), and the source (reference of perceived properties), on users’ affective and cognitive responses.

4.4.2 (a)/(i): from physical to digital, a two-worlds vision

Section 4.3.4 highlighted that meaningful interactions (interactions impacting the different design information from the theoretical model) were interactions that combined both the physical and digital worlds (areas 2, 3, and 7 from the interactive taxonomy), as opposed to interactions that combined two identical worlds (areas 1, 4, and 8). Indeed, both worlds (the physical and the digital) have their own characteristics and advantages. They can be summarized as follows: what the physical brings to any interaction is the human language of things. Humans are attached to materiality, which seems to affect them through a richer perception (senses) of the product (practical, aesthetic, and symbolic notions). On the other side, the digital and virtual worlds bring larger possibilities regarding ‘action enablement’ and ‘user’s behavioral impact.’ Furthermore, emotions have been highlighted as more influential in the virtual environment.

Nevertheless, the user experience approach to interactive products leads us not to consider the dimensions independently from each other, but instead to consider the full experience of the interactive product: the combination of the three dimensions perceived by the user and hosted in one interactive product. This combination has been highlighted as the key. Thus, taking advantage of both worlds (physical target + digital source, or digital target + physical source) increases the impact on users’ actions and perception of the interactive product, creating more meaningful experiential interaction. Following this understanding of combining two worlds, it has been shown that areas 2, 3 and 7 were more meaningful than the others. Furthermore, we assume that although area 6 was not covered by enough videos to be evaluated, it can also be considered a strong territory for research.

Thus, if these eight areas of interaction cover the scope of physical and digital interaction from a user experience point of view, the study highlighted that interactive areas number 2, 3, 6 and 7 are four fields that should be considered when designing meaningful interactive products. These interactive areas only give clues and guidelines for understanding and designing future interactive products. The right balance between the physical and digital target and source, depending on the environment where it takes place, still needs to be designed.

4.4.3 (a)/(p) future design challenges

The interactive principles that have been presented can be considered as notions or clues for future design challenges. Following the understanding presented previously, according to which both worlds (physical and digital) should be considered when designing interactive products, we can assume that both a physical target that uses digital sources (areas 2 and 6, named hereafter Territory 1) and a digital target that uses physical sources (areas 3 and 7, named hereafter Territory 2) can be improved. The following representation (see Fig. 6), extracted from the previous PCA (see Fig. 3), shows some elements that can be regarded as new and future opportunities for concept creation: Territory 1, which is composed of physical targets that use digital sources, could be improved by the interactive principles that could move into this territory (represented by dotted arrows). By doing so, designers could improve the experience of interaction.

Likewise, the same can be argued for the second territory. This digital target, which uses physical sources, could be enhanced by principles like ‘transformable’ or ‘movable’. By doing so, we could improve or create concepts that use even more of these physical principles and ultimately improve the experience of interaction.

As noted in the state of the art about image schemas [48], one danger of such inventories of principles is that they are never complete. Thus, these principles can be used as clues or challenges for designing more efficient or powerful experiential interaction. Nevertheless, this list is not exhaustive; it must grow and be improved.

4.4.4 The metaphor and interaction design

Going back to the state of the art, and more specifically to the definition of metaphors, the study highlighted the power of metaphors in an interactive product. Even though this study focused on the physical and digital properties of both the target\(^{(\mathrm{d})}\) and the source\(^{(\mathrm{d})}\) in metaphor, it combined the understanding of interactive products with that of metaphors, and it raises powerful notions of interaction and metaphors. Furthermore, the principles\(^{(\mathrm{p})}\) highlighted in the preliminary experimentation are mainly related to what the product allows one to do, and to dynamic properties (like ‘movable\(^{(\mathrm{p})}\)’ or ‘manipulable\(^{(\mathrm{p})}\)’). These principles\(^{(\mathrm{p})}\) outline one key characteristic of interaction design: the notion of time and sequence. Indeed, as presented in the definition of interaction, time is decisive, because interactions are sequences of actions. Thus, metaphor in interaction design can be easily differentiated from metaphor in product design through this notion of sequence and temporality. Even if the conceptual structure of metaphor defended by Lakoff and Johnson [27] involves all natural dimensions of our experience (color, shape, texture, sound...), metaphor in interaction makes sense through the notions of time, narration, and temporality. Thus, metaphor in interaction design relates to a sequential conceptual structure, where the source references another entity to raise more meaningful interactions and user experiences.

Finally, given this point about sequence and time, we encourage designers to explore the benefit of metaphors in interaction design through the angle of a sequence of actions when considering the source\(^{(\mathrm{d})}\).

4.4.5 Limitations

First of all, even if it has been shown in the procedure that evaluating videos of people interacting is valuable, results could have been more precise if evaluations had been performed with real product tests. Thus, we should consider the results as tendencies and clues rather than as exact results. Furthermore, the examples displayed in this taxonomy may become outdated quickly, because human perception evolves very fast. Thus, the taxonomy needs to be updated, fed, and improved by new evaluations of everyday products and new concepts. Additionally, we need to conduct more evaluations with products that can be associated with areas 5 and 6, since only one video was linked to each of these areas. This can be due to several reasons: these interactive areas might not have been investigated enough, or not yet, by the design community; the videos that were selected may not cover the full scope of interactive products; or these typologies are too unusual to be covered by the design community.

Finally, the approach being developed requires designers to be comfortable with designing for subjective perception (affective and cognitive reactions). We understand that this approach can be difficult and confusing to tackle because it is hard to relate to measurable data. Nevertheless, the added value of such a user-experience and interactive-product approach is high, and it deserves to be implemented in the design process.

5 Conclusion

This research developed a taxonomy resulting in eight areas\(^{(\mathrm{a})}\) of interactive products through the human perception\(^{(\mathrm{i})}\) of physical and digital dimensions\(^{(\mathrm{d})}\): environment\(^{(\mathrm{d})}\), target\(^{(\mathrm{d})}\) (product properties), and sources\(^{(\mathrm{d})}\) (perceived references). It resulted in a method, a tool, a taxonomy, and design challenges. These elements contribute to the development of the interactive approach.

First of all, the study provides a method to quickly evaluate and classify interactive products through subjective human perception. This protocol takes into consideration the senses involved, the physical and digital interactions, and the subjective perception (overall perception) of user experiential interaction. It proposes an open basis and a step-by-step architecture for evaluating any interactive product. This simple common base can then be augmented and improved through more scales, keywords, and questions, depending on the purposes of researchers in interactive products from the disciplines of user experience design, human factors, computer science, artificial intelligence, or design methodology. This method and database are used today at Toyota Motor Europe to evaluate and correlate both interactive products from every horizon and automotive-related interactive products (such as the steering wheel, the navigation system, the HVAC, etc.). By clustering all these interactive products according to the taxonomy, we can link automotive-related components with everyday and future interactive products. By doing so, we can compare automotive products with any interactive products (like those evaluated in this experimentation) and improve them through the physical and digital paradigm. This approach opens up new perspectives within the design process by confronting automotive-related parts with any kind of product. Thus, this interactive approach can be considered a method for enlarging designers’ scope in order to improve the design of interactive products.

Secondly, we highlighted a three-dimensional\(^{(\mathrm{d})}\) tool that gives clues and challenges for designers through the physical and digital perception. For example, this tool can be used to expand, improve, and clarify an interaction design brief in early design by encouraging designers to consider the balance between the three dimensions\(^{(\mathrm{d})}\), as well as the impact of each dimension\(^{(\mathrm{d})}\) on the human affective and cognitive responses. Additionally, the principles\(^{(\mathrm{p})}\) that have been highlighted are the beginning of an endless list, which can be used as clues and key notions to evaluate interactive products, and as challenges when designing interactive products.

The method to evaluate and classify interaction through subjective perception and the three-dimensional\(^{(\mathrm{d})}\) tool can be used at different phases of the design process. For example, considering the four design activities proposed by Bouchard (2003) (Information, Generation, Evaluation and Communication), the method could be used to evaluate and locate a generated concept compared to existing ones. Furthermore, it can also be used in the Information activities in order to evaluate and classify existing interactive products and thereby highlight possible territories of creation. Thus, the defined method and tool can be used at different stages of the design process, depending on the expected outputs.

Thirdly, this research presented the taxonomy itself, through a database of 50 interactive products evaluated by 176 participants. Through this database, we emphasized the link between physical and digital interactions, leading us to a key finding: experiential interactions (independently of the environment) are more meaningful when designed with both physical and digital principles\(^{(\mathrm{d})}\), that is, physical targets\(^{(\mathrm{d})}\) combined with digital sources\(^{(\mathrm{d})}\), or digital targets\(^{(\mathrm{d})}\) combined with physical sources\(^{(\mathrm{d})}\). Thus, it sets a new milestone both in the understanding of interaction design through physical and digital properties within the scope of user experience and in the understanding of metaphors in interaction design.

Finally, the three elements presented throughout this paper can be considered universal, because they were conceived on the basis of an international state of the art. Nevertheless, the conducted study was a European evaluation, because the participants were European. We therefore assume that performing this same evaluation in another cultural context could highlight the impact of culture on the perception of interactive products (affective and cognitive perception).

References

Gentner, A.: Thesis: Definition and representation of user experience intentions in the early phase of the industrial design process: a focus on the kansei process (2014)

Saussure, F.: Cours de linguistique générale, Payot (Collection:Bibliothèque scientifique Payot) (ISBN -228-50065-8 et 2-228-88165-1) (1916)

Peirce, C.: Elements of Logic Recueil: Écrits sur le signe, Seuil 1978 (1903)

Hekkert, P., Cila, N.: Handle with care! Why and how designers make use of product metaphors. Des. Stud. 40, 196–217 (2015)

Forlizzi, J., Battarbee, K.: Understanding experience in interactive systems. In: Proceedings of the 2004 Conference on Designing Interactive Systems (DIS 04): Processes, Practices, Methods, and Techniques, p. 261. ACM, New York (2004)

Desmet, P.M.A., Hekkert, P.: Framework of product experience. Int. J. Des. 1(1), 57–66 (2007)

Hekkert, P., Schifferstein, H.N.J.: Introducing product experience. In: Schifferstein, H.N.J., Hekkert, P. (eds.) Product Experience. Elsevier, Amsterdam (2008)

Mahlke, S., Thüring, M.: Studying antecedents of emotional experiences in interactive contexts. In: Proceedings of the SIGCHI Conference on Human Factors in Computing Systems, pp. 915–918. ACM Press (2007)

Hassenzahl, M.: The interplay of beauty, goodness, and usability in interactive products. Hum. Comput. Interact. 19(4), 319–349 (2004)

Mugge, R., Schifferstein, H.N.J., Schoormans, J.P.L.: Product attachment and satisfaction: understanding consumers’ post-purchase behavior. J. Consum. Mark. 27(3), 271–282 (2010)

Ortíz Nicólas, J.C., Aurisicchio, M.: A scenario of user experience. In: Culley, S.J., Hicks, B.J., McAloone, T.C., Howard, T.J., Badke-Schaub, P. (eds.) Proceedings of the 18th International Conference on Engineering Design (ICED 11), Impacting through Engineering Design: Vol. 7, pp. 182–193. Human Behaviour in Design (2011)

Parrish, P.: A Design and Research Framework for Learning Experience. University of Colorado Denver, The COMET Program Brent G, Wilson (2008)

Hassenzahl, M.: User experience and experience design. In: Soegaard, M., Dam, R.F. (eds.) Encyclopedia of Human-Computer Interaction. The Interaction-Design.org Foundation. http://www.interaction-design.org/encyclopedia/user_experience_and_experience_design.html (2011)

Gil, S.: Comment mesurer les émotions en laboratoire ? Revue électronique de (2009)

Hassenzahl, M., Tractinsky, N.: User experience–a research agenda. Behav. Inf. Technol. 25(2), 91–97 (2006)

Norman, D.A.: Emotional Design: Why We Love (or Hate) Everyday Things. Basic Books, New York (2004)

Bongard-Blanchy, K.: Bringing the user experience to early product design: From idea generation to idea evaluation? Ph.D. Dissertation, Arts et Metiers Paristech, France (2013)

Crilly, N., Moultrie, J., Clarkson, P.J.: Seeing things: consumer response to the visual domain in product design. Des. Stud. 25, 547–577 (2004)

Rasmussen, J.: What can be learned from human error reports? In: Duncan, K., et al. (eds.) Changes in Working Life. Wiley, Chichester (1980)

Atkinson, R.C., Shiffrin, R.M.: Chapter: human memory: a proposed system and its control processes. In: Spence, K.W., Spence, J.T. (eds.) The Psychology of Learning and Motivation, vol. 2, pp. 89–195. Academic Press, New York (1968)

Helander, M.G., Khalid, H.M.: Affective and pleasurable design. In: Salvendy, G. (ed.) Handbook of Human Factors and Ergonomics, 3rd edn. Wiley, Hoboken (2006). doi:10.1002/0470048204.ch21

Coelho, M., Maes, P.: Sprout I/O: a texturally rich interface. In: TEI ’08, pp. 221–222. ACM Press (2007)

Milgram, P., Colquhoun, H.: A taxonomy of real and virtual world display integration. In: Ohta, Y., Tamura, H. (eds.) Mixed Reality-Merging Real and Virtual Worlds, pp. 5–30. Springer, New York (1999)

Jacob, R.J.K., Girouard, A., Hirshfield, L.M., Horn, M.S., Shaer, O., Treacy, E.S., Zigelbaum, J.: Reality-based interaction: a framework for post-WIMP interfaces. In: Proceedings of CHI 2008. ACM Press (2008)

Benford, S., Greenhalgh, C., Reynard, G., Brown, C., Koleva, B.: Understanding and constructing shared spaces with mixed reality boundaries. ACM Trans. Comput. Hum. Interact. 5(3), 185–223 (1998)

Pine, J.: Joseph Pine: What consumers want [Video file] (2004). Retrieved from https://www.ted.com/talks/joseph_pine_on_what_consumers_want?language=fr#t-13682

Lakoff, G., Johnson, M.: Metaphors We Live by. University of Chicago Press, Chicago (1980)

Gibbs, R.W., Beitel, D.A., Harrington, M., Sanders, P.E.: Taking a stand on the meanings of stand: bodily experience as motivation for Polysemy. J. Semant. 11, 231–251 (1994)

Forceville, C.: Metaphor in pictures and multimodal representations. In: Gibbs, R.W. (ed.) The Cambridge Handbook of Metaphor and Thought, pp. 462–482. Cambridge University Press, New York (2008)

Cienki, A., Müller, C.: Metaphor, gesture, and thought. In: Gibbs, R.W. (ed.) The Cambridge Handbook of Metaphor and Thought, pp. 483–501. Cambridge University Press, New York (2008)

Chung, W.: Theoretical structure of metaphors in emotional design. In: Proceedings of the 6th International Conference on Applied Human Factors and Ergonomics (AHFE 2015) and the Affiliated Conferences (2015)

Sweetser, E.: From Etymology to Pragmatics: Metaphorical and Cultural Aspects of Semantic Structure. Cambridge University Press, Cambridge (1990)

Turner, M.: Figure. In: Katz, A.N., Cacciari, C., Gibbs, R.W., Turner, M. (eds.) Figurative Language and Thought, pp. 44–87. Oxford University Press, New York (1998)

Carroll, N.: A note on film metaphor. J. Pragmat. 26, 809–822 (1996)

Alty, J.L., Knott, R.P.: Metaphor and human-computer interaction: a model based approach. In: Nehaniv, C. (ed.) Computation for Metaphors, Analogy, and Agents, pp. 307–321. Springer, Berlin (1999)

Krippendorff, K.: The Semantic Turn: A New Foundation for Design. Taylor & Francis, New York (2006)

Hurtienne, J., Blessing, L.: Design for intuitive use - testing image schema theory for user interface design. In: Paper Presented at the International Conference on Engineering Design, ICED’07. Paris (2007)

Carroll, J., Mack, R.: Metaphors, computing systems and active learning. Int. J. Man Mach. Stud. 22, 39–57 (1985)

Yanagisawa, H.: A computational model of perceptual expectation effect based on neural coding principles. J. Sens. Stud. 31, 430–439 (2016). doi:10.1111/joss.12233

Schnabel, M.A.: Framing mixed reality. In: Wang, X., Schnabel, M.A. (eds.) Mixed Reality Application in Architecture, Design and Construction. Springer, Netherlands (2006)

Milgram, P., Kishino, F.: A taxonomy of mixed reality visual displays. IEICE Trans. Inf. Syst. E77–D(12), 1321–1329 (1994)

Anders, P.: Cynergies: technologies that hybridize physical and cyberspaces. In: Connecting Crossroads of Digital Discourse, Association for Computer Aided Design in Architecture (ACADIA), pp. 289–297. Indianapolis (2003)

Djajadiningrat, T., Wensveen, S., Frens, J., Overbeeke, K.: Tangible products: redressing the balance between appearance and action. Pers. Ubiquitous Comput. 8(5), 294–309 (2004)

Ishii, H., Lakatos, D., Bonanni, L., Labrune, J.: Radical atoms: beyond tangible bits, toward transformable materials interactions. (2012). doi:10.1145/2065327.2065337

Poupyrev, I., Nashida, T., Okabe, M.: Actuation and tangible user interfaces: the Vaucanson duck, robots, and shape displays. In: Proceedings of TEI ’07, pp. 205–212. ACM Press (2007)

Kamaruddin, A., Dix, A.: Understanding physicality on desktop: preliminary results. In: Lancaster, First International Workshop for Physicality (2006)

Xerox 8010 Star System (1982)

Johnson, M.: The Body in the Mind: The Bodily Basis of Meaning Imagination, and Reason. The University of Chicago Press, Chicago (1987)

Mahut, T., Bouchard, C., Omhover, J.F., et al.: Interdependency between user experience and interaction: a kansei design approach. Int. J. Interact. Des. Manuf. (2017). doi:10.1007/s12008-017-0381-4

Rizzolatti, G., Fadiga, L.: Resonance behaviors and mirror neurons. Arch. Ital. Biol. 137, 85–100 (1999)

Keysers, C.: The Empathic Brain: How the Discovery of Mirror Neurons Changes Our Understanding of Human Nature. Lulu.com (2011)

Fogassi, L., Ferrari, P.F., Gesierich, B., Rozzi, S., Chersi, F., Rizzolatti, G.: Parietal lobe: from action organization to intention understanding. Science 308(5722), 662–667 (2005)

Decety, J.: Naturaliser l’empathie [Empathy naturalized]. L’Encéphale 28, 9–20 (2002)

Iacoboni, M., Woods, R.P., Brass, M., Bekkering, H., Mazziotta, J., Rizzolatti, G.: Cortical mechanisms of human imitation (1999)

Gordon, R.: Folk psychology as simulation. Mind Lang. 1(2), 158–171 (1986). doi:10.1111/j.1468-0017.1986.tb00324.x

Goldman, A.: Interpretation psychologized. Mind Lang. 4(3), 161–185 (1989). doi:10.1111/j.1468-0017.1989.tb00249.x

Blackwell, A.F.: The reification of metaphor as a design tool. ACM Trans. Comput. Hum. Interact. 13(4), 490–530 (2013)

Lang, P.J.: The emotion probe: studies of motivation and attention. Am. Psychol. 50(5), 372–385 (1995)

Lalmas, M., O’Brien, H., Yom-Tov, E.: Measuring user engagement. In: Proceedings of the 22nd International World Wide Web Conference 2013, Rio de Janeiro (2013)

Ryan, R., Deci, E.: Intrinsic and Extrinsic Motivations: Classic Definitions and New Directions. University of Rochester, Rochester (2000)

Moussette, C.: Simple Haptics, Sketching Perspectives for the Design Interaction. Ph.D. Dissertation (2012)

Dias, R.A., Gontijo, L.A.: Aspectos ergonômicos relacionados aos materiais. Ergodes. HCI 1, 22–33 (2013)

Lund, A.M.: Measuring usability with the USE questionnaire. STC Usability SIG Newsletter, 8:2 (2001)

Camere, S., Schifferstein, H.N.J.: The experience map. A tool to support experience-driven multisensory design. Politecnico di Milano. In: Proceedings of Desform 2015 (2015)

Acknowledgements

The authors thank Toyota Motor Europe for supporting this research and for providing the conditions to carry it out. Furthermore, we would like to thank all the participants who took part in the experimentation for the time they spent and for the rich discussions it raised. Finally, we would like to thank the reviewers for their meaningful feedback.

Cite this article

Mahut, T., Bouchard, C., Omhover, JF. et al. Interaction Design and Metaphor through a Physical and Digital Taxonomy. Int J Interact Des Manuf 12, 629–649 (2018). https://doi.org/10.1007/s12008-017-0419-7

DOI: https://doi.org/10.1007/s12008-017-0419-7