Abstract

The prevalence and characteristics of research misconduct have mainly been studied in highly developed countries. In moderately or poorly developed countries such as Croatia, data on research misconduct are scarce. The primary aim of this study was to determine the rates at which scientists report committing or observing the most serious forms of research misconduct, such as falsification, fabrication, plagiarism, and violation of authorship rules in the Croatian scientific community. Additionally, we sought to determine the degree of development and the extent of implementation of the system for defining and regulating research misconduct in a typical scientific community in Croatia. An anonymous questionnaire was distributed among 1232 Croatian scientists at the University of Rijeka in 2012/2013 and 237 (19.2 %) returned the survey. Among the respondents, 9 (3.8 %) admitted to plagiarism, 22 (9.3 %) to data falsification, 9 (3.8 %) to data fabrication, and 60 (25.3 %) to violation of authorship rules. As for research misconduct of fellow scientists, 72 (30.4 %) respondents reported having observed plagiarism, 69 (29.1 %) data falsification, 46 (19.4 %) data fabrication, and 132 (55.7 %) violation of authorship rules. The results of our study indicate that the efficacy of the system for managing research misconduct in Croatia is poor. At the University of Rijeka there is no document dedicated exclusively to research integrity, describing the values that should be fostered by a scientist and clarifying the forms of research misconduct and what constitutes a questionable research practice. Scientists do not trust ethical bodies and the system for defining and regulating research misconduct; therefore the observed cases of research misconduct are rarely reported. Finally, Croatian scientists are not formally educated about responsible conduct of research at any level of their formal education. All of these findings indicate possible reasons for higher rates of research misconduct among Croatian scientists in comparison with scientists in highly developed countries.

Introduction

The prevalence and characteristics of research misconduct have increasingly been studied over the last several decades. Studies on research misconduct first appeared about 30 years ago in highly developed countries, such as the USA, Canada, and Western European countries (Fanelli 2009; Marušić et al. 2011; Pupovac and Fanelli 2015). More recently, such studies have been conducted in moderately and poorly developed countries, where research misconduct shows a substantially higher rate than in developed countries (Dhingra and Mishra 2014; Okonta and Rossouw 2014; Sullivan et al. 2014).

In addition to the differences in the frequency of reported research misconduct between highly developed, moderately developed and poorly developed countries, there are also significant differences in the systems for preventing and managing research misconduct. For example, in highly developed countries, the forms of research misconduct and the ways in which it is managed are clearly defined in numerous legal documents, ethics manuals, codes of practice, and guidelines at several levels, i.e. at the level of professional associations, scientific journals, departments, institutions, the state, and even between states. Scientists in developed countries are routinely educated about responsible conduct of research (RCR) and are encouraged to discuss research misconduct through workshops, courses and educational materials (Resnik and Master 2013). Developing countries usually do not have any national or institutional systems to prevent and manage research misconduct, or such systems are only just beginning to emerge and to provide an ethical framework for scientific work. Cases of research misconduct are still discussed reluctantly, and education on research misconduct is largely absent in developing countries (Ana et al. 2013).

Croatia is a moderately developed Eastern European country in which the prevalence of research misconduct is expected to be higher and the measures for regulating research misconduct weaker than those in developed countries. However, Croatia is unusual for its early start with activities for promoting research integrity. In 2006, the Croatian Parliament founded a national ethics body, the Committee for Ethics in Science and Higher Education (CESHE), with the goal of preventing and managing research misconduct and promoting education on research integrity (Petrovecki and Scheetz 2001; Puljak 2007). As a result, the CESHE developed The National Code of Ethics. Simultaneously, in the mid-2000s, the first studies on academic dishonesty or misconduct in Croatia were performed. These studies found a high prevalence of academic dishonesty and cheating among medical students (Bilić-Zulle et al. 2005; Hrabak et al. 2004; Pupovac et al. 2008), positive and permissive attitudes toward academic dishonesty among students of medicine and pharmacy (Kukolja Taradi et al. 2010, 2012; Pupovac et al. 2010), and that the application of penalties for those who plagiarized was the only effective measure for preventing plagiarism in student essays (Bilic-Zulle et al. 2008). Research misconduct has been less investigated than academic dishonesty, and such studies were usually related to scientific journals. For example, the Croatian Medical Journal (CMJ) investigated the plagiarism rate in submitted manuscripts and found plagiarism in 85 papers, 14 % of which were authored by Croatian researchers (Baždarić et al. 2012). Similarly, a study that investigated how many Croatian open access journals addressed ethical issues in their instructions for authors found that only 12 % of journals addressed the topic of research misconduct (Stojanovski 2015). Despite many studies conducted in the Croatian scientific community, data on the prevalence of research misconduct are still lacking. It is not known to what extent, if at all, the Code of Ethics is being implemented at the institutional level and how aware Croatian scientists are of research integrity.

The primary aim of this study was to determine the rates at which scientists report committing or observing the most serious forms of research misconduct, such as falsification, fabrication, plagiarism, and violation of authorship rules (Steneck 2006) in the Croatian scientific community. In addition, the study sought to differentiate the rates of research misconduct with respect to scientists’ work experience and field of science. The second aim of the study was to determine the degree of development and the extent of implementation of the system for defining and regulating research misconduct in a typical scientific community in Croatia.

Subjects and Methods

In 2012/2013 an anonymous survey was carried out among academic scientists at the University of Rijeka, the second largest university in Croatia according to the number of scientists employed (Zwirn Periš and Avilov 2013). For the survey, the term ‘academic scientist’ included research fellows, teaching/research assistants, and faculty (assistant professors and higher ranks). Employees engaged on a contract for service and external and internal associates were excluded from the study. The subjects of the study represented a typical Croatian scientist.

The survey was conducted over a four-week period. First, a notice of the survey was sent by email to all 1232 eligible scientists, with a letter of request to complete the relevant questionnaire and a link to the questionnaire. Over the following 3 weeks, a weekly reminder was automatically sent by the system to those who had not yet completed the questionnaire.

Questionnaire

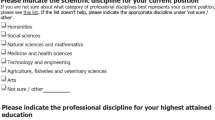

Questionnaires from two previously published systematic reviews of studies that assessed the prevalence of research misconduct, one of which was co-authored by the author of this study (V.P.), served as the basis for the development of the questionnaire for this study (Fanelli 2009; Pupovac and Fanelli 2015). The forms of research misconduct most commonly recognized in previous studies and, accordingly, included in our questionnaire were: data fabrication, data falsification, and plagiarism. Violation of authorship rules for research papers was also included because it is a questionable research practice most commonly reported to the Council of Honor of the University of Rijeka (oral communication from the President of the Council of Honor, Prof. Elvio Baccarini).

The questionnaire consisted of 12 questions. With permission from the authors (Eastwood et al. 1996), four of the questions were taken from a previous study and adjusted for the needs of the present survey. The questions were divided into three groups. The first group of questions was related to the prevalence and causes of research misconduct: reporting observed misconduct of students and fellow scientists, admitting to having committed research misconduct, and possible causes of misconduct. Questions asking scientists about observed misconduct of students (e.g., cheating on exams, plagiarizing in seminars, etc.) were used in order to soften questions that were likely to produce a social desirability bias (see below), and the answers to these questions are not discussed further in this study. The second group of questions concerned the development of the system for promoting research integrity: awareness of the documents for regulating research misconduct, the most frequently recognized authorities on research integrity, ways of learning about research integrity, and habits of reporting the observed research misconduct. The questions in the third group were used to collect data on the scientist’s age, gender, academic rank, affiliated school or department within the university, and number of authored papers in the Thomson Reuters Web of Knowledge database.

As a number of the questions were sensitive, i.e., asking the scientists to admit to their own unethical behavior, a high rate of socially desirable responses was expected. According to previous studies, respondents tend to underreport personal misconduct and over-report misconduct observed in their colleagues (Fanelli 2009; Pupovac and Fanelli 2015). A particular survey methodology was chosen to reduce the social desirability bias. Systematic reviews and meta-analyses of survey data on the prevalence of research misconduct showed that questionnaires avoiding explicit expressions (e.g. “plagiarism”, “falsification”) and using indirect questions (e.g. “Have you ever suspected….” or “Have you heard…”) yielded a higher rate of reported research misconduct (Fanelli 2009; Pupovac and Fanelli 2015). Thus, in order to reduce the effect of socially desirable responses, descriptions of unethical behavior were used instead of explicit expressions. However, when asking about having observed fellow scientists committing research misconduct, direct questions (e.g. “Have you ever witnessed…” or “Have you observed…”) were used in order not to increase the over-reporting of observed research misconduct.

The online survey software SurveyMonkey (Palo Alto, USA) was used to create and disseminate the questionnaire and to collect data.

Statistical Analysis

We determined the absolute (N) and relative frequencies (%), with 95 % confidence intervals (CI), of respondents who admitted to having engaged in research misconduct and of respondents who reported having observed research misconduct of their colleagues (individually for data fabrication, data falsification, plagiarism, and violation of authorship rules). The differences in the frequency of research misconduct with respect to the field of science and academic rank were tested using the χ2 test. The differences with respect to the field of science were tested by comparing respondents employed at the School of Medicine with those employed at other Schools or Departments of the University of Rijeka. The differences with respect to academic rank were tested by comparing the respondents holding the rank of research or teaching assistant (mostly PhD students or postdoctoral associates) with respondents holding the rank of assistant professor or higher. Statistical analysis was performed using the statistical program MedCalc 11 (MedCalc Software, Mariakerke, Belgium; licensed to the Department of Medical Informatics, University of Rijeka School of Medicine, Rijeka, Croatia). The level of statistical significance was set at p < 0.05.
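As a rough illustration of the interval estimation described above, the following Python sketch computes a relative frequency with a 95 % CI. The article does not state which interval method was used, so the Wilson score interval is an assumption here, and the function name `proportion_ci` is hypothetical. The example counts (60 of 237 respondents admitting to violation of authorship rules) are the ones reported in this study.

```python
import math


def proportion_ci(successes, n, z=1.959964):
    """Return (proportion, lower, upper) using the Wilson score interval.

    Assumption: the source only says "95 % confidence intervals";
    Wilson is one common choice, not necessarily the authors' method.
    """
    if n <= 0 or not 0 <= successes <= n:
        raise ValueError("need n > 0 and 0 <= successes <= n")
    p = successes / n
    z2 = z * z
    denom = 1 + z2 / n
    # Centre of the interval is pulled slightly toward 0.5.
    centre = (p + z2 / (2 * n)) / denom
    half = z * math.sqrt(p * (1 - p) / n + z2 / (4 * n * n)) / denom
    return p, centre - half, centre + half


# 60 of 237 respondents admitted to violation of authorship rules.
p, lo, hi = proportion_ci(60, 237)
print(f"{100 * p:.1f} % (95 % CI {100 * lo:.1f} to {100 * hi:.1f} %)")
```

Other interval methods (for example the normal approximation or an exact binomial interval) would give slightly different bounds for the same counts.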

Results

A total of 237 (19.2 %) scientists replied to the questionnaire. Of those who responded, 92 (40 %) were men and 138 (60 %) were women. A total of 114 (48 %) had been active as scientists for 5 to 20 years, 77 (33 %) for less than 5 years, and 46 (19 %) for more than 20 years. Regarding the number of authored papers in the Thomson Reuters Web of Knowledge database, 155 (65.4 %) respondents had published fewer than 5 papers, 67 (28.3 %) had published up to 30 papers, and 15 (6.3 %) had published more than 30 papers. The distribution of the respondents by academic rank showed that teaching or research assistants without a PhD degree had a lower response rate, whereas teaching or research assistants with a PhD had a higher response rate in comparison with their proportion in the population (Table 1). The scientific field of the respondents was assigned according to their affiliated institution, and as a result we were not able to distinguish the discipline of scientists from the humanities and social sciences (Table 1).

Self-Reported and Observed Research Misconduct

Based on the respondents who admitted to research misconduct, 9 (3.8 %) admitted to plagiarism, 22 (9.3 %) to data falsification, 9 (3.8 %) to data fabrication, and 60 (25.3 %) to violation of authorship rules (Table 2). The respondents reported they had engaged in research misconduct multiple times only in the case of violation of authorship rules. As many as 14 (5.9 %) admitted that they had “added to the list of authors the name of a scientist who did not meet the authorship criteria”.

As for research misconduct of fellow scientists, 72 (30.4 %) respondents reported having observed plagiarism, 69 (29.1 %) data falsification, 46 (19.4 %) data fabrication, and 132 (55.7 %) violation of authorship rules (Table 3). Out of 145 (61.2 %) scientists who observed research misconduct, only 9 (5.4 %) took steps to report the misconduct to the authorities (Table 5). The most common causes of research misconduct listed by respondents were the pressure to publish and a lack of personal ethical standards (Table 4).

The Development of the System for Promoting Research Integrity

We asked respondents whether they were familiar with the content of two types of policies for research integrity: the university’s policy, which tends to be more general and applicable to all scientific fields, and the school’s policy, which tends to be more detailed and specific to a particular scientific field. Ninety-six (40.5 %) respondents reported being familiar with the content of a policy for research integrity promulgated by the university, 67 (28.3 %) respondents reported that they were not familiar with the content of the policy, 71 (30 %) respondents reported not being aware that such a policy existed, whereas 3 (1.3 %) respondents replied that there was no policy for research integrity. Sixty-five (27.4 %) respondents reported being familiar with the content of the policy promulgated by the affiliated school, 85 (35.8 %) respondents reported they were not familiar with its content, 65 (27.4 %) respondents reported not being aware of the policy, whereas 22 (9.3 %) respondents replied that the policy did not exist.

Most respondents, 93 (39.2 %), replied that the authority in research integrity was a principal investigator, 67 (28.3 %) replied that it was the School’s professional body, whereas a professional person or body outside the school was recognized as the authority by only 6 (2.5 %) respondents (Table 6).

Ninety-four respondents (39.7 %) learned about research ethics from their mentor while working on a research project and publishing research results, 68 (28.7 %) learned about it independently, whereas as many as 37 (15.6 %) replied they had never learned about research integrity (Table 7).

Research Misconduct in Biomedical Versus Other Scientific Fields

Significant differences were found in the frequency of reporting research misconduct across scientific fields. The respondents who work in biomedical sciences (N = 80) admitted to having violated the authorship rules more often than the respondents working in other scientific fields (N = 157; Table 1) [30 (37.5 %) vs. 30 (19.1 %), χ2 = 8.53; P = 0.003]. Also, more respondents working in the biomedical field than respondents working in other scientific fields reported having observed data falsification [31 (39.0 %) vs. 38 (24.0 %), χ2 = 4.75; P = 0.029], data fabrication [30 (38.0 %) vs. 16 (10.0 %), χ2 = 23.55; P < 0.001], and violation of authorship rules [57 (71.9 %) vs. 75 (48.0 %), χ2 = 10.9; P = 0.001], which most commonly consisted of listing undeserving authors on a paper [56 (70.0 %) vs. 66 (42.0 %), χ2 = 15.48; P < 0.001]. The statistical power of the χ2 tests is above 0.85 for all comparisons except the comparison of rates of observed data falsification, for which it is 0.64.
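The comparison of admitted authorship violations can be checked against the reported counts. The sketch below computes a χ2 statistic for a 2 × 2 table with Yates' continuity correction. Applying the correction is an assumption, since the article does not say whether it was used, although it gives a statistic close to the reported 8.53. The function name `chi2_2x2_yates` is hypothetical, and the P value uses the closed form for one degree of freedom.

```python
import math


def chi2_2x2_yates(a, b, c, d):
    """Chi-square test (1 df) for the table [[a, b], [c, d]] with Yates' correction.

    Returns (chi2, p_value). Using the continuity correction is an assumption;
    the source does not state whether it was applied.
    """
    n = a + b + c + d
    row1, row2 = a + b, c + d
    col1, col2 = a + c, b + d
    if min(row1, row2, col1, col2) == 0:
        raise ValueError("every row and column total must be positive")
    # Continuity correction: shrink |ad - bc| by n/2, never below zero.
    diff = max(abs(a * d - b * c) - n / 2, 0.0)
    chi2 = n * diff ** 2 / (row1 * row2 * col1 * col2)
    # Survival function of chi-square with 1 df: P(X > x) = erfc(sqrt(x / 2)).
    p_value = math.erfc(math.sqrt(chi2 / 2))
    return chi2, p_value


# Admitted violation of authorship rules:
# 30 of 80 biomedical respondents versus 30 of 157 respondents from other fields.
chi2, p_value = chi2_2x2_yates(30, 80 - 30, 30, 157 - 30)
print(f"chi2 = {chi2:.2f}, P = {p_value:.4f}")
```

The same function can be applied to the other comparisons by passing the counts of respondents who did and did not report the behavior in each group.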

Research Misconduct of Scientists Holding Higher Versus Lower Academic Rank

The study found significant differences in the reported rates of research misconduct with respect to the academic rank. Violation of authorship rules was admitted by 37 of 103 (36.0 %) respondents holding a higher academic rank (assistant professor or higher) and 23 of 134 (17 %) respondents holding a lower academic rank (teaching or research assistant) (Table 1; χ2 = 9.86, P = 0.002, power of the test = 0.91). The reported rates of research misconduct were not significantly different with respect to academic rank for reporting observed research misconduct.

The reported rates of research misconduct did not differ significantly with respect to gender, either for admitting to research misconduct or for observing research misconduct of fellow scientists (data not presented).

Discussion

This is the first study to quantify the frequency of research misconduct in the Croatian scientific community, based on scientists who either admitted to having committed research misconduct themselves or reported having observed other scientists committing it. Our results have shown that the system for promoting research integrity and managing research misconduct in Croatia is poor. One in seven Croatian scientists admitted to the most severe forms of research misconduct (falsification, fabrication, and plagiarism), and almost half observed such behavior in their colleagues. After the violation of authorship rules (a form of QRP) was included in the analysis, about one-third of surveyed Croatian scientists admitted to research misconduct and about two-thirds reported having observed such behavior in their colleagues. The prevalence of research misconduct appears to be substantially higher in the Croatian scientific community than in the international scientific community. For example, 3.8 % of Croatian scientists surveyed in our study admitted to having committed plagiarism at least once, whereas a meta-analysis of international studies estimated that plagiarism was admitted, on average, by about 1.7 % (95 % CI 1.2–2.4 %) of international scientists. As for falsification and fabrication, the differences were even larger: 9.3 % of Croatian scientists versus 1.2 % (95 % CI 0.5–2.8 %) of international scientists (Pupovac and Fanelli 2015). One-third of Croatian scientists and one-third of international scientists reported having observed plagiarism, and 29 % of Croatian scientists and 14 % of international scientists (95 % CI 10–20 %) reported having observed falsification and fabrication of research by their colleagues (Pupovac and Fanelli 2015). The most prevalent form of researchers’ misbehavior in Croatia is the violation of authorship rules: 60 (25 %) respondents admitted to this type of misconduct, while 132 (56 %) reported having observed it among colleagues. A meta-analytic estimate of the proportion of researchers who committed, or observed a colleague committing, a violation of authorship rules is substantially lower, 29 % (95 % CI 24–35 %), in international scientific communities (Marušić et al. 2011).

Our survey results were somewhat unexpected and contrary to the findings of a previous meta-analysis, which showed that the rates at which scientists report committing or observing research misconduct in international scientific publications had been declining or stagnating over the last three decades (Pupovac and Fanelli 2015). However, the meta-analysis included studies that were conducted in highly developed countries, such as the USA and Western European countries, which could explain the discrepancy in these results.

The differences in the prevalence of research misconduct at the national or institutional levels are often explained by the comprehensiveness of the system for handling misconduct cases and for the promotion of RCR. For example, the prevalence of research misconduct is lower in work environments with clearly defined and implemented rules and procedures for preventing and sanctioning research misconduct (Crain et al. 2013; Honig and Bedi 2012) or in work environments where scientists perceive the organizational and distributive procedures as just and fair (e.g., procedures for distribution of resources, career advancement, work of professional bodies, manuscript review process, and similar) (Martinson et al. 2006).

The results of our study indicate that the efficacy of the system for managing research misconduct in the Croatian scientific community is poor. Less than 5 % of surveyed scientists reported having taken steps when they observed research misconduct of their colleagues. Croatian scientists are less likely than their peers in the USA to undertake anything that would lead to sanctioning the observed misconduct. In a survey carried out in the USA, a quarter of surveyed scientists reported the observed research misconduct to the authorities (Titus et al. 2008). Ranstam et al. (2000) found that 22 % of the members of the International Society of Clinical Biostatistics (ISCB) reported the observed research misconduct to the authorities, whereas Kattenbraker (2007) found that 36 % of the faculty at medical schools in the USA reported research misconduct to the responsible person. There is a cultural influence on the readiness to report research misconduct that should also be taken into account. Students from former communist countries less often report the observed misconduct than students from the USA (Magnus et al. 2002). The most common reasons reported by Croatian scientists for not taking any action against research misconduct were distrust in the efficacy of professional bodies and not knowing to whom to report such behavior.

The problem of distrusting the work of ethical committees largely depends on the documents standardizing and regulating research misconduct. Such documents define the basic values and expectations that the scientific community places upon a scientist and describe the work of ethical committees regarding the detection and sanctioning of research misconduct. At the University of Rijeka, as at any other Croatian university, there is no document dedicated exclusively to research integrity, describing the values that should be fostered by a scientist and clarifying the forms of research misconduct and what constitutes a questionable research practice. However, almost 40 % of the scientists at the University of Rijeka reported that they were familiar with the content of such a document promulgated by the university and 27 % of them believed they were familiar with the content of such a document promulgated by the school. A possible explanation is that the respondents mistook the University or School Code of Ethics, in which individual forms of research misconduct and questionable research practice are sporadically mentioned, for the document regulating research integrity. The answer “such a policy does not exist”, chosen by 1 % of respondents for a document regulating research misconduct at the university level and 9 % of respondents for a document regulating research misconduct at the school level, is far more revealing of the scientists’ lack of familiarity with the documents regulating research misconduct. Documents specifically defining and regulating research misconduct, which would correspond with the National Code of Ethics in Science (written by CESHE), would increase the trust of scientists in the work of ethical committees. As a result, the proportion of scientists choosing to report the observed research misconduct might increase, eventually leading to a decrease in the rate of misconduct.
However, the CESHE has an unusual status, which is why its Code of Ethics is mostly ineffective. Although the CESHE was founded by the Croatian Parliament, it still does not have any legitimate authority like its equivalent in the USA, the Office of Research Integrity (ORI). The CESHE function is only advisory, similar to the UK Research Integrity Office (UKRIO). However, unlike the Code of Practice for Research published by the UKRIO, the code of conduct for research by CESHE has not been officially adopted and implemented by scientific institutions and organizations in Croatia.

In addition to institutional and national documents for the regulation of research misconduct, various European and international scientific and funding organizations (e.g. European Science Foundation, European Network of Research Integrity Offices, Science Europe, All European Academies etc.) have developed and disseminated guidelines, policies and recommendations on research integrity (ESF and ALLEA 2011; Science Europe Working Group on Research Integrity 2015; World Conference on Research Integrity 2010, 2013). Substantial efforts should be invested to increase the availability of such documents and to raise awareness of them among Croatian scientists. The lack of knowledge about research integrity is reflected in the result that 16 % of scientists never received any education on research integrity. A majority of respondents cited having learned about research integrity from their mentor or through their own intellectual curiosity. The survey results indicate that much is expected from mentors; over one-third of the scientists queried would ask the principal investigator for ethics-related advice in conducting and publishing their research results (PhD students would ask their mentor), and about 15 % of scientists thought that poor mentoring was the cause of research misconduct.

Previous research showed that the scientists’ awareness of, and knowledge about forms of research misconduct were associated with a lower rate of misconduct (Adeleye and Adebamowo 2012; John et al. 2012); the education on research misconduct is therefore considered necessary. However, there is still no consensus about who should provide the education, in what manner, and how often (Godecharle et al. 2013). According to previous research, formal education about research integrity has less influence than expected on the reduction of prevalence of research misconduct (Antes et al. 2009) whereas advice on research ethics from a mentor is more effective than formal education (Anderson et al. 2007). Experts emphasize that too little time is dedicated to education on research integrity during the process of scientific research and that this discussion should be transferred from the classrooms to the laboratories and led by highly motivated mentors, who would receive additional education and encourage dissemination of information, and therefore play the main role as educators (Kalichman 2014).

Although there is no consensus on the most effective form of education on research integrity, the Croatian scientific community could implement several measures to promote research integrity. Leaders of scientific institutions and funding organizations should strongly emphasize the scientific values and standards set out by the international scientific community or in their own documents that promote research integrity. In this way, they could motivate scientists and students to think and act in accordance with such standards. Furthermore, a course that discusses cases of research misconduct and their harmful effects should be developed for PhD students and postdoctoral associates, with the aim of improving their moral reasoning skills and their understanding of the social and political context of research. Another course that explains the process of writing and the correct way of using citations would help students better understand the definition of plagiarism. Professional and student associations should contribute to the creation of a cooperative working atmosphere by organizing informal workshops, presentations and panel discussions about ethical issues specific to their scientific field.

In our study, we compared the prevalence of research misconduct among scientists working in biomedical fields with that of scientists from other fields. The higher rate of reporting the observed data falsification and fabrication among medical scientists can be explained in several ways. First, the fact that empirical research is not performed in some humanities and social sciences precludes any data manipulation in those fields. Second, it is possible that frequent discussions about research misconduct in medical circles are associated with medical scientists’ increased level of awareness and knowledge about research misconduct, which is why medical scientists more easily recognize and, thereby, report research misconduct of their colleagues. For example, the first Croatian scientific journal to have a Research Integrity Editor was CMJ (Petrovecki and Scheetz 2001), and the first case of plagiarism in Croatia was revealed in the medical field (Chalmers 2006).

Our finding that medical scientists violate the rules of authorship more often than other scientists can be interpreted in the context of the “publish or perish” pressure, which was the most commonly recognized cause of research misconduct (see Table 4). The criteria for academic career advancement differ across scientific disciplines. In the medical sciences, a larger number of researchers are allowed on a team, and, consequently, there is a larger number of authors on a paper (up to 10 authors). On the other hand, in the humanities, social, and technical sciences, research teams are smaller and so is the number of authors on a paper (up to 3 to claim full authorship) (Nacionalno vijeće za znanost 2013). There is a credible possibility that medical scientists, pressured to advance academically, use the liberty of having larger research teams to mutually add each other’s names to the list of authors for research in which they did not participate, all with the aim of making their academic advancement easier.

Our survey showed that the respondents holding a higher academic rank were more likely to admit to research misconduct, which is in line with some previous findings (Bedeian et al. 2010; Martinson et al. 2006; Swazey et al. 1993), but contrary to others (Fanelli 2009; Pupovac and Fanelli 2015). In our study, scientists holding higher academic ranks more often admitted to violating the rules of authorship, which is a form of questionable research practice. A possible explanation could be that scientists with more research experience are more aware of how particular forms of misconduct are regulated and what forms of misconduct are the most difficult to detect, so they tend to commit those forms of research misconduct. Violation of authorship rules is difficult to recognize and, consequently, to regulate, because it is based on the internal agreement of the scientists involved (Kleinert and Wager 2010; Marušić et al. 2011). In addition, the violation of authorship rules appears to be quite common and passed over in silence, and as such its consequences are ignored. The longer scientists work in such an environment, as is the case with scientists holding higher academic ranks, the more numerous the occasions they have for such behavior.

The main limitation of our survey is the low response rate of 19.2 %. The fact that more than 80 % of the contacted scientists did not reply to the survey suggests that a major problem behind research misconduct is the low level of awareness of its importance. It is also possible that they do not realize the negative impact that research misconduct has on their scientific community. An alternative explanation for the low response rate could be the scientists’ unwillingness to answer questions about their own misconduct. This is not surprising and has been observed in previous similar studies. For example, the lowest response rate among similar studies included in a systematic review was 10 %, and more than half of the studies had a response rate of 33 % or lower (Pupovac and Fanelli 2015).
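The response rate and the self-reported percentages can be rechecked from the counts given in the abstract (1232 questionnaires distributed, 237 returned). The following is a minimal sketch in Python, not part of the original analysis. The names `percent` and `wilson_interval` are illustrative, and the Wilson score interval is added here only as one common way to convey the uncertainty of a proportion estimated from 237 respondents; the study itself does not report such intervals.

```python
from math import sqrt

# Counts taken from the abstract of this article.
DISTRIBUTED = 1232   # questionnaires sent out
RETURNED = 237       # questionnaires returned
SELF_REPORTED = {    # respondents admitting to each form of misconduct
    "plagiarism": 9,
    "falsification": 22,
    "fabrication": 9,
    "authorship": 60,
}


def percent(count, total):
    """Return count/total as a percentage rounded to one decimal place."""
    return round(100 * count / total, 1)


def wilson_interval(count, total, z=1.96):
    """Wilson score interval for a proportion (assumption: z=1.96, about 95 %)."""
    p = count / total
    denom = 1 + z ** 2 / total
    center = (p + z ** 2 / (2 * total)) / denom
    half = z * sqrt(p * (1 - p) / total + z ** 2 / (4 * total ** 2)) / denom
    return center - half, center + half


response_rate = percent(RETURNED, DISTRIBUTED)
self_reported_pct = {k: percent(v, RETURNED) for k, v in SELF_REPORTED.items()}

if __name__ == "__main__":
    print("response rate (%):", response_rate)
    for form, pct in self_reported_pct.items():
        low, high = wilson_interval(SELF_REPORTED[form], RETURNED)
        print(f"{form}: {pct} % (interval {100 * low:.1f} to {100 * high:.1f} %)")
```

With these counts the response rate rounds to 19.2 %, and the self-reported percentages round to the values stated in the abstract.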

The low response rate of our study raises the question of whether our sample is representative of the scientific community in Croatia. Our study sample is representative of the general population in terms of academic rank, although the proportion of PhD students in the survey is lower than the proportion of PhD students in the university population, and the proportion of postdocs is higher in the survey than in the university population (see Table 1). This sample profile can be explained by a greater interest among postdoctoral associates, as it is likely that they have already encountered misconduct-related issues in their doctoral and other research work, whereas PhD students have likely not had such experience.

Conclusion

The results of our survey show that greater efforts are needed to reduce the prevalence of research misconduct in the Croatian scientific community. The rates at which scientists report committing or observing all forms of research misbehavior, especially violation of authorship rules, are higher among Croatian scientists than among scientists in highly developed countries. Furthermore, Croatian scientists have a low level of trust in the system for managing research misconduct. This is not surprising given the lack of systematic education on ethical issues in science and the scarcity or poor visibility of the documents defining and regulating research misconduct. Documents that clearly define the forms of research misconduct and the ways to recognize and process them need to be designed. The next step should be to increase awareness of such documents and of research misconduct in general, primarily by introducing mandatory education in research ethics. Given the results of our survey, which indicate the importance of the mentor in education on research integrity, it would be worthwhile to consider providing additional education in research integrity for mentors.

References

Adeleye, O. A., & Adebamowo, C. A. (2012). Factors associated with research wrongdoing in Nigeria. Journal of Empirical Research on Human Research Ethics, 7(5), 15–24. doi:10.1525/jer.2012.7.5.15.

Ana, J., Koehlmoos, T., Smith, R., & Yan, L. L. (2013). Research misconduct in low- and middle-income countries. PLoS Medicine, 10(3), 1–6. doi:10.1371/journal.pmed.1001315.

Anderson, M. S., Horn, A. S., Risbey, K. R., Ronning, E. A., De Vries, R., & Martinson, B. C. (2007). What do mentoring and training in the responsible conduct of research have to do with scientists’ misbehavior? Findings from a national survey of NIH-funded scientists. Academic Medicine, 82(9), 853–860. doi:10.1097/ACM.0b013e31812f764c.

Antes, A., Murphy, S., Waples, E., Mumford, M., Brown, R., Connelley, S., & Devenport, L. (2009). A meta-analysis of ethics instruction effectiveness in the sciences. Ethics and Behavior, 19(5), 379–402. doi:10.1016/j.drugalcdep.2008.02.002.

Baždarić, K., Bilić-Zulle, L., Brumini, G., & Petrovečki, M. (2012). Prevalence of plagiarism in recent submissions to the Croatian Medical Journal. Science and Engineering Ethics, 18(2), 223–239. doi:10.1007/s11948-011-9347-2.

Bedeian, A., Taylor, S., & Miller, A. (2010). Management science on the credibility bubble: Cardinal sins and various misdemeanors. Academy of Management Learning and Education, 9(4), 715–725. doi:10.5465/AMLE.2010.56659889.

Bilic-Zulle, L., Azman, J., Frkovic, V., & Petrovecki, M. (2008). Is there an effective approach to deterring students from plagiarizing? Science and Engineering Ethics, 14(1), 139–147. doi:10.1007/s11948-007-9037-2.

Bilić-Zulle, L., Frković, V., Turk, T., Azman, J., & Petrovecki, M. (2005). Prevalence of plagiarism among medical students. Croatian Medical Journal, 46(1), 126–131.

Chalmers, I. (2006). Role of systematic reviews in detecting plagiarism: case of Asim Kurjak. BMJ, 333(September), 594–596.

Crain, L. A., Martinson, B. C., & Thrush, C. R. (2013). Relationships between the survey of organizational research climate (SORC) and self-reported research practices. Science and Engineering Ethics, 19(3), 835–850. doi:10.1007/s11948-012-9409-0.

Dhingra, D., & Mishra, D. (2014). Publication misconduct among medical professionals in India. Indian Journal of Medical Ethics, 23(2), 293–294. doi:10.4103/0970-9290.100447.

Eastwood, S., Derish, P., Leash, E., & Ordway, S. (1996). Ethical issues in biomedical research: perceptions and practices of postdoctoral research fellows responding to a survey. Science and Engineering Ethics, 2(1), 89–114. doi:10.1007/bf02639320.

ESF, & ALLEA. (2011). The European code of conduct for research integrity, 1–20. doi:10.1037/e648332011-002.

Fanelli, D. (2009). How many scientists fabricate and falsify research? A systematic review and meta-analysis of survey data. PLoS One, 4(5), e5738. doi:10.1371/journal.pone.0005738.

Godecharle, S., Nemery, B., & Dierickx, K. (2013). Integrity training: Conflicting practices. Science, 340(June), 1403–1404.

Honig, B., & Bedi, A. (2012). The fox in the hen house: A critical examination of plagiarism among members of the academy of management. Academy of Management Learning and Education, 11(1), 101–123. doi:10.5465/amle.2010.0084.

Hrabak, M., Vujaklija, A., Vodopivec, I., Hren, D., Marušić, M., & Marušić, A. (2004). Academic misconduct among medical students in a post-communist country. Medical Education, 38(3), 276–285.

John, L. K., Loewenstein, G., & Prelec, D. (2012). Measuring the prevalence of questionable research practices with incentives for truth telling. Psychological Science, 23(5), 524–532. doi:10.1177/0956797611430953.

Kalichman, M. (2014). A modest proposal to move RCR education out of the classroom and into research. Journal of Microbiology and Biology Education, 15(2), 93–95.

Kattenbraker, M. (2007). Health education research and publication: Ethical considerations and the response of health educators. Carbondale: Graduate School Southern Illinois University Carbondale.

Kleinert, S., & Wager, E. (2010). Responsible research publication: international standards for authors A position statement. 2nd world conference on research integrity, Singapore, July 22–24, 2010. http://publicationethics.org/files/Internationalstandards_authors_forwebsite_11_Nov_2011_0.pdf. Accessed 14 Dec 2015.

Kukolja Taradi, S., Taradi, M., & Dogas, Z. (2012). Croatian medical students see academic dishonesty as an acceptable behaviour: a cross-sectional multicampus study. Journal of Medical Ethics, 38(6), 376–379.

Kukolja Taradi, S., Taradi, M., & Knezevic, T. (2010). Students come to medical schools prepared to cheat: a multi-campus investigation. Journal of Medical Ethics, 36(11), 666–670.

Magnus, J. R., Polterovich, V. M., Danilov, D. L., & Savvateev, A. V. (2002). Tolerance of Cheating: An analysis across countries. The Journal of Economic Education, 33(2), 125–135. doi:10.1080/00220480209596462.

Martinson, B., Anderson, M., Crain, A., & De Vries, R. (2006). Scientists’ perceptions of organizational justice and self-reported misbehaviors. Journal of Empirical Research on Human Research Ethics, 1(1), 51–66. doi:10.1525/jer.2006.1.1.51.

Marušić, A., Bošnjak, L., & Jerončić, A. (2011). A systematic review of research on the meaning, ethics and practices of authorship across scholarly disciplines. PLoS One, 6(9), e23477. doi:10.1371/journal.pone.0023477.

Nacionalno vijeće za znanost (National Council for Science). (2013). Pravilnik o uvjetima za izbor u znanstvena zvanja (Ordinance on Election into Scientific Titles). Narodne novine.

Okonta, P. I., & Rossouw, T. (2014). Misconduct in research: a descriptive survey of attitudes, perceptions and associated factors in a developing country. BMC Medical Ethics, 15(1), 25. doi:10.1186/1472-6939-15-25.

Petrovecki, M., & Scheetz, M. (2001). Croatian Medical Journal introduces culture, control, and the study of research integrity. Croatian Medical Journal, 42(1), 7–13.

Puljak, L. (2007). Croatia founded a national body for ethics in science. Science and Engineering Ethics, 13(2), 191–193. http://apps.isiknowledge.com/full_record.do?product=UA&search_mode=GeneralSearch&qid=5&SID=V2s1Lh3tIewOnt2cQDB&page=1&doc=6. Accessed 18 Dec 2015.

Pupovac, V., Bilic-Zulle, L., Mavrinac, M., & Petrovecki, M. (2010). Attitudes toward plagiarism among pharmacy and medical biochemistry students—Cross-sectional survey study. Biochemia Medica, 20(3), 279–281.

Pupovac, V., Bilic-Zulle, L., & Petrovecki, M. (2008). On academic plagiarism in Europe: An analytical approach based on four studies. DIGITHUM: The Humanities in the Digital Era, (10), 13–18. http://www.uoc.edu/digithum/10/dt/eng/pupovac_bilic-zulle_petrovecki.pdf.

Pupovac, V., & Fanelli, D. (2015). Scientists admitting to plagiarism: A meta-analysis of surveys. Science and Engineering Ethics, 21(5), 1331–1352. doi:10.1007/s11948-014-9600-6.

Ranstam, J., Buyse, M., George, S. L., Evans, S., Geller, N. L., Scherrer, B., et al. (2000). Fraud in medical research: an international survey of biostatisticians. ISCB Subcommittee on Fraud. Controlled Clinical Trials, 21(2000), 415–427. doi:10.1016/S0197-2456(00)00069-6.

Resnik, D. B., & Master, Z. (2013). Policies and initiatives aimed at addressing research misconduct in high-income countries. PLoS Medicine, 10(3), 1–4. doi:10.1371/journal.pmed.1001406.

Science Europe Working Group on Research Integrity. (2015). Seven reasons to care about integrity in research.

Steneck, N. H. (2006). Fostering integrity in research: definitions, current knowledge, and future directions. Science and Engineering Ethics, 12(1), 53–74. doi:10.1007/pl00022268.

Stojanovski, J. (2015). Do Croatian open access journals support ethical research? Content analysis of instructions to authors. Biochemia Medica, 25(1), 12–21.

Sullivan, S., Aalborg, A., Basagoitia, A., Cortes, J., Lanza, O., & Schwind, J. (2014). Exploring perceptions and experiences of Bolivian health researchers with research ethics. Journal of Empirical Research on Human Research Ethics, 10(2), 185–195.

Swazey, J. P., Anderson, M. S., & Seashore, L. K. (1993). Ethical problems in academic research. American Scientist, 81, 542–543.

Titus, S. L., Wells, J. A., & Rhoades, L. J. (2008). Repairing research integrity. Nature, 453(June), 980–982. doi:10.1038/453980a.

World Conference on Research Integrity. (2010). Singapore statement on research integrity. http://www.singaporestatement.org/statement.html. Accessed 15 Jan 2016.

World Conference on Research Integrity. (2013). Montreal statement on research integrity in cross-boundary research collaborations. http://www.researchintegrity.org/Statements/MontrealStatementEnglish.pdf. Accessed 15 Jan 2016.

Zwirn Periš, Ž., & Avilov, M. (2013). Nastavnici i suradnici u nastavi na visokim učilištima u ak. g. 2012./2013. - priopćenje [Teaching staff at institutions of higher education 2012/2013 academic year - first release]. Croatian Bureau of Statistics. http://www.dzs.hr/Hrv_Eng/publication/2013/08-01-01_01_2013.htm. Accessed 25 Nov 2015.

Acknowledgments

We thank Prof. Elvio Baccarini, president of the Council of Honor of the University of Rijeka, for his constructive advice on designing the survey questionnaire, and Dr. Aleksandra Mišak and Elizabeth Hughes Komljen for excellent language consultations.

Funding

The study is part of two research projects: “Acceptance and awareness of the principles of research ethics of University of Rijeka scientists” (Project Number: 3%-12-33) funded by the University of Rijeka and “Attitude toward scientific plagiarism, its prevalence and characteristics” (Project Number: 13.06.1.2.29) funded by the University of Rijeka Foundation.

Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Cite this article

Pupovac, V., Prijić-Samaržija, S. & Petrovečki, M. Research Misconduct in the Croatian Scientific Community: A Survey Assessing the Forms and Characteristics of Research Misconduct. Sci Eng Ethics 23, 165–181 (2017). https://doi.org/10.1007/s11948-016-9767-0
