Abstract

This paper investigates haze removal for underwater images. The exponential attenuation of light as it propagates through water causes low contrast, color distortion, and blurred edges in underwater images, which limits the application of vision-based underwater technology. To overcome these problems, an adaptive color correction method is proposed for underwater single image haze removal. First, a transmission map estimated from image blurriness is adopted in the image formation model to remove the haze of underwater images. Second, the alternating direction method of multipliers and histogram displacement in the Lab color space are used to improve brightness uniformity and to correct the color distortion of the restored underwater images. Finally, both qualitative and quantitative experimental results show that the proposed method produces better restoration results in different underwater scenes than other state-of-the-art underwater image restoration methods.


1 Introduction

Haze is a key factor restricting the development and application of underwater vision-based technology. Unlike terrestrial images, underwater images typically have blue-green tones and severely blurred edges. Haze arises in underwater photography because light is scattered and attenuated as it travels through water, which results in low contrast and blurry object edges [1, 2]. Meanwhile, light of different wavelengths is attenuated at different rates, which causes color distortion in the image captured by the camera. Moreover, the uneven illumination caused by artificial light still needs to be resolved. Hence, haze removal is highly desirable in applications of underwater vision technology.

Single image haze removal is a challenging task for both terrestrial and underwater scenes because it is highly ill-posed. Many effective image dehazing methods have been presented for terrestrial images, including enhancement-based methods [3], image formation model (IFM)-based methods [4], and learning-based methods [5]. Although these methods dehaze degraded terrestrial images well, they do not perform well in underwater scenes because of the complicated underwater environment and lighting conditions, where the image is degraded by wavelength-dependent attenuation and scattering [6]. By analyzing the characteristics of underwater images, various methods have been applied to underwater image dehazing. Some methods are derived from the dark channel prior (DCP). They can effectively remove the haze of underwater images, but they do not improve the color distortion [7, 8]. In addition, since the estimated transmission map (TM) has block-like artifacts, it needs to be refined by guided filtering [9] or soft matting [10], which leads to high computational complexity. The papers [11, 12] propose a revised underwater image formation model that can produce good dehazing and color correction, but its high complexity limits its applicability. Many researchers tend to use end-to-end machine learning methods to dehaze underwater images [14, 20–22]. Li et al. [13] propose an end-to-end deep underwater image enhancement network (DUIENet) as a baseline and call for the development of deep learning-based underwater image enhancement. However, it is difficult to train learning-based methods for different underwater scenes, because calibrated underwater datasets are expensive and logistically difficult to acquire.

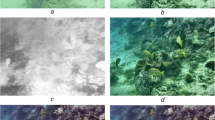

To obtain realistic haze-free underwater images, this paper proposes an adaptive color correction method for underwater single image haze removal. The TM estimated from image blurriness is adopted in the IFM to remove the haze of underwater images, because a larger camera-to-object distance causes more object blurriness in underwater images. Next, the dehazed images are converted to the Lab color space, where the alternating direction method of multipliers (ADMM) is used to optimize the \(\varvec{L}\) component to achieve uniform brightness correction. Histogram displacement is applied to the a and b components to correct color distortion. The results of underwater image dehazing using our method are shown in Fig. 1. The dehazed images obtained by the proposed method have better qualitative and quantitative metrics than those of other state-of-the-art underwater image restoration methods. To summarize, the main contributions of this paper are as follows:

- Image blurriness is used to estimate the TM, which yields a refined TM without guided filtering or soft matting.

- To obtain more realistic and vivid haze-free underwater images, an adaptive color correction method using ADMM and histogram displacement in the Lab color space is proposed to improve brightness uniformity and to eliminate color distortion.

- Compared with other state-of-the-art underwater image restoration methods, the proposed method achieves a natural color correction and a superior or equivalent visibility improvement.

The rest of this paper is organized as follows. Section 2 presents the proposed underwater image restoration method in this paper. The comparative experiments are given and analyzed both qualitatively and quantitatively in Sect. 3. Finally, Sect. 4 concludes this paper.

2 Proposed method

In this section, we propose an adaptive color correction method based on both the IFM and the Lab color space for underwater single image haze removal. The refined TM is estimated, and the color distortion is corrected by the proposed adaptive color correction method. The flowchart of the proposed underwater image dehazing method is shown in Fig. 2. The background light (A) is estimated as in the UDCP method [7], which first picks the top 0.1% brightest pixels in the dark channel of the blue and green channels and then selects the highest intensity of these pixels in the original image as the background light.
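The background light selection described above can be sketched as follows. This is a minimal illustration and not the authors' implementation: the function name `estimate_background_light`, the patch size of 15, the use of the mean of the three channels as "intensity", and the assumption of an RGB image scaled to [0, 1] are all choices made here for the example.

```python
import numpy as np
from numpy.lib.stride_tricks import sliding_window_view


def estimate_background_light(img, patch=15, top_fraction=0.001):
    """Hypothetical UDCP-style background light estimate.

    img: float array of shape (H, W, 3) in RGB order, values in [0, 1].
    patch: odd window size of the local minimum filter (assumed value).
    top_fraction: 0.001 corresponds to the top 0.1% of pixels.
    """
    h, w, _ = img.shape
    # Dark channel restricted to the green and blue channels.
    gb_min = img[:, :, 1:3].min(axis=2)
    r = patch // 2
    padded = np.pad(gb_min, r, mode="edge")
    dark = sliding_window_view(padded, (patch, patch)).min(axis=(2, 3))

    # Indices of the brightest pixels in the dark channel (at least one).
    n_top = max(1, int(round(top_fraction * h * w)))
    candidates = np.argsort(dark.ravel())[-n_top:]

    # Among the candidates, take the pixel with the highest intensity
    # in the original image (intensity = channel mean, an assumption).
    pixels = img.reshape(-1, 3)
    intensity = pixels.mean(axis=1)
    best = candidates[np.argmax(intensity[candidates])]
    return pixels[best]
```

The returned vector holds one background light value per color channel, which matches the per-channel notation \(A^c\) used in Sect. 2.1.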

2.1 TM estimation based on image blurriness

Since a larger camera-to-object distance causes more object blurriness in underwater images, the TM can be estimated from image blurriness. Moreover, the image can be regarded as the product of a clear image and a blurred image [16]. Combining this with the IFM, the underwater optical imaging model can be written as:

where K(x, c) is the coefficient matrix and \(K(x,c)=[I(x,c)-A^cB(x,c)]/B(x,c)\). Here x is the pixel coordinate; c is one of the red, green and blue channels, \(c\in \{r,g,b\}\); and I, t and B represent the raw image, the transmission map and the blurriness image, respectively. Equation (1) shows that B(x, c) is the key parameter for obtaining t(x, c). The blurriness image B(x, c) can be estimated by the Gaussian surround function, which is defined as:

where \(\lambda \) is the normalization scale chosen so that \(\iint F(x,\sigma )\, dx\,dy=1\) and \(\sigma \) is the Gaussian surround scale. The contrast and color distortion of the hazy images depend on \(\sigma \). A small value of \(\sigma \) better enhances the details of dark areas, whereas a large value of \(\sigma \) better preserves chroma consistency. The multi-scale Gaussian surround function is therefore used to obtain B(x, c), which can be written as:

where “\(*\)” represents the convolution operation, \(N_k\) is the number of scales, \({\omega _k}\) represents the weight coefficient of the scale, and \(\sum \nolimits _{k=1}^{N_k}{\omega _k}=1\). The general parameter settings are as follows: \(N_k=3\), \({\omega _1} = 0.5\), \({\omega _2} = 0.4\), \({\omega _3} = 0.1\), \({\sigma _1} = 15\), \({\sigma _2} = 80\), and \({\sigma _3} = 200\).
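The multi-scale blurriness estimate described above can be sketched in NumPy as a weighted sum of Gaussian-blurred copies of the input. The sketch assumes the center/surround form \(F(x,\sigma )=\lambda \exp (-(x^2+y^2)/\sigma ^2)\) from the Retinex literature [16], a kernel truncated at \(3\sigma \), and edge-replicating padding; the function names are hypothetical and none of these choices is confirmed by the text.

```python
import numpy as np


def gaussian_kernel_1d(sigma):
    """1-D slice of the assumed surround function, normalized to sum to 1."""
    radius = int(3 * sigma)  # truncation radius (assumption)
    x = np.arange(-radius, radius + 1, dtype=np.float64)
    k = np.exp(-(x ** 2) / (sigma ** 2))
    return k / k.sum()  # plays the role of the normalization scale lambda


def blur_channel(channel, sigma):
    """Separable Gaussian convolution of one 2-D channel."""
    k = gaussian_kernel_1d(sigma)
    r = len(k) // 2
    padded = np.pad(channel, ((r, r), (r, r)), mode="edge")
    # Filter along rows, then along columns; "valid" restores the input size.
    rows = np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 1, padded)
    return np.apply_along_axis(lambda v: np.convolve(v, k, mode="valid"), 0, rows)


def multiscale_blurriness(img, sigmas=(15, 80, 200), weights=(0.5, 0.4, 0.1)):
    """B(x, c) as the weighted sum over scales of F_k * I, per channel."""
    img = np.asarray(img, dtype=np.float64)
    out = np.zeros_like(img)
    for sigma, w in zip(sigmas, weights):
        for c in range(img.shape[2]):
            out[:, :, c] += w * blur_channel(img[:, :, c], sigma)
    return out
```

The default `sigmas` and `weights` are the general parameter settings listed above. For full-size images an FFT-based or library Gaussian filter would be faster; the direct convolution here is only meant to make the structure of the computation explicit.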

The t(x, c) can be estimated from I(x, c) when the background light \(A^c\) and B(x, c) are known. The t(x, c) can be derived as:

According to the Jaffe-McGlamery imaging model [4], the dehazed underwater image J(x, c) can be written as:

where \(t_0=0.01\) represents the lower bound of the transmission map t(x, c), which prevents \(J(x,c) \rightarrow \infty \) when \(t(x,c) \rightarrow 0\).
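The role of the lower bound \(t_0\) can be illustrated with a short sketch. It assumes the usual inversion of the image formation model, \(J(x,c) = (I(x,c) - A^c)/\max (t(x,c), t_0) + A^c\), which is the standard form in the dehazing literature and is taken here as an assumption about Eq. (5); the function name `recover_scene` is hypothetical.

```python
import numpy as np


def recover_scene(I, A, t, t0=0.01):
    """Invert the image formation model with a clamped transmission map.

    I: hazy image, shape (H, W, 3).
    A: background light, one value per channel, shape (3,).
    t: transmission map, shape (H, W, 3).
    t0: lower bound on t that keeps the division finite.
    """
    A = np.asarray(A, dtype=np.float64).reshape(1, 1, -1)
    t_clamped = np.maximum(t, t0)
    return (I - A) / t_clamped + A
```

With \(t_0=0.01\), a pixel whose estimated transmission is zero is amplified by a factor of at most 100 instead of diverging.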

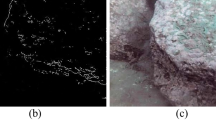

Figure 3 shows the TM estimated by the proposed method, and the result shows that the proposed method can obtain a refined TM without guided filtering or soft matting. Section 3 presents further examples and comparison methods, and quantitatively analyzes the restoration performance of these methods on underwater images.

2.2 Adaptive color correction using ADMM and histogram displacement

The dehazed underwater image J(x, c) can be obtained from Eqs. (4) and (5). However, it still suffers from low brightness and color distortion. Here, the ADMM and histogram displacement methods are adopted to improve brightness uniformity and to correct color distortion in the Lab color space for the dehazed underwater image.

Inspired by [17], the \(\varvec{L}\) component in the Lab color space can be optimized by constructing an optimization problem that improves brightness uniformity while maintaining the overall structure and smoothing the textural details. The \(\varvec{L}\) component is used as the initial illumination map (\(\varvec{\hat{S}}\)). The optimization function can be written as:

where \({\left\| \cdot \right\| _F}\) is the Frobenius norm, \({\left\| \cdot \right\| _1}\) is the \(\ell _1\) norm, \(\beta \) is a constant coefficient (the default value is 0.09), and \(\varvec{W}=1/(\nabla \varvec{S}+\varsigma )\) is the weight matrix. \(\varsigma =0.0001\) is a small constant that prevents \(\mathbf{{W}} \rightarrow \infty \) when \(\nabla \varvec{S} \rightarrow 0\), and \(\nabla \varvec{S}\) is the result of applying the first-order derivative filter to \(\varvec{S}\). The constraint \(\nabla \varvec{S} = \varvec{Z}\) is added to Eq. (6), so the corresponding augmented Lagrangian function can be written as:

where \(\varvec{k}\) represents the augmented Lagrangian multiplier and \(\rho >0\) is the augmented Lagrangian constant. Equation (7) contains three variables to be solved: \(\varvec{S}\), \(\varvec{Z}\) and \(\varvec{k}\). Here, the augmented Lagrangian multiplier method is used to solve for the three variables step by step, updating one variable at a time while fixing the others. The corresponding iterative functions are expressed as:

Equation (11) is the termination condition of the iteration, and \(\varepsilon \) is typically a very small value, e.g., \(\varepsilon \le {10^{ - 4}}\). Since Gamma correction [18] can make the brightness of the image more suitable for human vision, it is used to further adjust the global illumination map \(\varvec{S}\).

where the default value of \(\gamma \) is 0.85.
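To make the alternating updates concrete, the following sketch applies ADMM to a one-dimensional signal. The update equations themselves are not reproduced in this text, so the steps below are the standard ADMM updates for an objective of the assumed form \(\Vert \varvec{S}-\varvec{\hat{S}}\Vert _F^2+\beta \Vert \varvec{W}\circ \nabla \varvec{S}\Vert _1\) with the constraint \(\nabla \varvec{S}=\varvec{Z}\). Computing the weights from the absolute gradient of the initial map, the relative-change stopping rule, the value \(\rho =1\), and the power-law form of the Gamma correction on values in [0, 1] are all assumptions, and the function names are hypothetical.

```python
import numpy as np


def soft_threshold(v, tau):
    """Element-wise shrinkage, the proximal operator of the weighted l1 norm."""
    return np.sign(v) * np.maximum(np.abs(v) - tau, 0.0)


def admm_illumination_1d(s_hat, beta=0.09, rho=1.0, varsigma=1e-4,
                         eps=1e-4, max_iter=200):
    """1-D sketch of the S, Z, k updates (assumed objective, see lead-in)."""
    s_hat = np.asarray(s_hat, dtype=np.float64)
    n = s_hat.size
    D = np.diff(np.eye(n), axis=0)               # forward differences, (n-1, n)
    W = 1.0 / (np.abs(D @ s_hat) + varsigma)     # weights from the initial map
    A = 2.0 * np.eye(n) + rho * D.T @ D          # system matrix of the S-step
    S = s_hat.copy()
    Z = np.zeros(n - 1)
    k = np.zeros(n - 1)
    for _ in range(max_iter):
        # S-step: quadratic subproblem, solved exactly.
        S_new = np.linalg.solve(A, 2.0 * s_hat + D.T @ (rho * Z - k))
        # Z-step: weighted soft-thresholding of the shifted gradient.
        Z = soft_threshold(D @ S_new + k / rho, beta * W / rho)
        # k-step: dual ascent on the constraint residual.
        k = k + rho * (D @ S_new - Z)
        # Assumed termination rule: relative change of S below eps.
        change = np.linalg.norm(S_new - S) / (np.linalg.norm(S) + 1e-12)
        S = S_new
        if change <= eps:
            break
    return S


def gamma_correct(S, gamma=0.85):
    """Power-law adjustment of an illumination map normalized to [0, 1]."""
    return np.clip(S, 0.0, 1.0) ** gamma
```

Because the weights are large where the initial map is nearly flat and small at strong edges, small fluctuations are smoothed while large brightness transitions are largely preserved. With \(\gamma =0.85<1\), the Gamma step brightens mid-range values and leaves 0 and 1 unchanged. For a real image the same updates apply with two-dimensional difference operators, and the S-step is usually solved with a sparse or FFT-based solver rather than a dense matrix.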

The components \(\varvec{a}\) and \({\varvec{b}}\) are the two color channels in the Lab color space. A statistical analysis of bright-colored fog-free images shows that the histograms of \({\varvec{a}}\) and \({\varvec{b}}\) are mostly distributed near zero. Hence, to eliminate the color distortion of the dehazed underwater image, the histograms of \({\varvec{a}}\) and \({\varvec{b}}\) are redistributed and adjusted. The adjustment equations are written as:

where \({\bar{a}}\) and \({\bar{b}}\) are the average values of \({\varvec{a}}\) and \({\varvec{b}}\), and \({\varvec{{a^ * }}}\) and \({\varvec{{b^ * }}}\) are the adjusted component values. The components \({\varvec{S}}\), \({\varvec{{a^ *}} }\) and \({\varvec{{b^ * }}}\) are combined and converted into the RGB space to obtain the final clear underwater image. Results with and without color correction are compared in Fig. 4, where the color-corrected result has a better visual effect.

3 Experimental results

In this section, to verify the effectiveness of the proposed adaptive color correction method, qualitative and quantitative comparisons are made with other state-of-the-art underwater image restoration methods, including the dark channel prior (DCP) method [4], the underwater dark channel prior (UDCP) method [7], the underwater dark channel prior method based on the automatic red channel (RDCP) [8], Peng's method (IBLA) [15], and the underwater light attenuation prior (ULAP) method [19]. All experiments are performed using Matlab 2020b on a Windows 10 PC with an Intel(R) Core(TM) i7-9700F CPU at 3.00 GHz and 16 GB RAM. The five original underwater images with different scenes presented in Fig. 5 are used for testing.

3.1 Qualitative analysis

In Fig. 5, images 5a, b are underwater images commonly used in the literature. They have greenish tones, with the degree of color cast and fogging increasing in sequence. Images 5c–e are real underwater images captured by our ROV. They have bluish tones, with the degree of color cast and fogging increasing in sequence. Figures 6 and 7 show the comparison results of the different restoration methods on these five underwater images.

It can be seen from Figs. 6 and 7 that all methods except the DCP method achieve some degree of restoration for these scenes. The DCP method performs poorly because it is a statistical prior over the three RGB channels that does not consider the different attenuation effects in the individual channels of underwater images. The UDCP method has a certain dehazing effect (Figs. 6b, 7b), but because it lacks the red channel information, the color distortion remains serious and the global brightness is low. The RDCP method produces meaningful restorations. However, it uses the inverse of the red channel to compensate for the serious attenuation of red light, which leaves a white fog in the restored images (Figs. 6c, 7c). The results of the IBLA and ULAP methods are more convincing, which indicates that underwater images can be restored well using the light attenuation prior and the blurriness information of the original image. However, these two methods cannot achieve a good restoration for Fig. 5b, which has more color distortion and blur. Figures 6f and 7f present the results of the proposed method. The restored images show that the proposed method not only has the best deblurring effect, contrast and saturation, but also better reflects the tones that objects have on land; for instance, the stones in Figs. 6f and 7f are black instead of blue or green. In particular, the restored results in the second row of Fig. 6 show that only the proposed method works well for an underwater image with very serious blur and color distortion. Figures 6 and 7 also show the TMs estimated by the different methods, and the TM estimated by the proposed method is of good quality.

Compared with other state-of-the-art methods, the proposed method obtains good visual effects on images with different degrees of color cast and different tones. The restored images show more thorough dehazing and higher clarity and contrast. The object colors of the restored images are more natural, and the brightness is also improved.

3.2 Quantitative analysis

From the perspective of subjective visual effects, the proposed method achieves better processing results for underwater images with different degrees of color cast, tones and environments. To further verify the effectiveness of the proposed method, this section compares it with the aforementioned five methods using several objective metrics. Considering information richness, naturalness, sharpness, and an overall index of contrast, chroma and saturation, four evaluation metrics are chosen to comprehensively evaluate the restoration effect: the average gradient (AG), the information entropy (IE), the underwater color image quality evaluation metric (UCIQE), and the naturalness image quality evaluator (NIQE). The average gradient sensitively reflects the ability to express small details. The larger the gradient value is, the better the image expresses detail. The information entropy measures the information level of the image, which effectively represents the level of detail contained in the image. The UCIQE uses a linear combination of chroma, saturation and contrast as its measurement indicators. The larger the UCIQE value is, the better the subjective visual effect of the image conforms to human visual characteristics. Compared with indicators such as PSNR and SSIM, the NIQE has better prediction stability, monotonicity and consistency. The smaller the NIQE value is, the better the visual quality of the image. Tables 1 and 2 present the average gradient, information entropy, UCIQE and NIQE values of the restored images obtained by the above methods. The optimal values of these four indicators are marked in bold in Tables 1 and 2.
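Two of these metrics are simple enough to sketch directly. The code below uses common textbook definitions, which are assumptions here because the text does not state the formulas: the average gradient is taken as the mean of \(\sqrt{(G_x^2+G_y^2)/2}\) over forward differences of a grayscale image, and the information entropy as the Shannon entropy (in bits) of the 256-bin gray-level histogram. The function names are hypothetical; UCIQE and NIQE are omitted because they depend on fitted coefficients and trained models.

```python
import numpy as np


def average_gradient(gray):
    """Mean local gradient magnitude of a 2-D grayscale image."""
    g = np.asarray(gray, dtype=np.float64)  # avoid uint8 wrap-around
    gx = g[:-1, 1:] - g[:-1, :-1]           # horizontal forward difference
    gy = g[1:, :-1] - g[:-1, :-1]           # vertical forward difference
    return float(np.mean(np.sqrt((gx ** 2 + gy ** 2) / 2.0)))


def information_entropy(gray_u8):
    """Shannon entropy in bits of an 8-bit grayscale image."""
    hist = np.bincount(np.asarray(gray_u8, dtype=np.uint8).ravel(), minlength=256)
    p = hist / hist.sum()
    p = p[p > 0]                            # skip empty bins, 0 * log 0 = 0
    return float(-np.sum(p * np.log2(p)))
```

Under these definitions a constant image scores zero on both metrics, and an 8-bit image can reach an entropy of at most 8 bits.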

Tables 1 and 2 show that the images processed by the proposed method perform significantly better than those of the other five methods on the AG. This reflects a strong ability to express small details and high image detail clarity. The IE and UCIQE values of the proposed method are generally higher than those of other state-of-the-art underwater image restoration methods. Although the IE value of the restored image of Fig. 5d obtained by the ULAP method is the highest, the center of the restored image in Fig. 6e is obviously overexposed. Because the images restored by the IBLA and ULAP methods in Fig. 6b, c have a high chromaticity index, their UCIQE values are the best. Additionally, the images recovered by the proposed method have better NIQE values. Compared with the comparison methods, the proposed method is better at expressing details, improving clarity, and effectively removing the blue–green color distortion of the image.

To compare the processing times of these methods, 150 underwater test images with a size of \(720 \times 450\) are selected. Table 3 shows that the average processing time of the IBLA method is as high as 43.0186 s, which is clearly unsuitable for underwater applications. The average processing time of the proposed method is 4.2675 s, which is basically the same as that of the ULAP method. The proposed method is faster than the DCP, UDCP, RDCP and IBLA methods because its TM is estimated without guided filtering or soft matting.

3.3 Comparison with DUIENet

CNN-based methods have recently shown good performance in the field of image restoration. To further analyze the effectiveness of the proposed method, we compare it against a CNN-based underwater image enhancement method (DUIENet [13]). The experiments are conducted on a testing set of 150 underwater images. Several results are shown in Fig. 8.

As shown in Fig. 8, the proposed method effectively removes the haze from the underwater images and corrects their color. The quantitative AG and UCIQE results on the corresponding underwater images show that the proposed method performs better than DUIENet.

4 Conclusion

In this paper, we present an adaptive color correction method for underwater single image haze removal based on the IFM and color correction in the Lab color space. The transmission map is estimated from the blurriness image, which avoids the long processing time caused by guided filtering or soft matting. The ADMM method and the histogram displacement method are used to optimize the \({\varvec{L}}\) component and the \({\varvec{a}}\) and \({\varvec{b}}\) components, respectively, to improve brightness uniformity and to correct the color distortion of the restored underwater image. Both qualitative and quantitative experimental results verify that the proposed method is more effective at expressing details, improving brightness, and restoring more natural colors for underwater images with different scenes and tones.

References

Wang, Y., Song, W., Fortino, G., et al.: An experimental-based review of image enhancement and image restoration methods for underwater imaging. IEEE Access. 7, 140233–140251 (2019)

Raveendran, S., Patil, M.D., Birajdar, G.K.: Underwater image enhancement: a comprehensive review, recent trends, challenges and applications. Artif. Intell. Rev. 54, 5413–5467 (2021)

Xie, H., Liang, J., Wang, Z., et al.: Scanning imaging restoration of moving or dynamically deforming objects. IEEE Trans. Image Process. 99, 1–1 (2020)

He, K., Sun, J., Tang, X.: Single image haze removal using dark channel prior. IEEE Trans. Pattern Anal. Mach. Intell. 33(12), 2341–2353 (2011)

Ren, W., Pan, J., Zhang, H., et al.: Single image dehazing via multi-scale convolutional neural networks with holistic edges. Int. J. Comput. Vis. 128(8), 240–259 (2019)

Han, M., Lyu, Z., Qiu, T., et al.: A review on intelligence dehazing and color restoration for underwater images. IEEE Trans. Syst. Man Cybern. Syst. 50, 1–13 (2018)

Drews-Jr, P.D., Nascimento, E.D., Moraes, F., et al.: Transmission estimation in underwater single images. In: The IEEE International Conference on Computer Vision Workshops. Washington, USA, pp. 825–830 (2013)

Galdran, A., Pardo, D., Picón, A., et al.: Automatic red-channel underwater image restoration. J. Vis. Commun. Image Represent. 26, 132–145 (2015)

He, K., Sun, J., Tang, X.: Guided image filtering. IEEE Trans. Pattern Anal. Mach. Intell. 35(6), 1397–1409 (2013)

Levin, A., Lischinski, D., Weiss, Y.: A closed-form solution to natural image matting. IEEE Trans. Pattern Anal. Mach. Intell. 30(2), 228–242 (2007)

Akkaynak, D., Treibitz, T.: A revised underwater image formation model. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition (CVPR), pp. 6723–6732 (2018)

Akkaynak, D., Treibitz, T.: Sea-thru: a method for removing water from underwater images. In: Proceedings of the IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR), pp. 1682–1691 (2019)

Li, C., Guo, C., Ren, W., et al.: An underwater image enhancement benchmark dataset and beyond. IEEE Trans. Image Process. 29, 4376–4389 (2020)

Long, C., Lei, T., Feixiang, Z., et al.: A benchmark dataset for both underwater image enhancement and underwater object detection. In: 2020 IEEE/CVF Conference on Computer Vision and Pattern Recognition (CVPR). IEEE (2020)

Peng, Y.T., Cosman, P.C.: Underwater image restoration based on image blurriness and light absorption. IEEE Trans. Image Process. 26(4), 1579–1594 (2017)

Jobson, D.J., Rahman, Z.U., Woodell, G.A.: Properties and performance of a center/surround Retinex. IEEE Trans. Image Process. 6(3), 451–462 (1997)

Guo, X., Li, Y., Ling, H.: LIME: low-light image enhancement via illumination map estimation. IEEE Trans. Image Process. 26(2), 982–993 (2017)

Lee, S.: An efficient content-based image enhancement in the compressed domain using Retinex theory. IEEE Trans. Circuits Syst. Video Technol. 17(2), 199–213 (2007)

Song, W., Wang, Y., Huang, D., Tjondronegoro, D.: A rapid scene depth estimation model based on underwater light attenuation prior for underwater image restoration. In: Advances in Multimedia Information Processing pp. 678–688 (2018)

Xue, X., Hao, Z., Ma, L., et al.: Joint luminance and chrominance learning for underwater image enhancement. IEEE Signal Process. Lett. 28, 818–822 (2021)

Jiang, K., Wang, Z., Yi, P., et al.: Rain-free and residue hand-in-hand: a progressive coupled network for real-time image deraining. IEEE Trans. Image Process. 30, 7404–7418 (2021)

Jiang, K., Wang, Z., Yi, P., et al.: Decomposition makes better rain removal: an improved attention-guided deraining network. IEEE Trans. Circuits Syst. Video Technol. 14(8), 1–14 (2020)


Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

This work was supported in part by the National Key Research and Development Program of China (Project No.2016YFC0301700), in part by the National Natural Science Foundation of China (Project No.61903304), in part by the Fundamental Research Funds for the Central Universities (Project No.3102020HHZY030010), and in part by the 111 Project under Grant No.B18041.


Cite this article

Zhang, W., Liu, W., Li, L. et al. An adaptive color correction method for underwater single image haze removal. SIViP 16, 1003–1010 (2022). https://doi.org/10.1007/s11760-021-02046-6


DOI: https://doi.org/10.1007/s11760-021-02046-6