Abstract

The retail sector presents several open and challenging problems that could benefit from advanced pattern recognition and computer vision techniques. One such critical challenge is planogram compliance control. In this study, we propose a complete embedded system to tackle this issue. Our system consists of four key components as image acquisition and transfer via stand-alone embedded camera module, object detection via computer vision and deep learning methods working on single-board computers, planogram compliance control method again working on single-board computers, and energy harvesting and power management block to accompany the embedded camera modules. The image acquisition and transfer block is implemented on the ESP-EYE camera module. The object detection block is based on YOLOv5 as the deep learning method and local feature extraction. We implement these methods on Raspberry Pi 4, NVIDIA Jetson Orin Nano, and NVIDIA Jetson AGX Orin as single-board computers. The planogram compliance control block utilizes sequence alignment through a modified Needleman–Wunsch algorithm. This block is also working along with the object detection block on the same single-board computers. The energy harvesting and power management block consists of solar and RF energy-harvesting modules with suitable battery pack for operation. We tested the proposed embedded planogram compliance control system on two different datasets to provide valuable insights on its strengths and weaknesses. The results show that the proposed method achieves F1 scores of 0.997 and 1.0 in object detection and planogram compliance control blocks, respectively. Furthermore, we calculated that the complete embedded system can work in stand-alone form up to 2 years based on battery. This duration can be further extended with the integration of the proposed solar and RF energy-harvesting options.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

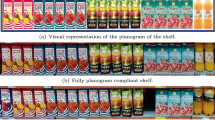

Technological advancements have been transforming the retail sector. To be more specific, integration of sensor networks, internet of things, computer vision, machine learning, and data analysis is making smart retail stores increasingly common and efficient. These technologies are practically applied to automatize operations and enhance customer experience. A significant challenge in retail is planogram compliance control which can be addressed through these technologies. Planogram is the diagram showing placement of products in shelves of a retail store. A shelf is considered planogram compliant when all the products on it are in the right place and quantity. Planogram compliance, crucial for maintaining proper product placement and stock levels, is often undermined by human error or stock issues, leading to sales loss and penalties. Typically, around 70% compliance is observed in stores and resetting planograms can boost sales by up to 7.8% in 2 weeks [1].

Traditionally, planogram compliance is manually checked by employees, a process prone to labor intensity and errors. Alternatives include using mobile devices for photo-based checks [2], installing fixed IP cameras [3], or employing robots [4,5,6]. However, these methods can be costly or laborious. Recently, embedded systems have been used to capture shelf images for efficient planogram control [7,8,9]. In the literature, various computer vision and pattern recognition methods address planogram compliance control and object detection in shelf images, as summarized in our previous study [10]. There, we introduced a novel approach for planogram compliance control in smart retail, utilizing object detection using local feature extraction and implicit shape model (ISM) and iterative search processes, with sequence alignment via a modified Needleman–Wunsch algorithm. Melek et al. [11] developed a multi-stage system for grocery shelf product recognition, combining detection, classification, and refinement.

In this study, we extend our previous work on planogram compliance control in two different ways. First, we propose using YOLO for bounding box detection and feature filtering, replacing ISM for object detection. Second, we form a complete embedded planogram compliance control system with all its components. While forming the system, we followed design constraints as follows. The system should operate stand-alone in a retail environment over extended periods, without needing for shelf cabling due to safety considerations. Given multiple shelves within a retail store, the system should also be cost-effective. High-resolution cameras, and high-end computer systems or cloud computing resources are impractical for this application due to their high power consumption and high cost, thus necessitating the use of embedded systems. The planogram compliance control system can work twice a day. For example, the first control can be made in the morning before the store opens. Hence, the employee can reorganize the shelves if compliance is below the desired level. To handle all these constraints, we propose an embedded system summarized as in Fig. 1.

In grocery stores, installing electrical systems on shelves is impractical, unsafe, and costly. As can be seen in Fig. 1, our system uses battery-powered camera modules (first stage) for image acquisition. Image processing (second stage) is performed by single-board computers (SBC) which are not battery operated. They provide decentralized processing within the store. Using SBCs for localized processing enhances system reliability and reduces network load. While transferring images directly to a central PC server might seem to cut setup costs, it introduces potential bottlenecks and single point of failure. Moreover, a high-capacity server and extensive network infrastructure can increase initial costs. The distributed approach with SBCs allows for better scalability and adaptability to varying network conditions.

The first stage of the system is the image acquisition and transfer block formed by an embedded camera module with Wi-Fi connectivity. The camera to be used for this purpose should be low cost, low power, and easy to acquire. The best fit for these constraints is the ESP-EYE module from Espressif Systems [12]. In the image acquisition and transfer block, the system captures shelf images at predetermined time intervals and transmits them to the second stage if it detects a significant change compared to the previous captured image. We will explain the details of this block in Sect. 2. The image acquisition and transfer block is positioned in front of the shelf to work in stand-alone form. Therefore, there should be an accompanying energy harvesting and power management block to supply power to it. Moreover, the energy-harvesting and power management block should have RF and solar energy-harvesting modules to enhance the battery life. We will explain the working principles of this block in detail in Sect. 5.

The second stage of the embedded system is formed by a single-board computer (SBC) to implement the object detection and planogram compliance control blocks. The SBC offers a cost-effective alternative to conventional PCs and can be distributed throughout the store for increased efficiency. In this study, we test Raspberry Pi 4, NVIDIA Jetson Orin Nano, and NVIDIA Jetson AGX Orin SBCs. The object detection block works such that it preprocess the shelf image and employs YOLO based bounding box detection and local feature extraction frameworks upon receipt an image from the first stage. In the planogram compliance control block, detected objects are converted into a planogram-compatible format. Then, the modified Needleman–Wunsch algorithm is applied for planogram compliance control of the shelf image. Furthermore, the focused and iterative search technique is used for improved results [10]. We will explain the usage scenarios for object detection and planogram compliance control blocks in Sects. 3 and 4, respectively.

We will explain working principles of the proposed embedded planogram compliance control system in the following sections in detail. We test the proposed methods on two different datasets for the object detection and planogram compliance control steps. We will also analyze the overall system power consumption via tests. Furthermore, we test our methods on PC for benchmark.

2 The image acquisition and transfer block

The image acquisition and transfer block is in the first stage of the proposed embedded planogram compliance control system. We will start with explaining its hardware properties in this section. Then, we will provide details on change detection and controlled image transfer operations performed on this hardware.

2.1 Hardware used in operation

The camera to be used for the image acquisition and transfer block should be low cost and power. The best fit for these constraints is the ESP-EYE module from Espressif Systems [12]. This module incorporates an embedded camera and Wi-Fi chip. The camera can capture up to 2-Megapixel resolution images and store them in the external memory of the module. Therefore, the ESP-EYE module serves as a good candidate to implement and realize the image acquisition and transfer block in our embedded system. We use the C language to program the ESP-EYE module, benefiting from the Arduino IDE and ESP32 library.

A convenient retail store with 1000 m\(^2\) sales area can have shelf length up to 1200 m. An ESP-EYE can cover an average of 4 m wide shelf blocks with three racks. Hence, it can cover a total of 12 m shelf area. As a result, we will need approximately 100 of ESP-EYE modules to cover all the shelves in a typical retail store.

2.2 Change detection and controlled image transfer

Planogram compliance control is necessary only when there is a major change in the shelf. Therefore, we pick the image rationing with preprocessing method from our previous work [13]. In this method, we first acquire the shelf image, \(I_{\text {l}}\), in grayscale format with QVGA resolution and then apply filtering to obtain \({\tilde{I}}_{\text {l}} = G*I_{\text {l}}\), where G is the simple Gaussian blurring filter kernel. The previously captured and filtered image, \({\tilde{I}}_{\text {r}}\), is stored in flash memory of the ESP-EYE module for reference during low-power hibernation mode. Subsequently, we calculate ratio of the images, where each pixel ratio approaches zero with no change and nears \(\pi /4\) with maximum change. After thresholding each pixel ratio with a predetermined value \(\tau _p\) and counting the changed pixels, we determine if the shelf image has changed based on a predefined threshold, \(\tau _c\), of all pixels. If any change is detected in a shelf image, a new JPEG image with UXGA resolution I is acquired and transmitted to a remote location via Wi-Fi. Finally, the ESP-EYE module enters low-power hibernation mode, awaiting the next timer trigger to wake up. We provide the pseudocode for the overall operation in Algorithm 1.

3 The object detection block

When any change in the shelf image is detected, we should find objects in the shelf image to compare them with the actual planogram. We will explain the operations needed for the object detection step in this section. We will start with the hardware used in operation next.

3.1 Hardware used in operation

The object detection block in our embedded planogram compliance system requires high processing power and memory. Therefore, we deploy an embedded SBC to implement it. To be more specific, we use SBCs with high processing power as Raspberry Pi 4, NVIDIA Jetson Orin Nano, and NVIDIA Jetson AGX Orin to find objects in the image. We utilize advanced software and libraries on these embedded devices, leveraging their capability to run the Linux OS. We use OpenCV library for local feature extraction and object detection operations. We picked NodeJS as the development framework at SBCs. Furthermore, we compile the OpenCV from its source to deploy the DNN and image processing functions in the NodeJS environment. We enabled the CUDA support for OpenCV while compiling it in NVIDIA Jetson devices which gives us to use their GPU parallel processing capability for DNN operations.

3.2 Image retrieval and preprocessing

As the image acquisition and transfer block sends the shelf image to the object detection block, an end-point should receive it. We implement this operation using Express which is NodeJS web application framework [14]. We create a web server application which listens for the incoming image data and upon receive, it saves the image data to a file.

The received image contains full shelf view containing several shelf racks. However, the planogram compliance control block works with a single shelf rack. Thus, we split the image horizontally into the number of shelf racks using OpenCV library functions. Depending on the distance of the camera to the shelf, there are three to four shelf racks in the shelf view. Hence, a shelf rack image approximately has dimensions as \(1600 \times 400\) pixels after splitting. We also add padding to the top and bottom of the image. Hence, it becomes suitable to be processed in the bounding box step via YOLO. Finally, we employ a blurring filter to the image using OpenCV library functions to remove noise.

3.3 Local feature extraction and matching

Objects on shelves often display complex and closely resembling appearances in the retail environment. This study employs local feature extraction, specifically the SIFT method for object detection, a technique chosen based on its superior performance in our previous study [10]. Let I(x, y) represent a shelf image with J different objects in it. Assume that the jth object on the shelf has a model (or planogram) image \(I_j(x, y)\) for \(j = 1, \ldots , J\). Let \(w_j\) and \(h_j\) be the width and height of \(I_j(x, y)\), respectively. We extract local feature vectors, \(\overrightarrow{f_{jl}}\) for \(l = 1, \ldots , L_j\) from \(I_j(x, y)\) to represent the jth object with \(L_j\) keypoints, using SIFT functions in OpenCV library. Similarly, we extract the local feature vectors, \(\overrightarrow{f_m}\) for \(m = 1, \ldots , M\) from the shelf image, I(x, y), to detect objects in it. Here, M is the total number of extracted keypoints from the shelf image. We provide the pseudocode for the feature extraction operation in Algorithm 2.

One way of finding the object of interest in the shelf image is matching extracted local feature vectors from the jth object, \(\overrightarrow{f_{jl}}\), and shelf image, \(\overrightarrow{f_m}\). Here, we use the brute force matching functions in OpenCV library to find the matching features. We provide the pseudocode for this operation in Algorithm 3.

Furthermore, we also use the ratio test proposed by Lowe [15] to keep only strong matches. Lowe suggests setting \(\tau =0.8\) gives the best result for SIFT. Instead of such a constant value, we set the matching threshold as \(\tau _\alpha = 0.95 - 0.2\alpha\) where \(\alpha\) is the iteration parameter (initially set to one) to be introduced in 4.2. Assume that we have \(N_j\) local feature vectors from I(x, y) satisfying the threshold constraint for the jth object. Hence, we will have \(\left\{ \overrightarrow{f_{jn}}\right\} \subset \left\{ \overrightarrow{f_m}\right\}\) for \(n = 1, \ldots , N_j\) and \(N_j \le M\).

3.4 Bounding box extraction and filtering

YOLO is a real-time object detection method combining bounding box prediction and classification [16]. It uses a grid-based approach for faster processing. YOLOv5 incorporates cross-stage partial networks (CSPNet) and spatial pyramid pooling (SPP) layers [17]. Hence, it offers multiscale and multiformat model options. In this study, we picked the YOLOv5s and YOLOv5x models for bounding box extraction. We trained both models on the SKU110K dataset using NVIDIA Jetson AGX [18]. Settings included \(640\times 640\) image size, 100 epochs, and batch sizes of 48 and 144 for YOLOv5x and YOLOv5s, respectively. YOLOv5x has been trained in 16.6 h with an mAP50 of 0.59. YOLOv5s has been trained in 11.4 h with an mAP50 of 0.56.

We employ the OpenCV DNN library to perform inference on shelf images using the YOLOv5 model. OpenCV DNN library cannot use the YOLOv5 model file directly. However, it can use ONNX format for model file. Hence, we first export our custom trained YOLOv5 model to the ONNX format. The model produces all the candidate bounding boxes as a result of the inference operation. Each bounding box, \(B_b\), is characterized by a tuple containing the confidence score and spatial dimensions as \((cs_b, x_b, y_b, w_b, h_b)\) for \(b = 1, \ldots , B\). To identify the best candidates for the jth object, we apply filtering and threshold techniques. Specifically, we use the width \(w_j\), height \(h_j\), and aspect ratio \(\frac{w_j}{h_j}\) of the jth object as reference. We discard any bounding box with dimensions and aspect ratio falling below half or exceeding twice these reference values. Subsequently, we apply a confidence score threshold, which we set it at 5% of the maximum confidence score, to further refine our candidates. Hence, we discard any bounding box with very low confidence score. Following these steps, we obtain \(C_j\) candidate bounding boxes for the jth object. We provide the pseudocode explaining these steps in Algorithm 4.

As we detect possible object bounding boxes for the jth object in the shelf image, the next step is finding matching local features inside bounding boxes. Therefore, we find the number of features inside each bounding box, \(s_c\). We further eliminate weak objects which have lower feature number than 5% of the number of features of the jth object, \(L_j\). Then, we normalize it with the total number of feature of the jth object, \(L_j\). We multiply it with the confidence score of the bounding box to use it as the detection score for the detected object. Some bounding boxes may overlap, meaning that there may be more than one candidate object in the given location. Therefore, we eliminate weak candidates using the greedy algorithm for non-maxima suppression (NMS) of bounding boxes. At the end of this operation, we detect a total of D objects, \(D \le \sum _{j = 1}^{J} C_{j}\), from the shelf image I(x, y). We provide the pseudocode to filter out matching features not lying in a bounding box in Algorithm 5.

4 The planogram compliance control block

Object detection results in Sect. 3 lead to planogram compliance control. After representing detection results in an abstract planogram format, we use the modified Needleman–Wunsch algorithm to align and compare the detected and reference planograms as suggested in our previous study [10]. Then, we use the iterative search method to focus on undetected or empty object locations in the shelf image. We explain these steps in detail next. Here, we use the same hardware used in Sect. 3 as well as the NodeJS framework.

4.1 Sequence alignment

To compare the detected objects in previous section with the given planogram data, we first need to convert detected object list into planogram format. Here, we sort the detected objects from left to right according to their center coordinates. Then, we group the items of same object hence we can compare their location and quantity, later. We also keep the start and end coordinates hence we can iteratively search for undetected objects only as explained in our previous study [10]. Based on this, we represent the detected objects in the shelf image (in sorted and grouped form) as \(L_{\text {s}} = \left[ o_{\text {d}}, q_{\text {d}}, B_{\text {d}}\right]\) and reference planogram as \(L_{\text {r}} = \left[ o_{\text {t}}, q_{\text {t}}, B_{\text {t}}\right]\).

To control planogram compliance, we compare reference \(L_{\text {r}}\) and detected \(L_{\text {s}}\) planograms using the modified Needleman–Wunsch (NW) algorithm [19]. The NW algorithm involves initialization (setting up score matrix F), matrix filling (using gap penalties \(g_{\text {ins}}\), \(g_{\text {del}}\), and substitution score \(s(o_{\text {d}}, o_{\text {t}})\)), and tracing back matrix entries as explained in Algorithm 6. Here, gap penalties are set dynamically as delete operation penalties equal the number of missing objects in \(L_{\text {r}}\) and insert penalties correspond to extra items in \(L_{\text {s}}\). The trace back step involves revisiting decisions made during matrix filling.

Following the steps in Algorithm 6, we can obtain aligned object groups \(\hat{o}_{\text {d}}\) and \(\hat{o}_{\text {t}}\) and their quantities \(\hat{q}_{\text {d}}\) and \(\hat{q}_{\text {t}}\). We then calculate the planogram match ratio, \(\mu _{\text {s}}\), ranging from 0 (no compliance) to 1 (full compliance). A \(\mu _{\text {s}}\) of 0 means all items are misplaced, while 1 signifies complete planogram compliance. A match ratio between 0 and 1 indicates partial compliance, with some items missing, extra, or incorrectly placed.

4.2 Focused and iterative search

The object detection method and modified NW algorithm introduced in the previous sections may not produce exact planogram alignment result in one iteration. Therefore, we use the focused and iterative search method to improve the results [10]. Here, we repeat the object detection operation by relaxing the detection constraints and focusing on the region of interest (ROI) in the shelf image I(x, y). As we form the ROI, we iteratively decrease the value of \(\alpha\) as \(\alpha _{{\text {new}}} = 0.75 \alpha _{{\text {old}}}\). We detect new objects based on the new \(\alpha\) value at each iteration and apply the NW algorithm to the updated \(L_{\text {s}}\) and \(L_{\text {r}}\) until \(\mu = 1\) or \(\mu\) does not change for six successive iterations. We provide the pseudocode for the focused and iterative search operation in Algorithm 7. As the iterations end, we have the planogram compliance control result at hand.

5 The energy harvesting and power management block

The energy harvesting and power management block is crucial for ensuring the continuous operation of the battery-powered ESP-EYE modules without frequent battery replacements. Although they increase the initial cost, these blocks reduce long-term maintenance costs and enhance system reliability and sustainability using renewable energy sources. Therefore, one important part of the proposed embedded planogram compliance control system is the energy harvesting and power management block. Various energy-harvesting methods, including RF and WPT, are increasingly utilized to extend the battery life of embedded systems [20,21,22,23]. Kadir et al. [24] introduced a Wi-Fi energy harvester suitable for indoor, low-power applications, featuring multiple antennas and providing up to 2 V output. Tepper et al. [25] explored the use of WPT systems in aircraft for powering sensors, employing commercial Powercast 2110 evalkit with a transmitter operating at 915 MHz.

In this section, we explain suitable modules for solar and RF energy harvesting. We use them in our embedded planogram compliance control system to extend battery life, either separately or combined using complementary methods or power ORing [26]. Therefore, we start with explaining the battery pack used in operation.

5.1 Battery pack

The battery generally has the largest size in an embedded system with its size directly proportional to its capacity and price. Hence, determining the battery life and capacity plays a vital role while determining the system physical dimensions and overall cost. We maximize the battery life with the help of ambient energy harvesting options in the proposed system. Therefore, we should use a rechargeable battery to store harvested energy. To be more specific, we picked the LiFePO4 battery, which is a type of Lithium-Ion (Li-Ion) battery, to supply the system since its voltage range is suitable directly use it with ESP-EYE module. Moreover, we minimize the current drawn via utilizing hibernation mode for the ESP-EYE and periodic wake-ups via timer interrupts. This operation significantly saves battery power and maximizes the battery life.

5.2 Solar energy harvesting

The simplest energy-harvesting method is to use ambient light with the help of photovoltaic (PV) cells, as there is usually constant artificial lighting in a retail store. We use a power management integrated circuit (PMIC) to charge the battery from the harvested energy, track the maximum power point of PV cells, and supply the regulated voltage to the system. We use the SPV1050 from STMicroelectronics as the PMIC that can be used for indoor light energy harvesting [27]. This module harvests energy from solar cells and stores the harvested energy in a capacitor. Then, it supplies the regulated output voltage to system components. It can harvest energy from PV cells even if the output voltage of the PV cell is as low as 150 mV. It also supports secondary input where other energy-harvesting methods can be connected in complementary to the PV cell. Hence, it can harvest energy from this input if there is not enough lighting on PV cell. De Rossi et al. [28] show that an amorphous silicon (a-Si) PV cell can produce about 13 \(\upmu\) W/cm\(^2\) under 500 lux LED light illumination, which is the average illumination level in a retail store. Hence, we pick the a-Si PV cell in the proposed system.

5.3 RF energy harvesting

We can also use the RF energy-harvesting chip P2210B from Powercast to back up the battery power of ESP-EYE module. Basically, the system converts RF energy to DC voltage and stores it in a capacitor. Then, the chip regulates and supplies the voltage to output. The P2210B chip can harvest power level below to − 12 dBm from 902 to 928 MHz RF signals. It can provide an output current of 0.2 mA at an input power level of 0 dBm. The 0 dBm average RF power at indoor is quite acceptable when it is recognized that maximum power level is 36 dBm and reduced down to 4 dBm at 915 MHz for GSM communication [29].

6 Experiments

We test the proposed system in this section. To do so, we benefit from two datasets. We first introduce them. Then, we provide the object detection performance of the proposed method on these datasets. To note here, we take the performance of the SIFT method in our previous work as the baseline method [10]. Furthermore, we re-assess object detection performance the baseline method using intersection over union (IoU) value of 50%. Afterward, we provide experimental results on the planogram compliance control step. We also provide timing and power consumption performance of the proposed embedded system.

6.1 Datasets used in experiments

In the absence of a dedicated dataset for evaluating embedded system object detection in retail, we utilized available datasets relevant to similar problems, such as object detection in retail stores and bounding box extraction. Specifically, we adapted a subset of the grocery product dataset annotated by Tonioni and Di Stefano [30, 31]. We split the images for single shelf rack focus and resized them to 400 pixels in height, matching the dimensions used by the image retrieval block in our system. We also employed the Migros dataset, selecting its first subset, comprising 28 shelf images with 312 objects, already split for single rack views and resized to align with our system’s requirements [10]. This approach allows an effective assessment of our method and comparison with previous works.

6.2 Object detection performance

We focus on the object detection performance of the proposed method in this section. Here, we use IoU value of 50% while computing the precision, recall, and F1 score as our performance metrics. We tested the proposed object detection method on the Migros and grocery product dataset. We provide a representative image from each dataset for the detected objects in Fig. 2. As can be seen in this figure, all objects are detected correctly with the proposed method.

We provide object detection performance of the proposed method on the Migros dataset in Table 1. As can be seen in this table, the proposed method in this study achieves higher object detection performance compared to the results of baseline object detection method. To be more specific, the overall performance increases using YOLO instead of ISM while detecting bounding boxes. To note here, bounding box detection and classification steps depend on the SIFT keypoint extraction and feature matching performance in the baseline method. We benefit from YOLO to detect bounding boxes in the proposed system. Therefore, we can reach F1 scores around 1 for both YOLOv5 models. This is a remarkable result for the given problem.

We provide object detection results on the grocery product dataset in Table 2. As can be seen in this table, the proposed method with YOLOv5x model achieves higher object detection performance on this dataset compared to the baseline object detection method. However, overall performance of the YOLO models decrease compared to the Migros dataset. Lower scores in this dataset are because of two main reasons. First, images in this dataset are blurry and have low resolution. Second, the proposed method is sensitive to the shelf height and full visibility of the shelf. There are some images in the grocery product dataset which violate this constraint. Therefore, they led to low performance. Even with these negative effects, the proposed method was able to produce F1 scores around 0.927 with the YOLOv5x model.

We compared the proposed method with the results obtained by Tonioni and Di Stefano [31], who used the grocery product dataset for their experiments. Tonioni and Di Stefano reported an F1 score of 0.904 for their object detection method, which uses BRISK and best-buddies similarity method. Our method, using SIFT and YOLOv5x, achieved an F1 score of 0.927. This comparison demonstrates that our proposed method provides higher accuracy in object detection compared to the method by Tonioni and Di Stefano.

6.3 Planogram compliance control performance

There is no consensus on measuring the planogram compliance control performance in literature. The closest study for this purpose is by Liu et al. [32]. Unfortunately, this study focuses on a graph theory based representation. Therefore, we provide our own measures based on precision, recall, and F1 score values. We define true positives as alignment results matching the ground truth, false positives as misalignments not in the ground truth, and false negatives as ground truth object groups missing in the alignment results.

We provide planogram compliance control performance results for the Migros dataset in Table 3. As can be seen in this table, the proposed method achieves higher performance compared to the baseline method. This is because the planogram compliance control performance is directly related to the object detection performance given in Table 1. This can be expected since correctly detecting objects in the shelf image directly affects the corresponding planogram formation from the shelf.

6.4 Timing and power consumption performance of the image acquisition and transfer block

Timing performance of the image acquisition and transfer block is important since we would like to have a stand-alone system to achieve this operation. Therefore, we analyzed the timing performance and power consumption metrics of the methods running on the ESP-EYE module. To the best of our knowledge, there is no existing study that provides these metrics on the same datasets or experimental setup. As we report these results, we expect that this study will serve as a benchmark in the literature, providing a valuable reference for future research in this area.

We first analyzed timing of the methods running on the ESP-EYE module. We measured the results as 3.5 and 8.5 s for image acquisition and transfer operations, respectively. Based on this timing performance results and working scenario provided in Sect. 2, we can report the average current drawn from the 3.3 V power supply (including wireless transfer) as 243.2 mA. Assuming that the 18650 sized LiFePO4 battery has 1500 mAh capacity, we can claim that the ESP-EYE based first section of the proposed embedded system can last approximately 24 months. This duration can be enhanced by increasing battery capacity or adding an energy-harvesting module to the system.

According to our tests, the SPV1050 solar energy harvester and \(52\times 27\) mm sized a-Si PV cell can extend battery life approximately 12 to 24 months depending on light intensity levels 250 lux and 500 lux, respectively. Moreover, the P2110B RF energy harvester can feed 3.3 V and 0.018 mA continuously to the system under − 8 dBm average input power. Hence, operation durations can be expanded further by approximately 12 more months. The harvesting options can be used as complementary to further increase the battery life. Moreover, if the light intensity increases above 1000 lux, a double sized a-Si PV cell is used or the average input RF power increases above to − 3 dBm, then the system can operate infinitely without recharging or replacing the battery. Hence, the battery size became insignificant and a LiFePO4 battery with the smallest capacity can be used.

6.5 Timing performance of the object detection block

We analyzed timing performance of the object detection block on selected hardware platforms at maximum GPU and CPU power levels in this section. While calculating the timing performance for the object detection block, we pick the average time required to process one shelf rack image as a measure. This analysis was conducted using images from the Migros dataset. We provided the results in Table 4. It is important to note that if the object detection block fails to identify all objects in a shelf image, iterations in the focused and iterative search method increase, significantly impacting the timing performance.

As can be seen in Table 4, YOLOv5s emerges as the fastest model across all hardware platforms despite having the lowest object detection score. Nevertheless, it cannot outperform the baseline method in terms of speed. On the other hand, YOLOv5x has the slowest timing performance, attributable to its larger model size and increased number of parameters. Therefore, the reader should take these values into account while picking the appropriate method.

NVIDIA Jetson AGX Orin stands out as the fastest SBC for all models when the hardware platforms of interest are compared. To be more specific, with GPU support, it is almost 1.7 times faster than a PC with an Intel i7-7600U CPU while using YOLOv5s and 2.6 times faster while using YOLOv5x. As expected, Raspberry Pi4 is the slowest SBC due to its less powerful CPU. These durations imply that complete planogram compliance control in a store equipped with 100 ESP-EYE modules can take approximately 2.4, 0.5, and 0.3 h with YOLOv5s on a single Raspberry Pi4, NVIDIA Jetson Orin Nano, and AGX Orin, respectively. The same operations can take around 3, 0.6, and 0.3 h with YOLOv5x on the Raspberry Pi4, NVIDIA Jetson Orin Nano, and AGX Orin, respectively. The time taken for the same operations on a PC with Intel i7-7600U CPU is approximately 0.5 and 0.8 h with YOLOv5s and YOLOv5x, respectively. Therefore, we can conclude that the NVIDIA Jetson AGX Orin is the most efficient in terms of processing time, followed by the NVIDIA Jetson Orin Nano, PC, and Raspberry Pi4.

We should also consider the cost of the mentioned SBCs and PCs in comparison. The price for the NVIDIA Jetson AGX Orin and Nano boards are USD 1999 and USD 499, respectively. The price for the Raspberry Pi4 board is USD 75. The average cost of a PC with Intel i7-7600U CPU which is suitable for these tasks is approximately USD 1500. Based on the price of the SBCs, PCs, and timing values, the same tasks using YOLOv5x could be completed at a cost of USD 1999 with a single NVIDIA Jetson AGX Orin SBC, USD 998 with two NVIDIA Jetson Orin Nano SBCs, USD 750 with ten Raspberry Pi 4 SBCs, or USD 4500 with three PCs. Therefore, we can conclude that the NVIDIA Jetson Orin Nano offers a balanced trade-off between cost and performance, making it a cost-effective solution for planogram compliance control. In addition, the Raspberry Pi4 presents the most economical solution despite being the slowest in terms of processing time.

7 Conclusions

In this study, we proposed an embedded planogram compliance control system with all its components. The methodology used in the system is the improved version of our previous work. To be more precise, the improvement has been done by adding YOLO or object detection. This way, our system precisely detects and aligns objects on shelves which is crucial for planogram compliance control accuracy. Our proposed embedded system architecture is designed for effective operation within the constraints of power consumption, cost, and physical space in retail environments. Therefore, we benefit from low-cost ESP-EYE modules for image capture and more powerful single-board computers like Raspberry Pi 4 or NVIDIA Jetson for implementation. We also applied solar and RF energy-harvesting techniques to extend the battery life of our system while maintaining its compactness. This aspect is crucial for seamless integration into retail environments. Our scalability analysis, involving various hardware configurations, demonstrates the flexibility of the system in adapting to diverse performance requirements and budget constraints. Our experimental validation conducted using public datasets showcases the strengths of our approach in comparison to existing methods. The results affirm the viability of our system in smart retail applications, highlighting its scalability, efficiency, and cost-effectiveness. The next step for us is extending our method to employ machine learning and AI algorithms for better planogram alignment and matching. Also, we can train lightweight models to run on low-power embedded systems. Hence, it can be used in stand-alone form in retail sector.

Data availability

No datasets were generated or analyzed during the current study.

References

Shapiro, M.: Executing the best planogram. Technical report, Professional Candy Buyer, Norwalk, CT, USA (2009)

Planorama: Image recognition for Retail Execution and Merchandising. https://planorama.com/

Shelfie. https://shelfieretail.com/

Simbe Robotics. https://www.simberobotics.com/

FellowAI: Autonomous Navigation and Mapping. https://www.fellowai.com/

Badger Technologies: Retail Automation. https://www.badger-technologies.com/

Shelf Cameras - Focal Systems. https://focal.systems/topstock-backroom-cameras-1

Pricer ShelfVision. https://www.pricer.com/products/platform/shelf-vision

Improve On-Shelf Availability with Captana. https://www.ses-imagotag.com/products/captana/

Yücel, M.E., Ünsalan, C.: Planogram compliance control via object detection, sequence alignment, and focused iterative search. Multimedia Tools and Applications (2023)

Melek, C.G., Battini Sonmez, E., Ayral, H., Varli, S.: Development of a hybrid method for multi-stage end-to-end recognition of grocery products in shelf images. Electronics 12(17) (2023) https://doi.org/10.3390/electronics12173640

ESP-EYE. https://www.espressif.com/en/products/devkits/esp-eye/overview

Yücel, M.E., Ünsalan, C.: Shelf control in retail stores via ultra-low and low power microcontrollers. Journal of Real-Time Image Processing 19 (2022)

Express. https://expressjs.com/

Lowe, D.: Distinctive image features from scale-invariant keypoints. International Journal of Computer Vision 60(2), 91–110 (2004)

Redmon, J., Divvala, S., Girshick, R., Farhadi, A.: You only look once: Unified, real-time object detection. arXiv preprint arXiv:1506.02640 (2015)

Jocher, G.: ultralytics/yolov5: v7.0 - YOLOv5 SOTA Realtime Instance Segmentation. Zenodo (2022). https://doi.org/10.5281/zenodo.7347926

Goldman, E., Herzig, R., Eisenschtat, A., Goldberger, J., Hassner, T.: Precise detection in densely packed scenes. In: IEEE/CVF Conference on Computer Vision and Pattern Recognition, pp. 5222–5231 (2019)

Needleman, S.B., Wunsch, C.D.: A general method applicable to the search for similarities in the amino acid sequence of two proteins. Journal of Molecular Biology 48(3), 443–453 (1970)

Riva, C., Zaim, A.: A comparative study on energy harvesting battery-free lorawan sensor networks. Electrica 23, 40–47 (2022)

Choi, H.-S.: Application for outdoor dust monitoring using rf wireless power transmission, pp. 196–199 (2018)

Tripathi, A., Nasipuri, A.: Information based smart rf energy harvesting in wireless sensor networks, pp. 197–198 (2019)

Warnakulasuriya, D.A., Mikhaylov, K., Alcaraz López, O.: Wireless power transfer for bluetooth low energy based iot device: an empirical study of energy performance, pp. 1–7 (2022)

Kadir, E., Hu, A., Biglari-Abhari, M., Aw, K.: Indoor wifi energy harvester with multiple antenna for low-power wireless applications. (2014)

Tepper, J., Baltaci, A., Schleicher, B., Drexler, A., Duhovnikov, S., Ozger, M., Tavana, M., Cavdar, C., Schupke, D.: Evaluation of rf wireless power transfer for low-power aircraft sensors. In: 2020 AIAA/IEEE 39th Digital Avionics Systems Conference (DASC), pp. 1–6 (2020)

Estrada-López, J.J., Abuellil, A., Zeng, Z., Sánchez-Sinencio, E.: Multiple input energy harvesting systems for autonomous iot end-nodes. Journal of Low Power Electronics and Applications 8(1) (2018)

SPV1050. https://www.st.com/resource/en/datasheet/spv1050.pdf

De Rossi, F., Pontecorvo, T., Brown, T.: Characterization of photovoltaic devices for indoor light harvesting and customization of flexible dye solar cells to deliver superior efficiency under artificial lighting. Applied Energy 156, 413–422 (2015)

3gpp ts 05.05 v8.20.0 - 3rd generation partnership project, technical specification group GSM/EDGE, radio access network, radio transmission and reception. Standard, ETSI (2005-11)

George, M., Floerkemeier, C.: Recognizing products: A per-exemplar multi-label image classification approach. In: European Conference on Computer Vision, pp. 440–455 (2014)

Tonioni, A., Di Stefano, L.: Product recognition in store shelves as a sub-graph isomorphism problem. In: International Conference on Image Analysis and Processing, pp. 682–693 (2017)

Liu, S., Li, W., Davis, S., Ritz, C., Tian, H.: Planogram compliance checking based on detection of recurring patterns. IEEE MultiMedia 23(2), 54–63 (2016)

Acknowledgements

This work is supported by TUBITAK under project no 5190042.

Author information

Authors and Affiliations

Corresponding author

Ethics declarations

Conflict of interest

The authors declare no conflict of interest.

Additional information

Publisher's Note

Springer Nature remains neutral with regard to jurisdictional claims in published maps and institutional affiliations.

Rights and permissions

Springer Nature or its licensor (e.g. a society or other partner) holds exclusive rights to this article under a publishing agreement with the author(s) or other rightsholder(s); author self-archiving of the accepted manuscript version of this article is solely governed by the terms of such publishing agreement and applicable law.

About this article

Cite this article

Yücel, M.E., Topaloğlu, S. & Ünsalan, C. Embedded planogram compliance control system. J Real-Time Image Proc 21, 145 (2024). https://doi.org/10.1007/s11554-024-01525-6

Received:

Accepted:

Published:

DOI: https://doi.org/10.1007/s11554-024-01525-6