Abstract

Super-resolution is generally defined as a process to obtain high-resolution images form inputs of low-resolution observations, which has attracted quantity of attention from researchers of image-processing community. In this paper, we aim to analyze, compare, and contrast technical problems, methods, and the performance of super-resolution research, especially real-time super-resolution methods based on deep learning structures. Specifically, we first summarize fundamental problems, perform algorithm categorization, and analyze possible application scenarios that should be considered. Since increasing attention has been drawn in utilizing convolutional neural networks (CNN) or generative adversarial networks (GAN) to predict high-frequency details lost in low- resolution images, we provide a general overview on background technologies and pay special attention to super-resolution methods built on deep learning architectures for real-time super-resolution, which not only produce desirable reconstruction results, but also enlarge possible application scenarios of super resolution to systems like cell phones, drones, and embedding systems. Afterwards, benchmark datasets with descriptions are enumerated, and performance of most representative super-resolution approaches is provided to offer a fair and comparative view on performance of current approaches. Finally, we conclude the paper and suggest ways to improve usage of deep learning methods on real-time image super-resolution.

Similar content being viewed by others

Explore related subjects

Discover the latest articles, news and stories from top researchers in related subjects.Avoid common mistakes on your manuscript.

1 Introduction

In image processing area, image generally describes more visual details with higher resolution. To better understand semantic meanings of real-world images, it is an essential task for researchers to provide high-resolution (HR) images with sharp and clear object boundary or rich visual descriptions. However, obtaining HR images by possible hardware-based approaches is difficult and expensive [74]. For example, one of the possible methods, i.e., decreasing the pixel size would decrease the amount of light achieved by sensors, results in shot noise and sensitivity to diffraction effects. Another possible way, i.e., increasing sensor size, slows down the charge transfer rate and greatly increases the cost of image systems. Therefore, it is more favorable to apply algorithmic-based methods to pursue HR images from low-resolution (LR) resource images, rather than utilizing hardware-based solutions.

Algorithmic-based methods are usually named as super-resolution (SR) methods, which intend to reconstruct a HR image from input of LR observations captured in the same scene. It has been proved that resolution raising via SR methods can largely increase the amount of available information and thus leads to an accurate and robust vision-based machine learning system [70, 75]. Therefore, SR methods have gained great success in multiple domains, such as aerial imaging [138, 139], medical image processing [47, 118], automated mosaicking [13, 54], compressed image/video enhancement [11, 62], action recognition [73, 89], pose estimation [43, 44], face [18, 132], iris [4, 71], fingerprint [61, 101] and gait recognition [3, 140], scene text image improvement and reading [121, 122], and so on.

For the past two decades, numerous methods have been proposed to perform SR task. One of the most classical taxonomy ways to category SR methods relies on the number of LR images involved: single image or multiple images based SR methods [74]. Essentially, there exist fundamental differences on thoughts to solve SR problems in terms of number of LR images. Specifically, single-image based SR methods tend to hallucinate missing image details with relationship learned from training datasets. Multiple-image based SR methods generally utilize global/local geometric or photometric relation between multiple LR images to reconstruct HR images.

We must address that several surveys [39, 77, 107, 135] have been conducted on single-image, multi-based based SR or both, which is the basis to build up our paper. For example, Nasrollahi and Moeslund [74] provide a comprehensive overview of most of published and related papers up to 2012, which has offered plenty of reading resources to learn fundamentals and developing history for beginners in this field. Yue et al. [135] reviews SR methods and applications based on machine learning techniques up to 2016. Most recently, Hayat [41] focuses on the deep learning-based progress of three aspects in multimedia, i.e., image, video and multi-dimensions. Nguyen et al. [75] comprehensively survey the super-resolution approaches proposed for a special application domain, i.e., biometrics including fingerprint, iris, gait, face, etc. Built on but differing from these survey papers, our paper focuses on the review of deep learning-based SR methods with real-time response, which could enlarge possible application scenarios of SR methods to embedding systems, cell phone, drones and so on by effective performance and low computation cost.

In this paper, we attempt to establish a baseline for future work by providing a comprehensive literature survey of deep learning methods in real-time SR research. Incremental advances or thoughts to the state of the art thus could be made or inspired on our provided baseline. Specifically, we first summarize the fundamental problems, categorize existing methods and review the applications to the benefit of beginners. We then briefly introduce significant progress in deep learning methods. By involving deep learning structures to solve problem of SR, quantity of deep learning-based SR methods are proposed. We highlight deep learning methods for real-time SR by analyzing, comparing, and concluding their core ideas. Afterwards, performance of the most representative deep learning approaches on benchmark datasets is compared and analyzed. Finally, we conclude to bring out open questions and future trends on improving deep learning methods for real-time SR performance.

The rest of the paper is organized as follows: Sect. 2 presents background concepts on super-resolution and deep learning methods for the benefit of beginners. Section 3 highlights and analyzes deep learning-based methods for real-time SR. Benchmark datasets, evaluation methods and performance comparisons of quantity of methods are presented in Sect. 4. Finally, the paper is summarized with discussions about remaining problems and future trends in Sect. 5.

2 Super-resolution formation

To offer an overall view on deep-learning based SR methods, it is useful to provide background information about the underlying problems. In this section, we first introduce mathematic definition of SR problem by reviewing the relevant imaging model. Then, we categorize the existing methods, which helps readers not only know history of SR, but also comprehend deep learning-based SR methods by comparing them with other types of SR methods. Afterwards, we review SR applications in different domains and focus on applications requesting real-time performance, which should be considered during the design of real-time SR methods. Finally, we explain fundamental thoughts of CNN and GAN structures for readers’ convenience, since this paper mainly focuses on real-time methods for SR with CNN and GAN structures.

2.1 Definition of super-resolution problem

Although SR methods give rise to many applications, the fundamental goal of SR is to collect missing detail information by reconstructing super-resolved images from LR observations. In the literature, SR can be regarded as a heavily ill-posed problem, since the informative information represented in LR images is often insufficient to complete the task of reconstruction. Therefore, SR methods need to complete three main tasks: up-sampling of LR images to increase image resolution, removing artifacts including blur and noise during SR process, and registration or fusion of multiple input LR images for a better representation of target HR image.

Based on these three tasks, we first describe the most common imaging model [51, 95] to generate LR images in the simplest case, where this process can be modeled linearly as

where \(L_i\) is an observed LR image, R is the original HR scene, q is a decimation factor or sub-sampling parameter which is assumed to be equal for both x and y directions, x and y are the coordinates of the HR image, and m and n of the LR images. The imaging model in Eq. 1 states that an LR observed image has been obtained by averaging the HR intensities over a neighborhood of \(q^2\) pixels.

This model becomes more realistic when the other parameters involved in the imaging process are taken into account, which is shown in Fig. 1, including stepwise representations for warping, down-sampling, noise adding, and blurring. It is noted that SR methods could be generally considered as an inverse processing workflow to generate HR images based on LR images. Supposing the real-world image R is captured by n different located cameras to form multi-view images, the generated LR \(L_i\) is constructed by the following formulas:

where x, y and \(x', y'\) refer to coordinates of one pixel in real-world image R and generated LR images \(L_i,L_j\), respectively, \(\omega _i()\) is a warping function determined by locations and rotations of multiple cameras, \(\delta _i()\) is a own-sampling function, \(\mu _i()\) is a blurring function, \(\eta _i()\) is an additive noise, \(\tau _{i,j}()\) is a coordinate transformation function including horizontal shift, vertical shift and rotation, and \(\rho _{i,j}()\) is a pixel intensity transformation function. Specifically, if the LR image of \(L_i\) is displaced from the HR scene of R by a translational vector as (a, b) and a rotational angle of \(\theta\), the warping function in homogeneous coordinates could be represented as

In matrix form, Eq. 2 can be written as follows:

in which A stands for the above-mentioned degradation factors. This imaging model has been used in many SR works. It is noted that the first line in Eq. 2 refers to warping, down-sampling, noise adding, and blurring on a single HR image and corresponds to inverse operations of task 1 and 2; meanwhile, the second line refers to multi-view transformation on two different LR image and corresponds to inverse operation of task 3. Moreover, we often define process of image registration [48, 80, 102] including functions \(\tau _{i,j}()\) and \(\rho _{i,j}()\) to handle multi-view images during SR. Essentially, the process is to geometrically align multiple images of the same scene onto a common reference plain, where images can be captured at different times and from different views, or by multiple sensors.

Illumination of the most common used imaging model, where the forward pipeline represents the process of generating multiple LR images \(\{L_i,\ldots ,L_n\}\) from a real-world image R by warping (\(\omega\)), down-sampling (\(\delta\)), blurring (\(\mu\)), and adding noise (\(\eta\)) , and the backward pipeline refers to a basic method of reverse super-resolution to reconstruct the real-world or HR image from one input LR image or multiple LR images by de-blurring, up-sampling, aligning, and image registration (\(\tau\) and \(\rho\))

2.2 Categorization of super-resolution methods

Following the description of imaging model and basic categorization in [74], we further categorize the existing SR methods into single image SR (SISR) or multiple images SR (MISR) as shown in Fig. 2. In the following discussions, we would follow Fig. 2 to state the categorization of current SR methods.

Being a highly ill-posed problem without sufficient information about the original image sets, early SISR methods tend to utilize analytical interpolation to reconstruct HR images. Several famous interpolation-based SISR methods can be listed as linear, bicubic, cubic splines interpolation methods, Lanczos up-sampling [29], New Edge Directed Interpolation (NEDI) [63] and so on. These methods are very simple and effective ways in smooth parts with real-time performance. However, simple rule in interpolation brings in overly smooth and blurring details, which harm the visual effect of image discontinuities like edges, boundaries, and corners. Hence more sophisticated insight to recover image details via reasonable SR ways is required.

Besides interpolation-based methods, researchers have proposed another two categories of SISR methods, i.e., reconstruction-based and learning-based methods. Reconstruction-based methods suppose there exist certain priors or constraints in the form of distribution, energy function or score function between HR and the original LR images. Therefore, researchers try a variety of methods to perform SR tasks by establishing reconstruction priors like sharpening of edge details [21], regularization [5] or de-convolution [97].

Learning-based methods try to restore missing high-frequency image details by establishing implicit relationship between LR patches and their corresponding HR patches via machine learning models. This category of methods has achieved more and more attention from researchers due to its promising and visually desirable reconstruction results. It is a general idea to enhance SR quality by learning relationship from large quantity of training data. However, applying over data might introduce spurious high frequencies, resulting in noise and blur details. Therefore, it is important to keep balance between size of training data and reconstruction visual effects.

With the development of machine learning technologies, researchers have tried quantity of learning models to solve SR problem. We further classify learning-based methods into five groups based on differences of their core ideas: neighbor embedding methods [7, 15], sparse coding methods [14, 28], self-exemplar methods [34, 46], locally linear regression methods [38, 129], and deep learning methods [26, 52, 67, 104]. In this paper, we focus on utilizing deep learning-based methods to solve SR problem, due to their significant HR reconstruction results. Deep learning-based methods will be comprehensively discussed in Sect. 3. In the following discussions, we will briefly introduce other four groups of learning-based methods for comparisons with deep learning methods.

Neighbor embedding (NE) methods consider that similar local geometries property is shared between LR patches and their corresponding HR patches. Due to the similar local geometry property of LR and HR feature space, patches in the HR feature domain can be computed with a form of a weighted average of local neighbors. After construction weight scheme, the whole SR computation process can share the same weights within LR feature domain. Based on this thought, Chang et al. [15] propose a SR method by applying a typical kind of manifold learning method, i.e., locally linear embedding (LLE) [87] on weight learning. Their proposed method assumes each sample and its neighbors lie on or near a locally linear patch of the manifold, the idea of which has greatly influenced the subsequent coding-based methods in early times.

Sparse coding methods consider image patches as a sparse linear combination of elements, which could be selected from a pre-constructed and sparse enough dictionary. By exploiting reasonable and sparse enough representation for each patch of low-resolution inputs, the process of generating high-resolution outputs can be represented as coefficients computing. For example, Yang et al. [130] train a joint dictionary to find a highly sparse and over-complete coefficient matrix, which directly and clearly describes relations between LR image patches and corresponding HR image patches.

However, these sparse coding approaches are generally slow in computing speed, since sparse encoding largely increases memory usage and more elements are thus required to be processed.

Natural images generally have self-similarity property, which inspires researchers to utilize internal similar properties among LR patches to help effective and qualified SR. Based on this core idea of self-exemplar methods, Glasner et al. [34] propose a scale space pyramid, which can be trained with internal class data, i.e., local pathes. Furthermore, self similarity information represented by the trained pyramid can help explicitly describe the mapping between LR and HR pairs. However, high computation cost, especially in building scale space, has prevented the further usage of self-exemplar methods.

The thought of locally linear regression methods sources from Timofte et al. [109], which focuses on solving computational speed shortcomings of sparse coding methods by replacing complex dictionary with many and light-scale ones. Following this trend, researchers try quantity of supervised machine learning techniques to replace heavy sparse coding dictionary, which not only directly learns mapping relationship embedded between LR image domain to HR image domain, but also helps keep low values in computation cost. The most popular supervised machine learning techniques to learn mapping relationship can be listed as anchored neighborhood regression [15, 109, 110], random forest [93, 94] , manifold embedding [79] and so on.

For multiple-image SR, three main types of multi-frame methods can be listed: interpolation-based methods [8, 78], frequency-domain methods [23, 49, 145] and regularization-based methods [6, 106]. For a detailed description on category of MISR, we highly suggest the readers to read [19, 74]. Unlike interpolation-based methods for SISR, MISR consists of registration, interpolation, and deblurring, where extra image regression step reconstructs both local and global geometry based on multiple input LR images. Frequency-domain methods generally utilize DFT, DCT, DWT or other frequency transform methods to discover high-frequency details of HR images. Regularization-based methods are specially designed to perform SR task with conditions like a small number of LR images or heavy blur operators. With either deterministic or stochastic regularization strategy, these methods try to form and incorporate prior knowledge of all the unknown HR images.

2.3 Super-resolution applications

Over the past two decades, there have been numerous SR-related applications, which are the most basic concerns and requirements to improve SR methods. We thus offer some successful application examples of SR to analyze user needs for SR methods.

Medical diagnosis Doctors need various medical images to comprehend healthy messages sent by the inside human body structure. Unfortunately, resolution limitations of medical images do great harm to such precise diagnosis. By reconstructing high-resolution magnetic resonance images (MRI) [86], positron emission tomography (PET) [37] or other key medical images [25, 114], SR methods help maintain geometry structure captured by original 3-D imaging system. For instance, to collect similar images to establish a database [113], researchers apply example-based SR for single frames to create clear enough samples. Specifically, the adopted training database was established with a set of five standard images, including computed tomography (CT) and MRI images from various parts of the human body. Since medical imaging systems are originally designed to acquire multi-view and multiple medical images, the main challenge of SR methods for medical diagnosis lies in the precise reconstruction with less errors and higher robustness.

Text image enhancement and reading Text detection and recognition has been applied in many real-life applications such as iTown, Rosetta and many other smart city developments [72, 131]. These applications encounter problems when performing natural scene semantics understanding or analysis, due to the low resolutions of images captured by cell phone or CCTV cameras, large variations in text rotations or illumination embedded in complex backgrounds with buildings, trees, etc. It is needed to increase the image quality and processing speed of captured text images and by appropriate real-time SR methods, in order to fulfil requests of robust text reading and implementations on embedding systems, cell phone or CCTV cameras [81, 83].

Biometrics Biometrics is defined as a reliable method to automatically identify individual persons based on their behavioral and physiological characteristics, i.e., faces [115, 151], fingerprints [134], and iris images [30]. Even though biometrics system has achieved great success [105], it still faces several challenges. Among these challenges, short distance of image acquisition has become the major one, since it prevents further wide usage of such systems. With proper SR methods, image details like shapes and structural texture can be clearly enhanced. Meanwhile, SR could help maintain the global structure of images. All these advantages can greatly improve the recognition ability in biometrics-related applications.

Remote sensing In the past decades, researchers have applied SR techniques to improve quality of remote sensing images. After years of efforts, there exist successful and applicable examples in remote sensing area [138]. For example, Skybox Imaging Plan utilizes SR techniques to help provide real-time remote sensing images under a sub-meter resolution, which shows that SR methods could help improve outputs of remote sensing applications. The main challenge for remote sensing image SR lies in two aspects: (1) how to deal with scene variations in case of temporal differences, and (2) how to modify the existing and successful SR methods to handle large amounts of remote sensing images captured by satellites every day.

Based on former discussions, we can observe the variety of application scenarios of SR methods. Among these applications, medical diagnosis requires to process with less errors and higher robustness, while remote sensing requires to overcome temporal differences and handle massive amounts of input. Besides, both categories of applications have common property that users, i.e., doctors and scientists, could buy computation resource abundant devices to handle SR for edge computing or big data services [35, 127]. In other words, they can easily access better equipments for more satisfying SR results, meanwhile keeping low computing time. For biometrics and text image reading applications, they mainly run on embedding devices and have high request for real-time responses to improve user experience. Therefore, it is a key problem for such applications to keep balance between SR quality and computation cost. In this paper, we would like to emphasize applications like biometrics and text image reading, thus reviewing SR methods for real-time computing and fulfilment of their emergency requests.

2.4 Deep learning background

The most popular structures related to SR can be roughly categorized into the two groups: convolutional neural network (CNN) and generative adversarial networks (GAN). These deep structures have high capability to represent information abundant and distinctive features by self-learning strategies. For comparison, traditional learning structures require humans to observe and design manual features to perform classification tasks. In this subsection, we focus on explanation of fundamental thoughts of CNN and GAN structures.

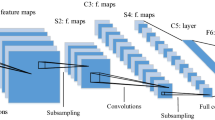

Convolutional neural network Inspired by the promising classification results achieved by a typical CNN, i.e., AlexNet [55], quantity of trials on structures, learning strategies and applications have been made, some of which can be listed as: VGG [100], Googlenet [103], ResNet [42], R-CNN [33] and so on. Several common types of layers are involved to construct CNN structure: convolutional layers, pooling layers, and fully connected layers.

Convolutional layers are designed to gather information of neighboring pixels. In fact, each pixel is closely associated with neighboring pixels and nearly irrelevant with pixels in long range, which is named as local receptive field. We show the comparison between local connection and full connection adopted by fully connected layer in Fig. 3. Essentially, a convolution kernel can only extract one specific feature in a local sense. Researchers thus design multiple convolution kernels to extract a variety of features from input images. With different feature maps produced by multiple convolution kernels, convolutional layers lead to better understanding of image content.

Unlike convolutional layers, pooling layers are defined without parameters. Pooling layers utilize down-sampling operation to extract features from feature map, which reduce data size without modifications on data characteristics. The resulting abstract feature not only owns generalization ability from feature maps, but also has a certain degree of variety for translation, rotation, and scaling invariance. Pooling layers thus help improve robustness and generalization performance of the whole network.

Fully connected layers locate at the end of CNN, where each neuron is connected to all neurons in the upper layer. With such processing, features locally extracted are globally involved to output the final result. Above all, CNN structure combines abilities provided by convolutional, pooling and fully connected layers, which help approximate any continuous function and ensure to perform difficult classification and recognition tasks successfully.

Generative adversarial network As one of the most significant improvements on the research of deep generative models, GAN [36] provides a novel way to learn depth representation of features without large labeled training data. With this power for distribution modeling, GAN is extremely suitable to for unsupervised tasks [69, 148] including image generation [24], image editing [150], and representation learning [84].

The key idea of GAN stems from a two-player game preformed by a generator and a discriminator, where we show the basic structure of a typical GAN for generating hand-written digital images in Fig. 4. Specifically, the discriminator is responsible to judge whether an input image generated by the generator appears natural or could be found with artifacts; meanwhile the generator is to create images with the goal of making discriminator believe the created image is a natural image without any artifacts. After rounds of training, Nash Equilibrium will be achieved, where the trained generator would have the ability to understand inherent and intern representation of real images, thus generating real enough images.

GAN is still developing with great leading steps on structures, training algorithms and so on. With the development of GAN structure, more related applications have been applied by researchers. However, training GAN for data augmentation is challenging, since the training process can be easily trapped into the mode collapsing problem. Essentially, mode collapsing problem is defined as that where the generator only concentrates on producing samples lying on a few modes, instead of the whole data space [16, 92]. It is noted this problem exists in SR applications with GAN model as well, which will be further explained in Sect. 3.2.

3 Deep learning methods for real-time super-resolution

Despite there exist quantity of review papers on deep learning methods for SR [39, 77, 107, 135], there is a lack of reviewing of methods for real-time SR to the benefit of researchers. In this section, we attempt to survey deep learning literature, including CNN, GAN and other deep learning methods, with the view of real-time super-resolution.

In the following discussion of each subsection, we first introduce several typical methods, which have achieved significant HR reconstruction results but failed to obtain real-time performance. Then, we highlight fast and real-time deep learning methods, where we would carefully explain their core ideas, innovations, algorithm steps and performances. Finally, we conclude current state and discuss future developing trend. Furthermore, it is noted that we fuse SISR and MISR methods in this section, since many deep learning- based SR methods have ability to perform SISR and MISR.

3.1 CNN-based methods in real-time image super-resolution

3.1.1 CNN-based methods for SR

CNN-based SR methods are quite large in amount, due to their impressive HR image reconstruction results. The first work to solve SISR problem by CNN structure is proposed by Dong et al. [26], which constructs a three-layer CNN named as Super-Resolution Convolutional Neural Network (SRCNN) to learn mapping between LR patches and corresponding HR patches. Utilizing a bicubic interpolation for pre-processing, SRCNN optimizes and learns nonlinear mapping in manifold space based on information abundant feature maps produced by convolutional layers. With the high distinguishing power of deep structure, SRCNN has achieved promising reconstruction results outperforming majority of former methods, such as Self-Ex [46], A+ [110] and Kernel-based learning. Although SRCNN claims efficiency with a lightweight structure, its performance is still far from real-time response due to its time-consuming pre-processing step, i.e., bicubic interpolation.

Essentially, SRCNN is an important work to offer inspirations on utilizing deep structure for SR purposes. After its publication, SRCNN appeared in many other works as a baseline method for comparisons or a basic structure to modify for new learners. More important, its structure with only convolutional layers has greatly affected later CNN-based SR methods, which successfully avoid down-sampling effects brought by pulling or subsampling layers. However, the additional convolutional layers largely increase size of parameters, resulting in more possibility to be overfit with training dataset and be harder to achieve real-time responds. How to keep a balance between desirable reconstruction performance and real-time computing speed thus becomes a major challenge in utilizing deep structures for SR.

With the remarkable success to construct very deep structures achieved by Res-Net, its core idea of residual learning is adopted by researchers to perform SR tasks. Residual learning not only offers capability to construct larger number of layers for better HR reconstruction results, but also reduce difficulty in training process with fast convergence and a small number of epoches. For example, Kim et al. [52] first try residual learning for SR with a novel Very Deep Convolutional Networks (VDSR), which imitates VGG-net structure [100] to build 20 convolutional layers as a a very deep network. Following the trend of applying residual learning on SR, Tai et al. [104] propose Deeply Recursive Residual Network (DRRN), which first utilizes global residual learning to identify branch during inference and then proposes new concept of local residual learning to optimize local residual branch.

Recently, the main focus of CNN-based SR research is to utilize proper technologies for either improved HR reconstruction results or fast computing speed. For example, Deep Back-Projection Networks (DBPN) [40] propose iterative up- and down- sampling layers to form an error feedback scheme, which help transmit projection errors among different layers. With such scheme, they can represent the process of image degradation and super-resolution by simply connecting up- and down-sampling layers, thus improving HR reconstruction results with large scaling factors.

Network structure of Shi et al. [99] for real-time SR, where they propose sub-pixel convolutional layers to perform up-sampling operations

Related to the topic of real-time SR, Lim et al. [65] develop an enhanced deep super-resolution network (EDSR) with performance exceeding current state-of-the-art SISR methods. Their proposed method performs optimization by removing unnecessary modules in conventional residual networks and expands model depth with a stable training procedure. Inspired by the development of attention models, Zhang et al. [142] adopt an existing channel attention mechanism to construct very deep residual channel attention networks (RCAN). Their proposed residual in residual (RIR) structure is specially designed to bypass abundant low-frequency information for learning of high-frequency information. Hu et al. [45] propose a channel-wise and spatial feature modulation (CSFM) network, which connects feature-modulation memory (FMM) modules with stack connections for transforming low-resolution features to high informative features. Considering that most of CNN-based methods have not fully exploited all the features of the original low-resolution image, Shamsolmoali et al. [96] proposed an effective model based on dilated dense network operations to accelerate deep networks for image SR, which supports the exponential growth of the receptive field parallel by increasing the filter size.

3.1.2 CNN-based methods for real-time SR

CNN-based SR methods have demonstrated remarkable performance in quality of reconstructed HR images, compared with the previous non deep learning based models. However, high computation cost and large computing time

prevent its further practical usage, especially in phones or wearable devices that demand small computing burden and real-time performance. There are thus many trials to accelerate network for real-time performance, in order to enlarge possible application scenarios of CNN-based SR methods.

One of the most famous successful and inspiring trials is advised and performed by Shi et al. [99], who find that utilizing a single filter, usually bicubic interpolation, before reconstruction to up-scale input LR images is sub-optimal and time-consuming. They thus prefer to avoid such pre-interpolation operation by utilizing an end-to-end and unified CNN structure named as Efficient Sub-pixel CNN (ESPCN) for SR tasks, which tries to directly learn a up-scaling filter, i.e., sub-pixel convolution layer, and integrates it into the structure of CNN network. We show the structure of ESPCN in Fig. 5, where we can notice feature maps to fill up image detail information is extracted in the LR space, rather than performing in HR space by most of the SR methods. Afterwards, extracted feature map in different layers is fed into sub-pixel convolution layer for further processing.

As far as we know, ESPCN is the first CNN-based SR methods with real-time performance, which is reported to perform real-time SR tasks on 1080p videos using a K2 GPU device. Besides, authors report reconstruction results achieved by ESPCN is better than SRCNN by +0.15dB on Set14 dataset images.

Network structure of FSRCNN [27], which successfully accelerates SRCNN by several modifications

Nearly the same time with ESPCN [99], Dong et al. [27] successfully accelerate SRCNN by constructing a compact hourglass-shape CNN structure, named as FSRCNN. As shown in Fig. 6, their structure modification on accelerating SRCNN lies in three aspects: (1) they replace bicubic interpolation operation of SRCNN with a de-convolution layer located at the end of CNN network, thus avoiding bicubic pre-interpolation with visible reconstruction artifacts and unnecessary computational cost; (2) they train four convolution layers in a joint optimization manner to complete three tasks during feature extraction, i.e., shrinking, mapping, and expanding. Essentially, structure design of placing mapping layer after shrinking layer will greatly reduce feature dimensions, leading to smaller computation cost; (3) they utilize smaller filter sizes and more mapping layers, in order to achieve desirable reconstruction results and less computing burden at the same time.

FSRCNN is reported to achieve real-time performance (> 24 fps) on test images in all benchmark datasets, which is almost a 40-time improvement than SRCNN in computing speed. Compared with ESPCN [99] which achieves real-time performance on GPU, FSRCNN [27] could process LR images in real-time on a CPU-based platform, which largely expands its possible applicable scenarios.

Network structure of Lai et al. [56], where a careful design with pyramid structure and two working branches is adopted to progressively reconstruct sub-band residuals of HR images

Following the idea to replace time-consuming up-sampling with partly design of neural network in ESPCN [99] and FSRCNN [27], Yamanaka et al. [128] integrate network in network (NIN) structure [66], i.e., Parallelized 1 \(\times\) 1 CNNs, into the whole network as a post processing step for efficient up-sampling operations. Specifically, they first involve deep CNN layers and skip connection layers to extract feature maps by gathering information from both local and global areas. Afterwards, they utilize NIN layers to perform up-sampling operation for reconstruction of HR images. Authors report 10 times lower computation cost achieved by their proposed work than typical deep residual network for SR tasks, thus ensuring real-time performance. However, its simple structure design results in relatively worse reconstruction results, comparing with results achieved by FSRCNN.

To achieve real-time performance, ESPCN [99], FSRCNN [27] and Yamanaka et al. [128] replace bicubic up-sampling operation with sub-pixel convolution layers, de-convolution layers, and NIN layers, respectively. However, Lai et al. [56] argue that sub-pixel convolution, de-convolution or NIN layer adopts small size networks, which cannot guarantee to describe complicated mappings with their limited network representation capacity. They thus propose the Laplacian Pyramid Super-Resolution Network (LapSRN) to progressively reconstruct sub-band residuals for visually desirable HR images, where we show its structure in Fig. 7. During construction of each pyramid, feature extraction branch first predicts missing high-frequency residuals of HR images based on the input of coarse-resolution feature maps. Afterwards, image reconstruction branch adopts transposed convolutions to perform up-sampling operation, thus generating finer and coarser feature maps as input for next level. Furthermore, they design recursive layers as parameters sharing scheme across and within pyramid levels, which helps reduce the number of parameters in a large amount. With the careful design of pyramid structure, LapSRN claims to adaptively build model with different up-sampling scales, thus reducing computational complexity and achieving real-time computing speed on public testing datasets.

Network structure of Tong et al. [112], which represents that all levels of features are combined via skip connections as input to reconstruct HR images

Besides pyramid structure, another solution to improve small network without enough representation ability is constructing neural networks with skip connections, which not only help go deeper of neural networks by preventing gradient loss, but also relieve computation burden with unnecessary computing steps. Tong et al. [112] thus introduce dense skip connections in a very deep neural network for SR tasks, where we show its structure in Fig. 8. In each dense block, we can notice input low-level features and generated high-level features are combined in a reasonable way to boost reconstruction performance. To properly fuse low- and high-level features, dense block structure propagates feature maps generated by each layer into all subsequent layers and designs dense skip connection to allow for deeper structure. Since deep network generally leads to large computation cost, they further integrate de-convolution layers to reduce number of parameters for boosting speed of reconstruction process. By employing algorithm on a platform with a Titan X GPU, their proposed method could achieve an average running time of 36.8ms to reconstruct a single image from B100 dataset, thus guaranteeing real-time SR effects.

Unlike former CNN-based methods for real-time SR to utilize up-sampling operation and residual learning, Johnson et al. [50] tend to consider SR as an image transformation problem between input LR and output HR images. They thus model SR as a global optimization problem under a given objective function, which is formed as the sum of per-pixel loss between HR and ground-truth images and perceptual loss based on high-level features extracted from pre-trained neural networks. Such optimization problem could be solved by Gatys et al. [32] in real-time, thus achieving similar qualitative results but with three times faster running speed than SRCNN and other comparative methods. Essentially, their usage of perceptual loss functions to train feed-forward networks for SR problems and other image transformation tasks is novel and offers inspirations to many other similar works.

Network structure of Ahn et al. [2], where a, b represent plain ResNet and CARN structure, respectively. In the CARN model, each residual block is changed to a cascading block

Inspired by A+ [110] and ARN [1] which build mapping between input LR space and output HR space by learning proper local linear functions, Li et al. [64] construct convolutional anchored regression network (CARN) to learn representative mapping function for fast and accurate SR. Different from A+ and ARN, regression blocks inside CARN are built on the basis of automatically extracting feature map by convolutional filters, other than limited and hand-crafted features used by A+ and ARN. Besides, CARN transforms all key concepts during SR operation, such as feature extracting, anchor detection, and regressor construction, into convolution operations with different parameters, so that users can jointly optimize all steps in an end-to-end manner. Such end-to-end design relieves the burden of complicated step-wise optimization and thus decreases running time without lose of accuracy. CARN is reported to achieve 10 times lower computation cost than SRCNN, thus reaching real-time performance on most platforms.

Due to the requirement of heavy computation, deep learning methods cannot be easily applied to realworld applications. To address this issue, Ahn et al. [2] propose an accurate and lightweight deep network for image super-resolution. Specifically, they design an architecture that implements cascading connections starting from each intermediary layer to the others upon a residual network, where we show its special structure design in Fig. 9. Such connections are made on both the local and global levels, which allows for the efficient flow of information and gradient.

To explore feature correlations of intermediate layers rather than focus on wider or deeper architecture design, Dai et al. [22] explore to enhance the representational power of CNNs for more powerful feature expression and feature correlation learning. Specifically, they propose a second-order attention network (SAN) to adaptively rescale the channel-wise features by using second-order feature statistics for more discriminative representations. Furthermore, they present a non-locally enhanced residual group (NLRG) structure, which not only incorporates non-local operations to capture long-distance spatial contextual information, but also contains repeated local-source residual attention groups (LSRAG) to learn increasingly abstract feature representations. All these improvements in structure have been shown in Fig. 10.

To solve the problem of lack of realistic training data and information loss of the model input, Xu et al. [124] propose a new pipeline to generate realistic training data by simulating the imaging process of digital cameras. To further remedy the information loss of the input, they develop a dual convolutional neural network to exploit the originally captured radiance information in raw images. They gain favorable reconstruction results both quantitatively and qualitatively, and their proposed method is declared to enable super-resolution for real captured images.

Framework of second-order attention network (SAN) [22] and its sub-modules

After precise description on quantity of works about CNN-based methods for real-time SR, we can conclude their general structure. Specifically, in order to widen the receptive field, increasing network depth is one way adopted by quantity of methods, which is to construct network by a convolutional layer with filter size larger than a \(1\times 1\) or a pooling layer that reduces the dimension of intermediate representation. However, such designing may have a major drawback: a convolutional layer introduces more parameters and a pooling layer typically discards some pixel-wise information.

For the first issue of too many convolutional layers, we can see that each convolutional layer represents a new weight layer so that deep structure with quantity of convolutional layers bring disadvantages of too many parameters. This problem might lead to over-fitting concern, difficulty to achieve real-time performance, and huge size of trained model to store. Therefore, light structure of CNN without additional and time-consuming designs is required and pursued by researchers, which is the main trend of CNN-based methods for real-time SR.

Regarding image restoration problems such as super-resolution and denoising, image details are very important. Therefore, most deep-learning approaches for such problems do not use pooling or sub-sampling layers, so that important image details can be saved during process of SR tasks. For example, DRCN [53] repeatedly applies the same convolutional layer as many times as desired and does not apply pooling layers in their network architecture. The number of parameters does not increase while more recursions are performed, which offers a promising idea on network structure.

3.2 GAN and other deep learning-based super-resolution methods

Due to the unsupervised training property of GAN [90], GAN-base SR methods could use a large dataset of unlabeled images and work without any prior knowledge between inputting LR and HR image, which is essentially the main feature of GAN-based SR methods. Since GAN is originally designed to generate images, GAN-based SR methods could achieve super performance in generating photo-realistic SR images. However, we find no real-time methods are proposed for SR at current time. In fact, the most common use of GAN is to regard its generator part as a CNN network to perform low-to-high SR task. Without special design like statements in last subsection, it is hard for such GAN-based methods to achieve real-time performance. In our thought, researchers on GAN are still focusing on solving several most important problems of GAN structure, like mode collapsing and hard to train.

At last but not least, we introduce other deep learning methods for SR, including deep auto-encoder and deep reinforcement learning.

Architecture of super-resolution generative adversarial network [60]

Ledig et al. [60] find that most commonly used measurements to evaluate SR performance, such as MSE and PSNR, are designed with pixel-wise property. Since human perception often relies on evaluation in a global sense, some SR methods with high PSNR and MSE values would result in poor visual effects during SR process. Inspired by the significant property of GAN, they thus proposed super-resolution generative adversarial network (SRGAN) with a novel measurement, named as perceptual similarity. We show the structure of SRGAN in Fig. 11. Specifically, perceptual similarity is measured by a perceptual loss function, which serves as a sum form of an adversarial loss and a content loss. It is noted that adversarial loss ensures SRGAN could generate high-quality and photo-realistic SR images with help of a discriminator network, which is trained to classify the generated SR images are whether super-resolved images or original natural scene images.

Comparisons on SR quality, PSNR, and SSIM of different SR methods. It is noted that four images refer to HR images generated by bicubic interpolation, deep residual network optimized by MSE measurement, deep residual generative adversarial network designed with human perception loss [60], and original HR image, respectively

We show sampling images of SRGAN and other comparison samples in Fig. 12. From Fig. 12, we could notice that SRGAN, designed with perceptual loss function, could recover photo-realistic textures from low-resolution images sampled from public benchmarks. Meanwhile, SR methods designed with MSE-based measurement generate poorly visual HR images, even achieving high PSNR values at the same time. Therefore, we could conclude that SRGAN focuses on global contextual information to generate HR images, thus achieving lower pix-wise PSNR values than comparative methods as shown in Fig. 12. However, human perception is visual effect in a global sense; we thus observe photo-realistic textures from samples generated by SRGAN.

With the first and successful trial in SRGAN, Johnson et al. [50] further modify SRGAN on design of loss function, which could be improved as a sum form of pixel-wise loss, perceptual loss, and texture matching loss. In the context of combining GAN and CNN, Sajjadi et al. [91] propose EnhanceNe for automated texture synthesis, which utilizes feed-forward fully convolutional neural networks in an adversarial training setting. Their proposed network successfully create realistic textures, rather than optimizing for a pixel-accurate reproduction of ground truth images during training.

By leveraging the extension of the basic GAN framework [149], Yuan et al. [133] propose an unsupervised SR algorithm with a Cycle-in-Cycle network structure, which is inspired by the recent successful image-to-image translation applications. They further expand the algorithm to a modified version, i.e., MCinCGAN [143], which utilizes a multiple Cycle-in-Cycle network structure to deal with the more general case of SR tasks, using multiple generative adversarial networks (GAN) as the basis components. More precisely, their proposed first network cycle aims at mapping the noisy and blurry LR input to a noise-free LR space. On the basis of first network cycle, a new cycle with a well-trained \(\times 2\) network model is orderly introduced to super-resolve the intermediate output of the former cycle. In this way, the number of total cycles depends on the different up-sampling factors (\(\times 2\), \(\times 4\), \(\times 8\)), which is presented in Fig. 13. Finally, users could get the desired HR images with different scale factors by training all modules in an end-to-end manner .

The framework of the proposed MCinCGAN [143], where G1, G2, G3, G4, and G5 are generators and D1, D2, D3, and D4 are discriminators. It is noted that a–c show the frameworks for \(\times 2\), \(\times 4\) and \(\times 8\), respectively

Bulat et al. [10] propose a two-stage process involving the idea of using a GAN to learn how to perform image degradation at first and then learn image super-resolution with trained GAN, where we show its network structure in Fig. 14. Specifically, they train a High-to-Low GAN to learn degradation and down-sampling operations on HR images during the first stage. Once training process of High-to-Low GAN is finished, they utilize pairs of low- and high-resolution images computed by High-to-Low GAN as training samples for a new Low-to-High GAN. After training based on enough and variant training pairs generated by High-to-Low GAN, the resulting Low-to-High GAN could output desirable HR images The most interesting point for Bulat et al. [10] lies in the fact that their proposed method only requires unpaired image data for training so that annoying work of pairing low and high-resolution images can be avoided. By applying this two-stage process, the proposed unsupervised model effectively increases the quality of super-resolving real-world LR images and obtains large improvement over previous state-of-the-art works. Although Bulat et al. [10] can simulate more complex degradation, there is no guarantee that such simulated degradation can approximate the authentic degradation in practical scenarios which is usually very complicated. Therefore, Zhao et al. [146] improves it by exploring the relationship between reconstruction and degradation with bi-cycle structure, which jointly stabilizes the training of SR reconstruction and degradation networks. Most importantly, their degradation model is trained in an unsupervised way, i.e., without using paired images. since there are no pairs of LR-HR images in practice,

Architecture design of Bulat et al. [10]. It is noted that LR and HR images are not paired in the training dataset

Bulat and Tzimiropoulos [9] propose Super-FAN to complete two tasks simultaneously, i.e., improves resolution of face images and detects facial landmarks inside improved face images. Essentially, super-FAN constructs two subnetworks to first optimize loss function for constructing of a convinced heat map and then perform face alignment through heat map regression. By jointly training both sub-networks, they report desirable HR and detection results based on not only input LR images, but also real-world images affected by variant factors.

To further enhance the visual quality, Wang et al. [116] propose enhanced Super-Resolution Generative Adversarial Network (ESRGAN) to generate realistic textures, which introduces the Residual-in-Residual Dense Block (RRDB) without batch normalization as the basic network building unit. Furthermore, they successfully modify network structure with relativistic GAN and improve the perceptual loss with features before activation. Benefiting from these improvements, ESRGAN achieves consistently better visual quality with more realistic and natural textures and wins the first place in the PIRM2018-SR Challenge.

Deep Reinforcement Learning (DRL) has also been introduced recently. Following the thought of reinforce learning, DRL generally designs a learning policy to guide spatial attention. In other words, they utilize reward scheme of reinforce learning to navigate up-scaling regions, which results in an adaptively optimizing way for SR based on the characteristics of inputting images. For instance, Cao et al. [12] propose a novel attention-aware Face Hallucination framework, which follows principles of DRL to sequentially discover patches required to up-scale at first. Afterwards, they follow the resulting optimization sequence to perform facial patch enhancement by exploiting and involving global characteristics of the inputting facial image. Work of Cao et al. [12] is quite new in concept and provides a novel thought on how to adaptively process high-resolution images. However, the computation of [12] is much larger than SRCNN, reporting four times larger than SRCNN, due to the high computation cost of its deep structure.

4 Comparisons

4.1 Datasets and measurements

Many image datasets are popular to be adopted to prove and compare effectiveness of different super resolution methods in SR community. We list most of them with cites and descriptions in Table 1, where we can notice their original usages are different such as segmentation, classification, etc. Therefore, there are no actually construction rules to organize a specific dataset on SR topic. Among all these dataset, four datasets, i.e., SET5, SET14, B100, and URBAN100, are mostly commonly used for comparison in SR community. Our following performance will be performed on these four datasets.

There are two standard quality measures, i.e., peak signal-to-noise ratio (PSNR) and structural similarity index (SSIM) [37], which have been mostly used for measuring the quality of super resolution methods. Specially, the definition of PSNR relies on MSE; we thus define these three measures as follows:

where m and n refer to the width and the height of the image, I and P represent output image after operation of super resolution and the input original image respectively, \(\mu _x\) and \(\mu _y\) represent the means of x and y, \(\sigma _x^2\) and \(\sigma _y^2\) represent the variances of x and y, \(\sigma _{xy}\) is the covariance of x and y, and \(c_1\) and \(c_2\) are two preset variables.

PSNR is often adopted to measure the quality of reconstruction during super resolution by calculating power ratio of noisy signal introduced by super resolution. Higher values of PSNR represents better quality of reconstructed image. Meanwhile, SSIM is quantitative measure used to quantify the similarities of structure between original and HR image. High SSIM value can reflect that the process of super resolution does not affect image basic structure, thus proving better reconstruction quality.

4.2 Performance analysis

Table 2 reports SR performance with five usually adopted dataset. All these statics are obtained by variety of approaches like sparse coding, CNN-based, GAN-based, and so on. We observe that SAN outperforms other algorithms in most datasets with scale factor \(2\times\), \(3\times\), \(4\times\), and \(8\times\), meanwhile DualGAN achieves best results with scale factor \(8\times\) and B100 dataset. However, it is noted that we only collect a few performance examples with smaller scale factors for most GAN-based methods, since most GAN-based methods perform experiments on large dataset and with higher scale factor. Therefore, it is not fair to say SAN is better at SR with smaller scale factor. Essentially, SAN could be regarded as an improved version of EDSR, which utilizes attention architecture to generate more task-specified feature map for SR task. Meanwhile, DualGAN modifies original structure of GAN to two generators and discriminators to learn a mixture of many distributions from prior to the complex distribution. Both methods have shown enough progress on improvement of CNN or GAN based methods.

Based on the success of DRRN and other residual-based learning methods for SR like DRRN, EDSR, LapSRN, etc. we could conclude that residual learning brings many benefits to SR image reconstruction, since it captures and models the most important characteristics of SR. For example, Ms-LapSRN is an improved version of LapSRN by reconstructing the sub-band residuals of HR images at multiple pyramid levels. DSRN exploits both lowre-solution (LR) and high-resolution (HR) signals jointly, where recurrent signals are exchanged between these states in both directions (both LR to HR and HR to LR) via delayed feedback. Based on the high performance of these residual learning network, we can conclude that CNN-based methods are still mainstream to achieve high performance on SR.

In Table 3, we compare average frame rate (FPS) of different methods on SET5, SET14, BSDS100, URBAN100, and MANGA109 with the scale factors \(2\times\), \(4\times\), and \(8\times\), respectively. It is noted that since the codes of SRCNN and FSRCNN for testing are based on CPU implementations, we reconstruct these models in MatConvNet with the same network weights to measure the run time on GPU. By evaluating the execution time of each algorithm on a machine with 3.4 GHz Intel i7 CPU (64G RAM) and Nvidia Titan X GPU (12G Memory), we upscale input images by scale factors \(2\times\), \(4\times\), and \(8\times\) in experiments, respectively.

From Table 3, we can notice that FSRCNN is a fast enough algorithm, since it applies several convolution operations on LR images and has fewer network parameters. LapSRN achieves large FPS value, since it continually upscales images in a pyramid structure and adaptively applies different number of convolutional layers. D2GAN could achieve better runtime performance with smaller scale factors, while it achieves much lower FPS value than FSRCNN with larger scale factor. This fact indicates GAN is not stable in running time and requires further development to obtain consistent performance. Besides, runtime performance of SRCNN, VDSR, RFL, SCN, DRCN, A+, and LapSRN all depend on the size of output images, while the speed of FSRCNN is almost constant. FSRCNN, LapSRN, ProGAN, and D2GAN achieve real-time speed (i.e., 24 frames per second) on most datasets. Runtime performance of SRCNN could be commented as fast, while FPS values of other methods are quite low, even implemented with a powerful GPU card. Therefore, there is still much work on adapting deep networks to achieve real-time SR.

4.3 Comparisons between CNN and GAN-based SR methods

Based on results achieved by CNN and GAN-based SR methods, we aim to generally compare differences and future developments of these two categories. Essentially, CNN and GAN-based SR methods can be regarded as typical supervised and unsupervised learning algorithms respectively, which is the main reason for their huge differences. More precisely, supervised CNN methods attempt to learn directly the mapping between LR and HR images and highly depend on predetermined assumptions. Meanwhile, GAN-based networks are much more flexible with promising performance due to incorporated unsupervised training.

How to appropriately collect training data with sufficient enough information to support SR tasks has become a major problem in CNN-based SR methods. For majority researchers, they collect image data for SR by first down-sampling original HR to get LR images, and then using LR images as input to pursue HR results. However, such collecting process is not exactly the same as what happened in the application scenario, since patterns to induce quality loss can be various, such as image transmission, different compression algorithms and so on. Simply utilizing down-sampling to generate LR images would do great harm to flexibility of CNN-based SR methods with respect to different scenarios. On the contrary, GAN-based methods require less image data for SR tasks, since they use a largely unsupervised training process on the real images. Therefore, they do not require label or prior condition between LR and HR image.

Major difficulty in constructing GAN-based SR methods lies in their designs of architecture and loss function. It is noted the most common used measurement, i.e., MSE, is adopted in favor of maximizing PSNR. However, hallucinated details of generated HR images are often accompanied with unpleasant artifacts, even achieving high PSNR values. In other words, traditional measurements expose several constraints to human perception. However, GAN has ability to produce better results only with the integrated perceptual assessment other than single and simple measurement. In order to further improve visual quality, it is thus required to improve key components of GAN models for SR, i.e., network architecture and loss function, which are now progressively explored and developed by researchers. All these truths imply GAN is still developing and is promising to achieve better reconstruction results than CNN-based methods. Moreover, GAN is hard to train due to mode collapsing problem, which leads to early stopping by concentrating on only a few modes instead of the whole data space. On the contrary, CNN-based methods have been developed for several generations with visually desirable reconstruction results. With back-propagation training methods and well-developed CNN construction softwares, it is much easier to implement and train a CNN-based SR method than performing with GAN model.

Above all, we can conclude that GAN-based SR methods have high potential to achieve better reconstruction results than CNN-based SR methods, due to its property to describe variety of SR patterns with less labeled data. However, GAN model needs to be further developed to be easily trained and implemented.

5 Summary

Super-resolution is a hot and important research topic in computer vision and image processing community. By applying SR technologies, users can not only improve the resolution and visual appearance of inputting images, but also help improve accuracy and effectiveness of vision-based machine learning systems which generally regard high-resolution images as input. Inspired by the significant performance of deep learning methods, this paper focuses on reviewing current deep learning methods for real-time image super-resolution. As the first comprehensive survey on such topic, it has analyzed recent approaches, classified them according to as many as criteria, and illustrated performance for the most representative approaches.

In the past decade, research in this field has progressed as improved methods emerge. However, the small number of deep learning- based SR methods achieving real-time performance shows that ample room remains for future research. Essentially, the main reason for less methods on real-time SR lies in the fact that deep learning methods are hard to achieve fast processing speed without high computation resource. However, applying deep learning methods on SR is a reasonable way to achieve high performance with the current large datasets, named as “big data”. We thus present main challenges of deep learning for real-time SR: how to adapt deep learning-based SR methods with acceleration strategies to deal with “big data” situation. Furthermore, low-resolution images captured in extreme imaging conditions require robust enough algorithms to deal with such real-life complexities.

It is thus essential to develop novel methods, which are not only effective and efficient for “big data” processing, but also robust enough to handle extremely processing. Although abundant optimization methods have been proposed for real-time SR with deep learning structures in Sect. 3, high efficiency, effectiveness, and robustness for SR on specific application area are still highly required by industry and require further improvement. Despite designing alternative and high effective neural network structures, cloud computing is another simple and efficient solution to improve effectiveness of SR. By providing enough computing and storage services over the Internet [82, 123, 125, 126] for local SR tasks, a powerful computing platform with easy access and high-scalability could be utilized locally and help users accomplish their SR goals. There exist other novel methods to help improve SR from different aspects. For example, deep compression methods could help prune neural networks for SR, resulting in less storage and computation consume.

Based on all these analyses, we believe that this review is useful for developers who are willing to improve performance of their SR solutions in both running time and accuracy. Our review will serve as a guidance and dictionary for further research activities in this area, especially in the deployment of real-time super-resolution with deep learning methods.

References

Agustsson, E., Timofte, R., Van Gool, L.: Anchored regression networks applied to age estimation and super resolution. In: Proceedings of International Conference on Computer Vision, pp. 1652–1661 (2017)

Ahn, N., Kang, B., Sohn, K.: Fast, accurate, and lightweight super-resolution with cascading residual network. In: Proceedings of European Conference on Computer Vision, pp. 256–272 (2018)

Akae, N., Makihara, Y., Yagi, Y.: Gait recognition using periodic temporal super resolution for low frame-rate videos. In: Proceedings of International Joint Conference on Biometrics, pp. 1–7 (2011)

Alonso-Fernandez, F., Farrugia, R.A., Bigun, J., Fierrez, J., Gonzalez-Sosa, E.: A survey of super-resolution in iris biometrics with evaluation of dictionary-learning. IEEE Access (2018)

Aly, H.A., Dubois, E.: Image up-sampling using total-variation regularization with a new observation model. IEEE Trans. Image Process. 14(10), 1647–1659 (2005)

Belekos, S.P., Galatsanos, N.P., Katsaggelos, A.K.: Maximum a posteriori video super-resolution using a new multichannel image prior. IEEE Trans. Image Process. 19(6), 1451–1464 (2010)

Bevilacqua, M., Roumy, A., Guillemot, C., Alberi-Morel, M.L.: Low-complexity single-image super-resolution based on nonnegative neighbor embedding. In: Proceedings of British Machine Vision Conference (2012)

Bose, N.K., Ahuja, N.A.: Superresolution and noise filtering using moving least squares. IEEE Trans. Image Process. 15(8), 2239–2248 (2006)

Bulat, A., Tzimiropoulos, G.: Super-fan: Integrated facial landmark localization and super-resolution of real-world low resolution faces in arbitrary poses with gans. In: Proceedings of Computer Vision and Pattern Recognition, pp. 109–117 (2018)

Bulat, A., Yang, J., Tzimiropoulos, G.: To learn image super-resolution, use a gan to learn how to do image degradation first. In: Proceedings of the European Conference on Computer Vision, pp. 185–200 (2018)

Caballero, J., Ledig, C., Aitken, A., Acosta, A., Totz, J., Wang, Z., Shi, W.: Real-time video super-resolution with spatio-temporal networks and motion compensation. In: Proceedings of Computer Vision and Pattern Recognition, pp. 2848–2857 (2017)

Cao, Q., Lin, L., Shi, Y., Liang, X., Li, G.: Attention-aware face hallucination via deep reinforcement learning. CoRR abs/1708.03132 (2017)

Capel, D., Zisserman, A.: Automated mosaicing with super-resolution zoom. In: Proceedings of Computer Vision and Pattern Recognition, pp. 885–891 (1998)

Chai, Y., Ren, J., Zhao, H., Li, Y., Ren, J., Murray, P.: Hierarchical and multi-featured fusion for effective gait recognition under variable scenarios. Pattern Anal. Appl. 19(4), 905–917 (2016)

Chang, H., Yeung, D.Y., Xiong, Y.: Super-resolution through neighbor embedding. Proc. Comput. Vis. Pattern Recognit. 1, 275–282 (2004)

Che, T., Li, Y., Jacob, A.P., Bengio, Y., Li, W.: Mode regularized generative adversarial networks. CoRR arxiv:abs/1612.02136 (2016)

Chen, R., Qu, Y., Li, C., Zeng, K., Xie, Y., Li, C.: Single-image super-resolution via joint statistical models-guided deep auto-encoder network. Neural Comput. Appl. pp. 1–11 (2019)

Chen, Y., Tai, Y., Liu, X., Shen, C., Yang, J.: Fsrnet: End-to-end learning face super-resolution with facial priors. In: Proceedings of Computer Vision and Pattern Recognition, pp. 2492–2501 (2018)

Cristóbal, G., Gil, E., Šroubek, F., Flusser, J., Miravet, C., Rodríguez, F.d.B.: Superresolution imaging: a survey of current techniques. In: Advanced signal processing algorithms, architectures, and implementations XVIII, vol. 7074, p. 70740C (2008)

Dai, D., Timofte, R., Van Gool, L.: Jointly optimized regressors for image super-resolution. Comput. Graph. Forum 34(2), 95–104 (2015)

Dai, S., Han, M., Xu, W., Wu, Y., Gong, Y.: Soft edge smoothness prior for alpha channel super resolution. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 1–8 (2007)

Dai, T., Cai, J., Zhang, Y., Xia, S.T., Zhang, L.: Second-order attention network for single image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 11065–11074 (2019)

Demirel, H., Anbarjafari, G.: Image resolution enhancement by using discrete and stationary wavelet decomposition. IEEE Trans. Image Process. 20(5), 1458–1460 (2011)

Denton, E.L., Chintala, S., Fergus, R., et al.: Deep generative image models using a laplacian pyramid of adversarial networks. In: Proceedings of Advances in neural information processing systems, pp. 1486–1494 (2015)

Dey, N., Li, S., Bermond, K., Heintzmann, R., Curcio, C.A., Ach, T., Gerig, G.: Multi-modal image fusion for multispectral super-resolution in microscopy. In: Proceedings of Medical Imaging 2019: Image Processing, p. 109490D (2019)

Dong, C., Loy, C.C., He, K., Tang, X.: Learning a deep convolutional network for image super-resolution. In: Proceedings of European Conference on Computer Vision, pp. 184–199 (2014)

Dong, C., Loy, C.C., Tang, X.: Accelerating the super-resolution convolutional neural network. In: Proceedings of European Conference on Computer Vision, pp. 391–407 (2016)

Dong, W., Zhang, L., Shi, G., Wu, X.: Image deblurring and super-resolution by adaptive sparse domain selection and adaptive regularization. IEEE Trans. Image Process. 20(7), 1838–1857 (2011)

Duchon, C.E.: Lanczos filtering in one and two dimensions. J. Appl. Meteorol. 18(8), 1016–1022 (1979)

Fahmy, G.: Super-resolution construction of iris images from a visual low resolution face video. In: National Radio Science Conference, pp. 1–6 (2007)

Fujimoto, A., Ogawa, T., Yamamoto, K., Matsui, Y., Yamasaki, T., Aizawa, K.: Manga109 dataset and creation of metadata. In: Proceedings of International Workshop on Comics Analysis, Processing and Understanding, pp. 2–3 (2016)

Gatys, L.A., Ecker, A.S., Bethge, M.: A neural algorithm of artistic style. CoRR arxiv:abs/1508.06576 (2015)

Girshick, R., Donahue, J., Darrell, T., Malik, J.: Rich feature hierarchies for accurate object detection and semantic segmentation. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 580–587 (2014)

Glasner, D., Bagon, S., Irani, M.: Super-resolution from a single image. In: Proceedings of IEEE International Conference on Computer Vision, pp. 349–356 (2009)

Gong, W., Qi, L., Xu, Y.: Privacy-aware multidimensional mobile service quality prediction and recommendation in distributed fog environment. Wireless Communications and Mobile Computing 2018, (2018)

Goodfellow, I.J., Abadie, J.P., Mirza, M., Xu, B., Farley, D.W., Ozair, S., Courville, A.C., Bengio, Y.: Generative adversarial nets. In: Proceedings of Neural Information Processing Systems, pp. 2672–2680 (2014)

Greenspan, H.: Super-resolution in medical imaging. Comput. J. 52(1), 43–63 (2009)

Gu, S., Sang, N., Ma, F.: Fast image super resolution via local regression. In: Proceedings of International Conference on Pattern Recognition, pp. 3128–3131 (2012)

Ha, V.K., Ren, J., Xu, X., Zhao, S., Xie, G., Vargas, V.M.: Deep learning based single image super-resolution: A survey. In: International Conference on Brain Inspired Cognitive Systems, pp. 106–119 (2018)

Haris, M., Shakhnarovich, G., Ukita, N.: Deep back-projection networks for super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1664–1673 (2018)

Hayat, K.: Multimedia super-resolution via deep learning: a survey. Digital Signal Processing (2018)

He, K., Zhang, X., Ren, S., Sun, J.: Deep residual learning for image recognition. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 770–778 (2016)

Hong, C., Yu, J., Tao, D., Wang, M.: Image-based three-dimensional human pose recovery by multiview locality-sensitive sparse retrieval. IEEE Trans. Ind. Electr. 62(6), 3742–3751 (2015)

Hong, C., Yu, J., Wan, J., Tao, D., Wang, M.: Multimodal deep autoencoder for human pose recovery. IEEE Trans. Image Process. 24(12), 5659–5670 (2015)

Hu, Y., Li, J., Huang, Y., Gao, X.: Channel-wise and spatial feature modulation network for single image super-resolution. CoRR arXiv:abs/1809.11130 (2018)

Huang, J.B., Singh, A., Ahuja, N.: Single image super-resolution from transformed self-exemplars. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 5197–5206 (2015)

Huang, Y., Shao, L., Frangi, A.F.: Simultaneous super-resolution and cross-modality synthesis of 3d medical images using weakly-supervised joint convolutional sparse coding. arXiv preprint arXiv:1705.02596 (2017)

Hung, K.W., Siu, W.C.: New motion compensation model via frequency classification for fast video super-resolution. In: Proceedings of IEEE International Conference on Image Processing, pp. 1193–1196 (2009)

Ji, H., Fermüller, C.: Robust wavelet-based super-resolution reconstruction: theory and algorithm. IEEE Trans. Pattern Anal. Mach. Intell. 31(4), 649–660 (2009)

Johnson, J., Alahi, A., Fei-Fei, L.: Perceptual losses for real-time style transfer and super-resolution. In: Proceedings of European Conference on Computer Vision, pp. 694–711 (2016)

Joshi, M.V., Chaudhuri, S., Panuganti, R.: Super-resolution imaging: use of zoom as a cue. Image Vis. Comput. 22(14), 1185–1196 (2004)

Kim, J., Kwon Lee, J., Mu Lee, K.: Accurate image super-resolution using very deep convolutional networks. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1646–1654 (2016)

Kim, J., Kwon Lee, J., Mu Lee, K.: Deeply-recursive convolutional network for image super-resolution. In: Proceedings of the IEEE Conference on Computer Vision and Pattern Recognition, pp. 1637–1645 (2016)

Krämer, P., Benois-Pineau, J., Domenger, J.P.: Local object-based super-resolution mosaicing from low-resolution video. Signal Process. 91(8), 1771–1780 (2011)

Krizhevsky, A., Sutskever, I., Hinton, G.E.: Imagenet classification with deep convolutional neural networks. In: Proceedings of Advances in neural information processing systems, pp. 1097–1105 (2012)

Lai, W., Huang, J., Ahuja, N., Yang, M.: Deep laplacian pyramid networks for fast and accurate super-resolution. In: Proceedings of IEEE Conference on Computer Vision and Pattern Recognition, pp. 5835–5843 (2017)

Lai, W., Huang, J., Ahuja, N., Yang, M.: Fast and accurate image super-resolution with deep laplacian pyramid networks. CoRR arXiv:abs/1710.01992 (2017)

Lai, W.S., Huang, J.B., Ahuja, N., Yang, M.H.: Deep laplacian pyramid networks for fast and accurate super-resolution. In: Proceedings of Computer Vision and Pattern Recognition (2017)

LeCun, Y., Bottou, L., Bengio, Y., Haffner, P., et al.: Gradient-based learning applied to document recognition. Proc. IEEE 86(11), 2278–2324 (1998)

Ledig, C., Theis, L., Huszar, F., Caballero, J., Cunningham, A., Acosta, A., Aitken, A.P., Tejani, A., Totz, J., Wang, Z., Shi, W.: Photo-realistic single image super-resolution using a generative adversarial network. In: Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition, pp. 105–114 (2017)

Li, J., Feng, J., Kuo, C.C.J.: Deep convolutional neural network for latent fingerprint enhancement. Signal Process. Image Commun. 60, 52–63 (2018)

Li, K., Zhu, Y., Yang, J., Jiang, J.: Video super-resolution using an adaptive superpixel-guided auto-regressive model. Pattern Recognit. 51, 59–71 (2016)

Li, X., Orchard, M.T.: New edge-directed interpolation. IEEE Trans. Image Process. 10(10), 1521–1527 (2001)

Li, Y., Agustsson, E., Gu, S., Timofte, R., Van Gool, L.: Carn: convolutional anchored regression network for fast and accurate single image super-resolution. In: Proceedings of European Conference on Computer Vision, pp. 166–181 (2018)

Lim, B., Son, S., Kim, H., Nah, S., Lee, K.M.: Enhanced deep residual networks for single image super-resolution. In: Proceedings of 2017 IEEE Conference on Computer Vision and Pattern Recognition Workshops, pp. 1132–1140 (2017)

Lin, M., Chen, Q., Yan, S.: Network in network. CoRR arXiv:abs/1312.4400 (2013)