Abstract

Purpose

Biomechanical simulation of the anatomical deformations caused by ultrasound probe pressure is of great importance for several applications, ranging from the testing of robotic acquisition systems to multi-modal image fusion and the development of ultrasound training platforms. Different approaches can be used to model the probe–tissue interaction, each achieving a different trade-off among accuracy, computation time and stability.

Methods

We assess the performance of different strategies, based on the finite element method, for modelling the interaction between the rigid probe and soft tissues. Probe–tissue contact is modelled (i) with penalty forces, (ii) with constraint forces and (iii) by prescribing the displacement of the mesh surface nodes. These methods are tested in the challenging context of ultrasound scanning of the breast, an organ that undergoes large nonlinear deformations during the procedure.

Results

The results are evaluated against those of a non-physically based method. While all methods achieve similar accuracy, their stability and speed vary widely, especially for the methods that model contacts explicitly. Overall, prescribing surface displacements is the best-performing approach, but it requires prior knowledge of the contact area and of the probe trajectory.

Conclusions

In this work, we present different strategies for modelling probe–tissue interaction, each able to achieve different compromises among accuracy, speed and stability. The choice of the preferred approach highly depends on the requirements of the specific clinical application. Since the presented methodologies can be applied to describe general tool–tissue interactions, this work can be seen as a reference for researchers seeking the most appropriate strategy to model anatomical deformation induced by the interaction with medical tools.

Introduction

Ultrasound (US) imaging is used extensively in several routine procedures, mainly because of its cost-effectiveness, non-invasiveness and real-time capabilities. Its main limitation is the low image quality, which strongly depends on proper acoustic coupling between the probe and the tissues. Identifying the transducer position that yields acceptable image quality relies heavily on the radiologist’s expertise. It also requires the sonographer to compress the anatomy, sometimes by several centimetres depending on the imaged tissue [1, 2]. In recent years, robotic ultrasound systems (RUSs) that either assist or fully automate the procedure have been proposed to improve on manual acquisition, thanks to the high precision, dexterity and repeatability of robotic manipulators [3, 4]. The growing popularity of RUSs has raised interest in robot simulation environments. These environments can support the testing and validation of new systems, helping to identify possible problems and predict potentially dangerous situations [5]. Simulation plays a crucial role in RUSs, where understanding the stresses and deformations arising from the interaction between the probe and the tissues is of paramount importance to guarantee patient safety. Modelling and simulation of US probe-induced deformations are also useful in many other applications. For example, image fusion techniques that align high-resolution pre-operative images (MRI/CT) with intra-operative US during image-guided procedures have to account for the compression induced in the tissues by the US probe [1]. A biomechanical model of probe–tissue interaction can also be used to correct for deformations in the 3D US reconstruction process [2, 6]. 
Finally, computer-based ultrasound training systems, which allow radiologists to practise the scanning technique, have to simulate probe-induced deformations realistically and in real time [7, 8].

The preferred approach to model tissue deformations relies on the finite element (FE) method, which describes the mechanical behavior of soft tissues through the laws of continuum mechanics. However, this method usually comes at the expense of high computational complexity, especially when interactions with other objects have to be modelled. Realistic modelling of contacting bodies is very complex for living systems, which are usually characterised by highly irregular geometries, nonlinear constitutive models and nearly incompressible materials. These factors can lead to ill-conditioned problems and introduce significant numerical issues, often causing instabilities in the simulations. This is a major weakness, especially within a robotic framework, where simulation stability is recognised as the most important feature [9]. Some alternative modelling strategies that are not based on physical descriptions of deformation also exist and have the potential to be both fast and stable for soft-tissue modelling [7, 8, 10]. However, they do not rely on real tissue mechanical properties, which makes the identification of model parameters challenging.

In this paper, we analyse the main FE-based approaches that can be used to model the interaction between the US probe and a deformable organ. Our aim is to evaluate their performance in terms of accuracy, computation time and stability, and to compare the results with those obtained by a non-physically based method. We consider the three most popular strategies for modelling probe–tissue interaction: (i) imposing penalty forces [8], (ii) describing the contact as a constraint [11] and (iii) directly displacing the surface nodes [1, 6]. The input to our simulations is always the US probe displacement, to ensure the widest applicability and generalisation capability of the methods. We do not consider scenarios where deformations are driven by forces, which would require force-sensing apparatus that is difficult to incorporate into common clinical devices [2]. To the best of the authors’ knowledge, no comparison of the strategies available for modelling probe–tissue interaction can be found in the literature. Such a comparison would be extremely helpful in choosing the most appropriate model [12], and this is the rationale for the present work. Furthermore, we make our simulations publicly available to encourage the adoption of the methods.

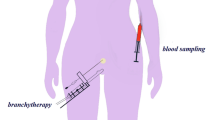

Patient-specific simulations obtained with the described methods are compared with experimental data from ultrasound scanning of the breast. The breast is challenging to model because of the large nonlinear deformations it undergoes during scanning. A peculiarity of this work is that, to describe breast behavior accurately, we have to rely on hyperelastic constitutive laws. Such laws are not usually used in contact problems because of the additional complexity they introduce; linear elastic or corotated formulations are usually preferred [1, 8, 13]. Modelling the probe–tissue interaction for this anatomical structure is particularly relevant for two main reasons. First, many attempts have recently been made to develop autonomous RUSs for the breast, a very active and promising field [14]. Second, US-guided biopsy is the preferred technique for breast cancer diagnosis, where US images are mainly used to track the needle. However, since target lesions can be very difficult to distinguish on US, an accurate and fast model of the probe-induced deformations can support the online prediction of the displacement of lesions detected on MRI/CT [15].

The paper is organised as follows. The different strategies to simulate probe–tissue interaction are described in the “Methods” section. In the “Results” section, the methods are assessed on US scanning of the breast, and the results are discussed in the “Discussion” section. Finally, the “Conclusion” section presents our conclusions.

Methods

The finite element method

The finite element method converts the system of partial differential equations describing dynamic equilibrium (Newton’s second law) into a system of algebraic equations that can be solved numerically. In the solution process, these equations are discretised in both space and time. This leads to a problem that can be written compactly as:

$$\begin{aligned} \mathbf {M}\mathbf {a} = \mathbf {f}(\mathbf {x},\mathbf {v}) \end{aligned} \tag{1}$$

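where \(\mathbf {a},\mathbf {x},\mathbf {v},\mathbf {f}\) are, respectively, the acceleration, position, velocity and force (both internal and external) vectors, and \(\mathbf {M}\) is the mass matrix. A time integration scheme is used to solve the problem numerically in time, which allows (1) to be formulated as a linear system. We perform numerical integration with a backward Euler scheme, which offers a good trade-off between robustness, convergence and stability. Velocities and positions are updated using the accelerations at the end of each time step h, and (1) is enforced at time t + h:

$$\begin{aligned} \mathbf {v}^{t+h} = \mathbf {v}^{t} + h\,\mathbf {a}^{t+h}, \qquad \mathbf {x}^{t+h} = \mathbf {x}^{t} + h\,\mathbf {v}^{t+h} \end{aligned} \tag{2}$$

$$\begin{aligned} \mathbf {M}\mathbf {a}^{t+h} = \mathbf {f}(\mathbf {x}^{t+h},\mathbf {v}^{t+h}) \end{aligned} \tag{3}$$
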
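We consider a first-order approximation of \(\mathbf {f}\), i.e. a single linearisation per time step:

$$\begin{aligned} \mathbf {f}(\mathbf {x}^{t+h},\mathbf {v}^{t+h}) \approx \mathbf {f}(\mathbf {x}^{t},\mathbf {v}^{t}) + \mathbf {K}\,\mathbf {dx} + \mathbf {B}\,\mathbf {dv} \end{aligned} \tag{4}$$
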
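where \(\mathbf {K} = \partial \mathbf {f}/\partial \mathbf {x}\) is the stiffness matrix and \(\mathbf {B} = \partial \mathbf {f}/\partial \mathbf {v}\) the damping matrix. By (2), \(\mathbf {dx} = \mathbf {x}^{t+h} - \mathbf {x}^{t} = h(\mathbf {v}^{t} + \mathbf {dv})\). Substituting (2) and (4) into (3), multiplied by h, provides the final linearised system:

$$\begin{aligned} \underbrace{\left( \mathbf {M} - h\mathbf {B} - h^2\mathbf {K}\right) }_{\mathbf {A}}\,\mathbf {dv} = \underbrace{h\,\mathbf {f}(\mathbf {x}^{t},\mathbf {v}^{t}) + h^2\mathbf {K}\mathbf {v}^{t}}_{\mathbf {b}} \end{aligned} \tag{5}$$
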
where \(\mathbf {dv}= h\mathbf {a}= \mathbf {v}^{t+h} - \mathbf {v}^{t}\).
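The linearised backward Euler step can be illustrated on a single degree of freedom. This minimal sketch assumes a mass m on a linear spring of stiffness k with a damper c, so that f = -kx - cv, \(\mathbf {K}\) = -k and \(\mathbf {B}\) = -c. It builds the scalar versions of \(\mathbf {A}\) and \(\mathbf {b}\), solves for dv, and then updates velocity and position. The function name and the parameter values are illustrative assumptions; this is not the SOFA implementation.

```python
def backward_euler_step(x, v, m, k, c, h):
    """One linearised backward Euler step for m*a = f(x, v) = -k*x - c*v.

    Scalar (1-DOF) version of A dv = b with A = m - h*B - h^2*K and
    b = h*f(x^t, v^t) + h^2*K*v^t (illustrative sketch, not SOFA code).
    """
    f = -k * x - c * v             # force at the beginning of the step
    K = -k                         # stiffness: df/dx
    B = -c                         # damping:   df/dv
    A = m - h * B - h * h * K      # system "matrix" (a scalar here)
    b = h * f + h * h * K * v      # right-hand side
    dv = b / A                     # solve A dv = b
    v_new = v + dv                 # v^{t+h} = v^t + dv
    x_new = x + h * v_new          # x^{t+h} = x^t + h v^{t+h}
    return x_new, v_new


# A stiff spring (natural period ~6 ms) integrated with h = 0.02 s.
x, v = 0.01, 0.0
for _ in range(200):
    x, v = backward_euler_step(x, v, m=0.01, k=1e4, c=0.0, h=0.02)
print(f"displacement after 200 steps: {x:.3e}")
```

Here h is roughly three times the natural period of the spring. Even so, the implicit update damps the oscillation instead of diverging. This numerical damping is the price paid for the robustness that motivates the backward Euler choice.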

The resulting set of linear equations is solved for \(\mathbf {dv}\) using either direct or iterative solvers. Direct solvers compute the exact solution by calculating either the actual inverse or a factorisation of the system matrix \(\mathbf {A}\). Although these methods are often too costly, some optimised libraries can parallelise these operations on the CPU [16]. Iterative solvers such as the conjugate gradient (CG), on the other hand, produce a sequence of approximate solutions that approach the exact one. These methods can be very fast, especially if they are set to stop as soon as an acceptable accuracy is reached, even before convergence. However, they can converge slowly for ill-conditioned problems.
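A small, dependency-free conjugate gradient sketch shows the trade-off between exactness and early termination. It assumes a symmetric positive-definite matrix stored as nested lists. It exposes a hypothetical `max_iter` cap, analogous to a limit on the number of CG iterations. The function and its parameters are illustrative and do not mirror SOFA's solver components.

```python
def conjugate_gradient(A, b, max_iter=25, tol=1e-10):
    """Conjugate gradient for A x = b, A symmetric positive definite (list of lists).

    The loop stops after `max_iter` iterations even if the residual is still
    above `tol`, trading accuracy for a bounded cost per time step.
    """
    n = len(b)

    def matvec(y):
        return [sum(A[i][j] * y[j] for j in range(n)) for i in range(n)]

    def dot(p, q):
        return sum(pi * qi for pi, qi in zip(p, q))

    x = [0.0] * n
    r = list(b)                  # residual b - A x for the initial guess x = 0
    p = list(r)                  # first search direction
    rs_old = dot(r, r)
    iters = 0
    while iters < max_iter and rs_old ** 0.5 > tol:
        Ap = matvec(p)
        alpha = rs_old / dot(p, Ap)             # step length along p
        x = [xi + alpha * pi for xi, pi in zip(x, p)]
        r = [ri - alpha * api for ri, api in zip(r, Ap)]
        rs_new = dot(r, r)
        beta = rs_new / rs_old                  # keeps directions A-conjugate
        p = [ri + beta * pi for ri, pi in zip(r, p)]
        rs_old = rs_new
        iters += 1
    return x, iters


A = [[4.0, 1.0], [1.0, 3.0]]
b = [1.0, 2.0]
print(conjugate_gradient(A, b))              # converged solution and iteration count
print(conjugate_gradient(A, b, max_iter=1))  # truncated after one iteration
```

On this 2×2 example, CG reaches the exact solution [1/11, 7/11] in two iterations; in exact arithmetic it needs at most n iterations for an n×n system. Capping it at one iteration returns the approximation [0.25, 0.5] instead.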

Penalty method

In the penalty method, contacts are solved by applying a spring-like force \(\mathbf {f_{pen}}\) proportional to the amount of penetration \(\delta \) at each contact point, in the direction \(\mathbf {n}\) normal to the surface:

$$\begin{aligned} \mathbf {f_{pen}} = k_s\,\delta \,\mathbf {n} \end{aligned} \tag{6}$$

This force is treated as an external force and contributes to the right-hand side of (1). The higher the proportionality coefficient \(k_s\), called contact stiffness, the better the constraint is satisfied. However, large values of \(k_s\) worsen the condition number of the system matrix \(\mathbf {A}\), often causing convergence problems and instabilities in the simulations. The selection of \(k_s\) is also problem-dependent, as it depends heavily on the ratio between the stiffnesses of the contacting materials. This limits the usefulness of the method for our applications. Despite these stability issues, the penalty method is the easiest to implement and can be very fast.
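The sketch below makes the role of \(k_s\) concrete. It evaluates the penalty force \(k_s \delta \mathbf {n}\) and a deliberately simplified, hypothetical one-degree-of-freedom quasi-static model: a tissue node of stiffness \(k_t\) pushed by a rigid probe to depth d. In this toy model the equilibrium penetration is \(d\,k_t/(k_t + k_s)\). The constraint is therefore satisfied only as \(k_s/k_t\) grows, which is why the required contact stiffness depends on the stiffness ratio of the contacting materials. Function names and values are illustrative assumptions.

```python
def penalty_force(delta, normal, k_s):
    """Penalty contact force f_pen = k_s * delta * n for a penetration depth delta."""
    if delta <= 0.0:                       # no penetration: no contact force
        return tuple(0.0 for _ in normal)
    return tuple(k_s * delta * n_i for n_i in normal)


def residual_penetration(d, k_t, k_s):
    """Hypothetical 1-DOF quasi-static model: a tissue node of stiffness k_t is
    pushed by a rigid probe to depth d. Balancing k_t*u = k_s*(d - u) gives
    u = k_s*d/(k_t + k_s); the contact stays violated by d - u."""
    u = k_s * d / (k_t + k_s)
    return d - u


print(penalty_force(0.002, (0.0, 0.0, 1.0), k_s=1000.0))
for k_s in (1e1, 1e3, 1e5):
    print(k_s, residual_penetration(d=0.01, k_t=100.0, k_s=k_s))
```

In an implicit solve, the penalty term typically also contributes to the stiffness matrix alongside the much softer tissue. This is how large \(k_s\) values degrade the conditioning of \(\mathbf {A}\).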

Lagrange multipliers method

Unlike the penalty approach, methods based on Lagrange multipliers (LM) satisfy the contact condition exactly by treating contacts as constraints. The equation system (1) is extended to include the constraint contribution \(\mathbf {H}^T \lambda \):

$$\begin{aligned} \mathbf {M}\mathbf {a} = \mathbf {f}(\mathbf {x},\mathbf {v}) + \mathbf {H}^T\lambda \end{aligned} \tag{7}$$

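which, after time integration, leads to:

$$\begin{aligned} \mathbf {A}\,\mathbf {dv} = \mathbf {b} + h\,\mathbf {H}^T\lambda \end{aligned} \tag{8}$$
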
In this case, the contact force is represented by the unknown vector \(\lambda \) of LM, which imposes the impenetrability condition defined by Signorini’s law [17]. The equation system to solve becomes more complex since, at the beginning of each time step, both the multiplier values and the new positions are unknown. The solving process involves three main phases:

1. Free motion. A free configuration \(\mathbf {dv}_i^{\mathrm {free}}\) is obtained for each interacting object by solving the corresponding Eq. (8) independently with \(\lambda = 0\). In the following, i denotes the index of the simulated body.

2. Collision detection. The free motion results in new configurations of the bodies, so possible collisions must be detected. The output of this phase is the constraint matrices \(\mathbf {H}_i\) and the actual constraint violations \(\varvec{\delta }^{\mathrm {free}}\) caused by the free motion.

3. Collision response. After linearisation of the constraint laws [17], we obtain:

$$\begin{aligned} \varvec{\delta } = \varvec{\delta }^{\mathrm {free}} + h \sum _i \mathbf {H}_i\mathbf {dv}_i^{\mathrm {corr}} \end{aligned} \tag{9}$$

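where \(\mathbf {dv}_i^{\mathrm {corr}}\) is the unknown corrective motion (\(\mathbf {dv}= \mathbf {dv}^{\mathrm {free}} + \mathbf {dv}^{\mathrm {corr}}\)), i.e. the solution of (8) with \(\mathbf {b}_i = 0\). Combining (8) and (9), we get:

$$\begin{aligned} \varvec{\delta } = \varvec{\delta }^{\mathrm {free}} + \mathbf {W}\lambda , \qquad \mathbf {W} = h^2 \sum _i \mathbf {H}_i\mathbf {A}_i^{-1}\mathbf {H}_i^T \end{aligned} \tag{10}$$
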
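We obtain the value of \(\lambda \) using a projected Gauss–Seidel algorithm that iteratively checks and projects the various constraint laws (see [18]). Finally, the corrective motion is computed as follows:

$$\begin{aligned} \mathbf {dv}_i^{\mathrm {corr}} = h\,\mathbf {A}_i^{-1}\mathbf {H}_i^T\lambda \end{aligned} \tag{11}$$
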
The LM approach is the method of choice for stable and robust contact handling, but at the cost of computational performance. Another advantage of this method is that interaction forces are estimated accurately. This can be very helpful within robot control loops, provided that the real mechanical properties of the organ of interest are known.
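The projected Gauss–Seidel step can be sketched as follows. The sketch assumes the constraint problem has already been reduced to \(\varvec{\delta } = \varvec{\delta }^{\mathrm {free}} + \mathbf {W}\lambda \) with the Signorini conditions \(0 \le \varvec{\delta } \perp \lambda \ge 0\), where positive \(\delta \) means separation (our sign convention). Each sweep updates one multiplier at a time, using the latest values of the others, and projects it onto \(\lambda _i \ge 0\). This is a minimal illustration with a small dense \(\mathbf {W}\), not the constraint solver implemented in SOFA.

```python
def projected_gauss_seidel(W, delta_free, max_sweeps=100, tol=1e-12):
    """Solve delta = delta_free + W @ lam subject to the Signorini conditions
    delta >= 0, lam >= 0, delta_i * lam_i = 0 (W: list of lists, W[i][i] > 0)."""
    n = len(delta_free)
    lam = [0.0] * n
    for _ in range(max_sweeps):
        max_change = 0.0
        for i in range(n):
            # current gap of constraint i, using the latest multiplier values
            delta_i = delta_free[i] + sum(W[i][j] * lam[j] for j in range(n))
            # Gauss-Seidel update for row i, projected onto lam_i >= 0
            new_lam = max(0.0, lam[i] - delta_i / W[i][i])
            max_change = max(max_change, abs(new_lam - lam[i]))
            lam[i] = new_lam
        if max_change < tol:
            break
    return lam


# Two contacts: the first is violated by the free motion, the second is not.
W = [[2.0, 0.5], [0.5, 1.0]]
delta_free = [-1.0, 0.5]
lam = projected_gauss_seidel(W, delta_free)
gaps = [delta_free[i] + sum(W[i][j] * lam[j] for j in range(2)) for i in range(2)]
print(lam, gaps)
```

In the example, the first contact ends up exactly closed (zero gap) with a positive multiplier. The second stays open with a zero multiplier, as the complementarity condition requires.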

Prescribed displacements method

The last method considered models probe–tissue interaction as a Dirichlet boundary condition on the organ surface. Since the US probe is represented as a rigid body, we can assume that, when the anatomy is deformed during scanning, the points on the organ surface below the US probe are displaced by exactly the same amount as the probe itself. This modelling strategy is less general than the previous two approaches, since it relies on two major assumptions. (i) The probe motion is completely known a priori, which allows us to precompute the contact points. (ii) The contact surface does not change during scanning, i.e. there is no relative motion between the contact surfaces. From a formulation point of view, this approach is very similar to the penalty method described above, except that it does not require the collision detection phase. Although this limits its general applicability, it makes the method promising for achieving high computational performance. Furthermore, it represents a displacement-zero traction problem and, as such, has the advantage of not requiring patient-specific mechanical properties [19].
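A Dirichlet condition of this kind can be illustrated on a hypothetical 1D chain of linear springs. The bottom node is fixed, the top node is moved by the probe, and the remaining displacements follow from the reduced system \(\mathbf {K}_{ff}\mathbf {u}_f = -\mathbf {K}_{fp}\mathbf {u}_p\), since no external loads act on the free nodes. Eliminating the prescribed degrees of freedom is one common way to impose such conditions; we do not claim it is how SOFA enforces them. In this linear, homogeneous setting, scaling all stiffnesses leaves the displacements unchanged, which is the property behind the displacement-zero traction argument. With heterogeneous stiffness, the ratios between materials still matter.

```python
def solve_prescribed(k_springs, prescribed):
    """Static 1D spring chain with Dirichlet (prescribed displacement) conditions.

    Spring e links node e and node e+1 with stiffness k_springs[e].
    `prescribed` maps node index -> imposed displacement. No external forces
    act on the free nodes (zero traction), so K_ff u_f = -K_fp u_p.
    """
    n = len(k_springs) + 1
    K = [[0.0] * n for _ in range(n)]
    for e, k in enumerate(k_springs):          # assemble the global stiffness
        K[e][e] += k
        K[e + 1][e + 1] += k
        K[e][e + 1] -= k
        K[e + 1][e] -= k

    free = [i for i in range(n) if i not in prescribed]
    A = [[K[i][j] for j in free] for i in free]
    b = [-sum(K[i][p] * u_p for p, u_p in prescribed.items()) for i in free]

    # Gaussian elimination with partial pivoting on the reduced system
    m = len(free)
    for c in range(m):
        piv = max(range(c, m), key=lambda r: abs(A[r][c]))
        A[c], A[piv] = A[piv], A[c]
        b[c], b[piv] = b[piv], b[c]
        for r in range(c + 1, m):
            factor = A[r][c] / A[c][c]
            for j in range(c, m):
                A[r][j] -= factor * A[c][j]
            b[r] -= factor * b[c]
    u_free = [0.0] * m
    for r in range(m - 1, -1, -1):             # back substitution
        s = sum(A[r][j] * u_free[j] for j in range(r + 1, m))
        u_free[r] = (b[r] - s) / A[r][r]

    u = [0.0] * n
    for i, u_p in prescribed.items():
        u[i] = u_p
    for i, u_i in zip(free, u_free):
        u[i] = u_i
    return u


# Node 0 fixed (bottom surface), node 3 moved 9 mm downwards by the probe.
print(solve_prescribed([1.0, 1.0, 1.0], {0: 0.0, 3: -0.009}))
# Scaling a homogeneous stiffness leaves the displacements unchanged ...
print(solve_prescribed([50.0, 50.0, 50.0], {0: 0.0, 3: -0.009}))
# ... whereas stiffness ratios between materials change them.
print(solve_prescribed([1.0, 4.0, 1.0], {0: 0.0, 3: -0.009}))
```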

Experimental setup

The performance of the presented approaches is evaluated on ultrasound scanning of a realistic multi-modality breast phantom (Model 073; CIRS, Norfolk, USA) containing some internal stiff masses (diameter of 5–10 mm), whose 3D models are obtained by segmenting the CT image of the phantom. In our experimental protocol, we select one lesion at a time and reposition the US probe on the breast surface so that the selected lesion is visible in the US image. Lesion positions at rest (i.e. without any applied deformation, with the probe only slightly touching the surface) are manually extracted from the corresponding US images. They are used as landmarks to track in both the real and the simulated environments. The landmarks are selected as the points of the lesion contour closest to the probe. This exploits the better visibility of interfaces in US images and avoids possible inaccuracies introduced by centroid computation. We then impose four probe-induced deformations of increasing extent, in the direction normal to the surface, at each of the 10 segmented masses. Image acquisition is performed with a Freehand Ultrasound System (FUS) based on a Telemed MicrUs US device (Telemed, Vilnius, Lithuania) equipped with a linear probe (model L12-5N40). An optical tracking system, MicronTracker Hx40 (ClaronNav, Toronto, Canada), tracks the position and orientation of the FUS, and thus of the US image plane, in real time (Fig. 1a). The overall probe spatial calibration error is below 1 mm (± 0.7147), and below 0.5 mm (± 0.334) for the pointer used to localise the fiducial points required for the initial rigid registration. This setup allows us to extract the three-dimensional position of any pixel in the image. Consequently, we obtain the 3D lesion positions for each applied deformation, which represent our ground truth.
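Obtaining the 3D position of a pixel with a tracked freehand system typically chains three mappings. The pixel is first scaled to millimetres in the image plane, then mapped through the image-to-probe-marker calibration, and finally through the marker pose reported by the tracker. The sketch below assumes 4×4 homogeneous matrices and uses hypothetical names (`T_calib`, `T_track`, pixel spacings `sx`, `sy`). It is a generic illustration, not the software used with the MicronTracker setup.

```python
def matmul4(P, Q):
    """Product of two 4x4 homogeneous transforms (lists of lists)."""
    return [[sum(P[i][k] * Q[k][j] for k in range(4)) for j in range(4)] for i in range(4)]


def pixel_to_world(u, v, sx, sy, T_calib, T_track):
    """Map pixel (u, v) of a tracked US image to 3D tracker coordinates.

    The pixel is scaled to millimetres in the image plane (z = 0), mapped to the
    probe marker frame by the calibration T_calib and to the tracker frame by
    the tracked marker pose T_track.
    """
    p = [u * sx, v * sy, 0.0, 1.0]
    T = matmul4(T_track, T_calib)
    return tuple(sum(T[i][k] * p[k] for k in range(4)) for i in range(3))


identity = [[1.0 if i == j else 0.0 for j in range(4)] for i in range(4)]
T_track = [[1.0, 0.0, 0.0, 100.0],     # marker translated by (100, 0, 50) mm
           [0.0, 1.0, 0.0, 0.0],
           [0.0, 0.0, 1.0, 50.0],
           [0.0, 0.0, 0.0, 1.0]]
print(pixel_to_world(10, 20, 0.1, 0.1, identity, T_track))
```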

Simulated scenario

We evaluate the described approaches in terms of accuracy, computation time and simulation stability. The proposed methods are compared with a non-physically based method relying on the position-based dynamics (PBD) formulation, which has already been shown to be capable of describing the same scenario [10]. Among existing heuristic methods, PBD is particularly promising for our target scenario because of its unconditional stability and high speed.

All simulations are run on a laptop equipped with an Intel i7-8750H processor, 16 GB RAM and an NVIDIA GeForce GTX 1050 GPU, and share the same assumptions and approximations. In both FE and PBD simulations, the breast is modelled as a homogeneous object using deformation parameters estimated specifically for the breast phantom used in our experiments, which makes the models patient-specific [10, 20]. Probe–breast interaction is treated as a rigid–soft frictionless contact problem, with the US probe moving at a fixed velocity of 0.01 m/s. Gravity is not explicitly considered, since the geometry is already acquired under gravity (being extracted from a CT image). As a boundary condition, all points belonging to the lowest phantom surface are constrained in all directions. The same fixed time step as in [10] is used for all simulations (\(h=0.02\,\hbox {s}\)). A robust comparison of different modelling strategies requires a common framework. We therefore perform all FE-based simulations within SOFA, the state-of-the-art engine for interactive FE-based medical applications (Fig. 1b) [21, 22]. Simulations for each method are repeated at increasing levels of spatial discretisation (i.e. volume mesh resolution). Mesh resolution is one of the variables that most influence the performance of FE-based simulations. Including it in the evaluation therefore allows us to estimate the relative impact of the choice of method and of the discretisation level on performance. In all cases, collision detection is performed with the default pipeline provided by SOFA [22] on a surface mesh of 1004 triangles, which proved able to maintain good accuracy while keeping the number of active constraints to a minimum. 
The conjugate gradient algorithm is used to solve the system of equations of the penalty (Penalty) and prescribed displacements (PrescrDispl) methods. The maximum number of CG iterations is set to 25, a value that proved able to speed up the solving process while keeping the simulation accuracy in line with that of the other methods. Simulations relying on the LM method require a direct solver to compute the matrix \(\mathbf {W}\). For this purpose, we use the state-of-the-art solver Pardiso, whose multithreaded implementation provides enhanced performance [16].

Results

We compare the presented methods according to three main performance criteria (accuracy, speed and stability) at different discretisation levels. For each lesion, at each deformation level, accuracy is evaluated by comparing the model-predicted lesion positions \(\mathbf {X}_{\mathbf {model}}\) with the real lesion coordinates \(\mathbf {X}_{\mathbf {US}}\). The latter are extracted from US images and tracked as described in the “Experimental setup” section. The localisation error at deformation l for tumor n is computed as:

$$\begin{aligned} e_{n,l} = \left\Vert \mathbf {X}_{\mathbf {model}}^{n,l} - \mathbf {X}_{\mathbf {US}}^{n,l} \right\Vert \end{aligned} \tag{12}$$

where \(\Vert \cdot \Vert \) denotes the Euclidean norm. Figure 2 reports the distribution of the errors produced by each method over all tumors and all deformations, at increasing mesh resolutions. The boxplots include only errors relative to “valid” deformations, i.e. those that were simulated successfully, without any instability, by all three methods. In this way, we prevent the bias in the distributions that could arise if one method were more stable than the others (and thus contributed more error values). A more detailed analysis of the results shows that the largest errors occur at high input deformations and for deeper lesions. On the same data, the PBD method described in [10] achieves a median error (interquartile range) of \(4.69\,(3.32{-}6.12)\,\hbox {mm}\).
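The localisation error and the reported summary statistics can be computed with the Python standard library (3.8 or later). The sketch assumes lesion positions given as 3D tuples in millimetres. The quartiles, and hence the interquartile range, depend on the interpolation convention. The `"inclusive"` method used here is an assumption, since the convention behind the reported values is not stated.

```python
import math
import statistics


def localisation_errors(x_model, x_us):
    """Euclidean distance between model-predicted and US-tracked lesion positions."""
    return [math.dist(p, q) for p, q in zip(x_model, x_us)]


def median_iqr(values):
    """Median and interquartile range (Q1, Q3) of a list of errors."""
    q1, _, q3 = statistics.quantiles(values, n=4, method="inclusive")
    return statistics.median(values), (q1, q3)


errors = localisation_errors([(0.0, 0.0, 0.0), (1.0, 1.0, 1.0)],
                             [(3.0, 4.0, 0.0), (1.0, 1.0, 1.0)])
print(errors)
print(median_iqr([1.0, 2.0, 3.0, 4.0, 5.0]))
```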

The presented methods are also evaluated in terms of speed. The boxplots in Fig. 3 show how the computation time \(t_{\mathrm{comp}}\) required by each method to simulate a time step h changes with the discretisation level. For dynamic simulations, one usually evaluates whether a method meets the real-time requirement (i.e. \(t_{\mathrm{comp}} \le h\)) and/or guarantees interactivity. When only visual feedback is required, interactivity means that the simulation runs at 25 frames per second or more (i.e. \(t_{\mathrm{comp}} \le 0.04\,\hbox {s}\)). The highly optimised PBD implementation provided by NVIDIA FleX always keeps the simulated and computation times equal (\(t_{\mathrm{comp}} = h\)).
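The two timing requirements can be expressed as a simple check on the measured per-step computation time. This minimal sketch uses h = 0.02 s and 25 frames per second as defaults; the function name is hypothetical.

```python
def timing_class(t_comp, h=0.02, fps=25):
    """Check a per-step computation time against two requirements:
    real time (t_comp <= h) and interactivity (t_comp <= 1/fps, 0.04 s at 25 fps)."""
    real_time = t_comp <= h
    interactive = t_comp <= 1.0 / fps
    return real_time, interactive


for t in (0.015, 0.03, 0.05):
    print(t, timing_class(t))
```

Because h = 0.02 s is shorter than the 0.04 s frame budget, any method that runs in real time here is also interactive, but not vice versa.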

As a final metric, we assess the stability of the methods by evaluating their ability to complete the experiments from beginning to end. For this analysis, an “experiment” denotes the application of the four increasing input deformations to one tumor, for a total of 10 experiments (one per lesion). For each method, at each discretisation level, we evaluate the percentage of each experiment that is successfully completed before any instability occurs (Table 1). If a method completed all 10 experiments, its average percentage would be 100%. The main advantage of the PBD method is its unconditional stability, which guarantees that simulations always remain stable (mean and median stability of 100%, with every experiment completed).
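The stability metric can be sketched as follows, under our assumption that progress through an experiment is counted in completed input deformations out of four. The actual analysis may measure progress at a finer granularity, e.g. in time steps. The function name and the input values are illustrative.

```python
import statistics


def stability_summary(completed, n_deformations=4):
    """Percentage of each experiment completed before the first instability.

    completed[t] is the (hypothetical) number of input deformations, out of
    n_deformations, that were simulated successfully for tumour t.
    """
    per_tumour = [100.0 * c / n_deformations for c in completed]
    return per_tumour, statistics.mean(per_tumour), statistics.median(per_tumour)


# Ten experiments (one per lesion); two become unstable early.
per_tumour, mean_pct, median_pct = stability_summary([4, 4, 4, 4, 4, 4, 4, 4, 2, 0])
print(mean_pct, median_pct)
```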

Discussion

In this work, we compared different strategies to model the interaction between the US probe and soft tissues in terms of accuracy, computation time and stability. In terms of accuracy, all methods perform similarly. Interestingly, increasing the volume mesh resolution slightly reduces the error dispersion but does not significantly improve the overall accuracy. This accuracy is comparable with the average lesion size and thus acceptable for biopsy targeting. This suggests that probe–tissue interactions can be reproduced accurately even with coarse meshes, because the induced deformations are smooth. The achievable accuracy is therefore not limited by poor spatial discretisation. It may instead have an upper bound set by registration and calibration errors and possibly by the chosen temporal discretisation. In terms of computational performance, the fastest FE methods are those relying on the simplest equation systems, i.e. PrescrDispl and Penalty. Modelling contacts through constraints is the most time-consuming approach, despite the use of an optimised solver. Figure 3 shows that fine meshes have a strong impact on computation time, which increases with the number of elements for all methods. The drop in computational performance at high mesh resolutions is particularly marked for the LM method, even though the number of active constraints remains constant in all simulations. Notably, despite relying solely on the CPU, the FE approaches tested in this work can meet the real-time constraint for several discretisations (especially PrescrDispl and Penalty). We expect that FE implementations exploiting the parallel capabilities of GPUs could further improve computational performance, and we plan to assess this in future work. 
Although some GPU-based FE approaches have already been proposed, they were not included in this study, since they are either incompatible with hyperelastic simulations [13] or not available within the SOFA framework [23, 24]. Relying on a common open-source simulation platform has allowed us not only to compare the different approaches but also to share the simulation scenes publicly. Furthermore, since SOFA provides general implementations of the various methods, it can simulate any medical scenario involving deformable structures. The approaches tested in this work can therefore be used to model any kind of tool–tissue interaction. PrescrDispl is the FE approach with the best stability. In general, simulations are more likely to become unstable at high deformations, when nonlinear effects become significant. An interesting result emerging from Table 1 is that high-resolution meshes lead to more frequent simulation instability. This may be due to additional numerical errors that prevent the simulations from being completed.

Within the considered context, a non-physically based method (PBD) proved able to reach an optimal compromise among the different performance criteria. It achieves an accuracy comparable to FE simulations while meeting the real-time constraint and maintaining enhanced stability, which makes it particularly suitable for use within a robotic simulation framework. The main drawback of PBD is that model parameters need to be calibrated for each scenario, which is a great challenge in the medical field. Another limitation is that PBD cannot estimate the interaction forces, which may be useful in a robotic context. On the other hand, all FE-based methods benefit from the possibility of using real elastic parameters and estimating the stress distribution within the organs. Table 2 summarises the main advantages and disadvantages of the considered methods. The best trade-off among the performance criteria is achieved when the interaction is modelled by prescribing the displacement of surface nodes. The simpler mathematical description of the physical problem, which involves no external forces, allows high speed while guaranteeing high simulation stability. The limitation of this method lies in the assumption that the breast–probe contact area is known a priori and does not vary during the procedure. This is a major constraint when the simulation must run online during the scanning itself, as in freehand acquisitions. However, it is not a strong limitation in a robotic scenario. There, the robot motion is commonly planned in advance, which enables a priori estimation of the contact surface. The most general ways to describe probe–tissue interaction with FE involve collision handling. The penalty method can achieve close to real-time performance, but its stability depends strongly on the contact stiffness, which has to be tuned for each specific problem. 
This modelling strategy is likely to be the most appropriate choice in scenarios less complex than the one described in this work (for example, with smaller input deformations and/or elastic materials) [8]. Describing the problem as a constraint (LM) is the most general and widely applicable approach. In addition to being independent of a specific parametrisation, LM-based methods provide a direct measure of the interaction forces through the values of the Lagrange multipliers. The main limitation of this approach is the computation time, which becomes prohibitive if a fine spatial discretisation is needed. The high computational burden of LM may be due to the fact that the system matrix \(\mathbf {A}\) is factorised at each simulation step by the direct solver. Since matrix factorisation is one of the most demanding steps, we performed some tests with an alternative approach in which \(\mathbf {A}^{-1}\) is updated less frequently (every 5 time steps, as in [13]). However, this achieved only a slight improvement in computational performance, at the expense of stability. This indicates that the assumption that \(\mathbf {A}\) does not change significantly between consecutive time steps does not always hold for hyperelastic objects. Using an approximation of the real matrix can then cause the simulation to diverge. Overall, our results allow us to conclude that LM can be used to model probe–tissue interaction stably and at interactive rates using coarse meshes, without a significant loss of accuracy.
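The effect of refreshing the factorisation only every few steps can be illustrated on a hypothetical 2×2 system whose matrix stiffens at each step, loosely mimicking a hyperelastic material under increasing compression. The explicit inverse stands in for the direct solver's factorisation. It is recomputed every 5 steps, as in the tested variant, and reused in between. This toy only shows that the reused inverse no longer solves the current system; it does not reproduce the divergence observed in the breast simulations. All names and values are illustrative assumptions.

```python
def inv2(A):
    """Inverse of a 2x2 matrix (stands in for a factorisation)."""
    (a, b), (c, d) = A
    det = a * d - b * c
    return [[d / det, -b / det], [-c / det, a / det]]


def residual_norm(A, x, rhs):
    """Max-norm of A x - rhs for a 2x2 system."""
    r = [sum(A[i][j] * x[j] for j in range(2)) - rhs[i] for i in range(2)]
    return max(abs(ri) for ri in r)


def stale_inverse_residuals(steps=10, refresh_every=5, stiffening=0.2):
    """Hypothetical system matrix that stiffens at every step; the inverse is
    recomputed only every `refresh_every` steps and reused in between."""
    rhs = [1.0, 2.0]
    residuals = []
    A_inv = None
    for t in range(steps):
        A = [[4.0 + stiffening * t, 1.0], [1.0, 3.0]]   # current system matrix
        if t % refresh_every == 0:
            A_inv = inv2(A)                              # "refactorise"
        x = [sum(A_inv[i][j] * rhs[j] for j in range(2)) for i in range(2)]
        residuals.append(residual_norm(A, x, rhs))
    return residuals


for t, res in enumerate(stale_inverse_residuals()):
    print(t, f"{res:.2e}")
```

The residual is zero (up to round-off) whenever the inverse is refreshed. In between, it grows with the mismatch between the stale and the current matrix.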

In this work, we compared several strategies that can be used to model the interaction between the US probe and soft tissues, considering different spatial resolutions. Currently, our acquisitions are limited to compression experiments, since this kind of input is the most relevant case, causing the largest anatomical deformations. However, we plan to extend the experimental protocol to include sliding interactions as well. Furthermore, although the hyperelastic formulation makes our problem challenging, we model the breast as homogeneous and with very simple boundary conditions. These modelling assumptions might not hold in clinical cases, which will require extending our model with more complex formulations. Future work will also include integrating SOFA within a robot simulation framework and testing its ability to support the development of an autonomous RUS. Although the data in this work are acquired with a freehand system, we expect that the same experiments could easily be repeated with the probe held by a robotic manipulator. We will also investigate emerging methods that model tool–tissue interaction by combining FEM with machine learning. This approach has the potential to match the accuracy of FEM while bringing stability and computational performance to the level of non-physically based methods [25].

Conclusion

In this work, we evaluated the ability of different strategies based on the finite element method to model the interaction between the ultrasound probe and soft tissues. The approach achieving the best trade-off among accuracy, speed and stability imposes the anatomical deformation by prescribing the displacement of the surface mesh nodes. The most general formulations describe the interaction by explicitly modelling the contacts, but they usually sacrifice either computational performance or stability. The presented methodologies can be applied to describe the interaction between rigid tools and soft tissues in general. Therefore, this work can serve as a reference for researchers looking for the most appropriate strategy to model tool-induced anatomical deformations that meets the requirements of the target clinical application.

References

1. Pheiffer TS, Thompson RC, Rucker DC, Simpson AL, Miga MI (2014) Model-based correction of tissue compression for tracked ultrasound in soft tissue image-guided surgery. Ultrasound Med Biol 40(4):788–803

2. Burcher MR, Han L, Noble JA (2001) Deformation correction in ultrasound images using contact force measurements. In: Proceedings IEEE workshop on mathematical methods in biomedical image analysis (MMBIA 2001). IEEE, pp 63–70

3. Priester AM, Natarajan S, Culjat MO (2013) Robotic ultrasound systems in medicine. IEEE Trans Ultrason Ferroelectr Freq Control 60(3):507–523

4. Huang Q, Lan J, Li X (2018) Robotic arm based automatic ultrasound scanning for three-dimensional imaging. IEEE Trans Industr Inf 15(2):1173–1182

5. Reckhaus M, Hochgeschwender N, Paulus J, Shakhimardanov A, Kraetzschmar GK (2010) An overview about simulation and emulation in robotics. In: Proceedings of SIMPAR, pp 365–374

6. Flack B, Makhinya M, Goksel O (2016) Model-based compensation of tissue deformation during data acquisition for interpolative ultrasound simulation. In: 2016 IEEE 13th international symposium on biomedical imaging (ISBI). pp 502–505

7. Camara M, Mayer E, Darzi A, Pratt P (2017) Simulation of patient-specific deformable ultrasound imaging in real time. In: Imaging for patient-customized simulations and systems for point-of-care ultrasound. Springer, pp 11–18

8. Petrinec K, Savitsky E, Terzopoulos D (2014) Patient-specific interactive simulation of compression ultrasonography. In: 2014 IEEE 27th international symposium on computer-based medical systems. pp 113–118

9. Ivaldi S, Peters J, Padois V, Nori F (2014) Tools for simulating humanoid robot dynamics: a survey based on user feedback. In: 2014 IEEE-RAS international conference on humanoid robots. IEEE, pp 842–849

10. Tagliabue E, Dall’Alba D, Magnabosco E, Tenga C, Peterlik I, Fiorini P (2019) Position-based modeling of lesion displacement in ultrasound-guided breast biopsy. Int J Comput Assist Radiol Surg 14:1329–1339

11. Selmi SY, Promayon E, Sarrazin J, Troccaz J (2014) 3D interactive ultrasound image deformation for realistic prostate biopsy simulation. In: Bello F, Cotin S (eds) Biomedical simulation. Springer, Cham, pp 122–130

12. Horak PC, Trinkle JC (2019) On the similarities and differences among contact models in robot simulation. IEEE Robot Autom Lett 4(2):493–499

13. Courtecuisse H, Allard J, Kerfriden P, Bordas SP, Cotin S, Duriez C (2014) Real-time simulation of contact and cutting of heterogeneous soft-tissues. Med Image Anal 18(2):394–410

14. Mahmoud MZ, Aslam M, Alsaadi M, Fagiri MA, Alonazi B (2018) Evolution of robot-assisted ultrasound-guided breast biopsy systems. J Radiat Res Appl Sci 11(1):89–97

15. Guo R, Lu G, Qin B, Fei B (2017) Ultrasound imaging technologies for breast cancer detection and management: a review. Ultrasound Med Biol 44:37–70

16. Schenk O, Gärtner K (2004) Solving unsymmetric sparse systems of linear equations with Pardiso. Future Gener Comput Syst 20(3):475–487

17. Duriez C, Andriot C, Kheddar A (2004) Signorini’s contact model for deformable objects in haptic simulations. In: 2004 IEEE/RSJ international conference on intelligent robots and systems (IROS 2004), vol 4. Proceedings. IEEE, pp 3232–3237

18. Duriez C, Guébert C, Marchal M, Cotin S, Grisoni L (2009) Interactive simulation of flexible needle insertions based on constraint models. In: International conference on medical image computing and computer-assisted intervention. Springer, pp 291–299

19. Miller K, Lu J (2013) On the prospect of patient-specific biomechanics without patient-specific properties of tissues. J Mech Behav Biomed Mater 27:154–166

20. Visentin F, Groenhuis V, Maris B, Dall’Alba D, Siepel F, Stramigioli S, Fiorini P (2018) Iterative simulations to estimate the elastic properties from a series of MRI images followed by MRI-US validation. Med Biol Eng Comput 57:913–924

21. Marchal M, Allard J, Duriez C, Cotin S (2008) Towards a framework for assessing deformable models in medical simulation. In: International symposium on biomedical simulation. Springer, pp 176–184

22. Faure F, Duriez C, Delingette H, Allard J, Gilles B, Marchesseau S, Talbot H, Courtecuisse H, Bousquet G, Peterlik I, Cotin S (2012) SOFA: a multi-model framework for interactive physical simulation. In: Soft tissue biomechanical modeling for computer assisted surgery. Springer, pp 283–321

23. Han L, Hipwell J, Mertzanidou T, Carter T, Modat M, Ourselin S, Hawkes D (2011) A hybrid FEM-based method for aligning prone and supine images for image guided breast surgery. In: 2011 IEEE international symposium on biomedical imaging: from nano to macro. IEEE, pp 1239–1242

24. Miller K, Joldes G, Lance D, Wittek A (2007) Total Lagrangian explicit dynamics finite element algorithm for computing soft tissue deformation. Commun Numer Methods Eng 23(2):121–134

25. Mendizabal A, Tagliabue E, Brunet JN, Dall’Alba D, Fiorini P, Cotin S (2019) Physics-based deep neural network for real-time lesion tracking in ultrasound-guided breast biopsy. Comput Biomech Med Workshop MICCAI

Acknowledgements

This project has received funding from the European Research Council (ERC) under the European Union’s Horizon 2020 research and innovation programme (grant agreement No 742671 “ARS” and No 688188 “MURAB”).


Ethics declarations

Conflict of Interest

The authors declare that they have no conflict of interest.

Ethical approval

This article does not contain any studies with human participants or animals performed by any of the authors.



Cite this article

Tagliabue, E., Dall’Alba, D., Magnabosco, E. et al. Biomechanical modelling of probe to tissue interaction during ultrasound scanning. Int J CARS 15, 1379–1387 (2020). https://doi.org/10.1007/s11548-020-02183-2


DOI: https://doi.org/10.1007/s11548-020-02183-2